Abstract

Background

Residents in Accreditation Council for Graduate Medical Education accredited emergency medicine (EM) residencies were assessed on 23 educational milestones to capture their progression from medical student level (Level 1) to that of an EM attending physician (Level 5). Level 1 was conceptualized to be at the level of an incoming postgraduate year (PGY)-1 resident; however, this has not been confirmed.

Objectives

Our primary objective in this study was to assess incoming PGY-1 residents to determine what percentage achieved Level 1 for the 8 emergency department (ED) patient care–based milestones (PC 1–8), as assessed by faculty. Secondary objectives were to determine what percentage of residents had achieved Level 1 by self-assessment and to calculate the absolute differences between self- and faculty assessments.

Methods

Incoming PGY-1 residents at 4 EM residencies were assessed by faculty and themselves during their first month of residency. Performance anchors were adapted from ACGME milestones.

Results

Forty-one residents from 4 programs were included. The percentage of residents who achieved Level 1 for each subcompetency on faculty assessment ranged from 20% to 73%, and on self-assessment from 34% to 92%. The majority did not achieve Level 1 on faculty assessment of milestones PC-2, PC-3, PC-5a, and PC-6, and on self-assessment of PC-3 and PC-5a. Self-assessment was higher than faculty assessment for PC-2, PC-5b, and PC-6.

Conclusions

Less than 75% of PGY-1 residents achieved Level 1 for ED care-based milestones. The majority did not achieve Level 1 on 4 milestones. Self-assessments were higher than faculty assessments for several milestones.

What was known and gap

While emergency medicine has conceptualized Level 1 performance to be at the level of an incoming resident, research to date has not assessed this aspect of the milestone framework.

What is new

A study using faculty ratings and residents' self-ratings on the Emergency Medicine Milestones.

Limitations

Single specialty, small sample, and lack of standardization of faculty assessments all limit generalizability.

Bottom line

Less than 75% of entering residents achieved Level 1 for emergency department care-based milestones, and the majority did not achieve Level 1 on 4 of these milestones.

Introduction

Medical education has moved to a competency-based education and assessment model. This is a distinct deviation from previous time-based models and has been driven by the Accreditation Council for Graduate Medical Education (ACGME), first through the Outcome Project and, more recently, the Milestones Project.1,2 The core element of the new approach is to use real-time, competency-based assessments. These frequent low-stakes, competency-based assessments would replace many of the traditional, less frequent, high-stakes global assessments, which are often done long after the actual behavior or skill being evaluated was performed.2,3

Presently, all emergency medicine (EM) residents must be rated on a continuum describing the trainee's level of function across 23 milestones.3 These milestone assessments capture EM residents' progression across a continuum of maturation, ranging from medical student level up to that of an attending physician, via a 5-level hierarchical progression score.4 Subcompetencies measure discrete and observable skills in interpersonal and communication skills (ICS), professionalism (Prof), patient care (PC), medical knowledge (MK), practice-based learning and improvement (PBLI), and systems-based practice (SBP).4 For convenience, the milestones have been subdivided by EM program directors into 3 categories: emergency department (ED) care-based milestones (PC 1–8), procedural-based milestones (PC 9–14), and systems-based milestones (MK, SBP 1–3, PBLI, Prof 1–2, and ICS 1–2).

The ACGME describes Level 1 as “The resident demonstrates milestones expected of an incoming resident.”4 Level 1 milestones were initially conceptualized to be at the level of an incoming postgraduate year (PGY)-1 resident. However, this has not been confirmed, and it is unknown whether incoming PGY-1 residents have achieved Level 1 milestones. Previous studies have shown that trainees overestimate their knowledge and abilities,5,6 yet this has not been shown to date with the milestones. We designed this study to determine what percentage of incoming PGY-1 EM residents are judged by faculty to have achieved Level 1 milestones on the 8 ED care-based subcompetencies (PC 1–8).

The primary outcome of this study was the percentage of incoming PGY-1 EM residents who were judged by faculty to have achieved Level 1 milestones for each of the 8 ED care-based subcompetencies. The secondary outcomes were the percentage of residents who had achieved Level 1 by their own judgement and the absolute differences between faculty and self-evaluations.

Methods

Study Design

This was an observational study conducted at 4 EM residency programs in July 2013 to assess incoming PGY-1 residents. All incoming PGY-1 EM residents beginning their residency in July 2013 who graduated medical school within the previous 12 months were eligible for inclusion. PGY-1 EM residents who had previously completed a year of residency training and residents who graduated medical school more than 1 year prior to beginning residency were excluded from the study.

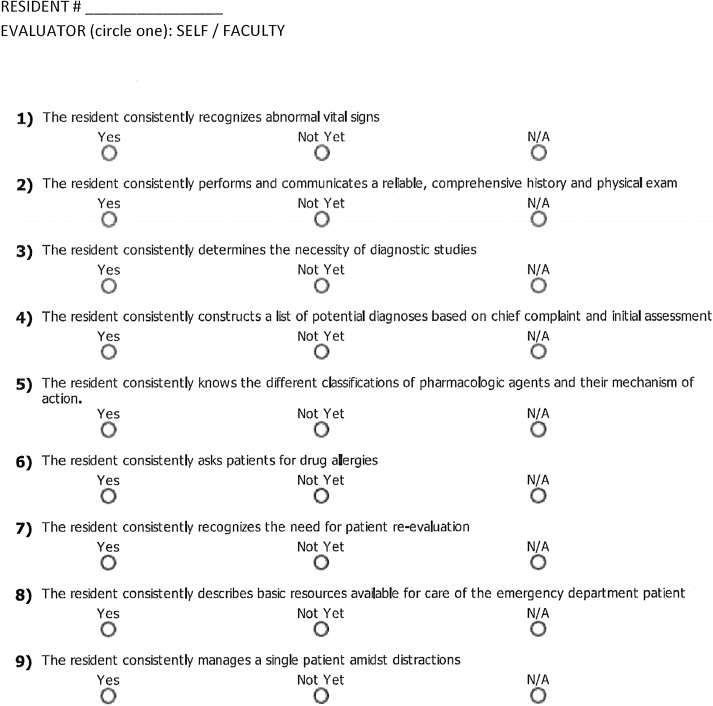

The residents were assessed on 9 Level 1 subcompetencies (8 milestones) by direct observation from EM faculty over the course of their first month of residency (figure). Core faculty who worked with the subject resident completed the questionnaire after working several shifts with the resident. The survey was sent out at the end of the resident's first month of residency. Residents completed self-assessments on the same Level 1 subcompetencies once at the end of their first month of residency. Questions on the assessment form were adapted from the Level 1 PC milestone subcompetencies published by the ACGME.4 Faculty and residents were asked to state whether they judged the resident to have met the particular subcompetency by answering “Yes,” “No,” or “Not Applicable” (N/A). If a response of N/A was received, that data point was removed. The majority response was used to determine whether the residents met the subcompetency or not. For example, if 2 faculty members assessed the resident as “Yes,” and 1 faculty member assessed the resident as “No,” this was considered a “Yes” response. Identical forms were used for the self-assessment and the faculty assessment.
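The majority-response rule described above can be illustrated with a minimal Python sketch. The function name `majority_response` is hypothetical, and the handling of ties (returning `None`) is an assumption, since the text does not say how an even split between “Yes” and “No” was resolved.

```python
from collections import Counter
from typing import Iterable, Optional


def majority_response(responses: Iterable[str]) -> Optional[bool]:
    """Collapse several faculty responses for one resident and one
    subcompetency into a single result.

    Only "Yes" and "No" responses are counted, so "N/A" responses are
    effectively removed, as described in the text.
    Returns True if "Yes" is the majority, False if "No" is the majority,
    and None when there is a tie or no usable response (an assumption:
    the study does not describe this case).
    """
    counts = Counter(r.strip().lower() for r in responses)
    yes, no = counts["yes"], counts["no"]
    if yes == no:
        return None
    return yes > no


# The example from the text: 2 faculty answer "Yes" and 1 answers "No".
print(majority_response(["Yes", "Yes", "No"]))
```

Returning `None` rather than forcing a decision keeps undetermined cases visible, so they can be handled explicitly in any later tabulation.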

FIGURE.

Nine Questions That Address 8 Patient Care Subcompetencies Evaluated

Although the milestones themselves have been assigned specific levels by program directors and core faculty,6 assessment tools for the milestones have not yet been well studied. Five program directors with more than 30 combined years of experience collaborated to construct the assessment tool and to provide evidence for content validity.7 The authors have roles on the Joint Milestones Task Force and long-term experience as medical education leaders. For response process standardization, questions on the assessment tool were field tested with assistant program directors, and feedback was gathered about the questions and the tool. Assessors at each participating site were trained to use the assessment tool by the author at that site. Training consisted of 1-on-1 discussions between the author and faculty to ensure they understood the milestone project and the subcompetencies being evaluated. Faculty participants were specifically asked to assess whether the resident met the criteria as a simple “Yes” or “No” answer. Residents did not receive training for self-assessments.

This study was approved by the Institutional Review Boards of all participating institutions.

Data Collection

Faculty and residents were provided with the questionnaires, and the data were compiled by local site directors. Data from the local sites were deidentified before being sent to the principal investigator for analysis.

Data Analysis

Descriptive statistics were used to describe the percentage of PGY-1 EM residents who were assessed to have achieved Level 1. Disagreements were compared using the McNemar test and Kappa coefficient.
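For readers unfamiliar with these statistics, the following Python sketch shows how paired faculty and self-assessments for one subcompetency could be compared. It is not the authors' analysis code: the function names and the example ratings are hypothetical, and the exact (binomial) form of the McNemar test is an assumption, since the text does not state which variant was used.

```python
from math import comb
from typing import Iterable, Tuple


def paired_counts(pairs: Iterable[Tuple[bool, bool]]) -> Tuple[int, int, int, int]:
    """Tabulate (faculty, self) pairs into a 2x2 table:
    a = both yes, b = faculty yes / self no,
    c = faculty no / self yes, d = both no."""
    a = b = c = d = 0
    for faculty, self_rating in pairs:
        if faculty and self_rating:
            a += 1
        elif faculty:
            b += 1
        elif self_rating:
            c += 1
        else:
            d += 1
    return a, b, c, d


def mcnemar_exact_p(b: int, c: int) -> float:
    """Two-sided exact McNemar test: only the discordant pairs (b, c)
    matter; under the null hypothesis each is equally likely."""
    n = b + c
    if n == 0:
        return 1.0
    tail = sum(comb(n, k) for k in range(min(b, c) + 1)) / 2 ** n
    return min(1.0, 2 * tail)


def cohen_kappa(a: int, b: int, c: int, d: int) -> float:
    """Cohen's kappa: agreement beyond what chance alone would give."""
    total = a + b + c + d
    if total == 0:
        raise ValueError("no paired ratings")
    observed = (a + d) / total
    expected = ((a + b) * (a + c) + (c + d) * (b + d)) / total ** 2
    if expected == 1.0:
        return float("nan")  # kappa is undefined when no variation exists
    return (observed - expected) / (1 - expected)


# Hypothetical data for illustration only, not taken from the study.
example_pairs = (
    [(True, True)] * 5 + [(True, False)] * 1
    + [(False, True)] * 7 + [(False, False)] * 3
)
a, b, c, d = paired_counts(example_pairs)
print(mcnemar_exact_p(b, c), cohen_kappa(a, b, c, d))
```

The McNemar test asks whether one rater group says “Yes” systematically more often than the other, whereas kappa summarizes how often the two agree beyond chance, so the two measures answer different questions about the same table.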

Results

There were 42 PGY-1 residents at the 4 participating programs. One resident had previous training and was excluded. This left 41 residents in the study population.

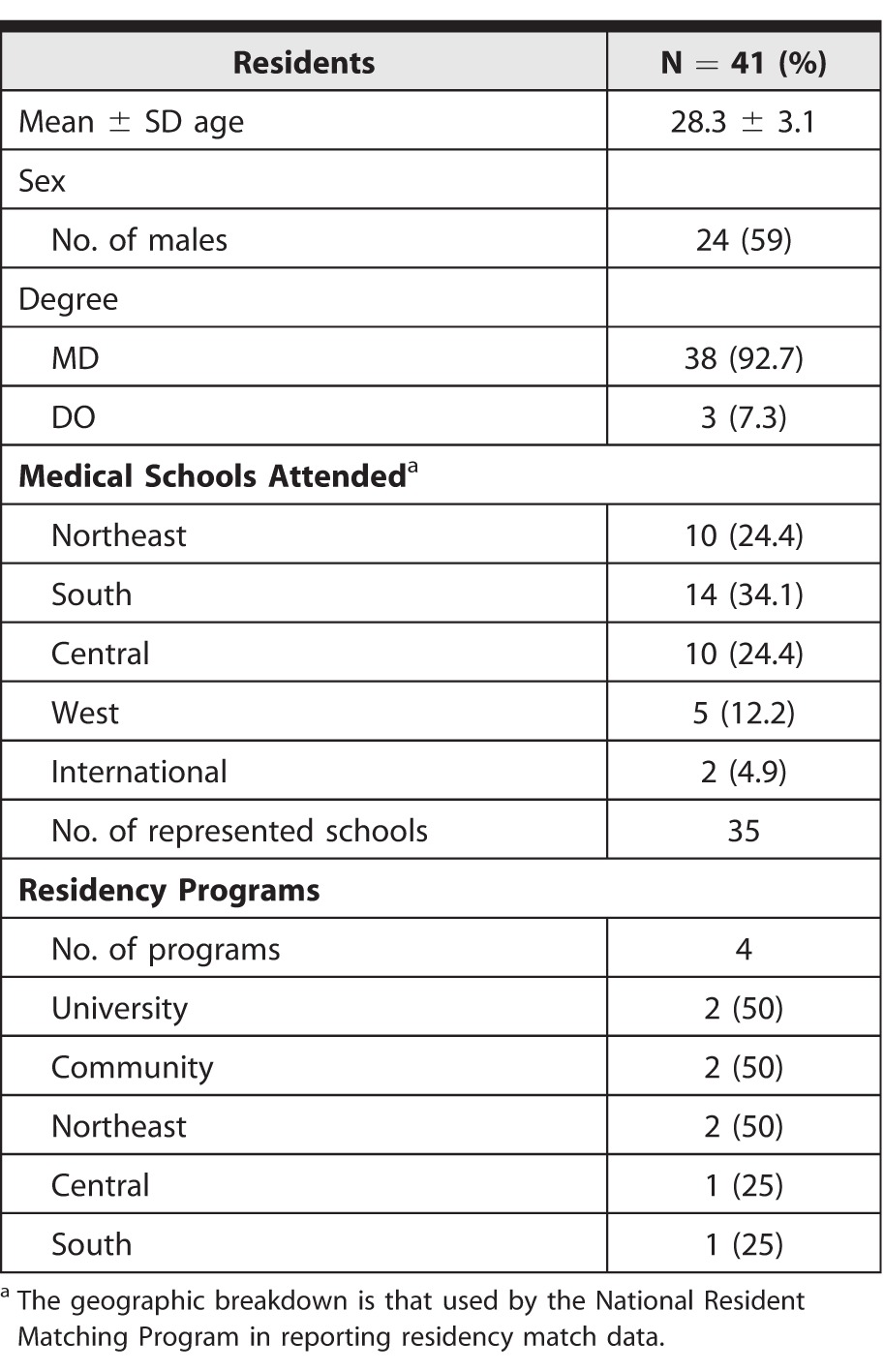

Demographic data for the residents and the programs are included in table 1. The sites for the 4 residency programs were 2 university hospitals and 2 suburban community hospitals.

TABLE 1.

Demographic Data

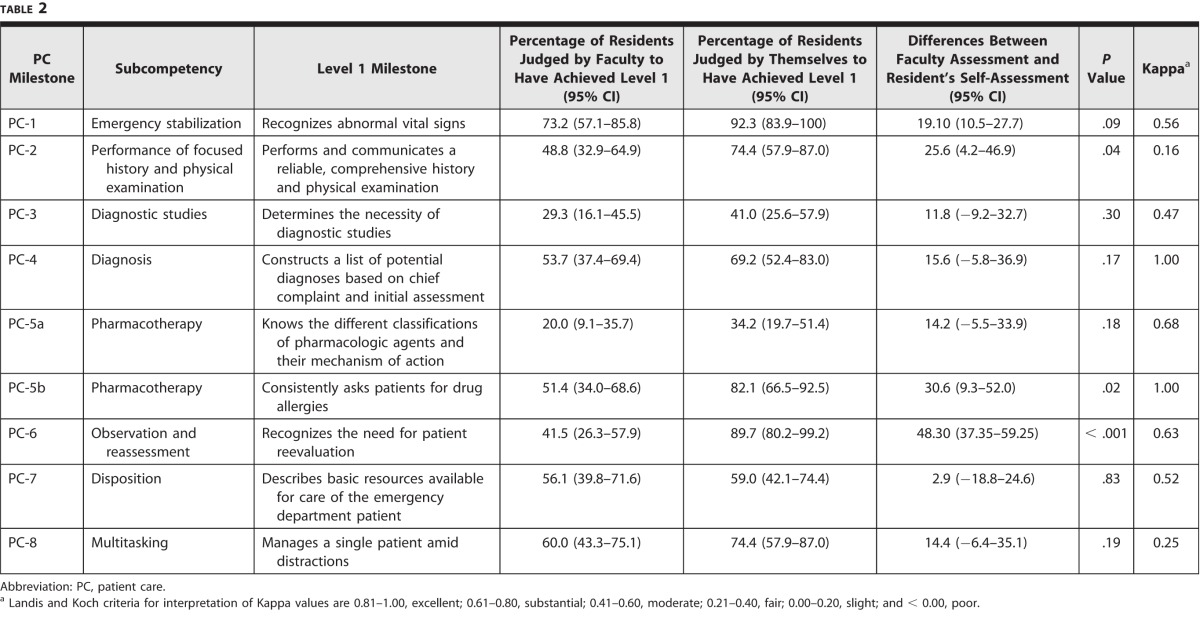

Faculty assessments were received for all 41 residents (100%), and self-assessments were received for 39 residents (95%). The percentages of incoming PGY-1 EM residents assessed by faculty and by themselves to have achieved Level 1 are summarized in table 2.

TABLE 2.

Percentage of Residents Achieving Each Subcompetency

The percentage of PGY-1 residents assessed by faculty as having achieved Level 1 ranged from 20% (PC-5a) to 73.2% (PC-1); on self-assessment, the range was 34.2% (PC-5a) to 92.3% (PC-1). The majority of PGY-1 residents were assessed by faculty as not having achieved Level 1 on PC-2, PC-3, PC-5a, and PC-6. Self-assessment was higher than faculty assessment for PC-2, PC-5b, and PC-6. The difference in proportions between faculty assessment and self-assessment was most pronounced for subcompetency PC-6, observation and reassessment (48.3%; 95% CI 37.35%–59.25%; P < .001).

Discussion

Although a consensus of EM program directors and core faculty was used to determine the milestones and subcompetencies that incoming PGY-1 residents should be able to attain,4 our study showed that, for each of the 9 subcompetencies assessed, less than 75% of incoming PGY-1 residents actually achieved this level of performance (Level 1). Further, as predicted, residents' self-assessments were higher than faculty assessments.

Overall, a low percentage of incoming PGY-1 EM residents were assessed by faculty as having achieved Level 1 (20%–73%), and the majority of PGY-1 residents were assessed by faculty as not having achieved Level 1 on several subcompetencies. This information is valuable to program directors and educators. Although Level 1 milestones were initially conceptualized to be the expected level of an incoming PGY-1 resident, our data suggest that this may not be the case. Because the use of the milestones is new, it is still unclear what level residents should attain at each PGY level. The initial assumption that Level 1 reflects the level of a graduating medical student may not hold. Residency training curricula should probably still include teaching and training that allow incoming residents to achieve the Level 1 milestones.

Although our study focused on EM milestones, our results have implications for other specialties. For example, the EM PC-2 Milestone (“Performance of focused history and physical examination”) is similar to that for residents in internal medicine, pediatrics, and general surgery. Just as we found that many incoming EM residents were assessed to have not achieved Level 1, the same may hold true for incoming residents in other specialties. Program directors in those specialties may want to include an orientation period that includes training toward their Level 1 milestones.

Compared to faculty assessments, resident self-assessments were significantly higher for subcompetencies PC-2, PC-5b, and PC-6. We were not surprised by this finding, as Davis et al5 previously reported on the limitations of physician self-assessments of competency when compared with objective external measures.

We were concerned by the findings for PC-2 (“Performs and communicates a reliable, comprehensive history and physical examination”). Residents' self-assessments indicated that they had demonstrated the ability to “perform a reliable and comprehensive physical examination,” whereas faculty assessments indicated that this ability was demonstrated much less often. We believe most graduating medical students should have received sufficient education and opportunity for experience by the time they enter residency training to possess this competency (at least for adult patients). Overall, it appears that milestones remain a work in progress, and that residents may enter EM residency without satisfactory performance at Level 1 for all subcompetencies.

The limitations of our study include the small sample of EM residency programs and the small population of PGY-1 EM residents. In addition, our results are restricted to the 8 preselected ED care-based milestones. The study also is limited by the fact that faculty assessments were not standardized, although this reflects the “real-time” end-of-shift or end-of-rotation assessments by EM faculty.

Future work to further test the validity of our findings should involve repeating our assessment as part of a larger multicenter trial, along with a follow-up after 6 months to assess for improvement among PGY-1 EM residents in these domains.

Conclusion

Less than 75% of PGY-1 residents were judged by faculty to have achieved Level 1 milestones for ED care-based subcompetencies. The majority of residents were judged to have not achieved Level 1 on 4 of the subcompetencies, and the majority of residents rated themselves as not having achieved Level 1 performance on 2 subcompetencies. Self-assessments were higher than faculty assessments for several subcompetencies. Our findings have important implications for EM programs and may also be relevant to other specialties.

Footnotes

Moshe Weizberg, MD, is Emergency Medicine Program Director, Department of Emergency Medicine, Staten Island University Hospital; Michael C. Bond, MD, is Emergency Medicine Program Director, Department of Emergency Medicine, School of Medicine, University of Maryland; Michael Cassara, DO, is Emergency Medicine Associate Program Director, Department of Emergency Medicine, Hofstra North Shore–LIJ School of Medicine; Christopher Doty, MD, is Emergency Medicine Program Director, Department of Emergency Medicine, University of Kentucky; and Jason Seamon, MD, is Emergency Medicine Associate Program Director, Joint Milestones Task Force, Department of Emergency Medicine, Grand Rapids Medical Education Partners, Michigan State University.

Funding: The authors report no external funding source for this study.

Conflict of interest: The authors declare they have no competing interests.

These data were presented as a poster at the Council of Emergency Medicine Residency Directors Academic Assembly, New Orleans, Louisiana, April 2014, and as an oral presentation at the Society for Academic Emergency Medicine Annual Meeting, Dallas, Texas, May 2014.

The authors would like to thank all of the emergency medicine residents who participated in this study.

References

- 1. Nasca TJ, Philibert I, Brigham T, Flynn TC. The next GME accreditation system—rationale and benefits. N Engl J Med. 2012;366(11):1051–1056. doi: 10.1056/NEJMsr1200117.

- 2. Swing SR. Assessing the ACGME general competencies: general considerations and assessment methods. Acad Emerg Med. 2002;9(11):1278–1288. doi: 10.1111/j.1553-2712.2002.tb01588.x.

- 3. Beeson MS, Carter WA, Christopher TA, Heidt JW, Jones JH, Meyer LE, et al. The development of the emergency medicine milestones. Acad Emerg Med. 2013;20(7):724–729. doi: 10.1111/acem.12157.

- 4. Beeson MS, Christopher TA, Heidt JW, Jones JH, Promes SB, Meyer LE. The Emergency Medicine Milestone Project 2013. doi: 10.4300/JGME-05-01s1-02. https://www.acgme.org/acgmeweb/Portals/0/PDFs/Milestones/EmergencyMedicineMilestones.pdf. Accessed August 27, 2015.

- 5. Davis DA, Mazmanian PE, Fordis M, Van Harrison R, Thorpe KE, Perrier L. Accuracy of physician self-assessment compared with observed measures of competence: a systematic review. JAMA. 2006;296(9):1094–1102. doi: 10.1001/jama.296.9.1094.

- 6. Korte RC, Beeson MS, Russ CM, Carter WA, Reisdorff EJ. Emergency Medicine Milestones Working Group. The emergency medicine milestones: a validation study. Acad Emerg Med. 2013;20(7):730–735. doi: 10.1111/acem.12166.

- 7. Downing SM. Validity: on the meaningful interpretation of assessment data. Med Educ. 2003;37(9):830–837. doi: 10.1046/j.1365-2923.2003.01594.x.