Abstract

Background

Variation in physicians' practice patterns contributes to unnecessary health care spending, yet the influences of modifiable determinants on practice patterns are not known. Identifying these mutable factors could reduce unnecessary testing and decrease variation in clinical practice.

Objective

To assess the importance of the residency program relative to physician personality traits in explaining variations in practice intensity (PI), defined as the likelihood of ordering tests and treatments, and in physicians' certainty of their intention to order.

Methods

We surveyed 690 interns and residents from 7 internal medicine residency programs, ranging from small community-based programs to large university residency programs. The surveys consisted of clinical vignettes designed to gauge respondents' preferences for aggressive clinical care, and questions assessing respondents' personality traits. The primary outcome was the participant-level mean response to 23 vignettes as a measure of PI. The secondary outcome was a certainty score (CS) constructed as the proportion of vignettes for which a respondent selected “definitely” versus “probably.”

Results

A total of 325 interns and residents responded to the survey (47% response rate). Measures of personality traits, subjective norms, demographics, and residency program indicators collectively explained 27.3% of PI variation. Residency program identity was the largest contributor. No personality traits were significantly independently associated with higher PI. The same collection of factors explained 17.1% of CS variation. Here, personality traits were responsible for 63.6% of the explained variation.

Conclusions

Residency program affiliations explained more of the variation in PI than demographic characteristics, personality traits, or subjective norms.

What was known and gap

Prior research has attributed variations in physicians' practice patterns to the residency program in which they trained, yet the influences of modifiable determinants of practice patterns are not known.

What is new

A survey of internal medicine residents showed the residency program to be the largest contributor to variations in resource use.

Limitations

Response rate of less than 50% creates the potential for respondent bias; survey tool lacks validity evidence.

Bottom line

Residency programs can leverage their influence over residents' resource usage patterns to improve the value of care provided by their graduates.

Introduction

A report from the Institute of Medicine (IOM) estimates that approximately 30% of health care spending is for care that is unnecessary.1 Physician choices are behind much of this waste, but the origins of variation in physician practice patterns are complex. While a sizeable literature links this variation to geography, another IOM report suggests that practice variation is as large within regions as across them.2 Since it is not easy to overcome variations explained by geography, a better understanding of the determinants that are modifiable is necessary. Those determinants might be found among physicians' training, experience, practice environment, and/or personal characteristics, and may range from more changeable to less changeable.

Training variation is an appealing factor to investigate because it is mutable, and previous research suggests that it is influential. For example, the residency program at which a physician trained is predictive of patient outcomes even after the physician graduates3; exposure to conflict of interest policies during residency has been associated with subsequent prescribing patterns4; and the spending intensity of the region of residency training has been associated with the intensity of physician spending after training.5,6 Medical educators believe that graduate medical education influences the later practice patterns of its learners,7,8 thus creating an opportunity to inculcate in residents the principles of cost-consciousness and resource stewardship. Accordingly, residency programs have the potential to reduce unnecessary testing and variation in clinical practice not only during training, but also throughout a physician's career.

While this opportunity is promising, systematic research on the formation of physicians' practice patterns is scarce, and other factors, like personal traits, may also play a major role. We therefore conducted a multicenter study of residents at 7 internal medicine (IM) residency programs in a single metropolitan area. The goal of the study was to assess the importance of the residency program relative to individual physician characteristics (including demographics, attitudes, psychological traits, and perceived behavior control) in explaining variations in resident physicians' likelihood of ordering tests and treatments and in the certainty of their intention to order.

Methods

Participants

Seven internal medicine (IM) residency programs in the Philadelphia metropolitan area participated in the study (Crozer-Keystone Health System, Drexel University, Lankenau Medical Center, Pennsylvania Hospital, Temple University, Thomas Jefferson University, and the Hospital of the University of Pennsylvania), ranging from small community-based programs to large university residency programs. All preliminary, transitional, and categorical IM interns and residents were invited to complete a survey in March 2014. Eligible participants received an e-mail invitation, with weekly reminders for 1 month. The e-mail contained a link to the web-based survey (Qualtrics LLC). Survey participants were entered into a lottery to win 1 of 2 $500 gift cards (selected from the first 100 respondents) or 1 of 4 $300 gift cards (from the remaining respondents).

Vignettes

To determine respondents' average practice intensity, we assembled 34 clinical vignettes designed to gauge preferences for aggressive clinical care. From the Health Tracking Physician Survey,9 we drew 6 vignettes relevant to general medicine. We developed 28 additional clinical vignettes describing situations where ordering a test or treatment did not reflect high-value care based on the Choosing Wisely Campaign10 and a literature review.11 Each vignette could be answered as “definitely yes,” “probably yes,” “probably no,” or “definitely no.” A total of 7 medical education, instrument design, and high-value care experts reviewed these vignettes prior to piloting them with 23 IM faculty. The extent of concentration across responses was assessed using the Simpson index,12 calculated as the sum of the square of each response level's share of respondents. With 4 response categories, this index ranges between 0.25 (equal distribution of answers across response levels) and 1 (all answers in a single response category). We excluded 11 vignettes with a Simpson index value greater than 0.60. The remaining 23 vignettes (4 from the Health Tracking Physician Survey and 19 designed by the authors) spanned diagnostic testing (n = 13), request for consultation (n = 2), and treatment (n = 8), and were predominantly outpatient based (n = 13).
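The Simpson index described above can be illustrated with a minimal sketch. The function name `simpson_index` and the pilot answers are hypothetical and are not taken from the study data; only the formula (sum of squared response shares) and the 0.60 cutoff come from the text.

```python
from collections import Counter


def simpson_index(responses):
    """Sum of the squared share of each response level for one vignette."""
    if not responses:
        raise ValueError("need at least one response")
    n = len(responses)
    return sum((count / n) ** 2 for count in Counter(responses).values())


# Hypothetical pilot answers (not study data).
# Answers spread evenly over the 4 levels give the minimum value of 0.25.
even = ["definitely yes", "probably yes", "probably no", "definitely no"] * 5
# Answers concentrated in one level push the index toward 1.
lopsided = ["definitely no"] * 18 + ["probably no"] * 5

even_index = simpson_index(even)
lopsided_index = simpson_index(lopsided)

EXCLUSION_CUTOFF = 0.60  # threshold stated in the text
print(f"even: {even_index:.2f}, excluded: {even_index > EXCLUSION_CUTOFF}")
print(f"lopsided: {lopsided_index:.2f}, excluded: {lopsided_index > EXCLUSION_CUTOFF}")
```

Under this rule, a vignette on which nearly all pilot respondents agree carries little information about differences between physicians, which is why highly concentrated vignettes were dropped.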

Attitudes and Psychological Traits

We used several scales with evidence of reliability and validity in other contexts to assess physician attitudes, psychological traits, and perceived behavior control that may influence physicians' intention to order a test or treatment.13–21 The Risk Aversion Scale measures a physician's attitude toward risk-taking in 6 questions rated on a 6-point scale from “strongly agree” to “strongly disagree.”18,19 A higher score suggests risk aversion and has previously been associated with higher resource utilization.19 Two domains from the revised Physicians' Reaction to Uncertainty Scales measure stress related to uncertainty (5 questions) and related to concern about bad outcomes (3 questions), also rated on a 6-point scale from “strongly agree” to “strongly disagree.”15,16 A higher score is indicative of stress related to uncertainty or fear of bad outcomes, and has also been linked with higher resource utilization.22 The Big Five Inventory21 measures personality traits (openness, conscientiousness, extraversion, agreeableness, and neuroticism) with 10 questions using a 5-point scale ranging from “disagree strongly” to “agree strongly.” The Core Self-Evaluation scale measures self-perceived ability (self-efficacy and self-esteem), neuroticism, and perception of locus of control with 12 questions using a 5-point scale that ranges from “disagree strongly” to “agree strongly.”17

The theories of reasoned action and planned behavior20 posit that many behaviors can be predicted by a person's intentions,20,23,24 which are influenced by expected attitudes and perception of related subjective norms. On this basis, we created 11 additional questions to assess subjective norms (“Attending physicians at my institution pay attention to the number of tests ordered,” with answer choices of “yes,” “no,” or “I don't know”); behavioral intentions (“I only order tests that will change patient management”); and self-perceived knowledge related to high-value care (“I know where to find how much my patients will be billed for tests”) on a 5-point scale ranging from “strongly disagree” to “strongly agree.” We also collected basic sociodemographic data.

Main Outcomes

The primary outcome was the participant-level mean response to the 23 vignettes as a measure of practice intensity (PI). The secondary outcome was a certainty score (CS) constructed as the proportion of vignettes for which a respondent selected “definitely” versus “probably.”

The protocol was approved by the Institutional Review Boards at all sites.

Data Analysis

The Cronbach α statistic was calculated for the PI and CS outcomes, as was the PI-CS Pearson correlation coefficient. Linear regression models predicting each outcome as a function of 4 blocks of explanatory variables (personality traits, perceptions of subjective norms, demographics, and residency program indicators) were estimated. A Shapley-Owen decomposition25–27 of the R² value from each model was conducted to assess how much of the total explained variation was attributable to each block of variables and each of the specific personality dimensions. All analyses were performed using Stata version 13.1 (StataCorp LP). P < .05 was considered statistically significant.
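The block-level part of such a decomposition can be sketched as follows. This is not the authors' Stata code: it is a minimal NumPy illustration of a Shapley split of R² across blocks of regressors, it omits the Owen step that further divides the personality block among its dimensions, and the function names, block sizes, and simulated data are all assumptions.

```python
from itertools import combinations
from math import factorial

import numpy as np


def r_squared(X, y):
    """R^2 from an OLS fit of y on the columns of X plus an intercept."""
    design = np.column_stack([np.ones(len(y)), X])
    coef, *_ = np.linalg.lstsq(design, y, rcond=None)
    resid = y - design @ coef
    return 1.0 - (resid @ resid) / np.sum((y - y.mean()) ** 2)


def shapley_r2(blocks, y):
    """Split the full-model R^2 across named blocks of regressors."""
    names = list(blocks)
    k = len(names)

    def value(subset):
        # R^2 of the model that uses only the blocks in `subset`.
        if not subset:
            return 0.0
        return r_squared(np.column_stack([blocks[n] for n in subset]), y)

    shares = {}
    for name in names:
        others = [n for n in names if n != name]
        total = 0.0
        for size in range(k):
            # Shapley weight for coalitions of this size.
            weight = factorial(size) * factorial(k - size - 1) / factorial(k)
            for subset in combinations(others, size):
                total += weight * (value(subset + (name,)) - value(subset))
        shares[name] = total
    return shares


# Simulated data for illustration only (not study data).
rng = np.random.default_rng(0)
n = 325
program_id = rng.integers(0, 7, size=n)
blocks = {
    "personality": rng.normal(size=(n, 3)),
    "subjective norms": rng.normal(size=(n, 2)),
    "demographics": rng.normal(size=(n, 2)),
    "residency program": np.eye(7)[program_id][:, 1:],  # 6 indicator columns
}
y = (
    0.1 * blocks["personality"][:, 0]
    + 0.2 * blocks["demographics"][:, 0]
    + 0.15 * program_id
    + rng.normal(size=n)
)

shares = shapley_r2(blocks, y)
full_r2 = r_squared(np.column_stack(list(blocks.values())), y)
for name, share in shares.items():
    print(f"{name}: {100 * share / full_r2:.1f}% of explained variation")
```

Each block's share is its average marginal gain in R² over all orders in which the blocks could enter the model, so the shares add up to the full-model R² and do not depend on an arbitrary entry order.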

Results

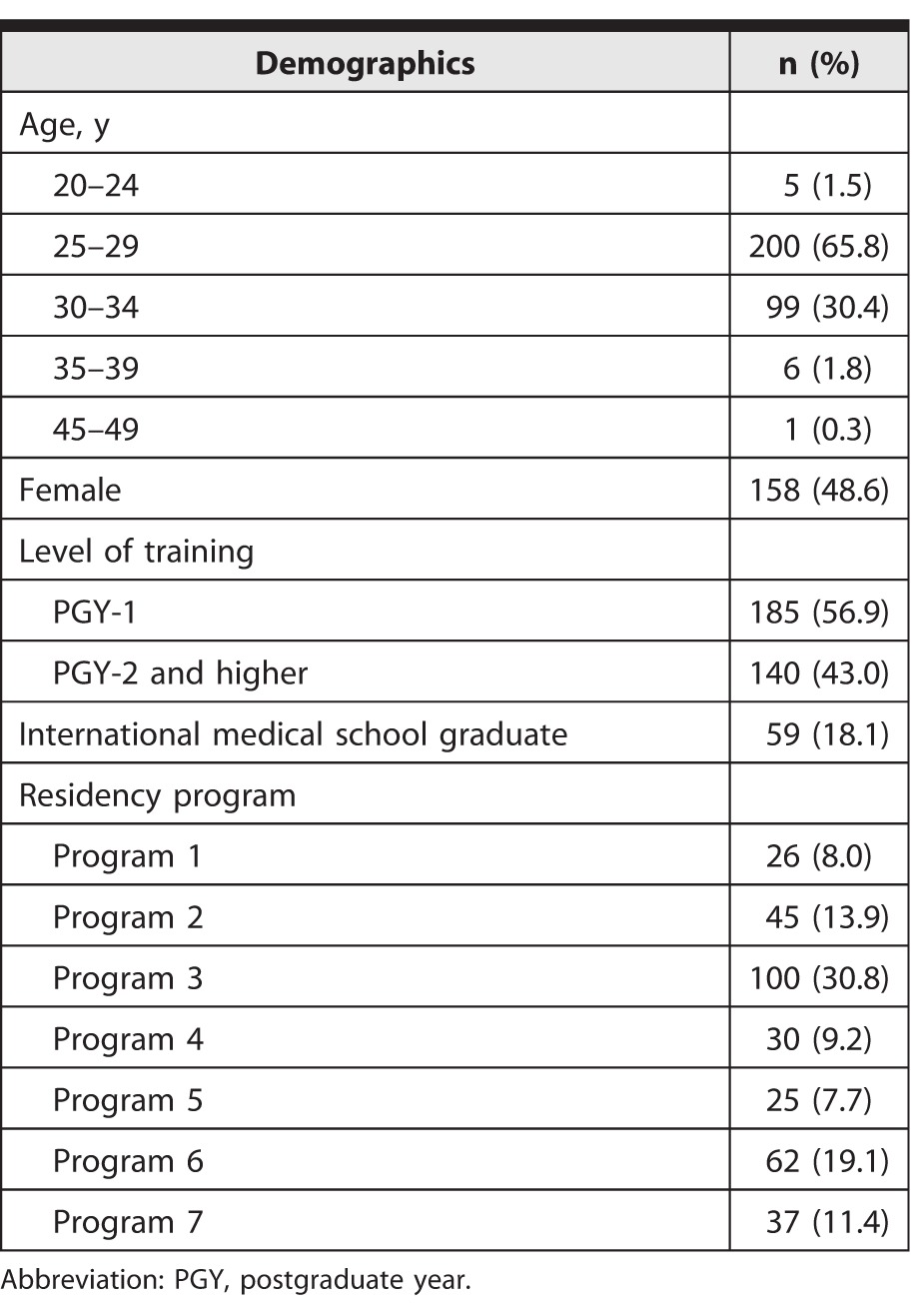

Of the 690 residents surveyed, 325 (47%) responded. Their characteristics are shown in Table 1. Median survey completion time was 17.7 minutes (interquartile range [IQR] = 13.5–26.7).

TABLE 1.

Demographics of Survey Respondents (N = 325)

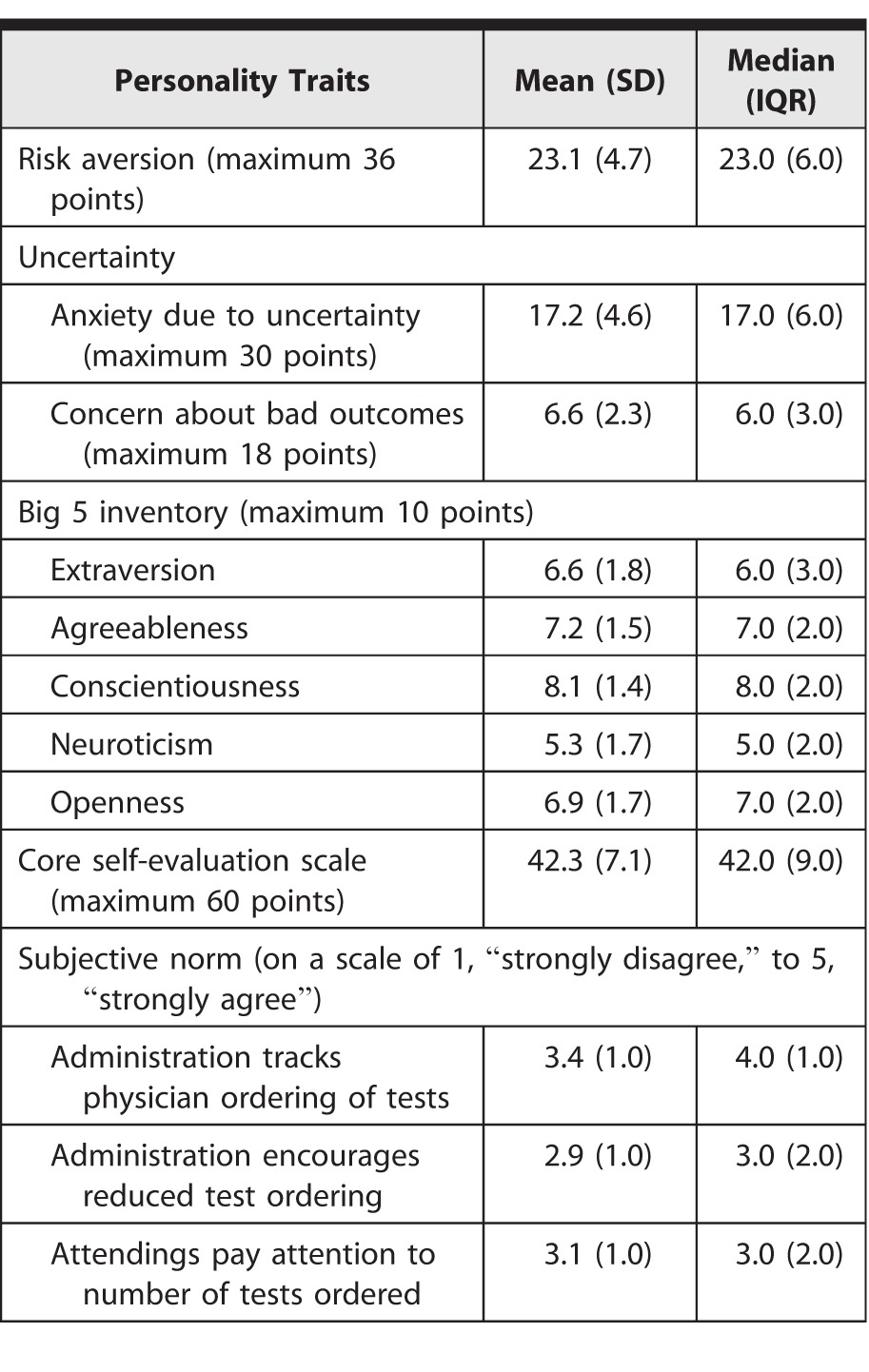

The mean PI was 2.52 with an SD of 0.31, where a higher score (maximum possible score of 4) indicates a more intense practice style (median 2.48, IQR = 0.39). The mean CS was 0.36 with an SD of 0.20 (median 0.35, IQR = 0.30), where a higher score implies that the respondent was more certain in his or her answer. Cronbach α was 0.68 for PI and 0.78 for CS. The PI-CS Pearson correlation coefficient was −0.045 (P = .42). Scores for each of the determinants of behavioral intentions in our study are shown in Table 2.
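For readers unfamiliar with the reliability statistic reported above, the following sketch shows the standard Cronbach α formula applied to a respondents-by-items matrix. The function name and the toy matrices are hypothetical; for CS, each item would be a 0/1 indicator of a “definitely” answer, which is an assumption about how the items were coded.

```python
import numpy as np


def cronbach_alpha(items):
    """Cronbach alpha for a (respondents x items) score matrix."""
    items = np.asarray(items, dtype=float)
    k = items.shape[1]
    item_variances = items.var(axis=0, ddof=1).sum()
    total_variance = items.sum(axis=1).var(ddof=1)
    return k / (k - 1) * (1.0 - item_variances / total_variance)


# Toy matrix: 3 respondents, 2 items (not study data).
toy = [[1, 2], [2, 1], [3, 3]]
toy_alpha = cronbach_alpha(toy)

# Items that move in lockstep are perfectly consistent.
lockstep = [[1, 1, 1], [2, 2, 2], [4, 4, 4]]
lockstep_alpha = cronbach_alpha(lockstep)

print(f"toy alpha = {toy_alpha:.3f}, lockstep alpha = {lockstep_alpha:.3f}")
```

The statistic rises as items covary more strongly relative to their individual variances, so values such as 0.68 and 0.78 indicate that the vignettes share a common signal without being redundant.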

TABLE 2.

Determinants of Behavioral Intention

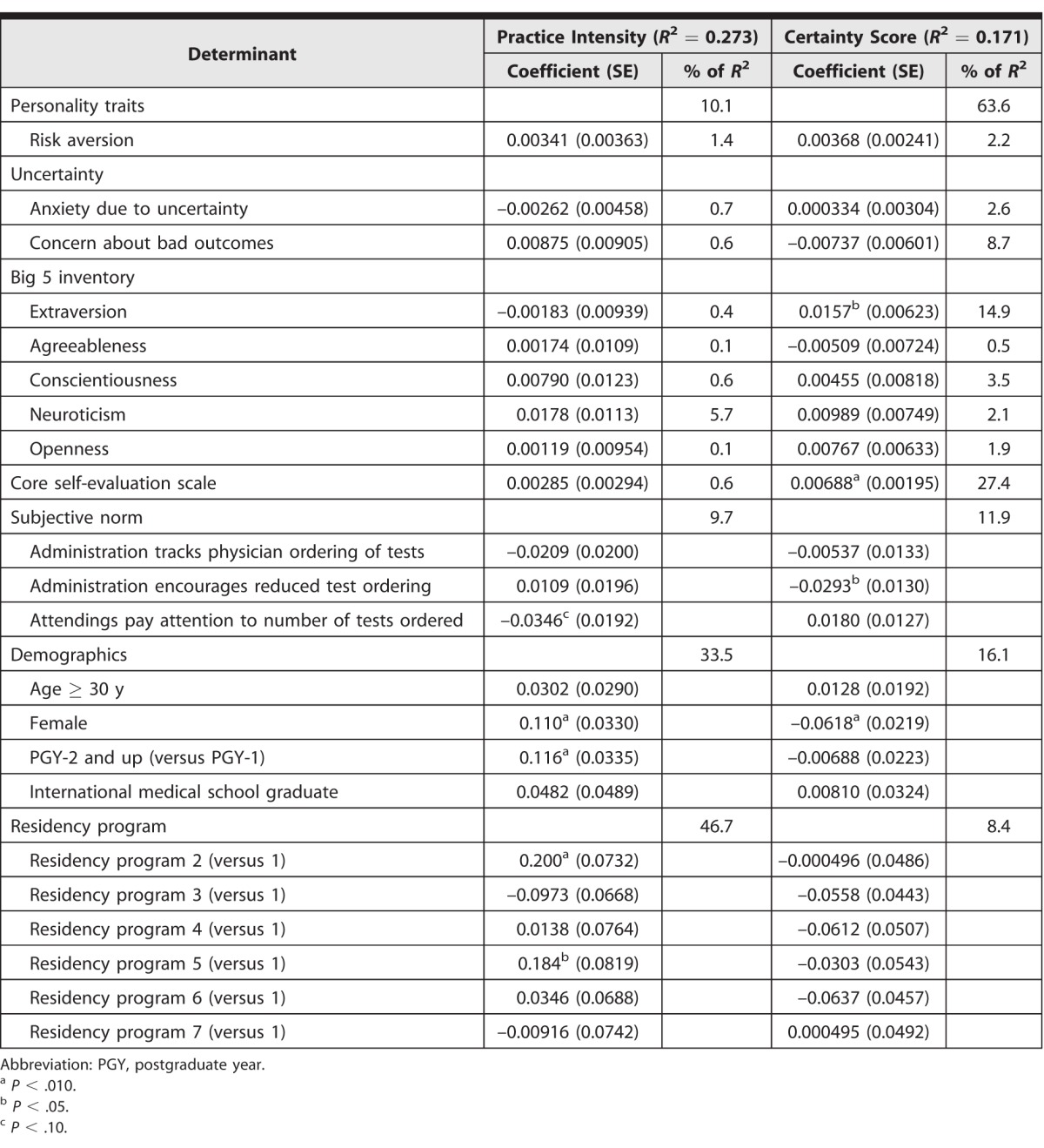

The 4 blocks of explanatory variables collectively explained 27.3% of the variation in the PI. Table 3 reports the association of each of the personality elements with PI along with its decomposed contribution to the total predictable variation. Of the explained variation, personality traits overall contributed 10.1%, with that effect dominated by the neuroticism scale (5.7%), where respondents with a higher score for neuroticism had significantly more aggressive practice patterns after controlling for the other factors. Subjective norms of the institutional culture contributed 9.7% of the explained variation. Demographic characteristics contributed 33.5%. Women and international medical graduates had significantly more aggressive practice styles, and residents were less aggressive than interns. Residency programs accounted for the remaining 46.7% of the explained variation.

The explanatory variables collectively explained 17.1% of variation in the CS. Of the explained variation, personality traits contributed 63.6% (Table 3), led by core self-evaluation (27.4%). Subjective norms of the institutional culture contributed 11.9% of the explained variation, while demographics contributed 16.1%. Women were, on average, less certain about their answers. The CS scores did not significantly differ between interns and residents. Residency programs accounted for the remaining 8.4% of the explained variation.
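Because the block shares above are expressed as percentages of the explained variation rather than of the total variation, a short arithmetic sketch may help relate the two scales. The figures are those reported in the text; the conversion (share multiplied by the model R²) and the function name are an illustrative reading, not an analysis performed by the authors.

```python
# Reported model R^2 and block shares of the explained variation.
PI_R2 = 0.273
PI_SHARES = {
    "personality traits": 0.101,
    "subjective norms": 0.097,
    "demographics": 0.335,
    "residency program": 0.467,
}
CS_R2 = 0.171
CS_SHARES = {
    "personality traits": 0.636,
    "subjective norms": 0.119,
    "demographics": 0.161,
    "residency program": 0.084,
}


def share_of_total(r2, shares):
    """Convert shares of explained variation into shares of total variation."""
    return {block: r2 * share for block, share in shares.items()}


pi_total = share_of_total(PI_R2, PI_SHARES)
cs_total = share_of_total(CS_R2, CS_SHARES)

for label, table in (("PI", pi_total), ("CS", cs_total)):
    for block, value in table.items():
        print(f"{label} {block}: {100 * value:.1f}% of total variation")
```

On this reading, residency program corresponds to roughly 12.7% of the total variation in PI, and personality traits to roughly 10.9% of the total variation in CS, with most of the variation in both outcomes left unexplained by the model.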

TABLE 3.

Percentage of Total R² Attributable to Determinants of Behavioral Intentions

Discussion

First, we were able to create a measure of practice intensity with substantial discrimination across individuals. While further work is required to test the association of this measure (derived from responses to hypothetical vignettes) with actual practice intensity, the measure has some evidence in support of construct validity. We also developed a measure of physicians' confidence in practice choices that lends further insight into practice variation. Notably, the correlation between practice intensity and certainty was negligible, suggesting each captures a distinct aspect of clinical decision making.

Second, while measurable personality traits (particularly neuroticism) contribute to our understanding of variation in practice intensity, experience and sex contribute more, and the residency program contributes the most. The contribution of the residency program suggests that practice intensity is principally created by the socialization that occurs within training. Previous work reveals that the academic orientation of a physician's medical school predicts future resource utilization, with academic environments fostering more intensive use of diagnostic services,28 while other work suggests that physicians who trained at academic medical schools and/or residency programs, in general, order fewer tests except when confronted with uncertainty.29 Similarly, the spending pattern of the geographic region where a residency program is located has been associated with the spending pattern of its graduates, regardless of their later practice site.5 The residency programs in this study, however, were all located within the same metropolitan area, further underscoring that within-region variation is substantial and important.30 Future investigation should focus on identifying specific differences between residency programs that drive this variation in an effort to influence physician practice patterns as they develop.

Third, while practice intensity is most associated with a training program, certainty is most associated with personality. To the extent that personality characteristics are less mutable, strategies to reduce practice intensity might need to bypass personality characteristics. Further exploration is needed to understand how to modulate individuals' practice intensity at the systems level.

This study has limitations. First, physician practice style was measured using hypothetical clinical situations. However, vignettes have been used successfully in other settings to assess physicians' quality of care,31,32 and they help isolate individual clinician impact from what, in the real world, are often team decisions. Second, the portions of the survey that measure subjective norms did not undergo testing for validity. Third, our model explained only 27.3% of the total variation in the mean physician-level practice intensity score, suggesting the presence of other factors that drive practice. Fourth, our sample includes only 7 residency programs in 1 metropolitan area, limiting generalizability. Fifth, the response rate of 47%, while high for a survey of resident physicians, leaves open the possibility that nonresponders differed systematically from responders.

Conclusion

Internal medicine trainees' residency program affiliations explained more of the variation in their practice patterns than demographic characteristics, personality traits, or subjective norms. The combination of demographics and the residency program identity explained a large proportion of observed variation, suggesting residency programs play an important role in influencing physician practice patterns during their formation. Residency programs can leverage this influence to improve the value derived from physicians' practice patterns after training is complete.

Footnotes

C. Jessica Dine, MD, MSHP, is Assistant Professor of Medicine, Perelman School of Medicine, University of Pennsylvania, and Senior Fellow, Leonard Davis Institute of Health Economics, University of Pennsylvania; Lisa M. Bellini, MD, is Professor of Medicine and Vice Dean for Faculty and Resident Affairs, Perelman School of Medicine, University of Pennsylvania; Gretchen Diemer, MD, is Assistant Professor of Medicine and Associate Dean of Graduate Medical Education and Affiliations, Sidney Kimmel Medical College, Thomas Jefferson University; Allison Ferris, MD, is Assistant Professor of Medicine, College of Medicine, Drexel University; Ashish Rana, MD, is Professor of Medicine, Crozer-Keystone Health System; Gina Simoncini, MD, is Assistant Professor of Medicine, Temple University School of Medicine; William Surkis, MD, is Assistant Professor of Medicine, Lankenau Medical Center; Charles Rothschild, MD, is a Fellow in Pediatric Critical Care Medicine, Ann and Robert H. Lurie Children's Hospital of Chicago; David A. Asch, MD, is Professor of Medicine, Perelman School of Medicine, University of Pennsylvania, Senior Fellow, Leonard Davis Institute of Health Economics, University of Pennsylvania, and Executive Director, Penn Medicine Center for Health Innovation; Judy A. Shea, PhD, is Professor of Medicine and Associate Dean of Medical Education Research, Perelman School of Medicine, University of Pennsylvania; and Andrew J. Epstein, PhD, is Research Associate Professor of Medicine, Perelman School of Medicine, University of Pennsylvania, and Senior Fellow, Leonard Davis Institute of Health Economics, University of Pennsylvania.

Funding: The authors report no external funding source for this study.

Conflict of interest: The authors declare they have no competing interests.

References

- 1. Institute of Medicine (US) Roundtable on Evidence-Based Medicine; Yong PL, Saunders RS, Olsen LA, eds. The Healthcare Imperative: Lowering Costs and Improving Outcomes. Washington, DC: National Academies Press; 2010.

- 2. Committee on Geographic Variation in Health Care Spending and Promotion of High-Value Care; Board on Health Care Services; Institute of Medicine; Newhouse JP, Garber AM, Graham RP, et al, eds. Variation in Health Care Spending: Target Decision Making, Not Geography. Washington, DC: The National Academies Press; 2013.

- 3. Asch DA, Nicholson S, Srinivas S, Herrin J, Epstein AJ. Evaluating obstetrical residency programs using patient outcomes. JAMA. 2009;302(12):1277–1283. doi: 10.1001/jama.2009.1356.

- 4. Epstein AJ, Busch SH, Busch AB, Asch DA, Barry CL. Does exposure to conflict of interest policies in psychiatry residency affect antidepressant prescribing? Med Care. 2013;51(2):199–203. doi: 10.1097/MLR.0b013e318277eb19.

- 5. Chen C, Petterson S, Phillips R, Bazemore A, Mullan F. Spending patterns in region of residency training and subsequent expenditures for care provided by practicing physicians for Medicare beneficiaries. JAMA. 2014;312(22):2385–2393. doi: 10.1001/jama.2014.15973.

- 6. Sirovich BE, Lipner RS, Johnston M, Holmboe ES. The association between residency training and internists' ability to practice conservatively. JAMA Intern Med. 2014;174(10):1640–1648. doi: 10.1001/jamainternmed.2014.3337.

- 7. Cooke M. Cost consciousness in patient care—what is medical education's responsibility? N Engl J Med. 2010;362(14):1253–1255. doi: 10.1056/NEJMp0911502.

- 8. Weinberger SE. Providing high-value, cost-conscious care: a critical seventh general competency for physicians. Ann Intern Med. 2011;155(6):386–388. doi: 10.7326/0003-4819-155-6-201109200-00007.

- 9. Center for Studying Health System Change. 2008 Health Tracking Physician survey public use file: user's guide. http://www.hschange.org/CONTENT/1136/1136.pdf. Accessed June 22, 2015.

- 10. Choosing Wisely: an initiative of the ABIM Foundation. http://www.choosingwisely.org. Accessed June 19, 2015.

- 11. Qaseem A, Alguire P, Dallas P, Feinberg LE, Fitzgerald FT, Horwitch C, et al. Appropriate use of screening and diagnostic tests to foster high-value, cost-conscious care. Ann Intern Med. 2012;156(2):147–149. doi: 10.7326/0003-4819-156-2-201201170-00011.

- 12. Simpson EH. Measurement of diversity. Nature. 1949;163(4148):688.

- 13. Fiscella K, Franks P, Zwanziger J, Mooney C, Sorbero M, Williams GC. Risk aversion and costs: a comparison of family physicians and general internists. J Fam Pract. 2000;49(1):12–17.

- 14. Forrest CB, Nutting PA, von Schrader S, Rohde C, Starfield B. Primary care physician specialty referral decision making: patient, physician, and health care system determinants. Med Decis Making. 2006;26(1):76–85. doi: 10.1177/0272989X05284110.

- 15. Gerrity MS, White KP, DeVellis RF, Dittus RS. Physicians' reactions to uncertainty: refining the constructs and scales. Motiv Emot. 1995;19(3):175–191.

- 16. Gerrity MS, DeVellis RF, Earp JA. Physicians' reactions to uncertainty in patient care: a new measure and new insights. Med Care. 1990;28(8):724–736. doi: 10.1097/00005650-199008000-00005.

- 17. Judge TA, Erez A, Bono J, Thoresen CJ. The core self-evaluation scale: development of a measure. Person Psychol. 2003;56(2):303–331.

- 18. McKibbon KA, Fridsma DB, Crowley RS. How primary care physicians' attitudes toward risk and uncertainty affect their use of electronic information resources. J Med Libr Assoc. 2007;95(2):138–146, e49–e50. doi: 10.3163/1536-5050.95.2.138.

- 19. Pearson SD, Goldman L, Orav EJ, Guadagnoli E, Garcia TB, Johnson PA, et al. Triage decisions for emergency department patients with chest pain: do physicians' risk attitudes make the difference? J Gen Intern Med. 1995;10(10):557–564. doi: 10.1007/BF02640365.

- 20. Perkins MB, Jensen PS, Jaccard J, Gollwitzer P, Oettingen G, Pappadopulos E, et al. Applying theory-driven approaches to understanding and modifying clinicians' behavior: what do we know? Psychiatr Serv. 2007;58(3):342–348. doi: 10.1176/ps.2007.58.3.342.

- 21. Rammstedt B, John OP. Measuring personality in one minute or less: a 10-item short version of the Big Five Inventory in English and German. J Res Pers. 2007;41(1):203–212.

- 22. Allison JJ, Kiefe CI, Cook EF, Gerrity MS, Orav EJ, Centor R. The association of physician attitudes about uncertainty and risk taking with resource use in a Medicare HMO. Med Decis Making. 1998;18(3):320–329. doi: 10.1177/0272989X9801800310.

- 23. Linbert C, Lamb R. Doctors' use of clinical guidelines: two applications of the Theory of Planned Behaviour. Psychol Health Med. 2002;7(3):301–310.

- 24. Jenner EA, Watson PWB, Millere L, Jones F, Scott GM. Explaining hand hygiene practice: an extended application of the Theory of Planned Behaviour. Psychol Health Med. 2002;7(3):311–326.

- 25. Huettner F, Sunder M. Axiomatic arguments for decomposing goodness of fit according to Shapley and Owen values. Electron J Statist. 2012;6:1239–1250.

- 26. Kruskal W. Relative importance by averaging over orderings. Am Statistician. 1987;41(1):6–10.

- 27. Grömping U. Estimators of relative importance in linear regression based on variance decomposition. Am Statistician. 2007;61(2):139–147.

- 28. Epstein AM, Begg CB, McNeil BJ. The effects of physicians' training and personality on test ordering for ambulatory patients. Am J Public Health. 1984;74(11):1271–1273. doi: 10.2105/ajph.74.11.1271.

- 29. Pineault R. The effect of medical training factors on physician utilization behavior. Med Care. 1977;15(1):51–67. doi: 10.1097/00005650-197701000-00004.

- 30. Epstein AJ, Nicholson S. The formation and evolution of physician treatment styles: an application to cesarean sections. J Health Econ. 2009;28(6):1126–1140. doi: 10.1016/j.jhealeco.2009.08.003.

- 31. Peabody JW, Luck J, Glassman P, Dresselhaus TR, Lee M. Comparison of vignettes, standardized patients, and chart abstraction: a prospective validation study of 3 methods for measuring quality. JAMA. 2000;283(13):1715–1722. doi: 10.1001/jama.283.13.1715.

- 32. Peabody JW, Luck J, Glassman P, Jain S, Hansen J, Spell M, et al. Measuring the quality of physician practice by using clinical vignettes: a prospective validation study. Ann Intern Med. 2004;141(10):771–780. doi: 10.7326/0003-4819-141-10-200411160-00008.