Abstract

Coding of sound location in auditory cortex (AC) is only partially understood. Recent electrophysiological research suggests that neurons in mammalian auditory cortex are characterized by broad spatial tuning and a preference for the contralateral hemifield, that is, a nonuniform sampling of sound azimuth. Additionally, spatial selectivity decreases with increasing sound intensity. To accommodate these findings, it has been proposed that sound location is encoded by the integrated activity of neuronal populations with opposite hemifield tuning (“opponent channel model”). In this study, we investigated the validity of such a model in human AC with functional magnetic resonance imaging (fMRI) and a phase-encoding paradigm employing binaural stimuli recorded individually for each participant. In all subjects, we observed preferential fMRI responses to contralateral azimuth positions. Additionally, in most AC locations, spatial tuning was broad and not level invariant. We derived an opponent channel model of the fMRI responses by subtracting the activity of contralaterally tuned regions in bilateral planum temporale. This resulted in accurate decoding of sound azimuth location, which was unaffected by changes in sound level. Our data thus support opponent channel coding as a neural mechanism for representing acoustic azimuth in human AC.

Keywords: auditory, fMRI, opponent coding, planum temporale, sound localization

Introduction

Humans can localize a sound source accurately within the range of a few degrees (Grothe et al. 2010; Brungart et al. 2014) even though auditory space is not mapped directly on the surface of the cochlea. Sound location is instead computed from spectral information and binaural disparities such as interaural time and interaural level differences (ITDs and ILDs, e.g., King and Middlebrooks 2011). ITDs and ILDs are considered to be most informative for sound localization in the horizontal plane. In mammals, processing of these cues starts already in the superior olivary complex in the brain stem (Yin 2002). Yet lesion and reversible inactivation studies demonstrate that the final stages of the ascending auditory pathway, that is, the auditory cortex, also play a role in spatial hearing. Specifically, reversible or permanent lesions lead to strong sound localization deficits in cats (e.g., Jenkins and Merzenich 1984; Malhotra et al. 2004), ferrets (King et al. 2007), non-human primates (Heffner and Heffner 1990), and humans (e.g., Zatorre and Penhune 2001; Duffour-Nikolov et al. 2012).

How acoustic space is represented in the cortex remains unclear. A “place code” model (Jeffress 1948) proposes that auditory azimuth is systematically represented in an “azimuth map” similar to the representation of visual space in occipital cortex. Although the existence of such a map has been demonstrated in subcortical nuclei in the avian brain (e.g., Knudsen and Konishi 1978; Carr and Konishi 1988), in the mammalian subcortical structures, an auditory space map has only been discovered in the superior colliculus (King and Palmer 1983; Middlebrooks and Knudsen 1984). Here, topographic representations of multiple sensory modalities (vision, somatosensation, and audition) are integrated to control the orientation of movements towards novel stimuli (King et al. 2001). No organized representation of auditory space, ITDs or ILDs, has been discovered at any other stage of the mammalian subcortical auditory pathway, including the inferior colliculus (e.g., Leiman and Hafter 1972; Middlebrooks et al. 2002).

At a cortical level, the caudolateral (CL) region of primate auditory cortex shows more spatial selectivity than primary auditory areas (Recanzone et al. 2000; Tian et al. 2001). In this region, local neuronal populations encode the contralateral auditory azimuth accurately (but with largest errors for midline locations, Miller and Recanzone 2009). It has therefore been proposed that area CL is part of an auditory “where” stream (Rauschecker and Tian 2000). Human neuroimaging studies similarly suggest that posterior auditory areas, specifically in the planum temporale (PT), are involved in auditory spatial processing (Warren and Griffiths 2003; Brunetti et al. 2005; Deouell et al. 2007; Van der Zwaag et al. 2011). However, to the best of our knowledge, there is no report of a topographical map of sound azimuth in human or primate auditory cortex. Furthermore, recent electrophysiological findings suggest that most mammalian cortical auditory neurons have broad spatial receptive fields modulated by sound intensity (e.g., Stecker et al. 2005; King et al. 2007). Several authors have therefore argued that the acoustic azimuth may be represented through opponent population coding in the mammalian auditory cortex, that is, through the combined activity of broadly tuned neuronal populations with an overall preference for opposite acoustic hemifields (e.g., McAlpine 2005; Stecker et al. 2005; Day and Delgutte 2013). Opponent coding ensures that location coding remains constant despite intensity changes, as location is not encoded by the peak response but by the region of steepest change in the response azimuth function (RAF; Stecker et al. 2005). Moreover, opponent population coding accounts for the heightened spatial acuity along the frontal midline observed in human psychoacoustic research (Makous and Middlebrooks 1990; Phillips 2008).

The present study examines the validity of a bilateral, opponent channel model in human auditory cortex with functional magnetic resonance imaging (fMRI) and a phase-encoding stimulation paradigm with frequency modulated (FM) sounds at different intensity levels. RAFs computed from our data suggest that neuronal populations in human auditory cortex exhibit broad spatial tuning and sample acoustic space inhomogeneously. Additionally, location preference and spatial selectivity appear to be affected by intensity modulations. Interestingly, most of the steepest regions in the RAFs are close to the interaural midline. By implementing the data in a two-channel model, we were able to decode azimuth position accurately and irrespective of changes in sound intensity. Our data thus suggest that an opponent channel-coding model is the most probable neural mechanism by which acoustic azimuth is represented in human auditory cortex.

Materials and Methods

Participants

Ten subjects participated in the imaging experiment. Data of 2 participants had to be discarded due to displacement of the ear phones during the scanning session. Data of the remaining 8 participants (median age = 27 years, 5 males) are included in the analysis. Participants reported no history of hearing loss. The Ethical Committee of the Faculty of Psychology and Neuroscience at Maastricht University granted approval for the study.

Stimuli

To map the representation of the acoustic azimuth in human auditory cortex, we used a phase-encoding stimulation paradigm similar to the phase-encoding paradigms used to map the topographic organization of visual space in visual cortex (e.g., Engel et al. 1997) and the cochleotopic organization of auditory cortex (Striem-Amit et al. 2011). We constructed auditory stimuli moving smoothly in the horizontal plane at zero elevation relative to the listener, making a full circle of 360° around the head of the participant in 20 s (rotation speed = 18°/s). The stimuli used in the experiment consisted of recordings of logarithmic FM sweeps, with frequency decreasing exponentially at a speed of 2.5 octaves/s. The FM sweeps were 0.450 s long and spanned one of 2 frequency ranges: 250–700 Hz (Fig. 1C) or 500–1400 Hz (Fig. 1A); FM sweeps were repeated at a rate of 2 Hz.

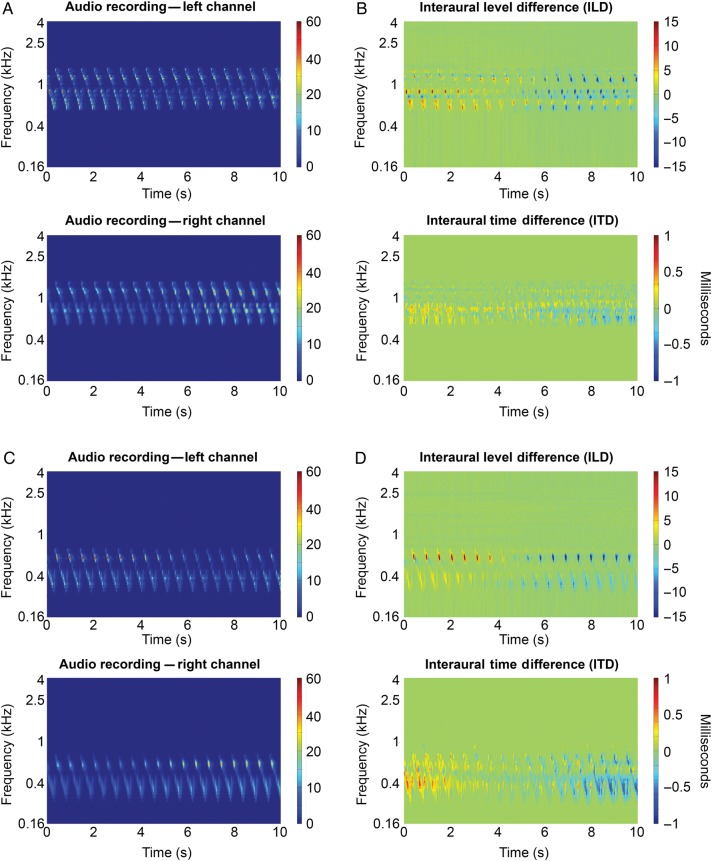

Figure 1.

Stimulus properties. (A) Spectrograms displaying the frequency-time representation of the audio recordings made by the binaural microphones in the left (top) and right (bottom) ear of a representative participant. Plotted are the first 10 s of the movement phase of the sound, that is, half a circle (from −90° to 90°), of a stimulus in the 500–1400 Hz frequency range. Colors indicate power. (B) Interaural level (top) and interaural time (bottom) spectrograms for the audio fragment shown in (A). ILD was computed as the difference in power between the left and right ear. Colors indicate differences in power between the left ear and the right ear. To calculate the ITD, we first computed the interaural phase differences (IPDs) from the frequency-time spectrogram. We then converted the phase differences to time differences. Colors indicate timing differences between the left and right ear. (C) Same as (A) but for a stimulus in the 250–700 Hz frequency range. (D) Same as (B) but for the audio fragment shown in (C).

Before and after the circular movement around the head, the sound was presented at one position (left or right) for 10 s. These stationary periods at the beginning and end of each stimulus presentation were added to account for attention effects at the sudden onset of the sound as well as for neural/hemodynamic on- and offset effects during fMRI. Consequently, the full duration of each stimulus was 40 s: a stationary period of 10 s followed by a full circle around the head in 20 s and another stationary period of 10 s. Sound onset and offset were ramped with a 50-ms linear slope. Starting position of the sound was either left or right, and rotation direction was either clockwise or counterclockwise.
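The stimulus timeline described above can be summarized in a short sketch. This is only an illustration under stated assumptions: the function name `stimulus_azimuth` is hypothetical, azimuth is expressed with 0° at the frontal midline, −90° at the left ear and +90° at the right ear, and "clockwise" is taken to mean increasing azimuth when viewed from above.

```python
def stimulus_azimuth(t, start_deg=-90.0, clockwise=True):
    """Nominal azimuth in degrees, wrapped to [-180, 180), at time t (s)."""
    static_s = 10.0             # stationary period before and after the rotation
    rotation_s = 20.0           # one full circle around the head
    speed = 360.0 / rotation_s  # 18 degrees per second
    if not 0.0 <= t <= 2 * static_s + rotation_s:
        raise ValueError("t must lie within the 40-s stimulus")
    # Degrees travelled so far: zero while static, capped at a full circle.
    travelled = min(max(t - static_s, 0.0), rotation_s) * speed
    azimuth = start_deg + (travelled if clockwise else -travelled)
    return (azimuth + 180.0) % 360.0 - 180.0
```

Under these assumptions, a sound starting on the left and rotating clockwise crosses the frontal midline 5 s into the movement phase and reaches the right ear after 10 s, which corresponds to the half circle from −90° to 90° plotted in Figure 1.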

To maximize the availability of spatial cues in the stimuli, we made individual stimulus recordings for each participant with binaural microphones (OKM II Classic Microphone; sampling rate = 44.1 kHz) placed in the ear canals of each participant. Recordings were made in a standard room (internal volume = 95 m³). The walls and ceiling consisted of gypsum board; the floor consisted of wood covered with a thin carpet. Participants were positioned on a chair in the middle of the room. A 3D sound system consisting of 22 loudspeakers was arranged in a sphere around the participant in the far field (12 speakers in the horizontal plane at the elevation of the interaural axis and at a distance of 2.4 m from the participant, 5 speakers at vertical azimuth of <0°, and 5 speakers at vertical azimuth of >0°). The virtual reality software Vizard (Worldviz) was used to accurately position sounds in the acoustic 3D environment. Sounds were presented at 75 dB SPL. To minimize the effects of head movement during the recording sessions, participants were seated on a chair and fixated a cross positioned on the wall (eye level) at a distance of 2.75 m.

The recording procedure resulted in realistic, well localizable stimuli. Figure 1 shows the spectrograms of the audio recordings as well as the ILD and ITD spectrograms for a representative recording. The ILD and ITD spectrograms of the stimulus with frequency range 500 Hz–1400 Hz (Fig 1B) show that this stimulus mostly contains ILD cues for azimuth position; the ITD scale is limited. Recordings in the lower frequency range (250 Hz–700 Hz), in contrast, appear to contain both ILD and ITD (Fig 1D). According to the duplex theory of sound localization, ILDs should be negligible at low frequencies (Rayleigh 1907; Grothe et al. 2010). Note that this is true only in the absence of reverberation. The presence of ILD in our recordings also for the stimulus in the lowest frequency range is probably caused by the room we used to make the recordings. Considerable ILDs are in fact observed when low-frequency sounds are presented in the far field in rooms with sound reflecting surfaces (e.g., Rakerd and Hartmann 1985).

During the fMRI session, the audio recordings were delivered binaurally through MR-compatible earphones (Sensimetrics Corporation, www.sens.com, last accessed October 27, 2015). Sounds were played at 3 different intensity levels, leading to a total of 24 conditions (i.e., 2 frequency ranges, 2 starting points, 2 rotation directions, 3 intensity levels). The lowest intensity was subjectively scaled to be comfortable and clearly audible above the scanner noise. Intensity increased in 10 dB steps to the next intensity level. Consequently, the medium intensity condition was +10 dB compared with the low-intensity condition and the loud intensity condition +20 dB compared with the low-intensity condition. Due to the long duration of our stimuli, silent imaging techniques were not possible for data collection. Sound intensity was also adjusted per participant to equalize the perceived loudness of the lower and higher frequency sounds.

Data Acquisition

Blood-oxygen-level-dependent (BOLD) signals were measured with a Siemens whole body MRI scanner at the Scannexus MRI scanning facilities (Maastricht, www.scannexus.nl, last accessed October 27, 2015). Due to an MRI scanner upgrade, data acquisition of 3 participants was performed with a Siemens Trio 3.0T whereas data of the other 5 participants were acquired with a Siemens Prisma 3.0T. Data acquisition parameters were similar for both MRI scanners. Functional MRI data were recorded with a standard T2*-weighted echo planar imaging sequence covering the temporal cortex as well as parts of the parietal, occipital, and frontal cortex (echo time [TE] = 30 ms; repetition time [TR] = 2000 ms; flip angle [FA] Trio = 77°; FA Prisma = 90°; matrix size = 100 × 100; voxel size = 2 × 2 × 2 mm³; number of slices = 32). An anatomical image of the whole brain was obtained with a T1-weighted MPRAGE sequence. Acquisition parameters for anatomical scans on the Trio were as follows: TE = 2.98 ms, TR = 2300 ms, voxel size = 1 × 1 × 1 mm³, and matrix size = 192 × 256 × 256. Acquisition parameters for anatomical scans on the Prisma were as follows: TE = 2.17 ms, TR = 2250 ms, voxel size = 1 × 1 × 1 mm³, and matrix size = 192 × 256 × 256. Each condition was presented 3 times, resulting in 72 trials in total. Functional data acquired with the Trio scanner (3 subjects, see above) were acquired in 3 runs of 24 trials in which each condition was presented once. Functional data acquired with the Prisma scanner were acquired in 6 runs of 12 trials each in which each run presented sounds of one intensity only, that is, soft, medium, or loud. The order of runs was randomized and counterbalanced across participants. Starting position and rotation direction of the sounds were counterbalanced and randomized both within and across runs. Participants were instructed to listen attentively to the location of the sounds and to fixate on a fixation cross to prevent stimulus-locked eye movements.

fMRI Analysis

Data were analyzed with BrainVoyager QX (Brain Innovation) and customized Matlab code (The MathWorks).

Preprocessing

Preprocessing of functional data consisted of correction for head motion (trilinear/sinc interpolation; the first volume of the first run served as reference volume for alignment), interscan slice-time correction (sinc interpolation), linear drift removal, and temporal high-pass filtering (threshold at 7 cycles per run). Functional data were coregistered to the T1-weighted images of each individual and sinc-interpolated to 3D Talairach space (2 mm³ resolution; Talairach and Tournoux 1988). T1 scans were segmented to define the border between gray and white matter with the automatic segmentation procedure of BrainVoyager QX and complemented with manual improvements where necessary. To ensure optimum co-registration of the auditory cortex across participants, we performed cortex-based alignment of the single-participant cortical surface reconstruction (CBA; Goebel et al. 2006) constrained by an anatomical mask of Heschl's gyrus (HG) (Kim et al. 2000). This procedure is similar to the functional cortex-based alignment procedure (Frost and Goebel 2013) and uses an anatomical definition of a region of interest to optimize the local realignment of this region rather than a global realignment of the entire cortex. Finally, functional data were projected from volume space to surface space by creating mesh time courses. A single value was obtained for each vertex of a cortex mesh by sampling (trilinear interpolation) and averaging the values between the gray/white matter boundary and up to 4 mm into the gray matter toward the pial surface. As all our analyses were performed on the functional data after this resampling on the cortical sheet, we refer to a cortical location as “vertex”.

Individual Estimation of Time-to-Peak of Hemodynamic Response Function

To ensure that interindividual differences in the shape of the hemodynamic response function (HRF; see for instance Handwerker et al. 2004) do not influence our results, we started the analysis by empirically deriving the optimal time-to-peak (TTP) of the HRF for each participant. To this end, we estimated—per functional run—different versions of a General Linear Model (GLM). In each GLM version, the boxcar predictors modeling sound on/sound off were convolved with a double gamma HRF function (Friston et al. 1995) with a different TTP. We tested TTPs of 4–8 s in steps of 2 s (in agreement with the TR), resulting in 3 different GLM estimates per run. For every GLM estimate, we calculated the number of significantly active voxels (auditory > baseline, P < 0.05, Bonferroni corrected) and the average t value across these significantly activated voxels. The optimal TTP value for each participant was selected based on the GLM estimate that maximized both statistics across runs.

Response Azimuth Functions

The first analysis concentrates on the spatial tuning properties of human auditory cortex. To characterize spatial tuning of each vertex, we analyzed the hemodynamic responses to azimuth with a finite impulse response (FIR) deconvolution (Dale 1999). This method provides a beta weight per TR (i.e., every 2 s) and thus—given our trial duration of 40 s—we obtained 20 beta estimates per vertex. To accommodate for the BOLD on- and offset responses, we selected only the central 10 time points associated with sound movement within each trial (i.e., we discarded the 10-s periods at the beginning and end of the trial during which a static sound was presented). Using the participant-specific TTP, the 10 remaining beta weights were converted to the corresponding azimuth positions. This resulted in a RAF consisting of 10 linearly spaced response estimations per trial with an azimuthal distance of 36° between 2 consecutive bins. As our participants frequently reported front/back reversals, which is common in human sound localization (e.g., Oldfield and Parker 1984; Musicant and Butler 1985), we pooled trials across rotation direction for all analyses.

To determine the preferred azimuth position of a vertex, that is, the directionality, we computed for each vertex the vector sum of the azimuths eliciting a peak response in the RAF (Fig. 2A). This procedure is adapted from a study investigating spike rate patterns in response to sound source location (Middlebrooks et al. 1998) and has been implemented in the context of rate azimuth functions as well (e.g., Stecker et al. 2005). In agreement with Middlebrooks et al. (1998), we defined a peak response as 75% or more of the maximum response of that vertex. For each group of one or more adjacent peak response locations, we then computed the vector sum. The direction of a vector was defined by the stimulus azimuth, the length by the beta value estimated with the FIR deconvolution as described earlier. Best azimuth centroid was defined by the direction of the resulting vector. In case a vertex exhibited multiple clusters of peak responses, the preferred azimuth was defined by the resulting vector with the biggest length.
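The centroid computation described above can be sketched as follows. This is a minimal illustration, not the analysis code used in the study: the function name `best_azimuth_centroid` is hypothetical, the RAF is treated as circular so that a peak cluster may wrap around the back of the head, and the maximum response is assumed to be positive.

```python
import math

def best_azimuth_centroid(raf, azimuths_deg, peak_fraction=0.75):
    """Preferred azimuth (degrees) from vector sums over peak clusters."""
    n = len(raf)
    threshold = peak_fraction * max(raf)  # assumes max(raf) > 0
    is_peak = [r >= threshold for r in raf]
    if all(is_peak):
        clusters = [list(range(n))]
    else:
        # Begin scanning just after a non-peak bin so that a cluster
        # spanning the wrap-around point is not split in two.
        start = is_peak.index(False)
        clusters, current = [], []
        for k in range(1, n + 1):
            i = (start + k) % n
            if is_peak[i]:
                current.append(i)
            elif current:
                clusters.append(current)
                current = []
    best_length, best_direction = -1.0, None
    for cluster in clusters:
        # Vector direction = stimulus azimuth, vector length = beta value.
        x = sum(raf[i] * math.cos(math.radians(azimuths_deg[i])) for i in cluster)
        y = sum(raf[i] * math.sin(math.radians(azimuths_deg[i])) for i in cluster)
        length = math.hypot(x, y)
        if length > best_length:
            best_length = length
            best_direction = math.degrees(math.atan2(y, x))
    return best_direction
```

With 10 bins spaced 36° apart, two equally strong adjacent peaks at 54° and 90° yield a centroid of 72°, halfway between the two sampled positions.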

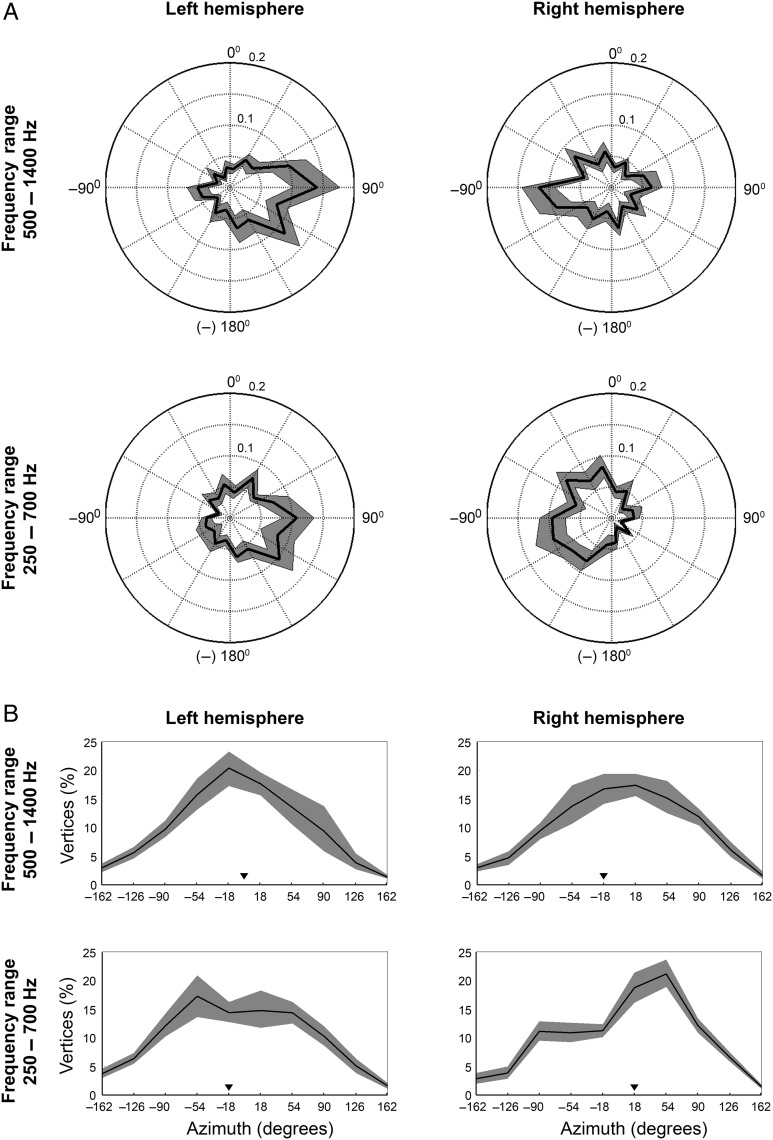

Figure 2.

A preference for contralateral azimuth positions and steepest slopes located mostly near frontal midline. (A) Plotted are the average distributions of azimuth preference per hemisphere and frequency condition across RAFs of all participants. The angular dimension signals the azimuth position of the stimulus; the radial dimension signals the proportion of vertices exhibiting a directional preference for each azimuth position tested (black line). The shaded gray area indicates the 95% confidence interval (estimated with bootstrapping, 10 000 repetitions). (B) Plots show the average distribution of steepest slope location on the azimuth across the RAFs of all participants. We included either the steepest positive slope (for ipsilaterally tuned vertices) or negative slopes (for contralaterally tuned vertices). The black line indicates the average proportion of steepest slopes per azimuth position. Black triangles signal the median of the distribution. The shaded gray area again indicates the 95% confidence interval (estimated with bootstrapping, 10 000 repetitions).

We furthermore estimated from each RAF the azimuthal position of the steepest ascending and descending slope (Stecker et al. 2005). To this end, we mildly smoothed the RAFs with a moving average window [width = 3 azimuthal locations, weights (0.2, 0.6, 0.2)]. We subsequently calculated the spatial derivative of the smoothed RAFs and used the maxima and minima of the derivatives to define the 2 slopes.
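A minimal sketch of this slope estimate is given below. The function name `steepest_slopes` is hypothetical, and two details are assumptions rather than reported choices: the RAF is treated as circular at its edges, and the spatial derivative is approximated by a central difference so that each slope is assigned to a sampled azimuth.

```python
def steepest_slopes(raf, azimuths_deg):
    """Azimuths of the steepest ascending and descending RAF slopes."""
    n = len(raf)
    # Moving average over 3 azimuthal locations, weights (0.2, 0.6, 0.2).
    smooth = [0.2 * raf[i - 1] + 0.6 * raf[i] + 0.2 * raf[(i + 1) % n]
              for i in range(n)]
    # Central difference; the constant bin spacing does not affect the ranking.
    derivative = [smooth[(i + 1) % n] - smooth[i - 1] for i in range(n)]
    ascending = max(range(n), key=derivative.__getitem__)
    descending = min(range(n), key=derivative.__getitem__)
    return azimuths_deg[ascending], azimuths_deg[descending]
```

For a response confined to the bins at 54° and 90°, this sketch places the steepest ascending slope at 18° and the steepest descending slope at 126°.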

Finally, we quantified a vertex's tuning width with the equivalent rectangular receptive field (ERRF). The ERRF has been used previously to measure spatial sensitivity in cat auditory cortex (Lee and Middlebrooks 2011). Specifically, we transformed the area under the RAF into a rectangle with its height equivalent to the maximum response and an equivalent area. Although this method does not provide information on absolute tuning width, it does enable the comparison of spatial selectivity across conditions.
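The ERRF computation reduces to dividing the area under the RAF by its maximum. The sketch below assumes non-negative responses and a bin width of 36°; the function name `errf_width` is hypothetical, and any baseline handling is not specified in the text and is therefore left out.

```python
def errf_width(raf, bin_width_deg=36.0):
    """Width (degrees) of a rectangle with the RAF's peak height and area."""
    peak = max(raf)
    if peak <= 0:
        raise ValueError("ERRF is undefined without a positive peak response")
    area = sum(raf) * bin_width_deg  # rectangle-rule area under the RAF
    return area / peak
```

A vertex responding equally to all 10 positions gives 360°, whereas a vertex responding to a single position gives 36°, so larger values indicate broader tuning.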

Note that in these analyses of the RAFs, we only included auditory responsive vertices (GLM auditory > baseline, False Discovery Rate (FDR; Benjamini and Hochberg 1995), q < 0.05) that exhibited a relatively slowly varying angular response. The rationale for this criterion is that, given the current experimental design and the sluggish hemodynamic response, high-frequency oscillations likely reflect noise rather than neural responses to the stimuli. To check for such high-frequency oscillations, we therefore estimated the Fourier transform of each response. We excluded vertices having >20% of total power in the high-frequency bands (i.e., 4 or 5 cycles per angular response profile).
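The exclusion criterion can be illustrated with a plain discrete Fourier transform of a 10-point response profile. The names `high_frequency_fraction` and `keep_vertex` are hypothetical, and excluding the mean (zero-frequency) component from the total power is an assumption; the text does not state how total power was defined.

```python
import cmath
import math

def high_frequency_fraction(response, high_cycles=(4, 5)):
    """Share of non-DC power at the given cycles per response profile."""
    n = len(response)
    mean = sum(response) / n
    centered = [r - mean for r in response]  # assumption: DC power is ignored
    power = {}
    for k in range(1, n // 2 + 1):
        coef = sum(c * cmath.exp(-2j * math.pi * k * i / n)
                   for i, c in enumerate(centered))
        # Bins below Nyquist have a mirror-image twin, so count them twice.
        weight = 1.0 if 2 * k == n else 2.0
        power[k] = weight * abs(coef) ** 2
    total = sum(power.values())
    if total == 0.0:
        return 0.0
    return sum(power.get(k, 0.0) for k in high_cycles) / total

def keep_vertex(response, max_fraction=0.20):
    """True if the response varies slowly enough to enter the RAF analyses."""
    return high_frequency_fraction(response) <= max_fraction
```

A profile with one cycle across the 10 positions passes this check, whereas a profile alternating between neighboring positions has all of its power at 5 cycles and is rejected.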

Topographic Organization of Azimuth Preference

To map the topographic organization of azimuth preference, we plotted azimuth preference maps in which the preferred azimuth of each vertex was color coded and projected on the cortical surface. To simplify the visualization, we binned azimuth preference in bins of 20°. We additionally collapsed sound locations in the front and back because, as mentioned before, front/back localization is weak in humans (e.g., Oldfield and Parker 1984; Musicant and Butler 1985). This was done by pooling azimuthal preferences for sound positions at positive azimuths θ and π − θ and negative azimuths −θ and −π + θ from the frontal midline in one azimuth position. This resulted in 20 azimuth bins linearly spaced from −90° to 90°. We color-coded each vertex's preferred azimuth position in a green–blue–red color scale.
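The front/back pooling amounts to mirroring rear azimuths across the interaural axis. The sketch below works in degrees with 0° at the frontal midline; the function name `fold_front_back` is hypothetical, and the subsequent binning step is not included.

```python
def fold_front_back(azimuth_deg):
    """Map an azimuth onto the frontal range [-90, 90] degrees."""
    az = (azimuth_deg + 180.0) % 360.0 - 180.0  # wrap to [-180, 180)
    if az > 90.0:
        return 180.0 - az    # right rear quadrant mirrors to right front
    if az < -90.0:
        return -180.0 - az   # left rear quadrant mirrors to left front
    return az
```

For example, a preference at 120° (right, behind the interaural axis) is pooled with 60°, and a preference at −150° is pooled with −30°.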

Finally, to evaluate whether azimuth tuning is level invariant, we computed the consistency of azimuth preference across the 3 sound intensity conditions (soft, medium, and loud, see Stimuli). Azimuth tuning was considered to be consistent if azimuth centroids across all 3 sound levels were not spaced further than 45° apart from each other nor switched hemifield (e.g., from contralateral to ipsilateral). Vertices that met this consistency criterion were marked in the azimuth tuning maps.
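The consistency criterion can be expressed as a pairwise check over the centroids of the sound levels. This is a sketch under assumptions: the names are hypothetical, hemifield is determined from the sign of the azimuth (negative = left, positive = right), and a centroid lying exactly on the midline is treated as its own category.

```python
from itertools import combinations

def wrap(azimuth_deg):
    """Wrap an angle to [-180, 180) degrees."""
    return (azimuth_deg + 180.0) % 360.0 - 180.0

def hemifield(azimuth_deg):
    """-1 for left, +1 for right, 0 for a centroid exactly on the midline."""
    az = wrap(azimuth_deg)
    if az == 0.0 or az == -180.0:
        return 0
    return 1 if az > 0 else -1

def is_level_invariant(centroids_deg, max_spread_deg=45.0):
    """True if centroids stay within 45 degrees and within one hemifield."""
    same_side = len({hemifield(c) for c in centroids_deg}) == 1
    close = all(abs(wrap(a - b)) <= max_spread_deg
                for a, b in combinations(centroids_deg, 2))
    return same_side and close
```

Centroids at 60°, 80°, and 100° for the soft, medium, and loud conditions would count as consistent, whereas centroids at −10°, 10°, and 20° would not because the preference switches hemifield.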

Opponent Channel Coding Model

Finally, we evaluated the validity of an opponent coding model by testing whether azimuth can be decoded from differences in the average activity of spatially sensitive auditory regions in each hemisphere.

Spatially Sensitive Regions in Auditory Cortex

First we identified “spatially responsive regions” in each hemisphere using a regression analysis with “azimuth predictors”. Although the azimuth position of the stimuli was well known, we inferred the azimuth predictors from the audio recordings to directly relate the neural response to the perceived ILD and/or ITD. We computed azimuth predictors based on either ILD or ITD for the lowest frequency conditions, and based on ILD only for the relatively higher frequency conditions (see description of stimuli in Materials and Methods). The ILD azimuth predictor was calculated as the arithmetic difference in power (measured as root mean square; RMS) between the left and right ear in each audio recording, convolved with a standard double gamma hemodynamic response function with the participant-specific TTP parameter. To compute the ITD regressors, we calculated the interaural phase difference (IPD) per frequency and time point from the recordings and converted this to ITD (see also Fig. 1). We then constructed a weighted average of the ITD across frequencies based on the overall energy present in each frequency band. This weighted average is thought to reflect the ITD of the direct sound when sounds are presented in moderately reverberant rooms as was done here (Shinn-Cunningham et al. 2005, although note that only nearby sound sources were tested). Finally, we convolved the weighted average of the ITD with the hemodynamic response function.

Comparing the ILD and ITD regressors indicates a strong correlation between these predictors (r = 0.94; SD = 0.06). Thus, it is redundant to create a model with a combination of the 2 azimuth predictors. Accordingly, we used the ILD predictor and refer to it as the azimuth predictor in both frequency conditions. In addition to this azimuth predictor, we also constructed a predictor to explain the variance caused by the general auditory response to sound independent of sound position. This predictor is the binaural sum, which is the sum in power (again measured as RMS) in the left and right ear of the recording convolved with the participant-specific HRF.
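The two RMS-based predictors can be sketched before the HRF convolution step. The function names and the non-overlapping analysis window are assumptions made for illustration; the window length used for the RMS computation is not reported here, and the convolution with the participant-specific HRF is omitted.

```python
import math

def windowed_rms(samples, window):
    """RMS of consecutive, non-overlapping windows of `window` samples."""
    return [math.sqrt(sum(s * s for s in samples[i:i + window]) / window)
            for i in range(0, len(samples) - window + 1, window)]

def azimuth_predictors(left, right, window):
    """Return (ILD predictor, binaural sum) prior to HRF convolution."""
    rms_left = windowed_rms(left, window)
    rms_right = windowed_rms(right, window)
    ild = [l - r for l, r in zip(rms_left, rms_right)]            # left minus right
    binaural_sum = [l + r for l, r in zip(rms_left, rms_right)]   # location-independent drive
    return ild, binaural_sum
```

The ILD predictor changes sign as the sound crosses the midline, while the binaural sum tracks overall sound energy regardless of position, which is why the two can be entered together in one model.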

We then estimated a Random Effects General Linear Model (RFX GLM) with these predictors to identify the auditory vertices exhibiting spatial sensitivity, that is, regions that are modulated by azimuth. Importantly, we estimated the GLM on only 2 out of 3 sound intensity levels. The data set of the remaining intensity condition was used at a later stage to test whether the population coding model is level invariant. In total, we estimated 3 RFX GLMs, each including 2 sound levels: loud and medium, loud and soft and medium and soft. For each model estimation, we employed the contrast “azimuth predictor > baseline” to find the populations of vertices, whose responses were modulated by sound location.

Decoding Azimuth Position

Next we tested whether sound azimuth can be decoded from these spatially sensitive regions using an opponent channel coding model. We computed azimuth position estimates for each time point from the measured opponent population response as the difference in average hemodynamic response of the spatially responsive vertices in each hemisphere:

$$P = \bar{H}_{\mathrm{L}} - \bar{H}_{\mathrm{R}} \tag{1}$$

where $P$ is the measured population response, $\bar{H}_{\mathrm{L}}$ the average hemodynamic response of spatially sensitive vertices in the left hemisphere, and $\bar{H}_{\mathrm{R}}$ the same for the right hemisphere. The average hemodynamic response was mildly temporally smoothed (moving average window spanning 3 time points). We then computed the correlation between the reconstructed azimuthal trajectory (based on the azimuth estimates at all time points) and the actual azimuthal trajectory of the stimulus as an index of similarity. This procedure was repeated for the test data set of each of the 3 GLM estimates, that is, for the sound intensity condition that was left out of the original GLM.

Finally, we assessed whether sound azimuth is encoded more accurately by an opponent channel model or by a local, one-channel coding model. To this end, we also calculated indices of similarity between azimuthal trajectories estimated with local, unilateral population responses (i.e., $\bar{H}_{\mathrm{L}}$ or $\bar{H}_{\mathrm{R}}$) and the actual sound azimuthal trajectory.
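The decoding step can be illustrated with a toy example. The function names are hypothetical, the edge handling of the 3-point moving average (shorter windows at both ends) is an assumption, and the hemodynamic delay is ignored; the sketch only shows how a left-minus-right difference of two hemisphere averages is compared with a trajectory by correlation.

```python
import math

def moving_average3(x):
    """3-point moving average; the two edge points use a 2-point window."""
    out = []
    for i in range(len(x)):
        window = x[max(i - 1, 0):i + 2]
        out.append(sum(window) / len(window))
    return out

def pearson_r(a, b):
    """Pearson correlation between two equally long sequences."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    return cov / math.sqrt(var_a * var_b)

def opponent_estimate(left_hemi, right_hemi):
    """Difference of the smoothed left and right hemisphere averages."""
    return [l - r for l, r in zip(moving_average3(left_hemi),
                                  moving_average3(right_hemi))]
```

As a usage example, two simulated contralaterally tuned channels (left hemisphere preferring right-sided sounds and vice versa) can be compared against the lateral position of the sound:

```python
azimuths = list(range(-162, 163, 36))
lateral = [math.sin(math.radians(a)) for a in azimuths]
left_hemi = [1.0 + s for s in lateral]    # prefers the right hemifield
right_hemi = [1.0 - s for s in lateral]   # prefers the left hemifield
similarity = pearson_r(opponent_estimate(left_hemi, right_hemi), lateral)
```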

Results

General

Presentation of the sounds resulted in widespread activity in the auditory cortex, including large extents of HG and Heschl's sulcus (HS), and areas in planum polare, PT, and the superior temporal gyrus and sulcus (STG and STS; FDR, q < 0.05).

TTP Values in Auditory Cortex

The results of the GLM estimations testing the optimal time-to-peak (TTP) parameter for the hemodynamic response function indicated for 7 out of 8 participants an optimal TTP of 6 s (see Supplementary Table 1). The HRF of the remaining participant appears to be approximated most accurately with a TTP of 4 s. An optimal TTP of 6 s is in line with commonly observed delays in the HRF and although a TTP of 4 s is relatively short, this is also still within the normal range of values (Buxton et al. 2004).

Spatial Tuning Properties of Human Auditory Cortex

Response azimuth functions were constructed for each auditory responsive vertex (GLM, auditory > baseline, FDR, q < 0.05). As described earlier, vertices exhibiting high-frequency oscillations were discarded from this analysis (average proportion of vertices discarded = 11.8% [SD {standard deviation} = 3.4%]).

Figure 2A plots the average distribution of preferred azimuth across participants as computed from the RAFs. These results indicate that a preponderance of vertices has a directional preference for contralateral sound locations (54% on average in both frequency conditions). Some 30% (lowest frequency condition) to 23% (highest frequency condition) of the measured vertices were tuned to ipsilateral locations. The remaining vertices in our sample responded most strongly to azimuth locations close to the frontal midline (16% in the lowest frequency condition and 23% in the highest frequency condition). Although vertices tuned to either frontal midline or ipsilateral locations were consistently observed, their relatively smaller number suggests that auditory space is not sampled homogeneously by human auditory cortex.

It has been argued previously that the slopes of the RAF may contain more information on sound azimuth than the peak response itself (e.g., Harper and McAlpine 2004; McAlpine 2005; Stecker et al. 2005). We therefore also computed from the RAF the azimuth position of the greatest change in response, that is, the steepest slope. In Figure 2B, the proportion of steepest slopes is plotted per azimuth position. The shape of the distribution shows that in most conditions, steepest slopes of the RAF are located relatively close to the frontal midline. Specifically, the median of the distributions is located at either of the 2 measured azimuth positions straddling the frontal midline, that is, at +18° or −18° (see Fig. 2B).

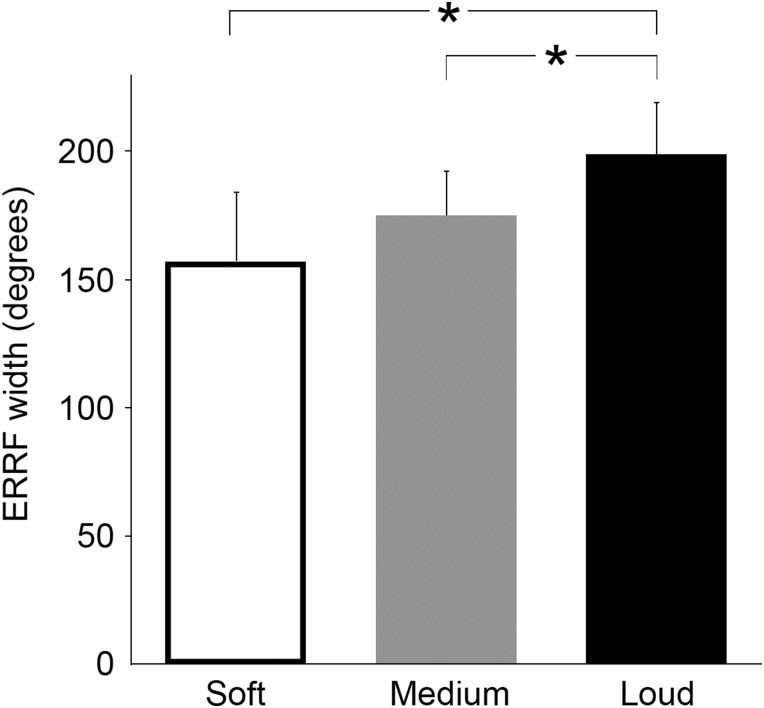

Finally, the average median ERRF width across participants indicates that spatial tuning broadens with increasing sound intensity (Fig. 3). That is, the median ERRF width averaged across participants increased from 157° [SD = 29°] in the soft intensity condition to 175° [SD = 20°] in the medium intensity condition, and 199° [SD = 22°] in the loud intensity condition (repeated-measures ANOVA, F1.53 = 13.973, P < 0.01). Post hoc pairwise comparisons revealed that average median ERRF width in the loud intensity condition was significantly higher compared with the soft condition (P < 0.05) and compared with the medium intensity condition (P < 0.05).

Figure 3.

Spatial tuning broadens with increasing sound intensity. The graph shows the average ERRF for each sound intensity condition. Specifically, we first computed the median of the ERRF distribution per sound intensity condition for each participant. We then computed the average across all participants (plotted here). An increase in ERRF signals less spatial selectivity. Asterisks denote a significant difference between conditions (P < 0.05).

Topographic Organization of Spatial Preference

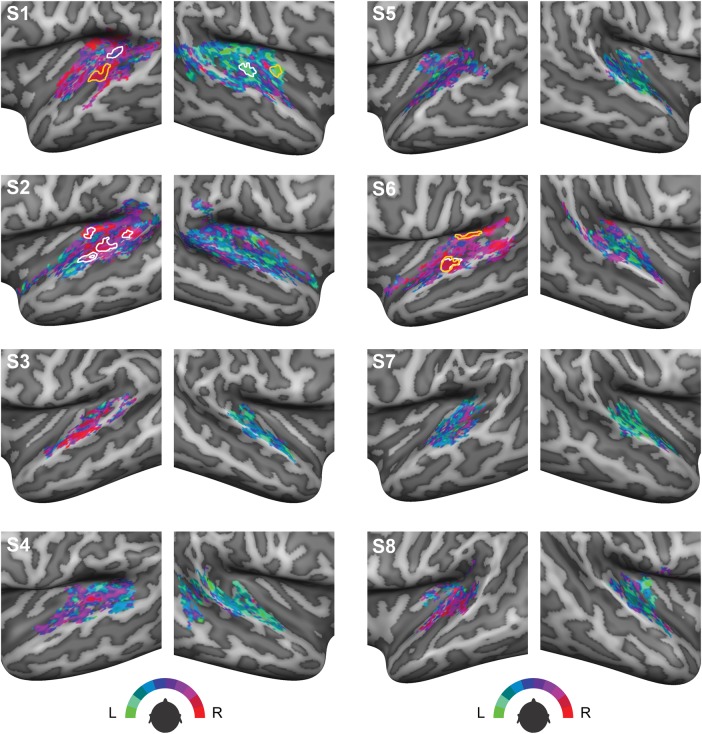

Figure 4 displays individual maps on the cortical surface of each participant in which azimuth preference measured during a representative condition is color coded. These azimuth preference maps do not show a clear point-to-point organization or a spatial gradient, thereby suggesting that human auditory cortex does not contain a clear spatiotopic representation of sound azimuth. Visual inspection of these maps also indicates the presence of large interindividual differences between maps. Moreover, azimuth preference in large parts of the auditory cortex is different for different sound intensities. In Figure 4, we delineated the clusters of vertices that do exhibit consistent spatial tuning across intensity-level modulations, which were observed in the left hemisphere of 3 participants and the right hemisphere of 1 participant only. Interestingly, these clusters appear to be located mainly in posterior auditory areas, that is, PT.

Figure 4.

Azimuth preference plotted on the cortical surface. Colors indicate preferred azimuth position in the 250–700 Hz condition presented at a medium sound level. Clusters delineated in white are cortical regions where azimuth preference in the 250–700 Hz condition is robust to changes in sound level. Areas delineated in orange mark cortical regions where azimuth preference in the 500–1400 Hz frequency range is consistent across sound levels.

Decoding Sound Azimuth with an Opponent Channel Coding Model

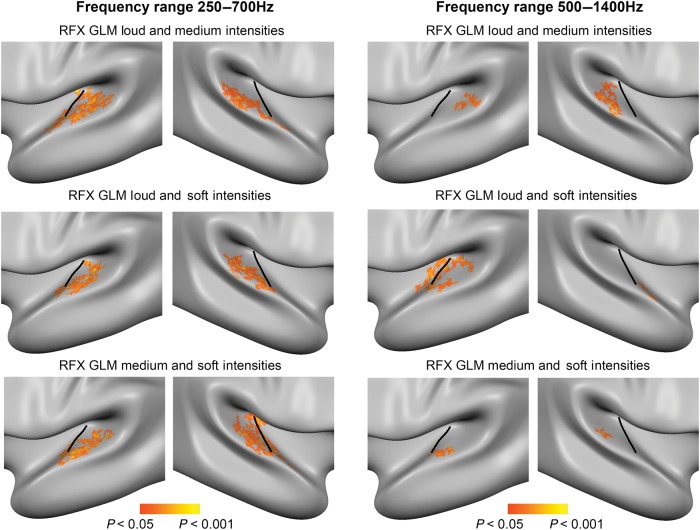

As a first step towards decoding azimuth position, we identified populations of vertices exhibiting spatial sensitivity, that is, regions modulated by ILD. To this end, we estimated RFX GLMs with azimuth predictors modeling the ILD. For each RFX GLM, one sound level condition was excluded from the model estimation in order to create a separate test data set from which sound azimuth position is decoded at a later stage. The results of the RFX GLMs show that spatially sensitive regions are mostly located in PT, that is, most regions are posterior to HG (see Fig. 5). Spatially sensitive regions were more extensive in the low-frequency condition. All significant regions were contralaterally tuned, that is, exhibiting a preference for contralateral sound locations.
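The hold-out scheme described above, in which one sound level is excluded from model estimation and later used for decoding, can be sketched as a simple split generator. The level labels follow the soft, medium, and loud conditions of this study; the function name and the dictionary layout are hypothetical.

```python
LEVELS = ("soft", "medium", "loud")


def leave_one_level_out(levels=LEVELS):
    """Return one split per sound level: estimate on two levels, test on the third."""
    splits = []
    for held_out in levels:
        training = tuple(level for level in levels if level != held_out)
        splits.append({"train": training, "test": held_out})
    return splits


splits = leave_one_level_out()
```

Because the test level never enters the estimation step, any decoding performance on it reflects generalization across sound level.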

Figure 5.

Group maps of spatially sensitive regions in auditory cortex. Results of the RFX GLM estimated on 2 out of 3 sound intensities to identify vertices in auditory cortex that are modulated by ILD, that is, that exhibit spatial sensitivity. The clusters shown here on the average group surface are identified with the contrast “azimuth predictor (ILD) > baseline” (vertex-level threshold P < 0.05; cluster-size threshold P < 0.05; 3000 iterations; biggest cluster in each hemisphere is shown). All regions responded maximally to contralateral sound locations.

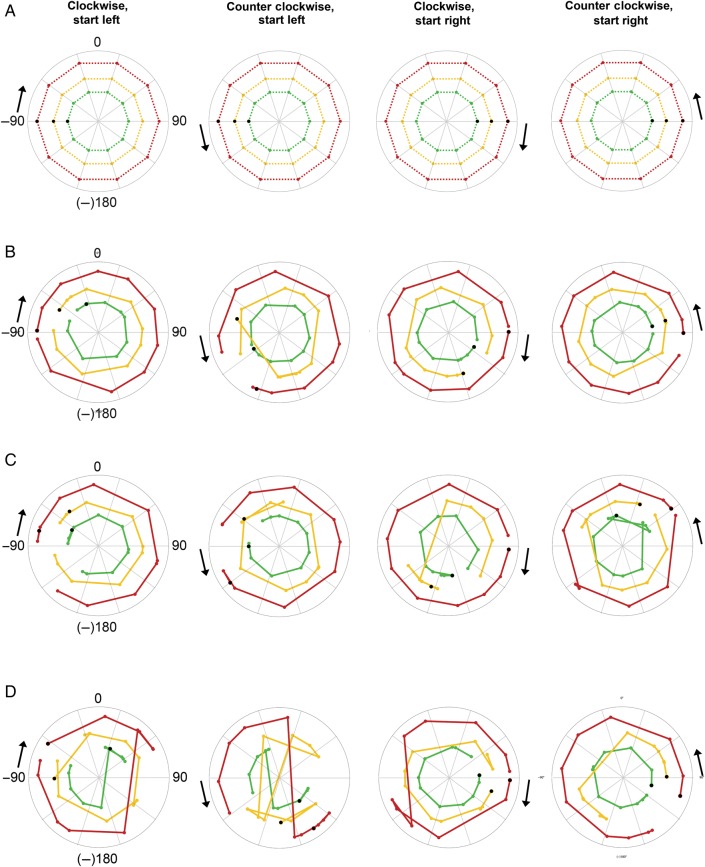

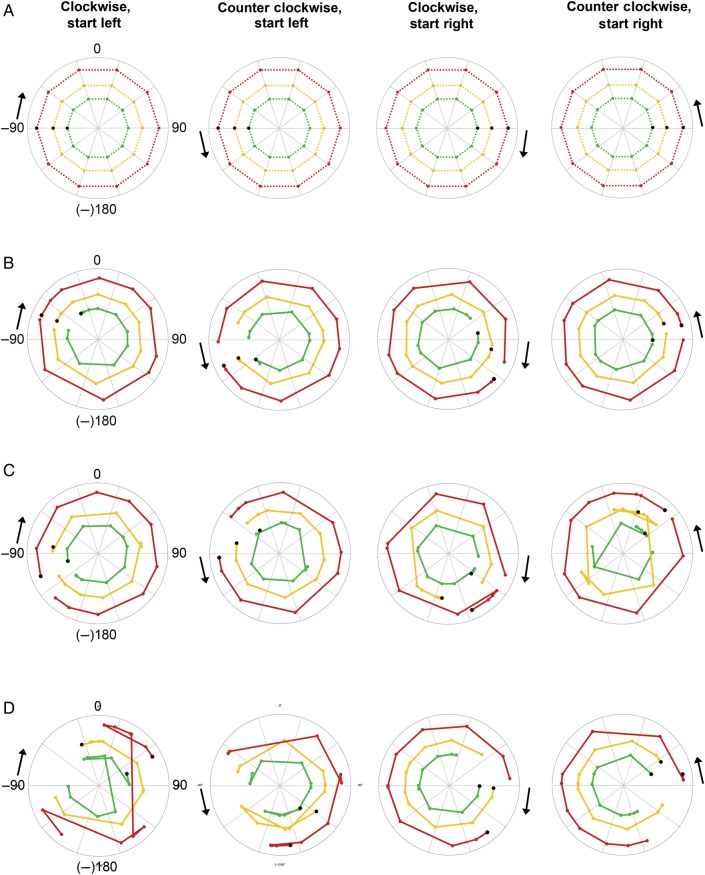

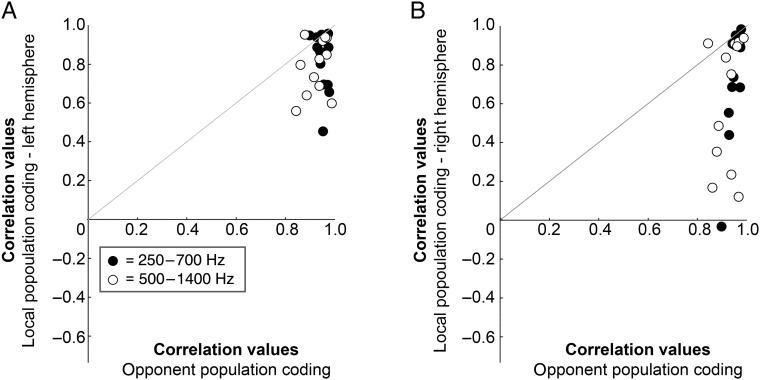

Figures 6 and 7 show the reconstructed sound azimuth trajectories that were decoded from the measured neural response in the test data set with an opponent channel model (Figs 6B and 7B) and with a local, one-channel model (Figs 6C,D and 7C,D). Note that the azimuthal trajectories decoded with an opponent channel coding model resemble the actual azimuthal trajectories (Figs 6A and 7A) more closely than the azimuthal trajectories decoded with a unilateral, one-channel model. A comparison of the average correlation between the decoded and the actual azimuthal trajectory across two-channel and one-channel coding models (after Fisher transformation of the correlation values to ensure normality; Fisher 1915) demonstrates that the opponent channel model (mean [M] = 5.4, standard deviation [SD] = 1.0) indeed performs better than the local one-channel model in the right hemisphere (M = 3.2, SD = 1.9, paired-samples t-test t(23) = 6.904, P < 0.001) and the local one-channel model in the left hemisphere (M = 3.7, SD = 1.3, paired-samples t-test t(23) = 4.879, P < 0.001; see also plots of correlation values before Fisher transformation in Fig. 8).
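The logic of this comparison can be illustrated with a toy example. The sketch below is not the decoding pipeline of this study: it assumes that each hemisphere contributes one averaged time course, that the opponent signal is the left-hemisphere response minus the right-hemisphere response, and that the actual trajectory can be summarized by the sine of the azimuth (positive = right). The shared drift term and all numerical values are hypothetical.

```python
import math


def pearson_r(x, y):
    """Pearson correlation between two equally long sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)


def fisher_z(r, eps=1e-6):
    """Fisher z-transform; r is clipped so that atanh stays finite."""
    r = max(-1.0 + eps, min(1.0 - eps, r))
    return math.atanh(r)


def opponent_signal(left_hemi, right_hemi):
    """Difference between two contralaterally tuned channels."""
    return [l - r for l, r in zip(left_hemi, right_hemi)]


# Hypothetical sound trajectory: one full circle in 36 degree steps.
azimuths = [18 + 36 * k for k in range(10)]
laterality = [math.sin(math.radians(a)) for a in azimuths]

# Toy responses: a shared, non-spatial component plus contralateral tuning.
shared = [0.3 * k for k in range(10)]
left = [1.0 + c + 0.5 * s for c, s in zip(shared, laterality)]
right = [1.0 + c - 0.5 * s for c, s in zip(shared, laterality)]

r_opponent = pearson_r(opponent_signal(left, right), laterality)
r_left_only = pearson_r(left, laterality)
z_opponent, z_left_only = fisher_z(r_opponent), fisher_z(r_left_only)
```

In this example the subtraction removes the component that is common to both channels, so the opponent signal tracks the trajectory more closely than either channel alone.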

Figure 6.

Decoding sound azimuth from FM tones (range 500–1400 Hz). (A) Each polar plot shows the known sound azimuth position over time modeled with a bilateral, two-channel code. Black arrows indicate the starting point of the sound (left or right) and the motion direction (clockwise or counterclockwise). Colored dots indicate the position of the sound every 36° or 2 s, that is, at every measured time point. Black dots indicate the starting position, that is, the first time point measured. Colored dotted lines connect the measured time points and indicate the azimuthal trajectory of the sound in the various sound intensity conditions: red corresponds to loud intensity, yellow to medium intensity, and green to soft intensity. Note that the radius in these plots is arbitrary and was selected to create nonoverlapping azimuthal trajectories for ease of visualization. (B) Polar plots show the azimuth position decoded from the measured BOLD response in both hemispheres with a bilateral, two-channel opponent population code. The more closely the decoded trajectory resembles the known azimuth trajectory shown in (A), the higher the decoding accuracy. (C) Here, azimuth position is decoded from the measured BOLD response in the left hemisphere with a unilateral, local population coding model. (D) Same as for (C) but for the right hemisphere.

Figure 7.

Decoding sound azimuth from FM tones (range 250–700 Hz). (A) Each polar plot shows the known sound azimuth position over time modeled with a bilateral, two-channel code. Black arrows indicate the starting point of the sound (left or right) and the motion direction (clockwise or counterclockwise). Colored dots indicate the position of the sound every 36° or 2 s, that is, at every measured time point. Black dots indicate the starting position, that is, the first time point measured. Colored dotted lines connect the measured time points and indicate the azimuthal trajectory of the sound in the various sound intensity conditions: red corresponds to loud intensity, yellow to medium intensity, and green to soft intensity. Note that the radius in these plots is arbitrary and was selected to create nonoverlapping azimuthal trajectories for ease of visualization. (B) Polar plots show the azimuth position decoded from the measured BOLD response in both hemispheres with a bilateral, two-channel opponent population code. The more closely the decoded trajectory resembles the known azimuth trajectory shown in (A), the higher the decoding accuracy. (C) Here, azimuth position is decoded from the measured BOLD response in the left hemisphere with a unilateral, local population coding model. (D) Same as for (C) but for the right hemisphere.

Figure 8.

Decoding accuracy of the opponent channel coding model is higher than that of single-channel coding. (A) Plotted against each other are the correlations between the outcome of the bilateral, opponent population coding model and the actual azimuth position of the sound (x-axis), and between the outcome of a unilateral, single-channel coding model and the actual azimuth position (left hemisphere, y-axis). Black dots represent correlations for the sounds in the 250–700 Hz frequency range, white dots for the 500–1400 Hz frequency range. Within each frequency range, each dot represents the correlation value for one of the conditions tested in this study (12 in total), e.g., soft intensity, starting left, moving clockwise. Dots above the gray diagonal indicate a higher correlation for the local population coding model than for the opponent population coding model. Dots below the gray diagonal indicate the opposite.

To ensure that the superior performance of the bilateral model does not depend on the inclusion of more vertices in this model compared with the unilateral model, we repeated the analysis of the opponent coding model with 1000 random samples of only half of the spatially sensitive vertices in each hemisphere. The results indicate that the number of vertices included in the bilateral model is not critically relevant: also with an equal number of data points, the correlation between the opponent coding model and the actual azimuthal trajectory is higher than the correlation between the local, unilateral model, and the azimuthal trajectory (see Supplementary Figs 1 and 2). Specifically, as before, a significantly higher correlation value is observed for the opponent channel model (for each condition, the mean correlation value of 1000 random samples was used; M = 5.3, SD = 1.0) compared with the local one-channel model in the right hemisphere (M = 3.2, SD = 1.9, paired-samples t-test t(23) = 6.353, P < 0.001) and in the left hemisphere (M = 3.7, SD = 1.3, paired-samples t-test t(23) = 4.798, P < 0.001).
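A minimal sketch of this control analysis is given below. It draws 1000 random half-samples of vertices per hemisphere, recomputes the opponent signal for each draw, and averages the resulting correlations, as described above. The toy vertex time courses, the noise level, the seeds, and the function names are hypothetical and serve only to make the example runnable.

```python
import math
import random


def pearson_r(x, y):
    """Pearson correlation between two equally long sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)


def mean_timecourse(vertices, indices):
    """Average the time courses of the selected vertices."""
    n_time = len(vertices[0])
    return [sum(vertices[i][t] for i in indices) / len(indices) for t in range(n_time)]


def subsampled_opponent_r(left, right, target, n_samples=1000, seed=0):
    """Mean correlation of the opponent signal over random half-samples of vertices."""
    rng = random.Random(seed)
    correlations = []
    for _ in range(n_samples):
        l_idx = rng.sample(range(len(left)), max(1, len(left) // 2))
        r_idx = rng.sample(range(len(right)), max(1, len(right) // 2))
        l_mean = mean_timecourse(left, l_idx)
        r_mean = mean_timecourse(right, r_idx)
        opponent = [a - b for a, b in zip(l_mean, r_mean)]
        correlations.append(pearson_r(opponent, target))
    return sum(correlations) / len(correlations)


# Hypothetical toy data: 8 vertices per hemisphere, 10 time points each.
noise = random.Random(1)
target = [math.sin(math.radians(18 + 36 * k)) for k in range(10)]
left = [[1.0 + (0.3 + 0.1 * i) * s + noise.gauss(0, 0.05) for s in target]
        for i in range(8)]
right = [[1.0 - (0.3 + 0.1 * i) * s + noise.gauss(0, 0.05) for s in target]
         for i in range(8)]

mean_r = subsampled_opponent_r(left, right, target)
```

Halving the number of vertices per hemisphere gives the bilateral model the same number of data points as a unilateral model, so any remaining advantage cannot be attributed to sample size.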

Discussion

In this study, we tested the validity of a bilateral, opponent channel model of cortical representation of azimuth in human auditory cortex using a phase-encoding fMRI paradigm. FMRI-derived RAFs showed that a large proportion of neuronal populations in auditory cortex present broad spatial tuning with an overall preference for sound locations in the contralateral hemifield. Spatial selectivity decreased further with increasing sound intensity. We did not observe a clear spatial gradient of location preference on the cortical surface. Moreover, in most participants, location preference varied with sound level across the entire auditory cortex. Additionally, we showed that most of the steepest slopes in the RAFs were located near the interaural midline. Implementing a two-channel opponent coding model based on the subtraction of activity of contralaterally tuned regions in each hemisphere's PT, however, enabled us to closely reconstruct the actual azimuthal trajectory of the sound. Finally, this reconstruction was robust to changes in sound intensity.

Low Spatial Selectivity

Electrophysiological studies investigating spatial tuning properties of the neurons in the mammalian auditory pathway commonly report broad spatial tuning profiles and an inhomogeneous sampling of acoustic space at both a subcortical (e.g., McAlpine et al. 2001) and a cortical level (e.g., Recanzone et al. 2000; Stecker et al. 2005). In this study, we report similar findings for human auditory cortex as assessed with fMRI. However, it should be noted that each vertex (i.e., the spatial unit of our investigation) samples the averaged response of thousands of neurons and thus a broad spatial tuning profile may have resulted from averaging many narrow tuning profiles. Yet our measure of tuning width (the ERRF) does indicate that our fMRI measurement is sensitive to the spatial tuning of underlying neuronal populations. Specifically, the ERRF analysis showed a dependency of spatial selectivity on sound intensity, that is, ERRF increased with increasing sound levels. If our findings reflected only the random averaging of thousands of neurons within each vertex, we would not expect to observe this dependence of ERRF on sound level. Additionally, our failure to find a spatiotopic organization in AC parallels previous attempts in mammalian auditory cortex, which did not discover any clear systematic organization either (e.g., Middlebrooks et al. 1998, 2002; Recanzone et al. 2000), even with fine-grained single-cell recordings and with sounds presented near threshold. Although a lack of sensitivity in our investigation cannot be excluded, it is worth noting that tonotopic maps can be reliably obtained with similar resolution and acquisition/analysis methods (Moerel et al. 2014).

Furthermore, the difficulty of distinguishing local neural inhibition from excitation with fMRI demands additional caution in interpreting our findings. Kyweriga, Stewart, Cahill et al. (2014) recently described neurons in rat auditory cortex (mostly tuned to locations at or near the interaural midline) for which contralateral sounds elicited strong local inhibition. With fMRI, such an effect of inhibition may result in an increase of measured BOLD signal. However, Kyweriga, Stewart, Cahill et al. (2014) observed this type of local inhibition and relatively sharp spatial tuning only for a small subset of neurons, that is, frontally tuned neurons. Others have also reported that only a relatively small proportion of auditory neurons are tuned to frontal locations (e.g., Stecker et al. 2005). It is therefore improbable that the high proportion of broadly contralaterally tuned vertices observed in the present study results from this inhibitory mechanism. Interestingly, a recent study investigating the effects of attention on spatial selectivity in cat auditory cortex showed that tuning width narrows when a cat attends to sound location (Lee and Middlebrooks 2011). Also in this case, local inhibition has been implicated as the underlying mechanism. In the current study, participants were listening attentively to the sound location yet were not required to make an active response. It therefore remains an open question whether and where neuronal populations in human auditory cortex narrow their spatial tuning profiles in a similar way during an active spatial hearing task.

Opponent Channels in Bilateral Planum Temporale

In this study, we identified regions in auditory cortex that are modulated by sound azimuth location. These spatially sensitive regions were located mainly in posterior auditory regions, occupying portions of the PT adjacent to HS. Interestingly, these areas may correspond to the human caudolateral field (hCL), possibly extending into the human middle lateral field (hML; Moerel et al. 2014). This finding coincides with research demonstrating that more posterior areas in monkey (Rauschecker and Tian 2000; Recanzone et al. 2000; Tian et al. 2001), cat (Stecker et al. 2003), and human auditory cortex (Warren and Griffiths 2003; Brunetti et al. 2005; Deouell et al. 2007; Van der Zwaag et al. 2011) carry more spatial information than primary auditory regions and is in agreement with the proposal of an auditory “where” pathway (Rauschecker and Tian 2000; Maeder et al. 2001). Additionally, PT has been implicated in the processing of auditory motion (Baumgart et al. 1999; Krumbholz et al. 2005; Alink et al. 2012). Although we do not directly compare moving sounds to stationary sounds in the present study, our results regarding PT are in line with these prior findings.

From the fMRI responses of these spatially sensitive regions in PT, we decoded sound azimuth position with an opponent channel model. Such a model is in agreement with our data as the RAFs indicated that the azimuthal positions showing the largest modulation in neural response to sound location are around the frontal midline. Similar distributions of steepest slopes have been reported for the auditory cortex of other mammalian species such as cats and gerbils (McAlpine 2005; Stecker et al. 2005; King et al. 2007). Our results furthermore indicate that the two-channel opponent model carries more information on sound azimuth position than a local, unilateral channel model. Specifically, decoding and reconstruction of the azimuthal trajectory was more accurate with opponent channel coding than with one-channel coding. This is in line with other studies of local, unilateral population coding of auditory azimuth. A recent study by Miller and Recanzone (2009), for instance, demonstrated highly accurate model estimates for contralateral locations using a local, unilateral population. However, frontal location estimates still showed a considerable error, that is, model estimates were worse where behavioral sound localization is better. These authors therefore also suggest that the 2 hemispheres may both contribute to sound localization at or near the frontal midline. Also, several psychophysical (e.g., Phillips et al. 2006; Vigneault-MacLean et al. 2007) and computational (Mlynarski 2015) studies have demonstrated the plausibility of an opponent population coding model. Importantly, in the present study, we showed that the superiority of the opponent channel model is not critically dependent on the number of vertices included. This further strengthens our conclusion that opponent coding is a relevant mechanism to represent the auditory azimuth.

Note that a two-channel opponent code can also be represented within a single hemisphere, that is, through a contra- and an ipsilaterally tuned channel in unilateral auditory cortex (Stecker et al. 2005). In fact, we did observe small groups of ipsilaterally tuned vertices in the present study; however, these did not survive rigorous statistical testing and therefore could not be investigated further. Future research on azimuth preference in human auditory cortex with higher sensitivity and higher resolution (e.g., with fMRI at ultra-high magnetic field strengths; see for instance Van der Zwaag et al. 2011) can contribute to our understanding of the role of these populations of ipsilaterally tuned vertices.

Level Invariance

The behavioral accuracy of spatial hearing does not change with sound level modulations. However, single-cell studies at the level of auditory cortical neurons have repeatedly demonstrated a decrease in spatial selectivity with increasing sound level (e.g., Rajan et al. 1990; Kyweriga, Stewart, Wehr et al. 2014). Our RAF analysis indicates similar interactions between spatial selectivity of individual auditory vertices and sound intensity. In addition to the observed increase in tuning width, azimuth preference itself appeared to be modulated by sound level as well. Only in 3 participants did we observe some regions in PT that exhibited consistent azimuth preference across sound level. The location of these consistently tuned regions in PT is in agreement with the increasingly level-invariant azimuth tuning observed at higher stages of the auditory pathway in monkeys and cats (Stecker et al. 2003; Miller and Recanzone 2009). Most convincingly, however, in all subjects, decoding azimuth position with a two-channel opponent model proved to be robust to changes in sound level. Specifically, we defined cortical regions of spatial sensitivity with only 2 sound intensity conditions and decoded azimuth position from the third sound intensity condition, that is, from an independent test data set, with high accuracy despite the changes in sound intensity. Overall, these results suggest that whereas some degree of spatial tuning can be found at the level of local populations within PT, level-invariant coding of azimuth is achieved through the combination of information from 2 opposite (i.e., contralaterally tuned) neural channels.

The Role of the Auditory Cortex in Sound Localization

Given the processing and binaural integration that already occurs at subcortical stages of the auditory pathway, it remains unclear what the contribution of the auditory cortex is to mammalian spatial hearing. Lesion and reversible inactivation studies demonstrate that correct sound localization is not possible without an intact auditory cortex both for humans (e.g., Zatorre and Penhune 2001; Duffour-Nikolov et al. 2012) and other mammals (e.g., Jenkins and Merzenich 1984; Heffner and Heffner 1990; King et al. 2007). Unilateral lesions, for instance, commonly lead to degraded localization abilities in the contralesional hemifield (e.g., Malhotra et al. 2004, 2008). Contralesional spatial hearing deficits are expected given unilateral population coding models such as the local population code of Miller and Recanzone (2009) or an opponent channel code with both channels in one hemisphere (Stecker et al. 2005).

Our findings suggest that a two-channel, bilateral population model codes the auditory azimuth with significantly higher accuracy than a unilateral model. Such a model would predict degraded performance in the entire auditory space, even with a unilateral lesion. However, the spatially sensitive regions in PT observed in the present study are contralaterally tuned. It is thus likely that these regions within unilateral PT encode contralateral space to some extent, for example through a local population code as described by Miller and Recanzone (2009). Yet, an important difference between a local, unilateral population code and a bilateral, two-channel code is that the two-channel code predicts that unilateral lesions will cause the largest localization errors at or near the frontal midline. So far, results from lesion studies have been equivocal on this point. That is, some studies reported reduced localization acuity at the frontal midline (Thompson and Cortez 1983), whereas for others localization accuracy at frontal locations has not been reported extensively (e.g., Jenkins and Merzenich 1984). Finally, other studies did not find localization deficits for frontal locations (e.g., Malhotra et al. 2004, 2008). The extent of the lesions, the time between the lesion and the test, the nature of the stimuli and task (see for instance Duffour-Nikolov et al. 2012), and the species investigated are all possible reasons for this variability.

Studies of acallosal individuals, that is, individuals without a corpus callosum, may also contribute to our understanding of the cortical representation of auditory space. Reduced spatial hearing abilities for these individuals have been reported for the entire azimuth range (e.g., Poirier et al. 1993), especially for moving sound sources (Lessard et al. 2002). This suggests that bilateral integration at a cortical level is required for a more detailed analysis of changes in interaural disparities (affecting multiple frequencies and modulations) as compared with simpler integration at subcortical stages of the auditory pathway (Lessard et al. 2002). This may also explain why lesion studies, in most cases conducted using static auditory stimuli, have so far mainly reported contralesional localization deficits (e.g., Thompson and Cortez 1983; Jenkins and Merzenich 1984; Malhotra et al. 2004, 2008; King et al. 2007). Future lesion or reversible inactivation studies comparing localization acuity for static auditory stimuli to moving, complex stimuli could contribute to our understanding of the role of the cortex in binaural integration and spatial hearing.

Supplementary Material

Supplementary Material can be found at http://www.cercor.oxfordjournals.org/ online.

Funding

This work was funded by Maastricht University and the Netherlands Organization for Scientific Research (NWO), Middle-sized Infrastructural Grant 480-09-006 and VICI 453-12-002 (E.F.), and the European Research Council under the European Union's Seventh Framework Programme (FP7/2007-2013)/ERC grant agreement number 295673 (B. de G.). Funding to pay the Open Access publication charges for this article was provided by the Netherlands Organization for Scientific Research (NWO).

Notes

Conflict of Interest: None declared.

References

- Alink A, Euler F, Kriegeskorte N, Singer W, Kohler A. 2012. Auditory motion direction encoding in auditory cortex and high-level visual cortex. Hum Brain Mapp. 33:969–978.

- Baumgart F, Gaschler-Markefski B, Woldorff MG, Heinze HJ, Scheich H. 1999. A movement-sensitive area in auditory cortex. Nature. 400:724–726.

- Benjamini Y, Hochberg Y. 1995. Controlling the false discovery rate: a practical and powerful approach to multiple testing. J Roy Stat Soc B. 57:289–300.

- Brunetti M, Belardinelli P, Caulo M, Del Gratta C, Della Penna S, Ferretti A, Lucci G, Moretti A, Pizzella V, Tartaro A et al. 2005. Human brain activation during passive listening to sounds from different locations: an fMRI and MEG study. Hum Brain Mapp. 26:251–261.

- Brungart DS, Cohen J, Cord M, Zion D. 2014. Assessment of auditory spatial awareness in complex listening environments. J Acoust Soc Am. 135:1808–1820.

- Buxton RB, Uludağ K, Dubowitz DJ, Liu TT. 2004. Modeling the hemodynamic response to brain activation. Neuroimage. 23:S220–S233.

- Carr CE, Konishi M. 1988. Axonal delay lines for time measurement in the owl's brainstem. Proc Natl Acad Sci USA. 85:8311–8315.

- Dale AM. 1999. Optimal experimental design for event-related fMRI. Hum Brain Mapp. 8:109–114.

- Day ML, Delgutte B. 2013. Decoding sound source location and separation using neural population activity patterns. J Neurosci. 33:15837–15847.

- Deouell LY, Heller AS, Malach R, D'Esposito M, Knight RT. 2007. Cerebral responses to change in spatial location of unattended sounds. Neuron. 55:985–996.

- Duffour-Nikolov C, Tardif E, Maeder P, Bellmann Thiran A, Bloch J, Frischknecht R, Clarke S. 2012. Auditory spatial deficits following hemispheric lesions: dissociation of explicit and implicit processing. Neuropsychol Rehabil. 22:674–696.

- Engel SA, Glover GH, Wandell BA. 1997. Retinotopic organization in human visual cortex and the spatial precision of functional MRI. Cereb Cortex. 7:181–192.

- Fisher RA. 1915. Frequency distribution of the values of the correlation coefficient in samples from an indefinitely large population. Biometrika. 10:507–521.

- Friston KJ, Frith CD, Turner R, Frackowiak RSJ. 1995. Characterizing evoked hemodynamics with fMRI. Neuroimage. 2:157–165.

- Frost MA, Goebel R. 2013. Functionally informed cortex based alignment: an integrated approach for whole-cortex macro-anatomical and ROI-based functional alignment. Neuroimage. 83:1002–1010.

- Goebel R, Esposito F, Formisano E. 2006. Analysis of functional image analysis contest (FIAC) data with BrainVoyager QX: from single-subject to cortically aligned group general linear model analysis and self-organizing group independent component analysis. Hum Brain Mapp. 27:392–401.

- Grothe B, Pecka M, McAlpine D. 2010. Mechanisms of sound localization in mammals. Physiol Rev. 90:983–1012.

- Handwerker DA, Ollinger J, D'Esposito M. 2004. Variation of BOLD hemodynamic responses across subjects and brain regions and their effects on statistical analyses. Neuroimage. 21:1639–1651.

- Harper NS, McAlpine D. 2004. Optimal neural population coding of an auditory spatial cue. Nature. 430:682–686.

- Heffner HE, Heffner RS. 1990. Effect of bilateral auditory cortex lesions on sound localization in Japanese macaques. J Neurophysiol. 64:915–931.

- Jeffress LA. 1948. A place theory of sound localization. J Comp Physiol Psychol. 41:35–39.

- Jenkins WM, Merzenich MM. 1984. Role of cat primary auditory cortex for sound-localization behavior. J Neurophysiol. 52:819–847.

- Kim JJ, Crespo-Facorro B, Andreasen NC, O'Leary DS, Zhang B, Harris G, Magnotta VA. 2000. An MRI-based parcellation method for the temporal lobe. Neuroimage. 11:271–288.

- King AJ, Bajo VM, Bizley JK, Campbell RA, Nodal FR, Schulz AL, Schnupp JW. 2007. Physiological and behavioral studies of spatial coding in the auditory cortex. Hear Res. 229:106–115.

- King AJ, Middlebrooks JC. 2011. Cortical representation of auditory space. In: Winer JA, Schreiner CE, editors. The Auditory Cortex. New York (NY): Springer Science + Business Media; p 329–341.

- King AJ, Palmer AR. 1983. Cells responsive to free-field auditory stimuli in guinea-pig superior colliculus: distribution and response properties. J Physiol. 342:361–381.

- King AJ, Schnupp JW, Doubell TP. 2001. The shape of ears to come: dynamic coding of auditory space. Trends Cogn Sci. 5:261–270.

- Knudsen EI, Konishi M. 1978. A neural map of auditory space in the owl. Science. 200:795–797.

- Krumbholz K, Schönwiesner M, Rübsamen R, Zilles K, Fink GR, Von Cramon DY. 2005. Hierarchical processing of sound location and motion in the human brainstem and planum temporale. Eur J Neurosci. 21:230–238.

- Kyweriga M, Stewart W, Cahill C, Wehr M. 2014. Synaptic mechanisms underlying interaural level difference selectivity in rat auditory cortex. J Neurophysiol. 112:2561–2571.

- Kyweriga M, Stewart W, Wehr M. 2014. Neuronal interaural level difference response shifts are level-dependent in the rat auditory cortex. J Neurophysiol. 111:930–938.

- Lee CC, Middlebrooks JC. 2011. Auditory cortex spatial sensitivity sharpens during task performance. Nat Neurosci. 14:108–114.

- Leiman AL, Hafter ER. 1972. Responses of inferior colliculus neurons to free field auditory stimuli. Exp Neurol. 35:431–449.

- Lessard N, Lepore F, Villemagne J, Lassonde M. 2002. Sound localization in callosal agenesis and early callosotomy subjects: brain reorganization and/or compensatory strategies. Brain. 125:1039–1053.

- Maeder PP, Meuli RA, Adriani M, Bellmann A, Fornari E, Thiran J, Pittet A, Clarke S. 2001. Distinct pathways involved in sound recognition and localization: a human fMRI study. Neuroimage. 14:802–816.

- Makous JC, Middlebrooks JC. 1990. Two dimensional sound localization by human listeners. J Acoust Soc Am. 87:2188–2200.

- Malhotra S, Hall AJ, Lomber SG. 2004. Cortical control of sound localization in the cat: unilateral cooling deactivation of 19 cerebral areas. J Neurophysiol. 92:1625–1643.

- Malhotra S, Stecker GC, Middlebrooks JC, Lomber SG. 2008. Sound localization deficits during reversible deactivation of primary auditory cortex and/or the dorsal zone. J Neurophysiol. 99:1628–1642.

- McAlpine D. 2005. Creating a sense of auditory space. J Physiol. 566:21–28.

- McAlpine D, Jiang D, Palmer AR. 2001. A neural code for low-frequency sound localization in mammals. Nat Neurosci. 4:396–401.

- Middlebrooks JC, Knudsen EI. 1984. A neural code for auditory space in the cat's superior colliculus. J Neurosci. 4:2621–2634.

- Middlebrooks JC, Xu L, Eddins AC, Green DM. 1998. Codes for sound-source location in nontonotopic auditory cortex. J Neurophysiol. 80:863–881.

- Middlebrooks JC, Xu L, Furukawa S, Macpherson EA. 2002. Cortical neurons that localize sounds. Neuroscientist. 8:73–83.

- Miller LM, Recanzone GH. 2009. Populations of auditory cortical neurons can accurately encode acoustic space across stimulus intensity. Proc Natl Acad Sci USA. 106:5931–5935.

- Mlynarski W. 2015. The opponent channel population code of sound location is an efficient representation of binaural sounds. PLoS Comput Biol. 11:e1004294.

- Moerel M, De Martino F, Formisano E. 2014. An anatomical and functional topography of human auditory cortical areas. Front Neurosci. 8. doi:10.3389/fnins.2014.00225.

- Musicant AD, Butler RA. 1985. Influence of monaural spectral cues on binaural localization. J Acoust Soc Am. 77:202–208.

- Oldfield SR, Parker SP. 1984. Acuity of sound localisation: a topography of auditory space. II. Pinna cues absent. Perception. 13:601–617.

- Phillips DP. 2008. A perceptual architecture for sound lateralization in man. Hear Res. 238:124–132.

- Phillips DP, Carmichael ME, Hall SE. 2006. Interaction in the perceptual processing of interaural time and level differences. Hear Res. 211:96–102.

- Poirier P, Miljours S, Lassonde M, Lepore F. 1993. Sound localization in acallosal human listeners. Brain. 116:53–69.

- Rajan R, Aitkin LM, Irvine DRF, McKay J. 1990. Azimuthal sensitivity of neurons in primary auditory cortex of cats. I. Types of sensitivity and the effects of variations in stimulus parameters. J Neurophysiol. 64:872–887.

- Rakerd B, Hartmann WM. 1985. Localization of sound in rooms. II: The effects of a single reflecting surface. J Acoust Soc Am. 78:524–533.

- Rauschecker JP, Tian B. 2000. Mechanisms and streams for processing of “what” and “where” in auditory cortex. Proc Natl Acad Sci USA. 97:11800–11806.

- Rayleigh L. 1907. On our perception of sound direction. Philos Mag. 13:214–232.

- Recanzone GH, Guard DC, Phan ML, Su TIK. 2000. Correlation between the activity of single auditory cortical neurons and sound-localization behavior in the macaque monkey. J Neurophysiol. 83:2723–2739.

- Shinn-Cunningham BG, Kopco N, Martin TJ. 2005. Localizing nearby sound sources in a classroom: Binaural room impulse responses. J Acoust Soc Am. 117:3100–3115.

- Stecker GC, Harrington IA, Middlebrooks JC. 2005. Location coding by opponent neural populations in the auditory cortex. PLoS Biol. 3:0520–0528.

- Stecker GC, Mickey BJ, Macpherson EA, Middlebrooks JC. 2003. Spatial sensitivity in field PAF of cat auditory cortex. J Neurophysiol. 89:2889–2903.

- Striem-Amit E, Hertz U, Amedi A. 2011. Extensive cochleotopic mapping of human auditory cortical fields obtained with phase-encoding fMRI. PLoS One. 6:e17832.

- Talairach J, Tournoux P. 1988. Co-Planar Stereotaxic Atlas of the Human Brain: 3-Dimensional Proportional System – An Approach to Cerebral Imaging. New York (NY): Thieme Medical Publishers.

- Thompson GC, Cortez AM. 1983. The inability of squirrel monkeys to localize sound after unilateral ablation of auditory cortex. Behav Brain Res. 8:211–216.

- Tian B, Reser D, Durham A, Kustov A, Rauschecker JP. 2001. Functional specialization in rhesus monkey auditory cortex. Science. 292:290–293.

- Van der Zwaag W, Gentile G, Gruetter R, Spierer L, Clarke S. 2011. Where sound position influences sound object representations: A 7-T fMRI study. Neuroimage. 54:1803–1811.

- Vigneault-MacLean BK, Hall SE, Phillips DP. 2007. The effects of lateralized adaptors on lateral position judgments of tones within and across frequency channels. Hear Res. 224:93–100.

- Warren JD, Griffiths TD. 2003. Distinct mechanisms for processing spatial sequences and pitch sequences in the human auditory brain. J Neurosci. 23:5799–5804.

- Yin TCT. 2002. Neural mechanisms of encoding binaural localization cues in the auditory brainstem. In: Fay RR, Popper AN, editors. Integrative Functions in the Mammalian Auditory Pathway. New York (NY): Springer; p. 99–159.

- Zatorre RJ, Penhune VB. 2001. Spatial localization after excision of human auditory cortex. J Neurosci. 21:6321–6328.
