Abstract

Cortical areas, such as the dorsal subdivision of the medial superior temporal area (MSTd) and the ventral intraparietal area (VIP), have been shown to integrate visual and vestibular self-motion signals. Area V6 is interconnected with areas MSTd and VIP, allowing for the possibility that V6 also integrates visual and vestibular self-motion cues. An alternative hypothesis in the literature is that V6 does not use these sensory signals to compute heading but instead discounts self-motion signals to represent object motion. However, the responses of V6 neurons to visual and vestibular self-motion cues have never been studied, thus leaving the functional roles of V6 unclear. We used a virtual reality system to examine the 3D heading tuning of macaque V6 neurons in response to optic flow and inertial motion stimuli. We found that the majority of V6 neurons are selective for heading defined by optic flow. However, unlike areas MSTd and VIP, V6 neurons are almost universally unresponsive to inertial motion in the absence of optic flow. We also explored the spatial reference frames of heading signals in V6 by measuring heading tuning for different eye positions, and we found that the visual heading tuning of most V6 cells was eye-centered. Similar to areas MSTd and VIP, the population of V6 neurons was best able to discriminate small variations in heading around forward and backward headings. Our findings support the idea that V6 is involved primarily in processing visual motion signals and does not appear to play a role in visual–vestibular integration for self-motion perception.

SIGNIFICANCE STATEMENT To understand how we successfully navigate our world, it is important to understand which parts of the brain process cues used to perceive our direction of self-motion (i.e., heading). Cortical area V6 has been implicated in heading computations based on human neuroimaging data, but direct measurements of heading selectivity in individual V6 neurons have been lacking. We provide the first demonstration that V6 neurons carry 3D visual heading signals, which are represented in an eye-centered reference frame. In contrast, we found almost no evidence for vestibular heading signals in V6, indicating that V6 is unlikely to contribute to multisensory integration of heading signals, unlike other cortical areas. These findings provide important constraints on the roles of V6 in self-motion perception.

Keywords: heading, macaque, reference frame, self-motion, V6, visual motion

Introduction

Sensory signals provide cues critical to estimating our instantaneous direction of self-motion (heading). While visual input can be sufficient for robust self-motion perception (Gibson, 1950; Warren, 2004), combining visual and vestibular signals is known to improve heading perception (Telford et al., 1995; Ohmi, 1996; Bertin and Berthoz, 2004; Fetsch et al., 2009; Butler et al., 2010; de Winkel et al., 2010). Because the dorsal subdivision of the medial superior temporal area (MSTd) and the ventral intraparietal area (VIP) in macaques process both visual and vestibular signals (Duffy, 1998; Bremmer et al., 2002; Schlack et al., 2002; Page and Duffy, 2003; Gu et al., 2006; Fetsch et al., 2007; Takahashi et al., 2007; Chen et al., 2011c, 2013a) and are connected to V6 (Shipp et al., 1998; Galletti et al., 2001), V6 could also be among the cortical areas that represent self-motion based on visual and vestibular signals.

Previous work has provided mixed evidence regarding whether V6 represents heading. Human neuroimaging studies have reported that V6 prefers optic flow patterns with a single focus of expansion that simulate self-motion (Cardin and Smith, 2010, 2011; Pitzalis et al., 2010, 2013b; Cardin et al., 2012a,b). However, when optic flow stimuli are presented in succession, V6 fails to show adaptation effects (in contrast to MSTd), suggesting that it may not contain neurons that are selective for heading based on optic flow (Cardin et al., 2012a). An alternative hypothesis regarding the role of V6 in optic flow processing is that V6 discounts visual self-motion cues to represent object motion (Galletti et al., 2001; Galletti and Fattori, 2003; Pitzalis et al., 2010, 2013a,b; Cardin et al., 2012a). Thus, it remains uncertain whether V6 is a viable neural substrate for heading perception.

Our understanding of the functional roles of V6 is limited by the lack of functional characterization of V6 neurons to important self-motion signals. Although many studies have demonstrated that human V6 is activated by optic flow (Cardin and Smith, 2010, 2011; Pitzalis et al., 2010, 2013b; Cardin et al., 2012a,b), optic flow tuning of single neurons has never been measured in macaque V6. Similarly, macaque V6 neurons have never been tested for vestibular selectivity to self-motion. Thus, the first major goal of this study is to measure visual and vestibular heading selectivity of isolated V6 neurons.

To better understand the roles of V6 in processing self-motion, it is also important to determine the spatial reference frame(s) in which V6 neurons signal heading. In areas MSTd and VIP, most neurons process visual heading signals in an eye-centered reference frame (Fetsch et al., 2007; Chen et al., 2013b). Previously, Galletti et al. (1993, 1995) mapped visual receptive fields (RFs) of neurons in the parieto-occipital sulcus (where V6 and V6A are located) and reported that the vast majority of RFs shifted with gaze position, indicating that they were eye-centered. Interestingly, a minority of neurons had RFs that remained in the same spatial location regardless of gaze position, indicating that they were head-centered (Galletti et al., 1993, 1995). Subsequent studies attributed the head-centered RFs to V6A and not V6 (Galletti et al., 1999b; Gamberini et al., 2011). However, there is evidence that human V6+ (likely dominated by V6) may be capable of representing the velocity of motion relative to the observer's head (Arnoldussen et al., 2011, 2015). Therefore, a second major goal of this study is to determine the spatial reference frame of heading selectivity for macaque V6 neurons.

We tested V6 neurons with either optic flow or passive inertial motion stimuli that probed the full range of possible headings in 3D. Our findings indicate that V6 carries robust visual heading signals in an eye-centered reference frame but is unlikely to carry a meaningful multisensory (visual/vestibular) representation of heading.

Materials and Methods

Animal preparation.

All procedures were approved by the Baylor College of Medicine and Washington University Institutional Animal Care and Use Committees and were in accordance with National Institutes of Health guidelines. Surgical methods and training regimens have been described in detail previously (Gu et al., 2006; Fetsch et al., 2007). Briefly, two male rhesus monkeys (Macaca mulatta, 8 and 9 kg) were anesthetized and underwent sterile surgery to chronically implant a custom lightweight circular Delrin head cap to allow for head stabilization and a scleral search coil for measuring eye position (Robinson, 1963; Judge et al., 1980). After recovery from surgical procedures, the monkeys were trained to perform a fixation task using standard operant conditioning techniques with a fluid reward. Before recordings, a custom recording grid, containing staggered rows of holes with 0.8 mm spacing, was secured inside the Delrin head cap, and burr holes were made to allow insertion of tungsten microelectrodes through a transdural guide tube.

Virtual reality system.

During experimentation, the monkey was seated comfortably in a custom chair that was secured to a six-degree-of-freedom motion platform (Moog 6DOF2000E; Moog). The motion platform, which forms the base of the virtual reality system (Fig. 1A), is capable of producing physical translation along any direction in 3D, along with corresponding visual stimuli. To display visual stimuli, the virtual reality system was equipped with a rear-projection screen subtending 90° × 90° of visual angle and a three-chip digital light processing projector (Digital Mirage 2000; Christie). Platform motion and visual stimuli were updated synchronously at 60 Hz. To generate visual stimuli, we used the OpenGL graphics library to simulate translational self-motion through a 3D cloud of stars that was 100 cm wide, 100 cm tall, and 40 cm deep. Star density was 0.01/cm³, and ∼1500 stars were visible within the field of view at any given time point. Individual elements of the star field appeared as 0.15 × 0.15 cm triangles. To provide binocular disparity cues, the stars were displayed as red–green anaglyphs and viewed through red–green filters (Kodak Wratten filters; red #29, green #61).

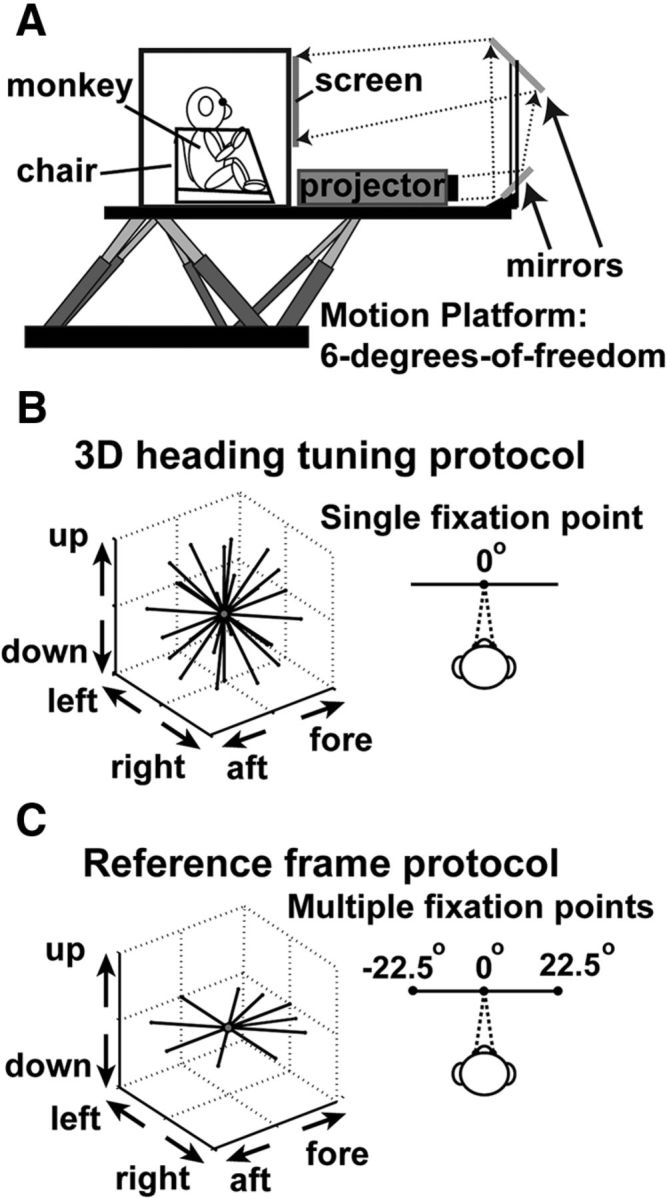

Figure 1.

Experimental apparatus and illustration of stimuli. A, Schematic of the 3D virtual reality system. The monkey, field coil, projector, and screen are mounted on a motion platform capable of translating along any direction in 3D. B, For the 3D heading tuning protocol, the monkeys were required to maintain fixation on a central target while 26 different headings (vectors) were presented to measure heading tuning. C, For the reference frame protocol, the monkey had to fixate on a target at one of three possible locations (left, center, and right). For each target location, 10 different headings were presented in the horizontal plane.

3D heading tuning protocol.

Using the virtual reality system described above, we examined the 3D heading tuning of V6 neurons. To initiate a trial, the head-fixed monkey first acquired and maintained fixation on a central visual target (0.2° × 0.2°), within a 2° × 2° fixation window. The monkey's task was to maintain fixation during presentation of either a 2 s vestibular stimulus or a 2 s visual stimulus. If the monkey broke fixation at any point during the required fixation time, the trial was aborted. The stimulus had a Gaussian velocity profile (see Fig. 7B, gray curve), with a total displacement (real or simulated) of 13 cm, a peak velocity of ∼30 cm/s, and a peak acceleration of ∼0.1 g (∼0.98 m/s²). For the heading tuning protocol, 26 possible directions of self-motion were presented, sampled evenly around a sphere (Fig. 1B). These directions include all combinations of eight different azimuth angles spaced 45° apart (0, 45, 90, 135, 180, 225, 270, and 315°) and three different elevation angles spaced 45° apart (0 and ±45°), accounting for 24 of the 26 possible directions. The remaining two directions were elevation angles of 90° and −90°, which correspond to straight upward and downward movements.
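
The sampling of headings described above can be sketched in a few lines of Python. This is only an illustration: the function names and the Cartesian axis convention (z as the vertical axis) are assumptions made here and are not taken from the original experimental code.

```python
import math

def heading_directions():
    """Return the 26 (azimuth, elevation) pairs, in degrees, that sample the sphere."""
    # 8 azimuths x 3 elevations = 24 directions
    directions = [(az, el) for el in (-45, 0, 45) for az in range(0, 360, 45)]
    # The two vertical headings; azimuth is arbitrary at the poles
    directions += [(0, 90), (0, -90)]
    return directions

def to_unit_vector(azimuth_deg, elevation_deg):
    """Convert a heading to a Cartesian unit vector (axis convention is an assumption)."""
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    return (math.cos(el) * math.cos(az),
            math.cos(el) * math.sin(az),
            math.sin(el))

headings = heading_directions()
vectors = [to_unit_vector(az, el) for az, el in headings]
print(len(headings))
```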

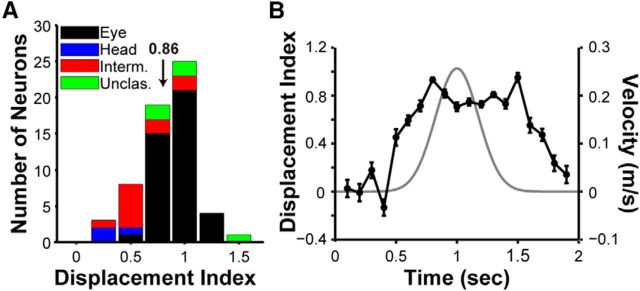

Figure 7.

Quantification of reference frames by the DI. A, Distribution of DI values for V6 neurons with significant heading tuning for at least two fixation locations (n = 60). Arrow indicates mean DI (0.86). The stacked bars are color coded to classify neurons based on their reference frames. Black, eye-centered; blue, head-centered; red, intermediate (between eye- and head-centered); green, unclassified (see Materials and Methods). B, The time course of the DI (black line), as computed using a sliding window analysis (see Materials and Methods). Error bars indicate SEM. The gray curve shows the Gaussian stimulus velocity profile.

There were two possible sensory stimulus conditions presented during the 3D heading tuning protocol. (1) For the vestibular condition, the visual display was blank except for a central fixation point. During fixation, the monkey was moved passively by the motion platform along one of the 26 trajectories described above. (2) For the visual condition, the animal maintained visual fixation while a 3D cloud of stars simulated one of the 26 movement trajectories and the motion platform remained stationary. One block of trials consisted of 53 distinct stimulus conditions: 26 heading directions for each stimulus type (visual and vestibular) plus one blank trial (fixation target only) to measure spontaneous activity. The goal was to acquire three to five blocks of successful trials for each neuron (three blocks, n = 34; four blocks, n = 11; five blocks, n = 61), and all stimulus conditions were interleaved randomly within each block of trials. Neurons without a minimum of three blocks of trials were excluded from analysis.

Reference frame protocol.

To quantify the spatial reference frames used by V6 neurons to signal heading, we used an approach similar to that detailed previously for studies in areas MSTd (Fetsch et al., 2007) and VIP (Chen et al., 2013b). Briefly, the monkey fixated on a target that could appear at one of three possible locations (central, 0°; left of center, −22.5°; or right of center, 22.5°; Fig. 1C). During fixation, 10 heading trajectories were presented in the horizontal plane: eight directions spaced 45° apart (0, 45, 90, 135, 180, 225, 270, and 315°), plus two additional directions (±22.5°) around straight ahead (Fig. 1C). The velocity profiles were the same as described above. There were a total of 63 (randomly interleaved) trial types: 10 heading directions crossed with two stimulus types (visual and vestibular) and three fixation locations plus three blank trials (one for each fixation location) to measure spontaneous activity. However, when it became clear that the vast majority of V6 neurons do not respond to vestibular signals (see Results), the reference frame protocol was streamlined to include only the visual stimulus condition (33 trials for each block). A total of n = 28 neurons were tested with the full reference frame protocol, whereas n = 45 neurons were tested with the streamlined protocol. Just as in the 3D heading tuning protocol, the goal was to maintain neural recordings long enough to acquire data from three to five blocks of stimulus presentations (three blocks, n = 6; four blocks, n = 14; five blocks, n = 53).
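
The trial counts for each protocol follow directly from crossing headings, stimulus types, and fixation locations and then adding one blank trial per fixation location. The sketch below reproduces that arithmetic; `build_block` and `shuffled_block` are hypothetical helpers written for this illustration, not part of the original stimulus software.

```python
import random
from itertools import product

def build_block(headings, stimulus_types, fixation_locations):
    """List every trial type in one block, including one blank trial per fixation location."""
    trials = [
        {"heading": h, "stimulus": s, "fixation": f}
        for h, s, f in product(headings, stimulus_types, fixation_locations)
    ]
    trials += [
        {"heading": None, "stimulus": "blank", "fixation": f}
        for f in fixation_locations
    ]
    return trials

def shuffled_block(trials, seed=None):
    """Return a randomly interleaved copy of the block (the seed is only for reproducibility)."""
    order = list(trials)
    random.Random(seed).shuffle(order)
    return order

horizontal_headings = [0, 45, 90, 135, 180, 225, 270, 315, -22.5, 22.5]
fixations = [-22.5, 0.0, 22.5]

full = build_block(horizontal_headings, ["visual", "vestibular"], fixations)
streamlined = build_block(horizontal_headings, ["visual"], fixations)
print(len(full), len(streamlined))
```

The same helper gives the 53 conditions of the 3D heading tuning protocol when called with 26 headings, two stimulus types, and a single central fixation location.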

Neural recordings.

We made extracellular recordings of single neurons in V6 with epoxy-coated tungsten microelectrodes (1–2 MΩ impedance at 1 kHz; FHC). Microelectrodes were advanced into the cortex through a transdural guide tube using a remote-controlled microdrive (FHC). To localize V6, we first took structural MRI scans and identified V6 and the surrounding cortical areas using CARET software (Van Essen et al., 2001). Structural MRI scans were segmented, flattened, and morphed onto a standard macaque atlas using CARET. Subsequently, areal boundaries from different cortical parcellation schemes could be mapped onto the flat maps and the original MRI volumes using CARET. Areas V3, V6A, and V6 were identified based on the “Galletti et al. 99” parcellation scheme (Galletti et al., 1999a), and the surrounding areas were identified based on the “LVE00” parcellation scheme (Lewis and Van Essen, 2000).

This MRI analysis procedure provided a set of candidate grid locations to access V6 with microelectrodes. Next, we manually mapped the physiological response properties of neurons by making many electrode penetrations within and around the candidate grid locations. We measured the size (width and height) and visuotopic location of each cell's visual RF to help distinguish V6 from neighboring regions. By also mapping RFs in areas V3 and V6A, we were able to use RF size and previously described topographic relationships of areas V3, V6A, and V6 (Galletti et al., 1999a, 2005) to validate our localization of V6. The CARET software also allowed us to project the locations of recorded neurons onto flat maps of the cortical surface, as shown in Figure 2A for each animal. The manually mapped RFs in V6 are shown in Figure 2B (ellipses). RF locations of neurons and their projected cortical locations (Fig. 2A) are consistent with previous descriptions of V6 retinotopy, which suggests that we properly targeted V6 (Galletti et al., 1999a, 2005).

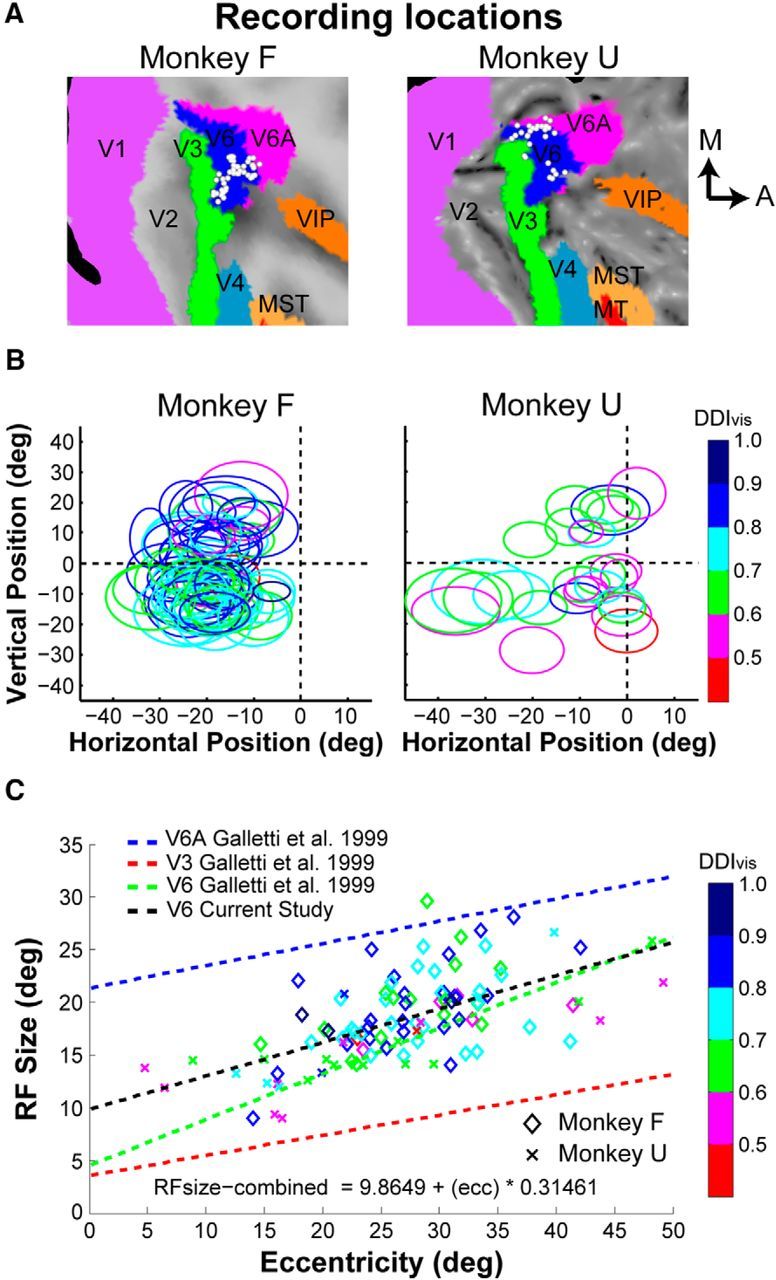

Figure 2.

Recording locations in V6 and RF properties. A, Recording sites in V6 have been projected onto flattened MRI maps of each monkey using CARET software (see Materials and Methods). A, anterior; M, medial. B, RFs for each neuron are shown as ellipses, separately for each animal. The RFs are color coded to reflect the visual DDIvis value for each neuron. C, RF size (square root of RF area) is plotted as a function of eccentricity (n = 106 V6 neurons). The black trend line shows the linear regression fit for V6 neurons in this study, and this relationship is very similar to a previously reported trend (green line) for V6 (Galletti et al., 1999a). In contrast, the trend lines for areas V6A (blue line) and V3 (red line), from a previous report (Galletti et al., 1999a), show that RF sizes in V6 are distinct from those in V6A and V3.

We also plotted RF size (square root of RF area) against eccentricity for all V6 neurons (Fig. 2C). For comparison, we plotted the relationships between RF size and eccentricity from a previous study (Galletti et al., 1999a) of areas V6A (blue line), V3 (red line), and V6 (green line). Our RF size versus eccentricity relationship (black line) is very similar to that reported previously for V6 (Galletti et al., 1999a). In contrast, RF sizes reported previously for V6A are substantially larger, and RFs reported for V3 are substantially smaller (Fig. 2C). Thus, the combination of our MRI localization of recording sites and the functional properties of the recorded neurons strongly suggests that our recordings were correctly targeted to V6. For the 3D heading tuning protocol, all neurons encountered in V6 were included as long as their RFs were contained within the area of the visual display (n = 106). The same requirement was used for the reference frame protocol; however, because we often ran the protocols in succession, some cells were lost during the 3D heading tuning protocol, and this reduced the total number of neurons tested in the reference frame protocol (n = 73).

Data analyses.

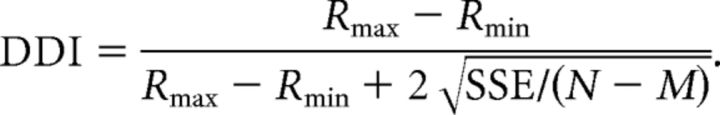

All analyses were performed using custom scripts written in MATLAB (MathWorks). Except when noted, the central 1 s of neuronal activity during stimulus presentation was used to calculate the firing rate for each trial. For all 106 neurons recorded during the 3D heading tuning protocol, we plotted the mean firing rate data on Cartesian axes by transforming the spherical tuning functions using the Lambert cylindrical equal-area projection (Snyder, 1987). We then evaluated the tuning strength of each neuron by computing its direction discrimination index (DDI; Takahashi et al., 2007). The DDI metric quantifies the strength of response modulation (difference in firing rate between preferred and null directions) relative to response variability. DDI was defined as follows:

$$\mathrm{DDI} = \frac{R_{\max} - R_{\min}}{R_{\max} - R_{\min} + 2\sqrt{SSE/(N - M)}}$$

Here, Rmax and Rmin represent the maximum and minimum responses from the measured 3D tuning function, respectively. SSE is the sum squared error around the mean responses, N is the total number of observations (trials), and M is the number of tested stimulus directions (for the 3D heading tuning protocol, M = 26). DDI is a signal-to-noise metric (conceptually similar to d′) that is normalized to range from 0 to 1. Neurons with strong response modulations relative to their variability will take on values closer to 1, whereas neurons with weak response modulations take on values closer to 0. However, note that even neurons with very weak tuning may have DDI values well above 0 because (Rmax − Rmin) will always be positive as a result of response variability. Moreover, neurons with moderate response modulations and large variability may yield DDI values similar to those with smaller response modulations and low variability (see Results).
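
As a rough illustration of how the DDI behaves, the following Python sketch computes it from per-trial firing rates grouped by stimulus direction. The function name and data layout are assumptions made for this example; the original analysis was performed in MATLAB.

```python
import math

def ddi(responses_by_direction):
    """Direction discrimination index.

    responses_by_direction: list with one entry per stimulus direction,
    each entry being a list of single-trial firing rates.
    """
    means = [sum(r) / len(r) for r in responses_by_direction]
    r_max, r_min = max(means), min(means)
    # Sum squared error of single trials around the mean response for each direction
    sse = sum((x - m) ** 2
              for trials, m in zip(responses_by_direction, means)
              for x in trials)
    n_obs = sum(len(r) for r in responses_by_direction)   # N: total trials
    n_dirs = len(responses_by_direction)                   # M: tested directions
    noise = 2.0 * math.sqrt(sse / (n_obs - n_dirs))
    denominator = (r_max - r_min) + noise
    return (r_max - r_min) / denominator if denominator > 0 else 0.0

# Two directions, two trials each (toy numbers, not recorded data)
print(ddi([[8.0, 12.0], [0.0, 4.0]]))
```

With no trial-to-trial variability the index reaches 1, and with identical mean responses across directions it falls to 0, matching the range described above.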

We estimated the heading preference of neurons with significant heading tuning (ANOVA, p < 0.05, n = 96) by computing the vector sum of the responses for all 26 headings. In addition, we computed a confidence interval (CI) for the preferred heading of each neuron by resampling the data (with replacement). The procedure was repeated 1000 times to produce a 95% CI for the azimuth preference and a 95% CI for the elevation preference. We used these CIs to determine whether the heading preference of each neuron was significantly different from axes within the vertical (i.e., fronto-parallel) plane.
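
The vector-sum estimate of preferred heading can be sketched as follows. The function name, the dictionary layout, and the Cartesian axis convention are assumptions for illustration; the bootstrap CI step is not shown.

```python
import math

def preferred_heading(mean_rates):
    """Vector-sum heading preference.

    mean_rates: dict mapping (azimuth_deg, elevation_deg) -> mean firing rate.
    Returns (azimuth, elevation) of the summed response vector, in degrees.
    """
    x = y = z = 0.0
    for (az_deg, el_deg), rate in mean_rates.items():
        az, el = math.radians(az_deg), math.radians(el_deg)
        # Each heading contributes a unit vector weighted by the mean response
        x += rate * math.cos(el) * math.cos(az)
        y += rate * math.cos(el) * math.sin(az)
        z += rate * math.sin(el)
    azimuth = math.degrees(math.atan2(y, x)) % 360.0
    elevation = math.degrees(math.atan2(z, math.hypot(x, y)))
    return azimuth, elevation
```

To obtain CIs in the spirit of the text, one would resample trials with replacement for each heading, recompute the mean rates, and call this function on each resampled data set.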

To determine whether the distribution of preferred azimuth angles differed significantly from a uniform distribution, we performed a test for uniformity based on resampling procedures described previously (Takahashi et al., 2007). We started by calculating the SSE (across bins) between the measured distribution and an ideal uniform distribution containing the same number of observations. We then recomputed the SSE between a resampled distribution and the ideal uniform distribution. This second procedure was repeated 1000 times to generate a distribution of SSE values. We then determined whether the SSE between the experimental data and the ideal uniform distribution was outside the 95% CI (p < 0.05) of SSE values computed between the ideal uniform distribution and the 1000 resampled distributions. After the uniformity test, we performed a modality test based on the kernel density estimate method (Silverman, 1981; Fisher and Marron, 2001), as described previously (Takahashi et al., 2007). A von Mises function (the circular analog of the normal distribution) was used as the kernel for the circular data. Watson's U² statistic (Watson, 1961), corrected for grouping (Brown, 1994), was computed as a goodness-of-fit test statistic to obtain a p value through a bootstrapping procedure with 1000 iterations. We generated two p values. The first p value (p_uni) was a test for unimodality, and the second p value (p_bi) was a test for bimodality. In the scenario in which p_uni < 0.05 and p_bi > 0.05, unimodality is rejected and the distribution is considered bimodal.

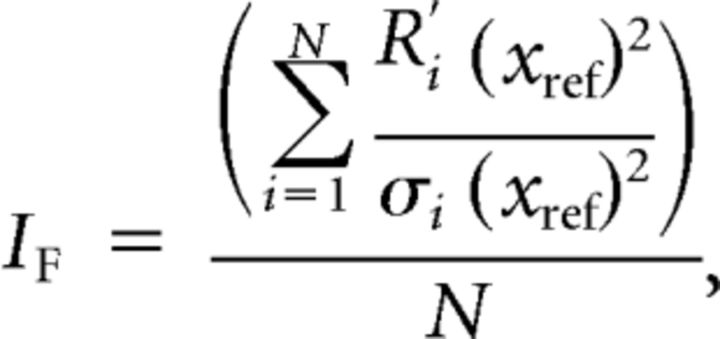

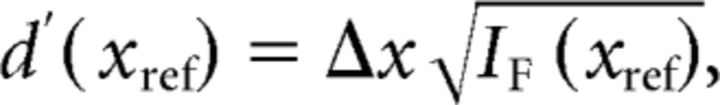

Using a procedure described previously (Gu et al., 2010), responses from V6 neurons that were significantly tuned (ANOVA, p < 0.05) in the horizontal plane were used to compute Fisher information (n = 86). Fisher information provides a theoretical upper limit on the precision with which any unbiased estimator can discriminate small variations in a variable around a reference value. By assuming that neurons have independent Poisson spiking statistics, the average Fisher information can be defined as follows:

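$$I_F(x_{\mathrm{ref}}) = \frac{1}{N}\sum_{i=1}^{N}\frac{R_i'(x_{\mathrm{ref}})^2}{\sigma_i(x_{\mathrm{ref}})^2}$$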

where IF is the Fisher information, N is the number of neurons within the population, Ri′(xref) is the derivative of the tuning curve at xref (the reference heading) for the ith neuron, and σi(xref) is the standard deviation of the response of the ith neuron at the reference heading. To obtain the slope of the tuning curve, Ri′(xref), we interpolated the tuning function to 0.1° resolution with a spline function and then computed the derivative of the interpolated tuning function. Because we assume Poisson statistics, the response variance of a neuron is equal to the mean firing rate.

We expressed the upper bound for the discriminability (d′) of two closely spaced stimuli as follows:

$$d' = \Delta x \sqrt{I_F(x_{\mathrm{ref}})}$$

where Δx is the difference between two stimuli (xref and xref + Δx). For direct comparison with area MSTd, we used a criterion value of d′ = √2 (Gu et al., 2010). To produce 95% CIs on IF, we used a bootstrapping procedure in which random samples of neurons were generated via resampling with replacement (1000 iterations) from the recorded population. The relationships given above for Fisher information and d′ assume that neurons have independent noise. Although this is unlikely to be the case for V6, we demonstrated previously that incorporating correlated noise into the computation of Fisher information changed the overall amount of information in the population but had little effect on the shape of the relationship between Fisher information and reference heading (Gu et al., 2010).
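
The two relationships above can be combined into a short numerical sketch. Two simplifications are assumed here and are not part of the original analysis: tuning curves are passed in as Python functions, and the slope is taken by central finite differences with a 0.1° step rather than from a spline fit. Function names are hypothetical.

```python
import math

def fisher_information(tuning_fns, x_ref, step=0.1):
    """Average Fisher information at x_ref, assuming independent Poisson neurons.

    tuning_fns: list of callables, each mapping heading (deg) to mean firing rate.
    """
    total = 0.0
    for f in tuning_fns:
        # Central-difference estimate of the tuning-curve slope at the reference heading
        slope = (f(x_ref + step) - f(x_ref - step)) / (2.0 * step)
        variance = f(x_ref)  # Poisson assumption: variance equals mean rate
        if variance > 0:
            total += slope ** 2 / variance
    return total / len(tuning_fns)

def discrimination_threshold(info, d_prime=math.sqrt(2.0)):
    """Smallest heading difference (deg) reaching the criterion d', from d' = dx * sqrt(I_F)."""
    return d_prime / math.sqrt(info)

# Toy neuron with a locally linear tuning curve (illustrative numbers only)
toy = [lambda x: 10.0 + 2.0 * x]
info = fisher_information(toy, x_ref=0.0)
print(info, discrimination_threshold(info))
```

Steeper tuning-curve slopes at the reference heading raise the information and lower the threshold, which is why discrimination is best where many neurons have their steepest slopes.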

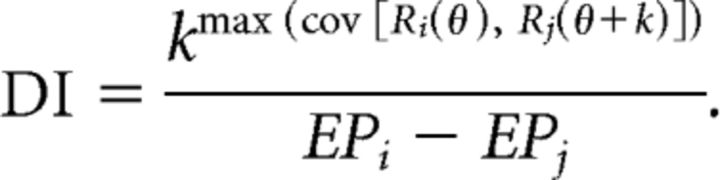

For the 73 neurons recorded during the reference frame protocol, the spatial reference frame of heading tuning was assessed using a cross-covariance technique (Avillac et al., 2005; Fetsch et al., 2007; Chen et al., 2013b). As described previously for areas MSTd (Fetsch et al., 2007) and VIP (Chen et al., 2013b), we linearly interpolated the tuning functions for each eye position to 1° resolution. For each pair of eye positions, we determined the amount of displacement (relative shift of the two tuning curves) that resulted in the largest covariance. We then normalized the shift having maximum covariance by the change in eye position to yield the displacement index (DI):

$$\mathrm{DI} = \frac{k^{\max[\operatorname{cov}(R_i(\theta),\,R_j(\theta + k))]}}{EP_i - EP_j}$$

The variable k (in degrees) is the relative displacement of the tuning functions (Ri and Rj). The superscript above k refers to the maximum covariance between the tuning curves as a function of k (ranging from −180° to 180°). The denominator (EPi − EPj) represents the difference in eye position between the pair of fixation target locations at which the tuning functions were measured. If the tuning curve of a neuron shifts by an amount equal to the change in eye position, then DI takes on a value of 1 (eye-centered tuning). If the tuning curve of a neuron shows no shift with eye position, then DI takes on a value of 0 (head-centered tuning).
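
A minimal Python sketch of the cross-covariance procedure is given below. It assumes tuning curves are interpolated onto a circular 1° grid and searches integer shifts from −180° to 180°; the helper names are hypothetical, and the sign convention simply follows the DI definition given in the text.

```python
import math

def interpolate_circular(headings_deg, rates, resolution=1.0):
    """Linearly interpolate a circular tuning curve onto a regular grid over 0-360 deg."""
    pts = sorted((h % 360.0, r) for h, r in zip(headings_deg, rates))
    pts.append((pts[0][0] + 360.0, pts[0][1]))  # close the circle
    n = int(round(360.0 / resolution))
    curve = []
    for i in range(n):
        theta = i * resolution
        if theta < pts[0][0]:
            theta += 360.0
        for (h0, r0), (h1, r1) in zip(pts, pts[1:]):
            if h0 <= theta <= h1:
                curve.append(r0 + (r1 - r0) * (theta - h0) / (h1 - h0))
                break
    return curve

def covariance(a, b):
    """Population covariance of two equal-length sequences."""
    mean_a, mean_b = sum(a) / len(a), sum(b) / len(b)
    return sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b)) / len(a)

def displacement_index(curve_i, curve_j, eye_pos_i, eye_pos_j):
    """DI for one pair of eye positions; curves must be sampled at 1 deg resolution."""
    n = len(curve_i)
    best_k, best_cov = 0, float("-inf")
    for k in range(-180, 181):
        shifted_j = [curve_j[(t + k) % n] for t in range(n)]  # R_j(theta + k)
        c = covariance(curve_i, shifted_j)
        if c > best_cov:
            best_k, best_cov = k, c
    return best_k / (eye_pos_i - eye_pos_j)

# Synthetic curves: the second is the first displaced by 45 deg (not recorded data)
base = [math.exp(math.cos(math.radians(t - 90))) for t in range(360)]
shifted = [base[(t - 45) % 360] for t in range(360)]
print(displacement_index(base, shifted, 22.5, -22.5))
```

For neurons tuned at all three eye positions, the three pairwise DI values would then be averaged.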

Fifty-six neurons in our sample showed significant heading tuning at all three tested eye positions and therefore yielded three DI values (one for each pair of tuning curves); for these neurons, we took the average to provide a single DI value. Four additional neurons were tuned for two eye positions and provided a single DI value (60 neurons total with DI values). The remaining 13 neurons lacked significant heading tuning (ANOVA, p < 0.05) for at least two eye positions, and thus no DI could be computed. We also computed how the DI changed over time during the 2 s stimulus presentation as done previously in MSTd (Fetsch et al., 2007). For each neuron, we computed firing rates within a sliding 200 ms window that was moved in increments of 100 ms, and we then computed the DI for each time window, as described above.
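
The sliding-window firing rates used for the DI time course could be computed along the following lines. This sketch assumes that windows lie entirely within the 2 s stimulus period and that spike times are given in milliseconds relative to stimulus onset; both are assumptions, as is the function name.

```python
def sliding_window_rates(spike_times_ms, duration_ms=2000, window_ms=200, step_ms=100):
    """Return (window center in ms, firing rate in spikes/s) for each sliding window."""
    rates = []
    start = 0
    while start + window_ms <= duration_ms:
        count = sum(1 for t in spike_times_ms if start <= t < start + window_ms)
        rates.append((start + window_ms / 2.0, count / (window_ms / 1000.0)))
        start += step_ms
    return rates

# Regular 100 spikes/s train (synthetic) to check the window bookkeeping
spikes = list(range(0, 2000, 10))
windows = sliding_window_rates(spikes)
print(len(windows))
```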

We computed CIs for each DI using a bootstrap method (Chen et al., 2013b). We generated bootstrap tuning curves by resampling (with replacement) the data for each heading and then computed a DI for each bootstrapped tuning curve. This procedure was repeated 1000 times to produce a 95% CI. A DI value was considered significantly different from a particular value (0 or 1) if its 95% CI did not include that value. A neuron was classified as eye-centered if the CI did not include 0 but included 1. A neuron was classified as head/body-centered if the CI did not include 1 but included 0. Finally, neurons were classified as having “intermediate” reference frames if the CI was contained within the interval between 0 and 1, without including 0 or 1. All other cases were designated as “unclassified.”
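
The classification rules above translate directly into a small decision function. The function name is hypothetical; the inputs are the lower and upper bounds of the bootstrap 95% CI for a neuron's DI.

```python
def classify_reference_frame(ci_low, ci_high):
    """Classify a neuron from the 95% CI of its displacement index."""
    includes_0 = ci_low <= 0.0 <= ci_high
    includes_1 = ci_low <= 1.0 <= ci_high
    if includes_1 and not includes_0:
        return "eye-centered"
    if includes_0 and not includes_1:
        return "head-centered"
    if ci_low > 0.0 and ci_high < 1.0:
        return "intermediate"
    return "unclassified"

print(classify_reference_frame(0.7, 1.1))
```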

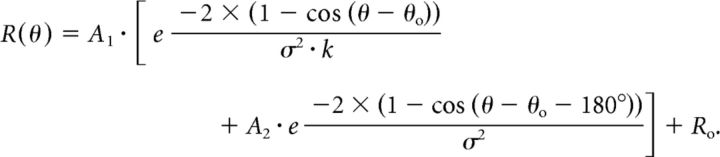

In addition to the DI analysis, we also used a curve-fitting analysis to assess whether the tuning of a neuron was more consistent with an eye-centered or head-centered representation (for details, see Fetsch et al., 2007; Chen et al., 2013b). To compare eye-centered and head-centered models, we first fit the response profile of each V6 neuron with a modified circular Gaussian of the following form (Fetsch et al., 2007):

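$$R(\theta) = A_1\left[\exp\left(\frac{-2\,(1 - \cos(\theta - \theta_o))}{(\sigma k)^2}\right) + A_2\exp\left(\frac{-2\,(1 - \cos(\theta - \theta_o - 180^\circ))}{\sigma^2}\right)\right] + R_o$$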

Here, A1 is the overall response amplitude, Ro is the baseline firing rate, θo is the peak location of the tuning curve, and σ is the tuning width. The second exponential term allows for a second peak 180° out-of-phase with the first if A2 is sufficiently large. Similar to MSTd (Fetsch et al., 2007), only a small number of V6 neurons (n = 5) required the second term to be fit adequately. The relative widths of the two peaks are determined by k. Data for all eye positions were fit simultaneously using “fmincon” in MATLAB. Five of the six parameters in the third equation were free to vary with eye position. Only θo (the tuning curve peak) was a common parameter across the curve fits for different eye positions, and it was constrained in one of two ways: (1) its value was offset by the eye fixation location for each curve (eye-centered model); or (2) its value was constant across eye positions (head-centered model).
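
To make the two model constraints concrete, the sketch below evaluates a two-term circular Gaussian and shows how the peak parameter would be handled under each model. The exact functional form in the docstring, the treatment of σ in radians, and the sign of the eye-position offset are assumptions for illustration (the sign depends on the azimuth convention). The fitting step itself, performed with `fmincon` in the original analysis, is not reproduced.

```python
import math

def circular_gaussian(theta_deg, a1, a2, sigma, k, theta0_deg, r0):
    """Assumed form:
    R = A1 * [exp(-2(1 - cos(th - th0)) / (sigma*k)^2)
              + A2 * exp(-2(1 - cos(th - th0 - 180deg)) / sigma^2)] + R0
    """
    d = math.radians(theta_deg - theta0_deg)
    first = math.exp(-2.0 * (1.0 - math.cos(d)) / (sigma * k) ** 2)
    second = a2 * math.exp(-2.0 * (1.0 - math.cos(d - math.pi)) / sigma ** 2)
    return a1 * (first + second) + r0

def model_peak(theta0_deg, eye_pos_deg, model):
    """Peak location used for one eye position under each model."""
    if model == "eye-centered":
        # Assumed sign: the peak shifts opposite to the eye-position value
        return theta0_deg - eye_pos_deg
    if model == "head-centered":
        return theta0_deg
    raise ValueError("model must be 'eye-centered' or 'head-centered'")

for eye_pos in (-22.5, 0.0, 22.5):
    peak = model_peak(90.0, eye_pos, "eye-centered")
    print(eye_pos, circular_gaussian(90.0, 10.0, 0.0, 1.0, 1.0, peak, 2.0))
```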

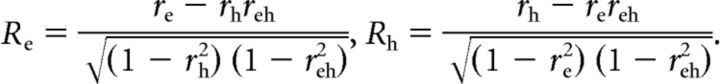

For each model (eye-centered and head-centered), the best-fitting function was compared with the data to determine the goodness-of-fit. To remove the correlation between the eye-centered and head-centered models, we computed partial correlation coefficients as follows:

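$$R_e = \frac{r_e - r_h r_{eh}}{\sqrt{(1 - r_h^2)(1 - r_{eh}^2)}}, \qquad R_h = \frac{r_h - r_e r_{eh}}{\sqrt{(1 - r_e^2)(1 - r_{eh}^2)}}$$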

Here, the simple correlation coefficients between the data and the eye- and head-centered models are given by re and rh, respectively, whereas the correlation between the two models is given by reh. Partial correlation coefficients Re and Rh were normalized using Fisher's r-to-Z transform to allow for comparisons based on the difference between Z scores (Angelaki et al., 2004; Smith et al., 2005; Fetsch et al., 2007; Chen et al., 2013b). Using this analysis, we generated a scatter plot of Z-scored partial correlation coefficients for the eye-centered versus head-centered models (see Results; Fig. 8C). A cell was considered to be significantly eye-centered if the Z score for the eye-centered model was >1.645 and exceeded the head-centered Z score by at least that amount (equivalent to p < 0.05). Similarly, a cell was considered to be head-centered if the Z score for the head-centered model was >1.645 and exceeded the eye-centered Z score by at least that amount. The remaining neurons were considered unclassified. This classification was applied to all neurons with a curve-fit R² ≥ 0.75 for either the head-centered or eye-centered model (n = 58).
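
The partial-correlation comparison can be sketched as follows. The function names are hypothetical, and the scaling of the Fisher transform by √(n − 3), with n the number of data points entering the correlation, is an assumption about the normalization rather than something stated in the text.

```python
import math

def partial_correlations(r_e, r_h, r_eh):
    """Partial correlations of the data with each model, removing the other model's influence."""
    big_r_e = (r_e - r_h * r_eh) / math.sqrt((1 - r_h ** 2) * (1 - r_eh ** 2))
    big_r_h = (r_h - r_e * r_eh) / math.sqrt((1 - r_e ** 2) * (1 - r_eh ** 2))
    return big_r_e, big_r_h

def fisher_z(r, n_points):
    """Fisher r-to-Z transform; the sqrt(n - 3) scaling is an assumption."""
    return math.atanh(r) * math.sqrt(n_points - 3)

def classify_by_z(z_eye, z_head, criterion=1.645):
    """Apply the two-part criterion described in the text."""
    if z_eye > criterion and z_eye - z_head >= criterion:
        return "eye-centered"
    if z_head > criterion and z_head - z_eye >= criterion:
        return "head-centered"
    return "unclassified"

# Illustrative correlation values (not recorded data)
R_e, R_h = partial_correlations(0.9, 0.5, 0.6)
print(classify_by_z(fisher_z(R_e, 30), fisher_z(R_h, 30)))
```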

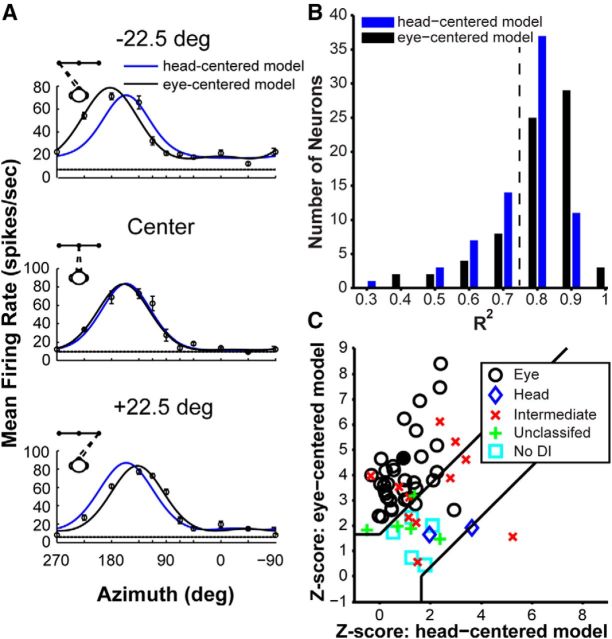

Figure 8.

Quantification of reference frames by model fitting. A, Heading tuning curves (mean ± SEM firing rate) for an example V6 neuron (filled circle in C) measured at three different eye positions, along with fits of the eye-centered (black curves) and head-centered (blue curves) models. B, Distribution of R² values for the eye-centered model (black bars) and the head-centered model (blue bars), for all neurons recorded during the reference frame protocol (n = 73). The dotted black vertical line represents R² = 0.75. C, Z-transformed partial correlation coefficients for the eye- and head-centered model fits. Data are shown for neurons with curve fits having R² ≥ 0.75 for either the eye- or head-centered model (n = 58). Data points falling in the top left region represent neurons that are classified as eye-centered, whereas data points falling in the bottom right region are classified as head-centered neurons. Data points falling between the lines are considered unclassified. The different markers indicate neuron classification based on the DI bootstrap analysis: black circles denote eye-centered cells, blue diamonds denote head-centered cells, red × symbols denote intermediate (between eye- and head-centered) neurons, green + symbols denote neurons that are considered unclassified, and cyan squares indicate neurons for which the DI could not be computed.

Results

Single-unit activity was recorded from 106 V6 neurons in two head-fixed monkeys that performed a fixation task (n = 77 from the left hemisphere in monkey F and n = 29 from the right hemisphere in monkey U). Using a virtual reality system (Fig. 1A), we presented either optic flow that simulated translational motion (visual condition) or actual platform motion (vestibular condition) along one of 26 headings in spherical coordinates (Fig. 1B). In addition, for 73 V6 neurons (n = 46 from monkey F and n = 27 from monkey U), we recorded responses to 10 different headings in the horizontal plane while the animal fixated at three different target locations (Fig. 1C).

As described in Materials and Methods, the location of each recording site was mapped onto segmented and flattened MRI images (Fig. 2A). RF dimensions and visuotopic locations were quantified for each animal (Fig. 2B). We additionally plotted RF size (square root of RF area) against eccentricity for V6, as well as neighboring areas V3 and V6A (Fig. 2C). Together, the relationship between recording site locations and RF locations, as well as the relationship between RF size and eccentricity, is consistent with previous descriptions of the visuotopic organization of V6 (Galletti et al., 1999a, 2005), suggesting that our recordings were targeted correctly.

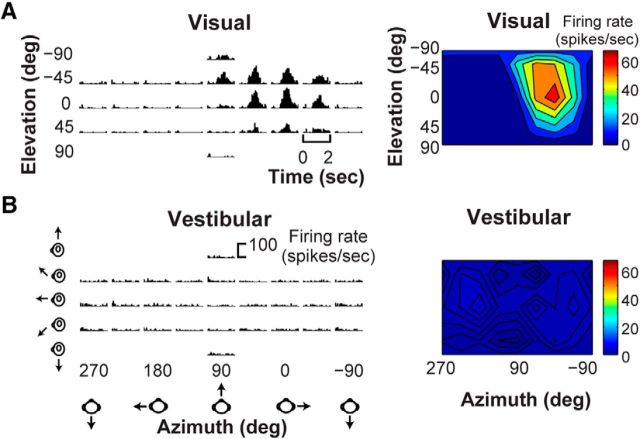

V6 responds primarily to visual heading stimuli

We first tested V6 neurons with visual and vestibular stimuli that probed a range of headings in 3D (see Materials and Methods). In general, V6 cells showed robust responses to optic flow simulating 3D translation but little or no response to platform motion, as illustrated in the peristimulus time histograms (PSTHs) for a typical V6 neuron in Figure 3, A and B (left panels). To visualize 3D heading tuning, mean firing rate was plotted on Cartesian axes using the Lambert cylindrical equal-area projection (Snyder, 1987; Fig. 3A,B, right panels). The example neuron responded most vigorously to optic flow stimuli that simulated rightward translation but showed no significant tuning to vestibular stimuli.
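The projection used for the contour maps can be illustrated with a minimal sketch. It assumes the standard Lambert cylindrical equal-area form, in which the abscissa is azimuth and the ordinate is the sine of elevation. The function names and the layout of the 26 headings (8 azimuths at each of 3 elevations, plus the two poles) are assumptions made for illustration, not the authors' code.

```python
import math

def lambert_cylindrical(azimuth_deg, elevation_deg):
    """Map a heading (azimuth, elevation in degrees) to flat (x, y) coordinates.

    x is azimuth in radians; y is sin(elevation), so equal areas on the
    sphere occupy equal areas on the plot.
    """
    x = math.radians(azimuth_deg)
    y = math.sin(math.radians(elevation_deg))
    return x, y

def heading_grid():
    """Assumed grid: 8 azimuths x 3 elevations (45 deg steps) plus 2 poles."""
    headings = [(az, el) for el in (-45, 0, 45) for az in range(0, 360, 45)]
    headings += [(0, -90), (0, 90)]  # straight down and straight up
    return headings

# Flat coordinates at which each mean firing rate would be plotted.
plot_points = [lambert_cylindrical(az, el) for az, el in heading_grid()]
```

Because the ordinate is the sine of elevation, headings near the poles are compressed vertically, which compensates for their horizontal stretching.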

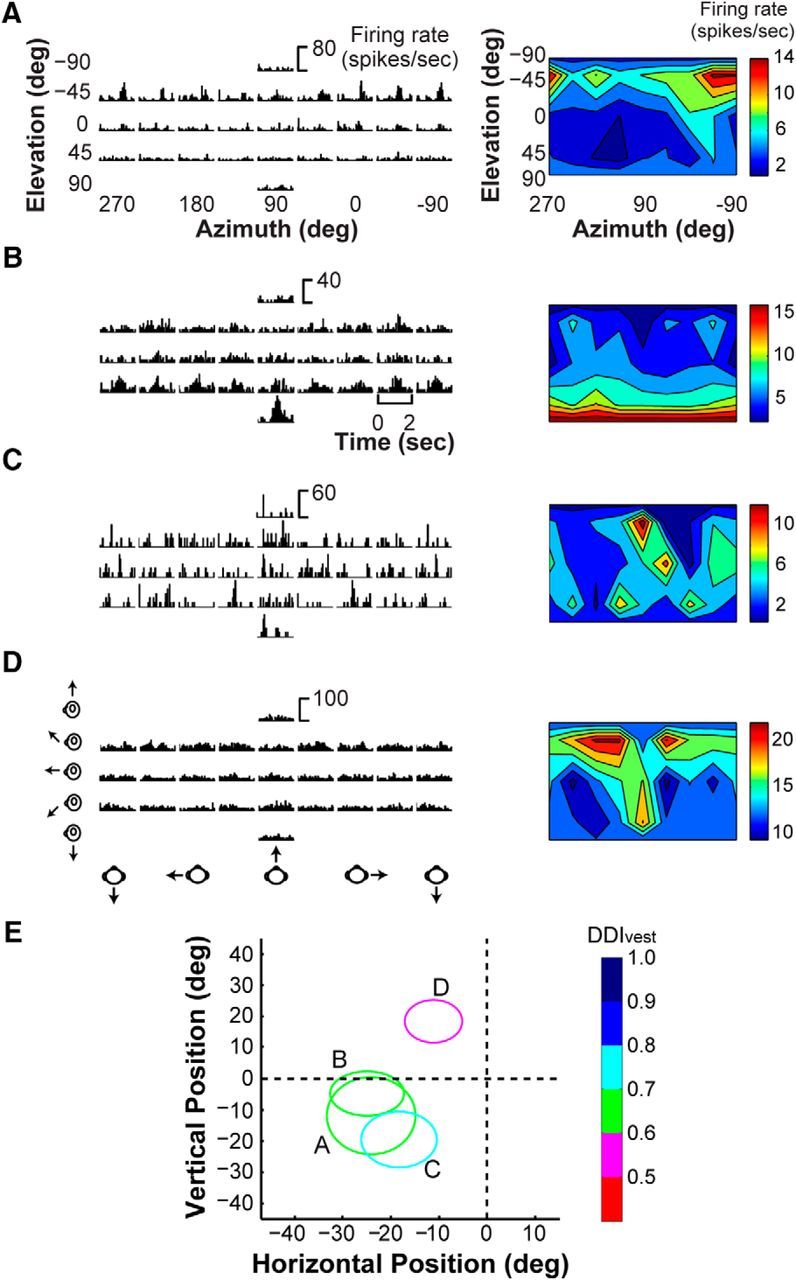

Figure 3.

3D heading tuning for an example V6 neuron. A, PSTHs (left) of responses of the example neuron to optic flow stimuli simulating 26 heading trajectories in 3D space. Visual heading tuning is summarized as a color contour map (right) using the Lambert cylindrical equal-area projection method (see Materials and Methods). B, PSTHs and the associated color contour map are shown for responses of the same neuron to vestibular heading stimuli.

Among the 106 V6 neurons recorded, 96 (90.6%) were significantly tuned for heading based on optic flow, whereas only four neurons (3.8%) were significantly tuned for heading in response to platform motion (ANOVA, p < 0.05). This suggests that vestibular signals are absent or rare in V6. Figure 4A–D shows raw data from the four V6 neurons with significant vestibular tuning. Two neurons with RFs near the horizontal meridian (Fig. 4E, labeled A and B) show PSTHs with clear temporal response modulations that are related to the stimulus trajectory for at least some headings (Fig. 4A,B), suggesting that these neurons have genuine vestibular responses. The other two neurons (Fig. 4C,D) do not show compelling temporal response modulations and may be false positives. Thus, only 2 of 106 neurons that we sampled in V6 appear to exhibit clear vestibular heading tuning. We considered the possibility that these neurons are not in V6. However, none of the four neurons with significant tuning have RFs near the vertical meridian (Fig. 4E), which represents the V6/V6A border (Galletti et al., 1999a, 2005), and although two neurons have RFs closer to the horizontal meridian, which is the V3/V6 border (Galletti et al., 1999a, 2005), their RF size and eccentricity do not suggest that they were V3 neurons.
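To put the count of four significantly tuned neurons in context, the number of false positives expected at the p < 0.05 criterion can be estimated with a short calculation. The sketch below assumes that the 106 tests are independent and that each has a false-positive rate of exactly 0.05. The function name is hypothetical, and the calculation is illustrative rather than part of the reported analysis.

```python
import math

def prob_at_least(k, n, alpha):
    """P(X >= k) for X ~ Binomial(n, alpha)."""
    below = sum(
        math.comb(n, i) * alpha**i * (1 - alpha) ** (n - i) for i in range(k)
    )
    return 1.0 - below

n_neurons, alpha, n_significant = 106, 0.05, 4

# Expected number of neurons passing the criterion by chance alone.
expected_false_positives = n_neurons * alpha

# Probability of observing at least four significant neurons by chance.
p_chance = prob_at_least(n_significant, n_neurons, alpha)
```

Under these assumptions roughly five false positives are expected, so a count of four is not by itself evidence of vestibular tuning, which is why the temporal response profiles of the individual neurons are informative.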

Figure 4.

Neurons with statistically significant vestibular tuning in V6. A–D, PSTHs (left) of the responses of the four neurons with significant vestibular tuning based on our statistical criterion (ANOVA, p < 0.05) are shown. Their respective vestibular heading tuning profiles are shown as color contour maps (right), format as in Figure 3. E, RF positions for the neurons for which responses are plotted in A–D. RFs are color coded to reflect the vestibular DDIvest value for each neuron.

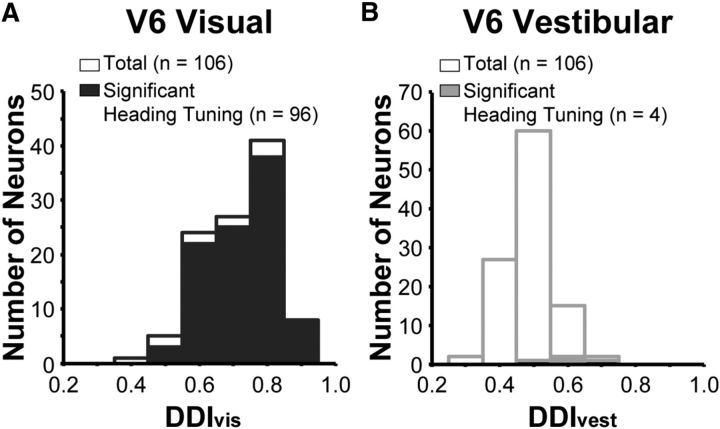

To quantify the strength of heading tuning, we computed a DDI, which is a signal-to-noise measure that takes on values between 0 and 1 (see Materials and Methods). In V6, neurons with significant tuning tend to have DDI values greater than ∼0.5 (Fig. 5; Table 1), consistent with analogous data from other areas (Gu et al., 2006; Takahashi et al., 2007; Chowdhury et al., 2009; Chen et al., 2010, 2011a,c). The mean value of DDI for the visual condition (DDIvis, 0.72 ± 0.010 SEM; Fig. 5A) is significantly greater than the mean DDI for the vestibular condition (DDIvest, 0.49 ± 0.007 SEM; Fig. 5B; paired t test, p = 5.27 × 10−37), indicating much stronger heading selectivity for visual than vestibular cues.
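The DDI is defined in Materials and Methods. As a hedged illustration, the sketch below assumes the form commonly used in the cited studies, DDI = (Rmax − Rmin) / (Rmax − Rmin + 2√(SSE/(N − M))), where Rmax and Rmin are the largest and smallest mean responses, SSE is the sum of squared deviations of single-trial responses from their condition means, N is the number of trials, and M is the number of headings. The function name and the input layout are hypothetical.

```python
import math

def ddi(responses_by_heading):
    """Direction discrimination index from single-trial firing rates.

    responses_by_heading: one list of single-trial rates per heading.
    Assumes more trials than headings, so that N - M > 0.
    """
    means = [sum(r) / len(r) for r in responses_by_heading]
    r_max, r_min = max(means), min(means)
    # Residual variability around each heading's mean response.
    sse = sum(
        (x - m) ** 2 for r, m in zip(responses_by_heading, means) for x in r
    )
    n_trials = sum(len(r) for r in responses_by_heading)
    n_headings = len(responses_by_heading)
    noise = 2.0 * math.sqrt(sse / (n_trials - n_headings))
    denom = (r_max - r_min) + noise
    return 0.0 if denom == 0 else (r_max - r_min) / denom
```

With this form, a neuron whose response modulation is large relative to its trial-to-trial variability approaches 1, and a neuron with no modulation across headings yields 0.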

Figure 5.

Summary of visual and vestibular heading selectivity. A, The distribution of DDIvis values for all 106 V6 neurons tested under the 3D heading tuning protocol. Filled bars indicate neurons that are significantly tuned for heading based on optic flow (ANOVA, p < 0.05). Open bars represent neurons without significant visual heading tuning. B, The distribution of DDIvest values for vestibular heading tuning in V6, format as in A.

Table 1.

Summary of mean DDIvis and DDIvest values for cortical areas studied using the 3D heading tuning protocol

| Cortical area | DDIvis | DDIvest |
|---|---|---|
| V6 | 0.72 ± 0.010 | 0.49 ± 0.007 |
| MT | 0.78 ± 0.013* | 0.47 ± 0.0009 |
| MSTd | 0.76 ± 0.01* | 0.59 ± 0.01** |
| VIP | 0.66 ± 0.01* | 0.61 ± 0.01** |
| VPS | 0.60 ± 0.01* | 0.69 ± 0.01** |
| PIVC | 0.49 ± 0.009* | 0.74 ± 0.02** |

Values are shown as mean ± SEM. Specific p values can be found in Results.

*p < 0.05, significant difference between the mean DDIvis value and the mean V6 DDIvis value.

**p < 0.05, significant difference between the mean DDIvest value and the mean V6 DDIvest value.

Because the same stimulus protocol was used to measure 3D heading tuning in five other cortical areas in previous studies, we are able to directly compare mean values of DDIvis and DDIvest for V6 with those from the middle temporal area (MT; Chowdhury et al., 2009), MSTd (Gu et al., 2006), VIP (Chen et al., 2011c), the visual posterior sylvian area (VPS; Chen et al., 2011a), and the parieto-insular vestibular cortex (PIVC; Chen et al., 2010; Table 1). We found that the mean DDIvis for V6 is significantly greater than the mean DDIvis values for areas VPS (unpaired t test, p = 1.73 × 10−14), VIP (p = 0.0044), and PIVC (p = 2.86 × 10−37) but is significantly less than mean DDIvis values for areas MSTd (p = 0.036) and MT (p = 8.31 × 10−4). In contrast, the mean value of DDIvest for V6 is significantly less than the corresponding mean values for areas MSTd (unpaired t test, p = 3.83 × 10−8), VPS (p = 1.12 × 10−6), VIP (p = 9.34 × 10−9), and PIVC (p = 7.40 × 10−51). The mean DDIvest for V6 is not significantly different from that measured in MT (p = 0.93). Thus, the pattern of results for V6 is overall most similar to that of MT (Chowdhury et al., 2009), showing strong selectivity for optic flow but little if any selectivity for vestibular heading cues.

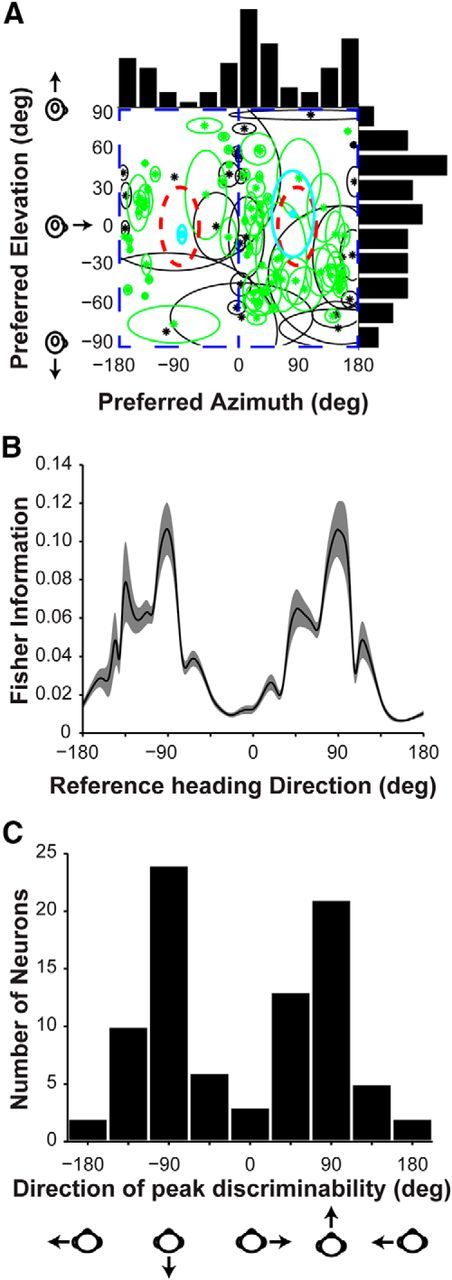

We also examined the heading preferences of V6 neurons with significant tuning (n = 96) by computing the vector sum of neural responses (Fig. 6A, shown for the visual condition only). Each data point in Figure 6A represents the heading preference of a V6 neuron in terms of azimuth and elevation angles. Only two V6 neurons had a heading preference within 30° of straight forward or backward directions (Fig. 6A, cyan data points inside red ellipses). This lack of V6 neurons with fore/aft heading preferences raises the question of whether V6 neurons simply prefer laminar motion and thus have heading preferences within the vertical plane. To test whether heading preferences deviate from the vertical plane, we computed the 95% CI for the heading preference of each neuron (Fig. 6A, ellipses; see Materials and Methods). For 70 of 96 neurons (Fig. 6A, cyan and green symbols), we found that heading preferences deviated significantly from the vertical plane, suggesting that many V6 neurons are tuned for headings that produce at least some degree of nonlaminar optic flow.
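The vector-sum estimate of heading preference can be sketched as follows. The sketch assumes that each heading is converted to a unit vector, weighted by the mean response to that heading, and summed; the spherical-coordinate convention and the function name are assumptions for illustration and may differ from those in Materials and Methods.

```python
import math

def preferred_heading(headings_deg, mean_rates):
    """Preferred (azimuth, elevation) in degrees from a response-weighted vector sum.

    headings_deg: list of (azimuth, elevation) pairs in degrees.
    mean_rates: mean firing rate for each heading, in the same order.
    """
    sx = sy = sz = 0.0
    for (az, el), rate in zip(headings_deg, mean_rates):
        a, e = math.radians(az), math.radians(el)
        # Unit vector for this heading, scaled by the mean response.
        sx += rate * math.cos(e) * math.cos(a)
        sy += rate * math.cos(e) * math.sin(a)
        sz += rate * math.sin(e)
    norm = math.sqrt(sx * sx + sy * sy + sz * sz)
    if norm == 0:
        raise ValueError("responses cancel exactly; preference is undefined")
    azimuth = math.degrees(math.atan2(sy, sx)) % 360.0
    elevation = math.degrees(math.asin(sz / norm))
    return azimuth, elevation
```

A bootstrap over trials, repeating this calculation on resampled responses, is one way to obtain confidence intervals of the kind shown as ellipses in Figure 6A.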

Figure 6.

Summary of V6 visual heading preferences and discriminability. A, Scatter plot of the visual heading preferences of V6 neurons. Each datum is plotted at the preferred azimuth and elevation of a V6 neuron with significant tuning (n = 96). Green and cyan data points represent the 70 neurons for which the 95% CI around the heading preference does not overlap with heading directions in the vertical plane (blue rectangular outlines). The 95% CIs are represented as ellipses whose major axes are determined by 95% CIs computed separately for the preferred azimuth and elevation. The red outlines represent ±30° around straight forward and backward headings. Cyan symbols highlight the two neurons that fall within this region. B, The average Fisher information computed from the visual responses of V6 neurons that are significantly tuned (ANOVA, p < 0.05) in the horizontal plane (n = 86). The error bands correspond to 95% CIs computed by bootstrap (see Materials and Methods). C, Distribution of the directions of peak discriminability (i.e., peak Fisher information) for V6 neurons with significant visual heading tuning in the horizontal plane (n = 86).

The distribution of azimuth preferences of V6 neurons is strongly non-uniform (p < 0.001, uniformity test; see Materials and Methods) and clearly bimodal (puni < 0.01 and pbi > 0.99, modality test; see Materials and Methods), with most neurons tending to prefer lateral (leftward or rightward) motion (Fig. 6A, top histogram). To better understand how this non-uniform distribution of azimuth preferences influences the precision of heading discrimination, we computed the average Fisher information for neurons with significant heading tuning (ANOVA, p < 0.05) in the horizontal plane (n = 86; Fig. 6B; see Materials and Methods). In addition, Figure 6C shows the distribution of azimuth angles having peak heading discriminability (peak Fisher information) for each V6 neuron. Because the heading tuning curves of V6 neurons are broad and tend to prefer lateral motion, the largest Fisher information (and thus peak discriminability) occurs around forward and backward headings, at which few neurons have their maximal responses. As observed previously, discrimination is best where tuning curves are steepest (Regan and Beverley, 1983, 1985; Wilson and Regan, 1984; Bradley et al., 1987; Vogels and Orban, 1990; Snowden et al., 1992; Hol and Treue, 2001; Purushothaman and Bradley, 2005; Gu et al., 2006; Chen et al., 2011c). It should be noted that this pattern of results may be expected if V6 neurons simply represent laminar flow (i.e., 2D visual motion) and have broad tuning. However, many V6 neurons appear to prefer directions that are not within the vertical plane (Fig. 6A, green symbols), suggesting that this may not be the case (see Discussion).
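The relationship between tuning slope and discriminability can be made concrete with a minimal single-neuron sketch. It assumes that Fisher information is the squared slope of the tuning curve divided by the response variance, and that the variance equals the mean rate (a Poisson assumption). The cosine tuning curve, its parameters, and the function name are hypothetical.

```python
import math

def fisher_information(rates, step_deg):
    """Fisher information at each sampled azimuth of a circular tuning curve.

    rates: mean firing rates at evenly spaced azimuths covering 360 deg.
    step_deg: spacing between adjacent azimuths in degrees.
    """
    n = len(rates)
    step = math.radians(step_deg)
    info = []
    for i in range(n):
        # Central difference with circular wrap-around.
        slope = (rates[(i + 1) % n] - rates[i - 1]) / (2 * step)
        variance = max(rates[i], 1e-9)  # Poisson assumption: variance = mean
        info.append(slope**2 / variance)
    return info

# Hypothetical broadly tuned neuron preferring azimuth 0 deg.
azimuths = list(range(0, 360, 15))
rates = [20.0 + 10.0 * math.cos(math.radians(a)) for a in azimuths]
info = fisher_information(rates, 15)
peak_azimuth = azimuths[info.index(max(info))]
```

For this example the information is zero at the preferred azimuth and peaks roughly 90° away from it, on the flank of the tuning curve. A population of broadly tuned neurons preferring lateral headings would therefore discriminate best around forward and backward headings.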

Reference frames of visual heading tuning in V6

We evaluated the spatial reference frames in which visual heading signals are coded in V6 by measuring heading tuning in the horizontal plane while the animal fixated at one of three different eye positions. Of the 73 cells tested, 60 V6 neurons showed significant heading tuning (ANOVA, p < 0.05) for at least two eye positions, thus allowing for the reference frame analysis (see Materials and Methods). Because the monkey was head fixed, varying eye position allows us to determine whether the reference frame of V6 responses is eye-centered or head-centered. Note that we cannot distinguish head-, body-, and world-centered reference frames with this experiment, so we simply use the term head-centered to denote a reference frame that could potentially be head-, body-, or world-centered.

To assess whether visual responses of V6 neurons are eye-centered or head-centered, we first computed the DI of each neuron. A DI equal to 1 occurs when the tuning curve of a neuron fully shifts with eye position (eye-centered reference frame), and a DI equal to 0 occurs when the tuning curve of a neuron remains invariant for all eye positions (head-centered reference frame). For the 60 V6 neurons for which DI could be computed (Fig. 7A), the DI averaged 0.86 ± 0.004 SEM. This value is significantly <1 (t test, p = 1.68 × 10−5), indicating a small shift toward a head-centered reference frame, but is statistically indistinguishable from the mean DI values obtained previously for areas MSTd (mean DI = 0.89, p = 0.30; Fetsch et al., 2007) and VIP (mean DI = 0.89, p = 0.30; Chen et al., 2013b). By computing 95% CIs for each DI value, we placed V6 neurons into one of four classifications: eye-centered, head-centered, intermediate, or unclassified (see Materials and Methods). With this method, 41 of 60 (68.3%) neurons were classified as eye-centered (Fig. 7A, black bars), 3 of 60 (5%) neurons were head-centered (Fig. 7A, blue bars), 11 of 60 (18.3%) were intermediate (Fig. 7A, red bars), and 5 of 60 (8.3%) were unclassified (Fig. 7A, green bars). For comparison, in MSTd, 67% of neurons were classified as eye-centered, 5% of neurons were head-centered, 14.7% of neurons were intermediate, and 13.3% of neurons were unclassified (Fetsch et al., 2007). In VIP, 60.6% of neurons were eye-centered, no neurons were head-centered, 12.1% of neurons were intermediate, and 27.3% were unclassified (Chen et al., 2013b). Thus, similar to areas MSTd and VIP, most V6 neurons show eye-centered visual heading tuning, with a modest fraction of neurons having reference frames shifted toward head coordinates.
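The logic of the DI can be illustrated with a short sketch. It assumes that the DI is the tuning-curve shift that maximizes the circular cross-covariance between tuning curves measured at two eye positions, divided by the difference in eye position, with tuning curves sampled at 1° resolution. The eye positions of ±20°, the cosine tuning curves, and all function names are hypothetical, and the bootstrap used to obtain CIs is omitted.

```python
import math

def best_shift(curve_i, curve_j, max_shift=90):
    """Shift k (in samples) maximizing cov[curve_i(theta), curve_j(theta + k)]."""
    n = len(curve_i)
    mean_i = sum(curve_i) / n
    mean_j = sum(curve_j) / n
    best_k, best_cov = 0, -math.inf
    for k in range(-max_shift, max_shift + 1):
        cov = sum(
            (curve_i[t] - mean_i) * (curve_j[(t + k) % n] - mean_j)
            for t in range(n)
        ) / n
        if cov > best_cov:
            best_k, best_cov = k, cov
    return best_k

def displacement_index(curve_i, curve_j, eye_i_deg, eye_j_deg):
    """Tuning shift divided by eye-position shift (curves sampled every 1 deg)."""
    return best_shift(curve_i, curve_j) / (eye_j_deg - eye_i_deg)

def tuning(center_deg):
    """Hypothetical cosine tuning curve in head coordinates, 1 deg resolution."""
    return [20.0 + 15.0 * math.cos(math.radians(t - center_deg)) for t in range(360)]

# Eye-centered cell: tuning moves with the eyes (70 deg at -20, 110 deg at +20).
eye_centered_di = displacement_index(tuning(70), tuning(110), -20.0, 20.0)
# Head-centered cell: tuning is the same at both eye positions.
head_centered_di = displacement_index(tuning(90), tuning(90), -20.0, 20.0)
```

In this idealized case the eye-centered cell yields a DI of 1 and the head-centered cell a DI of 0; intermediate shifts produce intermediate values.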

To examine the temporal evolution of the spatial reference frame of heading tuning, we computed DI as a function of time using a 200 ms sliding window (see Materials and Methods). Results show that the DI remains fairly constant throughout the duration of the stimulus velocity profile (Fig. 7B), as found previously for MSTd (Fetsch et al., 2007). Thus, there is no clear evidence for a temporal evolution of reference frames of visual heading tuning in V6.

We also performed a curve-fitting analysis to assess whether heading tuning in V6 is best explained by an eye-centered or head-centered model (see Materials and Methods). Curve fits for an example V6 neuron are shown in Figure 8A, revealing that the eye-centered model (black curves) is better suited to describe the tuning of the neuron than the head-centered model (blue curves). The distribution of R2 values for the head-centered (median R2 = 0.78; mean R2 = 0.76) and eye-centered (median R2 = 0.83; mean R2 = 0.81) models is shown in Figure 8B for all neurons recorded under the reference frame protocol (n = 73). The eye-centered model provides significantly better fits overall (p = 5.41 × 10−9, Wilcoxon's signed-rank test).

Of the 73 neurons represented in Figure 8B, 58 had an R2 ≥ 0.75 for either the head-centered or eye-centered models (Fig. 8B, values to the right of the vertical black line). For these 58 neurons, we computed normalized partial correlation coefficients for the eye- and head-centered models, to remove the inherent correlations between models (Fig. 8C). Cells in the top left region were significantly better fit by the eye-centered model than the head-centered model, and the reverse was true for cells falling in the bottom right region (p < 0.05). Using this method, we found that 69% (40 of 58) of neurons were classified as eye-centered, 3.4% (2 of 58) were classified as head-centered, and 27.6% (16 of 58) were unclassified (falling between the two regions). The markers in Figure 8C represent the classification of neurons according to the DI analysis (see Materials and Methods), and it is clear that the classifications achieved by the two methods are overall very similar.
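The partial correlation step can be sketched as follows. The sketch assumes the standard first-order partial correlation, a Fisher r-to-Z transform scaled by √(n − 3), and a classification criterion of 1.645 for the difference between the two Z scores (one-tailed p < 0.05). The criterion, the function names, and the use of the number of data points as n are assumptions for illustration; the exact procedure is given in Materials and Methods.

```python
import math

def pearson(x, y):
    """Pearson correlation between two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)

def partial_corr(r_target, r_other, r_models):
    """Correlation of data with one model, with the other model partialled out."""
    return (r_target - r_other * r_models) / math.sqrt(
        (1 - r_other**2) * (1 - r_models**2)
    )

def fisher_z(r, n):
    """Fisher r-to-Z transform, normalized by its approximate standard error."""
    r = max(min(r, 0.999999), -0.999999)  # keep atanh finite
    return math.atanh(r) * math.sqrt(n - 3)

def classify(data, eye_fit, head_fit, criterion=1.645):
    """Label a neuron by comparing Z-scored partial correlations (assumed rule)."""
    n = len(data)
    r_e = pearson(data, eye_fit)
    r_h = pearson(data, head_fit)
    r_eh = pearson(eye_fit, head_fit)
    z_e = fisher_z(partial_corr(r_e, r_h, r_eh), n)
    z_h = fisher_z(partial_corr(r_h, r_e, r_eh), n)
    if z_e - z_h > criterion:
        return "eye-centered"
    if z_h - z_e > criterion:
        return "head-centered"
    return "unclassified"
```

Partialling out the competing model matters because the two model predictions are themselves correlated, so a raw correlation with one model would be inflated by variance it shares with the other.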

Discussion

To investigate how V6 neurons respond to self-motion cues, we measured responses to optic flow and passive inertial motion stimuli probing a range of headings in 3D. Although the majority of V6 neurons are selective for heading defined by optic flow, we found that almost no V6 neurons respond to inertial motion. When measuring visual heading tuning at different eye positions, we found that heading tuning of most V6 cells was eye-centered. We also found that the population of V6 neurons was best able to discriminate small variations in heading around forward and backward headings. Overall, our results are consistent with neuroanatomy results suggesting that V6 is an early stage for processing of visual motion (Galletti et al., 2001). However, additional testing will be required to determine whether V6 discounts self-motion signals to improve object-motion representation, as hypothesized by some investigators (Galletti et al., 2001; Galletti and Fattori, 2003; Pitzalis et al., 2010, 2013a,b; Cardin et al., 2012a).

V6 processing of self-motion signals

The anatomical association of V6 with areas MSTd and VIP (Shipp et al., 1998; Galletti et al., 2001) suggested the possibility that V6 would respond to both visual and vestibular signals. Early macaque studies revealed directional selectivity in V6 neurons (Galletti et al., 1996, 1999a), and human studies demonstrated a preference for optic flow patterns consistent with self-motion (Cardin and Smith, 2010, 2011; Cardin et al., 2012a,b; Pitzalis et al., 2010, 2013b). Given these functional properties, as well as the connectivity of V6 with areas MT, MSTd, and VIP (Shipp et al., 1998; Galletti et al., 2001), it seemed probable that we would find visual heading selectivity in V6 neurons (Figs. 3, 5), although it had not been tested previously. However, would V6 neurons process vestibular signals? A human fMRI study reported that galvanic vestibular stimulation did not significantly activate V6, suggesting that vestibular signals do not reach this area (Smith et al., 2012). However, it remained unclear whether single neurons in macaque V6 would similarly show a lack of vestibular response. We found that ∼4% of V6 neurons (4 of 106) had statistically significant vestibular tuning, of which two neurons showed convincing vestibular responses (Fig. 4). This is consistent with the ineffectiveness of vestibular stimulation in human V6 (Smith et al., 2012), suggesting that V6 is an unlikely source of multisensory heading signals. In this regard, V6 is similar to MT (Chowdhury et al., 2009) but clearly different from areas MSTd and VIP. These novel findings for macaque V6 support neuroanatomical results suggesting that V6 is an early stage of processing for visual motion (Galletti et al., 2001) and strengthen the proposed homology between human and macaque V6 (Pitzalis et al., 2006).

Reference frames of heading tuning in V6

Previous studies have examined the spatial reference frames of visual RFs in the parieto-occipital sulcus, in which V6 and V6A are located. These studies found that RFs were eye-centered for a large majority of neurons, whereas a small population had head-centered RFs (Galletti et al., 1993, 1995). Additional investigation suggested that head-centered RFs are only found in V6A and not V6 (Galletti et al., 1999b; Gamberini et al., 2011). However, in humans, fMRI studies have presented evidence that V6+ (which is most likely dominated by contributions from V6) can represent the rotational and translational speed of the head, indicating that V6+ may process such visual signals in a “head-centric” reference frame (Arnoldussen et al., 2011, 2015). Given the diversity of previous findings, it was necessary to test the spatial reference frames of visual heading tuning in single V6 neurons.

We found little evidence for a head-centered reference frame of visual heading tuning in V6, because the proportion of head-centered cells was not greater than that expected by chance (Figs. 7A, 8C). Although most V6 neurons signaled heading with an eye-centered reference frame (Figs. 7, 8), we note that a substantial minority of neurons exhibits intermediate reference frames. Correspondingly, the distribution of DI values is slightly but significantly shifted toward values <1 (Fig. 7A), indicating a slight bias toward a head-centered representation (Fig. 8C). These findings are similar to those reported previously for areas MSTd and VIP (Fetsch et al., 2007; Chen et al., 2013b).

Distribution of visual heading preferences in V6

We have found that very few V6 neurons prefer headings within 30° of forward and backward (Fig. 6A). Unlike MSTd, in which an appreciable subpopulation of neurons prefer radial optic flow patterns (Gu et al., 2006, 2010), our population only included two neurons with a preference for radial optic flow. We further showed that the peak discrimination directions for most V6 neurons are close to fore and aft headings (Fig. 6B,C), as observed previously in areas MSTd and VIP (Gu et al., 2010; Chen et al., 2011b).

One explanation for our distribution is that V6 neurons are tuned for laminar motion within the fronto-parallel plane, in which case they might be ill-suited to represent heading. If that were true, the distribution of peak discrimination directions (Fig. 6) might still be expected if the neurons have sufficiently broad tuning. However, we found that the 95% CIs on heading preferences deviated from the fronto-parallel (vertical) plane for 70 of 96 V6 neurons (Fig. 6A). This result suggests that V6 is capable of processing nonlaminar components of optic flow.

Why are there so many V6 cells that prefer headings outside the vertical plane but so few within ±30° of fore/aft headings? One possibility is that neurons in V6 prefer particular combinations of direction, speed, and binocular disparity, such that the neurons are better stimulated by headings with a small fore/aft component than by headings within the vertical plane. If so, V6 could be more sensitive to the local motion of objects than to global motion patterns related to heading, and this issue merits additional study.

The role of visual self-motion signals in V6

Early studies of macaque V6 neurons demonstrated strong visual motion selectivity (Galletti et al., 1996, 1999a). In light of human fMRI studies that revealed that V6 responds to optic flow patterns that simulate self-motion (Cardin and Smith, 2010, 2011; Pitzalis et al., 2010, 2013b; Cardin et al., 2012a,b), it is perhaps unsurprising that we found clear heading selectivity in V6 neurons (Figs. 3, 5). Although human MST shows “adaptation” when presented with successive optic flow patterns, V6 does not, which casts some doubt on whether V6 neurons are heading selective (Cardin et al., 2012a). Nevertheless, the clear heading tuning of V6 neurons that we observed (Figs. 3, 5), together with our finding that V6 is most sensitive to variations in heading around forward and backward headings (Fig. 6), similar to areas MSTd (Gu et al., 2010) and VIP (Gu et al., 2010; Chen et al., 2011b), suggests that V6 does represent heading from optic flow. The paucity of neurons in V6 that prefer fore/aft headings characterized by radial optic flow should still be noted, and this might contribute to differences across areas in some neuroimaging studies (such as adaptation to optic flow).

If V6 neurons are heading selective, why was there a lack of adaptation in human V6 (Cardin et al., 2012a)? One possibility is that binocular disparity cues were present in our visual stimuli (see Materials and Methods), whereas the stimulus was monocular in the human adaptation study (Cardin et al., 2012a). Other studies suggest that binocular disparity signals are important to V6 responses in humans (Cardin and Smith, 2011; Arnoldussen et al., 2013, 2015), leading to the suggestion that V6 may require the presence of binocular disparity cues to represent heading (Arnoldussen et al., 2015) and, perhaps, to exhibit adaptation effects as well.

Alternatively, it has been hypothesized that V6 does not play a major role in estimating heading but rather discounts global self-motion signals to compute object motion (Galletti et al., 2001; Galletti and Fattori, 2003; Pitzalis et al., 2010, 2013a,b; Cardin et al., 2012a). This functional account of V6 could explain the lack of adaptation effects in human V6. Although it is possible that V6 neurons prefer local motion of objects over global motion patterns related to heading, we are not aware of any studies that have directly examined responses of macaque V6 neurons to object motion within the context of a global flow field. Thus, while it is clear that V6 processes visual motion signals similar to other areas, such as MT, MSTd, and VIP, it remains for additional studies to clarify whether V6 is involved in heading perception or whether it might play a fundamental role in flow parsing operations (Rushton and Warren, 2005; Warren and Rushton, 2008, 2009) to dissociate object motion and self-motion.

Footnotes

This work was supported by National Institutes of Health Grants EY017866 (D.E.A. and S.L.), EY016178 (G.C.D.), and F32EY025146 (R.H.F.). We thank Dr. Yun Yang for his assistance with data collection.

The authors declare no competing financial interests.

References

- Angelaki DE, Shaikh AG, Green AM, Dickman JD. Neurons compute internal models of the physical laws of motion. Nature. 2004;430:560–564. doi: 10.1038/nature02754. [DOI] [PubMed] [Google Scholar]

- Arnoldussen DM, Goossens J, van den Berg AV. Adjacent visual representations of self-motion in different reference frames. Proc Natl Acad Sci U S A. 2011;108:11668–11673. doi: 10.1073/pnas.1102984108. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Arnoldussen DM, Goossens J, van den Berg AV. Differential responses in dorsal visual cortex to motion and disparity depth cues. Front Hum Neurosci. 2013;7:815. doi: 10.3389/fnhum.2013.00815. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Arnoldussen DM, Goossens J, van Den Berg AV. Dissociation of retinal and headcentric disparity signals in dorsal human cortex. Front Syst Neurosci. 2015;9:16. doi: 10.3389/fnsys.2015.00016. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Avillac M, Denève S, Olivier E, Pouget A, Duhamel JR. Reference frames for representing visual and tactile locations in parietal cortex. Nat Neurosci. 2005;8:941–949. doi: 10.1038/nn1480. [DOI] [PubMed] [Google Scholar]

- Bertin RJ, Berthoz A. Visuo-vestibular interaction in the reconstruction of travelled trajectories. Exp Brain Res. 2004;154:11–21. doi: 10.1007/s00221-003-1524-3. [DOI] [PubMed] [Google Scholar]

- Bradley A, Skottun BC, Ohzawa I, Sclar G, Freeman RD. Visual orientation and spatial frequency discrimination: a comparison of single neurons and behavior. J Neurophysiol. 1987;57:755–772. doi: 10.1152/jn.1987.57.3.755. [DOI] [PubMed] [Google Scholar]

- Bremmer F, Klam F, Duhamel JR, Ben Hamed S, Graf W. Visual-vestibular interactive responses in the macaque ventral intraparietal area (VIP) Eur J Neurosci. 2002;16:1569–1586. doi: 10.1046/j.1460-9568.2002.02206.x. [DOI] [PubMed] [Google Scholar]

- Brown BK. Grouping correction for circular goodness-of-fit tests. J R Stat Soc B. 1994;56:275–283. [Google Scholar]

- Butler JS, Smith ST, Campos JL, Bülthoff HH. Bayesian integration of visual and vestibular signals for heading. J Vis. 2010;10:23. doi: 10.1167/10.11.23. [DOI] [PubMed] [Google Scholar]

- Cardin V, Smith AT. Sensitivity of human visual and vestibular cortical regions to egomotion-compatible visual stimulation. Cereb Cortex. 2010;20:1964–1973. doi: 10.1093/cercor/bhp268. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Cardin V, Smith AT. Sensitivity of human visual cortical area V6 to stereoscopic depth gradients associated with self-motion. J Neurophysiol. 2011;106:1240–1249. doi: 10.1152/jn.01120.2010. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Cardin V, Hemsworth L, Smith AT. Adaptation to heading direction dissociates the roles of human MST and V6 in the processing of optic flow. J Neurophysiol. 2012a;108:794–801. doi: 10.1152/jn.00002.2012. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Cardin V, Sherrington R, Hemsworth L, Smith AT. Human V6: functional characterisation and localisation. PLoS One. 2012b;7:e47685. doi: 10.1371/journal.pone.0047685. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Chen A, DeAngelis GC, Angelaki DE. Macaque parieto-insular vestibular cortex: responses to self-motion and optic flow. J Neurosci. 2010;30:3022–3042. doi: 10.1523/JNEUROSCI.4029-09.2010. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Chen A, DeAngelis GC, Angelaki DE. Convergence of vestibular and visual self-motion signals in an area of the posterior sylvian fissure. J Neurosci. 2011a;31:11617–11627. doi: 10.1523/JNEUROSCI.1266-11.2011. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Chen A, DeAngelis GC, Angelaki DE. A comparison of vestibular spatiotemporal tuning in macaque parietoinsular vestibular cortex, ventral intraparietal area, and medial superior temporal area. J Neurosci. 2011b;31:3082–3094. doi: 10.1523/JNEUROSCI.4476-10.2011. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Chen A, DeAngelis GC, Angelaki DE. Representation of vestibular and visual cues to self-motion in ventral intraparietal cortex. J Neurosci. 2011c;31:12036–12052. doi: 10.1523/JNEUROSCI.0395-11.2011. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Chen A, Deangelis GC, Angelaki DE. Functional specializations of the ventral intraparietal area for multisensory heading discrimination. J Neurosci. 2013a;33:3567–3581. doi: 10.1523/JNEUROSCI.4522-12.2013. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Chen X, DeAngelis GC, Angelaki DE. Eye-centered representation of optic flow tuning in the ventral intraparietal area. J Neurosci. 2013b;33:18574–18582. doi: 10.1523/JNEUROSCI.2837-13.2013. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Chowdhury SA, Takahashi K, DeAngelis GC, Angelaki DE. Does the middle temporal area carry vestibular signals related to self-motion? J Neurosci. 2009;29:12020–12030. doi: 10.1523/JNEUROSCI.0004-09.2009. [DOI] [PMC free article] [PubMed] [Google Scholar]

- de Winkel KN, Weesie J, Werkhoven PJ, Groen EL. Integration of visual and inertial cues in perceived heading of self-motion. J Vis. 2010;10:1. doi: 10.1167/10.12.1. [DOI] [PubMed] [Google Scholar]

- Duffy CJ. MST neurons respond to optic flow and translational movement. J Neurophysiol. 1998;80:1816–1827. doi: 10.1152/jn.1998.80.4.1816. [DOI] [PubMed] [Google Scholar]

- Fetsch CR, Wang S, Gu Y, Deangelis GC, Angelaki DE. Spatial reference frames of visual, vestibular, and multimodal heading signals in the dorsal subdivision of the medial superior temporal area. J Neurosci. 2007;27:700–712. doi: 10.1523/JNEUROSCI.3553-06.2007. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Fetsch CR, Turner AH, DeAngelis GC, Angelaki DE. Dynamic reweighting of visual and vestibular cues during self-motion perception. J Neurosci. 2009;29:15601–15612. doi: 10.1523/JNEUROSCI.2574-09.2009. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Fisher NI, Marron JS. Mode testing via the excess mass estimate. Biometrika. 2001;88:499–517. doi: 10.1093/biomet/88.2.499. [DOI] [Google Scholar]

- Galletti C, Fattori P. Neuronal mechanisms for detection of motion in the field of view. Neuropsychologia. 2003;41:1717–1727. doi: 10.1016/S0028-3932(03)00174-X. [DOI] [PubMed] [Google Scholar]

- Galletti C, Battaglini PP, Fattori P. Parietal neurons encoding spatial locations in craniotopic coordinates. Exp Brain Res. 1993;96:221–229. doi: 10.1016/S0079-6123(08)63269-0. [DOI] [PubMed] [Google Scholar]

- Galletti C, Battaglini PP, Fattori P. Eye position influence on the parieto-occipital area PO (V6) of the macaque monkey. Eur J Neurosci. 1995;7:2486–2501. doi: 10.1111/j.1460-9568.1995.tb01047.x. [DOI] [PubMed] [Google Scholar]

- Galletti C, Fattori P, Battaglini PP, Shipp S, Zeki S. Functional demarcation of a border between areas V6 and V6A in the superior parietal gyrus of the macaque monkey. Eur J Neurosci. 1996;8:30–52. doi: 10.1111/j.1460-9568.1996.tb01165.x. [DOI] [PubMed] [Google Scholar]

- Galletti C, Fattori P, Gamberini M, Kutz DF. The cortical visual area V6: brain location and visual topography. Eur J Neurosci. 1999a;11:3922–3936. doi: 10.1046/j.1460-9568.1999.00817.x. [DOI] [PubMed] [Google Scholar]

- Galletti C, Fattori P, Kutz DF, Gamberini M. Brain location and visual topography of cortical area V6A in the macaque monkey. Eur J Neurosci. 1999b;11:575–582. doi: 10.1046/j.1460-9568.1999.00467.x. [DOI] [PubMed] [Google Scholar]

- Galletti C, Gamberini M, Kutz DF, Fattori P, Luppino G, Matelli M. The cortical connections of area V6: an occipito-parietal network processing visual information. Eur J Neurosci. 2001;13:1572–1588. doi: 10.1046/j.0953-816x.2001.01538.x. [DOI] [PubMed] [Google Scholar]

- Galletti C, Gamberini M, Kutz DF, Baldinotti I, Fattori P. The relationship between V6 and PO in macaque extrastriate cortex. Eur J Neurosci. 2005;21:959–970. doi: 10.1111/j.1460-9568.2005.03911.x.

- Gamberini M, Galletti C, Bosco A, Breveglieri R, Fattori P. Is the medial posterior parietal area V6A a single functional area? J Neurosci. 2011;31:5145–5157. doi: 10.1523/JNEUROSCI.5489-10.2011.

- Gibson JJ. The perception of the visual world. Boston: Houghton Mifflin; 1950.

- Gu Y, Watkins PV, Angelaki DE, DeAngelis GC. Visual and nonvisual contributions to three-dimensional heading selectivity in the medial superior temporal area. J Neurosci. 2006;26:73–85. doi: 10.1523/JNEUROSCI.2356-05.2006.

- Gu Y, Fetsch CR, Adeyemo B, DeAngelis GC, Angelaki DE. Decoding of MSTd population activity accounts for variations in the precision of heading perception. Neuron. 2010;66:596–609. doi: 10.1016/j.neuron.2010.04.026.

- Hol K, Treue S. Different populations of neurons contribute to the detection and discrimination of visual motion. Vision Res. 2001;41:685–689. doi: 10.1016/S0042-6989(00)00314-X.

- Judge SJ, Richmond BJ, Chu FC. Implantation of magnetic search coils for measurement of eye position: an improved method. Vision Res. 1980;20:535–538. doi: 10.1016/0042-6989(80)90128-5.

- Lewis JW, Van Essen DC. Corticocortical connections of visual, sensorimotor, and multimodal processing areas in the parietal lobe of the macaque monkey. J Comp Neurol. 2000;428:112–137. doi: 10.1002/1096-9861(20001204)428:1<112::AID-CNE8>3.0.CO;2-9.

- Ohmi M. Egocentric perception through interaction among many sensory systems. Brain Res Cogn Brain Res. 1996;5:87–96. doi: 10.1016/S0926-6410(96)00044-4.

- Page WK, Duffy CJ. Heading representation in MST: sensory interactions and population encoding. J Neurophysiol. 2003;89:1994–2013. doi: 10.1152/jn.00493.2002.

- Pitzalis S, Galletti C, Huang RS, Patria F, Committeri G, Galati G, Fattori P, Sereno MI. Wide-field retinotopy defines human cortical visual area V6. J Neurosci. 2006;26:7962–7973. doi: 10.1523/JNEUROSCI.0178-06.2006.

- Pitzalis S, Sereno MI, Committeri G, Fattori P, Galati G, Patria F, Galletti C. Human V6: the medial motion area. Cereb Cortex. 2010;20:411–424. doi: 10.1093/cercor/bhp112.

- Pitzalis S, Fattori P, Galletti C. The functional role of the medial motion area V6. Front Behav Neurosci. 2013a;6:91. doi: 10.3389/fnbeh.2012.00091.

- Pitzalis S, Sdoia S, Bultrini A, Committeri G, Di Russo F, Fattori P, Galletti C, Galati G. Selectivity to translational egomotion in human brain motion areas. PLoS One. 2013b;8:e60241. doi: 10.1371/journal.pone.0060241.

- Purushothaman G, Bradley DC. Neural population code for fine perceptual decisions in area MT. Nat Neurosci. 2005;8:99–106. doi: 10.1038/nn1373.

- Regan D, Beverley KI. Spatial-frequency discrimination and detection: comparison of postadaptation thresholds. J Opt Soc Am. 1983;73:1684–1690. doi: 10.1364/JOSA.73.001684.

- Regan D, Beverley KI. Postadaptation orientation discrimination. J Opt Soc Am A. 1985;2:147–155. doi: 10.1364/JOSAA.2.000147.

- Robinson DA. A method of measuring eye movement using a scleral search coil in a magnetic field. IEEE Trans Biomed Eng. 1963;10:137–145. doi: 10.1109/tbmel.1963.4322822.

- Rushton SK, Warren PA. Moving observers, relative retinal motion and the detection of object movement. Curr Biol. 2005;15:R542–R543. doi: 10.1016/j.cub.2005.07.020.

- Schlack A, Hoffmann KP, Bremmer F. Interaction of linear vestibular and visual stimulation in the macaque ventral intraparietal area (VIP). Eur J Neurosci. 2002;16:1877–1886. doi: 10.1046/j.1460-9568.2002.02251.x.

- Shipp S, Blanton M, Zeki S. A visuo-somatomotor pathway through superior parietal cortex in the macaque monkey: cortical connections of areas V6 and V6A. Eur J Neurosci. 1998;10:3171–3193. doi: 10.1046/j.1460-9568.1998.00327.x.

- Silverman BW. Using kernel density estimates to investigate multimodality. J R Stat Soc B. 1981;43:97–99.

- Smith AT, Wall MB, Thilo KV. Vestibular inputs to human motion-sensitive visual cortex. Cereb Cortex. 2012;22:1068–1077. doi: 10.1093/cercor/bhr179.

- Smith MA, Majaj NJ, Movshon JA. Dynamics of motion signaling by neurons in macaque area MT. Nat Neurosci. 2005;8:220–228. doi: 10.1038/nn1382.

- Snowden RJ, Treue S, Andersen RA. The response of neurons in areas V1 and MT of the alert rhesus monkey to moving random dot patterns. Exp Brain Res. 1992;88:389–400. doi: 10.1007/BF02259114.

- Snyder JP. Map projections—a working manual. Washington, DC: U.S. Government Publishing Office; 1987.

- Takahashi K, Gu Y, May PJ, Newlands SD, DeAngelis GC, Angelaki DE. Multimodal coding of three-dimensional rotation and translation in area MSTd: comparison of visual and vestibular selectivity. J Neurosci. 2007;27:9742–9756. doi: 10.1523/JNEUROSCI.0817-07.2007.

- Telford L, Howard IP, Ohmi M. Heading judgments during active and passive self-motion. Exp Brain Res. 1995;104:502–510. doi: 10.1007/BF00231984.

- Van Essen DC, Drury HA, Dickson J, Harwell J, Hanlon D, Anderson CH. An integrated software suite for surface-based analyses of cerebral cortex. J Am Med Inform Assoc. 2001;8:443–459. doi: 10.1136/jamia.2001.0080443.

- Vogels R, Orban GA. How well do response changes of striate neurons signal differences in orientation: a study in the discriminating monkey. J Neurosci. 1990;10:3543–3558. doi: 10.1523/JNEUROSCI.10-11-03543.1990.

- Warren PA, Rushton SK. Evidence for flow-parsing in radial flow displays. Vision Res. 2008;48:655–663. doi: 10.1016/j.visres.2007.10.023.

- Warren PA, Rushton SK. Optic flow processing for the assessment of object movement during ego movement. Curr Biol. 2009;19:1555–1560. doi: 10.1016/j.cub.2009.07.057.

- Warren WH. Optic flow. In: Werner JS, Chalupa LM, editors. The visual neurosciences. Cambridge, MA: Massachusetts Institute of Technology; 2004.

- Watson GS. Goodness-of-fit tests on a circle. Biometrika. 1961;48:109–114. doi: 10.2307/2333135.

- Wilson HR, Regan D. Spatial-frequency adaptation and grating discrimination: predictions of a line-element model. J Opt Soc Am A. 1984;1:1091–1096. doi: 10.1364/JOSAA.1.001091.