Abstract

Sophisticated behavioral paradigms, together with increasingly selective techniques for targeting the basolateral amygdala (BLA), have enhanced our understanding of the role of this nucleus in learning about and using reward information. Because of the wide variety of behavioral approaches, however, many questions remain about the circumscribed role of the BLA in appetitive behavior. In this review, we first integrate conclusions about BLA function in reward-related behavior drawn from traditional interference techniques (lesion, pharmacological inactivation) with those drawn from newer methodological approaches in experimental animals that allow in vivo manipulation of cell type-specific populations and neural recordings. Second, from a review of appetitive behavioral tasks in rodents and monkeys and of recent computational models of reward procurement, we derive evidence for the BLA as a neural integrator of reward value, history, and cost parameters. Taken together, these findings indicate that the BLA codes specific and temporally dynamic outcome representations within a distributed network to orchestrate adaptive responses. We also provide evidence that experience with opiates and psychostimulants alters these outcome representations in the BLA, resulting in long-term changes in action.

Keywords: basolateral amygdala, orbitofrontal cortex, outcome devaluation, discounting, reversal learning, amphetamine, opiate

Introduction

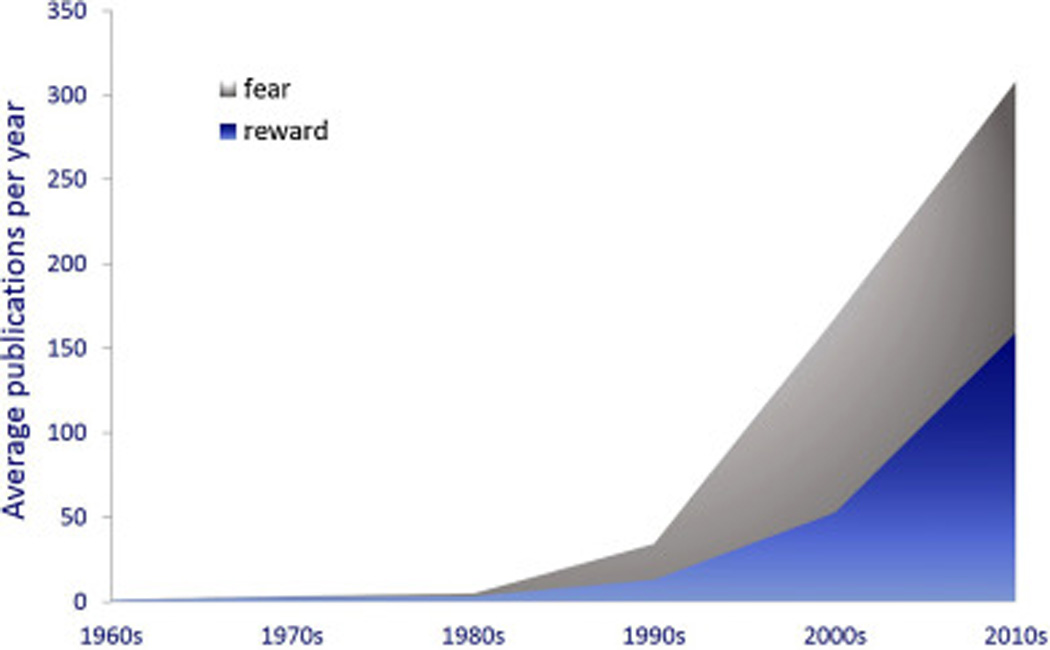

Until recently, the widely accepted view of the amygdala centered on its important role in aversive learning and Pavlovian fear conditioning. In the past decade, however, there has been a surge of research (Figure 1) positioning the amygdala as one of the most important neural contributors to reward learning and decision making, with the basolateral nucleus (BLA) particularly implicated in adaptive, goal-directed behavior. Because of varied methodological and behavioral approaches, questions remain about the precise role of the BLA, but the findings are beginning to converge. Here we synthesize this research to clarify the role of the BLA in appetitive behavior.

Figure 1. Amygdala and reward.

PubMed search for the terms “amygdala” and “reward” or “amygdala” and “fear”, 1960–2014 (2015 excluded). The number of publications on the amygdala in appetitive behavior has risen more steeply, and these now account for approximately half of the reports in the 2010s.

Over a decade ago, Baxter and Murray (2002) wrote, “Recent evidence supports a role for the amygdala in processing positive emotions as well as negative ones, including learning about the beneficial biological value of stimuli.” Early evidence for amygdala involvement in reward learning came from Weiskrantz (1956) and Schwartzbaum (1960), who showed that amygdalectomized monkeys were impaired at bar pressing after the conditions for reinforcement changed (extinction, reconditioning, etc.). However, many of these and other early studies (e.g., Jones and Mishkin, 1972; Kesner and Andrus, 1982) involved nonselective targeting of the amygdala that, we now understand, included other medial temporal lobe structures. Higher-resolution techniques confirmed a role for the amygdala in appetitive behavior (Cador et al., 1989; Hiroi et al., 1991; Málková et al., 1997; McDonald and White, 1993) and have enhanced our understanding of the circumscribed role of the amygdala in reward (see: Murray et al., 2009).

In this review, we aim to provide a comprehensive evaluation of the role of the BLA in learning about and using reward information. We reconcile findings on the role of the BLA by comparing results obtained with traditional interference techniques (e.g., lesion and inactivation) coupled with sophisticated behavioral paradigms in experimental animals. We supplement these with data collected using newer methodological approaches in experimental animals, including those allowing targeted cell type-specific manipulations (e.g., optogenetics) and in vivo electrophysiological and neurochemical recordings. Our review of the literature suggests that BLA signaling codes specific and dynamic reward representations that guide goal-directed behavior. We also provide evidence that the BLA contributes to the integration of incentive value, reward history, and cost within a broader reward circuit. Lastly, we briefly apply this understanding to addiction.

Much progress has been made in summarizing the role of the amygdala from human neuroimaging and patient studies, as well as its overarching role in a wide range of behaviors, from fear to reinforcement (Janak and Tye, 2015; Lüthi and Lüscher, 2014). The role of the BLA in fear specifically has been particularly well reviewed (see: Davis, 1992; Fanselow and LeDoux, 1999; Fanselow and Gale, 2003; Paré et al., 2004; Maren, 2006; Fanselow and Wassum, in press), with findings showing that plasticity in this region is critical for associative processes in fear conditioning. We restrict our review to the BLA and to animal models of reward learning and reward-related behavior, with an emphasis on findings from rat and nonhuman primate research.

Why BLA?

Largely on the basis of aversive conditioning studies, the amygdala has been proposed to process emotional information serially, from the lateral to the central nuclei (LeDoux, 2000). However, studies assessing the role of the amygdala in appetitive conditioning suggest that the BLA and central amygdala (CeN) may function in parallel to mediate distinct aspects of emotional processing (for review see: Balleine and Killcross, 2006; Phillips et al., 2003). In rats and nonhuman primates, the BLA is the more “cortex-like” amygdala nucleus, receiving robust and varied sensory information from frontal cortex and thalamus and, in turn, sending projections back to these areas as well as to rhinal cortex and hippocampus (McDonald, 1998, 2003; Pitkänen et al., 2000), striatum, hypothalamus, basal forebrain, and other neurotransmitter system hubs (reviewed in Cardinal et al., 2002). More complex sensory input (John et al., 2013; Stefanacci and Amaral, 2002) appears to be a distinguishing feature of the primate, as opposed to the rodent, BLA. Of particular relevance to our focus on learning about and using reward information to guide action are BLA projections to the nucleus accumbens (NAc), dorsomedial striatum (DMS), and medial and orbital frontal cortex (OFC) (reviewed below under BLA microcircuitry).

The anatomical convergence of information in the BLA, as well as its feedback mechanisms, has led to the well-accepted idea that this structure integrates the sensory properties of both appetitive and aversive stimuli with their affective valence (Aggleton, 2000; Fanselow and LeDoux, 1999; Ghashghaei and Barbas, 2002; Janak and Tye, 2015; John et al., 2013; Lüthi and Lüscher, 2014; Morrison and Salzman, 2010). After systematic study of laminar projections from sensory areas to the primate amygdala, Barbas and colleagues proposed that the amygdala processes “low-resolution information,” providing quick detection of significant stimuli that are important for survival (John et al., 2013). Detection of these sensory stimuli is then linked to affective valence (value) and further refined through bidirectional communication with the OFC. The literature we review here supports this general interpretation; however, we propose a more nuanced role for the BLA in representing higher-resolution information: the value not of the stimuli per se, but of the specific rewarding outcomes they predict, with its contribution being most critical early in learning and when incentive value is uncertain or in flux.

BLA microcircuitry

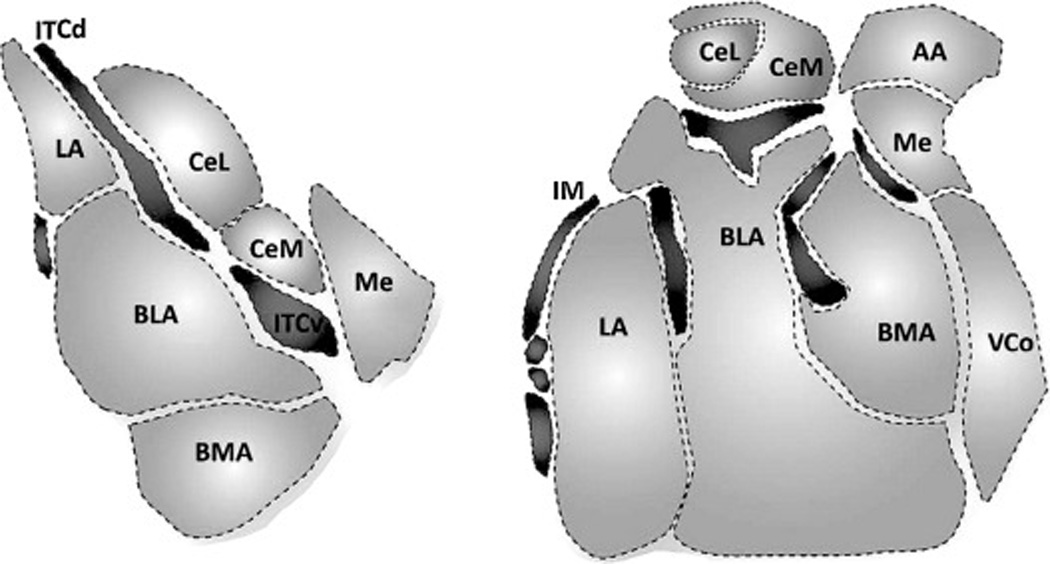

The circuitry of the amygdala has been extensively reviewed elsewhere (see Duvarci and Paré, 2014; Ehrlich et al., 2009; Janak and Tye, 2015). Here we provide a brief and simplified summary. In general, the BLA complex of rodents and primates (Figure 2), which includes the lateral amygdala and the basal and basomedial nuclei, consists primarily of glutamatergic principal neurons along with inhibitory interneurons. GABAergic intercalated cells that lie between the BLA and CeN provide an additional inhibitory influence (Marowsky et al., 2005). The BLA is densely innervated by glutamatergic afferents from thalamic and cortical regions; these target primarily the lateral amygdala, which sends excitatory projections to the basal and basomedial nuclei. The basal amygdala also receives sensory information directly. Excitatory connections between the BLA and cortical regions are reciprocal; however, the BLA sends unidirectional excitatory output to the CeN, NAc, DMS, and bed nucleus of the stria terminalis.

Figure 2. Rodent and monkey amygdala nuclei.

Coronal sections of amygdala nuclei in the mouse and the macaque monkey. Modified from John et al., 2013.

Several elements of this circuitry are particularly relevant to our discussion here. Among these, projections to the NAc and DMS are positioned to influence motivated behavior and action selection, respectively. Amygdala input to the NAc (core and shell) regulates responses to a wide range of environmental stimuli, including those linked with both appetitive and aversive outcomes (Britt et al., 2012; Lalumiere, 2014; Lüthi and Lüscher, 2014). Regulation of dopamine efflux in the NAc by the BLA is vital for responding appropriately to motivationally significant stimuli (Phillips et al., 2003). This regulation is achieved both directly at the terminals (Jones et al., 2010) and indirectly via projections to the medial prefrontal cortex (Jackson and Moghaddam, 2001). In vivo optical stimulation of glutamatergic fibers from the BLA to the NAc invigorates reward responses via a dopamine D1-like receptor-dependent mechanism (Stuber et al., 2011). The less extensively studied BLA input to the DMS has been implicated in goal-directed instrumental learning (Corbit et al., 2013). Stamatakis et al. (2014) showed that the BLA may also interact with the bed nucleus of the stria terminalis to influence motivated behavior more generally, beyond aversive conditioning (Kim et al., 2013).

The dense and reciprocal cortical connections position the BLA to function as a modifier of sensory input and to contribute to decision making. Reciprocal connections with the OFC (Baxter et al., 2000; Saddoris et al., 2005; Schoenbaum et al., 1999, 2000, 2003; Stalnaker et al., 2007) and insular cortex (Miranda and McGaugh, 2004; Parkes and Balleine, 2013) are vital in this regard. The function of the OFC-BLA circuit is particularly evident in settings that depend upon the acquisition and use of Pavlovian stimulus-reward associations, with BLA-insula connections participating in gustatory associative encoding.

Glutamate is the primary input to the BLA, but the BLA also receives other inputs, including a dopaminergic projection from the ventral tegmental area and serotonergic inputs to the lateral amygdala from the dorsal raphé (Sadikot and Parent, 1990). In turn, the BLA projects to dopamine D1-expressing striatal medium spiny neurons (Floresco et al., 2001; Wall et al., 2013). BLA GABAergic interneurons are less well studied (especially in appetitive conditioning), but are thought to gate associative learning. Dopamine, specifically via presynaptic D2 receptors, regulates transmission from these GABAergic interneurons to spiny principal neurons (Chu et al., 2012); through this “disinhibition,” dopamine may greatly influence responses to dynamic changes in reward environments. Serotonin input (primarily at 5-HT2A receptors) facilitates GABAergic inhibition of the BLA (Rainnie, 1999). Experiencing punishment or the absence of reward may reduce this inhibition and contribute to a hyperexcitable BLA (Jiang et al., 2009). Thus, the activity and degree of inhibition within the BLA are at least partially gated by dopamine and 5-HT signaling. A similar mechanism has been described for µ-opioid receptors, which are located presynaptically, almost exclusively on the intercalated cells. Activation of these receptors attenuates GABAergic inputs to the CeN from the BLA (Finnegan et al., 2006), but may also act via inhibitory circuits within the BLA itself.

BLA in appetitive conditioning

Given the BLA’s anatomical connections and accepted role in integrating sensory and affective information, it is perhaps surprising that axon-sparing, excitotoxic BLA lesions have no effect on many primary measures of both Pavlovian and instrumental appetitive learning. This is even more surprising given that BLA lesions or pharmacological inactivation profoundly affect both the acquisition and expression of conditioned fear responses (Davis, 1992; Fanselow and LeDoux, 1999) and active avoidance of an aversive stimulus (Killcross et al., 1997; Lázaro-Muñoz et al., 2010). The BLA is not required for the acquisition of an appetitive Pavlovian conditioned goal-approach or orienting response (Everitt et al., 2000; Hatfield et al., 1996; Parkinson et al., 2000), an instrumental action (Balleine et al., 2003; Corbit and Balleine, 2005), or a reward-related instrumental discrimination (Schoenbaum et al., 2003). Deficits are, however, revealed when a representation of a specific predicted reward (i.e., the outcome of an instrumental action or stimulus presentation) must be encoded in the learned association and used to guide responding. One such case is outcome devaluation. Pre- and post-training BLA lesions or inactivations do not disrupt Pavlovian or instrumental conditioned responding, but they do render this behavior insensitive to selective devaluation of the specific predicted outcome (Balleine et al., 2003; Balleine and Killcross, 2006; Baxter and Murray, 2002; Hatfield et al., 1996; Johnson et al., 2009; Murray and Izquierdo, 2007; Parkes and Balleine, 2013; Pickens et al., 2003; Wassum et al., 2009). This is discussed in more detail below. The BLA is also required to use these outcome-specific representations: post-training BLA lesions impair outcome-specific reinstatement, the ability of non-contingent reward delivery to bias action selection toward actions that previously earned the same specific reward (Ostlund and Balleine, 2007a, 2008a).

Strong evidence that the BLA is required for encoding and using detailed outcome-specific reward representations comes from the outcome-specific Pavlovian-instrumental transfer (PIT) task. In this task, an environmental reward-predictive stimulus (CS) selectively invigorates the performance of those actions that earn the same specific reward associated with the stimulus (Colwill and Motzkin, 1994; Colwill and Rescorla, 1988; Holmes et al., 2010; Kruse et al., 1983). Because the CSs are never directly associated with the instrumental actions, this effect requires retrieval of a mental representation of the specific outcome shared by the Pavlovian stimulus-outcome (S-O) and instrumental response-outcome (R-O) associations (Corbit and Janak, 2010; Dickinson and Balleine, 2002). This capacity requires the BLA (Blundell et al., 2001; Corbit and Balleine, 2005; Delamater, 2012; Ostlund and Balleine, 2008a), and recent data from our laboratory suggest that transient fluctuations in glutamate release in the BLA during PIT can encode outcome-specific motivational information provided by the reward-predictive stimuli (Malvaez et al., 2015). In support of this, BLA neurons fire during anticipation of rewarding (or aversive) outcomes in a manner predictive of outcome type (Schoenbaum et al., 1998).

Interestingly, this role in representing outcome-specific reward information appears to be specific to the amygdala’s basolateral nucleus. The adjacent CeN is required neither for outcome-specific PIT nor for sensitivity of Pavlovian or instrumental responses to post-training outcome devaluation (Hatfield et al., 1996; Corbit and Balleine, 2005). Nor is the CeN required to develop specific consummatory conditioned responses (Chang et al., 2012a), a function that does require the BLA (Chang et al., 2012b). Rather, the CeN is thought to be important for encoding and using more general (i.e., non-specific) motivational or affective information. The dissociable contributions of the BLA and CeN to motivated behavior have been reviewed previously (Balleine and Killcross, 2006).

The role of the BLA in representing outcome-specific information is limited to motivationally significant events. An intact BLA is not required to represent the outcome-specific aspects of neutral events (Dwyer and Killcross, 2006; Parkes and Westbrook, 2010). Moreover, the BLA is required to learn a response for a conditioned reinforcer and for conditioned cue-induced reinstatement of responding, but not for responding to primary reward (Cador et al., 1989; Fuchs et al., 2002; Kantak et al., 2002; Meil and See, 1997). The BLA is also not required to encode sensory-specific information in a simple flavor-nutrient association for conditioned flavor preference (Delamater, 2012) or for olfacto-gustatory conditioning (Wheeler et al., 2013). Therefore, the BLA encodes motivationally relevant, outcome-specific representations in instrumental R-O associations; for Pavlovian S-O associations, such encoding depends on CS modality and is most critical when the CSs are auditory and/or visual.

If the BLA is vital for encoding motivationally relevant, outcome-specific representations, what elements of the reward are incorporated into this representation? Reward representations have long been recognized to include multiple components (Delamater, 2012; Delamater and Oakeshott, 2007; Konorski, 1967). Recent proposals describe the amygdala (and, more specifically, the BLA) as encoding a dynamic representation of reward value that incorporates both stimuli associated with reward and reinforcement history (Morrison and Salzman, 2010; Paton et al., 2006). Here we consider three broad and interrelated domains of reward: incentive value, reward history, and reward cost. The first, incentive value, is the current value of a reward relative to the organism’s internal need state; this information is fundamental for controlling the pursuit of reward so that reward seeking remains flexible to transient needs. The second, reward history, is the organism’s unique history of reward attainment, a timeline that can ostensibly extend as far back as the uterine environment (without considering transgenerational influences) or be as recent as the learning trial immediately preceding the present one. In this review, we focus primarily on recent reward history and trial-by-trial performance. The third, which we refer to here as reward cost, is the energy investment required to obtain a reward: the price of the reward, usually considered relative to the benefit of the reward itself. We summarize evidence from animal behavioral neuroscience studies demonstrating that the BLA codes specific reward representations and that it is vital for dynamically updating these representations to include incentive value, recent reward history, and reward cost in response to new or uncertain conditions.

Reward Valuation and Revaluation

Perhaps the most straightforward consideration for a reward’s value is its current biological significance (e.g., food is biologically significant when one is hungry, but less so when sated). Evidence suggests that this biological significance is not innately attached to specific reward representations, but rather must be acquired through experience with the reward in a relevant need state, a process called incentive learning (Balleine et al., 1995; Balleine and Dickinson, 1998; Dickinson and Balleine, 1994, 2002). Although pre-training BLA lesions do not prevent the acquisition of an instrumental or Pavlovian conditioned response, they do render these behaviors insensitive to post-training outcome devaluation brought about either by selective satiation on the reward or by a conditioned taste aversion (Balleine et al., 2003; Johnson et al., 2009). Insensitivity to devaluation has been reported in monkeys with whole, excitotoxic amygdala lesions (Izquierdo and Murray, 2007; Málková et al., 1997) and in rats with specific BLA lesions (Balleine et al., 2003; Corbit and Balleine, 2005; Coutureau et al., 2009; Hatfield et al., 1996; Ostlund and Balleine, 2008a; Pickens et al., 2003).

Recordings of amygdala neurons support the encoding of outcome-specific value by this structure. Valence-specific (i.e., positive or negative) populations of neurons that form functional circuits (Zhang et al., 2013) have been identified in the primate amygdala (Belova et al., 2007, 2008; Paton et al., 2006; Sangha et al., 2013; Uwano et al., 1995), and these neurons have been shown to respond flexibly to predictive stimuli on the basis of the value of the outcome they predict (Belova et al., 2007). Valence-encoding neurons in the BLA are also sensitive to changes in incentive value: amygdala neural responses to visual food stimuli can be eliminated by making the food item unpalatable (i.e., devaluation; Nishijo et al., 1988).

The BLA is particularly important for the actual encoding of incentive value. In reward devaluation experiments, the amygdala must be “online” during the devaluation experience (e.g., selective satiation) for animals to later respond adaptively (e.g., to avoid choosing objects or actions previously associated with the devalued food reward; Wang et al., 2005; West et al., 2012). Indeed, BLA inactivation or NMDA receptor blockade during, but not after, selective satiation disrupts outcome devaluation effects, as assessed in a visual discrimination task in monkeys (Wellman et al., 2005) or with instrumental action in rats (Parkes and Balleine, 2013). Because sensitivity to outcome devaluation requires a rather detailed (e.g., specific taste) representation of the rewarding outcome (Balleine and Dickinson, 1998), these results show that the BLA is critical for encoding need-based incentive value into a detailed outcome representation in either a Pavlovian S-O or instrumental R-O association. In further support of this, both monkeys with whole amygdala lesions (Izquierdo and Murray, 2007) and rats with BLA lesions (Hatfield et al., 1996; Johnson et al., 2009) choose adaptively following a devaluation procedure in control tests conducted in the absence of the Pavlovian or instrumental contingencies (often measured by post-test consumption). Their deficit therefore lies not in registering the changed value of the food itself, but in linking that value to the outcome representation within the learned association.

The BLA is important for coding value changes not only when they decrease, but also when they increase. For example, an intact BLA is required to learn about a post-training positive shift in the incentive value of a food reward that occurs when the animal first experiences that specific food while hungry. This learning relies on a BLA µ-opioid receptor-dependent mechanism (Wassum et al., 2009, 2011). The BLA has also been shown to be necessary for acquiring socially transmitted increases in food value (Wang et al., 2006). Importantly, food palatability responses (e.g., licking microstructure) are not altered by blockade of BLA µ-opioid receptors (Wassum et al., 2009), indicating that the BLA may not be critical for expression of the hedonic experience derived from the rewards themselves, but rather for encoding the incentive value changes that result from that experience. The finding that populations of BLA neurons reflect taste-specific gustatory information (Fontanini et al., 2009) may further suggest that specific rewards are represented in BLA neuronal activity, with a µ-opioid receptor-dependent mechanism (perhaps among others) acting on these signals to encode incentive value into the reward representation. In further support of this, we have found transient glutamate concentration changes in BLA microenvironments to be outcome-specific (Malvaez et al., 2015).

Because the BLA is vital for encoding biologically based incentive value into a specific reward representation in either a Pavlovian or instrumental association, it is likely to be engaged not only post-training, when these values are in flux (as discussed above), but also during initial learning, when such value is first established. Consistent with this idea, BLA neurons fire in response to reward-predictive cues during learning (Ambroggi et al., 2008; Paton et al., 2006; Schoenbaum et al., 1999; Tye and Janak, 2007; Uwano et al., 1995) and in anticipation of reward in an olfactory instrumental discrimination task (Schoenbaum et al., 1998). Similarly, transient glutamate release in the BLA tracks instrumental reward seeking after relatively little training (Wassum et al., 2012). Moreover, strengthening of excitatory thalamo-lateral amygdala synapses early in learning predicts successful Pavlovian cue-reward learning (Tye et al., 2008), and optical stimulation of BLA-to-NAc projections supports acquisition of a self-stimulation response, whereas inhibition of this pathway attenuates sucrose-cue conditioned responding (Stuber et al., 2011).

Although the BLA is not required to acquire many Pavlovian and instrumental conditioned responses, the evidence described above suggests that it is active during learning and is required to learn reward value changes. This suggests that pre-training BLA lesions disrupt the formation of specific Pavlovian S-O or instrumental goal-directed R-O associations, thereby forcing acquisition via an alternate form of learning that does not require the BLA: the inflexible stimulus-response (S-R) associative architecture (i.e., habit). In support of this, pre-training BLA lesions also render instrumental responding insensitive to changes in the R-O contingency (Balleine et al., 2003), another marker of habit. Moreover, primate amygdala neurons can show prospective activity that reflects internally generated plans toward future goals, similar to what would be expected if an R-O associative architecture were being used to guide actions (Hernádi et al., 2015).

The BLA links incentive value to the outcome representation encoded in both S-O and R-O associations, but it does not serve this function alone. Evidence suggests that the amygdala provides outcome-specific value information to the prefrontal cortex (namely the OFC), and that it must interact with the OFC in stimulus-guided tasks to update this information following selective satiation (Baxter et al., 2000; Saddoris et al., 2005; Zeeb and Winstanley, 2013). Rudebeck and colleagues (2013) outlined this mechanism by showing that amygdala lesions attenuate value coding in the OFC on a two-choice visual discrimination task. The Schoenbaum laboratory has shown that BLA-specific lesions impair OFC encoding of S-O representations and the acquisition of outcome-specific value into cue-related OFC activity (Schoenbaum et al., 2003). OFC lesions also disrupt such associative encoding in the BLA, suggesting a bidirectional functional relationship (Saddoris et al., 2005). Importantly, the OFC is necessary for devaluation sensitivity when tasks are heavily guided by reward-predictive stimuli, but is not necessary for the sensitivity of instrumental action to devaluation (Ostlund and Balleine, 2007b). This may indicate that connections between the BLA and OFC are more important for stimulus-predicted (i.e., S-O) reward values (Ostlund and Balleine, 2007c).

Connections between the BLA and the gustatory region of the insular cortex have been proposed to be vital for encoding and using outcome-specific value information in instrumental R-O associations. Blockade of NMDA receptors unilaterally in the BLA before devaluation, and then in the contralateral gustatory insular cortex just before the instrumental test, abolishes the sensitivity of instrumental action to outcome devaluation (Parkes and Balleine, 2013). Reversing the order of infusion leaves devaluation intact, supporting the idea that outcome-specific reward values encoded in the BLA are sent to the insula for use in guiding instrumental action (Parkes and Balleine, 2013). In further support of this, palatable taste responses occur in the BLA before they occur in the gustatory insula, and BLA inactivation alters insula neuronal taste responses (Piette et al., 2012). Secondarily, these data suggest that at least NMDA receptors in the BLA (as well as opioid receptors; Wassum et al., 2009) are unnecessary for using an updated value to guide instrumental action. The extent to which other signaling systems (e.g., AMPA, serotonin, dopamine) are involved in the actual use of specific reward values to guide instrumental action remains an open avenue for exploration. It is likely that the BLA does contribute to online consideration of reward value because, as mentioned, prospective neuronal planning activity encoding the value of distant goals has been identified in the primate amygdala (Hernádi et al., 2015).

The BLA must also interact with the striatum to manage appropriate responses to changes in reward value. In particular, projections from the BLA to the NAc core mediate sensitivity of instrumental action to devaluation (Shiflett and Balleine, 2010), and connections to the DMS are vital for acquiring instrumental R-O associations and using changes in reward value to guide instrumental action (Corbit et al., 2013). Although these functional links may be achieved indirectly via cortical connections with both structures, there is evidence of a direct influence of BLA projections to the NAc in appetitive behavior: appetitive conditioning enhances plasticity in direct BLA projections to the NAc, and activating BLA-NAc glutamatergic projections promotes motivated behavior (Namburi et al., 2015; Stuber et al., 2011).

Reward History

The case of selective satiation outcome devaluation described above is particularly interesting because, under these circumstances, the recent history with a specific reward greatly influences its current incentive value. Reward history in a broader sense is also important for optimizing reward procurement, and recent work in our laboratories (Izquierdo et al., 2013; Ochoa et al., 2015; Stolyarova et al., 2014, 2015; Wassum et al., 2014) has directed us to consider the importance of this variable in psychopathology, including addiction.

Crespi (1942) first described a shift in the attractiveness of a reward as determined by its history, or its “contrast” with previous reward. In this early study, groups of rats were trained to run a maze for rewards of different magnitudes. Not surprisingly, rats trained to run for a smaller reward displayed longer run latencies than those trained to run for a larger reward. However, when the reward magnitudes shifted, so did performance: rats that experienced a small reward after training to earn a large reward responded with even longer latencies than rats that had consistently received the small reward (negative contrast). The converse was also true: rats shifted from a small to a large reward responded faster than those that had consistently received the large reward (positive contrast). These data shed light on the important role of reward history in appetitive behavior. Many years later, the McGaugh lab was the first to show that the amygdala mediates responses to these shifts in reward magnitude (Salinas et al., 1993).
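The contrast effect can be framed as a discrepancy between the obtained reward and an expectation built from reward history. The Python sketch below is only an illustration under stated assumptions, not a model used by Crespi (1942) or by Salinas et al. (1993): it assumes a Rescorla-Wagner-style delta rule in which the expected reward `V` moves toward each obtained reward at a hypothetical learning rate `alpha`, and the reward magnitudes are arbitrary. After a downshift from a large to a small reward, the obtained reward falls far short of the expectation accumulated during training, producing a large negative discrepancy that is essentially absent in animals that always received the small reward.

```python
def delta_rule_trace(rewards, alpha=0.2, v0=0.0):
    """Track an expected reward V across trials with a delta rule.

    Illustrative assumption (not from the studies discussed):
    V <- V + alpha * (r - V), where alpha is a hypothetical learning rate.
    Returns the expectation held before each trial and the discrepancy
    (obtained minus expected reward) on that trial.
    """
    v = v0
    expectations, discrepancies = [], []
    for r in rewards:
        delta = r - v              # obtained reward minus expected reward
        expectations.append(v)
        discrepancies.append(delta)
        v += alpha * delta         # expectation drifts toward recent rewards
    return expectations, discrepancies


# Hypothetical reward magnitudes (arbitrary units): 20 training trials, then a shift.
shifted = [10.0] * 20 + [1.0] * 5     # large reward, then downshifted to small
constant = [1.0] * 25                 # small reward throughout

_, shifted_err = delta_rule_trace(shifted)
_, constant_err = delta_rule_trace(constant)

# Discrepancy on the first post-shift trial (index 20).
print(f"downshifted: {shifted_err[20]:.2f}, constant small: {constant_err[20]:.2f}")
# prints: downshifted: -8.88, constant small: 0.01
```

The delta rule captures only the size and sign of the discrepancy. It does not by itself explain why downshifted rats run more slowly than rats that always received the small reward; that would require a further assumption about how negative discrepancies affect performance.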

The BLA is involved when the outcome of an action (e.g., a maze run) differs in magnitude from that expected on the basis of that action’s reward history (Salinas et al., 1993). Is the BLA also involved in detecting and/or using shifts in reward contingency when a previously rewarded response is no longer rewarded? As mentioned, the BLA is generally not necessary for forming the initial stimulus-reward associations required for discrimination learning, although this was initially thought to be the case (Gaffan et al., 1993; Jones and Mishkin, 1972; Kesner and Williams, 1995; Schoenbaum et al., 2003), and there is even evidence that selective pre-training BLA lesions slightly enhance the ability to form stimulus-reward associations (Izquierdo et al., 2013). The role of the BLA in reversing these associations is even less clear: BLA lesions have been reported to impair reversal (Churchwell et al., 2009; Jones and Mishkin, 1972; Schoenbaum et al., 2003), to have no effect (Izquierdo and Murray, 2007), and even to abolish the learning deficits produced by OFC lesions (Stalnaker et al., 2007).

The variety of methodological details (sensory modality, pre- or post-training lesions, etc.) makes it challenging to resolve definitively a role for the BLA in reversal learning, but consideration of its role in encoding outcome-specific representations provides a potentially parsimonious explanation of these disparate findings. The BLA may be less involved when animals can solve the discrimination problem (either initial or reversal) without forming representations of specific reward outcomes: when, for example, a win-stay, lose-shift strategy can be adopted, or when a simple S-R associative structure can adequately guide behavior. Such strategies may be employed in tasks in which only one type of rewarding outcome is earned, or in which two or more rewards can be obtained but reward discrimination is not required for action selection. Removing the contribution of the BLA to forming a specific outcome representation may alter the way the association is learned (pre-training manipulation) or the associations available for use (post-training/pre-test manipulation) and, therefore, the way the animal adapts to changes in contingency. Support for this comes from recent analyses of how previous-trial outcomes (i.e., very recent reward history) affect responses on the subsequent trial: accuracy following positive feedback (correct+1) and following negative feedback (error+1). Such fine-grained analyses reveal that whole amygdala lesions actually facilitate learning from positive feedback in monkeys (Rudebeck and Murray, 2008) and that selective BLA lesions facilitate responding after negative feedback in rats (Izquierdo et al., 2013). This suggests that pre-training BLA lesions may result in original encoding that is more sensitive to this form of prediction error-like feedback. Notably, only one outcome type was used in these tasks, so outcome-specific representations would confer no benefit. Moreover, on a similar single-outcome task, pre-training neurotoxic amygdala lesions in monkeys increased the rate of instrumental extinction, perhaps because of impaired initial R-O encoding (Izquierdo and Murray, 2005).
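The correct+1 and error+1 measures are conditional accuracies computed from a trial-by-trial record. The following is a minimal sketch, assuming each trial is coded simply as correct or incorrect; the function name is hypothetical and this is not the analysis code used in the cited studies.

```python
from typing import Sequence, Tuple


def feedback_conditional_accuracy(correct: Sequence[bool]) -> Tuple[float, float]:
    """Return (correct+1, error+1) for a sequence of trial outcomes.

    correct+1: proportion of trials answered correctly after a correct trial.
    error+1:   proportion of trials answered correctly after an error.
    A measure with no qualifying trials is returned as NaN.
    """
    # Pair each trial with the trial that follows it.
    pairs = list(zip(correct, correct[1:]))
    after_correct = [nxt for prev, nxt in pairs if prev]
    after_error = [nxt for prev, nxt in pairs if not prev]

    def rate(outcomes: Sequence[bool]) -> float:
        return sum(outcomes) / len(outcomes) if outcomes else float("nan")

    return rate(after_correct), rate(after_error)


# Hypothetical session: True = correct choice, False = error.
session = [True, True, False, True, False, False, True, True]
c_plus_1, e_plus_1 = feedback_conditional_accuracy(session)
```

For this made-up session, correct+1 is 0.5 and error+1 is about 0.67. A lesion that facilitates learning from negative feedback would appear as a higher error+1 than in controls.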

In tasks that require comparison between two different outcomes, such as positive versus negative reward (Schoenbaum et al., 2003) or low versus high magnitude (Churchwell et al., 2009), and that therefore require (or at least benefit from) outcome-specific representations, BLA lesions have been shown to disrupt reversal learning. Moreover, BLA inactivation abolishes the benefit conferred by the use of outcome-specific representations (i.e., expectancies) on an instrumental discrimination working memory task (Savage et al., 2007), and BLA lesions prevent the use of previously learned information about food availability to guide behavior (Galarce et al., 2010).

Therefore, the BLA is required for responding to violations of a cue’s or action’s recent reward history (e.g., contingency shifts) to the extent that the response relies on an outcome-specific representation for the comparison. Indeed, BLA neurons in the rat can develop selective cue-evoked firing in an appetitive go/no-go discrimination task, and this selectivity switches in accordance with a contingency reversal (Schoenbaum et al., 1999). Similar findings have been reported in the primate amygdala (Paton et al., 2006). There is also some evidence that BLA activity may track aspects of a previous-to-current outcome comparison based on reward history. In a study of BLA single-unit activity, a portion of the neurons that responded phasically to reward delivery during initial instrumental learning reversed their response when the reward was omitted during extinction, and another small population of neurons that had not been active during original learning also responded to reward omission (Tye et al., 2010). In accord with this, Roesch et al. (2012) found that BLA neurons respond to violations of reward expectation, increasing their firing both when a reward is better and when it is worse than expected based on reward history. Moreover, during initial learning, primate amygdala neurons show valence-specific responses to unexpected rewards (i.e., rewards that deviate from reward history; Belova et al., 2007; Henry et al., 2007). These results suggest that the BLA becomes plastic when reward contingency changes and that neurons in this region use recent reward history to compare expected and received rewards.
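The comparison between expected and received reward is often formalized as a delta-rule (Rescorla-Wagner-style) prediction error, in which an expectation built from reward history is updated by the difference between received and expected reward. The sketch below is an illustrative assumption, not a model proposed in the studies cited; the learning rate `alpha` and the function names are hypothetical. Loosely, the signed error corresponds to valence-specific responses, and its absolute value to responses that increase whether a reward is better or worse than expected.

```python
from typing import List, Sequence, Tuple


def update_expectation(expected: float, received: float, alpha: float) -> Tuple[float, float]:
    """One delta-rule step: return (new expectation, prediction error)."""
    delta = received - expected  # positive: better than expected; negative: worse
    return expected + alpha * delta, delta


def run_history(rewards: Sequence[float], alpha: float = 0.5,
                expected: float = 0.0) -> Tuple[float, List[float]]:
    """Build an expectation over a reward history; return it with every error."""
    errors = []
    for r in rewards:
        expected, delta = update_expectation(expected, r, alpha)
        errors.append(delta)
    return expected, errors


# Three rewarded trials followed by an omission (as in extinction).
final_v, deltas = run_history([1.0, 1.0, 1.0, 0.0])
signed = deltas[-1]         # negative: worse than expected
unsigned = abs(deltas[-1])  # size of the surprise, regardless of sign
```

With `alpha = 0.5`, the errors for this history are 1.0, 0.5, 0.25 and -0.875: the omission produces a large negative error because reward history had built up an expectation.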

Further supporting this, BLA neurons respond when the cue-reward contingency changes, not because of a change in the contiguous relationship between cue and reward, but because of a change in background reward availability. Bermudez and Schultz (2010) demonstrated that single amygdala neurons in the monkey can be sensitive to background reward and can therefore code the positive contrast between received and background reward (i.e., “true reward predictions”; Schultz, 2011). Activity of single BLA neurons can track both positive and negative reward-prediction errors, and the BLA is required to use this information to support effective cue-reward learning (Esber and Holland, 2014). These data suggest that the profitability of the context experienced by the animal (i.e., its reward habitat or reward environment) may be reflected, and perhaps used, by BLA neurons for comparison with the outcome-specific reward representations important for learning. For most organisms, dynamic, complex environments are the norm, not the exception. Therefore, robust models of adaptive, goal-directed behavior must adequately consider fluctuations of reward profitability in the environment, systematically incorporating reward history as a factor in learning so that, for example, positive and negative contrast effects can be accounted for. We discuss one such model in Models of Reward Procurement below.
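The distinction between contingency and contiguity can be made concrete with the standard ΔP contingency measure, P(reward | cue) - P(reward | no cue). In this minimal sketch with hypothetical counts, the cue is followed by reward on 90% of occasions in both conditions (identical contiguity), but raising background reward to the same rate abolishes the contingency. The class and function names are illustrative only.

```python
from dataclasses import dataclass


@dataclass
class TrialCounts:
    """Hypothetical counts of rewarded/unrewarded periods with and without the cue."""
    cue_rewarded: int
    cue_unrewarded: int
    no_cue_rewarded: int
    no_cue_unrewarded: int


def delta_p(c: TrialCounts) -> float:
    """Contingency: P(reward | cue) - P(reward | no cue)."""
    p_cue = c.cue_rewarded / (c.cue_rewarded + c.cue_unrewarded)
    p_background = c.no_cue_rewarded / (c.no_cue_rewarded + c.no_cue_unrewarded)
    return p_cue - p_background


# Same cue-reward pairing (90%), different background reward.
no_background = TrialCounts(90, 10, 0, 100)
high_background = TrialCounts(90, 10, 90, 10)
print(delta_p(no_background))    # 0.9: the cue is predictive
print(delta_p(high_background))  # 0.0: the cue adds nothing beyond background
```

The sketch treats cue and no-cue periods as comparable sampling units, which is itself a simplifying assumption.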

In addition to background reward within a context, longer-term reward history may also contribute to the reward habitat. Although a detailed consideration of these factors is beyond the scope of this review, potent reinforcers and powerful environmental experiences may recalibrate reward history and alter the ‘gain’ on future reward valuation (i.e., incentive learning) and responding. Such factors include, but are not limited to, social experience (e.g., whether animals are socially or singly housed), quality of early-life care, timing of weaning and food restriction, and exposure to potent drug reinforcers (Lüthi and Lüscher, 2014). Of particular interest is reward experience during development. Adolescence, for example, may serve as a critical window for establishing a “baseline” reward history that calibrates responses to future rewards in adulthood. How different reward environments in early life (reward histories) contribute to shaping reward circuitry, including the BLA, and behavior is poorly understood. We have new evidence that increased structural remodeling in the amygdala may be a characteristic of adolescence that contributes to a reward-sensitive phenotype (Stolyarova and Izquierdo, 2015), but this avenue is ripe for future exploration.

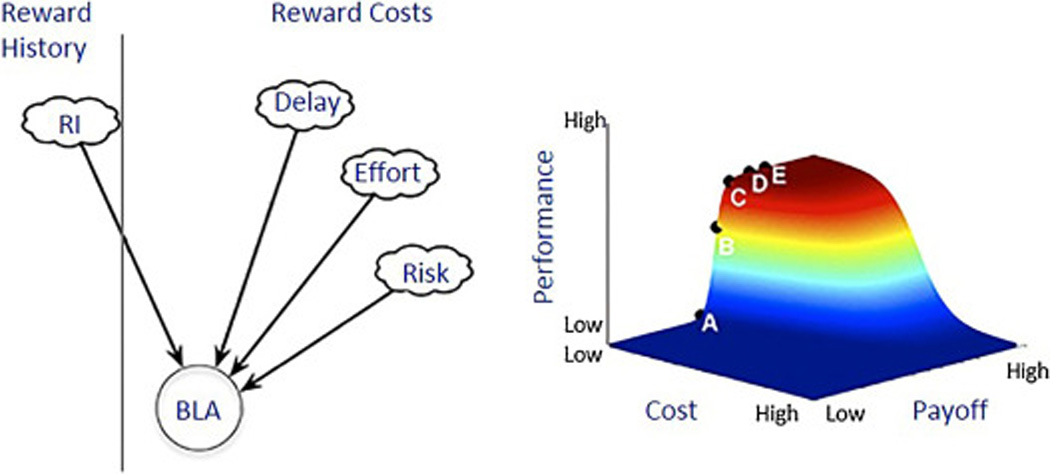

Reward Cost, or Benefit

Information about the expected reward (e.g., expected outcome availability, value relative to current need state, reward history) is typically integrated with the current price of procuring that reward (Figure 3). Such cost-benefit analysis is reflected in the reduction of reward-seeking behavior when effort requirements increase, and in the finding that animals choose less effortful options in decision-making tasks when the rewards earned are of equal magnitude. The evidence laid out above suggests that the BLA may contribute the benefit side of this analysis by encoding motivationally relevant, outcome-specific representations and, perhaps, deviations of these from reward history and habitat. But the contribution of the BLA to effort or cost calculations (or at least to performance on tasks requiring such a calculation) has also been evaluated. We consider these studies here, dividing our analysis of cost into three forms: physical and cognitive effort, delay, and reward probability/risk.

Figure 3. An adapted model of reward procurement for the integration of incentive value, reward history, and costs by the basolateral amygdala (BLA).

According to this model, a signal of subjective reward intensity (RI), which we call incentive value, is integrated from the past into the present (a vertical line separates past from present). In the present, this signal is integrated with cost parameters (risk, delay, effort). The evidence we review suggests that this integration occurs dynamically in the BLA, as part of a distributed network. The putative product of BLA signaling (to areas such as the dorsomedial striatum, nucleus accumbens, and orbitofrontal cortex) is a position on the “reward mountain” (A through E). The mountain is a scalar representation of “cost” and “payoff.” Modified from Breton et al. (2014).

Physical and Cognitive Effort

As with reversal learning, investigations of BLA involvement in physical effort have produced variable results. Studies with whole amygdala lesions in monkeys (Aggleton and Passingham, 1982; Baxter et al., 2000) or BLA-specific lesions/inactivation in rats (McGregor and Roberts, 1993; McGregor et al., 1994) report no effect on the physical effort expended to procure reward, as measured by progressive-ratio responding. Impairments tend to manifest when task designs incorporate choice, likely because choice requires representing specific, motivationally significant outcomes, for which the BLA is needed. Indeed, BLA inactivation or BLA-anterior cingulate disconnection biases choice in favor of a small reward at low effort over a large reward at greater effort (Floresco and Ghods-Sharifi, 2007; Ghods-Sharifi et al., 2009; Ostrander et al., 2011). This could be because rats are unable to encode (pre-training lesions) or access (post-training manipulations) the specific outcome in R-O associations and, as a result, default to an easiest-option strategy. Ostrander et al. (2011) found that BLA lesions produced only transient work aversion: with practice, even lesioned animals showed rates of effort discounting comparable to those of sham-operated rats. Importantly, when cues or task parameters changed, BLA-lesioned animals were impaired anew. This may reflect a lesion-induced shift to a strategy that is slower to encode the effort variable.

More recently, animal models of cognitive (attentional) effort have been developed to better reflect the effort demands faced by humans (Hosking et al., 2014). In a modification of the 5-choice serial reaction time task, rats can choose (by lever press) either to sustain greater attentional effort to obtain a larger reward or to opt for a less difficult trial with a lower attentional cost but a smaller reward. Following the choice, one of five ports is illuminated. In the low effort/low reward condition, the port remains illuminated for 1 s, allowing ample time for the rat to attend to the cue and respond. In the high effort/high reward condition, the port is illuminated for only 0.2 s, requiring the rat to attend and respond much more quickly. Under these conditions, BLA inactivation caused hard-working rats to ‘slack off’, consistent with the physical effort-averse phenotype of BLA-lesioned rats in choice tasks described above. Interestingly, however, BLA inactivation caused ‘slacker’ rats to engage in more attentional effort. That these individual differences exist in the first place suggests that effort costs (at least attentional ones) are subjectively integrated with reward value. Although BLA inactivation may have caused a shift to a strategy independent of reward value, these data could suggest, as the authors interpret them, that the BLA is involved in the cost-benefit calculation of a choice, coding a unique subjective value for each available option that combines outcome-specific value with the subjective cost of obtaining that outcome. Recordings of BLA neuronal activity during these cost calculations are required to fully support this, but there is already evidence that the activity of neurons in the monkey amygdala can reflect economic evaluation of options (e.g., spend now, save later) and internal action plans for future reward procurement (Grabenhorst et al., 2012; Hernadi et al., 2015).
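One way to express the idea that attentional effort is subjectively integrated with reward value is a linear cost model passed through a softmax choice rule. This is a sketch under stated assumptions: the subtractive form, the effort measure (the reciprocal of cue duration, so 1 s gives 1 and 0.2 s gives 5), the reward magnitudes, and the parameters `k` (individual effort sensitivity) and `beta` (choice consistency) are all hypothetical and are not taken from Hosking et al. (2014). In this framing, ‘workers’ and ‘slackers’ correspond to low and high `k`.

```python
import math


def subjective_value(magnitude: float, cue_duration_s: float, k: float) -> float:
    """Reward magnitude minus an effort cost; effort is assumed to be 1 / cue duration."""
    effort = 1.0 / cue_duration_s
    return magnitude - k * effort


def p_choose_hard(k: float, beta: float = 3.0) -> float:
    """Softmax probability of choosing the high effort/high reward option.

    Hypothetical options: easy = 1 unit of reward with a 1 s cue,
    hard = 2 units of reward with a 0.2 s cue.
    """
    v_easy = subjective_value(1.0, 1.0, k)
    v_hard = subjective_value(2.0, 0.2, k)
    return 1.0 / (1.0 + math.exp(-beta * (v_hard - v_easy)))


worker = p_choose_hard(k=0.1)   # mostly chooses the hard option
slacker = p_choose_hard(k=0.5)  # mostly chooses the easy option
```

With these made-up numbers the two options are equally valued at `k = 0.25`. Whether the BLA sets anything like `k` is exactly the open question that recordings during such choices would need to address.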

Delay

The action-reward waiting interval (i.e., delay) is also an element of a reward’s cost. As with effort, BLA lesions/inactivations tend to produce a delay-averse phenotype, which has sometimes been interpreted as enhanced impulsivity. Winstanley et al. (2004) found that BLA lesions decrease preference for a large, delayed reward over a smaller, immediate reward. Disconnection of the BLA and medial OFC replicates this effect (Churchwell et al., 2009). These data suggest that the BLA may encode information about the temporal delay of an anticipated reward, such that when this information is absent, subjects default to a delay-averse strategy. In support of this, activity in the primate amygdala is also sensitive to the expected time of reward delivery (Bermudez et al., 2012).
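Delay aversion is commonly quantified with the standard hyperbolic discounting function V = A / (1 + kD), where A is reward amount, D is delay, and k is the discounting rate; in that framework a delay-averse phenotype is a larger k. The sketch below uses hypothetical amounts (1 versus 4 units) and rates; it illustrates the formula and is not a fit to the cited lesion data.

```python
def hyperbolic_value(amount: float, delay_s: float, k: float) -> float:
    """Subjective value of a reward after a delay under hyperbolic discounting."""
    return amount / (1.0 + k * delay_s)


def prefers_large_delayed(k: float, delay_s: float,
                          small: float = 1.0, large: float = 4.0) -> bool:
    """True if the large, delayed reward is valued above the small, immediate one."""
    return hyperbolic_value(large, delay_s, k) > hyperbolic_value(small, 0.0, k)


def indifference_delay(k: float, small: float = 1.0, large: float = 4.0) -> float:
    """Delay D at which large / (1 + k*D) equals the immediate small reward."""
    return (large / small - 1.0) / k


shallow = prefers_large_delayed(k=0.05, delay_s=20.0)  # waits for the larger reward
steep = prefers_large_delayed(k=0.5, delay_s=20.0)     # takes the immediate reward
```

Because the indifference delay scales as 1/k, a tenfold steeper discounter tolerates only a tenth of the delay before switching to the smaller, immediate option.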

Risk and Probability

Risk of punishment, reward omission, and lower reward probability are reward costs that are highly relevant to everyday human behavior and misbehavior (e.g., gambling). As with effort and delay, BLA inactivation produces a risk-averse pattern in a choice task on trials with the most uncertain outcomes, decreasing choice of the larger, uncertain option over the smaller, certain option (Ghods-Sharifi et al., 2009). BLA lesions also make rats risk averse when gambling with losses, such that they are more likely to accept a smaller, guaranteed loss (Tremblay et al., 2014). Interestingly, however, in a task developed as a rodent analog of the Iowa Gambling Task, post-training excitotoxic BLA lesions produce a risk-prone phenotype, characterized by an inability to avoid disadvantageous high-reward options that carry a large risk of a time-out punishment (Zeeb and Winstanley, 2011). This finding is supported by results from another risky decision-making task in rodents involving explicit foot-shock punishment (Orsini et al., 2015). These data suggest that the BLA may be vital for encoding both rewarding and punishing outcomes and incorporating them into an outcome representation. Indeed, the BLA contains neurons encoding both positive and negative valence (Belova et al., 2007, 2008; Paton et al., 2006; Sangha et al., 2013; Uwano et al., 1995), and the BLA is required for active avoidance of aversive events (Killcross et al., 1997; Lázaro-Muñoz et al., 2010). Recent evidence suggests that this reward and punishment encoding in the BLA may be mediated by opposing plasticity in neurons projecting to the NAc and the CeN: reward enhances and aversive stimuli attenuate BLA-NAc plasticity, and vice versa for the projection to the CeN (Namburi et al., 2015).
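The two kinds of risk discussed here can be separated in a toy valuation. Uncertain reward is often modeled with hyperbolic probability discounting on the odds against winning, V = A / (1 + hθ) with θ = (1 - p)/p, where a larger h means greater aversion to reward omission. Explicit punishment can be added as a weighted expected cost. The punishment term and its weight `w`, the option values, and all parameter settings below are hypothetical assumptions, chosen only to show that raising h yields risk aversion while lowering w yields risk proneness, mirroring the two lesion phenotypes; nothing here is fitted to the cited data.

```python
def odds_against(p: float) -> float:
    """Odds against receiving the reward, (1 - p) / p, for 0 < p <= 1."""
    return (1.0 - p) / p


def probability_discounted_value(amount: float, p: float, h: float) -> float:
    """Hyperbolic probability discounting: amount / (1 + h * odds_against)."""
    return amount / (1.0 + h * odds_against(p))


def punished_value(reward: float, p_punish: float, penalty: float, w: float) -> float:
    """Hypothetical linear model: reward minus weighted expected punishment."""
    return reward - w * p_punish * penalty


def chooses_risky_reward(h: float) -> bool:
    """Reward omission: large uncertain (4 units, p = 0.25) vs small certain (1 unit)."""
    return probability_discounted_value(4.0, 0.25, h) > probability_discounted_value(1.0, 1.0, h)


def chooses_risky_punished(w: float) -> bool:
    """Explicit punishment: risky (3 units, 50% chance of penalty 8)
    vs safe (1 unit, 10% chance of penalty 8)."""
    return punished_value(3.0, 0.5, 8.0, w) > punished_value(1.0, 0.1, 8.0, w)
```

For example, `chooses_risky_reward(0.5)` is True but `chooses_risky_reward(2.0)` is False, while `chooses_risky_punished(1.0)` is False but `chooses_risky_punished(0.25)` is True.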

In sum, inactivations or lesions of the BLA in rodents tend to produce a preference for safe choices in a variety of cost-benefit choice paradigms, although when punishment is signaled or explicit, BLA lesions result in a higher-risk phenotype. Therefore, under normal conditions, the BLA plays a critical role in integrating price and payoff (cost-benefit factors) in reward choices and in generating exploitative strategies in uncertain situations. Exactly how this occurs remains unknown. There are at least two possibilities, which are not mutually exclusive: the BLA may encode elements of cost itself (perhaps especially punishment risk), or, by representing specific reward information (including value), it may participate in a cost-benefit analysis over a distributed network.

Models of reward procurement

The evidence described above implicates the BLA in outcome-specific values, reward history and, perhaps, some elements of reward cost used to guide reward-seeking behavior and decision making. This idea is congruent with the notion that the BLA is vital for “state value” (Morrison and Salzman, 2010). Although several computational models help synthesize these results and clarify the role of the BLA in appetitive behavior (see especially work on model-based reinforcement learning: Dayan and Daw, 2008; Doll et al., 2012; Doya et al., 2002; Prévost et al., 2013), one emerging model encompasses all of these elements. According to Shizgal and colleagues (Breton et al., 2014), reward-seeking behavior is an output measure, plotted as what this group calls a “reward mountain”, that results from an integration of costs and reward history (Figure 3). The vigor of reward seeking depends on comparing an estimate of what has been experienced in the recent past (reward history) with the current cost of reward. The model predicts that manipulations acting on early stages of reward processing shift the three-dimensional reward mountain along the payoff axis, making animals more sensitive to reward and consequently increasing effort expenditure for reward. Conversely, manipulations acting on later stages shift the mountain along the price axis, making animals more sensitive to cost. Of course, a single node in a computational model is unlikely to correspond to a single brain region, but there is evidence that the BLA displays many properties of the hypothetical “integrator” node in the reward mountain model. Indeed, the data described above implicate the BLA in the use of reward history and cost information to guide reward seeking.
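The structure of the reward mountain can be sketched as a surface giving the proportion of time allocated to reward seeking as a function of reward strength and price. The functional forms below are assumptions loosely patterned on the general structure described by Shizgal and colleagues: a saturating reward-growth function for intensity, payoff taken as intensity times probability divided by price, and a ratio of power functions comparing that payoff with the payoff from other activities. The parameter names and values (`f_hm`, `g`, `a`, `u_other`) are hypothetical, not those of Breton et al. (2014), and reward strength stands in for the payoff axis. The sketch shows why such a model can distinguish early from late manipulations: changing the reward-growth function displaces the surface along the strength axis, whereas scaling the subjective price displaces it along the price axis.

```python
def reward_intensity(strength: float, f_hm: float = 100.0, g: float = 5.0) -> float:
    """Saturating reward-growth function; f_hm is the strength giving half-maximal intensity."""
    return strength**g / (strength**g + f_hm**g)


def time_allocation(strength: float, price: float, prob: float = 1.0,
                    f_hm: float = 100.0, u_other: float = 0.05, a: float = 3.0) -> float:
    """Proportion of time spent working for reward (between 0 and 1).

    payoff = intensity * probability / price is compared with the payoff
    u_other from alternative activities.
    """
    payoff = reward_intensity(strength, f_hm) * prob / price
    return payoff**a / (payoff**a + u_other**a)


# "Early" manipulation: halving f_hm is equivalent to doubling reward strength,
# i.e., a displacement along the strength (payoff) axis.
early = time_allocation(60.0, 4.0, f_hm=50.0)
same_as_double_strength = time_allocation(120.0, 4.0, f_hm=100.0)

# "Late" manipulation: doubling the subjective price is a displacement along the
# price axis; in this toy, halving reward probability has exactly the same effect.
late = time_allocation(150.0, 4.0 * 2)
half_probability = time_allocation(150.0, 4.0, prob=0.5)
```

Evaluating `time_allocation` over a grid of strengths and prices produces the mountain-shaped surface; the equivalences above are properties of these assumed forms, not empirical claims.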

Effort to procure reward depends not only on an estimate of reward history but also, dynamically, on uncertainty in the environment about reward availability. Both positive and negative contrast effects can be explained as an adaptive response to uncertainty about how conditions will change over time: the animal’s “belief” about the profitability of its habitat (McNamara et al., 2013). Effort expenditure is highest in animals whose foraging opportunities change most rapidly and dramatically for the better, since working hard while conditions are good maximizes the rewards they can obtain (e.g., bracing for poor habitats in the future). Conversely, animals that find themselves in mostly impoverished environments will reduce their efforts until rich conditions, though seldom experienced, return. This aspect of the model may inform recent data showing that the BLA (specifically, serotonin in this region) is required for reward choices involving probabilistic, uncertain outcomes (Rygula et al., 2014) but not deterministic ones (Ochoa et al., 2015). One interpretation of these findings is that introducing uncertainty through probabilistic outcome delivery fundamentally changes reward encoding in the BLA. This is a distinct possibility, since animals in these studies had experience with the task before surgery (Rygula et al., 2014; Ochoa et al., 2015). Thus, stimuli that predict ambiguous outcomes may engage the BLA more than predictable, deterministic ones (Belova et al., 2007; Henry et al., 2007; Hsu et al., 2005). Notably, selective PIT, which requires an intact BLA as described above, is typically conducted with probabilistically reward-paired cues.

Consistent with such timing-dependent effects, BLA manipulations most selectively affect early acquisition of reward learning, when reward contingencies and values are most uncertain, and leave later learning intact, once these associations are more firmly established. An equally likely possibility is that the BLA is needed to encode specific reward values early in training but is less needed once those values are set, until they change, at which point the BLA is re-engaged. Additionally, early in learning the animal may encode high-resolution details about the reward (sensory and affective properties) that later become less salient. Uncertainty may, therefore, be a trigger for BLA involvement in reward learning and reward-seeking behavior.
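Two senses of uncertainty are at play in this argument, and a small calculation separates them. Outcome uncertainty, which is zero for deterministic outcomes and maximal when reward probability is 0.5, can be measured as the Shannon entropy of a binary outcome. Uncertainty about the contingency itself, which is high early in learning and shrinks with experience, can be measured as the variance of a Beta posterior over reward probability. The uniform Beta(1, 1) prior and the function names are assumptions for illustration only.

```python
import math


def outcome_entropy(p: float) -> float:
    """Shannon entropy (bits) of a reward delivered with probability p."""
    if p <= 0.0 or p >= 1.0:
        return 0.0  # deterministic outcome: no outcome uncertainty
    return -(p * math.log2(p) + (1.0 - p) * math.log2(1.0 - p))


def beta_posterior_variance(n_rewarded: int, n_unrewarded: int, prior: float = 1.0) -> float:
    """Variance of a Beta(n_rewarded + prior, n_unrewarded + prior) posterior over p."""
    a = n_rewarded + prior
    b = n_unrewarded + prior
    return a * b / ((a + b) ** 2 * (a + b + 1.0))


# A deterministic cue carries no outcome uncertainty; a 50% cue carries 1 bit.
deterministic = outcome_entropy(1.0)
probabilistic = outcome_entropy(0.5)

# Uncertainty about the contingency shrinks with experience, even for a deterministic cue.
early_learning = beta_posterior_variance(2, 0)
late_learning = beta_posterior_variance(40, 0)
```

In this framing, a probabilistic cue keeps outcome uncertainty high even after its contingency is well learned, whereas a deterministic cue becomes uncertainty-free once learning is complete.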

BLA in Addiction

The BLA is clearly a focal region in appetitive learning and memory. Addiction to drugs or alcohol can be conceptualized as the consequence of maladaptive appetitive learning. The role of BLA in drug-seeking and self-administration behavior has been reviewed previously (Everitt and Wolf, 2002; See, 2002). Here we aim to briefly highlight how the BLA may contribute to the long-lasting, off-drug effects on learning about and responding to rewards after experience with opiates and psychostimulants, within the context of the role for the BLA described in this review.

Without an intact BLA, instrumental reward learning relies on an inflexible S-R behavioral strategy that is insensitive to changes in the consequences of the action. While such habits are a normal part of behavior, they can become maladaptive. Indeed, this is thought to occur after chronic exposure to addictive substances and to underlie certain aspects of addiction (Everitt and Robbins, 2005; Hogarth et al., 2013; Ostlund and Balleine, 2008b; Robbins and Everitt, 2002; Zapata et al., 2010). Considerable data suggest that goal-directed control of drug seeking relies on a BLA-NAc circuit, such that pre-existing or drug-induced alterations to this circuit could bias an individual toward maladaptive drug-seeking habits.

Disruptions in this BLA-dependent encoding of incentive value into outcome-specific representations could also lead to maladaptive reward valuation and, therefore, maladaptive reward seeking. In support of this, experience with opiates in the withdrawal state can enhance opiate-seeking behavior, an effect that occurs independently of any opportunity to learn that the drug-seeking action will alleviate withdrawal (i.e., negative reinforcement; Hutcheson et al., 2001). This suggests that chronic opiate administration and withdrawal could disrupt the fundamental incentive learning process. Indeed, we have shown that in early opiate withdrawal, the experience-dependent incentive value of both sucrose and water rewards was increased, resulting in enhanced value-driven reward seeking (Wassum et al., 2014). Importantly, such reward seeking was inconsistent with the emotional experience of reward consumption and occurred in circumstances that would otherwise reduce reward value. This effect was shown to depend on BLA mu opioid receptors (Wassum et al., 2014).

Reward valuation may also be disrupted in withdrawal following chronic methamphetamine exposure. Using a fine-grained analysis of trial-by-trial feedback responses in two-choice discrimination reversal learning, we found that rats show enhanced sensitivity to positive feedback from food rewards during protracted methamphetamine withdrawal (Stolyarova et al., 2015). Drug-pretreated rats benefit more from correctly performed trials than saline-treated animals, and this imbalance occurs most prominently before rats reach 50% accuracy, when reward contingencies diverge most from those experienced before drug exposure. We have additional evidence that rats given freely available options prefer, and work for, larger rewards during methamphetamine withdrawal (Stolyarova et al., 2015), supporting the idea that drug experience has long-lasting effects on reward valuation after the drug is discontinued.

The effects described above likely result from long-term, drug-induced changes in BLA signaling. Indeed, the long-lasting insensitivity to reward devaluation produced by chronic cocaine exposure has been suggested to rely on a persistent, off-drug alteration in BLA signaling (Schoenbaum and Setlow, 2005). Using immunohistochemical and stereological analyses, Rademacher et al. (2015) recently found that amphetamine conditioned place preference experience alters synaptic connectivity within the BLA network through its GABAergic interneurons, resulting in long-lasting effects on BLA signaling. After 30 days of withdrawal from cocaine, the proportion of delay-dependent anticipatory neurons in the BLA is reduced, suggesting that cocaine affects the long-term perception of delay to reward through changes in BLA coding (Zuo et al., 2012). Pelloux et al. (2013) found that rats with BLA lesions increased their cocaine-seeking responses under punishment, indicating aberrant risk assessment as well. This is consistent with the effects of psychostimulants on impulsivity measures in choice paradigms (reviewed in Setlow et al., 2009). Collectively, these studies provide evidence that, long after psychostimulant experience, rats display decreased cost sensitivity and increased reward sensitivity, and that these effects may be mediated, in part, by the BLA.

In terms of the reward procurement model of McNamara et al. (2013) discussed above, the period of withdrawal and abstinence from psychostimulants and opiates could be considered a peak negative contrast effect, when reward history changes dramatically for the worse: from recent experience with a potent drug reinforcer to its sudden unavailability. As such, one would expect enhanced reward procurement strategies when primary or conditioned rewards are available during this period. Although research has focused on corticostriatal adaptations after drug exposure, critical changes in reward learning and reward choices are also likely mediated through the BLA.

Conclusions and Future Considerations

Our conclusion here is that the BLA is vital for encoding motivationally salient, reward-specific representations that include reward value and are used in comparison with reward history and cost to inform appetitive behavior. Overall, the data reviewed above suggest that the results of BLA lesions and inactivations should be understood in the context of the behavioral task demands, which may determine the extent of BLA involvement in reward seeking. As technologies advance, the opportunity for cell- or pathway-specific, temporally precise manipulations may continue to refine our understanding of data collected with blunter tools. Similarly, neural and neurochemical recordings in well-designed behavioral tasks will be imperative to determine the information encoded in the BLA. In particular, we have highlighted a need for recordings of BLA neuronal activity during the online use of reward value and during cost-benefit calculations.

The application of new and emerging technology is needed, but the study of the BLA would also benefit from better, more refined behavioral models that are aligned with emerging computational models. As mentioned, models of adaptive, goal-directed behavior should consider fluctuations in environmental profitability, including both long-term and recent reward history, because these may operate at the level of the BLA to set the ‘gain’ on incentive learning processes. Evaluating how this function of the BLA changes during development, across the lifespan, and following exposure to addictive substances will be particularly exciting. Given the role of the BLA in both aversive and appetitive conditioning, as demonstrated by recently published work (Namburi et al., 2015), the mechanism by which the BLA incorporates positive and negative valence into complex learning and behavior is a ripe avenue of investigation. Continued evaluation of the role of the BLA in both normal and maladaptive appetitive behavior will hasten our understanding of addiction and other disorders of behavioral control.

Highlights.

Questions remain on the circumscribed role of BLA in appetitive behavior

We reconcile a role for BLA in light of recent technology and computational models

BLA functions as an integrator of reward value, history, and cost

As integrator, BLA codes for specific and temporally dynamic outcome representations

Opiates and psychostimulants alter these outcome representations in BLA

Acknowledgements

We thank Alexandra Stolyarova for her help in the preparation of this manuscript and Andrew Holmes for his helpful comments on this review. This work was supported by UCLA’s Division of Life Sciences Recruitment and Retention fund (Izquierdo & Wassum), NIH grant DA035443 (Wassum).

Footnotes

Publisher's Disclaimer: This is a PDF file of an unedited manuscript that has been accepted for publication. As a service to our customers we are providing this early version of the manuscript. The manuscript will undergo copyediting, typesetting, and review of the resulting proof before it is published in its final citable form. Please note that during the production process errors may be discovered which could affect the content, and all legal disclaimers that apply to the journal pertain.

Conflict of Interest. There is nothing to disclose nor are there any conflicts of interest.

References

- Aggleton JP, Passingham RE. An assessment of the reinforcing properties of foods after amygdaloid lesions in rhesus monkeys. J. Comp. Physiol. Psychol. 1982;96(1):71–77. doi: 10.1037/h0077861. [DOI] [PubMed] [Google Scholar]

- Aggleton JP. The amygdala: a functional analysis. second ed. New York: Oxford UP; 2000. [Google Scholar]

- Ambroggi F, Ishikawa A, Fields HL, Nicola SM. Basolateral amygdala neurons facilitate reward-seeking behavior by exciting nucleus accumbens neurons. Neuron. 2008;59(4):648–661. doi: 10.1016/j.neuron.2008.07.004. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Balleine BW, Dickinson A. The role of incentive learning in instrumental outcome revaluation by sensory-specific satiety. Learn. Behav. 1998;26(1):46–59. [Google Scholar]

- Balleine BW, Garner C, Gonzalez F, Dickinson A. Motivational control of heterogeneous instrumental chains. J. Exp. Psychol. Anim. Behav. Process. 1995;21:203–217. [Google Scholar]

- Balleine BW, Killcross S. Parallel incentive processing: an integrated view of amygdala function. Trends. Neurosci. 2006;29(5):272–279. doi: 10.1016/j.tins.2006.03.002. [DOI] [PubMed] [Google Scholar]

- Balleine BW, Killcross AS, Dickinson A. The effect of lesions of the basolateral amygdala on instrumental conditioning. J. Neurosci. 2003;23(2):666–675. doi: 10.1523/JNEUROSCI.23-02-00666.2003. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Baxter MG, Parker A, Lindner CC, Izquierdo AD, Murray EA. Control of response selection by reinforcer value requires interaction of amygdala and orbital prefrontal cortex. J. Neurosci. 2000;20(11):4311–4319. doi: 10.1523/JNEUROSCI.20-11-04311.2000. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Baxter MG, Murray EA. The amygdala and reward. Nat. Rev. Neurosci. 2002;3(7):563–573. doi: 10.1038/nrn875. [DOI] [PubMed] [Google Scholar]

- Belova MA, Paton JJ, Morrison SE, Salzman CD. Expectation modulates neural responses to pleasant and aversive stimuli in primate amygdala. Neuron. 2007;55(6):970–984. doi: 10.1016/j.neuron.2007.08.004. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Belova MA, Paton JJ, Salzman CD. Moment-to-moment tracking of state value in the amygdala. J. Neurosci. 2008;28(40):10023–10030. doi: 10.1523/JNEUROSCI.1400-08.2008. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Bermudez MA, Schultz W. Responses of amygdala neurons to positive reward-predicting stimuli depend on background reward (contingency) rather than stimulus-reward pairing (contiguity) J. Neurophysiol. 2010;103(3):1158–1170. doi: 10.1152/jn.00933.2009. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Bermudez MA, Göbel C, Schultz W. Sensitivity to temporal reward structure in amygdala neurons. Curr. Biol. 2012;22(19):1839–1844. doi: 10.1016/j.cub.2012.07.062.

- Blundell P, Hall G, Killcross S. Lesions of the basolateral amygdala disrupt selective aspects of reinforcer representation in rats. J. Neurosci. 2001;21(22):9018–9026. doi: 10.1523/JNEUROSCI.21-22-09018.2001.

- Breton YA, Conover K, Shizgal P. The effect of probability discounting on reward seeking: a three-dimensional perspective. Front. Behav. Neurosci. 2014;8:284. doi: 10.3389/fnbeh.2014.00284.

- Britt JP, Benaliouad F, McDevitt RA, Stuber GD, Wise RA, Bonci A. Synaptic and behavioral profile of multiple glutamatergic inputs to the nucleus accumbens. Neuron. 2012;76(4):790–803. doi: 10.1016/j.neuron.2012.09.040.

- Cador M, Robbins TW, Everitt BJ. Involvement of the amygdala in stimulus-reward associations: interaction with the ventral striatum. Neuroscience. 1989;30(1):77–86. doi: 10.1016/0306-4522(89)90354-0.

- Cardinal RN, Parkinson JA, Hall J, Everitt BJ. Emotion and motivation: the role of the amygdala, ventral striatum, and prefrontal cortex. Neurosci. Biobehav. Rev. 2002;26(3):321–352. doi: 10.1016/s0149-7634(02)00007-6.

- Chang SE, Wheeler DS, Holland PC. Effects of lesions of the amygdala central nucleus on autoshaped lever pressing. Brain Res. 2012a;1450:49–56. doi: 10.1016/j.brainres.2012.02.029.

- Chang SE, Wheeler DS, Holland PC. Roles of nucleus accumbens and basolateral amygdala in autoshaped lever pressing. Neurobiol. Learn. Mem. 2012b;97(4):441–451. doi: 10.1016/j.nlm.2012.03.008.

- Chu HY, Ito W, Li J, Morozov A. Target-specific suppression of GABA release from parvalbumin interneurons in the basolateral amygdala by dopamine. J. Neurosci. 2012;32(42):14815–14820. doi: 10.1523/JNEUROSCI.2997-12.2012.

- Churchwell JC, Morris AM, Heurtelou NM, Kesner RP. Interactions between the prefrontal cortex and amygdala during delay discounting and reversal. Behav. Neurosci. 2009;123(6):1185–1196. doi: 10.1037/a0017734.

- Colwill RM, Motzkin DK. Encoding of the unconditioned stimulus in Pavlovian conditioning. Learn. Behav. 1994;22(4):384–394.

- Colwill RM, Rescorla RA. Associations between the discriminative stimulus and the reinforcer in instrumental learning. J. Exp. Psychol. Anim. Behav. Process. 1988;14(2):155–164.

- Corbit LH, Balleine BW. Double dissociation of basolateral and central amygdala lesions on the general and outcome-specific forms of pavlovian-instrumental transfer. J. Neurosci. 2005;25(4):962–970. doi: 10.1523/JNEUROSCI.4507-04.2005.

- Corbit LH, Janak PH. Posterior dorsomedial striatum is critical for both selective instrumental and Pavlovian reward learning. Eur. J. Neurosci. 2010;31(7):1312–1321. doi: 10.1111/j.1460-9568.2010.07153.x.

- Corbit LH, Leung BK, Balleine BW. The role of the amygdala-striatal pathway in the acquisition and performance of goal-directed instrumental actions. J. Neurosci. 2013;33(45):17682–17690. doi: 10.1523/JNEUROSCI.3271-13.2013.

- Coutureau E, Marchand AR, Di Scala G. Goal-directed responding is sensitive to lesions to the prelimbic cortex or basolateral nucleus of the amygdala but not to their disconnection. Behav. Neurosci. 2009;123(2):443–448. doi: 10.1037/a0014818.

- Crespi LP. Quantitative variation of incentive and performance in the white rat. Am. J. Psychol. 1942;55(4):467–517.

- Davis M. The role of the amygdala in conditioned fear. In: Aggleton JP, editor. The Amygdala: Neurobiological Aspects of Emotion, Memory, and Mental Dysfunction. New York: Wiley-Liss; 1992.

- Dayan P, Daw ND. Decision theory, reinforcement learning, and the brain. Cogn. Affect. Behav. Neurosci. 2008;8(4):429–453. doi: 10.3758/CABN.8.4.429.

- Delamater AR. On the nature of CS and US representations in Pavlovian learning. Learn. Behav. 2012;40(1):1–23. doi: 10.3758/s13420-011-0036-4.

- Delamater AR, Oakeshott S. Learning about multiple attributes of reward in Pavlovian conditioning. Ann. N. Y. Acad. Sci. 2007;1104:1–20. doi: 10.1196/annals.1390.008.

- Dickinson A, Balleine BW. Motivational control over goal-directed action. Learn. Behav. 1994;22(1):1–18.

- Dickinson A, Balleine BW. The role of learning in the operation of motivational systems. In: Gallistel CR, editor. Learning, Motivation and Emotion, Volume 3 of Stevens’ Handbook of Experimental Psychology. 3rd ed. New York: John Wiley & Sons; 2002. pp. 497–533.

- Doll BB, Simon DA, Daw ND. The ubiquity of model-based reinforcement learning. Curr. Opin. Neurobiol. 2012;22(6):1075–1081. doi: 10.1016/j.conb.2012.08.003.

- Doya K, Samejima K, Katagiri K, Kawato M. Multiple model-based reinforcement learning. Neural Comput. 2002;14(6):1347–1369. doi: 10.1162/089976602753712972.

- Duvarci S, Pare D. Amygdala microcircuits controlling learned fear. Neuron. 2014;82(5):966–980. doi: 10.1016/j.neuron.2014.04.042.

- Dwyer DM, Killcross S. Lesions of the basolateral amygdala disrupt conditioning based on the retrieved representations of motivationally significant events. J. Neurosci. 2006;26(32):8305–8309. doi: 10.1523/JNEUROSCI.1647-06.2006.

- Ehrlich I, Humeau Y, Grenier F, Ciocchi S, Herry C, Lüthi A. Amygdala inhibitory circuits and the control of fear memory. Neuron. 2009;62(6):757–771. doi: 10.1016/j.neuron.2009.05.026.

- Esber GR, Holland PC. The basolateral amygdala is necessary for negative prediction errors to enhance cue salience, but not to produce conditioned inhibition. Eur. J. Neurosci. 2014;40(9):3328–3337. doi: 10.1111/ejn.12695.

- Everitt BJ, Cardinal RN, Hall J, Parkinson JA, Robbins TW. Differential involvement of amygdala subsystems in appetitive conditioning and drug addiction. In: Aggleton JP, editor. The amygdala: A functional analysis. New York: Oxford University Press; 2000. pp. 353–390.

- Everitt BJ, Robbins TW. Neural systems of reinforcement for drug addiction: from actions to habits to compulsion. Nat. Neurosci. 2005;8(11):1481–1489. doi: 10.1038/nn1579.

- Everitt BJ, Wolf ME. Psychomotor stimulant addiction: a neural systems perspective. J. Neurosci. 2002;22(9):3312–3320. doi: 10.1523/JNEUROSCI.22-09-03312.2002.

- Fanselow MS, Gale GD. The amygdala, fear, and memory. Ann. N. Y. Acad. Sci. 2003;985:125–134. doi: 10.1111/j.1749-6632.2003.tb07077.x.

- Fanselow MS, LeDoux JE. Why we think plasticity underlying Pavlovian fear conditioning occurs in the basolateral amygdala. Neuron. 1999;23(2):229–232. doi: 10.1016/s0896-6273(00)80775-8.

- Fanselow MS, Wassum KM. The origins and organization of vertebrate Pavlovian conditioning. In: Kandel ER, Dudai Y, Mayford MR, editors. Cold Spring Harb. Perspect. Biol. 2015. doi: 10.1101/cshperspect.a021717.

- Finnegan TF, Chen SR, Pan HL. Mu opioid receptor activation inhibits GABAergic inputs to basolateral amygdala neurons through Kv1.1/1.2 channels. J. Neurophysiol. 2006;95(4):2032–2041. doi: 10.1152/jn.01004.2005.

- Floresco SB, Blaha CD, Yang CR, Phillips AG. Dopamine D1 and NMDA receptors mediate potentiation of basolateral amygdala-evoked firing of nucleus accumbens neurons. J. Neurosci. 2001;21(16):6370–6376. doi: 10.1523/JNEUROSCI.21-16-06370.2001.

- Floresco SB, Ghods-Sharifi S. Amygdala-prefrontal cortical circuitry regulates effort-based decision making. Cereb. Cortex. 2007;17(2):251–260. doi: 10.1093/cercor/bhj143.

- Fontanini A, Grossman SE, Figueroa JA, Katz DB. Distinct subtypes of basolateral amygdala taste neurons reflect palatability and reward. J. Neurosci. 2009;29(8):2486–2495. doi: 10.1523/JNEUROSCI.3898-08.2009.

- Fuchs RA, Weber SM, Rice HJ, Neisewander JL. Effects of excitotoxic lesions of the basolateral amygdala on cocaine-seeking behavior and cocaine conditioned place preference in rats. Brain Res. 2002;929(1):15–25. doi: 10.1016/s0006-8993(01)03366-2.

- Gaffan D, Murray EA, Fabre-Thorpe M. Interaction of the amygdala with the frontal lobe in reward memory. Eur. J. Neurosci. 1993;5(7):968–975. doi: 10.1111/j.1460-9568.1993.tb00948.x.

- Galarce EM, McDannald MA, Holland PC. The basolateral amygdala mediates the effects of cues associated with meal interruption on feeding behavior. Brain Res. 2010;1350:112–122. doi: 10.1016/j.brainres.2010.02.042.

- Ghashghaei HT, Barbas H. Pathways for emotion: interactions of prefrontal and anterior temporal pathways in the amygdala of the rhesus monkey. Neuroscience. 2002;115(4):1261–1279. doi: 10.1016/s0306-4522(02)00446-3.

- Ghods-Sharifi S, St Onge JR, Floresco SB. Fundamental contribution by the basolateral amygdala to different forms of decision making. J. Neurosci. 2009;29(16):5251–5259. doi: 10.1523/JNEUROSCI.0315-09.2009.

- Grabenhorst F, Hernádi I, Schultz W. Prediction of economic choice by primate amygdala neurons. Proc. Natl. Acad. Sci. U. S. A. 2012;109(46):18950–18955. doi: 10.1073/pnas.1212706109.

- Hatfield T, Han JS, Conley M, Gallagher M, Holland P. Neurotoxic lesions of basolateral, but not central, amygdala interfere with Pavlovian second-order conditioning and reinforcer devaluation effects. J. Neurosci. 1996;16(16):5256–5265. doi: 10.1523/JNEUROSCI.16-16-05256.1996.

- Henry JD, Green MJ, de Lucia A, Restuccia C, McDonald S, O’Donnell M. Emotion dysregulation in schizophrenia: reduced amplification of emotional expression is associated with emotional blunting. Schizophr. Res. 2007;95(1–3):197–204. doi: 10.1016/j.schres.2007.06.002.

- Hernádi I, Grabenhorst F, Schultz W. Planning activity for internally generated reward goals in monkey amygdala neurons. Nat. Neurosci. 2015;18(3):461–469. doi: 10.1038/nn.3925.

- Hiroi N, White NM. The lateral nucleus of the amygdala mediates expression of the amphetamine-produced conditioned place preference. J. Neurosci. 1991;11(7):2107–2116. doi: 10.1523/JNEUROSCI.11-07-02107.1991.

- Hogarth L, Balleine BW, Corbit LH, Killcross S. Associative learning mechanisms underpinning the transition from recreational drug use to addiction. Ann. N. Y. Acad. Sci. 2013;1282:12–24. doi: 10.1111/j.1749-6632.2012.06768.x.

- Holmes NM, Marchand AR, Coutureau E. Pavlovian to instrumental transfer: a neurobehavioural perspective. Neurosci. Biobehav. Rev. 2010;34(8):1277–1295. doi: 10.1016/j.neubiorev.2010.03.007.

- Hosking JG, Cocker PJ, Winstanley CA. Dissociable contributions of anterior cingulate cortex and basolateral amygdala on a rodent cost/benefit decision-making task of cognitive effort. Neuropsychopharmacology. 2014;39(7):1558–1567. doi: 10.1038/npp.2014.27.

- Hsu M, Bhatt M, Adolphs R, Tranel D, Camerer CF. Neural systems responding to degrees of uncertainty in human decision-making. Science. 2005;310(5754):1680–1683. doi: 10.1126/science.1115327.

- Hutcheson DM, Everitt BJ, Robbins TW, Dickinson A. The role of withdrawal in heroin addiction: enhances reward or promotes avoidance? Nat. Neurosci. 2001;4(9):943–947. doi: 10.1038/nn0901-943.

- Izquierdo A, Murray EA. Opposing effects of amygdala and orbital prefrontal cortex lesions on the extinction of instrumental responding in macaque monkeys. Eur. J. Neurosci. 2005;22(9):2341–2346. doi: 10.1111/j.1460-9568.2005.04434.x.

- Izquierdo A, Murray EA. Selective bilateral amygdala lesions in rhesus monkeys fail to disrupt object reversal learning. J. Neurosci. 2007;27(5):1054–1062. doi: 10.1523/JNEUROSCI.3616-06.2007.

- Izquierdo A, Darling C, Manos N, Pozos H, Kim C, Ostrander S, Cazares V, Stepp H, Rudebeck PH. Basolateral amygdala lesions facilitate reward choices after negative feedback in rats. J. Neurosci. 2013;33(9):4105–4109. doi: 10.1523/JNEUROSCI.4942-12.2013.

- Jackson ME, Moghaddam B. Amygdala regulation of nucleus accumbens dopamine output is governed by the prefrontal cortex. J. Neurosci. 2001;21(2):676–681. doi: 10.1523/JNEUROSCI.21-02-00676.2001.

- Janak PH, Tye KM. From circuits to behaviour in the amygdala. Nature. 2015;517(7534):284–292. doi: 10.1038/nature14188.

- Jiang X, Xing G, Yang C, Verma A, Zhang L, Li H. Stress impairs 5-HT2A receptor-mediated serotonergic facilitation of GABA release in juvenile rat basolateral amygdala. Neuropsychopharmacology. 2009;34(2):410–423. doi: 10.1038/npp.2008.71.

- John YJ, Bullock D, Zikopoulos B, Barbas H. Anatomy and computational modeling of networks underlying cognitive-emotional interaction. Front. Hum. Neurosci. 2013;7:101. doi: 10.3389/fnhum.2013.00101.

- Johnson AW, Gallagher M, Holland PC. The basolateral amygdala is critical to the expression of pavlovian and instrumental outcome-specific reinforcer devaluation effects. J. Neurosci. 2009;29(3):696–704. doi: 10.1523/JNEUROSCI.3758-08.2009.

- Jones B, Mishkin M. Limbic lesions and the problem of stimulus-reinforcement associations. Exp. Neurol. 1972;36(2):362–377. doi: 10.1016/0014-4886(72)90030-1.

- Jones JL, Day JJ, Aragona BJ, Wheeler RA, Wightman RM, Carelli RM. Basolateral amygdala modulates terminal dopamine release in the nucleus accumbens and conditioned responding. Biol. Psychiatry. 2010;67(8):737–744. doi: 10.1016/j.biopsych.2009.11.006.