Abstract

Objectives

Cochlear implantation does not automatically result in robust spoken language understanding for postlingually deafened adults. Enormous outcome variability exists, related to the complexity of understanding spoken language through cochlear implants (CIs), which deliver degraded speech representations. This investigation examined variability in word recognition as explained by “perceptual attention” and “auditory sensitivity” to acoustic cues underlying speech perception.

Design

Thirty postlingually deafened adults with CIs and 20 age-matched controls with normal hearing (NH) were tested. Participants underwent assessment of word recognition in quiet, and “perceptual attention” (cue-weighting strategies) based on labeling tasks for two phonemic contrasts: (1) “cop”-“cob”, based on a duration cue (easily accessible through CIs) or a dynamic spectral cue (less accessible through CIs), and (2) “sa”-“sha”, based on static or dynamic spectral cues (both potentially poorly accessible through CIs). Participants were also assessed for “auditory sensitivity” to the speech cues underlying those labeling decisions.

Results

Word recognition varied widely among CI users (20 to 96%), but was generally poorer than for NH participants. Implant users and NH controls showed similar “perceptual attention” and “auditory sensitivity” to the duration cue, while CI users showed poorer attention and sensitivity to all spectral cues. Both attention and sensitivity to spectral cues predicted variability in word recognition.

Conclusions

For CI users, both “perceptual attention” and “auditory sensitivity” are important in word recognition. Efforts should be made to better represent spectral cues through implants, while also facilitating attention to these cues through auditory training.

Keywords: Cochlear implants, Sensorineural hearing loss, Speech perception

Introduction

Cochlear implants (CIs) have dramatically improved the lives of adults who acquire sensorineural hearing loss. On the whole, postlingually deafened adults with CIs are able to recognize about 60% of spoken words and 70% of sentences presented to them in quiet in standard clinical tests (Firszt et al., 2004; Gifford et al., 2008; Holden et al., 2013). However, enormous outcome variability exists, and this variability remains largely unexplained. The primary factors that have been identified as predictors of speech perception outcomes relate to the remaining integrity of the auditory system: amount of residual hearing before implantation, prior use of hearing aids, duration of hearing loss, age at implantation, and etiology of hearing loss (Eggermont & Ponton, 2003; Kelly et al., 2005; Lazard et al., 2012). However, these factors serve only as indirect predictors because it is unclear how their effects on speech perception are imparted. And although each factor shows a significant relationship to speech perception outcomes, the sum of these factors still accounts for less than 25% of the variance in those outcomes. Moreover, these factors do not represent viable targets of intervention when seeing a patient who is considering cochlear implantation or has a poor outcome.

The variance in speech perception exhibited by CI users is most often attributed to variability among patients in the fidelity of speech signal representation through CI processing and neural stimulation. Current CI speech processors operate by recovering amplitude structure (the temporal envelope – the slowly varying modulation of the signal amplitude over time) in up to 22 independent frequency channels. However, the effective number of available channels is typically limited to four to seven, mostly due to overlapping regions of neural stimulation by adjacent implant electrodes (Friesen et al., 2001). Thus, the spectral structure (the detailed frequency-specific information) delivered is significantly degraded (Wilson & Dorman, 2008). Therefore, listeners with CIs lack sensitivity to many spectral cues that underlie speech perception. A reasonable assumption, then, would be that speech outcomes vary proportionately with extent of spectral degradation and that for individual patients, improving signal quality would be all that is required for improving outcomes.

Clinicians should recognize, however, that understanding speech is more complicated than simply having good auditory sensitivity (Liberman et al., 1967; Remez et al., 1994). Language processing is strongly dependent on the recognition of phonemic structure, the mental organization of the fundamental units of language. In particular, the lexicon (an individual’s store of words) is organized according to phonemic structure. Thus, effective recognition of spoken words depends on the ability to recover phonemic structure from the signal. However, phonemes are not distinctly represented within the acoustic speech signal, ready to be plucked from the signal and lined up like beads on a string. In fact, there is “a marked lack of correspondence between sound and perceived phoneme” (Liberman et al., 1967). Instead, listeners must know which components, or “acoustic cues,” of the speech signal should be attended to for the recovery of phonemic structure (Repp, 1982). These cues are generated as acoustic consequences of the articulatory gestures (movements of the larynx, pharynx, palate, and tongue) used to produce speech, and can consist of spectral (frequency-related), temporal (duration-related), and amplitude (intensity-related) structure. Spectral cues can be further broken down into structure described as static (stable across time) or dynamic (varying over time). Because each articulatory gesture can affect structure in each of the spectral, temporal, and amplitude domains, cues to each phonemic distinction co-vary across these domains. Consequently, it would seem that all cues affiliated with a distinction should be equally effective in signaling that distinction. If that were so, the perceptual consequences of acquired hearing loss would be minimal: a temporal or amplitude cue preserved in the face of hearing loss and subsequent implantation could substitute for a diminished spectral cue. The current investigation examined the extent to which that may be true.

Prior to conducting this investigation with CI users, clues to its outcome could be gleaned from work with other populations. It is now known from second-language learning studies that not all phonetic cues are equal: for a given language, some cues are more informative than others. The amount of perceptual attention, or weight, given to each cue is similar across adult speakers of a given language, most likely because those strategies lead to the most accurate and efficient speech recognition in that language (Nittrouer, 2005). Conversely, cues are not attended to if they are not informative or do not exist for a given language. A clear example is the /r/ - /l/ contrast (e.g., “rock” versus “lock”), based primarily on differences in onset frequency of the third formant (the third resonance peak in the speech signal), which is readily recognized by primary English-speaking listeners but is largely ignored by primary Japanese-speaking listeners. This phonemic contrast does not exist in the Japanese language, so native Japanese speakers have difficulty attending to the third formant cue when learning the /r/ - /l/ contrast in English as a second language. Importantly, however, this lack of perceptual attention by Japanese listeners is not explained by a lack of auditory sensitivity to the cue, as Japanese listeners are just as able as English listeners to discriminate this acoustic difference (the isolated third formants from /r/ versus /l/) in a nonspeech discrimination task (Miyawaki et al., 1975).

Postlingually deafened adults with CIs face a different but related dilemma. Most likely these individuals have developed typical strategies of perceptual attention, or “cue-weighting strategies,” for their primary language before losing their hearing. Upon implant activation, they must learn to make sense of the degraded representations of speech. It is conceivable that the availability of cues from their CIs (their auditory sensitivity) may constrain the choice of cues they can weight, so these individuals may shift their cue-weighting strategies (especially away from spectral cues) to those cues that are better represented through their CIs, namely duration and amplitude cues. Importantly, the cues that are most accessible to CI users may not be those that are most informative for efficient perception of speech in their native language.

Some evidence to support a shift in perceptual attention for early CI users was found in a study by Hedrick and Carney (1997), examining the labeling decisions for synthetic fricative-vowel syllables by four adult postlingually deafened CI users. The CI users in that study were found to weight an amplitude cue more heavily than NH listeners did, while placing reduced weight on spectral cues. A more recent study assessed the use of acoustic cues for a tense/lax vowel contrast, varying in vowel-inherent spectral change and vowel duration, as well as a word-final fricative voicing contrast (with cues consisting of F1 transition, vowel duration, consonant duration, and consonant voicing) (Winn et al., 2012). In that study, CI users showed decreased use of spectral cues and greater use of durational cues than NH listeners did. In neither of these studies, however, was weighting of cues examined for a relationship with speech recognition performance.

Three other studies have indirectly related perceptual attention to success in speech recognition. Kirk et al. (1992) found that CI users’ abilities to perceptually attend to formant transitions during vowel identification predicted word recognition. Donaldson et al. (2013) examined vowel identification by adult CI users, using /dVd/ syllables that were modified to retain quasi-static spectral cues (from an 80-ms segment of the vowel center) or dynamic spectral cues (from two 20-ms segments of the vowel edges). CI users were found to have poorer vowel recognition when using either type of spectral cue alone, versus in the presence of duration cues. In a study investigating recognition of speech prosody, Peng et al. (2012) examined the use of F0 contour, intensity, and duration cues in question-statement identification by CI and NH listeners. Accuracy in question-statement identification was poorer for CI than NH listeners, but CI listeners’ weighting of F0 contour predicted accuracy in question-statement identification. However, in none of these cases was it clear whether the effects on speech (or prosody) recognition were due to perceptual attention strategies or to auditory sensitivity to the relevant cues. Notably, the authors of the Peng et al. (2012) study acknowledged that their results did not clarify whether “(a) those CI listeners who use alternative cues are also more sensitive to those cues, or (b) all CI listeners are sufficiently sensitive to these alternative cues, but not all of them can adjust their listening strategies to attend to them” (p. 78).

To more directly address the relationships of auditory sensitivity, perceptual attention, and speech recognition performance, a recent study by Moberly et al. (2014) examined whether two distinct cues were equal in their capacity to elicit accurate speech recognition by postlingually deafened adult CI users. In that study, listeners were assessed for perceptual attention while labeling synthetic versions of the “ba”-“wa” phonemic contrast, as well as performance of word recognition in quiet. Listeners’ auditory sensitivity to spectral change was also evaluated in order to gauge their sensitivity to spectral structure. For the ba-wa contrast, normal hearing (NH) adults weight a dynamic spectral cue (the rate of formant transitions – relatively slow changes in the peak resonant frequencies) more heavily than an amplitude cue (the onset rise time – the time it takes for the amplitude of the signal to reach its maximum). It was predicted that if both cues were equal in their ability to elicit accurate speech recognition (i.e., the “phonemic informativeness” of the cues), CI users would shift their attention to the amplitude cues that were well-represented by their implants, without loss of word recognition accuracy. However, this was not observed. The adults with CIs showed variable attentional (i.e., cue-weighting) strategies, and those individuals who continued to attend to the spectral cue strongly, like NH adults, showed the best word recognition. On the other hand, sensitivity to the spectral cue in that study – as measured by a discrimination task – did not independently explain variance in word recognition. Of course, that study only assessed a single phonemic contrast, limiting the generalizability of the findings.

The current study was designed to further examine, using a different set of stimuli, the contributions of perceptual attention and auditory sensitivity to word recognition for postlingually deafened adults with contemporary CIs. Three hypotheses were tested. (1) Perceptual attention to the same acoustic cues that underlie the perception of phonemic contrasts by listeners with NH would predict word recognition for a group of postlingually deafened adults with CIs. To test this hypothesis, adults with CIs and adults with NH performed perceptual labeling tasks while listening to two sets of phonemic contrasts, along with a task of word recognition under quiet conditions. Support of this hypothesis would provide further evidence that perceptual attention to acoustic cues plays an important role in word recognition. (2) Auditory sensitivity for nonspeech correlates of the acoustic cues that underlie those phonemic contrasts would not independently predict word recognition abilities for the CI users. To test this hypothesis, auditory sensitivity was assessed using discrimination tasks and results were again analyzed as predictors of word recognition. Evidence supporting this hypothesis would further confirm that good auditory sensitivity alone is not sufficient for accurate word recognition. (3) Auditory sensitivity for nonspeech correlates would not predict perceptual attention to those related cues during the labeling tasks. Evidence supporting this hypothesis would suggest that perceptual attention is not driven solely by which cues are most accessible through implants. If the three hypotheses were supported, findings would suggest that efforts focused on improving auditory sensitivity to cues through CIs may be neither sufficient nor necessary to improve speech understanding. Rather, adjustment of attentional strategies through auditory training might assist in optimizing outcomes.

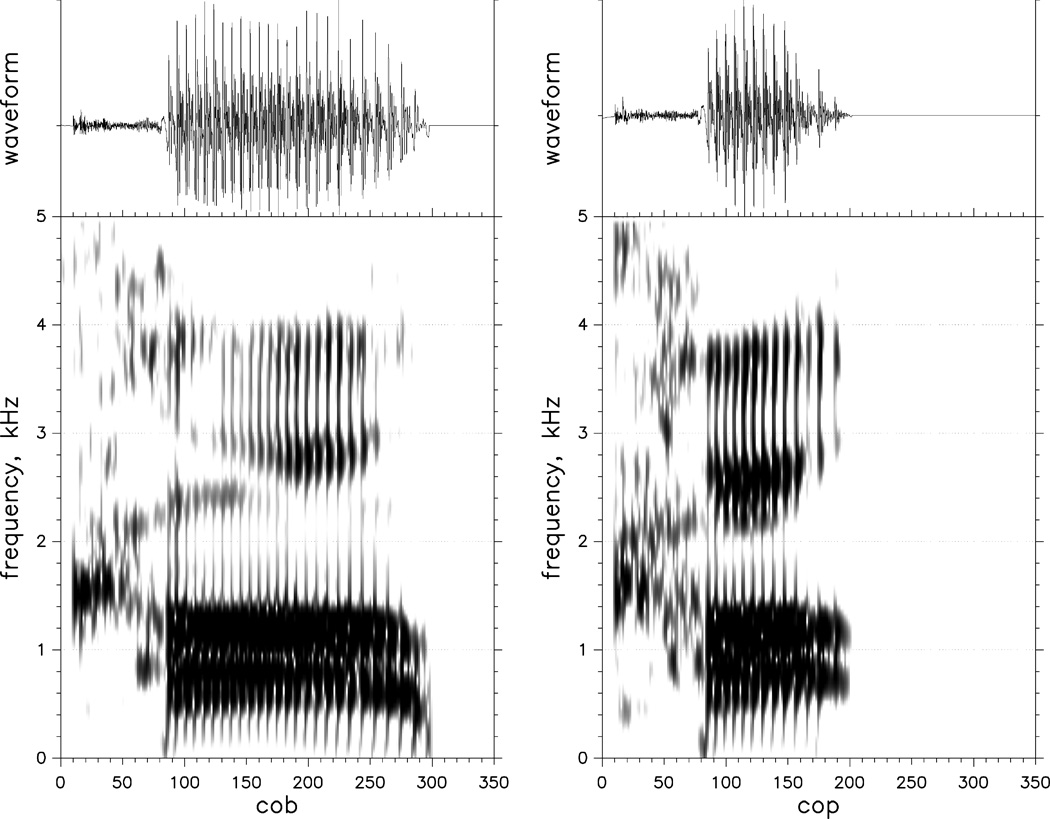

To address the first hypothesis, two sets of stimuli were chosen to examine perceptual attention during a labeling task: “cop” versus “cob” and “sa” versus “sha.” These sets were selected because each labeling decision was based on a different set of two cues. The cop-cob decision in this experiment depended on a duration cue (vocalic length) and a dynamic spectral cue (syllable-final formant transitions). The duration cue should be readily accessible through a CI, whereas the dynamic spectral cue is likely more poorly represented through a CI (Figure 1). In the Moberly et al. (2014) study, the ba-wa contrast was chosen to test the weighting of an amplitude cue versus a dynamic spectral cue. Whereas with the ba-wa contrast adults with NH typically weight the cue that should be less accessible through a CI (the spectral cue), in this case, adults with NH typically weight the cue that should be more accessible to CI users (the duration cue) (Nittrouer, 2004; Wardrip-Fruin & Peach, 1984). Therefore, it was hypothesized that CI users would likewise weight the duration cue heavily, and that the amount of perceptual attention paid to the duration cue would explain variability in word recognition, since paying attention to this cue appears to be important for adults with NH.

Figure 1.

Spectrograms of natural “cob” and “cop” stimuli produced by a male English speaker (reprinted with permission from Nittrouer et al., 2014).

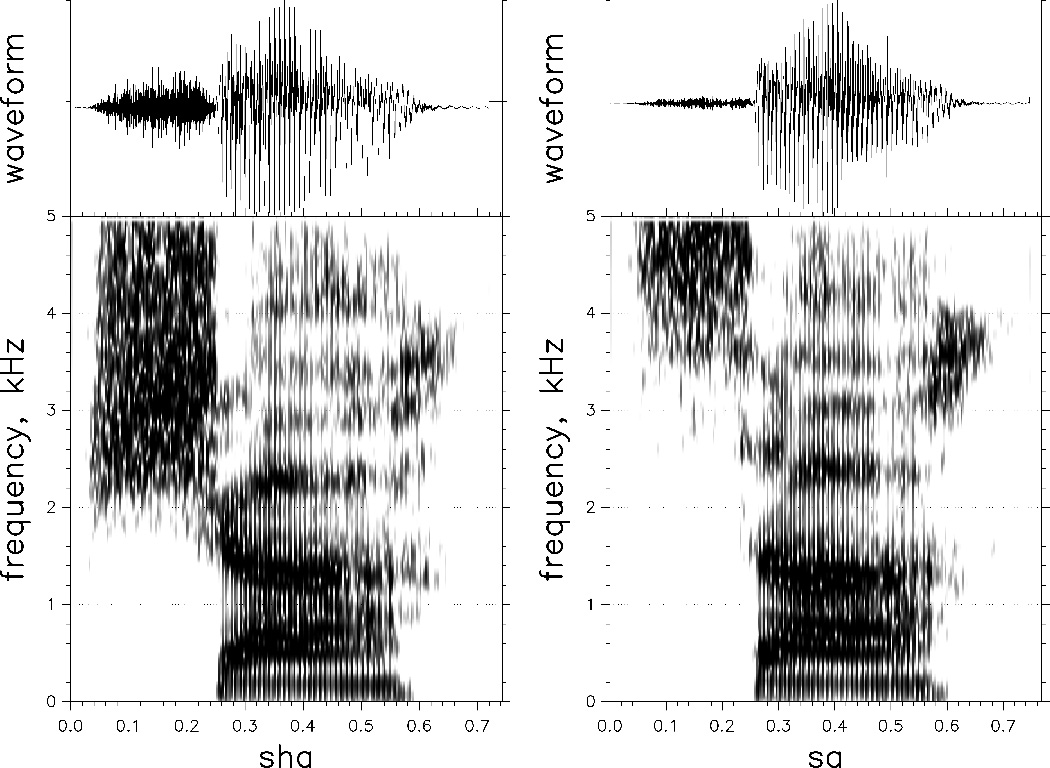

The sa-sha decision depended on a static spectral cue (fricative-noise spectrum) and a dynamic spectral cue (syllable-initial formant transitions) (Figure 2). Adults with NH heavily attend to the fricative noise in the sa-sha contrast (Hedrick & Ohde, 1993; Perkell et al., 1979; Stevens, 1985). In this case, it was predicted that CI users would show poorer attention to the dynamic spectral cue, but it was harder to predict attention to the static spectral cue. Although this cue does involve spectral structure, it could be better represented through a CI processor than relatively low-frequency spectral structure that changes rapidly (i.e., dynamic formant transitions). Talkers tend to hold the vocal-tract configuration that creates fricative noise for a relatively long time (hundreds of milliseconds), and the noise has a high-frequency, but broad-band structure. If any spectral structure is accessible to CI users, this kind should be. In any event, it might be that perceptual attention to this cue would predict better word recognition for individuals with CIs, because it is weighted heavily in fricative labeling by adults with NH. Use of this contrast should reveal how CI users fare in the face of a labeling decision that requires attention to two types of cues that are poorly accessible through their CIs.

Figure 2.

Spectrograms for natural “sha” and “sa” stimuli produced by a male English speaker.

In order to address the second and third hypotheses, auditory sensitivity to three acoustic cues relevant to the labeling tasks was assessed using discrimination tasks. Because listeners place stimuli heard as speech into phonemic categories, it was important that these stimuli not be recognizable as speech. Thus, nonspeech analogs of the relevant cues used in labeling were developed, using sine wave signals.

Materials and Methods

Participants

Thirty adults with CIs, between 52 and 88 years of age, were recruited from a pool of departmental patients and enrolled. Participants had varying etiologies of hearing loss and ages of implantation, though most experienced a progressive decline in hearing during adulthood. All participants met criteria for implantation no earlier than age 45 years, based on the appropriate sentence recognition criteria used at the time of their candidacy evaluations. All used Cochlear devices, except one who wore an Advanced Bionics device. All had CI-aided thresholds better than 35 dB hearing level for the frequencies 0.25 to 4 kHz, measured by audiologists within the 12 months prior to testing. All had at least 9 months of experience using their implants. Six participants had bilateral implants, 14 used a right implant, and 10 used a left implant. A hearing aid was worn on the ear contralateral to the CI by 14 participants. All participants used an Advanced Combined Encoder (ACE) speech processing strategy except for the Advanced Bionics user, who used a HiRes Fidelity 120 processing strategy. Participants wore devices using their everyday mode during testing and were instructed not to change device settings throughout the experiment. Participants with CIs underwent unaided audiometry prior to testing to assess their residual hearing in each ear.

Twenty NH participants were tested as a control group. They were matched closely in age and gender to the first 20 listeners enrolled with CIs. The control participants were evaluated for NH, measured at the time of testing, defined as four-tone (0.5, 1, 2, and 4 kHz) pure tone average (PTA) thresholds of better than 25 dB hearing level in the better hearing ear. Because this criterion might be difficult for some older participants to meet, it was relaxed to 30 dB hearing level PTA for those older than 60 years; only one of these participants had a better-ear PTA worse than 25 dB hearing level. Control participants were identified from a pool of patients with non-otologic complaints in the Department of Otolaryngology, and were also recruited using ResearchMatch, a national research recruitment database.

All participants underwent a screening test of cognitive function to determine eligibility for data inclusion in analyses. The Mini-Mental State Examination (MMSE) is a validated screening assessment of memory, attention, and ability to follow instructions, used here to rule out cognitive impairment (Folstein et al., 1975). Raw scores were converted to T scores, based on age and education, with a T score less than 29 being suggestive of cognitive impairment (Folstein et al., 1975). A T score of 50 represents the mean, with a difference of 10 from the mean indicating one standard deviation. Thus, a score of less than 29 is more than 2 standard deviations below the mean; participants with T scores less than 29 were excluded from data analyses.
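The exclusion arithmetic above can be sketched in a few lines. This is an illustrative sketch only: the function names are hypothetical, and the mean of 50, standard deviation of 10, and cutoff of 29 are taken directly from the text.

```python
def t_score_to_z(t_score: float, mean: float = 50.0, sd: float = 10.0) -> float:
    """Express a T score in standard deviations from the mean (T scale: mean 50, SD 10)."""
    return (t_score - mean) / sd


def is_excluded(t_score: float, cutoff: float = 29.0) -> bool:
    """Exclusion rule stated in the text: T scores less than 29 are excluded."""
    return t_score < cutoff


# The cutoff itself sits 2.1 SDs below the mean, so any score under it
# is more than 2 SDs below the mean.
print(t_score_to_z(29))
```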

All participants were adults whose first language was American English and who had graduated from high school, except for one NH participant who completed only 11th grade. Socioeconomic status (SES) was assessed because it may predict language abilities. The SES was quantified using a metric defined by Nittrouer and Burton (2005), which indexes occupational status and educational level on two eight-point scales, each ranging from 1 to 8. A score of 8 represented the highest occupational status or educational level that could be achieved. The two scores were then multiplied, resulting in SES scores between 1 and 64. Table 1 shows the demographic, audiologic, and SES data for the 28 CI participants whose data were included in analyses.
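As a minimal sketch of the SES computation described above, assuming only what the text states (two scales from 1 to 8, multiplied together), the score could be computed as follows. The function name and the range check are illustrative and are not part of the Nittrouer and Burton (2005) metric itself.

```python
def ses_score(occupation: int, education: int) -> int:
    """Multiply two 1-8 scale ratings to obtain an SES score between 1 and 64."""
    for name, value in (("occupation", occupation), ("education", education)):
        if not 1 <= value <= 8:
            raise ValueError(f"{name} rating must be between 1 and 8, got {value}")
    return occupation * education


# Example with hypothetical ratings: occupation 6, education 4.
print(ses_score(6, 4))  # 24
```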

Table 1.

Cochlear implant participant demographics. SES: Socioeconomic status; PTA: Unaided four-tone pure tone average at 0.5, 1, 2, and 4 kHz

| Participant | Gender | Age (years) | Implantation Age (years) | SES | Side of Implant | Hearing Aid | Etiology of Hearing Loss | Better-Ear PTA (dB HL) |
|---|---|---|---|---|---|---|---|---|
| 1 | F | 62 | 54 | 24 | B | N | Genetic | 105 |
| 2 | F | 64 | 62 | 35 | R | Y | Genetic, progressive as adult | 75 |
| 3 | M | 64 | 61 | 18 | L | N | Noise, Meniere's | 80 |
| 4 | F | 64 | 58 | 15 | R | Y | Genetic, progressive as adult | 105 |
| 5 | F | 52 | 47 | 12 | L | Y | Progressive as adult, sudden | 105 |
| 6 | M | 67 | 65 | 24 | R | N | Genetic, progressive as adult | 84 |
| 7 | M | 56 | 52 | 30 | B | N | Rubella, progressive | 105 |
| 8 | F | 54 | 48 | 16 | R | Y | Genetic, progressive | 105 |
| 9 | M | 77 | 67 | 49 | L | N | Genetic, progressive | 93 |
| 10 | M | 77 | 76 | 48 | R | Y | Progressive as adult, noise, sudden | 71 |
| 11 | M | 88 | 83 | 30 | R | Y | Progressive as adult | 88 |
| 12 | F | 66 | 56 | 12 | B | N | Otosclerosis, progressive as adult | 105 |
| 13 | M | 52 | 50 | 30 | B | N | Progressive as adult | 105 |
| 16 | F | 61 | 59 | 35 | R | N | Progressive as adult | 105 |
| 18 | F | 75 | 63 | 9 | R | N | Genetic, progressive as adult | 95 |
| 19 | F | 73 | 67 | 36 | L | N | Genetic, autoimmune | 105 |
| 20 | M | 76 | 74 | 25 | L | N | Ear infections | 105 |
| 21 | M | 80 | 58 | 30 | L | Y | Meniere's | 69 |
| 22 | F | 80 | 78 | 12 | R | Y | Progressive as adult | 65 |
| 23 | F | 79 | 73 | 30 | R | N | Progressive as adult | 86 |
| 24 | F | 58 | 53 | 35 | B | N | Progressive as adult | 105 |
| 25 | M | 57 | 56 | 30 | R | Y | Autoimmune, sudden | 76 |
| 26 | M | 53 | 50 | 12 | B | N | Noise, progressive as adult | 98 |
| 29 | F | 59 | 58 | 16 | R | N | Sudden hearing loss | 80 |
| 30 | M | 80 | 79 | 36 | R | Y | Progressive as adult | 66 |
| 31 | F | 66 | 62 | 16 | L | Y | Progressive as child and adult | 84 |
| 32 | M | 68 | 67 | 9 | L | Y | Progressive as adult | 73 |
| 34 | M | 59 | 54 | 35 | L | Y | Meniere's, noise | 81 |

Equipment and Materials

All stimuli were presented via computer in a soundproof booth or acoustically insulated testing room. Labeling and discrimination stimuli were presented via computer over a loudspeaker positioned one meter from the participant at zero degrees azimuth. Scoring for the auditory discrimination and speech labeling was done at the time of testing by the experimenter by directly entering responses into the computer. Along with the screening measures (audiometry and the MMSE cognitive screen), and the measures of perceptual attention, auditory sensitivity, and word recognition, additional measures of word reading ability (using the Wide Range Achievement Test), expressive vocabulary (using the Expressive One-Word Picture Vocabulary Test, 4th edition), and receptive vocabulary (using the Receptive One-Word Picture Vocabulary Test, 4th edition) were collected. These additional measures served as metrics of overall language proficiency, and were collected as potential predictors of word recognition. For these tasks, responses were video- and audio-recorded; participants wore FM transmitters in specially designed vests that transmitted speech signals to the receivers, which provided direct input to the camera. Responses for these tasks were scored at a later time. In this way, two staff members could independently score responses in order to check reliability. All participants were tested wearing their usual auditory prostheses (single CI, two CIs, CI plus hearing aid, or none for the controls), which were checked at the start of testing.

Stimuli

Two sets of stimuli (cop versus cob and sa versus sha) were selected for use in the speech labeling tasks, along with three sets of stimuli that were relevant nonspeech analogs of cues in the labeling tasks for use in the auditory discrimination tasks. All stimuli were presented at a 20-kHz sampling rate with 16-bit digitization.

Cop-cob stimuli for labeling

Natural tokens of cop and cob spoken by a male talker were used, and were derived from Nittrouer (2004). Three tokens of each word were used so that any natural, but irrelevant variability in acoustic structure (e.g., intonation contour) could not cue category labeling. In each token, the release burst and any voicing during closure for the final stop (/p/ or /b/) were deleted, thus leaving only two acoustic cues for categorization: vocalic length and offset frequency of the first formant (F1). Vocalic length was manipulated either by reiterating one pitch period at a time from the most stable spectral region of the syllable (to lengthen syllables) or by deleting pitch periods from the most stable spectral region of the syllable (to shorten syllables). Seven stimuli varying in vocalic duration from roughly 82 ms to 265 ms were created from each token. These endpoint values were selected because they matched the mean lengths of the natural tokens. This cue (vocalic duration) was the continuously varied cue in this experiment. Going into this experiment, it was presumed to be represented well by CI processing. Final formant transitions were not modified. For the tokens used, the first formant (F1) at offset had a mean frequency of 625 Hz for cob and 801 Hz for cop. It was presumed that this cue might be difficult for CI users to recognize. In all there were 42 cop-cob stimuli: 2 formant transitions × 7 lengths × 3 tokens of each.
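The factorial structure of the cop-cob stimulus set can be enumerated as a quick check on the count. This sketch only labels the conditions; the names are illustrative, and the seven duration steps are indexed 1 to 7 rather than given in milliseconds because the text reports only the approximate endpoints.

```python
from itertools import product

# Binary cue: which word the (unmodified) final formant transitions came from.
FORMANT_TRANSITIONS = ("cob", "cop")
# Continuous cue: seven vocalic-duration steps (index only; exact values not given).
DURATION_STEPS = range(1, 8)
# Three natural tokens of each word, to dilute irrelevant acoustic variability.
TOKENS = range(1, 4)

stimuli = list(product(FORMANT_TRANSITIONS, DURATION_STEPS, TOKENS))

print(len(stimuli))  # 42
print(stimuli[0])    # ('cob', 1, 1)
```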

Sa-sha stimuli for labeling

The other set of labeling stimuli involved a fricative-place contrast (sa-sha), originally created by Nittrouer and Miller (1997). Fourteen hybrid stimuli made up of seven natural fricative noises and two synthetic vocalic portions were used. The seven noises were created by combining natural /s/ and /ʃ/ noise. The noises were 100-ms sections, excised from natural /s/ and /ʃ/ tokens produced by a male talker. These sections of fricative noise were combined using seven /ʃ/-to-/s/ amplitude ratios, creating a continuum of noises from one that was most strongly /ʃ/-like to one that was most strongly /s/-like. This method of stimulus creation preserved details of fricative spectra, while varying the amplitude of noise in the F3 region of the following vocalic portion, a primary cue to sibilant place (Strevens, 1960; Perkell et al., 1979; Stevens, 1985; Hedrick & Ohde, 1993; Henry & Turner, 2003). This cue was the continuously varied one in this set of stimuli. Although spectral in nature, it was thought that it might be well-preserved in CI processing, because it is both relatively steady-state and high frequency. For the vowels in these stimuli, two synthetic sections were generated using Sensyn, a version of the Klatt synthesizer. These vocalic portions were appropriate for /ɑ/ vowels, following the production of either /s/ or /ʃ/; that is, onset F2 and F3 varied appropriately. This cue of onset formant frequencies was the binary cue in this set of stimuli. This cue would presumably be difficult to recognize with a CI.

Duration stimuli for discrimination

Eleven non-speech stimuli were used in the discrimination task, varying in length from 110 ms to 310 ms in 20-ms steps. The stimuli consisted of three steady-state sinusoids of equal amplitude of the frequencies 650 Hz, 1130 Hz, and 2600 Hz, representing typical steady-state formant values in “cop” or “cob.” These stimuli will be referred to as dur stimuli.
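A minimal sketch of how such dur stimuli might be generated follows, using the 20-kHz sampling rate stated earlier and the three sinusoid frequencies given above. The function names are hypothetical, and details the text does not report (onset and offset ramps, starting phase, output level) are assumptions: no ramps are applied, all sinusoids start at zero phase, and the sum is scaled to stay within ±1.

```python
import math

SAMPLE_RATE_HZ = 20_000                   # sampling rate stated in the text
FREQUENCIES_HZ = (650.0, 1130.0, 2600.0)  # steady-state sinusoids, equal amplitude


def dur_lengths_ms(start: int = 110, stop: int = 310, step: int = 20) -> list:
    """Stimulus lengths in ms along the continuum (endpoints inclusive)."""
    return list(range(start, stop + 1, step))


def synthesize_dur(length_ms: int) -> list:
    """Sum three equal-amplitude sinusoids; scaling by 1/3 keeps samples in [-1, 1]."""
    n_samples = SAMPLE_RATE_HZ * length_ms // 1000
    scale = 1.0 / len(FREQUENCIES_HZ)
    return [
        scale * sum(math.sin(2 * math.pi * f * i / SAMPLE_RATE_HZ) for f in FREQUENCIES_HZ)
        for i in range(n_samples)
    ]


lengths = dur_lengths_ms()
print(len(lengths), lengths[0], lengths[-1])  # 11 110 310
print(len(synthesize_dur(lengths[0])))        # 2200
```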

Dynamic spectral stimuli for discrimination

Two sets of thirteen dynamic spectral glide stimuli were used. These stimuli were all 150 ms long, consisting of the same steady-state sinusoids as the dur stimuli. For the first set of 13 stimuli, the last 50 ms of 12 of these stimuli had falling glides; these will be termed the glide-fall stimuli. These stimuli replicated the falling formants of the cop-cob stimuli. In each step on the continuum, the offset frequencies of the sine waves changed by 20 Hz. As an example, the lowest tone varied between 650 Hz and 410 Hz at offset between the first and thirteenth stimulus. The other set of dynamic spectral stimuli consisted of the reverse of the glide-fall stimuli, representing rising formants, with the rising portion occurring during the first 50 ms. These stimuli will be termed the glide-rise stimuli.
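The glide continua can be sketched in the same way. The text specifies the 150-ms length, the 50-ms glide, and the 20-Hz change in offset frequency per step; the linear shape of the glide, the phase-accumulation synthesis, and the treatment of glide-rise as a time reversal of glide-fall are assumptions made here for illustration, and the function names are hypothetical.

```python
import math

SAMPLE_RATE_HZ = 20_000
STEADY_HZ = (650.0, 1130.0, 2600.0)  # same sinusoids as the dur stimuli
TOTAL_MS, GLIDE_MS = 150, 50
STEP_HZ = 20.0                       # change in offset frequency per continuum step
N_STIMULI = 13                       # step 0 has no transition; steps 1-12 fall


def offset_frequencies(step_index: int) -> tuple:
    """Offset frequency of each sinusoid at a given continuum step."""
    return tuple(f - STEP_HZ * step_index for f in STEADY_HZ)


def glide_fall(step_index: int) -> list:
    """Steady for the first 100 ms, then an (assumed) linear fall over the last 50 ms."""
    n_total = SAMPLE_RATE_HZ * TOTAL_MS // 1000
    n_glide = SAMPLE_RATE_HZ * GLIDE_MS // 1000
    glide_start = n_total - n_glide
    samples = [0.0] * n_total
    for steady, offset in zip(STEADY_HZ, offset_frequencies(step_index)):
        phase = 0.0  # accumulate phase so the waveform stays continuous as frequency changes
        for i in range(n_total):
            samples[i] += math.sin(phase) / len(STEADY_HZ)
            if i < glide_start:
                freq = steady
            else:
                fraction = (i - glide_start + 1) / n_glide
                freq = steady + (offset - steady) * fraction
            phase += 2 * math.pi * freq / SAMPLE_RATE_HZ
    return samples


def glide_rise(step_index: int) -> list:
    """Assumption: the 'reverse' stimulus is the time-reversed glide-fall waveform."""
    return glide_fall(step_index)[::-1]


print(offset_frequencies(0))                # (650.0, 1130.0, 2600.0)
print(offset_frequencies(N_STIMULI - 1))    # (410.0, 890.0, 2360.0)
```

The lowest sinusoid moving from 650 Hz to 410 Hz across the continuum matches the example given in the text (12 steps of 20 Hz).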

General Procedures

All testing took place at the Eye and Ear Institute (EEI) of the Ohio State University Wexner Medical Center. Approval was obtained from the Institutional Review Board of the Ohio State University, and informed written consent was obtained. Participants were tested during a single two-hour session. Auditory discrimination and speech labeling measures were collected first, followed by word reading, expressive vocabulary, MMSE, receptive vocabulary, and word recognition. Auditory discrimination and labeling tasks were alternated, with order of task assigned consecutively as participants enrolled.

Task-Specific Procedures

Speech labeling

Participants were introduced to response labels and corresponding pictures for either the cop-cob or the sa-sha contrast. To visually reinforce task training and to ensure accurate recording of responses by the tester, 8 in. × 8 in. hand-drawn pictures were used. In the case of the cop-cob stimuli, a picture of a police officer served as the response target for cop and a picture of a corn cob served as the response target for cob. For the sa-sha stimuli, a picture of a creature from outer space served as the target for sa and a picture of a king from another country served as the target for sha.

Natural versions of “cop” and “cob” or of “sa” and “sha” were presented over the speaker, and participants were asked to label verbally what they heard, as well as to point to the corresponding picture. Training tasks were performed immediately before testing. Ten digitized natural samples were presented over the speaker to give participants practice performing the task in that format. In the final practice task, participants heard “best exemplars” of the stimulus set, that is, the endpoint stimuli along the continuum combined with the appropriate binary cues. For example, the best exemplar of sha was the stimulus with the most /ʃ/-like fricative noise and the vocalic portion that was appropriate for a preceding /ʃ/. Ten trials of these best exemplars (five of each) were first presented to participants with feedback. Next, ten of these stimuli were presented without feedback. Participants needed to respond correctly to eight of the ten to proceed to testing. They were given three chances to meet this criterion.

During testing, stimuli were presented in ten blocks of the 14 stimuli for either cop-cob or sa-sha. Participants needed to respond correctly to 70% of the best exemplars during testing in order to have their data included in statistical analyses.

Auditory discrimination

An AX procedure was used. In this procedure, participants judge one stimulus, which varies across trials (X), in comparison to a constant standard (A). The constant “A” stimulus serves as the category exemplar. For the dur stimuli, the “A” stimulus was the shortest member of the continuum. For both the glide-fall and glide-rise stimuli, the “A” stimulus was the one without transitions. A cardboard response card was used to visually reinforce task training and to ensure accurate recording of responses by the tester. One half of the card showed two black squares, and the other half showed one black square and one red circle. The participant responded by pointing to the picture of the two black squares and saying “same” if the stimuli were judged as the same, and by pointing to the picture of the black square and the red circle and saying “different” if the stimuli were judged as different. The experimenter controlled stimulus presentation and entered responses by keyboard. Stimuli were presented in 10 blocks of 11 (dur) or 13 (glide-fall and glide-rise) stimuli.

Before testing in each condition, participants were presented with five pairs of stimuli that were identical and five pairs of stimuli that were maximally different, in random order. They were asked to report whether the stimuli were “same” or “different,” and were given feedback. Next these same training stimuli were presented, and participants were asked to report if they were “same” or “different,” without feedback. Participants needed to respond correctly to 80% of training trials without feedback in order to proceed to testing. During testing, participants needed to respond correctly to at least 70% of same and maximally different stimuli to have their data included in analyses.

Word reading

The Wide Range Achievement Test 4 (WRAT, Wilkinson & Robertson, 2006) is a standardized measure of reading skills. The participant was asked to read the words presented on a single page. Participants were scored for words correctly read aloud. All participants obtained a standard score of at least 70 on the WRAT (no lower than two standard deviations below the mean).

Expressive vocabulary

The Expressive One-Word Picture Vocabulary Test, 4th edition (EOWPVT-4, Brownell, 2000) was presented next. For each item, the participant was shown a drawing and the tester asked, “What is this?” or “What is he/she doing?” depending on the picture. The participant was asked to give a single word response corresponding to what the picture represented. Testing was discontinued when the participant made six consecutive incorrect responses.

Receptive vocabulary

The Receptive One-Word Picture Vocabulary Test, 4th edition (ROWPVT-4, Brownell, 2000) was presented next. For each item, the participant was shown four pictures on an easel. The examiner said a word, and the participant responded by repeating the word and then stating the number (1 through 4) associated with the picture that represented that word. If the participant did not repeat the word correctly, the examiner would say the word until it was repeated correctly by the participant prior to selecting a picture. This was done to ensure that participants understood the word that was being presented. Testing was discontinued when the participant made six consecutive incorrect responses.

Mini-Mental State Examination

The MMSE tasks were presented in a written fashion whereby the participant read the instructions, and the tester read along out loud in order to ensure that the participants understood the tasks. This type of modification of the MMSE, having a version for the participants to read, has been used previously in individuals with hearing loss (De Silva et al., 2008).

Word recognition

The CID-22 (Central Institute for the Deaf) word lists were used to obtain a measure of word recognition (Hirsh et al., 1952). All words on these lists are monosyllabic and commonly occur in the vocabularies of most speakers of American English. The phonetic composition within each list is balanced, so the frequency of occurrence of these phonemes matches the frequency in English.

The word lists were presented via a loudspeaker at zero degree azimuth. Each participant heard the same 50-word list. Participants were later scored on a phoneme-by-phoneme basis. All phonemes in a single word needed to be correct with no intervening phonemes in order for that word to be scored as correct. Both phoneme and whole word scores were recorded, and percent correct whole word recognition scores were used as dependent measures for the participants with CIs.

Analyses

Computation of weighting factors from labeling tasks

To calculate measures of the perceptual weights for the duration versus spectral cues (for cop-cob) or the static spectral versus dynamic spectral cues (for sa-sha), logistic regressions were performed. The proportion of /cob/ responses given to each stimulus on each continuum (or the proportion of /sa/ responses) was used in the computation of these weighting factors. Values of the continuously set property and the binary set property served as the independent variables. The raw regression coefficient derived for each cue was used as the weighting factor. Larger weighting factors indicate that more perceptual attention was given to that cue.
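The weighting-factor computation can be illustrated with a minimal sketch. It assumes a binomial logistic regression fit by Newton-Raphson, a 7-step continuum crossed with a binary cue (14 stimuli, 10 presentations each), the duration cue as the continuum rescaled to 0–1, and the spectral cue coded 0/1. The response counts, the predictor scaling, and the function names `solve_linear` and `fit_cue_weights` are hypothetical and are not taken from the study.

```python
import math

def solve_linear(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        tail = sum(m[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (m[i][n] - tail) / m[i][i]
    return x

def fit_cue_weights(rows, n_iter=25):
    """Binomial logistic regression fit by Newton-Raphson.

    rows: tuples of (continuous_cue, binary_cue, n_target_responses, n_trials).
    Returns [intercept, continuous_cue_weight, binary_cue_weight].
    """
    beta = [0.0, 0.0, 0.0]
    for _ in range(n_iter):
        grad = [0.0] * 3
        hess = [[0.0] * 3 for _ in range(3)]
        for xc, xb, k, n in rows:
            x = (1.0, xc, xb)
            eta = sum(b * xi for b, xi in zip(beta, x))
            p = 1.0 / (1.0 + math.exp(-eta))
            for i in range(3):
                grad[i] += (k - n * p) * x[i]
                for j in range(3):
                    hess[i][j] += n * p * (1.0 - p) * x[i] * x[j]
        step = solve_linear(hess, grad)
        beta = [b + s for b, s in zip(beta, step)]
    return beta

# Hypothetical listener: number of "cob" responses out of 10 at each of the
# 7 duration steps, for each setting of the binary spectral cue (0 or 1).
cob_counts = {0: [0, 1, 2, 4, 6, 8, 9], 1: [1, 2, 3, 5, 7, 9, 10]}
rows = [(step / 6.0, binary, k, 10)
        for binary, counts in cob_counts.items()
        for step, k in enumerate(counts)]

intercept, duration_weight, spectral_weight = fit_cue_weights(rows)
print(f"duration weight = {duration_weight:.2f}, "
      f"spectral weight = {spectral_weight:.2f}")
```

For this hypothetical listener the duration coefficient is the larger of the two, which under the interpretation above would correspond to more perceptual attention to duration than to the spectral cue. Raw coefficients depend on how each predictor is scaled, so the rescaling used here is an illustrative choice only.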

Computation of d′ values from discrimination tasks

The discrimination functions of each listener were used to compute an average d′ value for each condition (Holt & Carney, 2005; Macmillan & Creelman, 2005). The d′ value was selected as the discrimination measure because it is bias-free. The d′ value was calculated at each step along the continuum as the difference between the z-value for the “hit” rate (proportion of different responses when A and X stimuli were different) and the z-value for the “false alarm” rate (proportion of different responses when A and X stimuli were identical). A hit rate of 1.0 and a false alarm rate of 0.0 require a correction in the calculation of d′ and were assigned values of .99 and .01, respectively. A value of zero for d′ means participants cannot discriminate the difference between stimuli at all. A positive d′ value suggests the “hit” rate was greater than the “false alarm” rate. Using the above correction values, the minimum d′ value would be 0, and the maximum d′ value would be 4.65. The average d′ value was then calculated across all steps of the continuum, and this value was used in analyses.
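The d′ calculation described above can be sketched as follows. The sketch assumes a single false-alarm rate per listener and condition (from the trials on which A and X were identical) and applies the .01/.99 substitution symmetrically to any rate of exactly 0 or 1. The function names and the example rates are hypothetical.

```python
from statistics import NormalDist

def d_prime(hit_rate, false_alarm_rate, floor=0.01, ceiling=0.99):
    """d' = z(hit rate) - z(false-alarm rate), with extreme rates clipped.

    Rates of 1.0 and 0.0 have no finite z-value, so they are replaced by
    .99 and .01 (applying the same clipping to both rates is an assumption).
    """
    z = NormalDist().inv_cdf
    clip = lambda p: min(max(p, floor), ceiling)
    return z(clip(hit_rate)) - z(clip(false_alarm_rate))

def mean_d_prime(hit_rates, false_alarm_rate):
    """Average d' across the steps of a continuum."""
    values = [d_prime(h, false_alarm_rate) for h in hit_rates]
    return sum(values) / len(values)

# Hypothetical listener: proportion of "different" responses at each step
# where X differed from A, plus the rate on identical (A = X) trials.
hit_rates = [0.2, 0.5, 0.8, 1.0, 1.0]
false_alarm_rate = 0.1

print(round(d_prime(1.0, 0.0), 2))  # ceiling value under the .99/.01 correction
print(round(mean_d_prime(hit_rates, false_alarm_rate), 2))
```

With the correction values of .99 and .01, a perfect hit rate paired with no false alarms yields the ceiling of approximately 4.65 noted above.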

Examination for group differences

A series of independent-samples t tests was performed to examine whether group mean differences existed for weighting factors and d′ values between the NH and CI groups.
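As an illustration of the group comparison, the following sketch computes a pooled-variance (Student) independent-samples t statistic directly from summary statistics. The pooled-variance form is an assumption, chosen because it yields N − 2 degrees of freedom; the function name `pooled_t` is hypothetical. The inputs are the age means, standard deviations, and group sizes reported in Table 2.

```python
import math

def pooled_t(mean1, sd1, n1, mean2, sd2, n2):
    """Student's t for two independent groups, from summary statistics."""
    df = n1 + n2 - 2
    pooled_var = ((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / df
    se = math.sqrt(pooled_var * (1 / n1 + 1 / n2))
    return (mean1 - mean2) / se, df

# Age (years): CI group 66.7 (10.2), n = 28; NH group 63.7 (8.1), n = 19.
t_age, df_age = pooled_t(66.7, 10.2, 28, 63.7, 8.1, 19)
print(f"t({df_age}) = {t_age:.2f}")
```

Because the inputs are rounded summary values, statistics recomputed this way may differ slightly from values calculated on the raw data.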

Search for predictor variables

A series of separate linear regression analyses was performed to uncover the nature of relationships among various demographic factors, predictor variables, and the dependent measure of word recognition.
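Each of these analyses involves a single predictor, which can be sketched as an ordinary least-squares fit. With one predictor, the standardized coefficient equals the Pearson correlation, and its square is the proportion of variance explained. The data below are hypothetical, the function name `simple_regression` is illustrative, and reading a reported β as a standardized coefficient is an assumption.

```python
import math

def simple_regression(x, y):
    """Ordinary least squares with one predictor."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    slope = sxy / sxx
    r = sxy / math.sqrt(sxx * syy)  # equals the standardized coefficient
    return {
        "slope": slope,
        "intercept": mean_y - slope * mean_x,
        "beta": r,
        "r_squared": r * r,
    }

# Hypothetical CI users: spectral-cue weighting factor vs. percent words correct.
spectral_weights = [-0.5, 0.0, 0.2, 0.4, 0.9, 1.3, 1.8]
word_scores = [48, 70, 52, 66, 62, 84, 78]

fit = simple_regression(spectral_weights, word_scores)
print(f"beta = {fit['beta']:.2f}, R^2 = {fit['r_squared']:.2f}")
```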

Results

Thirty participants with CIs and twenty NH controls underwent testing. Two participants from the CI group and one from the NH group obtained a T score on the MMSE of less than 29 (suggesting cognitive impairment); thus, data from these three participants were excluded from analyses. Demographic factor means, predictor variable test scores, and word recognition scores for the remaining 28 CI and 19 NH participants are shown in Table 2. Independent-samples t tests were performed first to examine whether differences existed between the CI and NH groups on demographic measures of age and SES, as well as reading ability, expressive or receptive vocabulary, and MMSE scores; no differences were found. Only word recognition was significantly different.

Table 2.

Means and standard deviations (SDs) of demographics, as well as language and cognitive test scores, for normal-hearing (NH) and cochlear implant (CI) groups. The t value and p value columns show results of t-test, comparing means between NH and CI groups. Degrees of freedom for all t-tests were 45. SES: Socioeconomic status

| Measure | NH Mean | NH (SD) | CI Mean | CI (SD) | t | p |
|---|---|---|---|---|---|---|
| N | 19 | | 28 | | | |
| Demographics | | | | | | |
| Age (years) | 63.7 | (8.1) | 66.7 | (10.2) | 1.07 | 0.29 |
| SES (score) | 32.8 | (18.7) | 25.3 | (11.3) | 1.71 | 0.09 |
| Test Scores | | | | | | |
| Reading (standard score) | 104.1 | (11.8) | 99.6 | (10.7) | 1.30 | 0.20 |
| Expressive vocabulary (standard score) | 102.9 | (19.6) | 96.0 | (15.3) | 1.30 | 0.20 |
| Receptive vocabulary (standard score) | 112.9 | (18.9) | 106.7 | (18.2) | 1.07 | 0.29 |
| Cognitive MMSE (T score) | 51.1 | (8.2) | 47.3 | (8.5) | 1.54 | 0.13 |

For the CI group, one-way ANOVA tests were then performed to see if side of implant (right, left, or bilateral) influenced any scores for weighting factors, d′ values, or word recognition. No differences were found for any measures based on whether participants used a right-sided CI, a left-sided CI, or two CIs. Additionally, using independent-samples t tests, no differences were found on the same measures between those who wore only CIs versus a CI plus hearing aid. Therefore, data were combined across all CI participants who passed testing criteria in subsequent analyses.

Reliability

An estimate of inter-scorer reliability was obtained for the tests that required audio-visual recording and later scoring of responses. Responses were reviewed by two independent scorers for 25% of all participants (13 participants). Mean percent agreement in scores for the two scorers across these participants ranged from 90 to 99% for the measures of word reading, expressive and receptive vocabulary, and word recognition. These outcomes were considered good reliability, and the scores from the staff members who initially scored all the samples were used in further analyses.

Potential predictors of variance in word recognition: audiologic history, word reading, vocabulary, and cognitive status

Before examining perceptual attention and auditory sensitivity, as well as their abilities to explain variance in word recognition, it was important to examine other potential predictors of variance in word recognition. It has previously been found that age at implantation, duration of hearing loss, and amount of residual hearing can serve as indirect predictors of some of the variance in speech recognition outcomes, so evidence was sought for the abilities of these variables to predict variance in word recognition in this group of adult CI users. If these factors did predict variance in word recognition, the significant predictors would need to be accounted for when seeking predictive power for the test variables of interest. Separate linear regression analyses were performed with percent correct word recognition score as the dependent measure and demographic and audiologic measures as predictors, including age, age at onset of hearing loss, duration of hearing loss (computed as age minus age at onset of hearing loss), age at implantation, and better-ear residual PTA. None of these variables predicted a significant proportion of variability in word recognition.

In addition to these demographic and audiologic factors, language and cognitive factors could potentially explain variance in word recognition. Thus, separate linear regression analyses were performed with word recognition as the dependent measure. Predictor variables were word reading, expressive or receptive vocabulary (standard scores), or cognitive score (MMSE T score). None of these variables evaluated predicted a significant amount of variance in word recognition. Therefore, these demographic, audiologic, language, and cognitive factors were not considered during further analyses of predictors of variance in word recognition.

Perceptual attention (weighting factors)

The first question of interest was whether CI users and NH listeners demonstrated similar cue weighting for the cop-cob and sa-sha contrasts. Table 3 shows means for the CI group and the NH group for weighting factors for the two contrasts. For the cop-cob labeling task, one NH participant and five CI participants did not meet endpoint criteria, either at the level of training (criterion of 80 percent correct responses to the endpoint stimuli) or testing (criterion of 70 percent correct responses to endpoints). Inspection of weighting factors for the remaining participants and independent-samples t tests show that these factors were similar for participants with NH and those with CIs. Thus, the majority of CI users were able, as predicted, to make use of the duration cue for the cop-cob contrast, and they weighted this cue as heavily as NH participants. In this case, these CI users were also able to make use of the spectral cue to the same extent as the participants with NH. No significant correlation was found between attention to the duration cue and attention to the spectral cue for either the NH or CI group, suggesting that greater attention to one cue was not associated with less attention to the other.

Table 3.

Means and standard deviations (SDs) of word recognition, perceptual attention weighting factors, and auditory sensitivity d' values for normal-hearing (NH) and cochlear implant (CI) groups. The t value and p value columns show results of t-test, comparing means between NH and CI groups. Degrees of freedom for all t-tests were N-2.

| Measure | NH Mean | NH (SD) | CI Mean | CI (SD) | t | p |
|---|---|---|---|---|---|---|
| Test Scores | | | | | | |
| N | 19 | | 28 | | | |
| Word recognition (percent correct) | 97.1 | (2.5) | 66.5 | (18.7) | 7.05 | <.001 |
| Perceptual Attention | | | | | | |
| N | 18 | | 23 | | | |
| Cop-cob spectral cue (weighting factor) | 0.4 | (1.0) | 0.4 | (0.8) | 0.04 | 0.97 |
| Cop-cob duration cue (weighting factor) | 7.2 | (3.7) | 7.9 | (3.4) | 0.59 | 0.56 |
| N | 18 | | 13 | | | |
| Sa-sha static spectral cue (weighting factor) | 7.9 | (3.5) | 5.6 | (3.9) | 1.77 | .087 |
| Sa-sha dynamic spectral cue (weighting factor) | 7.5 | (3.0) | 1.6 | (1.4) | 2.85 | .008 |
| Auditory Sensitivity | | | | | | |
| N | 19 | | 15 | | | |
| glide-fall (d' value) | 3.7 | (0.4) | 2.5 | (0.9) | 4.81 | <.001 |
| N | 19 | | 12 | | | |
| glide-rise (d' value) | 3.1 | (0.5) | 2.6 | (1.0) | 1.51 | 0.14 |
| N | 18 | | 27 | | | |
| dur (d' value) | 2.2 | (0.7) | 2.4 | (0.6) | 1.14 | 0.26 |

Turning to the sa-sha labeling task, all NH participants met criteria for this task. However, 15 out of 28 participants with CIs were unable to meet criteria for inclusion in data analyses. For the 13 CI users who did meet criteria on the sa-sha labeling task, weighting factors for the dynamic spectral cue were smaller than for the NH group, indicating poorer attention to this cue. There was a trend towards smaller weighting factors for the CI group as compared with the NH group for the static spectral cue, but a statistically significant difference was not found.

Because so many CI participants were unable to perform the sa-sha labeling task, t tests were performed to examine potential differences in demographics, audiologic factors, and test measures between the group of CI users who did and the group who did not meet criteria for sa-sha testing. Factors examined were SES, age, age at onset of hearing loss, age at implantation, duration of deafness, better-ear residual PTA, word reading ability, expressive and receptive vocabulary, and MMSE score. No differences were found.

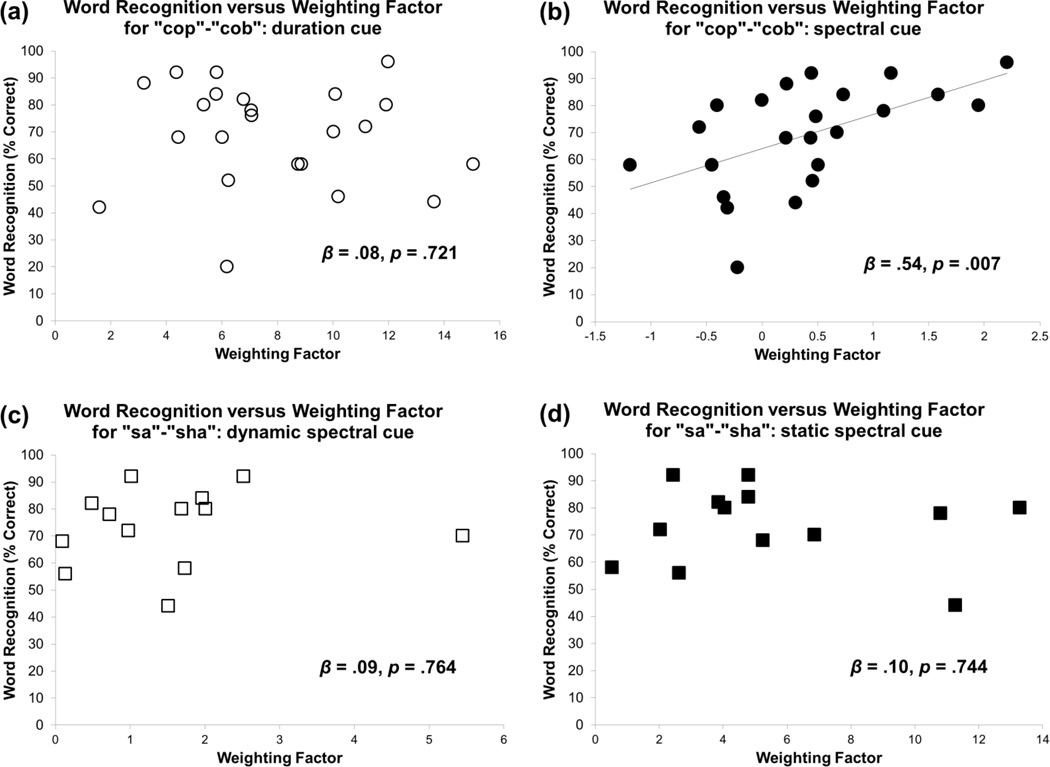

Perceptual attention and word recognition

The next question of interest, which addressed the first main hypothesis of the study, concerned how weighting strategies affected word recognition. Using the weighting factors derived from the cop-cob and sa-sha labeling tasks, it was asked if these weighting factors explained significant proportions of variance in word recognition scores for this group of adult CI users. Scatter plots for word recognition versus the weighting factors for the cop-cob contrast for individual CI users are shown in Figure 3a and 3b. Linear regression analyses were performed separately for each weighting factor, using word recognition as the dependent measure. The only weighting factor that predicted a significant amount of variance in word recognition was the weighting factor for the dynamic spectral cue in the cop-cob contrast, β = .54, p = .007. This finding suggests that the ability of CI users to pay attention to spectral structure, even if it is not the structure they weight most heavily in the contrast, does play a role in word recognition ability.

Figure 3.

Scatterplot of word recognition versus weighting factor for individual CI users for the “cop”-“cob” contrast for the duration cue (a) and the spectral cue (b) and for the “sa”-“sha” contrast for the dynamic spectral cue (c) and the static spectral cue (d).

On the other hand, neither the weighting factor for the static nor the dynamic spectral cue in the sa-sha contrast predicted word recognition (Figure 3c and 3d). However, a potential problem with these regression analyses was that data needed to be excluded from the 15 CI users who did not meet criteria on the sa-sha task. With only 13 participants left in the analysis, there may have been too few to detect an effect, if one existed. Therefore, a two-sample t test was performed comparing word recognition between the group of CI users who met criteria on the sa-sha task and the group who did not. The mean word recognition score was better for the group who met criteria (73.5% versus 60.4%), but this difference did not reach statistical significance, t(26) = 1.95, p = .06, possibly because of the small sample sizes examined in this analysis. Taken together, the results for the cop-cob and sa-sha weighting factors suggest that the ability to pay attention to spectral cues is important in word recognition.

Auditory sensitivity to duration and spectral cues

The subsequent question asked whether cue discrimination of duration (dur) and dynamic spectral cues (glide-fall and glide-rise) would predict variance in word recognition abilities. Twenty-seven of the 28 CI users showed sufficient discrimination of the dur cue to meet criteria for inclusion in analyses, but, overall, CI users showed greater difficulty meeting criteria for data inclusion for discrimination of the dynamic spectral cues, glide-fall (13 CI users did not meet criteria) and glide-rise (16 CI users did not meet criteria). All NH participants were able to complete the spectral discrimination tasks, and only one NH participant could not complete the duration discrimination task. Table 3 shows d′ values from the discrimination tasks for the NH group and the CI group. For the dur cue, the groups showed no significant difference in d′ values and, thus, no difference in auditory sensitivity. On the other hand, even when excluding those individuals who did not meet criteria for data inclusion, the remaining 15 CI users showed poorer sensitivity than the NH group to the spectral cue glide-fall. A similar trend was found for the glide-rise cue, but only 12 CI users met criteria.

For the CI participants, t tests were again performed to examine differences in demographics, audiologic factors, and test measures between those who did or did not meet criteria for glide-fall and glide-rise. Factors examined were SES, age, age at onset of hearing loss, age at implantation, duration of deafness, better-ear PTA, word reading ability, expressive and receptive vocabulary, and MMSE score. None of these differed for participants who did or did not meet criteria during the glide discrimination tasks.

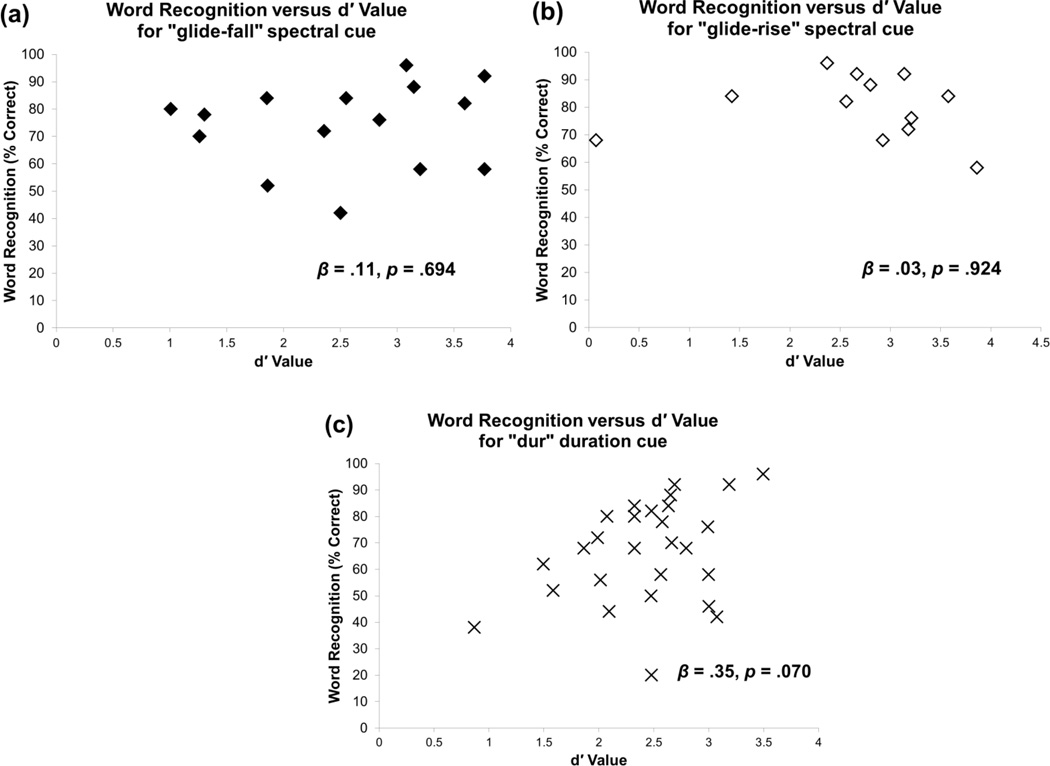

Auditory sensitivity and word recognition

The second main hypothesis of the study was that auditory sensitivity to the cues underlying phonemic contrasts would not independently predict word recognition. Figures 4a and 4b show scatter plots of word recognition versus d' value for the glide-fall and glide-rise cues, respectively, and Figure 4c shows word recognition versus d' value for the dur cue. In none of these cases did the d' value predict word recognition, though there was a nearly significant relationship between sensitivity to the dur cue and word recognition. However, similar to the problem with relating sa-sha cue weighting to word recognition, the finding of a large number of CI users who were unable to meet task criteria for glide-fall and glide-rise limited the ability to examine the effect of spectral discrimination on word recognition. Therefore, mean word recognition scores were compared between the group who met criteria for glide-fall (15 out of 28 participants) versus the group who did not, and the group who met criteria showed significantly higher word recognition (74.1% versus 57.7%), t(26) = 2.54, p = .017. Similarly, the group of 12 CI users who met criteria for the glide-rise task showed higher word recognition than the group of CI users who did not (80% versus 56.4%), t(26) = 4.21, p < .001. Importantly, however, discrimination ability for the dur cue did not explain variance in word recognition. Thus, for the cues in this experiment, sensitivity to dynamic spectral cues did appear to relate to word recognition, whereas sensitivity to the duration cue did not.

Figure 4.

Scatterplot of word recognition versus discrimination (d' value) for individual CI users for the “glide-fall” cue (a), the “glide-rise” cue (b), and the “dur” cue (c).

Perceptual attention and auditory sensitivity

The third main hypothesis of the study was that auditory sensitivity would not independently predict perceptual attention. To test this hypothesis, linear regressions were performed with weighting factors for the cop-cob contrast and the sa-sha contrast as the dependent measures and d' values for the dur, glide-fall, and glide-rise cues as predictors, for both the NH and CI groups independently. These analyses failed to find any d' values that served as significant predictors of weighting factors for either group. Thus, findings support the hypothesis that perceptual attention during labeling of a phonemic contrast does not depend on auditory sensitivity to acoustic cues.

Discussion

The experiment presented here was conducted to examine factors that might predict variability in word recognition in quiet for postlingually deafened adults using CIs. Measures were collected that are thought to underlie robust spoken language recognition. These included metrics of the amount of perceptual attention paid to acoustic cues in phonemic labeling and auditory sensitivity to those cues. Measures were collected and compared between a group of experienced CI users and a group of NH peers. Three hypotheses were tested: (1) that the use of perceptual attention strategies like those used by NH listeners while labeling phonemic contrasts would predict word recognition ability, (2) that auditory sensitivity to spectral and duration cues would not predict word recognition, and (3) that auditory sensitivity to those same cues would not predict perceptual attention strategies. These three hypotheses were tested by measuring word recognition, along with labeling responses for the “cop”-“cob” and “sa”-“sha” phonemic contrasts, and discrimination of nonspeech spectral and duration cues.

The Moberly et al. (2014) study examined whether two distinct cues to the “ba”-“wa” contrast, a spectral cue and an amplitude cue, were equal in their capacity to elicit accurate speech recognition. It was found that they were not equal: individuals who attended to the spectral cue strongly, like NH adults, showed the best word recognition. On the other hand, auditory sensitivity to the spectral cue in that study did not independently explain variance in word recognition. The current study extends the findings from that study by examining CI users’ perception of two other phonemic contrasts. For the cop-cob contrast, adults with NH typically weight the duration cue over the dynamic spectral cue. It was expected that CI users would have relatively intact auditory sensitivity to the duration cue, which was confirmed. The first hypothesis was that stronger weighting of the duration cue, like NH adults, would predict better word recognition; however, this was not found, which suggests that the ability to weight this temporal cue, duration, was not sufficient to explain variability in word recognition. On the other hand, the ability to pay attention to the dynamic spectral cue in the cop-cob contrast did predict approximately 25% of the variance in word recognition. For the sa-sha contrast, the phonemic decision can be made based on a dynamic spectral cue or a static spectral cue. This labeling task was found to be too difficult for many of the CI users, with only about half of them able to pass criteria for inclusion of their data in analyses. However, a trend was seen for better word recognition for those who could successfully complete the sa-sha labeling task as compared with those who could not.

The second notable finding from this study related to auditory sensitivity for CI users to two types of cues that underlie speech perception, a duration cue and dynamic spectral cues. As expected, CI users showed intact sensitivity for the duration cue, which should be represented robustly by CIs, but overall poor sensitivity for the dynamic spectral cues, which would likely be represented poorly by CIs. The second hypothesis, that auditory sensitivity to the nonspeech cues would not predict word recognition, was partially supported. Sensitivity to the duration cue did not predict word recognition. The relationship of word recognition with sensitivity to the dynamic spectral cues was less clear, as roughly half of the CI users could not complete those discrimination tasks. However, those participants who could complete the spectral discrimination tasks had significantly higher word recognition scores than those who could not. These findings support the idea that auditory sensitivity to dynamic spectral structure, especially spectral changes in a relatively low-frequency range, does appear to play an important, though incomplete, role in the ability to recognize speech through a CI. Sensitivity to these spectral changes is not typically assessed in either the research or clinical setting, but results suggest that measuring sensitivity to these dynamic cues may be important.

The third hypothesis tested in this study examined the relationship between perceptual attention strategies and auditory sensitivity to the types of acoustic structure that underlie phonemic decisions. Interestingly, no evidence was found to support the idea that better auditory sensitivity resulted in participants directing their perceptual attention to those types of cues during phonemic labeling. This finding replicates findings for 51 children with CIs listening to the same discrimination and labeling stimuli used in this study: sensitivity to acoustic cues did not strongly predict the weight assigned to cues in those decisions (Nittrouer et al., 2015). Where both adults and children are concerned, some participants were able to attend to cues that were less salient through CIs, suggesting that factors other than simple cue saliency account for how perceptual attention is directed. Instead, individual variability in this kind of attention must play a role, and this conclusion suggests that auditory training could be valuable post implantation.

This study more broadly expands findings from previous studies of cue weighting by CI users. In agreement with the studies by Hedrick and Carney (1997) and Winn et al. (2012), CI listeners weighted amplitude or durational cues more heavily than spectral cues when making labeling decisions for phonemic categories, because these cues were either more informative for making phonemic decisions or were more accessible through their implants. Nonetheless, it has been found that attention to dynamic spectral structure is what predicts word recognition by CI users (Kirk et al., 1992). Lastly, Donaldson et al. (2013) attributed success in vowel identification to better access to both spectral and durational cues, while accepting that cue weighting strategies may have influenced results.

Perhaps one of the most striking findings of this study was that approximately half of the CI users could not even complete the labeling or discrimination tasks that involved spectral cues. These results are likely (though not conclusively) representative of the abilities of adult CI users to access and attend to spectral structure more generally. This is important and should be eye-opening for clinicians, because spectral structure in the speech signal supports the perception of many phonemic contrasts, and a large number of CI users simply are not sensitive to and cannot attend to that structure, which is essential for the most accurate word recognition. Moreover, no demographic, audiologic, language, or cognitive measures were found to differentiate the groups who could or could not access or attend to spectral structure. These findings suggest the need for clinical tools that could assess both sensitivity and attention to spectral structure to prognosticate outcomes and to assess patients postoperatively.

Limitations and Future Directions

In spite of the useful results reported here, one principal problem constrained interpretation. The spectral discrimination and sa-sha labeling tasks were found to be too difficult for many of the CI users to meet criteria for data inclusion in analyses, limiting the numbers of participants whose data could be included in exploring sources of variance in word recognition. Nonetheless, the fact that this basic contrast of the English language proved so difficult for many adults with CIs should prompt further investigation into their sensitivity and attention to spectral structure in general.

Conclusion

Variability in outcomes for patients who undergo cochlear implantation is frustrating for patients and clinicians alike. Results from both this study and the Moberly et al. (2014) study support the idea that being able to perceptually attend to spectral structure within speech may explain some of this variability, and that attention to this spectral structure is critical to accurate word recognition for adult CI users. Even if the spectral structure delivered through CIs is degraded, many implant users may still have sufficient sensitivity to spectral cues to be able to pay attention to those cues. On the other hand, the factors clinicians tend to focus on in predicting speech recognition outcomes for patients with CIs, such as duration of deafness and age at implantation, appear to play much less important and less direct roles in predicting outcomes.

The findings of this study provide two important targets for future clinical intervention. Processing and mapping strategies of current CIs should be modified to improve the delivery of spectral structure through implants. Perhaps even more importantly, adults with CIs may benefit from intensive auditory training that facilitates perceptual learning of appropriate weighting strategies for static and dynamic spectral cues, which should optimize their speech perception outcomes.

Acknowledgements

Research reported in this publication was supported by the Triological Society Career Development Award to Aaron Moberly. In addition, reported research was supported by the National Institute on Deafness and Other Communication Disorders of the National Institutes of Health under award numbers R01 DC000633 and R01 DC006237 to Susan Nittrouer. ResearchMatch, used to recruit control participants, is supported by NIH Clinical and Translational Science Award (CTSA) program, grants UL1TR000445 and 1U54RR032646-01. The authors would like to acknowledge the following individuals for assistance in data collection and scoring: Jessica Apsley, Lauren Boyce, Emily Hehl, Jennifer Martin, and Demarcus Williams.

Footnotes

Conflict of interest: The authors declare no conflicts of interest.

References

- Brownell R. Expressive One-Word Picture Vocabulary Test. Novato, CA: Academic Therapy Publications; 2000.

- Brownell R. Receptive One-Word Picture Vocabulary Test. Novato, CA: Academic Therapy Publications; 2000.

- De Silva ML, McLaughlin MT, Rodrigues EJ, et al. A Mini-Mental Status Examination for the hearing impaired. Age Ageing. 2008;37:593–595. doi: 10.1093/ageing/afn146.

- Donaldson GS, Rogers CL, Cardenas ES, et al. Vowel identification by cochlear implant users: Contributions of static and dynamic spectral cues. J Acoust Soc Am. 2013;134:3021–3028. doi: 10.1121/1.4820894.

- Eggermont JJ, Ponton CW. Auditory-evoked potential studies of cortical maturation in normal hearing and implanted children: correlations with changes in structure and speech perception. Acta Otolaryngol. 2003;123:249–252. doi: 10.1080/0036554021000028098.

- Firszt JB, Holden LK, Skinner MW, et al. Recognition of speech presented at soft to loud levels by adult cochlear implant recipients of three cochlear implant systems. Ear Hear. 2004;25:375–387. doi: 10.1097/01.aud.0000134552.22205.ee.

- Folstein MF, Folstein SE, McHugh PR. “Mini-mental state”. A practical method for grading the cognitive state of patients for the clinician. J Psychiatr Res. 1975;12:189–198. doi: 10.1016/0022-3956(75)90026-6.

- Friesen LM, Shannon RV, Baskent D, et al. Speech recognition in noise as a function of the number of spectral channels: comparison of acoustic hearing and cochlear implants. J Acoust Soc Am. 2001;110:1150–1163. doi: 10.1121/1.1381538.

- Gifford RH, Shallop JK, Peterson AM. Speech recognition materials and ceiling effects: considerations for cochlear implant programs. Audiol Neurootol. 2008;13:193–205. doi: 10.1159/000113510.

- Hedrick MS, Ohde RN. Effect of relative amplitude of frication on perception of place of articulation. J Acoust Soc Am. 1993;94:2005–2026. doi: 10.1121/1.407503.

- Henry BA, Turner CW. The resolution of complex spectral patterns by cochlear implant and normal-hearing listeners. J Acoust Soc Am. 2003;113:2861–2873. doi: 10.1121/1.1561900.

- Hirsh IJ, Davis H, Silverman SR, et al. Development of material for speech audiometry. J Speech Hear Dis. 1952;17:321–337. doi: 10.1044/jshd.1703.321.

- Holden LK, Finley CC, Firszt JB, et al. Factors affecting open-set word recognition in adults with cochlear implants. Ear Hear. 2013;34:342–360. doi: 10.1097/AUD.0b013e3182741aa7.

- Holt RF, Carney AE. Multiple looks in speech sound discrimination in adults. JSLHR. 2005;48:922–943. doi: 10.1044/1092-4388(2005/064).

- Kelly AS, Purdy SC, Thorne PR. Electrophysiological and speech perception measures of auditory processing in experienced adult cochlear implant users. Clin Neurophys. 2005;116:1235–1246. doi: 10.1016/j.clinph.2005.02.011.

- Lazard DS, Vincent C, Venail F, et al. Pre-, per- and postoperative factors affecting performance of postlinguistically deaf adults using cochlear implants: a new conceptual model over time. PLoS One. 2012;7:e48739. doi: 10.1371/journal.pone.0048739.

- Liberman AM, Cooper FS, Shankweiler DP, et al. Perception of the speech code. Psychol Rev. 1967;74:431–461. doi: 10.1037/h0020279.

- Macmillan NA, Creelman CD. Detection theory: A user’s guide. Mahwah, NJ: Erlbaum; 2005.

- Miyawaki K, Strange W, Verbrugge RR, et al. An effect of linguistic experience: The discrimination of [r] and [l] by native speakers of Japanese and English. Percept Psychophys. 1975;18:331–340.

- Moberly AC, Lowenstein JH, Tarr E, et al. Do adults with cochlear implants rely on different acoustic cues for phoneme perception than adults with normal hearing? JSLHR. 2014;57:566–582. doi: 10.1044/2014_JSLHR-H-12-0323.

- Nittrouer S. The role of temporal and dynamic signal components in the perception of syllable-final stop voicing by children and adults. J Acoust Soc Am. 2004;115:1777–1790. doi: 10.1121/1.1651192.

- Nittrouer S. Age-related differences in weighting and masking of two cues to word-final stop voicing in noise. J Acoust Soc Am. 2005;118:1072–1088. doi: 10.1121/1.1940508.

- Nittrouer S, Burton LT. The role of early language experience in the development of speech perception and phonological processing abilities: Evidence from 5-year-olds with histories of otitis media with effusion and low socioeconomic status. J Comm Dis. 2005;38:29–63. doi: 10.1016/j.jcomdis.2004.03.006.

- Nittrouer S, Caldwell-Tarr A, Moberly AC, et al. Perceptual weighting strategies of children with cochlear implants and normal hearing. J Comm Dis. 2015; in press. doi: 10.1016/j.jcomdis.2014.09.003.

- Nittrouer S, Lowenstein JH. Children’s weighting strategies for word-final stop voicing are not explained by auditory capacities. JSLHR. 2007;50:58–73. doi: 10.1044/1092-4388(2007/005).

- Nittrouer S, Lowenstein JH. Does harmonicity explain children’s cue weighting of fricative-vowel syllables? J Acoust Soc Am. 2009;125:1679–1692. doi: 10.1121/1.3056561.

- Nittrouer S, Miller ME. Predicting developmental shifts in perceptual weighting schemes. J Acoust Soc Am. 1997;101:2253–2266. doi: 10.1121/1.418207.

- Peng S, Chatterjee M, Nelson L. Acoustic cue integration in speech intonation recognition with cochlear implants. Trends Amplif. 2012;16:67–82. doi: 10.1177/1084713812451159.

- Perkell JS, Boyce SE, Stevens KN. Articulatory and acoustic correlates of the [s-sh] distinction. Speech Communication Papers, 97th Meeting of the Acoustical Society of America. 1979:109–113.

- Remez RE, Rubin PE, Berns SM, et al. On the perceptual organization of speech. Psychol Rev. 1994;101:129–156. doi: 10.1037/0033-295X.101.1.129.

- Repp BH. Phonetic trading relations and context effects: New evidence for a phonetic mode of perception. Psychol Bull. 1982;92:81–110.

- Stevens KN. Evidence for the role of acoustic boundaries in the perception of speech sounds. In: Fromkin VA, editor. Phonetic Linguistics: Essays in Honor of Peter Ladefoged. New York, NY: Academic Press; 1985.

- Strevens P. Spectra of fricative noise in human speech. Lang Speech. 1960;3:32–49.

- Wardrip-Fruin C, Peach S. Developmental aspects of the perception of acoustic cues in determining the voicing features of final stop consonants. Lang Speech. 1984;27:367–379. doi: 10.1177/002383098402700407.

- Wilkinson GS, Robertson GJ. Wide Range Achievement Test. Fourth Edition. Lutz, FL: Psychological Assessment Resources; 2006.

- Wilson BS, Dorman MF. Cochlear implants: Current designs and future possibilities. J Rehabil Res Dev. 2008;45:695–730. doi: 10.1682/jrrd.2007.10.0173.

- Winn MB, Chatterjee M, Idsardi WJ. The use of acoustic cues for phonetic identification: Effects of spectral degradation and electric hearing. J Acoust Soc Am. 2012;131:1465–1479. doi: 10.1121/1.3672705.