Abstract

In reaching to grasp an object, the arm transports the hand to the intended location as the hand shapes to grasp the object. Prior studies that tracked arm endpoint and grip aperture have shown that reaching and grasping, while proceeding in parallel, are interdependent to some degree. Other studies of reaching and grasping that have examined the joint angles of all five digits as the hand shapes to grasp various objects have not tracked the joint angles of the arm as well. We, therefore, examined 22 joint angles from the shoulder to the five digits as monkeys reached, grasped, and manipulated in a task that dissociated location and object. We quantified the extent to which each angle varied depending on location, on object, and on their interaction, all as a function of time. Although joint angles varied depending on both location and object beginning early in the movement, an early phase of location effects in joint angles from the shoulder to the digits was followed by a later phase in which object effects predominated at all joint angles distal to the shoulder. Interaction effects were relatively small throughout the reach-to-grasp. Whereas reach trajectory was influenced substantially by the object, grasp shape was comparatively invariant to location. Our observations suggest that neural control of reach-to-grasp may occur largely in two sequential phases: the first determining the location to which the arm transports the hand, and the second shaping the entire upper extremity to grasp and manipulate the object.

Keywords: arm, hand, manipulation, trajectory, upper extremity

Early studies of reaching and grasping suggested that these two components of prehensile movements proceed in parallel (Jeannerod 1984, 1986). The hand begins to open as the arm begins to transport it toward a target object. Shortly after transport of the hand passes the point of peak velocity and begins to decelerate, the grip passes the point of peak aperture and begins to close, shaping the hand to grasp the object. In addition to proceeding in parallel, the processes of reaching and grasping were viewed as independent. Variations in the location to which the subject reached caused relatively little change in the orientation of the hand at the wrist, or in the grip aperture (Gentilucci et al. 1991; Paulignan et al. 1990, 1991b). Conversely, variation in the orientation or size of the object caused relatively little change in the kinematics of the proximal upper extremity (Lacquaniti and Soechting 1982; Paulignan et al. 1991a; Soechting 1984; Stelmach et al. 1994).

Subsequent studies, however, found interactions between the kinematics of the arm and hand (Gentilucci et al. 1996). When human subjects matched the location and orientation of a rod held in the hand to the location and orientation of a target rod, for example, orientation errors depended on target location and arm posture, as well as on target orientation, suggesting that control of hand orientation was not independent of location (Soechting and Flanders 1993). Also, when subjects grasped rods at various locations and orientations, the kinematics of reaching depended not only on the location, but also on the orientation of the rod (Desmurget et al. 1996). Similarly, maximum grip aperture was found to vary depending on location (Paulignan et al. 1997). Studies in monkeys also showed that reaching to grasp the same object at different locations involved differences in the time course of grip aperture, as well as wrist velocity, as did reaching to grasp a small vs. large object at the same location (Roy et al. 2002). Reaching and grasping thus were viewed as being, to some degree, interdependent. Surprisingly, the extent to which variation in the motion of different upper extremity joints depends on 1) the location to which the subject is reaching vs. 2) the object the subject will be grasping, and/or 3) the interaction between location and object, all as a function of time, has yet to be examined quantitatively.

Furthermore, early studies of reach-to-grasp kinematics generally used the motion of the distal forearm (or wrist) as a surrogate for motion at the shoulder and elbow and used grip aperture, the distance between the tips of the thumb and index finger, as a surrogate for the more complex hand shape involving all the metacarpophalangeal (MCP), proximal interphalangeal (PIP), and distal interphalangeal (DIP) joints. A number of studies since have examined the simultaneous motion at the joints of all five digits during grasping, both in humans (Mason et al. 2001; Pesyna et al. 2011; Santello et al. 2002, 1998; Santello and Soechting 1998; Winges et al. 2003) and in monkeys (Mason et al. 2004; Theverapperuma et al. 2006). These studies have focused on variation in the digit and wrist angles used to grasp different objects, however, without simultaneously examining variation at the shoulder and elbow.

Although to some extent interdependent kinematically, reaching and grasping generally are thought to be controlled through separate cortical pathways (Cavina-Pratesi et al. 2010; Cisek and Kalaska 2010; Davare et al. 2011; Grafton 2010; Rizzolatti et al. 1998; Rizzolatti and Luppino 2001). Location information for reaching processed in the parietal reach region (Buneo and Andersen 2006) is sent to the dorsal premotor cortex (PMd) (Batista et al. 2007), which projects in turn to the “horseshoe” of proximal upper extremity representation in the primary motor cortex (M1) (Kwan et al. 1978; Park et al. 2001). Concurrently, object information for grasping processed in the anterior intraparietal area (Baumann et al. 2009; Murata et al. 2000) is sent to the ventral premotor cortex (PMv) (Fluet et al. 2010; Raos et al. 2006; Umilta et al. 2007), which projects in turn to the central core of distal representation in M1. These pathways for reaching and for grasping generally are viewed as functioning independently and in parallel.

Certain studies, however, have suggested that cortical motor areas might process reaching and grasping sequentially. In an early study of reaching, for example, neurons in both M1 and PMd were found to show sequential (rather than concurrent) representations of reach direction, target location, and then movement distance (Fu et al. 1995). A more recent study likewise found that neurons throughout both PMd and PMv were related to both reach location and object grasp shape, but location-related discharge peaked earlier, and object-related discharge peaked later (Stark et al. 2007). Such observations raise the possibility that, to some extent, reaching may be processed earlier and grasping later during a single upper-extremity movement.

Here, we tested the hypothesis that reach and grasp are controlled sequentially by examining the simultaneous kinematics of joint angles from the shoulder to the five digits more extensively than previous studies, using movements in which monkeys reached, grasped, and then manipulated. During the reach-to-grasp epoch of such movements, the motion of the arm and hand is unconstrained by physical contact with the object, and the kinematics reflect only the ongoing neural control. Having monkeys reach to, grasp, and manipulate four different objects in up to eight different locations enabled us to quantify how the kinematics of both arm and hand joints varied during the reach-to-grasp epoch, depending on the location to which the subject reached, on the object grasped, and on location × object interactions, all as a function of time. If reach and grasp are controlled equivalently throughout the movement, then we expected the effects of location and object to proceed in parallel. But to the extent that reach and grasp are controlled sequentially, we expected location and object effects to proceed serially.

METHODS

Subjects

Three rhesus monkeys (monkeys L, X, and Y; all males weighing 9–11 kg) were subjects in the present study. Monkeys X and Y have been subjects in previous studies (Aggarwal et al. 2013; Mollazadeh et al. 2011, 2014). All procedures for the care and use of these nonhuman primates followed the Guide for the Care and Use of Laboratory Animals and were approved by the University Committee on Animal Resources at the University of Rochester, Rochester, New York.

Behavioral Task

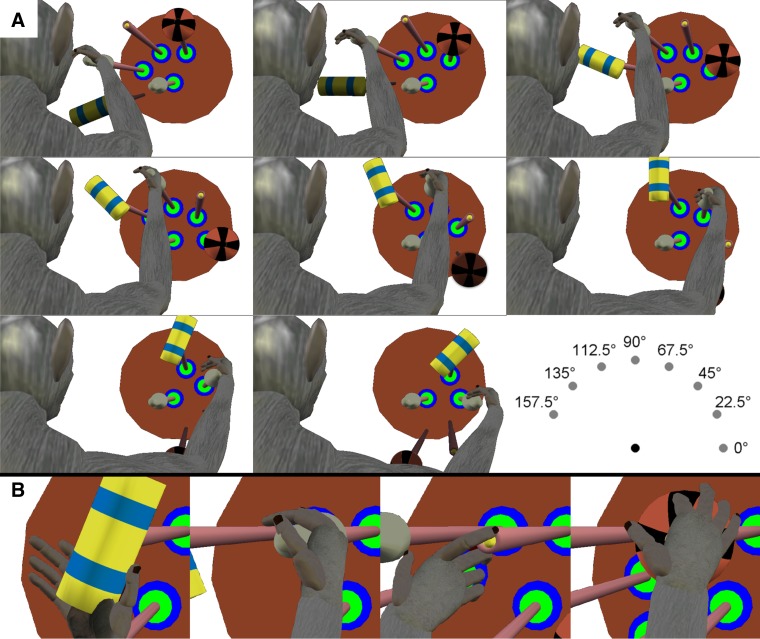

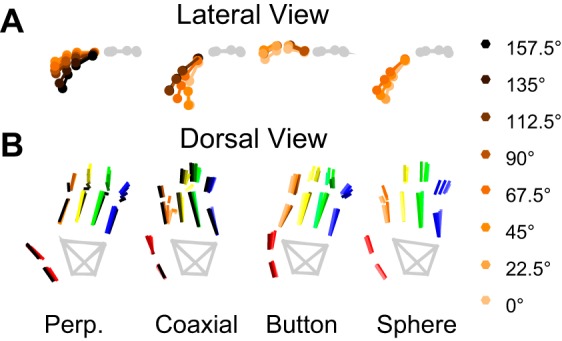

To dissociate location and object, each monkey was trained to reach to, grasp, and manipulate four objects, each positioned at four to eight locations (Fig. 1A). The four objects were arranged in a center-out fashion, located at angular separations of 45° on a circle of 13-cm radius centered on a fifth object. All five objects lay in a frontal plane ∼32 cm in front of the monkey. Each of the five objects was mounted on a rod extending horizontally toward the monkey, with the center object pointed at the monkey's shoulder. The center object was a cylinder 20 mm in diameter and 60 mm in length mounted coaxially with the mounting rod. The four peripheral target objects comprised a button 8 mm in diameter mounted inside a tube of inner diameter 13 mm, a sphere 48 mm in diameter, a cylinder 20 mm in diameter and 60 mm long mounted coaxially with the mounting rod (identical to the center object), and another cylinder 25 mm in diameter and 63 mm in length mounted perpendicular to the rod. The grasp shapes used for each object are illustrated in Fig. 1B. The center object, the peripheral coaxial cylinder, and the perpendicular cylinder each were manipulated by pulling the object ∼1 cm toward the subject against a small spring load. The button was pushed a similar distance against a similar load, whereas the sphere required 45° clockwise rotation against a spring torque. Manipulation of each object was detected with a microswitch. Closing the microswitch required 3.7 N for the center object and peripheral coaxial cylinder, 4.9 N for the perpendicular cylinder, 1.8 N for the button, and 0.03 N·m for the sphere. These loads were not varied during the present experiments. The objects were positioned in a fixed clockwise order: perpendicular cylinder, coaxial cylinder, button, and sphere, spanning 135°.

Fig. 1.

Reach, grasp, manipulate task. A: 8 frames illustrate the task apparatus as if viewed from over the monkey's right shoulder, with the monkey's head at the left of the frame and its right hand reaching and grasping the coaxial cylinder at 8 different locations. In each trial, the monkey reached from the center object (a coaxial cylinder) to one of four radially arranged peripheral objects, positioned in a fixed clockwise order: a perpendicular cylinder (yellow with blue stripes), a coaxial cylinder (gray), a button (yellow), and a sphere (orange and black stripes). Each object was supported by a horizontal mounting rod with rings of green and blue light-emitting diodes (LEDs) at the base. In different blocks of trials, this entire array of peripheral objects was rotated about the center object to position the array in one of 8 orientations at 22.5° intervals, as illustrated by the 8 different frames. In each orientation, the monkey was required to operate only those objects in the 8 different locations, 157.5° (leftmost location), 135°, 112.5°, 90°, 67.5°, 45°, 22.5°, 0° (right horizontal location), that were visible and reached readily. The location/object combinations that were not included in the experiment are darkened and have no green and blue LED rings in the figure. Additionally, because of the fixed ordering of the objects, the sphere and button were never presented at the leftmost locations, and the perpendicular cylinder was not presented for rightmost locations. B: close-ups illustrate the different hand shapes used to grasp each of the 4 objects when at the 67.5° location. All images were created using joint angles calculated at the time of peripheral object contact for monkey L and then rendered using the MSMS software package. [MSMS and work created with MSMS used with permission (Davoodi and Loeb 2012).]

Each trial was initiated when the monkey grasped and pulled on the center object. After a variable initial hold period of 1,500 to 2,000 ms, blue light-emitting diodes (LEDs) were illuminated around the horizontal rod supporting one of the four peripheral objects, cuing the monkey to reach to, grasp, and manipulate that peripheral object. Once the object was manipulated to its final position, green LEDs around the horizontal rod were illuminated and remained illuminated as long as the object remained in this position. The monkey was required to hold the object in its final, manipulated position for 1,000 ms to receive a water reward. Trials of different objects were presented in a pseudorandom block design. If a subject failed a trial (by failing to release the center object within 1,000 ms of the blue LED cue onset, by failing to contact the cued peripheral object within 1,000 ms of releasing the center object, by contacting the wrong peripheral object, or by failing to maintain the static, final hold position for 1,000 ms) the same object was presented on subsequent trials until the trial was performed successfully. Because the monkey thus knew which type of trial would follow an error trial, successful trials preceded by an error trial were excluded from analysis.

All aspects of the behavioral task were controlled by custom software running in TEMPO (Reflective Computing, Olympia, WA), which also sent behavioral event markers into the collected data stream. These markers encoded the time and nature of events, including 1) illumination of the blue LEDs that cued the monkey to begin movement and instructed which peripheral object to operate (Cue); 2) release of the center object; 3) contact of a peripheral object, detected with semiconductor strain gauges mounted on the horizontal rods (Contact); and 4) closure of a microswitch indicating successful object manipulation (Switch).

To dissociate the location to which the monkey reached vs. the object the monkey grasped and manipulated, in the present study the entire apparatus holding the four peripheral objects was rotated about the center object to one of eight orientations at 22.5° increments (Fig. 1A). Each object thereby was positioned at different locations. The monkeys were not required to reach to, grasp, and manipulate every object at all eight apparatus orientations, however, for three reasons. First, when head-restrained, the monkey's vision of objects below the horizontal level of the center object was occluded by the primate chair. Second, biomechanical constraints on the shoulder made it difficult for the monkey to reach an object positioned at the far left (180°). Third, given the fixed arrangement of the objects on the apparatus, we used only locations at which the monkey was able to reach to, grasp, and manipulate at least two different objects with the apparatus stationary at a given orientation. The bottom right frame of Fig. 1A illustrates the resulting locations included in the dataset, spanning an overall range of 157.5°. With the apparatus in any of the eight orientations, the monkey performed ∼10 randomized blocks of trials to each of the visible and accessible objects before the apparatus was rotated to a different orientation, with these different orientations also being presented in a variable order.

Data Collection

Motion tracking and joint angle calculations.

Prior to beginning each recording session, 36 self-adhesive, 3-mm-diameter reflective markers were placed at specific locations tattooed on the monkey's skin. Three markers were placed on the lateral aspect of the upper arm, three on the dorsal aspect of the proximal forearm, five on the dorsal aspect of the distal forearm, five on the dorsum of the hand, four (two on the first metacarpal and two on the proximal phalanx) on the thumb, and four on each finger (two on each proximal and two on each intermediate phalanx). An 18-camera video motion analysis system (Vicon) then tracked the position of each marker at 200 frames/s in an earth-fixed Cartesian reference frame throughout the recording session.

Twenty-two joint angles (Table 1) were calculated off-line from the 36 marker locations in each video frame using a rigid body model. Angles were defined in a manner consistent with the recommendations of the International Society of Biomechanics (Wu et al. 2005). In each frame, a single elbow point was estimated from the location and orientation of the three upper arm and three proximal forearm markers. The elbow point was assumed to be ∼1.7 cm deep to the recorded markers on the skin. Next, a fixed shoulder point for the entire session was estimated by determining the point that minimized the variance in distance to the elbow point across all frames. For calculation of shoulder angles, the thorax was assumed to remain in a constant orientation parallel to the plane formed by the peripheral objects. In each frame, a single distal forearm point along the long axis of the forearm then was estimated using the five distal forearm markers and an assumed depth of ∼1.1 cm from the dorsal skin surface. The line segment between this distal forearm point and the elbow point then defined the long axis of the forearm. The angular orientation (composed of three orthogonal axes, or attitude matrix) of the upper arm relative to the shoulder was defined by the longitudinal axis that passed through the shoulder and elbow points, with internal/external rotation at the shoulder determined by the distal forearm point. Next, the angular orientation of the forearm was estimated using the line between the elbow point and the distal forearm point as the longitudinal axis with the plane of five distal forearm markers defining the dorsal surface of the forearm. Finally, the angular orientation of the hand (palm) was estimated similarly using the plane of the five hand markers relative to their own proximo-distal axis.

Table 1.

Joint angles

| Group | Joint | Abbreviation | + Direction |
|---|---|---|---|
| Shoulder | Shoulder internal/external rotation | ShoulderIER | Internal rotation |
| | Shoulder flexion/extension | ShoulderFlex | Flexion |
| | Shoulder abduction/adduction | ShoulderAbAd | Adduction |
| Elbow | Elbow flexion/extension | ElbowFlex | Flexion |
| Wrist | Forearm pronation/supination | ArmPS | Pronation |
| | Wrist flexion/extension | WristFlex | Flexion |
| | Wrist radial/ulnar deviation | WristRU | Radial deviation |
| Proximal digits flexion/extension | Thumb flexion/extension | ThumbFlex | Flexion |
| | Index MCP flexion/extension | IndexMCP | Flexion |
| | Middle MCP flexion/extension | MiddleMCP | Flexion |
| | Ring MCP flexion/extension | RingMCP | Flexion |
| | Little MCP flexion/extension | LittleMCP | Flexion |
| Digits abduction/adduction | Thumb abduction/adduction | ThumbAbAd | Adduction |
| | Index abduction/adduction | IndexAbAd | Adduction |
| | Middle abduction/adduction | MiddleAbAd | Adduction |
| | Ring abduction/adduction | RingAbAd | Adduction |
| | Little abduction/adduction | LittleAbAd | Adduction |
| Intermediate digits flexion/extension | Thumb MCP flexion/extension | ThumbMCP | Flexion |
| | Index PIP flexion/extension | IndexPIP | Flexion |
| | Middle PIP flexion/extension | MiddlePIP | Flexion |
| | Ring PIP flexion/extension | RingPIP | Flexion |
| | Little PIP flexion/extension | LittlePIP | Flexion |

MCP, metacarpophalangeal; PIP, proximal interphalangeal.

Using the fixed shoulder point and three attitude matrices (of the upper arm, forearm, and hand), the seven angles of rotation of the arm were calculated. The three axes of rotation of the shoulder were calculated using the orientation of the upper arm attitude matrix relative to a fixed thorax coordinate frame. A single angle of the elbow (flexion/extension) was calculated. The hand orientation relative to the forearm orientation was used to calculate forearm pronation/supination (ArmPS) as well as flexion/extension (WristFlex) and radial/ulnar deviation at the wrist (WristRU).

For the fingers, a plane was fit to the four markers on each digit. The abduction/adduction angle at each MCP joint was calculated by comparing the orientation of the fitted digit plane to the orientation of the hand. The MCP flexion/extension angle was calculated for each digit as the angle between the line defined by the two proximal-phalanx markers and the plane of the hand. The PIP flexion/extension angle was calculated as the angle between the two pairs of markers on the proximal and intermediate phalanges. DIP flexion/extension angles were not evaluated.

Similarly, the four markers of the thumb were fit with a plane. Two thumb angles were calculated at the carpometacarpal (CMC) joint, abduction/adduction (i.e., palmar abduction, perpendicular to the plane of the hand) and flexion/extension (i.e., radial abduction, parallel to the plane of the hand). Thumb MCP flexion/extension angle was calculated as the angle between the lines defined by the two pairs of markers on the first metacarpal and proximal phalanx. Neither thumb opposition nor flexion at the interphalangeal joint was evaluated. Note that, in grouping joint angles across the five digits, we have considered each digit as having a most proximal joint at which both abduction/adduction and flexion/extension rotation can occur and an intermediate joint at which only flexion occurs. We, therefore, have grouped the thumb CMC joint angles with those of the MCP joints for the four fingers, and the thumb MCP joint with the PIP joints of the four fingers (Table 1).
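The PIP and thumb MCP flexion/extension angles described above are angles between two lines, each defined by a pair of markers. A minimal sketch of that geometric step follows, assuming each marker is an (x, y, z) tuple. The function name and the example coordinates are hypothetical, and the sketch returns an unsigned angle, so the sign convention of Table 1 (flexion positive) would have to be applied separately.

```python
import math


def segment_angle_deg(prox_a, prox_b, dist_a, dist_b):
    """Unsigned angle (degrees) between line prox_a->prox_b and line dist_a->dist_b.

    Each argument is an (x, y, z) marker position. Names are illustrative only.
    """
    u = [b - a for a, b in zip(prox_a, prox_b)]
    v = [b - a for a, b in zip(dist_a, dist_b)]
    dot = sum(x * y for x, y in zip(u, v))
    norm_u = math.sqrt(sum(x * x for x in u))
    norm_v = math.sqrt(sum(x * x for x in v))
    # Clamp to [-1, 1] so rounding error cannot push acos out of its domain.
    cos_theta = max(-1.0, min(1.0, dot / (norm_u * norm_v)))
    return math.degrees(math.acos(cos_theta))


# Hypothetical marker positions: the second segment is bent 90 degrees from the first.
bent = segment_angle_deg((0, 0, 0), (1, 0, 0), (2, 0, 0), (2, -1, 0))
straight = segment_angle_deg((0, 0, 0), (1, 0, 0), (2, 0, 0), (3, 0, 0))
```

With these made-up coordinates, `bent` is 90° and `straight` is 0°.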

Temporal Normalization of Joint Angle Kinematics

The duration of movements naturally varied from trial to trial. To permit more accurate comparison of joint angle trajectories across trials, we, therefore, normalized the joint angle data in time between two points: the onset of movement and peripheral object contact. To identify the onset of movement, the instantaneous speed in three-dimensional Cartesian space was calculated in 5-ms steps (video frame rate of 200 Hz) for each of the 36 markers and then averaged across all 36 markers at each step. Using the period from 100 ms before to 100 ms after the appearance of the cue in each trial to estimate baseline noise, a baseline mean and standard deviation of speed across all successful trials in a recording session was determined. The time in each trial at which the average speed of the markers exceeded 5 SDs of the baseline then was defined as the onset of movement. The time of contact with a peripheral object was detected in each trial with two pairs of semiconductor strain gauges mounted on the horizontal rod supporting each object so as to detect forces exerted on the object in two orthogonal directions, both lying in a frontal plane. When the vector sum of these forces, sampled at 1 kHz, exceeded a preset threshold, a behavioral event marker identifying the time of peripheral object contact was stored in the data stream, along with the Cartesian coordinates of the 36 markers.
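The onset criterion can be sketched as follows. This is an illustration under stated assumptions, not the authors' code: the threshold is read here as the baseline mean plus 5 baseline SDs, the search simply returns the first frame step above threshold, and all function names and example values are hypothetical.

```python
import math
from statistics import mean

FRAME_DT = 0.005  # seconds per frame at the 200-Hz video rate stated in the text


def mean_marker_speed(frames):
    """Average speed across markers for each consecutive pair of frames.

    frames: list of frames, each a list of (x, y, z) marker positions.
    """
    speeds = []
    for prev, curr in zip(frames, frames[1:]):
        per_marker = [math.dist(p, c) / FRAME_DT for p, c in zip(prev, curr)]
        speeds.append(mean(per_marker))
    return speeds


def movement_onset(speeds, baseline_mean, baseline_sd, n_sd=5.0):
    """Index of the first step whose speed exceeds baseline_mean + n_sd * baseline_sd.

    Reading "exceeded 5 SDs of the baseline" as mean + 5 SD is an assumption.
    Returns None if the threshold is never crossed.
    """
    threshold = baseline_mean + n_sd * baseline_sd
    for i, speed in enumerate(speeds):
        if speed > threshold:
            return i
    return None


# Hypothetical speeds: quiet baseline followed by a clear movement.
example_onset = movement_onset([1.0, 1.1, 0.9, 5.0, 9.0], baseline_mean=1.0, baseline_sd=0.16)
```

In the study, the baseline mean and SD were pooled across all successful trials in a session, using the 200-ms window centered on cue onset. Here they are passed in as precomputed numbers.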

The time series of each joint angle then was resampled using linear interpolation (interp1 in Matlab) to produce the same number of samples for each trial, equivalent to the median movement duration for the session. Data after the time of contact were resampled to the same rate. Data before the onset of movement were left unchanged at the original 200-Hz sampling rate.
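The resampling step between movement onset and contact can be illustrated with a small pure-Python stand-in for linear interpolation. This is a sketch, not the authors' Matlab code, and both function names are hypothetical. The sample-count rule (median duration divided by the 5-ms frame interval, plus one for the inclusive endpoint) is an assumption. It does reproduce the counts in Table 2, for example 52 samples for a 255-ms median.

```python
def resample_linear(values, n_out):
    """Linearly resample a sequence to n_out evenly spaced samples.

    The first and last input samples are preserved as the first and last outputs.
    """
    n_in = len(values)
    if n_in < 2 or n_out < 2:
        raise ValueError("need at least two input and two output samples")
    out = []
    for k in range(n_out):
        pos = k * (n_in - 1) / (n_out - 1)   # fractional index into the input
        lo = min(int(pos), n_in - 2)         # left neighbour, kept in range
        frac = pos - lo
        out.append(values[lo] * (1 - frac) + values[lo + 1] * frac)
    return out


def samples_for_duration(duration_ms, frame_ms=5):
    """Assumed rule: one sample per frame interval, inclusive of both endpoints."""
    return round(duration_ms / frame_ms) + 1


# A short trial (4 samples) stretched to the session's common length.
trial = [10.0, 12.0, 16.0, 20.0]
normalized = resample_linear(trial, samples_for_duration(255))
```

After this step every trial has the same number of samples between onset and contact, so joint angles can be compared across trials at each normalized time point.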

Data Analysis

ANOVA.

At each normalized time point, each joint angle was submitted to two-way ANOVA, using a model that included two main effects (location, object), as well as their interaction (location × object). The sum-of-squares variance resulting from the ANOVA model was used to quantify effect size, defined as the variance observed as a function of a given predictor variable (location, object, or interaction), divided by the total variance observed. In preliminary analyses, we found that the unexplained variance (“error”), which results from measurement inaccuracy, natural variability in repeated trials of the “same” movement, and potentially other unknown factors, often fluctuated in time, rendering the instantaneous fraction of the total variance attributable to all the identified factors relatively larger at some times and smaller at others. To reduce the fluctuations in effect sizes that resulted from such random fluctuations in unexplained variance, and thereby better compare effect sizes across time, we, therefore, chose to normalize effect size by using the maximum error sum-of-squares ($SS_{\mathrm{Error}}$) variance observed across all time points. Normalized effect size, $\eta_i^2$, thus was calculated at each time point, $t$, as:

$$\eta_i^2(t) = \frac{SS_i(t)}{SS_{\mathrm{Loc}}(t) + SS_{\mathrm{Obj}}(t) + SS_{\mathrm{Loc} \times \mathrm{Obj}}(t) + \max_{\tau} SS_{\mathrm{Error}}(\tau)} \tag{1}$$

where $i$ is location (Loc), object (Obj), or Loc × Obj. Using the maximum $SS_{\mathrm{Error}}$ in this way tended to reduce the estimated location, object, and interaction effect sizes, but enhanced comparison of different effect sizes across time. The significance of each effect at each time point was determined using the standard ANOVA F-test (using the maximum $SS_{\mathrm{Error}}$ value). Because multiple time points were tested, Bonferroni correction was applied by setting the significance threshold at P < 0.05 divided by the number of time points tested (153 time points for monkey L, 143 for monkey X, 185 for monkey Y).
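One reading of this normalization is sketched below. It assumes that the denominator is the sum of the three effect sums of squares at each time point plus the maximum error sum of squares across all time points. The function names and the numbers in the example are hypothetical, and the ANOVA that would produce the sums of squares is not shown.

```python
def normalized_effect_sizes(ss_loc, ss_obj, ss_int, ss_err):
    """Effect size per time point, normalized with the maximum error sum of squares.

    Each argument is a list with one sum-of-squares value per time point.
    Assumed denominator: SS_Loc(t) + SS_Obj(t) + SS_LocxObj(t) + max over time of SS_Error.
    """
    max_err = max(ss_err)
    sizes = {"Loc": [], "Obj": [], "LocxObj": []}
    for loc, obj, inter in zip(ss_loc, ss_obj, ss_int):
        denom = loc + obj + inter + max_err
        sizes["Loc"].append(loc / denom)
        sizes["Obj"].append(obj / denom)
        sizes["LocxObj"].append(inter / denom)
    return sizes


def bonferroni_threshold(alpha, n_tests):
    """Per-test significance threshold after Bonferroni correction."""
    return alpha / n_tests


# Made-up sums of squares at two time points: location dominates first, object second.
sizes = normalized_effect_sizes([6, 2], [2, 6], [1, 1], [1, 3])
threshold_monkey_l = bonferroni_threshold(0.05, 153)  # 153 time points for monkey L
```

Because the same maximum error term appears in every denominator, a change in effect size over time reflects a change in the effect sums of squares rather than a fluctuation in unexplained variance.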

In addition to $\eta_i^2$, the relative ratio of summed squared variance for location vs. object was quantified. These ratios, $Q_m$, ignore the summed squared variance of the interaction and error terms and simply compare the extent to which variance depended on location vs. object:

$$Q_m(t) = \frac{SS_{\mathrm{Loc}}(t)}{SS_{\mathrm{Loc}}(t) + SS_{\mathrm{Obj}}(t)} \tag{2}$$

A value of 1 thus corresponds to a location effect with no object effect, whereas a value of 0 indicates an object effect with no location effect.

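We also calculated a second ratio, $Q_x$, of the summed squared variance attributable to the interaction term (location × object) relative to that attributable to the two main factors:

$$Q_x(t) = \frac{SS_{\mathrm{Loc} \times \mathrm{Obj}}(t)}{SS_{\mathrm{Loc}}(t) + SS_{\mathrm{Obj}}(t) + SS_{\mathrm{Loc} \times \mathrm{Obj}}(t)} \tag{3}$$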

Here, a value of 0 indicates that no variance was attributable to the interaction term (i.e., independent main effects) whereas a value of 1 indicates that all variance was attributable to the interaction effect and none to either main factor.
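The two ratios can be written as short functions whose limiting values match those stated in the text. The forms below are an assumption inferred from those limits: location over location plus object for the first ratio, and interaction over the sum of all three effect terms for the second. The function names are hypothetical.

```python
def q_m(ss_loc, ss_obj):
    """Location vs. object ratio: 1 = location only, 0 = object only."""
    return ss_loc / (ss_loc + ss_obj)


def q_x(ss_loc, ss_obj, ss_int):
    """Interaction ratio: 0 = no interaction variance, 1 = interaction only."""
    return ss_int / (ss_loc + ss_obj + ss_int)


# Limiting cases described in the text, using made-up sums of squares.
location_only = q_m(4.0, 0.0)
object_only = q_m(0.0, 4.0)
no_interaction = q_x(3.0, 1.0, 0.0)
interaction_only = q_x(0.0, 0.0, 2.0)
```

Both functions raise `ZeroDivisionError` if every sum of squares is zero, a case that would need separate handling in a real analysis.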

Linear discriminant analysis.

A small effect for a given factor in ANOVA (i.e., an effect that accounts for a relatively small fraction of the overall variance) does not necessarily indicate that different categories of that factor cannot be discriminated. We therefore extended our analysis by examining the time course of discriminable information on location and object. Linear discriminant analysis (LDA) was performed using 5 subsets of the 22 joint angles: 1) the three shoulder angles [shoulder internal/external rotation (ShoulderIER), shoulder abduction/adduction (ShoulderAbAd), shoulder flexion/extension (ShoulderFlex)]; 2) the three wrist angles (ArmPS, WristFlex, WristRU); 3) the MCP flexion/extension angles for the four fingers (IndexMCP, MiddleMCP, RingMCP, LittleMCP) and thumb flexion/extension (ThumbFlex) at the CMC joint; 4) the MCP abduction/adduction angles for the four fingers (IndexAbAd, MiddleAbAd, RingAbAd, LittleAbAd) and thumb abduction/adduction (ThumbAbAd); and 5) the PIP joint angles for the four fingers (IndexPIP, MiddlePIP, RingPIP, LittlePIP) and thumb MCP flexion/extension (ThumbMCP). Because not all objects were used at every location, LDA was performed separately for location given the object and for object given the location. In the location LDA, depending on the given object, the classifier chose between four, six, or eight locations with a chance level of 25%, 16.7%, or 12.5%, respectively. Results at each time step then were averaged across all four objects. Conversely, in the object LDA, depending on the location, the classifier chose between two, three, or four objects with a chance level of 50%, 33%, or 25%, respectively, and results were averaged across all eight locations. Each LDA was performed with 10-fold cross-validation as follows. Data were randomly partitioned into 10 subsets of trials. For each subset, the remaining 90% of trials from the daily session were used as a training dataset to generate a linear classifier for location or for object. 
The test subset (10%) then was used to estimate the classification accuracy. Ninety-five percent Clopper-Pearson confidence intervals were generated using an exact binomial distribution fit (Clopper and Pearson 1934; Newcombe 1998).
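The bookkeeping around the classifier (chance level, random 10-fold partition, and an exact binomial confidence interval on accuracy) can be sketched without the LDA itself. The code below is an illustration under stated assumptions. The function names are hypothetical, the fold assignment is one of several reasonable ways to partition trials, and the Clopper-Pearson bounds are found by bisection on the binomial tail probabilities rather than by the beta-distribution inverse that statistics packages typically use.

```python
import math
import random


def chance_level(n_classes):
    """Chance accuracy when choosing uniformly among n_classes categories."""
    return 1.0 / n_classes


def k_fold_partition(n_trials, n_folds=10, seed=0):
    """Randomly split trial indices into n_folds disjoint test subsets."""
    indices = list(range(n_trials))
    random.Random(seed).shuffle(indices)
    return [indices[fold::n_folds] for fold in range(n_folds)]


def _binom_cdf(k, n, p):
    """P(X <= k) for X ~ Binomial(n, p) with 0 < p < 1, summed in log space."""
    total = 0.0
    for i in range(k + 1):
        log_pmf = (math.lgamma(n + 1) - math.lgamma(i + 1) - math.lgamma(n - i + 1)
                   + i * math.log(p) + (n - i) * math.log(1.0 - p))
        total += math.exp(log_pmf)
    return min(total, 1.0)


def _solve_increasing(f, target, iters=60):
    """Bisection on (0, 1) for f(p) = target, where f increases with p."""
    lo, hi = 0.0, 1.0
    for _ in range(iters):
        mid = (lo + hi) / 2.0
        if f(mid) < target:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2.0


def clopper_pearson(k, n, alpha=0.05):
    """Exact (Clopper-Pearson) interval for k correct classifications out of n."""
    # Lower bound: P(X >= k | p) = alpha / 2. Upper bound: P(X <= k | p) = alpha / 2.
    lower = 0.0 if k == 0 else _solve_increasing(
        lambda p: 1.0 - _binom_cdf(k - 1, n, p), alpha / 2.0)
    upper = 1.0 if k == n else _solve_increasing(
        lambda p: 1.0 - _binom_cdf(k, n, p), 1.0 - alpha / 2.0)
    return lower, upper


folds = k_fold_partition(685)                # 685 analyzed trials, as for monkey L
ci_low, ci_high = clopper_pearson(60, 68)    # hypothetical: 60 of 68 test trials correct
```

Classification accuracy at a given time step is meaningfully above chance when the lower confidence bound exceeds `chance_level(n)` for the number of locations or objects being discriminated.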

RESULTS

We analyzed upper extremity kinematics in one session from each of three monkeys. As detailed in Table 2, during these sessions the monkeys performed from 745 to 824 total trials, of which from 688 to 788 were performed successfully, with from 637 to 752 trials being retained for analysis. All three sessions lasted slightly more than 80 min. The duration of the epoch from movement onset to peripheral object contact, which we refer to as the reach-to-grasp epoch, differed among the three monkeys (P < 10⁻³, Kruskal-Wallis test), with medians ranging from 235 to 335 ms. The temporal normalization described in the methods, therefore, produced a different number of samples (inclusive of the first sample at movement onset and the last sample at peripheral object contact) for each monkey's data.

Table 2.

Sessions

| | L20120928 | X20121219 | Y20130516 |
|---|---|---|---|
| Total trials, no. (%) | 768 (100) | 824 (100) | 745 (100) |
| Successes, no. (%) | 717 (93.4) | 788 (95.6) | 688 (92.3) |
| Analyzed, no. (%) | 685 (89.2) | 752 (91.3) | 637 (85.5) |
| Elapsed time (H:MM:SS) | 1:26:11 | 1:22:41 | 1:22:33 |
| Median movement time (25th, 75th %tile), ms | 255 (225, 295) | 235 (205, 275) | 335 (290, 390) |
| No. of samples including M and C | 52 | 48 | 68 |

H, MM, SS: hours, minutes, seconds, respectively; M, movement onset; C, peripheral object contact.

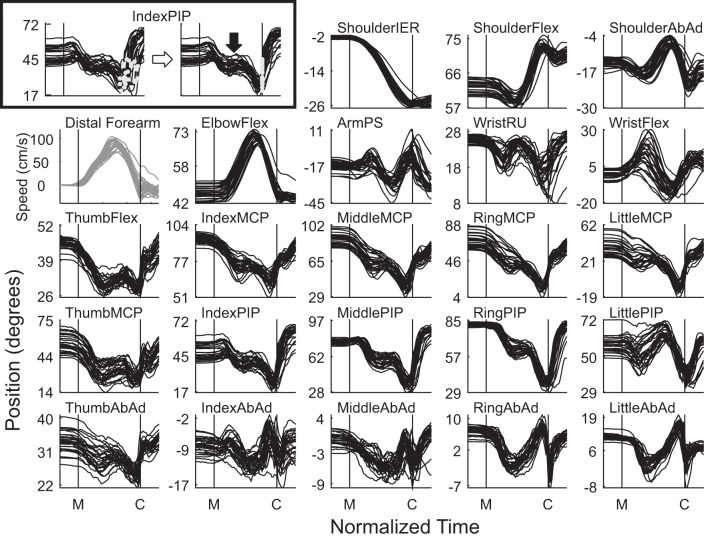

Temporal Trajectories of Individual Joint Angles

Time courses of each of the 22 joint angles during 28 trials in which monkey L successfully reached, grasped, and manipulated the coaxial cylinder at the 45° location are shown in Fig. 2. The box at top left uses the data from IndexPIP to illustrate the time normalization that has been applied to all subsequent data. In the left frame, the data before normalization have been aligned at the time of movement onset, and a gray dot marks the time of peripheral object contact in each trial. Considerable variability resulted from variation in the speed with which the monkey executed the movement, complicating evaluation of the joint-angle trajectory across trials. We, therefore, time normalized these joint angle data as described in the methods to provide a consistent number of samples in each trial between movement onset and peripheral object contact. The frame to the right of the horizontal arrow shows the IndexPIP data after time normalization, aligned now at both movement onset (M) and peripheral object contact (C), indicated by the two vertical lines. Although considerable trial-to-trial variability in absolute angle still was present, the time course of the trajectory can be seen to have been relatively consistent from trial to trial. Other frames of Fig. 2 show all 22 joint angles from the same trials after time normalization, as well as the simultaneous translational speed of the distal forearm point (gray). The relative consistency in each of the 22 joint angles across the 28 trials indicates that the movements were performed in a relatively stereotyped manner.

Fig. 2.

Time course of 22 joint angles (see methods for definitions). Each frame shows data from 28 successfully performed trials in which monkey L reached to, grasped, and manipulated the coaxial cylinder at the 45° location. The two frames in the box at top left illustrate the time normalization process (see methods) for the IndexPIP joint, converting (rightward arrow) the real-time data aligned at the movement onset (left) with light gray circles indicating peripheral object contact in each trial to the time-normalized data aligned at both movement onset (M) and peripheral object contact (C), with each alignment indicated by a vertical line. The downward arrow emphasizes a pause in the progressing extension of the IndexPIP. The remaining frames show similarly time-normalized data for all 22 joint angles (black), as well as the translational speed of the distal forearm point (gray), which had a typical bell shape. Larger positive angles represent internal rotation, flexion, pronation, radial deviation, or adduction, while smaller or negative values represent external rotation, extension, supination, ulnar deviation, or abduction.

During these movements to the coaxial cylinder at 45°, although the speed of the distal forearm point showed the conventional bell-shaped profile, the trajectories of individual joint angles showed a variety of temporal patterns. Only ShoulderIER showed a monophasic transition from one angular position to another. The elbow flexion/extension (ElbowFlex) angle first increased and then decreased as the hand was transported first away from the central object toward the shoulder and then toward the peripheral object away from the shoulder. ShoulderFlex, ShoulderAbAd, LittlePIP, MiddleAbAd, RingAbAd, and LittleAbAd angles all decreased and then increased before decreasing again prior to peripheral object contact, although timing and magnitude differed among joints; WristFlex showed the inverse pattern. Still other angles, including ArmPS, WristRU, and IndexAbAd, showed two similar phases of motion in the same direction separated by a reversal of direction in between. Of particular interest was the motion of the ThumbFlex, IndexMCP, MiddleMCP, RingMCP, LittleMCP, ThumbMCP, IndexPIP, MiddlePIP, RingPIP, and ThumbAbAd angles, which collectively determine most of the opening and closing of the hand. All of these angles initially decreased (extended), paused or slightly reversed near the middle of the reach-to-grasp epoch, and then decreased again, apparently separating the time course of hand opening into two phases. The transition between these two phases occurred near the temporal midpoint between movement onset and peripheral object contact, approximately at the time of peak transport speed of the distal forearm point. Furthermore, all of these latter joints continued to extend (decreasing values), opening the hand, until immediately prior to peripheral object contact. Only at that time did these MCP and PIP flexion/extension angles increase again, closing the fingers around the object. 
Rather than reaching maximal aperture at the time of peak transport velocity, the hand thus continued to open until it was almost in contact with the object.

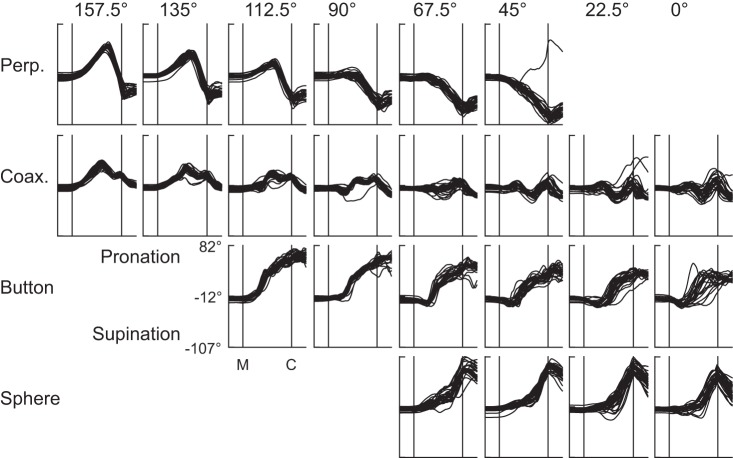

Effects of Location and Object on Joint Angles as a Function of Time

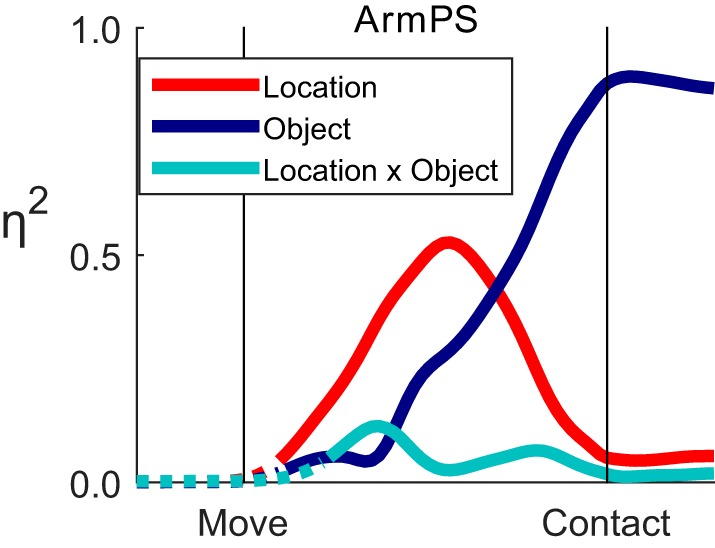

Figure 3 shows the time course of the ArmPS angle during reaches to each of the 24 combinations of location and object in a single session from monkey L. For any given object (row), ArmPS angle varied, depending on location; for any given location (column), ArmPS angle varied, depending on object. We, therefore, used two-way ANOVA to quantify the extent to which joint angles varied in relation to location, object, and their interaction, all as a function of time.

Fig. 3.

Forearm pronation/supination angles. ArmPS angle is shown as a function of normalized time for each of the 24 combinations of location (columns) and object (rows). The median time from movement onset (M) to peripheral object contact (C) in this session was 255 ms, and all trials have been time normalized to match this duration. Note that, in one trial with the perpendicular cylinder located at 45°, the monkey performed successfully, although in an aberrant fashion. Data are from session L20120928.

Figure 4 shows the normalized effect sizes (ηᵢ²), resulting from such time-resolved ANOVA performed on the data for ArmPS shown in Fig. 3. After movement onset (M), the main effect of location (red) increased until approximately midway between movement onset and peripheral object contact (C) and thereafter decreased, reaching small but still significant values prior to contact. In contrast, the main effect of object (blue) remained small until shortly before the midpoint and then rose progressively until contact, at which time the object effect accounted for almost all of the variance in ArmPS angle. In comparison, the location × object interaction effect (cyan), although significant, remained small throughout the reach-to-grasp movements.
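The effect sizes at a single normalized time point can be illustrated with the following sketch of a two-way ANOVA decomposition. It assumes a balanced design and takes each effect size as the effect's sum of squares divided by the total sum of squares; both are assumptions, because the exact normalization is defined in the methods and is not reproduced in this section. The function name is hypothetical, and significance testing is omitted.

```python
def eta_squared_two_way(cells):
    """Effect sizes for location, object, and their interaction.

    cells[i][j] holds the joint-angle values observed at one normalized time
    point for location i and object j. Every cell must contain the same
    number of trials (balanced design, an assumption of this sketch).
    """
    n_loc, n_obj, n_rep = len(cells), len(cells[0]), len(cells[0][0])
    values = [v for row in cells for cell in row for v in cell]
    grand = sum(values) / len(values)
    ss_total = sum((v - grand) ** 2 for v in values)
    if ss_total == 0:
        raise ValueError("no variance in the data")

    cell_means = [[sum(c) / n_rep for c in row] for row in cells]
    loc_means = [sum(row) / n_obj for row in cell_means]
    obj_means = [sum(cell_means[i][j] for i in range(n_loc)) / n_loc
                 for j in range(n_obj)]

    ss_loc = n_obj * n_rep * sum((m - grand) ** 2 for m in loc_means)
    ss_obj = n_loc * n_rep * sum((m - grand) ** 2 for m in obj_means)
    ss_cells = n_rep * sum((m - grand) ** 2 for row in cell_means for m in row)
    ss_int = ss_cells - ss_loc - ss_obj  # what the two main effects leave over

    return {
        "location": ss_loc / ss_total,
        "object": ss_obj / ss_total,
        "interaction": ss_int / ss_total,
    }


# Toy data: the angle depends only on location (rows), never on object.
location_only = [[[1.0, 1.0], [1.0, 1.0]],
                 [[3.0, 3.0], [3.0, 3.0]]]
effects = eta_squared_two_way(location_only)
```

Repeating this computation at every normalized time point gives one curve per effect, analogous to the three traces in Fig. 4.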

Fig. 4.

Effect size (η²) as a function of time for one joint angle. The variance in ArmPS angle attributable to location (red), to object (blue), and to location × object interaction (cyan) is shown as a function of normalized time using the data from Fig. 3. Solid curves indicate times at which variation for a given effect was significant; dotted curves are shown when an effect was not significant.

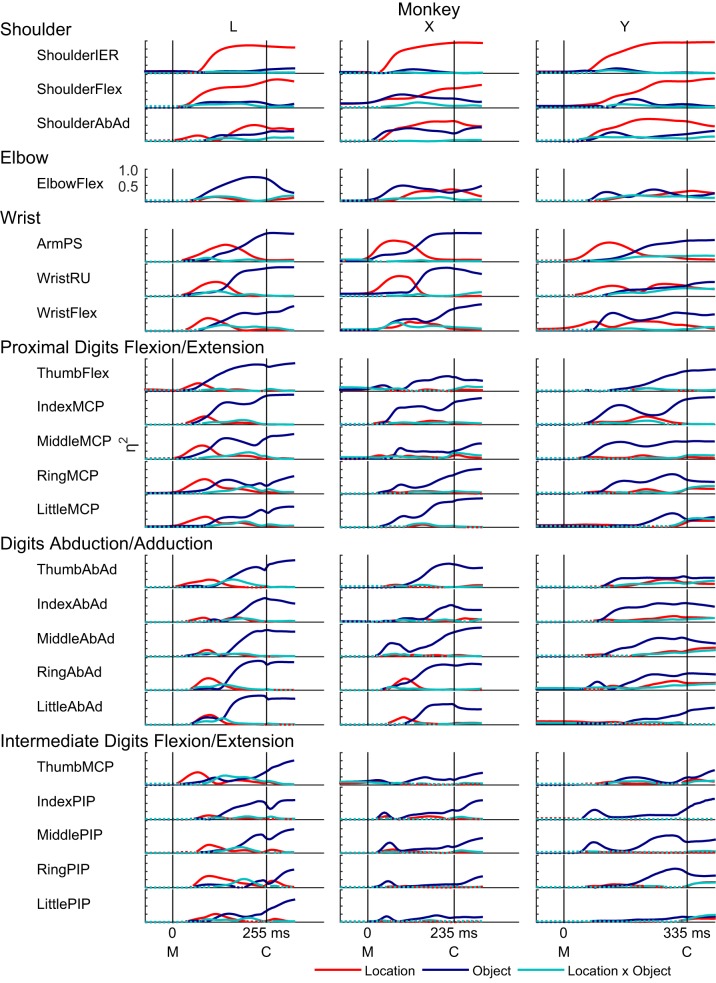

In this way, we examined effect sizes as a function of normalized time for each of the 22 joint angles in each of the three monkeys (Fig. 5). As expected, the largest effects of location were found in proximal joints, appearing as prominent red traces in shoulder and wrist angles, while the largest effects of object were found in the joints of the hand, appearing as prominent blue traces in the proximal and intermediate digit joint angles. For any given joint angle, although location and object effects differed in magnitude, both typically attained significance early in the course of the present movements and evolved concurrently, with small but significant interaction effects (cyan traces) present as well.

Fig. 5.

Effect sizes (η²) for 22 joint angles in 3 monkeys. The variance in each angle attributable to location (red), to object (blue), and to location × object interaction (cyan) is shown as a function of normalized time for each of the 3 monkeys. Solid curves indicate times at which the effect was significant; dotted curves are shown when an effect was not significant. Note that, because the duration of the reach-to-grasp epoch differed among the monkeys, here we have adjusted the abscissa of each display according to the median duration across all successful trials for all 24 object-location combinations for each monkey individually.

Perhaps more remarkable, however, were three ways in which the present data deviated from these expectations. First, proximal joints often showed substantial object effects, and, conversely, distal joints often showed substantial location effects. ShoulderIER was dominated by location effects with little or no object effect, consistent with the role of internal/external rotation at the shoulder in positioning the hand at different locations in the frontal plane of the objects. The other shoulder angles, ShoulderAbAd and ShoulderFlex, both had clear object effects, however, in some cases beginning quite early in the reach-to-grasp. In contrast, the ElbowFlex angle showed surprisingly little location effect and instead had a large object effect in each of the three monkeys. The wrist angles, ArmPS, WristRU, and WristFlex, each had location and object effects of comparable magnitude. In all joint angles of the hand, object effects predominated. Nevertheless, small location effects were present in some, particularly in monkey L. So, although location effects were most prominent proximally and object effects were most prominent distally, many proximal joints showed object effects, and many distal joints showed location effects. Overall, object effects in proximal joints were somewhat more marked than location effects in distal joints.

Second, although location and object effects both became significant very close to movement onset and evolved concurrently, location effects generally became large early in the course of these movements, while object effects generally became large later, typically reaching their maxima for a given joint only in the second half of the movements. In the shoulder joints, location effects typically continued to increase throughout the reach-to-grasp; but in the more distal joints of the wrist and digits, location effects typically rose initially, peaked, and then declined as the object effects increased. The early location effects in the joint angles of the wrist and digits, which were particularly prominent in monkey L, indicate that the early orientation and opening of the hand as it released and moved away from the center object varied depending on the location to which the hand was being transported. Only later, while still opening, did the shape of the hand come to vary primarily depending on the object about to be grasped. Rather than location effects evolving in parallel with object effects, location effects thus rose earlier in the course of the present movements, and object effects later.

Third, interaction effects, while often significant, typically were small. For no joint angle did the interaction effect account for more variance than either the location effect or the object effect. The comparatively small interaction effects may have reflected the spatial (proximal vs. distal) and temporal (early vs. late) separation of the larger location and object main effects.

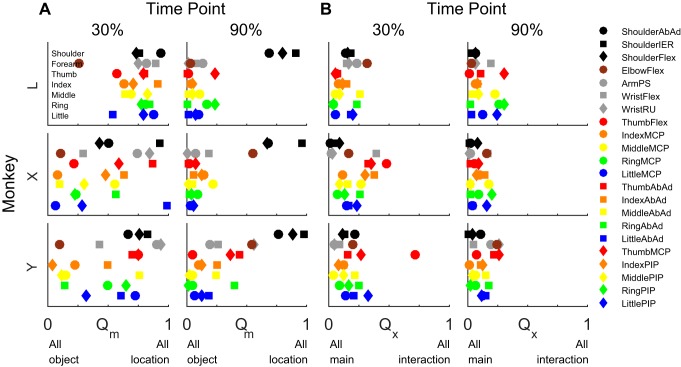

We compared the relative sizes of location, object, and interaction effects at two normalized time points, one 30% and the second 90% of the normalized time from movement onset to peripheral object contact, selected to sample the early and late phases of movement, respectively. To compare the relative sizes of location and object main effects, we calculated Qm, the variance attributable to location over the sum of the variance attributable to location and to object (Eq. 2), for each joint angle. Figure 6A shows that, at the 30% time point, different joint angles had a wide variety of Qm ratios, but by the 90% time point only a few joint angles, particularly all three shoulder angles in each of the three monkeys, had Qm ratios > 0.5, indicating larger location than object effects. Other joint angles with Qm ratios > 0.5 included ElbowFlex in monkeys X and Y (Qm = 0.55 and 0.54, respectively) and WristRU in monkey Y (0.56). At the 90% time point, all other joints had Qm ratios < 0.5, indicating larger object than location effects. These comparisons confirm that, while location effects were substantial in many joints in the early phase of movement, in the late phase, location effects were prominent at the shoulder, but object effects predominated more distally.

Fig. 6.

The relative ratios of object, location, and interaction effects at the 30% and 90% normalized time points. A: the ratio of location and object η² values (Qm, Eq. 2) was calculated for each joint angle in each of the 3 monkeys. Early in the movement, both object and location effects were substantial in many joint angles from proximal to distal. Later in the movement, all of the joint angles distal to the elbow were primarily object related, while the shoulder joints were location related. B: the ratio of interaction to object and location η² values (Qx, Eq. 3) was calculated. Most joint angles showed relatively small interaction effects at both the 30% and 90% points and thus depended on the main effects of object and location relatively independently.

We also compared the size of interaction effects to the total explained variance by calculating Qx, the variance attributable to location × object interactions divided by the summed variance attributable to location, to object, and to their interaction (Eq. 3). This ratio will be closer to 1 the more location and object interacted and closer to 0 the more location and object were independent of one another. Figure 6B shows that Qx ratios generally were < 0.4 for the large majority of joint angles at both the 30% and the 90% time points. At the 30% time point, monkey X showed Qx ratios > 0.4 in only two joint angles, WristFlex and ThumbFlex, indicating substantial location × object interactions early in the movement. In monkey Y only ThumbFlex had a large Qx. In monkey L no joint angles had a large Qx at the 30% time point. By the 90% time point, all Qx ratios were < 0.4. These Qx ratios thus confirm that location and object were largely, although not entirely, independent of one another during the present reach-to-grasp movements.
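The two ratios follow directly from their verbal definitions in the text: Qm is the location effect over the sum of the location and object effects, and Qx is the interaction effect over the sum of all three. A minimal sketch is given below; the function names and the input values are hypothetical and are not data from the study.

```python
def q_m(eta_location, eta_object):
    """Location effect relative to the two main effects (cf. Eq. 2).

    Values above 0.5 indicate a larger location than object effect.
    """
    denom = eta_location + eta_object
    if denom == 0:
        raise ValueError("both main effects are zero")
    return eta_location / denom


def q_x(eta_location, eta_object, eta_interaction):
    """Interaction effect relative to all explained variance (cf. Eq. 3).

    Values near 0 indicate that location and object acted independently.
    """
    denom = eta_location + eta_object + eta_interaction
    if denom == 0:
        raise ValueError("no explained variance")
    return eta_interaction / denom


# Hypothetical effect sizes for one joint angle at one time point.
example_qm = q_m(0.25, 0.75)          # object effect dominates
example_qx = q_x(0.25, 0.25, 0.5)     # half of explained variance is interaction
```

Both ratios are bounded between 0 and 1, which makes them comparable across joint angles whose total explained variance differs.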

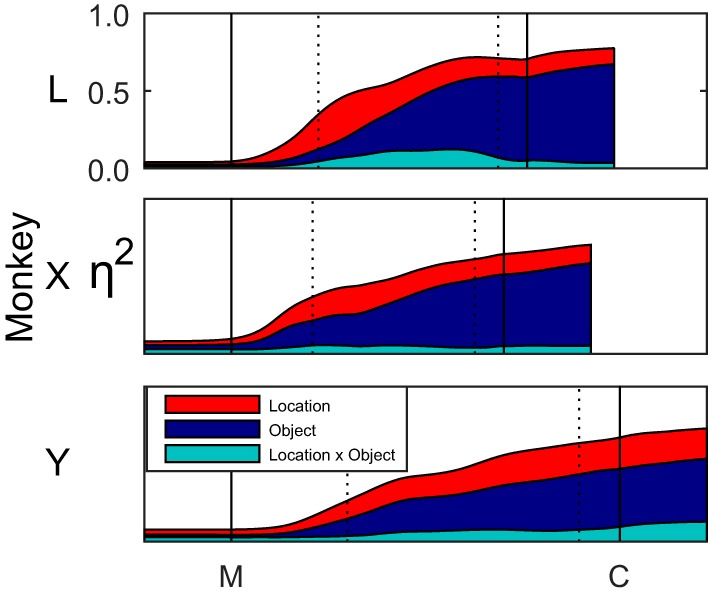

To provide an overview of how the fractions of joint angle variance attributable to these effects progressed over time, we averaged ηᵢ² values across all 22 joint angles for location, for object, and for location × object interactions separately at each normalized time point. These averages are shown as a stacked display for each monkey in Fig. 7. Main effects of location and of object began to appear at approximately the same time on average. At the 30% time point, location effects were as large as, or somewhat larger than, object effects (location/object/interaction: monkey L: 0.22/0.08/0.05; monkey X: 0.15/0.16/0.05; monkey Y: 0.12/0.11/0.04). Thereafter, object effects grew more than location effects, which actually declined somewhat in monkeys L and X, although remaining relatively constant in monkey Y. By the 90% time point, still prior to contact with the object, object effects were substantially larger on average than location effects in all three monkeys (location/object/interaction: monkey L: 0.12/0.52/0.07; monkey X: 0.13/0.44/0.04; monkey Y: 0.20/0.36/0.08). The ratio of location, object, and interaction effects then remained relatively constant after peripheral object contact as the monkey grasped and manipulated the object. Interaction effects, although significant, accounted for relatively little of the variance in joint angles in any of the three monkeys.
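The averaging and stacking used for this overview can be sketched as follows for a single normalized time point. The stacking order (location, then object, then interaction) and the function name are assumptions made for illustration, and the input values are hypothetical.

```python
def stacked_mean_effects(eta_by_joint):
    """Average effect sizes across joints and compute stack boundaries.

    eta_by_joint is a list with one (location, object, interaction) tuple of
    effect sizes per joint angle, all taken at the same normalized time point.
    Returns the three across-joint means and their running sums, which are
    the upper edges of the layers in a stacked display.
    """
    n_joints = len(eta_by_joint)
    if n_joints == 0:
        raise ValueError("no joint angles supplied")

    means = [sum(joint[k] for joint in eta_by_joint) / n_joints
             for k in range(3)]

    boundaries = []
    running = 0.0
    for m in means:
        running += m
        boundaries.append(running)
    return means, boundaries


# Two hypothetical joints: one location dominated, one interaction dominated.
means, boundaries = stacked_mean_effects([(0.5, 0.25, 0.0), (0.0, 0.25, 0.5)])
```

The last boundary equals the mean total explained variance, so the height of the full stack shows how much of the joint-angle variance the three effects account for together.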

Fig. 7.

Effects of object and location combined across joints. The η² values for location, object, and interaction effects each were averaged across all 22 joint angles, and these averages were stacked to represent the cumulative explained variance as a function of time. Black vertical lines indicate the alignment times of movement onset (M) and peripheral object contact (C); dotted vertical lines indicate the 30% and 90% normalized time points. After the onset of movement, location effects (red) increased early, while object effects (blue) increased later. Interaction effects (cyan) remained relatively small throughout.

LDA of Joint Angles as a Function of Time

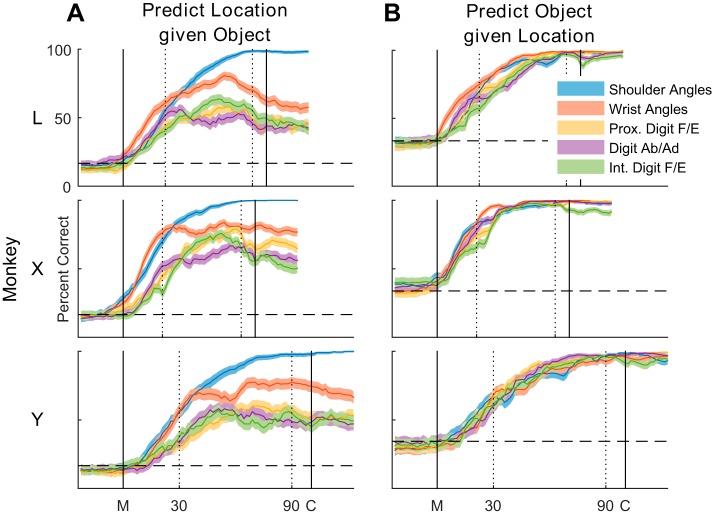

The fact that most joint angles showed significant variation with both location and object suggested that proximal joints had information about object, and conversely distal joints had information about location. We, therefore, used LDA to assess the discriminable information present in different groups of joint angles for classification of location and object as a function of normalized time during the reach-to-grasp epoch. Separate LDAs were performed to classify location as a function of time given the object (Fig. 8A) and to classify object as a function of time given the location (Fig. 8B).
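The classification analysis can be illustrated with a small, self-contained sketch of linear discriminant analysis evaluated by k-fold cross-validation. The code below is not the implementation used in the study. The ridge term added to the pooled covariance, the fold assignment, and all function names are assumptions, and the data are synthetic.

```python
import math
import random


def solve(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        s = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - s) / m[r][r]
    return x


def fit_lda(xs, ys, ridge=1e-6):
    """Fit class means and a pooled covariance; return linear discriminants."""
    labels = sorted(set(ys))
    d = len(xs[0])
    means = {}
    for k in labels:
        rows = [x for x, y in zip(xs, ys) if y == k]
        means[k] = [sum(r[j] for r in rows) / len(rows) for j in range(d)]
    # Pooled within-class covariance; the ridge keeps it invertible (assumption).
    cov = [[ridge if i == j else 0.0 for j in range(d)] for i in range(d)]
    dof = max(len(xs) - len(labels), 1)
    for x, y in zip(xs, ys):
        dev = [x[j] - means[y][j] for j in range(d)]
        for i in range(d):
            for j in range(d):
                cov[i][j] += dev[i] * dev[j] / dof
    model = {}
    for k in labels:
        w = solve(cov, means[k])
        prior = sum(1 for y in ys if y == k) / len(ys)
        bias = -0.5 * sum(wi * mi for wi, mi in zip(w, means[k])) + math.log(prior)
        model[k] = (w, bias)
    return model


def predict(model, x):
    """Return the label with the largest discriminant score."""
    return max(model, key=lambda k: sum(wi * xi for wi, xi in zip(model[k][0], x)) + model[k][1])


def cross_validate(xs, ys, n_folds=10, seed=0):
    """Fraction of held-out samples classified correctly across folds."""
    order = list(range(len(xs)))
    random.Random(seed).shuffle(order)
    correct = 0
    for f in range(n_folds):
        held_out = set(order[f::n_folds])
        train = [i for i in order if i not in held_out]
        model = fit_lda([xs[i] for i in train], [ys[i] for i in train])
        correct += sum(predict(model, xs[i]) == ys[i] for i in held_out)
    return correct / len(xs)


# Synthetic "joint angles" for two well-separated classes.
xs, ys = [], []
for i in range(20):
    jitter = [(i % 5) * 0.1, (i % 3) * 0.1]
    xs.append([0.0 + jitter[0], 0.0 + jitter[1]])
    ys.append("A")
    xs.append([10.0 + jitter[0], 10.0 + jitter[1]])
    ys.append("B")
accuracy = cross_validate(xs, ys, n_folds=10, seed=0)
```

In an analysis like the one described here, the feature vectors would be the angles of one joint-angle group at one normalized time point, the labels would be the locations (for a fixed object) or the objects (for a fixed location), and the procedure would be repeated at each time point.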

Fig. 8.

Linear discriminant analysis. The ability to predict the target location given the object (A) or the target object given its location (B) was assessed in each monkey as a function of normalized time using different groups of joint angles. Black vertical lines indicate the alignment times of movement onset (M) and peripheral object contact (C); dotted vertical lines indicate the 30% and 90% normalized time points. A: in predicting location, shoulder angles performed best overall, followed by wrist angles, and then by the three groups of digit angles. B: in predicting object, all five joint-angle groups followed a similar time course and attained near-perfect classification. For each joint-angle group, the solid curve represents percent correct across 10 folds, and the shaded region indicates the 95% confidence interval. Horizontal dashed lines represent chance. F/E, flexion/extension; Ab/Ad, abduction/adduction.

In predicting location, shoulder joint angles (blue) were easily dissociated for different locations of a given object, reaching nearly 100% correct before peripheral object contact. Wrist angles (red) were next most strongly predictive of location. In the first part of the movements, wrist angles predicted location as accurately as shoulder angles in all three monkeys. But wrist angles were not perfectly separated depending on location, and LDA performance with wrist angles was lower relative to shoulder angles by the temporal midpoint of the movements. Prediction of location with proximal digit flexion/extension angles (orange), digit abduction/adduction angles (violet), or intermediate digit flexion/extension angles (green) initially exceeded chance in parallel with prediction by shoulder and wrist angles. But shortly after movement onset (M), prediction of location by these digit joint-angle groups became less accurate than prediction by more proximal joint angles. Nevertheless, note that just prior to peripheral object contact (C), wrist joints and even digit joints still had some degree of discriminable information on location.

In contrast to prediction of location, each of the five joint-angle groups provided similar prediction of object as a function of time. In all three monkeys, all five joint-angle groups reached levels of object prediction close to 100% correct prior to peripheral object contact. Whereas the prediction of object by the joint angles of the hand was to be expected, the equivalent prediction of object by wrist angles and particularly shoulder angles is noteworthy and confirms that these proximal joint angles varied systematically in relation to the object.

Hand Configurations and Reach Trajectories

Although ANOVA showed little effect of location on digit joint angles after the early phase of movements (Fig. 5), LDA provided evidence of discriminable variation in digit joint angles depending on location, even immediately prior to peripheral object contact (Fig. 8A). To visualize this variation in greater detail, using data from monkey L we examined a palm-fixed view of the overall hand configuration at the 90% time point. Figure 9 shows stick figures indicating the relative positions of markers on the hand for each of the 24 combinations of location and object in a lateral view of the index finger (A) and a dorsal view of the entire hand (B). The end of each line segment indicates the position of a marker averaged across all successful trials for one location-object combination. The average marker positions for all 24 combinations have been translated and rotated to co-register the five markers on the back of the palm (gray). The four columns show the results sorted according to object, from the perpendicular cylinder (left) to the sphere (right).

Fig. 9.

Hand and digit markers at the 90% time point. The average positions of each of the 20 digit markers (colored) are plotted relative to the 5 markers of the hand (gray) after translating and rotating all markers to co-register the 5 hand markers. Line segments connect markers on the same rigid-body segment. The 24 location-object combinations are grouped by object from the perpendicular cylinder on the left to the sphere on the right. The index finger is shaded from light orange to black according to location. A: lateral view of the index finger and hand markers. B: dorsal view of the entire hand (thumb, red; index finger, orange; middle, yellow; ring, green; little, blue).

The top row (Fig. 9A) shows a lateral view of the markers on the back of the index finger shaded according to location, from 0° (light orange) to 157.5° (black). Modest variation in the degree of index finger flexion is evident depending on location for the perpendicular and coaxial cylinders, but relatively little if any variation in index finger flexion was present for the button or sphere. The bottom row (Fig. 9B) shows all markers viewed from the back of the hand, with different colors used for the markers on different digits. Again the index finger line segments have been shaded from light orange to black, but line segments for other digits have not been shaded similarly depending on target location. The multiple blue line segments representing the middle phalanx of the little finger, for example, thus indicate the degree of variation in the position of this digit depending on location. A modest degree of location-dependent variation in hand configuration again can be appreciated, although more variation in hand configuration depended on object.

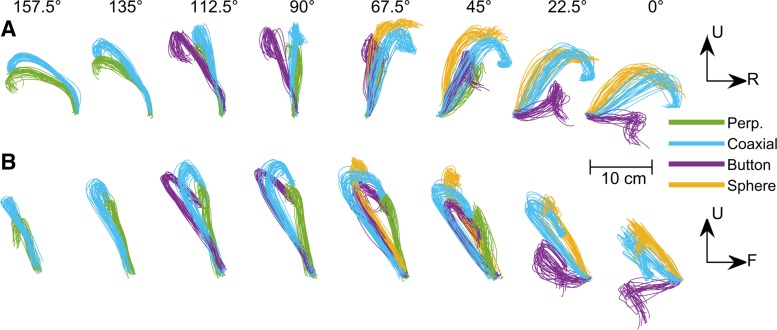

Conversely, to visualize the object-related variation of the arm in greater detail, we examined an earth-fixed view of the trajectory of the distal forearm point computed directly from the Cartesian coordinates of only the distal forearm markers. In Fig. 10, each frame shows the distal-forearm-point trajectories for all successful trials of movements to a given location. The top row (Fig. 10A) shows the trajectories projected onto a frontal plane; the bottom row (Fig. 10B) shows the same trajectories projected onto a sagittal plane. In each frame, the trajectories for reaching to grasp different objects are shown in different colors.

Fig. 10.

Trajectories of the distal forearm point. Three-dimensional trajectories in earth-fixed, Cartesian space (U, up; R, right; F, front) have been projected in a frontal plane as if viewed from behind the monkey (A), and in a sagittal plane as if viewed from the monkey's right side (B). Trajectories are shown from multiple trials for each location (columns, from 157.5° at left to 0° at right) and for each object (colors: perpendicular cylinder, green; coaxial cylinder, cyan; button, violet; sphere, orange). For any given target location, the trajectory of the distal forearm point, beginning early in the movement, depended in part on the object.

Examining these trajectories across the columns shows that they did vary depending largely on location. At any given location, however, the trajectory of the distal forearm point through space depended to some extent on the object to which the hand was being transported, beginning quite early in the reach. This motion of the distal forearm point is determined by rotation at the elbow and shoulder joints only. To isolate motion at the shoulder joint, we also examined similar plots (not illustrated) of the trajectory of the elbow point, which is determined solely by rotations at the shoulder. These plots likewise demonstrated variation depending primarily on location, but also depending to some extent on the object, beginning early in the reach. Consistent with the LDA results (Fig. 8B), these plots confirm that, in addition to the larger variation in distal forearm point trajectories depending on location, the smaller variation depending on object was observed consistently, with little overlap of the trajectories to different objects by the end of the movements.

DISCUSSION

Rather than tracking arm endpoint and grip aperture, we quantified the variation in 22 joint angles throughout the upper extremity during the reach-to-grasp epoch of movements in which monkeys reached to, grasped, and then manipulated different objects at multiple locations. Joint angles from the shoulder proximally to the fingers distally varied systematically depending on both the location to which the arm reached and the object the hand grasped. Early in the movements, joint angles on average depended on location as much as, if not more than, object. Later in the movements, joint angles on average depended primarily on the object about to be grasped and manipulated. Interaction effects were relatively small throughout the movements. While remaining relatively independent, location effects and object effects thus became prominent in two sequential phases of the present reach-to-grasp movements.

Temporal Profile of Reach-to-Grasp

Simple models of reach-to-grasp can predict many experimentally observed kinematic phenomena without explicitly considering the underlying neural control (Flash and Hogan 1985; Smeets and Brenner 1999, 2002; Yang and Feldman 2010). Had the arm moved along straight trajectories and had the digits all opened and closed in parallel, predictions from such models might indicate that all joint angles follow unimodal, bell-shaped velocity profiles toward the final posture for a given location/object combination, without need for sequential phases of control. But in the present reach-grasp-manipulate movements, the arm did not move in straight trajectories (Fig. 10), the digits did not open and close entirely in parallel, and only ShoulderIER showed a monophasic sigmoidal rotation consistent with a bell-shaped velocity profile (Fig. 2). [Indeed, that the motion of the distal forearm point, which is the combination of ShoulderIER, ShoulderFlex, ShoulderAbAd, and ElbowFlex rotation, showed a bell-shaped velocity profile (Fig. 2) suggests that such a velocity profile of the reaching arm is at some level a controlled variable.] These observations complicate modeling the present data with respect to a variety of potential controlled variables. We, therefore, cannot reject the possibility that the early prominence of location effects and later of object effects resulted simply from the geometry of upper extremity movement.

Nevertheless, we advance the alternative hypothesis that the patterns of variation at the individual joints used first to direct the extremity to a given general location and second to shape the entire extremity for the impending grasp reflect two sequential phases in the neural control of what appeared to be single, smooth movements. In fact, a pause occurred in the rotation of many joint angles in the midst of many of the present reach-to-grasp movements (Fig. 2), approximately at the time that location effects waned and object effects waxed. Although not emphasized, similar pauses in hand opening approximately at the time of peak transport velocity can be observed in some of the earliest video studies of human reach-to-grasp (Jeannerod 1984, Figs. 3 and 6; Jeannerod 1986, Fig. 2). Such pauses may reflect a shift from an initial phase of neural control that predominantly directs the extremity to the intended location to a subsequent phase of neural control that shapes the extremity for grasp and manipulation of the intended object. In addition to this shift between two phases, we observed that the hand continued to open in the second phase until shortly before object contact. In part, these differences between the present data and previous studies of reach-to-grasp kinematics may have resulted from the fact that the present monkeys, rather than simply grasping a constant object, grasped and immediately manipulated different objects differently. Further studies that dissociate the object grasped and the manipulation performed will be needed to determine the extent to which the manipulation influences the reach and the grasp.

Effects of Object on Proximal Joint Angles

Certainly rotations at the shoulder and elbow joints transport the hand to a particular location, rotations of the forearm and wrist orient the hand, and rotations at the joints of the digits shape the hand. Although in early studies these different aspects of reach-to-grasp movements were thought to be independent, many investigators since have noted that transport, orientation, and shaping of the hand are necessarily interrelated to some extent (see Introduction). Consistent with these observations, we found substantial object effects in proximal joint angles. At any given location, the hand shapes used to grasp the different objects (despite their circular symmetry) required that the distal forearm be positioned differently with respect to the objects. As is evident in Fig. 10, for example, by the time of peripheral object contact, the distal forearm point typically was positioned highest for the sphere (orange), intermediate for the coaxial cylinder (cyan), and lowest for the button (violet) at any of the locations sampled, reflecting the fact that this monkey placed its hand on the sphere from above, on the coaxial cylinder from the side, and positioned the hand below and in front of the button to press it with the index finger. The trajectories that transported the hand to these positions relative to the object separated quite early during the movements. Consequently, small object effects began to appear quite early in some proximal joint angles, including ShoulderFlex, ShoulderAbAd and ElbowFlex. Indeed, the ElbowFlex angle had large object effects, substantially larger than location effects in monkey L and similar in magnitude to location effects in monkeys X and Y, throughout the reach-to-grasp epoch. The trajectory of the proximal upper extremity thus varied so as to position the hand not only in the necessary location, but also in the necessary spatial relationship to the object.

In theory, the hand did not need to be positioned differently relative to different objects simply to grasp them. Although the trajectories illustrated in Fig. 10 typically placed the hand on the sphere from above but on the coaxial cylinder from the side, for example, the monkey could have grasped the sphere from the side or the coaxial cylinder from above. The monkeys' choices in this regard may have resulted from the additional requirement that each object be manipulated differently. Indeed, in a previous study of monkeys performing different actions on objects at the same location, the posture of the arm was found to vary depending on the action being performed (Tillery et al. 1995). The manipulation about to be performed thus also may influence neural control of the arm's trajectory during movements that involve reaching, grasping, and manipulation. The influence of the different impending manipulations, associated in the present study with different objects, therefore, may have enhanced the discrimination of objects using only angles at the shoulder joint (Fig. 8B). Understanding this influence will require additional studies that triply dissociate the location, the object, and the manipulation.

Effects of Location on Distal Joint Angles

We also found variation in distal joint angles, depending on the location to which the monkey reached. Although much of the location-related variation in distal joint angles occurred relatively early in the movements (Fig. 5), some location-related variation still was present at the 90% time point (Fig. 9). The early location effects on distal angles reflect variation in the opening of the hand as the central object was released. Effects at the 90% time point, in contrast, reflect location-dependent differences as the hand approached its final grasp shape.

The late location-dependent differences in hand shape (Fig. 9) overall seemed relatively minor compared with the object-dependent differences in distal forearm position (Fig. 10). This impression was confirmed by the lower prediction accuracies of location from digit joint angles compared with the high prediction accuracy of object from shoulder joint angles (Fig. 8). This suggests that, as a given object was placed at different locations, the monkeys tended to vary distal forearm position and orientation as needed to apply a relatively consistent grasp shape. As noted by other authors, choosing to use a consistent grasp shape imposes a constraint that reduces the problem of how to co-vary redundant degrees of freedom throughout the upper extremity (Desmurget et al. 1996; Tillery et al. 1995). If the distal joints are constrained to a certain configuration, then the wrist must orient the hand in a certain attitude, and the proximal joints must place the wrist at an appropriate location.

The use of a consistent hand shape at the expense of variation in reach trajectory and hand orientation raises the possibility that such a strategy provides some efficiency for neural control. The shoulder, elbow, and wrist have only 7 rotational degrees of freedom, whereas the digits of the hand have 22. Although the highly articulated digits provide great flexibility for producing many different hand shapes, varying all 22 degrees of freedom gradually for the same object at different locations might be computationally challenging. Instead of reconfiguring the shape of the hand, adjusting the location and orientation of the arm at the shoulder, elbow, and wrist may simplify neural control of the entire reach-to-grasp movement. We speculate that, insofar as possible given biomechanical constraints, the nervous system might prioritize the hand shape to be used, and the reach trajectory then might be adjusted accordingly.

At the same time, we note that, although the present movements covered a large fraction of the natural upper extremity workspace, a considerable fraction of the potential workspace was not sampled. Similar movements performed in that part of the workspace might have required substantial changes in hand shape for biomechanical reasons. In the present study, for example, the coaxial cylinder was consistently grasped with the palm to the side and some or all of the four fingers on top of the object. Had this object been located substantially below the level of the shoulder, the biomechanical limits of wrist angles likely would have precluded use of this hand shape, and the coaxial cylinder would have been grasped instead with the palm still to the side but now with the fingers on the bottom of the object. Further investigation will be needed to understand the points at which such transitions in the hand shape chosen to grasp the same object occur.

Interaction Effects: Independence vs. Interdependence of Location and Object

A large change in the grasp shape used at certain locations (like that described just above for the coaxial cylinder) would have resulted in a substantial location × object interaction effect. But interaction effects in the present data, although significant for many joint angles over much of the reach-to-grasp epoch, on average were comparatively small (e.g., Figs. 5 and 7). Interaction effects were smaller than either of the main effects much of the time in many joint angles. In some instances, an interaction effect became larger than one main effect while remaining smaller than the other main effect. Rarely was an interaction effect larger than the main effects of both location and object. Quantitatively small interaction effects suggest that variation in joint angles depending on location and depending on object proceeded relatively independently. The early predominance of location effects vs. the later appearance of object effects, as well as the hypothesized strategy of using a consistent hand shape across locations, may have minimized location × object interactions during the present reach-to-grasp movements.
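To make the comparison between main effects and interaction effects concrete, the following is a minimal sketch of how the variance of a single joint angle at a single time point could be partitioned into location, object, interaction, and residual fractions with a balanced two-way sum-of-squares decomposition. It is an illustration under stated assumptions, not necessarily the analysis used in the present study: the function name `two_way_effect_fractions`, the array layout, and the synthetic numbers are all hypothetical.

```python
import numpy as np


def two_way_effect_fractions(angles):
    """Partition variance of one joint angle into location, object,
    interaction, and residual fractions (balanced two-way design).

    angles: array of shape (n_locations, n_objects, n_trials).
    Hypothetical helper for illustration only.
    """
    angles = np.asarray(angles, dtype=float)
    n_loc, n_obj, n_trials = angles.shape

    grand = angles.mean()
    loc_means = angles.mean(axis=(1, 2))   # one mean per location
    obj_means = angles.mean(axis=(0, 2))   # one mean per object
    cell_means = angles.mean(axis=2)       # one mean per location/object pair

    ss_loc = n_obj * n_trials * np.sum((loc_means - grand) ** 2)
    ss_obj = n_loc * n_trials * np.sum((obj_means - grand) ** 2)
    # Interaction: what is left in the cell means after removing both main effects.
    resid_cells = cell_means - loc_means[:, None] - obj_means[None, :] + grand
    ss_int = n_trials * np.sum(resid_cells ** 2)
    ss_err = np.sum((angles - cell_means[:, :, None]) ** 2)
    ss_total = np.sum((angles - grand) ** 2)

    return {
        "location": ss_loc / ss_total,
        "object": ss_obj / ss_total,
        "interaction": ss_int / ss_total,
        "residual": ss_err / ss_total,
    }


# Synthetic example (made-up values): 3 locations, 2 objects, 10 trials each,
# with additive location and object effects plus trial-to-trial noise.
rng = np.random.default_rng(0)
loc_effect = np.array([0.0, 10.0, 20.0])[:, None, None]
obj_effect = np.array([0.0, 5.0])[None, :, None]
demo = loc_effect + obj_effect + rng.normal(0.0, 1.0, size=(3, 2, 10))
print(two_way_effect_fractions(demo))
```

In this sketch, a grasp shape that changed only at certain locations would appear as a large `interaction` fraction, whereas purely additive location and object effects leave that fraction near zero. Repeating the computation at each time point would give a time course of the four fractions.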

Implications for Neural Control

Our detailed examination of joint angle kinematics from the shoulder to the fingers as a function of time during reach-to-grasp movements is consistent with the hypothesis that neural control of reaching and that of grasping proceed to some extent sequentially rather than entirely simultaneously. Moreover, our findings that joint angle kinematics both proximally and distally vary in relation to both location and object are consistent with observations that neural activity in both PMd and PMv may be related both to reaching and to grasping (Bansal et al. 2012; Raos et al. 2004; Stark et al. 2007). These findings also raise the possibility that control of reaching and grasping each may involve both the horseshoe of proximal representation and the central core of digit representation in M1 (Kwan et al. 1978; Park et al. 2001). We speculate that the neural activity controlling reach location and that controlling hand shape for grasping the object in large part may not be as simultaneously coordinated in M1 as previously thought. Rather, neural activity potentially could pass in part sequentially through the same neural populations in M1, while also making subtle changes that combine location and object information when necessary. Additional studies will be needed to understand the spatiotemporal integration of neural information about location and object in M1 that produces the descending control needed for the two sequential phases of reach-to-grasp.

GRANTS

This work was supported by National Institute of Neurological Disorders and Stroke Grant R01 NS-079664.

DISCLOSURES

No conflicts of interest, financial or otherwise, are declared by the author(s).

AUTHOR CONTRIBUTIONS

Author contributions: A.G.R. and M.H.S. conception and design of research; A.G.R. and M.H.S. performed experiments; A.G.R. analyzed data; A.G.R. and M.H.S. interpreted results of experiments; A.G.R. prepared figures; A.G.R. drafted manuscript; A.G.R. and M.H.S. edited and revised manuscript; A.G.R. and M.H.S. approved final version of manuscript.

ACKNOWLEDGMENTS

The authors thank Marsha Hayles for editorial comments and Andre T. Roussin and Jay P. Uppalapati for assistance with data collection.

REFERENCES

- Aggarwal V, Mollazadeh M, Davidson AG, Schieber MH, Thakor NV. State-based decoding of hand and finger kinematics using neuronal ensemble and LFP activity during dexterous reach-to-grasp movements. J Neurophysiol 109: 3067–3081, 2013.

- Bansal AK, Truccolo W, Vargas-Irwin CE, Donoghue JP. Decoding 3D reach and grasp from hybrid signals in motor and premotor cortices: spikes, multiunit activity, and local field potentials. J Neurophysiol 107: 1337–1355, 2012.

- Batista AP, Santhanam G, Yu BM, Ryu SI, Afshar A, Shenoy KV. Reference frames for reach planning in macaque dorsal premotor cortex. J Neurophysiol 98: 966–983, 2007.

- Baumann MA, Fluet MC, Scherberger H. Context-specific grasp movement representation in the macaque anterior intraparietal area. J Neurosci 29: 6436–6448, 2009.

- Buneo CA, Andersen RA. The posterior parietal cortex: sensorimotor interface for the planning and online control of visually guided movements. Neuropsychologia 44: 2594–2606, 2006.

- Cavina-Pratesi C, Monaco S, Fattori P, Galletti C, McAdam TD, Quinlan DJ, Goodale MA, Culham JC. Functional magnetic resonance imaging reveals the neural substrates of arm transport and grip formation in reach-to-grasp actions in humans. J Neurosci 30: 10306–10323, 2010.

- Cisek P, Kalaska JF. Neural mechanisms for interacting with a world full of action choices. Annu Rev Neurosci 33: 269–298, 2010.

- Clopper CJ, Pearson ES. The use of confidence or fiducial limits illustrated in the case of the binomial. Biometrika 26: 404–413, 1934.

- Davare M, Kraskov A, Rothwell JC, Lemon RN. Interactions between areas of the cortical grasping network. Curr Opin Neurobiol 21: 565–570, 2011.

- Davoodi R, Loeb GE. Real-time animation software for customized training to use motor prosthetic systems. IEEE Trans Neural Syst Rehabil Eng 20: 134–142, 2012.

- Desmurget M, Prablanc C, Arzi M, Rossetti Y, Paulignan Y, Urquizar C. Integrated control of hand transport and orientation during prehension movements. Exp Brain Res 110: 265–278, 1996.

- Flash T, Hogan N. The coordination of arm movements: an experimentally confirmed mathematical model. J Neurosci 5: 1688–1703, 1985.

- Fluet MC, Baumann MA, Scherberger H. Context-specific grasp movement representation in macaque ventral premotor cortex. J Neurosci 30: 15175–15184, 2010.

- Fu QG, Flament D, Coltz JD, Ebner TJ. Temporal encoding of movement kinematics in the discharge of primate primary motor and premotor neurons. J Neurophysiol 73: 836–854, 1995.

- Gentilucci M, Castiello U, Corradini ML, Scarpa M, Umilta C, Rizzolatti G. Influence of different types of grasping on the transport component of prehension movements. Neuropsychologia 29: 361–378, 1991.

- Gentilucci M, Daprati E, Gangitano M, Saetti MC, Toni I. On orienting the hand to reach and grasp an object. Neuroreport 7: 589–592, 1996.

- Grafton ST. The cognitive neuroscience of prehension: recent developments. Exp Brain Res 204: 475–491, 2010.

- Jeannerod M. The formation of finger grip during prehension. A cortically mediated visuomotor pattern. Behav Brain Res 19: 99–116, 1986.

- Jeannerod M. The timing of natural prehension movements. J Mot Behav 16: 235–254, 1984.

- Kwan HC, MacKay WA, Murphy JT, Wong YC. Spatial organization of precentral cortex in awake primates. II. Motor outputs. J Neurophysiol 41: 1120–1131, 1978.

- Lacquaniti F, Soechting JF. Coordination of arm and wrist motion during a reaching task. J Neurosci 2: 399–408, 1982.

- Mason CR, Gomez JE, Ebner TJ. Hand synergies during reach-to-grasp. J Neurophysiol 86: 2896–2910, 2001.

- Mason CR, Theverapperuma LS, Hendrix CM, Ebner TJ. Monkey hand postural synergies during reach-to-grasp in the absence of vision of the hand and object. J Neurophysiol 91: 2826–2837, 2004.

- Mollazadeh M, Aggarwal V, Davidson AG, Law AJ, Thakor NV, Schieber MH. Spatiotemporal variation of multiple neurophysiological signals in the primary motor cortex during dexterous reach-to-grasp movements. J Neurosci 31: 15531–15543, 2011.

- Mollazadeh M, Aggarwal V, Thakor NV, Schieber MH. Principal components of hand kinematics and neurophysiological signals in motor cortex during reach to grasp movements. J Neurophysiol 112: 1857–1870, 2014.

- Murata A, Gallese V, Luppino G, Kaseda M, Sakata H. Selectivity for the shape, size, and orientation of objects for grasping in neurons of monkey parietal area AIP. J Neurophysiol 83: 2580–2601, 2000.

- Newcombe RG. Two-sided confidence intervals for the single proportion: Comparison of seven methods. Stat Med 17: 857–872, 1998.

- Park MC, Belhaj-Saif A, Gordon M, Cheney PD. Consistent features in the forelimb representation of primary motor cortex in rhesus macaques. J Neurosci 21: 2784–2792, 2001.

- Paulignan Y, Frak VG, Toni I, Jeannerod M. Influence of object position and size on human prehension movements. Exp Brain Res 114: 226–234, 1997.

- Paulignan Y, Jeannerod M, MacKenzie C, Marteniuk R. Selective perturbation of visual input during prehension movements. 2. The effects of changing object size. Exp Brain Res 87: 407–420, 1991a.

- Paulignan Y, MacKenzie C, Marteniuk R, Jeannerod M. The coupling of arm and finger movements during prehension. Exp Brain Res 79: 431–435, 1990.

- Paulignan Y, MacKenzie C, Marteniuk R, Jeannerod M. Selective perturbation of visual input during prehension movements. 1. The effects of changing object position. Exp Brain Res 83: 502–512, 1991b.

- Pesyna C, Pundi K, Flanders M. Coordination of hand shape. J Neurosci 31: 3757–3765, 2011.

- Raos V, Umilta MA, Gallese V, Fogassi L. Functional properties of grasping-related neurons in the dorsal premotor area F2 of the macaque monkey. J Neurophysiol 92: 1990–2002, 2004.

- Raos V, Umilta MA, Murata A, Fogassi L, Gallese V. Functional properties of grasping-related neurons in the ventral premotor area F5 of the macaque monkey. J Neurophysiol 95: 709–729, 2006.

- Rizzolatti G, Luppino G. The cortical motor system. Neuron 31: 889–901, 2001.

- Rizzolatti G, Luppino G, Matelli M. The organization of the cortical motor system: new concepts. Electroencephalogr Clin Neurophysiol 106: 283–296, 1998.

- Roy AC, Paulignan Y, Meunier M, Boussaoud D. Prehension movements in the macaque monkey: effects of object size and location. J Neurophysiol 88: 1491–1499, 2002.

- Santello M, Flanders M, Soechting JF. Patterns of hand motion during grasping and the influence of sensory guidance. J Neurosci 22: 1426–1435, 2002.

- Santello M, Flanders M, Soechting JF. Postural hand synergies for tool use. J Neurosci 18: 10105–10115, 1998.

- Santello M, Soechting JF. Gradual molding of the hand to object contours. J Neurophysiol 79: 1307–1320, 1998.

- Smeets JB, Brenner E. A new view on grasping. Motor Control 3: 237–271, 1999.

- Smeets JBJ, Brenner E. Does a complex model help to understand grasping? Exp Brain Res 144: 132–135, 2002.

- Soechting JF. Effect of target size on spatial and temporal characteristics of a pointing movement in man. Exp Brain Res 54: 121–132, 1984.

- Soechting JF, Flanders M. Parallel, interdependent channels for location and orientation in sensorimotor transformations for reaching and grasping. J Neurophysiol 70: 1137–1150, 1993.

- Stark E, Asher I, Abeles M. Encoding of reach and grasp by single neurons in premotor cortex is independent of recording site. J Neurophysiol 97: 3351–3364, 2007.

- Stelmach GE, Castiello U, Jeannerod M. Orienting the finger opposition space during prehension movements. J Mot Behav 26: 178–186, 1994.

- Theverapperuma LS, Hendrix CM, Mason CR, Ebner TJ. Finger movements during reach-to-grasp in the monkey: amplitude scaling of a temporal synergy. Exp Brain Res 169: 433–448, 2006.

- Tillery SI, Ebner TJ, Soechting JF. Task dependence of primate arm postures. Exp Brain Res 104: 1–11, 1995.

- Umilta MA, Brochier T, Spinks RL, Lemon RN. Simultaneous recording of macaque premotor and primary motor cortex neuronal populations reveals different functional contributions to visuomotor grasp. J Neurophysiol 98: 488–501, 2007.