Abstract

Background

This study evaluated subjective trainee responses to simulation training organized by the Malta Foundation Program, in particular whether the training changed their clinical practice.

Method

Feedback was obtained from 120 participants (55% male) using a standardized questionnaire. Responses were rated on a 0–10 Likert scale.

Results

Participants scored the simulation sessions as “useful” at 7.7 (95% confidence interval [CI] 7.4–8.0), rated “the overall experience” at 7.5 (95% CI 7.2–7.8), and rated the change in their “daily practice” at 5.83 (95% CI 5.4–6.3). The scores for the tutor “creating a satisfactory learning environment” and for “quality of simulator equipment” were 7.8 (95% CI 7.6–8.1) and 7.7 (95% CI 7.4–8.0), respectively. Trainees rated “how close was the simulation to a real-life scenario” at 6.24 (95% CI 5.9–6.6). When asked whether the presence of colleagues hindered or helped, the largest group, 50 (41.7%), were neutral, 36 (30%) said it hindered, and only 34 (28.3%) felt it helped. In contrast, 94 (78.33%) stated it was useful to observe colleagues, while only 5 (4.2%) stated it was not. Likelihood of future participation was 7.4 (95% CI 7.0–7.8). Trainees recommended a median of 3 (interquartile range 2–5) simulations per year.

Conclusion

Trainees rated the sessions as useful and asked for more sessions, possibly starting at undergraduate level. Ratings for equipment and tutors were positive; however, some felt that the effect on daily practice was limited. Most found it useful to observe others, whereas more trainees felt hindered than helped by being observed. The value of increasing sessions to 3–4 per year, timing them before clinical attachments, and providing audiovisual prebriefing for candidates naïve to simulation needs to be evaluated in future studies.

Keywords: simulation, foundation, training, acute, medical, mannequin

Background

Simulation-based medical training has become a recommended and useful training tool for both undergraduate and postgraduate medical trainees. Advances in simulator technology, together with rapidly rising public expectations of the quality of medical care, have led to increasing efforts to replace or supplement traditional learning methods with simulator-based medical education that replicates realistic, life-like clinical encounters.1

The Malta Foundation Program for newly qualified doctors is an affiliated school of the UK Foundation Program that includes a 2-year residency training program after graduation from medical school leading to a medical license to practice in Malta or the UK. It includes structured teaching, hands-on training, and continuous assessments. It has been making use of simulation-based training since 2009.2

Constructivism in education holds that the learner engages in an experience with a tutor and then “constructs” their own interpretation of that experience. Simulation training provides an opportunity to observe the potential benefit of this method of teaching. This audit aimed to obtain subjective responses from learners to determine whether simulation training helped transform their medical knowledge into changed, and hopefully improved, medical practice.3

Methodology

The Malta Foundation Program has organized regular 3-hour simulation training sessions for every trainee in each foundation year, focusing on care of the acutely ill patient, in which trainees face various acute hospital-based case scenarios. Table 1 shows some commonly used clinical scenarios, and Table 2 shows the main educational objectives during a typical scenario, for example, “acute myocardial infarction”.

Table 1.

Commonly used clinical scenarios

| Clinical scenario |
|---|
| Acute myocardial infarction |
| Pulmonary edema |
| Acute arrhythmia |
| Acute pulmonary embolism |
| Acute exacerbation of COPD |
| Hypoglycemia |
| Upper gastrointestinal bleed presenting with melena |
| Upper gastrointestinal bleed presenting with hematemesis |
| Acute confusional state |
| Epileptic seizures and status epilepticus |

Abbreviation: COPD, chronic obstructive pulmonary disease.

Table 2.

Educational objectives during a typical scenario “acute myocardial infarction (AMI)”

| Educational objectives |
|---|
| Objectives and grading based on the ability to: |
| • Take a targeted history, perform an examination, and request appropriate investigations. |
| • Interpret clinical findings and results. |
| • Manage and treat correctly, and refer when appropriate. |
| • Prioritize correctly and efficiently. |
| • Demonstrate appropriate communication skills with the patient and colleagues. |
| • Demonstrate good teamwork skills. |
| Grading system: |
| • Pass/fail |
| Introduction of clinical scenario |
| • The tutor sets the clinical scenario for all trainees, in particular the two trainees being assessed. |
| • For example, “70-year-old male known case of hypertension presents with central compressing chest pain to the casualty department. You are the doctor seeing the patient. Please take a focused history, examination, investigations and manage accordingly. If you have any difficulty you can call your senior (trainees are given a mobile pager and number)”. |
| Focused history taking |
| • The trainee takes a targeted history from the simulator mannequin. |
| • The tutor, dubbing the voice of the simulator mannequin, responds. |
| • For example, site, onset, and character of pain and any associated symptoms; past medical and surgical history; drug history; social and family history. |
| Physical examination |
| • The trainee examines the simulator mannequin. |
| • Tutors alter heart sounds, breath sounds, and added sounds according to the scenario presented. |
| • For example, a clear chest and normal heart sounds in a patient having an AMI. |
| Targeted investigations |
| • Trainees can request parameters such as temperature, pulse, BP, and oxygen saturation, which the tutors can alter during the clinical scenario and which are displayed on a monitor next to the simulator mannequin. |
| • Trainees can request any relevant urgent and routine investigations, such as a 12-lead ECG. For example, the 12-lead ECG would show ST elevation in the anterior leads. |
| • Trainees can practice practical skills: venous cannulation, and arterial and venous blood sampling from a separate venipuncture training arm placed close to the simulator mannequin. |
| • For example, serum troponin and routine bloods are requested. |
| Management |
| • Trainees can request oxygen, fluids, or drugs as necessary. |
| • For example, oxygen, dual antiplatelet therapy, anticoagulation, and pain relief. |
| • Trainees can request senior help when they deem it necessary; they are given a mobile pager and can call the medical senior. For example, the trainee contacts the on-call cardiology senior for further medical advice and to consider urgent percutaneous coronary intervention in a patient having an AMI. |
| • One of the tutors is assigned the role of the medical senior and responds on the mobile pager. Any conversation between trainees, nurse, and seniors can be heard from the observation room. |
| Debriefing |
| • At the end of the scenario, all trainees and the tutor gather for a debriefing session in which trainees are given feedback on the scenario, have time for reflection, and can discuss any problems they encountered. |

Abbreviations: AMI, acute myocardial infarction; BP, blood pressure; ECG, electrocardiogram; ST, ST segment of the ECG.

The tutors present prepared clinical scenarios at each session. The main educational objectives of the sessions included proper assessment of the situation, taking a short focused history, examination, appropriate investigations, and management. Candidates were also assessed on their ability to correctly prioritize, refer when appropriate, and communicate well with the patient and their colleagues.

Setting

Simulation sessions were held in the simulation training room at the postgraduate training center at Mater Dei Hospital.

The sessions were hands-on and used a mannequin, the Gaumard HAL® S3201 (Gaumard Scientific, Miami, FL, USA), an advanced tetherless patient simulator with many of the physiological and anatomical responses of a human, including variable vital signs, pupil reaction, cyanosis, fits (manifested as a vibratory movement of the mannequin), and heart, breath, and bowel sounds, which could be altered by the tutors according to the scenario presented. A trained nurse was available at each scenario to assist the participants by delivering the items needed, such as oxygen masks and drip sets, and by administering any fluids or drugs prescribed by the trainee. Participants were also able to practice other skills, such as intravenous cannula insertion and blood sampling, on a separate model.

A maximum of eight participants attended each session, and they were assessed in pairs. The remaining six participants viewed the scenario from a separate observation room fully equipped with audiovisual aids, giving them access to the scenario room and all vital signs. Each session was run by three tutors, namely a registered specialist consultant in internal medicine and two senior postgraduate trainees in internal medicine, together with a registered nurse.

The three tutors were seated in the control room behind a glass screen within the simulation room and were in full control of the vital and clinical signs as well as dubbing the voice of the simulator mannequin. The nurse assisted the participants realistically during the actual scenario. Trainees were allowed telephone access to their seniors or colleagues whose role was played by one of the three tutors.

Each case scenario lasted 15–20 minutes, and participants were asked to manage the patient’s presenting complaint as they would in any acute real-life situation. After each scenario, the tutors gave both the participants and the observers a 15-minute debriefing session, which included feedback to the two trainees being assessed. Trainees were graded from A to D based on their performance, with D being the lowest passing grade; trainees obtaining an F failed the session and required a repeat session.

Design and data collection

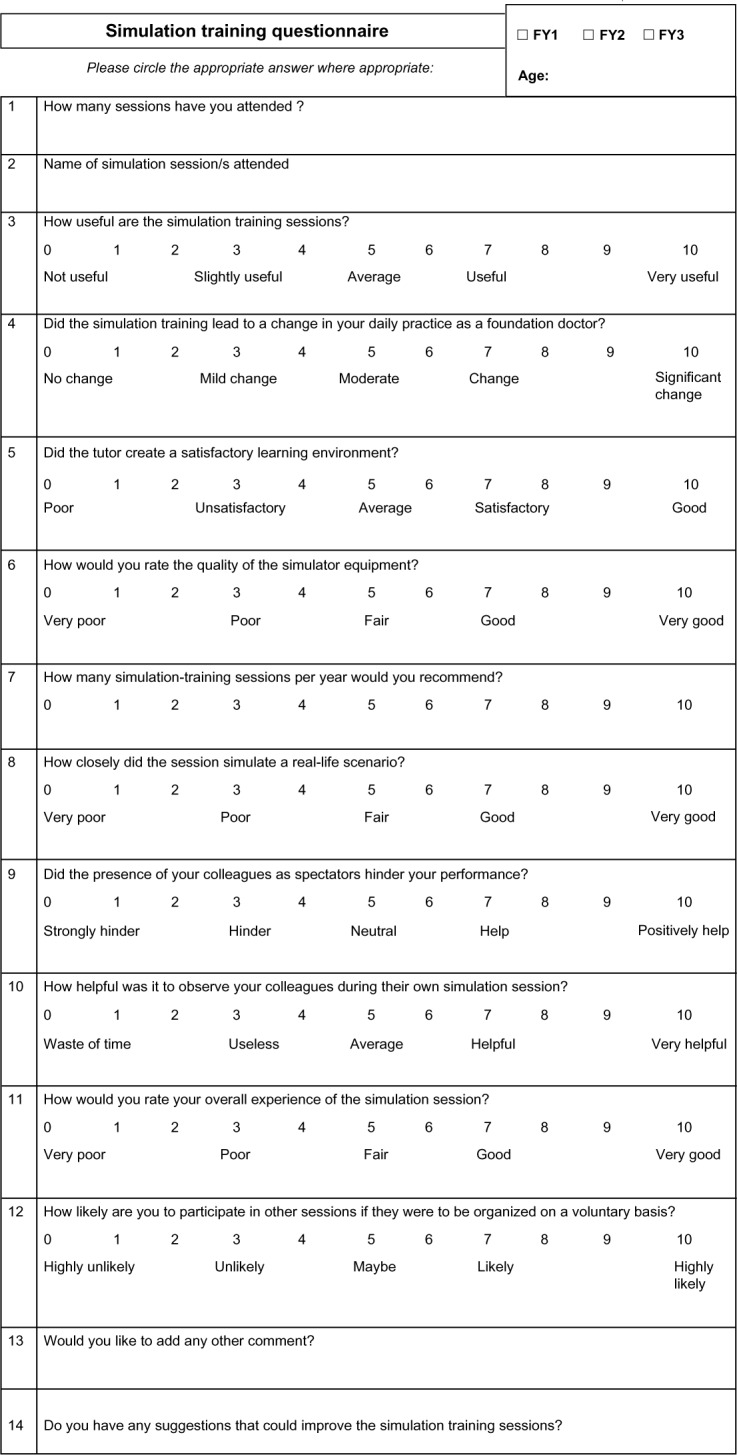

Data protection permission was obtained from the hospital data protection officer and the ethics committee in line with EU legislation. Questionnaires were distributed and collected over a 14-day period in 2015. Data were collected in a separate venue and at a later date than the simulation sessions. Written informed consent was obtained from the Foundation doctors, who answered 14 questions related to their experience during the simulation training sessions organized by the Malta Foundation Program. Data for calculating costs were provided by the Malta Foundation Program school administration. The questionnaire was validated by peer review by two specialists in internal medicine. Internal consistency of the questions was tested with Cronbach’s alpha, which was 0.8 for Questions 3, 5, 6, 11, and 12, and 0.6 for Questions 4 and 8. Figure 1 shows the questionnaire and the 0–10 Likert scale used, on which 0 was the lowest response, 5 was neutral, and 10 was the highest response.
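Cronbach’s alpha for k items is α = k/(k - 1) × (1 - Σσᵢ² / σ²_total), where σᵢ² is the variance of item i and σ²_total is the variance of each respondent’s summed score. The Python sketch below implements that standard formula; it is not the authors’ Minitab workflow, the study’s raw responses are not available, and the scores in the example are hypothetical. The function name `cronbach_alpha` is our own.

```python
from statistics import pvariance


def cronbach_alpha(items: list[list[float]]) -> float:
    """Cronbach's alpha; items[i][j] is respondent j's score on question i."""
    k = len(items)
    if k < 2:
        raise ValueError("Cronbach's alpha needs at least two items")
    n = len(items[0])
    if any(len(item) != n for item in items):
        raise ValueError("every item must have a score from every respondent")
    # Sum of the variances of the individual questions
    item_variance_sum = sum(pvariance(item) for item in items)
    # Variance of each respondent's total score across the k questions
    totals = [sum(item[j] for item in items) for j in range(n)]
    total_variance = pvariance(totals)
    if total_variance == 0:
        raise ValueError("total scores have zero variance")
    return k / (k - 1) * (1 - item_variance_sum / total_variance)


# Hypothetical 0-10 scores for two questions from four respondents
print(cronbach_alpha([[1, 2, 3, 4], [2, 1, 4, 3]]))
```

Population variances are used throughout; sample variances would give the same alpha because the n/(n - 1) factor cancels in the ratio.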

Figure 1.

Simulation training questionnaire.

The questionnaires were analyzed using Microsoft Excel and Minitab 17 (Minitab Inc., State College, PA, USA). Mean scores out of 10, standard deviations, confidence intervals (CIs), medians, and interquartile ranges (IQRs) were calculated. The 95% CI of each response was calculated from the number of respondents, the mean, and the standard deviation.
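The paper names the inputs to the CI but not the exact procedure. The following Python sketch is one plausible reconstruction, not the authors’ Minitab procedure: it uses a normal (z) critical value of about 1.96, whereas a t critical value (about 1.98 for 119 degrees of freedom) would widen the interval slightly, and it assumes all 120 respondents answered Q3. It also computes a median and quartiles with the (n + 1)p (“exclusive”) convention of Python’s `statistics.quantiles`; we assume, without confirmation, that this matches the Minitab output. The Q7 answers in the example are invented for illustration, and the function names `mean_ci` and `median_iqr` are hypothetical.

```python
from math import sqrt
from statistics import NormalDist, median, quantiles


def mean_ci(mean: float, sd: float, n: int, level: float = 0.95) -> tuple[float, float]:
    """Normal-approximation CI for a mean, from summary statistics only."""
    z = NormalDist().inv_cdf(0.5 + level / 2)  # about 1.96 for a 95% interval
    half_width = z * sd / sqrt(n)
    return mean - half_width, mean + half_width


def median_iqr(scores: list[float]) -> tuple[float, float, float]:
    """Median, Q1, and Q3; the 'exclusive' method places quartiles at (n + 1)p."""
    q1, _, q3 = quantiles(scores, n=4, method="exclusive")
    return median(scores), q1, q3


# Q3 of Table 3: mean 7.68, SD 1.76, assuming all 120 respondents answered
low, high = mean_ci(7.68, 1.76, 120)
print(f"Q3 95% CI: {low:.2f} to {high:.2f}")

# Hypothetical answers to Q7 (sessions per year), for illustration only
print(median_iqr([1, 2, 2, 3, 3, 4, 5, 6, 2, 3]))
```

Run on the Q3 summary statistics, the sketch gives bounds within about 0.01 of the 7.36–7.99 reported in Table 3; small differences are expected from rounding of the published mean and SD and from the choice of critical value.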

Results

A total of 120 of the 210 Foundation Program doctors in 2015 completed the questionnaire: 70 first-year trainees, 45 second-year trainees, and 5 whose training year was unknown. The main results are shown in Table 3 and Figure 2.

Table 3.

Trainee responses to the simulation session on a 0–10 Likert scale

| No. | Question (0–10 Likert scale: 0=lowest score, 5=neutral, 10=highest score) | Mean±SD | 95% CI lower | 95% CI upper |
|---|---|---|---|---|
| Q3 | How useful are the simulation sessions? | 7.68±1.76 | 7.36 | 7.99 |
| Q4 | Did the simulation session lead to a change in your daily practice? | 5.83±2.34 | 5.40 | 6.25 |
| Q5 | Did the tutor create a satisfactory learning environment? | 7.83±1.36 | 7.59 | 8.08 |
| Q6 | How would you rate the quality of the simulator equipment? | 7.7±1.46 | 7.44 | 7.96 |
| Q7 | How many simulation training sessions per year would you recommend? | 3.84±2.3 | 3.43 | 4.26 |
| Q8 | How closely did the session simulate a real-life scenario? | 6.24±1.83 | 5.91 | 6.57 |
| Q9 | Did the presence of your colleagues as spectators hinder your performance? | 5.26±1.93 | 4.91 | 5.61 |
| Q10 | How helpful was it to observe your colleagues during their own simulation session? | 6.92±1.70 | 6.61 | 7.22 |
| Q11 | How would you rate your overall experience of the simulation session? | 7.49±1.43 | 7.23 | 7.75 |
| Q12 | How likely are you to participate in other sessions if they were to be organized on a voluntary basis? | 7.44±2.17 | 7.04 | 7.83 |

Figure 2.

Median, interquartile range, and range for questions 3–12.

Abbreviation: Q, question.

On the 0–10 Likert scale, participants scored the simulation session as “useful” at 7.7 (95% CI 7.4–8.0) but rated the change in “daily practice” as moderate, at 5.83 (95% CI 5.4–6.3). When asked “how close was the simulation to a real-life scenario”, the score was 6.24 (95% CI 5.9–6.6).

The score for the tutor “creating a satisfactory learning environment” was 7.8 (95% CI 7.6–8.1) and that for “quality of simulator equipment” was 7.7 (95% CI 7.4–8.0). Trainees were also asked how the presence of colleagues affected their performance. The largest group, n=50 (41.7%), were neutral, n=36 (30%) felt it hindered their performance, and only n=34 (28.3%) felt it helped. In contrast, the majority, n=94 (78.33%), stated it was helpful to observe colleagues.

The likelihood of future participation was 7.4 (95% CI 7.0–7.8). Trainees rated “the overall experience” at 7.5 (95% CI 7.2–7.8) and recommended a median of 3 (IQR 2–5) simulations per year.

In 2014, three trainees failed their session and therefore required a repeat session during the same year.

The questionnaire also allowed participants to suggest improvements: many requested more sessions at an earlier stage, and some commented on technical difficulties encountered during the sessions.

Costs were calculated as shown in Table 4, assuming equipment depreciation of 20% per year. A total of 25 simulation sessions were organized during 2014 for 200 foundation program trainees, each session consisting of eight trainees and five staff members (one nurse, one technician, and three medical tutors).

Table 4.

Total cost/trainee/session

| Assumptions on expenditure | €/session/trainee |
|---|---|
| Simulation center training room property, cleaning, and maintenance | 25.00 |
| Simulator mannequin and equipment (100,000 €, 20% depreciation/year) | 100.00 |
| Staff costs (20 €/hour/staff member; three medical tutors, one nurse, and one technician) | 37.50 |
| Total | 162.50 |
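Table 4 gives only the per-trainee results. The Python sketch below shows one way those figures follow from the assumptions stated in the text: straight-line depreciation of 20% of the 100,000 € equipment price per year, spread over the 25 sessions of 2014; five staff members paid 20 €/hour for a 3-hour session; and eight trainees per session. The 25 € room figure is taken directly from the table because its breakdown is not given. The function and parameter names are hypothetical, and the assumption that staff are paid for exactly the 3-hour session length is ours.

```python
def cost_per_trainee_per_session(
    room_cost: float,          # € per trainee per session, taken as given from Table 4
    equipment_price: float,    # € purchase price of mannequin and equipment
    depreciation_rate: float,  # fraction of the price written off per year
    sessions_per_year: int,
    trainees_per_session: int,
    staff_rate: float,         # € per hour per staff member
    staff_count: int,
    session_hours: float,      # assumed paid hours per staff member per session
) -> dict[str, float]:
    # Yearly depreciation shared equally across all sessions, then across trainees
    equipment = equipment_price * depreciation_rate / sessions_per_year / trainees_per_session
    # Staff time for one session shared across the trainees attending it
    staff = staff_rate * staff_count * session_hours / trainees_per_session
    return {"room": room_cost, "equipment": equipment, "staff": staff,
            "total": room_cost + equipment + staff}


costs = cost_per_trainee_per_session(
    room_cost=25, equipment_price=100_000, depreciation_rate=0.20,
    sessions_per_year=25, trainees_per_session=8,
    staff_rate=20, staff_count=5, session_hours=3,
)
print(costs)
```

Written this way, the dependence on the number of sessions is explicit: the equipment share, which dominates the total, falls in proportion as more sessions are run per year.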

Discussion

It has been reported that simulation-based medical training is a useful educational tool. It promotes skill acquisition through active participant practice, experiential and repetitive learning, reflection, and feedback in a safe, controlled environment that simulates a real-life scenario.4,5 Simulation training is widely used in anesthesia, endoscopy training, trauma, and advanced cardiac life support.6

Analysis of the results shows that trainee responses were mostly positive. Participants stated that the sessions were useful (7.68) and that tutors created a satisfactory learning environment (7.83). The quality of the simulator equipment was also rated highly (7.7). Respondents felt that the simulations were of high educational value and requested a median of 3 simulation sessions per year rather than the current single yearly session. On the other hand, despite the positive feedback in almost all areas, responses were more neutral when trainees were asked whether simulation led to a change in their day-to-day clinical practice.

This could be explained by the following considerations. First, many trainees attended simulation sessions later in the year, by which time they would already have gained significant first-hand experience in their daily clinical practice. Second, many trainees felt that the session did not “closely simulate a real-life scenario” (mean score 6.24), which could account for the moderate response to “a change in daily practice”. This was also the first experience of this kind of training for first-year trainees, who might have had higher expectations of this training method, being unaware of its inevitable limitations. A more positive response on change in daily practice might have been achieved by providing a prebriefing session, perhaps with an audiovisual description of simulation, to help naïve trainees familiarize themselves with the simulation environment before the actual simulation sessions.7 Second-year trainees, however, would have had an advantage in this respect, as they would have had their first session the previous year.

A number of participants requested that sessions be organized earlier on during their foundation training, if not at undergraduate level. Undergraduate simulation training as well as simulation in the 1st month of the program would be ideal especially if followed by two sessions during the year. This is, however, difficult to achieve in view of time constraints and trainer availability together with increasing costs to the employer.

In fact, a study by Miles et al8 evaluated UK Foundation doctors’ induction experiences and found that trainees felt their inductions before rotations were inadequate. Introducing simulation sessions earlier, during induction, might improve the induction experience.

In a similar study performed at the University of Hertfordshire in 2008, results were mostly positive.9 However, that study differed from ours in that the majority of participants did not feel that the presence of their peers hindered their performance. In our study, more trainees felt that being observed by their peers hindered their performance (30%) than felt it helped (28.3%), while the largest group remained neutral (41.7%). This discrepancy could be explained by the fact that participants in the Hertfordshire study were from different professions and possibly different centers, whereas all trainees in our study were doctors from the same year working in the same foundation school after 5 years of medical school together. A possible reason is embarrassment or fear of being watched, supervised, judged, or criticized after their simulated scenario.9

Furthermore, performance evaluation might have played a role, since debriefing and feedback were given to all trainees together and trainees failing the scenario would require a repeat session.

An end-of-session debriefing ensures that adequate individualized feedback is given to each trainee, allowing participants to reflect on their own performance and creating memorable experiences that aid lifelong learning.10 A systematic review in 2005 highlighted that feedback and debriefing during simulation training sessions are among the most important features of simulation-based medical education.11

Limitations of this study included the small sample size and feedback based on a single 3-hour session with limited scenarios. The study did not analyze or quantify improved patient outcomes following the simulation session, nor did it compare these with a control group of traditionally trained trainees; instead, it looked specifically at subjective trainee responses. A negative experience by some trainees at the session might have led to negative feedback that does not truly reflect the outcomes of that session. Another limitation is that responses were not collected immediately after the session. On the other hand, the small number of tutors and the use of the same mannequin and simulation center equipment throughout were strengths of this study.

Simulation sessions allow trainees to practice in ways that would not be possible in a real-life scenario, as this would be unethical and unsafe for the sick patient. They provide a controlled environment permitting multiple practice attempts in which mistakes or failures do not put patients’ welfare at stake.12

This form of training assesses individual learning as well as the participant’s ability to work within a team, which is crucial given the multidisciplinary nature of modern medical care.5 It targets specific trainee needs by allowing repetition and providing consistency that would be difficult to achieve with real patients.13 Simulation training also allows exposure to rare, complex scenarios and gives multiple trainees the same learning opportunities.12 Simulation training has even been shown to be more cost-effective than conventional learning techniques by leading to improved standards of patient care.14 In a meta-analysis by McGaghie et al,1 simulation-based medical education with deliberate practice was found to yield better results than traditional clinical education. Improved clinical practice and patient outcomes were seen in laparoscopic surgery, bronchoscopy, and the management of difficult obstetric deliveries.

In a study by Karakus et al,15 computer-based simulation training in emergency medicine led to a statistically significant improvement in skill acquisition and in the success rate in managing complicated cases compared with a control group of traditionally trained students.

This form of training is particularly useful because it can reach multiple trainees within a set time frame despite the time restrictions on medical education.16

Limitations associated with simulation training include the cost of the simulator equipment, instructors, and venue.17 In our case, the relatively high costs were mainly due to the cost of the mannequin and the small number of sessions per year.

The mannequin’s lack of human emotion and the absence of environmental confounding factors may also lead to deviations from reality within the controlled setting.18 Inadequate physical signs, including the quality of the sounds generated by the mannequin, may also account for the lack of realism and may mislead the trainee.19 Trainees may fail to manage the mannequin as a real person and thus omit important practices such as proper history taking, full examination, consent, and use of protective equipment. Consequently, this may lead to unsafe practice, which may be reflected in the day-to-day management of patients.19

Conclusion

Foundation doctors expressed overall satisfaction with the quality of the simulation training session and thought it was a positive learning experience. The quality of the equipment was good, the tutors were thought to have created a favorable educational environment and most trainees were willing to participate in future sessions. On the other hand, respondents expressed the opinion that simulation sessions led to a moderate change in their daily clinical practice. The value of undergraduate sessions, increasing sessions to 3–4 per year, and audiovisual prebriefing for candidates naïve to simulation are important suggestions that need evaluation to optimize simulation training.

Acknowledgments

We would like to acknowledge the Malta Foundation Program directors Dr Tonio Piscopo and Mr Kevin Cassar and the head of the postgraduate training program Dr Ray Galea for permission to perform this study. No organization or program provided funding for this study.

Footnotes

Author contributions

All authors contributed toward data analysis, drafting, and revising the paper and agree to be accountable for all aspects of the work.

Disclosure

The authors report no conflicts of interest in this work.

References

1. McGaghie WC, Issenberg SB, Cohen ER, Barsuk JH, Wayne DB. Does simulation-based medical education with deliberate practice yield better results than traditional clinical education? A meta-analytic comparative review of the evidence. Acad Med. 2011;86(6):706–711. doi: 10.1097/ACM.0b013e318217e119.

2. The Foundation Programme Malta 2015. Available from: http://www.fpmalta.com.

3. Bradley C. The role of high-fidelity clinical simulation in teaching and learning in the health professions. 2011. Available from: http://www.kcl.ac.uk/study/learningteaching/kli/research/hern/hern-j4/Claire-Bradley-hernjvol4.pdf.

4. Weaver SJ, Salas E, Lyons R, et al. Simulation-based team training at the sharp end: a qualitative study of simulation-based team training design, implementation, and evaluation in healthcare. J Emerg Trauma Shock. 2010;3(4):369–377. doi: 10.4103/0974-2700.70754.

5. Perkins GD. Simulation in resuscitation training. Resuscitation. 2007;73(2):202–211. doi: 10.1016/j.resuscitation.2007.01.005.

6. Wayne DB, Didwania A, Feinglass J, Fudala MJ, Barsuk JH, McGaghie WC. Simulation-based education improves quality of care during cardiac arrest team responses at an academic teaching hospital: a case-control study. Chest. 2008;133(1):56–61. doi: 10.1378/chest.07-0131.

7. Crichton F, Joseph S. 0068 Managing the expectations of foundation doctors new to simulation. BMJ STEL. 2014;1(Suppl 1):A46.

8. Miles S, Kellett J, Leinster SJ. Foundation doctors’ induction experiences. BMC Med Educ. 2015;15:118. doi: 10.1186/s12909-015-0395-1.

9. Alinier G, Harwood C, Harwood P, Montague S, Huish E, Ruparelia K. Development of a programme to facilitate interprofessional simulation-based training for final year undergraduate healthcare students. Higher Education Academy/University of Hertfordshire; 2008.

10. Newby JP, Keast J, Adam WR. Simulation of medical emergencies in dental practice: development and evaluation of an undergraduate training programme. Aust Dent J. 2010;55(4):399–404. doi: 10.1111/j.1834-7819.2010.01260.x.

11. Issenberg SB, McGaghie WC, Petrusa ER, Lee Gordon D, Scalese RJ. Features and uses of high-fidelity medical simulations that lead to effective learning: a BEME systematic review. Med Teach. 2005;27(1):10–28. doi: 10.1080/01421590500046924.

12. Al-Elq AH. Simulation-based medical teaching and learning. J Family Community Med. 2010;17(1):35–40. doi: 10.4103/1319-1683.68787.

13. McKenna KD, Carhart E, Bercher D, Spain A, Todaro J, Freel J. Simulation Use in Paramedic Education Research (SUPER): a descriptive study. Prehosp Emerg Care. 2015;19(3):432–440. doi: 10.3109/10903127.2014.995845.

14. Cohen ER, Feinglass J, Barsuk JH, et al. Cost savings from reduced catheter-related bloodstream infection after simulation-based education for residents in a medical intensive care unit. Simul Healthc. 2010;5(2):98–102. doi: 10.1097/SIH.0b013e3181bc8304.

15. Karakus A, Duran L, Yavuz Y, Altintop L, Caliskan F. Computer-based simulation training in emergency medicine designed in the light of malpractice cases. BMC Med Educ. 2014;14:155. doi: 10.1186/1472-6920-14-155.

16. Grant DJ, Marriage SC. Training using medical simulation. Arch Dis Child. 2012;97(3):255–259. doi: 10.1136/archdischild-2011-300592.

17. Okuda Y, Bryson EO, DeMaria S Jr, et al. The utility of simulation in medical education: what is the evidence? Mt Sinai J Med. 2009;76(4):330–343. doi: 10.1002/msj.20127.

18. Good ML. Patient simulation for training basic and advanced clinical skills. Med Educ. 2003;37(Suppl 1):14–21. doi: 10.1046/j.1365-2923.37.s1.6.x.

19. Weller JM, Nestel D, Marshall SD, Brooks PM, Conn JJ. Simulation in clinical teaching and learning. Med J Aust. 2012;196(9):594. doi: 10.5694/mja10.11474.