Abstract

Study Hypothesis

The Centers for Medicare and Medicaid Services (CMS) recently published ED timeliness measures. These data show substantial variation in hospital performance and suggest the need for process improvement initiatives. However, the CMS measures are not risk adjusted and may provide misleading information about hospital performance and variation. We hypothesized that substantial hospital level variation would persist after risk adjustment.

Methods

This cross-sectional study included hospitals that participated in the ED Benchmarking Alliance (EDBA) and CMS ED measure reporting in 2012. Outcomes included the CMS measures corresponding to median annual boarding time, length-of-stay (LOS) of admitted patients, LOS of discharged patients, and waiting time of discharged patients. Covariates included hospital structural characteristics and case-mix information from the American Hospital Association (AHA) Survey, CMS Cost Reports, and the EDBA. We used gamma regression with a log link to model the skewed outcomes. We used indirect standardization to create risk-adjusted measures. We defined “substantial” variation as a coefficient of variation (CoV) > 0.15.

Results

The study cohort included 723 hospitals. Risk adjusted performance on the CMS measures varied substantially across hospitals, with CoV > 0.15 for all measures. Ratios between the tenth and ninetieth percentiles of performance ranged from 1.5-fold for LOS of discharged patients to 3-fold for waiting time of discharged patients.

Conclusions

Policy-relevant variations in publicly reported CMS ED timeliness measures persist after risk adjustment for non-modifiable hospital and case-mix characteristics. Future ‘positive deviance’ studies should identify modifiable process measures associated with high performance.

Introduction

Background

Emergency department (ED) crowding has been identified as a top threat to the quality and safety of care delivered in ED settings.1 In 2012, the Centers for Medicare and Medicaid Services (CMS) began a ‘pay-for-reporting’ program for hospital level, ED timeliness measures. Preliminary analysis demonstrates substantial hospital-level variation on these measures.2 These publicly reported data are not risk adjusted3, and it is unclear if performance variation is explained by non-modifiable factors such as hospital characteristics or case-mix acuity.

Importance

The CMS measures are proxies of health care quality to be used by patients, health administrators, and policy makers. However, these measures may penalize hospitals for factors beyond their control. The stakes are large, as these published data may be used by patients to choose hospitals for their care, health systems for marketing purposes, and payers for reimbursement. Risk adjustment for non-modifiable factors is required to facilitate ‘apples-to-apples’ comparisons. To our knowledge, a risk adjustment methodology has not yet been developed for the CMS ED timeliness measures. The presence of substantial hospital-level variation after risk adjustment would provide support for initiatives (e.g. process redesign, “pay-for-performance” programs) to standardize and improve care. Conversely, the lack of variation after risk adjustment would suggest limited opportunities for improvement on these measures.

Goals of This Investigation

We tested the specific hypothesis that substantial hospital performance variation would persist despite risk adjustment for non-modifiable factors.

Methods

Study Design

This is a cross-sectional study using 2012 data. The IRB of the study team’s institution approved this study. A Theoretical Construct is presented in the Appendix.

Sample Selection

We identified hospitals that reported ED performance data to both the ED Benchmarking Alliance (EDBA)4 and CMS in 2012. The EDBA is a voluntary consortium of over 1,100 emergency departments that collect annual, hospital-level measures on ED throughput and case-mix5 that are otherwise unavailable in existing datasets. Because our Theoretical Construct postulates that case-mix may be an important, non-modifiable predictor of ED timeliness, we focused on EDBA hospitals to access an expanded set of case-mix measures.

We excluded pediatric, specialty, critical access, and Veterans Affairs hospitals as such facilities are likely to have different patient populations and structural characteristics compared to general acute hospitals.

Data Sources

We analyzed data from the 2012 CMS Hospital Compare website,3 the American Hospital Association (AHA) Survey file,6 the EDBA,7,8 and the CMS Cost Reports.9 Publicly available CMS Hospital Compare data include annual, facility-level data on all CMS “pay-for-performance” measures. The AHA Survey includes annual data on facility characteristics, volume, and services. The EDBA contributes facility-level, self-reported, aggregated annual data on ED patient characteristics. The publicly available CMS Cost Reports include annual, facility-level billing data.

Outcomes

The dependent variables included the four CMS ED timeliness measures, downloaded from CMS Hospital Compare website3:

ED-1b (length-of-stay [LOS], admitted): Median time patients spent in the emergency department, before they were admitted to the hospital as an inpatient

ED-2b (boarding time, admitted): Median time patients spent in the emergency department, after the doctor decided to admit them as an inpatient before leaving the emergency department for their inpatient room

OP-18b (LOS, discharged): Median time patients spent in the emergency department before being sent home

OP-20 (waiting time, discharged): Median time patients spent in the emergency department before they were seen by a healthcare professional

These variables are reported as annual medians with a hospital as the reporting unit. Admitted patients are eligible for the computation of ED-1b and ED-2b; conversely, discharged patients are eligible for OP-18b and OP-20. The ED-1b and ED-2b measures exclude encounters for psychiatric/mental health problems. OP-18b excludes encounters for psychiatric/mental health problems and for transfer patients.

Predictors

We identified measures that corresponded with potential predictor domains as described in the Appendix-Theoretical Construct. All structural factors were from the 2012 American Hospital Association (AHA) Survey File, including ownership (public, not-for-profit, for-profit), trauma center designation (level 1 or 2), Council of Teaching Hospitals Designation, presence of psychiatric services, level of health system centralization (centralized health system, centralized physician/insurance health system, moderately centralized health system, decentralized health system, independent hospital system), financial structure (e.g. presence of independent practice association, group practice without walls, open physician-hospital organization, closed physician-hospital organization, integrated salary model, equity model, foundation model), region of U.S., and metropolitan designation. Hospitals were designated as ‘metropolitan’ if the Core Based Statistical Area was coded as ‘Division’ or ‘Metro.’ Our study team verified trauma center status from state websites, if such data were missing from the AHA file. All other missing data were coded as a missing category.

Case-mix variables were extracted from all 4 study databases. The AHA file contained data on annual ED visits and hospital admissions. We used EDBA case-mix variables previously found to be associated with ED timeliness measures8, including proportions of pediatric visits (<18 years of age), ambulance arrivals, transfers to another facility, overall admissions from ED, and admissions from ED among ambulance arrivals in 2012. The denominator for the first four measures is total annual ED visits. The denominator for the last measure is total ED visits by those transported by emergency medical services. We obtained the 2012 hospital case-mix index (CMI) from the CMS Cost Reports. A hospital’s CMI represents the average diagnosis-related group relative weight for that hospital.

Cost Report data were also used to construct an annual occupancy rate ([total inpatient days + total observation bed days – nursery inpatient days] / total bed days available)10 and an intensive care unit (ICU) annual occupancy rate (total ICU patient bed-days / staffed ICU bed-days).
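The two occupancy definitions can be written out as a short calculation. The sketch below is illustrative only: the function names and the example hospital figures are hypothetical and are not drawn from the Cost Reports or from the study's analysis code.

```python
def occupancy_rate(inpatient_days, observation_days, nursery_days, bed_days_available):
    """Annual occupancy: (inpatient + observation - nursery days) / bed days available."""
    if bed_days_available <= 0:
        raise ValueError("bed_days_available must be positive")
    return (inpatient_days + observation_days - nursery_days) / bed_days_available


def icu_occupancy_rate(icu_patient_bed_days, staffed_icu_bed_days):
    """Annual ICU occupancy: ICU patient bed-days / staffed ICU bed-days."""
    if staffed_icu_bed_days <= 0:
        raise ValueError("staffed_icu_bed_days must be positive")
    return icu_patient_bed_days / staffed_icu_bed_days


# Hypothetical 200-bed hospital: 200 beds * 365 days = 73,000 bed days available.
example_occupancy = occupancy_rate(40_000, 3_000, 1_500, 73_000)

# Hypothetical 20-bed staffed ICU: 20 beds * 365 days = 7,300 staffed bed-days.
example_icu_occupancy = icu_occupancy_rate(4_380, 7_300)
```

With these made-up inputs, both rates fall within the interquartile ranges reported for the study sample, which is only meant to show that the formula yields values on the expected scale.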

Data Analysis

Descriptive statistics were used to summarize all hospital characteristics and ED timeliness outcomes. To assess for potential sampling bias, we used the AHA survey data to describe characteristics of EDBA participants and EDBA non-participants that operated an ED.

We used regression models to assess the relationships between CMS ED timeliness measures and potential predictors. (Appendix. Data Dictionary and Regression Code) All CMS ED timeliness measures were right-skewed, and they were modeled using a gamma distribution with a Pearson chi-square scale parameter and log link.

We included hospitals with missing data in the analysis to reduce bias. For categorical variables, we included missing data as a separate category. For continuous predictor variables, we categorized data into quartiles of non-missing data and assigned missing values to a separate missing category. Missing data ranged from 0.8% for CMS case-mix index to 27.3% for percent of EMS arrivals admitted.

Because the CMS ED timeliness measures were right-skewed and modeled with a log link, we calculated the ratio of geometric means (by exponentiating the model coefficient) and its 95% confidence interval to measure the association between timeliness measures and each ED characteristic. Compared with the arithmetic mean, the geometric mean is less affected by extreme values and is a better measure of central tendency for right-skewed data. The ratio of geometric means can be roughly interpreted as the relative change in the mean level. To further facilitate interpretation of the results and to obtain a measure of absolute arithmetic mean difference, we used the method of recycled predictions to estimate the marginal effect of each hospital characteristic.11 For categorical data, marginal effects were calculated against the referent level of each variable; for continuous data, marginal effects were calculated for an increase of 10,000 in ED volume above the mean. To improve interpretability, we display marginal effect estimates only for significant predictors.
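To make the two reported quantities concrete, the sketch below shows how a log-link coefficient can be exponentiated into a ratio with a Wald-type 95% interval, and how recycled predictions turn the same model into an average difference in minutes. It is not the study's Stata code: the coefficients, standard error, and three-hospital dataset are hypothetical, the model is reduced to two binary predictors, and the Wald interval is an assumption about how the interval was formed.

```python
import math


def ratio_with_ci(coef, se, z=1.96):
    """Exponentiate a log-link coefficient and its Wald interval limits."""
    return math.exp(coef), math.exp(coef - z * se), math.exp(coef + z * se)


def predict_minutes(row, coefs):
    """Predicted mean on the original (minutes) scale for a log-link model."""
    linear = coefs["intercept"] + sum(coefs[name] * value for name, value in row.items())
    return math.exp(linear)


def recycled_prediction_effect(rows, coefs, var):
    """Average difference in predicted minutes when `var` is set to 1 versus 0
    for every hospital, with all other covariates left at their observed values."""
    diffs = []
    for row in rows:
        switched_on = dict(row, **{var: 1})
        switched_off = dict(row, **{var: 0})
        diffs.append(predict_minutes(switched_on, coefs) - predict_minutes(switched_off, coefs))
    return sum(diffs) / len(diffs)


# Hypothetical coefficients on the log scale (not estimates from this study).
coefs = {"intercept": math.log(240.0), "metro": 0.08, "teaching": 0.10}

# Hypothetical hospitals described by two binary characteristics.
hospitals = [
    {"metro": 1, "teaching": 0},
    {"metro": 0, "teaching": 1},
    {"metro": 0, "teaching": 0},
]

ratio, ci_low, ci_high = ratio_with_ci(coefs["metro"], se=0.03)
metro_effect = recycled_prediction_effect(hospitals, coefs, "metro")
```

The ratio describes a relative change, whereas `metro_effect` is an absolute difference in minutes averaged over the covariate distribution of the sample, which is why the two can be reported side by side.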

We used indirect standardization to generate risk-adjusted estimates. For each ED timeliness measure, observed/expected (O/E) ratios were generated for each ED by dividing the observed value by the corresponding value predicted by the regression model. We generated a risk-adjusted measure for each hospital by multiplying the hospital-specific O/E ratio by the mean value of the measure observed in the entire cohort. Box-and-whiskers plots of unadjusted and adjusted data were generated for each of the CMS timeliness measures. We also calculated the coefficient of variation (CoV) for unadjusted and adjusted measures. The CoV, defined as the ratio of the standard deviation to the mean, is a normalized measure of dispersion and is commonly used to quantify variation in the health services literature. We defined “substantial” variation as a CoV of ≥0.15.12
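The indirect standardization and dispersion steps described above can be sketched in a few lines. The observed and expected values below are hypothetical, and the use of the sample (n − 1) standard deviation is an assumption, since the text does not state which form was used.

```python
import statistics


def risk_adjusted(observed, expected):
    """Indirect standardization: (observed / expected) * cohort mean of observed."""
    cohort_mean = statistics.mean(observed)
    return [(o / e) * cohort_mean for o, e in zip(observed, expected)]


def coefficient_of_variation(values):
    """Standard deviation divided by the mean (sample SD assumed here)."""
    return statistics.stdev(values) / statistics.mean(values)


# Hypothetical median LOS values in minutes for three hospitals.
observed = [200.0, 300.0, 400.0]
# Hypothetical model-predicted values for the same hospitals.
expected = [250.0, 300.0, 350.0]

adjusted = risk_adjusted(observed, expected)
cov_unadjusted = coefficient_of_variation(observed)
cov_adjusted = coefficient_of_variation(adjusted)
is_substantial = cov_adjusted >= 0.15
```

In this toy example, adjustment narrows the spread because part of the observed variation is explained by the predicted values, yet the adjusted dispersion still meets the ≥0.15 threshold.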

Data corresponding to the EDBA case-mix variables may not be widely available, which may affect the generalizability of our main analyses. In a sensitivity analysis, we repeated risk adjusted profiling of all hospitals after excluding EDBA variables from regression modeling. We assessed the stability of performance ranking when EDBA variables were excluded.
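One way to summarize ranking stability between the full and reduced models is to assign each hospital a performance quartile under each model and tabulate how far hospitals move. The sketch below is a minimal illustration under stated assumptions: the quartile assignment rule (rank position, ties broken by input order) and the eight hypothetical risk-adjusted values are not taken from the study.

```python
def quartile_ranks(values):
    """Assign quartiles 1-4 by rank position (ties broken by input order)."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0] * len(values)
    for position, idx in enumerate(order):
        ranks[idx] = position * 4 // len(values) + 1
    return ranks


def quartile_shift_summary(full_model, reduced_model):
    """Share of hospitals whose quartile is unchanged, moves by one, or moves by more."""
    q_full = quartile_ranks(full_model)
    q_reduced = quartile_ranks(reduced_model)
    shifts = [abs(a - b) for a, b in zip(q_full, q_reduced)]
    n = len(shifts)
    return {
        "unchanged": sum(s == 0 for s in shifts) / n,
        "moved_one": sum(s == 1 for s in shifts) / n,
        "moved_more": sum(s > 1 for s in shifts) / n,
    }


# Hypothetical risk-adjusted minutes for eight hospitals under each model.
with_edba = [100, 110, 120, 130, 140, 150, 160, 170]
without_edba = [100, 122, 120, 130, 140, 150, 160, 170]

summary = quartile_shift_summary(with_edba, without_edba)
```

Here two of the eight hypothetical hospitals swap adjacent quartiles and none moves further, so the summary separates small reshuffling from large reclassification.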

Analyses were conducted using Stata 13 (College Station, TX, USA).

Results

Study Sample

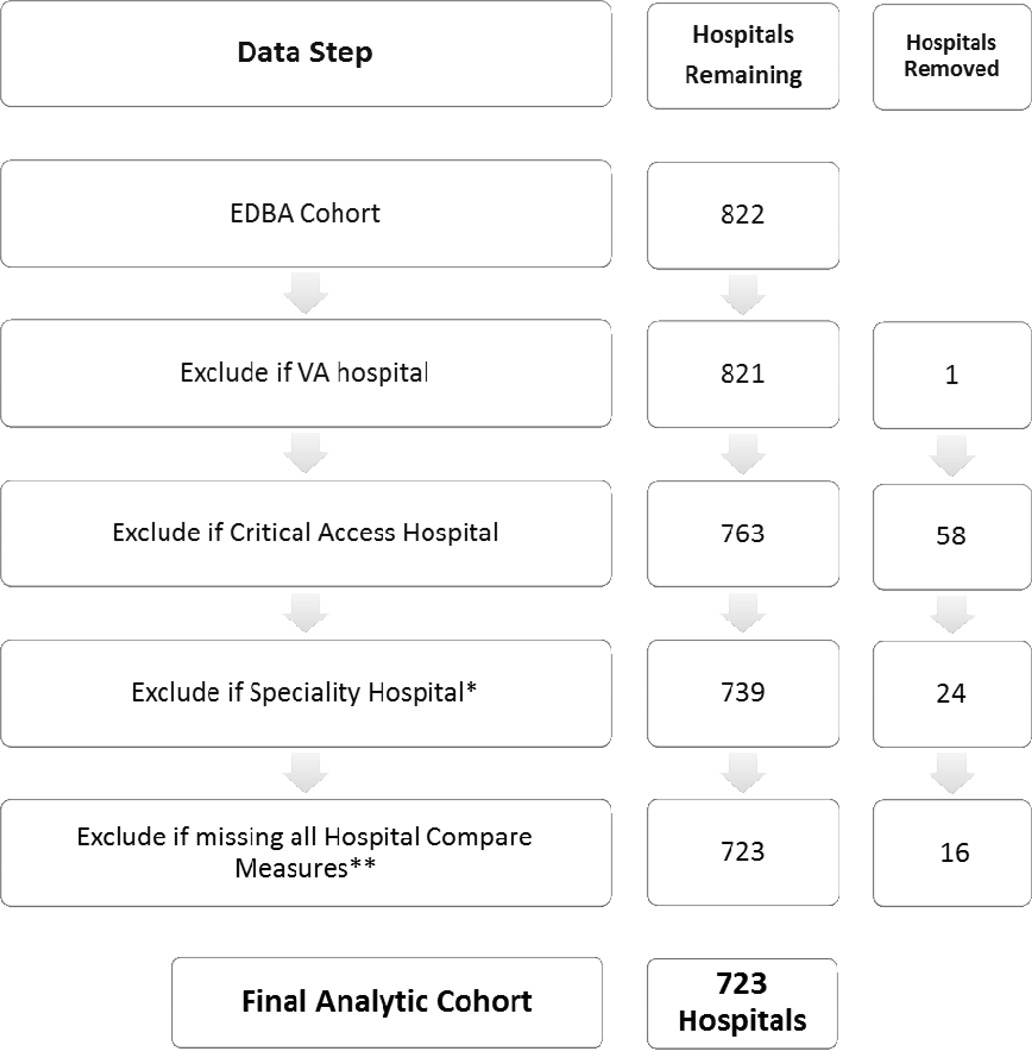

There were 723 hospitals that reported data to both CMS Hospital Compare and the EDBA in 2012. (Figure 1) Characteristics of cohort and non-cohort hospitals with an ED are presented in Table 1. Of all facilities reporting data to CMS Hospital Compare, EDBA participants were more likely to be in a metro location, in the geographic south, for-profit, part of a decentralized health system, and have higher ICU occupancy, ED volume and hospital admissions compared to EDBA non-participants.

Figure 1.

Sample Flowchart

*Includes Surgical (n=1), Psychiatric (n=1), Heart (n=4), Obstetrics and Gynecology (n=2), Rehabilitation (n=3), Children’s (n=5), Acute Long-Term Care (n=7), and Other Specialty Treatment (n=1) Hospitals.

**Measures are ED1B (LOS, admitted), ED2B (boarding time), OP18 (LOS, discharged), and OP20 (wait time). The number of hospitals reporting to CMS Hospital Compare: ED1B: 3,019; ED2B: 3,019; OP18: 2,928; OP20: 2,933.

Table 1.

Study Cohort Characteristics

| Characteristic | Study Sample: EDBA Participants (n=723)* | EDBA Non-Participants (n=2303) |
|---|---|---|
| Metro Location (%) | 78.3% | 69.0% |
| Region (%) | | |
| Northeast | 10.8% | 17.4% |
| South | 55.6% | 38.2% |
| West | 16.5% | 19.5% |
| Midwest | 17.2% | 25.0% |
| Ownership (%) | | |
| Public | 11.8% | 16.8% |
| Not-For-Profit | 53.0% | 67.8% |
| For-Profit | 35.3% | 15.5% |
| Teaching Hospital (%) | 8.6% | 7.9% |
| Trauma Center (%) | 23.9% | 21.2% |
| Psychiatric Inpatient Services (%)** | 38.3% | 37.1% |
| Psychiatric Consultation Services (%)** | 35.1% | 37.0% |
| Health System Centralization (%) | | |
| Centralized Health System | 12.9% | 12.0% |
| Centralized Physician/Insurance Health System | 0.1% | 0.3% |
| Moderately Centralized Health System | 0.0% | 0.2% |
| Decentralized Health System | 66.3% | 49.7% |
| Independent Hospital System | 0.0% | 0.0% |
| Unknown | 20.8% | 37.9% |
| Independent Practice Association (%)** | 8.0% | 7.5% |
| Group Practice Without Walls (%)** | 1% | 2.4% |
| Open Physician-Hospital Organization (%)** | 13.4% | 12.7% |
| Closed Physician-Hospital Organization (%)** | 3.7% | 3.9% |
| Integrated Salary Model (%)** | 30.6% | 34.7% |
| Equity Model (%)** | 0.8% | 0.7% |
| Foundation Model (%)** | 5.5% | 3.3% |
| Preferred Provider Organization (%)** | 4.0% | 4.5% |
| Indemnity Fee for Service Plan (%)** | 1.7% | 1.4% |
| Annual Occupancy (median, 25th-75th percentile)£ | 0.58 (0.44 – 0.69) | 0.53 (0.39 – 0.66) |
| Annual ICU Occupancy (median, 25th-75th percentile)¥ | 0.61 (0.47 – 0.75) | 0.57 (0.43 – 0.72) |
| Annual ED Visits (median, 25th-75th percentile) | 38,029 (22,510 – 61,564) | 29,776 (16,052 – 50,411) |
| Annual Hospital Admissions (median, 25th-75th percentile) | 9,101 (4,518 – 16,679) | 6,839 (2,834 – 13,534) |
| CMS Case-Mix Index (median, 25th-75th percentile)† | 1.48 (1.32 – 1.66) | 1.43 (1.25 – 1.63) |

*There were 33,336,348 ED visits in 2012 to the study sample hospitals (from the AHA Survey File). The aggregate visits used to generate ED timeliness measures (from CMS Hospital Compare): ED1b: 419,697; ED2b: 403,675; OP18b: 374,805; OP20: 398,132.

**Data missing for 16.7% of EDBA participants and 14.4% of EDBA non-participants.

£Annual occupancy missing for 4% of EDBA participants and 4% of EDBA non-participants.

¥Annual ICU occupancy missing for 9.1% of EDBA participants and 15.6% of EDBA non-participants.

†CMS Case-Mix Index missing for 0.8% of EDBA participants and 1.0% of EDBA non-participants.

Risk Adjustment Model

Full regression model results are presented in Appendix Table 1, and Table 2 includes marginal effect estimates for significant predictors. The marginal effect can be interpreted as the average change in minutes associated with a predictor, compared to a reference group. For example, a hospital in a metro location would on average have a median ED LOS for admitted patients that is 21.4 minutes longer than that of a hospital in a non-metro location. Metro location and region were significant predictors of all four CMS Hospital Compare measures. Ownership type, occupancy, ED visits, hospital admissions, and proportion of visits from ambulance arrivals were significant predictors of three of the CMS Hospital Compare measures. Measures of case-mix were predictive of either none (Appendix Table 1) or one of the CMS Hospital Compare measures. (Table 2)

Table 2.

Predictors of ED Timeliness Measures

| Characteristic | ED-1b: LOS, Admitted, Effect Size (95% CI) | ED-2b: Boarding Time, Effect Size (95% CI) | OP-18b: LOS, Discharged, Effect Size (95% CI) | OP-20: Wait Time, Discharged, Effect Size (95% CI) |
|---|---|---|---|---|
| Hospital Reporting Measure (n) | 723 | 717 | 698 | 697 |
| Metro Location (%) | 21.4 (7.7, 35.2) | 12.5 (3.1, 21.8) | 9.2 (2.8, 15.5) | 4.0 (1.6, 7.1) |
| Region (%) | | | | |
| Midwest | | | | |
| Northeast | 64.3 (44.3, 84.3) | 44.0 (29.4, 58.7) | 3.3 (−4.7, 11.4) | 2.8 (−1.4, 6.9) |
| South | 26.3 (13.5, 39.0) | 13.4 (5.1, 21.7) | 13.6 (7.6, 19.7) | 5.2 (2.2, 8.3) |
| West | 34.8 (18.6, 51.1) | 23.9 (12.8, 35.0) | 17.6 (10.0, 25.2) | −0.6 (−4.1, 2.8) |
| Ownership (%) | | | | |
| Not-For-Profit | | | | |
| Public | 16.1 (−1.5, 33.7) | 6.7 (−1.3, 14.7) | 3.3 (−1.2, 7.7) | |
| For-Profit | −20.2 (−33.0, −7.5) | −13.6 (−19.5, −7.6) | −8.4 (−11.1, −5.6) | |
| Teaching Hospital (%) | 26.3 (4.0, 48.7) | 14.4 (3.9, 24.9) | | |
| Psychiatric Consultation Services (%) | −4.4 (−10.2, 1.5) | | | |
| Independent Practice Association (%) | −4.8 (−8.3, −1.3) | | | |
| Open Physician-Hospital Organization (%) | −21.7 (−35.1, −8.2) | | | |
| Foundation Model (%) | −4.7 (−8.8, −0.6) | | | |
| Annual Occupancy (%) | | | | |
| 1st Quartile | | | | |
| 2nd Quartile | 12.8 (−1.1, 26.7) | 12.9 (3.9, 22.0) | 4.1 (−2.3, 10.5) | |
| 3rd Quartile | 17.4 (0.7, 34.0) | 13.8 (3.0, 24.6) | 8.9 (1.1, 16.7) | |
| 4th Quartile | 39.7 (20.7, 58.7) | 30.6 (17.7, 43.5) | 10.9 (2.2, 19.6) | |
| Annual ED Visits (per 10,000 above the mean) | 4.6 (2.5, 6.7) | 1.5 (0.5, 2.5) | 0.7 (0.2, 1.2) | |
| Annual Hospital Admission | | | | |
| 1st Quartile | | | | |
| 2nd Quartile | 26.1 (10.4, 41.7) | 19.9 (9.7, 30.0) | 14.5 (7.5, 21.5) | |
| 3rd Quartile | 20.5 (−0.3, 41.3) | 18.4 (4.8, 32.0) | 16.7 (7.2, 26.1) | |
| 4th Quartile | 30.1 (3.8, 56.5) | 24.4 (7.0, 41.8) | 21.9 (9.5, 34.2) | |
| CMS Case-Mix Index | | | | |
| 1st Quartile | | | | |
| 2nd Quartile | −1.4 (−17.7, 14.9) | −5.9 (−10.2, −1.6) | | |
| 3rd Quartile | −12.0 (−30.3, 6.2) | −9.0 (−13.7, −4.3) | | |
| 4th Quartile | −30.5 (−50.1, −10.9) | −9.4 (−14.4, −4.3) | | |
| Proportion Pediatric Visits | | | | |
| 1st Quartile | | | | |
| 2nd Quartile | 3.8 (−6.6, 14.2) | | | |
| 3rd Quartile | 7.9 (−3.1, 18.8) | | | |
| 4th Quartile | 5.7 (−5.6, 17.0) | | | |
| Proportion Ambulance Arrivals | | | | |
| 1st Quartile | | | | |
| 2nd Quartile | 13.6 (−2.5, 29.7) | 12.9 (5.5, 20.3) | 4.6 (0.4, 8.7) | |
| 3rd Quartile | 10.3 (−5.4, 26.1) | 13.7 (6.4, 20.9) | 1.0 (−2.9, 4.8) | |
| 4th Quartile | 26.9 (9.1, 44.6) | 15.2 (7.0, 23.3) | −1.3 (−5.4, 2.8) | |
| Proportion Admitted | | | | |
| 1st Quartile | | | | |
| 2nd Quartile | −4.2 (−11.0, 2.6) | | | |
| 3rd Quartile | −2.0 (−9.9, 6.0) | | | |
| 4th Quartile | 3.9 (−5.6, 13.3) | | | |
| Proportion of Ambulance Arrivals Admitted | | | | |
| 1st Quartile | | | | |
| 2nd Quartile | −4.5 (−8.0, −1.0) | | | |
| 3rd Quartile | −0.4 (−4.2, 3.3) | | | |
| 4th Quartile | −0.6 (−4.5, 3.4) | | | |

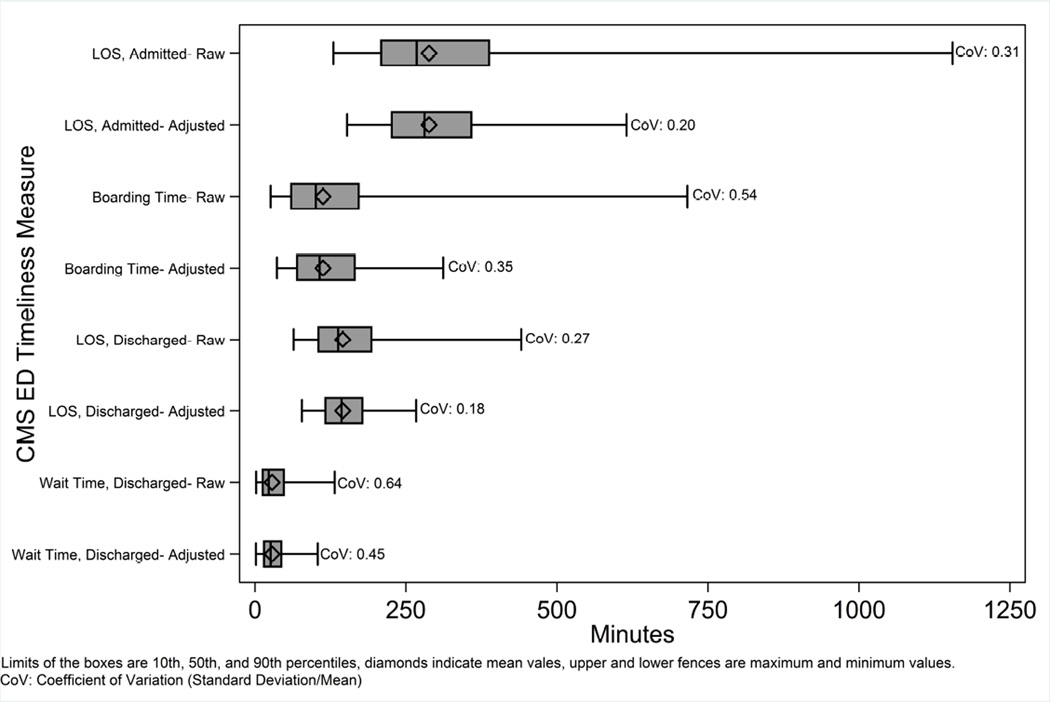

Variations in Performance

We illustrate hospital variation in both unadjusted and risk-adjusted measures. (Figure 2, Appendix Table 2) Substantial variation was observed in both unadjusted and risk-adjusted data (all CoVs > 0.15), although there was some narrowing of variation after risk adjustment. The ratios of risk-adjusted values at the ninetieth percentile of performance to those at the tenth percentile were: ED-1b: 1.6 (359 minutes/227 minutes); ED-2b: 2.4 (165 minutes/70 minutes); OP-18b: 1.5 (178 minutes/117 minutes); and OP-20: 3 (44 minutes/15 minutes). Hospital characteristics associated with the top 5th, middle 90th, and bottom 5th percentiles of performance are reported in Appendix Table 3.
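The percentile ratios can be reproduced directly from the reported risk-adjusted values. The short check below uses only the minutes quoted in the text; the variable names are illustrative.

```python
# (ninetieth percentile, tenth percentile) in minutes, as reported in the text.
reported_minutes = {
    "ED-1b": (359, 227),
    "ED-2b": (165, 70),
    "OP-18b": (178, 117),
    "OP-20": (44, 15),
}

# Ratio of the ninetieth-percentile value to the tenth-percentile value.
percentile_ratios = {
    measure: p90 / p10 for measure, (p90, p10) in reported_minutes.items()
}

for measure, value in percentile_ratios.items():
    print(f"{measure}: {value:.2f}")
```

The first three ratios match the reported figures at one decimal place; the OP-20 ratio (44/15) is slightly below 3 and matches the reported value when rounded to the nearest whole number.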

Figure 2.

Risk Adjusted Variation in CMS Measure Performance

Sensitivity Analysis to EDBA Variables

After excluding EDBA variables from risk-adjustment models, we found that 15–25% of hospitals changed one performance quartile rank, with no hospitals changing more than one quartile. (Appendix Table 4) Classification of performance outliers (top and bottom 5th percentile) was stable between the two approaches, with fewer than 4% of hospitals changing classification (i.e. from top/bottom outlier to middle 90%; no hospitals changed from one outlier category to the other). Similar to the main analysis, risk-adjusted analysis without EDBA variables demonstrated substantial variation. (Appendix Table 2, Appendix Figure)

Limitations

This study has several potential limitations. First, our sample differed from non-EDBA participants in several characteristics. However, there is substantial overlap in characteristics between the study cohort and non-participants, and there is no a priori reason to believe that associations between predictors and outcomes would vary between the two groups. Nevertheless, future work should verify our findings in the entire cohort of hospitals that report CMS timeliness measures. Second, data corresponding to the EDBA case-mix variables may not be widely available. Our sensitivity analysis suggests that excluding EDBA variables may affect performance ranking, but that risk adjustment without these variables remains reliable for identifying performance outliers. Third, we did not have the resources to perform multiple imputation for missing data, and there is the possibility of bias related to missing data. Fourth, there is the possibility of omitted variable bias, as we did not have data on all possible factors that may be related to ED throughput. Fifth, the selection of variables to include in risk adjustment can be controversial.13 The Appendix-Theoretical Construct provides the rationale for our selection process, but we acknowledge that other approaches may have merit. Sixth, CMS Hospital Compare data are self-reported by hospitals, although the risk of inaccurate data is mitigated by CMS auditing procedures.14

Finally, although we report common measures of variation, we acknowledge that the definition of “substantial” variation is ultimately a value judgment. We believe that the observed risk-adjusted variations are policy relevant, and that they merit future work to standardize and improve hospital performance on ED timeliness measures.

Discussion

We found that substantial variation in CMS ED timeliness measures remains after controlling for non-modifiable hospital characteristics and case-mix variables. There are several important implications of our results. First, the existence of persistent variation after risk adjustment suggests opportunities for improvement. Specifically, performance variation may be related to modifiable processes, and future work should focus on identifying hospital “best practices” to improve performance on the ED CMS timeliness measures. Second, the use of unadjusted data by consumers may lead to misleading conclusions about performance by individual hospitals. We believe that CMS should refine risk-adjustment methodology and present risk-adjusted data.

We found that two hospital characteristics (metro location and region) are associated with all four timeliness measures. We also found that ownership type, occupancy, ED visits, hospital admissions, and proportion of visits from ambulance arrivals are associated with the majority of timeliness measures. These findings may reflect regional practice norms as well as operational complexity associated with certain hospital characteristics. These characteristics may also be proxies for high-need patient populations.

The case-mix variables in our datasets were marginally predictive of ED timeliness. There are at least two potential, non-exclusive explanations. First, it is possible that other case-mix measures that are not available in our data are important for risk adjustment. Second, case-mix may be less important than non-measured process and operational factors for ED timeliness. The latter possibility is supported by the large variation in hospital performance after adjusting for available hospital characteristics and case mix. Qualitative studies suggest that specific processes and management approaches may explain much of the variation in performance.16

Our results are robust to the inclusion or exclusion of EDBA case mix variables. Specifically, we could reliably identify high and low outliers of performance without EDBA variables. This suggests that our risk profiling approach can be used to identify highest and lowest performers in the entire universe of hospitals that report data to CMS Hospital Compare, regardless of EDBA participation. Study of high performance outliers may reveal effective, modifiable, and generalizable hospital strategies.17

Our findings are similar to those published from an analysis of 2008–2009 data from the National Hospital Ambulatory Medical Care Survey (NHAMCS).15 Minor differences in regression model results are likely attributable to differences in available predictor variables. Our study builds on these prior findings by using updated, audited, and publicly reported measures from the CMS Hospital Compare database; including unique case-mix data from the EDBA; and assessing risk-adjusted, hospital-level variations in performance.

In conclusion, policy-relevant variations in publicly reported CMS ED timeliness measures persist after risk adjustment for non-modifiable hospital and case-mix characteristics. Future ‘positive deviance’ studies should identify modifiable process measures associated with high performance.

Supplementary Material

Acknowledgments

Grant Support:

This study was supported by National Institutes of Health (NIH) grant R21AG044607 (Dr. Sun). Dr. Chang is supported by NIH grant K12HL108974.

The funding organizations had no role in the design and conduct of the study; collection, management, analysis, and interpretation of the data; and preparation, review, or approval of the manuscript. The contents do not necessarily represent the official views of the National Institutes of Health.

Footnotes


Conflicts of Interest: None

Author Contributions Statement

BCS and KJM designed the study. BCS and KJM obtained funding for this study. JA and CR were responsible for EDBA data collection. LP was responsible for data management. AL and RF performed the data analysis. BCS drafted the manuscript. All authors contributed substantially to manuscript revisions. BCS takes responsibility for the paper as a whole. BCS, AL, and RF had full access to all the data in the study and take responsibility for the integrity of the data and the accuracy of the data analysis. All authors approved the final report for submission.

References

- 1. Institute of Medicine. Future of Emergency Care: Hospital-Based Emergency Care at the Breaking Point. Washington, DC: The National Academies Press; 2006.

- 2. Le ST, Hsia RY. Timeliness of Care in US Emergency Departments: An Analysis of Newly Released Metrics From the Centers for Medicare & Medicaid Services. JAMA Internal Medicine. 2014 Sep 15. doi: 10.1001/jamainternmed.2014.3431.

- 3. US Department of Health & Human Services. Emergency Department Throughput Measures. 2013. [Accessed May 1, 2013]. http://www.medicare.gov/hospitalcompare/data/emergency-wait-times.aspx

- 4. EDBA. Emergency Department Benchmarking Alliance. 2011. [Accessed December 9, 2011]. http://edbenchmarking.org/

- 5. Handel D, Fu R, Augustine J, Hsia RY, Shufflebarger CM, Sun BC. Association of Emergency Department and Hospital Operation Characteristics on Elopements and Length of Stay. Ann Emerg Med. 2012 Oct;60(4s):S13. doi: 10.1016/j.jemermed.2013.08.133.

- 6. American Hospital Association. AHA Annual Survey Database. 2014. [Accessed March 27, 2015]. http://www.ahadataviewer.com/book-cd-products/AHA-Survey/

- 7. Welch SJ, Augustine JJ, Dong L, Savitz LA, Snow G, James BC. Volume-related differences in emergency department performance. Jt Comm J Qual Patient Saf. 2012 Sep;38(9):395–402. doi: 10.1016/s1553-7250(12)38050-1.

- 8. Handel DA, Fu R, Vu E, et al. Association of emergency department and hospital characteristics with elopements and length of stay. J Emerg Med. 2014 Jun;46(6):839–846. doi: 10.1016/j.jemermed.2013.08.133.

- 9. Centers for Medicare & Medicaid Services. Cost Reports. 2014. [Accessed March 27, 2015]. https://www.cms.gov/Research-Statistics-Data-and-Systems/Downloadable-Public-Use-Files/Cost-Reports/?redirect=/costreports/

- 10. Medpac. A Data Book: Health care spending and the Medicare program. 2014. [Accessed Dec 29, 2014]. http://www.medpac.gov/documents/publications/jun14databooksec6.pdf

- 11. Graubard BI, Korn EL. Predictive margins with survey data. Biometrics. 1999 Jun;55(2):652–659. doi: 10.1111/j.0006-341x.1999.00652.x.

- 12. Zhang Y, Baik SH, Fendrick AM, Baicker K. Comparing local and regional variation in health care spending. N Engl J Med. 2012 Nov;367(18):1724–1731. doi: 10.1056/NEJMsa1203980.

- 13. National Quality Forum. Risk Adjustment for Socioeconomic Status or Other Sociodemographic Factors. 2014. file:///C:/Users/sunb/Downloads/ra-ses_technical-report.pdf

- 14. Hospital Compare. Timely and effective care measures. 2014. [Accessed March 29, 2015]. http://www.medicare.gov/hospitalcompare/Data/Measures.html

- 15. Pines JM, Decker SL, Hu T. Exogenous Predictors of National Performance Measures for Emergency Department Crowding. Ann Emerg Med. 2012 May 23. doi: 10.1016/j.annemergmed.2012.01.024.

- 16. McHugh M, Van Dyke KJ, Howell E, Adams F, Moss D, Yonek J. Changes in Patient Flow Among Five Hospitals Participating in a Learning Collaborative. Journal for Healthcare Quality. 2011 Sep 13. doi: 10.1111/j.1945-1474.2011.00163.x.

- 17. Bradley EH, Curry LA, Webster TR, et al. Achieving rapid door-to-balloon times: how top hospitals improve complex clinical systems. Circulation. 2006 Feb 28;113(8):1079–1085. doi: 10.1161/CIRCULATIONAHA.105.590133.
