Abstract

Adolescence is a time of increased risk for the onset of psychological disorders associated with deficits in face emotion labeling. We used functional magnetic resonance imaging (fMRI) to examine age-related differences in brain activation while adolescents and adults labeled the emotion on fearful, happy and angry faces of varying intensities [0% (i.e. neutral), 50%, 75%, 100%]. Adolescents and adults did not differ in the accuracy of emotion labeling. In the superior temporal sulcus, ventrolateral prefrontal cortex and middle temporal gyrus, adults show an inverted-U-shaped response to increasing intensities of fearful faces and a U-shaped response to increasing intensities of happy faces, whereas adolescents show the opposite patterns. In addition, adults, but not adolescents, show greater inferior occipital gyrus activation to negative (angry, fearful) vs positive (happy) emotions. In sum, when subjects classify subtly varying facial emotions, developmental differences manifest in several ‘ventral stream’ brain regions. Charting the typical developmental course of the brain mechanisms of socioemotional processes, such as facial emotion labeling, is an important focus for developmental psychopathology research.

Keywords: face, emotion, brain, adolescence, development

Introduction

Adolescence involves dramatic socioemotional changes, including heightened reward sensitivity, emotional reactivity and risk-taking, as well as decreased threat avoidance (Steinberg, 2005). Adolescence is a common age of onset for schizophrenia, bipolar disorder and major depression (Merikangas et al., 2010). The occurrence of potentially problematic behavior and psychological disorders in adolescence may reflect adolescent brain immaturity in areas related to threat avoidance and inhibition (e.g. prefrontal cortex) compared to reward sensitivity and emotional reactivity (e.g. amygdala) (Ernst et al., 2006; Casey et al., 2010). These brain regions are also highly involved in emotional face processing. The ability to correctly label subtle-to-overt emotions on faces is essential for adaptive socioemotional development and is disrupted in multiple psychological disorders, including schizophrenia, bipolar disorder and major depression. This study used a novel task to identify age-related differences in the brain regions involved in correctly labeling face emotions of varying degrees of intensity.

The ability to correctly label face emotions undergoes a protracted developmental time-course from early childhood (age 6.5) to adulthood, with adolescence as a potentially key period of transition (Vieillard and Guidetti, 2009; Mancini et al., 2013; Naruse et al., 2013; Susilo et al., 2013). Considerable research, primarily in adults, shows that face processing relies on a core set of brain regions, including the superior temporal cortex, prefrontal cortex, inferior/middle occipital gyrus, inferior parietal lobule and amygdala, all of which respond to faces conveying emotions (Ishai, 2008; Cohen Kadosh et al., 2011; Sarkheil et al., 2013). Much research has probed basic aspects of face processing, such as whether a face is detected; other tasks require no response or a response other than emotion labeling (see reviews by Kanwisher, 2010; Wiggins and Monk, 2013). However, few neuroimaging studies delineate neural correlates of face-emotion labeling, and no studies in either adults or adolescents map regions engaged when choosing labels for several different, subtly expressed emotions. Including subtly expressed emotions may increase ecological validity and better capture developmental differences in face-labeling skills.

As noted earlier, adolescence is a time of increased risk for the onset of psychopathology (Merikangas et al., 2010), whose etiology and maintenance may be related to problems processing facial emotions. Indeed, schizophrenia (Strauss et al., 2010), bipolar disorder (McClure et al., 2005; Brotman et al., 2008b) and depression (Schepman et al., 2012) all typically manifest first in adolescence and are associated with deficits in face emotion identification. Moreover, face emotion labeling deficits are present in adolescents at risk for developing these disorders (Brotman et al., 2008a; Lopez-Duran et al., 2013), suggesting that face emotion labeling deficits may develop in tandem with socioemotional symptoms. Facial emotion labeling deficits in adolescents with or at risk for depression are particularly apparent with more subtle faces compared to full-intensity faces (Schepman et al., 2012; Lopez-Duran et al., 2013). Thus, identifying the neural underpinnings of the ability to correctly identify face emotions is important to establish a foundation for brain research in populations in which face labeling is disrupted.

Despite evidence that the ability to label face emotions continues to mature through adolescence, to our knowledge, no research has compared adults and adolescents on brain regions engaged during face labeling, nor examined non-linear brain responses across subtle-to-overt emotional faces. Thus, this study mapped developmental differences in the brain regions involved in correctly labeling emotions, by comparing adult and adolescent responses to fearful, happy and angry faces across degree of intensity, as well as to the process of correctly labeling emotions more generally (i.e. regardless of intensity). To accomplish this, adults and adolescents were scanned while they performed a novel task developed to probe the face emotion labeling process.

Materials and methods

Participants

Data from 23 healthy adults and 21 adolescents were included. Of 27 adults and 25 adolescents who completed the task, 4 adults and 4 adolescents were excluded from analysis because of excessive head motion (>10% of frames removed after motion censoring; 2 adults); inadequate data in each of the conditions (<62 TRs, corresponding to approximately 8–10 trials, retained per condition after removal of trials with incorrect responses and motion censoring; 1 adult and 4 adolescents); or technical problems resulting in data loss (1 adult). All participants had normal or corrected-to-normal vision. Exclusion criteria consisted of orthodontic braces, other conditions contraindicated for MRI, history of neurological disorders, Intelligence Quotient (IQ) < 80, and psychopathology, as screened by the Kiddie Schedule for Affective Disorders and Schizophrenia (K-SADS; Kaufman et al., 1997) in adolescents and the Structured Clinical Interview for DSM Disorders (SCID; Spitzer et al., 1992) in adults.

Participants were recruited from the greater Washington, D.C. area via advertisements and received monetary compensation. Adult participants and parents of minor participants gave written informed consent; minors gave written assent. Procedures were approved by the Institutional Review Board of the National Institute of Mental Health.

Facial emotion labeling task

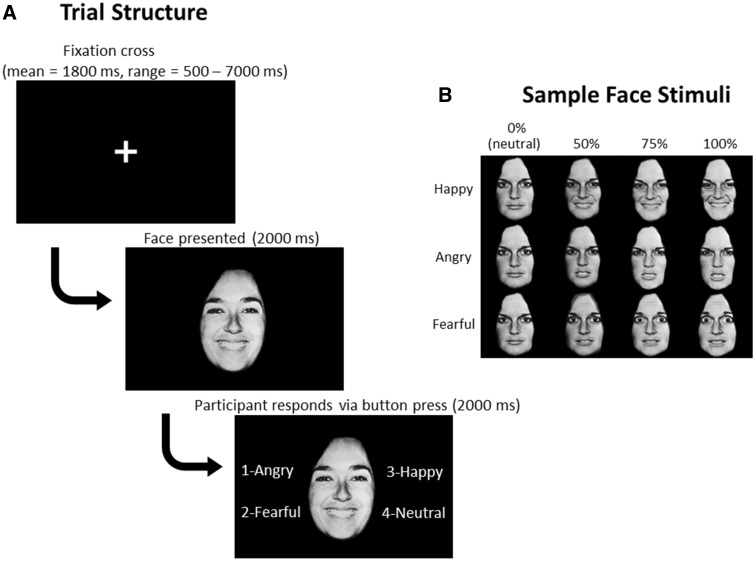

During fMRI acquisition, participants performed a facial emotion labeling task. Face stimuli were from 10 actors (4 male, 6 female) in the Pictures of Facial Affect set (Ekman and Friesen, 1976). Angry, fearful and happy faces were morphed with neutral faces, using FantaMorph Deluxe software (www.fantamorph.com), to create 0% (i.e. neutral), 50, 75 and 100% intensity emotion faces (Figure 1). Across 4 runs of ∼8.5 min each, there were a total of 28 trials per emotion intensity condition (e.g. Angry 50%, Angry 75%, etc.), except for neutral faces (i.e. 0% intensity of each angry, fearful and happy), which had a total of 84 trials (28 trials × 3).

Fig. 1.

Facial emotion labeling task schematic. A. Screenshots from a sample trial. Fixation cross timing varies across trials, and each set of timings was unique to each participant. B. Example of stimuli from one actor. Emotion faces morphed with neutral to create varying intensities of emotion.

In each trial, participants first viewed a fixation cross for a variable amount of time (mean = 1800 ms, ranging from 500 ms to 7000 ms). Timings unique to each participant for the fixation cross (i.e. intertrial intervals) were generated using the stim_analyze script from Analysis of Functional Neuroimages (AFNI; Cox, 1996). This approach allowed us to obtain a robust estimate of baseline. Next, a face was presented for 2000 ms. Then, four options to label the emotion (‘angry’, ‘fearful’, ‘happy’ or ‘neutral’) appeared next to the face for an additional 2000 ms; participants responded via a button box attached to their right hand (MRI Devices, Milwaukee, WI), synchronized to fMRI acquisition. The order of face presentation was randomized. The options indicating which button to press for each emotion label, presented in the same order each time, appeared next to the face on each trial to reduce the working memory load. Of note, because participants were required to hold their responses until the options appeared next to the face, reaction times are not interpretable with this task. The task design reflects careful balancing of multiple considerations, including limiting the influence of potential differences in working memory and reading skills, the timing of the emotion labeling process (i.e. when the decision about which button to push is made vs when the button is pushed), as well as the length of the task and tolerability in the MRI.
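The trial counts and timings described above imply a total task length that can be checked with simple arithmetic. The following Python sketch is illustrative only: the constant and function names are hypothetical, and the even split of trials across the four runs is an assumption that the text does not state.

```python
# Illustrative arithmetic check of the task design described in the text.
# All names are hypothetical; the even split of trials across runs is an assumption.

EMOTIONS = ("angry", "fearful", "happy")
MORPH_LEVELS = (50, 75, 100)      # percent intensity of the non-neutral faces
TRIALS_PER_CONDITION = 28         # per emotion-by-intensity condition
N_RUNS = 4

MEAN_FIXATION_MS = 1800           # mean jittered fixation duration
FACE_ONLY_MS = 2000               # face shown alone
FACE_WITH_OPTIONS_MS = 2000       # face shown with the four response options


def summarize_design():
    """Return trial counts and the mean time occupied by trials in one run."""
    emotional_trials = len(EMOTIONS) * len(MORPH_LEVELS) * TRIALS_PER_CONDITION
    neutral_trials = len(EMOTIONS) * TRIALS_PER_CONDITION  # 0% of each emotion
    total_trials = emotional_trials + neutral_trials
    trials_per_run = total_trials // N_RUNS  # assumes an even split across runs
    mean_trial_s = (MEAN_FIXATION_MS + FACE_ONLY_MS + FACE_WITH_OPTIONS_MS) / 1000
    return {
        "neutral_trials": neutral_trials,
        "total_trials": total_trials,
        "trials_per_run": trials_per_run,
        "mean_run_seconds": trials_per_run * mean_trial_s,
    }


if __name__ == "__main__":
    for key, value in summarize_design().items():
        print(key, value)
```

Under these assumptions, trials occupy about 487 s of each run on average, which is compatible with the reported run length of roughly 8.5 min.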

Behavioral data analysis

To examine whether accuracy to identify face emotion differs by age group, emotion and intensity level, we conducted an ANOVA with Age Group (adult vs adolescent) as a between-subjects factor and Emotion (angry vs fearful vs happy) and Intensity (0% vs 50% vs 75% vs 100%) as within-subjects factors. To investigate significant interactions, false discovery rate (FDR) corrected pairwise post hoc comparisons were performed.
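The text does not state which FDR procedure was used for the post hoc comparisons. As a minimal sketch, assuming the widely used Benjamini-Hochberg step-up procedure, adjusted P values for a family of pairwise comparisons could be computed as follows. The function name and the example P values are hypothetical and are not taken from the study.

```python
def benjamini_hochberg(p_values):
    """Return Benjamini-Hochberg adjusted P values in the input order.

    Assumption: the study's FDR correction follows this procedure;
    the text does not specify the method.
    """
    m = len(p_values)
    # Indices of the P values sorted from smallest to largest.
    order = sorted(range(m), key=lambda i: p_values[i])
    adjusted = [0.0] * m
    running_min = 1.0
    # Walk from the largest P value down, enforcing monotonicity.
    for rank in range(m, 0, -1):
        idx = order[rank - 1]
        running_min = min(running_min, p_values[idx] * m / rank)
        adjusted[idx] = running_min
    return adjusted


if __name__ == "__main__":
    example = [0.01, 0.04, 0.03]  # hypothetical raw P values
    print(benjamini_hochberg(example))
```

A comparison is then declared significant when its adjusted P value falls below the chosen FDR level (e.g. 0.05).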

fMRI acquisition

MRI data were acquired using a 3T GE MR750 scanner with a 32-channel head coil. Participants viewed stimuli projected onto a screen via mirrors. Blood oxygen level dependent (BOLD) images were acquired in 47 contiguous axial slices parallel to the anterior commissure-posterior commissure (AC-PC) line, covering the whole brain, using an echoplanar single-shot gradient echo pulse sequence (matrix size = 96 × 96, repetition time (TR) = 2300 ms, echo time (TE) = 25 ms, flip angle = 50°, field of view (FOV) = 240 mm, voxel size = 2.5 × 2.5 × 2.6 mm). A high-resolution T1-weighted anatomical image was acquired in the axial plane for spatial normalization (124 1.2-mm slices, flip angle = 15°, matrix = 256 × 256, FOV = 240 mm).

Analytic plan

fMRI preprocessing

fMRI data were preprocessed as part of the standard processing stream using AFNI. The first four TRs of each run were discarded to allow the magnet to reach steady state, leaving 222 TRs for analysis in each of the four runs. Slice timing correction was performed. In addition, motion correction, affine alignment of the EPI to the T1 image and of the T1 image to the Talairach template were combined and applied as a single per-volume transformation, resulting in a final voxel size of 2.5 × 2.5 × 2.5 mm. Images also underwent spatial smoothing (full width at half maximum blur estimates xyz = 6.14, 6.07, 5.65) and intensity scaling.

Individual level models

For each participant, trials in which participants labeled emotion correctly were categorized by emotion (fearful, happy, angry) and intensity (0%, 50%, 75%, 100%). The resulting event types (i.e. conditions) were modeled as regressors convolved with AFNI’s γ-variate basis function over 4000 ms of face presentation for each trial (2000 ms before and 2000 ms after options to label the face appear). Incorrect trials were modeled with a nuisance regressor for the main analysis; for the secondary analysis including all trials, regardless of accuracy, this nuisance regressor was omitted. Motion parameters (estimated in the x, y, z, roll, pitch, yaw directions) and fourth-degree polynomials modeling low-frequency drift, based on run durations of 508 s, were included in the baseline model. To address excessive head motion, TR pairs with more than 1 mm framewise displacement were censored. Beta coefficients were estimated for each voxel and each regressor. The beta images, which represented estimated activation in each condition for each participant, were then used in group-level analyses.
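The motion censoring rule can be sketched as follows. This is not the AFNI implementation: it assumes that framewise displacement is the Euclidean norm of the TR-to-TR change in the six motion parameters (treating translations in mm and rotations in degrees on a common scale) and that both TRs of an offending pair are censored. The function names are hypothetical. The second helper mirrors the participant-level exclusion criterion of more than 10% of frames removed.

```python
from math import sqrt


def censor_mask(motion_params, limit=1.0):
    """Return one flag per TR: True = keep, False = censored.

    motion_params: sequence of 6-tuples (x, y, z, roll, pitch, yaw), one per TR.
    Assumption: displacement is the Euclidean norm of the change between
    consecutive TRs, and both TRs of a pair above `limit` are censored.
    """
    keep = [True] * len(motion_params)
    for t in range(1, len(motion_params)):
        diffs = (a - b for a, b in zip(motion_params[t], motion_params[t - 1]))
        displacement = sqrt(sum(d * d for d in diffs))
        if displacement > limit:
            keep[t - 1] = False
            keep[t] = False
    return keep


def exceeds_motion_criterion(keep, max_fraction=0.10):
    """True if the fraction of censored TRs is above max_fraction."""
    if not keep:
        return False
    return keep.count(False) / len(keep) > max_fraction


if __name__ == "__main__":
    # Hypothetical motion trace with one abrupt 1.5 mm shift at the third TR.
    still = (0.0, 0.0, 0.0, 0.0, 0.0, 0.0)
    moved = (1.5, 0.0, 0.0, 0.0, 0.0, 0.0)
    flags = censor_mask([still, still, moved, moved])
    print(flags, exceeds_motion_criterion(flags))
```

Censoring both members of a pair reflects the fact that a large displacement between two volumes can corrupt the signal in either of them.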

Group level models

AFNI’s 3dLME was utilized to create a whole-brain linear mixed effects model with Age Group (adult vs adolescent) as a between-subjects factor and Emotion (fearful vs happy vs angry) and Intensity (0%, 50%, 75% and 100%) weighted linearly, quadratically and cubically as within-subjects factors. To identify developmental differences in the brain regions activated when correctly labeling emotions of varying degrees of intensity, we examined interactions of Age Group × Emotion × Intensity-Linear; Age Group × Emotion × Intensity-Quadratic; and Age Group × Emotion × Intensity-Cubic. To identify developmental differences in the brain regions involved in correctly labeling emotions regardless of intensity, we examined the Age Group × Emotion interaction. For all contrasts, the cluster-extent threshold was set to k ≥ 38 (594 mm³) at a height threshold of P < 0.005, equivalent to a whole-brain corrected false positive probability of P < 0.05, as calculated by 3dClustSim, using blur estimates averaged across participants. All activation maps were identified with a mask to include only areas of the brain where 90% of participants had valid data. FDR-corrected post hoc analyses were performed to characterize the interactions in R (www.r-project.org) and SPSS statistical software, using values extracted and averaged from the clusters. As a secondary analysis, we reran the whole-brain model including all trials, regardless of accuracy.
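The text does not report the numerical weights used for the linear, quadratic and cubic Intensity trends. As an illustration only, orthogonal polynomial contrast weights for four intensity levels can be derived by Gram-Schmidt orthogonalization of successive powers of the level values. Whether the analysis used equally spaced weights or weights based on the actual morph percentages is not stated, so the sketch accepts either; the function name is hypothetical.

```python
from math import sqrt


def _dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def orthogonal_polynomial_contrasts(levels, max_degree=3):
    """Return unit-length linear, quadratic, ... contrast weights for `levels`.

    Each contrast sums to zero and is orthogonal to the lower-order ones.
    Assumption: this is a generic construction, not the weights used in the study.
    """
    n = len(levels)
    mean = sum(levels) / n
    centered = [x - mean for x in levels]
    basis = [[1.0] * n]          # degree 0: the constant term
    contrasts = []
    for degree in range(1, max_degree + 1):
        vec = [c ** degree for c in centered]
        # Remove the components along all lower-order terms.
        for b in basis:
            coef = _dot(vec, b) / _dot(b, b)
            vec = [v - coef * bi for v, bi in zip(vec, b)]
        basis.append(vec)
        norm = sqrt(_dot(vec, vec))
        contrasts.append([v / norm for v in vec])
    return contrasts


if __name__ == "__main__":
    # Equally spaced levels give weights proportional to the textbook
    # values (-3, -1, 1, 3), (1, -1, -1, 1) and (-1, 3, -3, 1).
    for weights in orthogonal_polynomial_contrasts([0, 1, 2, 3]):
        print([round(w, 3) for w in weights])
    # Weights based on the actual morph percentages (unequal spacing).
    for weights in orthogonal_polynomial_contrasts([0, 50, 75, 100]):
        print([round(w, 3) for w in weights])
```

With a quadratic contrast of this form, an inverted-U-shaped response across intensity yields a negative trend estimate and a U-shaped response yields a positive one, so the Age Group × Emotion × Intensity-Quadratic interaction tests whether that estimate differs between groups depending on emotion.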

In these analyses, age was used as a dichotomous variable to be consistent with and facilitate comparisons to many prior papers that have used this approach (Thomas et al., 2011; Somerville et al., 2013). Additionally, the dichotomous approach avoided statistical problems due to having fewer participants at the oldest end of the scale. That is, if age was used as a continuous variable, the few oldest participants would have a disproportionate effect on the analyses; a dichotomous approach limits the influence of these few participants. Nevertheless, we reran the analyses with age as a continuous variable.

Lastly, because of previous findings of developmental progression in the amygdala (Guyer et al., 2008), we conducted a region-of-interest (ROI) linear mixed effects analysis with values extracted and averaged across each amygdala. This analysis specifically modeled developmental associations with amygdala activity during correct face emotion labeling, comparing adult and adolescent amygdala responses to fearful, happy and angry faces both (i) across degree of intensity and (ii) regardless of intensity.

Results

Behavior

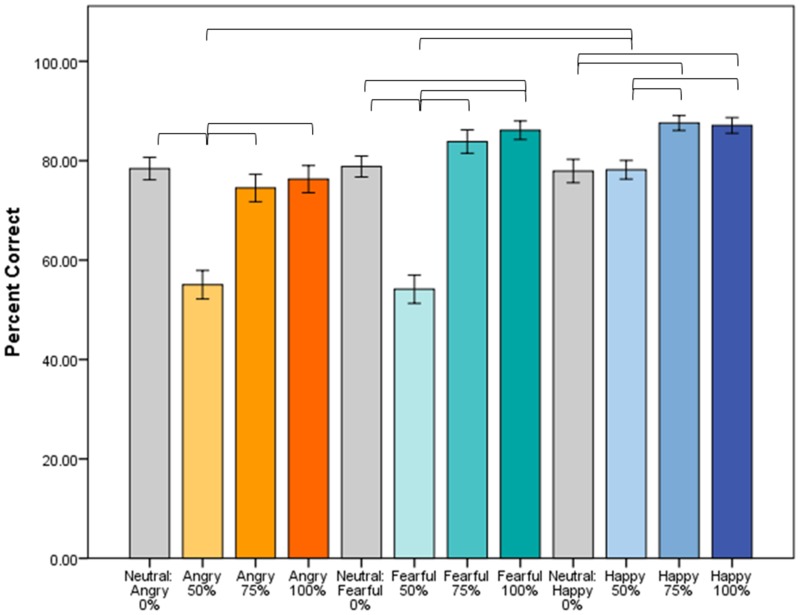

Both adults (78.7% accuracy) and adolescents (74.1% accuracy) performed well above chance (25%) (Table 1). The Age Group × Emotion × Intensity interaction predicting accuracy was not significant (F6,252 < 1); thus, adults and adolescents did not differ in their ability to identify emotions at any intensity level. However, the Emotion × Intensity interaction was significant (F6,252 = 24.02, P < 0.001). Post hoc contrasts indicated that this interaction was driven by 50% intensity faces across all emotions, which are less likely to be identified correctly than other intensity faces. Additionally, angry and fearful faces at 50% intensity are more difficult to identify correctly than 50% happy faces (Figure 2).

Table 1.

Participant characteristics

| | Adults | Adolescents | Test statistic | df | P |
|---|---|---|---|---|---|
| n | 23 | 21 | | | |
| Gender (% female) | 44% | 57% | χ² = 0.82 | 1 | 0.365 |
| Age (years), mean (s.d.) | 29.3 (7.5) | 14.9 (2.4) | | | |
| Age (years), range | 19.3–47.4 | 9.8–18.0 | | | |
| Overall task accuracy (s.d.) | 78.7% (10.6%) | 74.1% (10.5%) | t = 1.47 | 42 | 0.148 |

Fig. 2.

Accuracy of face emotion identification. Across both adults and adolescents (N = 44), accuracy differs by emotion, depending on intensity level (Emotion × Intensity, F6,252 = 24.02, P < 0.001). Fifty percent intensity faces are less likely to be identified correctly than 75% or 100% intensity faces, and angry and fearful faces at 50% intensity are less likely to be identified correctly than 50% happy faces. Adults and adolescents do not differ in identifying emotions of different intensities (Age Group × Emotion × Intensity, F < 1, not shown). Brackets indicate significant pairwise comparisons (P < 0.05). Error bars indicate standard error.

Developmental differences in brain mechanisms of labeling emotions with varying degrees of intensity (Age Group × Emotion × Intensity-Linear, -Quadratic, and -Cubic)

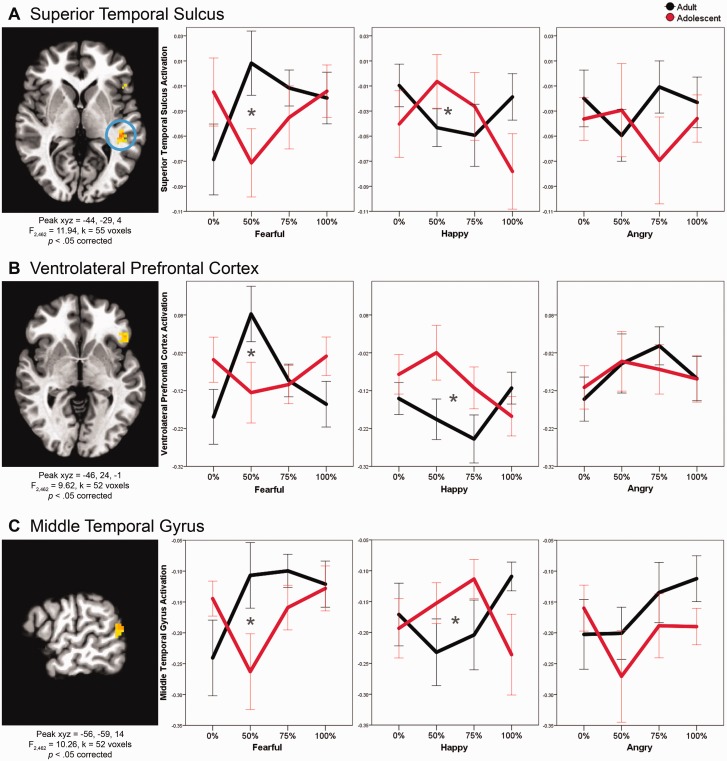

We tested whether brain activation associated with correctly labeling emotions, with intensity modeled linearly, quadratically and cubically, differs in adolescents vs adults by conducting a whole-brain linear mixed model with Age Group × Emotion × Intensity-Linear, -Quadratic and -Cubic interactions. In adolescents vs adults, three brain regions (superior temporal sulcus, ventrolateral prefrontal cortex and middle temporal gyrus) respond differently to faces, depending on emotion and intensity levels. Specifically, the Age Group × Emotion × Intensity-Quadratic interaction was significant in three clusters (superior temporal sulcus: F2,462 = 11.94, xyz = −44, −29, 4, k = 55, P < 0.05 corrected; ventrolateral prefrontal cortex: F2,462 = 9.62, xyz = −46, 24, −1, k = 52, P < 0.05 corrected; middle temporal gyrus: F2,462 = 10.26, xyz = −56, −59, 14, k = 52, P < 0.05 corrected; Figure 3). Thus, in these three regions, adults and adolescents differ on the quadratic shape of their response curve across intensity levels, depending on emotion. For the Age Group × Emotion × Intensity-Linear or -Cubic interactions, no clusters were significant. Post hoc analyses indicated that the Age Group × Emotion × Intensity-Quadratic interactions in all three clusters were driven by significant differences between adult and adolescent response curves to fearful (superior temporal sulcus: P = 0.002; ventrolateral prefrontal cortex: P = 0.003; middle temporal gyrus: P = 0.011) and happy (superior temporal sulcus: P = 0.012; ventrolateral prefrontal cortex: P = 0.021; middle temporal gyrus: P = 0.009) faces. In each of the three brain regions, adults show an inverted-U-shaped response to intensities of fearful faces (i.e. greater activation to middle intensity [50%] and less activation to low [0%] and high [100%] intensities), whereas adolescents show a U-shaped pattern (i.e. less activation to middle intensity and greater activation to low and high intensities). 
In contrast, adults and adolescents demonstrate the opposite pattern to happy faces: whereas adults show a U-shaped pattern across intensities of happy faces, adolescents show an inverted-U-shaped pattern. Adults and adolescents do not differ in their quadratic response curves to intensity levels of angry faces.

Fig. 3.

Developmental differences in brain mechanisms of facial emotion labeling (Age Group × Emotion × Intensity-Quadratic). For all figures, brain image threshold set at whole-brain corrected false positive probability of P < 0.05. Axial view for (A) and (B), sagittal for (C). In all figures, values were extracted from the clusters and averaged for the plots. Asterisks indicate significantly different quadratic trends between adults and adolescents (P < 0.05 FDR-corrected for multiple comparisons).

Additional whole-brain analyses

First, the superior temporal sulcus, ventrolateral prefrontal cortex and middle temporal gyrus clusters in the Age Group × Emotion × Intensity-Quadratic interaction, identified using age as a dichotomous variable, were also identified using age as a continuous variable, albeit at a lower threshold (see Supplementary Results for details). Second, when including both correct and incorrect trials, no clusters were significant in the Age Group × Emotion × Intensity-Linear, -Quadratic or -Cubic interactions.

Amygdala ROI analysis

Next, using an ROI approach to focus specifically on the amygdala, we tested whether adolescents and adults differ on amygdala activation associated with correctly labeling emotions, with intensity modeled linearly, quadratically and cubically. To do this, we used a linear mixed model with Age Group × Emotion × Intensity-Linear, -Quadratic and -Cubic interactions. The Age Group × Emotion × Intensity-Linear (F2,462 < 1), -Quadratic (F2,462 < 1) and -Cubic (F2,462 = 2.05, P = 0.130) interactions were not significant in the bilateral amygdalae. Of note, the only significant contrast in the model was Emotion × Intensity-Cubic (F2,462 = 4.72, P = 0.009). Additional ROI analysis results are in Supplementary Table S2.

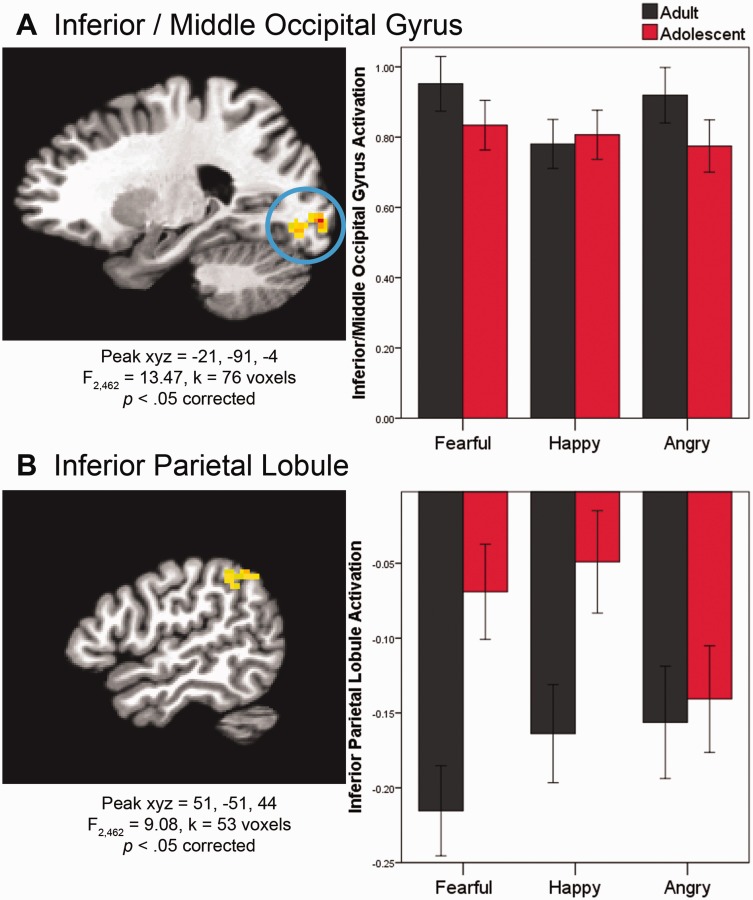

Developmental differences in brain mechanisms of labeling emotions regardless of intensity (Age Group × Emotion)

We then tested whether adults and adolescents differed on brain activation associated with correctly labeling emotions, regardless of intensity, by examining the Age Group × Emotion interaction in the whole-brain linear mixed model. For adolescents vs adults, two brain regions (inferior/middle occipital gyrus and inferior parietal lobule) respond differently during face labeling, depending on emotion. First, the Age Group × Emotion interaction was significant in the inferior/middle occipital gyrus (F2,462 = 13.47, xyz = −21, −91, −4, k = 76, P < 0.05 corrected; Figure 4). Post hoc tests indicated that in adults, the inferior/middle occipital gyrus activates more in response to negative (angry and fearful) than positive (happy) emotional faces (P < 0.001). In contrast, in adolescents, inferior/middle occipital gyrus activation differs between the two negative emotions (fearful > angry, P = 0.021), and neither negative emotion differs significantly from the positive emotion (happy).

Fig. 4.

Developmental differences in brain mechanisms of facial emotion labeling (Age Group × Emotion). Brain images in sagittal view. See caption for Figure 3 for further information on brain images and plots. FDR-corrected post hoc analyses indicate that in (A), for adults, Fearful > Happy and Angry > Happy; for adolescents, Fearful > Angry. In (B), for adults, Fearful > Happy > Angry; for adolescents, Fearful > Angry and Happy > Angry; within both Fearful and Happy, adults > adolescents.

The Age Group × Emotion interaction was also significant in the inferior parietal lobule (F2,462 = 9.08, xyz = 51, −51, 44, k = 53, P < 0.05 corrected; Figure 4). Post hoc analyses indicated that adults deactivate inferior parietal lobule more than adolescents in response to fearful (P = 0.014) and happy (P = 0.036) faces, but adults and adolescents do not differ in their response to angry faces (P = 0.766). Results from additional whole-brain contrasts are in Supplementary Table S1.

Additional whole-brain analyses

First, the inferior/middle occipital gyrus and inferior parietal lobule clusters in the Age Group × Emotion interaction, identified with age as a dichotomous variable, were also identified using age as a continuous variable (see Supplementary Results for details). Second, when the analysis was rerun including both correct and incorrect trials, the Age Group × Emotion interaction was significant in the ventromedial prefrontal cortex/BA 10 (F2,462 = 13.62, P < 0.05 corrected).

Amygdala ROI analysis

Lastly, to focus specifically on the amygdala, using an ROI approach, we tested whether adolescents and adults differ on amygdala activation associated with correctly labeling emotions, regardless of intensity, by examining the Age Group × Emotion interaction in the linear mixed model. The Age Group × Emotion interaction was not significant in the bilateral amygdalae (F2,462 < 1) (Supplementary Table S2).

Discussion

To our knowledge, this is the first study to examine age-related differences in brain regions involved in labeling subtly varying emotional expressions and the first study to examine non-linear brain responses when labeling subtle-to-overt expressions. We documented several differences in the brain regions recruited by adults vs adolescents when correctly labeling face emotions. Specifically, in the superior temporal sulcus, ventrolateral prefrontal cortex and middle temporal gyrus, adults show an inverted-U-shaped response to increasing intensities of fearful faces and a U-shaped response to increasing intensities of happy faces, whereas adolescents show the opposite pattern. In contrast to these findings in regions sensitive to intensity of the face emotion, other regions show developmental differences, regardless of emotion intensity. In the inferior/middle occipital gyrus, we found differences in activation between negative (angry, fearful) and positive (happy) emotions in adults. In adolescents, however, activation in this region discriminates between angry and fearful emotions and, unlike in adults, does not discriminate either negative emotion from happy. Also, in the inferior parietal lobule, adults show more deactivation than adolescents in response to fearful and happy faces, whereas adolescents and adults show similar levels of deactivation to angry faces.

These findings on emotion labeling, particularly in the superior temporal sulcus, ventrolateral prefrontal cortex and middle temporal gyrus, are in line with prior work on other aspects of face processing, which found consistent developmental differences for fearful, and to a lesser extent, happy faces (Monk et al., 2003; Guyer et al., 2008; Johnson and Casey, 2014). We found that for fearful and happy faces, adults and adolescents both showed quadratic response curves, but in opposite directions; the differences between adults and adolescents were most pronounced between the peak and nadir of those curves, which occurred with subtle, ambiguous faces (∼50% intensity), the most difficult for both adults and adolescents to identify (i.e. lowest accuracy). Our results support and extend previous work to show that developmental differences in neural activity are particularly apparent when subjects correctly label the emotion on subtle, ambiguous fearful and happy faces.

Adolescence is characterized by normative decreases in threat avoidance and increases in reward sensitivity that contribute to sensation-seeking and risk-taking behaviors (Steinberg, 2005). Our finding of decreased ventrolateral prefrontal activation to middle intensity fearful faces in adolescents relative to adults may reflect decreased responsivity to social cues alerting one to potential threats in the environment, which in turn could be reflective of the decreased threat avoidance characteristic of adolescence. In addition, greater superior temporal sulcus activation to middle intensity happy faces in adolescents vs adults may reflect greater responsiveness to stimuli associated with reward and approach, which is also characteristic of adolescence. This possible interpretation must be considered tentative, since the superior temporal sulcus has not traditionally been thought to be a primary reward processing region. However, data do suggest that superior temporal sulcus may be involved in processing reward (De Pascalis et al., 2010; Miedl et al., 2015), particularly social aspects of reward (Korn et al., 2012; Morelli et al., 2014). Notably, age-related differences were detected with middle intensity faces, suggesting that diminished threat avoidance and augmented reward sensitivity in adolescents may be most apparent in ambiguous situations where the ‘correct’ response is not obvious. To probe these possibilities directly, future studies could explore interactions among threat avoidance and reward sensitivity, ambiguity and brain activation while labeling faces. Finally, ambiguous stimuli may be more difficult for adolescents to identify, and thus brain responses may reflect effort rather than ambiguity itself. However, adults and adolescents did not differ in accuracy at any emotion intensity, decreasing the likelihood that greater difficulty in adolescents is primarily driving the findings.
Future studies that control for effort, measured more directly, such as through pupil dilation, will be necessary to address this possibility.

Our findings are somewhat in contrast to previous face processing studies that focused on whether or not a face is detected (Cohen Kadosh and Johnson, 2007; Kanwisher, 2010). That is, whereas studies of face detection found increased cortical specialization with development, here, with face emotion labeling, we found a more complex pattern: activation differs with age in a manner that is neither more specialized nor more diffuse. The developmental patterns of face emotion labeling vs face detection may reflect the fact that the former is a more complex, higher-order process that undergoes a more prolonged period of development. Or, it may be that we were able to detect this more complex developmental pattern because our task (labeling subtle-to-overt emotions) is more complex than previous tasks (identifying whether or not a stimulus is a face). Overall, our findings suggest that, even though adults and adolescents perform similarly on the face emotion labeling task, their performance is subserved by different brain mechanisms. However, these findings should be replicated in a longitudinal study designed to rule out cohort effects.

Of note, in our data, the superior temporal sulcus, ventrolateral prefrontal cortex and middle temporal gyrus respond to faces by activating less (i.e. deactivating) relative to baseline. Additionally, both adults and adolescents deactivate inferior parietal lobule to varying degrees in response to faces. This is consistent with previous work on brain mechanisms of detecting overt emotions on faces (Cohen Kadosh et al., 2013), which also found deactivation in superior temporal sulcus during passive viewing. Functional MRI does not provide an absolute baseline measure of activation; instead, in our data, the baseline reflects activation while viewing a fixation cross. While acknowledging the difficulties in interpreting deactivation in functional MRI studies, it is interesting to note that our finding of deactivation during face emotion processing is consistent with previous work.

The regions implicated in labeling emotions in our data have been identified previously as lying within the ‘ventral stream’ involved in representing object identity (Grill-Spector et al., 2008). The exception is the inferior parietal lobule, which may play a complementary role, integrating and utilizing information from ventral and dorsal streams to manage responses (Singh-Curry and Husain, 2009). In particular, these ventral stream regions, including the inferior/middle occipital gyrus, temporal lobe and ventrolateral prefrontal cortex, respond more strongly to faces than to other objects (Grill-Spector et al., 2008; Ishai, 2008) and are sensitive to, and necessary for, recognizing face emotions (Pitcher et al., 2008; Cohen Kadosh et al., 2013). Our study supports the idea that these ventral stream regions represent the classes into which objects can be sorted; whereas prior studies emphasize gross perceptual features of such stimuli (e.g. broad classes like faces, houses, animals, tools, etc., Grill-Spector et al., 2008; Dunsmoor et al., 2014), the current report extends this research by using stimuli that can be arranged into classes based on more fine-tuned features, such as varying intensities of specific emotions, that require greater classification skill. Indeed, prior research suggests that classification skill reflects engagement of these ventral stream regions (Gauthier et al., 1997). Our study extends this work to the realm of development, suggesting that maturation in the ability to parse complex emotions through fine-tuned perceptual features reflects maturation in function within the ventral stream.

Of note, the role of the ventrolateral prefrontal cortex in our study is consistent with previous work that suggests emotion labeling heavily recruits ventrolateral prefrontal cortex, which in turn downregulates amygdala responses to emotional faces (Lieberman et al., 2007). The ventrolateral prefrontal recruitment that we documented may explain why, in this study, we do not detect significant differences in activation in the amygdala for the main effect of emotion or other interactions with emotion, although overall, we do find amygdala activation in response to the faces (Supplementary Results). Future studies directly comparing amygdala–ventrolateral prefrontal effective connectivity during emotional face labeling vs passive viewing or other implicit processing of emotional faces will be necessary to further investigate this issue. This will be particularly important to investigate with regard to age-related differences, given that adolescents and adults have been shown to differentially recruit ventrolateral prefrontal cortex during cognitive regulation of negative social emotion (Silvers et al., 2014) as well as in numerous cognitive control studies (Tamm et al., 2002; Velanova et al., 2008).

Our primary analyses examined neural processes associated with correctly labeling emotions; when incorrect trials were not excluded, no clusters were significant for the Age Group × Emotion × Intensity-Linear, -Quadratic, or -Cubic interactions, and a different cluster was significant for the Age Group × Emotion interaction. Including incorrect with correct trials is advantageous to ensure that neural effects are estimated from the same number of trials per condition and to provide a fuller picture of the emotion labeling process. Of note, however, adults and adolescents did not differ in their accuracy in identifying emotions, and thus, it is unlikely that differences in accuracy are driving our results. Additionally, there is considerable heterogeneity in the types of errors that participants make and therefore in the underlying psychological and neural mechanisms. For example, a trial could be incorrect because a happy face was labeled angry, or a fearful face was labeled neutral, and so on, all of which represent potentially different processes. Thus, combining correct and incorrect trials (and the multiple processes that these represent) would introduce noise. Also, interpretability of clusters identified when including all trials, regardless of accuracy, is suspect, as effects could be due to correct trials, incorrect trials, or any subset of the incorrect trials (anger labeled as fearful, happy labeled as angry, etc.). To directly probe the neural mechanisms underlying labeling incorrect trials, it will be necessary to design a labeling task that generates enough trials of each subset of incorrect responses to investigate these distinct processes separately.
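The heterogeneity of incorrect trials can be illustrated with a minimal sketch. It is not the analysis code used in this study: the trial list is invented for illustration, and the function names and the `min_trials` threshold are hypothetical. The sketch tallies each (shown, labeled) mismatch as its own error subtype and reports which subtypes reach a chosen minimum trial count.

```python
from collections import Counter

# Hypothetical (shown, labeled) pairs for one participant; NOT study data.
trials = [
    ("happy", "happy"), ("happy", "angry"), ("fearful", "fearful"),
    ("fearful", "neutral"), ("angry", "fearful"), ("angry", "angry"),
    ("neutral", "neutral"), ("fearful", "fearful"), ("happy", "happy"),
    ("angry", "fearful"),
]

def tally_error_subtypes(trials):
    """Count each (shown, labeled) mismatch as a separate error subtype."""
    return Counter(
        (shown, labeled) for shown, labeled in trials if shown != labeled
    )

def subtypes_with_enough_trials(error_counts, min_trials):
    """Return error subtypes reaching a (hypothetical) minimum trial count."""
    return sorted(pair for pair, n in error_counts.items() if n >= min_trials)

errors = tally_error_subtypes(trials)
n_correct = sum(1 for shown, labeled in trials if shown == labeled)
usable = subtypes_with_enough_trials(errors, min_trials=2)

print("correct trials:", n_correct)
print("error subtypes:", dict(errors))
print("subtypes with at least 2 trials:", usable)
```

In this toy example the incorrect trials split across three subtypes, only one of which occurs more than once, which is the sense in which pooling them would mix potentially distinct processes.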

Limitations

Our study has at least two limitations. First, although our sample size is comparable to or greater than similar studies on face emotion (e.g. 13 adults, Ishai et al., 2004; 14 adults, Cohen Kadosh et al., 2010; 14 adults, 22 adolescents, Cohen Kadosh et al., 2011; 14 adults, 12 adolescents, 16 children, Cohen Kadosh et al., 2013), it is nonetheless modest (23 adults, 21 adolescents) relative to data from large consortiums. However, because these data repositories depend on gathering data from multiple lab groups, they have largely relied on resting state scans or brief, relatively simple paradigms (Schumann et al., 2010; Di Martino et al., 2014). This article used a novel paradigm (labeling face emotions with varying degrees of subtlety) that was uniquely poised to answer an outstanding question about the development of face emotion processing. Nevertheless, in future research, our findings will need to be replicated with a larger sample.

Second, as the vast majority of face paradigms do, we presented faces without explicitly monitoring attention to the faces. Thus, in this study and others that did not measure attention, it is possible that differences in attention to the faces affect neural response. Of note, the age groups did not differ on emotion labeling accuracy, suggesting that both adults and adolescents directed attention to the faces. However, to test the effects of attention directly, future research using tools that explicitly measure attention, such as eye tracking, will be necessary.

Future directions

This study lays the foundation for a program of research on face emotion labeling. Specifically, as this study documented typical developmental changes in the brain mechanisms underlying face emotion labeling, a natural next step is to chart how the developmental trajectory of these brain mechanisms may veer off-course in adolescents and adults with psychopathology in which face emotion labeling is compromised (e.g. bipolar disorder, McClure et al., 2005; Brotman et al., 2008a; risk for bipolar disorder, Brotman et al., 2008b; schizophrenia, Strauss et al., 2010; depression, Schepman et al., 2012; risk for depression, Lopez-Duran et al., 2013). Identifying the brain mechanisms underlying alterations of specific socioemotional processes, such as emotional face labeling, that may contribute to psychopathology symptoms could yield targets for hypothesis-driven medical and behavioral treatments.

Acknowledgements

We gratefully acknowledge the Functional Magnetic Resonance Imaging Facility at the National Institutes of Health for technical support and the Emotion and Development Branch, including Elizabeth Harkins, for assistance with data collection and processing. We also thank the families who participated.

Funding

This research was supported by the Intramural Research Program of the National Institute of Mental Health, National Institutes of Health, conducted under NIH Clinical Study Protocol 00-M-0198 (NCT000006177).

Supplementary data

Supplementary data are available at SCAN online.

Conflict of interest. None declared.

References

- Brotman M.A., Guyer A.E., Lawson E.S., et al. (2008a). Facial emotion labeling deficits in children and adolescents at risk for bipolar disorder. The American Journal of Psychiatry, 165(3), 385–9.

- Brotman M.A., Skup M., Rich B.A., et al. (2008b). Risk for bipolar disorder is associated with face-processing deficits across emotions. Journal of the American Academy of Child and Adolescent Psychiatry, 47(12), 1455–61.

- Casey B.J., Jones R.M., Levita L., et al. (2010). The storm and stress of adolescence: insights from human imaging and mouse genetics. Developmental Psychobiology, 52(3), 225–35.

- Cohen Kadosh K., Cohen Kadosh R., Dick F., Johnson M.H. (2011). Developmental changes in effective connectivity in the emerging core face network. Cerebral Cortex, 21(6), 1389–94.

- Cohen Kadosh K., Henson R.N., Cohen Kadosh R., Johnson M.H., Dick F. (2010). Task-dependent activation of face-sensitive cortex: an fMRI adaptation study. The Journal of Cognitive Neuroscience, 22(5), 903–17.

- Cohen Kadosh K., Johnson M.H. (2007). Developing a cortex specialized for face perception. Trends in Cognitive Sciences, 11(9), 367–9.

- Cohen Kadosh K., Johnson M.H., Henson R.N., Dick F., Blakemore S.J. (2013). Differential face-network adaptation in children, adolescents and adults. Neuroimage, 69, 11–20.

- Cox R.W. (1996). AFNI: software for analysis and visualization of functional magnetic resonance neuroimages. Computers and Biomedical Research, 29(3), 162–73.

- De Pascalis V., Varriale V., D'Antuono L. (2010). Event-related components of the punishment and reward sensitivity. Clinical Neurophysiology, 121(1), 60–76.

- Di Martino A., Yan C.G., Li Q., et al. (2014). The autism brain imaging data exchange: towards a large-scale evaluation of the intrinsic brain architecture in autism. Molecular Psychiatry, 19(6), 659–67.

- Dunsmoor J.E., Kragel P.A., Martin A., LaBar K.S. (2014). Aversive learning modulates cortical representations of object categories. Cerebral Cortex, 24(11), 2859–72.

- Ekman P., Friesen W.V. (1976). Pictures of Facial Affect. Palo Alto, CA: Consulting Psychologists Press.

- Ernst M., Pine D.S., Hardin M. (2006). Triadic model of the neurobiology of motivated behavior in adolescence. Psychological Medicine, 36(3), 299–312.

- Gauthier I., Anderson A.W., Tarr M.J., Skudlarski P., Gore J.C. (1997). Levels of categorization in visual recognition studied using functional magnetic resonance imaging. Current Biology, 7(9), 645–51.

- Grill-Spector K., Golarai G., Gabrieli J. (2008). Developmental neuroimaging of the human ventral visual cortex. Trends in Cognitive Sciences, 12(4), 152–62.

- Guyer A.E., Monk C.S., McClure-Tone E.B., et al. (2008). A developmental examination of amygdala response to facial expressions. The Journal of Cognitive Neuroscience, 20(9), 1565–82.

- Ishai A. (2008). Let's face it: it's a cortical network. Neuroimage, 40(2), 415–9.

- Ishai A., Pessoa L., Bikle P.C., Ungerleider L.G. (2004). Repetition suppression of faces is modulated by emotion. Proceedings of the National Academy of Sciences of the United States of America, 101(26), 9827–32.

- Johnson D.C., Casey B.J. (2014). Easy to remember, difficult to forget: the development of fear regulation. Developmental Cognitive Neuroscience, 11, 42–55.

- Kanwisher N. (2010). Functional specificity in the human brain: a window into the functional architecture of the mind. Proceedings of the National Academy of Sciences of the United States of America, 107(25), 11163–70.

- Kaufman J., Birmaher B., Brent D., et al. (1997). Schedule for affective disorders and schizophrenia for school-age children—present lifetime version (K-SADS-PL): initial reliability and validity data. Journal of the American Academy of Child and Adolescent Psychiatry, 36, 980–8.

- Korn C.W., Prehn K., Park S.Q., Walter H., Heekeren H.R. (2012). Positively biased processing of self-relevant social feedback. The Journal of Neuroscience, 32(47), 16832–44.

- Lieberman M.D., Eisenberger N.I., Crockett M.J., Tom S.M., Pfeifer J.H., Way B.M. (2007). Putting feelings into words: affect labeling disrupts amygdala activity in response to affective stimuli. Psychological Science, 18(5), 421–8.

- Lopez-Duran N.L., Kuhlman K.R., George C., Kovacs M. (2013). Facial emotion expression recognition by children at familial risk for depression: high-risk boys are oversensitive to sadness. The Journal of Child Psychology and Psychiatry, 54(5), 565–74.

- Mancini G., Agnoli S., Baldaro B., Bitti P.E., Surcinelli P. (2013). Facial expressions of emotions: recognition accuracy and affective reactions during late childhood. Journal of Psychology, 147(6), 599–617.

- McClure E.B., Treland J.E., Snow J., et al. (2005). Deficits in social cognition and response flexibility in pediatric bipolar disorder. The American Journal of Psychiatry, 162(9), 1644–51.

- Merikangas K.R., He J.P., Burstein M., et al. (2010). Lifetime prevalence of mental disorders in U.S. adolescents: results from the National Comorbidity Survey Replication—Adolescent Supplement (NCS-A). Journal of the American Academy of Child and Adolescent Psychiatry, 49(10), 980–9.

- Miedl S.F., Wiswede D., Marco-Pallares J., et al. (2015). The neural basis of impulsive discounting in pathological gamblers. Brain Imaging and Behavior, 9, 887–98.

- Monk C.S., McClure E.B., Nelson E.E., et al. (2003). Adolescent immaturity in attention-related brain engagement to emotional facial expressions. Neuroimage, 20(1), 420–8.

- Morelli S.A., Sacchet M.D., Zaki J. (2014). Common and distinct neural correlates of personal and vicarious reward: a quantitative meta-analysis. Neuroimage, 112, 244–53.

- Naruse S., Hashimoto T., Mori K., Tsuda Y., Takahara M., Kagami S. (2013). Developmental changes in facial expression recognition in Japanese school-age children. The Journal of Medical Investigation, 60(1-2), 114–20.

- Pitcher D., Garrido L., Walsh V., Duchaine B.C. (2008). Transcranial magnetic stimulation disrupts the perception and embodiment of facial expressions. The Journal of Neuroscience, 28(36), 8929–33.

- Sarkheil P., Goebel R., Schneider F., Mathiak K. (2013). Emotion unfolded by motion: a role for parietal lobe in decoding dynamic facial expressions. Social Cognitive and Affective Neuroscience, 8(8), 950–7.

- Schepman K., Taylor E., Collishaw S., Fombonne E. (2012). Face emotion processing in depressed children and adolescents with and without comorbid conduct disorder. Journal of Abnormal Child Psychology, 40(4), 583–93.

- Schumann G., Loth E., Banaschewski T., et al. (2010). The IMAGEN study: reinforcement-related behaviour in normal brain function and psychopathology. Molecular Psychiatry, 15(12), 1128–39.

- Silvers J.A., Shu J., Hubbard A.D., Weber J., Ochsner K.N. (2014). Concurrent and lasting effects of emotion regulation on amygdala response in adolescence and young adulthood. Developmental Science, 18(5), 771–84.

- Singh-Curry V., Husain M. (2009). The functional role of the inferior parietal lobe in the dorsal and ventral stream dichotomy. Neuropsychologia, 47(6), 1434–48.

- Somerville L.H., Jones R.M., Ruberry E.J., Dyke J.P., Glover G., Casey B.J. (2013). The medial prefrontal cortex and the emergence of self-conscious emotion in adolescence. Psychological Science, 24(8), 1554–62.

- Spitzer R.L., Williams J.B., Gibbon M., First M.B. (1992). The Structured Clinical Interview for DSM-III-R (SCID). I: History, rationale, and description. Archives of General Psychiatry, 49(8), 624–9.

- Steinberg L. (2005). Cognitive and affective development in adolescence. Trends in Cognitive Sciences, 9(2), 69–74.

- Strauss G.P., Jetha S.S., Ross S.A., Duke L.A., Allen D.N. (2010). Impaired facial affect labeling and discrimination in patients with deficit syndrome schizophrenia. Schizophrenia Research, 118(1-3), 146–53.

- Susilo T., Germine L., Duchaine B. (2013). Face recognition ability matures late: evidence from individual differences in young adults. The Journal of Experimental Psychology: Human Perception and Performance, 39(5), 1212–7.

- Tamm L., Menon V., Reiss A.L. (2002). Maturation of brain function associated with response inhibition. Journal of the American Academy of Child and Adolescent Psychiatry, 41(10), 1231–8.

- Thomas L.A., Hall J.M., Skup M., Jenkins S.E., Pine D.S., Leibenluft E. (2011). A developmental neuroimaging investigation of the change paradigm. Developmental Science, 14(1), 148–61.

- Velanova K., Wheeler M.E., Luna B. (2008). Maturational changes in anterior cingulate and frontoparietal recruitment support the development of error processing and inhibitory control. Cerebral Cortex, 18(11), 2505–22.

- Vieillard S., Guidetti M. (2009). Children's perception and understanding of (dis)similarities among dynamic bodily/facial expressions of happiness, pleasure, anger, and irritation. The Journal of Experimental Child Psychology, 102(1), 78–95.

- Wiggins J.L., Monk C.S. (2013). A translational neuroscience framework for the development of socioemotional functioning in health and psychopathology. Developmental Psychopathology, 25(4 Pt 2), 1293–309.
