Abstract

Brain-Robot Interaction (BRI), which provides an innovative communication pathway between a human and a robotic device via brain signals, holds promise for helping the disabled in their daily lives. The overall goal of our method is to establish an SSVEP-based experimental procedure by integrating multiple software programs, such as OpenViBE, Choregraphe, and Central software, as well as user-developed programs written in C++ and MATLAB, to enable the study of brain-robot interaction with humanoid robots.

This is achieved by first placing EEG electrodes on a human subject to measure the brain responses through an EEG data acquisition system. A user interface is used to elicit SSVEP responses and to display video feedback in the closed-loop control experiments. The second step is to record the EEG signals of first-time subjects, to analyze their SSVEP features offline, and to train the classifier for each subject. Next, the Online Signal Processor and the Robot Controller are configured for the online control of a humanoid robot. As the final step, the subject completes three specific closed-loop control experiments within different environments to evaluate the brain-robot interaction performance.

The advantage of this approach is its reliability and flexibility because it is developed by integrating multiple software programs. The results show that using this approach, the subject is capable of interacting with the humanoid robot via brain signals. This allows the mind-controlled humanoid robot to perform typical tasks that are popular in robotic research and are helpful in assisting the disabled.

Keywords: Engineering, Issue 105, Neuroscience, brain-robot interaction (BRI), humanoid robot, Cerebot, electroencephalograph (EEG), steady-state visual evoked potential (SSVEP), multiple software integration, canonical correlation analysis (CCA), closed-loop control, telepresence control

Introduction

Brain-Robot Interaction (BRI), which provides an innovative communication pathway between a human and a robotic device via brain signals, holds promise for helping the disabled in their daily lives 1,2. Brain signals can be acquired either invasively or non-invasively by a variety of methods, such as electrocorticography (ECoG), electroencephalography (EEG), and functional magnetic resonance imaging (fMRI). The most commonly used non-invasive method for building a BRI system is to acquire EEG signals from electrodes placed on the scalp. This method is inexpensive, easy to use, and provides an acceptable temporal resolution 3. Among a variety of robotic devices, humanoid robots are advanced as they are created to imitate some of the same physical and mental tasks that humans perform daily. BRI with a humanoid robot will play an important role in assisting the sick and elderly, as well as in performing unsanitary or dangerous jobs. However, control of a humanoid robot through a BRI system is highly challenging, as a humanoid robot with full body movement is developed to perform complex tasks such as personal assistance 4, 5.

Steady-State Visual Evoked Potential (SSVEP) is a type of brain signal evoked by the modulation of a visual stimulus at a given frequency 6. It contains sinusoids at the fundamental and harmonic frequencies of the flickering stimulus, and appears prominently throughout the visual cortex in the occipital region of the scalp 7. SSVEP signals were chosen because an SSVEP-based BRI system yields a relatively high information transfer rate and requires less training 8. Other types of brainwaves, such as event-related potentials (ERPs) 9 or motor-imagery (MI) potentials 10, can also be embedded into this experimental procedure.

Our procedure for brain-robot interaction with humanoid robots is based on Cerebot, a mind-controlled humanoid robot platform consisting of an EEG data acquisition system and a humanoid robot 11. The EEG system is able to record, pre-process and display bio-potential signals acquired by various types of electrodes. It provides multiple analog and digital I/Os and is capable of recording up to 128 signal channels simultaneously at a sampling rate of 30 kHz with 16-bit resolution. Its software development kits in C++ and MATLAB make it easy for users to design experimental procedures. The humanoid robot has 25 degrees of freedom and is equipped with multiple sensors, including 2 cameras, 4 microphones, 2 sonar rangefinders, 2 IR emitters and receivers, 1 inertial board, 9 tactile sensors, and 8 pressure sensors. It provides Choregraphe and a C++ SDK for creating and editing movements and interactive robot behaviors.

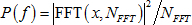

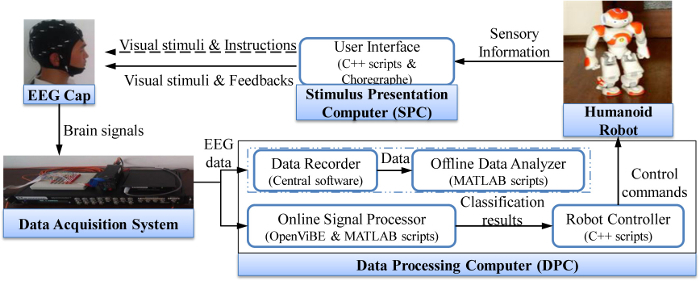

The overall goal of this method is to establish an SSVEP-based experimental procedure by integrating multiple software programs, such as OpenViBE, Choregraphe, and Central software, as well as user-developed programs written in C++ and MATLAB, to enable the study of brain-robot interaction with humanoid robots 11. Figure 1 shows the system structure. The dedicated stimulus presentation computer (SPC) displays the User Interface to provide the subject with visual stimuli, instructions and environmental feedback. The dedicated data processing computer (DPC) runs the Data Recorder and the Offline Data Analyzer in the offline training process, and runs the Online Signal Processor and the Robot Controller for the online control of the humanoid robot. Compared with other SSVEP-based control systems, our system is more reliable, more flexible, and especially easier to reuse and upgrade, as it is developed by integrating a number of standardized software packages, such as OpenViBE, Choregraphe, and Central software, with modules written in C++ and MATLAB.

The following procedure was reviewed and approved by the Tianjin Medical University General Hospital ethics committee, and all subjects gave written consent.

Protocol

1. Acquiring EEG Signals

Explain the experimental procedure to the subject and obtain written informed consent to participate in experiments.

Measure the circumference of the subject's head using a tape measure and select the EEG cap size that is closest to the measurement. The electrode arrangement is based on the "International 10-20 System" 12.

Measure the distance between the nasion and inion. Use a skin marker pencil to mark 10% of the distance as a reference for aligning the cap, and mark the midpoint of the distance as the vertex on the subject's scalp.

Place the EEG cap on the subject by aligning the 10% mark with the midpoint of the FP1 and FP2 electrodes. Position electrode Cz of the cap on the vertex.

Make sure that the Fz, Cz, Pz and Oz are on the midline of the head, and that each pair of electrodes located on either side of the midline are in a horizontal line.

Smear the REF1 and REF2 electrodes with conductive gel. Place the reference electrodes on the left and right mastoids using medical tape. Tighten the chinstrap.

Place a blunt-tipped syringe in the electrode holders and inject conductive gel into each electrode in the following order: first, the "ground" electrode on the forehead and second, the five electrodes used in the experiment, O2, O1, Oz, Pz, and Cz.

Seat the subject in a comfortable chair 60 cm in front of a stimulus monitor. Instruct the subject to keep his/her eyes level with the center of the monitor.

Connect the electrode wires to the EEG data acquisition system. Configure the sampling rate to 1 kHz.

Examine the EEG signal quality on the dedicated DPC. If there is a problem with a particular electrode, re-inject gel to adjust the impedance of the channel.

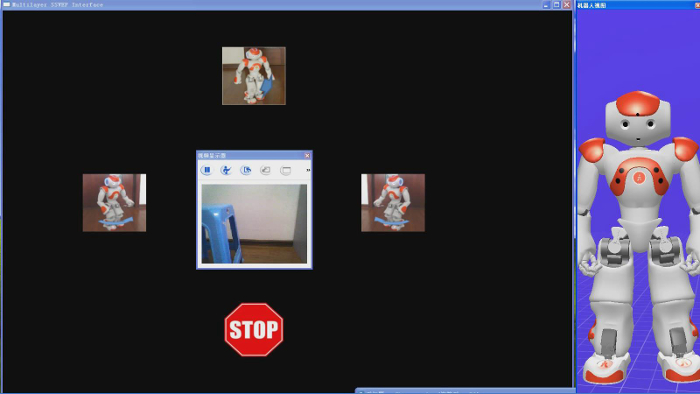

Use the dedicated SPC to flicker four robot images at frequencies of 4.615, 12, 15 and 20 Hz as visual stimuli on the User Interface as shown in Figure 2.

2. Offline Analyzing SSVEP Features

Conduct 32 trials of offline training experiments for each subject and record their brain signals acquired from the EEG system throughout this process. NOTE: this process is only conducted by first-time subjects of the experiment to establish their SSVEP feature vectors and to train the classifier.

When a trial starts, select one stimulus as the target randomly and display a yellow arrow above it.

1 sec later, flicker the four visual stimuli at different frequencies on the User Interface for 5 sec.

Request the subject to focus on the selected stimulus target while keeping his/her body movement to a minimum.

After each trial, give the subject 3 sec to relax, and then start the next trial.

When all trials are finished, read the saved data. Extract a 3-sec data epoch between 2 sec and 5 sec after the trigger which selects a stimulus as the target in each trial.
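The epoch extraction in the step above amounts to simple index arithmetic. The following is a minimal Python sketch, not the authors' MATLAB implementation; the function name `extract_epoch`, the single-channel list layout, and the example trigger position are assumptions made for illustration. At the 1 kHz sampling rate configured earlier, the window from 2 sec to 5 sec after the trigger covers 3,000 samples and skips the 1-sec cue as well as the first second of flickering.

```python
def extract_epoch(samples, trigger_index, fs=1000, start_sec=2.0, end_sec=5.0):
    """Return the samples recorded between start_sec and end_sec after the trigger."""
    start = trigger_index + int(round(start_sec * fs))
    stop = trigger_index + int(round(end_sec * fs))
    if trigger_index < 0 or stop > len(samples):
        raise ValueError("epoch extends beyond the recording")
    return samples[start:stop]


# Hypothetical single-channel recording: 10 sec of samples at 1 kHz,
# with the target-selection trigger at sample 1500.
recording = list(range(10_000))
epoch = extract_epoch(recording, trigger_index=1500)
```

For multichannel data, the same slice would be applied to every channel so that all channels share one time window.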

- Calculate the power spectral density (PSD) of the data epochs using the Offline Data Analyzer in MATLAB scripts. Use the processing algorithm described in detail below:

- Calculate the canonical correlation analysis (CCA) coefficients of the multichannel EEG data with reference data. CCA is a multivariate statistical method that finds the underlying correlation between two sets of data 13. Considering the 3-sec segment of multichannel EEG data X and reference data Y of the same length, use CCA to find the weight vectors, Wx and Wy, that maximize the correlation coefficient ρ between x = XᵀWx and y = YᵀWy. Set the reference data to be periodic signals at the stimulus frequencies f1, f2, f3, f4, which are the feature frequencies of the four visual stimuli.

- Spatially filter the multichannel EEG data X using the calculated CCA coefficients Wx to obtain the one-dimensional filtered data x, which has the most prominent correlation with the linear combination of the reference data.

- Calculate the PSD of the spatially filtered data x using the Fast Fourier Transform (FFT), where NFFT is the sample size of data x, and FFT(x, NFFT) returns the NFFT-point discrete Fourier transform of x.

- Normalize the PSD with respect to its mean value between 3 and 30 Hz, i.e., the average of the power spectrum over that band.
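One common way to write the processing steps above is sketched below. It is consistent with the symbols used in this step but is not necessarily identical to the authors' expressions: the sine-cosine form of the reference data Y, the 1/NFFT scaling of the PSD, and normalization by division are assumptions, as are the symbols f_s (sampling rate) and N (number of samples in the epoch).

```latex
\begin{align}
\rho &= \max_{W_x, W_y}
  \frac{W_x^{T} X Y^{T} W_y}
       {\sqrt{\left(W_x^{T} X X^{T} W_x\right)\left(W_y^{T} Y Y^{T} W_y\right)}},
  \qquad x = X^{T} W_x,\quad y = Y^{T} W_y \\[4pt]
Y &= \begin{bmatrix}
  \sin(2\pi f_1 t) \\ \cos(2\pi f_1 t) \\ \vdots \\ \sin(2\pi f_4 t) \\ \cos(2\pi f_4 t)
  \end{bmatrix},
  \qquad t = \frac{1}{f_s}, \frac{2}{f_s}, \ldots, \frac{N}{f_s} \\[4pt]
\mathrm{PSD}(f) &= \frac{\left|\mathrm{FFT}(x, N_{FFT})\right|^{2}}{N_{FFT}} \\[4pt]
\mathrm{PSD}_{\mathrm{norm}}(f) &= \frac{\mathrm{PSD}(f)}{\left\langle \mathrm{PSD} \right\rangle_{3\text{-}30\,\mathrm{Hz}}}
\end{align}
```

Under this reading, a normalized PSD value well above 1 at a feature frequency indicates a peak that stands out from the average power in the 3 to 30 Hz band.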

Calibrate the classification parameters for the four stimulus frequencies. First, observe the normalized PSD spectrum that corresponds to its stimulus target, and manually configure the frequency with the strongest PSD amplitude as the feature frequency. Based on the feature frequency, divide the normalized PSD data into two sets: one is acquired when the corresponding stimulus is selected as the target, and the other is acquired when the non-target stimuli are selected. Calculate the mean value of each set respectively. Determine the threshold by the median of the two mean values to classify the target and non-target sets 14.
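The threshold rule in the step above can be illustrated with a short Python sketch. It assumes the normalized PSD amplitudes at one feature frequency have already been split into target and non-target trials; the function name `calibrate_threshold` and the example amplitudes are hypothetical. Because the median of two values equals their midpoint, the threshold reduces to the average of the two set means.

```python
from statistics import mean


def calibrate_threshold(target_values, non_target_values):
    """Return the midpoint between the mean target and mean non-target amplitudes."""
    target_mean = mean(target_values)
    non_target_mean = mean(non_target_values)
    return (target_mean + non_target_mean) / 2.0


# Hypothetical normalized PSD amplitudes at one feature frequency.
target = [6.0, 8.0, 7.0]            # trials in which this stimulus was the target
non_target = [1.0, 2.0, 1.5, 1.5]   # trials in which another stimulus was the target
threshold = calibrate_threshold(target, non_target)
```

The same calculation would be repeated for each of the four feature frequencies, giving one threshold per stimulus.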

3. Online Processing Brain Signals

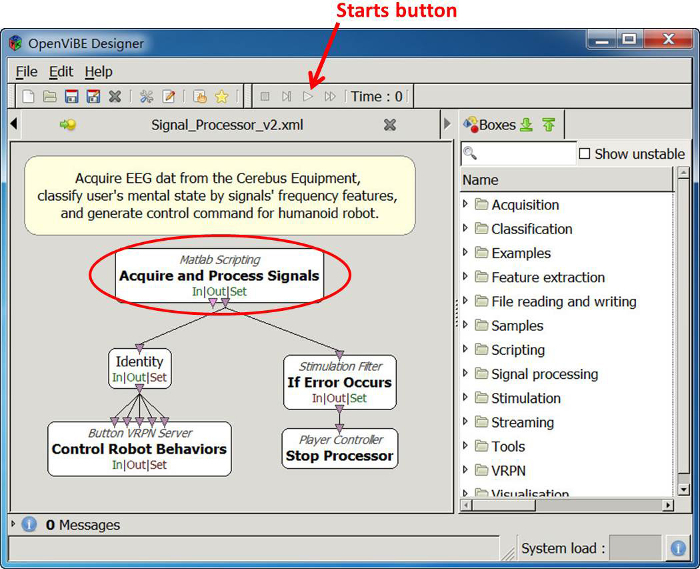

Open the Online Signal Processor shown in Figure 3, which is developed based on the OpenViBE environment and MATLAB scripts, on the DPC for online processing of brain signals.

Double click the Acquire and Process Signal box, which is marked with a red circle in Figure 3, to open the OpenViBE setting window. Configure the classification parameters for the subject: set the sampling rate to 1 kHz, set the time length for FFT to 3 sec, and set the feature frequencies according to the results of offline analysis, e.g., 4.667, 24, 15 and 20 Hz.

Click the Starts button to run the Online Signal Processor, which processes the real-time data using the following algorithm in three steps.

First, the algorithm acquires EEG data from channels Oz, O1, O2, Pz and CPz of the EEG system every 0.5 sec, and extracts the data segment of the last 3 sec for online processing.

Second, the algorithm processes the 3-sec data segment using the algorithms described in step 2.7, and calculates the real-time PSD for classification.

Third, the algorithm classifies the brainwave patterns according to the PSD amplitudes at the four feature frequencies. When the amplitude of one feature frequency is above a given threshold, classify the stimulus flickering at the corresponding frequency as the SSVEP target.
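The three online steps can be sketched in Python as a sliding 3-sec buffer that is refreshed every 0.5 sec, followed by a normalized PSD and a threshold test. This is an illustration under stated assumptions, not the OpenViBE/MATLAB implementation: the input is taken to be an already spatially filtered single-channel signal (the CCA step is omitted), the PSD scaling and normalization by division are assumed, the threshold values are hypothetical, and picking the largest amplitude when several exceed their thresholds is a design choice made here. The demo uses a 200 Hz rate only to keep the pure-Python DFT fast; the protocol uses 1 kHz.

```python
import cmath
import math
from collections import deque


def normalized_psd(x, fs, f_lo=3.0, f_hi=30.0):
    """Return {frequency: PSD / mean PSD} for the DFT bins between f_lo and f_hi."""
    n = len(x)
    psd = {}
    for k in range(math.ceil(f_lo * n / fs), math.floor(f_hi * n / fs) + 1):
        coeff = sum(v * cmath.exp(-2j * math.pi * k * i / n) for i, v in enumerate(x))
        psd[k * fs / n] = abs(coeff) ** 2 / n
    band_mean = sum(psd.values()) / len(psd)
    return {f: p / band_mean for f, p in psd.items()}


def classify(psd, feature_freqs, thresholds):
    """Return the index of the strongest above-threshold feature frequency, or None."""
    best, best_amp = None, 0.0
    for idx, (freq, thr) in enumerate(zip(feature_freqs, thresholds)):
        nearest_bin = min(psd, key=lambda b: abs(b - freq))
        amp = psd[nearest_bin]
        if amp > thr and amp > best_amp:
            best, best_amp = idx, amp
    return best


FS = 200                                   # hypothetical demo rate (protocol: 1 kHz)
FEATURE_FREQS = [4.667, 24.0, 15.0, 20.0]  # example values from the protocol
THRESHOLDS = [4.0, 4.0, 4.0, 4.0]          # hypothetical thresholds

buffer = deque(maxlen=int(3.0 * FS))       # keeps only the last 3 sec of samples
step = int(0.5 * FS)                       # new data arrive every 0.5 sec
results = []
for chunk_start in range(0, 6 * step, step):
    # Simulated SSVEP: a pure 15 Hz sinusoid standing in for filtered EEG.
    chunk = [math.sin(2 * math.pi * 15.0 * i / FS)
             for i in range(chunk_start, chunk_start + step)]
    buffer.extend(chunk)
    if len(buffer) == buffer.maxlen:       # classify only once 3 sec are available
        results.append(classify(normalized_psd(list(buffer), FS),
                                FEATURE_FREQS, THRESHOLDS))
```

With a 3-sec window the frequency resolution is 1/3 Hz, so a feature frequency is matched to the nearest available bin; for example, 4.667 Hz corresponds to the bin at 14/3 Hz.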

4. Connecting the Humanoid Robot

Press the Chest button of the humanoid robot and wait for it to start up. NOTE: this process takes approximately 1 min and is completed when the robot says "OGNAK GNOUK" while its Chest button becomes white.

Establish its Wireless Fidelity (WiFi) connection to the DPC 15.

Open the Robot Controller programmed in Visual C++ scripts on the DPC. The controller receives the classification results from the Online Signal Processor via Virtual-Reality Peripheral Network (VRPN) interface, translates them into the robot commands, and controls the corresponding behaviors of the humanoid robot via wireless connection.

Configure the Robot Controller by inputting the IP address of the robot according to its WiFi connection, defining three sets of robot behaviors which can be easily switched to perform the three different tasks, and setting the execution parameters of these behaviors (e.g., walking speed or distance) to fit the task requirement. NOTE: more details of these behaviors are described in steps 5.2.1, 5.3.1, and 5.4.1.
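The translation from classification results to robot commands can be pictured as a lookup table with one behavior set per task. The Python sketch below is illustrative only: the actual Robot Controller is written in Visual C++ and receives results over VRPN, and the task keys, behavior names, and parameter names used here are hypothetical. The numerical parameters are those given for the three tasks in section 5.

```python
# One behavior set per task; list position = index of the classified SSVEP stimulus.
BEHAVIOR_SETS = {
    "task1_switch": [
        ("step_forward", {"distance_m": 0.15}),
        ("turn_left", {"angle_rad": 0.3}),
        ("turn_right", {"angle_rad": 0.3}),
        ("push_switch", {}),
    ],
    "task2_staircase": [
        ("walk_forward", {"speed_m_per_sec": 0.05}),
        ("turn_left", {"speed_rad_per_sec": 0.1}),
        ("turn_right", {"speed_rad_per_sec": 0.1}),
        ("stop_walking", {}),
    ],
    "task3_balloon": [
        ("step_forward", {"distance_m": 0.15}),
        ("turn_left", {"angle_rad": 0.3}),
        ("turn_right", {"angle_rad": 0.3}),
        ("pick_up_object", {}),
    ],
}


def translate(task, stimulus_index):
    """Map a classified stimulus index (0-3) to a (behavior, parameters) pair."""
    return BEHAVIOR_SETS[task][stimulus_index]


command = translate("task2_staircase", 0)
```

Keeping the sets in one table means that switching tasks only changes the key passed to `translate`, while the classifier output format stays the same.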

Click the Build button of the Visual Studio platform to run the Robot Controller.

Open the Choregraphe program on the SPC. Click the "Connect to" button to open the Connection widget. Select the robot to be controlled according to its IP address, and double click its icon to connect to it.

Open the video monitor from the view menu in the Choregraphe menu bar, and move the monitor to the front of the User Interface to display live video feedback from the robot's camera.

5. Conducting Closed-loop Control Experiments

Request the subject to perform three specific closed-loop control tasks within different environments in order to evaluate the brain-robot interaction performance. NOTE: these tasks, which are important in robotic applications because they are helpful in assisting the disabled and elderly in their daily lives, need to activate different robot behaviors.

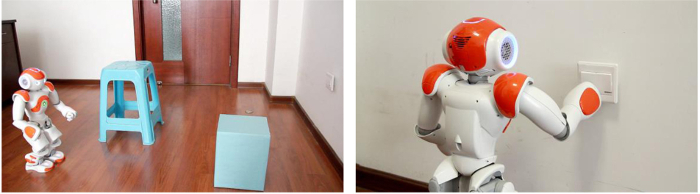

- Conduct the first closed-loop control task to telepresence control the humanoid robot via brain signals to walk through obstacles and push a light switch to turn on the light, as shown in Figure 4.

- To accomplish the first task, control the robot behaviors which are encoded by the four SSVEP stimuli as: stepping forward with a fixed distance of 0.15 m, turning left with a fixed radian of 0.3 rad, turning right with a fixed radian of 0.3 rad, and pushing the switch using its right hand. NOTE: the configuration process is described in step 4.4.

- Inform the subject of the task objective and the four behaviors to be controlled.

- Run the Robot Controller as in steps 4.5-4.7, and start the experiment. Give the subject the freedom to perceive the environment and make decisions based on live video feedback, and to activate the robot behaviors by staring at the corresponding stimuli. NOTE: no instruction or help should be given to the subject unless in emergency situations, e.g., the robot is about to collide with an obstacle.

- Conduct the second task to telepresence control the humanoid robot via brain signals to walk toward the staircase following the exit sign, as shown in Figure 5. When confronting passersby, ask the subject to control the robot to say "excuse me" and wait for the passersby to give way.

- Control the robot behaviors encoded by the four SSVEP stimuli as: walking forward continuously at a speed of 0.05 m/sec, turning left continuously at a speed of 0.1 rad/sec, turning right continuously at a speed of 0.1 rad/sec, and stopping all the walking behaviors.

- Switch the Robot Controller to the posture-dependent control function 16. NOTE: when the robot is walking, this function uses the fourth stimulus as the command of stopping the walking behaviors; when the robot is not walking, it uses the fourth stimulus to control the robot to say "excuse me". The function is implemented in C++ scripts by detecting the robot's walking status.

- Inform the subject of the task objective and the five behaviors to be controlled.

- Run the Robot Controller and start the experiment, as described in step 5.2.3.

- Conduct the third task to telepresence control the humanoid robot to walk toward a balloon target, to pick it up, and to deliver it to the subject's hand, as shown in Figure 6.

- Control the robot behaviors encoded by the four SSVEP stimuli as: stepping forward with a fixed distance of 0.15 m, turning left with a fixed radian of 0.3 rad, turning right with a fixed radian of 0.3 rad, and picking up objects.

- Activate the posture-dependent control function in the Robot Controller to reuse the fourth stimulus as the command of putting down the object when it has already been picked up.

- Inform the subject of the task objective and the five behaviors to be controlled.

- Run the Robot Controller and start the experiment, as described in step 5.2.3.
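The posture-dependent control function used in the second and third tasks lets the fourth stimulus carry two meanings depending on the robot's state. A minimal Python sketch of that logic follows; the authors implement it in C++ by detecting the robot's walking status, whereas the function name, task labels, and the `holding_object` flag here are hypothetical stand-ins.

```python
def resolve_fourth_stimulus(task, is_walking=False, holding_object=False):
    """Return the behavior triggered by the fourth stimulus in the given robot state."""
    if task == "staircase":
        # Second task: stop while walking, otherwise speak to passersby.
        return "stop_walking" if is_walking else "say_excuse_me"
    if task == "balloon":
        # Third task: put the object down only if it has already been picked up.
        return "put_down_object" if holding_object else "pick_up_object"
    # First task: the fourth stimulus always pushes the switch.
    return "push_switch"


walking_choice = resolve_fourth_stimulus("staircase", is_walking=True)
standing_choice = resolve_fourth_stimulus("staircase", is_walking=False)
```

This is how four stimuli can cover the five behaviors that the subject is told about in the second and third tasks.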

Representative Results

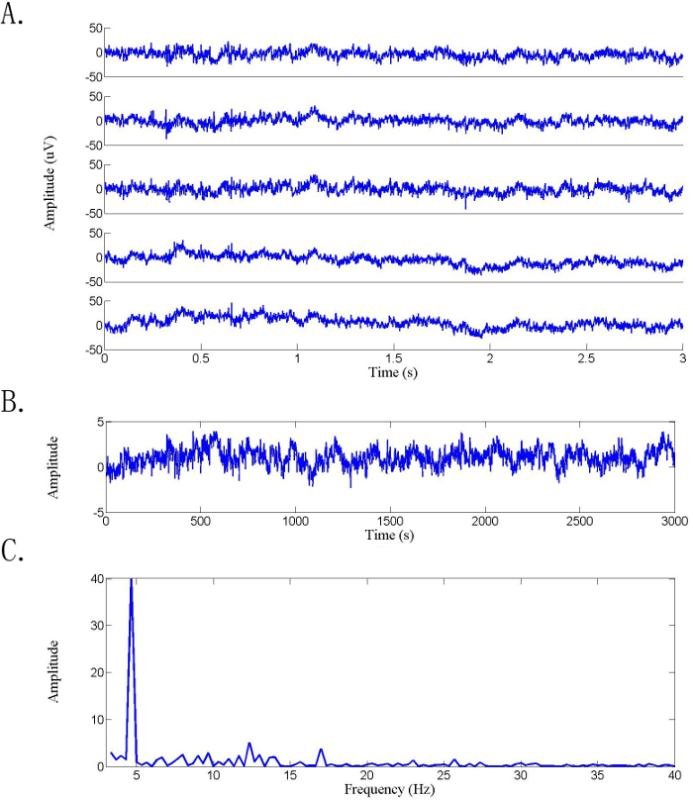

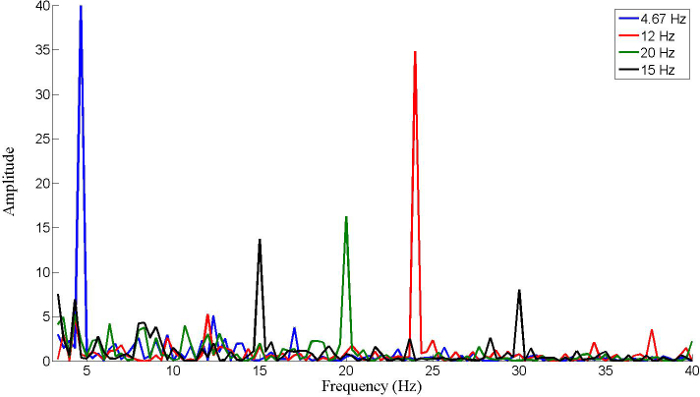

The results presented here were obtained from a male subject with corrected-to-normal vision. Figure 7 shows the procedure of processing EEG data, including extracting a multichannel data epoch (Figure 7A), spatially filtering the data using CCA coefficients (Figure 7B), and calculating the normalized PSD (Figure 7C).

Figure 8 shows the normalized PSD obtained using single trials in which the subject stared at the targets flickering at different frequencies. The prominent peak appears at the fundamental or harmonic frequency of the flickering stimulus. The BRI system maps these patterns of SSVEP responses into commands for the control of different robot behaviors.

Figures 4-6 show the three closed-loop control tasks performed to evaluate the brain-robot interaction performance. These tasks are popular in robotic research and are helpful in assisting the disabled and elderly in their daily lives. Figure 4 shows the telepresence control experiment of walking through obstacles and pushing a switch to turn on the light via brainwaves. Figure 5 shows the telepresence control experiment of walking toward the staircase following the exit sign. Figure 6 shows the telepresence control experiment of picking up a balloon and delivering it to the subject's hand.

Figure 1. System Structure for Brain-Robot Interaction with a Humanoid Robot. The brain signals are measured through the EEG data acquisition system. The user interface elicits SSVEP responses and displays live video feedback in the closed-loop control experiments. For first-time subjects, the Data Recorder and the Offline Data Analyzer are used in the offline training process to analyze their SSVEP features offline and to train the classifier for each subject. Then the Online Signal Processor and the Robot Controller are configured for the online control of a humanoid robot.

Figure 2. User Interface for the SSVEP-based BRI System. The User Interface displays live video feedback in the middle window and flickers four images on the periphery representing humanoid robot behaviors at four frequencies. The 3D representation at the right panel indicates the current posture of the humanoid robot.

Figure 3. Online Signal Processor implemented in the OpenViBE programming environment. The Acquire and Process Signals box marked with the red circle invokes the processing algorithm written in MATLAB scripts. The Starts button on the menu panel starts up the program.

Figure 4. Telepresence Control Experiment of Walking Through Obstacles and Pushing a Light Switch.

Figure 5. Telepresence Control Experiment of Walking Toward the Staircase Following the Exit Sign.

Figure 6. Telepresence Control Experiment of Delivering a Balloon to the Subject.

Figure 7. Procedure of Processing Multichannel EEG Data. (A) Multichannel data epoch extracted from the trial in which the subject is staring at the stimulus at 4.615 Hz; (B) Spatially filtered data using CCA coefficients; (C) Normalized PSD of the spatially filtered data.

Figure 8. Normalized PSD Obtained in Single Trials in Which the Subject Is Staring at Stimuli Flickering at Different Frequencies.

Discussion

This paper presents an SSVEP-based experimental procedure to establish a brain-robot interaction system with humanoid robots by integrating multiple software programs. Because human intent is perceived by interpreting real-time EEG signals, it is critical to verify the electrode connections and EEG signal quality before conducting the experiment. If the signals acquired from all the electrodes are of poor quality, check the connection of the ground and reference electrodes first. If only some of the electrodes show problems, re-inject conductive gel to adjust the impedance of those channels.

Another common issue while acquiring EEG signals is interference from artifacts and noise 17. As the EEG signal has a low signal-to-noise ratio (SNR), artifacts and noise can easily obscure changes in the PSD of the SSVEP responses. It is important to keep the signal amplifier of the EEG system away from power supplies and other noise sources. During the experiment, eye blinks and body movement should be kept to a minimum to reduce artifacts. To further improve the robustness against such interference, our method utilizes a CCA-based technique to interpret multichannel EEG data. The results show that this technique is effective in extracting features from EEG signals containing noise and artifacts.

To begin a new task of closed-loop control of humanoid robots, we need to manually configure the definition and parameters of each robot behavior to fit the new task requirement. This is due to the limited number of control commands available in the current BRI system, and thus can be improved by evoking more types of brainwave patterns. Hwang et al. 18 proposed a dual-frequency stimulation method for producing more visual stimuli. Wang et al. 19, Allison et al. 20, Pan et al. 21, and Li et al. 22 proposed several hybrid methods to combine the SSVEP-based model with other brainwave patterns, including ERPs and MI. It is also feasible to adopt switching techniques based on machine learning or hierarchical architecture to control full body movement of the humanoid robot using limited brainwave patterns 23.

Considering the available flashing frequencies of the LCD monitor 24 and the influence among harmonic components of SSVEPs 25, we scanned all the possible flashing frequencies from 1 to 60 Hz and found that the four frequencies 4.615, 12, 15, and 20 Hz are likely the best choice, as they achieved the highest average accuracy rate for our subjects. Therefore, we used the four stimuli on the interface to control the humanoid robot behaviors, including walking forward, turning left, turning right, and stopping walking/pushing the switch/picking up objects, which are sufficient to control a humanoid robot to accomplish the tasks presented in this manuscript.
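The link between the monitor and the available flashing frequencies can be illustrated with a small Python calculation. It assumes a 60 Hz refresh rate, which is not stated in the protocol, and the helper name `frames_per_cycle` is hypothetical. Under that assumption, each of the four chosen frequencies corresponds to a whole number of video frames per flicker cycle, which is what allows a frame-based stimulus to be rendered without timing jitter.

```python
REFRESH_HZ = 60.0  # assumed monitor refresh rate; not stated in the protocol


def frames_per_cycle(stimulus_hz, refresh_hz=REFRESH_HZ):
    """Return how many video frames make up one full flicker cycle."""
    return refresh_hz / stimulus_hz


# Rounded to two decimals because 4.615 Hz is itself a rounded value (60/13).
periods = {f: round(frames_per_cycle(f), 2) for f in (4.615, 12.0, 15.0, 20.0)}
```

On this reading, 4.615 Hz is simply 60/13 Hz written to three decimals, and 12, 15, and 20 Hz correspond to 5, 4, and 3 frames per cycle.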

The advantages of the BRI system are its reliability and flexibility, as it is developed by integrating multiple software programs, such as OpenViBE, Choregraphe, and Central software, with user-developed programs in C++ and MATLAB. Using standardized software makes it efficient and reliable to design different experimental procedures. Our system is a powerful tool for investigating new algorithms and techniques for brain-robot interaction with a humanoid robot. It can be easily upgraded to explore BRI applications in assisting the sick and elderly, and in performing unsanitary or dangerous jobs.

Disclosures

The authors have nothing to disclose.

Acknowledgments

The authors would like to express their gratitude to Mr. Hong Hu for his assistance in performing the experiments reported in this paper. This work was supported in part by the National Natural Science Foundation of China (No. 61473207).

References

1. McFarland DJ, Wolpaw JR. Brain-Computer Interface Operation of Robotic and Prosthetic Devices. Computer. 2008;41:52–56.

2. Lebedev MA, Nicolelis MA. Brain-machine interfaces: Past, present and future. Trends Neurosci. 2006;29(9):536–546. doi: 10.1016/j.tins.2006.07.004.

3. Wolpaw JR, Birbaumer N, McFarland DJ, Pfurtscheller G, Vaughan TM. Brain-computer interfaces for communication and control. Clin. Neurophysiol. 2002;113:767–791. doi: 10.1016/s1388-2457(02)00057-3.

4. Bell CJ, Shenoy P, Chalodhorn R, Rao RP. Control of a humanoid robot by a noninvasive brain-computer interface in humans. J. Neural. Eng. 2008;5:214–220. doi: 10.1088/1741-2560/5/2/012.

5. Li W, Li M, Zhao J. Control of humanoid robot via motion-onset visual evoked potentials. Front. Syst. Neurosci. 2014;8:247. doi: 10.3389/fnsys.2014.00247.

6. Regan D. Some characteristics of average steady-state and transient responses evoked by modulated light. Electroencephalogr. Clin. Neurophysiol. 1966;20:238–248. doi: 10.1016/0013-4694(66)90088-5.

7. Vialatte FB, Maurice M, Dauwels J, Cichocki A. Steady-state visually evoked potentials: focus on essential paradigms and future perspectives. Prog. Neurobiol. 2010;90:418–438. doi: 10.1016/j.pneurobio.2009.11.005.

8. Bin G, Gao X, Wang Y, Li Y, Hong B, Gao S. A high-speed BCI based on code modulation VEP. J. Neural. Eng. 2011;8:025015. doi: 10.1088/1741-2560/8/2/025015.

9. Sutton S, Braren M, Zubin J, John ER. Evoked-potential correlates of stimulus uncertainty. Science. 1965;150:1187–1188. doi: 10.1126/science.150.3700.1187.

10. Pfurtscheller G, Lopes da Silva HF. Event-related EEG/MEG synchronization and desynchronization: basic principles. Clin. Neurophysiol. 1999;110:1842–1857. doi: 10.1016/s1388-2457(99)00141-8.

11. Zhao J, Meng Q, Li W, Li M, Sun F, Chen G. OpenViBE-based brainwave control system for Cerebot. Proc. IEEE International Conference on Robotics and Biomimetics. 2013. pp. 1169–1174.

12. Homan RW, Herman J, Purdy P. Cerebral location of international 10-20 system electrode placement. Electroencephalogr. Clin. Neurophysiol. 1987;66:376–382. doi: 10.1016/0013-4694(87)90206-9.

13. Bin G, Gao X, Yan Z, Hong B, Gao S. An online multi-channel SSVEP-based brain-computer interface using a canonical correlation analysis method. J. Neural. Eng. 2009;6:046002. doi: 10.1088/1741-2560/6/4/046002.

14. Wang Y, Wang R, Gao X, Hong B, Gao S. A practical VEP-based brain-computer interface. IEEE Trans. Neural Syst. Rehabil. Eng. 2006;14:234–239. doi: 10.1109/TNSRE.2006.875576.

15. Aldebaran Robotics Co; 2015. Setting NAO's WiFi connection - Aldebaran 2.1.3.3 documentation [Internet] Available from: http://doc.aldebaran.com/2-1/nao/nao-connecting.html.

16. Chae Y, Jeong J, Jo S. Toward brain-actuated humanoid robots: asynchronous direct control using an EEG-based BCI. IEEE T. Robot. 2012;28:1131–1144.

17. Croft RJ, Barry RJ. Removal of ocular artifact from the EEG: a review. Neurophysiol. Clin. 2000;30:5–19. doi: 10.1016/S0987-7053(00)00055-1.

18. Hwang HJ, Hwan Kim D, Han CH, Im CH. A new dual-frequency stimulation method to increase the number of visual stimuli for multi-class SSVEP-based brain-computer interface (BCI). Brain Res. 2013;1515:66–77. doi: 10.1016/j.brainres.2013.03.050.

19. Wang M, Daly I, Allison B, Jin J, Zhang Y, Chen L, Wang X. A new hybrid BCI paradigm based on P300 and SSVEP. J Neurosci Methods. 2015;244:16–25. doi: 10.1016/j.jneumeth.2014.06.003.

20. Allison BZ, Jin J, Zhang Y, Wang X. A four-choice hybrid P300 SSVEP BCI for improved accuracy. Brain-Computer Interfaces. 2014;1:17–26.

21. Pan JH, Xie Q, Herman Y, Wang F, Di H, Laureys S, Yu R, Li Y. Detecting awareness in patients with disorders of consciousness using a hybrid brain-computer interface. J. Neural. Eng. 2014;11:056007. doi: 10.1088/1741-2560/11/5/056007.

22. Li J, Ji H, Cao L, Zhang D, Gu R, Xia B, Wu Q. Evaluation and application of a hybrid brain computer interface for real wheelchair parallel control with multi-degree of freedom. Int J Neural Syst. 2014;24:1450014–14. doi: 10.1142/S0129065714500142.

23. Zhao J, Meng Q, Li W, Li M, Chen G. SSVEP-based hierarchical architecture for control of a humanoid robot with mind. Proc. 11th World Congress on Intelligent Control and Automation. 2014. pp. 2401–2406.

24. Zhu D, Bieger J, Molina GG, Aarts RM. A survey of stimulation methods used in SSVEP-based BCIs. Comput Intell Neurosci. 2010;2010:1–12. doi: 10.1155/2010/702357.

25. Muller-Putz GR, Scherer R, Brauneis C, Pfurtscheller G. Steady-state visual evoked potential (SSVEP)-based communication: impact of harmonic frequency components. J Neural Eng. 2005;2:123–130. doi: 10.1088/1741-2560/2/4/008.