Abstract

Since the founding of the first School-Based Health Centers (SBHCs) over 45 years ago, researchers have attempted to measure their impact on child and adolescent physical and mental health and academic outcomes.

A review of the literature finds that SBHC evaluation studies have been diverse, encompassing different outcomes and varying target populations, study periods, methodological designs, and scales.

A complex picture emerges of the impact of SBHCs on health outcomes, which may be a function of the specific health outcomes examined, the health needs of specific communities and schools, the characteristics of the individuals assessed, and/or the specific constellation of SBHC services. SBHC evaluations face numerous challenges that affect the interpretation of evaluation findings, including maturation, self-selection, low statistical power, and displacement effects.

Novel approaches, such as multipronged strategies to maximize participation, the entering-class proxy-baseline design, propensity score methods, dataset linkage, and multisite collaboration, may mitigate documented challenges in SBHC evaluation.

Keywords: School-based Health Centers, Program Evaluation, School Health Services

Background: History of SBHCs

Since the founding of the first School-Based Health Centers (SBHCs) over 45 years ago, researchers have attempted to measure their impact on child and adolescent physical and mental health and academic outcomes [1]. The focus of the current paper is three-fold: first, to provide a brief overview of SBHCs; second, to identify methodological challenges when evaluating SBHCs; and finally, to describe new approaches to designing impact evaluations that can mitigate these methodological challenges. We summarize innovative methodologies that practitioners, researchers, and funders can use to support rigorous evaluations of SBHCs’ impact.

SBHCs are defined as health centers located in schools or on school grounds that provide acute, primary and preventive health care [[2], [3], [4]]. Depending on resources, health needs, state laws, and other school-level and community factors [[5], [6]], SBHCs may provide immunizations; testing and treatment of sexually transmitted infections (STIs); contraception, pregnancy testing, prenatal care; mental health assessment and treatment; crisis intervention and referrals; substance abuse counseling; health education; and dental care. Services are often rendered by a multi-disciplinary team that may include physicians, nurse practitioners, physician assistants, school nurses, health educators, dentists, and mental health providers. SBHCs also vary significantly in their hours of operation, with some open a few hours a week, and others open for the full school day, weekends, and/or through the summer [[2], [3], [7]].

There has been tremendous growth in the establishment of SBHCs across the United States, with a more than ten-fold increase in the number of SBHCs in the past 20+ years, from 150 in 1989 to 1,930 in 2011 [8]. SBHCs are distributed widely but unevenly in 46 of the 50 states, including 232 in New York, 224 in Florida, 172 in California, and 87 in Louisiana. Over half (54%) of SBHCs are located in urban areas, 28% are in rural areas, and 18% are located in suburban areas [[2], [8]].

Conceptually, SBHCs have the potential to improve physical and mental health as well as academic outcomes. Embedded within schools, the only public institution with the capacity to reach the majority of youth, SBHCs have the ability to provide services to most children and adolescents [9]. SBHCs are designed to provide youth-friendly services and to reduce barriers associated with accessing services (e.g., finances, inconvenient hours, transportation) [10]. They have the capacity to teach young people when and how to access health care and to modify attitudes and behaviors regarding such care. SBHCs also have the ability to provide youth with medical, mental health, and dental services to which they might otherwise not have access. Ultimately, healthy children and adolescents are better able to focus and learn, which may improve academic outcomes [11].

SBHC Research: Scope of the Evidence Base

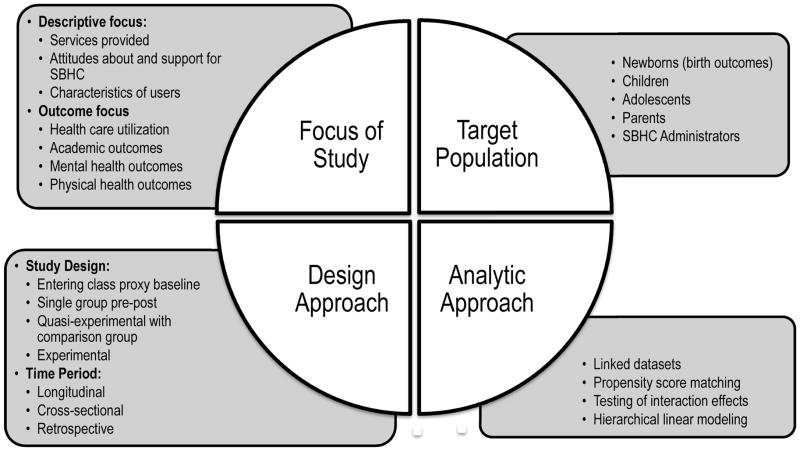

In an era of increasing accountability, there has been interest by researchers, administrators, and funders in examining the impact of SBHCs on multiple health and academic outcomes. A review of the literature finds that SBHC evaluation studies have been diverse, encompassing different outcomes and varying target populations, study periods, methodological designs, and scales (see Figure 1). Note that studies addressing SBHC cost or cost-effectiveness are beyond the scope of this report.

Figure 1. Scope of SBHC evaluation literature: focus, target population, design, and analytic approach

SBHC evaluations examining health care utilization have demonstrated impact on the use of health services, including increased health maintenance visits as well as reduced emergency department visits and hospitalizations. Other evaluations of SBHCs’ behavioral health impact have reported lower rates of suicidality and depression, increased physical activity, increased hormonal contraception use, increased likelihood of having been screened for an STI including HIV, lower pregnancy rates and, among teen parents who used SBHCs, higher newborn birth weights [[12], [13], [14], [15], [16], [17], [18], [19], [20], [21]]. Some studies have also found that access to SBHCs is associated with positive academic outcomes, including increased attendance and grade point average and reduced rate of dropout [[22], [23], [24]].

A recent systematic review of SBHCs’ impact by the Community Preventive Services Task Force of the Centers for Disease Control and Prevention (CDC) [25] echoed the positive findings regarding the effect of SBHCs on health and academic outcomes, but also identified gaps in evidence. The review cited several health outcomes for which evidence was insufficient, including impact on: risk-taking behaviors (e.g., smoking, substance use, nutrition, and physical activity), contraceptive use among male adolescents, and pregnancy complications among female adolescents [26]. In another recent systematic review of SBHCs’ impact on sexual, reproductive, and mental health [27], of the 27 studies included from 1990–2012, only three were categorized as examining outcomes beyond health care utilization or behavioral health risks, and each found positive impacts of SBHCs for only a subset of the primary outcomes studied or some of the subgroups studied [[17], [20], [28]]. While this review did not include studies published before 1990 [29] or after March 2012 [[30], [31]], and omitted several studies published during the period it covered [[19], [23], [32]], it did demonstrate the limited data available on SBHCs’ reproductive and mental health outcomes.

Thus, a complex picture emerges of the impact of SBHCs on health outcomes. Impact may be a function of the specific health outcomes examined, the health needs of specific communities and schools, and/or the specific constellation of SBHC services offered. Moreover, the strength of the effect may vary depending on the population in question: males; rural; undocumented; minorities; lesbian, gay, bisexual and transgender (LGBT) youth; and younger or older students. Untangling mechanisms of impact is necessary to ensure that effective models are put into practice to support positive health outcomes among children and adolescents.

Challenges with SBHC evaluation

The lack of consistent findings may reflect real limitations in SBHCs’ capacity to change health care outcomes. They may also reflect methodological and logistical challenges inherent in conducting research in schools. The challenges of evaluating SBHCs are well documented [[1], [28], [33], [34], [35], [36]] and include, but are not limited to, the following:

Selection bias – Selection bias in an evaluation may obscure or exaggerate the measured impact of an SBHC. Selection bias operates on multiple levels: the processes by which students enroll in particular schools (often a function of neighborhood segregation by race/ethnicity and socioeconomic status), systematic differences between students who do and do not use SBHC services, differential attrition (e.g., school dropout, transfer), and factors that influence parental permission (both for SBHC enrollment and participation in evaluations) [[33], [37], [38], [39]]. SBHCs are, frequently by design, implemented in schools and communities where health care needs are greatest; thus, students attending a school with an SBHC may differ systematically from students in a school selected by researchers to serve as a control or comparison school. These selection biases are likely to be associated with health behaviors, risk-taking behaviors, access to other resources, and health outcomes, introducing bias that is difficult to control for in estimating an effect [[36], [37], [40]]. While statistical adjustment through regression modeling is often used to address selection bias, this method often does so incompletely and cannot adjust for unmeasured sources of bias.

Maturational and historical effects – Evaluations examining changes over time may not be able to disentangle the effect of the SBHC from changes that occur naturally over time as a function of adolescent development. Changes in health behaviors may be a function of development rather than a function of the intervention. For example, because sexual experience increases with age [41], controlling for age and/or grade is essential in SBHC evaluation. Such evaluation designs are also subject to the potential biasing effect of changes in the health care service delivery and policy landscape, or of support or services provided over time by entities other than the SBHC.

Sample size and statistical power – An important component of any research study is the ability (i.e., statistical power) to detect differences in a study sample that exist in “truth.” Increasing the number of participants or schools allows for more precise estimates of a given behavior. Statistical power is also influenced by the variance or “noise” in the data, the expected effect size, and the prevalence or incidence of the outcome of interest. Studies examining outcomes that are relatively rare (e.g., teen birth) or for which the SBHC is expected to have a clinically meaningful but empirically small effect (e.g., reduction in depression symptoms) are frequently statistically underpowered.
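To illustrate why rare outcomes are so often underpowered, the sketch below applies the standard normal-approximation formula for comparing two proportions. The prevalences used are hypothetical values chosen for illustration, not estimates from any SBHC study, and the calculation ignores clustering of students within schools, which would raise the required sample further.

```python
from math import ceil
from statistics import NormalDist


def n_per_group(p_control, p_sbhc, alpha=0.05, power=0.80):
    """Approximate students needed per group to detect p_control vs. p_sbhc
    with a two-sided test (normal approximation, independent observations)."""
    z = NormalDist()
    z_alpha = z.inv_cdf(1 - alpha / 2)   # critical value for the two-sided test
    z_power = z.inv_cdf(power)           # quantile corresponding to the target power
    variance = p_control * (1 - p_control) + p_sbhc * (1 - p_sbhc)
    return ceil((z_alpha + z_power) ** 2 * variance / (p_control - p_sbhc) ** 2)


# Hypothetical scenarios: the same one-third relative reduction,
# once for a rare outcome and once for a common outcome.
rare = n_per_group(0.03, 0.02)
common = n_per_group(0.30, 0.20)
print(rare, common)
```

Under these assumed values, the rare outcome requires more than ten times as many students per group as the common one, even though the relative reduction is identical.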

Heterogeneity in services delivered or received – SBHCs demonstrate considerable heterogeneity in hours of availability, health care provider staffing, and range of services offered. In the most recent SBHC census, 29% of health centers had only primary care staffing, 34% had both primary care and mental health, and 37% had primary care, mental health, and additional staffing (e.g., dental, case management). There is considerable heterogeneity in the services offered, as demonstrated by the fact that half of SBHCs reported that they were prohibited from distributing contraception and only 39% dispensed behavioral health medication [8]. Hours of availability and staffing also influence the range of services provided [7], and students vary considerably in the number of visits and types of services used [17]. Thus, treating SBHCs as homogeneous interventions ignores the possibility that some SBHC models may be more effective than others.

Displacement effects – Displacement occurs when students use an SBHC instead of other sources of health care. Displacement can be beneficial or not. Primary and preventive care delivered by an SBHC may reduce emergency department use; this is generally considered a good outcome and has been demonstrated in several studies based on various research designs [[7], [42]]. Likewise, students may decide to use their SBHC for reproductive health and discontinue using these services at a community family planning clinic. Such displacement, while it may be convenient for the student, would result in no net increase in reproductive health care utilization, and would, therefore, overstate the impact SBHCs have on utilization and overall effectiveness if not considered in evaluation data collection plans.

Accounting for clustering effects – Data collected among students across several different schools must account for the hierarchical structure of the data (i.e., students nested or “clustered” within schools). Youth who attend the same school will be more similar to one another than to students in another school. Statistically, they have correlated errors, a violation of one of the assumptions for regression analyses. Specialized analyses can adjust for these correlations and this nesting of students within schools [43]. Failing to account for nested data in analyses, and thus for the influence of context, may lead to false-positive findings.
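One common way to gauge how much clustering matters is the design effect, 1 + (m - 1) × ICC, where m is the number of students per school and ICC is the intraclass correlation. The sketch below is a minimal illustration that assumes equal cluster sizes; the sample size, cluster size, and ICC are hypothetical values, not figures from the studies cited here.

```python
def design_effect(students_per_school, icc):
    """Variance inflation from clustering, assuming equal-sized schools."""
    return 1 + (students_per_school - 1) * icc


def effective_sample_size(n_students, students_per_school, icc):
    """Number of independent observations the clustered sample is worth."""
    return n_students / design_effect(students_per_school, icc)


# Hypothetical survey: 2,000 students, 100 per school, ICC of 0.02.
deff = design_effect(100, 0.02)
n_eff = effective_sample_size(2000, 100, 0.02)
print(round(deff, 2), round(n_eff))
```

Even a small ICC can shrink the effective sample substantially when many students are surveyed per school, which is why analyses that treat students as independent tend to produce standard errors that are too small.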

New approaches to evaluating SBHCs’ impact

As summarized in Table 1 and discussed below, five recent innovative approaches in SBHC evaluation can be used to address these challenges. The ideas vary in both scale and approach and each provides SBHC clinicians, researchers, and administrators with a new paradigm by which to approach future impact evaluations. While this manuscript was not structured as a systematic review, an example of each approach in practice is also provided.

Table 1. Summary of five recent innovations in SBHC evaluation work

| New approach | Problems addressed | Overview and Key Finding | Partial Citation |
|---|---|---|---|
| Maximizing participation to minimize self-selection bias | | In 29 middle schools, multiple approaches were used, including engaging teachers, providing incentives for both teachers and students, and integrating consent forms with required forms. Employing this multipronged approach yielded an active consent rate of 79%, and a non-response rate of only 10%. | Esbensen et al. (2008) [47]. Active parental consent in school-based research: How much is enough and how do we get it? |
| Entering-class proxy baseline study design | | Simulated cohort using cross-sectional design, with 9th graders in both SBHC and non-SBHC schools serving as unexposed baseline to examine impact over time. While the proportion of sexually active females who ever used a hormonal method was similar among 9th graders in schools with and without an SBHC (21% vs. 20%), among 10th–12th grade sexually experienced females, it was substantially higher among those at SBHC schools (60% vs. 26%, p<0.001). | Minguez et al. (2015) [31]. Reproductive health impact of a school health center. |
| Propensity score methods | | A study using data from a survey modified from the California Healthy Kids from students in 15 schools used baseline (pre-SBHC treatment) data to model and statistically adjust for probability of using SBHC services. In adjusted linear regression models, compared to students who did not use the SBHC, those who ever did were significantly more likely to report caring relationships with adults (β=0.09, 95% CI: 0.06–0.13), high expectations of adults (β=0.08, 95% CI: 0.03–0.13), and meaningful participation in school (β=0.11, 95% CI: 0.06–0.15). The authors also found significant dose-response effect, with higher levels of school assets with increasing use of SBHC. | Stone et al. (2013) [54]. The relationship between use of school-based health centers and student-reported school assets. |
| Linking datasets | | A study in Baltimore linked pregnant teen mothers’ medical records and their newborns’ birth certificates to examine comprehensiveness of pre-natal care and birth outcomes among teens receiving prenatal care in a school-based versus hospital-based setting. In regression models adjusting for baseline differences, teens receiving hospital-based prenatal care were more likely to deliver a low birth weight infant compared to teens who received school-based prenatal care (AOR=3.75, 95% CI: 1.05–13.36). These findings were attenuated after adjusting for adequacy of prenatal care (AOR=2.31, 95% CI: 0.65–8.24). | Barnet et al. (2003) [32]. Reduced low birth weight for teenagers receiving prenatal care at a school-based health center: effect of access and comprehensive care. |
| Collaborative research | | Collection of nationally representative data from more than 9,000 students in 96 schools in New Zealand to allow sufficiently powered analyses stratified by SBHC staffing and hours of availability. An increased rate of staffing by doctors and nurses (hours per week per 100 students) at SBHCs was significantly related to fewer students reporting involvement in pregnancy (AOR = 0.94, 95% CI = 0.89, 0.99). In schools with more than 10 hours of staffing per 100 students, sexually active students had significantly lower odds of reporting involvement with pregnancy compared to sexually active students in schools with no school-based health services (AOR = 0.34, 95% CI = 0.11, 0.99). | Denny et al. (2012) [30]. Association between availability and quality of health services in schools and reproductive health outcomes among students: a multilevel observational study. |

Approach 1: Maximizing Participation to Minimize Self-Selection Bias

Suboptimal participation rates—both in terms of absolute numbers and representativeness—introduce the possibility of bias and have been documented as a challenge [[35], [44], [45], [46]]. Through planning, coordinating with school events, and collaborating with teachers and administrators, researchers can work to obtain high, representative rates of participation while adhering to ethical principles [13], regardless of the format by which parental permission is solicited (i.e., active or passive “opt-out”). School-wide surveys that have been institutionalized often have established methods for informing parents about research and student participation. In some districts, permission forms for participation in research are sent out during the summer with the standard annual school package of materials or included in parent handbooks for parents to review at their leisure. Alternatively, some districts time the delivery of active consent materials with automated calls (“robocalls”) to the home as well as pairing them with other materials that require parental signature or with Parent-Teacher meetings. Non-monetary incentives, such as pizza or ice-cream parties, homework passes, raffles, or appealing to civic duty, are also strategies that can be used to boost participation rates.

A recent evaluation of a school-based violence prevention program across 29 schools reported its best practices for yielding active consent response rates. These approaches included: collaborative meetings with administrators and teachers during the planning process; providing incentives to the school for increasing levels of participation (starting at 80%, with graduated increased incentives for response up to 95%); providing incentives to teachers for actively participating in the collection of consent forms (regardless of whether consent was affirmative); appending evaluation consent forms to required forms that are being sent to parents (e.g., school health forms); and sending forms home with students rather than using mail. Using this multipronged approach, the team achieved participation rates approaching and exceeding 80%, at a cost of $7.93 per participant [47].

Approach 2: Entering-Class Proxy-Baseline Study Design (ECPB)

The Entering-Class Proxy-Baseline (ECPB) design is a simulated cohort design recently used in two SBHC evaluations in New York City [[31], [48]]. Data collection entailed a cross-sectional effort at the beginning of the school year across all school grades (e.g., 9th–12th), with participants from both a control school and an intervention/treatment school. Data from the incoming class (9th graders) serve as baseline data, whereas each ensuing grade at the intervention school is treated as an additional year of exposure to the SBHC intervention.

Three different analyses can be used to assess impact. First, a regression that includes a school-by-grade interaction, and controls for baseline behaviors, can be used to assess whether the SBHC has an impact, and whether that impact differs across different levels of exposure. Second, a set of regressions controlling for potential confounders examines differences between treatment and control schools, first among 9th graders to establish a baseline, and then among 10th, 11th, and 12th graders. This analysis yields results similar to those of the first analysis and is best used in research with small sample sizes, such as when examining subgroups (e.g., males, minorities). Lastly, this design allows for comparisons among three groups: control group, treatment group-SBHC users, and treatment group-SBHC non-users.
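The logic of the school-by-grade interaction can be sketched with a simple unadjusted contrast: the gap between schools among upper-grade students minus the gap among entering 9th graders. The example below uses the proportions of sexually experienced females who had ever used a hormonal method, as reported for the Minguez et al. evaluation in Table 1. It is only an illustration of the contrast; the published analyses used regression models with confounder adjustment, which this sketch does not reproduce, and the function and variable names are hypothetical.

```python
# Proportion who ever used a hormonal method, by school type and grade group
# (values as reported for the Minguez et al. example in Table 1).
used_hormonal = {
    ("sbhc", "9th"): 0.21,
    ("comparison", "9th"): 0.20,
    ("sbhc", "10th-12th"): 0.60,
    ("comparison", "10th-12th"): 0.26,
}


def school_gap(props, grade):
    """SBHC-school proportion minus comparison-school proportion in one grade group."""
    return props[("sbhc", grade)] - props[("comparison", grade)]


def interaction_contrast(props):
    """Exposed-grade gap minus proxy-baseline (9th grade) gap, on the risk-difference scale."""
    return school_gap(props, "10th-12th") - school_gap(props, "9th")


baseline_gap = school_gap(used_hormonal, "9th")
exposed_gap = school_gap(used_hormonal, "10th-12th")
contrast = interaction_contrast(used_hormonal)
print(round(baseline_gap, 2), round(exposed_gap, 2), round(contrast, 2))
```

A near-zero gap among 9th graders supports treating the entering class as an unexposed baseline, and the contrast is the unadjusted analogue of the interaction term in the first analysis.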

This design addresses several evaluation challenges. First, data can be collected at a single point in time and student surveys can be anonymous (increasing the possibility of using passive versus active parental consent); this increases the likelihood of participation, reduces selection bias, and increases the sample size. Additionally, the inclusion of a comparison group reduces the threat of maturation effects. This design is best utilized when: (1) treatment and control schools are demographically proximate, have similar neighborhood characteristics, and draw students from comparable source populations; and (2) schools are exposed to similar district policies and/or local historical events. Under these conditions threats to internal validity, particularly selection and history effects, are minimized. This is also a good design choice when there is an opportunity to sample the full student population at one time point. A one-time cross-sectional study requires fewer resources and places less strain on the school administration and student body.

ECPB is not without limitations. The method assumes a relatively constant set of admission policies over time and no change in school catchment areas. There are difficulties in matching public high schools on key demographic factors that may influence SBHC use. High schools often have unique features (e.g., leadership of the school, historical events occurring at the school). The timing of the fall survey administration can also be difficult; ideally surveys should be administered before the entering-class has access to the SBHC.

Approach 3: Propensity Score Methods (PSM)

Though issues pertaining to self-selection can be attenuated through careful design, documented bias cannot be eradicated, particularly for quasi-experimental designs in which identifying an appropriate comparison group is challenging. Thus, there are several situations in which statistical control may not be sufficient. Some factors that confound the association between SBHCs and outcomes of interest are complex characteristics (e.g., motivation, “healthy volunteer” effects, socioeconomic status, access to and/or use of other primary care providers) that are difficult to measure, and, as such, cannot be adequately controlled for in statistical analyses [[28], [49]]. In other cases, students exposed and not exposed to SBHCs may differ so greatly that there is little overlap between the two groups, leading to a comparison of the “highest” or “best” in one group to the “lowest” or “worst” in another.

Ideal for use in these situations, Propensity Score Methods (PSM) is an approach of matching, stratifying, or weighting within a regression model on a propensity score, which represents the probability of receiving the treatment—in this case SBHC services—modeled as a single covariate [[50], [51]]. Conceptually, in a randomized trial with a 1:1 randomization scheme, the propensity score would be 0.5. With PSM in a quasi-experimental evaluation, the probability of being exposed to an SBHC is modeled based on observed data [52]. Identification of the covariates that inform the estimation of the propensity score, generally based on baseline assessment and published conceptual and empirical evidence, is crucial. With the wealth of published data on the characteristics of SBHC users versus non-users, PSM is a particularly suitable approach for SBHC evaluations.
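A minimal sketch of propensity score weighting follows. To stay self-contained, it estimates the propensity score as the observed share of SBHC users within each level of a single binary covariate; real evaluations would typically fit a logistic regression on many baseline covariates. The data are synthetic and constructed so that the true effect of SBHC use is exactly 1.0, and all field names (`x`, `t`, `y`) are hypothetical.

```python
from collections import defaultdict


def estimate_propensity(records):
    """Propensity score = share of students using the SBHC within each covariate stratum."""
    counts = defaultdict(lambda: [0, 0])  # stratum -> [number treated, number total]
    for r in records:
        counts[r["x"]][0] += r["t"]
        counts[r["x"]][1] += 1
    return {x: treated / total for x, (treated, total) in counts.items()}


def naive_difference(records):
    """Unadjusted mean outcome among users minus mean outcome among non-users."""
    users = [r["y"] for r in records if r["t"] == 1]
    non_users = [r["y"] for r in records if r["t"] == 0]
    return sum(users) / len(users) - sum(non_users) / len(non_users)


def ipw_effect(records):
    """Inverse-probability-weighted estimate of the average treatment effect.
    Requires every stratum to contain both users and non-users (0 < score < 1)."""
    score = estimate_propensity(records)
    total = 0.0
    for r in records:
        p = score[r["x"]]
        total += r["t"] * r["y"] / p - (1 - r["t"]) * r["y"] / (1 - p)
    return total / len(records)


def make_records(x, n_users, n_non_users):
    """Synthetic students: outcome = 1.0 * SBHC use + 2.0 * covariate (no noise)."""
    rows = [{"x": x, "t": 1, "y": 1.0 + 2.0 * x} for _ in range(n_users)]
    rows += [{"x": x, "t": 0, "y": 2.0 * x} for _ in range(n_non_users)]
    return rows


# Students with x = 1 are both more likely to use the SBHC and have higher outcomes.
students = make_records(0, 2, 8) + make_records(1, 8, 2)
naive = naive_difference(students)
weighted = ipw_effect(students)
print(round(naive, 2), round(weighted, 2))
```

In this constructed example the unadjusted comparison overstates the effect because the covariate drives both SBHC use and the outcome, while weighting by the propensity score recovers the built-in effect. This holds only because the single confounder is observed; unmeasured confounders would remain uncorrected.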

A recent study made use of PSM when evaluating the extent to which SBHC utilization promotes students’ developmental assets [53]. The authors used data from 7,314 respondents to the California Healthy Kids Survey (CHKS) to inform estimates of the frequency of SBHC use across four categories. They assessed a dose-response relationship between SBHC use and three key predictors of academic performance (“school assets”): the extent to which students perceived that they had access to caring, supportive teachers; that teachers had high expectations of their future success; and that they had opportunities to participate in activities at school. In unadjusted analyses, students with more frequent use of the SBHC differed significantly from those who did not use the SBHC both on individual socio-demographic characteristics as well as school-level characteristics, such as school size. After matching by propensity score to create 2,981 pairs (matched on the probability they would receive SBHC services), no significant differences between the two groups (at alpha = 0.1) remained, allowing the evaluators to identify a significant effect of SBHCs on school assets. Additional analyses using weighting by propensity score allowed the evaluators to detect significant dose-response effects on all outcomes studied, particularly among students visiting the SBHC more than 10 times, by comparing them to a valid counterfactual.

Using PSM allows evaluators to address the perennial problem of self-selection at the analysis stage, rather than at the design stage. By addressing the self-selection bias introduced by the systematic differences between those who do and do not receive SBHC services, PSM allows evaluators to estimate treatment effects for populations, rather than only for those who were treated [51]. Yet propensity score matching and weighting, while promising, may not be feasible in all evaluations. Specification of the model that generates the propensity scores requires high-quality baseline data collected before outcome assessment.

Approach 4: Linking datasets

Tremendous opportunity exists to link large diverse datasets and to examine the impact of SBHCs on a range of health and academic outcomes with sufficient sample size, while preserving youth and/or school anonymity. Dataset linkage is an ideal approach for situations in which the outcome of interest is not measured or captured by the SBHC or the comparison school, such as pregnancies or outcomes of births, immunization status for students who initiate but do not complete the series at the SBHC, and school connectedness and educational outcomes. For example, one study linked medical records and birth certificates to examine access to prenatal care, comprehensiveness of prenatal care, and birth outcomes among teens receiving prenatal care in a school-based or hospital-based setting. The results indicated that teens who received prenatal care at an SBHC comprehensive adolescent pregnancy prevention program (CAPP) had lower odds of delivering a low birth weight baby than those who received CAPP in a hospital-based setting [32]. Another study linked data collected from SBHCs, a Planned Parenthood clinic, school election system information, the National Association of Latino Elected and Appointed Officials, and the U.S. Department of Commerce, among other sources, to examine the relationship between the presence of sexual and reproductive services at an SBHC and local demand, resource, and constraint variables. This study found that a higher poverty rate and a large proportion of white evangelical Protestants in the area reduced the likelihood of SBHC provision of sexual health services, whereas the number of minority elected officials, Blacks as a percentage of the county population, and having a state SBHC association all increased the likelihood of an SBHC providing sexual health services [54].

Other opportunities exist to link datasets. By linking data from the School-Based Health Alliance’s (SBHA) triennial SBHC census to state-wide school-based health surveys (e.g., CHKS), one could examine relationships between SBHC presence and a range of outcomes, including school connectedness, mental health/suicidality, grades, and alcohol and drug use. There are also opportunities to assess the relationship between comprehensiveness of SBHC services and academic performance, attendance, dropouts, or participation in College Board tests by linking U.S. Department of Education (ED) data to SBHA census data through a school match and then controlling for school and neighborhood characteristics, such as percentage of students with free or reduced lunches, race/ethnicity composition (ED), and neighborhood opportunity structure/poverty (census block data from school attendance zones).
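The school-match step described above can be sketched as a keyed join between census records and school-level survey summaries. Everything in the example is hypothetical: the field names (`school_id`, `services`, `pct_connected`), the identifiers, and the values are invented for illustration and do not reflect the actual SBHA census or CHKS file layouts.

```python
def link_schools(census_rows, survey_rows):
    """Attach SBHC census information to school-level survey summaries by school ID."""
    census_by_id = {row["school_id"]: row for row in census_rows}
    linked = []
    for school in survey_rows:
        match = census_by_id.get(school["school_id"])
        linked.append({
            **school,
            # False means "no census record found", which is not proof that the
            # school lacks an SBHC: a center may simply not have responded.
            "sbhc_in_census": match is not None,
            "services": list(match["services"]) if match else [],
        })
    return linked


# Hypothetical census and survey extracts.
census = [
    {"school_id": "A01", "services": ["primary care", "mental health"]},
    {"school_id": "C03", "services": ["primary care"]},
]
survey = [
    {"school_id": "A01", "pct_connected": 0.62},
    {"school_id": "B02", "pct_connected": 0.55},
    {"school_id": "C03", "pct_connected": 0.58},
]

linked = link_schools(census, survey)
unmatched = [row["school_id"] for row in linked if not row["sbhc_in_census"]]
print(unmatched)
```

Keeping an explicit match flag, rather than silently dropping or recoding unmatched schools, makes it easier to report match rates and to run sensitivity analyses on how unmatched schools are classified.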

The use of secondary data is not without significant limitations. First, there is the issue of matching. Not all SBHCs complete the triennial census; therefore, schools with an SBHC might be incorrectly categorized. Second, there are issues of missing data. Third, the quality of various datasets must always be questioned, explored, and to the extent possible, validated. For example, the national SBHC census questionnaire can be completed by various personnel (e.g., medical directors or administrators) who may have a somewhat different perspective and knowledge base that could lead to biased or inaccurate responses. Fourth, researchers are limited to utilizing pre-determined items that may not measure the construct of interest, an unavoidable byproduct of working with secondary data. A researcher interested in examining the impact of SBHCs on the mental health of sexual minority youth may not be able to adequately answer this research question because the school climate survey does not ask about sexual orientation.

Nonetheless, the use of secondary data makes large-scale, longitudinal studies possible and, as with most secondary data sets, can provide significant cost and time savings. Additionally, the use of secondary data increases the feasibility of looking at the impact of SBHCs nationally and/or regionally. While some hurdles exist (e.g., developing a data security plan, having a clear understanding of the dataset limitations), making use of existing datasets and linking them is a tool that can be used to better understand and assess SBHC impact.

Approach 5: Collaborative Research

There is a dearth of SBHC research resulting from inter-agency partnerships (e.g., schools, SBHCs, community agencies, public health departments) across school districts, counties, and states, despite shared interests in understanding and supporting positive health and academic outcomes for youth. This absence is a function of multiple factors including interpretation of legal restrictions (e.g., Health Insurance Portability and Accountability Act [HIPAA] and Family Educational Rights and Privacy Act [FERPA] laws), financial costs, limited resources, and logistics (e.g., coordination across multiple schools, school districts, states, and government agencies). Nonetheless, large national collaborations are feasible and, when designed properly, could address specific challenges to SBHC evaluation and lead to additional insights about their effects on child and adolescent health outcomes.

Large-scale collaborative evaluations involving numerous SBHCs can: (1) enable evaluators to test how differences across SBHCs (e.g., hours of operation, number of services offered) impact a range of outcomes to better understand what aspects of SBHCs are particularly effective, with sufficient statistical power to conduct stratified analyses; (2) enlist schools and/or clinics and create a more adequate comparison group, similar in demographic and/or health care needs to the served population; (3) develop shared measures for local, state, and national comparisons; and (4) provide the sample size necessary to detect rare outcomes. However, analyses that test hypotheses about youth nested within schools must account for the fact that students within the same sites, schools, and classrooms are not independent (the clustering effect) [43].

To date, the best example of a large-scale national study was conducted in New Zealand [30]. The aim of the study was to examine the relationship between access to and quality of SBHCs and the reproductive health outcomes of contraceptive use and self-reported pregnancy. The design made use of a two-stage random sampling cluster design, in which high schools were first randomly selected to participate and then students within those high schools were randomly selected and asked to participate (schools = 96, students = 9,107). The large number of schools involved allowed the researchers to examine effects pertaining to heterogeneity in clinic services, including total number of health practitioners, hours per week of services provided, frequency of team meetings, interactions with school staff, and the provision of routine comprehensive health screening to year nine students. Taking nesting into consideration, generalized linear models were selected to account for the hierarchical, multi-level structure of the data. As New Zealand is roughly the size of Oregon, it is questionable whether a national version of the study could be conducted in the United States. However, one could argue that several states, or a single state with multiple SBHCs, could work to marshal resources and develop a similar large-scale, multi-school, multi-district, or multi-state research collaborative. Perhaps a place to start would be in New York, California, Oregon, or Florida, where there are a large number of SBHCs with wide-ranging services and strong, coordinated SBHA State Affiliates.
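The two-stage selection described above (schools first, then students within the selected schools) can be sketched as follows. This is an illustrative simplification, not the sampling procedure used in [30]: the sampling frame, the function name, and the choice of a fixed number of students per school are all assumptions made for the example.

```python
import random


def two_stage_sample(frame, n_schools, n_per_school, seed=0):
    """Draw a two-stage cluster sample from a hypothetical sampling frame.

    frame: dict mapping a school identifier to a list of student identifiers.
    Stage 1 randomly selects schools; stage 2 randomly selects students
    within each selected school (a fixed number per school is assumed here).
    """
    rng = random.Random(seed)  # seeded so the draw is reproducible
    selected_schools = rng.sample(sorted(frame), n_schools)
    sample = {}
    for school in selected_schools:
        students = frame[school]
        k = min(n_per_school, len(students))  # small schools contribute all students
        sample[school] = rng.sample(students, k)
    return sample
```

Because students are drawn within schools, the resulting data are nested, which is why a multi-level analysis of the kind described above is needed.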

The ultimate collaborative would be one between the School-Based Health Alliance and the Department of Education, because it would unite two distinct, rich sources of data that would allow for a comprehensive understanding of the association between the provision of school-based health services and health and educational outcomes. Together they could develop a sampling frame that includes the previously elusive participation from appropriate comparison or control schools. Joint efforts could result in sufficiently large sample sizes to detect SBHC effects on health outcomes that have been statistically underpowered in previous investigations. Additionally, the partnership could work towards identifying key research questions that benefit both organizations and develop a standardized question bank of relevant items that schools nationally could include in all student surveys.

While large collaborations and research projects may serve to address many of these issues, such projects are fraught with logistical, political, and methodological challenges. For example, one study highlighted the “guest” issue, in which SBHCs are seen as the invited guest on a school campus, thus limiting the possibility of developing a full partnership [55]. Different professional backgrounds, cultures, mandates, and funding sources are just a few potential barriers to true partnership formation. Therefore, in addition to a shared vision, significant planning, resource sharing, partnership development, and high levels of communication are necessary to move a large-scale collaboration forward. Fortunately, guidelines exist that can support the development of strong, effective collaborations among agencies [[56], [57]].

DISCUSSION

While a solid evidence base exists for the positive impact of SBHCs on improving health care access and utilization, certain health behaviors, and academic outcomes, the evaluation priority has now shifted to a more challenging goal: examining their impact on a variety of population subgroups [26], population health indicators, academic achievement, and cost-effectiveness [34]. The field also needs to conduct evaluations that aim to better understand the mechanisms by which SBHCs may impact the health status or behaviors of specific understudied subpopulations, such as males, sexual minority youth, undocumented students, and rural students.

The focus of this paper has been to propose specific innovative approaches that can be implemented by clinicians, researchers, evaluators, school and SBHC stakeholders, and policymakers to address the limitations that have beset prior SBHC evaluations [[1], [36], [40]]. Given the expanded availability of SBHC services, advances in statistical approaches, and challenges in data availability, it is time to consider new approaches to rigorous SBHC evaluations and to engage in collaborations across SBHC sites. Moreover, the recent Community Preventive Services Task Force systematic review of SBHCs’ impact on health and educational outcomes outlines new areas of research and evaluation to fill in evidence gaps [25]. Much of the research proposed in this paper cannot be achieved without the requisite funding at the local, state, and/or national level. Unfortunately, one of the most significant barriers to SBHC impact studies is financial. Thus, it is crucial to advocate for continued funding and to call on partners at multiple levels, including the Department of Education, NIH, and local and state agencies, to support additional rigorous SBHC evaluations, both financially and through partnerships. Although significant challenges in conducting SBHC evaluation persist, even in the face of new methodological approaches, researchers can ameliorate these limitations by carefully considering context, resources, potential partners, target population, and logistics to identify the methodological approaches best suited to their specific research questions and settings.

Implications and Contributions

Gaps in the evidence base of SBHCs’ impact may reflect methodologic challenges in evaluating SBHCs. With the ultimate goal of improving the rigor of SBHC outcomes evaluation, we review these challenges and their implications, and, using examples from the recent literature, identify a methodological approach to address each one.

Acknowledgments

This study was supported in part by Grant Number 1R01HD073386-01A1 from the National Institute of Child Health and Human Development (NICHD).

Footnotes

The contents of this paper are solely the responsibility of the authors and do not necessarily represent official views of NICHD or NIH.

Contributor Information

Melina Bersamin, Prevention Research Center.

Samantha Garbers, Heilbrunn Department of Population & Family Health, Columbia University Mailman School of Public Health.

Melanie A. Gold, Department of Pediatrics and Population & Family Health, Columbia University Medical Center and Columbia University Mailman School of Public Health.

Jennifer Heitel, Heilbrunn Department of Population and Family Health, Columbia University Mailman School of Public Health.

Kathryn Martin, Columbia University Mailman School of Public Health.

Deborah A. Fisher, Pacific Institute of Research and Evaluation.

John Santelli, Columbia University Mailman School of Public Health.

References

- 1. Keeton V, Soleimanpour S, Brindis CD. School-based health centers in an era of health care reform: building on history. Current problems in pediatric and adolescent health care. 2012;42:132–158. doi: 10.1016/j.cppeds.2012.03.002.

- 2. Brindis CD, Klein J, Schlitt J, et al. School-based health centers: accessibility and accountability. Journal of Adolescent Health. 2003;32:98–107. doi: 10.1016/s1054-139x(03)00069-7.

- 3. Alvarez-Uria G, Midde M, Pakam R, et al. Predictors of attrition in patients ineligible for antiretroviral therapy after being diagnosed with HIV: data from an HIV cohort study in India. BioMed research international. 2013. doi: 10.1155/2013/858023.

- 4. Dryfoos J. School-based health clinics: a new approach to preventing adolescent pregnancy? Family planning perspectives. 1985;17:70–75.

- 5. Billy JOG, Grady WR, Wenzlow AT, et al. Contextual influences on school provision of health services. Journal of Adolescent Health. 2000;27:12–24. doi: 10.1016/s1054-139x(99)00123-8.

- 6. Slade EP. The relationship between school characteristics and the availability of mental health and related health services in middle and high schools in the United States. The Journal of Behavioral Health Sciences & Research. 2003;30:382–392. doi: 10.1007/BF02287426.

- 7. Santelli JS, Nystrom RJ, Brindis C, et al. Reproductive health in school-based health centers: findings from the 1998–99 census of school-based health centers. Journal of Adolescent Health. 2003;32:443–451. doi: 10.1016/s1054-139x(03)00063-6.

- 8. Lofink H, Kuebler J, Juszczak L, et al. 2010–2011 School-based health alliance census report. Washington DC: School-Based Health Alliance; 2013.

- 9. Satcher D. Forward to school health policies and programs study (SHPPS): A summary report. Journal of School Health. 1995;65:289.

- 10. Kirby D. The impact of schools and school programs upon adolescent sexual behavior. The Journal of Sex Research. 2002;39:27–33. doi: 10.1080/00224490209552116.

- 11. Basch CE. Healthier students are better learners: a missing link in school reforms to close the achievement gap. New York: Columbia University; 2010.

- 12. Guo JJ, Wade TJ, Keller KN. Impact of school-based health centers on students with mental health problems. Public Health Reports. 2008;123:768–780. doi: 10.1177/003335490812300613.

- 13. Santelli J, Kouzis A, Newcomer S. School-based health centers and adolescent use of primary care and hospital care. Journal of Adolescent Health. 1996;19:267–275. doi: 10.1016/S1054-139X(96)00088-2.

- 14. Allison MA, Crane LA, Beaty BL, et al. School-based health centers: improving access and quality of care for low-income adolescents. Pediatrics. 2007;120:e887–894. doi: 10.1542/peds.2006-2314.

- 15. Guo JJ, Jang R, Keller KN, et al. Impact of school-based health centers on children with asthma. Journal of Adolescent Health. 2005;37:266–274. doi: 10.1016/j.jadohealth.2004.09.006.

- 16. Madkour AS, Harville EW, Xie Y. School-based health and supportive services for pregnant and parenting teens: associations with birth outcomes of infants born to adolescent mothers. Journal of Adolescent Health. 2014;54:S36.

- 17. Kisker EE, Brown RS. Do school based health centers improve adolescents access to health care, health status, and risk taking? Journal of Adolescent Health. 1996;18:335–343. doi: 10.1016/1054-139X(95)00236-L.

- 18. Weist MD, Paskewitz DA, Warner BS, et al. Treatment outcome of school-based mental health services for urban teenagers. Community mental health journal. 1996;32:149–157. doi: 10.1007/BF02249752.

- 19. Ricketts SA, Guernsey BP. Rural schoolbased clinics are adolescents willing to use them and what services do they want. American Journal of Public Health. 2006;96:1588–1592. doi: 10.2105/AJPH.2004.059816.

- 20. Ethier KA, Dittus PJ, DeRosa CJ, et al. School-based health center access, reproductive health care, and contraceptive use among sexually experienced high school students. Journal of Adolescent Health. 2011;48:562–565. doi: 10.1016/j.jadohealth.2011.01.018.

- 21. McNall MA, Lichty LF, Mavis B. The impact of school-based health centers on the health outcomes of middle school and high school students. American Journal of Public Health. 2010;100:1604. doi: 10.2105/AJPH.2009.183590.

- 22. Walker SC, Kerns SE, Lyon AR, et al. Impact of school-based health center use on academic outcomes. Journal of Adolescent Health. 2010;46:251–257. doi: 10.1016/j.jadohealth.2009.07.002.

- 23. Barnet B, Arroyo C, Devoe M, et al. Reduced school dropout rates among adolescent mothers receiving school-based prenatal care. Archives of Pediatrics & Adolescent Medicine. 2004;158:262–268. doi: 10.1001/archpedi.158.3.262.

- 24. Kerns SE, Pullmann MD, Walker SC, et al. Adolescent use of school-based health centers and high school dropout. Archives of Pediatrics & Adolescent Medicine. 2011;165:617–623. doi: 10.1001/archpediatrics.2011.10.

- 25. Guide to Community Preventive Services. Promoting health equity through education programs and policies: school-based health. 2015. Available at: http://www.thecommunityguide.org/healthequity/education/schoolbasedhealthcenters.html.

- 26. Community Preventive Services Task Force. The guide to community preventive services: the community guide. 2015. Available at: http://www.thecommunityguide.org. Accessed June 17, 2015.

- 27. Mason-Jones AJ, Crisp C, Momberg M, et al. A systematic review of the role of school-based healthcare in adolescent sexual, reproductive, and mental health. Systematic reviews. 2012;1:49. doi: 10.1186/2046-4053-1-49.

- 28. Kirby D. School based clinics research results and their implications for future research methods. Evaluation and Program Planning. 1991;14:35–47.

- 29. Zabin LS, Hirsch MB, Smith EA, et al. Evaluation of a pregnancy prevention program for urban teenagers. Family planning perspectives. 1986:119–126.

- 30. Denny S, Robinson E, Lawler C, et al. Association between availability and quality of health services in schools and reproductive health outcomes among students: a multilevel observational study. American Journal of Public Health. 2012;102:e14–20. doi: 10.2105/AJPH.2012.300775.

- 31. Minguez M, Santelli JS, Gibson E, et al. Reproductive health impact of a school health center. Journal of Adolescent Health. 2015;56:338–344. doi: 10.1016/j.jadohealth.2014.10.269.

- 32. Barnet B, Duggan A, Devoe M. Reduced low birth weight for teenagers receiving prenatal care at a school-based health center: effect of access and comprehensive care. Journal of Adolescent Health. 2003;33:349–358. doi: 10.1016/s1054-139x(03)00211-8.

- 33. Balassone ML, Bell M, Peterfreund N. A comparison of users and nonusers of a school-based health and mental health clinic. Journal of Adolescent Health. 1991;12:240–246. doi: 10.1016/0197-0070(91)90017-g.

- 34. Hackbarth D, Gall GB. Evaluation of school-based health center programs and services: the whys and hows of demonstrating program effectiveness. The Nursing clinics of North America. 2005;40:711–724. doi: 10.1016/j.cnur.2005.07.008.

- 35. Jaycox LH, McCaffrey DF, Ocampo BW, et al. Challenges in the evaluation and implementation of school-based prevention and intervention programs on sensitive topics. American Journal of Evaluation. 2006;27:320–336.

- 36. Nabors LA, Weist MD, Reynolds MW. Overcoming challenges in outcome evaluations of school mental health programs. Journal of School Health. 2000;70:206–209. doi: 10.1111/j.1746-1561.2000.tb06474.x.

- 37. Amaral G, Geierstanger S, Soleimanpour S, et al. Mental health characteristics and health-seeking behaviors of adolescent school-based health center users and nonusers*. Journal of School Health. 2011;81:138–145. doi: 10.1111/j.1746-1561.2010.00572.x.

- 38. Parasuraman SR, Shi L. Differences in access to care among students using school-based health centers. The Journal of School Nursing. 2015;31:291–299. doi: 10.1177/1059840514556180.

- 39. Wade TJ, Mansour ME, Guo JJ, et al. Access and utilization patterns of school-based health centers at urban and rural elementary and middle schools. Public Health Reports. 2008;123:739. doi: 10.1177/003335490812300610.

- 40. Geierstanger SP, Amaral G, Mansour M, et al. School-based health centers and academic performance: research, challenges, and recommendations. Journal of School Health. 2004;74:347–352. doi: 10.1111/j.1746-1561.2004.tb06627.x.

- 41. Waddell EN, Orr MG, Sackoff J, et al. Pregnancy risk among black, white, and Hispanic teen girls in New York City public schools. Journal of Urban Health. 2010;87:426–439. doi: 10.1007/s11524-010-9454-4.

- 42. Juszczak L, Melinkovich P, Kaplan D. Use of health and mental health services by adolescents across multiple delivery sites. Journal of Adolescent Health. 2003;32:108–118. doi: 10.1016/s1054-139x(03)00073-9.

- 43. Snijders TA. Power and sample size in multilevel linear models. Encyclopedia of Statistics in Behavioral Science. 2005.

- 44. Anderman C, Cheadle A, Curry S, et al. Selection bias related to parental consent in school-based survey research. Evaluation Review. 1995;19:663–674.

- 45. Dent CW, Galaif J, Sussman S, et al. Demographic, psychosocial and behavioral differences in samples of actively and passively consented adolescents. Addictive Behaviors. 1993;18:51–56. doi: 10.1016/0306-4603(93)90008-w.

- 46. Pokorny SB, Jason LA, Schoeny ME, et al. Do participation rates change when active consent procedures replace passive consent. Evaluation Review. 2001;25:567–580. doi: 10.1177/0193841X0102500504.

- 47. Esbensen FA, Melde C, Taylor TJ, et al. Active parental consent in school-based research: how much is enough and how do we get it? Evaluation Review. 2008;32:335–362. doi: 10.1177/0193841X08315175.

- 48. Gibson EJ, Santelli JS, Minguez M, et al. Measuring school health center impact on access to and quality of primary care. Journal of Adolescent Health. 2013;53:699–705. doi: 10.1016/j.jadohealth.2013.06.021.

- 49. Kaufman JS, Cooper RS, McGee DL. Socioeconomic status and health in blacks and whites: the problem of residual confounding and the resiliency of race. Epidemiology. 1997;8:621–628.

- 50. Rosenbaum PR, Rubin DB. The central role of the propensity score in observational studies for causal effects. Biometrika. 1983;70:41–55.

- 51. Austin PC. An introduction to propensity score methods for reducing the effects of confounding in observational studies. Multivariate Behavioral Research. 2011;46:399–424. doi: 10.1080/00273171.2011.568786.

- 52. Luellen JK, Shadish WR, Clark MH. Propensity scores: an introduction and experimental test. Evaluation Review. 2005;29:530–558. doi: 10.1177/0193841X05275596.

- 53. Stone S, Whitaker K, Anyon Y, et al. The relationship between use of school-based health centers and student-reported school assets. Journal of Adolescent Health. 2013;53:526–532. doi: 10.1016/j.jadohealth.2013.05.011.

- 54. Wald KD, Button JW, Rienzo BA. Morality politics vs political economy: The case of school-based health centers. Social Science Quarterly. 2001;82:221–233.

- 55. Mandel LA. Taking the “guest” work out of school-health interagency partnerships. Public Health Reports. 2008;123:790–797. doi: 10.1177/003335490812300615.

- 56. Guthrie GP, Guthrie LF. Streamlining interagency collaboration for youth at risk. Educational Leadership. 1991;49:17–22.

- 57. Melaville AI, Blank MJ. What it takes: structuring interagency partnerships to connect children and families with comprehensive services: The Education and