Abstract

Evidence-based public health has commonly relied on findings from empirical studies, or research-based evidence. However, this paper advocates that practice-based evidence derived from programmes implemented in real-life settings is likely to be a more suitable source of evidence for inspiring and guiding public health programmes. Selection of best practices from the array of implemented programmes is one way of generating such practice-based evidence. Yet the lack of consensus on the definition and criteria for practice-based evidence and best practices has limited their application in public health so far. To address the gap in literature on practice-based evidence, this paper hence proposes measures of success for public health interventions by developing an evaluation framework for selection of best practices. The proposed framework was synthesised from a systematic literature review of peer-reviewed and grey literature on existing evaluation frameworks for public health programmes as well as processes employed by health-related organisations when selecting best practices. A best practice is firstly defined as an intervention that has shown evidence of effectiveness in a particular setting and is likely to be replicable to other situations. Regardless of the area of public health, interventions should be evaluated by their context, process and outcomes. A best practice should hence meet most, if not all, of eight identified evaluation criteria: relevance, community participation, stakeholder collaboration, ethical soundness, replicability, effectiveness, efficiency and sustainability. Ultimately, a standardised framework for selection of best practices will improve the usefulness and credibility of practice-based evidence in informing evidence-based public health interventions.

Significance for public health.

Best practices are a valuable source of practice-based evidence on effective public health interventions implemented in real-life settings. Yet, despite the frequent branding of interventions as best practices or good practices, there is no consensus on the definition and desirable characteristics of such best practices. This is likely to be the first systematic review on the topic of best practices in public health. Having a single widely accepted framework for selecting best practices will ensure that the selection processes used by different agencies are fair and comparable, as well as enable public health workers to better appreciate and adopt best practices in different settings. Ultimately, standardisation will raise the credibility and usefulness of practice-based evidence towards that of research-based evidence.

Key words: Best practices, public health, selection framework, practice-based evidence

Introduction

Practice-based evidence in public health

According to the World Health Organization (WHO), public health is defined as all organised measures (whether public or private) to prevent disease, promote health, and prolong life among the population as a whole.1 Public health activities can be generally categorised into five areas, namely monitoring and evaluation, health promotion and protection, healthcare service delivery, health system as well as research (Appendix 1).2-4

Evidence may be defined as the available body of facts or information indicating whether a belief or proposition is true or valid.5 Similar to clinical medicine,6,7 evidence-based public health emphasises proof of efficacy,8,9 so that scarce resources are efficiently utilised on interventions which have been shown to bring about desired outcomes,10 and that benefits outweigh harm for both individuals and society.11 Furthermore, it encourages accountability by decision-makers through the use of objective judging metrics to evaluate evidence.12

Many sources of evidence may be considered in decision-making. However, the quality of evidence is often only judged by the internal validity of study designs,13 with randomised controlled trials held as gold standard for minimising bias in study results. This then limits the data considered to research-based evidence in many cases. For example, the WHO Guidelines Review Committee evaluates evidence using the Grades of Recommendation, Assessment, Development and Evaluation (GRADE) approach,14 where one of the assessment criteria for high confidence in the body of evidence is low methodological bias.15 While efficacy under controlled conditions supports causality between the intervention and outcomes, there is growing awareness of the importance of demonstrating effectiveness in actual programme settings, on top of efficacy, for public health interventions.16-18 Evaluating a programme in the real world not only considers interactions between contextual factors and the intervention to ensure feasibility, or external validity, but also broadens the scope of interventions that can be assessed beyond simple individual-based interventions favoured by randomised controlled trials.19,20 Contextual factors such as social determinants of health,10 the ability of public health workers to deliver the interventions to target groups,19 and accessible resources21 all alter effectiveness of interventions in reality. 
While pragmatic trials may be an alternative in that they are carried out under real-life conditions while preserving internal validity,22 population-wide upstream interventions, for instance policies to promote healthy diets in Finland, remain incompatible with trial settings23 and require more qualitative methods of assessment.24 Therefore, practice-based evidence, as opposed to research-based evidence, has been proposed as a more relevant source of evidence for public health decision-making due to the focus on populations as a unit and complexity of multi-disciplinary interventions.25 Furthermore, use of practice-based evidence may enhance the translation of interventions from research to practice, a problem often cited in literature,26 by considering drivers and barriers in implementation. In this review, practice-based evidence is obtained from field-based assessment of an intervention in a specific real-life setting.27,28 In contrast, research-based evidence refers to empirical data derived from testing hypotheses about the efficacy of the intervention. This may be done through observational or experimental studies, with biases and confounders minimised to elucidate the relationship between the intervention and observed results.29 This review proposes that both sources of evidence are complementary in informing evidence-based practice in public health and greater attention should be given to helping decision-makers tap available practice-based evidence.

Evaluation of existing public health interventions is one valuable source of practice-based evidence.11 While frameworks have been developed to direct the process of evaluation,30 relatively little work has been done to outline criteria for reviewing practices for evidence.31 In addition, programme evaluations are often geared towards ensuring accountability towards funders or improving the programme itself, rather than for sharing of lessons learnt.32

Best practice approach

In particular, selection of best practices is one way via which implemented interventions may be evaluated to generate practice-based evidence.33 The concept of systematically identifying best practices first started in the private sector,34 where a best practice refers to a model of excellence against which counterparts in the industry can benchmark their own operations to better performance.35,36

In the public sector, the notion of best practices also underlies policy transfers between countries37 and is increasingly utilised in various sectors including education,38 immigration39 and public health, the focus of this review. A practice may be broadly taken to mean a policy, activity, intervention, approach, programme and so on.40,41 Similar to industries, the objective of identifying best practices in public health is to avoid wasting resources on reinventing the wheel by learning from others under comparable circumstances.34,42 Such exchange of knowledge not only facilitates improvement of current practices, but also helps those starting new interventions to avoid common mistakes43 and accelerate programme development.44 The increased collaboration and learning between organisations are also in line with the global movement to promote knowledge management as a means to improve outcomes.45-47 Nonetheless, the best practice approach requires dedicated resources for programme evaluation and proper documentation.48 The lack of consensus on the definition and criteria for best practices42,49 also impedes the use of such practice-based evidence.33 In addition, the reliability and credibility of practice-based evidence depend on a flexible and transparent evaluation process,50 which has not been examined thus far. Hence, there is an urgent need for this novel review to address the gaps in current literature and facilitate optimal utilisation of valuable practice-based evidence in the form of best practices.

Aim of study

This systematic literature review aims to develop a scientifically sound and feasible framework for the selection of best practices in public health. This seeks to address the research question: what are suitable measures of success of a public health intervention to generate practice-based evidence? Although a review by Baker similarly sought to identify criteria for evaluating research-based and practice-based evidence, the consolidated practice-based criteria were solely derived from 12 expert interviews.31 Hence the comprehensiveness of identified criteria was highly dependent on the knowledge and experience of the 12 public health experts, unlike in a literature review which can cover more extensive and varied sources to incorporate a wider range of opinions. This paper is thus likely to be the first systematic literature review that attempts to synthesise criteria for producing practice-based evidence in public health to guide organisations and decision-makers in identifying and learning from best practices. Furthermore, the interviews were conducted in 2004 and this systematic review will provide an update to the criteria identified in Baker’s paper.

Methodology

Search strategy

The literature search (April to June 2014) was carried out via three main strategies. Literature found through preliminary searches was consulted to develop appropriate search terms.31,51

Firstly, PubMed (1966-June 2014) and the Global Health Library (2005-June 2014) databases were searched for evaluation frameworks relevant to public health. Secondly, websites and publications of major international health-related organisations were searched to identify criteria and methodologies that had previously been used to select best practices in public health. The WHO library database (WHOLIS, 1948-June 2014) and Intergovernmental Organisation search engine (IGO) were further utilised to ensure the comprehensiveness of the literature search. Lastly, the reference lists of identified articles were hand-searched to select appropriate sources. Details of the search strategy are summarised by database in Appendix 2.

Inclusion and exclusion criteria

In all cases, the search was limited to English records with full text available online (including library searches with available subscriptions at Imperial College London, University of Oxford and World Health Organization) because of practical considerations. Articles looking at best practices or evaluation outside the scope of public health were excluded. In addition, articles listing case studies as best practices without accompanying definition, criteria or selection methodology were also eliminated. However, there was no restriction on the type of literature and grey literature, such as websites and meeting reports, was included. The inclusion and exclusion criteria are summarised in Appendix 3.

Data extraction and synthesis

Once records were selected, the data extracted included basic information such as authors and year of publication, definitions of best practice if given, as well as the methodology (e.g. expert panels, scoring system) and criteria used to evaluate public health interventions or select best practices.

Unlike standard systematic reviews for public health interventions,52 commonly used data quality assessment tools could not be applied due to the unconventional types of articles included and the focus of the study. Nonetheless, in line with the emphasis on using theories to enhance interventional effectiveness,52,53 data were extracted on whether the theory supporting the evaluation frameworks or best practice selection methods was reported. This was then used to identify high quality papers. It also ensured that the criteria included in the final framework are aligned with public health principles and theories, and not skewed towards any organisation’s interests projected onto their criteria. In addition, evaluation or selection frameworks that were published in peer-reviewed articles were also considered to be of higher quality than other types of documentation as the former are likely to be more robust studies having been through the peer-review process.

Finally, qualitative data synthesis was performed. Various public health evaluation frameworks were first compared to identify common categories and criteria under each category. Frameworks which have been applied in diverse settings were considered more likely to be acceptable to public health workers. The categories derived from these evaluation frameworks then provided a structure for the proposed framework to ensure that all important aspects of a practice are assessed when generating practice-based evidence. Subsequently, criteria previously applied in the selection of best practices were compiled and classified according to the categories outlined. Once again, a criterion which was consistently used by different organisations is possibly widely accepted as a significant indicator of a successful public health intervention and feasible for application, and hence more likely to be included in the final framework.

Results

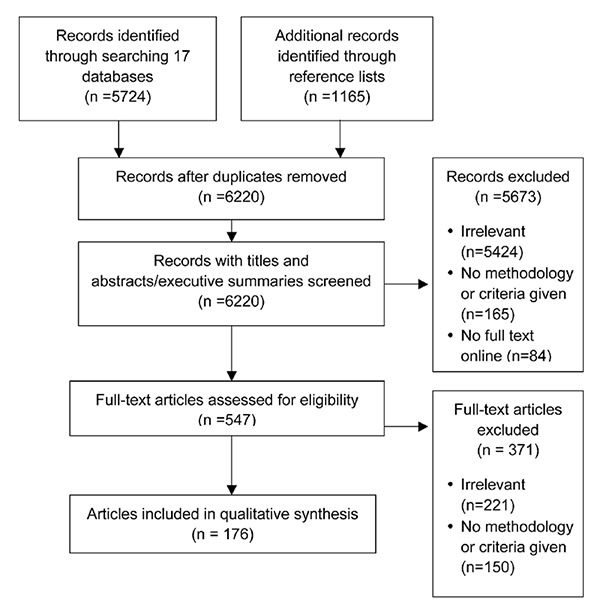

A total of 6889 records were obtained, of which 176 were eventually included in this literature review (Figure 1). The complete list of included records is provided in Appendix 4, sorted by database and order of extraction.

Figure 1. Results of literature search.

Definition of best practice

To develop a framework for selecting best practices, a working definition of best practice is first necessary. One book, 10 peer-reviewed articles and 21 organisational sources included in this review provided varying interpretations. Alternative terms include good practice, effective solution, promising practice and innovative practice.31,40,47,54-58 Good and effective practice are often used to avoid debate about whether a single perfect intervention exists.47 Nonetheless, the process of selecting best practices is comparable to that for good practices and the former is preferred in this review as it provides greater incentive for countries and organisations to improve their practices.59 However, best practice should not be understood in its superlative form,43 but instead seen to encompass interventions that meet a set of pre-defined criteria to varying degrees and reflect the society’s or organisation’s priorities over time.33 In addition, best and good practices were occasionally differentiated from promising or innovative practices by the level of data supporting the success of the intervention. Best practices are well-established programmes proven to be effective through rigorous evaluations whereas promising or innovative practices are still in their infancy but show some signs of potential effectiveness in the long run.40,55 While having robust evidence to back the success of the practice is ideal, it is perhaps more important to document the level of evidence available to guide decision-makers who are trying to learn from these practices instead of creating separate labels with distinct criteria. Differentiating practices by the level of evidence may also limit the settings and types of interventions that may be included. Therefore, best practices also encompass promising practices with varying levels of supporting evidence in this review.

Kahan, Goodstadt and Rajkumar summed up six frequently used versions of the best practice approach in health promotion, which is useful for clarifying the definition of best practice in this review.50,60 In accordance with the aim of generating practice-based evidence, this review is limited to best practices that are selected against previously established criteria to illustrate what works in reality. This is preferred over using best practices as a compilation of enabling elements, standards or steps that are detached from any cultural or social context and hence difficult to apply in the real world.59

The importance for best practices to demonstrate effectiveness or positive outcomes with regard to programme objectives in a specific real-life context is supported by 25 reviewed sources.17,41-43,47,48,54-56,58,61-75 Additionally, in order to fulfil their purpose as a learning tool across countries and organisations, best practices should be defined by their potential for adaptation to other settings through consideration of implementation and contextual factors, as agreed by 17 sources.17,41-43,47,49,55-57,64,68,69,71-75

In short, a proposed working definition for best practices is: practices that have shown evidence of effectiveness in improving population health when implemented in a specific real-life setting and are likely to be replicable in other settings. Consequently, the emphasis on real-life implementation also requires evaluation with a focus on contextual and implementation factors as compared to experimental settings.68

Existing frameworks for public health evaluation

With reference to the five areas of public health activities mentioned in the introduction (and elaborated in Appendix 1), only one peer-reviewed article was focused on evaluating public health surveillance while 39 peer-reviewed articles and nine organisational sources offered diverse frameworks for evaluation of health protection and promotion practices. In addition, three peer-reviewed and two organisational sources focused on healthcare services delivery, and five peer-reviewed and seven organisational sources explored health system evaluation. There were no records found for the evaluation of public health research. This is expected due to the search terms used in the literature search to elucidate criteria for the evaluation of practice-based evidence specifically, as opposed to research-based evidence which is beyond the focus of this review.

Regardless of the area of public health activities, the review found a general consensus on the importance of assessing the implementation process as well as short-term and long-term outcomes, as recommended by 36 sources.30,48,63,72,76-107 Monitoring the implementation process strengthens the causal relationship between the intervention and observed outcomes by providing information on the facilitating intermediary links.89 In cases where outcome indicators are unavailable, process evaluation may also act as a provisional indicator of effectiveness in view of its expected impact on outcomes.91 For instance, monitoring the coverage of a target group for a screening programme may be used to gauge the success of the programme on top of outcome data on decreased morbidity and mortality, which may only improve after a long period and are difficult to attribute to a single intervention. On the other hand, monitoring outcomes of public health practices ensures that desired objectives are met and guides resource allocation in favour of effective practices.76 Alternatively, the RE-AIM framework first proposed by Glasgow attempts to condense process and outcome evaluation into five dimensions, namely reach, efficacy,108 adoption, implementation and maintenance. Outcome evaluation involves the measurement of the size of change in a specific desirable outcome (efficacy), while the implementation process is assessed through the percentage of target population receiving the intervention (reach), proportion and representativeness of settings taking up the intervention (adoption), degree to which the intervention is carried out as planned (implementation), as well as sustainability of the intervention and its effects (maintenance). Each of these domains then contributes to the public health impact of the intervention. 
This framework is an extension of Abram’s proposal that the impact of a programme can be equated to the product of its reach and efficacy.109 Since its introduction in 1999, the RE-AIM framework has been widely used to assess various health promotion programmes in peer-reviewed studies.110-120 Manipulating the five dimensions further produces additional criteria such as efficiency, given by (reach × efficacy)/cost of the intervention.110
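The two derived quantities mentioned above can be illustrated with a minimal sketch. It assumes that reach is expressed as a proportion of the target population between 0 and 1, and that efficacy and cost are in whatever units the evaluator has chosen; the function names and the example figures are hypothetical and are not taken from the reviewed sources.

```python
def impact(reach: float, efficacy: float) -> float:
    """Impact as the product of reach and efficacy.

    Assumption: reach is a proportion of the target population (0 to 1).
    """
    if not 0.0 <= reach <= 1.0:
        raise ValueError("reach must be a proportion between 0 and 1")
    return reach * efficacy


def efficiency(reach: float, efficacy: float, cost: float) -> float:
    """Efficiency as (reach x efficacy) / cost of the intervention."""
    if cost <= 0:
        raise ValueError("cost must be positive")
    return impact(reach, efficacy) / cost


# Hypothetical programme: reaches half of the target group, with an
# efficacy of 0.2 (in the evaluator's chosen outcome unit), at a cost of 1000.
example_impact = impact(reach=0.5, efficacy=0.2)
example_efficiency = efficiency(reach=0.5, efficacy=0.2, cost=1000.0)
```

Because both quantities are simple products and ratios, a programme with high efficacy but low reach can score the same impact as one with modest efficacy and wide reach, which is one reason such figures are best read alongside the qualitative information on each dimension.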

Another category typically included in public health evaluations is the context. Contextual factors include programme inputs as well as characteristics of the health issue and target community. Assessment of contextual factors ensures that the intervention is relevant to the target group’s needs and circumstances.48,121,122 Furthermore, it also pinpoints factors that may influence the ability of the programme to achieve its desired outcome,85,87,89,92-95,123 such as political environment and available resources. Since a best practice needs to be transferable between settings, consideration of the context during evaluation is useful in facilitating replication of the practice.123 Hence, inclusion of context, process and outcome evaluation criteria is likely to be fundamental for a framework generating practice-based evidence in public health, which is in line with the theory of change and realistic approach to evaluations where analysis of background factors and process descriptions are necessary to explain outcomes in the complex system of public health.123-125

With regard to the programme content, programmes may sometimes be appraised by their objectives, theoretical underpinnings and scope of interventions. Interventions based on theories, such as the health belief model for behavioural interventions, are likely to facilitate positive outcomes.30,63,78,84,86,121,122 A comprehensive intervention tackling both individual and wider health determinants is also aligned with health promotion goals to build an environment that supports healthy lifestyles.83,121

In addition, the quality of evidence illustrating the effectiveness of an intervention is occasionally included as part of the evaluation framework.58,88,126,127 Alternatively, programmes may simply be assessed by the presence of formal formative, process and/or outcome evaluation studies.48,87

It is interesting to note that health system assessments also focus on sustainable and equitable financing of the health system,128,129 an element rarely found in health promotion or healthcare service delivery evaluation. In some cases, fair financing may be subsumed under equity.101,103,104,128-131

Lastly, some evaluations found do not allow for assessment of the programme against standards or comparisons between programmes132-134 and are hence not useful for the selection of best practices. Some reviewed sources also provided individual points of evaluation, highlighting attributes of an ideal public health activity.30,48,58,80,86,87,93,94,126,127,135-139 Appendix 5 summarises the various points of evaluation cited according to the five main categories described earlier, namely, context, content, process, outcomes and evaluation.

Previously used methodology for selecting best practices

Seven peer-reviewed articles and 32 organisational sources detailed the process by which best practices were selected or public health practices were evaluated. Understanding the methodology will be valuable for the development of a best practice framework as it affects how the framework will be applied and hence its design to enhance usability.

Subjectivity at various stages of selection or evaluation is a universal feature across all reviewed sources. Firstly, best practices were identified from submitted cases,41,43,47,54-56,59,66,68,75,88,140-152 or from literature review and experts’ opinion.44,60,61,64,153-156 For the former, programme managers were commonly required to submit a completed template, which was usually narrative and qualitative to accommodate the uniqueness of the practice.59 The range of interventions identified and quality of information used for assessment are hence reliant on the initiative of the programme managers. In the case of evaluations by experts, the programme managers were instead contacted or reviewers visited intervention sites,17,137 and the selection of interventions to be evaluated was limited by the biases and knowledge of the expert reviewers. In one exception, practices were shortlisted from projects related to United Nations agencies.157 As each approach has its pros and cons, it is important for reviewers to report the limitations of their method of choice and any potential bias on the type of practices selected. Subsequently, reviewers appraise the information collected against pre-set criteria to determine if the intervention can be considered a best practice. Hence, the composition of the panel of reviewers and the selection process are also crucial in ensuring valid and reliable selection of best practices. While reviewers are typically branded as experts in the relevant fields, there is often little information on the background of the reviewers. 
Besides professional academics or organisational staff, it has also been suggested that best practices should be assessed by the beneficiaries of the intervention.33,59 This adheres to the recommendations for participatory evaluation in public health and allows direct assessment of the acceptability of the intervention.30 However, it may be difficult to engage the most economically disadvantaged beneficiaries of the intervention in reality and to ensure an equitable representation.33 Regardless, careful consideration of the composition of the panel of reviewers is important to avoid biases due to vested interests and details of the composition should be made transparent.

Next, the selection of best practices or evaluation of public health interventions is typically done via consensus after independent assessment by each reviewer.48,60,88 Alternatively, reviewers may be asked to score a programme on each criterion, which will then contribute to the overall score of the programme to facilitate comparisons with other programmes.55,142,145 Either way, the subjective views of each reviewer greatly influence the selection and evaluation outcomes and a reliable selection framework should thus consider such subjectivity through appropriate criteria and indicators.

A few studies attempted to provide more objective evaluation methods. Some users of the RE-AIM model have translated each criterion into a mathematical formula; for instance, the impact of an intervention can be calculated as (reach × efficacy).117 Similarly, Reed proposed a scoring system given by (impact of the intervention × number of people implementing the intervention other than programme staff or percentage of target population involved).158 While these methods may facilitate comparison between different interventions based on their scores, they may also oversimplify or ignore important qualitative information. Furthermore, some degree of subjective judgement is unavoidable in any evaluation, for instance in weighing the importance of the various criteria used.117 Therefore, it is more important to acknowledge the subjectivity in the evaluation process and incorporate it into the proposed framework for evaluating best practices rather than trying (and failing) to eliminate it fully.
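The dependence of score-based comparisons on the weighting of criteria can be shown with a small sketch. It assumes a weighted-mean scoring rule, which is only one possible way of combining criterion scores and is not attributed to any reviewed source; the programmes, scores and weights are hypothetical.

```python
from typing import Mapping


def overall_score(scores: Mapping[str, float],
                  weights: Mapping[str, float]) -> float:
    """Weighted mean of per-criterion scores (an assumed scoring rule)."""
    total_weight = sum(weights.values())
    if total_weight <= 0:
        raise ValueError("weights must sum to a positive value")
    missing = set(weights) - set(scores)
    if missing:
        raise KeyError(f"no score given for: {sorted(missing)}")
    return sum(weights[c] * scores[c] for c in weights) / total_weight


# Hypothetical reviewer scores on a 1-5 scale for two programmes.
programme_a = {"effectiveness": 4, "sustainability": 2}
programme_b = {"effectiveness": 3, "sustainability": 4}

# Two equally defensible weightings of the same criteria.
equal_weights = {"effectiveness": 1, "sustainability": 1}
effect_heavy = {"effectiveness": 3, "sustainability": 1}

a_equal = overall_score(programme_a, equal_weights)   # (4 + 2) / 2
b_equal = overall_score(programme_b, equal_weights)   # (3 + 4) / 2
a_heavy = overall_score(programme_a, effect_heavy)    # (12 + 2) / 4
b_heavy = overall_score(programme_b, effect_heavy)    # (9 + 4) / 4
```

Under equal weights the second programme ranks higher, whereas weighting effectiveness three times as heavily reverses the ranking. The numerical score is therefore only as objective as the choice of weights behind it, which supports reporting the weights explicitly.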

Two sources found by this review also recommended validating best practices through rigorous empirical studies of the causality between intervention and outcomes.40,159 Once again, best practices should not only be sourced from settings conducive to research but also from other real-life settings, where process evaluation may then be used to support any observed correlation between the programme and outcomes in place of empirical causality studies.

Nine reviewed sources further provided considerations for choosing indicators in public health evaluation.76,97,99-101,106,139,160,161 Criteria set the benchmark for evaluating an intervention while indicators are measurements used to assess achievement of these standards.76 Ideal indicators are valid because they provide an accurate reflection of what is being measured, relevant because they measure an important phenomenon, practical and cost-effective given accessible information, sensitive to changes arising from programme implementation, reliable in producing consistent results independent of reviewer or time, produce comparable measurements when used in different contexts, easy to interpret and useful for the purpose of selecting best practices.

Criteria used to select best practices

One book, eight peer-reviewed articles and 39 organisational sources presented various criteria for selecting best practices. To ensure that the criteria provide a comprehensive assessment of public health interventions, the criteria were grouped according to the five categories typically used in public health evaluations mentioned earlier, namely context, content, process, outcomes and evaluation. Appendix 6a-e lists the criteria that are cited by each source, with sources arranged by types and databases. Appendix 6a cites the peer-reviewed articles and the book while Appendix 6b cites organisational sources from the database of WHOLIS and WHO Regional Offices of Africa and the Americas. Similarly, Appendix 6c includes sources from WHO Regional Office for Europe, UNDP and the World Bank; Appendix 6d cites sources from US CDC, IGO search engine and hand-searches of reference lists; and Appendix 6e focuses on sources derived from hand-searching reference lists. Appendix 7 then summarises the number of sources citing each criterion.

Almost all sources (44 of 48 sources) agreed that a best practice must be effective and show measurable positive results in achieving pre-defined objectives. This also supports the working definition of a best practice stated earlier, where a best practice must demonstrate what works in reality. Assessing effectiveness is likely to be crucial regardless of the area of public health, as earlier demonstrated. Additional sources providing detailed analysis of each individual criterion were also found. On top of the 48 sources, three additional peer-reviewed sources offered different ways of evaluating effectiveness. Lengeler assessed individual and community effectiveness,162 while McDonnell proposed that effectiveness can be measured as (programme efficacy × probability that the programme can deliver its intended outcomes), where the latter is dependent on available human resources, infrastructure and the community’s access to the programme.163 Lastly, Macdonald argued for inclusion of qualitative process indicators in addition to the final outcome evaluation.164 While Macdonald’s claim is supported by evaluation frameworks described earlier,30,48,63,72,76-107 Lengeler’s and McDonnell’s work are less relevant to this review due to the emphasis on scientific studies to establish individual effectiveness and programme efficacy respectively, which may not always be feasible. In short, all positive and negative outcomes of an intervention across time should be taken into account when assessing effectiveness. The objectives of the intervention may also suggest potential targets, for instance achievement of more than 90% of the objectives.145
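As a rough illustration of the two quantitative readings of effectiveness mentioned above, the sketch below computes McDonnell's product of efficacy and delivery probability and checks the share of objectives achieved against a target. The function names, the treatment of both inputs as proportions and the example figures are assumptions made for illustration only; the 90% default simply mirrors the example target cited above.

```python
def programme_effectiveness(efficacy: float,
                            delivery_probability: float) -> float:
    """Effectiveness as efficacy x probability of delivering the intended outcomes.

    Assumption: both inputs are expressed as proportions between 0 and 1.
    """
    for name, value in (("efficacy", efficacy),
                        ("delivery_probability", delivery_probability)):
        if not 0.0 <= value <= 1.0:
            raise ValueError(f"{name} must be between 0 and 1")
    return efficacy * delivery_probability


def meets_objective_target(achieved: int, total: int,
                           threshold: float = 0.9) -> bool:
    """True if strictly more than `threshold` of the objectives were achieved."""
    if total <= 0:
        raise ValueError("total number of objectives must be positive")
    return achieved / total > threshold


# Hypothetical programme: efficacy of 0.6 under ideal conditions, but only a
# 0.5 probability of delivery given local staffing, infrastructure and access.
example_effectiveness = programme_effectiveness(0.6, 0.5)

# Hypothetical objective counts: 19 of 20 achieved versus 9 of 10 achieved.
passes_target = meets_objective_target(19, 20)
fails_target = meets_objective_target(9, 10)
```

The product form makes explicit that an efficacious intervention may still perform poorly in practice when delivery is constrained, while the threshold check shows how a stated target can be applied once objectives are countable. Neither calculation captures the qualitative process indicators that Macdonald argued for.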

Another commonly cited criterion is programme sustainability (32 of 48 sources). On top of the 48 sources, eleven additional peer-reviewed articles provided definitions of sustainability in public health practices. Sustainability may be seen as a long-term continuation of i) activities through local ownership or incorporation into standard practices, otherwise known as institutionalisation;165 ii) benefits as outlined in the objectives of the intervention, including health improvements or heightened attention on the issue; iii) community or organisational capacity to deliver the intervention; or iv) a combination of all three dimensions.5,166-170 In particular, long-term availability of necessary resources, financial or otherwise, to run the intervention is an important point to consider as it greatly affects the maintenance of the activities and their benefits.170 This is especially crucial for externally supported programmes as termination of the programme with the end of funding may limit the potential benefits that can be reaped from initial investments and erode the trust that the community placed in public health workers.5 Contextual and programme elements which may enhance sustainability include alignment of the intervention with national goals, political commitment, community participation, stakeholder partnerships and programme evaluation.171-174 The timing of appraisal is also crucial, with some sources suggesting that a sustainable intervention should continue for a minimum of five years175 or two years54,141,149 after its start, or at least one year after the external funding stops.170 Although Stephenson attempted to provide indices to measure sustainability,5 there is no agreed threshold for categorising a practice as sustainable or otherwise.170 Hence, when selecting best practices, it is also important to state the duration of implementation prior to evaluation to inform decision-makers, as well as recognise that sustainability is a continuum where programme elements, benefits and community capacity are maintained to different extents.

Efficiency (24 of 48 sources), or cost-effectiveness, is important in ensuring that scarce resources are used in a prudent and accountable manner, and may be commonly defined as the ability to produce optimal results with minimum resources.43,54,154 On top of the 48 sources, four additional peer-reviewed articles gave further examples of assessment of efficiency in practice. Where the cost of the intervention is known, cost-effectiveness analysis is commonly done176,177 and a threshold can then be applied to determine if the intervention is cost-effective,178 for example by relating the cost per Disability-Adjusted Life-Year averted to the per capita Gross Domestic Product of the country.179 However, in the absence of cost-effectiveness calculations, judging the efficiency of practices may rely on evidence of wastage avoidance, cost minimisation56,62 or optimal use of locally accessible resources.180
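The threshold comparison described above may be sketched as follows. The function names, the `threshold_multiple` parameter and all figures are hypothetical: the text states only that the cost per Disability-Adjusted Life-Year (DALY) averted is related to per capita Gross Domestic Product (GDP), so the multiple applied here is an assumption that reviewers would need to set themselves.

```python
def cost_per_daly_averted(total_cost: float, dalys_averted: float) -> float:
    """Average cost of averting one DALY."""
    if dalys_averted <= 0:
        raise ValueError("dalys_averted must be positive")
    return total_cost / dalys_averted


def is_cost_effective(total_cost: float, dalys_averted: float,
                      gdp_per_capita: float,
                      threshold_multiple: float = 1.0) -> bool:
    """Compare cost per DALY averted with a multiple of per capita GDP.

    threshold_multiple is an illustrative assumption, not a value
    prescribed by this review.
    """
    threshold = threshold_multiple * gdp_per_capita
    return cost_per_daly_averted(total_cost, dalys_averted) <= threshold


# Hypothetical programme: 150,000 currency units spent, 500 DALYs averted,
# in a country with a per capita GDP of 1,200 currency units.
ratio = cost_per_daly_averted(150_000, 500)
print(f"Cost per DALY averted: {ratio:.0f}")
print("Cost-effective:", is_cost_effective(150_000, 500, gdp_per_capita=1_200))
```

Keeping the threshold as an explicit parameter makes the judgement transparent, which matters when practices from countries with very different income levels are compared.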

Potential for replication, or replicability, should also be a main criterion for defining best practices in line with the working definition (24 of 48 sources). Replicability may be defined as the ability of the intervention to continue achieving desired outcomes when adapted to various cultures and settings.54-56,70 Thus, best practices should have key success factors that are independent of the context and available resources.

Next, relevance of the intervention in addressing an important public health issue in the community (21 of 48 sources) depends on the priorities and perceptions of the target community.43,154,155,175 This requires analysis of the disease burden and community profile as well as a needs assessment involving the target community before designing the intervention. Contextual factors such as integration of the intervention into existing structures and its cultural appropriateness may also be considered under relevance.70,155 Thus, awareness of the community and settings enhances relevance of the intervention. Furthermore, these descriptions may contribute to the replicability of the intervention by providing information on any contextual factors which may have led to the outcomes observed.

Stakeholder collaboration (17 of 48 sources) and community participation (12 of 48 sources) are two frequently used criteria for selecting best practices and in process evaluation in public health. Both elements are recommended to enhance local ownership,47,54,62 increase the reach of the intervention,148 capitalise on various competencies and incorporate perspectives of the target beneficiaries. Achieving these elements is thus believed to augment effectiveness and sustainability.61,78,181 Furthermore, they are aligned with the principles of public health of being participatory and multi-sectoral in recognition of the fact that improvement in population health requires the collaborative efforts of more than any single actor.4,42,121 On top of the 48 sources, five peer-reviewed articles on community engagement182-186 and one on stakeholder collaboration187 further contribute to this discussion. Markers of ideal community participation include appropriate members and participation process, empowerment of the individuals involved, improved community ties, synergistic and viable coalition where new ideas emerge as well as effective leadership and management. Thus, capacity building of the community (eight of 48 sources) may also be subsumed under community participation as a means through which the latter promotes effectiveness and sustainability of the intervention. On top of the 48 sources, six peer-reviewed articles on capacity building outlined the areas in which empowerment may be observed.188-193 Development of the community’s knowledge and skills on health issues and ways to tackle them, community networks, leadership, resource mobilisation, investments, and organisational structures to support delivery of public health activities will facilitate the maintenance of the intervention and its benefits over time and even aid the community in managing other public health concerns in the future. On the other hand, stakeholder collaboration can be gauged by the synergy achieved, where comprehensiveness and innovation are enhanced by the pooling together of complementary resources and competencies.187 Therefore, community participation and stakeholder collaboration are criteria that both support the attainment of positive outcomes and are themselves important public health goals to be achieved.80 They are also assessed through proxy outcomes such as community empowerment and synergistic cooperation.

Ethical soundness of an intervention (14 of 48 sources) includes respect for an individual’s rights and dignity as well as professionalism by public health workers.41,43,56,154 Reflecting fundamental public health principles, ethical considerations should underpin all activities involving human participants and be made explicit as a criterion for best practices. Furthermore, ethical interventions that do not infringe on an individual’s rights and a community’s norms are more likely to be accepted and utilised by the target group,54 thus enhancing impact through greater reach and adoption.126 Ethical frameworks proposed by eight peer-reviewed articles on top of the 48 sources are also considered. Suggested elements include prevention of harm while ensuring benefits at both individual and population levels, consideration of equity in distribution of benefits and burdens (two of 48 sources), respect for an individual’s autonomy and privacy, informed consent, consciousness of local norms, accountability as well as awareness of vulnerable groups51,194-200 (eight of 48 sources). Specifically, equity may be assessed by the distribution of access, financing and effects201-203 across place of residence, race, occupation, gender, religion, education, socioeconomic status and social capital.204 Davies further suggested eight elements that promote equity, including activities addressing social determinants of health.205 In short, ethical soundness is a basic requirement for any public health intervention and is taken to include equity and social inclusion of vulnerable groups in this review.

Having a theoretical basis underlying public health programmes (11 of 48 sources) and the need for strong evidence of effectiveness (12 of 48 sources) have been mentioned earlier under the chapter on existing frameworks for public health evaluation. While having a theoretical basis will be ideal to explain the logic behind public health interventions, an intervention should not be discounted as ineffective solely because of a lack of underlying theory as inclusion of contextual and process criteria may already provide information about the mechanisms leading to the observed outcomes.82 Similarly, the need for high quality evidence may restrict the selection of best practices to only those amenable to empirical studies and with ample resources to conduct these studies. This may again result in the drawbacks of using only research-based evidence. Therefore, in order to maximise learning from successful initiatives without a theoretical basis or rigorous evaluation, they should still be included in the selection for best practices, though with the level of evidence explicitly stated to inform decision-making.

The next criterion to be discussed is the innovative nature of interventions (11 of 48 sources), defined as those implemented in the context for the first time.54,141,148,150 However, an effective intervention should not be ruled out as a best practice simply because it has been applied previously and thus innovation will not be a significant criterion for selecting best practices. While outcome-related criteria such as effectiveness and sustainability are cited at similar frequency in peer-reviewed and organisational sources, it should be noted that the two types of sources differ in their emphasis on other categories of criteria.

In addition, while most peer-reviewed articles included the need for robust supporting empirical evidence and theoretical backing, criteria such as innovation and ethical soundness are rarely mentioned in these articles but frequently used by organisational sources. This supports the need to include views of both public health researchers and field workers to ensure a comprehensive review through consideration of both peer-reviewed and organisational sources.

Lastly, whether a programme is implemented as planned or well-executed (11 of 48 sources) depends on the required resources and reflects the feasibility of the programme in the context of the target group.206 Other less commonly used criteria also include extensive reach or scale of intervention (nine of 48 sources), which may penalise small-scale targeted initiatives; mandatory formal evaluation studies (seven of 48 sources), which may be restrictive given availability of resources in different settings; support from leaders (seven of 48 sources), which can be conceptualised as expressed commitment in public statements, building of relevant infrastructure or budget allocation;207 having clearly defined objectives (six of 48 sources); comprehensiveness (six of 48 sources), such as targeting both determinants of health and environmental factors; integration into local context (five of 48 sources); acceptability (two of 48 sources) and visibility (one of 48 sources). As these criteria were less commonly cited, were used without accompanying justification or act as intermediaries towards the effectiveness and sustainability of an intervention, they are not considered essential features of a best practice in this review. Nonetheless, ensuring that these less commonly cited factors are met may further enhance the implementation and effectiveness of the interventions, for instance gaining the buy-in and support from community leaders to ensure the intervention is sustained over time.
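For readers who wish to compare the criteria at a glance, the citation counts reported in this section can be converted into shares of the 48 sources. The sketch below uses only a subset of the counts stated in the text; the variable and function names are illustrative assumptions.

```python
TOTAL_SOURCES = 48

# Number of sources citing each criterion, as reported in the text above.
CITATION_COUNTS = {
    "effectiveness": 44,
    "sustainability": 32,
    "efficiency": 24,
    "replicability": 24,
    "relevance": 21,
    "stakeholder collaboration": 17,
    "ethical soundness": 14,
    "community participation": 12,
    "strong evidence of effectiveness": 12,
    "theoretical basis": 11,
    "innovation": 11,
    "implemented as planned": 11,
}


def citation_shares(counts, total):
    """Return (criterion, share) pairs sorted from most to least cited."""
    shares = [(name, count / total) for name, count in counts.items()]
    return sorted(shares, key=lambda item: item[1], reverse=True)


for criterion, share in citation_shares(CITATION_COUNTS, TOTAL_SOURCES):
    print(f"{criterion}: {share:.0%}")
```

Frequency alone does not determine inclusion in the framework: community participation and strong evidence of effectiveness are cited equally often, yet only the former is retained, for the reasons discussed above.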

Proposed evaluation framework for selection of best practices

Based on the literature review of past evaluations of public health interventions and selections of best practices, this paper proposes a framework for selection of best practices in public health as illustrated in Table 1. Contextual and process elements should be considered together with the outcomes of a practice, as they can further direct adaptation to other settings. Eight criteria across context, process and outcomes were chosen based on their widespread application in evaluation frameworks for various areas of public health as well as best practice selection processes: relevance, community participation, stakeholder collaboration, ethical soundness, replicability, effectiveness, efficiency and sustainability. While the three criteria of having a theoretical basis underlying the intervention, showing strong evidence for effectiveness and being innovative were cited by a number of sources, they were excluded from the final framework as they are not critical for identifying a best practice worthy of emulation as discussed earlier.

Table 1.

Proposed framework for selection of best practices in public health, with examples for each criterion.

| Category | Criterion | Example |
|---|---|---|
| Context | 1. Relevant | Evaluate disease burden in community. Involve target groups and stakeholders in needs assessment. Describe existing programmes, social and cultural perception of disease. |
| Process | 2. Engage the community (community participation) | Ensure appropriate representation of target groups, including vulnerable groups. Improve knowledge about the disease in local community. Give rise to new approaches due to inclusion of the community. |
| | 3. Involve the right stakeholders (stakeholder collaboration) | Give rise to new approaches due to pooling together of resources and competencies of non-governmental organisations, donors and international organisations. |
| | 4. Ethically sound | Promote fair distribution of benefits across ethnicities, socioeconomic status and gender. Involve voluntary participants. Target the poor and females. Benefit the local community and do not deplete local resources. |
| | 5. Replicable* | |
| Outcomes | 6. Effective* | Reduce morbidity and mortality, achieve universal access to healthcare, and enhance community awareness. Conduct case control studies, patient surveys, and routine monitoring. |
| | 7. Efficient | Describe expertise of public health workers required, and costs of intervention. Conduct cost-benefit analysis, and cost-effectiveness analysis. |
| | 8. Sustainable | Train local public health workers to administer intervention. Ensure community awareness continues after programme ends. Self-financing of intervention by community. |

*Replicability and effectiveness are fundamental criteria in line with the working definition of best practices

Keeping in mind the subjective nature of the selection process, the background of reviewers and process of selection (whether the practice is identified from submitted case studies or by reviewers themselves) should be made transparent to inform decision-makers of the potential biases. Sub-points and examples are also developed for each of the eight criteria in the proposed framework to guide reviewers. Indicators specific to the health issue of interest may also be chosen to provide additional measurements to facilitate selection. Structured as a checklist, the framework not only guides the selection process but also facilitates reporting and dissemination by identifying strengths and weaknesses of the practice of interest. It is important to note that besides effectiveness and replicability, best practices may not necessarily exhibit all the listed criteria.71 However, they should not go against any of the listed points. For instance, while best practices may not have demonstrated a causal link with a decrease in mortality, they should not show an increase in mortality instead. To ensure replicability of best practices in other settings, descriptions of the community, context, resources employed as well as supporting evidence should ideally be included to guide decision-makers in adapting these best practices to their own context. Ultimately, in order for the best practice approach to achieve its purpose as a learning tool, the context-specific nature of programmes must be stressed and elements should be adapted before replication in other settings.33
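The checklist logic described in the preceding paragraph could be sketched as below. This is one possible reading rather than a prescribed scoring method: the three-level rating scale, the names `Rating` and `assess_practice`, and the shape of the returned report are all assumptions introduced for illustration. Only the eight criteria and the rule that effectiveness and replicability are fundamental come from the framework itself.

```python
from enum import Enum
from typing import Mapping


class Rating(Enum):
    MET = "met"                    # evidence that the criterion is fulfilled
    NOT_SHOWN = "not shown"        # no evidence either way
    CONTRADICTED = "contradicted"  # evidence that goes against the criterion


CRITERIA = (
    "relevance", "community participation", "stakeholder collaboration",
    "ethical soundness", "replicability", "effectiveness",
    "efficiency", "sustainability",
)
FUNDAMENTAL = ("replicability", "effectiveness")


def assess_practice(ratings: Mapping[str, Rating]) -> dict:
    """Apply the checklist: fundamental criteria must be met, the remaining
    criteria need not all be shown, and none may be contradicted."""
    missing = [c for c in CRITERIA if c not in ratings]
    if missing:
        raise ValueError(f"missing ratings for: {', '.join(missing)}")
    contradicted = [c for c in CRITERIA if ratings[c] is Rating.CONTRADICTED]
    fundamentals_met = all(ratings[c] is Rating.MET for c in FUNDAMENTAL)
    return {
        "eligible": fundamentals_met and not contradicted,
        "strengths": [c for c in CRITERIA if ratings[c] is Rating.MET],
        "weaknesses": [c for c in CRITERIA if ratings[c] is not Rating.MET],
        "contradicted": contradicted,
    }


# Hypothetical practice with no cost data available.
example = {criterion: Rating.MET for criterion in CRITERIA}
example["efficiency"] = Rating.NOT_SHOWN
report = assess_practice(example)
print(report["eligible"], report["weaknesses"])
```

Returning the strengths and weaknesses alongside the eligibility decision mirrors the reporting role of the checklist, so that decision-makers can see which elements were demonstrated and which were not.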

Lastly, the proposed framework is deliberately kept general to be applicable to any public health action at all levels of implementation, be it individual-, community- or population-based interventions. Depending on the public health issue and types of interventions, the framework may be further fine-tuned to emphasise specific criteria.86 For instance, when applying the framework to health systems, equity and sustainability of the health financing mechanisms may be given greater weight due to their importance.129 The proposed framework should also be updated over time to reflect new priorities and focus of the society and organisation of interest.33

Conclusions

While metrics for assessing empirical studies for the quality of evidence have been extensively examined, the lack of consensus on what constitutes practice-based evidence impedes utilisation of valuable real-world experiences in informing public health interventions. Best practices in public health may be defined as interventions that have been shown to produce desirable outcomes in improving health in real-life settings and are suitable for adaptation by other communities. By consolidating previous best practice selection criteria in secondary literature and comparing them with established public health evaluation frameworks, a framework covering eight criteria (relevance, community participation, stakeholder collaboration, ethical soundness, replicability, effectiveness, efficiency and sustainability) across programme context, process and outcome is proposed in this review to guide the selection of best practices.

Strengths and limitations of study

This review addresses a notable gap in current literature on standards for practice-based evidence in public health by proposing the first framework (Table 1) for the selection of best practices based on an extensive systematic review. Despite the increasing awareness about the benefits of practice-based evidence, there is a lack of consensus on the criteria for assessing such evidence, hence hindering its usability.31 Therefore, the framework suggested in this review provides a much-needed tool for public health workers to tap experiences in the field and evaluate interventions in a logical manner to select noteworthy practices which can then be adapted to other settings as evidence-based practices. Promotion of practice-based evidence widens the scope of evidence beyond research with regard to complex and multi-disciplinary public health work and this novel review is hence a crucial first step in setting standards for generating such evidence.

All literature included in the review was found using the methodology recommended by the Cochrane Collaboration for systematic reviews,208 from formulation of a research question to systematic search and selection of records. As the first systematic review to focus on elucidating criteria for practice-based evidence, this review is therefore a novel attempt to define and conceptualise elements of a best practice in public health in a scientifically sound manner. Furthermore, the use of broad search terms and the search through 17 databases with different focuses and settings aimed to minimise omission of crucial material and increase comprehensiveness and representativeness of included records.

However, the proposed framework was produced following a literature search of the stated databases and is by no means exhaustive or conclusive. Due to time and resource constraints, the search was limited to records in English and articles with full text available online. Therefore, inclusion of more databases and removal of search restrictions may improve the comprehensiveness of this review. In addition, data search, extraction and synthesis were conducted by a single reviewer only. In order to minimise errors, search of and data extraction from the first database, PubMed, were conducted twice and results compared to identify any oversight. Nonetheless, having a second independent reviewer would be ideal to prevent individual bias in the literature search and extraction. Due to the heterogeneity and nature of the literature included, there is also no available quality assessment tool that can be used to determine the bias of included records and this may affect the quality of this review. While extracting the theoretical basis for evaluation or selection and distinguishing between peer-reviewed and organisational sources may hint at the quality of the records, it may be useful to update this review when an appropriate quality assessment tool becomes available. Furthermore, as this is the first literature review that attempts to elucidate criteria for evaluating practice-based evidence, details of the methodology, including search terms and databases used, and the results obtained could not be corroborated with any existing study. Lastly, pilot testing of this framework in evaluation of existing programmes, for instance to evaluate health-related policies across different contexts in the recent movement to promote Health in All Policies in Europe,209 or gathering expert opinion will also be necessary in the future to determine its usability.

Future research

Future studies on improving best practice reporting, dissemination and adoption will be complementary in maximising the potential of the best practice approach.48 Appropriate dissemination is likely to improve the efficiency of programme development as resources may then be devoted to adoption rather than innovation.73 Suitable research may hence go beyond the boundaries of public health and involve knowledge management theories.46,210 This includes building suitable knowledge sharing platforms and helping decision-makers identify elements that can be replicated in their own settings.

Ultimately, improving the selection, dissemination and transfer processes of best practices can then facilitate and promote appropriate use of the best practice approach to generate reliable practice-based evidence which can complement research findings in public health. Inclusion of credible practice-based evidence in informing evidence-based practice is consequently likely to enhance the feasibility of derived interventions and widen the scope of recommended practices. Furthermore, it taps a previously underrated wealth of field-based knowledge and experience that should be valued as much as, if not more than, physical or financial resources by practitioners attempting to develop effective public health interventions.

Acknowledgments

The author wishes to thank Dr Peter Scarborough and Dr Emma Plugge from the University of Oxford for their invaluable comments on earlier drafts, as well as the World Health Organization Regional Office for Europe for its support.

References

- 1.World Health Organization. Public health. Available from: http://www.who.int/trade/glossary/story076/en/ [Google Scholar]

- 2.Public Health Functions Steering Committee. Public health in America. Available from: http://www.health.gov/phfunctions/public.htm [Google Scholar]

- 3.Ramagem C, Ruales J. The essential public health functions as a strategy for improving overall health systems performance: trends and challenges since the public health in the Americas Initiative, 2000-2007. World Health Organization; 2008. Available from: http://www.paho.org/PAHO-USAID/index.php?option=com_docman&task=doc_download&gid=10413&Itemid=99999999 [Google Scholar]

- 4.WHO Regional Committee for Europe. Strengthening public health services across the European Region – a summary of background documents for the European Action Plan. 2012. Available from: http://www.euro.who.int/_data/assets/pdf_file/0017/172016/RC62-id05-final-Eng.pdf?ua=1 [Google Scholar]

- 5.Stevenson A, ed. Oxford dictionary of English. 3rd ed. Oxford: Oxford University Press; 2010. [Google Scholar]

- 6.Evidence-Based Medicine Working Group. Evidence-based medicine: a new approach to teaching the practice of medicine. J Am Med Assoc 1992;268:2420-5. [DOI] [PubMed] [Google Scholar]

- 7.Sackett DL, Rosenberg WM, Gray JA, et al. Evidence based medicine: what it is and what it isn’t. BMJ 1996; 312:71-2. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 8.Jenicek M. Epidemiology, evidenced-based medicine, and evidence-based public health. J Epidemiol 1997;7:187-97. [DOI] [PubMed] [Google Scholar]

- 9.Brownson R, Gurney J, Land G. Evidence-based decision making in public health. J Publ Health Manag Pract 1999;5:86-97. [DOI] [PubMed] [Google Scholar]

- 10.Birch S. As a matter of fact: evidence-based decision-making unplugged. Health Econ 1997;6:547-59. [DOI] [PubMed] [Google Scholar]

- 11.Brownson R, Fielding J, Maylahn C. Evidence-based public health: a fundamental concept for public health practice. Ann Rev Publ Health 2009;30:175-201. [DOI] [PubMed] [Google Scholar]

- 12.Flay B, Biglan A, Boruch R, et al. Standards of evidence: criteria for efficacy, effectiveness and dissemination. Prevent Sci 2005;6:151-75. [DOI] [PubMed] [Google Scholar]

- 13.Hill N, Frappier-Davignon L, Morrison B. The periodic health examination. Can Med Assoc J 1979;121:1193-254.115569 [Google Scholar]

- 14.World Health Organization. WHO handbook for guideline development. World Health Organization; 2011. Available from: http://apps.who.int/iris/bitstream/10665/75146/1/9789241548441_eng.pdf [Google Scholar]

- 15.Balshem H, Helfand M, Schünemann H, et al. GRADE guidelines: 3. rating the quality of evidence. J Clin Epidemiol 2011;64:401-6. [DOI] [PubMed] [Google Scholar]

- 16.Glasgow RE, Lichtenstein E, Marcus AC. Why don’t we see more translation of health promotion research to practice? Rethinking the efficacy-to-effectiveness transition. Am J Public Health 2003;93:1261-7. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 17.Farris R, Haney D, Dunet D. Expanding the evidence for health promotion: developing best practices for WISEWOMAN. J Womens Health (Larchmt) 2004;13:634-43. [DOI] [PubMed] [Google Scholar]

- 18.Green LW, Ottoson JM. From efficacy to effectiveness to community and back: evidence-based practice vs practice-based evidence. Glasgow RE, Narayan KMV, Meltze D, eds. From clinical trials to community: the science of translating diabetes and obesity research, 12-13 Jan. 2004, Bethesda: National Institutes of Health; 2004. pp 15-18. [Google Scholar]

- 19.Rychetnik L, Frommer M, Hawe P, Shiell A. Criteria for evaluating evidence on public health interventions. J Epidemiol Community Health 2002;56:119-27. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 20.Kemm J. The limitations of evidence-based public health. J Eval Clin Pract 2006;12:319-24. [DOI] [PubMed] [Google Scholar]

- 21.Walker J, Bruns E. Building on practice-based evidence: using expert perspectives to define the wraparound process. Psychiatr Serv 2006;57:1579-85. [DOI] [PubMed] [Google Scholar]

- 22.Thorpe K, Zwarenstein M, Oxman A, et al. A pragmatic-explanatory continuum indicator summary (PRECIS): a tool to help trial designers. Can Med Assoc J 2009;180:E47-57. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 23.Lobstein T, Swinburn B. Health promotion to prevent obesity. McQueen DV, Jones CM, eds. Global perspectives on health promotion effectiveness. New York: Springer; 2007. pp 125-150. [Google Scholar]

- 24.McKinlay J. Paradigmatic obstacles to improving the health of populations: implications for health policy. Salud Pública de México 1998;40:369-79. [DOI] [PubMed] [Google Scholar]

- 25.Green LW. Public health asks of systems science: to advance our evidence-based practice, can you help us get more practice-based evidence? Am J Public Health 2006;96:406-9. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 26.Glasgow R, Emmons K. How can we increase translation of research into practice? Types of evidence needed. Ann Rev Public Health 2007;28:413-33. [DOI] [PubMed] [Google Scholar]

- 27.Leeman J, Sandelowski M. Practice-based evidence and qualitative inquiry. J Nurs Scholarsh 2012;44:171-9. [DOI] [PubMed] [Google Scholar]

- 28.Wilson KM, Brady TJ, Lesesne C, et al. An organising framework for translation in public health: the knowledge to action framework. Prevent Chronic Dis 2011;8:A46-52. [PMC free article] [PubMed] [Google Scholar]

- 29.Banta H. Considerations in defining evidence for public health. Int J Technol Assess Health Care 2003;19:559-72. [DOI] [PubMed] [Google Scholar]

- 30.Rootman I, Goodstadt M, Hyndman B, et al. Evaluation in health promotion. World Health Organization. Report number: 92, 2001. [PubMed] [Google Scholar]

- 31.Baker EA, Brennan Ramirez LK, Claus JM, Land G. Translating and disseminating research- and practice-based criteria to support evidence-based intervention planning. J Public Health Manag Pract 2008;14:124-30. [DOI] [PubMed] [Google Scholar]

- 32.Kerkhoff LV, Szlezák N. Linking local knowledge with global action: examining the Global Fund to Fight AIDS, Tuberculosis and Malaria through a knowledge system lens. Bull World Health Organ 2006;84:629-35. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 33.Øyen E. A methodological approach to best practices. Øyen E, Cimadamore A. eds. Best practices in poverty reduction: an analytical framework. London: Zed Books; 2002. pp 1-28. [Google Scholar]

- 34.Brannan T, Durose C, John P, Wolman H. Assessing best practice as a means of innovation. Local Governm Stud 2008;34:23-38. [Google Scholar]

- 35.Cross R, Iqbal A. The Rank Xerox experience: benchmarking ten years on. Rolstadas A, ed. Benchmarking: theory and practice. New York: Springer; 1995. pp 3-10. [Google Scholar]

- 36.Elmuti D, Kathawala Y. An overview of benchmarking process: a tool for continuous improvement and competitive advantage. Benchmark Qual Manag Technol 1997;4:229-43. [Google Scholar]

- 37.Newmark A. An integrated approach to policy transfer and diffusion. Rev Policy Res 2002;19:151-78. [Google Scholar]

- 38.Peters M, Heron T. When the best is not good enough: an examination of best practice. J Spec Educ 1993;26:371-85. [Google Scholar]

- 39.Bendixsen S, de Guchteneire P. Best practices in immigration services planning. J Policy Anal Manag 2003;22:677-82. [Google Scholar]

- 40.Compassion Capital Fund National Resource Centre. Identifying and promoting effective practices. Compassion Capital Fund National Resource Centre. 2010. Available from: http://www.strengtheningnonprofits.org/resources/guidebooks/Identifying%20and%20Promoting%20Effective%20Practices.pdf [Google Scholar]

- 41.WHO Regional Office for Europe. Best practices in prevention, control and care for drug-resistant tuberculosis. WHO Regional Office for Europe. 2013. Available from: http://www.euro.who.int/_data/assets/pdf_file/0020/216650/Best-practices-in-prevention,control-and-care-for-drugresistant-tuberculosis-Eng.pdf [Google Scholar]

- 42.Jetha N, Robinson K, Wilkerson T, et al. Supporting knowledge into action: the Canadian best practices initiative for health promotion and chronic disease prevention. Can J Public Health 2008;99:I1-8. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 43.WHO Regional Office for Africa. Guide for documenting and sharing best practices in health programmes. WHO Regional Office for Africa; 2008. Available from: http://www.afro.who.int/index.php?option=com_docman&task=doc_download&gid=1981 [Google Scholar]

- 44.Joint United Nations Programme on HIV/AIDS. HIV and sexually transmitted infection prevention among sex workers in Eastern Europe and Central Asia. Joint United Nations Programme on HIV/AIDS. 2006. Available from: http://data.unaids.org/pub/Report/2006/jc1212-hivpreveasterneurcentrasia_en.pdf [Google Scholar]

- 45.Knowledge and Learning Group Africa Region. Innovations in knowledge sharing and learning in the Africa region: retrospective and prospective. The World Bank; 2002. Available from: http://siteresources.worldbank.org/AFRICAEXT/Resources/km2Retrospective.pdf [Google Scholar]

- 46.Van Beveren J. Does health care for knowledge management? J Knowledge Manag 2003;7:90-5. [Google Scholar]

- 47.Food and Agriculture Organisation of the United Nations. FAO good practices. Available from: http://www.fao.org/capacitydevelopment/goodpractices/gphome/en/. Accessed May 2014. [Google Scholar]

- 48.Albert D, Fortin R, Lessio A, et al. Strengthening chronic disease prevention programming: the toward evidence-informed practice (TEIP) program assessment tool. Prevent Chron Dis 2013;10:1-11. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 49.Kahan B, Goodstadt M, Rajkumar E. Best practices in health promotion: a scan of needs and capacities in Ontario. The Centre for Health Promotion, University of Toronto; 1999. Available from: http://www.idmbestpractices.ca/pdf/BP_scan.pdf [Google Scholar]

- 50.Kahan B, Goodstadt M. An exploration of best practices in health promotion. The Centre for Health Promotion, University of Toronto; 1998. Available from: http://www.idmbestpractices.ca/pdf/Hpincan4.pdf [Google Scholar]

- 51.ten Have M, de Beaufort ID, Mackenbach JP, van der Heide A. An overview of ethical frameworks in public health: can they be supportive in the evaluation of programs to prevent overweight? BMC Public Health 2010;10:638-48. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 52.Jackson N, Waters E, for the Guidelines for Systematic Reviews in Health Promotion and Public Health Taskforce. Criteria for the systematic review of health promotion and public health interventions. Health Promot Int 2005;20:367-74. [DOI] [PubMed] [Google Scholar]

- 53.Nutbeam D, Harris E, Wise M. Theory in a nutshell: a practical guide to health promotion theories. New York: McGraw-Hill; 2010. [Google Scholar]

- 54.WHO Regional Office for the Americas. Knowledge sharing for health: scaling up effective solutions for improved health outcomes. WHO Regional Office for the Americas; 2012. Available from: http://www.paho.org/sscoop/wp-content/uploads/2012/11/Solutions-and-good-practices-guidelinesENG.pdf [Google Scholar]

- 55.Advance Africa. Advance Africa’s approach to best practices. Available from: http://advanceafrica.msh.org/tools_and_approaches/Best_Practices/index.html. [Google Scholar]

- 56.Oxlund B. Manual on best practices HIV/AIDS programming with children and young people. AIDSNET. 2005. Available from: http://www.safaids.net/files/Manual%20on%20Best%20Practices%20with%20Children%20and%20Young%20People_AIDSnet.pdf [Google Scholar]

- 57.United Nations Children’s Fund. Innovations, lessons learned and good practices. Available from: http://www.unicef.org/innovations/index_49082.html. Accessed May 2014. [Google Scholar]

- 58.Spencer LM, Schooley MW, Anderson LA, et al. Seeking best practices: a conceptual framework for planning and improving evidence-based practices. Prevent Chron Dis 2013;10:E207-15. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 59.United Nations Educational Scientific and Cultural Organisation. UNESCO MOST Clearing House. Available from: http://www.unesco.org/most/bpindi.htm. Accessed May 2014. [PubMed] [Google Scholar]

- 60.Cameron R, Jolin M, Walker R, et al. Linking science and practice: toward a system for enabling communities to adopt best practices for chronic disease prevention. Health Promot Pract 2001;2:35-42. [Google Scholar]

- 61.Bull F. Review of best practice and recommendations for interventions on physical activity. A report for the Premier’s Physical Activity Taskforce on behalf of the Evaluation and Monitoring Working Group. Western Australian Government; 2003. Available from: https://secure.ausport.gov.au/__data/assets/pdf_file/0011/557696/Review_of_best_practice_and_recommendations_for_interventions_on_physical_activity.pdf [Google Scholar]

- 62.Association of State & Territorial Dental Directors. Best practice approach reports. Available from: http://www.astdd.org/school-based-dental-sealant-programs-introduction/. Accessed June 2014. [Google Scholar]

- 63.Thurston W, Vollmann A, Wilson D, et al. Development and testing of a framework for assessing the effectiveness of health promotion. Sozial- und Präventivmedizin 2003;48:301-16. [DOI] [PubMed] [Google Scholar]

- 64.Lloyd L. Best practices for dengue prevention and control in the Americas. United States Agency for International Development; 2003. Available from: http://www.ehproject.org/PDF/strategic_papers/SR7-BestPractice.pdf [Google Scholar]

- 65.WHO Regional Office for the Americas. Working to achieve health equity with an ethnic perspective: what has been done and best practices. 2004. Available from: http://www1.paho.org/English/AD/ethnicity-0ct-04.pdf [Google Scholar]

- 66.WHO Regional Office for the Eastern Mediterranean. Success stories for community based initiatives. 2005. Available from: http://www.emro.who.int/images/stories/cbi/documents/publications/success-stories/cbi_successtories.pdf [Google Scholar]

- 67.McNeil D, Flynn M. Methods of defining best practice for population health approaches with obesity prevention as an example. Proc Nutr Soc 2006;65:403-11. [DOI] [PubMed] [Google Scholar]

- 68.World Health Organization. Review of best practice in interventions to promote physical activity in developing countries. 2008. Available from: http://www.who.int/dietphysicalactivity/bestpracticePA2008.pdf [Google Scholar]

- 69.World Health Organization. Implementing best practices in reproductive health: our first 10 years. 2010. Available from: http://www.ibpinitiative.org/images/OurFirstTenYears2010.pdf [Google Scholar]

- 70.CDC Office for State Tribal Local and Territorial Support. CDC Best Practices Workgroup: definitions, criteria, and associated terms. 2010. Available from: http://www.cdc.gov/niosh/z-draftunder-review-do-not-cite/draftwrt/pdfs/Draft-Best-Practice-Definitions-adnd-Criteria-for-review-9-21-10.docx [Google Scholar]

- 71.King L, Gill T, Allender S, Swinburn B. Best practice principles for community based obesity prevention: development, content and application. Obes Rev 2011;12:329-38. [DOI] [PubMed] [Google Scholar]

- 72.Hercot D, Meessen B, Ridde V, Gilson L. Removing user fees for health services in low-income countries: a multi-country review framework for assessing the process of policy change. Health Policy Plann 2011;26:ii5-15. [DOI] [PubMed] [Google Scholar]

- 73.European Monitoring Centre for Drugs and Drug Addiction. Best practice portal. Available from: http://www.emcdda.europa.eu/best-practice. Accessed May 2014. [Google Scholar]

- 74.Public Health Agency of Canada. Best practice portal. Available from: http://cbpp-pcpe.phac-aspc.gc.ca/interventions/about-best-practices/. Accessed May 2014. [Google Scholar]

- 75.WHO Regional Office for Europe. Good practices in nursing and midwifery: from expert to expert. 2013. Available from: http://www.euro.who.int/__data/assets/pdf_file/0007/234952/Good-practices-in-nursing-and-midwifery.pdf [Google Scholar]

- 76.Bryce J, Roungou JB, Nguyen-Dinh P, et al. Evaluation of national malaria control programmes in Africa. Bull World Health Organ 1994;72:371-81. [PMC free article] [PubMed] [Google Scholar]

- 77.Costongs C, Springett J. Towards a framework for the evaluation of health-related policies in cities. Evaluation 1997;3:345-62. [Google Scholar]

- 78.WHO European Working Group on Health Promotion Evaluation. Health promotion evaluation: recommendations to policy-makers. 1998. Available from: http://apps.who.int/iris/bitstream/10665/108116/1/E60706.pdf [Google Scholar]

- 79.de Zoysa I, Habicht JP, Pelto G, Martines J. Research steps in the development and evaluation of public health interventions. Bull World Health Organ 1998;76:127-33. [PMC free article] [PubMed] [Google Scholar]

- 80.Nutbeam D. Evaluating health promotion: progress, problems and solutions. Health Promot Int 1998;13:27-44. [Google Scholar]

- 81.Ogborne A, Birchmore-Timney C. A framework for the evaluation of activities and programs with harm-reduction objectives. Subst Use Misuse 1999;34:69-82. [DOI] [PubMed] [Google Scholar]

- 82.Wimbush E, Watson J. An evaluation framework for health promotion: theory, quality and effectiveness. Evaluation 2000;6:301-21. [Google Scholar]

- 83.Nutbeam D. Health promotion effectiveness. The questions to be answered. Macdonald G, ed. The evidence of health promotion effectiveness. Shaping public health in a new Europe. Paris: Jouve Composition & Impression; 2000. pp 233-235. [Google Scholar]

- 84.Bauer G, Davies J, Pelikan J, et al. Advancing a theoretical model for public health and health promotion indicator development: proposal from the EUHPID consortium. Eur J Public Health 2003;13:107-13. [DOI] [PubMed] [Google Scholar]

- 85.Lee A, Cheng FF, St Leger L. Evaluating health-promoting schools in Hong Kong: development of a framework. Health Promot Int 2005;20:177-86. [DOI] [PubMed] [Google Scholar]

- 86.Bollars C, Kok H, Van den Broucke S, Mölleman G. European quality instrument for health promotion. European project getting evidence into practice. 2005. Available from: http://ec.europa.eu/health/ph_projects/2003/action1/docs/2003_1_15_a10_en.pdf [Google Scholar]

- 87.Molleman GR, Peters LW, Hosman CM, et al. Project quality rating by experts and practitioners: experience with Preffi 2.0 as a quality assessment instrument. Health Educ Res 2006;21:219-29. [DOI] [PubMed] [Google Scholar]

- 88.Brug J, van Dale D, Lanting L, et al. Towards evidence-based, quality-controlled health promotion: the Dutch recognition system for health promotion interventions. Health Educ Res 2010;25:1100-6. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 89.Douglas F, Gray D, van Teijlingen E. Using a realist approach to evaluate smoking cessation interventions targeting pregnant women and young people. BMC Health Serv Res 2010;10:49-55. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 90.Victorian Government Department of Health. Evaluation framework for health promotion and disease prevention programs. 2010. Available from: http://docs2.health.vic.gov.au/docs/doc/AE7E5D59ADE57556CA2578650020BBDE/$FILE/Evaluation%20framework%20for%20health%20promotion.pdf [Google Scholar]

- 91.DeGroff A, Schooley M, Chapel T, Poister T. Challenges and strategies in applying performance measurement to federal public health programs. Eval Program Plann 2010;33:365-72. [DOI] [PubMed] [Google Scholar]

- 92.Davies J, Sherriff N. The gradient in health inequalities among families and children: a review of evaluation frameworks. Health Pol 2011;101:1-10. [DOI] [PubMed] [Google Scholar]

- 93.Payne G, Thompson D, Heiser C, Farris R. An evaluation framework for obesity prevention policy interventions. Prevent Chron Dis 2012;9:1-9. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 94.Cohen JE, Donaldson EA. A framework to evaluate the development and implementation of a comprehensive public health strategy. Publ Health 2013;127:791-3. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 95.Milstein RL, Wetterhall SF. Framework for program evaluation in public health. CDC Morbidity and Mortality Weekly Report 1999;48:1-40. [PubMed] [Google Scholar]

- 96.Cornford T, Doukidis G, Forster D. Experience with a structure, process and outcome framework for evaluating an information system. Omega 1994;22:491-504. [Google Scholar]

- 97.United States Department of Health and Human Services. National healthcare quality report 2005. Available from: http://archive.ahrq.gov/qual/nhqr05/nhqr05.pdf [Google Scholar]

- 98.Donabedian A. The quality of care: how can it be assessed? J Am Med Assoc 1988;260:1743-8. [DOI] [PubMed] [Google Scholar]

- 99.Kelley E, Hurst J. Healthcare quality indicators project conceptual framework paper. Organisation for Economic Co-operation and Development; 2006. Available from: http://www.oecd-ilibrary.org/docserver/download/5l9t19m240hc.pdf?expires=1438495946&id=id&accname=guest&checksum=F575196588ECB6B18DFD56ED42CDF781 [Google Scholar]

- 100.Arah OA, Westert GP, Hurst J, Klazinga NS. A conceptual framework for the OECD health care quality indicators project. Int J Qual Health Care 2006;18:5-13. [DOI] [PubMed] [Google Scholar]

- 101.Knowles JC, Leighton C, Stinson W. Measuring results of health sector reform for system performance: a handbook of indicators. Partnerships for Health Reform. 1997. Available from: http://info.worldbank.org/etools/docs/library/122031/bangkokCD/BangkokMarch05/Week2/2Tuesday/S3HealthSysPerformance/MeasuringResultsofHSReform.pdf [Google Scholar]

- 102.Handler A, Issel M, Turnock B. A conceptual framework to measure performance of the public health system. Am J Public Health 2001;91:1235-9. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 103.National Health Performance Committee. National health performance framework report. Queensland Health. 2001. Available from: http://www.pc.gov.au/research/completed/health-performance-framework-2001/nphfr2001.pdf [Google Scholar]

- 104.Aday L, Begley C, Lairson D, Balkrishnan R. Introduction to health services research and policy analysis. Aday L, Begley C, Lairson D, Balkrishnan R, eds. Evaluating the healthcare system: effectiveness, efficiency, and equity. Chicago: Health Administration Press; 2004. pp 1-26. [Google Scholar]

- 105.Canadian Institute for Health Information. Health indicators. 20132014 Available from: https://secure.cihi.ca/free_products/HI2013_Jan30_EN.pdf. [Google Scholar]

- 106.Jee M, Or Z. Health outcomes in OECD countries: a framework for health indicators for outcome-oriented policymaking. Organisation for Economic Co-operation and Development; 1999. Available from: http://www.oecd-ilibrary.org/docserver/download/5lgsjhvj7s8r.pdf?expires=1438496104&id=id&accname=guest&checksum=0C0092F72A229E50FE0BBF38CFC9B46E [Google Scholar]

- 107.Sosin D. Draft framework for evaluating syndromic surveillance systems. J Urban Health 2003;80:i8-i13. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 108.Glasgow RE, Vogt TM, Boles SM. Evaluating the public health impact of health promotion interventions: the RE-AIM framework. Am J Public Health 1999;89:1322-7. [DOI] [PMC free article] [PubMed] [Google Scholar]