Abstract

A fast growing literature of multisensory emotion integration notwithstanding, the chemical senses, intimately associated with emotion, have been largely overlooked. Moreover, an ecologically highly relevant principle of “inverse effectiveness”, rendering maximal integration efficacy with impoverished sensory input, remains to be assessed in emotion integration. Presenting minute, subthreshold negative (vs. neutral) cues in faces and odors, we demonstrated olfactory-visual emotion integration in improved emotion detection (especially among individuals with weaker perception of unimodal negative cues) and response enhancement in the amygdala. Moreover, while perceptual gain for visual negative emotion involved the posterior superior temporal sulcus/pSTS, perceptual gain for olfactory negative emotion engaged both the associative olfactory (orbitofrontal) cortex and amygdala. Dynamic causal modeling (DCM) analysis of fMRI time series further revealed connectivity strengthening among these areas during cross modal emotion integration. That multisensory (but not low-level unisensory) areas exhibited both enhanced response and region-to-region coupling favors a top-down (vs. bottom-up) account for olfactory-visual emotion integration. Current findings thus confirm the involvement of multisensory convergence areas, while highlighting unique characteristics of olfaction-related integration. Furthermore, successful crossmodal binding of subthreshold aversive cues not only supports the principle of “inverse effectiveness” in emotion integration but also accentuates the automatic, unconscious quality of crossmodal emotion synthesis.

Keywords: emotion, fMRI, multisensory integration, olfaction, vision

Organisms as primitive as a progenitor cell integrate information from multiple senses to optimize perception (Calvert et al., 2004; Driver and Noesselt, 2008; based on human and nonhuman primate data). This synergy is especially prominent with minimal sensory input, facilitating signal processing in impoverished or ambiguous situations (known as the principle of “inverse effectiveness;” Stein & Meredith, 1993). Fusing minute, discrete traces of biological/emotional significance (e.g., a fleeting malodor, a faint discoloration) into a discernible percept of a harmful object (e.g., contaminated food), multisensory emotion integration would afford particular survival advantage by promoting defense behavior. Research in multisensory emotion integration has grown rapidly (cf. Maurage and Campanella, 2013), but the prevalent application of explicit (vs. subtle, implicit) emotion cues has limited the assessment of this ecologically highly relevant principle in integrating emotion across modalities.

Furthermore, the “physical senses” (vision, audition and somatosensation) have dominated this research, with the “chemical senses” (olfaction and gustation) largely overlooked (Maurage and Campanella, 2013). Nevertheless, among all senses, olfaction holds a unique intimacy with the emotion system, and olfactory processing closely interacts with emotion processing (Yeshurun and Sobel, 2010; Krusemark et al., 2013). This intimate association stands on a strong anatomical basis: the olfactory system is intertwined via dense reciprocal fibers with primary emotion areas, including the amygdala and orbitofrontal cortex (OFC; Carmichael et al., 1994; based on the macaque monkey), and these emotion-proficient regions reliably participate in basic olfactory processing to the extent that the OFC (the posterior OFC in rodents and the middle OFC in humans) is considered a key associative olfactory cortex (Zelano and Sobel, 2005; Gottfried, 2010; based on human and rodent data). Importantly, olfaction interacts with emotion processing across sensory modalities. Olfactory cues can modulate visual perception of facial emotion and social likability, even at minute/subthreshold concentrations (Leppänen and Hietanen, 2003; Li et al., 2007; Zhou and Chen, 2009; Forscher and Li, 2012; Seubert et al., 2014). Preliminary neural evidence further indicates that emotionally charged odors modulate visual cortical response to ensuing emotional faces (Seubert et al., 2010; Forscher and Li, 2012). However, mechanisms underlying olfactory-visual emotion integration remain elusive. It is especially unclear how visual cues influence olfactory emotion processing, although the effect of visual cues (e.g., color, image) on standard olfactory perception is well known (Sakai, 2005; Dematte et al., 2006; Mizutani et al., 2010), with the OFC potentially mediating this crossmodal modulation (Gottfried et al., 2003; Osterbauer et al., 2005).

Prior research has provided compelling evidence that key multisensory convergence zones linking the physical sensory systems (e.g., the posterior superior temporal sulcus/pSTS and superior colliculus) are primary sites of multisensory integration of both emotion and object information (cf. Calvert et al., 2004; Driver and Noesselt, 2008). However, those studies concerned only the physical senses, and absent such direct, dense connections between the olfactory and visual systems, olfactory-visual integration is likely to engage additional brain circuits. Of particular relevance here, the amygdala has been repeatedly implicated in multisensory emotion integration (Maurage and Campanella, 2013) and, as mentioned above, the OFC in olfactory-visual synthesis in standard odor quality encoding (Gottfried et al., 2003; Osterbauer et al., 2005). Indeed, as these areas are not only multimodal and emotion-proficient but also integral to olfactory processing (Amaral et al., 1992; Carmichael et al., 1994; Rolls, 2004; based on human and nonhuman primate data), they could be instrumental in integrating emotion information between olfaction and vision. Therefore, examining possible common and distinct mechanisms underlying crossmodal facilitation in visual and olfactory processing would provide unique insights into the literature.

Here, using paired presentation of (negative or neutral) faces and odors in an emotion detection task, we assessed general and visual- or olfactory-relevant facilitation via olfactory-visual (OV) emotion integration (Figure 1). Importantly, to interrogate the principle of inverse effectiveness (i.e., multisensory integration is especially effective when individual sensory input is minimal) in emotion integration, we applied the negative emotion at a minute, imperceptible level and examined whether improved emotion perception via OV integration negatively correlated with the strength of unimodal emotion perception (Kayser et al., 2008; based on the macaque monkey). Lastly, we employed functional magnetic resonance imaging (fMRI) analysis with effective connectivity analysis (using dynamic causal modeling/DCM, Friston et al., 2003) to specify key regions subserving OV emotion integration and the neural network in which they operate in concert.

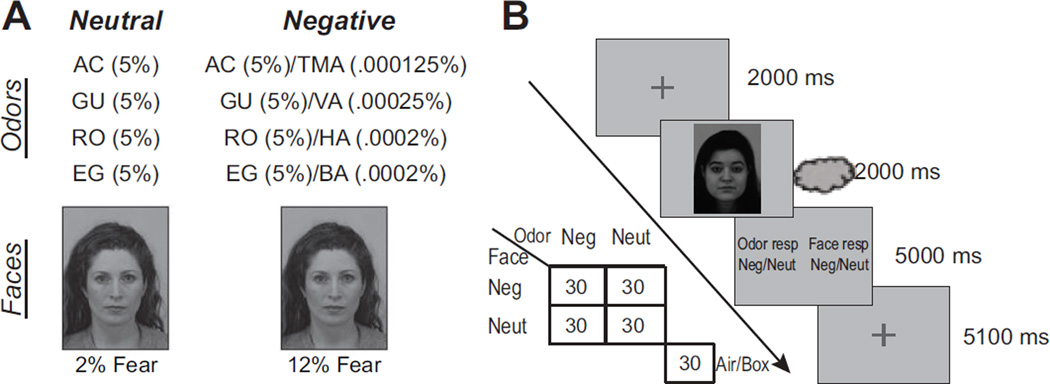

Figure 1.

Stimuli and experimental paradigm. A) Odors and face examples used in the experiment. AC = Acetophenone; TMA = Trimethyl amine; GU = Guaiacol; VA = Valeric acid; RO = Rose oxide; HA = Hexanoic acid; EG = Eugenol; BA = Butyric acid. B) Subjects responded to a face and an odor on each trial as to whether they contained negative emotion. Four odor-face combinations (each consisting of 30 trials; congruent negative stimuli, incongruent combinations with negative cues in either faces or odors, and congruent neutral) were included, forming a 2-by-2 factorial design.

Materials and Methods

Participants

Sixteen individuals (8 females; age 19.6 ± 3.0 years, range 18–30) participated in the study in exchange for course credit and/or monetary compensation. Participants were screened prior to the experiment to exclude any history of severe head injury, psychological/neurological disorders or current use of psychotropic medication, and to ensure normal olfaction and normal or corrected-to-normal vision. Individuals with acute nasal infections or allergies affecting olfaction were excluded. All participants provided informed consent to participate in the study, which was approved by the University of Wisconsin–Madison Institutional Review Board.

Stimuli

Face Stimuli

Fearful and neutral face images of 4 individuals were selected from the Karolinska Directed Emotional Faces set, a collection of color face stimuli with consistent background, brightness, and saturation (Lundqvist et al., 1998). This resulted in a total of eight face images (four neutral and four fearful). To create minute (potentially subthreshold) negative face stimuli, fearful and neutral faces from the same actor were morphed together using Fantamorph (Abrosoft, Beijing, China), resulting in graded fearful expressions. Based on previous research in our lab (Forscher and Li, 2012), we set the subthreshold fear expression level at 10–15% of the neutral-to-fear gradient (i.e., containing 10–15% of the full fear expression; varying based on the expressiveness of the individual actors), and set neutral face stimuli at a 2% morph to generally match the fearful face images in morphing-induced image alterations (Figure 1A). Our previous data indicate that these very weak negative cues can elicit subliminal emotion processing (Forscher and Li, 2012).

Odor Stimuli

Four prototypical aversive odorants (trimethyl amine/TMA—“rotten fish”; valeric acid/VA—“sweat/rotten cheese”; hexanoic acid/HA—“rotten meat/fat”; and butyric acid/BA—“rotten egg”) were chosen as olfactory negative stimuli. Neutral odor stimuli included four neutral odorants (acetophenone/AC, 5%; guaiacol/GU, 5%; rose oxide/RO, 5%; and eugenol/EG, 5%; all diluted in mineral oil). To render the negative odor hardly detectable, we applied an olfactory “morphing” procedure by mixing very weak concentrations of these odors into the neutral odor solution, resulting in four negative odor mixtures as the olfactory negative stimuli: AC 5%/TMA .000125%; GU 5%/VA .0025%; RO 5%/HA .0002%; EG 5%/BA .0002% (Figure 1A; Krusemark and Li, 2012). As components in a mixture can suppress the perceived intensity of other components, and a strong component can even mask the perceived presence of a weak component (Cain, 1975; Laing et al., 1983), these odor mixtures allowed us to present the threat odors below conscious awareness at practically meaningful concentrations. As shown in our prior study, these minute negative odors can evoke subliminal emotion processing (Krusemark and Li, 2012). These concentrations for the negative mixtures were determined based on systematic piloting in the lab, first with bottles and then an olfactometer. Analogous to the face stimuli, the neutral and negative odorants differed only by the presence of a minute aversive element.

Procedure

Experimental Paradigm

At the beginning of the experiment, each participant provided affective valence ratings for the face and odor stimuli (presented individually) using a visual analog scale (VAS) of −10 to +10 (“extremely unpleasant” to “extremely pleasant”). We used differential ratings between the neutral stimuli and their negative counterparts to provide an index of perceived negative emotion in the unimodal (visual and olfactory) stimuli. Notably, this measure was mathematically independent of and procedurally not confounded with the measures of OV integration. We therefore correlated it with both behavioral and neural measures of OV integration to test the principle of inverse effectiveness. We also obtained intensity and pungency ratings of the odors using a similar VAS (1–10; “extremely weak” to “extremely strong” for intensity and “not at all pungent” to “extremely pungent” for pungency). While in the scanner, participants completed a two-alternative forced-choice emotion detection task on faces and odors, respectively (Figure 1B). At the beginning of each trial, a gray fixation cross appeared centrally, indicating to the participant to prepare for the trial, and then the cross turned green, which prompted participants to make a slow, gradual sniff. At the offset of the green cross (250 ms), a face image and an odorant were presented for 2 s, followed by a response screen instructing participants to make a button press with each hand as to whether the odor and face stimuli were “neutral” or “negative”. Participants were informed that neutral and negative stimuli were each presented half of the time, accompanied by either congruent or incongruent stimuli in the other modality with equiprobability. As a way to measure subliminal emotion processing, we encouraged participants to make a guess when they were unsure. The order and the hand of odor and face responses were counterbalanced across participants.

The face-odor pairing formed a 2-by-2 factorial design with two congruent and two incongruent conditions. The congruent conditions included a bimodal negative-negative pairing condition (“ONegVNeg”) and a (purely neutral) bimodal neutral-neutral pairing condition (“ONeutVNeut”). The incongruent conditions included negative-neutral pairings with negative emotion present in one sense accompanied by neutral stimuli in the other sense (“ONegVNeut”: negative odors paired with neutral faces, operationally defined as the olfactory baseline condition for OV negative emotion integration; “ONeutVNeg”: negative faces paired with neutral odors, operationally defined as the visual baseline condition for OV negative emotion integration; Figure 1B). Also, there was a fifth condition consisting of a blank box display paired with room air stimulation, which was included to provide a BOLD response baseline and to improve the power of fMRI analysis. Each condition was presented 30 times over two runs (totaling 150 trials; Run 1/2 = 80/70 trials), in a pseudo-random order such that no condition was repeated over three trials in a row. Trials recurred with a stimulus onset asynchrony (SOA) of 14.1 s. Note, the simultaneous presentation of both visual and olfactory stimuli in each trial, varying in congruency of emotion, provided balanced sensory stimulation in all four experimental conditions, allowing us to focus directly on emotion integration (beyond standard bimodal sensory integration).

Face stimuli were presented through a goggles system (Avotec, Inc., FL) linked to the presentation computer, with visual clarity calibrated for each participant. Images were displayed centrally with a visual angle of 4.3° × 6.0°. Odor stimuli and odorless air were delivered at room temperature using an MRI-compatible sixteen-channel computer-controlled olfactometer (airflow set at 1.5 L/min). When no odor was being presented, a control air flow was on at the same flow rate and temperature. This design permits rapid odor delivery in the absence of tactile, thermal, or auditory confounds (Lorig et al., 1999; Krusemark and Li, 2012; Li et al., 2010). Stimulus presentation and response recording were executed using Cogent software (Wellcome Department of Imaging Neuroscience, London, UK) as implemented in Matlab (Mathworks, Natick, MA).

Respiratory monitoring

Using a BioPac MP150 system and accompanying AcqKnowledge software (BioPac Systems, CA), respiration was measured with a breathing belt affixed to the subject’s chest to record abdominal or thoracic contraction and expansion. Subject-specific sniff waveforms were baseline-adjusted by subtracting the mean activity in the 1000 ms preceding sniff onset, and then averaged across each condition. Sniff inspiratory volume, peak amplitude, and latency to peak were computed for each condition in Matlab.
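The baseline adjustment and sniff parameters described above can be illustrated with a minimal sketch. This is not the Matlab code used in the study: the function name, the sampling rate argument `fs_hz`, the 2-s analysis window, and the approximation of inspiratory volume as the area under the positive part of the trace are all assumptions made for illustration. The sketch also operates on a single trace, whereas the study averaged waveforms within each condition.

```python
from statistics import mean

def sniff_metrics(trace, onset_idx, fs_hz, window_s=2.0):
    """Baseline-adjust one respiration trace and summarize the sniff.

    trace     : list of respiration samples (arbitrary units)
    onset_idx : sample index of sniff onset (must leave 1 s of data before it)
    fs_hz     : sampling rate in Hz (hypothetical value supplied by the caller)
    window_s  : post-onset analysis window in seconds (assumed, not from the text)
    """
    n_base = int(round(1.0 * fs_hz))  # 1000 ms preceding sniff onset
    baseline = mean(trace[onset_idx - n_base:onset_idx])
    n_win = int(round(window_s * fs_hz))
    adjusted = [x - baseline for x in trace[onset_idx:onset_idx + n_win]]

    peak = max(adjusted)                          # peak amplitude
    latency_s = adjusted.index(peak) / fs_hz      # latency to peak, in seconds
    # Assumed proxy for inspiratory volume: area under the positive deflection.
    volume = sum(x for x in adjusted if x > 0) / fs_hz
    return {"peak": peak, "latency_s": latency_s, "volume": volume}
```

Per-condition values from such a function would then be the inputs to the repeated-measures ANOVAs on respiratory parameters.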

Behavioral statistical analysis

Emotion detection accuracy [Hit rate + Correct Rejection (CR) rate] was analyzed first at the individual level using the binomial test to determine whether any given subject could reliably detect the negative cues, thus providing an objective measure of conscious perception of negative emotion (Kemp-Wheeler and Hill, 1988; Hannula et al., 2005; Li et al., 2008). Next, to examine the OV emotion integration effect, we derived an OV integration index (OVI; Cohen and Maunsell, 2010) for each of the two modalities, $\mathrm{OVI} = (\mathrm{Accuracy}_{\mathrm{congruent}} - \mathrm{Accuracy}_{\mathrm{incongruent}}) / (\mathrm{Accuracy}_{\mathrm{congruent}} + \mathrm{Accuracy}_{\mathrm{incongruent}})$, indicating the improvement in emotion detection via OV integration above the baseline conditions (i.e., negative cues in either one sense accompanied by neutral stimuli in the other sense).
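As an illustrative sketch of these two behavioral measures (not the analysis code used in the study), the snippet below computes a one-tailed binomial accuracy cutoff and the OVI. The function names are hypothetical, and the number of trials entering each subject's binomial test is an assumption to be supplied by the caller, since it is not stated explicitly in the text.

```python
from math import comb

def binomial_cutoff(n_trials, chance=0.5, alpha=0.05):
    """Smallest proportion correct whose one-tailed binomial p-value is <= alpha."""
    tail = 0.0
    k_crit = n_trials + 1  # sentinel: no attainable count reaches significance
    # Accumulate P(X >= k) from the largest count downward.
    for k in range(n_trials, -1, -1):
        tail += comb(n_trials, k) * chance**k * (1 - chance) ** (n_trials - k)
        if tail > alpha:
            break
        k_crit = k
    return k_crit / n_trials

def ov_integration_index(acc_congruent, acc_incongruent):
    """OVI = (congruent - incongruent) / (congruent + incongruent)."""
    return (acc_congruent - acc_incongruent) / (acc_congruent + acc_incongruent)

# Toy values for illustration only (not data from the study).
example_ovi = ov_integration_index(0.55, 0.45)
```

Because the OVI is normalized by the summed accuracy, it is bounded between −1 and 1, and positive values indicate better detection with congruent than with incongruent pairing.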

To test the rule of inverse effectiveness, we examined the correlation between the increase in detection accuracy via OV emotion integration and the level of perceived emotion in unimodal stimuli (Kayser et al., 2008). As mentioned above, we used differential valence ratings of neutral faces/odors and their negative counterparts (all as unimodal stimuli) as an index of unimodal emotion perception (which was also mathematically independent of the OVI scores), in the form of unpleasantness ratings: −1* (pleasantness ratings for negative odors/faces − pleasantness ratings for neutral odors/faces). Hence, larger differential unpleasantness scores (i.e., greater unpleasantness ratings for the negative stimuli than the neutral counterparts) would mean stronger emotion perception in the unimodal stimuli.
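A minimal sketch of this inverse-effectiveness test follows, assuming per-subject lists of ratings and OVI scores. The function names are hypothetical, and the conversion of r to a t statistic (with n − 2 degrees of freedom) is the standard textbook formula rather than something specified in the text.

```python
from math import sqrt

def differential_unpleasantness(pleasant_negative, pleasant_neutral):
    """-1 * (pleasantness of negative stimuli - pleasantness of neutral stimuli)."""
    return -1 * (pleasant_negative - pleasant_neutral)

def pearson_r(x, y):
    """Pearson correlation between two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)

def r_to_t(r, n):
    """t statistic for a correlation r from n subjects (df = n - 2)."""
    return r * sqrt((n - 2) / (1 - r * r))
```

Under the inverse-effectiveness hypothesis, `pearson_r(differential_scores, ovi_scores)` is expected to be negative, which is why a one-tailed test in that direction is applied.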

T-tests were conducted on group-level emotion detection accuracy, OVI scores and differential unpleasantness scores. Given our clear hypotheses, one-tailed tests were applied for OVI scores (greater than 0) and their correlation with differential unpleasantness scores (negative correlation). Finally, respiratory parameters were entered into repeated-measures ANOVAs for statistical analysis.

Imaging acquisition and analysis

Gradient-echo T2*-weighted echo planar images (EPI) were acquired with blood-oxygen-level-dependent (BOLD) contrast on a 3T GE MR750 MRI scanner, using an eight-channel head coil with sagittal acquisition. Imaging parameters were: TR/TE = 2350/20 ms; flip angle = 60°; field of view = 220 mm; slice thickness = 2 mm; gap = 1 mm; in-plane resolution/voxel size = 1.72 × 1.72 mm; matrix size = 128 × 128. A high-resolution (1 × 1 × 1 mm³) T1-weighted anatomical scan was acquired. Lastly, a field map was acquired with a gradient echo sequence.

Six “dummy” scans from the beginning of each scan run were discarded in order to allow stabilization of longitudinal magnetization. Imaging data were preprocessed using AFNI (Cox, 1996), where images were slice-time corrected, spatially realigned and coregistered with the T1, and field-map corrected. Output EPIs were then entered into SPM8 (http://www.fil.ion.ucl.ac.uk/spm/software/spm8/), where they were spatially normalized to a standard template, resliced to 2 × 2 × 2 mm voxels and smoothed with a 6-mm full-width half-maximum Gaussian kernel. Normalization was based on Diffeomorphic Anatomical Registration Through Exponentiated Lie algebra (DARTEL), a preferable approach relative to conventional normalization to achieve more precise spatial registration (Ashburner, 2007).

Next, imaging data were analyzed in SPM8 using the general linear model (GLM). Five vectors of onset times were created, corresponding to the four odor/face combinations and the blank box/air condition. These vectors were coded as delta functions, and convolved with a canonical hemodynamic response function (HRF) to form five event-related regressors of interest. Two parametric modulators were included, the first indicating odor response and the second face response. Condition-specific temporal and dispersion derivatives of the HRF were also included to allow for such variations in the HRF. Six movement-related vectors (derived from spatial realignment) were included as regressors of no interest to account for motion-related variance. The data were high-pass filtered (cut-off, 128 s), and an autoregressive model (AR1) was applied.
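To make the construction of an event-related regressor concrete, the sketch below convolves delta (stick) functions at the trial onsets with a double-gamma HRF sampled at the TR. It is a simplified illustration, not SPM8's implementation: the HRF shape parameters (a response with gamma shape 6 and an undershoot with shape 16 scaled by 1/6) are assumed here as commonly used defaults, the kernel is sampled directly at the TR rather than at a finer time resolution, and derivative terms, parametric modulators, and motion regressors are omitted.

```python
from math import exp, gamma

def double_gamma_hrf(t):
    """Double-gamma HRF at time t (s): early response minus a scaled late undershoot."""
    if t <= 0:
        return 0.0
    response = t**5 * exp(-t) / gamma(6)      # gamma density, shape 6, rate 1
    undershoot = t**15 * exp(-t) / gamma(16)  # gamma density, shape 16, rate 1
    return response - undershoot / 6.0

def build_regressor(onsets_s, n_scans, tr_s=2.35, hrf_len_s=32.0):
    """Convolve stick functions at the given onsets with the sampled HRF."""
    kernel = [double_gamma_hrf(i * tr_s) for i in range(int(hrf_len_s / tr_s) + 1)]
    regressor = [0.0] * n_scans
    for onset in onsets_s:
        start = int(round(onset / tr_s))  # nearest scan to the event onset
        for j, h in enumerate(kernel):
            if start + j < n_scans:
                regressor[start + j] += h
    return regressor

# Onsets for one hypothetical condition; 14.1 s corresponds to exactly 6 TRs of 2.35 s.
example = build_regressor([0.0, 14.1 * 5], n_scans=60)
```

One such regressor per condition, stacked column-wise with the nuisance regressors, forms the design matrix to which the GLM is fitted at each voxel.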

Model estimation yielded condition-specific regression coefficients (β values) in a voxel-wise fashion for each subject. In a second step (a random effects analysis), subject-specific contrasts of these β values were entered into one-sample t-tests, resulting in group-level statistical parametric maps of the T statistic (SPM). Based on the extant literature, we focused on a set of a priori regions of interest (ROIs) consisting of emotion regions including amygdala, hippocampus and anterior cingulate cortex, multimodal sensory regions including posterior superior temporal sulcus (pSTS) and the olfactory orbitofrontal cortex (OFColf), and unimodal sensory regions including striate, extrastriate and inferotemporal cortices for vision, and anterior and posterior piriform cortices (APC/PPC) for olfaction. Effects in ROIs were corrected for multiple comparisons across small volumes of interest (SVC; p < .05 FWE).

Anatomical masks for amygdala, hippocampus, anterior cingulate cortex, APC and PPC were assembled in MRIcro (Rorden and Brett, 2000) and drawn on the mean structural T1 image, with reference to a human brain atlas (Mai et al., 1997), while anatomical masks for striate, extrastriate and inferotemporal cortices were created using Wake Forest University’s PickAtlas toolbox for MATLAB (Maldjian et al., 2003). For the STS and OFC, due to their relatively large sizes and less demarcated borders, masks were created using 6-mm spheres centered on peak coordinates derived from two review papers: coordinates for the STS (left, −54, −51, 12 and right, 51, −42, 12) were extracted from a review on multisensory integration of emotion stimuli (Ethofer et al., 2006b), while coordinates for the OFC were extracted based on the putative olfactory OFC (left, −22, 32, −16 and right, 24, 36, −12; Gottfried and Zald, 2005). All coordinates reported correspond to Montreal Neurological Institute (MNI) space.

Two primary sets of contrasts were tested. Set 1 (general integration effect): 1a) a general additive effect contrasting congruent negative-negative pairing against incongruent negative-neutral pairing [ONegVNeg − (ONegVNeut + ONeutVNeg)/2] and 1b) a superadditive, interaction effect: (ONegVNeg − ONeutVNeut) − [(ONegVNeut − ONeutVNeut) + (ONeutVNeg − ONeutVNeut)]. Essentially, differential response between each negative condition (congruent or incongruent) and the congruent (pure) neutral (ONeutVNeut) condition was first obtained, reflecting negative-specific responses after responses to general (neutral) bimodal sensory stimulation were removed. We then contrasted the differential terms: differential response to negative emotion in both faces and odors minus the sum of differential responses to negative emotion in faces alone and to negative emotion in odors alone. Note: given the different criteria used in the neuroimaging research of multisensory integration to approach the challenge in selecting statistical criteria for fMRI assessment of the effect (Beauchamp, 2005; Goebel and van Atteveldt, 2009), we included both additive and superadditive effects here to demonstrate the integration effect. Nevertheless, as a voxel contains nearly a million neurons (cf. Logothetis, 2008) including both superadditive and subadditive neurons in comparable numbers and spatially intermixed, these terms are unlikely to reflect true forms of multisensory integration at the neuronal level as applied in electrophysiology research (Beauchamp et al., 2005; Laurienti et al., 2005).

Set 2—Visual or olfactory-related gain via OV integration (Dolan et al., 2001): 2a) Visual perceptual gain: visual negative stimuli accompanied by olfactory negative stimuli minus visual negative stimuli accompanied by olfactory neutral stimuli (ONegVNeg− ONeutVNeg); and 2b) Olfactory perceptual gain: olfactory negative stimuli accompanied by visual negative stimuli minus olfactory negative stimuli accompanied by visual neutral stimuli (ONegVNeg−ONegVNeut). As the congruent negative-negative relative to the incongruent negative-neutral trials contained extra negative input from the other sensory channel, we applied exclusion masks in these two contrasts to rule out the contribution of this extra singular negative input to response enhancement (2a: ONegVNeut − ONeutVNeut; 2b: ONeutVNeg − ONeutVNeut; p <.05 uncorrected). Furthermore, to assess the rule of inverse effectiveness in perceptual gain, we further regressed these two contrasts on the differential unpleasantness ratings. Lastly, we regressed the contrasts on the OVI scores to examine coupling between neural gain and emotion detection improvement via OV integration, which would highlight the brain-behavior association in emotion integration. In all the above contrasts, we masked out voxels in which either of the incongruent conditions failed to demonstrate an increase in signal, to remove “unisensory deactivation” that could result in spurious integration-related response increase (Beauchamp, 2005).
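The four contrasts in Sets 1 and 2 can be written as weight vectors over the four experimental conditions, as in the following sketch. The variable and function names are hypothetical, the blank box/air condition is left out because none of these contrasts involves it, and the exclusion masks are not represented. Note that expanding the superadditive expression gives ONegVNeg − ONegVNeut − ONeutVNeg + ONeutVNeut, i.e., the interaction term of the 2-by-2 design.

```python
# Condition order assumed for the weight vectors below.
CONDITIONS = ["ONegVNeg", "ONegVNeut", "ONeutVNeg", "ONeutVNeut"]

CONTRASTS = {
    # 1a) ONegVNeg - (ONegVNeut + ONeutVNeg) / 2
    "additive": [1.0, -0.5, -0.5, 0.0],
    # 1b) (ONegVNeg - ONeutVNeut) - [(ONegVNeut - ONeutVNeut) + (ONeutVNeg - ONeutVNeut)]
    "superadditive": [1.0, -1.0, -1.0, 1.0],
    # 2a) ONegVNeg - ONeutVNeg
    "visual_gain": [1.0, 0.0, -1.0, 0.0],
    # 2b) ONegVNeg - ONegVNeut
    "olfactory_gain": [1.0, -1.0, 0.0, 0.0],
}

def contrast_value(betas, weights):
    """Weighted sum of condition betas (dict keyed by condition name)."""
    return sum(w * betas[name] for name, w in zip(CONDITIONS, weights))

# Toy beta values for one voxel, for illustration only.
toy_betas = {"ONegVNeg": 4.0, "ONegVNeut": 2.0, "ONeutVNeg": 1.5, "ONeutVNeut": 1.0}
toy_superadditive = contrast_value(toy_betas, CONTRASTS["superadditive"])
```

Each weight vector sums to zero, so the contrasts are insensitive to any response component shared by all four conditions.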

Notably, our design of paired (congruent or incongruent) stimulation helped to circumvent the challenge in selecting statistical criteria for fMRI assessment of multisensory integration, due to the inadequate spatial resolution of fMRI for isolating multimodal versus unimodal neurons (Beauchamp, 2005; Goebel and van Atteveldt, 2009; Watson et al., 2014). Specifically, as adopted by several prior studies (Dolan et al., 2001; Ethofer et al., 2006a; Seubert et al., 2010; Forscher and Li, 2012), the contrasts between congruent (negative-negative) versus incongruent (negative-neutral) pairings here overcame the criterion predicament by assuming that “a distinction between congruent and incongruent cross-modal stimulus pairs cannot be established unless the unimodal inputs have been integrated successfully” (Goebel and van Atteveldt, 2009).

Effective Connectivity Analysis–Dynamic Causal Modeling (DCM)

DCM treats the brain as a deterministic dynamic (input-state-output) system, where input (i.e., experimental stimuli) perturbs the state of the system (i.e., a neural network), changing neuronal activity, which in turn causes changes in regional hemodynamic responses (output) measured with fMRI (Friston et al., 2003). Based on the known input and measured output, DCM models changes in the state of a given neural network as a function of the experimental manipulation. In particular, DCM generates intrinsic parameters characterizing effective connectivity among regions in the network. Moreover, DCM estimates modulatory parameters specifying changes in connectivity among the regions due to experimental manipulation. These modulatory parameters would thus provide further mechanistic insights into OV integration by revealing effective connectivity (coupling) increase in the presence of congruent versus incongruent negative input.
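For reference, the neuronal state equation of bilinear DCM (Friston et al., 2003) can be written as below. The symbols follow the conventional notation of that framework and are not notation used elsewhere in this text: $z$ is the vector of neuronal states (one per region), $u$ the vector of experimental inputs, $A$ the intrinsic connectivity, $B^{(j)}$ the modulation of connectivity by input $u_j$, and $C$ the driving-input weights.

```latex
\[
\dot{z} = \Bigl( A + \sum_{j} u_j \, B^{(j)} \Bigr) z + C u
\]
```

In these terms, the intrinsic parameters correspond to entries of $A$ and the modulatory parameters to entries of $B^{(j)}$, so a connectivity increase under congruent versus incongruent negative input appears as a difference between the corresponding $B^{(j)}$ entries.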

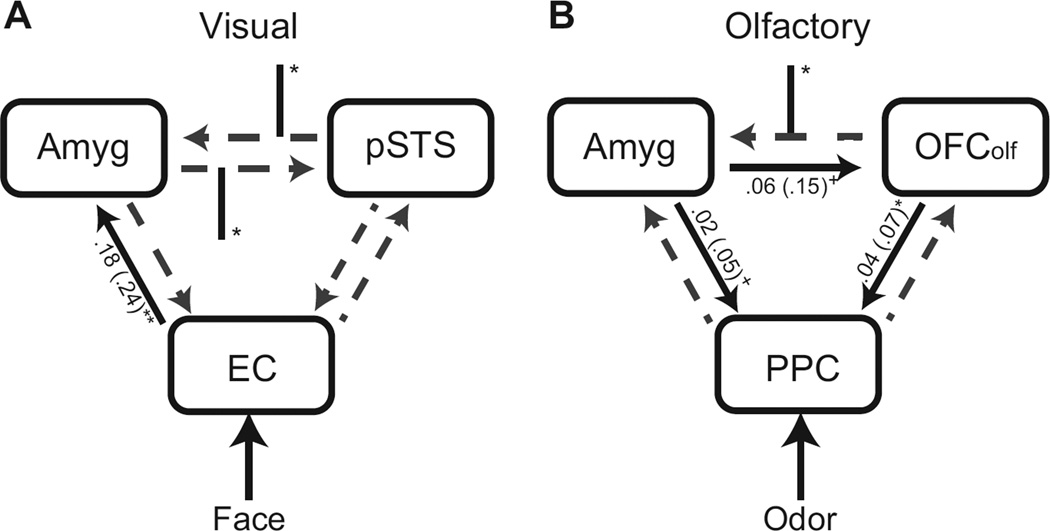

Motivated by results from the fMRI contrasts (see below), we specified a visual and an olfactory network, each comprising an emotion convergence area (i.e., amygdala), a multisensory area (pSTS for vision, OFColf for olfaction), and a unisensory area (extrastriate cortex/EC for vision, PPC for olfaction). All regions in the visual network were in the right hemisphere because the visual ROIs isolated in the fMRI analysis were right-sided, whereas all regions in the olfactory network were in the left hemisphere because the olfactory ROIs isolated in the fMRI analysis were left-sided. Although this opposite lateralization of the two sensory systems is intriguing, it maps closely onto the previous literature (see the Discussion for details).

To explore connectivity in the networks, we included bidirectional intrinsic connections between all three regions in each network model, with all five experimental conditions acting as modulators on each connection in both directions. Driving (visual and olfactory) inputs were entered to basic, unimodal sensory areas (i.e., EC for vision and PPC for olfaction). We evaluated the intrinsic connectivity of each path using simple t-tests. We also examined the modulatory effects of OV integration on each path using paired t-tests between congruent and incongruent negative conditions. Search spaces (volumes of interest/VOIs) were defined based on the corresponding contrasts. For each subject and each VOI, a peak voxel within 6 mm of the group maximum and within the ROI was first identified. The VOI was then defined by including the 40 voxels with strongest effects given the specific contrast within a 5-mm-radius sphere of the peak voxel (Stephan et al., 2010).

Results

Behavioral Data

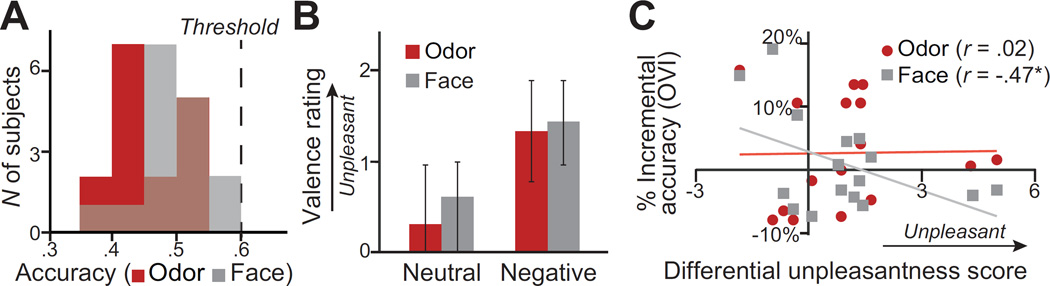

As indicated in the histograms in Figure 2A, no subject’s accuracy (for either face or odor judgments) exceeded the 95% cutoff (one-tailed; .57) of chance performance (.50), indicating subthreshold emotion perception in all subjects. Moreover, group-level accuracy did not exceed the chance level, for either odors [M(SD) = .46(.06)] or faces [.51(.05)], p’s>.13. Furthermore, group-level valence ratings showed that negative faces and odors were rated as somewhat more unpleasant than their neutral counterparts but failed to reach the statistical level of significance (p’s > .06; Figure 2B). Together, these results confirmed the minimal, subthreshold level of negative emotion in the stimuli. Notably, we also excluded possible trigeminal confounds based on equivalent pungency and intensity ratings between the negative and neutral odors, t’s<1.23, p’s>.24.

Figure 2.

Behavioral effects of OV emotion integration. A) Histograms for both visual and olfactory emotion detection accuracy (hits +correct rejections), indicating that none of the subjects performed significantly above chance in either visual or olfactory decisions. Visual accuracy (gray) is overlaid on olfactory accuracy (red); brown color reflects the overlap between the two distributions. B) Valence (unpleasantness) ratings at the group level for faces and odors. 0 = neutral. C) Scatterplots of OVI scores (% incremental accuracy) for odor (red dot) and face (grey square) emotion detection against unimodal emotion perception (indexed by differential unpleasantness scores: negative stimuli − neutral stimuli). Increases in detecting facial negative emotion with congruent olfactory negative input were more evident in individuals with lower perception of unimodal negative cues, as substantiated by a negative correlation between the two variables (r = −.47).* = p< .05 (one-tailed).

Odor OVI scores indicated a marginally significant improvement in olfactory negative emotion detection via OV integration, t(15) = 1.34, p=.1 one-tailed. Although face OVI scores failed to show a simple effect of OV integration (p>.1), they correlated negatively with differential unpleasantness scores (for negative vs. neutral stimuli): greater improvement appeared in those with lower levels of unimodal emotion perception, r = −.47, p = .03 one-tailed (Figure 2C). Therefore, the (marginally significant) improvement in odor emotion detection and the negative correlation in face emotion detection, especially considering the minimal input of negative cues, highlighted the principle of inverse effectiveness in OV emotion integration.

Neuroimaging Data

General OV emotion integration

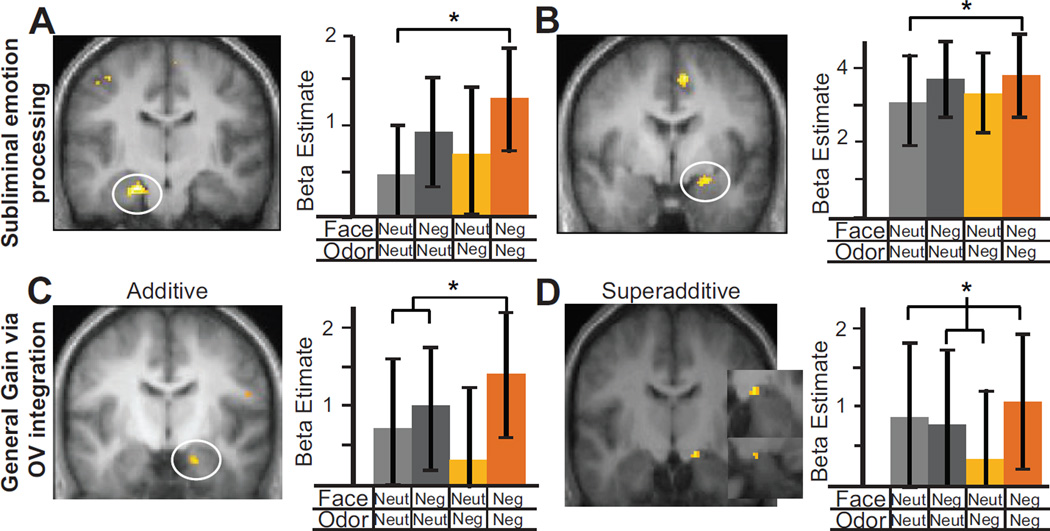

We first validated our experimental procedure using the contrast of Odor (collapsed across all four experimental conditions) vs. Air. We observed reliable activity in the anterior piriform cortex (−30, 6, −18; Z = 2.97, p < .05 SVC) and posterior piriform cortex (−22, 2, −12; Z = 3.87, p < .005 SVC) in response to odor presentation. Furthermore, despite the minimal, subthreshold level of negative emotion, we found that both amygdala and hippocampus showed enhanced responses to negative-negative stimuli versus neutral-neutral stimuli (ONegVNeg − ONeutVNeut): left hippocampus (−22, −18, −22; Z = 4.08, p < .01 SVC; Figure 3A) and right amygdala (24, −2, −12; Z = 3.05, p = .08 SVC; Figure 3B). These effects thus demonstrated subliminal processing of minimal negative signals in the limbic system, validating our subthreshold emotion presentation. Importantly, in support of OV emotion integration, we observed both an additive [ONegVNeg − (ONegVNeut + ONeutVNeg)/2] and a superadditive, interaction effect [(ONegVNeg − ONeutVNeut) − ((ONegVNeut − ONeutVNeut) + (ONeutVNeg − ONeutVNeut))] in the right amygdala: congruent negative OV input evoked greater responses than incongruent input (additive: 18, −10, −22; Z = 3.74, p = .01 SVC and superadditive: 16, −12, −12; Z = 3.08, p = .06 SVC; Figure 3C–D). Furthermore, the right posterior STS (pSTS) also exhibited a general additive effect (52, −48, 12; Z = 4.43, p < .001 SVC).

Figure 3.

Enhanced response to subthreshold negative emotion (congruent negative vs. congruent neutral; ONegVNeg − ONeutVNeut) in the hippocampus (−22, −18, −22) (A) and amygdala (24, −2, −12) (B), demonstrating subliminal processing of minimal aversive signals in the limbic system. General response enhancement in congruent vs. incongruent negative conditions, reflecting additive [ONegVNeg− (ONegVNeut + ONeutVNeg)/2] and superadditive [(ONegVNeg −ONeutVNeut)−([ONegVNeut −ONeutVNeut] + [ONeutVNeg −ONeutVNeut])] effects of OV emotion integration, was observed in the right amygdala (additive: 18, −10, −22 (C); superadditive: 16, −12, −12 (D)). Insets i and ii provide zoom-in coronal and sagittal views of the superadditive cluster, respectively. Group statistical parametric maps (SPMs) were superimposed on the group mean T1 image (display threshold p< .005 uncorrected).

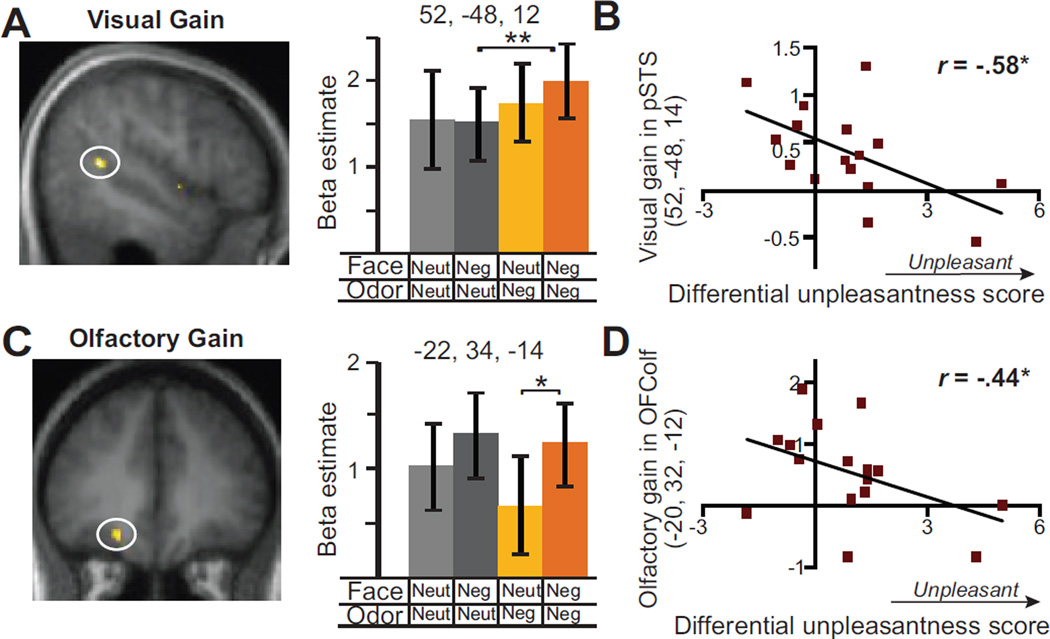

Perceptual gain via OV emotion integration

Visual Perceptual Gain

We next interrogated gain in visual and olfactory analysis of negative emotion via OV emotion integration. Concerning visual gain, we performed the contrast of ONegVNeg− ONeutVNeg (negative faces accompanied by negative odors vs. the same negative faces accompanied by neutral odors), exclusively masked by ONegVNeut − ONeutVNeut (p< .05 uncorrected) to rule out the contribution of singular olfactory negative emotion. The right pSTS cluster emerged again in this contrast with stronger response in the congruent emotion condition (52, −48, 12; Z = 3.84, p<.01 SVC; Figure 4A). Furthermore, neither amygdala nor any olfactory areas emerged from this analysis (p < .05 uncorrected).

Figure 4.

Perceptual gain via OV emotion integration. A–B) Visual perceptual gain: A) SPM and bar graph illustrate enhanced response to facial negative emotion in congruent vs. incongruent pairing (ONegVNeg − ONeutVNeg) in right pSTS. B) Inverse correlation between this response gain in right pSTS and unimodal perception of negative emotion (indexed by differential unpleasantness scores). C–D) Olfactory perceptual gain: C) Group SPM and bar graph illustrate enhanced response to olfactory negative emotion in congruent vs. incongruent pairing (ONegVNeg − ONegVNeut) in left OFColf; D) Inverse correlation between response gain in the left OFColf and unimodal perception of negative emotion. These results thus revealed the neural substrates of visual and olfactory perceptual gain via OV integration, while highlighting the principle of inverse effectiveness in this process. SPMs were superimposed on the group mean T1 image (display threshold p< .005 uncorrected). * = p< .05, ** = p< .01.

To elucidate how the rule of inverse effectiveness operates in visual perceptual gain via OV integration, we regressed this main effect on differential unpleasantness scores (indexing perception of unimodal negative emotion). To circumvent unrealistic correlations forced by rigid correction for multiple voxel-wise comparisons (Vul et al., 2009), especially given the weak, subthreshold negative input, we performed a conjunction analysis between the main contrast above (p< .01 uncorrected) and the regression analysis (p< .05 uncorrected; joint threshold of p< .0005 uncorrected). In support of the rule of inverse effectiveness, the same right pSTS cluster isolated in the main contrast emerged from this analysis, with response enhancement to congruent (vs. incongruent; face only) negative stimuli that was also negatively correlated with differential unpleasantness scores (52, −48, 14; Zmain effect = 2.73, p < .005; Zregression = 2.35, r = −.58, p < .01; Figure 4B).
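The conjunction logic can be sketched as follows. This is a minimal illustration rather than the authors' pipeline: the function names and the example voxel-wise p values are hypothetical, and treating the joint threshold as the product of the two thresholds assumes that the two tests are independent.

```python
def joint_threshold(alpha_main=0.01, alpha_regression=0.05):
    """Joint false-positive rate of two tests, assuming they are independent."""
    return alpha_main * alpha_regression

def conjunction(p_main, p_regression, alpha_main=0.01, alpha_regression=0.05):
    """Flag voxels that pass both the main-contrast and the regression threshold."""
    return [pm < alpha_main and pr < alpha_regression
            for pm, pr in zip(p_main, p_regression)]

# Hypothetical voxel-wise p values for three voxels.
p_main_example = [0.005, 0.005, 0.020]
p_regression_example = [0.030, 0.200, 0.010]
surviving = conjunction(p_main_example, p_regression_example)
```

Only the first example voxel survives, because it is the only one below both thresholds; with thresholds of .01 and .05 the product is .0005, matching the joint threshold stated above.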

Olfactory Perceptual Gain

A corresponding set of analyses was performed concerning olfactory perceptual gain via OV emotion integration (ONegVNeg−ONegVNeut; negative odors accompanied by negative faces vs. the same negative odors accompanied by neutral faces), exclusively masked by ONeutVNeg − ONeutVNeut to rule out the contribution of singular visual negative input. The contrast isolated the left OFColf, exhibiting augmented response to congruent negative input (−22, 34, −14; Z = 3.15, p< .05 SVC; Figure 4C). Furthermore, the amygdala (18, −10, −20, Z = 3.36, p < .05 SVC) also emerged in this contrast.

A similar regression analysis testing the rule of inverse effectiveness in olfactory gain via OV integration isolated the left OFColf, with both a response enhancement to congruent negative stimuli and a negative correlation between the response enhancement and differential unpleasantness scores (−20, 32, −12; Zmain effect= 2.43, p=.01; Zregression = 1.70, r = −.44, p < .05; Figure 4D).

Brain-behavior association in OV emotion integration

Finally, we performed another set of regression analyses to assess direct coupling between neural response gain and emotion detection improvement via OV integration, which would further elucidate the meaning of the neural effects above. Using a similar conjunction analysis as above, we isolated regions exhibiting both a main effect of OV integration (p< .01 uncorrected) and a brain-behavior association (p< .05 uncorrected; joint threshold of p< .0005 uncorrected). Concerning visual response gain (ONegVNeg − ONeutVNeg) on face OVI scores, we identified the same pSTS cluster isolated in the main contrast, where integration-related response enhancement positively correlated with face OVI scores (52, −46, 12; Zmain effect= 2.62, p< .005; Zregression = 2.55, r = .62, p =.005). Concerning olfactory response gain (ONegVNeg − ONegVNeut) on odor OVI scores, we identified the same amygdala cluster isolated in the main contrast, where integration-related response enhancement positively correlated with odor OVI scores (20, −10, −22; Zmain effect= 2.69, p< .005; Zregression= 1.66, r = .43, p<.05).

Neural network underlying OV emotion integration

To attain further mechanistic insights into OV emotion integration, we next conducted effective connectivity analysis using DCM, assessing how the limbic emotion system (mainly, the amygdala) and the multimodal and unimodal sensory cortices interacted during OV emotion integration (Figure 5). For the visual network, one-sample t-tests indicated strong intrinsic connectivity from EC to amygdala [t(15) = 2.96, p< .01]. Paired t-tests further revealed greater connectivity in the path between amygdala and STS in both directions in the presence of congruent relative to incongruent negative input (in faces only; ONegVNeg − ONeutVNeg) [t(15)’s > 2.60, p’s < .05]. For the olfactory network, similar one-sample t-tests showed strong intrinsic connectivity from OFColf to PPC [t(15) = 2.26, p< .05] and marginally significant connections from amygdala to PPC [t(15) = 1.91, p = .08] and from amygdala to OFColf [t(15) = 1.77, p = .1]. Importantly, congruent relative to incongruent negative input (in odors only; ONegVNeg−ONegVNeut) marginally augmented the projection from OFColf to amygdala [t(15) = 1.98, p< .07]. Lastly, we did not find evidence for altered connectivity involving unisensory cortices in either the visual or olfactory network (p’s > .19).

Figure 5.

Effective connectivity results for visual (A) and olfactory (B) systems. Black solid lines and gray dashed lines represent significant intrinsic connections (with parameter estimates alongside) and theoretical but nonsignificant intrinsic connections, respectively. Black intercepting lines represent significant modulatory effects of congruent (vs. incongruent) negative emotion for the corresponding modality (ONegVNeg − ONeutVNeg for the visual model; ONegVNeg − ONegVNeut for the olfactory model). These results demonstrate that congruent visual and olfactory negative input strengthens the connectivity between the amygdala and heteromodal convergence zones, suggesting a predominantly top-down mechanism underlying OV emotion integration. + = p<.10, * = p< .05, ** = p< .01.

Respiration data

We examined respiratory parameters acquired throughout the experiment. Sniff inspiratory volume, peak amplitude and latency did not differ across the four conditions (p’s>.05), thereby excluding sniff-related confounds in the reported effects.

Discussion

The past decade has seen a rapid growth in the research on multisensory integration of biologically salient stimuli, including emotion cues (Maurage and Campanella, 2013). However, mechanisms underlying multisensory emotion integration remain elusive, confounded further by controversial accounts for this process. Moreover, the chemical senses, bearing close relevance to the processing of emotion, have largely been overlooked in this literature. Here we demonstrate that the brain binds an emotionally-relevant chemical sense (olfaction) and a perceptually-dominant physical sense (vision) to enhance perception of subtle, implicit aversive signals. In mediating OV emotion integration, multimodal sensory and emotion areas—pSTS, OFColf and amygdala—exhibit both response enhancement and strengthened functional coupling among them. These neural data provide support to a top-down account for emotion integration between olfaction and vision. Accentuating the rule of inverse effectiveness in multisensory emotion integration, this synergy transpires with minimal negative input, especially in people with weak perception of the unimodal negative information, facilitating perception of biologically salient information in impoverished environments.

Concerning primarily the “physical senses”, prior evidence has implicated multisensory convergence areas (especially pSTS) and multimodal limbic emotion areas (primarily the amygdala) in multisensory emotion integration (cf. Ethofer et al., 2006b; Maurage and Campanella, 2013). Pertaining primarily to flavor processing, previous data also elucidate the participation of limbic/paralimbic areas (e.g., OFC and amygdala) in integration between the chemical senses (gustation and olfaction; cf. Small and Prescott, 2005). However, neural evidence for integration between physical and chemical senses remains scarce, especially in relation to emotion (cf. Maurage and Campanella, 2013). Therefore, current findings would confer new insights into multisensory emotion integration, especially considering the unique anatomy of the olfactory system and its special relation to emotion.

The olfactory neuroanatomy is monosynaptically connected with limbic emotion areas, promoting integrative emotion processing between olfaction and other modalities (Carmichael et al., 1994; Zelano and Sobel, 2005; Gottfried, 2010). However, the olfactory system is anatomically rather segregated from the physical sensory areas. This contrasts with the close connections shared by the physical areas, via reciprocal fiber tracts and multisensory transition zones, enabling integration across these senses (e.g., pSTS and superior colliculus; Beauchamp et al., 2004; Kaas and Collins, 2004; Wallace et al., 2004; Driver and Noesselt, 2008; based on human and nonhuman primate data). Furthermore, even input to the amygdala is segregated, with olfactory input arriving at the medial nucleus and all other sensory inputs at the lateral/basal nucleus (Amaral et al., 1992; Carmichael et al., 1994). Lastly, the critical thalamic relay (from peripheral receptors to sensory cortex) exists in all senses except olfaction (Carmichael et al., 1994), precluding upstream, low-level convergence between olfaction and other senses. Despite these special features of the olfactory system, current data confirm the participation of key multimodal areas (e.g., amygdala and pSTS) implicated in emotion integration among the physical senses, accentuating some general mechanisms involved in multisensory integration (Pourtois et al., 2005; Ethofer et al., 2006b; Hagan et al., 2009; Kreifelts et al., 2009; Muller et al., 2011; Watson et al., 2014).

That said, current data also highlight unique characteristics of olfaction-related multisensory integration. While gain in visual emotion perception via OV integration was only observed in the pSTS (part of the associative visual cortex), gain in olfactory emotion perception was seen not only in olfactory cortex (OFColf) but also in the amygdala, highlighting the unique intimacy between olfaction and emotion. Furthermore, the two sensory systems show opposite lateralization of perceptual gain. The substrate of visual gain (pSTS) is localized in the right hemisphere, consistent with extant multisensory integration literature (especially with socially relevant stimuli; e.g., Kreifelts et al., 2009; Werner and Noppeney, 2010; Ethofer et al., 2013; Watson et al., 2014) and right-hemisphere dominance in face/facial-expression processing (Adolphs et al., 1996; Borod et al., 1998; Forscher and Li, 2012). By contrast, the substrate of olfactory gain (OFColf) is left-lateralized, aligning with prior reports of facilitated odor quality encoding in left OFC with concurrent visual input (Gottfried and Dolan, 2003; Osterbauer et al., 2005). Given the subthreshold nature of negative emotion, this left lateralization also concurs with our prior report of the left OFColf supporting unconscious olfactory processing (“blind smell”; Li et al., 2010), raising the possibility of left-hemisphere dominance in unconscious olfactory processing and right-hemisphere dominance in conscious olfactory processing (e.g., odor detection, identification; Jones-Gotman and Zatorre, 1988, 1993).

Further mechanistic insights into OV emotion integration arise from the DCM analysis, revealing strengthened functional coupling among the implicated regions with congruent negative stimulus presentation. In the visual network, congruent negative inputs enhance connectivity between pSTS and amygdala in both directions, highlighting active interplay between emotion and vision systems in crossmodal emotion integration. This up-modulation by OV input could be especially critical as the intrinsic connectivity between these two regions is statistically weak. In the olfactory network, the amygdala→OFColf path is intrinsically strong whereas the opposite projection (OFColf→amygdala) is intrinsically weak. Nevertheless, the latter is augmented with negative input from both senses, resulting in strong bidirectional amygdala-OFColf connectivity. Conceivably, efficient interaction between olfactory and limbic emotion systems provides the critical neural basis for crossmodal facilitation in olfactory perception of negative emotion.

Interestingly, the unisensory areas do not exhibit clear response enhancement to congruent (vs. incongruent) negative input (with PPC and EC showing possible traces of enhancement only at very lenient thresholds, p<.01 uncorrected). Furthermore, these lower-level unisensory areas do not show changes in functional coupling with other regions during congruent emotion presentation. Taken together, regional response enhancement and strengthening of region-to-region connectivity in higher-level multisensory areas (amygdala, OFColf and pSTS) converge to lend support to a top-down mechanism of OV emotion integration: bimodal negative emotion merges first in associative multisensory areas via inter-region interactions, subsequently influencing low-level unisensory responses via reentrant feedback projections.

Top-down accounts represent a conventional view in multisensory integration literature, taking convergence zones or associative high-order regions as the seat of integration (Calvert et al., 2004; Macaluso and Driver, 2005). However, accounts of bottom-up or joint top-down/bottom-up mechanisms have gained increasing support. It is noted that the putative unimodal sensory cortex may be intrinsically multimodal through early subcortical convergence (Ettlinger and Wilson, 1990), synchronized neural oscillation (Senkowski et al., 2007), and/or direct cortico-cortical connections (Falchier et al., 2002; Clavagnier et al., 2004; Small et al., 2013; based on monkey and rodent data). As such, unisensory cortices are capable of integrating multimodal inputs, driving high-level synthesis via the sensory feedforward progression (Foxe and Schroeder, 2005; Ghazanfar and Schroeder, 2006; Werner and Noppeney, 2010; based on human and nonhuman primate data). Nevertheless, previous evidence of bottom-up integration almost exclusively concerns vision and audition, whose strong anatomical and functional associations would enable low-level crossmodal cortico-cortical interactions. Conversely, the relative anatomical segregation between olfactory and visual systems would discourage interaction across low-level sensory regions, such that OV emotion integration would engage higher-order processes mediated by higher-level multimodal brain areas. Lastly, this top-down account is consistent with the notion that olfaction is highly susceptible to top-down, cognitive/conceptual influences (Dalton, 2002). For instance, attention (Zelano and Sobel, 2005), expectation (Zelano et al., 2011) and semantic labeling can drastically change odor perception and affective evaluation (Herz, 2003; de Araujo et al., 2005; Djordjevic et al., 2008; Olofsson et al., 2014).

In support of the principle of inverse effectiveness, the brain regions supporting visual and olfactory gain of emotion perception (pSTS and OFColf, respectively) also exhibit a negative correlation between response enhancement and the level of unimodal emotion perception. Importantly, the behavioral consequence of OV emotion integration also follows a negative relationship with the level of unimodal emotion perception, albeit only in the visual modality. This negative association coincides with previous findings from our lab, where shifts in face likability judgments by a preceding subtle odor correlated negatively with the level of odor detection (Li et al., 2007). Concerning olfactory emotion perception, in spite of the very weak negative input, we still observed a marginal OV integration effect. However, the improvement did not vary with individual differences in unimodal emotion perception. These behavioral findings are consistent with the notion of general susceptibility of olfaction to external influences, which would require more sophisticated measures to capture fine individual differences. Finally, we recognize that effects of subthreshold processing are by definition elusive to behavioral observation, often requiring very sensitive behavioral tasks tapping into implicit processes to uncover, such as affective priming tasks and implicit association tasks. Accordingly, our behavioral effects were significant or marginally significant only with one-tailed tests. That said, these one-tailed tests were based on strong directional a priori hypotheses, and the effects were of medium to large sizes (albeit power was restricted by the small sample size typical for fMRI studies). Moreover, the strong correlation between behavioral and neural effects of OV emotion integration further corroborated these behavioral effects while confirming the meaning of the neural effects. 
Overall, findings here provide evidence for emotion integration between the senses of smell and sight while informing its underlying mechanisms. In light of the subtle emotion input and the elusive nature of subthreshold emotion processing, we encourage future investigation into this topic using implicit tasks and large samples.

It is worth noting that the application of subthreshold aversive cues here helps to exclude a critical confound in multisensory integration research: associative cognitive processes activated by singular sensory input could give rise to seemingly crossmodal response enhancement (Driver and Noesselt, 2008). For instance, perceiving an object in one modality could elicit imagery or attention/expectation of related cues in another modality, resulting in improved perception (Driver and Noesselt, 2008). In fact, several multisensory studies in humans and monkeys have reported heightened activity in the prefrontal cortex (Sugihara et al., 2006; Werner and Noppeney, 2010; Klasen et al., 2011), which could reflect such associative cognitive processes. As subthreshold presentation prevents these largely conscious cognitive processes, the evidence here provides direct support to the multisensory integration literature. Finally, the subthreshold presentation of negative emotion highlights the automatic, unconscious nature of crossmodal synergy in emotion processing (Vrooman et al., 2001), promoting efficient perception of biologically salient objects as we navigate through the often ambiguous, sometimes confusing biological landscape in everyday life.

Highlights.

Emotion integration across a physical (visual) and a chemical (olfactory) sense

Multisensory emotion integration abides by the rule of “inverse effectiveness”

Common and distinct mechanisms in visual vs. olfactory emotion perception

Neural data favor a top-down account for olfactory-visual emotion integration

Acknowledgements

We thank Elizabeth Krusemark and Jaryd Hiser for assistance with data collection and Guangjian Zhang for helpful input on statistical analysis. This work was supported by the National Institute of Mental Health (grant numbers R01MH093413 to W.L. and T32MH018931 to L.R.N.).

Footnotes

The authors declare no competing financial interests.

References

- Adolphs R, Damasio H, Tranel D, Damasio AR. Cortical systems for the recognition of emotion in facial expressions. J Neurosci. 1996;16:7678–7687. doi: 10.1523/JNEUROSCI.16-23-07678.1996.

- Amaral DG, Price JL, Pitkanen A, Carmichael ST. Anatomical Organization of the Primate Amygdaloid Complex. In: Aggleton JP, editor. The Amygdala: Neurobiological Aspects of Emotion, Memory, and Mental Dysfunction. New York, NY: Wiley-Liss; 1992. pp. 1–66.

- Ashburner J. A fast diffeomorphic image registration algorithm. Neuroimage. 2007;38:95–113. doi: 10.1016/j.neuroimage.2007.07.007.

- Beauchamp MS, Argall BD, Bodurka J, Duyn JH, Martin A. Unraveling multisensory integration: patchy organization within human STS multisensory cortex. Nat Neurosci. 2004;7:1190–1192. doi: 10.1038/nn1333.

- Beauchamp MS. Statistical Criteria in fMRI Studies of Multisensory Integration. Neuroinformatics. 2005;3:93–113. doi: 10.1385/NI:3:2:093.

- Borod JC, Cicero BA, Obler LK, Welkowitz J, Erhan HM, Santschi C, Grunwald IS, Agosti RM, Whalen JR. Right hemisphere emotional perception: evidence across multiple channels. Neuropsychology. 1998;12:446–458. doi: 10.1037//0894-4105.12.3.446.

- Cain WS. Odor Intensity: Mixtures and Masking. Chemical Senses. 1975;1:339–352.

- Calvert GA, Spence C, Stein BE, editors. The Handbook of Multisensory Processes. MIT Press; 2004.

- Carmichael ST, Clugnet MC, Price JL. Central olfactory connections in the macaque monkey. J Comp Neurol. 1994;346:403–434. doi: 10.1002/cne.903460306.

- Clavagnier S, Falchier A, Kennedy H. Long-distance feedback projections to area V1: implications for multisensory integration, spatial awareness, and visual consciousness. Cogn Affect Behav Neurosci. 2004;4:117–126. doi: 10.3758/cabn.4.2.117.

- Cohen MR, Maunsell JHR. A neuronal population measure of attention predicts behavioral performance on individual trials. J Neurosci. 2010;30:15241–15253. doi: 10.1523/JNEUROSCI.2171-10.2010.

- Cox RW. AFNI: software for analysis and visualization of functional magnetic resonance neuroimages. Comput Biomed Res. 1996;29:162–173. doi: 10.1006/cbmr.1996.0014.

- Dalton P. Olfaction. In: Yantis S, Pashler H, editors. Stevens' Handbook of Experimental Psychology, Vol. 1, Sensation and Perception. 3rd ed. New York (NY): John Wiley; 2002. pp. 691–746.

- de Araujo IE, Rolls ET, Velazco MI, Margot C, Cayeux I. Cognitive modulation of olfactory processing. Neuron. 2005;46:671–679. doi: 10.1016/j.neuron.2005.04.021.

- Demattè ML, Sanabria D, Spence C. Cross-modal associations between odors and colors. Chem Senses. 2006;31:531–538. doi: 10.1093/chemse/bjj057.

- Djordjevic J, Lundstrom JN, Clément F, Boyle JA, Pouliot S, Jones-Gotman M. A rose by any other name: would it smell as sweet? J Neurophysiol. 2008;99:386–393. doi: 10.1152/jn.00896.2007.

- Dolan RJ, Morris JS, de Gelder B. Crossmodal binding of fear in voice and face. Proc Natl Acad Sci U S A. 2001;98:10006–10010. doi: 10.1073/pnas.171288598.

- Driver J, Noesselt T. Multisensory interplay reveals crossmodal influences on “sensory-specific” brain regions, neural responses, and judgments. Neuron. 2008;57:11–23. doi: 10.1016/j.neuron.2007.12.013.

- Ethofer T, Anders S, Erb M, Droll C, Royen L, Saur R, Reiterer S, Grodd W, Wildgruber D. Impact of voice on emotional judgment of faces: an event-related fMRI study. Hum Brain Mapp. 2006a;27:707–714. doi: 10.1002/hbm.20212.

- Ethofer T, Pourtois G, Wildgruber D. Investigating audiovisual integration of emotional signals in the human brain. Prog Brain Res. 2006b;156:345–361. doi: 10.1016/S0079-6123(06)56019-4.

- Ethofer T, Bretscher J, Wiethoff S, Bisch J, Schlipf S, Wildgruber D, Kreifelts B. Functional responses and structural connections of cortical areas for processing faces and voices in the superior temporal sulcus. Neuroimage. 2013;76:45–56. doi: 10.1016/j.neuroimage.2013.02.064.

- Ettlinger G, Wilson WA. Cross-modal performance: behavioural processes, phylogenetic considerations and neural mechanisms. Behav Brain Res. 1990;40:169–192. doi: 10.1016/0166-4328(90)90075-p.

- Falchier A, Clavagnier S, Barone P, Kennedy H. Anatomical evidence of multimodal integration in primate striate cortex. J Neurosci. 2002;22:5749–5759. doi: 10.1523/JNEUROSCI.22-13-05749.2002.

- Forscher EC, Li W. Hemispheric asymmetry and visuo-olfactory integration in perceiving subthreshold (micro) fearful expressions. J Neurosci. 2012;32:2159–2165. doi: 10.1523/JNEUROSCI.5094-11.2012.

- Foxe JJ, Schroeder CE. The case for feedforward multisensory convergence during early cortical processing. Neuroreport. 2005;16:419–423. doi: 10.1097/00001756-200504040-00001.

- Friston KJ, Harrison L, Penny W. Dynamic causal modelling. Neuroimage. 2003;19:1273–1302. doi: 10.1016/s1053-8119(03)00202-7.

- Ghazanfar AA, Schroeder CE. Is neocortex essentially multisensory? Trends Cogn Sci. 2006;10:278–285. doi: 10.1016/j.tics.2006.04.008.

- Goebel R, van Atteveldt N. Multisensory functional magnetic resonance imaging: a future perspective. Exp Brain Res. 2009;198:153–164. doi: 10.1007/s00221-009-1881-7.

- Gottfried JA, Dolan RJ. The nose smells what the eye sees: crossmodal visual facilitation of human olfactory perception. Neuron. 2003;39:375–386. doi: 10.1016/s0896-6273(03)00392-1.

- Gottfried JA, Zald DH. On the scent of human olfactory orbitofrontal cortex: meta-analysis and comparison to non-human primates. Brain Res Brain Res Rev. 2005;50:287–304. doi: 10.1016/j.brainresrev.2005.08.004.

- Gottfried JA. Central mechanisms of odour object perception. Nat Rev Neurosci. 2010;11:628–641. doi: 10.1038/nrn2883.

- Hagan CC, Woods W, Johnson S, Calder AJ, Green GGR, Young AW. MEG demonstrates a supra-additive response to facial and vocal emotion in the right superior temporal sulcus. Proc Natl Acad Sci U S A. 2009;106:20010–20015. doi: 10.1073/pnas.0905792106.

- Hannula DE, Simons DJ, Cohen NJ. Imaging implicit perception: promise and pitfalls. Nat Rev Neurosci. 2005;6:247–255. doi: 10.1038/nrn1630.

- Herz RS. The effect of verbal context on olfactory perception. J Exp Psychol Gen. 2003;132:595. doi: 10.1037/0096-3445.132.4.595.

- Jones-Gotman M, Zatorre RJ. Olfactory identification deficits in patients with focal cerebral excision. Neuropsychologia. 1988;26:387–400. doi: 10.1016/0028-3932(88)90093-0.

- Jones-Gotman M, Zatorre RJ. Odor Recognition Memory in Humans: Role of Right Temporal and Orbitofrontal Regions. Brain Cogn. 1993;22:182–198. doi: 10.1006/brcg.1993.1033.

- Kaas JH, Collins CE. The Primate Visual System. Boca Raton, Florida: CRC Press; 2004.

- Kayser C, Petkov CI, Logothetis NK. Visual modulation of neurons in auditory cortex. Cerebral Cortex. 2008;18:1560–1574. doi: 10.1093/cercor/bhm187.

- Kemp-Wheeler SM, Hill AB. Semantic priming without awareness: Some methodological considerations and replications. Q J Exp Psychol. 1988;40:671–692. doi: 10.1080/14640748808402293.

- Klasen M, Kenworthy CA, Mathiak KA, Kircher TTJ, Mathiak K. Supramodal representation of emotions. J Neurosci. 2011;31:13635–13643. doi: 10.1523/JNEUROSCI.2833-11.2011.

- Kreifelts B, Ethofer T, Shiozawa T, Grodd W, Wildgruber D. Cerebral representation of non-verbal emotional perception: fMRI reveals audiovisual integration area between voice- and face-sensitive regions in the superior temporal sulcus. Neuropsychologia. 2009;47:3059–3066. doi: 10.1016/j.neuropsychologia.2009.07.001.

- Krusemark EA, Li W. Do all threats work the same way? Divergent effects of fear and disgust on sensory perception and attention. J Neurosci. 2011;31:3429–3434. doi: 10.1523/JNEUROSCI.4394-10.2011.

- Krusemark EA, Li W. Enhanced Olfactory Sensory Perception of Threat in Anxiety: An Event-Related fMRI Study. Chemosens Percept. 2012;5:37–45. doi: 10.1007/s12078-011-9111-7.

- Krusemark EA, Novak LR, Gitelman DR, Li W. When the sense of smell meets emotion: anxiety-state-dependent olfactory processing and neural circuitry adaptation. The Journal of Neuroscience. 2013;33:15324–15332. doi: 10.1523/JNEUROSCI.1835-13.2013.

- Laing DG, Willcox ME. Perception of components in binary odour mixtures. Chemical Senses. 1983;7:249–264.

- Laurienti PJ, Perrault TJ, Stanford TR, Wallace MT, Stein BE. On the use of superadditivity as a metric for characterizing multisensory integration in functional neuroimaging studies. Experimental Brain Research. 2005;166:289–297. doi: 10.1007/s00221-005-2370-2.

- Leppanen JM, Hietanen JK. Affect and face perception: odors modulate the recognition advantage of happy faces. Emotion. 2003;3:315–326. doi: 10.1037/1528-3542.3.4.315.

- Li W, Moallem I, Paller KA, Gottfried JA. Subliminal smells can guide social preferences. Psychol Sci. 2007;18:1044–1049. doi: 10.1111/j.1467-9280.2007.02023.x.

- Li W, Zinbarg RE, Boehm SG, Paller KA. Neural and behavioral evidence for affective priming from unconsciously perceived emotional facial expressions and the influence of trait anxiety. J Cogn Neurosci. 2008;20:95–107. doi: 10.1162/jocn.2008.20006.

- Li W, Lopez L, Osher J, Howard JD, Parrish TB, Gottfried JA. Right orbitofrontal cortex mediates conscious olfactory perception. Psychol Sci. 2010;21:1454–1463. doi: 10.1177/0956797610382121.

- Logothetis NK. What we can do and what we cannot do with fMRI. Nature. 2008;453:869–878. doi: 10.1038/nature06976.

- Lorig TS, Elmes DG, Zald DH, Pardo JV. A computer-controlled olfactometer for fMRI and electrophysiological studies of olfaction. Behav Res Methods Instrum Comput. 1999;31:370–375. doi: 10.3758/bf03207734.

- Lundqvist D, Flykt A, Oehman A. The Karolinska Directed Emotional Faces. 1998.

- Macaluso E, Driver J. Multisensory spatial interactions: a window onto functional integration in the human brain. Trends Neurosci. 2005;28:264–271. doi: 10.1016/j.tins.2005.03.008.

- Mai JK, Assheuer J, Paxinos G. Atlas of the Human Brain. San Diego, CA: Academic Press; 1997.

- Maldjian JA, Laurienti PJ, Kraft RA, Burdette JH. An automated method for neuroanatomic and cytoarchitectonic atlas-based interrogation of fMRI data sets. Neuroimage. 2003;19:1233–1239. doi: 10.1016/s1053-8119(03)00169-1.

- Maurage P, Campanella S. Experimental and clinical usefulness of crossmodal paradigms in psychiatry: an illustration from emotional processing in alcohol-dependence. Front Hum Neurosci. 2013;7:394. doi: 10.3389/fnhum.2013.00394.

- Mizutani N, Okamoto M, Yamaguchi Y, Kusakabe Y, Dan I, Yamanaka T. Package images modulate flavor perception for orange juice. Food Qual Prefer. 2010;21:867–872. [Google Scholar]

- Müller VI, Habel U, Derntl B, Schneider F, Zilles K, Turetsky BI, Eickhoff SB. Incongruence effects in crossmodal emotional integration. Neuroimage. 2011;54:2257–2266. doi: 10.1016/j.neuroimage.2010.10.047. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Olofsson JK, Hurley RS, Bowman NE, Bao X, Mesulam MM, Gottfried JA. A designated odor–language integration system in the human brain. J Neurosci. 2014;34:14864–14873. doi: 10.1523/JNEUROSCI.2247-14.2014. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Osterbauer RA, Matthews PM, Jenkinson M, Beckmann CF, Hansen PC, Calvert GA. Color of scents: chromatic stimuli modulate odor responses in the human brain. J Neurophysiol. 2005;93:3434–3441. doi: 10.1152/jn.00555.2004. [DOI] [PubMed] [Google Scholar]

- Pourtois G, de Gelder B, Bol A, Crommelinck M. Perception of facial expressions and voices and of their combination in the human brain. Cortex. 2005;41:49–59. doi: 10.1016/s0010-9452(08)70177-1. [DOI] [PubMed] [Google Scholar]

- Rolls ET. The functions of the orbitofrontal cortex. Brain Cogn. 2004;55:11–29. doi: 10.1016/S0278-2626(03)00277-X. [DOI] [PubMed] [Google Scholar]

- Rorden C, Brett M. Stereotaxic display of brain lesions. Behav Neurol. 2000;12:191–200. doi: 10.1155/2000/421719. [DOI] [PubMed] [Google Scholar]

- Sakai N, Imada S, Saito S, Kobayakawa T, Deguchi Y. The effect of visual images on perception of odors. Chem Senses. 2005;30:i244–i245. doi: 10.1093/chemse/bjh205. [DOI] [PubMed] [Google Scholar]

- Schoenbaum G, Gottfried JA, Murray EA, Ramus SJ, editors. Linking Affect to Action: Critical Contributions of the Orbitofrontal Cortex. Annals of the New York Academy of Sciences. Vol. 1121. Boston, MA: Blackwell; 2007. [DOI] [PubMed] [Google Scholar]

- Senkowski D, Talsma D, Grigutsch M, Herrmann CS, Woldorff MG. Good times for multisensory integration: Effects of the precision of temporal synchrony as revealed by gamma-band oscillations. Neuropsychologia. 2007;45:561–571. doi: 10.1016/j.neuropsychologia.2006.01.013. [DOI] [PubMed] [Google Scholar]

- Seubert J, Kellermann T, Loughead J, Boers F, Brensinger C, Schneider F, Habel U. Processing of disgusted faces is facilitated by odor primes: a functional MRI study. Neuroimage. 2010;53:746–756. doi: 10.1016/j.neuroimage.2010.07.012. [DOI] [PubMed] [Google Scholar]

- Seubert J, Gregory KM, Chamberland J, Dessirier J-M, Lundström JN. Odor valence linearly modulates attractiveness, but not age assessment, of invariant facial features in a memory-based rating task. PLoS One. 2014;9:e98347. doi: 10.1371/journal.pone.0098347. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Small DM, Prescott J. Odor/taste integration and the perception of flavor. Exp Brain Res. 2005;166:1–16. doi: 10.1007/s00221-005-2376-9. [DOI] [PubMed] [Google Scholar]

- Small DM, Veldhuizen MG, Green B. Sensory neuroscience: taste responses in primary olfactory cortex. Curr Biol. 2013;23:R157–R159. doi: 10.1016/j.cub.2012.12.036. [DOI] [PubMed] [Google Scholar]

- Stein BE, Meredith MA. The Merging Of The Senses. Cambridge, MA: MIT Press; 1993. [Google Scholar]

- Stephan KE, Penny WD, Moran RJ, den Ouden HEM, Daunizeau J, Friston KJ. Ten simple rules for dynamic causal modeling. Neuroimage. 2010;49:3099–3109. doi: 10.1016/j.neuroimage.2009.11.015. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Sugihara T, Diltz MD, Averbeck BB, Romanski LM. Integration of auditory and visual communication information in the primate ventrolateral prefrontal cortex. J Neurosci. 2006;26:11138–11147. doi: 10.1523/JNEUROSCI.3550-06.2006. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Vroomen J, Driver J, de Gelder B. Is cross-modal integration of emotional expressions independent of attentional resources? Cogn Affect Behav Neurosci. 2001;1:382–387. doi: 10.3758/cabn.1.4.382. [DOI] [PubMed] [Google Scholar]

- Vul E, Harris CR, Winkielman P, Pashler H. Puzzlingly High Correlations in fMRI Studies of Emotion, Personality, and Social Cognition. Perspect Psychol Sci. 2009;4:274–290. doi: 10.1111/j.1745-6924.2009.01125.x. [DOI] [PubMed] [Google Scholar]

- Wallace MT, Ramachandran R, Stein BE. A revised view of sensory cortical parcellation. Proc Natl Acad Sci USA. 2004;101:2167–2172. doi: 10.1073/pnas.0305697101. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Watson R, Latinus M, Noguchi T, Garrod O, Crabbe F, Belin P. Crossmodal adaptation in right posterior superior temporal sulcus during face-voice emotional integration. J Neurosci. 2014;34:6813–6821. doi: 10.1523/JNEUROSCI.4478-13.2014. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Werner S, Noppeney U. Distinct functional contributions of primary sensory and association areas to audiovisual integration in object categorization. J Neurosci. 2010;30:2662–2675. doi: 10.1523/JNEUROSCI.5091-09.2010. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Yeshurun Y, Sobel N. An odor is not worth a thousand words: from multidimensional odors to unidimensional odor objects. Annu Rev Psychol. 2010;61:219–241. C1–C5. doi: 10.1146/annurev.psych.60.110707.163639. [DOI] [PubMed] [Google Scholar]

- Zelano C, Sobel N. Humans as an animal model for systems-level organization of olfaction. Neuron. 2005;48:431–454. doi: 10.1016/j.neuron.2005.10.009. [DOI] [PubMed] [Google Scholar]

- Zelano C, Mohanty A, Gottfried JA. Olfactory predictive codes and stimulus templates in piriform cortex. Neuron. 2011;72:178–187. doi: 10.1016/j.neuron.2011.08.010. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Zhou W, Chen D. Fear-related chemosignals modulate recognition of fear in ambiguous facial expressions. Psychol Sci. 2009;20:177–183. doi: 10.1111/j.1467-9280.2009.02263.x. [DOI] [PubMed] [Google Scholar]