Abstract

Objective

The aim was to evaluate papers retracted due to falsification in high-impact journals.

Study Design and Setting

We selected articles retracted due to allegations of falsification between January 1, 1980 and March 1, 2006 from journals with impact factor >10 and >30,000 annual citations. We evaluated characteristics of these papers and of the authors implicated in misconduct, and assessed whether they correlated with time to retraction. We also compared retracted articles with matched nonretracted articles in the same journals.

Results

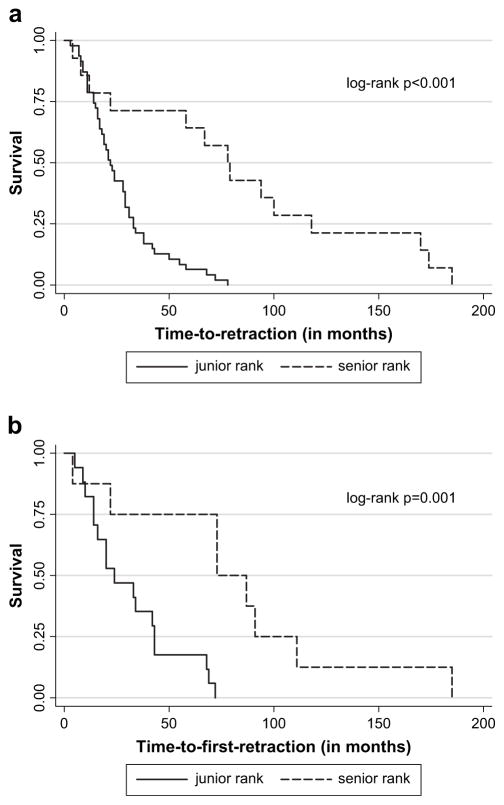

Fourteen eligible journals had 63 eligible retracted articles. Median time from publication to retraction was 28 months; it was 79 months for articles where a senior researcher was implicated in the misconduct vs. 22 months when junior researchers were implicated (log-rank P < 0.001). For the 25 implicated authors, the median time from the first publication of a fraudulent paper to the first retraction was 34 months, again with a clear difference according to researcher rank (log-rank P = 0.001). Retracted articles did not differ from matched nonretracted papers in citations received within 12 months, number of authors, country, funding, or field, but were twice as likely to have multinational authorship (P = 0.049).

Conclusions

Retractions due to falsification can take a long time, especially when senior researchers are implicated. Fraudulent articles are not obviously distinguishable from nonfraudulent ones.

Keywords: Fraud, Falsification, Retraction, Impact, Journals, Senior investigators

1. Introduction

Serious scientific misconduct [1] alarms the scientific and wider community. Publication of fraudulent data distracts from the truth, erodes trust in scientific research, may lead to the adoption of ineffective or harmful interventions, damages the reputations of people and institutions, and creates stirs in the news. Several authors have discussed individual cases or series of cases of misconduct, typically when yet another serious case is revealed [2–8]. However, to our knowledge, there is no systematic analysis of scientific misconduct cases that have resulted in retraction of scientific articles.

Of the different types of misconduct, falsification is more egregious than plagiarism, faked author or ethics approval, or duplication, and typically affects the veracity of the report more. Given the long-standing resistance to retracting published articles, any empirical survey of retracted papers is likely to capture only the tip of the iceberg of fraudulent articles. This is an issue for all types of fraudulent articles, and it may be an even more prominent problem for types of misconduct other than falsification. Moreover, even for falsification, most of the revealed cases pertain to publications in major journals. Falsification may also affect lower-impact journals, but there it is usually less visible and less subject to public and peer scrutiny, and thus probably more difficult to detect. Regardless, falsification in major journals may be especially harmful to the cause of science.

Here, we performed an empirical evaluation with three aims. First, we aimed to describe the characteristics of the articles and authors implicated in retractions due to falsification in top-cited journals since 1980. This is probably a select subgroup of fraudulent papers: it represents the fraction of fraudulent publications that has been revealed and, among these, the smaller fraction where the falsification was decisively dealt with through retraction. Nevertheless, this is an important set of cases to study. Second, we aimed to examine how long it took for these publications to be retracted and whether any factors correlated with time to retraction. Third, we aimed to evaluate whether these retracted articles differed in any major characteristics from matched nonretracted articles published at the same time in the same journals.

2. Methods

2.1. Eligible articles and authors

We considered articles retracted from top-cited journals between January 1, 1980 and March 1, 2006 with any allegations of falsification. Falsification could affect the data, design, or analysis, and also includes complete fabrication. A pilot search showed that relatively few retractions referred to misconduct other than falsification and that some of these were still contested by authors and/or lawyers, whereas retractions due to falsification were more clear-cut. Whenever several offenses had been committed, the retracted article was eligible if falsification (as broadly defined above) was among them.

We targeted all 21 journals that receive over 30,000 citations annually and have impact factors exceeding 10 (per Journal Citation Reports [JCR], 2004 edition, Thomson Scientific/Institute for Scientific Information [ISI]), i.e., American Journal of Human Genetics (Am J Hum Genet), Annals of Internal Medicine (Ann Intern Med), Cell, Chemical Reviews (Chem Rev), Circulation, European Molecular Biology Organization Journal (EMBO J), Gastroenterology, Genes and Development (Genes Dev), Hepatology, Journal of the American Medical Association (JAMA), Journal of Clinical Investigation (J Clin Invest), Journal of Cell Biology (J Cell Biol), Journal of Experimental Medicine (J Exp Med), Lancet, Nature, Nature Genetics (Nat Genet), Nature Medicine (Nat Med), New England Journal of Medicine (N Engl J Med), Neuron, and Proceedings of the National Academy of Sciences USA (PNAS). We set no restriction on academic field; however, most of the eligible nonmultidisciplinary journals pertain to the medical and biological sciences, because these fields have higher publication volumes and higher citation densities.

We considered only retracted full articles, excluding retracted reviews, letters to the editor, and meeting abstracts or duplicate publication of information in meeting proceedings. We did not consider retractions due to other types of misconduct where the data, design, and/or analyses are not altered, for example, duplicate publication, plagiarism, faked author approval, or faked patient approval. Retraction was defined to cover any announcement in the publishing journal that the paper was removed due to falsification issues, regardless of the exact language and mode of retraction (letter, note, editorial, other mention). We did not consider expressions of concern that had not yet led to retraction of a paper (e.g., ongoing investigations). However, for authors for whom falsification had been implicated in the retraction of any article, we also included in the analysis all their other retracted articles from these top-cited journals, regardless of the exact phrasing of reasons (or lack thereof) listed for each retraction.

We searched Thomson ISI Web of Science for articles published in any eligible top-cited journal with retract* OR withdraw* OR fraud* OR “scientific misconduct” OR deception in their titles. After screening, potentially eligible articles were retrieved for full-text scrutiny. We thus created a list of authors implicated in falsification and a preliminary list of their retracted publications. To obtain a more complete record of retractions, we then performed name searches for each of these authors in Web of Science and PubMed. We also examined the full list of papers from the eligible journals tagged as “Retracted publication” in PubMed; no additional eligible articles were identified.

Typically, one author was implicated solely or primarily in the scientific misconduct in each retracted article. When an article was retracted due to misconduct without any specific author being primarily implicated, we did not assign responsibility to any author, and the authors of such articles, along with their characteristics, were not included in the author-level analysis.

2.2. Data extraction for retracted and matched control papers

From each eligible retracted article, we recorded the author solely or primarily implicated in the misconduct, whenever available; the number of authors and position of that implicated author (first, last, middle position); journal name; month and year of publication; and month and year of retraction.

For each eligible retracted article, we also identified through the tables of contents the immediately previous full original article and the immediately subsequent full original article in the same issue that had not been retracted. When the retracted article was the first or last in its issue, we extended to the previous or subsequent issue, respectively, to find matching articles. This process allowed a 1:2 matched case–control design. In this way, we adjusted for journal and year of publication. Journal is a key determinant of the citations that an article receives and it may also confound the number of authors, country of origin, funding, and multinational authorship, while year of publication may also be related to some of these parameters (e.g., increasing number of authors and more common multinational projects in more recent years).
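The control-selection rule can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the authors' procedure as implemented: the record layout (`id`, `full_original`, `retracted` keys) and the function name `pick_controls` are hypothetical, and issues are assumed to be listed in publication order with articles in table-of-contents order. Scanning one flattened sequence reproduces the rule of moving to the previous or subsequent issue when the retracted article sits at the start or end of its issue.

```python
from typing import Dict, List, Optional, Tuple

# Hypothetical record layout: each article is a dict with keys
# "id", "full_original" (bool) and "retracted" (bool).
Article = Dict[str, object]


def _eligible_control(article: Article) -> bool:
    """A control must be a full original article that was not retracted."""
    return bool(article["full_original"]) and not bool(article["retracted"])


def pick_controls(issues: List[List[Article]], issue_idx: int,
                  art_idx: int) -> Tuple[Optional[Article], Optional[Article]]:
    """Return the nearest eligible article before and after the case.

    `issues` is in publication order and each issue is in
    table-of-contents order. Scanning one flattened sequence means the
    search spills into the previous or next issue whenever no eligible
    neighbor exists in the case's own issue.
    """
    flat = [a for issue in issues for a in issue]
    pos = sum(len(issue) for issue in issues[:issue_idx]) + art_idx
    before = next((a for a in reversed(flat[:pos]) if _eligible_control(a)), None)
    after = next((a for a in flat[pos + 1:] if _eligible_control(a)), None)
    return before, after
```

Each retracted article plus its two controls forms one matched set of the 1:2 design; a `None` would signal that the table of contents has to be extended further.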

For each retracted and matched nonretracted article, we extracted information on the number of authors; country of origin of the corresponding author (United States, Europe, other); whether the listed affiliations included more than one country; scientific field (life sciences vs. other); and sources of funding (none listed, single source, multiple grants and/or agencies). We also recorded the number of citations (per Web of Science) received within 12 months after publication, in the period 12–36 months after publication, and in the first calendar year after retraction, excluding citations that referred to the misconduct. We used the first calendar year after retraction rather than the 12 months immediately after retraction because the latter window would be more contaminated by citations that were already in press when the retraction was made.
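A sketch of how citation dates could be binned into the three windows. The boundary conventions are assumptions, not taken from the text: month-level resolution, half-open windows of [0, 12) and [12, 36) months after publication, and "first calendar year after retraction" read as the calendar year following the year of retraction. Citations referring to the misconduct are assumed to have been removed beforehand. The function names are hypothetical.

```python
from datetime import date
from typing import Dict, Iterable


def months_after(start: date, end: date) -> int:
    """Whole calendar months from start to end (day of month ignored)."""
    return (end.year - start.year) * 12 + (end.month - start.month)


def citation_windows(published: date, retracted: date,
                     citing_dates: Iterable[date]) -> Dict[str, int]:
    """Count citations per window under the assumed conventions above."""
    counts = {"within_12_months": 0, "months_12_to_36": 0,
              "first_calendar_year_after_retraction": 0}
    for cited_on in citing_dates:
        m = months_after(published, cited_on)
        if 0 <= m < 12:
            counts["within_12_months"] += 1
        elif 12 <= m < 36:
            counts["months_12_to_36"] += 1
        # The post-retraction window is independent of the first two.
        if cited_on.year == retracted.year + 1:
            counts["first_calendar_year_after_retraction"] += 1
    return counts
```

Skipping the calendar year in which the retraction itself appears is what keeps in-press citations out of the post-retraction count.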

Data extraction was performed independently by two investigators (N.T. and E.E.). Discrepancies were discussed and consensus was reached with a third investigator (J.I.).

2.3. Evaluation of authors

For each eligible author, we collected information from the retracted papers, PubMed, Web of Science, the Office of Research Integrity, and general web searches to create a profile that included the number of retracted publications (in the 21 top-cited journals, in other journals, and in total); the time of publication and retraction of these papers; and whether the same author had published any nonretracted papers in the top-cited journals and, if so, when in relation to the retracted ones. We did not record each author's complete publication record, because it would be very difficult to exclude same-name authors for articles other than those retracted or published in top-cited journals. We also recorded the rank of the researcher at the time of publication of the first article that was eventually retracted. Junior rank included students, scientists in training, technicians and supporting personnel, and young investigators. Senior rank included professors, lab directors, and experienced investigators. When the rank was not clearly stated in any document relevant to the misconduct, we assigned senior rank if the researcher's publication record of original research articles exceeded 5 years, and junior rank otherwise.
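The fallback rule for undocumented rank is simple enough to state as code. This is a sketch: `assign_rank` and its inputs are hypothetical, and the length of the publication record is assumed to be supplied in years, measured up to the first eventually retracted paper.

```python
from typing import Optional


def assign_rank(documented_rank: Optional[str],
                years_of_original_research: float) -> str:
    """Return "junior" or "senior" for an implicated author.

    documented_rank: rank stated in documents about the misconduct,
    or None when no document states it.
    years_of_original_research: hypothetical input giving the length of
    the author's record of original research articles, in years.
    """
    if documented_rank in ("junior", "senior"):
        return documented_rank  # an explicitly documented rank takes precedence
    # Fallback from the Methods: a record exceeding 5 years means senior.
    return "senior" if years_of_original_research > 5 else "junior"
```

Because the threshold is strict ("exceeding 5 years"), an author with exactly 5 years of record falls into the junior category.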

2.4. Analyses

We describe the profiles of the eligible retracted papers and authors. We generated Kaplan–Meier plots of the time from publication to retraction for the retracted papers, and of the time from publication of the first eventually retracted paper in any journal to the first retraction from any journal for the authors implicated in misconduct. This latter interval is particularly interesting because it captures the period during which a fraudulent author remains undetected and may keep publishing without the official stigma of retraction.
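The Kaplan–Meier product-limit estimator behind these plots can be sketched as follows. This is an illustration only (the analyses were run in Stata); function names are hypothetical. In this design every sampled article was by definition retracted, so all event flags would be 1, but a censoring flag is kept for generality. The median is taken as the first time at which estimated survival falls to 0.5 or below, a common convention.

```python
from typing import List, Optional, Tuple


def kaplan_meier(times: List[float], events: List[int]) -> List[Tuple[float, float]]:
    """Product-limit estimate: (time, survival) at each distinct event time.

    events[i] is 1 if the retraction was observed at times[i], 0 if censored.
    """
    data = sorted(zip(times, events))
    n_at_risk = len(data)
    surv = 1.0
    steps: List[Tuple[float, float]] = []
    i = 0
    while i < len(data):
        t = data[i][0]
        deaths = removed = 0
        # Pool all observations tied at time t.
        while i < len(data) and data[i][0] == t:
            deaths += data[i][1]
            removed += 1
            i += 1
        if deaths:
            surv *= 1 - deaths / n_at_risk
            steps.append((t, surv))
        n_at_risk -= removed  # events and censorings both leave the risk set
    return steps


def km_median(steps: List[Tuple[float, float]]) -> Optional[float]:
    """First time at which estimated survival drops to 0.5 or below."""
    for t, s in steps:
        if s <= 0.5:
            return t
    return None
```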

We evaluated whether the time to retraction for retracted papers was related to the total number of authors, the rank and authorship position of the main implicated researcher, scientific field, country of correspondence, multinational collaboration, funding, and citations received within 12 months and at 12–36 months after publication. Similarly, we evaluated whether the time to first retraction for an author implicated in scientific misconduct was related to the researcher's rank, scientific field, and country of origin. Times derived from survival analyses are summarized as medians and interquartile ranges (IQRs), because they are not normally distributed. Comparative analyses used the log-rank test. Cox models were not used, because the proportional hazards assumption did not hold for several comparisons.
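A two-group log-rank comparison can be sketched as below. This is not the study's Stata code, and the function name is hypothetical. At each distinct event time it accumulates observed minus expected events in group A and the hypergeometric variance, then refers the squared standardized difference to a chi-square distribution with 1 degree of freedom, whose upper tail equals `erfc(sqrt(chi2 / 2))`.

```python
import math
from typing import Sequence


def logrank_p(times_a: Sequence[float], events_a: Sequence[int],
              times_b: Sequence[float], events_b: Sequence[int]) -> float:
    """Two-sided P value of the two-sample log-rank test (chi-square, 1 df)."""
    data = ([(t, e, 0) for t, e in zip(times_a, events_a)] +
            [(t, e, 1) for t, e in zip(times_b, events_b)])
    event_times = sorted({t for t, e, _ in data if e})
    obs_minus_exp = 0.0
    variance = 0.0
    for t in event_times:
        at_risk = [(tt, e, g) for tt, e, g in data if tt >= t]
        n = len(at_risk)
        n_a = sum(1 for _, _, g in at_risk if g == 0)
        d = sum(1 for tt, e, _ in at_risk if tt == t and e)
        d_a = sum(1 for tt, e, g in at_risk if tt == t and e and g == 0)
        obs_minus_exp += d_a - d * n_a / n  # observed minus expected in group A
        if n > 1:
            variance += d * (n_a / n) * (1 - n_a / n) * (n - d) / (n - 1)
    if variance == 0:
        raise ValueError("log-rank variance is zero; the test is undefined")
    chi2 = obs_minus_exp ** 2 / variance
    return math.erfc(math.sqrt(chi2 / 2))
```

For example, `logrank_p(senior_times, [1] * len(senior_times), junior_times, [1] * len(junior_times))` would compare the two rank groups, where both time lists are hypothetical inputs in months.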

Finally, we evaluated whether retracted articles in top-cited journals differed from matched nonretracted papers, using conditional logistic regression to account for matching. The compared characteristics were number of authors, country of origin, multinational authorship, stated funding, and number of citations (log-transformed for normalization) within 12 months and in the period 12–36 months after publication. Univariate regressions were performed, and statistical significance was claimed at P < 0.05. The analyses had 80% power to detect, at alpha = 0.05, a difference of 0.25 standard deviations in the log-transformed number of citations. We planned multivariate analyses including variables with P < 0.10 in the univariate analyses, with backward elimination of variables with P > 0.05.
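To show what conditional logistic regression does with 1:2 matched sets, here is a minimal single-covariate sketch fitted by Newton–Raphson (function name and input layout are hypothetical; this is not the study's code). Each set contributes exp(βx_case) / Σ exp(βx_j) over its three members, so anything shared within a set, such as journal and issue date, cancels out of the likelihood.

```python
import math
from typing import Sequence, Tuple

# One matched set: (covariate of the retracted case, covariates of its controls).
MatchedSet = Tuple[float, Sequence[float]]


def clogit_1d(sets: Sequence[MatchedSet], max_iter: int = 50,
              tol: float = 1e-10) -> float:
    """Maximum conditional likelihood estimate of beta for one covariate."""
    beta = 0.0
    for _ in range(max_iter):
        score = 0.0  # first derivative of the conditional log-likelihood
        info = 0.0   # negative second derivative (observed information)
        for x_case, x_controls in sets:
            xs = [x_case, *x_controls]
            shift = max(beta * x for x in xs)  # guards exp() against overflow
            w = [math.exp(beta * x - shift) for x in xs]
            total = sum(w)
            mean = sum(wi * x for wi, x in zip(w, xs)) / total
            mean_sq = sum(wi * x * x for wi, x in zip(w, xs)) / total
            score += x_case - mean
            info += mean_sq - mean * mean
        step = score / info
        beta += step
        if abs(step) < tol:
            break
    return beta
```

`math.exp(beta)` is the odds ratio per unit of the covariate. If the covariate were log2 of the citation count, that figure would read directly as an odds ratio per twofold increase; with a natural-log transform the equivalent is `math.exp(beta * math.log(2))`. How zero citation counts were handled before log transformation is not stated in the text.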

Analyses were performed in Stata 8.0 (College Station, TX). P-values are two-tailed.

3. Results

3.1. Characteristics of retracted articles in top-cited journals

Across the 21 top-cited journals, 14 journals contained 63 retracted articles meeting eligibility criteria, while 7 journals had no such retractions. Most of the retractions had occurred in more recent years, with 50 of the 63 occurring in the last decade (1996–2006). There were another five “expressions of concern” notices for which no final retraction note had yet been published; these are not considered here. Twenty-five authors were identified as sole or main perpetrators of misconduct for 61 articles; for two articles, it was not specified which author was primarily implicated. The median number of citations in the first 12 months after publication for the eventually retracted papers was nine (IQR: 4–21); there were a median of 18 (IQR: 7–60) citations in the period 12–36 months after publication. The median number of citations in the first calendar year after retraction was three (IQR: 1–9).

Almost half (48%) of the retracted articles had four or fewer authors and in most cases (70%) the investigator implicated in the misconduct was the first author. The United States was the country of origin in 41 (65%) articles. Forty-seven articles (73%) stated that they had received some funding (single source n = 18, multiple sources n = 29).

3.2. Characteristics of authors

The 25 authors who had been specifically implicated in misconduct had published a total of 58 nonretracted papers in any of the 21 top-cited journals during their careers. However, none of these papers was published after the first retraction notice. Forty-three nonretracted papers had been published before the first retracted paper; another 15 nonretracted papers had been published during the period spanned by that author's retracted papers (between the publication of the first and the last retracted paper). The 25 authors had also published another 46 retracted papers in other journals. Almost all of these (n = 40, 87%) were in journals with impact factor above 3 (JCR, 2004 edition).

Eight of the 25 authors already held senior rank at the time of publication of their first paper that was eventually retracted. These senior researchers published 14 of the 61 retracted articles in the top-cited journals, 35 of the 58 nonretracted articles in top-cited journals, and 31 of the 46 retracted papers in other journals.

3.3. Time to retraction of articles

The median survival time of eventually retracted articles from top-cited journals was 28 months (IQR: 15–58), with 81% surviving a year or more. The time to retraction was significantly influenced by authorship position (last author, log-rank P = 0.006) and rank of the main implicated investigator (senior rank, P < 0.001). Senior rank and last author position were strongly correlated (Spearman’s correlation = 0.74, P < 0.001). A few articles were retracted very soon, regardless of rank or position, but after the first year articles where the main implicated author was a senior researcher took much longer to be retracted than articles where the main implicated perpetrator was a junior researcher (Fig. 1a). The median time to retraction was 79 months for articles where senior researchers were implicated vs. 22 months when junior researchers were implicated. All of the papers where senior researchers had been implicated were retracted in the last decade (all in the last 3 years, with two exceptions).
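Senior rank (yes/no) and last-author position (yes/no) are both binary, so Spearman's coefficient between them is Pearson's correlation computed on tied (average) ranks. For two binary variables this equals the phi coefficient, because average ranks are an increasing linear transform of the 0/1 codes. A minimal sketch with hypothetical function names, assuming 0/1 coding; it computes the coefficient only, not its P value.

```python
import math
from typing import List, Sequence


def average_ranks(values: Sequence[float]) -> List[float]:
    """1-based ranks, with tied values sharing the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # mean of positions i..j, converted to 1-based
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks


def spearman(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation of the average ranks of x and y."""
    rx, ry = average_ranks(x), average_ranks(y)
    n = len(rx)
    mx, my = sum(rx) / n, sum(ry) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    sx = math.sqrt(sum((a - mx) ** 2 for a in rx))
    sy = math.sqrt(sum((b - my) ** 2 for b in ry))
    return cov / (sx * sy)
```

With `senior = [1, 1, 0, 0]` and `last_author = [1, 1, 0, 0]` (hypothetical codings) the coefficient is 1; a value such as 0.74 means the two indicators coincide for most, but not all, retracted articles.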

Fig. 1.

Kaplan–Meier plots of (a) the time from publication to retraction for fraudulent articles and (b) the time from first publication (of an eventually retracted paper) to first retraction for fraudulent authors, according to the rank of the author.

The median time from publication to retraction of the 46 articles that these authors had published in non–top-cited journals was 61 months (IQR: 23–86). Senior authors had a median time to retraction of 79 months vs. 18 months for junior investigators. There was no statistically significant difference for the time it took to retract fraudulent articles in the top-cited journals vs. non–top-cited journals for senior and junior researchers (P = 0.88 and P = 0.15, respectively).

There was a suggestion that articles in the life sciences were also retracted more slowly than articles in physics (P = 0.050), but all 16 physics articles originated from a single investigator. There was also a possibly slower retraction pace for European articles than for articles originating from the United States or other countries (median time 34 vs. 24 vs. 22 months), but this did not reach formal statistical significance (P = 0.084). The time to retraction was not significantly affected by the number of authors (log-rank P = 0.47, categorization based on median), origin from many countries vs. one (log-rank P = 0.90), or number of citations received in the first year (log-rank P = 0.65, categorization based on median of citation count). Articles with more citations at 12–36 months were slower to be retracted (log-rank P < 0.001, categorization based on median of citation count), but this was driven by the 16 articles of the one physicist, which were retracted very fast and were generally not cited after their retraction.

3.4. Time to retraction of articles: life sciences

When only life science articles were examined (excluding the one physicist with 16 retracted articles), the median survival time of eventually retracted articles from top-cited journals was 29 months (IQR: 12–69), with 77% surviving a year or more. Senior rank and last author position were strongly correlated (Spearman’s correlation = 0.76, P < 0.001). The median time to retraction was 79 months for articles where senior researchers were implicated vs. 21 months when junior researchers were implicated (log-rank P < 0.001). The time to retraction was not significantly affected by country (P = 0.19), the number of authors (log-rank P = 0.53, categorization based on median), origin from many countries vs. one (log-rank P = 0.93), and number of citations received in the first year (log-rank P = 0.44, categorization based on median) or at 12–36 months (log-rank P = 0.28, categorization based on median).

3.5. Time to first retraction for fraudulent authors

The median time to first retraction for the 25 authors was 34 months (IQR: 16–72). The time to first retraction was significantly shorter for junior researchers (log-rank P = 0.001) (Fig. 1b). The median time to first retraction was 77 months for senior researchers vs. 23 months for junior researchers. Country of origin did not significantly influence the time to retraction (log-rank P = 0.41). Exclusion of the single non-life scientist did not change these results (not shown).

3.6. Comparison of retracted vs. nonretracted articles

Table 1 compares the characteristics of retracted vs. matched nonretracted articles. The retracted articles were twice as likely (P = 0.049) as the control articles to have authors from more than one country. There were no significant differences between the two groups in terms of citations, number of authors, country of origin (correspondence address), funding, or scientific field. Of the two variables with P < 0.10 in univariate analyses (multinational authorship, citations in first 12 months), only multinational authorship was retained when we attempted to build a multivariate model. No variables had formally significant results when analyses were restricted to life science retracted articles (Table 1).

Table 1.

Comparison of retracted vs. matched nonretracted articles

| Characteristic | Retracted papers (N = 63) | Nonretracted papers (N = 126) | All data: conditional logistic regression OR (95% CI) | Excluding retracted non-life science articles: conditional logistic regression OR (95% CI) |
|---|---|---|---|---|
| Median (IQR) | | | | |
| Citations in 12 months<sup>a</sup> | 9 (4–21) | 6 (3–13) | 1.21 (0.95–2.50) per twofold increase | 1.05 (0.84–1.31) per twofold increase |
| Citations in 12–36 months<sup>a</sup> | 18 (7–60) | 23 (10–48) | 0.93 (0.78–1.11) per twofold increase | 0.89 (0.74–1.08) per twofold increase |
| Number of authors | 5 (3–7) | 5 (3–7) | 1.01 (0.95–1.07) per author | 1.02 (0.96–1.10) per author |
| N (%) | | | | |
| Country of correspondence | | | | |
| USA | 41 (65) | 80 (64) | Reference | Reference |
| Europe | 17 (27) | 33 (26) | 1.02 (0.48–2.16) | 2.42 (0.95–6.14) |
| Other | 5 (8) | 13 (10) | 0.74 (0.24–2.27) | 1.68 (0.43–6.55) |
| Funding | | | | |
| Not stated | 13 (21) | 22 (18) | Reference | Reference |
| Single source | 13 (21) | 28 (22) | 0.79 (0.31–2.00) | 1.06 (0.34–3.23) |
| Multiple sources | 37 (58) | 76 (60) | 0.80 (0.34–1.91) | 0.83 (0.29–2.41) |
| Multinational study | | | | |
| Yes | 22 (35) | 28 (22) | 2.09 (1.00–4.34) | 2.08 (0.86–5.03) |
| No | 41 (65) | 98 (78) | Reference | Reference |
| Scientific field | | | | |
| Physical sciences | 16 (25) | 29 (23) | Reference | N/A |
| Life sciences | 47 (75) | 97 (77) | 0.56 (0.12–2.56) | |
| Life sciences only | | | | |
| Clinical or epidemiology | 20 (43) | 37 (40) | 1.32 (0.47–3.69) | 1.32 (0.47–3.69) |
| Other<sup>b</sup> | 27 (57) | 56 (60) | Reference | Reference |

Abbreviations: CI, confidence interval; IQR, interquartile range; N/A, not applicable; OR, odds ratio.

Odds ratios are derived from univariate analyses.

<sup>a</sup> Variable is log-transformed in the conditional logistic regression analyses.

<sup>b</sup> Human material or nonhuman related life sciences research.

4. Discussion

A systematic examination of articles retracted due to falsification from high-impact scientific journals shows that 14 of the 21 examined journals have issued such retractions since 1980. The vast majority of retractions that we analyzed have been published in the last decade. On average it has taken over 2 years to retract these articles, but there is considerable variability. The rank of the main implicated author has been the strongest determinant of this variability. Apparently, there has been strong resistance to retracting papers where senior investigators were the culprits. Papers where senior scientists have been implicated in the misconduct have taken on average over 6 years to be retracted, whereas most papers where junior researchers were the culprits were retracted much sooner. The same difference is seen when we examine the full retraction history of fraudulent authors. Papers that were eventually retracted were more likely to involve multinational authorship, but otherwise they could not be differentiated on any grounds from others published in the neighboring pages of the same journals.

Even though most of the examined retractions took several years, suspicion of serious misconduct may typically have been raised earlier. However, the timing of such suspicion is extremely difficult to pinpoint for every case, whereas retraction is a more solid time mark. Retracting a paper can be an extremely convoluted and charged process, especially when falsification is implicated. Journals may occasionally be slow and hesitant to retract papers [9–11]. There is a need to purge the literature, but a major offense should not be labeled lightly, and the perpetrators, their lawyers, and occasionally even their institutions may resist this effort [12,13]. Even for some of the definitively retracted papers that we have analyzed here, authors may still be pursuing actions to contest the allegations of misconduct. It is even possible, although not very likely, that no scientific misconduct occurred for some of these articles and that the authors were blamed in error. However, it is more likely that several other fraudulent articles still remain untouched in the literature.

Junior researchers may be viewed more critically than established researchers if they present spectacular findings and/or make their way into high-impact journals. Moreover, it may be easier to audit a junior researcher internally. On several occasions, lab directors were the ones who discovered and revealed the misconduct of their junior assistants [14]. Senior investigators are largely the auditors of their own labs and teams, so they may hide their misconduct better. They can mostly be exposed through external and public scrutiny, although there are also examples where younger researchers helped reveal the misconduct of their seniors [15].

For individual authors implicated in serious misconduct, their publication record in high-impact journals ended without exception after the retractions. Apparently, journals and the scientific community do not forget, at least for widely publicized cases. In some cases, implicated scientists chose to relocate or stop working and their whereabouts became unknown, and in one case a young researcher even committed suicide [16]. Sox and Rennie have stressed the importance of scrutinizing the subsequent work of authors found to have been fraudulent in the past [8].

There are no strong alert signs to hint that a paper is fraudulent. We found a potential relationship between retraction and multinational authorship. This should be interpreted with caution, given the borderline statistical significance. If true, it may suggest that falsification may be easier to reveal when collaborators from many countries are involved in the scientific work. It is less likely that multinational studies have higher rates of falsification.

Overall, a fraudulent article looks much the same as a nonfraudulent one. Thus, it would be unfair to claim that misconduct is a failure of peer reviewers and journal editors. Even blatantly falsified papers may require careful scrutiny to be revealed [15]. It would be demoralizing to adopt the perspective that every article may be fraudulent. Trust is fundamental for scientific progress, but careful, rigorous replication of research findings by other teams should be strongly encouraged. The vast majority of refuted research findings [17], of course, do not represent misconduct.

Some important limitations should be discussed. A few additional recently published papers may be under investigation and may be fully retracted in the future. Moreover, we focused here on a sample of the most influential journals. Internal and external scrutiny is likely to be maximal for articles appearing in these journals, and the impact of these papers is most prominent. For the 25 authors that we examined, almost all of their retracted papers were published either in one of the 21 top-cited journals or in other quite influential journals with impact factor exceeding 3. Our analyses cannot be extrapolated to authors who may be publishing fraudulent work mostly or exclusively in journals of lower impact.

Perhaps more importantly, we acknowledge that our analysis deals with the visible tip of an iceberg. We suspect that a large number of fraudulent articles are not recognized as such, and that several that might have been seriously probed for misconduct at some point were never formally retracted. The fact that the vast majority of the retractions that we analyzed occurred in the last decade is unlikely to mean that falsification is becoming exponentially more common over time. It may instead reflect increasing acceptance of retraction as a way of dealing with falsified papers, but we cannot be certain of this. An evaluation of this hypothesis would require data on the denominator of all falsified papers in the literature, which are not readily available. A prospective accumulation of falsification cases (e.g., as currently recorded by the Office of Research Integrity) might help to probe this hypothesis further in the future. However, even such prospective recording of revealed falsification cases would not bypass the problem of the unknown number of unrevealed cases. In the absence of such data, understanding how the forces at play in the retraction process have changed until now may be useful. In 1980, there was no widely accepted system for handling allegations of misconduct. Retraction was very uncommon, and much of the action took place in the lay press, if at all [12,13,18]. Formal rules for defining, investigating, adjudicating, and sanctioning misconduct were set in the United States in 1989 and continued to change for several years [19]. Other countries either set up different systems or still have no standardized system at all for dealing with misconduct [20]. For a long time, journals have been reluctant to publish clear retractions, perhaps under legal and other pressure [12]. The longer time to retraction for articles where a senior investigator is implicated probably also reflects the greater resistance that may still persist in such cases.

Acknowledging these caveats, our analysis of the tip of this important iceberg may help further sensitize scientists and journals to this problem and help promote a more transparent and timely recognition of serious misconduct in the published literature.

References

1. Office of Research Integrity. Available at http://ori.dhhs.gov. Accessed September 30, 2006.

2. Farthing MJ. Retractions in Gut 10 years after publication. Gut. 2001;48:285–6. doi: 10.1136/gut.48.3.285.

3. Culliton BJ. Darsee apologizes to New England Journal. Science. 1983;220:1029. doi: 10.1126/science.220.4601.1029-b.

4. Dahlberg JE, Mahler CC. The Poehlman case: running away from the truth. Sci Eng Ethics. 2006;12:157–73. doi: 10.1007/s11948-006-0016-9.

5. Farthing MJ. Fraud in medicine. Coping with fraud. Lancet. 1998;352(Suppl 4):SIV11.

6. Scully C, Baum B. Fraud in scientific publishing. Oral Dis. 2006;12:357. doi: 10.1111/j.1601-0825.2006.01259.x.

7. Smith R. Investigating the previous studies of a fraudulent author. BMJ. 2005;331:288–91. doi: 10.1136/bmj.331.7511.288.

8. Sox HC, Rennie D. Research misconduct, retraction, and cleansing the medical literature: lessons from the Poehlman case. Ann Intern Med. 2006;144:609–13. doi: 10.7326/0003-4819-144-8-200604180-00123.

9. Schiermeier Q. Authors slow to retract ‘fraudulent’ papers. Nature. 1998;393:402. doi: 10.1038/30807.

10. Cooper-Mahkorn D. Many journals have not retracted “fraudulent” research. BMJ. 1998;316:1850. doi: 10.1136/bmj.316.7148.1850a.

11. Pfeifer MP, Snodgrass GL. The continued use of retracted, invalid scientific literature. JAMA. 1990;263:1420–3.

12. Whiteley W, Rennie D, Hafner AW. The scientific community’s response to evidence of fraudulent publication. The Robert Slutsky case. JAMA. 1994;272:170–3.

13. Rennie D, Gunsalus CK. Scientific misconduct. New definition, procedures, and office—perhaps a new leaf. JAMA. 1993;269:915–7. doi: 10.1001/jama.269.7.915.

14. Dalton R. Collins’ student sanctioned over ‘most severe’ case of fraud. Nature. 1997;388:313. doi: 10.1038/40933.

15. Gerber P. What can we learn from the Hwang and Sudbo affairs? Med J Aust. 2006;184:632–5. doi: 10.5694/j.1326-5377.2006.tb00420.x.

16. Aldhous P. Scientific misconduct. Tragedy revealed in Zurich. Nature. 1992;355:577. doi: 10.1038/355577a0.

17. Ioannidis JP. Contradicted and initially stronger effects in highly cited clinical research. JAMA. 2005;294:218–28. doi: 10.1001/jama.294.2.218.

18. Rennie D. Breast cancer: how to mishandle misconduct. JAMA. 1994;271:1205–7.

19. Rennie D, Gunsalus CK. Regulations on scientific misconduct: lessons from the US experience. In: Lock S, Wells F, editors. Scientific fraud and misconduct. 3rd ed. London: BMJ Publications; 2001. pp. 13–31.

20. Rennie D. Dealing with research misconduct in the United Kingdom. An American perspective on research integrity. BMJ. 1998;316:1726–8. doi: 10.1136/bmj.316.7146.1726.