Abstract

Indoor localization is a significant research area in wireless sensor networks (WSNs). Generally, the nodes of WSNs are deployed in the same plane, i.e., the floor, as the target to be positioned, which causes the sensing signal to be influenced or even blocked by unpredictable obstacles, like furniture. However, a 3D system, like Cricket, can reduce the negative impact of obstacles to the maximum extent and guarantee the sensing signal transmission by using the line of sight (LOS). However, most of the traditional localization methods are not available for the new deployment mode. In this paper, we propose the self-localization of beacons method based on the Cayley–Menger determinant, which can determine the positions of beacons stuck in the ceiling; and differential sensitivity analysis (DSA) is also applied to eliminate measurement errors in measurement data fusion. Then, the calibration of beacons scheme is proposed to further refine the locations of beacons by the mobile robot. According to the robot’s motion model based on dead reckoning, which is the process of determining one’s current position, we employ the filter and the strong tracking filter (STF) to calibrate the rough locations, respectively. Lastly, the optimal node selection scheme based on geometric dilution precision (GDOP) is presented here, which is able to pick the group of beacons with the minimum GDOP from all of the beacons. Then, we propose the GDOP-based weighting estimation method (GWEM) to associate redundant information with the position of the target. To verify the proposed methods in the paper, we design and conduct a simulation and an experiment in an indoor setting. Compared to EKF and the filter, the adopted STF method can more effectively calibrate the locations of beacons; GWEM can provide centimeter-level precision in 3D environments by using the combination of beacons that minimizes GDOP.

Keywords: WSNs, three-dimensional deployment, calibration, localization

1. Introduction

As people’s requirements for life comfort and production security advance, the demand and extent of applications for indoor localization service (ILS) increases drastically. ILS can be applied to several main areas, such as medical monitoring, underground personnel positioning, navigation in industrial production workshop and even virtual reality in the film industry [1]. All of the application settings have the following common trait: the GPS receiver is deployed in the near-surface or indoor environment, even basements, which causes the GPS signal to attenuate and the GPS-based positioning system to fail. Thus, there is great demand for the application of positioning in the aforementioned settings.

Due to many features, such as the incomplete dependence of infrastructures, low energy consumption, relatively low cost, rapid deployment, high scalability, dense node distribution, the ability to maintain normal performance in harsh and special environments, etc., the research and application of ILS stands out among the traditional location acquiring manners [2]. The location information is also of vital importance to the application of WSNs to monitoring. Node localization is a prerequisite and oftentimes a problem for most WSNs and also is the premise and basis of the application of WSNs to target tracking, recognition, monitoring, and so on. In short, it is playing a huge role in the practicability of WSNs.

So far, among a mass of localization research for WSNs, most studies are on 2D positioning systems, whereas studies on 3D positioning are less frequent, but increasingly popular [3,4]. In practical applications, the sensor nodes, including beacons, are usually deployed in 3D space, not in the same horizontal plane, because the indoor items and mobile people could absorb, reflect or even block the signal transmission between beacons and nodes in most indoor layouts. Most traditional 2D localization strategies are not valid for 3D deployment. Therefore, we propose to investigate localization technology to determine the locations of nodes that are three-dimensionally deployed in indoor settings. Compared to 2D deployment, 3D deployment has more advantages in practical significance and application value [5,6,7]. In the paper, we introduce an overview of a localization system that consists of sensor nodes three-dimensionally deployed in indoor settings. Since only some beacons’ locations are known when they are stuck in the ceiling, the self-localization scheme for beacons is studied to determine the locations of all beacons by measuring the distances between beacons and the static node on the floor. To ensure the localization accuracy of beacons, a calibration algorithm is proposed to refine the location coordinates by means of a mobile robot, the details of which are shown in Section 5. Lastly, we present a geometric dilution precision (GDOP)-based optimal node selection scheme to pick the group of nodes with the minimum GDOP to position the target. To improve the localization accuracy, the GDOP-based weighting estimation method (GWEM) is proposed to fuse more information from other nodes. The above research is a completely theoretical solution for localization in 3D indoor settings, and its feasibility and validity are evaluated by simulation and experiment, shown in Section 7.

2. Related Work

WSN-based positioning systems in three-dimensional space have been investigated for a while. Some achievements of this prior research are typical and practical for experiments. The positioning system, called SpotON, using an RF electronic label was designed and developed by Jeffrey Hightower et al. [8]. The whole of the localization zones is covered by multiple base stations, and the distances between unknown moving nodes and base stations are estimated by the RSSI signal attenuation model. To calculate the location coordinates, trilateration is adopted, and the hill-climbing algorithm is used to improve the estimation accuracy.

The Bat positioning system exploited by the AT&T Institution is composed of a recognizer, a receiver and a surveillance center [9]. As the recognizer receives the signal given by the surveillance center, it will respond with an ultrasonic pulse. The receiver receives an RF signal from the surveillance center and an ultrasonic pulse from the recognizer separately and obtains the time difference of arrival (TDOA) to calculate the distances between any two of the recognizers. Then, the data are reported to the surveillance center through networks. The TDOA measurement based on the ultrasonic signal can realize the spatial localization and calculate the coordinates of the unknown nodes by using trilateration or a multilateral algorithm to improve the accuracy in positioning. However, the Bat system is based on wired networks, so the large-scale deployment could be restricted by costs.

The Cricket Positioning System developed by the MIT Artificial Intelligence Lab, specifically aimed at indoor environment applications [10,11], consists of beacons permanently placed in the buildings, nodes equipped on the target and a central server. The ID of every beacon is unique in order to identify its position coordinates and to enable the broadcasting of an RF signal with its self-location information. The objective nodes launch an ultrasonic signal in response to the RF signal. After obtaining the response from beacons, the distances between the beacons are able to be calculated by the TDOA method. The position information can be figured out based on the relevant localization algorithm.

The SUPPER-ID(S-ID) system [12] uses infrared distance measurements to assist ultrasonic distance measurements and to realize position estimation by means of the trilateral positioning principle. The system can solve the deficiencies caused by employing ultrasonic wave or infrared distance measurement independently and extends the coverage range of a single node so as to improve localization accuracy.

All of the above systems are typical three-dimensional positioning systems. Though they can guarantee the proper accuracy of three-dimensional localization, each of them has weaknesses, such as relatively small coverage area, high cost and the tedious manual deployment of large quantities of beacons, which result in the unavailability of self-localization for nodes. However, the distance measurement manners and the hardware system design for measurements are still significant to the three-dimensional positioning research based on WSNs.

In addition, in terms of the two-dimensional WSN positioning algorithm research, many methods have been proposed, which can be classified into two main clusters [13]: range-based and range-free localization algorithms. The former estimates the node’s location by measuring the distance and angle information between each node. The latter realizes localization depending on the network connectivity. The range-based algorithm mainly depends on the following measurements: received signal strength (RSS) [14], angle of arrival (AOA) [15], time of arrival (TOA) [16] and time difference of arrival (TDOA) [17]. The range-free technique algorithm mainly includes: a centroid localization algorithm [18], convex programming [19], DV-hop [20], DV-distance [21], MDS-MAP [22], APIT [23], and so on.

The investigation of WSN positioning techniques in the two-dimensional space is relatively mature. However, the majority of the algorithms cannot fit the localization well in 3D settings. At present, the localization study with respect to 3D scene primarily modifies the classical two-dimensional ones to extend to one more dimension. The main idea is to use spatial geometrical relations, such as spherical coordinates, hyperboloid coordinates, sphere segmentation and cube segmentation, to divide the scene into possible spaces where unknown nodes stay. The centroid of the possible space is the estimation of the unknown node’s coordinates. Quadrilateration and maximum likelihood estimation can also be utilized to determine the coordinates of unknown nodes directly. The literature [24] proposes a 3D self-localization method based on WSNs, named the APIT-3D algorithm, which improves the APIT algorithm to adapt to 3D space. The work in [25] presents a distributed three-dimensional centroid localization algorithm on the basis of the centroid algorithm. The work in [26] advances three two-dimensional positioning algorithms, respectively, and comes up with the 3D-Dv-hop, 3D-centroid and 3D-Dv-distance algorithms [27]. The literature [28] proposes the 3D-MDS algorithm after extending the MDS-MAP to 3D and presents the self-localization method for nodes of WSNs, solving the self-localization problem of the unattended nodes located at unknown places [29].

In 3D Wi-Fi-based localization, most Wi-Fi-based solutions require a process of site survey, where Wi-Fi signatures of an area of interest are annotated with their real recorded locations [30]. The Wi-Fi signatures, i.e., the fingerprint, commonly consist of RSSIs from different APs (access points) in a database after a site survey. When the current fingerprint matches one in the database, the location of interest can be located by the position information corresponding to the fingerprint [31]. Generally the Wi-Fi-based localization accuracy is only able to reach the meter level, mainly because RSSI is highly vulnerable to the effects of furniture and other objects in a room. The density of APs is also sparse, which does not ensure high granularity of localization information [32]. However, in 3D WSN localization, the distance between two nodes can be measured by TOA or TDOA, instead of RSSI, and the density of nodes can be customized according to the application requirements. Therefore, WSN-based localization can reach centimeter level precision in indoor settings. In addition, a site survey, which is necessary for Wi-Fi-based localization, is time and labor intensive. In short, WSNs have much higher localization accuracy than Wi-Fi in indoor circumstances.

3. System Overview

All of the following research is studied in indoor environments. Compared to outdoor WSNs, indoor WSNs are more likely to be customized for the particular application, like locating or tracking an object of interest. In indoor scenes, the primary consideration is how to deploy sensor nodes in 3D space to guarantee accurate communication among nodes. Inspired by ancient navigation, we stick the sensor nodes to the ceiling of the room, like the Sun or constellations, which ancient sailors observed using a sextant or some other instruments to locate the boat. The deployment is shown in Figure 1, where the nodes on the ceiling are beacons, and the node equipped with the moving target is the listener. Furthermore, the Cricket system is employed in a real experiment, and the deployment strategy is capable of ensuring ultrasonic transmission between any two nodes that are in the line of sight (LOS) to the greatest extent.

Figure 1.

The deployment of the system.

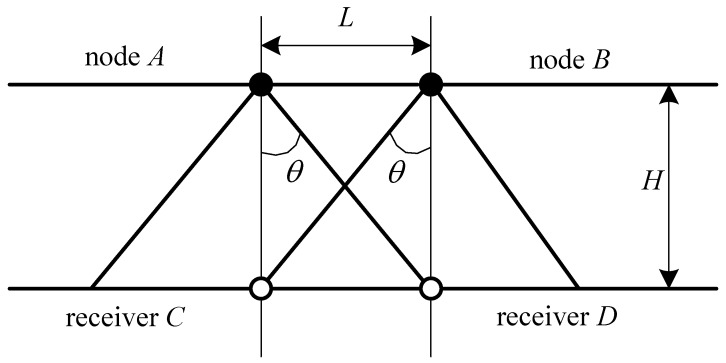

The layout of beacons on the ceiling should be discussed in terms of the number and locations of beacons. Since the transmitting angle of an ultrasonic sensor equipped in the node has an effective transmitting range, the layout of beacons should guarantee that the joint transmitting range is able to cover the monitoring field as much as possible. In the real experiment, we deploy five beacons on the ceiling, which are shown in Figure 1. According to the empirical measurement, the effective transmitting range of an ultrasonic sensor is a cone with about a 60-degree cone angle. Assuming half of the cone angle is θ, is approximate to for simplifying the calculation. The side view of the node layout is shown in Figure 2, where , and cm and cm in our experiment.

Figure 2.

The side view of the node layout.

For a bigger ultrasonic coverage range, the distance between any two of five beacons should be less than L. Through practical measurement, the 3D coordinates of the five beacons are , , , and , respectively, and the maximum distance between nodes is 113 cm, which fits the layout requirement.

Based on the experimental platform shown in Figure 1, we evaluate our methods mentioned in the following sections to locate and track the unknown target. After adequate simulation and experiments, we are able to effectively shrink the localization error into centimeter-level precision, and the accuracy can already satisfy most indoor localization-oriented applications.

4. The Self-Localization of Beacons

Many self-localization methods have been proposed to determine nodes’ coordinates by varying researchers. The majority of existing works is designed for specific application backgrounds; thus, we present here a self-localization scheme suitable for an indoor Cricket-like system, which deploys all nodes, including beacons, onto the ceiling. As described in the previous section, the beacons and the target are not in the same plane. Based on the layout of beacons, it is known that all of the beacons should be in the same plane, which means their z-axes are also identical, meanwhile the ground on which targets move should be parallel to the ceiling on which the nodes are mounted. Under these assumptions, the goal of the self-localization is to determine the accurate locations of the beacons.

Aimed at the research background, we apply the Cayley–Menger determinant to calculate the coordinates of beacons. The Cayley–Menger determinant is always used in distance geometry for determining the volume of a triangular pyramid based on the distances between any two of four vertices. For instance, there is a triangular pyramid with four vertices , , and , and the relation between the volume of the triangular pyramid and its Cayley–Menger determinant can be formulated as , where V is the volume of the triangular pyramid, and indicates the Cayley–Menger determinant, the expression of which is denoted as:

| (1) |

where is the square of the Euclidean distance between and ; while is the short form of . Thus, the linear relation between volume and edge lengths, or rather coordinates of vertices, of the triangular pyramid is built by the Cayley–Menger determinant.

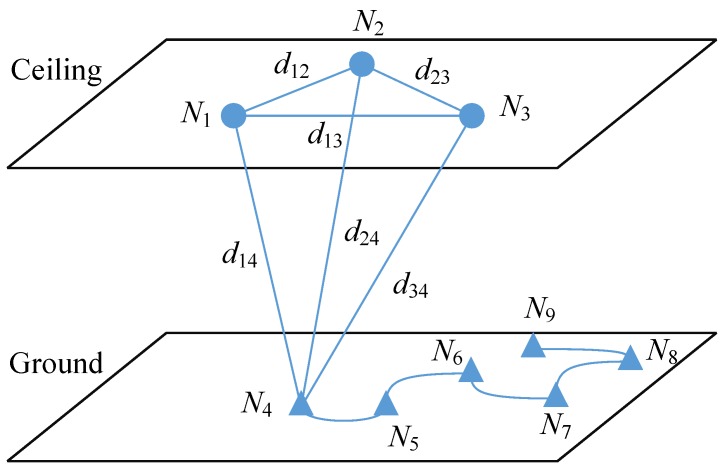

The illustration of the 3D self-localization system is shown in Figure 3, where the circle points refer to beacons and the triangle points refer to the locations through which the target moves. Using ultrasound measurements, the set of distances can be acquired as targets move via the trajectory from to . Due to the assumption that the ceiling is parallel to the ground, the volumes of the triangular pyramids, one of which consists of three beacons, and one target measurement location are identical. According to Equation (1), we can obtain the following result:

| (2) |

where is the Euclidean distance between and .

Figure 3.

The illustration of the 3D self-localization system.

After expanding the determinant in Equation (2), an equation set can be expressed as:

| (3) |

where:

All of the elements of A and B are measurable by time of arrival (TOA), which has centimeter-level accuracy in indoor environments. The vector of X is composed of , and , which are unknown variables that need to be determined. Let and be the practical measurement matrices of A and B, which involve measurement errors. Then, the least squares estimator of X is as follows:

| (4) |

where is a five-dimensional vector in the example, which is indicated as . Thus:

| (5) |

We can estimate the coordinates of beacons by Equation (5) as:

| (6) |

When the number of beacons is over three, any three of them could determine a coordinate system, and all of the varying coordinate systems can be unified by corresponding transformation matrices, which can be easily obtained. Given the number of beacons is five, and are as follow:

| (7) |

Since measurement errors always have negative influences on estimated consequences, differential sensitivity analysis (DSA) is employed to eliminate the influence in this paper. DSA is a method to approximate the function’s variance by first order Taylor series for estimating the function deviation derived from arguments with noise.

The vector includes l variables, which are . Suppose that there is a set in which every element has noise and follows a normal distribution of mean and variance . Then, the covariance can be expressed as:

| (8) |

where denotes the covariance function and R is the Jacobian matrix of Z.

Based on DSA, Equation (3) can be converted to:

| (9) |

where H is the residual error vector. Additionally, the covariance matrix is able to be presented as:

| (10) |

where refers to the set of all of the measured distances and R refers to the Jacobian matrix of with respect to . To simplify this case, we assume that all of the measured distances have the same variance .

To minimize the sum of square of weighted errors:

where , we can acquire the weighted least squares (WLS) estimation as:

| (11) |

Note that the could be calculated by X with noise, thus X will be replaced by in the real calculation.

5. The Calibration of Beacons

5.1. Dead Reckoning

The previous section provided a feasible self-localization method that can position unknown beacons by measuring the distances between beacons and the target. Though the measurement error has been taken into account in the method, it is necessary to calibrate the position of the beacons. In the present paper, we utilized a moving robot with odometers to calibrate the locations of beacons as the robot is moving in the area covered by WSNs.

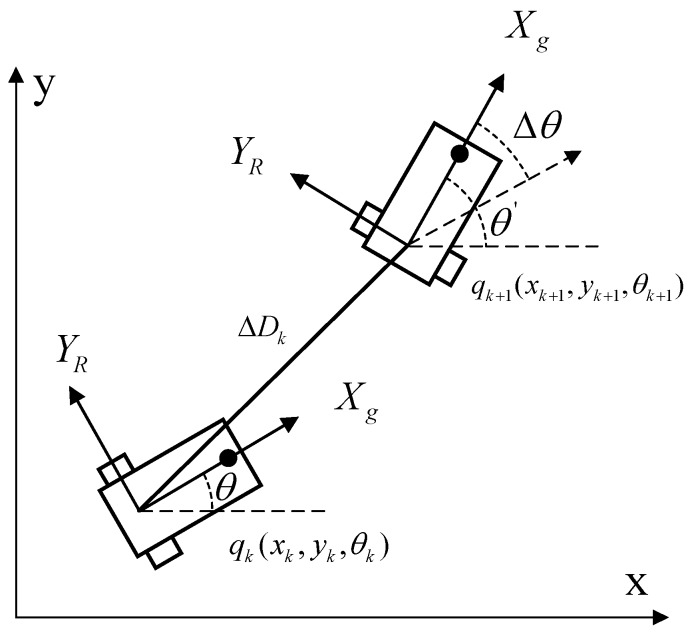

The moving robot UP-voyager II, which is used as the target in our experiment, is driven by the differential actuator mode. This means that there are only two motor-driven wheels with optical-electricity encoders on the robot, and the moving trajectory of the robot can be controlled by forwarding and reversing the wheels. To build the robot’s motion model, we assume that the robot is regarded as a mass point, and it merely moves on a 2D plane. The robot’s pose includes its position and orientation and can be expressed as the vector , where indicates the robot’s coordinate at time k, and indicates the robot’s orientation, i.e., the angle between the velocity direction and positive x-axis, at time k. Let the radii of the two wheels be and , respectively, and the spacing distance of them be a. The optical-electricity encoder has P slits/rad and outputs N impulses in unit interval . Thus, the rotation distances of the two wheels are presented respectively as:

| (12) |

Based on dead reckoning, the illustration of the robot moving from the present state to the next state is shown in Figure 4, where refers to the robot’s pose at the next time step , refers to the moving distance during unit time and refers to the variation of the robot’s orientation. Let be input information at time step k; then, the motion model of the robot can be expressed as

| (13) |

where:

and is the noise of the encoder. Due to the assumption that the robot simply moves on a 2D plane, the Jacobian matrix of with respect to is shown as:

| (14) |

Figure 4.

The motion model of the moving robot.

If the sampling interval is small enough, the robot’s motion model, shown as Equation (13), could match the real trajectory extremely well.

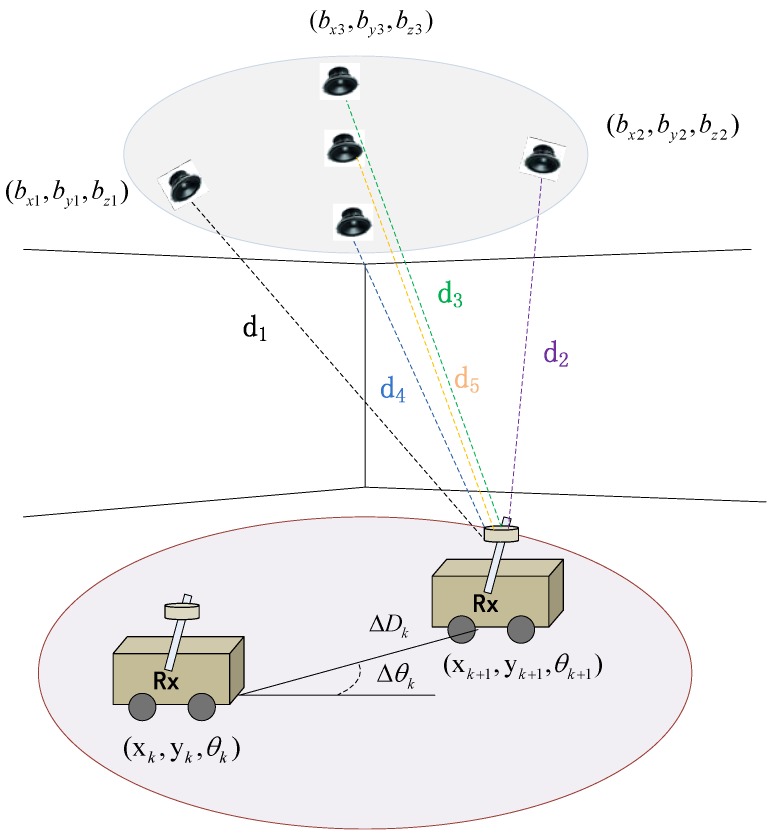

The Cricket System

We suppose that the coordinates of beacon i are , and the state of the robot is ; thus, the distance between them at time step is:

where is the measurement noise being subject to a normal distribution with mean zero.

How the Cricket system works is briefly illustrated in Figure 5. For calibrating beacons, the state should be extended to , and can be derived from based on Equation (13), which is shown as:

| (15) |

Figure 5.

The Cricket system.

To simplify the calculation, Equation (15) can be linearized to be:

| (16) |

where A and C are the Jacobian matrices of state matrix and measurement matrix, respectively, and follow a normal distribution with the same mean zero and different variances, Q and R, respectively.

5.2. Location Optimization Based on Filter Methods

The traditional filter methods, like the Kalman filter, require accurate statistical features of a system’s model and noise to be known, so does the extended Kalman filter (EKF). The EKF, the nonlinear version of the Kalman filter, linearizes nonlinear functions by first order Taylor series to approximate the nonlinear system [33]. However, the noise of the model and measurement cannot be avoided in a practical experiment. The filter is the filter method proposed for the system with an uncertain model and noise distribution, and it is verified to have robust performance in such a case. The filter is also called the minimax filter, which can minimize the maximum estimation error; thus, it is allowed to estimate a state with unknown or hardly determinate noise features [34]. The filter just assumes that the noise is the energy-limited signal, which accords more perfectly with the practical application situation. The literature [35] concludes that compared to the Kalman filter, the filter is less sensitive to the change of variances.

The state and measurement model are presented as Equation (16). Let be the system state estimator; the goal of the filter is to satisfy the following condition:

| (17) |

where J is the assessment function for the filter performance. Considering the worst situation of and , i.e., the worst influence on the estimator from the noise, the function of J can be defined as:

| (18) |

where , and are weighted matrices.

To obtain the optimal estimator , J should meet the condition of , and γ is the scalar designated by users, which can be regarded as the presupposed noise attenuation.

The recursion process of the filter is as follows:

| (19) |

where is the optimal estimator based on the odometer data at the last time step, is the gain factor of the filter and is the parameter of the filter. According to Equation (19), it is easy to find that the filter is similar to the Kalman filter to some extent. When , the filter is an extremely close approximation to the Kalman filter, with the minimum variance for the estimator; when , the filter has the most robustness. Therefore, appropriately choosing the value of γ facilitates a tradeoff between the robustness of the filter system and estimation variance.

Besides the filter, we utilize another filter named the strong tracking filter (STF) to refine estimators, because it is the improved version of the extended Kalman filter to solve the filter problem of a nonlinear system. Compared to the Kalman filter, STF is one of the improved Kalman filter methods: it orthogonalizes the residual error series at every step to extract the useful information from the residual error to estimate the current state [36]. Thus, STF has more robust performance against the mismatch of the model’s parameters than the Kalman filter.

To summarize, the key point of STF is to select an appropriate time-variant gain matrix to make the following equation true.

| (20) |

where is the residual error vector of the measurement matrix, and the residual error sequences in varying time steps should maintain an orthogonality relationship, which is formulated in Equation (20).

When the state model exactly matches the real situation, the residual error of the output of the Kalman filter is a series of non-autocorrelation white noise, which satisfies Equation (20). However, under the influence of the model’s uncertainty, the disturbances on the mean and amplitude of the outputs of the residual error sequences are inevitable; the gain matrix needs to be modified to make Equation (20) still true. Forcing STF to maintain tracking of the real system state is the attribute of STF.

We evaluate the and STF algorithms via simulation, and the details are shown in the following section. For simplicity, we use HF to stand for the filter from now on.

6. The Localization of Targets

The last two sections provided the corresponding methods to locate and calibrate the beacons’ positions. To realize the localization of targets, we propose to respectively apply the Gauss–Newton iterative method and the Cayley–Menger determinant. As only three beacons receive the returned ultrasonic signal, an equation set of three equations can be established by signal measurements, but three equations are not enough to limit any non-linear part. Thus, the Gauss–Newton iterative method and the Cayley–Menger determinant are mainly used to dispel the non-linear part for estimating the solution of equations, i.e., the location of the target. The two methods will be evaluated via the experiment in the following section.

This section principally gives the optimal node selection scheme based on geometric dilution precision (GDOP). In GPS, the localization accuracy could be influenced by the deployment of beacons and the target, and the same phenomenon also happens in the localization of WSNs [37]. Since GDOP can reflect the scaling degree of measurement error, the optimal nodes are the ones that have the minimum GDOP. Based on the definition, GDOP is the amplification coefficient from measurement error to localization error, which is as:

| (21) |

where is the vector of measurement error and is the vector of localization error. We suppose that the variances of all of the measurement distances of beacons are identical, , then the covariance of will be obtained:

| (22) |

where J is the Jacobian matrix of . Let G be:

| (23) |

The GDOP in the localization system can be derived by Equations (22) and (23) as:

| (24) |

Generally, the number of beacons is greater than three, so a method of picking the combination of three beacons that minimizes GDOP needs to be discussed. Intuitively, the number of combinations is , as there are N beacons deployed. The strategy is to find the combination that has the minimum GDOP in all. Based on the strategy, we calculate the GDOP of all of the beacons, which is shown as follows.

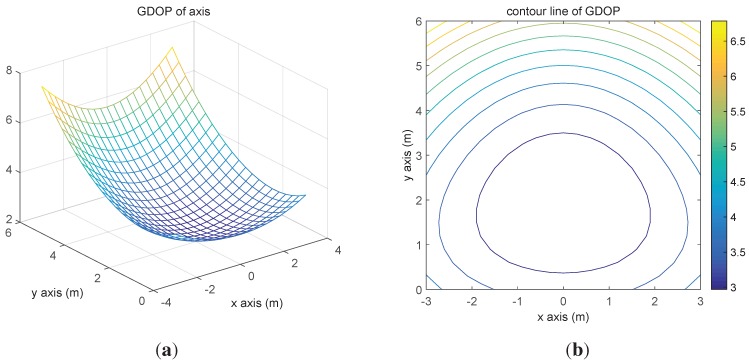

The GDOPs of every node are plotted in Figure 6a, and the corresponding contour line is shown in Figure 6b. According to the two figures, it can be concluded that picking beacons in the middle of the deployment could provide a more accurate location of the target than others.

Figure 6.

Geometric dilution precision (GDOP) distribution. (a) GDOP of the axis; (b) contour line of GDOP.

In practice, we can also apply the measurement information from other beacons besides the three picked ones, which means more information will be merged into the three picked ones. Thus, the GDOP-based weighting estimation method (GWEM) is proposed in the paper to realize information fusion. For simplicity and clearness, the method will be introduced by steps.

Assume that there are N beacons in the experiment, which can compose M combinations where (), and all of the combinations’ index set can be expressed as

To every combination, employ the Cayley–Menger determinant to estimate the location of the target and obtain the estimated consequence , then the corresponding can be derived.

- Weight the sum of the consequences of every combination as the following equation:

(25)

7. Experiment

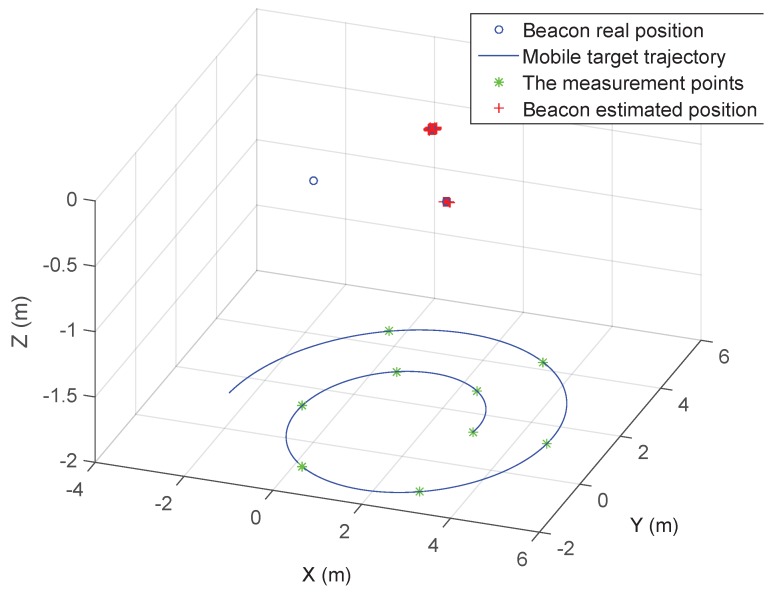

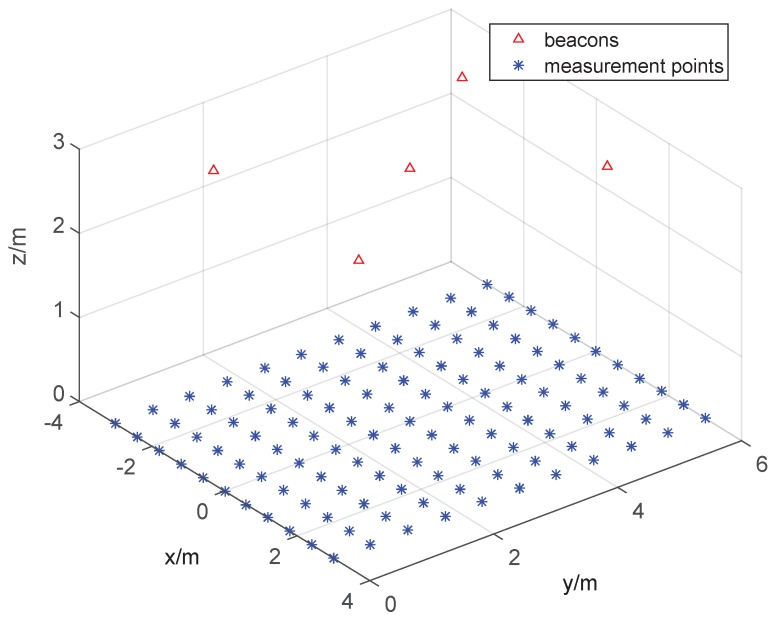

To evaluate the proposed algorithms, we design and conduct a series of simulations and experiments, and the setting of the experiment is described as the statement in the System Overview section. Firstly, we design the simulation to verify the algorithm for the self-localization of beacons, the configuration of which is illustrated in Figure 7. In the figure, we assume that there are three beacons represented by a blue circle on the ceiling, the coordinates of which are , and respectively.

Figure 7.

The simulation configuration.

In the meantime, the mobile target moves along the spiral trajectory, which can be represented as:

| (26) |

where m, m and . The spiral trajectory can guarantee that the measurement information is sufficient for algorithm processing, and the singular point could be avoided efficiently [38]. The measurement points, shown by green stars, are deployed along the trajectory every 60 degrees, and it is assumed that the measurement noise is also white noise with standard variance m. The red cross refers to the position estimated by the proposed self-localization algorithm.

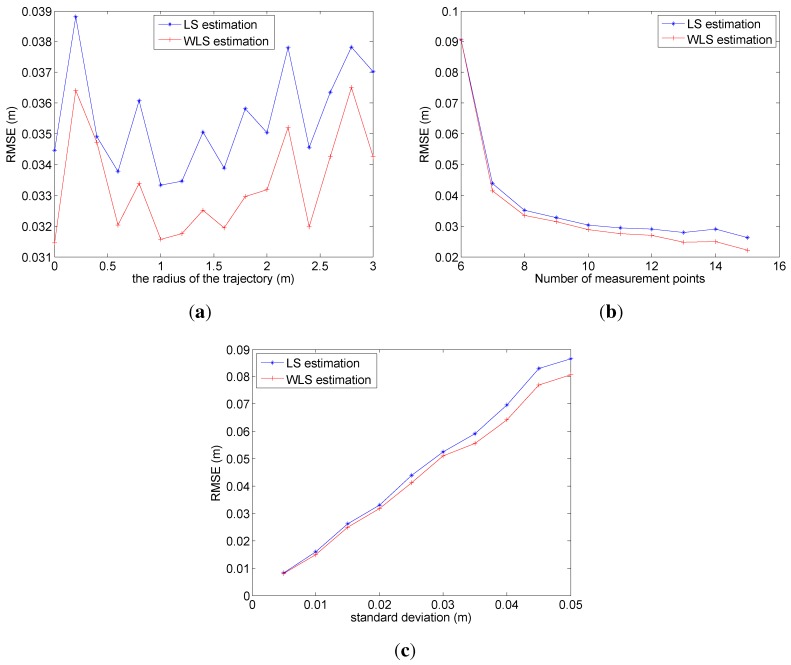

To further analyze the adaptiveness and robustness of the algorithm, the following simulation is conducted. For surveying the impact of the radius of the spiral trajectory on localization accuracy, the varying original radii are picked for the simulation, and the corresponding average RMSE of localization by 100 times of simulation is represented in Figure 8a. In Figure 8a, it is easy to find out that the optimal original radius is 1 m regardless of LS or WLS, and the WLS method is more robust than the LS with the variation of the radius.

Figure 8.

The illustration of impacts. (a) The impact of the radius of the spiral trajectory; (b) the impact of the number of measurement points; (c) the impact of environment noise.

In addition, the impact of the number of measurement points on localization accuracy is shown in Figure 8b. Obviously, following the increase of the number, WLS has a better performance than LS. When the number is over 12, the trend of RMSE by LS begins to flatten, while the one of WLS still descends, which demonstrates that WLS can apply redundant measurements more efficiently than LS.

Then, the impact of environment noise on localization accuracy is considered and shown in Figure 8c. In Figure 8c, the RMSE increases with an approximate linear growth as the standard deviation of environment noise rises, and the slope of the approximate linear relationship is about 1.4. Under the same conditions of simulation, WLS has higher localization accuracy than LS.

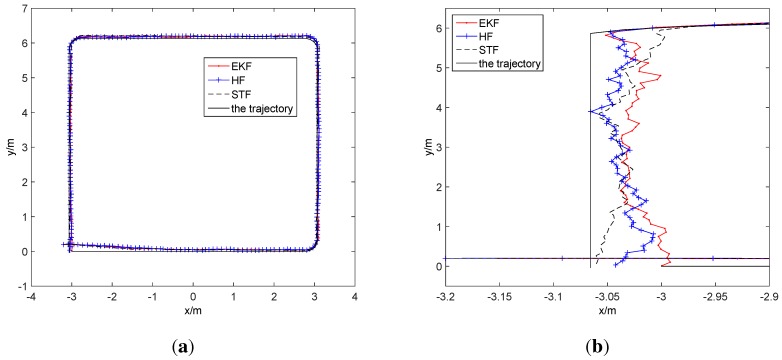

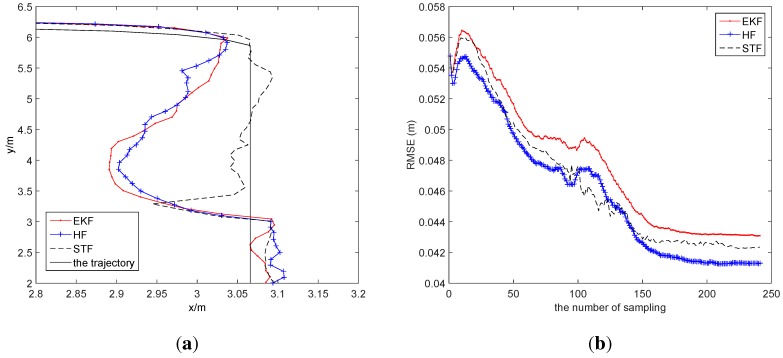

Secondly, we redeploy up to five beacons and redesign the trajectory of the mobile target in the simulation to evaluate the calibration effect by the proposed scheme. The five beacons are deployed at the coordinates of , , , and , respectively. Let the sampling interval be 1 s, the standard deviation of the noise of the distance measurement be 3 cm and the standard deviation of the noise of the odometer be 1 cm. The starting point of the target is at , and the target moves along a square with a 6-m side length. Under the above conditions, the EKF, HF and STF algorithms are applied to track the trajectory, and the result of tracking is shown in Figure 9.

Figure 9.

The tracking by filters. (a) The performance comparison; (b) the partial enlarged view.

In Figure 9, the three filters all provide highly accurate tracking results. For surveying the consequences subtly, the left part of Figure 9a is enlarged to Figure 9b, and in Figure 9b, it is shown that HF and STF have a better performance than EKF. The average localization error of EKF is m, m, (rad)], the one of HF is m, m, (rad)] and the one of STF is m, m, (rad)]. Following the number of sampling increases, the RMSEs of the three approaches obviously have a downward trend, which is shown in Figure 10.

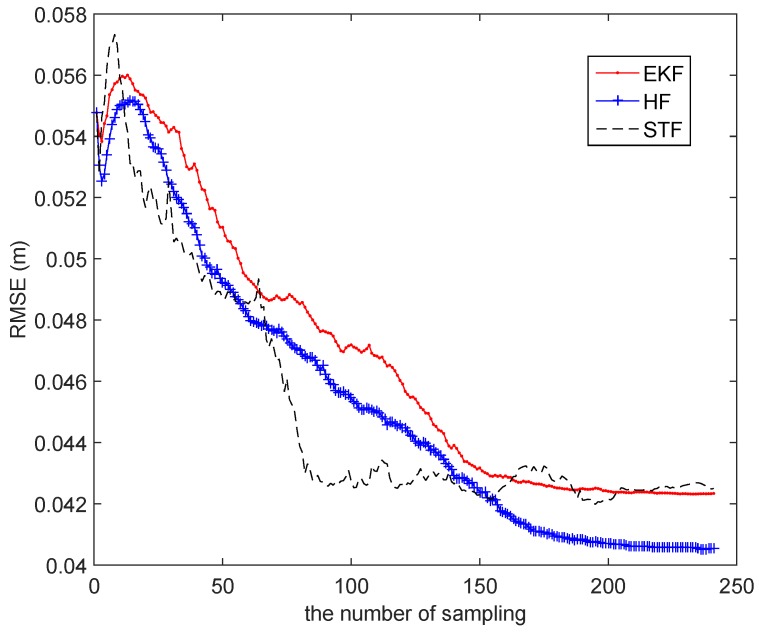

Figure 10.

The RMSE of EKF, the filter (HF) and the strong tracking filter (STF).

In Figure 10, it is presented that the RMSE of STF falls with the fastest speed, and it is the first one to reach a stable state in the three schemes. Thus, STF can satisfy tracking in the shortest possible time, while HF has the best overall effect compared to the two others and demonstrates the excellent adaptiveness of the models.

For investigating the robustness of the three schemes, we artificially add the disturbance, which is a orientation error, at the 90th sampling time step. Then, the average localization error of EKF is [0.0367 m, m, (rad)], the one of HF is m, 0.0739 m, 0.0570 (rad)] and the one of STF is [0.0300 m, 0.0702 m, 0.0369 (rad)]. In Figure 11a, STF clearly has a better tracking performance than EKF and HF. In Figure 11b, the curves of the RMSEs of EKF and HF make a sharp change at the 90th time step, while the curve of STF makes a consecutive change at the same time step, which demonstrates STF to be the most robust algorithm of the three methods.

Figure 11.

The impact of noise. (a) The tracking with noise; (b) the RMSE.

Lastly, we assess the localization accuracy of the localization system by simulation, the configuration of which is the same as described in the previous section. The only difference is that there are 121 measurement points deployed in a 6 cm × 6 cm square region. The diagram is shown in Figure 12.

Figure 12.

The configuration of the simulation.

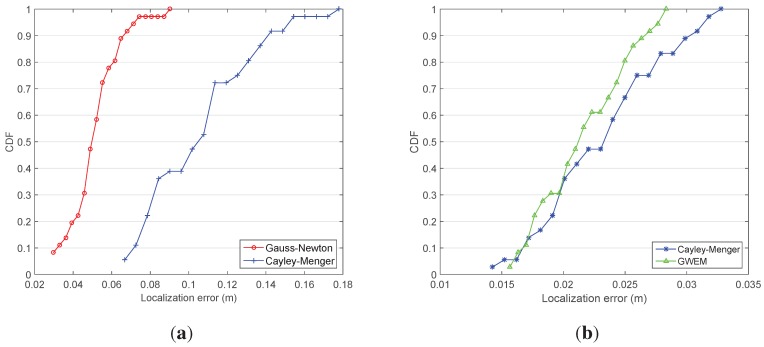

For evaluating the algorithm accuracy and calculation efficiency of the Gauss–Newton iterative method and the Cayley–Menger determinant, three randomly-picked beacons are involved in the simulation. The calculation duration of the Gauss–Newton iterative method is 420.1 s, equal to 0.58 s per iteration, and the calculation duration of the Cayley–Menger determinant is 0.68 s, equal to 0.9 ms per iteration. Undoubtedly, there is a vast difference of calculation efficiency between the two algorithms. In Figure 13a, we compare the CDF of the two algorithms, and it is illustrated that the Cayley–Menger determinant is of high localization efficiency, but low localization accuracy. The Gauss–Newton iterative method is the opposite.

Figure 13.

The comparison of CDF. (a) Gauss–Newton and Cayley–Menger; (b) Cayley–Menger and GWEM.

If the five beacons are all involved in the calculation, the proposed GWEM is capable of fusing the redundancy information into the existing methods. In Figure 13b, GWEM has a better localization performance than the Cayley–Menger method. The average loop time of the former is 10.8 s, while the average loop time of the latter is 10.2 s, which demonstrates that GWEM has the tantamount efficiency compared to the Cayley–Menger method.

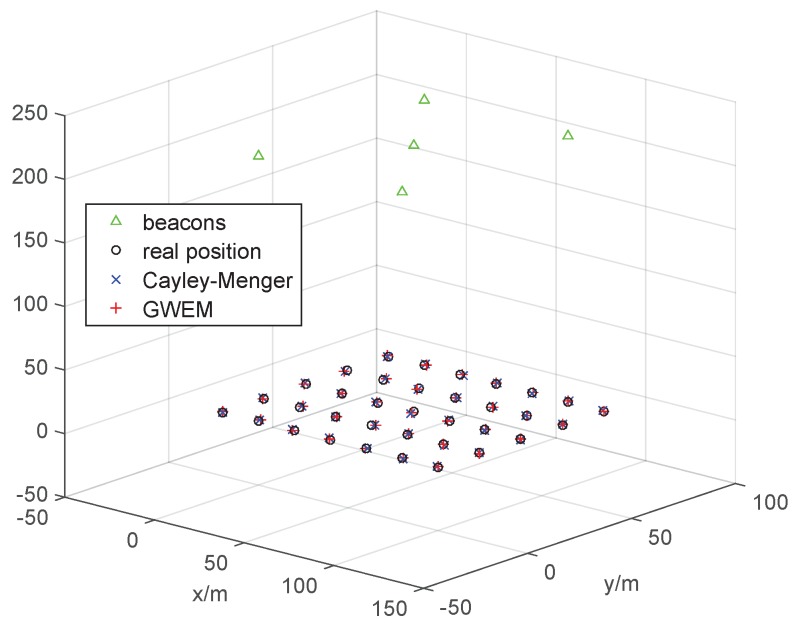

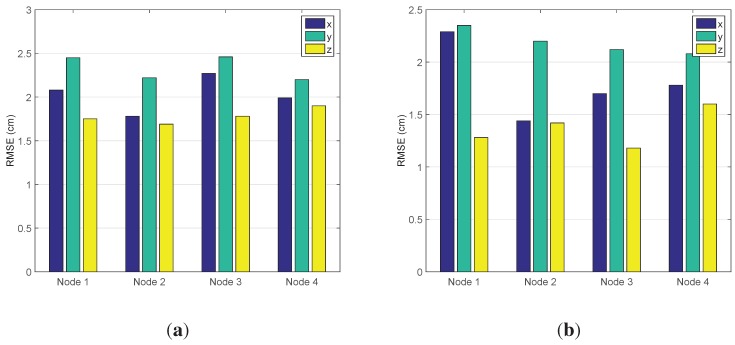

After simulation, we conduct the experiment to verify the proposed algorithms. In the experiment, the localization results are the average of the consequences of 20 trials by means of the Cayley–Menger method and GWEM, respectively, and the results are presented in Figure 14. For clarity, the histogram of the localization error of the two methods is shown in Figure 15. Comparing Figure 15a, and b, it is not difficult to find that GWEM has the lower average error. In the experiment, GWEM has been proven to be a fast and effective indoor localization method.

Figure 14.

The experiment results.

Figure 15.

The comparison of the average error. (a) The average error of Cayley–Menger; (b) the average error of GWEM.

In our 3D localization system, when a robot carrying a node enters the 3D WSN, it can help unknown beacons on the ceiling determine their own positions; meanwhile, the locations of beacons can be calibrated further based on the robot’s trajectory. After the above process, the initialization of the localization system is done, and when the target with a node enters the system again, the system will calculate and decide the trajectory of the target precisely. Therefore, the self-localization and calibration of beacons are the pre-processing task, and the computational cost almost reduces to zero because all of the beacons’ locations are known [39]. When tracking targets, all of the measurements will be delivered to the PC terminal via the sink node; then, the data are processed in MATLAB. The computational complexity is approximately equal to a particle filter in the simulation.

8. Conclusions

The paper provides a series of feasible schemes for localization in 3D settings based on WSNs, which solves three essential problems: the self-localization of beacons, the calibration of beacons after self-localization and positioning and tracking the mobile target in 3D settings by beacons. Aimed at the three problems, the contributions of the paper are as follows. Firstly, the weighted least squares estimation of localization is proposed for self-localization of beacons, which minimizes the influence from measurement errors by means of DSA. Secondly, for higher accuracy, we employ the calibration scheme for beacons with the aid of the mobile robot. Then, after comparing the EKF, HF and STF methods, we conclude that HF has the best adaptiveness to the uncertain state model, and STF has the best tracking performance to the system with great disturbance. Thirdly, analyzing the attributes of the Gauss–Newton iterative method and the Cayley–Menger determinant, we propose the optimal node selection scheme based on GDOP, which can select the group of beacons with the minimum GDOP from all of the beacons. Then, GWEM is presented for fusing more information from other beacons. Lastly, the simulation and experiment are used for evaluating the proposed methods, and the consequences show that the methods are feasible for localization in 3D settings and have high localization accuracy.

Acknowledgments

Supported by the National Natural Science Foundation of China (Nos. 61273078, 61471110), the China Postdoctoral Science Special Foundation (No. 2014T70263), the China Postdoctoral Science Foundation (No. 2012M511164), the Chinese Universities Scientific Foundation (N130404023, N110804004, N140404014), the Liaoning Doctoral Startup Foundation (No. 20121004) and the Foundation of Liaoning Educational Commission (L2014090).

Author Contributions

Zixi Jia, Chengdong Wu and Zhao Li proposed the idea of the paper. Yunzhou Zhang and Zhao Li designed and performed the experiments. Zixi Jia and Yunzhou Zhang analyzed the data. Bo Guan contributed analysis tools. Zixi Jia wrote the paper.

Conflicts of Interest

The authors declare no conflict of interest.

References

- 1.Sichitiu M.L., Ramadurai V. Localization of Wireless Sensor Networks with a Mobile Beacon; Proceedings of the 2004 IEEE International Conference on Mobile Ad-Hoc and Sensor Systems; Fort Lauderdale, FL, USA. 24–27 October 2004; pp. 174–183. [Google Scholar]

- 2.He T., Huang C., Blum B.M., Stankovic J.A., Abdelzaher T. Range-Free Localization Schemes for Large Scale Sensor Networks; Proceedings of the 9th Annual International Conference on Mobile Computing and Networking; San Diego, CA, USA. 14–19 September 2003; pp. 81–95. [Google Scholar]

- 3.Huang C.-F., Tseng Y.-C. The Coverage Problem in a Wireless Sensor Network. Mob. Netw. Appl. 2005;10:519–528. doi: 10.1007/s11036-005-1564-y. [DOI] [Google Scholar]

- 4.Teymorian A.Y., Cheng W., Ma L., Cheng X., Lu X., Lu Z. 3D Underwater Sensor Network Localization. IEEE Trans. Mob. Comput. 2009;8:1610–1621. doi: 10.1109/TMC.2009.80. [DOI] [Google Scholar]

- 5.Franceschini F., Galetto M., Maisano D., Mastrogiacomo L. A Review of Localization Algorithms for Distributed Wireless Sensor Networks in Manufacturing. Int. J. Comput. Integr. Manuf. 2009;22:698–716. doi: 10.1080/09511920601182217. [DOI] [Google Scholar]

- 6.Pottie G.J., Kaiser W.J. Wireless integrated network sensors. Commun. ACM. 2000;43:51–58. doi: 10.1145/332833.332838. [DOI] [Google Scholar]

- 7.Romer K., Mattern F. The design space of wireless sensor networks. IEEE Wirel. Commun. 2004;11:54–61. doi: 10.1109/MWC.2004.1368897. [DOI] [Google Scholar]

- 8.Hightower J., Want R., Borriello G. SpotON: An Indoor 3D Localization Sensing Technology Based on RF Signal Strength. University of Washington; Seattle, WA, USA: 2000. pp. 1–5. [Google Scholar]

- 9.Harter A., Hopper A. A distributed location system for the active office. IEEE Netw. 1994;8:62–70. doi: 10.1109/65.260080. [DOI] [Google Scholar]

- 10.Priyantha N.B., Chakraborty A., Balakrishnan H. The Cricket Location-Support System; Proceedings of the 6th Annual International Conference on Mobile Computing and Networking; Boston, MA, USA. 6–11 August 2000; pp. 32–43. [Google Scholar]

- 11.Guan Y., An S., Liu G. Cricket System Providing Exact Indoor Location Information. J. Chongqing Polytech. Coll. 2004;19:39–41. [Google Scholar]

- 12.Dou H. Research and Realization on Positioning System Based on Ultrasonic Wave. Data Commun. 2008:49–52. [Google Scholar]

- 13.Wang F., Shi L., Ren F. Self-Localization Systems and Algorithms for Wireless Sensor Networks. J. Softw. 2005;16:220–231. doi: 10.1360/jos160857. [DOI] [Google Scholar]

- 14.Capriglione D., Ferrigno L., D’Orazio E., Paciello V., Pietrosanto A. Reliability analysis of RSSI for localization in small scale WSNs; Proceedings of the 2012 IEEE I2MTC International Instrumentation and Measurement Technology Conference; Graz, Austria. 13–16 May 2012; pp. 935–940. [Google Scholar]

- 15.Lee Y.S., Park J.W., Barolli L. A localization algorithm based on AOA for ad-hoc sensor networks. Mob. Inf. Syst. 2012;8:61–72. doi: 10.1155/2012/986327. [DOI] [Google Scholar]

- 16.Caffery J.J., Jr. A new approach to the geometry of TOA location; Proceedings of the 52nd Vehicular Technology Conference, IEEE-VTS Fall VTC 2000; Boston, MA, USA. 24–28 September 2000; pp. 1943–1949. [Google Scholar]

- 17.Eickhoff R., Ellinger F., Mosshammer R., Weigel R., Ziroff A., Huemer M. 3D-accuracy improvements for TDOA based wireless local positioning systems; Proceedings of the 2008 IEEE GLOBECOM Workshops; New Orleans, LO, USA. 30 November–4 December 2008; pp. 1–6. [Google Scholar]

- 18.He Y. Research on Centroid Localization Algorithm for Wireless Sensor Networks based RSSI. Comput. Simul. 2011;28:163–166. [Google Scholar]

- 19.Ma J., Wang J.D., Wen J.W., Xiao J.F. A New Positioning Algorithm Combining RSSI and Convex in Wireless Sensor Network. Command Control Simul. 2013;35:56–61. [Google Scholar]

- 20.Zhang J., Wu Y.H., Shi F., Geng F. Localization algorithm based on DV-HOP for wireless sensor networks. J. Comput. Appl. 2010;30:323–326. doi: 10.3724/SP.J.1087.2010.00323. [DOI] [Google Scholar]

- 21.Wang D., Jia H., Chen F., Wen F., Liu X. An improved DV-Distance localization algorithm for wireless sensor networks; Proceedings of the 2nd IEEE International Conference on Advanced Computer Control (ICACC); Shenyang, China. 27–29 March 2010; pp. 472–476. [Google Scholar]

- 22.Ellis C., Hazas M. A comparison of MDS-MAP and non-linear regression; Proceedings of the 2010 International Conference on Indoor Positioning and Indoor Navigation (IPIN); Zurich, Switzerland. 15–17 September 2010; pp. 1–6. [Google Scholar]

- 23.Wang J.Z., Jin H. Improvement on APIT localization algorithms for wireless sensor networks; Proceedings of the International Conference on Networks Security, Wireless Communications and Trusted Computing; Wuhan, China. 25–26 April 2009; pp. 719–723. [Google Scholar]

- 24.Xiang W.H., Jia C., Wang H.K., Sun G.F. Three-Dimensional Grid of APIT Algorithm in Wireless Sensor Network. Chin. J. Sens. Actuators. 2012;25:639–643. (In Chinese) [Google Scholar]

- 25.Lai X.Z., Wang J.X., Zeng G.X., Wu M., She J.H., Yang S.X. Distributed Positioning Algorithm Based on Centroid of Three-dimension Graph for Wireless Sensor Networks. J. Syst. Simul. 2008;20:4104–4111. [Google Scholar]

- 26.Wang D. Node Self-Localization Algorithms for 3D Wireless Sensor Networks. Southwest Jiaotong University; Cheng Du, China: 2007. [Google Scholar]

- 27.Dou N., Bo G., Wei Z., Yu D.Q. 3D localization method based on MDS-RSSI in wireless sensor network; Proceedings of the 2010 IEEE International Conference on Intelligent Computing and Intelligent Systems (ICIS); Xiamen, China. 29–31 October 2010; pp. 714–717. [Google Scholar]

- 28.Moses R.L., Krishnamurthy D., Patterson R.M. A self-localization method for wireless sensor networks. EURASIP J. Appl. Signal Process. 2003;4:348–358. doi: 10.1155/S1110865703212063. [DOI] [Google Scholar]

- 29.Guevara J., Jiménez A.R., Prieto J.C., Seco F. Auto-localization algorithm for local positioning systems. Ad Hoc Netw. 2012;10:1090–1100. doi: 10.1016/j.adhoc.2012.02.003. [DOI] [Google Scholar]

- 30.Yang Z., Wu C., Liu Y. Locating in fingerprint space: Wireless indoor localization with little human intervention; Proceedings of the 18th Annual International Conference on Mobile Computing and Networking; Istanbul, Turkey. 22–26 August 2012; pp. 269–280. [Google Scholar]

- 31.Ladd A.M., Bekris K.E., Rudys A.P., Wallach D.S., Kavraki L.E. On the feasibility of using wireless ethernet for indoor localization. IEEE Trans. Robot. Autom. 2004;20:555–559. doi: 10.1109/TRA.2004.824948. [DOI] [Google Scholar]

- 32.Chen Z., Zou H., Jiang H., Zhu Q., Soh Y.C., Xie L. Fusion of Wi-Fi, smartphone sensors and landmarks using the Kalman filter for indoor localization. Sensors. 2015;15:715–732. doi: 10.3390/s150100715. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 33.Julier S.J., Uhlmann J.K. Unscented filtering and nonlinear estimation. IEEE Proc. 2004;92:401–422. doi: 10.1109/JPROC.2003.823141. [DOI] [Google Scholar]

- 34.Shen X., Deng L. Game theory approach to discrete H∞ filter design. IEEE Trans. Signal Process. 1997;45:1092–1095. doi: 10.1109/78.564201. [DOI] [Google Scholar]

- 35.Shaked U., Theodor Y. H∞-optimal estimation: A tutorial; Proceedings of the 31st IEEE Conference on Decision and Control; Westin LA Paloma Tucson, AZ, USA. 16–18 December 1992; pp. 2278–2286. [Google Scholar]

- 36.Fan X.J., Liu F., Qin Y., Zhang J. Current statistic model and adaptive tracking algorithm based on strong tracking filter. Acta Electron. Sin. 2006;34:981. [Google Scholar]

- 37.Sharp I., Yu K., Guo Y.J. GDOP analysis for positioning system design. IEEE Trans. Veh. Technol. 2009;58:3371–3382. doi: 10.1109/TVT.2009.2017270. [DOI] [Google Scholar]

- 38.Xu H., Tu Y.Q., Xiao W., Mao Y.W. An archimedes curve-based mobile anchor node localization algorithm in wireless sensor networks; Proceedings of the 2010 8th World Congress on Intelligent Control and Automation (WCICA); Jinan, China. 7–9 July 2010; pp. 6993–6997. [Google Scholar]

- 39.Ruiz D., Ureña J., Gude I., Villdangos J.M. Hyperbolic ultrasonic LPS using a Cayley–Menger bideterminant-based algorithm; Proceedings of the Instrumentation and Measurement Technology Conference (I2MTC’09); Singapore. 5–7 May 2009; pp. 785–790. [Google Scholar]