Abstract

Emotion-related areas of the brain, such as the medial frontal cortices, amygdala, and striatum, are activated during listening to sad or happy music as well as during listening to pleasurable music. Indeed, in music, like in other arts, sad and happy emotions might co-exist and be distinct from emotions of pleasure or enjoyment. Here we aimed at discerning the neural correlates of sadness or happiness in music as opposed to those related to musical enjoyment. We further investigated whether musical expertise modulates the neural activity during affective listening of music. To these aims, 13 musicians and 16 non-musicians brought to the lab their most liked and disliked musical pieces with a happy and sad connotation. Based on a listening test, we selected the most representative 18 sec excerpts of the emotions of interest for each individual participant. Functional magnetic resonance imaging (fMRI) recordings were obtained while subjects listened to and rated the excerpts. The cortico-thalamo-striatal reward circuit and motor areas were more active during liked than disliked music, whereas only the auditory cortex and the right amygdala were more active for disliked over liked music. These results discern the brain structures responsible for the perception of sad and happy emotions in music from those related to musical enjoyment. We also obtained novel evidence for functional differences in the limbic system associated with musical expertise, by showing enhanced liking-related activity in fronto-insular and cingulate areas in musicians.

Keywords: music, emotion perception, aesthetics, liking, fMRI, salience network, limbic system

Introduction

Music can convey emotions in a relatively systematic manner within a given music (sub)culture. Adult listeners as well as school-age children are able to perceive and recognize basic emotions expressed by music, particularly happiness and sadness (Krumhansl, 1997; Peretz et al., 1998; Juslin and Laukka, 2004; Baumgartner et al., 2006; Koelsch, 2010; Nieminen et al., 2012). Complex emotions such as love, pride, and jealousy are instead less reproducible by music (Juslin and Laukka, 2004). Basic emotions are characterized by their adaptive or utilitarian function for the behavioral adjustment of individuals to events (Ekman, 1999). Consequently, the basic emotions induced by music do not coincide with the basic emotions triggered by prototypical life events (loss, threat, etc.; Ekman, 1999). It has been suggested that basic emotions experienced in a musical context are weaker than the same emotions occurring in real life and lack the action tendencies typical of basic emotions as well as the “universal” physiological reactions reproduced in individuals of any age and from different cultural background (Scherer, 2004; Zentner et al., 2008). Recent neuroimaging studies aimed at finding the neural correlates of basic emotions in music highlighted the role of the auditory cortex in the superior temporal gyrus, the cingulate cortex, the precuneus, and structures belonging to the reward circuit and limbic system, such as the ventral striatum and the insula, for the perception and induction of happy, joyful music (when compared with neutral or sad music; Mitterschiffthaler et al., 2007; Brattico et al., 2011; Park et al., 2013). 
Music perceived as sad (compared to neutral or happy music) has also been associated with neural activity in the superior temporal gyrus, the cingulate cortex, the hippocampus/amygdala, and with paralimbic and reward structures such as the ventromedial prefrontal cortex, caudate, and thalamus (Khalfa et al., 2005; Mitterschiffthaler et al., 2007; Brattico et al., 2011). In sum, studies searching for neural correlates of sadness and happiness in music consistently highlighted the role of medial frontal cortices, amygdala, and striatum in generating these emotions.

Along with basic emotions, music induces a separate set of emotions (sometimes termed aesthetic emotions) that are accompanied by evaluative judgments based on formal properties, such as beauty or performance mastering (Scherer, 2004; Silvia, 2005; Brattico and Pearce, 2013; Brattico et al., 2013). Aesthetic emotions in music are typically positive and, following Konecni's (2008) proposal, may be classified as the emotion of “being moved,” the sensation of “thrill” or the sublime awe for a beautiful stimulus. Most scholars have, however, focused on the aesthetic emotion of enjoyment or pleasure derived from a musical activity, which, when conscious, leads to the evaluative judgment of liking a musical piece (Konecni, 2008; Brattico et al., 2013). Musical pleasure has been studied in several ways in the neuroimaging and psychophysiology literature: either by asking subjects to bring their favorite music that induces chills in them (shivers down the spine and goose bumps; Blood and Zatorre, 2001; Grewe et al., 2009), by contrasting classical, instrumental music clips with acoustically-balanced counterparts (Menon and Levitin, 2005; Koelsch et al., 2006) or by correlating behavioral measures of pleasantness (valence) and arousal with brain recordings (Mikutta et al., 2012, 2014; Altenmüller et al., 2014; Jäncke et al., 2015; Trost et al., 2015). These studies showed the involvement of ventromedial and orbitofrontal cortices, amygdala, insula, regions of the reward circuit (particularly, ventral tegmental area and nucleus accumbens), dorsomedial frontal motor areas, hippocampus, anterior cingulate cortex, auditory cortices, and temporal pole during felt musical pleasure or perceived positive valence in music (Blood et al., 1999; Blood and Zatorre, 2001; Brown et al., 2004; Menon and Levitin, 2005; Koelsch et al., 2006; Flores-Gutiérrez et al., 2007; Gosselin et al., 2007; Salimpoor et al., 2013; Trost et al., 2015; Alluri et al., in press). 
On the other hand, unpleasantness or negative valence from music listening activates the parahippocampal gyrus, amygdala and hippocampus, temporal pole, left anterior cingulate and post-central gyrus, and insula (Blood et al., 1999; Pallesen et al., 2005; Koelsch et al., 2006; Flores-Gutiérrez et al., 2007; Suzuki et al., 2008; Trost et al., 2015). From this brief overview, it is evident that several brain correlates of musical pleasure and displeasure overlap with those for sad and happy emotions in music (for a meta-analysis of brain structures associated with music emotions, cf. Koelsch, 2014).

Psychologically, the overlap or mixture of aesthetic enjoyment and discrete, even negative, emotions is exemplified by the “tragedy paradox,” a fascinating paradox in music and other arts (Schubert, 1996; Evans and Schubert, 2008). Behavioral studies have repeatedly shown that the perception of negative basic emotions in music does not correspond with the induction of negative emotions (Juslin and Laukka, 2004; for a review, see Sachs et al., 2015). For instance, a negatively valenced musical passage (such as Albinoni's Adagio) may be liked by listeners and hence may induce the positive aesthetic emotion of enjoyment. In other words, tears and joy might co-occur during music listening (Vuoskoski and Eerola, 2012; Vuoskoski et al., 2012; Garrido and Schubert, 2013; Taruffi and Koelsch, 2014). Recently, music psychologists observed that people with depression or specific personality traits, such as openness to experience and nostalgia-proneness, possess a greater tendency to prefer listening to sad music. Empathy (the capacity to experience emotions that match those of another person) and absorption (the ability to concentrate so much that awareness of the passage of time and of the external surroundings is lessened) have been found to be strongly predictive of liking sad music (Vuoskoski and Eerola, 2011). In relation to these findings, a dissociation theory of aesthetic enjoyment has been proposed, according to which listeners with a propensity for absorption are able to dissociate or de-activate displeasure feelings in an aesthetic context, allowing the enjoyment of the emotionally intense stimuli while disregarding their negative content (Garrido and Schubert, 2013). Other authors have argued for an even bolder explanation, namely that sad music directly induces pleasant emotions by virtue of the vicarious nature of the musical artistic stimulus (Kawakami et al., 2013, 2014). 
The reasons to listen to sad music identified in an online survey study (Van den Tol and Edwards, 2013) were the connection with the musical piece or its lyrics, the message communicated, and a high aesthetic value of the music. In sum, the confounding co-presence of emotions during music listening might be closely linked to the overlapping neural activations obtained in the neuroimaging studies described above.

Furthermore, while much is known about how long-term musical training shapes the auditory and somatomotor brain functions and related structures (Fauvel et al., 2014; Pantev et al., 2015; Reybrouck and Brattico, 2015; Schlaug, 2015), little is known concerning its role on emotional musical experience and on the associated brain mechanisms. Preliminary evidence on differences in limbic system functions associated with musical expertise was obtained by James et al. (2008): electric neural activity originating from right medial-temporal structures, including the insula, amygdala, and hippocampal complex, was registered selectively in musicians during listening to chord incongruities inserted in expressive music. Furthermore, an enhanced reactivity of the auditory cortex to unpleasant chords in musicians has been noticed in a neurophysiological study (Brattico et al., 2009). Another neurophysiological study (Mikutta et al., 2014) found enhanced slow mid-frontal theta band activity in professional classical musicians from Austria as opposed to amateur musicians (playing an instrument as hobby) during continuous listening to the first movement of the 5th Symphony by L. van Beethoven. This effect was positively correlated with ratings of pleasantness (valence) of the music, obtained in a session subsequent to the brain recordings (cf. also Mikutta et al., 2012). Musicians (like actors) are often exposed to emotional sounds and, in addition, are trained to express emotions through their playing (Brown et al., 2015). Also, their very reason for starting to play or for choosing music as a profession can often be traced back to their emotional connection to music (Sloboda, 1992). The success of a musician's interpretation and communication of the music (whether from classical, pop/rock or any other genre) relies on her capacity to convey and induce emotions in the listeners (Brown et al., 2015). 
In other sensory domains, the continuous exposure to a specific set of emotional stimuli alters the neural responses to them in the limbic and reward structures (Kirk et al., 2009). For instance, the bilateral orbitofrontal cortex and the subcallosal anterior cingulate were more active during aesthetic judgments of buildings in a group of architects as compared to controls, even in the absence of group differences in behavioral aesthetic ratings. Recent studies provided initial evidence that the activity and connectivity of the nucleus accumbens in the ventral striatum is enhanced in musicians as compared with non-musicians while listening to expressive or pleasurable (vs. inexpressive or non-pleasurable) music (Chapin et al., 2010; Alluri et al., in press). Based on these findings, it is plausible to hypothesize changes in limbic functions in musicians who have listened to and produced emotionally loaded musical sounds for several years.

Here we wished to disentangle the neural correlates of perception and induction of basic emotions and felt enjoyment (exemplified by liking or disliking) of the same musical material. We additionally examined the effects of musical expertise on this neural relationship. To this end, we asked subjects to bring four of their most liked and disliked musical pieces of happy or sad content to the laboratory. From those pieces, we extracted 18 sec samples and on the basis of a listening test where subjects rated the pieces along several affective scales, we selected the most representative samples for the fMRI session. This was complemented by a listening test, which served to obtain a fine affective classification of the music by subjects and by a detailed acoustic analysis of the music, which instead aimed at extracting the acoustic parameters that might co-vary with the behavioral and brain responses. Perceiving basic emotions in music was expected to involve dissociable limbic and paralimbic brain structures differentially associated with the control of happy and sad emotions, such as the amygdala (Gosselin et al., 2007), the anterior cingulate cortex and insula (Damasio et al., 2000; Lindquist et al., 2012), and the orbitofrontal cortex (Kringelbach, 2005). Furthermore, liking of music should activate the reward system, and in particular the nucleus accumbens, the ventral caudate and the ventral tegmental area (Berridge and Kringelbach, 2008), as previously observed in association with “music chills” and listening to pleasant unfamiliar and familiar music (Blood and Zatorre, 2001; Pereira et al., 2011; Salimpoor et al., 2011, 2013; Zatorre and Salimpoor, 2013). Disliked musical excerpts might also activate areas related to processing of musical dissonance or unpleasantness, such as the parahippocampal gyrus and the temporal poles (Blood et al., 1999; Koelsch et al., 2006).

Method

Subjects

Twenty-nine subjects without any neurological, hearing, or psychological disorder participated in the study (15 females; mean age 23.9 ± 3.1 SD). All subjects were chosen from the age group from 18 to 27 years old, as this has been defined as the age when individuals form their musical taste and have the strongest musical experiences (LeBlanc et al., 1996). Sixteen subjects (mean age: 25.1 ± 2.4 SD; 8 females) were classified as non-musicians since they had not received any formal musical education apart from a few years during their childhood and did not play music professionally (earning money from performance). Some of them could be considered, though, as music amateurs since they had played an instrument as a hobby (5 had taken lessons in guitar, 8 in piano and 6 had sung in choirs). Out of the non-musicians only 3 had never played an instrument, whereas 7 had tried to learn more than two instruments in their life. Thirteen subjects (mean age: 22.8 ± 3.7 SD; 7 females) declared themselves to be musicians, and indeed possessed long-term formal musical training. Six were educated in and mainly performed classical music, two musicians were trained in and performed folk and jazz music, and the rest played mainly pop/rock music. Five musicians played string instruments, three percussion instruments, two wind instruments, two keyboard instruments, and one was a singer. All musicians, except one, were also able to play other instruments along with their main one. Seven musicians played mainly classical music, whereas the others performed and practiced mainly jazz, folk or rock/pop music. The musicians started to play their main instrument on average at 9.1 ± 3.4 SD years of age and their second instrument at 10.5 ± 3.7 SD years, accumulating on average 16.2 ± 6 SD years of training in total. 
Moreover, they reported practicing their instrument on average for 2.2 ± 1.3 SD hours per day at the time of the experiment and to actively listen to music 18.6 ± 15.6 SD hours per week. Non-musicians declared listening to music for 7.6 ± 5.6 SD hours per week at the time of the experiment.

Subjects were recruited online. The recruitment email specified that we were searching for individuals aged 18–27 years old, without a formal musical background or active in music performance but nevertheless with an interest and constancy in listening to music. Moreover, we asked that subjects like music in general, that they also like sad or nostalgic music, and that they listen to music from several genres. Finally, subjects were requested to be healthy and without metal in their bodies. In the recruitment email it was specified that the study would consist of a first session comprising a listening test and a second session an fMRI measurement, and that the participants would receive monetary compensation. The study procedure was approved by the ethical committee of the Helsinki University Hospital and complied with the Helsinki Declaration.

Procedures

Prior to the experiment

Prior to the listening test, subjects were asked to send or bring us 16 music pieces chosen according to the following guidelines: four liked and happy pieces, four liked and sad pieces, four disliked and happy pieces, and four disliked and sad pieces. They were instructed that the pieces should be from different musical genres and that they should not be associated with any special personal memories. The first instruction was meant to increase the acoustic variability of the musical excerpts and hence minimize the correlations between certain sound features and emotional responses to them, whereas the second instruction was aimed at avoiding the possible confound of memory associations external to the music and their effects on affective responses. All subjects were able to select the required pieces. Some subjects needed further instructions to select the disliked but familiar pieces, in which case we encouraged them to think of tunes that they casually hear repeatedly from the media. One subject reported in the post-experimental questionnaire not having been able to select pieces without any autobiographical memory associations. Upon a check of the data, and after noticing no striking discrepancies from the other subjects, we opted to keep the data in the sample. The participants either brought the music pieces to the investigator or sent them via an online form. Four excerpts (18 sec each) with 500 ms fade-ins and fade-outs were created from each music piece with Adobe Audition. The majority of the music pieces were pop/rock songs with clear structure (often including verse, chorus, bridge, hook, and refrain; Davidson and Heartwood, 1997), lasting around 3 min each. The four excerpts were taken mainly from the verse, chorus, bridge and refrain. In the case of classical music or other genres not following any common pop form, the excerpts were taken from the different parts of the piece to represent the main motifs. 
Thus, altogether 64 excerpts were cut from the music selection of each individual participant. The loudness level of the excerpts was normalized to a level of −15 dB. The music excerpts were presented binaurally via headphones with Presentation (Neurobehavioral Systems, Ltd., Berkeley, CA).
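As a rough illustration of the excerpt preparation described above, the following sketch cuts an 18 sec segment from a mono signal and applies 500 ms fade-in and fade-out ramps. The excerpts in the study were prepared in Adobe Audition; the linear ramp shape, the function name `cut_excerpt`, and the way the signal is represented (a plain list of samples) are assumptions made only for this example.

```python
def cut_excerpt(samples, sample_rate, start_sec, length_sec=18.0, fade_sec=0.5):
    """Return an excerpt with fade-in and fade-out (linear ramps are an assumption)."""
    start = int(round(start_sec * sample_rate))
    n_total = int(round(length_sec * sample_rate))
    n_fade = int(round(fade_sec * sample_rate))
    excerpt = list(samples[start:start + n_total])
    if len(excerpt) < n_total:
        raise ValueError("piece is too short for the requested excerpt")
    for i in range(n_fade):
        gain = i / n_fade        # rises linearly from 0 towards 1
        excerpt[i] *= gain       # fade-in at the start
        excerpt[-1 - i] *= gain  # mirrored fade-out at the end
    return excerpt


# 16 pieces x 4 excerpts per piece gives the 64 excerpts per participant
N_EXCERPTS = 16 * 4
```

Loudness normalization is left out of the sketch because the reference for the −15 dB level is not specified in the text.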

Listening test

To control the reliability of subjects' choices of musical pieces and to ensure that our selection of the 18 sec excerpts complied with subjects' affective categorization, we conducted a listening test at the Cognitive Brain Research Unit, University of Helsinki (approved by the local ethical committee). Each subject performed the test individually on the excerpts extracted from their own self-selected music. Beforehand, the participants filled in a consent form, a questionnaire concerning their musical background and music education especially designed for this study, and the “Music in Mood Regulation” (MMR) questionnaire (Saarikallio, 2008) assessing their use of music-related mood-regulation strategies in their everyday life (the results concerning the MMR questionnaire are presented in two separate papers: Saarikallio et al., 2013; Carlson et al., 2015). Also a questionnaire on musical choices was administered, in which subjects were requested to attribute a musical genre to each of the pieces brought to the lab, and to list reasons for liking or disliking those pieces. Subsequently, the 18 sec music excerpts were delivered in random order with Presentation (Neurobehavioral Systems, Ltd., Berkeley, CA) to the subjects binaurally via headphones at 40 dB above their individually determined hearing thresholds.

By pressing a number from 1 to 5 on a keyboard, subjects rated each excerpt after hearing it according to six 5-step bipolar scales: unfamiliar-familiar, sad-happy, feels sad-feels happy, disliked-liked, unpleasant-pleasant, and ugly-beautiful. The scales appeared on a screen in fixed order, each one presented as soon as an answer to the previous one was given. Thus, the participants were able to think about their answers for as long as they wanted (the written instructions were as follows: “You will give several ratings on the musical excerpts extracted from the musical pieces you selected after listening to each excerpt. Please follow this procedure. First read the text below and try to memorize the content of each rating. Then listen to the musical excerpt twice. Try to give your ratings on the musical excerpt only without thinking too much about the musical piece to which it belongs”). After the six scales were completed, subjects started the next excerpt by pressing a button. The participants were instructed to rate the excerpts according to the views and feelings they had exactly in that moment. The listening test lasted around 1.5 hours in total.

fMRI experiment

The fMRI measurements were conducted with the 3-Tesla scanner (3.0 T Signa VH/I General Electric) in the Advanced Magnetic Imaging (AMI) Centre in the Helsinki University of Technology and were approved by the Coordinating (“Koordinoiva”) ethical committee of the Uusimaa Hospital District and the research committee of the AMI Centre. Before the fMRI measurement, volunteers were informed about the study protocol, signed a written consent form, filled in a safety questionnaire, were encouraged to remove any ferromagnetic material before entering the magnet bore and to relax when in the magnet bore while concentrating on the musical stimuli. During the fMRI session, 33 oblique slices covering the whole brain (field of view 200 × 200 mm; 64 × 64 matrix; slice thickness 4 mm; gap 0 mm) were acquired using an interleaved gradient echo-planar imaging (EPI) sequence (TR = 3 sec; echo time, 32 ms; flip angle 90°) sensitive to blood oxygenation level-dependent (BOLD) contrasts. Continuous acquisition with time to repeat (TR) of 3 sec was used to measure brain responses to the experimental stimuli, assuming that the effect of the scanner noise would be constant and thus easily discernable from the effects of the musical stimulation. Subsequent to a short break after the fMRI session, anatomical T1 weighted MR images (field of view 260 × 260 mm; 256 × 256 matrix; thickness 1 mm; spacing 0 mm) were acquired. The subjects received two movie theater tickets to compensate for their inconvenience after the experiment.
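The acquisition parameters above imply a few derived quantities that are useful when reading the analysis. The arithmetic below is only a worked example based on the stated values; the variable names are chosen for illustration.

```python
# Values stated in the acquisition description
FOV_MM = 200.0            # field of view along one in-plane axis
MATRIX = 64               # acquisition matrix along the same axis
SLICE_THICKNESS_MM = 4.0  # slice thickness, with 0 mm gap
N_SLICES = 33
TR_SEC = 3.0
EXCERPT_SEC = 18.0

# Derived quantities
in_plane_voxel_mm = FOV_MM / MATRIX            # in-plane voxel edge length
coverage_mm = N_SLICES * SLICE_THICKNESS_MM    # extent covered along the slice axis
volumes_per_excerpt = EXCERPT_SEC / TR_SEC     # EPI volumes acquired per excerpt

print(in_plane_voxel_mm, coverage_mm, volumes_per_excerpt)
```

Under these values each 18 sec excerpt spans six consecutive volumes, with functional voxels of 3.125 × 3.125 × 4 mm.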

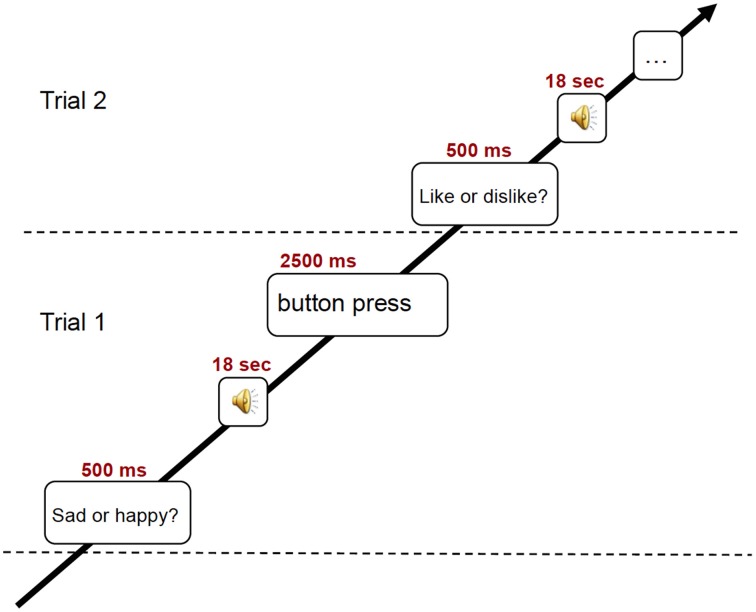

During the fMRI session, subjects listened to 18 sec excerpts of music selected on the basis of the previously conducted listening test. In detail, from the four excerpts for each of the 16 pieces of music brought to the lab by the subjects, the two excerpts obtaining the highest scores in emotional and in familiarity ratings were fed to the stimulation computer and delivered to the subjects in random order via high-fidelity MR-compatible headphones. The sound level was adjusted to be comfortable at an energy level around 80 dB. In the fMRI scanner, the subjects performed one out of two behavioral tasks, preceded by a visual cue (for an illustration of the experimental paradigm, see Figure 1). In one behavioral task prompted by the text “Like? Dislike?” (in Finnish: “Pidän? En pidä?”), the subjects had to indicate whether they liked the piece or not. In the other behavioral task prompted by the text “Sad? Happy?” (in Finnish: “Surullinen? Iloinen?”), the subjects rated the emotional content of the music on a binary scale. Three test trials were presented to the subjects prior to the main session. The text with the visual cue was maintained for the duration of the stimulus and served as fixation point. At the end of the 18 sec stimulus, another text appeared, asking the subjects to answer to the previously seen question (in Finnish: “Vasta nyt”). For the behavioral answer, subjects pressed with the second and third fingers of left or right hand (counterbalanced between subjects) MR-compatible button pads. After a 3 sec interval without any stimulus, a sinusoidal tone indicated the start of the next trial. The total time of the fMRI session was 21 min. Subsequent to a short break after fMRI recording, anatomical T1 weighted MR images were also acquired in about 10 min.
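The selection of two excerpts per piece can be sketched as a simple ranking. The text does not specify how the emotional and familiarity ratings were combined, so the equal-weight sum used here, the dictionary keys, and the function name `select_excerpts` are all hypothetical.

```python
def select_excerpts(ratings, target_emotion, n_keep=2):
    """Pick the n_keep excerpts of one piece that best match the intended emotion.

    ratings: list of dicts with keys 'id', 'sad_happy' and 'familiarity',
    both rated on the 1-5 scales of the listening test (1 = sad / unfamiliar).
    """
    def score(r):
        # Strength of the intended emotion: high ratings for happy pieces,
        # low ratings (mirrored around the scale) for sad pieces.
        if target_emotion == "happy":
            emotion = r["sad_happy"]
        else:
            emotion = 6 - r["sad_happy"]
        return emotion + r["familiarity"]  # equal weighting is an assumption

    ranked = sorted(ratings, key=score, reverse=True)
    return [r["id"] for r in ranked[:n_keep]]
```

For example, for a sad piece an excerpt rated 1 on the sad-happy scale and 5 on familiarity would be ranked above one rated 4 and 5, respectively.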

Figure 1.

Schematic illustration of the experimental trial used in the fMRI scanning.

Data analysis

Acoustic parameters

To explore any possible commonality in the acoustic features contained in liked vs. disliked music and in the happy vs. sad music, we fitted two generalized linear mixed models with the participant as the repeated-measures variable. The familiarity ratings for each musical excerpt were included as covariates, similarly to the fMRI analysis. As dependent variables of the linear mixed model, we entered the mean values for each excerpt resulting from the computational analysis of the musical excerpts conducted with the MIRToolbox version 1.3.3 (developed at the University of Jyväskylä; Lartillot and Toiviainen, 2007). First, 24 different acoustic features were extracted, chosen among the ones most studied in the psychoacoustic literature and having clear perceptual attributes. The features were extracted from the stimulus on a frame-by-frame basis (for more details, see Alluri and Toiviainen, 2010; Alluri et al., 2012). A window length of 25 ms with a 50% overlap was used to extract the timbral features, and a frame size of 2 sec with a 33% overlap was used to extract the tonal and rhythmic features. All the features are documented in the original paper presenting the MIRToolbox and in subsequent studies covering the more recently added features (Alluri et al., 2012; Eerola et al., 2012). To minimize Type I errors resulting from multiple comparisons, we grouped features into six sets according to the classification suggested by Eerola (2011), excluding structural features, which were not extracted: Dynamics (root mean square energy, low energy), Rhythm (fluctuation peak, fluctuation centroid, tempo, pulse clarity), Timbre (zero crossing rate, centroid, brightness, skewness, kurtosis, flatness, spectral entropy, roughness, irregularity, spectral flux), Pitch (chroma peak), Tonality (key clarity, mode, HCDF, spectral entropy extracted using a 5 sec frame), Articulation (attack time, attack slope). 
The six feature classes were represented by six principal component scores explaining 70% variance [χ² = 1522.7, p < 0.001, mean squared error, RMSR = 0.05] in the acoustic features across all tracks submitted by the participants. The statistical analyses concerning acoustic features were conducted in IBM SPSS Statistics version 22.
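The frame-by-frame extraction with a 25 ms window and 50% overlap, followed by averaging over the excerpt, can be illustrated for a single feature. The sketch below computes root mean square energy in plain Python; it is not the MIRToolbox implementation, and the function names and the treatment of the final partial frame (dropped) are assumptions.

```python
import math


def frame_rms(samples, sample_rate, window_sec=0.025, overlap=0.5):
    """Root mean square energy of each analysis frame."""
    win = int(round(window_sec * sample_rate))
    hop = max(1, int(round(win * (1.0 - overlap))))  # 50% overlap -> half-window hop
    values = []
    # Only complete frames are analysed; a trailing partial frame is dropped.
    for start in range(0, len(samples) - win + 1, hop):
        frame = samples[start:start + win]
        values.append(math.sqrt(sum(x * x for x in frame) / win))
    return values


def mean_feature(values):
    """Mean over frames, i.e. one value per excerpt for the statistical model."""
    return sum(values) / len(values)
```

With an 8 kHz signal, for instance, the window is 200 samples and consecutive frames start 100 samples apart.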

Behavioral ratings in the listening test

The effects of musical expertise on the six different scales of judgments collected in the listening test (familiarity, emotion recognition, emotion induction, liking, pleasantness, and beauty) were investigated in IBM SPSS Statistics version 22, using separate Kruskal-Wallis tests, corresponding to non-parametric mixed ANOVAs with Group as the between-subject factor and the discrete 5-point ratings as the dependent variable. The effects of liking or emotions of the musical stimuli on the six rating scales were instead studied with separate non-parametric Friedman's rank tests. Pairwise comparisons aiming to test differences between ratings of liked vs. disliked music and happy vs. sad music in musicians and non-musicians were carried out with the non-parametric Wilcoxon statistics. Reliability analyses explored the internal consistency, correlation, and covariance of the related scales. Emotion recognition and emotion induction were compared, and so were preference, pleasantness, and beauty, as they are all aesthetic measures.
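For readers unfamiliar with the Kruskal-Wallis test, the following sketch computes the H statistic and its p-value for the two-group case (musicians vs. non-musicians), where H is referred to a chi-square distribution with one degree of freedom. The analyses in the study were run in SPSS; this pure-Python version and its function names are only an illustration, and it assumes that the pooled ratings are not all identical.

```python
import math


def average_ranks(values):
    """Rank values from 1 to N, giving tied values their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2.0 + 1.0  # mean of the 1-based positions i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks


def kruskal_wallis_two_groups(a, b):
    """Return (H, p) for two independent groups, with tie correction."""
    pooled = list(a) + list(b)
    n = len(pooled)
    ranks = average_ranks(pooled)
    r_a = sum(ranks[:len(a)])
    r_b = sum(ranks[len(a):])
    h = 12.0 / (n * (n + 1)) * (r_a ** 2 / len(a) + r_b ** 2 / len(b)) - 3.0 * (n + 1)
    # Tie correction: divide by 1 - sum(t^3 - t) / (N^3 - N)
    counts = {}
    for v in pooled:
        counts[v] = counts.get(v, 0) + 1
    correction = 1.0 - sum(t ** 3 - t for t in counts.values()) / float(n ** 3 - n)
    h = max(h / correction, 0.0)
    # Survival function of the chi-square distribution with 1 degree of freedom
    p = math.erfc(math.sqrt(h / 2.0))
    return h, p
```

Because 5-point ratings produce many ties, the tie correction matters in practice for data of this kind.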

fMRI data

Whole-brain image analysis was completed using Statistical Parametric Mapping 5 (SPM5) and Voxel-Based Morphometry (VBM) for preprocessing and upgraded to SPM8 for statistical analyses (http://www.fil.ion.ucl.ac.uk/spm). Images for each participant were realigned to adjust for movement between volumes, and then segmented with VBM into gray matter, cerebrospinal fluid and white matter images. The segmented individual images were then spatially normalized onto the Montreal Neurological Institute (MNI) a priori tissue template of the gray matter according to a 12-parameter affine transformation model. The final preprocessing step included spatial smoothing with a Gaussian filter of 6 mm full-width at half maximum (FWHM). The normalization using segmented gray matter images obtained with VBM as an intermediate step was chosen for its superiority over the direct normalization of EPI images to the MNI template according to pilot tests. Smoothed, normalized brain volumes were screened to determine whether they met the criteria for high quality and scan stability as determined by small motion correction (<2 mm translation and <2° rotation). For statistical analysis, the fMRI responses were modeled using a canonical hemodynamic response function (HRF) with time dispersion and temporally filtered using a high-pass filter of 1/128 Hz to minimize scanner drift. The six movement parameters resulting from realignment preprocessing were modeled as regressors of no interest in the analysis.
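The motion screening criterion (<2 mm translation and <2° rotation) can be expressed as a simple check on the six realignment parameters. The sketch below assumes that the parameters are given per volume as three translations in mm followed by three rotations in radians, and that the limits apply to each volume's displacement relative to the reference volume; the function name is hypothetical.

```python
import math


def passes_motion_criteria(params, max_trans_mm=2.0, max_rot_deg=2.0):
    """Check realignment parameters against the translation and rotation limits.

    params: one row per volume, (tx, ty, tz, rx, ry, rz), with translations
    in mm and rotations in radians (assumed units).
    """
    for tx, ty, tz, rx, ry, rz in params:
        if max(abs(tx), abs(ty), abs(tz)) >= max_trans_mm:
            return False
        if max(abs(math.degrees(r)) for r in (rx, ry, rz)) >= max_rot_deg:
            return False
    return True
```

A participant whose largest rotation is 0.05 rad (about 2.9°) would, for instance, fail this check.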

Following preprocessing, linear contrasts employing the canonical HRF were used to estimate condition-specific blood oxygen level-dependent activation for each individual and each scan. In a first-level analysis, we compared with paired-samples tests the brain responses during the liked stimuli contrasted directly with the brain responses to the disliked stimuli, and vice versa. Moreover, we contrasted with paired-samples tests the brain responses to sad stimuli with the brain responses to the happy stimuli, and vice versa. These individual contrast images (i.e., weighted sum of the beta images) were then used in second-level random effects models that account for both scan-to-scan and participant-to-participant variability to determine mean condition-specific regional responses. General linear models (GLMs) with Group, Liking and Emotion as factors were then performed and t-tests were conducted to further investigate the significant main effects and interactions.

Further analyses were conducted to analyze the putative effects of sensory processing on brain responses to musical emotions and liking. To this aim, we chose to conduct region-of-interest (ROI) analysis by extracting the signal change from the clusters of activations found to be significant with Marsbar. We decided to opt for this method, rather than regressing out the acoustic features from the GLM analysis, because of the differential role of acoustic features for discrete emotions and for liking judgments. The signal change values were then entered in IBM SPSS Statistics version 22 for studying correlations with the acoustic feature values of each musical excerpt obtained computationally with MIRToolbox analysis. The alpha level was corrected for multiple comparisons by Bonferroni correction (significance at p < 0.001 when considering only the comparisons for the acoustic features that significantly differentiate the stimulus categories).

For the whole-brain fMRI analyses, a statistical threshold of p < 0.001, minimum cluster size (k) = 29 was used, as resulted from the calculation of the alpha level of significance based on Monte Carlo permutations.

Results

Questionnaire on music choices

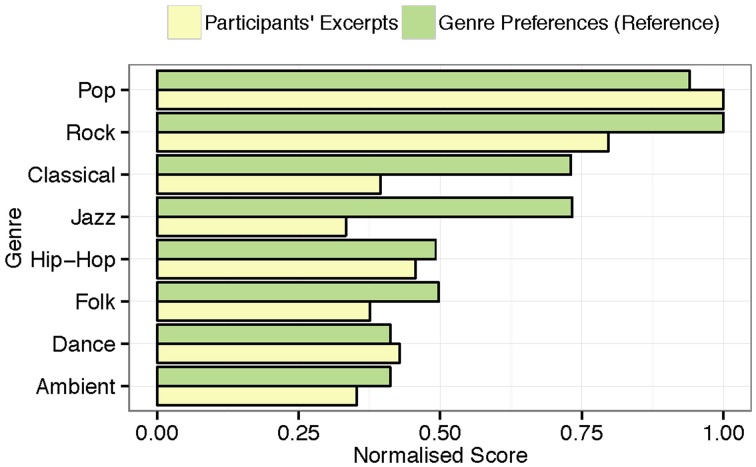

The subjects classified their self-selected musical pieces as belonging to several different genres, e.g., pop, rock, emo, sugary ballad, Finnish iskelmä (melodic pop like “Schlagers”), classical, folk, electronic, and atonal music, etc. They showed detailed knowledge of the musical genres, and their selection reproduces the distribution of musical genre preferences in the Western world, with pop/rock as the most widely listened genre (80%). As illustrated in Figure 2, these findings are analogous to those of a similarly aged (M = 20.64, SD = 2.84) reference sample (n = 346) from the same country (Ferrer et al., 2013), who also predominantly listen to pop/rock music.

Figure 2.

Normalized distribution of musical genres represented by the musical excerpts brought to the lab by the participants and normalized genre preferences from a comparable sample (n = 346).

Behavioral ratings in the listening test

Separate Kruskal-Wallis tests for the six behavioral ratings showed that none of the ratings strongly differed between musicians and non-musicians [main effect of Group for all: 2.2; p > 0.14], except for the emotion recognition of disliked sad music [ 5.4; p = 0.02], with non-musicians classifying the disliked music as sadder (M = 1.7 ± 0.4 SD) than musicians (M = 2.0 ± 0.3 SD). Considering that the ratings did not consistently differentiate musicians from non-musicians, we conducted the subsequent analyses of the Liking and Emotion factors using the whole group of participants.
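For readers unfamiliar with the Kruskal-Wallis test, the following sketch computes its H statistic (with the standard correction for ties) in plain Python. It is a textbook implementation offered for illustration; the example ratings are invented and do not reproduce the data of this study.

```python
def kruskal_wallis_h(*groups):
    """Kruskal-Wallis H statistic with tie correction for two or more groups."""
    pooled = sorted((value, gi) for gi, group in enumerate(groups)
                    for value in group)
    n = len(pooled)
    rank_sums = [0.0] * len(groups)
    tie_term = 0
    i = 0
    while i < n:
        j = i
        while j < n and pooled[j][0] == pooled[i][0]:
            j += 1
        # Tied values occupy ranks i+1 .. j and share their average rank.
        avg_rank = (i + 1 + j) / 2
        for k in range(i, j):
            rank_sums[pooled[k][1]] += avg_rank
        t = j - i
        tie_term += t ** 3 - t
        i = j
    h = (12 / (n * (n + 1))
         * sum(rs ** 2 / len(g) for rs, g in zip(rank_sums, groups))
         - 3 * (n + 1))
    correction = 1 - tie_term / (n ** 3 - n)
    if correction == 0:
        raise ValueError("all observations are identical")
    return h / correction


# Invented ratings for two hypothetical groups.
group_a = [1, 2, 3]
group_b = [4, 5, 6]
print(round(kruskal_wallis_h(group_a, group_b), 3))
```

With more than a handful of observations per group, H is usually compared against a chi-square distribution with (number of groups − 1) degrees of freedom.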

The familiarity ratings (familiar vs. unfamiliar) differed between stimuli [; p < 0.0001] with the liked music rated as overall more familiar than disliked music (Z = −3.9 for happy music, and Z = −4.2 for sad music, with p < 0.0001 for both) and the disliked happy music rated as more familiar than the disliked sad music (Z = −2.1, p = 0.04). However, for all stimuli the mean scores were positive (>3.9), and hence the musical pieces were overall familiar to subjects.

The significant result for the emotion recognition ratings (happy vs. sad) [; p < 0.0001] revealed that liked happy music was better recognized as happy than disliked happy music (Z = −3.3; p = 0.001), and the same applied to sad music (Z = −3.1; p = 0.002). The significant result for the emotion induction ratings (feel happy vs. feel sad) [ 69.7; p < 0.0001] further showed that subjects felt emotions more intensely when listening to liked than to disliked happy music (Z = −4.6; p < 0.0001; liked happy music: M = 4.4 ± 0.4 SD; disliked happy music: M = 3.2 ± 0.6 SD) and to liked than to disliked sad music (Z = −2.2; p = 0.03; liked sad music: M = 2.1 ± 0.6 SD; disliked sad music: M = 2.3 ± 0.7 SD).

The liking ratings (liked vs. disliked), as expected, differed between stimulus categories [ 74.4; p < 0.0001], with sad music obtaining higher liking ratings than happy music (Z = −2.1, p < 0.04 in Wilcoxon test) and disliked sad or happy music obtaining lower liking ratings than liked sad or happy music (Z = −4.7, p < 0.0001). Pleasantness ratings (pleasant vs. unpleasant), which also differed between stimuli [ 73.8; p < 0.0001], were higher for liked (sad or happy) music than for disliked (sad or happy) music (Z = −4.7, p < 0.0001). The beauty ratings (beautiful vs. ugly), which differed between stimulus categories [ 72.9; p < 0.0001], revealed that liked music was rated as more beautiful than disliked music (Z = −4.7; p < 0.0001) and that sad music was rated as more beautiful than happy music (Z = −4.0; p < 0.0001; sad music: M = 4.6 ± 0.4 SD vs. happy music: M = 4.0 ± 0.7 SD).

Recognition/induction reliability for liked happy excerpts had a standardized Cronbach's alpha coefficient of 0.865. For liked sad excerpts, this value was 0.660. For disliked happy, it was 0.518, and for disliked sad it was 0.608. Liked happy emotion recognition and liked happy perception were the only two variables that were significantly correlated (r = 0.762). Reliability of beauty ratings as measured with Cronbach's alpha coefficient amounted to 0.887 for liked happy, 0.920 for liked sad, 0.915 for disliked happy, and 0.861 for disliked sad music (see Table 1).
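The standardized Cronbach's alpha depends only on the number of items k and their mean inter-item correlation r̄, through α = k·r̄ / (1 + (k − 1)·r̄). The short sketch below applies this formula; treating the liked happy recognition and induction ratings as a two-item scale with r = 0.762 is our reading of the values reported above, and the function name is hypothetical.

```python
def standardized_alpha(n_items, mean_r):
    """Standardized Cronbach's alpha from the mean inter-item correlation."""
    if n_items < 2:
        raise ValueError("at least two items are required")
    return n_items * mean_r / (1 + (n_items - 1) * mean_r)


# Two items (recognition, induction) correlated at r = 0.762.
print(round(standardized_alpha(2, 0.762), 3))
```

Under this two-item assumption the formula gives approximately 0.865, which agrees with the coefficient reported for the liked happy excerpts.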

Table 1.

Inter-item correlations for the behavioral ratings obtained during the listening tests (above the diagonal) and during fMRI sessions (below the diagonal).

| | LHpref | LHpleas | LHbeaut | LSpref | LSpleas | LSbeaut | DHpref | DHpleas | DHbeaut | DSpref | DSpleas | DSbeaut |

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| LHpref | – | 0.877 | 0.619 | 0.713 | 0.725 | 0.707 | −0.444 | −0.418 | −0.324 | −0.291 | −0.407 | −0.200 |

| LHpleas | 0.805 | – | 0.675 | 0.567 | 0.691 | 0.605 | −0.481 | −0.414 | −0.342 | −0.371 | −0.525 | −0.332 |

| LHbeaut | 0.491 | 0.613 | – | 0.403 | 0.323 | 0.494 | −0.290 | −0.142 | −0.206 | −0.233 | −0.327 | −0.457 |

| LSpref | 0.671 | 0.566 | 0.325 | – | 0.833 | 0.809 | −0.676 | −0.536 | −0.432 | −0.407 | −0.256 | −0.180 |

| LSpleas | 0.547 | 0.520 | 0.170 | 0.755 | – | 0.736 | −0.636 | −0.582 | −0.476 | −0.432 | −0.342 | −0.182 |

| LSbeaut | 0.584 | 0.545 | 0.356 | 0.775 | 0.716 | – | −0.669 | −0.618 | −0.519 | −0.304 | −0.315 | −0.169 |

| DHpref | −0.399 | −0.376 | −0.125 | −0.574 | −0.469 | −0.724 | – | 0.839 | 0.766 | 0.532 | 0.540 | 0.369 |

| DHpleas | −0.382 | −0.339 | −0.037 | −0.420 | −0.376 | −0.637 | 0.751 | – | 0.737 | 0.353 | 0.591 | 0.141 |

| DHbeaut | −0.369 | −0.323 | −0.231 | −0.433 | −0.411 | −0.592 | 0.749 | 0.588 | – | 0.415 | 0.541 | 0.442 |

| DSpref | −0.212 | −0.283 | −0.120 | −0.303 | −0.399 | −0.340 | 0.503 | 0.351 | 0.393 | – | 0.771 | 0.635 |

| DSpleas | −0.472 | −0.461 | −0.192 | −0.279 | −0.300 | −0.339 | 0.469 | 0.597 | 0.503 | 0.755 | – | 0.617 |

| DSbeaut | −0.129 | −0.277 | −0.416 | −0.108 | −0.103 | −0.191 | 0.261 | 0.097 | 0.417 | 0.527 | 0.419 | – |

LH, liked happy stimuli; LS, liked sad; DH, disliked happy; and DS, disliked sad. Pref, preference ratings; pleas, pleasantness; and beaut, beauty.

We also tested whether the results obtained during the listening test were compatible with those obtained during the fMRI measurement in a separate lab. The ANOVA did not reveal any significant main effect of Experiment (p > 0.95), validating the experimental procedure. Cronbach's coefficients of reliability for the ratings obtained during the fMRI session are reported in Table 1.

Acoustic parameters

The musical excerpts liked by musicians differed from those chosen by non-musicians in the acoustic values of Articulation [main effect of Group: F(1, 217) = 105.2, p < 0.0001], Dynamics [main effect of Group: F(1, 262) = 787.2, p < 0.0001], and Timbre [main effect of Group: F(1, 204) = 205, p < 0.0001]. The liked and disliked musical excerpts differed from each other on Pitch [main effect of Liking: F(1, 282) = 4.1, p = 0.04], Articulation [main effect of Liking: F(1, 293) = 4.5, p = 0.03], Rhythm [main effect of Liking: F(1, 260) = 9.9, p = 0.002], and Timbre [main effect of Liking: F(1, 424) = 66.5, p < 0.0001]. The differences in acoustic features between sad and happy musical excerpts were even more pronounced: happy music differed significantly from sad music in Articulation [main effect of Emotion: F(1, 304) = 30.6, p < 0.0001], Pitch [main effect of Emotion: F(1, 256) = 14.8, p < 0.0001], Rhythm [main effect of Emotion: F(1, 236) = 63.9, p < 0.0001], Timbre [main effect of Emotion: F(1, 229) = 33.6, p < 0.0001], and Tonality [main effect of Emotion: F(1, 239) = 6.6, p = 0.01]. The acoustic feature content of happy and sad music also differed between musicians and non-musicians for Articulation [main effect of Group: F(1, 301) = 185.4, p < 0.0001], Dynamics [main effect of Group: F(1, 514) = 514.2, p < 0.0001], and Timbre [main effect of Group: F(1, 179) = 143.1, p < 0.0001].

To summarize, the majority of the differences between the excerpts (5/6 feature categories) were observed between happy and sad emotions. These acoustic differences were consistent with past research on the musical features of different emotional expressions (e.g., Eerola, 2011). The liked and disliked excerpts also showed marked differences. Finally, in feature categories such as Dynamics and Timbre, the excerpts chosen by musicians and non-musicians varied in a systematic fashion. It is likely that the acoustic differences between musical excerpts depending on musical expertise are related to the musical genres of the excerpts chosen by the two experimental groups, although a full analysis of the genre differences is beyond the scope of this investigation.

fMRI responses

Overall ANOVAs

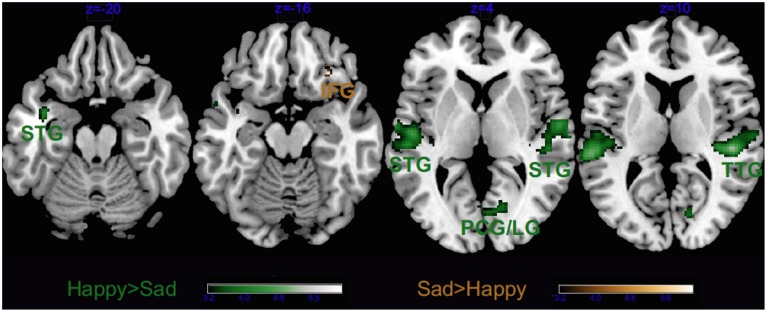

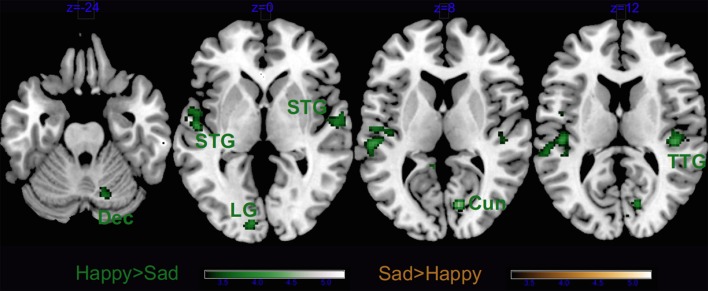

As visible from Figure 3, we obtained a significant main effect of Emotion in several areas, listed in Tables 2, 3. This effect derived from higher activity for happy > sad in the primary and secondary auditory cortices along the bilateral superior and transverse temporal gyri, and in medial structures such as the cuneus and the lingual and posterior cingulate gyri. The contrast sad > happy yielded activation only in the right lateral prefrontal cortex (inferior frontal gyrus, BA 47/11).

Figure 3.

Main effect of Emotion. STG, superior temporal gyrus; TTG, transverse temporal gyrus; LG, lingual gyrus; IFG, inferior frontal gyrus and PCG, post-central gyrus. Activations were considered significant at p < 0.001, and cluster size k > 29 (as obtained with Monte Carlo permutations).

Table 2.

Coordinates and statistical values of the full factorial analysis of fMRI responses in both musicians and non-musicians.

| Region | L/R | BA | x | y | z | Z | k | MNI coordinates (x, y, z) |

|---|---|---|---|---|---|---|---|---|

| Main Effects and Interactions | ||||||||

| LIKING | ||||||||

| Middle/superior temporal gyrus | L | BA 21/22 | −59 | −8 | −6 | 6.58 | 1549 | −60, −8, −8 |

| Middle/superior temporal gyrus | R | BA 21/38 | 61 | −6 | −3 | 6.53 | 1524 | 62, −6, −4 |

| Medial frontal gyrus/anterior cingulate cortex | R/L | BA 6/ 24 | 6 | −3 | 61 | 6.16 | 888 | 6, −6, 66 |

| Cingulate gyrus/paracentral lobule | R/L | BA 31 | 8 | −27 | 40 | 5.51 | 671 | 8, −30, 42 |

| Culmen | L | N/A | −34 | −58 | −24 | 5.08 | 112 | −34, −58, −32 |

| Culmen/declive | R/L | N/A | 6 | −57 | −19 | 4.71 | 380 | 6, −58, −26 |

| Precuneus | R | BA7 | 10 | −56 | 47 | 4.53 | 50 | 10, −60, 48 |

| Anterior nucleus | R/L | N/A | 6 | −3 | 9 | 4.37 | 258 | 6, −4, 10 |

| Precentral gyrus | L | BA 6 | −46 | −2 | 39 | 4.07 | 87 | −46, −4, 42 |

| Precentral gyrus | L | BA 6 | −61 | 3 | 13 | 4.06 | 79 | −62, 2, 14 |

| Caudate body | R | N/A | 20 | −3 | 22 | 4.04 | 75 | 20, −4, 24 |

| Caudate body | L | N/A | −18 | 1 | 22 | 3.98 | 43 | −18, 0, 24 |

| Thalamus | L | N/A | 0 | −19 | 16 | 3.98 | 50 | 0, −20, 16 |

| Parahippocampal gyrus | R | BA 20 | 40 | −28 | −15 | 3.97 | 42 | 40, −28, −20 |

| Superior temporal gyrus | R | BA 22 | 48 | 2 | 4 | 3.86 | 57 | 48, 2, 4 |

| Declive/culmen | R | N/A | 28 | −63 | −22 | 3.83 | 117 | 28, −64, −30 |

| Post-central gyrus | L | BA 3 | −28 | −34 | 51 | 3.80 | 38 | −28, −38, 54 |

| Culmen | R | N/A | 6 | −34 | −12 | 3.63 | 32 | 6, −34, −16 |

| Lingual gyrus | L | BA 18 | −22 | −72 | −1 | 3.61 | 31 | −22, −74, −6 |

| Middle frontal gyrus | L | BA 6 | −24 | −1 | 61 | 3.60 | 42 | −24, −4, 66 |

| Pulvinar | L/R | N/A | −2 | −27 | 3 | 3.51 | 46 | −2, −28, 2 |

| EMOTION | ||||||||

| Superior temporal gyrus | L | BA 22/ 41 | −51 | −14 | −1 | 5.15 | 967 | −52, −14, −2 |

| Transverse/superior temporal gyrus | R | BA 41/ 22 | 46 | −25 | 10 | 4.75 | 565 | 46, −26, 10 |

| Superior temporal gyrus | R | BA 22 | 50 | 4 | −5 | 3.90 | 39 | 50, 4, −6 |

| Posterior cingulate/lingual gyrus | R | BA 30/18 | 16 | −68 | 7 | 3.56 | 75 | 16, −70, 4 |

| GROUP | ||||||||

| Declive | R | N/A | 28 | −65 | −19 | 4.49 | 62 | 28, −66, −26 |

| Precuneus | L | BA 7 | −22 | −62 | 36 | 4.24 | 29 | −22, −66, 36 |

| Precuneus | R | BA 7 | 24 | −60 | 38 | 3.90 | 39 | 24, −64, 38 |

| Post-central gyrus | L | BA 3 | −20 | −30 | 53 | 3.61 | 31 | −20, −34, 56 |

| Precentral gyrus | L | BA 6 | −44 | −12 | 39 | 3.52 | 30 | −44, −14, 42 |

| LIKING × GROUP | ||||||||

| Cerebellar tonsil | L | N/A | −10 | −50 | −34 | 3.70 | 32 | −10, −50, −44 |

| EMOTION × GROUP | ||||||||

| Declive | R | N/A | 4 | −65 | −15 | 4.49 | 118 | 4, −66, −22 |

| Red nucleus | L | N/A | −2 | −26 | −14 | 4.32 | 40 | −2, −26, −18 |

| Posterior cingulate | R | BA 30 | 20 | −52 | 15 | 3.60 | 32 | 20, −54, 14 |

Clusters were considered significant at p < 0.001, and cluster size k>29 (as obtained with Monte Carlo permutations). Coordinates are in MNI space. Only effects and interactions producing significant clusters are reported.

Table 3.

Coordinates and statistical values of the t-tests on fMRI responses in both musicians and non-musicians.

| T-tests | ||||||||

|---|---|---|---|---|---|---|---|---|

| LIKE >DISLIKE | ||||||||

| Medial frontal gyrus/anterior cingulate | R/L | BA 6/24 | 6 | −3 | 61 | 6.27 | 1223 | 6, −6, 66 |

| Cingulate gyrus/paracentral lobule | R/L | BA 31 | 8 | −27 | 40 | 5.63 | 802 | 8, −30, 42 |

| Culmen/declive | L/R | N/A | −34 | −58 | −24 | 5.21 | 865 | −34, −58, −32 |

| Precuneus | R | BA 7 | 10 | −56 | 47 | 4.68 | 63 | 10, −60, 48 |

| Anterior nucleus (Thalamus) | R/L | N/A | 6 | −3 | 9 | 4.51 | 497 | 6, −4, 10 |

| Precentral/post-central gyrus | L | BA 6/3 | −46 | −2 | 39 | 4.23 | 169 | −46, −4, 42 |

| Precentral gyrus | L | BA 6 | −61 | 3 | 13 | 4.22 | 105 | −62, 2, 14 |

| Caudate body | R | N/A | 20 | −3 | 22 | 4.20 | 114 | 20, −4, 24 |

| Caudate body/tail | L | N/A | −18 | 1 | 22 | 4.14 | 99 | −18, 0, 24 |

| Parahippocampal gyrus | R | BA 20 | 40 | −28 | −15 | 4.14 | 55 | 40, −28, −20 |

| Superior temporal gyrus | R | BA 22 | 48 | 2 | 4 | 4.03 | 91 | 48, 2, 4 |

| Middle frontal gyrus | L | BA 6 | −24 | 8 | 47 | 4.02 | 45 | −24, 6, 52 |

| Post-central gyrus | L | BA 3 | −28 | −34 | 51 | 3.96 | 59 | −28, −38, 54 |

| Medial frontal gyrus | R | BA 10 | 8 | 54 | −9 | 3.91 | 42 | 8, 56, −8 |

| Precuneus | R | BA 7 | 8 | −46 | 54 | 3.81 | 45 | 8, −50, 56 |

| Culmen | R | N/A | 6 | −34 | −12 | 3.81 | 58 | 6, −34, −16 |

| Culmen | R | N/A | 4 | −60 | −4 | 3.72 | 36 | 4, −62, −8 |

| DISLIKE >LIKE | ||||||||

| Middle temporal gyrus | L | BA 21/22 | −59 | −8 | −6 | 6.69 | 1719 | −60, −8, −8 |

| Middle temporal gyrus | R | BA 21/38 | 61 | −6 | −3 | 6.64 | 1722 | 62, −6, −4 |

| Amygdala | R | N/A | 22 | −7 | −15 | 4.11 | 30 | 22, −6, −18 |

| Lingual gyrus | L | BA 18 | −22 | −72 | −1 | 3.78 | 53 | −22, −74, −6 |

| HAPPY >SAD | ||||||||

| Superior temporal gyrus | L | BA 22/41 | −51 | −14 | −1 | 5.28 | 1228 | −52, −14, −2 |

| Transverse/superior temporal gyrus | R | BA 41/22 | 46 | −25 | 10 | 4.89 | 858 | 46, −26, 10 |

| Superior temporal gyrus | L | BA 38 | −40 | −1 | −17 | 4.00 | 41 | −40, 0, −20 |

| Posterior cingulate/lingual gyrus/cuneus | R/R/L | BA 30/18/30 | 16 | −68 | 7 | 3.73 | 127 | 16, −70, 4 |

| SAD >HAPPY | ||||||||

| Inferior frontal gyrus | R | BA 47/11 | 30 | 26 | −15 | 3.70 | 41 | 30, 28, −16 |

| MUSICIANS >NON-MUSICIANS | ||||||||

| Declive | R | N/A | 28 | −65 | −19 | 4.63 | 82 | 28, −66, −26 |

| Precuneus | L | BA 7 | −22 | −62 | 36 | 4.40 | 44 | −22, −66, 36 |

| Precuneus | R | BA 7 | 24 | −60 | 38 | 4.07 | 65 | 24, −64, 38 |

| Precentral gyrus | R | BA 6 | 38 | −10 | 35 | 3.98 | 42 | 38, −12, 38 |

| Cingulate gyrus | L | BA 24 | −4 | −12 | 37 | 3.95 | 32 | −4, −14, 40 |

| Post-central gyrus | L | BA 3 | −20 | −30 | 53 | 3.78 | 53 | −20, −34, 56 |

| Ventral lateral nucleus/mammillary body | L | N/A | −16 | −17 | 10 | 3.71 | 34 | −16, −18, 10 |

| Precentral gyrus | L | BA 6 | −44 | −12 | 39 | 3.70 | 73 | −44, −14, 42 |

| Insula | L | BA 13 | −40 | −32 | 20 | 3.69 | 36 | −40, −34, 20 |

| Inferior parietal lobule | R | BA 40 | 46 | −48 | 47 | 3.67 | 62 | 46, −52, 48 |

| NEGATIVE INTERACTION: LIKING × EMOTION (LS +DH>LH + DS) | ||||||||

| Parahippocampal gyrus | R | BA 19 | 30 | −47 | 1 | 3.74 | 35 | 30, −48, −2 |

| POSITIVE INTERACTION: LIKING × GROUP (LHNM + LSNM + DHM + DSM>LHM + LSM + DHNM + DSNM) | ||||||||

| Cerebellar tonsil | L | N/A | −10 | −50 | −34 | 3.87 | 54 | −10, −50, −44 |

| POSITIVE INTERACTION: EMOTION × GROUP (LHNM + LSM + DHNM + DSM>LHM + LSNM + DHM + DSNM) | ||||||||

| Declive | R | N/A | 4 | −65 | −15 | 4.63 | 212 | 4, −66, −22 |

| Red nucleus | L | N/A | −2 | −26 | −14 | 4.47 | 57 | −2, −26, −18 |

| Posterior cingulate | R | BA 30 | 20 | −52 | 15 | 3.78 | 44 | 20, −54, 14 |

| Superior temporal gyrus | L | BA 22 | −57 | 2 | 5 | 3.48 | 29 | −58, 2, 6 |

| Claustrum/insula | L | BA 13 | −36 | −11 | 12 | 3.45 | 38 | −36, −12, 12 |

| POSITIVE INTERACTION: LIKING × EMOTION × GROUP (LHNM + LSM+DHM+DSNM>LHM + LSNM +DHNM + DSM) | ||||||||

| Inferior frontal gyrus | R | BA 47 | 40 | 17 | −9 | 3.57 | 30 | 40, 18, −10 |

| NEGATIVE INTERACTION: LIKING × EMOTION × GROUP (LHM + LSNM +DHNM + DSM>LHNM + LSM+DHM+DSNM) | ||||||||

| Insula/transverse temporal gyrus | L | BA 13/41 | −38 | −28 | 16 | 3.60 | 40 | −38, −30, 16 |

Clusters were considered significant at p < 0.001, and cluster size k>29 (as obtained with Monte Carlo permutations). Coordinates are in MNI space. Only effects and interactions producing significant clusters are reported.

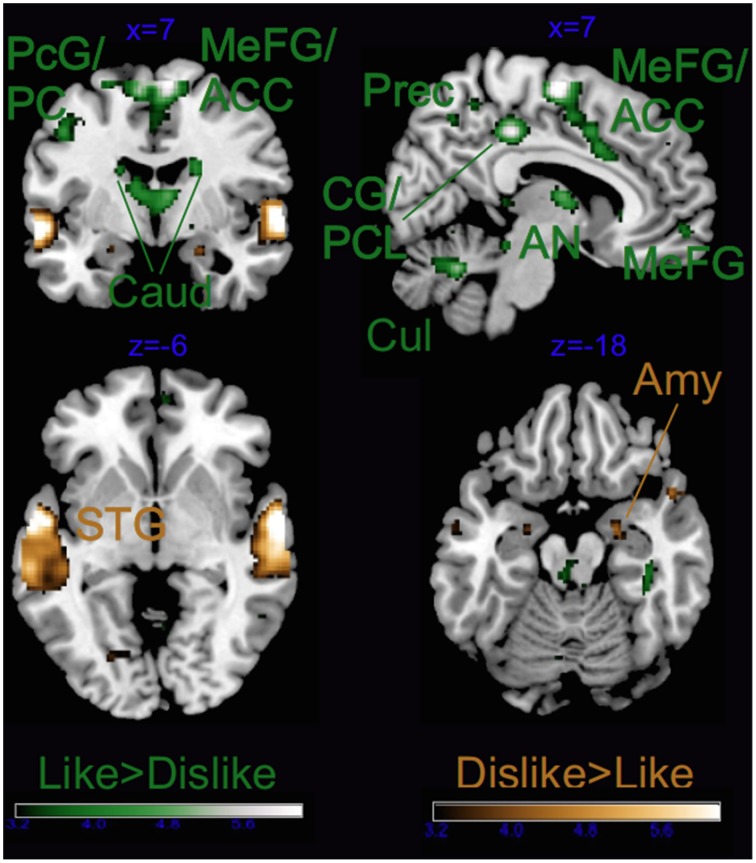

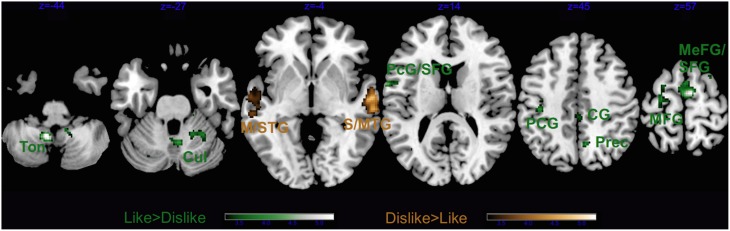

As illustrated in Tables 2, 3 and Figure 4, most of the activations obtained by the Liking factor in the GLM were driven by the contrast Liked > Disliked. Listening to liked music over disliked music activated large clusters in the bilateral medial frontal, anterior cingulate gyri, and paracentral lobule in addition to the bilateral caudate nucleus of the basal ganglia, and the anterior nucleus of the thalamus. In the right hemisphere, we found activations in the parahippocampal gyrus, the superior temporal gyrus, the precuneus and the medial frontal gyrus whereas activations of somatomotor areas (precentral, post-central, and middle frontal gyri) were lateralized to the left hemisphere. Several loci were activated in the cerebellum, including bilaterally the culmen and declive. On the other hand, the opposite contrast Disliked > Liked music resulted in activations only in the bilateral middle temporal gyri, the right amygdala and the left lingual gyrus.

Figure 4.

Main effect of Liking. ACC, anterior cingulate cortex; Caud, caudate; CG, cingulate gyrus; PCL, paracentral lobule; PC, posterior cingulate; Cul, cerebellar culmen; STG, superior temporal gyrus; Amy, amygdala; AN, thalamic anterior nucleus; Prec, precuneus; and PcG, precentral gyrus. Activations were considered significant at p < 0.001, and cluster size k> 29 (as obtained with Monte Carlo permutations).

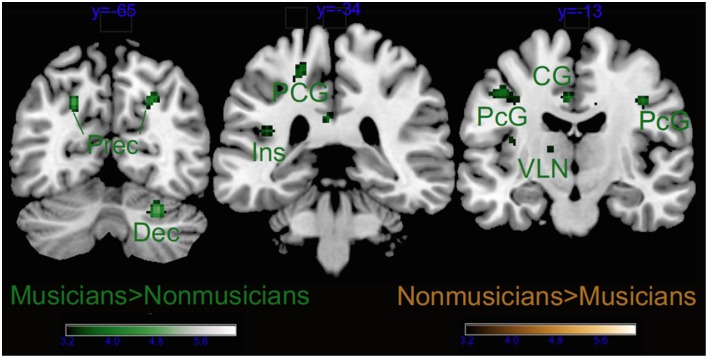

Group as a factor was also associated with activation in several brain areas, alone or in interaction with the other factors (see Tables 2, 3 and Figure 5). As revealed by t-tests for the main effect of Group, the main differences between musicians and non-musicians were obtained in somatomotor regions such as the left post-central and precentral gyri and the right declive of the cerebellum, and were explained by larger activity in the musicians' brains. On the other hand, the non-musicians' brains were never more active than those of musicians during affective listening to music.

Figure 5.

Main effect of Group. Dec, cerebellar declive; Ins, insula; PcG, precentral gyrus; PCG, post-central gyrus; CG, cingulate gyrus; and VLN, ventral lateral nucleus of the thalamus. Activations were considered significant at p < 0.001, and cluster size k > 29 (as obtained with Monte Carlo permutations).

We also obtained significant clusters of activation for the interaction of Group × Emotion in the right posterior cingulate, the red nucleus of the left brainstem, and the right declive of the cerebellum. The Liking × Group interaction was also significant in the tonsil of the cerebellum, whereas the 3-way interaction Group × Liking × Emotion did not yield any significant activation at our Monte Carlo-based alpha level. When allowing for a smaller cluster size, however, an interaction in brain activity was observed in the insula and the anterior cingulate cortex, as reported in the following sections. To study the complex 3-way interaction of Group × Liking × Emotion more closely, we conducted separate analyses for musicians and non-musicians.

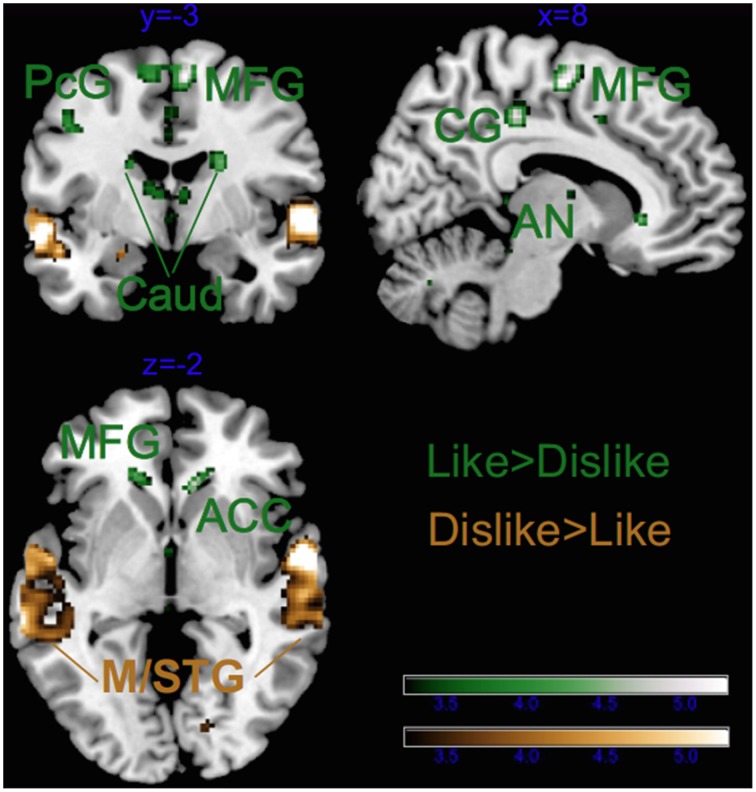

Separate GLM for musicians

Figure 6 and Table 4 illustrate the results for the main effect of Emotion in musicians only. The contrast Happy > Sad revealed that only the bilateral auditory cortices were recruited, whereas the opposite contrast (Sad > Happy) revealed no significant clusters. In contrast, the Liked > Disliked music comparison recruited large clusters in the bilateral caudate nuclei (see Figure 7 and Table 4). Greater activations were also obtained in the frontal lobe, with large right-hemispheric clusters including the medial frontal gyrus (including the orbitofrontal cortex) and the cingulate gyri, extending to the anterior cingulate. The left frontal and adjacent parietal lobes were active in the medial frontal gyrus, the paracentral lobule, the inferior parietal lobule, and the precuneus. The right insula, the pulvinar thalamus, and the left declive of the cerebellum were also activated during listening to favorite music in musicians. On the other hand, Disliked > Liked music recruited only auditory areas in musicians, as evident from Figure 7 and Table 4.

Figure 6.

Main effect of Emotion for musicians only. STG, superior temporal gyrus. Activations were considered significant at p < 0.001, and cluster size k> 29 (as obtained with Monte Carlo permutations).

Table 4.

Coordinates and statistical values of the full factorial analyses of fMRI responses performed separately for musicians.

| MUSICIANS | ||||||||

|---|---|---|---|---|---|---|---|---|

| Region | L/R | BA | x | y | z | Z | k | MNI coordinates (x, y, z) |

| Main Effects | ||||||||

| LIKING | ||||||||

| Middle/superior temporal gyrus | L | BA 21/22 | −61 | −8 | −8 | 5.38 | 968 | −62, −8, −10 |

| Middle/superior temporal gyrus | R | BA 21/22 | 61 | −6 | −3 | 5.27 | 1058 | 62, −6, −4 |

| Medial frontal gyrus | R/L | BA 6 | 6 | −5 | 56 | 5.03 | 215 | 6, −8, 60 |

| Cingulate gyrus | R | BA 31 | 10 | −27 | 42 | 4.37 | 92 | 10, −30, 44 |

| Precentral gyrus | L | BA 4 | −48 | −6 | 43 | 4.35 | 64 | −48, −8, 46 |

| Caudate body | L | N/A | −18 | 1 | 22 | 4.19 | 31 | −18, 0, 24 |

| Anterior cingulate | R | BA 24 | 10 | 27 | −3 | 4.14 | 37 | 10, 28, −2 |

| Caudate body | R | N/A | 22 | −1 | 22 | 3.99 | 49 | 22, −2, 24 |

| Precuneus | L | BA 7 | −12 | −46 | 48 | 3.94 | 37 | −12, −50, 50 |

| Medial frontal gyrus | L | BA 10 | −16 | 31 | −3 | 3.76 | 29 | −16, 32, −2 |

| Cingulate gyrus | R | BA 32 | 2 | 10 | 40 | 3.64 | 34 | 2, 8, 44 |

| Paracentral lobule | L | BA 31 | −4 | −27 | 46 | 3.60 | 39 | −4, −30, 48 |

| EMOTION | ||||||||

| Superior temporal gyrus | L | BA 22 | −51 | −4 | −5 | 4.32 | 80 | −52, −4, −6 |

| T-tests | ||||||||

| LIKE > DISLIKE | ||||||||

| Medial frontal gyrus | R | BA 6 | 6 | −5 | 56 | 5.16 | 287 | 6, −8, 60 |

| Cingulate gyrus/paracentral lobule | R/L | BA 31 | 10 | −27 | 42 | 4.52 | 202 | 10, −30, 44 |

| Precentral gyrus | L | BA 4 | −48 | −6 | 43 | 4.50 | 89 | −48, −8, 46 |

| Caudate body | L | N/A | −18 | 1 | 22 | 4.34 | 46 | −18, 0, 24 |

| Anterior cingulate | R | BA 24 | 10 | 27 | −3 | 4.30 | 59 | 10, 28, −2 |

| Caudate body | R | N/A | 22 | −1 | 22 | 4.15 | 71 | 22, −2, 24 |

| Precuneus | L | BA 7 | −12 | −46 | 48 | 4.10 | 53 | −12, −50, 50 |

| Medial frontal gyrus | L | BA 10 | −16 | 31 | −3 | 3.93 | 45 | −16, 32, −2 |

| Cingulate gyrus | R | BA 32/24 | 2 | 10 | 40 | 3.82 | 88 | 2, 8, 44 |

| Pulvinar | R/L | N/A | 6 | −33 | 7 | 3.79 | 64 | 6, −34, 6 |

| Caudate tail | L | N/A | −18 | −22 | 20 | 3.74 | 31 | −18, −24, 20 |

| Declive | L | N/A | −8 | −69 | −17 | 3.71 | 39 | −8, −70, −24 |

| Inferior parietal lobule | L | BA 40 | −50 | −37 | 39 | 3.68 | 36 | −50, −40, 40 |

| Insula | R | BA 13 | 44 | 4 | 5 | 3.49 | 32 | 44, 4, 6 |

| DISLIKE > LIKE | ||||||||

| Middle/superior temporal gyrus | L | BA 21/22 | −61 | −8 | −8 | 5.50 | 1300 | −62, −8, −10 |

| Middle/superior temporal gyrus | R | BA 21/22 | 61 | −6 | −3 | 5.39 | 1298 | 62, −6, −4 |

| HAPPY > SAD | ||||||||

| Superior temporal gyrus | L | BA 22 | −51 | −4 | −5 | 4.47 | 138 | −52, −4, −6 |

| Superior temporal gyrus | R | BA 41 | 48 | −27 | 9 | 3.82 | 41 | 48, −28, 8 |

Clusters were considered significant at p < 0.001, and cluster size k > 29 (as obtained with Monte Carlo permutations). Coordinates are in MNI space. Only effects and interactions producing significant clusters are reported.

Figure 7.

Main effect of Liking for musicians only. ACC, anterior cingulate cortex; Caud, caudate; CG, cingulate gyrus; STG, superior temporal gyrus; MTG, middle temporal gyrus; AN, anterior nucleus of the thalamus; PcG, precentral gyrus; and MFG, middle frontal gyrus. Activations were considered significant at p < 0.001, and cluster size k> 29 (as obtained with Monte Carlo permutations).

Separate GLM for non-musicians

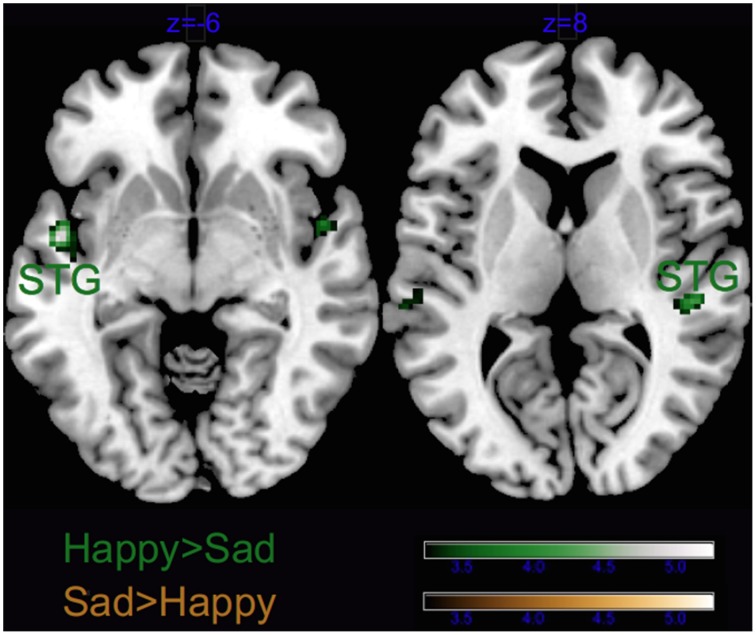

In non-musicians, the contrast Happy > Sad music revealed significant clusters in the right-hemispheric auditory cortices, the left lingual gyrus, the right cuneus, and the right declive of the cerebellum (Figure 8 and Table 5). As in musicians, no significant cluster was found for Sad > Happy music.

Figure 8.

Main effect of Emotion for non-musicians only. Dec, cerebellar declive; STG, superior temporal gyrus; Cun, cuneus; TTG, transverse temporal gyrus; and LG, lingual gyrus. Activations were considered significant at p < 0.001, and cluster size k > 29 (as obtained with Monte Carlo permutations).

Table 5.

Coordinates and statistical values of the full factorial analyses of fMRI responses performed separately for non-musicians.

| NON-MUSICIANS | ||||||||

|---|---|---|---|---|---|---|---|---|

| Region | L/R | BA | x | y | z | Z | k | MNI coord |

| Main Effects | ||||||||

| LIKING | ||||||||

| Cerebellar tonsil | L | N/A | −8 | −52 | −34 | 4.84 | 80 | −8, −52, −44 |

| Medial frontal gyrus | R/L | BA 6 | 6 | −3 | 63 | 4.58 | 148 | 6, −6, 68 |

| Superior/middle temporal gyrus | R | BA 21/22 | 61 | −12 | −1 | 4.21 | 367 | 62, −12, −2 |

| Culmen | R | N/A | 20 | −50 | −21 | 3.95 | 113 | 20, −50, −28 |

| Middle/superior temporal gyrus | L | BA 21 | −59 | −6 | −5 | 3.92 | 175 | −60, −6, −6 |

| Cingulate gyrus | R | BA 31 | 4 | −31 | 40 | 3.89 | 74 | 4, −34, 42 |

| Precentral gyrus/superior temporal gyrus | L | BA 6/22 | −61 | 3 | 13 | 3.85 | 32 | −62, 2, 14 |

| Culmen | R | N/A | 4 | −57 | −19 | 3.68 | 51 | 4, −58, −26 |

| Post-central gyrus | L | BA 3 | −40 | −21 | 45 | 3.63 | 69 | −40, −24, 48 |

| EMOTION | ||||||||

| Cuneus | R | BA 23 | 12 | −73 | 11 | 4.08 | 76 | 12, −76, 8 |

| Superior temporal gyrus | L | BA 22/41/42 | −53 | −12 | −1 | 3.90 | 358 | −54, −12, −2 |

| Transverse temporal gyrus | R | BA 41 | 44 | −23 | 12 | 3.77 | 65 | 44, −24, 12 |

| Superior temporal gyrus | R | BA 22 | 57 | −10 | 2 | 3.75 | 76 | 58, −10, 2 |

| Lingual gyrus | L | BA 17 | −12 | −87 | 3 | 3.53 | 35 | −12, −90, −2 |

| LIKING × EMOTION | ||||||||

| Inferior frontal gyrus | R | BA 47 | 50 | 19 | −8 | 4.28 | 55 | 50, 20, −8 |

| T-Tests | ||||||||

| LIKE > DISLIKE | ||||||||

| Cerebellar tonsil | L | N/A | −8 | −52 | −34 | 4.97 | 99 | −8, −52, −44 |

| Medial/superior frontal gyrus | R/L | BA 6 | 6 | −3 | 63 | 4.72 | 204 | 6, −6, 68 |

| Uvula | L | N/A | −22 | −65 | −25 | 4.11 | 49 | −22, −66, −34 |

| Culmen | R | N/A | 20 | −50 | −21 | 4.11 | 163 | 20, −50, −28 |

| Cingulate gyrus | R | BA 31 | 4 | −31 | 40 | 4.05 | 123 | 4, −34, 42 |

| Precentral gyrus/superior temporal gyrus | L | BA 6/22 | −61 | 3 | 13 | 4.02 | 52 | −62, 2, 14 |

| Culmen | R | N/A | 4 | −57 | −19 | 3.85 | 71 | 4, −58, −26 |

| Precuneus | R | BA 7 | 8 | −56 | 47 | 3.84 | 31 | 8, −60, 48 |

| Post-central gyrus | L | BA 3 | −40 | −21 | 45 | 3.81 | 123 | −40, −24, 48 |

| Middle frontal gyrus/precentral gyrus | L | BA 6 | −24 | −11 | 61 | 3.69 | 73 | −24, −14, 66 |

| DISLIKE > LIKE | ||||||||

| Superior/middle temporal gyrus | R | BA 21/22 | 61 | −12 | −1 | 4.37 | 470 | 62, −12, −2 |

| Middle/superior temporal gyrus | L | BA 21 | −59 | −6 | −5 | 4.08 | 348 | −60, −6, −6 |

| HAPPY > SAD | ||||||||

| Cuneus | R | BA 23 | 12 | −73 | 11 | 0.277 | 116 | 12, −76, 8 |

| Superior temporal gyrus | L | BA 22/41/42 | −53 | −12 | −1 | 0.453 | 633 | −54, −12, −2 |

| Transverse temporal gyrus | R | BA 41/22 | 44 | −23 | 12 | 0.603 | 114 | 44, −24, 12 |

| Superior temporal gyrus | R | BA 22 | 57 | −10 | 2 | 0.624 | 134 | 58, −10, 2 |

| Lingual gyrus | L | BA 17 | −12 | −87 | 3 | 0.853 | 57 | −12, −90, −2 |

| Declive | R | N/A | 16 | −67 | −17 | 0.969 | 43 | 16, −68, −24 |

| POSITIVE INTERACTION: LIKING × EMOTION (LH + DS>LS+DH) | ||||||||

| Inferior frontal gyrus | R | BA 47 | 50 | 19 | −8 | 4.44 | 85 | 50, 20, −8 |

| NEGATIVE INTERACTION: LIKING × EMOTION (LS + DH > LH + DS) | ||||||||

| Paracentral lobule/superior parietal lobule | L | BA 5/7 | −8 | −40 | 59 | 4.17 | 51 | −8, −44, 62 |

| Superior temporal gyrus | R | BA 41 | 38 | −35 | 5 | 3.70 | 36 | 38, −36, 4 |

| Insula | R | BA 13 | 32 | −24 | 21 | 3.59 | 30 | 32, −26, 22 |

Clusters were considered significant at p < 0.001, and cluster size k> 29 (as obtained with Monte Carlo permutations). Coordinates are in MNI space. Only effects and interactions producing significant clusters are reported.

The contrast Liked > Disliked music in non-musicians revealed larger brain activity bilaterally in the medial frontal gyrus, as well as the right cingulate gyrus, and the left superior temporal gyrus extending to the precentral and middle frontal gyri (Figure 9 and Table 5). The cerebellum was also recruited with the right culmen and the left tonsil and uvula. Similarly to musicians, Disliked > Liked music only activated the bilateral auditory cortices.

Figure 9.

Main effect of Liking for non-musicians only. STG, superior temporal gyrus; MTG, middle temporal gyrus; PcG, precentral gyrus; SFG, superior frontal gyrus; PCG, post-central gyrus; CG, cingulate gyrus; MeFG, medial frontal gyrus; MFG, middle frontal gyrus; Prec, precuneus; Cul, cerebellar culmen; and Ton, cerebellar tonsil. Activations were considered significant at p < 0.001, and cluster size k> 29 (as obtained with Monte Carlo permutations).

Correlations between acoustic features and BOLD signal

Pearson's correlation tests between the signal change extracted from the activated clusters for the main effect of Liking and the acoustic features that significantly differentiated the musical stimuli revealed a significant negative relation between the activity in the right declive of the cerebellum and the Dynamics-related features in the musical excerpts (r = −0.304, p = 0.001). The liking-related activity in the right-hemispheric declive also correlated positively with the Timbre-related features of the music (r = 0.335, p < 0.0001). Conversely, for the main effect of Emotion, the Dynamics-related features correlated negatively with the neural activity in the right posterior cingulate gyrus (r = −0.241, p = 0.009) and positively with the neural activity in the left superior temporal gyrus (r = 0.228, p = 0.01). However, these correlations did not survive Bonferroni correction.

Discussion

This study makes two important contributions, one to the literature on music emotions and one to the literature on musical expertise. First, by using an orthogonal design we succeeded in disentangling the brain structures responsible for the perception of sad and happy emotions in music from those related to liking and, hence, musical enjoyment. Second, we provided original evidence for functional differences in the limbic system between musicians and non-musicians, by showing enhanced liking-related activity in fronto-insular and cingulate areas (belonging to the salience processing network) in individuals who for years had been deeply enjoying music and were trained to convey emotions when performing.

Neural correlates of music liking and disliking

In both groups, listening to their most liked music activated several deep subcortical and medial cortical structures of the brain belonging to neural networks controlling emotional and motivational experiences, that is, the limbic and paralimbic system (amygdala, parahippocampal gyrus, medial prefrontal cortex, anterior cingulate gyrus) and the reward circuit (caudate, medial prefrontal cortex, mediodorsal thalamus). The parahippocampal gyrus has previously been related to the discrimination of valence or pleasantness in music (Blood et al., 1999). The amygdala is instead supposed to have a broader alerting function as it processes the intensity, arousal potential and novelty of affective signals (e.g., Small et al., 2003; Sescousse et al., 2013; Frühholz et al., 2014). Indeed, in the musical domain, amygdalar activity has been reported for positive and negative emotions such as fear and joy (Eldar et al., 2007). Recruitment of the amygdala has also been associated with tonal novelty defined by the incongruity of chords ending a Western tonal cadence (Koelsch et al., 2008).

In the current study, the right amygdala was more active during listening to disliked music clips than during liked ones. Lateralized activity of the amygdala has been observed in a meta-analysis of studies for the visual modality (Fusar-Poli et al., 2009), but, contrary to our findings, the left amygdala was associated with negative emotions and the right amygdala with positive ones. Our recent studies, though, confirm the reversed pattern of increased left amygdalar activity and connectivity during continuous listening to music judged as pleasant (Toiviainen et al., submitted; Bogert et al., submitted). As was also suggested in a recent review (Brattico, 2015), the discrepancy between findings obtained in visual and music studies might be related to the distinct functions of the features eliciting emotions in the two modalities; e.g., in vision the amygdala is more strongly activated by low spatial frequency content (as compared to high frequency), to allow fast recognition of objects and potential danger such as that conveyed by fearful faces (Vuilleumier et al., 2003), whereas in audition fine spectrotemporal resolution is needed to discern potentially dangerous rough, dissonant signals, such as predator calls (Peretz, 2010).

In conjunction with the amygdala, the inferior frontal regions (Tillmann et al., 2006), the anterior superior temporal gyrus, and the basal ganglia (especially the head of the caudate; Seger et al., 2013) were activated in association with violations of different kinds of musical expectancies. Salimpoor et al. (2015) summarize that the posterior inferior frontal gyrus in the right hemisphere tracks expectancies in musical structure, such as how well chords fit the preceding context according to the Western harmony rules of chord succession, that the caudate is associated with temporal anticipation of a reward experience, and that amygdala (and nucleus accumbens) activation is related to the emotional outcome of the expectancy processes. However, in our study emotion-related structures (amygdala, parahippocampal gyrus, and caudate) co-activated with dorsomedial rather than inferolateral frontal regions, similarly to recent fMRI findings obtained with pleasant 10 sec excerpts from film soundtracks (Altenmüller et al., 2014). Metabolic activity in the premotor cortex, residing in the precentral frontal gyri, is related to somatomotor representations and imagery. Some of the clusters found active during listening to favorite music belong to the mirror neuron system, which is active not only during action execution but also during action observation (Rizzolatti et al., 1996; Hari et al., 1998). Some authors have hypothesized a role of the mirror neuron system in aesthetic enjoyment and emotion recognition for both the visual and auditory domains (Molnar-Szakacs and Overy, 2006; Gallese and Freedberg, 2007); however, there is little consensus in the field because evidence for mirror neurons in humans is still insufficient (Pascolo et al., 2010). Nevertheless, several studies using highly pleasurable music from different styles as stimuli also reported activity in premotor and supplementary motor areas (Blood and Zatorre, 2001; Salimpoor et al., 2013). An alternative explanation for neural activity in medial supplementary motor areas (BA 6, but not in primary motor cortex, M1) during affective music listening might be silent vocalization, previously documented in a study of imagined compared with overt singing of an Italian aria (Kleber et al., 2007).

In addition to limbic and motor structures, favorite music activated several thalamic clusters, including dorsomedial and pulvinar regions, which are considered important value-coding centers for reward experiences (Sescousse et al., 2013). In a meta-analysis of 87 neuroimaging studies, the dorsomedial thalamus was one of the structures consistently associated with different monetary, food, and erotic rewards (Sescousse et al., 2013). Although it is rarely discussed in the reward literature, and even less in music studies, the dorsomedial thalamus is an important relay center between the basal ganglia and the prefrontal cortex. The pulvinar thalamus, which is connected with cingulate, associative parietal, and premotor cortices (Cappe et al., 2009), was also found activated here. This striatal-thalamo-cortical loop, associated in the current study with liked music stimuli, might hence represent a bridge between reward signals in the ventral striatum and higher cognitive processes, such as motivation and goal-directed behavior, occurring in cingulate and frontal areas.

Neural correlates of happiness and sadness in music

Listening to liked music (as opposed to disliked music) elicited neural activity in emotional and motivational structures of the brain, including deep subcortical centers controlling arousal and autonomic nervous system activity, whereas listening to happy music (as contrasted to sad music) elicited activity in sensory areas, namely the bilateral primary and non-primary auditory cortices. This replicates previous findings using instrumental classical music (Mitterschiffthaler et al., 2007) and also music excerpts with lyrics from various styles (Brattico et al., 2011). Re-entry mechanisms from the nucleus accumbens into the auditory cortex, boosting the neural activity to happy-sounding, rewarding, and motivating sounds, may be at the origin of this effect (Budinger and Scheich, 2009; Puschmann et al., 2013; Salimpoor et al., 2013). However, since disliked music was also included in the fMRI responses to happy musical excerpts, the above explanation holds only partly. An alternative, more likely explanation for the auditory cortex activity during happy music can be found in the acoustic characteristics of happy compared to sad music, such as brighter timbre, more staccato articulation, faster tempo, and major tonality, as found in the acoustic feature analysis of this study and in previous literature (Brattico et al., 2011), as well as in the specialization of the right and left auditory cortex for spectro-temporal attributes (Zatorre et al., 2002).

Posterior medial structures (lingual gyrus, cuneus, posterior cingulate gyrus) were previously found to be active during resting state (Fox et al., 2009) and also in association with processing of timbral features during continuous listening to music (Alluri et al., 2012). A neural dissociation between basic emotional processing and motivational processes in music has been hypothesized earlier (Vuilleumier and Trost, 2015) and hinted at by behavioral evidence (Fletcher et al., 2013), but thus far never demonstrated. In a previous behavioral study (Matthews et al., 2009), JS, a neurological patient suffering from auditory agnosia of undefined etiology, showed chance-level recognition of basic emotions (including their negative or positive content) in environmental sounds and musical pieces but preserved enjoyment of favorite music. In sum, our findings concerning the neural correlates of happiness and sadness in music replicate and also clarify previous findings by identifying the brain structures that are specifically activated by the discrete emotions, irrespective of their hedonic value.

Behavioral ratings relating liking with the intensity of discrete emotions perceived

These findings have been obtained by using music selected by the subjects themselves. This design was adopted for enhancing the potential of music to convey both basic and pleasurable emotions. Based on their behavioral ratings, subjects were successful in selecting music that was perceived as sad or happy. The excerpts extracted from the music, lasting 18 sec, were even able to induce happy and sad felt emotions consistent with expectations, and this was all the more true when the music was also preferred by the subjects, hence replicating behavioral findings (Kreutz et al., 2008; Salimpoor et al., 2009). The capacity of musical (or visual) stimuli to induce feelings in the participants (in other words, to move them) has been considered a pivotal psychological construct. According to recent accounts, the psychological state of being moved can alone explain the pleasure derived from negatively connoted aesthetic stimuli, such as sadness-inducing music or films or disgust-inducing pictures when displayed in an art context (Kreutz et al., 2008; Hanich et al., 2014; Wagner et al., 2014). Focusing on peak experiences in response to artworks, Konecni (2008) proposed an Aesthetic Trinity Theory including three separate aesthetic responses: “aesthetic awe,” defined as the rare, intense, highly memorable peak experience; the state of “being moved,” a more frequent and less intense experience; and thrills or chills, namely the physiological responses that in Konecni's opinion occur more commonly (although other reports found them to be rare; Grewe et al., 2009). In this regard, our data provide further evidence linking the psychological construct of being moved with stronger aesthetic responses to music, namely, higher emotion induction ratings for liked music. In an attempt to chronometrically organize the various processes and responses occurring during a musical aesthetic experience, Brattico et al. (2013) situated the aesthetic emotion of enjoyment in temporal succession after the induction and perception of discrete emotions from music and before liking, conceptualized as a conscious act of judgment of the preceding affective and cognitive reactions to the music heard.

Controlling for familiarity

Our findings were obtained while controlling for familiarity of the musical excerpts. Based on post-experimental interviews and on the behavioral ratings, the excerpts belonging to the different experimental categories were all considered familiar, corresponding to ratings exceeding the midpoint of the scale, with slightly higher ratings of familiarity for the favorite sad music. While confident that the musical excerpts were all familiar to our subjects, even when disliked (and in several instances, those disliked pieces were very corny pop hits of the moment), we included the familiarity ratings from the listening test as individual covariates in the fMRI analysis. This allowed us to remove those additional effects in the reward areas and limbic system which by themselves would differentiate the liked from the disliked music or the happy from the sad one, simply based on previous listening exposure, as evidenced by a recent fMRI study (Pereira et al., 2011). Indeed, repeated exposure to musical excerpts increased ratings of emotional arousal, induced subjective reports of pleasure and also electrodermal activity in listeners (Salimpoor et al., 2009; van den Bosch et al., 2013).

Sensory-motor brain responses in musicians and non-musicians

In line with previous literature, the present neuroimaging findings revealed functional differences between the brains of musicians and non-musicians (Münte et al., 2002; Tervaniemi, 2012; Merrett et al., 2013; Reybrouck and Brattico, 2015; Schlaug, 2015). In particular, musicians (as contrasted with non-musicians) showed an overall increased activity in somatomotor regions, including the precentral and post-central cerebral gyri and the cerebellar declive. Previously, neurophysiological studies demonstrated that the functional adaptations of somatomotor regions are dependent on the specific demands of instrumental practice. For instance, larger neurophysiological responses in the right somatosensory cortex, indexing more accurate representations of the fingers of the left hand, were found in string players as opposed to non-musicians, with the amount of response increase dependent on the years of training (Elbert et al., 1995). In experienced opera singers, increased functional activation dependent on the amount of singing practice has been found in the bilateral regions of the primary somatosensory cortex representing the articulatory vocal tract and larynx, and subcortically in the basal ganglia, thalamus, and cerebellum (Kleber et al., 2013). A recent fMRI study also revealed less symmetric functional activation of somatomotor (and other) regions in string players as contrasted with keyboard players (Burunat et al., 2015). Long-term repeated activation of somatomotor cortex in musicians also results in permanent structural changes in the volume of the anatomical structures. Volumetric studies have repeatedly shown larger premotor cortex and cerebellum in (male) musicians as compared with non-musicians (Gärtner et al., 2013; Schlaug, 2015). The changes in right superior and middle frontal regions (along with the hippocampus) depend on the amount of training and are visible from the beginning of musical training onward, as shown by a volumetric study contrasting musicians who started their training before 7 years of age with those who started later (Groussard et al., 2014), and in another study investigating children who studied music for just 15 months (Hyde et al., 2009). Moreover, a longer persistence with musical practice seems to be necessary for structural changes in the right insula, left posterior cingulate cortex, superior temporal areas, and right supplementary motor area (Groussard et al., 2014).

Limbic system responses in musicians and non-musicians