Significance

The environment is full of temporal information that links specific auditory and visual representations to each other. Especially in speech, this is used to guide perception. The current paper shows that syllables with varying visual-to-auditory delays are preferentially processed at different oscillatory phases. This mechanism facilitates the separation of different representations based on consistent temporal patterns in the environment and provides a way to categorize and memorize information, thereby optimizing a wide variety of perceptual processes.

Keywords: oscillations, phase, audiovisual, speech, temporal processing

Abstract

The role of oscillatory phase for perceptual and cognitive processes is being increasingly acknowledged. To date, little is known about the direct role of phase in categorical perception. Here we show in two separate experiments that the identification of ambiguous syllables that can either be perceived as /da/ or /ga/ is biased by the underlying oscillatory phase as measured with EEG and sensory entrainment to rhythmic stimuli. The measured phase difference in which perception is biased toward /da/ or /ga/ exactly matched the different temporal onset delays in natural audiovisual speech between mouth movements and speech sounds, which last 80 ms longer for /ga/ than for /da/. These results indicate the functional relationship between prestimulus phase and syllable identification, and signify that the origin of this phase relationship could lie in exposure and subsequent learning of unique audiovisual temporal onset differences.

In spoken language, visual mouth movements naturally precede the production of any speech sound, and therefore serve as a temporal prediction and detection cue for identifying spoken language (1) (but also see ref. 2). Different syllables are characterized by unique visual-to-auditory temporal asynchronies (3, 4). For example, /ga/ has an 80-ms longer delay than /da/, and this difference aids categorical perception of these syllables (4). We propose that neuronal oscillations might carry the information to dissociate these syllables based on temporal differences. Multiple authors have proposed (5–7)—and it has been demonstrated empirically (7–9)—that at the onset of visual mouth movements, ongoing oscillations in auditory cortex align (see refs. 10–12 for nonspeech phase reset), providing a temporal reference frame for the auditory processing of subsequent speech sounds. Consequently, auditory signals fall on different phases of the aligned oscillation depending on the unique visual-to-auditory temporal asynchrony, resulting in a consistent relationship between syllable identity and oscillatory phase.

We hypothesized that this consistent “phase–syllable” relationship results in ongoing oscillatory phase biasing syllable perception. More specifically, the phase at which syllable perception is mostly biased should be proportional to the visual-to-auditory temporal asynchrony found in natural speech. A naturally occurring /ga/ has an 80-ms longer visual-to-auditory onset difference than a naturally occurring /da/ (4). Consequently, the phase difference between perception bias toward /da/ and /ga/ should match 80 ms, which can only be established with an oscillation with a period greater than 80 ms, that is, any oscillation under 12.5 Hz. The apparent relevant oscillation range is therefore theta, with periods ranging between 111 and 250 ms (4–9 Hz). This oscillation range has already been proposed as a candidate to encode information, and seems specifically important for speech perception (13, 14).
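The arithmetic behind this frequency bound can be checked with a short sketch. It converts the 80-ms delay into a phase angle at a few candidate frequencies; the helper name `phase_shift` and the chosen frequencies are illustrative and not part of the original analysis.

```python
import math

def phase_shift(delay_s: float, freq_hz: float) -> float:
    """Phase angle (radians) spanned by a fixed delay at a given frequency."""
    return 2.0 * math.pi * freq_hz * delay_s

DELAY_S = 0.080  # /ga/ minus /da/ visual-to-auditory delay, as stated in the text

# The delay fits within a single cycle only if the period exceeds the delay.
max_freq_hz = 1.0 / DELAY_S

for f in (4.0, 6.0, 6.25, 9.0):
    deg = math.degrees(phase_shift(DELAY_S, f))
    print(f"{f:5.2f} Hz: period {1000.0 / f:6.1f} ms, 80 ms spans {deg:6.1f} deg")
```

At 6.25 Hz the 80-ms delay spans half a cycle, which is the rate later used for entrainment in experiment 2.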

To test this hypothesis of oscillatory phase biasing auditory syllable perception in the absence of visual signals, we presented ambiguous auditory syllables that could be interpreted as /da/ or /ga/ while recording EEG. In a second experiment, we used sensory entrainment (thereby externally enforcing oscillatory patterns) to demonstrate that entrained phase indeed determines whether participants identify the presented ambiguous syllable as being either /da/ or /ga/.

Results

Experiment 1.

Psychometric curves.

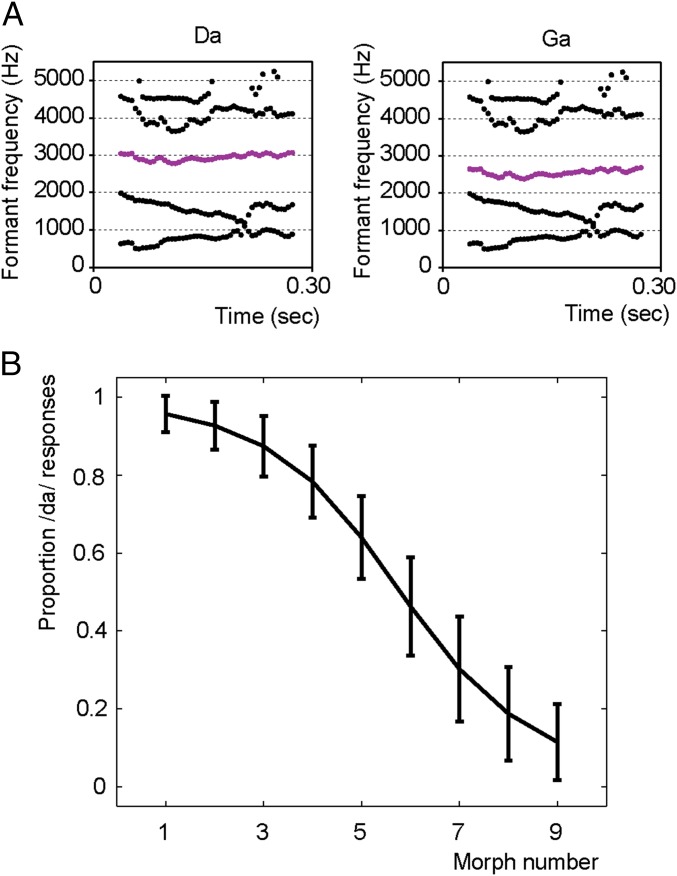

First, we created nine morphs between a /da/ and a /ga/ by shifting the third formant frequency of a recorded /da/ from around 3,000 Hz down to around 2,600 Hz (Fig. 1A). We determined the individual threshold at which participants would identify a morphed stimulus 50% as /da/ and 50% as /ga/ by repeatedly presenting the nine different morphs while participants indicated their percept (see SI Materials and Methods for details). Indeed, 18 out of 20 participants were sensitive to the manipulation of the morphed stimulus, and psychometric curves could be fitted reliably (Fig. 1B; average explained variance of the fit was 92.7%, SD of 0.03). The other two participants were excluded from further analyses.

Fig. 1.

Results from morphed /daga/ stimuli. (A) Stimulus properties of the used /da/ and /ga/ stimuli. Only the third formant differs between the two stimuli (purple lines). (B) Average proportion of /da/ responses for the 18 participants in experiment 1. Error bars reflect the SEM.

Consistency of phase differences.

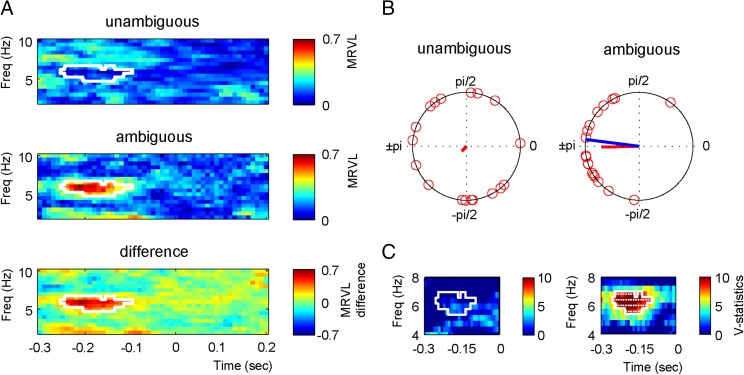

We used the individually determined most ambiguous stimuli to investigate whether ongoing theta phase before stimulus presentation influenced the identification of the syllable. Therefore, we presented both the unambiguous /da/ (stimulus 1) and /ga/ (stimulus 9) and the ambiguous stimulus while recording EEG. Data were epoched −3 to 3 s around syllable onset. To ensure that poststimulus effects did not temporally smear back to the prestimulus interval (e.g., 15), we padded all data points after zero with the amplitude value at zero. For every participant, we extracted the average phase for each of the syllable types for the −0.3- to 0.2-s interval. There were four syllable types: the /da/ and /ga/ of the unambiguous sounds and the ambiguous sound either perceived as /da/ or /ga/. Then, we determined the phase difference between /da/ and /ga/ for both the unambiguous and ambiguous conditions. In the ambiguous condition, prestimulus phase is hypothesized to bias syllable perception, and this should be reflected in a consistent phase difference between the perceived /da/ and /ga/. In the unambiguous condition, phase in the prestimulus time windows should mostly reflect random fluctuations, because participants are unaware of the identity and arrival time of the upcoming syllable and generally identified stimulus 1 as /da/ and stimulus 9 as /ga/, resulting in a low consistency of the phase difference. Note, however, that, in principle, phase differences are possible in this condition, because we did exclude trials in which participants identified the unambiguous syllables as the syllable at the other side of the morphed spectrum. The mean resultant vector lengths (MRVLs) of the phase difference between /da/ and /ga/ were calculated, and Monte Carlo simulations with a cluster-based correction for multiple comparisons were used for statistical testing. A higher MRVL indicates a higher phase concentration of the difference.
We found that the ambiguous sounds had a significantly higher MRVL before sound onset (−0.25 to −0.1 s) around 6 Hz (cluster statistics 19.821, P = 0.006; Fig. 2 A and B). When repeating the analysis including a wider frequency spectrum (1–40 Hz), the same effect was present (cluster statistics 18.164, P = 0.030), showing the specificity of the effect for theta. Because any phase estimation requires integration of data over time, the significant data appear distant from the onset of the syllable. For example, the 6-Hz phase angle is calculated using a window of 700 ms (to ensure the inclusion of multiple cycles of the theta oscillation). The closer the center of the estimation is to an abrupt change in the data (such as a stimulus or the data padding to zero), the more the estimation is negatively influenced by the “postchange data” (e.g., 15).
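The 700-ms figure can be reproduced from the wavelet settings reported in SI Materials and Methods (1.4 cycles at 2 Hz, rising linearly to 7 cycles at 10 Hz). The sketch below assumes that the analysis window equals the number of cycles divided by the frequency; `n_cycles` and `window_s` are illustrative names and not functions of any toolbox.

```python
def n_cycles(freq_hz, f_lo=2.0, f_hi=10.0, c_lo=1.4, c_hi=7.0):
    """Linearly interpolate the wavelet cycle count between the two reported anchors."""
    return c_lo + (freq_hz - f_lo) * (c_hi - c_lo) / (f_hi - f_lo)

def window_s(freq_hz):
    """Window length in seconds, assuming window = cycles / frequency."""
    return n_cycles(freq_hz) / freq_hz

for f in (2.0, 4.0, 6.0, 8.0, 10.0):
    print(f"{f:4.1f} Hz: {n_cycles(f):.1f} cycles, window {window_s(f) * 1000:.0f} ms")
```

Under this assumption the window is 0.7 s at every analyzed frequency, because the reported cycle count grows in proportion to frequency.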

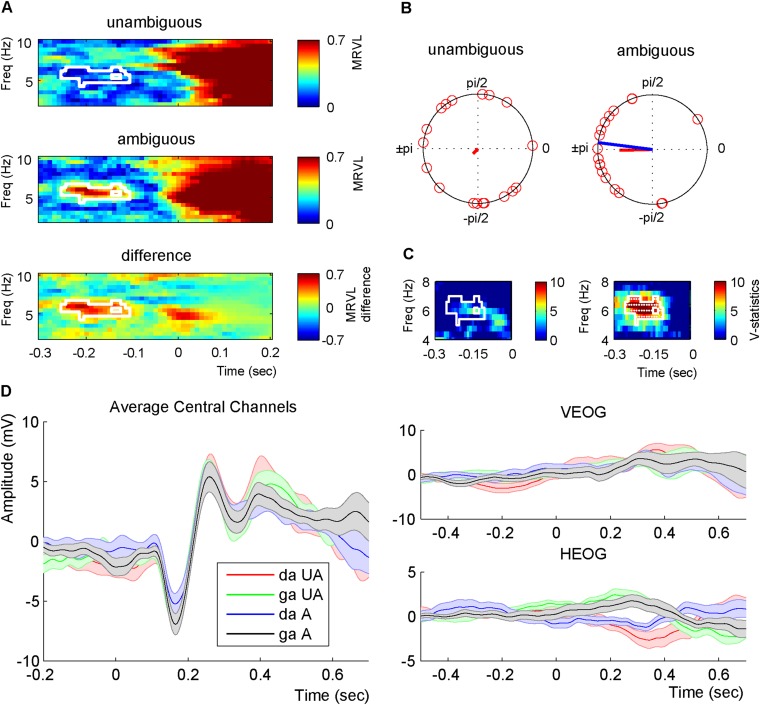

Fig. 2.

Prestimulus phase differences. (A) The mean resultant vector length across participants for the phase difference between /da/ and /ga/ for unambiguous sounds and for the phase difference between perceived /da/ and /ga/ for ambiguous sounds. The white outlines indicate the region of significant differences. (B) Phase differences of individual participants at 6 Hz at −0.18 s for unambiguous and ambiguous sounds. The blue line indicates the 80-ms expected difference. The red lines indicate the strength of the MRVL. (C) V statistics testing whether the phase differences are significantly nonuniformly distributed around 80 ms for all significant points in the MRVL analysis. The white outlines indicate at which time and frequency point the analysis was performed (note the difference in the x and y axes between A and C). White dots indicate significance.

Eighty-millisecond phase differences.

A second hypothesis was that the phase difference of the ambiguous stimuli judged as /da/ vs. /ga/ would match 80 ms, consistent with the visual-to-auditory onset difference between /da/ and /ga/ found in natural speech (4). Therefore, we took all of the significant time and frequency points in the first analysis and tested whether the phase difference of all participants was centered around 80 ms (the blue line in Fig. 2B corresponds to an 80-ms difference). This is typically done with the V test, which examines the nonuniformity of circular data centered around a known specific mean direction. We found that the ambiguous phase differences indeed centered around 80 ms for almost all tested data points, whereas for the unambiguous sounds no such phase concentration was present (Fig. 2C).
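As a rough illustration of the V test, the sketch below computes the V statistic for a set of phase differences against an expected direction and derives a one-sided P value from a large-sample normal approximation. The phase values are invented for the example, and the approximation is an assumption of this sketch; the toolbox routine used in the study may compute the P value differently.

```python
import math

def v_test(angles, expected):
    """V test for nonuniformity with a specified mean direction (radians).

    Returns (V, p); p uses the normal approximation u = V * sqrt(2 / n).
    """
    n = len(angles)
    c = sum(math.cos(a) for a in angles)
    s = sum(math.sin(a) for a in angles)
    resultant = math.hypot(c, s)              # n times the MRVL
    mean_dir = math.atan2(s, c)
    v = resultant * math.cos(mean_dir - expected)
    u = v * math.sqrt(2.0 / n)
    p = 0.5 * math.erfc(u / math.sqrt(2.0))   # 1 - Phi(u)
    return v, p

# Expected phase difference for an 80-ms delay at 6 Hz.
expected = 2.0 * math.pi * 6.0 * 0.080

# Hypothetical per-participant phase differences clustered near the expectation.
clustered = [expected + d for d in (-0.4, -0.2, 0.0, 0.1, 0.3, 0.5)]
# Hypothetical phase differences spread evenly around the circle.
spread = [2.0 * math.pi * k / 8 for k in range(8)]

v_c, p_c = v_test(clustered, expected)
v_s, p_s = v_test(spread, expected)
print(f"clustered: V = {v_c:.2f}, p = {p_c:.4f}")
print(f"spread:    V = {v_s:.2f}, p = {p_s:.4f}")
```

A large V arises only when the angles are both concentrated and centered on the expected direction, which is why the test suits a hypothesis with a known target phase difference.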

From Fig. 2B it is evident that there is a consistent phase difference across participants between /da/ and /ga/ for the ambiguous sounds. When looking at the consistency of the phases of the individual syllables /da/ and /ga/, this consistency drops (compare Fig. 2B with Fig. S1B). Statistical testing confirmed that the /da/ and /ga/ phases seemed distributed randomly (Fig. S1C). At this point, we cannot differentiate whether this effect occurs due to volume conduction of the EEG or individual latency differences for syllable processing (see also ref. 12). When repeating this analysis for each participant, we did find a significant (uncorrected) consistency for multiple participants and a significantly different phase between /da/ and /ga/ (Fig. S2; for only two participants this effect survived correction for multiple comparisons).

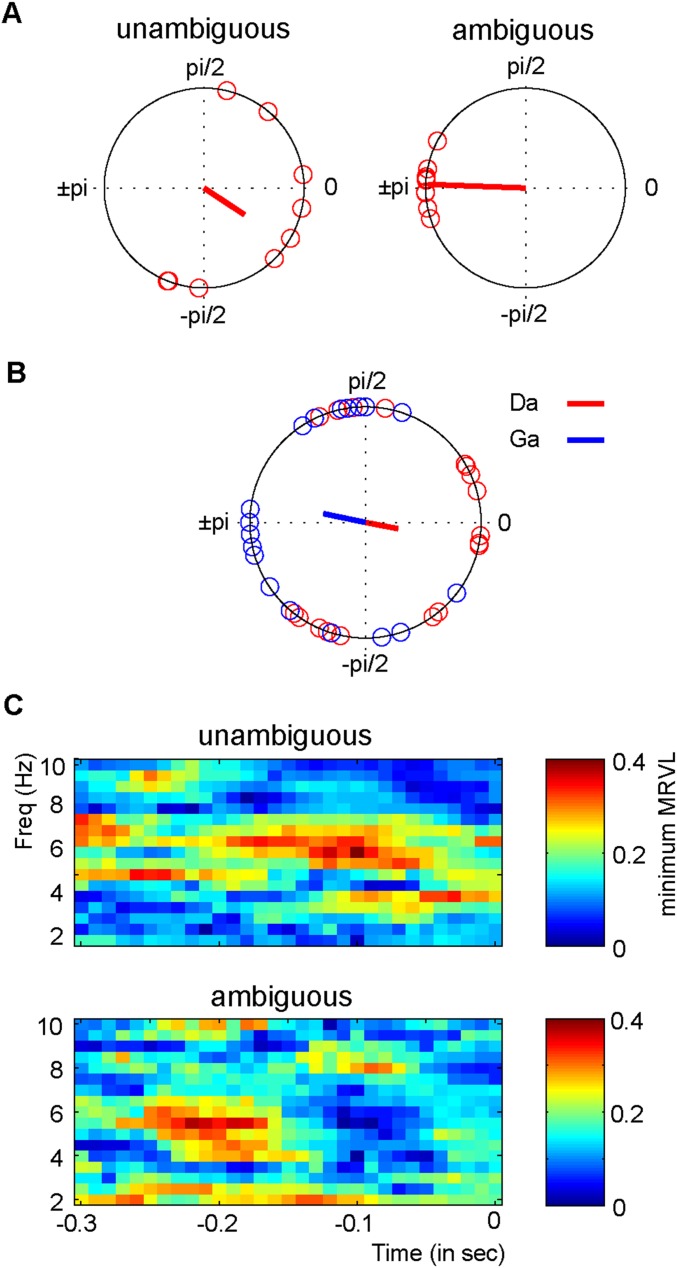

Fig. S1.

Channel and phase consistency. (A) The phase difference between /da/ and /ga/ of all individual channels (averaged across participants). For the ambiguous sounds, there is a very strong consistency for the phases of the separate channels. (B) For the ambiguous condition, the mean phases are plotted for each participant for /da/ (red) and /ga/ (blue) separately. Although there is some phase consistency, it is considerably weaker than the consistency of the difference (compare with Fig. 2B). (C) The minima of the MRVL of /da/ and /ga/ for both the unambiguous and ambiguous condition.

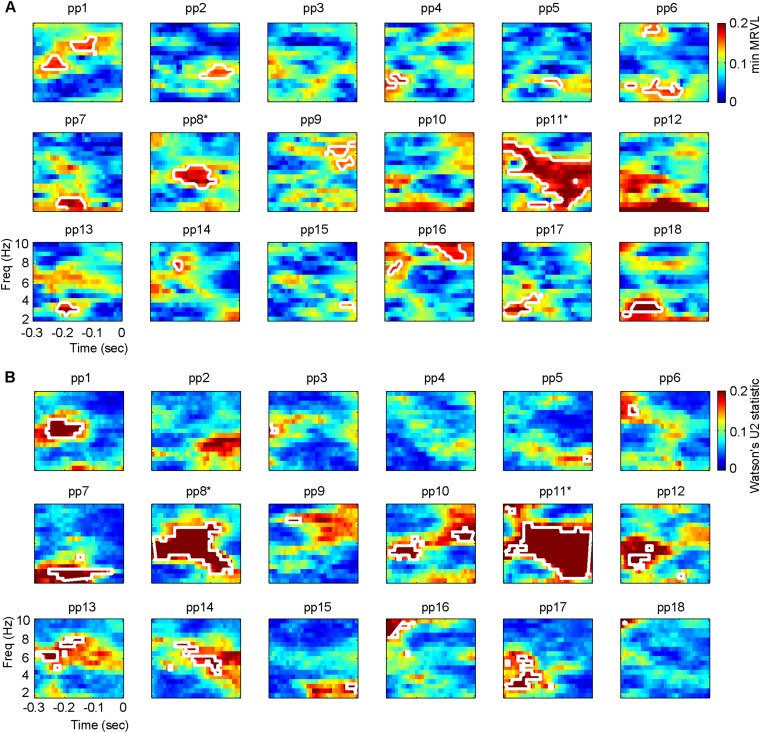

Fig. S2.

Single-subject analysis. (A) For each participant, the lowest intertrial coherence of the two syllable types per time/frequency data point is displayed. The white outlines indicate areas of significant minimum ITC (uncorrected). (B) For each participant, Watson’s U2 statistics comparing the mean phase of /da/ and /ga/ is displayed. The white outlines indicate areas of a significant Watson’s U2 value (uncorrected). The asterisks indicate the participants for whom the effect survived cluster-based corrections for multiple comparisons.

The current reported effects could not be explained by any eye movements (no significant differences between conditions) or any artifacts due to data padding (Fig. S3).

Fig. S3.

Prestimulus phase differences for the original nonpadded data and event-related potentials. (A) The mean resultant vector length across participants for the phase difference between /da/ and /ga/ for the unambiguous sounds and for the phase difference between perceived /da/ and /ga/ for the ambiguous sounds. The white outline indicates the region of significant differences. (B) Phase differences of individual participants at 6 Hz at −0.18 s for unambiguous and ambiguous sounds. The blue line indicates the 80-ms expected difference. The red lines indicate the strength of the MRVL. (C) V statistics testing whether the phase differences are significantly nonuniformly distributed around 80 ms for all significant points in the MRVL analysis. The white outlines indicate at which time and frequency point the analysis was performed. White dots indicate significance. (D) ERPs are displayed of the four different syllable types [/da/ (red) and /ga/ (green) of the unambiguous (UA) and ambiguous (A) sounds either perceived as /da/ (blue) or /ga/ (black)]. ERPs are displayed for the average of the nine used electrodes (Left), the vertical electrooculogram (VEOG; Right Top), and horizontal electrooculogram (HEOG; Right Bottom). Shaded areas indicate the SEM. No significant differences were found for the ERPs.

Experiment 2.

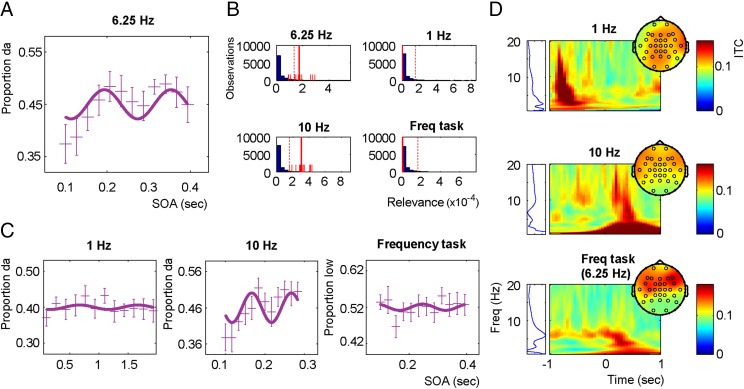

To investigate whether neuronal entrainment results in oscillatory identification patterns, we experimentally induced theta phase alignment using sensory entrainment (16–18) in 12 different participants. In this experiment, auditory stimuli of broadband noise (white noise band pass-filtered between 2.5 and 3.1 kHz, 50-ms length) were repeatedly presented (presumably entraining underlying oscillations at the presentation rate), after which ambiguous sounds were presented at different stimulus onset asynchronies (SOAs; 12 different SOAs fitting exactly two cycles). If ongoing phase is important for syllable identification, the time course of identification should oscillate at the presentation rate. Indeed, the time course of identification showed a pattern varying at the presentation rate of 6.25 Hz (Fig. 3A). To test the significance of this effect, we calculated the relevance value (19). This value is calculated by (i) fitting a sinus to the data and (ii) multiplying the explained variance of the fit by the variance of the predicted values. In this way, the relevance statistic gives less weight to models that have a fit with a flat line. Thereafter, we performed bootstrapping on the obtained relevance values (of the average curve) to show that of the 10,000 fitted bootstraps only 2.83% had a more extreme relevance value (Fig. 3B), suggesting that, indeed, syllable identity depends on theta phase.
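The relevance value described above can be sketched as follows. The fit uses a closed-form least-squares solution that is valid only when the sample points are evenly spaced over a whole number of cycles, which is an assumption of this sketch and not the nonlinear fitting routine named in SI Materials and Methods. The use of population variance and the example time course are also assumptions.

```python
import math

def fit_fixed_freq_sine(t, y, freq_hz):
    """Fit offset + a*sin + b*cos at a fixed frequency.

    Closed form; assumes t is evenly spaced over whole cycles so that
    the three regressors are mutually orthogonal.
    """
    n = len(t)
    w = 2.0 * math.pi * freq_hz
    offset = sum(y) / n
    a = 2.0 / n * sum(yi * math.sin(w * ti) for ti, yi in zip(t, y))
    b = 2.0 / n * sum(yi * math.cos(w * ti) for ti, yi in zip(t, y))
    return [offset + a * math.sin(w * ti) + b * math.cos(w * ti) for ti in t]

def variance(x):
    """Population variance (an assumption; the text does not specify)."""
    m = sum(x) / len(x)
    return sum((xi - m) ** 2 for xi in x) / len(x)

def relevance(t, y, freq_hz):
    """Explained variance of the fit times the variance of the predictions."""
    pred = fit_fixed_freq_sine(t, y, freq_hz)
    ss_tot = variance(y) * len(y)
    if ss_tot == 0.0:
        return 0.0  # a flat time course carries no oscillatory evidence
    ss_res = sum((yi - pi) ** 2 for yi, pi in zip(y, pred))
    return (1.0 - ss_res / ss_tot) * variance(pred)

# Hypothetical time course: 12 points spanning two cycles of 6.25 Hz.
freq = 6.25
t = [0.1 + k * (2.0 / freq) / 12 for k in range(12)]
clean = [0.5 + 0.1 * math.sin(2.0 * math.pi * freq * ti + 0.7) for ti in t]
flat = [0.5] * 12

print(relevance(t, clean, freq), relevance(t, flat, freq))
```

Multiplying by the variance of the predicted values means that a well-fitting but nearly flat curve scores low, which matches the stated purpose of the statistic.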

Fig. 3.

Results from experiment 2. (A) Grand average proportion of /da/ of all of the participants, with the respective error bars reflecting the within-subject SEM (plusses; vertical extension reflects the error bars) and the fitted 6.25-Hz sinus (solid line). (B) Bootstrap histograms for the relevance statistics for all four conditions. The long solid and dotted red lines represent the relevance value of that dataset and the 95th percentile of all bootstrapped values, respectively. The short solid lines indicate the 12 relevance values when iteratively taking out one participant. The blue bars represent the individual relevance values of all the different bootstraps. (C) The grand average of all participants, with the respective error bars reflecting the within-subject SEM (plusses; vertical extension reflects the error bars) for the three different control conditions used in the experiment and their respective best-fitted sinus (solid line). (D) Intertrial coherence (ITC) plots for all three entrainment frequencies. Zero indicates entrainment offset. (Left) The ITC averaged in the −0.5 to 0 interval (ITC range 0.08–0.12). All of the conditions show a peak at the respective entrainment frequency. However, for 1 Hz, an evoked response of the last entrainment stimulus is present (around −0.8 s). For 10 Hz, and to a lesser extent for 6.25 Hz, evoked responses to the target stimuli are present poststimulus (around 0–1 s). This effect only arises at these frequencies, because the interval in which the targets were presented is much narrower than for 1 Hz.

Three control experiments were performed. In the first two experiments, the frequency specificity of the effect was investigated by changing the presentation rates of the entrainment train to 1 and 10 Hz. In a third experiment, we wanted to rule out the possibility that the effect already occurs at a lower perceptual level instead of the syllable identification level. Therefore, we band pass-filtered the syllables between 2.5 and 3.1 kHz, maintaining the formant frequency at which the two syllables differ but distorting syllable perception. Participants had to indicate whether they felt the sound was of high or low frequency (this experiment will from now on be called “frequency control”). As a reference for what was considered a high or low frequency, the band pass-filtered stimulus numbers 1 and 9 were both presented in random order at the beginning of the trial.

Results show that for both the 1-Hz and the frequency control, no sinus could be fitted reliably (Fig. 3 B and C; P = 0.80 and P = 0.69, respectively). In contrast, for 10 Hz, a sinus could be reliably fitted (P = 0.011). For all three presentation frequencies there was entrainment at the expected frequency (Fig. 3D).

SI Materials and Methods

Experiment 1.

Participants.

A total of 20 participants (9 male; age range 18–32 y; mean age 25 y) participated in the study. All participants reported having normal hearing and normal or corrected-to-normal vision. The participants were native Dutch speakers. All gave written informed consent before the study. The study was approved by the local ethical committee at the Faculty of Psychology and Neuroscience at Maastricht University. Participants received monetary compensation for participating.

Stimuli.

Stimuli were created by morphing a recorded auditory /da/ to an auditory /ga/ using the synthesis function of the program Praat (32), similar to Bertelson et al. (33). First, the sound was resampled to 11 kHz. To extract the different formants, a linear predictor was created using 10 linear-prediction parameters (which would extract up to five formants), using a sliding window of 25 ms and estimating the parameters every 5 ms. To shift the recorded /da/ from /da/ to /ga/, we moved the third formant from the original frequency band of 3 kHz down to 2.6 kHz in steps of −19 Mel (2), resulting in nine stimuli morphed from /da/ to /ga/ (85 dB). Stimuli were presented via ER-30 insert earphones (Etymotic Research).

Procedure.

First, we assessed individual psychometric curves by measuring the exact point at which participants reported hearing a /da/ or a /ga/ in 50% of the trials. To this end, the nine stimuli on the da–ga spectrum were presented in random order while participants indicated whether they heard /da/ or /ga/. The interstimulus interval (ISI) was 1.6, 1.8, or 2 s, whose presentation order was random. In total, 135 trials were presented. A cumulative Gaussian was fitted to the data using the fitting toolbox Modelfree version 1.1 (34), implemented in MATLAB (MathWorks). In the second part of this study, these individually calibrated parameters were used for EEG measurements. Here, only the three stimuli from the middle of the individual psychometric curve as well as the two extremes were presented. Two out of the 20 participants had a shifted psychometric curve, such that the point at which they detected /da/ 50% of the time was at the highest stimulus number used (stimulus 9), and they were therefore excluded. Again, participants had to indicate whether they heard /da/ or /ga/. The ISI was 3, 3.2, and 3.4 s, whose presentation order was random. For the two extremes a total of 80 trials and for the ambiguous stimuli a total of 120 trials were presented, divided into two blocks. During both tasks, participants had to fixate on a white cross presented on a black background. Presentation software was used for stimulus delivery (Neurobehavioral Systems).
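The threshold estimation can be illustrated with a minimal sketch that fits a descending cumulative Gaussian to the proportion of /da/ responses and reads off the 50% point. The coarse grid search below is a stand-in chosen for transparency and does not reproduce the fitting toolbox used in the study; the response proportions are generated from known parameters purely for demonstration.

```python
import math

def p_da(x, mu, sigma):
    """Probability of a /da/ response: 1 minus a cumulative Gaussian."""
    return 0.5 * math.erfc((x - mu) / (sigma * math.sqrt(2.0)))

def fit_threshold(stimuli, prop_da):
    """Grid search for (mu, sigma) minimizing the squared error."""
    best = None
    for mu_i in range(20, 181):        # mu from 1.00 to 9.00 in steps of 0.05
        mu = mu_i / 20.0
        for sg_i in range(1, 61):      # sigma from 0.05 to 3.00 in steps of 0.05
            sigma = sg_i / 20.0
            err = sum((p_da(x, mu, sigma) - p) ** 2
                      for x, p in zip(stimuli, prop_da))
            if best is None or err < best[0]:
                best = (err, mu, sigma)
    return best[1], best[2]

# Hypothetical participant: nine morphs, stimulus 1 = /da/, stimulus 9 = /ga/.
stimuli = list(range(1, 10))
observed = [p_da(x, 5.3, 1.2) for x in stimuli]

mu_hat, sigma_hat = fit_threshold(stimuli, observed)
most_ambiguous = min(stimuli, key=lambda x: abs(x - mu_hat))
print(mu_hat, sigma_hat, most_ambiguous)
```

The fitted `mu_hat` is the 50% point of the curve, and the morph closest to it serves as the most ambiguous stimulus for that participant.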

EEG acquisition and preprocessing.

EEG data were recorded with online filters of 0.1–200 Hz and a sampling rate of 500 Hz. The BrainAmp MR Plus EEG amplifier (Brain Products) and BrainVision recorder (Brain Products) were used. Nine central Ag-AgCl electrodes were positioned on the head (FC1, FCz, FC2, C1, Cz, C2, CP1, CPz, CP2). The ground electrode was AFz, and the reference was the tip of the nose. Four additional electrodes for eye movements were used (lateral to both eyes, below and above the left eye). All electrodes were positioned using Ten20 paste (Weaver). Impedance was kept below 15 kOhm (5 kOhm for ground and reference). Only nine electrodes were used, because the experiment was part of a more extended setup. However, only the EEG data are shown here.

All data processing was done with FieldTrip (35) and the CircStat toolbox (36). Data were epoched from −3 to 3 s around the stimulus onset. Then, bad channels were removed (and replaced with the average of the other remaining recording channels). For most participants, no channels were replaced (for 14 participants no channels were replaced, for 3 participants one channel was replaced, and for 1 participant two channels were replaced). Eye blinks were removed using the function scrls_regression of the EEGLAB plugin AAR (37) (filter order 3; forgetting factor 0.999; sigma 0.01; precision 50), after which data were resampled to 200 Hz. Finally, with visual inspection, trials with extreme variance were removed.

Data Analyses.

Consistency of phase differences.

The complex Fourier spectra of individual epochs were extracted with Morlet wavelets between 2 and 10 Hz (with the number of cycles used linearly increasing from 1.4 at 2 Hz to 7 at 10 Hz) with a step size of 0.5 Hz (time points of interest −0.3 to 0.2 s in steps of 0.01 s), after which the phases were obtained. To ensure that poststimulus effects did not temporally smear back to the prestimulus interval, we padded all data points after zero with the amplitude value at zero. To investigate whether found effects were not due to this data padding, we repeated the same analysis with the original dataset. Then, we calculated for each individual the mean phase for each condition collapsed over channels. We had four conditions: (i) /da/ of the unambiguous sounds (stimulus number 1; only trials that were identified as /da/ were included), (ii) /ga/ of the unambiguous sounds (stimulus number 9; only trials that were identified as /ga/ were included), (iii) ambiguous syllables that were identified as /da/ [on average 47.2 trials (SD 18.5) per participant], and (iv) ambiguous syllables that were identified as /ga/ [on average 69.9 trials (SD 17.3) per participant]. To ensure that effects were not due to differences in trial numbers, we randomly drew trials of the three conditions with the highest trial number. The drawn number corresponded to the trial number of the condition with the lowest trial number. We repeated this procedure 100 times, and took the average of this as our final phase estimate. To test whether the phase differences between /da/ and /ga/ were concentrated at a specific phase before sound onset, we calculated the phase difference between /da/ and /ga/ for each time and frequency point for both ambiguous and unambiguous sounds. We expected that for the ambiguous sounds the prestimulus phase difference should correspond to the 80-ms difference found in the previous study.
For the unambiguous sounds a weaker prestimulus effect was predicted, because participants were unaware of the identity of any upcoming stimulus and therefore phase fluctuations should be random. Note that we did exclude trials where participants indicated /ga/ for stimulus number 1 (mean number of trials removed 2; range 0–7 trials) and /da/ for stimulus number 9 (mean number of trials removed 2.78; range 0–13 trials), so this could cause small prestimulus phase differences. To test whether there was indeed such a difference in phase concentration dependent on condition, we calculated the mean resultant vector length (MRVL) of the phase difference between /da/ and /ga/ for both conditions across participants. The MRVL varies between 0 and 1, and the higher the value the more consistent the phase difference across participants. We used Monte Carlo simulations implemented in FieldTrip to statistically test that the difference between the MRVLs was not due to random fluctuations (two-sided test with 1,000 repetitions). Data were corrected for multiple comparisons using cluster-based correction implemented in FieldTrip (parameters: cluster alpha of 0.05, using the maximal sum of all of the time and channel bins as a dependent variable; P values reflect two-sided P values in all reported analyses).
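The MRVL of the phase difference can be sketched in a few lines. The example uses invented phases for six hypothetical participants whose individual /da/ phases are spread around the circle while the /da/-to-/ga/ difference is identical, to show that the difference can be highly consistent even when the individual phases are not.

```python
import cmath
import math

def mrvl(angles):
    """Mean resultant vector length: near 0 for evenly spread angles, 1 for identical ones."""
    return abs(sum(cmath.exp(1j * a) for a in angles)) / len(angles)

def phase_difference(phase_da, phase_ga):
    """Per-participant circular difference, wrapped to [-pi, pi]."""
    return [cmath.phase(cmath.exp(1j * (g - d)))
            for d, g in zip(phase_da, phase_ga)]

# Hypothetical mean phases (radians), one value per participant.
phase_da = [2.0 * math.pi * k / 6 for k in range(6)]
phase_ga = [d + 3.0 for d in phase_da]

diff = phase_difference(phase_da, phase_ga)
print(f"MRVL of /da/ phases:        {mrvl(phase_da):.3f}")
print(f"MRVL of phase differences:  {mrvl(diff):.3f}")
```

Working with unit vectors in the complex plane avoids the wrap-around problem that arises when angles are averaged as ordinary numbers.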

Eighty-millisecond phase differences.

For all of the time and frequency points at which a significant difference in MRVL was found, we investigated whether the mean phase indeed corresponded to the 80-ms difference that was expected. Therefore, we performed for both conditions V tests for nonuniformity with a specified mean direction [corresponding to the 80-ms difference (38)], again using the average phase per participant per syllable averaged over channels. This test investigates whether the phase difference between /da/ and /ga/ is nonuniformly distributed around an 80-ms phase difference. The performed tests were corrected for multiple comparisons via false discovery rate [FDR (39)].

Consistency of individual syllable types.

We were interested in whether the mean phase of individual syllable types had a strong phase consistency at similar frequencies and time points. This analysis would indicate that not only is the phase difference consistent across participants but also the exact phase at which perception is biased toward /da/ or /ga/. We again calculated the MRVL, but this time for each syllable type (again using the mean phase per participant and syllable type). Then, we took the minimum MRVL of /da/ and /ga/ for each time and frequency point for the ambiguous and unambiguous sounds separately. To statistically test the strength of the coherence, we permuted the labels of the /da/ and /ga/ for each condition separately in Monte Carlo simulations (1,000 repetitions). This analysis provides a map at which time and frequency point it is unlikely that such a high minimum MRVL is found, and thus reflects data points with high MRVL for both /da/ and /ga/. Data were corrected for multiple comparisons using the same cluster size-based correction as above.

Event-related potential analysis.

We were interested in whether there were any differences between syllable types in the poststimulus event-related potentials (ERPs) and whether eye channels could explain the prestimulus effects. To calculate the ERP, we first band pass-filtered the data between 0.5 and 20 Hz using a second-order Butterworth filter. Then we averaged the data of the four syllable types for each participant. To calculate the vertical electrooculogram, we took the mean of the eye channel below and above the eye (multiplying the channel above the eye by −1). The same was done for the horizontal electrooculogram (using the right and left eye channel; here the right eye channel was multiplied by −1). We tested significant differences between /da/ and /ga/ for both the ambiguous and nonambiguous conditions separately using paired-samples t tests. Data were corrected for multiple comparisons using FDR.

Consistency of individual syllable types: Individual participants.

We performed the same analysis as in Consistency of Individual Syllable Types for each individual participant. Instead of using the average phase, we took the individual phases of all trials (averaged over channels). This analysis would inform us whether for individual participants phases are consistently centered at one phase value. First, we calculated the intertrial coherence (ITC; mathematically equivalent to the MRVL but the commonly used terminology for consistency over trials instead of consistency over average phases) for both /da/ and /ga/ (only for ambiguous trials, because we already showed that the effect is weaker for the unambiguous sounds). Then we again took the minimum ITC as our test statistic. The strength of these minima was again statistically tested using Monte Carlo simulations (1,000 repetitions) using cluster-based corrections for multiple comparisons. For this analysis (and the one in the next section), we did not randomly draw trials when there were unequal trial numbers, because for some participants this would result in very few trials to estimate the ITC. However, it has been shown that if the variance in the two groups to be compared does not vary considerably, the chance of a type I error does not change much (40). The variance difference between the conditions was extremely low (the ratio being on average 1:0.96, with a range of 1:0.94–1:1.04).

Phase differences for individual participants.

To test whether for individual participants there was a significant phase difference between perceived /da/ and /ga/, we performed Watson’s U2 test for equal means to test the phase difference between /da/ and /ga/ for all time and frequency points for the phases of individual trials for each participant. Monte Carlo simulations and cluster-based correction for multiple comparisons were used for statistical testing.

Experiment 2.

Participants.

A total of 14 participants (one author; 4 male; age range 18–31 y; mean age 22.2 y) participated in the study. All participants reported having normal hearing and normal or corrected-to-normal vision. All gave written informed consent before the study. The study was approved by the local ethical committee at the Faculty of Psychology and Neuroscience at Maastricht University. For the control studies, we were able to reinvite six of the original participants, but also had to recruit six new participants (2 male; age range 20–31 y; mean age 25.0 y). Participants received monetary compensation for participating. Two of the original 14 participants were excluded from the analysis because they had a ceiling effect in their performance and only heard /da/ for almost all auditory stimuli.

Stimuli.

The same syllable stimuli were used as in experiment 1. Additionally, to entrain the system to sensory stimuli, we presented white noise, band pass-filtered between 2.5 and 3.1 kHz [second-order Butterworth (41, 42)]. This filter was chosen because it included both the third formant frequencies of /da/ and /ga/, increasing the chance that the correct regions would be entrained, as entrainment has been shown to be frequency-specific (43). Stimuli were 50 ms long. Different entrainment trains lasted 2, 3, or 4 s at a presentation rate of 6.25 Hz. This rate was chosen because then 80 ms would correspond to exactly half a period. After the stimulus train finished, the ambiguous syllable stimulus was presented at stimulus onset asynchronies (SOAs) ranging from 0.1 to 0.58 s in steps of 0.0267 s (exactly fitting two cycles of 6.25 Hz). The stimuli were presented via headphones (stimuli in this experiment were presented around 60 dB). Three control tasks were implemented. In the first control experiment, the presentation rate of the stimulus train was 10 Hz instead of 6.25 Hz and the SOAs ranged from 0.1 to 0.28 s in steps of 0.017 s. In the second control the presentation rate was 1 Hz. SOAs ranged from 0.1 to 1.93 s in steps of 0.167 s. For this condition the entrainment trains lasted 4, 5, or 6 s to ensure that enough stimuli were presented to entrain the system. The third control was identical to the original experiment, with the exception that the syllable stimuli were band pass-filtered between 2.5 and 3.1 kHz to only include the frequency range in which the two syllables differed. For this task, the middle stimulus was used for each participant instead of individually tailored stimuli. The task of the participant was to indicate whether they believed the sound was of high or low frequency.
At the start of each trial, filtered syllable numbers 1 and 9 were presented to give a reference of what was “high” and what was “low.” The order of presentation of these stimuli was randomized.
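The timing arithmetic behind the choice of presentation rate can be checked with a short sketch. The helper names `period_ms` and `phase_deg` are illustrative and not part of the original analysis; the rates, the 80-ms delay difference, and the SOA step sizes are the values reported above.

```python
def period_ms(rate_hz):
    """Duration of one cycle at the given presentation rate, in milliseconds."""
    return 1000.0 / rate_hz


def phase_deg(delay_s, rate_hz):
    """Phase advance, in degrees, that a delay corresponds to at rate_hz."""
    return delay_s * rate_hz * 360.0


# Half a period at the main entrainment rate of 6.25 Hz.
half_period_ms = period_ms(6.25) / 2.0

# Phase shift corresponding to the 80-ms /da/-/ga/ delay difference at 6.25 Hz.
da_ga_shift = phase_deg(0.080, 6.25)

# Phase advance of one SOA step in each rate condition (step sizes from the text).
step_phases = {
    rate: phase_deg(step, rate)
    for rate, step in [(6.25, 0.0267), (10.0, 0.017), (1.0, 0.167)]
}
```

Under these values, 80 ms is half of the 160-ms period at 6.25 Hz, and one SOA step advances the phase by roughly 60 degrees in every rate condition, that is, about six SOA steps per cycle.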

Procedure.

The same psychophysical procedure was used as in experiment 1 to find the most ambiguous stimulus on the da–ga spectrum for each participant. The stimulus closest to the 50% threshold was used for the main experiment. The main experiment consisted of three blocks in which trains of band pass-filtered noise were presented. In total there were 324 trials (27 trials per SOA, 108 per entrainment length). All trials were presented in random order. During the experiment, participants were required to fixate on a white cross presented on a black background. Stimuli were again presented via Presentation software (Neurobehavioral Systems), and participants performed the experiment in a sound-shielded room. The three control experiments were presented one after another in random order. Otherwise, the procedure was the same as in the original experiment. For seven control participants, EEG was recorded at the same time (the stimuli here were presented through speakers instead of headphones).
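The trial counts above can be reproduced with a minimal sketch of a fully crossed, randomized design. The 12 SOA levels (324 trials divided by 27 per SOA) and the 9 repetitions per cell are inferred from the reported totals under the assumption of full crossing; they are not stated explicitly. The function name `build_trials` and the seed are hypothetical.

```python
import random
from collections import Counter


def build_trials(n_soas=12, train_lengths_s=(2, 3, 4), reps_per_cell=9, seed=0):
    """Return a shuffled list of (soa_index, train_length_s) trials.

    n_soas and reps_per_cell are inferred from the reported totals
    (324 trials, 27 per SOA, 108 per entrainment length), assuming
    every SOA is crossed with every entrainment length.
    """
    trials = [
        (soa, length)
        for soa in range(n_soas)
        for length in train_lengths_s
        for _ in range(reps_per_cell)
    ]
    random.Random(seed).shuffle(trials)  # all trials in random order
    return trials


trials = build_trials()
per_soa = Counter(soa for soa, _ in trials)
per_length = Counter(length for _, length in trials)
```

With these assumed settings the list holds 324 trials, with 27 per SOA index and 108 per entrainment length, matching the counts in the text.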

Data analysis.

First, the proportion of /da/ identification was calculated for every SOA, thereby creating a time course of proportion /da/ identification. We expected that this time course would follow an oscillatory pattern at 6.25 Hz. Hence, we fitted a sinusoid at a fixed frequency to the averaged behavioral time course using the function lsqnonlin in MATLAB. The test statistic was the so-called relevance, calculated by multiplying the explained variance of the model by the total variance of the predicted values. This statistic thereby favors fitted models with high variance over relatively flat lines. To estimate the likelihood of finding a relevance value larger than the one in our dataset, we bootstrapped the labels of the phase bins to create randomized time courses and relevance values (n = 10,000). We performed bootstrapping on the average instead of the individual time courses due to a lack of power in the individual data. The same analysis was performed for the three control experiments.
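The fitting and resampling steps can be sketched as follows. This is not the original MATLAB code: instead of lsqnonlin, the sketch fits amplitude, phase, and offset at a fixed frequency by linear least squares on sine and cosine regressors. It further assumes that "explained variance" means the coefficient of determination and that the bootstrap shuffles the SOA labels of the averaged time course. All function names are hypothetical.

```python
import math
import random


def solve_linear(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [row[:] + [b[i]] for i, row in enumerate(A)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            f = M[r][col] / M[col][col]
            for k in range(col, n + 1):
                M[r][k] -= f * M[col][k]
    x = [0.0] * n
    for i in reversed(range(n)):
        x[i] = (M[i][n] - sum(M[i][k] * x[k] for k in range(i + 1, n))) / M[i][i]
    return x


def fit_sinusoid(times, values, freq_hz):
    """Predicted values of a*sin(wt) + b*cos(wt) + c fitted at a fixed frequency."""
    w = 2.0 * math.pi * freq_hz
    rows = [(math.sin(w * t), math.cos(w * t), 1.0) for t in times]
    # Normal equations (X^T X) beta = X^T y for the three coefficients.
    A = [[sum(r[i] * r[j] for r in rows) for j in range(3)] for i in range(3)]
    b = [sum(r[i] * y for r, y in zip(rows, values)) for i in range(3)]
    beta = solve_linear(A, b)
    return [sum(c * x for c, x in zip(beta, r)) for r in rows]


def variance(xs):
    m = sum(xs) / len(xs)
    return sum((x - m) ** 2 for x in xs) / len(xs)


def relevance(times, values, freq_hz):
    """Explained variance (assumed R^2) times the variance of the predictions."""
    total = variance(values)
    if total == 0.0:
        return 0.0  # a flat time course carries no oscillatory pattern
    pred = fit_sinusoid(times, values, freq_hz)
    residual = variance([y - p for y, p in zip(values, pred)])
    return (1.0 - residual / total) * variance(pred)


def permutation_p(times, values, freq_hz, n_perm=10000, seed=0):
    """Observed relevance and the share of label-shuffled values at least as large."""
    rng = random.Random(seed)
    observed = relevance(times, values, freq_hz)
    shuffled = list(values)
    count = 0
    for _ in range(n_perm):
        rng.shuffle(shuffled)  # break the link between SOA and response
        if relevance(times, shuffled, freq_hz) >= observed:
            count += 1
    return observed, count / n_perm
```

Multiplying by the variance of the predictions means that a nearly flat fit scores low even when it explains most of a low-variance time course, which is the property the relevance statistic is described as having.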

Data analysis: EEG.

All recording parameters and preprocessing steps were the same as in experiment 1, except that data were recorded from the full scalp with 31 electrodes in the easycapM22 setup (EASYCAP GmbH, Herrsching; excluding electrodes TP9 and TP10 but including channels C1, C3, and CPz). Data were epoched from −3 to 3 s around the entrainment train offset. We then computed the ITC from the phase angles of the complex Fourier coefficients obtained with Morlet wavelets (four cycles included for the estimate) for each of the three control conditions (1 Hz, 10 Hz, and the frequency control).
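The phase-extraction step can be illustrated with a minimal sketch. It assumes that ITC is the length of the mean unit phase vector across trials, a common definition that this section does not spell out, and that "four cycles" sets the Gaussian width of the wavelet as n_cycles / (2πf). The function names, the sampling rate used in any example, and the three-standard-deviation truncation are assumptions, not the recording or analysis settings of the study.

```python
import cmath
import math


def morlet_coefficient(signal, fs_hz, freq_hz, center_idx, n_cycles=4):
    """Complex Morlet wavelet coefficient of one trial at one time point.

    The Gaussian envelope has standard deviation n_cycles / (2*pi*freq_hz)
    seconds (assumed convention) and is truncated at three standard deviations.
    """
    sigma = n_cycles / (2.0 * math.pi * freq_hz)
    half = int(round(3.0 * sigma * fs_hz))
    start = max(0, center_idx - half)
    stop = min(len(signal), center_idx + half + 1)
    coef = 0j
    for k in range(start, stop):
        t = (k - center_idx) / fs_hz
        envelope = math.exp(-t * t / (2.0 * sigma * sigma))
        coef += signal[k] * envelope * cmath.exp(-2j * math.pi * freq_hz * t)
    return coef


def itc(coefficients):
    """Length of the mean unit phase vector across trials (0 to 1)."""
    unit = [c / abs(c) for c in coefficients if abs(c) > 0]
    if not unit:
        return 0.0
    return abs(sum(unit)) / len(unit)
```

Only the angle of each coefficient enters the ITC, so trials with large amplitudes do not dominate the estimate. Values near 1 indicate that the phase at that time point is consistent across trials, and values near 0 indicate no phase consistency.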

Discussion

In the current study, we investigated whether ongoing oscillatory phase biases syllable identification. We presented ambiguous auditory stimuli while recording EEG and revealed a systematic phase difference before auditory onset between the perceived /da/ and /ga/ at theta frequency. This phase discrepancy corresponded to the 80-ms difference between the onset delays of the speech sounds /da/ and /ga/ with respect to the onset of the corresponding mouth movements found in natural speech (4). Moreover, we showed that syllable identification depends on the underlying oscillatory phase induced by entrainment to a stimulus train of broadband noise presented at 6.25 or 10 Hz. These results reveal the relevance of phase coding for language perception and provide a flexible mechanism for statistical learning of onset differences and possibly for the encoding of other temporal information for optimizing perception.

Audiovisual Learning Results in Phase Coding.

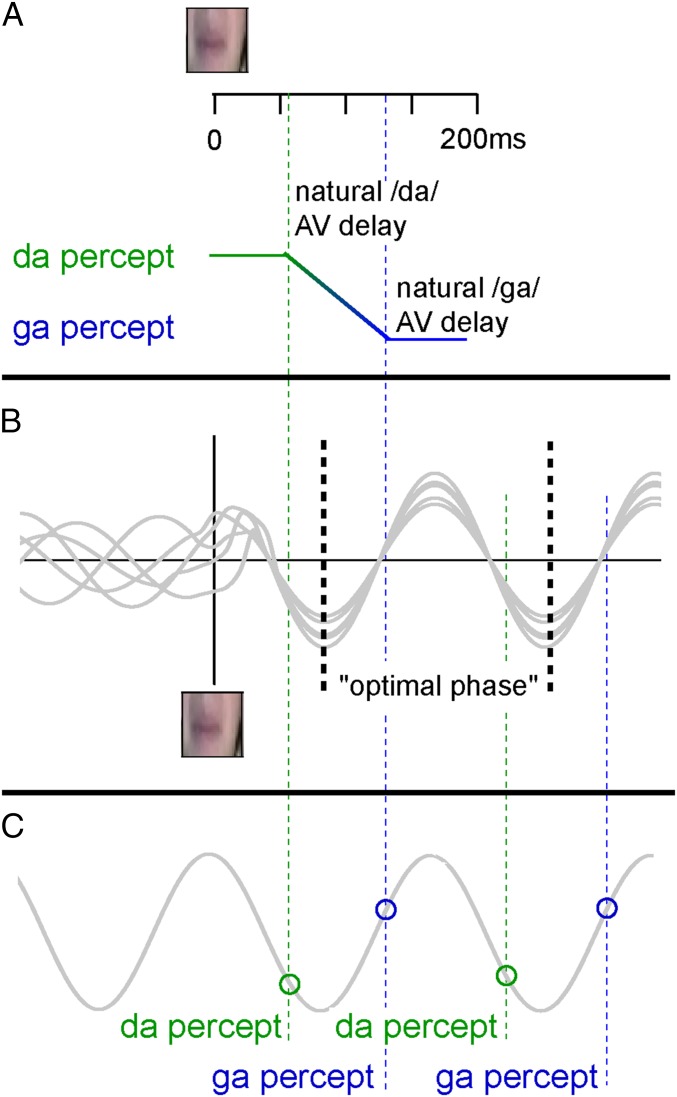

The human brain is remarkably capable of associating events that repeatedly occur together (20, 21), representing an efficient neural coding mechanism for guiding our interpretation of the environment. Specifically, when two events tend to occur together, they will enhance the neural connections between each other, consequently increasing the detection sensitivity of one event in case the associated event is present (22). We propose that this could also work for temporal associations. In a previous study, we showed that the onset between mouth movements and auditory speech signals differs between syllables, and that this influences syllable identification (4). For example, a naturally occurring /ga/ has an 80-ms larger visual-to-auditory onset difference than a naturally occurring /da/ (Fig. 4A) (4). Recent theories propose that visual cues benefit auditory speech processing by aligning ongoing oscillations in auditory cortex such that the “optimal” high excitable period coincides with the time point at which auditory stimuli are expected to arrive, thereby optimizing their processing (Fig. 4B) (8, 10, 23). If this indeed occurs, different syllables should be consistently presented at different phases of the reset oscillation (green and blue lines in Fig. 4B). A similar mechanism has also been proposed by Peelle and Davis (14). Because humans (or rather our brains) likely (implicitly) learn this consistent association between phase and syllable identity, one could hypothesize that neuronal populations coding for different syllables may begin to prefer specific phases, biasing syllable perception at corresponding phases even when visual input is absent (Fig. 4C). The current data indeed support this notion, as we show that the phase difference between /da/ and /ga/ fits 80 ms. The exact cortical origin of this effect cannot be unraveled with the current data, but we would expect to find these effects in auditory cortex.

Fig. 4.

Proposed mechanism for theta phase sensitization. (A) Dependent on the natural visual-to-auditory (AV) delay, voiced-stop consonants are identified as a /da/ or a /ga/ after presenting the same visual stimulus (4). (B) When visual speech is presented, ongoing theta oscillations synchronize, creating an optimal phase (black dotted line) at which stimuli are best-processed. The phase at which a /da/ or a /ga/ in natural situations is presented is different (green and blue lines, respectively), caused by the difference in visual-to-auditory delay. (C) Syllable perception is biased at phases at which /da/ and /ga/ are systematically presented in audiovisual settings even when visual input is absent.

Generalization of This Mechanism.

Temporal information is not only present in (audiovisual) speech. Therefore, any consistent temporal relationship between two stimuli could be coded in a manner similar to that demonstrated here. For example, the proposed mechanism should also generalize to auditory-only settings, because any temporal differences caused by articulatory processes should also influence the timing of individual syllables within a word; for example, the second syllable in “baga” should arrive at a later time point than in “bada.” It is an open question how these types of mechanisms generalize to situations in which speech is faster or slower. However, it is conceivable that when speaking faster the visual-to-auditory onset differences between /da/ and /ga/ also decrease, thereby also changing their expected phase difference. It has already been shown that cross-modal mechanisms rapidly adapt to changing temporal statistics in the environment (24), for example by changing the oscillatory phase relationship between visual and auditory regions (25).

Our results show that during 10-Hz entrainment an oscillatory pattern of syllable identification is present. This frequency is slightly higher than what is generally considered theta. This likely reflects that the brain flexibly adapts to the changing environment, for example when facing a person who speaks very fast. Thus, although under “normal” circumstances the effect seems constrained to theta (as shown in experiment 1), altering the brain state by entraining to higher frequencies still induces the effect and shows the flexibility of this mechanism.

Excitability Versus Phase Coding.

Much research has focused on the role of oscillations in systematically increasing and decreasing the excitability levels of neuronal populations (23, 26, 27). In this line of reasoning, speech processing is enhanced by aligning the most excitable phase of an oscillation to the incoming speech signal (5, 6). Intuitively, our results seem to contrast with this idea, as it appears that neuronal populations coding for separate syllables have phase-specific responses. However, it is also possible that a single neuronal population biases identification in the direction of one syllable, with this bias succeeding when the population is excited and failing when it is suppressed. This interpretation is less likely, considering that the exact phases at which syllable identification was biased varied across participants. Therefore, the phase at which identification is biased toward one syllable does not always fall on the most excitable point of the oscillation for each participant (unless the phases of the measured EEG signal are not comparable across participants). Considering that there are individual differences in the lag between stimulus presentation and brain response (e.g., 18), it would also follow that the phase at which syllable identification is biased does not match across participants. However, more research is needed to demonstrate conclusively that different neuronal populations code information preferably at a specific oscillatory phase (28).

Conclusion

Temporal associations are omnipresent in our environment, and it seems highly unlikely that our brain ignores this information when ordering and categorizing input. The current study has demonstrated that oscillatory phase shapes syllable perception and that this phase difference matches temporal statistics in the environment. To determine whether this type of phase sensitization is a common neural mechanism, it is necessary to investigate other types of temporal statistics, especially because it could provide a mechanism for separating different representations (26, 29, 30) and offer an efficient way of coding time differences (31). Future research needs to investigate whether other properties are also encoded in phase, revealing the full potential of this type of phase coding scheme.

Materials and Methods

In total, 40 participants took part in our study (20 per experiment). All participants gave written informed consent. The study was approved by the local ethical committee at the Faculty of Psychology and Neuroscience at Maastricht University. Detailed methods are described in SI Materials and Methods.

Acknowledgments

We thank Giancarlo Valente for useful suggestions for the analysis. Kirsten Petras and Helen Lückmann helped improve the final manuscript. This study was supported by a grant from the Dutch Organization for Scientific Research (NWO; 406-11-068).

Footnotes

The authors declare no conflict of interest.

This article is a PNAS Direct Submission.

This article contains supporting information online at www.pnas.org/lookup/suppl/doi:10.1073/pnas.1517519112/-/DCSupplemental.

References

- 1. Campbell R. The processing of audio-visual speech: Empirical and neural bases. Philos Trans R Soc Lond B Biol Sci. 2008;363(1493):1001–1010. doi: 10.1098/rstb.2007.2155.

- 2. Schwartz J-L, Savariaux C. No, there is no 150 ms lead of visual speech on auditory speech, but a range of audiovisual asynchronies varying from small audio lead to large audio lag. PLoS Comput Biol. 2014;10(7):e1003743. doi: 10.1371/journal.pcbi.1003743.

- 3. Chandrasekaran C, Trubanova A, Stillittano S, Caplier A, Ghazanfar AA. The natural statistics of audiovisual speech. PLoS Comput Biol. 2009;5(7):e1000436. doi: 10.1371/journal.pcbi.1000436.

- 4. ten Oever S, Sack AT, Wheat KL, Bien N, van Atteveldt N. Audio-visual onset differences are used to determine syllable identity for ambiguous audio-visual stimulus pairs. Front Psychol. 2013;4:331. doi: 10.3389/fpsyg.2013.00331.

- 5. Schroeder CE, Lakatos P, Kajikawa Y, Partan S, Puce A. Neuronal oscillations and visual amplification of speech. Trends Cogn Sci. 2008;12(3):106–113. doi: 10.1016/j.tics.2008.01.002.

- 6. Peelle JE, Sommers MS. Prediction and constraint in audiovisual speech perception. Cortex. 2015;68:169–181. doi: 10.1016/j.cortex.2015.03.006.

- 7. Luo H, Liu Z, Poeppel D. Auditory cortex tracks both auditory and visual stimulus dynamics using low-frequency neuronal phase modulation. PLoS Biol. 2010;8(8):e1000445. doi: 10.1371/journal.pbio.1000445.

- 8. van Atteveldt N, Murray MM, Thut G, Schroeder CE. Multisensory integration: Flexible use of general operations. Neuron. 2014;81(6):1240–1253. doi: 10.1016/j.neuron.2014.02.044.

- 9. Perrodin C, Kayser C, Logothetis NK, Petkov CI. Natural asynchronies in audiovisual communication signals regulate neuronal multisensory interactions in voice-sensitive cortex. Proc Natl Acad Sci USA. 2015;112(1):273–278. doi: 10.1073/pnas.1412817112.

- 10. Mercier MR, et al. Neuro-oscillatory phase alignment drives speeded multisensory response times: An electro-corticographic investigation. J Neurosci. 2015;35(22):8546–8557. doi: 10.1523/JNEUROSCI.4527-14.2015.

- 11. Besle J, et al. Tuning of the human neocortex to the temporal dynamics of attended events. J Neurosci. 2011;31(9):3176–3185. doi: 10.1523/JNEUROSCI.4518-10.2011.

- 12. Lakatos P, Chen CM, O’Connell MN, Mills A, Schroeder CE. Neuronal oscillations and multisensory interaction in primary auditory cortex. Neuron. 2007;53(2):279–292. doi: 10.1016/j.neuron.2006.12.011.

- 13. Kayser C, Ince RA, Panzeri S. Analysis of slow (theta) oscillations as a potential temporal reference frame for information coding in sensory cortices. PLoS Comput Biol. 2012;8(10):e1002717. doi: 10.1371/journal.pcbi.1002717.

- 14. Peelle JE, Davis MH. Neural oscillations carry speech rhythm through to comprehension. Front Psychol. 2012;3:320. doi: 10.3389/fpsyg.2012.00320.

- 15. Zoefel B, Heil P. Detection of near-threshold sounds is independent of EEG phase in common frequency bands. Front Psychol. 2013;4:262. doi: 10.3389/fpsyg.2013.00262.

- 16. de Graaf TA, et al. Alpha-band rhythms in visual task performance: Phase-locking by rhythmic sensory stimulation. PLoS One. 2013;8(3):e60035. doi: 10.1371/journal.pone.0060035.

- 17. Lakatos P, Karmos G, Mehta AD, Ulbert I, Schroeder CE. Entrainment of neuronal oscillations as a mechanism of attentional selection. Science. 2008;320(5872):110–113. doi: 10.1126/science.1154735.

- 18. Henry MJ, Obleser J. Frequency modulation entrains slow neural oscillations and optimizes human listening behavior. Proc Natl Acad Sci USA. 2012;109(49):20095–20100. doi: 10.1073/pnas.1213390109.

- 19. Fiebelkorn IC, et al. Ready, set, reset: Stimulus-locked periodicity in behavioral performance demonstrates the consequences of cross-sensory phase reset. J Neurosci. 2011;31(27):9971–9981. doi: 10.1523/JNEUROSCI.1338-11.2011.

- 20. Summerfield C, Egner T. Expectation (and attention) in visual cognition. Trends Cogn Sci. 2009;13(9):403–409. doi: 10.1016/j.tics.2009.06.003.

- 21. Fiser J, Aslin RN. Unsupervised statistical learning of higher-order spatial structures from visual scenes. Psychol Sci. 2001;12(6):499–504. doi: 10.1111/1467-9280.00392.

- 22. Hebb DO. The organization of behavior: A neuropsychological theory. John Wiley & Sons; New York: 1949.

- 23. Schroeder CE, Lakatos P. Low-frequency neuronal oscillations as instruments of sensory selection. Trends Neurosci. 2009;32(1):9–18. doi: 10.1016/j.tins.2008.09.012.

- 24. Fujisaki W, Shimojo S, Kashino M, Nishida S. Recalibration of audiovisual simultaneity. Nat Neurosci. 2004;7(7):773–778. doi: 10.1038/nn1268.

- 25. Kösem A, Gramfort A, van Wassenhove V. Encoding of event timing in the phase of neural oscillations. Neuroimage. 2014;92:274–284. doi: 10.1016/j.neuroimage.2014.02.010.

- 26. Jensen O, Gips B, Bergmann TO, Bonnefond M. Temporal coding organized by coupled alpha and gamma oscillations prioritize visual processing. Trends Neurosci. 2014;37(7):357–369. doi: 10.1016/j.tins.2014.04.001.

- 27. Mathewson KE, Fabiani M, Gratton G, Beck DM, Lleras A. Rescuing stimuli from invisibility: Inducing a momentary release from visual masking with pre-target entrainment. Cognition. 2010;115(1):186–191. doi: 10.1016/j.cognition.2009.11.010.

- 28. Watrous AJ, Fell J, Ekstrom AD, Axmacher N. More than spikes: Common oscillatory mechanisms for content specific neural representations during perception and memory. Curr Opin Neurobiol. 2015;31:33–39. doi: 10.1016/j.conb.2014.07.024.

- 29. Fell J, Axmacher N. The role of phase synchronization in memory processes. Nat Rev Neurosci. 2011;12(2):105–118. doi: 10.1038/nrn2979.

- 30. Kayser C, Montemurro MA, Logothetis NK, Panzeri S. Spike-phase coding boosts and stabilizes information carried by spatial and temporal spike patterns. Neuron. 2009;61(4):597–608. doi: 10.1016/j.neuron.2009.01.008.

- 31. Chakravarthi R, Vanrullen R. Conscious updating is a rhythmic process. Proc Natl Acad Sci USA. 2012;109(26):10599–10604. doi: 10.1073/pnas.1121622109.

- 32. Boersma P, Weenink D. 2013. Praat: Doing Phonetics by Computer (University of Amsterdam, Amsterdam), Version 5.3.56.

- 33. Bertelson P, Vroomen J, De Gelder B. Visual recalibration of auditory speech identification: A McGurk aftereffect. Psychol Sci. 2003;14(6):592–597. doi: 10.1046/j.0956-7976.2003.psci_1470.x.

- 34. Zychaluk K, Foster DH. Model-free estimation of the psychometric function. Atten Percept Psychophys. 2009;71(6):1414–1425. doi: 10.3758/APP.71.6.1414.

- 35. Oostenveld R, Fries P, Maris E, Schoffelen J-M. FieldTrip: Open source software for advanced analysis of MEG, EEG, and invasive electrophysiological data. Comput Intell Neurosci. 2011;2011:156869. doi: 10.1155/2011/156869.

- 36. Berens P. CircStat: A MATLAB toolbox for circular statistics. J Stat Softw. 2009;31(10):1–21.

- 37. Gómez-Herrero G, et al. 2006. Automatic removal of ocular artifacts in the EEG without an EOG reference channel. Proceedings of the 7th Nordic Signal Processing Symposium (IEEE, Reykjavik), pp 130–133.

- 38. Zar JH. 1998. Biostatistical Analysis (Prentice Hall, Englewood Cliffs, NJ), 4th Ed.

- 39. Benjamini Y, Yekutieli D. The control of the false discovery rate in multiple testing under dependency. Ann Stat. 2001;29(4):1165–1188.

- 40. Mewhort DJ, Kelly M, Johns BT. Randomization tests and the unequal-N/unequal-variance problem. Behav Res Methods. 2009;41(3):664–667. doi: 10.3758/BRM.41.3.664.

- 41. Hari R, Hämäläinen M, Joutsiniemi SL. Neuromagnetic steady-state responses to auditory stimuli. J Acoust Soc Am. 1989;86(3):1033–1039. doi: 10.1121/1.398093.

- 42. Rees A, Green GG, Kay RH. Steady-state evoked responses to sinusoidally amplitude-modulated sounds recorded in man. Hear Res. 1986;23(2):123–133. doi: 10.1016/0378-5955(86)90009-2.

- 43. Lakatos P, et al. The spectrotemporal filter mechanism of auditory selective attention. Neuron. 2013;77(4):750–761. doi: 10.1016/j.neuron.2012.11.034.