Abstract

Objectives

We assessed the use of administrative evidence-based practices (A-EBPs) among managers of programs in chronic diseases (CD), environmental health (EH), and infectious diseases (ID) from a sample of local health departments (LHDs) in the U.S.

Design

Program managers completed a 66-question survey consisting of six sections: biographical data, use of A-EBPs, diffusion attributes, use of resources, barriers to evidence-based public health (EBPH), and competencies in EBPH.

Participants

The survey was sent electronically to 168 program managers in CD, 179 in EH, and 175 in ID, representing 228 LHDs. The survey had previously been completed by 517 LHD Directors.

Measures

The use of A-EBPs was scored for 19 individual A-EBPs, across the five A-EBP domains, and for all domains combined. Individual characteristics were derived from the survey responses, with additional data on LHDs drawn from linked NACCHO Profile survey data. Results for program managers were compared across the three types of programs and to responses from the previous survey of LHD directors. The scores were ordered and categorized into tertiles. Unconditional logistic regression models were used to calculate odds ratios (ORs) and 95% confidence intervals (CIs), comparing individual and agency characteristics for those with the highest third of A-EBPs scores to those with the lowest third.

Results

The 332 total responses from program managers represented 196 individual LHDs. Program managers differed (across the three programs, and compared to LHD Directors) in demographic characteristics, education, and experience. The use of A-EBPs varied widely across specific practices and individuals, but the pattern of responses from directors and program managers was very similar for the majority of A-EBPs.

Conclusions

Understanding the differences in educational background, experience, organizational culture, and performance of A-EBPs between program managers and LHD directors is a necessary step to improving competencies in EBPH.

Keywords: evidence-based public health, organization and administration, public health practice, quality improvement, translational research

Introduction

Public health programs and policies have largely been credited with gains in longevity and quality of life in the previous century, with notable achievements in the reduction of morbidity and mortality from vaccine preventable diseases, tobacco use, and motor vehicle accidents.1,2 Developing, identifying, and implementing public health measures for which there is clear evidence of impact have taken on increasing importance in the context of recent forces of change, including the national voluntary accreditation program for governmental public health agencies3, the Affordable Care Act4, and political and economic pressures subsequent to the 2007-2008 recession.5 Evidence-based public health (EBPH) - described as the integration of science-based interventions with community preferences to improve the health of populations6 - has been widely promoted through the use of the Guide to Community Preventive Services7 for over a decade; however, it is only recently that efforts to measure the reported performance of evidence-based practices have been undertaken.8-10

Identifying administrative evidence-based practices (A-EBPs) in public health – the infrastructural and operational milieu which supports and facilitates EBPH – has been a particular recent focus within the emerging field of Public Health Systems and Services Research (PHSSR).8,9,11 Five major domains of A-EBPs that are modifiable in the short term have been identified; these include practices in workforce development, leadership, organizational climate and culture, relationships and partnerships, and financial processes. In a survey of 517 directors of local health departments (LHDs), Brownson et al documented highest performance in A-EBPs related to relationships and partnerships and lowest performance in practices related to the organizational culture and climate of the agency.9 While these data reflect the important perspectives of LHD directors, there is less known about the knowledge and performance of A-EBPs among program managers, who are more often directly responsible for priority setting and operations related to specific program areas. This is particularly relevant for managers of programs in chronic diseases, environmental health, and infectious diseases, three arenas in which there have been significant contributions to the overall improvements in health alluded to earlier. The purpose of this article, therefore, is to document the performance of A-EBPs among program managers and contrast this with LHD directors, in an effort to identify the specific facilitators, barriers, and training needs requisite for expanding EBPH.

Methods

Data on the use of A-EBPs were collected from responses to a nationwide survey of LHDs. The sampling frame, questionnaire development and testing, and data collection steps have been described previously.9,12 Briefly, a stratified random sample of 1,067 US LHDs was drawn from the database of 2,565 LHDs maintained by the National Association of County and City Health Officials (NACCHO), with stratification by jurisdictional population. The survey instrument was based in part on a public health systems logic model and related frameworks13-16 and previous EBPH-focused research with state and local health departments, where validated and standardized questions existed.17-22 The questionnaire consisted of six sections (biographical data, A-EBPs, diffusion attributes, barriers to EBPH, use of resources, competencies in EBPH), with a total of 66 questions. The A-EBPs section of the instrument was based on a recent literature review and consisted of 19 questions that were newly developed.8 Survey instrument validity and reliability were documented through cognitive response testing (with 12 experts in the field) and test-retest processes (involving 90 LHD practitioners), which resulted in a survey instrument with high reliability, with Cronbach's alpha values for the A-EBPs questions ranging from 0.67 to 0.94.23

Data were collected using an online survey (Qualtrics software24) that was delivered nationally to email accounts of 1,067 LHD directors, reduced to 967 after excluding invalid email addresses. In their responses, LHD directors (or their designees) were asked to identify managers/leaders in three program areas within their LHD: chronic diseases (CD), environmental health (EH), and infectious diseases (ID). The online survey was subsequently sent directly to each program manager: 168 in CD, 179 in EH, and 175 in ID (a small number of these went to the same individual, who served as program manager for two or more programs), representing 228 LHDs. For LHD directors who provided no contact information for program managers, it is not known whether they chose not to provide it or whether no such positions existed in their LHD. There were 517 valid responses to the survey (response rate (RR) of 54%) from LHD directors, 110 (RR 65.5%) from CD managers, 118 (RR 65.9%) from EH managers, and 120 (RR 68.6%) from ID managers. The 332 total responses from program managers represented 196 individual LHDs.
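The program-specific response rates above can be checked directly from the reported counts. The following is a minimal sketch in Python, assuming the response rate is simply valid responses divided by surveys sent; the variable and function names are illustrative and are not taken from the study's analysis files.

```python
# Counts reported in the text: surveys sent and valid responses per program area.
sent = {"CD": 168, "EH": 179, "ID": 175}
returned = {"CD": 110, "EH": 118, "ID": 120}


def response_rate(n_returned, n_sent):
    """Return the response rate as a percentage (assumed definition)."""
    return 100.0 * n_returned / n_sent


# Response rate for each program area, keyed by the same labels as above.
rates = {group: response_rate(returned[group], sent[group]) for group in sent}

for group, rate in rates.items():
    print(f"{group}: {rate:.1f}%")
```

Rounded to one decimal place, these values correspond to the 65.5%, 65.9%, and 68.6% reported for CD, EH, and ID managers.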

Individual characteristics were derived from the survey responses, with additional data on LHDs drawn from linked NACCHO Profile survey data.25 The use of A-EBPs was scored for 19 individual A-EBPs, across the five A-EBP domains, and for all domains combined. The scores were ordered and categorized into tertiles. Unconditional logistic regression models were used to calculate odds ratios (ORs) and 95% confidence intervals (CIs), comparing individual and agency characteristics for those with the highest third of A-EBPs scores to those with the lowest third. Adjusted odds ratios were derived from a final regression model, which included significant variables and covariates that contributed to the fit of the model. For the adjusted ORs the variables that were retained were population jurisdiction, governance structure, census regions, and highest degree.
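The tertile step can be illustrated with a short sketch. The Python below orders a set of scores, splits them into thirds by rank, and keeps only the lowest and highest thirds for comparison. The scores are hypothetical (not study data), and the handling of tied scores at the cut points is an assumption, since the text does not describe it.

```python
def assign_tertiles(scores):
    """Label each score 'lowest', 'middle', or 'highest' by rank order."""
    n = len(scores)
    # Respondent indices sorted by ascending score (ties keep input order).
    order = sorted(range(n), key=lambda i: scores[i])
    labels = [None] * n
    for rank, i in enumerate(order):
        if rank < n / 3:
            labels[i] = "lowest"
        elif rank < 2 * n / 3:
            labels[i] = "middle"
        else:
            labels[i] = "highest"
    return labels


# Hypothetical summed A-EBP scores for nine respondents (not study data).
example_scores = [42, 55, 61, 38, 70, 49, 66, 58, 45]
tertiles = assign_tertiles(example_scores)

# Only the two extreme groups enter the highest-versus-lowest comparison.
analysis_set = [(s, t) for s, t in zip(example_scores, tertiles) if t != "middle"]
print(analysis_set)
```

Dropping the middle third sharpens the contrast between high and low performers, at the cost of excluding roughly one third of respondents from the regression models.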

Results

The individual characteristics of program managers and LHD directors are provided in table 1. Program managers tended to be younger than LHD directors. The vast majority of CD and ID program managers were female, while most EH managers were male. While there was little difference in the average number of years in their current position, LHD directors tended to have more overall work experience in public health, followed closely by EH managers. Managers of CD programs tended to have the fewest years in both their current position and public health overall. Overall, program managers had less formal education than LHD directors, but there were notable differences in education across the three program areas. Almost half of ID managers had nursing degrees as their highest degree, a much greater share than for any other group. One quarter of ID managers had a Master of Public Health (MPH) as their highest degree, while this was true for less than 10% of EH managers. Program managers tended to represent LHDs with larger jurisdictions compared to LHD directors.

Table 1.

Characteristics of Program Managers and Local Health Department Directors, United States, 2012

| Characteristic | Program Managers: Chronic Disease, No. | Program Managers: Chronic Disease, % | Program Managers: Environmental Health, No. | Program Managers: Environmental Health, % | Program Managers: Infectious Disease, No. | Program Managers: Infectious Disease, % | Program Managers: Total, No. | Program Managers: Total, % | LHD Directors, No. | LHD Directors, % |
|---|---|---|---|---|---|---|---|---|---|---|
| Individual | | | | | | | | | | |
| Age (yrs) | | | | | | | | | | |
| 20-29 | 5 | 4.6 | 4 | 3.4 | 10 | 8.5 | 19 | 5.5 | 52ᵃ | 10.0 |
| 30-39 | 25 | 22.9 | 19 | 16.4 | 19 | 16.1 | 63 | 18.4 | | |
| 40-49 | 23 | 21.1 | 35 | 30.2 | 21 | 17.8 | 79 | 23.0 | 110 | 21.3 |
| 50-59 | 41 | 37.6 | 40 | 34.5 | 47 | 39.8 | 128 | 37.3 | 228 | 44.1 |
| 60 and older | 15 | 13.8 | 18 | 15.5 | 21 | 17.8 | 54 | 15.7 | 127 | 24.6 |
| Total | 109 | | 116 | | 118 | | 343 | | 517 | 100 |
| Gender | | | | | | | | | | |
| Female | 94 | 86.2 | 36 | 31.0 | 98 | 83.1 | 228 | 66.5 | 315 | 60.9 |
| Male | 15 | 13.8 | 80 | 69.0 | 20 | 16.9 | 115 | 33.5 | 202 | 39.1 |
| Total | 109 | | 116 | | 118 | | 343 | | 517 | 100 |
| Job Position | | | | | | | | | | |
| Top executive, health officer, commissioner | 4 | 3.7 | 11 | 9.5 | 6 | 5.1 | 21 | 6.1 | 351 | 67.9 |
| Administrator, deputy, or assistant director | 13 | 11.9 | 20 | 17.2 | 13 | 11.0 | 46 | 13.4 | 117 | 22.6 |
| Manager of a division or program, other | 92 | 84.4 | 85 | 73.3 | 99 | 83.9 | 276 | 80.5 | 49 | 9.4 |
| Total | 109 | | 116 | | 118 | | 343 | | 517 | 100 |
| Work Experience | | | | | | | | | | |
| Years in current position (mean) | 6.4 | | 8.8 | | 7.7 | | 7.7 | | 8.5 | |
| Years in Public Health (mean) | 13.6 | | 18.2 | | 14.1 | | 15.3 | | 20.4 | |
| Highest Degree | | | | | | | | | | |
| Doctoral | 11 | 10.0 | 4 | 3.4 | 7 | 5.8 | 22 | 6.3 | 91 | 17.7 |
| Master of Public Health | 13 | 11.8 | 9 | 7.6 | 30 | 25.0 | 52 | 14.9 | 88 | 17.1 |
| Other masters degree | 28 | 25.5 | 29 | 24.6 | 12 | 10.0 | 69 | 19.8 | 138 | 26.8 |
| Nursing | 19 | 17.3 | 1 | 0.8 | 53 | 44.2 | 73 | 21.0 | 97 | 18.8 |
| Bachelors degree or less | 39 | 35.5 | 75 | 63.6 | 18 | 15.0 | 132 | 37.9 | 101 | 19.6 |
| Total | 110 | | 118 | | 120 | | 348 | | 515 | 100 |
| Health Department | | | | | | | | | | |
| Census Region | | | | | | | | | | |
| Northeast | 12 | 10.9 | 13 | 11.0 | 14 | 11.7 | 39 | 11.2 | 87 | 16.9 |
| Midwest | 37 | 33.6 | 44 | 37.3 | 45 | 37.5 | 126 | 36.2 | 200 | 38.8 |
| South | 41 | 37.3 | 41 | 34.7 | 35 | 29.2 | 117 | 33.6 | 149 | 28.9 |
| West | 20 | 18.2 | 20 | 16.9 | 26 | 21.7 | 66 | 19.0 | 80 | 15.5 |
| Total | 110 | | 118 | | 120 | | 348 | | 516 | 100 |
| Population of Jurisdiction | | | | | | | | | | |
| <25,000 | 14 | 12.7 | 16 | 13.6 | 19 | 15.8 | 49 | 14.1 | 135 | 26.2 |
| 25,000 to 49,999 | 24 | 21.8 | 26 | 22.0 | 28 | 23.3 | 78 | 22.4 | 110 | 21.4 |
| 50,000 to 99,999 | 22 | 20.0 | 21 | 17.8 | 15 | 12.5 | 58 | 16.7 | 95 | 18.4 |
| 100,000 to 499,999 | 31 | 28.2 | 39 | 33.1 | 37 | 30.8 | 107 | 30.7 | 106 | 20.6 |
| 500,000 or larger | 19 | 17.3 | 16 | 13.6 | 21 | 17.5 | 56 | 16.1 | 69 | 13.4 |
| Total | 110 | | 118 | | 120 | | 348 | | 515 | 100 |
| Governance Structure | | | | | | | | | | |
| State governed | 8 | 7.3 | 8 | 6.8 | 6 | 5.0 | 22 | 6.3 | 51 | 9.9 |
| Locally governed | 82 | 74.5 | 89 | 75.4 | 101 | 84.9 | 272 | 78.4 | 416 | 80.8 |
| Shared governance | 20 | 18.2 | 21 | 17.8 | 12 | 10.1 | 53 | 15.3 | 48 | 9.3 |
| Total | 110 | | 118 | | 119 | | 347 | | 515 | 100 |

ᵃ Age groups 20-29 and 30-39 combined

Table after Brownson et al 9

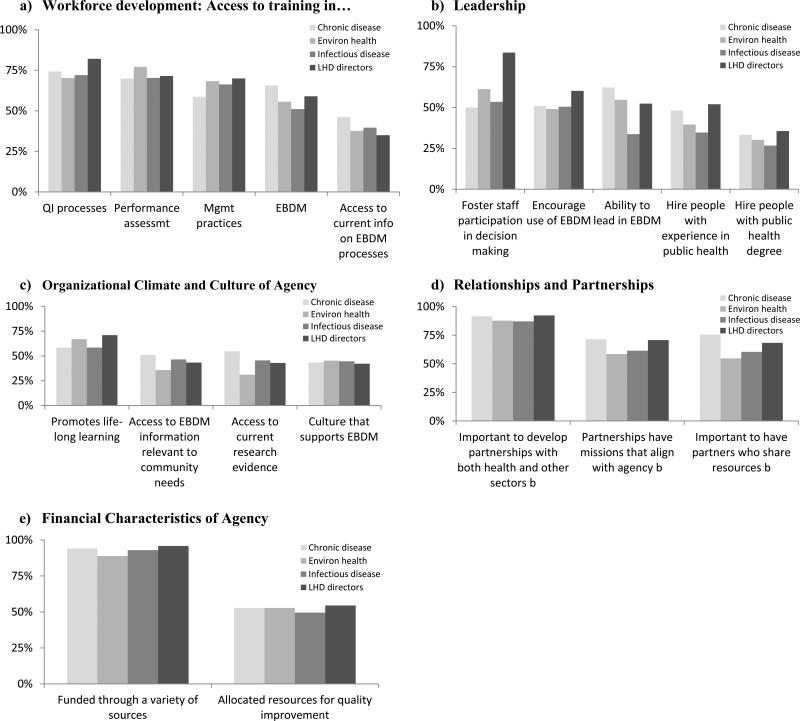

As with LHD directors, responses on the use of A-EBPs varied widely across specific practices and individuals, but the pattern of responses from directors and program managers was very similar (figures 1a-e). The A-EBP with the lowest response across all individuals was “hiring people with a public health degree” (30.1% for all program managers and 35.6% for LHD directors – data not shown), while the A-EBP with the highest response was “having a variety of funding sources”. At the level of A-EBP domain, program managers were least likely to engage in leadership practices, while LHD directors showed least use of practices that reflected the LHD organizational climate and culture. Overall, program managers and LHD directors reported highest use of A-EBPs in the domain of relationships and partnerships. For every domain, directors reported higher values than program managers as a whole, although this difference was minimal for workforce development and organizational climate.

Figure 1.

Administrative evidence-based practices in local health departments, Directors and Program Managers, United States, 2012

Managers of CD programs tended to show the highest level of A-EBP performance compared to EH and ID managers; the highest response for any domain (including by LHD directors) was in the performance of A-EBPs in relationships and partnerships by CD managers. For program managers, the greatest relative difference across domains, calculated as (highest use − lowest use)/total use, was in performance of leadership A-EBPs, while the smallest relative difference was for workforce development. These relative differences are visually apparent in figures 1a-e.

Predictors of performance of A-EBPs for the three groups of program managers were similar to those for LHD directors for most characteristics of interest (table 2), including age (older compared to younger), education (higher degrees compared to lower degrees), and jurisdictional population (larger compared to smaller). After adjustment for all statistically significant bivariate predictors, there were few significant findings in comparing the highest to the lowest tertile of A-EBP scores among program managers: working in locally governed LHDs (the reference category, adjusted OR 1.0) predicted higher performance of A-EBPs than working in state-governed LHDs (adjusted OR 0.1 [95% CI 0.02, 0.5]), while the estimate for shared governance (adjusted OR 0.6 [95% CI 0.2, 1.9]) was not statistically significant. This finding is in contrast to predictors for LHD directors, where working in state-governed LHDs predicted highest use.

Table 2.

Predictors of administrative evidence-based practices among directors and program managers in local health departments, United States, 2012

| Characteristic | Program Managers (n=180): No. in highest tertile | Program Managers (n=180): No. in lowest tertile | Program Managers (n=180): Unadjusted odds ratio (95% confidence interval) | Program Managers (n=180): Adjusted odds ratio (95% confidence interval) | Directors (n=517): Adjusted odds ratio (95% confidence interval) |
|---|---|---|---|---|---|
| Individual | | | | | |
| Age | | | | | |
| 20-39 | 23 | 20 | 1.0 | -- | 1.0 |
| 40-49 | 22 | 24 | 0.8 (0.3, 1.8) | -- | 1.5 (0.6, 3.9) |
| 50-59 | 31 | 38 | 0.7 (0.3, 1.5) | -- | 2.5 (1.08, 6.0) |
| 60 and older | 16 | 8 | 1.7 (0.6, 4.9) | -- | 1.5 (0.6, 3.7) |
| Gender | | | | | |
| Female | 61 | 65 | 0.8 (0.4, 1.4) | -- | |
| Male | 31 | 25 | 1.0 | -- | |
| Job Position | | | | | |
| Manager of a division or program, other | 79 | 80 | 0.8 (0.3, 1.8) | -- | |
| Administrator, deputy, or assistant director | 13 | 10 | 1.0 | -- | |
| Highest Degree | | | | | |
| Doctoral | 6 | 1 | 7.4 (0.8, 64.9) | 4.9 (0.5, 49.5) | 2.1 (0.9, 5.3) |
| Master of Public Health | 15 | 14 | 1.3 (0.6, 3.2) | 1.1 (0.4, 2.9) | 1.9 (0.8, 4.6) |
| Other masters degree | 23 | 16 | 1.8 (0.8, 3.9) | 1.8 (0.7, 4.6) | 1.9 (0.9, 4.1) |
| Nursing | 18 | 22 | 1.0 (0.5, 2.2) | 1.2 (0.5, 2.7) | 1.5 (0.6, 3.6) |
| Bachelors degree or less | 30 | 37 | 1.0 | 1.0 | 1.0 |
| Health Department | | | | | |
| Census Region | | | | | |
| Northeast | 7 | 11 | 1.0 | 1.0 | 1.0 |
| Midwest | 30 | 37 | 1.3 (0.4, 3.7) | 1.3 (0.4, 4.3) | 1.4 (0.6, 3.0) |
| South | 37 | 27 | 2.2 (0.7, 6.3) | 3.7 (0.9, 15.0) | 1.9 (0.8, 4.8) |
| West | 18 | 15 | 1.9 (0.6, 6.1) | 1.6 (0.4, 5.7) | 1.5 (0.6, 3.6) |
| Population of Jurisdiction | | | | | |
| <25,000 | 8 | 16 | 1.0 | 1.0 | 1.0 |
| 25,000 to 49,999 | 20 | 22 | 1.8 (0.6, 5.2) | 1.6 (0.5, 4.8) | 7.5 (3.3, 17.3) |
| 50,000 to 99,999 | 13 | 16 | 1.6 (0.5, 5.0) | 1.8 (0.6, 5.8) | 4.9 (2.1, 11.2) |
| 100,000 to 499,999 | 34 | 25 | 2.7 (1.0, 7.3) | 2.5 (0.8, 7.3) | 7.1 (3.0, 16.9) |
| 500,000 or larger | 17 | 11 | 3.1 (1.0, 9.6) | 1.7 (0.5, 6.3) | 4.4 (1.6, 12.5) |
| Governance Structure | | | | | |
| State governed | 3 | 11 | 0.3 (0.1, 1.0) | 0.1 (0.02, 0.5) | 3.1 (1.04, 9.1) |
| Locally governed | 71 | 67 | 1.0 | 1.0 | 1.0 |
| Shared governance | 18 | 12 | 1.4 (0.6, 3.2) | 0.6 (0.2, 1.9) | 2.5 (0.8, 7.6) |

Note: Variables were retained in the final model if significant at the 0.2 level.

Table after Brownson et al 9
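The unadjusted odds ratios in table 2 can be reproduced from the tertile counts. The sketch below, in Python, assumes a Wald-type (Woolf) interval on the log odds ratio, which is what a logistic regression with a single categorical predictor yields; the article does not name the interval method, and the function name is illustrative. The example uses the program manager counts for a doctoral degree (6 in the highest tertile, 1 in the lowest) against the reference category of a bachelors degree or less (30 and 37).

```python
import math


def odds_ratio_ci(a, b, c, d, z=1.96):
    """Odds ratio and Wald 95% CI for a 2x2 table (assumed method).

    a, b: highest- and lowest-tertile counts in the comparison category
    c, d: highest- and lowest-tertile counts in the reference category
    """
    odds_ratio = (a / b) / (c / d)
    # Standard error of the log odds ratio (Woolf's formula).
    se_log_or = math.sqrt(1 / a + 1 / b + 1 / c + 1 / d)
    lower = math.exp(math.log(odds_ratio) - z * se_log_or)
    upper = math.exp(math.log(odds_ratio) + z * se_log_or)
    return odds_ratio, lower, upper


# Table 2, program managers: doctoral (6, 1) vs. bachelors degree or less (30, 37).
or_doc, lo_doc, hi_doc = odds_ratio_ci(6, 1, 30, 37)
print(f"OR {or_doc:.1f} (95% CI {lo_doc:.1f}, {hi_doc:.1f})")
```

Under these assumptions the result rounds to the tabulated 7.4 (0.8, 64.9). The very wide interval reflects the single respondent with a doctoral degree in the lowest tertile. The adjusted odds ratios cannot be recovered this way, because they depend on the other covariates in the final model.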

Discussion

Performance measurement in public health has most frequently focused on the agency and characteristics of the agency director, based most often on responses from a single individual, i.e., the director or designee.26,27 To our knowledge, this is the first nationwide report on the performance of specific practices at both the top-leader and program-leadership levels, providing insights on EBPH across and within LHDs. While LHD directors and program managers report similar levels of performance in many of the A-EBPs, there are differences in both performance and individual characteristics which have important implications for improving competencies in EBPH.

It is not surprising to find differences in performance of A-EBPs and individual characteristics across the three program areas of chronic disease, environmental health, and infectious diseases – such differences reflect not only the differences in program content, but the history and organizational milieu of these programs. Chronic disease programs are among the newer major programs to be established at state and local health department levels, and the data on time in current position and overall experience in public health among CD managers in this study may reflect this history.28 Although New York State initiated cancer reporting as early as 1911, it wasn't until 1985 that the first National Conference on Chronic Disease Prevention and Control was held, and 1988 that CDC established the National Center for Chronic Disease Prevention and Health Promotion.29 At the state level, it wasn't until 1993 that all states had established tobacco control and prevention programs, and 1995 that all states had established screening programs for breast cancer.29 The relatively higher scores for CD program managers in supporting training for EBPH, leadership in EBPH, and in the domain of relationships and partnerships are consistent with earlier emphases on CD programs in general and cancer control and prevention specifically. Meissner et al described internal and external factors that contribute to success in controlling cancer in the public health setting, which included leadership, use of data, training, and the importance of linkages and coalitions for developing, implementing, and maintaining community-based programs.30 Brownson and Bright defined “cross-cutting areas of focus that will enhance efforts in chronic disease control”, which included a focus on data and science, community and decision-maker support, and meaningful collaborations.29

In contrast to CD programs, ID programs are among the oldest and most well-established programs in public health, reflecting the initial focus of most governmental public health agencies in controlling epidemics of diseases such as yellow fever, smallpox, and tuberculosis.28 Among these three program areas, ID programs are most often connected with clinical care, so it is not surprising to find that almost half of ID managers have a nursing degree as their highest degree. Of the three programs, EH has the greatest variability across LHDs: in many states, EH activities are under the purview of the state and local public health agency, while in others EH functions are carried out by separate agencies at either the state or local level, or both.31,32 EH activities also vary significantly by size of the LHD and jurisdictional population: EH activities in smaller LHDs, with smaller jurisdictional populations tend to be limited to inspection of food establishments and public facilities such as hotels/motels, swimming pools, and daycare facilities, while EH activities in large metropolitan LHDs may include air and water quality, radiation control, and noise pollution.31 Overall, only 11 of the 34 EH-related activities included in the 2010 NACCHO Profile study are provided by more than 50% of LHDs.31 Such differences across program areas are also reflected in the educational background of program managers, which also influences organizational culture, e.g., only 7.4% of EH program managers had an MPH as their highest degree, compared to 12.4% for CD managers and 24.3% for ID managers. The training of program managers overall compared to LHD directors further highlights these differences, as 17.7% of directors had a doctoral degree as their highest degree, compared to 5.5% for program managers.

It is possible that some of the variation observed - e.g., access to evidence-based decision making - across the three types of programs is related to the availability of evidence-based practice guidelines. The recommendations in the Guide to Community Preventive Services7 pertain in large part to chronic diseases. There are a few recommendations that pertain directly to infectious diseases (HIV/AIDS, STIs; vaccinations; and pandemic influenza), but fewer for environmental health (smoke-free policies – often enforced by EH staff; indoor air pollution regarding asthma; and the built environment regarding physical activity). Because of this, it may be that program managers in ID and EH rely more on best practices guidelines within their disciplines.

These findings show some similarities to those from a 2011 NACCHO study, involving a survey of 521 LHD staff in three programmatic areas (tobacco prevention, HIV/AIDS prevention, and immunization) to explore their knowledge, attitudes, and practices related to EBPH in general, and The Community Guide, specifically.10 This study identified several factors associated with increased familiarity with, confidence in skills in, or use of EBPH, including level of education (highest degree), specific training in skills needed for EBPH, and funder requirements to use evidence-based interventions. In addition, staff in tobacco prevention programs – one of the programs under the chronic diseases umbrella - were more likely to report that funders required the use of evidence-based practices, and they were more familiar with The Community Guide than staff in HIV/AIDS or immunization programs. In contrast to findings in the current study, however, the NACCHO study found that neither age nor tenure in public health was associated with EBPH awareness, skills, or use, and that the lower levels of awareness, confidence in skills, and use of EBPH among staff in smaller LHDs disappeared after controlling for education and training in EBPH-related activities.

There was a notable difference in governance structure as a predictor of the performance of A-EBPs for LHD directors compared to program managers. For LHD directors, working in a state-governed LHD was a greater predictor of performance, while working in locally-governed LHDs was a greater predictor for program managers. One can only speculate that for program managers, local autonomy provides an organizational climate more conducive to program-level leadership, while state-governed LHDs may have higher requirements and expectations for LHD directors than locally-governed LHDs. Historically, studies have come to different conclusions in examining correlates of higher overall performance, with some reporting higher performance scores for LHDs which are part of a centralized, state-governed public health system, while others have reported higher performance scores for LHDs in decentralized governance relationships.26,33-35 Determining how organization influences performance, and whether there may be distinct advantages of one governance structure over another remains a topic of intense interest within PHSSR.36-38

The differences in educational background, experience, and performance of A-EBPs between LHD directors compared to program managers, as well as across the three different types of programs, have direct relevance to training and improving competencies in EBPH. Nurses, epidemiologists, and sanitarians, for example, differ in their specific focus on evidence – individual, population, organizational; their skill sets are different; and, the context of practice – clinical, population-focused, regulatory – is different. A “one size fits all” approach to training and strengthening EBPH competencies will not work. Recognizing these differences also acknowledges that major program areas within state and local health departments have been “siloed” over many decades, primarily due to the program-specific nature of their funding.28,39 Wiesner described this as one of four “diseases in disarray”: a “hardening of the categories”.40 A focus on EBPH in general, and A-EBPs in particular, may provide a pathway out of these siloes and a softening of the categories.

These findings, combined with more detailed data on performance of A-EBPs by LHD directors9, bring a special focus to nursing in public health. One of the strongest predictors of A-EBP performance is size of the LHD jurisdictional population, with LHD directors in larger populations (>25,000) up to seven times more likely to be high-performing than those in jurisdictions < 25,000, and a nursing degree is the most common single degree of LHD directors in those smaller jurisdictions. Whether these differences reflect different capacities of LHDs simply on the basis of size, whether there is a different focus and skill set among LHD directors who are nurses, or whether small LHDs have a special history and affinity for having nurses as directors is not clear. Overall, 21.7% of program leaders and 18.8% of LHD directors had a nursing degree as their highest degree, with a notable presence of nurses among ID program managers as described earlier. The importance of nursing as a major entry point for future public health professionals has been recognized by the Institute of Medicine in its reports on Who will keep the public healthy?41, and The Future of Nursing: Leading Change, Advancing Health42, with several recommendations on education and leadership development, e.g., the placement of nursing students in public health practice settings and the development of leadership programs in public health nursing. This renewed emphasis on nursing and public health, given the differences noted above for small LHDs, lends itself well to practice-based research which can be actionable.

While differences in leadership practices and performance by both directors and program managers have been well described in the general literature on leadership43, there is very little published information specific to public health. In a study focused on knowledge and use of America's Health Rankings, Erwin et al noted differences in responses to key informant interviews involving the top state health official compared to program leaders, reporting that “Although the majority of [state health officials] are aware of [America's Health Rankings], there appears to be less penetration and much less understanding of the methodology in the rankings at the programmatic level.”44(p.411) The use of America's Health Rankings differed as well, with state health officials using the rankings as more of a policy lever and communications tool, while program directors used the rankings as a source of data for comparing with adjoining/similar states. In a current project on setting budgets and priorities, Leider et al report important differences when comparing practices among state health department directors, deputy directors, and program managers in environmental health, emergency preparedness, and maternal and child health.45 The agency director was much more likely to report frequent use of decision or prioritization tools for resource allocation in fiscal year 2011 compared to program managers, and there were distinct differences in such practices across the programs studied. The present study adds to these studies regarding the importance of considering whom to target for survey response, particularly for studies that focus on performance, as perspectives may differ according to who responds.

There are notable limitations to this study. First, all data are self-reported, and there were no attempts to verify the accuracy of responses. Second, the responses may have been biased towards larger LHDs, as the larger the agency the more likely it is to have program managers for all three programs studied. Third, the response rates for LHD directors (54%) and program managers overall (66.8%) were modest, which may introduce additional bias towards directors and managers serving larger LHDs.

In conclusion, performance of A-EBPs varies between LHD directors and program managers, as well as across different public health program areas. Understanding the differences in educational background, experience, and organizational culture for program managers is a necessary step to improving competencies in EBPH. A common path to improving such competencies may be one means to reduce the silo-reinforcing nature of public health funding. This has important implications for quality improvement-related initiatives such as national voluntary public health accreditation, with standards focused on workforce development and evidence-based public health, but especially for the standards focused on administration and management. The identification of A-EBPs provides a stronger evidence-based platform for revising standards and measures for administrative practices, and this current study provides real-world evidence of how different capacities in achieving A-EBPs exist across different types of programs and levels of leadership. This can be useful not only to those involved in administering accreditation, but also for public health agencies which are preparing for accreditation.

Acknowledgments

This study was supported in part by Robert Wood Johnson Foundation's grant no. 69964 (Public Health Services and Systems Research) and by Cooperative Agreement Number U48/DP001903 from the Centers for Disease Control and Prevention (the Prevention Research Centers Program). We also thank members of our research team: Beth Dodson, Rodrigo Reis, Peg Allen, Kathleen Duggan, Robert Fields, and Katherine Stamatakis, and Drs. Glen Mays and Douglas Scutchfield of the National Coordinating Center for Public Health Services and Systems Research, University of Kentucky College of Public Health.

References

- 1. CDC. Ten great public health achievements--United States, 1900-1999. MMWR Morb Mortal Wkly Rep. 1999 Apr 2;48(12):241–243.

- 2. Fielding JE. Public health in the twentieth century: Advances and challenges. Annual Review of Public Health. 1999;20:xiii–xxx. doi: 10.1146/annurev.publhealth.20.1.0.

- 3. Public Health Accreditation Board. Guide to National Accreditation. 2011. http://www.phaboard.org/wp-content/uploads/PHAB-Guide-to-National-Public-Health-Department-Accreditation-Version-1_0.pdf. [09/30, 2011].

- 4. U.S. Congress. Congressional Record. Vol. 156. Vol. 23. Government Printing Office; Mar, 2010. Patient Protection and Affordable Care Act (Law 111–148). p. 2010.

- 5. Willard R, Shah GH, Leep C, Ku L. Impact of the 2008-2010 economic recession on local health departments. J Public Health Manag Pract. 2012 Mar-Apr;18(2):106–114. doi: 10.1097/PHH.0b013e3182461cf2.

- 6. Kohatsu ND, Robinson JG, Torner JC. Evidence-based public health: an evolving concept. Am J Prev Med. 2004 Dec;27(5):417–421. doi: 10.1016/j.amepre.2004.07.019.

- 7. Zaza S, Briss PA, Harris KW, editors. The Guide to Community Preventive Services: What Works to Promote Health? Oxford University Press; New York: 2005.

- 8. Brownson RC, Allen P, Duggan K, Stamatakis KA, Erwin PC. Fostering more-effective public health by identifying administrative evidence-based practices: a review of the literature. Am J Prev Med. 2012 Sep;43(3):309–319. doi: 10.1016/j.amepre.2012.06.006.

- 9. Brownson RC, Reis RS, Allen P, et al. Understanding the process of evidence-based public health: Findings from a national survey of local health department leaders. Am J Prev Med. 2013. doi: 10.1016/j.amepre.2013.08.013. In press.

- 10. Bhutta C. Local Health Departments and Evidence-based Public Health: Awareness, Engagement and Needs. National Association of County and City Health Officials; Washington, DC: 2011.

- 11.Allen P, Brownson RC, Duggan K, Stamatakis KA, Erwin PC. The Makings of an Evidence-Based Local Health Department: Identifying Administrative and Management Practices. Frontiers in Public Health Services and Systems Research. 2012;1(2):2. [Google Scholar]

- 12.Reis RS, Duggan K, Allen P, Stamatakis KA, Erwin PC, Brownson RC. Developing a Tool to Assess Administrative Evidence-Based Practices in Local Health Departments. Frontiers in Public Health Services and Systems Research. 2013 In Press. [Google Scholar]

- 13.Hajat A, Cilenti D, Harrison LM, MacDonald PD, Pavletic D, Mays GP, Baker EL. What predicts local public health agency performance improvement? A pilot study in North Carolina. J Public Health Manag Pract. 2009 Mar-Apr;15(2):E22–33. doi: 10.1097/01.PHH.0000346022.14426.84. [DOI] [PubMed] [Google Scholar]

- 14.Handler A, Issel M, Turnock B. A conceptual framework to measure performance of the public health system. Am J Public Health. 2001 Aug;91(8):1235–1239. doi: 10.2105/ajph.91.8.1235. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 15.Mays GP, Smith SA, Ingram RC, Racster LJ, Lamberth CD, Lovely ES. Public health delivery systems: evidence, uncertainty, and emerging research needs. Am J Prev Med. 2009 Mar;36(3):256–265. doi: 10.1016/j.amepre.2008.11.008. [DOI] [PubMed] [Google Scholar]

- 16.Tilburt JC. Evidence-based medicine beyond the bedside: keeping an eye on context. J Eval Clin Pract. 2008 Oct;14(5):721–725. doi: 10.1111/j.1365-2753.2008.00948.x. [DOI] [PubMed] [Google Scholar]

- 17.Jacobs JA, Dodson EA, Baker EA, Deshpande AD, Brownson RC. Barriers to evidence-based decision making in public health: a national survey of chronic disease practitioners. Public Health Rep. 2010 Sep-Oct;125(5):736–742. doi: 10.1177/003335491012500516. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 18.Brownson RC, Ballew P, Brown KL, et al. The effect of disseminating evidence-based interventions that promote physical activity to health departments. Am J Public Health. 2007 Oct;97(10):1900–1907. doi: 10.2105/AJPH.2006.090399. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 19.Brownson RC, Ballew P, Dieffenderfer B, et al. Evidence-based interventions to promote physical activity: what contributes to dissemination by state health departments. Am J Prev Med. 2007 Jul;33(1 Suppl):S66–73. doi: 10.1016/j.amepre.2007.03.011. [DOI] [PubMed] [Google Scholar]

- 20.Brownson RC, Ballew P, Kittur ND, et al. Developing competencies for training practitioners in evidence-based cancer control. J Cancer Educ. 2009;24(3):186–193. doi: 10.1080/08858190902876395. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 21.Dreisinger M, Leet TL, Baker EA, Gillespie KN, Haas B, Brownson RC. Improving the public health workforce: evaluation of a training course to enhance evidence-based decision making. J Public Health Manag Pract. 2008 Mar-Apr;14(2):138–143. doi: 10.1097/01.PHH.0000311891.73078.50. [DOI] [PubMed] [Google Scholar]

- 22.Jacobs JA, Clayton PF, Dove C, et al. A survey tool for measuring evidence-based decision making capacity in public health agencies. BMC Health Serv Res. 2012;12:57. doi: 10.1186/1472-6963-12-57. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 23.Cronbach LJ. Coefficient alpha and the internal structure of tests. Psychometrika. 1951;16(3):297–334. [Google Scholar]

- 24.Qualtics [December 24, 2011];Qualtrics: Survey Research Suite. 2013 http://www.qualtrics.com/.

- 25.National Association of County and City Health Officials . Core and Modules [Data file] NACCHO; Washington, DC: 2010. National Profile of Local Health Departments Survey. [Google Scholar]

- 26.Erwin PC. The performance of local health departments: a review of the literature. J Public Health Manag Pract. 2008 Mar-Apr;14(2):E9–18. doi: 10.1097/01.PHH.0000311903.34067.89. [DOI] [PubMed] [Google Scholar]

- 27.Roper WL, Mays GP. Performance measurement in public health: conceptual and methodological issues in building the science base. J Public Health Manag Pract. 2000 Sep;6(5):66–77. doi: 10.1097/00124784-200006050-00010. [DOI] [PubMed] [Google Scholar]

- 28.Scutchfield FD, Keck CW. Principles of Public Health Practice. Thomson, Delmar Learning; New York, NY: 2003. [Google Scholar]

- 29.Brownson RC, Bright FS. Chronic disease control in public health practice: looking back and moving forward. Public Health Rep. 2004 May-Jun;119(3):230–238. doi: 10.1016/j.phr.2004.04.001. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 30.Meissner HI, Bergner L, Marconi KM. Developing cancer control capacity in state and local public health agencies. Public Health Rep. 1992 Jan-Feb;107(1):15–23. [PMC free article] [PubMed] [Google Scholar]

- 31.National Association of County and City Health Officials . National Profile of Local Health Departments. National Association of County and City Health Officials; Washington, DC: 2010. 2011. [Google Scholar]

- 32.Association of State and Territorial Health Officials [06/18, 2013];Profile of State Public Health. 2010 http://www.astho.org/profiles/.

- 33.Richards TB, Rogers JJ, Christensen GM, Miller CA, et al. Evaluating local public health performance at a community level on a statewide basis. J Public Health Manag and Pract. 1995;1(4):70–83. [PubMed] [Google Scholar]

- 34.Suen J, Magruder C. National profile: overview of capabilities and core functions of local public health jurisdictions in 47 states, the District of Columbia, and 3 U.S. territories, 2000-2002. J Public Health Manag Pract. 2004 Jan-Feb;10(1):2–12. doi: 10.1097/00124784-200401000-00002. [DOI] [PubMed] [Google Scholar]

- 35.Mays GP, Halverson PK, Baker EL, Stevens R, Vann JJ. Availability and perceived effectiveness of public health activities in the nation's most populous communities. Am J Public Health. 2004 Jun;94(6):1019–1026. doi: 10.2105/ajph.94.6.1019. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 36.Meit M, Sellers K, Kronstadt J, et al. Governance typology: a consensus classification of state-local health department relationships. J Public Health Manag Pract. 2012 Nov;18(6):520–528. doi: 10.1097/PHH.0b013e31825ce90b. [DOI] [PubMed] [Google Scholar]

- 37.Consortium from Altarum I, Centers for Disease C. Prevention, Robert Wood Johnson F, National Coordinating Center for Public Health S, Systems R. A national research agenda for public health services and systems. Am J Prev Med. 2012 May;42(5 Suppl 1):S72–78. [Google Scholar]

- 38.Hyde JK, Shortell SM. The structure and organization of local and state public health agencies in the US: a systematic review. Am J Prev Med. 2012;42(5):S29–S41. doi: 10.1016/j.amepre.2012.01.021. [DOI] [PubMed] [Google Scholar]

- 39.Turnock BJ. Public Health: What it is and How it Works. Fourth ed. Jones and Bartlett Publishers; Sudbury, MA: 2009. [Google Scholar]

- 40.Wiesner PJ. Four diseases of disarray in public health. Annals of Epidemiology. 1993;3(2):196–198. doi: 10.1016/1047-2797(93)90137-s. [DOI] [PubMed] [Google Scholar]

- 41.Gebbie KM, Rosenstock L, Hernandez LM. Who will keep the public healthy? : educating public health professionals for the 21st century. National Academies Press; Washington, D.C.: 2002. [PubMed] [Google Scholar]

- 42.The Future of Nursing: Leading Change, Advancing Health. The National Academies Press; 2011. [PubMed] [Google Scholar]

- 43.Yukl GA. Leadership in Organizations. Pearson Education, Inc.; Upper Saddle River, NJ: 2006. [Google Scholar]

- 44.Erwin PC, Myers CR, Myers GM, Daugherty LM. State responses to America's Health Rankings: the search for meaning, utility, and value. J Public Health Manag Pract. 2011 Sep-Oct;17(5):406–412. doi: 10.1097/PHH.0b013e318211b49f. [DOI] [PubMed] [Google Scholar]

- 45.Leider JP, Sellers K, Resnick B. The Setting Budgets and Priorities project.. AcademyHealth Research Meeting; Baltimore, MD.. June 25, 2013.2013. [Google Scholar]