Abstract

There is growing appreciation that process improvement holds promise for improving quality and efficiency across the translational research continuum, but frameworks for such programs are not often described. The purpose of this paper is to present a framework and case examples of a Research Process Improvement Program implemented at Tufts Clinical and Translational Science Institute (CTSI). To promote research process improvement, we developed online training seminars, workshops, and in‐person consultation models to describe core process improvement principles and methods, demonstrate the use of improvement tools, and illustrate the application of these methods in case examples. We implemented these methods, as well as relational coordination theory, with junior researchers, pilot funding awardees, our Clinical and Translational Research Center (CTRC), and CTSI resource and service providers. The program focuses on capacity building to address common process problems and quality gaps that threaten the efficient, timely, and successful completion of clinical and translational studies.

Keywords: process improvement, quality improvement, recruitment and retention, clinical research

Introduction

In recent decades, healthcare systems have increasingly adopted process improvement methods from business, industry, and engineering to achieve greater operational efficiency in care processes, improve patient safety and clinical outcomes, reduce costs, and improve patients' experience of care.1 Prior to this, despite clinical advances and vast expenditures, medical care had been primarily run as a “cottage industry” by highly educated and well‐motivated health professionals with no background in process improvement.

The implementation of continuous process improvement has required cultural, logistical, and educational changes that have been challenging for all involved. However, this transformation has been an enormous step forward for healthcare. Key aspects in the last decade have included the codification and use of performance and outcome measures, the application of systems engineering and process improvement approaches across healthcare settings, the adoption of multidisciplinary and multi‐institutional collaborative improvement teams,2 and the publishing of these efforts in major journals.3, 4, 5

The practice of medicine and delivery of healthcare have long been the foundation of biomedical‐ and health‐related research, but to date, continuous process improvement has not been a major focus of research activities. There is growing appreciation that process improvement holds promise for improving quality and efficiency across the translational research continuum, as evidenced in the most recent Funding Opportunity Announcement (FOA) by the National Center for Advancing Translational Sciences (NCATS).6 This paper describes approaches used by Tufts Clinical and Translational Science Institute (CTSI) to develop and implement a conceptual framework for process improvement approaches to research and provides case examples.

Why Apply Improvement Methods to Research Processes?

There is an increasing call for the US research enterprise to have better efficiency, transparency, and impact on health. Congress and the American public are more closely scrutinizing the outcomes of federally funded research for returns on their investments. Research funders and investigators are looking for ways to improve efficiency as they adjust to a shrinking (in real dollars) US research budget.7 The complexity of research is compounded by high costs and delays before research is translated into impact on patients and the public. For instance, in pharmaceutical studies, it costs an estimated average of about 2.6 billion dollars to bring a new drug to market.8 Given these high costs, even brief delays in the development path of a clinical trial for a potentially successful drug incur substantial financial and human costs.

Recognizing the need for a more effective and efficient research enterprise, several innovations are being implemented in federally funded research. The National Institutes of Health (NIH) initiated the Clinical and Translational Science Award (CTSA) program in 2006 with the explicit goal to address translational gaps, accelerate the uptake of research into policy and practice,9 and to improve research processes, such as participant recruitment into clinical trials. The CTSA program has also supported rigorous, systematic research methodological training through its T and K mechanisms that seek to augment the traditional apprenticeship‐based research training. The most recent CTSA FOA affirms this goal and explicitly calls on CTSAs to “be agents of continuous improvement as they identify gaps and opportunities in the research process and develop innovative solutions.”6

Efforts are underway to promote high quality and efficient clinical trials, yet the systematic application of evidence‐informed improvement methods to translational research has been limited, at least in the published literature. Good Clinical Practice (GCP) principles for conducting clinical trials10 have been adopted within the United States and internationally, and the Clinical Trials Transformation Initiative (CTTI)11 promotes use of the principles of Quality by Design12 to identify and promote practices that will increase the quality and efficiency of clinical trials. The Metrics Champion Consortium, an industry association, has developed standardized performance metrics and supports metric benchmarking for clinical trial improvement.13 Pharmaceutical research and development teams, clinical laboratories, Institutional Review Boards (IRBs), and research management programs have all discussed applications of improvement methods to drug discovery, IRB approval, and other processes.14, 15, 16 Yet such methods are rarely part of public discussions of research, research training program curricula, recommended components within grant applications, or research papers. Although the clinical leadership and management of academic medical centers are adopting process improvement methods and tools, these approaches have been largely untapped by research teams.

Tufts CTSI's Program to Improve Research Processes

To promote the use of process improvement methods in research, Tufts CTSI developed a series of online training seminars, workshops, and in‐person consultation models. They describe core process improvement principles and methods, demonstrate the use of common improvement tools, and illustrate the application of these methods in case examples.

Initial training sessions in 2013–2014 focused on a process improvement framework for clinical trials. Trials involve complex systems of processes that require ongoing monitoring and improvement to accomplish their aims. Efforts such as CTTI's Quality by Design define critical factors that underpin a trial's success that can be built into the design of a trial to minimize risks to quality.12 While building quality control measures into the design of a trial is important, researchers must often address barriers that were not necessarily anticipated but that develop while the trial is underway. This process improvement framework provides researchers with a toolbox of methods to understand and address those unanticipated barriers, manage risks, and respond to unexpected events.

Process Improvement and QI Principles, Methods, and Tools

The application of process improvement methods to research focuses on the systematic use of tested methods and tools to proactively set up a high quality process or, more commonly, as in the early experience of Tufts CTSI, to address areas of ongoing work that need improvement. While improvement models such as Lean and Six Sigma are often used in healthcare, business, and manufacturing, Tufts CTSI chose to employ Gerald J. Langley's Model for Improvement17 to be consistent with partner healthcare and medical education organizations. Having a shared theoretical model and language among practicing clinicians and researchers was seen as important to increase collaboration, reduce the need to learn new frameworks, and reinforce the use of improvement methods in both clinical and research activities.

The Model for Improvement is a published framework for developing, testing, and implementing changes to improve a process, and it is readily adapted to research (Table 1). In brief, an individual or research team: (1) identifies a research process problem, formulates an aim to ameliorate it, and analyzes the current structure, processes, and outcomes to identify quality gaps; (2) identifies process or outcome measures to determine when the goal is met and measures to detect inadvertent negative consequences of proposed changes; (3) develops change strategies to improve processes and outcomes; (4) implements rapid, incremental changes in practice and tests each change by tracking both anticipated and unanticipated effects; and (5) adapts, adopts, or abandons a change, depending on the results.

Table 1.

Model for Improvement and tools associated with each stage

| Tufts CTSI research process improvement steps | Corollary in Model for Improvement | Tools and descriptions |
|---|---|---|
| Establish aim: prioritize a problem, ready the team, educate the team, and develop a shared understanding of the problem | Answer: “What are we trying to accomplish?” | Project charter: used to describe the target problem, establish project scope and goals, and delineate team members' roles and responsibilities; Cause and effect or “Fishbone” diagram: graphically displays possible causes of a problem or opportunity for improvement; Process map: illustrates existing process and future process and helps to identify needed process changes |
| Identify process, outcome, and balancing measures | Answer: “How will we know that a change is an improvement?” | Measurement forms: describe the numerator and denominator of each measure (not used in case examples) |
| Identify change strategies based on prework conducted above, standard change concepts, or the literature | Answer: “What changes can we make that will result in an improvement?” | Driver diagram: displays an improvement project in a single diagram, includes change strategies and provides a measurement framework for monitoring progress |
| Conduct Plan‐Do‐Study‐Act cycles; study results; and adopt, abandon, or adapt change strategies | Conduct Plan‐Do‐Study‐Act cycles; study results; and adopt, abandon, or adapt change strategies | Run chart: displays improvement measure results over time |

See the Institute for Healthcare Improvement (IHI) Website for additional information about tools and change concepts at: http://www.ihi.org/resources/Pages/Tools/default.aspx

In adapting the Model for Improvement, we first highlighted the importance of prework: prioritizing a target area for improvement, “readying” a team to address the area, and educating the team to develop a shared understanding of the scope and underlying inefficiencies or quality issues in the target area. Next, we highlighted the importance of clear and specific measures of improvement. These quantitative measures help the team to determine whether a specific implemented change leads to improvement in process or outcome measures, or inadvertently creates a related but different problem.

To illustrate the application of these methods to research, a set of key processes was selected that serve as intermediate outcomes for overall clinical trial success (Table 2).18 We then discussed the identification of change strategies that draw on insights garnered from prework, the application of standard “change concepts,”19 or the work of others that has successfully improved a similar process. These changes are implemented by small Plan‐Do‐Study‐Act (PDSA) improvement cycles that consist of planning for a change, experimenting on a small‐scale (doing), observing the results (study), and acting on what is learned by abandoning, adopting, or adapting the change strategy. These PDSA cycles are repeated until the desired aim is achieved.

Table 2.

Sample clinical research outcome and process improvement measures

| Category | Measures |
|---|---|
| Overall study functions | Time to IRB approval, protocol amendment cycle; Time to hiring or training research staff |
| Participants (patient or clinician) | Achieving recruitment goal; Increasing monthly enrollment rate; Increasing the number of minority participants; Increasing proportion of participants from particular types of treatment settings; Increasing participant retention rate; Increasing participant adherence to medications or other treatment procedures; Increasing participant and clinician satisfaction with participation in the trial |
| Protocol or services | Increasing access to timely treatment appointments; Improving timeliness of collection of samples (blood, etc.); Reducing deviations in the treatment protocol |
| Data collection and analysis | Decreasing rate of data collection or processing related errors; Improving collection of primary outcome data |
| Cost | Adhering to trial budget; Decreasing per participant study cost; Decreasing supply cost variation from budget |

Although a wide array of tools can be drawn from Lean, Six Sigma, and the Model for Improvement, we focused on five basic tools for understanding, addressing, and monitoring delay, inefficiency, and error in research processes: team charters, flowcharts, cause and effect diagrams, driver diagrams, and run charts (Table 1). For larger improvement projects involving a research team, we stressed the use of a team charter to clarify roles and responsibilities of research team members and to document ground rules for communication and research processes. Cause and effect diagrams were demonstrated as a tool to systematically develop a shared understanding of the area in need of improvement prior to brainstorming change strategies. Flow charts served a similar function. Three versions of a flow chart often were created: what the team believes the process entails, what the process actually entails based on the experience of those involved in the process or observation, and a proposed, improved process. Driver diagrams illustrated the use of a tool to clarify the identified aim(s), the identified “drivers” for change, and linked change strategies. Run charts were presented as a means of tracking metrics and changes implemented to see their effect on research processes.

Case Studies of the Application of Process Improvement and QI Methods to Research Processes

Composite case studies were developed for teaching purposes, based on our experience addressing investigator consultation requests to Tufts CTSI and working with research study coordinators affiliated with Tufts CTSI or conducting clinical trials. Two illustrative case studies used during the trainings are provided below. The first demonstrates the use of a charter, cause and effect diagram, and driver diagram in addressing delays in patient recruitment for a clinical trial; the second employs a flowchart and run chart to improve processes in a study being conducted within Tufts CTSI's clinical trial unit.

Case study #1: recruitment to a clinical trial

The failure to recruit adequate numbers of patients into clinical trials is a frequent threat to success. This leads to underpowered and inconclusive studies. This compromises the ethical basis of human participant involvement—if there is no feasible likelihood of achieving an answer, there is no justification for including humans in studies. Improvement methods can be used to identify barriers to recruitment and to identify and implement effective strategies.

A research team received a grant to test a 14‐week behavior management program with parents of preschoolers in six urban and suburban practices. While the recruitment plan was agreed upon by the medical directors at each practice location prior to the grant's submission, recruitment rates were low across the sites, particularly in the urban health centers. The principal investigator sought out consultation from Tufts CTSI. The Tufts CTSI Research Process Improvement Program, the researcher, and her staff worked with clinical and administrative staff at each site to understand the recruitment process and barriers to success. Preliminary feedback from the sites suggested unique factors were affecting recruitment at each site; the decision was made to form site‐specific small improvement teams composed of a clinician, administrative staff person, and research team member.

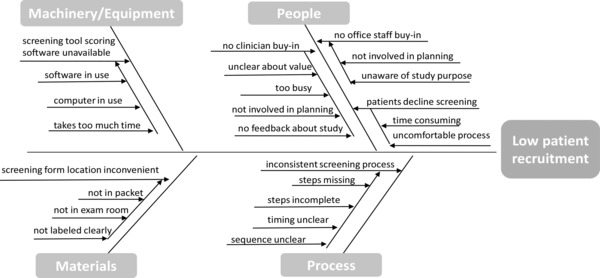

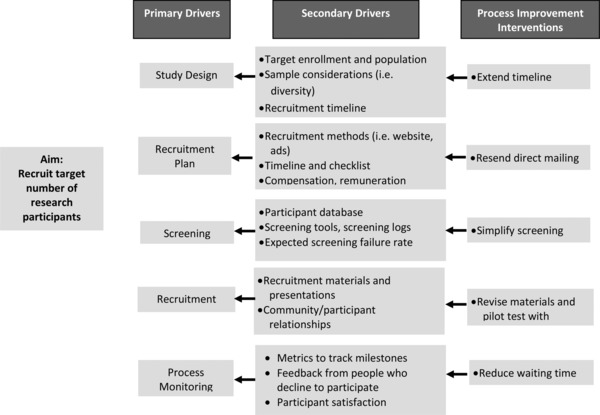

Each site's improvement team created a team charter that included a basic description of the project, its aim, a numeric recruitment goal, and timeframe to achieve the goal (Table 3). The charter kept the scope of the project manageable and assured that the team's membership included the perspectives of critical stakeholders. Each team's goal was to increase the rate of parent recruitment to five families per week by the beginning of the following month. A performance measure was created to ensure that team members had a common understanding of when a change led to an improvement. Teams developed cause and effect diagrams to categorize the possible causes for low recruitment rates across categories (e.g., people, processes, materials, and machinery/equipment; Figure 1). One team identified a cumbersome electronic screening process as impairing recruitment and opted for a simpler paper‐based system. Other sites problem‐solved on the types of recruitment materials that would appeal to their patient populations; one established a parent advisory board to review recruitment materials, and others worked with the research team to pilot test changes with families. Tailored change strategies were developed at each site based on their cause and effect diagrams and driver diagrams (Figure 2). Improvement team work plans were created to identify responsible research and practice staff that would carry out planned PDSA cycles. Staff regularly received feedback and discussed what was, and was not, working. The study team was able to improve their recruitment rates and complete their deliverables for the study on time.

Table 3.

Team charter

| Charter element | Description |
|---|---|
| Project title | Improving research participant recruitment |
| Team members | Clinician, site administrative staff, parent, research staff member |
| Defining our aim(s): What are we trying to accomplish? | Improve the rate of participant recruitment to five preschool parents per week in each of the four clinics (20 participants per week) by December 2015. |
| Describe our measure(s): How do we know that a change is an improvement? | The number of preschool parents whose completed recruitment form is obtained by the clinic staff and faxed to the study coordinator each week for each clinic/total number of preschool parents eligible for the study during that week. |
| Describe our change strategies: What changes can we make that will lead to improvement? | Each change will include a PDSA cycle. |

Figure 1.

Cause and effect diagram: low recruitment rate.

Figure 2.

Driver diagram: improving recruitment rate.

This case illustrates the importance of capturing different perspectives about the underlying causes of a problem prior to implementing a specific improvement strategy. While quick solutions are appealing, a systematic process of identifying a wide range of possible causes, based on different perspectives, may yield more effective, tailored solutions.
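The recruitment measure in the team charter (Table 3) is a simple ratio: completed recruitment forms received for a clinic in a week, divided by the number of eligible parents at that clinic in that week. The following is a minimal sketch of how a team might compute it, not the study team's actual tooling. The record layout, function names, and example counts are hypothetical; only the numerator, the denominator, and the goal of five parents per clinic per week come from the charter.

```python
from dataclasses import dataclass
from typing import Optional

# Aim from the team charter (Table 3): five preschool parents per clinic per week.
WEEKLY_GOAL_PER_CLINIC = 5


@dataclass
class WeeklyClinicCount:
    """One clinic's tally for one week (hypothetical record layout)."""
    clinic: str
    forms_received: int    # numerator: completed recruitment forms received
    eligible_parents: int  # denominator: parents eligible during that week


def recruitment_proportion(count: WeeklyClinicCount) -> Optional[float]:
    """Return forms received / eligible parents, or None if nobody was eligible."""
    if count.eligible_parents == 0:
        return None
    return count.forms_received / count.eligible_parents


def meets_weekly_goal(count: WeeklyClinicCount,
                      goal: int = WEEKLY_GOAL_PER_CLINIC) -> bool:
    """True when the clinic recruited at least `goal` parents in the week."""
    return count.forms_received >= goal


if __name__ == "__main__":
    # Illustrative numbers only; they are not data from the case study.
    week = [
        WeeklyClinicCount("Clinic A", forms_received=3, eligible_parents=12),
        WeeklyClinicCount("Clinic B", forms_received=6, eligible_parents=10),
    ]
    for c in week:
        print(c.clinic, recruitment_proportion(c), meets_weekly_goal(c))
```

Keeping the proportion and the goal check separate reflects the charter's wording: the aim is stated as a count per week, whereas the measure is stated as a ratio, so a team reviewing PDSA results may want to see both.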

Case study #2: laboratory tests in the Clinical and Translational Research Center (CTRC)

Clinical trial centers manage large numbers of trial protocols with different needs that require working with different stakeholders, including research teams, nursing, pharmacy, imaging, laboratories, and others. Efficiency of integrating all necessary services for an individual trial is an important intermediate measure of success for clinical trial centers and has implications for the quality of the research, staffing, the fiscal stability, participant retention, and the satisfaction of investigative teams and pharmaceutical companies.

A research team working with Tufts CTSI's CTRC was evaluating the efficacy of an experimental drug for patients with hepatitis C and needed to ensure patients had an adequate platelet count immediately before drug administration. Delays in obtaining the blood sample, sending it to the laboratory, and receiving the laboratory results were causing unnecessary holdups in administering the drug. The CTRC needed to reduce turnaround time to assure efficient processes and improve customer (investigator and participant) satisfaction with its services.

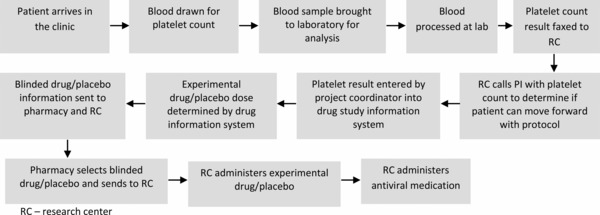

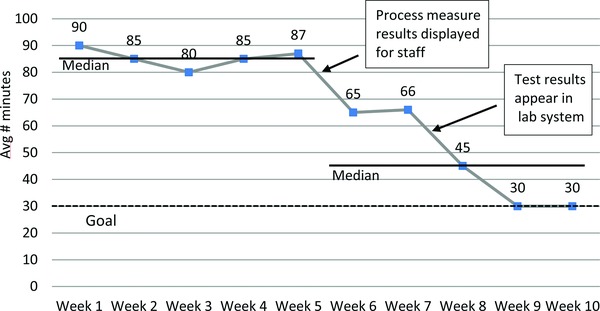

To address the situation, the CTRC employed several tools. First, they formed an improvement team including the research study coordinator, research nurse, principal investigator, research pharmacist, laboratory manager, patient scheduler, and a patient representative. Next, they developed a project charter and agreed on a specific and measurable aim: reducing the turnaround time for platelet count test results by 50% by the end of one month. A measurement plan for the turnaround time allowed the team to define exactly how it would be measured: the average minutes from blood‐draw to when platelet count results were received by the research nurse, calculated on a weekly basis. They also agreed on an operational definition of the measure and how exactly the data was to be collected and the average computed. A flow diagram was used to map out each step in the current process based on the experience and observation of team members (Figure 3). This allowed the team to see which steps might be slowing down the process, and which steps they could alter or eliminate. The team identified that faxed test results were sometimes lost or misplaced resulting in delays in providing the experimental drug. The flow chart was revised to map out a proposed new process. The team implemented and modified a process for electronic reporting of test results over several PDSA cycles. Over the next several weeks, staff attention focused on process inefficiencies and the run chart showed a decline in the turnaround time measure (Figure 4).

Figure 3.

Process flow diagram and process measures: laboratory test result reporting.

Figure 4.

Run chart: average number of minutes from blood draw to platelet count result received.

This case illustrates that objectively reviewing the actual steps in a process, as well as process delays and inefficiencies, can help to identify where changes will be most effective. Gaining a shared understanding of the problem, a shared vision for an improved process flow, and a common improvement goal, allows team members to appreciate the impact of their own performance. While this case is illustrative of a laboratory challenge in a clinical trial, it also should be applicable to bench research processes.
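The turnaround measure in this case, the weekly average number of minutes from blood draw to receipt of the platelet count result, and the aim of a 50% reduction can be sketched in a few lines. This is an illustrative sketch under stated assumptions rather than the CTRC's actual measurement plan: the function names and the timestamp-pair input format are hypothetical, and grouping by ISO calendar week is one possible operational definition of "weekly."

```python
from collections import defaultdict
from datetime import datetime
from statistics import mean


def weekly_average_minutes(samples):
    """Average draw-to-result minutes per ISO week.

    `samples` is an iterable of (drawn_at, result_received_at) datetime pairs
    (a hypothetical input format). Returns {(iso_year, iso_week): average}.
    """
    by_week = defaultdict(list)
    for drawn_at, received_at in samples:
        minutes = (received_at - drawn_at).total_seconds() / 60
        iso = drawn_at.isocalendar()  # (ISO year, ISO week number, weekday)
        by_week[(iso[0], iso[1])].append(minutes)
    return {week: mean(values) for week, values in sorted(by_week.items())}


def aim_met(baseline_avg, current_avg, reduction=0.5):
    """True when the current average is at least `reduction` below baseline."""
    return current_avg <= baseline_avg * (1 - reduction)


if __name__ == "__main__":
    # Illustrative timestamps only; they are not data from the case study.
    samples = [
        (datetime(2015, 1, 5, 9, 0), datetime(2015, 1, 5, 11, 0)),   # 120 min
        (datetime(2015, 1, 6, 9, 0), datetime(2015, 1, 6, 10, 0)),   # 60 min
        (datetime(2015, 1, 12, 9, 0), datetime(2015, 1, 12, 9, 40)), # 40 min
    ]
    averages = weekly_average_minutes(samples)
    weeks = list(averages)
    print(averages)
    print(aim_met(averages[weeks[0]], averages[weeks[-1]]))
```

The weekly averages returned by `weekly_average_minutes` are the points a run chart such as Figure 4 would plot over time.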

Discussion

In use in healthcare in recent decades, improvement methods and tools have not been widely used in research, and yet they show substantial potential for improving research processes.20 Our experience in developing and implementing a research process improvement program illustrates how researchers can address challenging research processes using tools and methods previously tested in business and industry.

Our case studies and experience in providing consultation to researchers reinforce the importance of a team with varied perspectives in gaining shared knowledge and understanding of the variety of underlying causes of common research process problems. We have found that process flow charts help identify inefficient and ineffective research activities such as delays in lab test turnaround time that slow testing of experimental drugs. We also have found that cause and effect diagrams can help reveal underlying causes of problems and help teams view them from different perspectives. In doing this work, team charters can help assure the necessary stakeholders are part of the team, establish effective boundaries of the joint work, and delineate roles, responsibilities, and ground rules for communication. Identifying specific and well‐defined desired process and outcome measures, and also measures looking for potentially adverse consequences of changes, allow research teams to clearly recognize whether changes to the process are leading to improvement or having unintended adverse effects. Additionally, driver diagrams can provide a shared vision of the aim and the potential drivers and change strategies to test. Consistent, repeated, and adaptable improvement cycles lead to incremental change and reduce the risk of investing time and energy in failed improvement activities. This can be seen in run charts of measures of a team's efforts and improvement over time.

Our composite case studies and online seminars show potential for training researchers to use established systematic methods to address quality gaps that threaten the successful completion of a study. We currently are applying these approaches to a broader array of research studies, ranging from bench research to stakeholder‐engaged research funded through the Patient Centered Outcomes Research Institute (PCORI). These efforts incorporate the use of additional process improvement tools where applicable, including root cause analysis to “dive deep” into high‐risk errors, “spaghetti diagrams” that geographically track movement over time to identify inefficiencies, and statistical process control analyses to decrease variation in research processes. We also are examining the use of charters proactively in team‐based science, where interdisciplinary research teams may have had limited experience working together and different cultural and linguistic research backgrounds, to clarify expectations and develop ground rules prior to beginning a study (e.g., data ownership, publication). These techniques may prove effective in making translational research processes more efficient and timely, as well as improve interdisciplinary relationships and coordination, and how research results are translated to clinical practice.

The Tufts CTSI Research Process Improvement Program is growing its capacity to support the use of improvement methods by researchers and by Tufts CTSI's resource and service providers. Based on the results of a needs assessment, we are targeting junior faculty with NIH K Career Development awards who often have little training in effective research processes. We also are focusing on recipients of Tufts CTSI pilot awards, to ensure optimal use of these awards and opportunities. We continue to work with the management and staff of our CTRC and other researchers across the Tufts CTSI community. Through the needs assessment, we gained further understanding of the aspects of research processes that pose the most frequent and complex problems, cause delays, or threaten the studies' success. Results suggest that researchers face challenges not only in discrete steps in the research process, such as participant recruitment, but also in the overall management of the study. We are using the results of the needs assessment to develop research improvement teams, incorporate approaches like “value‐stream mapping,”21 provide process improvement consulting services, promote the process improvement resources already available on our ILearn website, and develop further trainings.

While we have based our early efforts on the Model for Improvement, we recognize that some of the research challenges for which we have provided consultation require close attention to improving team processes. To that end, we are applying relational coordination theory22 to improve team relationships and, by extension, the quality and efficiency of service delivery to our research community and partners. This model conceptualizes the coordination of work as taking place through a network of relationships among participants in work processes and has demonstrated success in improving processes in the airline industry.23 The theory specifies three attributes of relationships that support the highest levels of coordination and performance: shared goals that transcend participants' specific functional goals, shared knowledge that enables participants to see how their specific tasks interrelate with the whole process, and mutual respect that enables participants to overcome the barriers that might prevent them from seeing and taking account of the work of others. We have assessed relational coordination through a survey of Tufts CTSI workgroups and used the results to develop cross‐cutting coalitions to transform relationships and build shared goals, shared knowledge, and mutual respect across organizational boundaries. New approaches and new organizational structures developed through this project will be disseminated to partner organizations using tools, education, consultation, and publications.

Our next steps include: (1) developing additional case studies that address other delays in clinical trials and using these examples in trainings; (2) using a similar approach to build the capacity of researchers across the translational spectrum to use process improvement methods as they encounter real world challenges; and (3) further working to enhance research collaboration and relational coordination across teams. We believe this team focus is particularly important as there is a growing appreciation of the need for multidisciplinary team science and the management of a diverse array of stakeholders (e.g., patients, family members, clinicians, advocacy organizations, public agencies). Process improvement approaches that incorporate attention to effective team relationships will be essential as research teams diversify and grow in numbers and complexity.

Conclusion

Just as the application of process improvement methods and tools has advanced quality and efficiency in health care systems, use of these methods in research is needed to promote high quality and efficient clinical trials. This paper describes approaches used by Tufts CTSI to develop and implement a conceptual framework for process improvement in research, and research team relationships, and provides case examples. We have focused on capacity building to address common process problems and quality gaps that threaten the efficient, timely, and successful completion of a study. To date, publications about research process improvement are still sparse, and yet there is a growing recognition of the importance of these efforts. The experiences of academic researchers, pharmaceutical industry, and multisite collaborative research efforts can be a powerful resource to generate and disseminate evidence‐informed best practices needed for reengineering clinical and translational science.

Acknowledgments

This work was supported by the Tufts Clinical and Translational Science Institute, funded through the National Center for Research Resources Award Number UL1RR025752 and the National Center for Advancing Translational Sciences, National Institutes of Health, Award Numbers UL1TR000073 and UL1TR001064. We thank the researchers who worked with us to develop and test specific case examples and Supriya Shah, Lorraine Limpahan, and Alexandra Caro for editorial assistance.

References

- 1. Grol R, Wensing M, Eccles M. Improving patient care: the implementation of change in clinical practice. Philadelphia: Elsevier; 2005, pp. 6–13. [Google Scholar]

- 2. Billet AL, Colletti RB, Mandel KE, Miller M, Meuthing SE, Sharek PJ, Lannon CM. Exemplar pediatric collaborative improvement networks: achieving results. Pediatrics. 2013; 131(Suppl 4): S196–S203. [DOI] [PubMed] [Google Scholar]

- 3. Margolis P, Provost LP, Shoettker PJ, Britto MT. Quality improvement, clinical research, and quality improvement research‐opportunities for integration. Pediatr Clin North Am. 2009; 56(4): 831–841. [DOI] [PubMed] [Google Scholar]

- 4. Groves PS, Speroff T, Miles PV, Splaine MR, Dougherty D, Mittman BS. Discussion on advancing the methods for quality improvement research. Implement Sci. 2013; 8(Suppl 1): S10.

- 5. Ogrinc G, Mooney SE, Estrada C, Foster T, Goldmann D, Hall LW, Huizinga MM, Liu SK, Mills P, Neily J, et al. The SQUIRE (Standards for QUality Improvement Reporting Excellence) guidelines for quality improvement reporting: explanation and elaboration. Qual Saf Health Care. 2008; 17(Suppl 1): i13–i32.

- 6. National Institutes of Health, National Center for Advancing Translational Sciences. Clinical and Translational Science award funding opportunity. Available at: http://grants.nih.gov/grants/guide/rfa‐files/RFA‐TR‐14‐009.html. Accessed March 3, 2015.

- 7. One nation in support of biomedical research? National Institutes of Health. Available at: http://nexus.od.nih.gov/all/2013/09/24/one‐nation‐in‐support‐of‐biomedical‐research/. Accessed November 6, 2014.

- 8. Cost to develop and win marketing approval for a new drug is $2.6 billion. Tufts Center for the Study of Drug Development. Available at: http://csdd.tufts.edu/news/complete_story/pr_tufts_csdd_2014_cost_study. Accessed May 12, 2015.

- 9. Zerhouni EA. Clinical research at a crossroads: the NIH roadmap. J Investig Med. 2006; 54(4): 171–173.

- 10. Quality guidelines. International Conference on Harmonisation of Technical Requirements for Registration of Pharmaceuticals for Human Use (ICH). Available at: http://www.ich.org/products/guidelines/quality/article/quality‐guidelines.html. Accessed November 7, 2014.

- 11. Official recommendations. Clinical Trials Transformation Initiative (CTTI). Available at: http://www.ctti‐clinicaltrials.org/briefing‐room/official‐recommendations. Accessed November 7, 2014.

- 12. Workshops on quality by design (QbD) and quality risk management (QRM) in clinical trials. Clinical Trials Transformation Initiative (CTTI). Available at: http://www.ctti‐clinicaltrials.org/what‐we‐do/investigational‐plan/qbd‐qrm. Accessed November 7, 2014.

- 13. About us. Metrics Champion Consortium (MCC). Available at: http://metricschampion.org/who‐we‐are/about‐us/. Accessed October 7, 2014.

- 14. Andersson S, Armstrong A, Björe A, Bowker S, Chapman S, Davies R, Donald C, Egner B, Elebring T, Holmqvist S, et al. Making medicinal chemistry more effective–application of Lean Sigma to improve processes, speed and quality. Drug Discov Today. 2009; 14(11–12): 598–604.

- 15. Johnson T, Joyner M, DePourcq F, Drezner M, Hutchinson R, Newton K, Uscinski KT, Toussant K. Using research metrics to improve timelines: Proceedings from the 2nd Annual CTSA Clinical Research Management Workshop. Clin Transl Sci. 2010; 3(6): 305–308.

- 16. Strasser JE, Cola PA, Rosenblum D. Evaluating various areas of process improvement in an effort to improve clinical research: discussions from the 2012 Clinical Translational Science Award (CTSA) Clinical Research Management Workshop. Clin Transl Sci. 2013; 6(4): 317–320.

- 17. Langley GL, Nolan KM, Nolan TW, Norman CL, Provost LP. The improvement guide: a practical approach to enhancing organizational performance. San Francisco: Jossey‐Bass; 2009, pp. 23–25.

- 18. Warden D, Rush AJ, Trivedi M, Ritz L, Stegman D, Wisniewski SR. Quality improvement methods as applied to a multicenter effectiveness trial—STAR*D. Contemp Clin Trials. 2005; 26(1): 95–112.

- 19. Using change concepts for improvement. Institute for Healthcare Improvement (IHI). Available at: http://www.ihi.org/resources/Pages/Changes/UsingChangeConceptsforImprovement.aspx. Accessed March 18, 2015.

- 20. Schweikhart SA, Dembe AE. The applicability of Lean and Six Sigma techniques to clinical and translational research. J Investig Med. 2009; 57(7): 748–755.

- 21. Scoville R, Little K. Comparing Lean and Quality Improvement. IHI White Paper. Cambridge MA: Institute for Healthcare Improvement; 2014. Available at: www.ihi.org. Accessed May 14, 2015.

- 22. Gittell JH, Fairfield KM, Bierbaum B, Head W, Jackson R, Kelly M, Laskin R, Lipson S, Siliski J, Thornhill T, Zuckerman J. Impact of relational coordination on quality of care, postoperative pain and functioning, and length of stay: a nine‐hospital study of surgical patients. Med Care. 2000; 38(8): 807–819.

- 23. Gittell JH. Relational Coordination: Guidelines for Theory, Measurement and Analysis. Relational Coordination Research Collaborative. 2011. Available at: http://rcrc.brandeis.edu/downloads/Relational_Coordination_Guidelines_8‐25‐11.pdf. Accessed May 14, 2015.