Abstract

Background

In congenital heart surgery, hospital performance has historically been assessed using widely available administrative datasets. Recent studies have demonstrated inaccuracies in case ascertainment (coding and inclusion of eligible cases) in administrative vs. clinical registry data; however, it is unclear whether this impacts assessment of performance at the hospital level.

Methods

Merged data from the Society of Thoracic Surgeons (STS) Database (clinical registry), and Pediatric Health Information Systems Database (administrative dataset) on 46,056 children undergoing heart surgery (2006–2010) were utilized to evaluate in-hospital mortality for 33 hospitals based on their administrative vs. registry data. Standard methods to identify/classify cases were used: Risk Adjustment in Congenital Heart Surgery (RACHS-1) in the administrative data, and STS–European Association for Cardiothoracic Surgery (STAT) methodology in the registry.

Results

Median hospital surgical volume based on the registry data was 269 cases/yr; mortality was 2.9%. Hospital volumes and mortality rates based on the administrative data were on average 10.7% and 4.7% lower, respectively, although this varied widely across hospitals. Hospital rankings for mortality based on the administrative vs. registry data differed by ≥ 5 rank-positions for 24% of hospitals, with a change in mortality tertile classification (high, middle, or low mortality) for 18%, and a change in statistical outlier classification for 12%. Higher volume/complexity hospitals were most impacted. Agency for Healthcare Research and Quality methods in the administrative data yielded similar results.

Conclusions

Inaccuracies in case ascertainment in administrative vs. clinical registry data can lead to important differences in assessment of hospital mortality rates for congenital heart surgery.

Keywords: congenital heart disease, outcomes

Introduction

Accurate assessment of hospital performance across medical and surgical disciplines has become increasingly important due to several recent initiatives including public reporting, “pay-for-performance”, designation of centers of excellence, and quality improvement programs (1–4). The success of all of these initiatives in improving patient outcomes is dependent on accurate assessments of performance and the ability to distinguish truly high performing centers. In congenital heart surgery, hospital performance has historically been assessed using a variety of widely available administrative datasets containing information collected for hospital billing purposes. Both the National Quality Forum (NQF) and Agency for Healthcare Research and Quality (AHRQ) currently support the use of administrative data to assess congenital heart surgery volume and mortality rates (5,6).

More recently, several clinical registries have emerged in the field, which contain data collected by clinicians and trained data managers using a comprehensive coding and classification system (7). Prior studies have demonstrated inaccuracies in case ascertainment in administrative datasets (related both to miscoding of cases and exclusion of eligible cases) in comparison to clinical registry data, which can lead to differences in outcomes assessment for certain groups of patients (8–11). For example, in an evaluation of >2000 patients undergoing the Norwood operation, the specificity of the administrative vs. registry data in identifying Norwood patients was 99.1%, while the sensitivity was only 68.5%, leading to an 11% relative difference in reported mortality rates between the two data sources for these cohorts (11). However, the extent to which these and other recent findings regarding case ascertainment on a patient level impact evaluation of hospital-level performance has not been investigated to date.

The purpose of this study is to evaluate the influence of differences in case ascertainment between administrative and clinical registry data on assessment of hospital-level congenital heart surgery mortality rates across a large multi-center cohort.

Patients and Methods

Data Source

A merged dataset containing information coded both within the Society of Thoracic Surgeons Congenital Heart Surgery Database (STS-CHSD - a clinical registry), and the Pediatric Health Information Systems Database (PHIS - an administrative dataset) on children undergoing heart surgery at 33 US children’s hospitals was utilized for this study.

STS-CHSD

As described previously, the STS-CHSD collects peri-operative data on all children undergoing heart surgery at >100 North American centers. Diagnoses and procedures are coded by clinicians and trained data managers using the International Pediatric and Congenital Cardiac Code (IPCCC), a comprehensive system specific to congenital heart disease (12). Data from the most recent STS-CHSD audit demonstrated a 100% rate of completion and 99% rate of agreement (vs. medical record review) for primary operative procedure (the main variable of interest in the present study) (13).

In the STS-CHSD, the primary case ascertainment methodology used to determine cases eligible for inclusion in hospital-level outcomes analyses is the Society of Thoracic Surgeons–European Association for Cardiothoracic Surgery (STAT) system, in which cases are identified and included based on IPCCC procedure codes (14). Included cases may subsequently be grouped into categories of increasing mortality risk. The STAT system has been shown previously to include nearly all cardiac operations performed across centers, although some rare/infrequently performed operations may not be included (14).

PHIS Database

The Children’s Hospital Association PHIS Database is a large administrative database representing >40 US children’s hospitals. Diagnoses and procedures for all children hospitalized at these institutions are coded by billing personnel using International Classification of Diseases, 9th revision (ICD-9) codes. Systematic monitoring in the PHIS Database includes coding consensus meetings, consistency reviews, and quarterly data quality reports. Audits of congenital heart surgery data coded in the database vs. medical record review are not routinely performed in this or other administrative datasets.

In administrative datasets such as the PHIS Database, the case ascertainment methodology most commonly used to identify cases eligible for inclusion in hospital-level outcomes analyses is the Risk Adjustment in Congenital Heart Surgery, version 1 (RACHS-1) system (15). This method uses combinations of ICD-9 diagnosis and procedure codes to identify and include eligible cases. Included cases may subsequently be grouped into categories according to mortality risk. As the RACHS-1 system is known to include ~85% of a program’s overall cardiac surgical volume, methodology has also been developed by AHRQ for use across a variety of administrative datasets, which includes additional operations not classified by RACHS-1 (16,17). For the purposes of this analysis, we used the AHRQ methodology related to reporting of mortality outcomes for our analysis of both hospital volumes and mortality (17).

This analysis was not considered human subjects research by the Duke University and University of Michigan Institutional Review Boards in accordance with the Common Rule (45 CFR 46.102(f)).

Study Population

As previously described and verified, data from the STS-CHSD and PHIS Databases were merged at the patient level for children 0–18 years undergoing heart surgery (with or without cardiopulmonary bypass) at 33 hospitals participating in both databases from 2006–2010, using the method of matching on indirect identifiers (18–20). The merged dataset contains information for each patient as entered into both the clinical registry and the administrative dataset, and ensures that any differences identified cannot be explained by the datasets containing different patients.

From the merged dataset (n=48,058), patients with missing (n=29 STS-CHSD, n=1556 PHIS) or discrepant (n=61) in-hospital mortality status, or discharge date (n=356) between databases were excluded. Importantly, these exclusions were applied to eliminate the possibility that any differences in outcome identified might be related to differences in coding of outcomes themselves, rather than differences in coding/classification of cases.
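The exclusion counts above can be tallied to confirm that they account for the final cohort size reported in the Results (46,056 patients). The short Python sketch below does this; it assumes the four exclusion groups are mutually exclusive, which the text does not state explicitly, and the dictionary labels are illustrative.

```python
# Counts reported in the text for the merged STS-CHSD/PHIS dataset.
merged_total = 48_058
exclusions = {
    "missing mortality status (STS-CHSD)": 29,
    "missing mortality status (PHIS)": 1_556,
    "discrepant mortality status": 61,
    "discharge date": 356,
}

# Subtract each exclusion in turn (assumes no patient is counted twice).
remaining = merged_total
for reason, n_excluded in exclusions.items():
    remaining -= n_excluded
    print(f"after excluding {reason}: {remaining:,}")

final_cohort = remaining
```

Under that assumption, the running total ends at the cohort size given in the Results.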

Data Collection

Operative data (as described above), patient demographics and pre-operative characteristics, and hospital characteristics were collected (21). The primary outcome was in-hospital mortality as this is currently the most commonly used metric to evaluate hospital-level performance in congenital heart surgery.

Analysis

Hospital-level analyses were undertaken to evaluate surgical volumes and in-hospital mortality rates based on the administrative vs. clinical registry data from each hospital. In the primary analysis, hospital volumes and mortality rates were calculated and evaluated based on the cases coded and included within the standard case ascertainment system used within the clinical registry (STAT system) and administrative data (RACHS-1 method), as described above. The AHRQ method in the administrative data was also evaluated. Based on previous studies, the clinical registry data were considered the “gold standard” (8–11,13).

For all analyses, differences in hospital volume and mortality rates based on the information from the administrative vs. clinical registry data were described using standard summary statistics, and hospital rankings for mortality within the study cohort were evaluated (as described in more detail below). Unadjusted hospital mortality rates and 95% confidence intervals (CI) were calculated. Adjustments for operative case mix and patient characteristics were not made as the data from each hospital were compared against themselves (i.e., as captured in the administrative vs. registry data).
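As an illustration of the unadjusted calculation, the sketch below computes a hospital's mortality rate and a 95% CI from counts of deaths and cases. The text does not specify which interval method was used; the Wilson score interval here is an assumption, chosen because it behaves reasonably at low event rates, and the function name and example counts are hypothetical.

```python
import math


def mortality_with_ci(deaths: int, cases: int, z: float = 1.96):
    """Return (rate, ci_low, ci_high) for an unadjusted mortality rate.

    Uses the Wilson score interval (an assumption; the source does not
    name its CI method). z = 1.96 corresponds to a two-sided 95% CI.
    """
    if cases <= 0 or not 0 <= deaths <= cases:
        raise ValueError("need cases > 0 and 0 <= deaths <= cases")
    rate = deaths / cases
    denom = 1 + z**2 / cases
    center = (rate + z**2 / (2 * cases)) / denom
    half_width = (z / denom) * math.sqrt(
        rate * (1 - rate) / cases + z**2 / (4 * cases**2)
    )
    return rate, max(0.0, center - half_width), min(1.0, center + half_width)


# Hypothetical hospital: 30 deaths among 1,000 included cases.
rate, ci_low, ci_high = mortality_with_ci(30, 1000)
print(f"mortality {rate:.1%} (95% CI {ci_low:.1%} to {ci_high:.1%})")
```

Running the same function on a hospital's counts from each data source gives the two sets of rates and intervals that are compared in the analyses.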

In the evaluation of hospital rankings for mortality within the cohort, we utilized several previously described methods (22–24). First, changes in rank position were assessed based on whether the hospital’s administrative vs. clinical registry data were used (22,23). However, since small changes in rank may not be meaningful from a clinical or policy perspective, additional methods were used to evaluate larger changes. Hospitals were divided into equal sized groups (tertiles for the purposes of this analysis) based on their ranking for mortality (high, middle, low), and the proportion of hospitals changing mortality tertiles within the cohort when the administrative vs. clinical registry data were used was evaluated (22,23). Finally, each hospital’s point estimate for mortality and 95% CI was compared with the aggregate mortality rate in the overall cohort. Hospitals with a 95% CI that did not overlap the aggregate mortality rate were classified as high and low performing statistical outliers, and the proportion of hospitals classified in the different performance groups (high and low outliers, and those performing as expected) when the administrative vs. clinical registry data were used was evaluated (22,24). All analyses were performed using SAS version 9.3 (SAS Institute, Cary, NC) and R version 3.0.1 (R Foundation for Statistical Computing, Vienna, Austria).
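The three comparisons described above (change in rank position, change in tertile, and change in statistical outlier classification) can be sketched as follows. This is a minimal illustration, not the study code: the hospital labels and rates are hypothetical, rank 1 is assumed to denote the lowest mortality, ties are broken by label because the text does not describe tie handling, and the CI bounds passed to `outlier_class` are assumed to have been computed beforehand.

```python
def rank_by_mortality(rates):
    """Rank hospitals from lowest (rank 1) to highest mortality rate."""
    ordered = sorted(rates, key=lambda h: (rates[h], h))
    return {h: i + 1 for i, h in enumerate(ordered)}


def tertile(rank, n_hospitals):
    """Map a rank to tertile 1 (low), 2 (middle), or 3 (high mortality)."""
    return (rank - 1) * 3 // n_hospitals + 1


def outlier_class(ci_low, ci_high, aggregate_rate):
    """Classify a hospital by whether its 95% CI overlaps the aggregate rate."""
    if ci_high < aggregate_rate:
        return "low-mortality outlier"
    if ci_low > aggregate_rate:
        return "high-mortality outlier"
    return "as expected"


# Hypothetical mortality rates for six hospitals under each data source.
registry_rates = {"A": 0.010, "B": 0.020, "C": 0.030,
                  "D": 0.040, "E": 0.050, "F": 0.090}
admin_rates = {"A": 0.010, "B": 0.032, "C": 0.022,
               "D": 0.039, "E": 0.047, "F": 0.067}

registry_rank = rank_by_mortality(registry_rates)
admin_rank = rank_by_mortality(admin_rates)
n = len(registry_rates)

# Absolute change in rank position for each hospital.
rank_shift = {h: abs(admin_rank[h] - registry_rank[h]) for h in registry_rates}

# Hospitals whose tertile differs between the two data sources.
tertile_changed = [
    h for h in registry_rates
    if tertile(admin_rank[h], n) != tertile(registry_rank[h], n)
]

print("rank shift:", rank_shift)
print("hospitals changing tertile:", tertile_changed)
print(outlier_class(0.005, 0.018, aggregate_rate=0.040))
```

With 33 hospitals, `tertile` assigns 11 hospitals to each group, matching the equal-sized groups described in the text.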

Results

Study population

The cohort included 46,056 patients from 33 hospitals. Patient and hospital characteristics are displayed in Table 1. Compared with the overall cohort of hospitals participating in the national STS-CHSD during the study period (n=114), the 33 hospitals included in the present analysis had a higher average annual volume of pediatric cardiac cases (391 vs. 204 cases/year).

Table 1.

Study population characteristics

| Characteristic | N = 46,056 (33 hospitals) |
|---|---|
| Patient characteristics | |
| Age at surgery | 6.4 months (33 days – 3.5 years) |
| Sex, male | 25,533 (55%) |
| Weight at surgery (kg) | 6.4 (3.6 – 14.3) |
| Proportion of operations included within various case ascertainment methodologies*: | |
| Clinical registry data | |
| STAT | 42,324 (96.8%) |
| Administrative data | |
| AHRQ | 39,763 (90.9%) |
| RACHS-1 | 37,419 (85.5%) |
| Hospital characteristics | |
| Geographic location | |
| South | 11 (33.3%) |
| Midwest | 11 (33.3%) |
| West | 7 (21.2%) |
| Northeast | 4 (12.2%) |

Data are displayed as number and percent or median and interquartile range as appropriate.

*Based on n=43,744 cardiovascular operations with and without bypass, excluding patent ductus arteriosus ligation in infants < 2.5 kg at surgery.

Case ascertainment

The proportion of operations in the overall cohort included within the various case ascertainment systems used in administrative and clinical registry data is displayed in Table 1. The STAT system in the clinical registry included nearly all operations (96.8%). The AHRQ methodology in the administrative data included 90.9% of operations, and the RACHS-1 system included the fewest operations (85.5%).
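The percentages above follow directly from the counts in Table 1 and its footnote denominator (n=43,744 operations). A brief Python check, using only those reported numbers (the variable names are illustrative):

```python
# Denominator from the Table 1 footnote; numerators from Table 1.
total_operations = 43_744
included = {"STAT": 42_324, "AHRQ": 39_763, "RACHS-1": 37_419}

# Percent of operations captured by each case ascertainment method.
coverage_pct = {
    method: round(100 * n / total_operations, 1)
    for method, n in included.items()
}

for method, pct in coverage_pct.items():
    print(f"{method}: {pct}% of operations included")
```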

Hospital-level volume and mortality

Clinical registry STAT cases vs. administrative data RACHS-1 cases

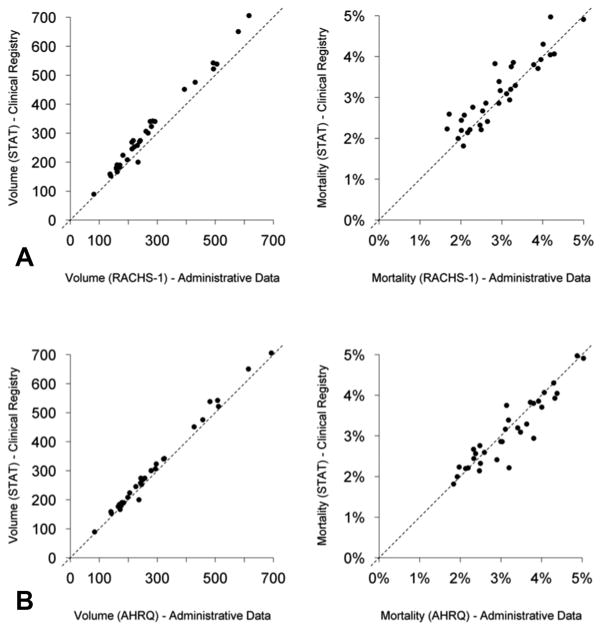

Annual hospital volumes in the clinical registry based on STAT-classified cases ranged from 89–705 cases/year across centers included in the cohort (median=269). Values for hospital volume in the administrative data based on RACHS-1 cases were on average 10.7% lower (Figure 1). Hospital-level mortality rates in the clinical registry data based on STAT cases ranged from 1.8%–5.0% across centers (median 2.9%). Hospital-level values for mortality in the administrative data based on RACHS-1 cases were on average 4.7% lower (although this was variable across hospitals ranging from −33.7% to +13.9%) (Figure 1). Overall, mortality for those operations not classified by RACHS-1 (but classified by STAT) was higher than for those operations that were classified by RACHS-1 (4.2% vs. 2.8%).
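The "on average X% lower" figures above are presumably relative differences, computed per hospital with the registry value as the reference and then averaged across hospitals; the text does not spell out the formula, so the sketch below is an assumption. The hospital labels and volumes are hypothetical.

```python
from statistics import mean


def relative_difference_pct(admin_value, registry_value):
    """Percent difference of the administrative value relative to the registry value."""
    if registry_value == 0:
        raise ValueError("registry value must be non-zero")
    return 100 * (admin_value - registry_value) / registry_value


# Hypothetical annual volumes (cases/year): hospital -> (registry, administrative).
volumes = {"A": (200, 180), "B": (400, 340), "C": (100, 95)}

per_hospital = {
    h: relative_difference_pct(admin, registry)
    for h, (registry, admin) in volumes.items()
}
average_difference = mean(per_hospital.values())

print(per_hospital)
print(f"average difference: {average_difference:.1f}%")
```

A negative value means the administrative data report a lower volume (or mortality rate) than the registry for that hospital; the same function applies to mortality rates.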

Figure 1.

Hospital volume and mortality rates in the administrative vs. clinical registry data.

A: STAT cases (clinical registry) vs. RACHS-1 cases (administrative data).

B: STAT cases (clinical registry) vs. AHRQ cases (administrative data).

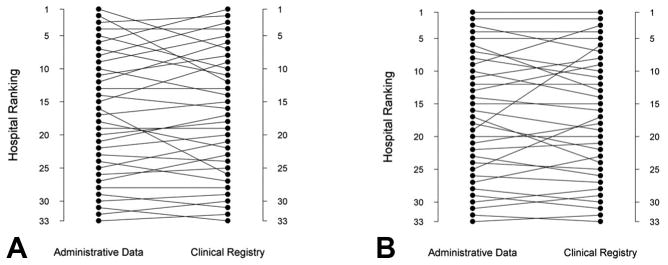

When hospital rankings for mortality were evaluated based on a center’s administrative data (RACHS-1 cases) vs. clinical registry data (STAT cases), rank was unchanged for 4 hospitals (12%) (Table 2). There was a change in ≥ 5 rank positions for 24% of hospitals. For example, one hospital changed from a rank of 2 using the administrative data to 12 using the clinical registry data, and another changed from a rank of 16 to 26 (Figure 2). There was a change in mortality tertile (classification as a high, middle or low mortality hospital within the cohort) for 18%, and a change in statistical outlier classification for 12% (Table 2). Hospitals changing rank by ≥ 5 positions were larger volume hospitals compared with those that did not change ranking (median volume of STAT classified cases 245 vs. 182 cases/year), and had a higher proportion of high complexity cases (30.6% vs. 26.2% of cases in STAT category 4–5).

Table 2.

Change in hospital mortality ranking

Values are the number (%) of hospitals changing mortality rankings based on whether the administrative vs. clinical registry data are used.

| Rank | Administrative Data (RACHS-1 cases) vs. Clinical Registry Data (STAT cases) | Administrative Data (AHRQ cases) vs. Clinical Registry Data (STAT cases) |
|---|---|---|
| No change in rank | 4 (12%) | 7 (21%) |
| Change in rank: | | |
| ≥ 1 rank positions | 28 (88%) | 26 (79%) |
| ≥ 2 rank positions | 24 (72%) | 21 (64%) |
| ≥ 3 rank positions | 17 (52%) | 16 (48%) |
| ≥ 4 rank positions | 15 (45%) | 10 (30%) |
| ≥ 5 rank positions | 8 (24%) | 5 (15%) |
| Change in mortality tertile | 6 (18%) | 6 (18%) |
| Change in statistical outlier classification | 4 (12%) | 2 (6%) |

Figure 2.

Hospital mortality ranking in the administrative vs. clinical registry data.

A: Hospital ranking for mortality based on the clinical registry (STAT classified cases) vs. administrative data (RACHS-1 classified cases).

B: Hospital ranking for mortality based on the clinical registry (STAT classified cases) vs. administrative data (AHRQ cases).

Clinical registry STAT cases vs. administrative data AHRQ cases

Hospital volumes in the administrative data based on the AHRQ method were more similar to clinical registry volumes based on STAT cases (on average AHRQ hospital volumes were 4.8% lower) (Figure 1). Hospital mortality rates in the administrative data based on the AHRQ methodology vs. in the clinical registry based on STAT methodology varied from 16.1% lower to 45.0% higher across centers. Overall, mortality for those operations not classified by AHRQ (but classified by STAT) was higher than for those that were classified by AHRQ (3.7% vs. 3.0%).

When hospital rankings for mortality were evaluated based on a center’s administrative data (AHRQ cases) vs. clinical registry data (STAT cases), rank was unchanged for 7 hospitals (21%) (Table 2). There was a change in ≥ 5 positions for 15% of hospitals. For example, one hospital changed from a rank of 19 using the administrative data to a rank of 6 using the clinical registry data (Figure 2). There was a change in mortality tertile for 18%, and a change in statistical outlier classification for 6% (Table 2). Hospitals changing rank by ≥ 5 positions had similar volumes compared with those that did not change ranking (median volume of STAT classified cases 305 vs. 306 cases/year), and had a slightly higher proportion of high complexity cases (28.2% vs. 26.2% of cases in STAT category 4–5).

Comment

The relative merits of different data sources in the assessment of cardiac surgery outcomes have been debated since the 1980s, when concern among cardiac surgeons regarding outcomes reports from the Health Care Financing Administration based on administrative data prompted the formation of registries such as the Northern New England Cardiovascular Disease Study Group registry and the STS Adult Cardiac Surgery Database (25,26). These datasets were designed to foster complete case ascertainment and appropriate adjustment for patient characteristics and surgical case-mix. In the field of congenital heart surgery, clinical registries did not emerge until later in the decade, and initially included a limited number of centers (26,27). As a result, administrative data sources representing a broader sample of hospitals were more often used for outcomes assessment. There were also concerns regarding the voluntary nature of clinical registry participation and potential exclusion of higher risk cases (28). Our analysis appears to refute these concerns, in that we found the administrative data and associated methodology actually underestimated case volumes, and also excluded higher-risk cases.

While a number of previous studies have demonstrated inaccuracies in coding and classification of congenital heart disease diagnoses and procedures in administrative datasets, the present analysis is the first to evaluate how this impacts hospital-level outcomes assessment (8–11). Our results demonstrate important differences in assessment and ranking of hospital mortality rates between administrative vs. clinical registry data regardless of whether the RACHS-1 or AHRQ methodology is used. Data from the present study and previous analyses suggest that both miscoding/misclassification of individual cases and exclusion of higher risk cases in the administrative data may play a role in these differences (8–11). Although not examined previously in pediatric patients, similar findings have been demonstrated in adult cardiac patients undergoing coronary artery bypass surgery in Massachusetts (29). In the present study, higher volume hospitals and those with a higher complexity case-mix appear to be most impacted. This finding is not surprising as it appears to be the higher-risk/higher mortality cases that are excluded from the algorithms used in administrative data.

Our results have health policy implications, and suggest that current recommendations from NQF and AHRQ supporting the use of administrative data to evaluate congenital heart surgery performance may lead to inaccurate assessment of hospital volumes and mortality rates (5,6). It has been shown in other disciplines that inaccuracies in the designation of high quality hospitals can result in the failure of policies designed to improve outcomes and quality of care. For example, Dimick and colleagues demonstrated that the restriction of coverage by the Centers for Medicare and Medicaid Services for bariatric surgery to hospitals designated as centers of excellence did not result in improvements in quality, likely because the metrics underlying this designation did not accurately identify hospitals providing the highest quality care (30).

Finally, it is important to acknowledge the key role that administrative data and related case ascertainment and risk adjustment methodology have played in improving our understanding of outcomes across congenital heart centers at a time when clinical registry data were not widely available (15). In addition, the limitations with regard to case ascertainment and subsequent outcomes assessment are likely not unique to a particular administrative dataset, but rather reflect issues common to administrative data in general, such as the limitations of the ICD-9 coding system itself and coders’ limited knowledge of congenital heart disease. For these reasons, simply applying a different system (such as STAT) for classifying procedures is likely not a viable solution, as this would not address the problem that procedures themselves are coded incorrectly, or that ICD-9 codes for certain procedures (e.g., the Norwood operation) do not exist. Despite these limitations, administrative datasets continue to serve other important functions, particularly with regard to resource utilization. Our group and others have demonstrated that linkages between clinical registry and administrative data can allow for integrated, accurate evaluation of both clinical outcomes and cost (31).

Limitations

We were limited to evaluating hospitals participating in both datasets during the study period, which represent ~25% of US congenital heart surgery programs (32). Thus, our findings may not be generalizable to all centers, and in a larger cohort there may be less movement of hospitals across larger groups or categories of hospital performance. It is also unclear how the future implementation of ICD-10 will impact the findings of this study. Because the ICD-10 system remains largely unchanged with regard to specificity of congenital heart disease coding, it is anticipated that the issues raised in our analysis will persist. Moreover, it is known that in some cases ICD-10 codes will be back-coded to ICD-9 codes. For example, within the administrative dataset used in this study, this approach will likely be used for reporting of certain outcome measures.

Conclusions

This study suggests that inaccuracies in case ascertainment in administrative vs. clinical registry data can lead to important differences in assessment of hospital mortality rates for congenital heart surgery, and challenges current federal recommendations supporting the use of administrative data for this purpose. Further efforts are necessary to make registry data more readily accessible for outcomes assessment and reporting, research, and quality improvement activities in order to translate these findings into improved quality of care and outcomes for children undergoing heart surgery.

Acknowledgments

Funding source: National Heart, Lung, and Blood Institute (K08HL103631, PI: Pasquali).


References

- 1. Hospital Compare. [Accessed 1/9/2014]; Available at: www.hospitalcompare.hhs.gov.

- 2. OptumHealth Congenital Heart Disease Center of Excellence. [Accessed 1/9/2014]; Available at: https://www.myoptumhealthcomplexmedical.com/gateway/public/chd/providers.jsp.

- 3. Prager RL, Armenti FR, Bassett JS, et al. Cardiac Surgeons and the Quality Movement: the Michigan Experience. Semin Thorac Cardiovasc Surg. 2009;21:20–27. doi: 10.1053/j.semtcvs.2009.03.008.

- 4. Kugler JD, Beekman RH, Rosenthal GL, et al. Development of a pediatric cardiology quality improvement collaborative: From inception to implementation. From the Joint Council on Congenital Heart Disease Quality Improvement Task Force. Congenit Heart Dis. 2009;4:318–328. doi: 10.1111/j.1747-0803.2009.00328.x.

- 5. Agency for Healthcare Research and Quality. [Accessed 1/9/2014]; Pediatric Quality Indicators. Available at: http://www.qualityindicators.ahrq.gov/Modules/PDI_TechSpec.aspx.

- 6. National Quality Forum. [Accessed 1/9/2014]; 0339 RACHS-1 pediatric heart surgery mortality (REVISED), 0340 Pediatric heart surgery volume (REVISED). Available at: http://www.qualityforum.org/Projects/Surgery_Maintenance.aspx#t=2&s=&p=3|4|.

- 7. Jacobs ML, Jacobs JP, Franklin RCG, et al. Databases for assessing the outcomes of the treatment of patients with congenital and paediatric cardiac disease – the perspective of cardiac surgery. Cardiol Young. 2008;18:101–115. doi: 10.1017/S1047951108002813.

- 8. Strickland MJ, Riehle-Colarusso TJ, Jacobs JP, et al. The importance of nomenclature for congenital cardiac disease: implications for research and evaluation. Cardiol Young. 2008;18(Suppl 2):92–100. doi: 10.1017/S1047951108002515.

- 9. Cronk CE, Malloy ME, Pelech AN, et al. Completeness of state administrative databases for surveillance of congenital heart disease. Birth Defects Res A Clin Mol Teratol. 2003;67:597–603. doi: 10.1002/bdra.10107.

- 10. Frohnert BK, Lussky RC, Alms MA, et al. Validity of hospital discharge data for identifying infants with cardiac defects. J Perinatol. 2005;25:737–742. doi: 10.1038/sj.jp.7211382.

- 11. Pasquali SK, Peterson ED, Jacobs JP, et al. Differential case ascertainment in clinical registry vs. administrative data and impact on outcomes assessment in pediatric heart surgery. Ann Thorac Surg. 2013;95:197–203. doi: 10.1016/j.athoracsur.2012.08.074.

- 12. Franklin RC, Jacobs JP, Krogmann ON, et al. Nomenclature for congenital and paediatric cardiac disease: historical perspectives and The International Pediatric and Congenital Cardiac Code. Cardiol Young. 2008;18(Suppl 2):70–80. doi: 10.1017/S1047951108002795.

- 13. Jacobs JP, Jacobs ML, Mavroudis C, Tchervenkov CI, Pasquali SK. Executive Summary: The Society of Thoracic Surgeons Congenital Heart Surgery Database - Twentieth Harvest – (January 1, 2010 – December 21, 2013) The Society of Thoracic Surgeons (STS) and Duke Clinical Research Institute (DCRI), Duke University Medical Center; Durham, North Carolina, United States: Spring. 2014. Harvest.

- 14. O’Brien SM, Clarke DR, Jacobs JP, et al. An empirically based tool for analyzing mortality associated with congenital heart surgery. J Thorac Cardiovasc Surg. 2009;138:1139–1153. doi: 10.1016/j.jtcvs.2009.03.071.

- 15. Jenkins KJ, Gauvreau K, Newburger JW, et al. Consensus-based method for risk adjustment for surgery for congenital heart disease. J Thorac Cardiovasc Surg. 2002;123:110–118. doi: 10.1067/mtc.2002.119064.

- 16. Jacobs JP, Jacobs ML, Lacour-Gayet FG, et al. Stratification of complexity improves the utility and accuracy of outcomes analysis in a Multi-Institutional Congenital Heart Surgery Database: Application of the Risk Adjustment in Congenital Heart Surgery (RACHS-1) and Aristotle Systems in the Society of Thoracic Surgeons (STS) Congenital Heart Surgery Database. Pediatr Cardiol. 2009;30:1117–30. doi: 10.1007/s00246-009-9496-0.

- 17. Agency for Healthcare Research and Quality. [Accessed 1/9/2014]; SAS Software, Version 4.5. Available at: http://www.qualityindicators.ahrq.gov/Software/SAS.aspx.

- 18. Pasquali SK, Jacobs JP, Shook GJ, et al. Linking clinical registry data with administrative data using indirect identifiers: Implementation and validation in the congenital heart surgery population. Am Heart J. 2010;160:1099–1104. doi: 10.1016/j.ahj.2010.08.010.

- 19. Pasquali SK, Li JS, He X, et al. Perioperative methylprednisolone and outcome in neonates undergoing heart surgery. Pediatrics. 2012;129:e385–391. doi: 10.1542/peds.2011-2034.

- 20. Hammill BG, Hernandez AF, Peterson ED, et al. Linking inpatient clinical registry data to Medicare claims data using indirect identifiers. Am Heart J. 2009;157:995–1000. doi: 10.1016/j.ahj.2009.04.002.

- 21. [Accessed 1/9/2014]; STS Database Full Specifications. Available at: http://www.sts.org/sites/default/files/documents/pdf/CongenitalDataSpecificationsV3_0_20090904.pdf.

- 22. Drye EE, Normand ST, Wang Y, et al. Comparison of hospital risk-standardized mortality rates calculated by using in-hospital and 30-day models: An observational study with implications for hospital profiling. Ann Intern Med. 2012;156:19–26. doi: 10.1059/0003-4819-156-1-201201030-00004.

- 23. The Leapfrog Group. [Accessed 1/9/2014]; Calculating the Survival Predictor. Available at: http://www.leapfroggroup.org/media/file/Survival_Predictor_Calculation_Overview.pdf.

- 24. Shahian DM, He X, Jacobs JP, et al. Issues in quality measurement: Target population, risk adjustment, and ratings. Ann Thorac Surg. 2013;96:718–726. doi: 10.1016/j.athoracsur.2013.03.029.

- 25. Likosky DS. Lessons learned from the Northern New England Cardiovascular Disease Study Group. Progress in Pediatric Cardiology. 2012;33:53–56.

- 26. Mavroudis C, Gevitz M, Ring WS, McIntosh C, Schwartz M. The Society of Thoracic Surgeons National Congenital Cardiac Surgery Database. Ann Thorac Surg. 1999;68:601–624. doi: 10.1016/s0003-4975(99)00631-1.

- 27. Moller JH, Borbas C. The Pediatric Cardiac Care Consortium: a physician-managed clinical review program. QRB Qual Rev Bull. 1990;16(9):310–6. doi: 10.1016/s0097-5990(16)30386-4.

- 28. Welke KF, Karamlou T, Diggs BS. Databases for assessing the outcomes of the treatment of patients with congenital and paediatric cardiac disease – a comparison of administrative and clinical data. Cardiol Young. 2008;18:137–144. doi: 10.1017/S1047951108002837.

- 29. Shahian DM, Silverstein T, Lovett AF, et al. Comparison of clinical and administrative data sources for hospital coronary artery bypass graft surgery report cards. Circulation. 2007;115:1518–1527. doi: 10.1161/CIRCULATIONAHA.106.633008.

- 30. Dimick JB, Nicholas LH, Ryan AM, Thumma JR, Birkmeyer JD. Bariatric surgery complications before vs after implementation of a national policy restricting coverage to centers of excellence. JAMA. 2013;309:792–799. doi: 10.1001/jama.2013.755.

- 31. Pasquali SK, Jacobs ML, He X, et al. Variation in congenital heart surgery costs across hospitals. Pediatrics. 2014;133:e553–60. doi: 10.1542/peds.2013-2870.

- 32. Jacobs ML, Daniel M, Mavroudis C, et al. Report of the 2010 society of thoracic surgeons congenital heart surgery practice and manpower survey. Ann Thorac Surg. 2011;92:762–8. doi: 10.1016/j.athoracsur.2011.03.133.