Abstract

The automatic computerized detection of regions of interest (ROI) is an important step in medical image processing and analysis. The reasons are many, and include the increasing amount of available medical imaging data, the existence of inter-observer and inter-scanner variability, and the need for more accurate automatic detection to help doctors diagnose faster and in time. A novel algorithm, based on visual saliency, is developed here for identifying tumor regions in MR images of the brain. The GBM saliency detection model is designed by taking a cue from the concept of visual saliency in natural scenes. A visually salient region is typically rare in an image and contains highly discriminating information, so that attention is immediately focused upon it. Although color is typically considered the most important feature in a bottom-up saliency detection model, it is not available in the inherently gray-scale MR framework; we circumvent this issue by developing a novel pseudo-coloring scheme based on three MRI sequences, viz. FLAIR, T2 and T1C (contrast enhanced with gadolinium). A bottom-up strategy, based on a new pseudo-color distance and the spatial distance between image patches, is defined for highlighting the salient regions in the image. This multi-channel representation of the image and the saliency detection model help in automatically and quickly isolating the tumor region for subsequent delineation, as is necessary in medical diagnosis. The effectiveness of the proposed model is evaluated on MRI of 80 subjects from the BRATS database in terms of the saliency map values. Using the ground truth of the tumor regions for both high- and low-grade gliomas, the results are compared with four widely cited saliency detection models from the literature. In all cases the mean AUC scores from the ROC analysis lie between 0.996 and 0.999 over different tumor grades, sizes and positions.

Introduction

Cancer has become one of the deadliest diseases worldwide over the last decade [1]. In many cases, metastasis has already occurred by the time physical manifestations become evident, resulting in failure of local tumor control and poor patient prognosis. Quantitative imaging [2], using magnetic resonance imaging (MRI), computed tomography (CT), positron emission tomography (PET), etc., plays an important role in improving tumor management through noninvasive detection, diagnosis, treatment and prognosis. There is now a need to integrate diverse, multimodal information quantitatively, to provide specific clinical predictions that help clinicians accurately estimate patient outcomes.

Glioblastoma multiforme (GBM) is the most common of all lethal brain tumors. It typically has a poor prognosis because its diagnosis and treatment are still largely guided by immunohistochemistry and histopathology [3]. Repeated tumor biopsies, however, are difficult to perform in brain tumor patients. Noninvasive imaging techniques are therefore important tools for assessing glioma during treatment. MRI provides high spatial resolution and can detect minute abnormalities in the brain, in terms of both shape and volume. Because it provides superior contrast of soft tissue structures, it is routinely used in clinical diagnosis and disease characterization, followed by disease management. It is also safe, as it does not involve any exposure to ionizing radiation.

Detailed 3D images of the brain can be reconstructed and displayed using MRI [1]. Because the MR signal depends on biologically variable parameters such as the longitudinal relaxation time (T1), the transverse relaxation time (T2) and proton density (PD), variable image contrast can be achieved by using different pulse sequences and modifying the imaging parameters. However, none of these sequences individually is able to depict the entire extent of a malignancy. For example, Fluid-Attenuated Inversion Recovery (FLAIR) suppresses the ventricular cerebrospinal fluid (CSF) signal so that it appears dark, while contrast-enhancing agents such as gadolinium (Gd) highlight pathological intra-tumoral uptake in T1-weighted MRI scans (T1C).

Segmentation and detection of regions of interest (ROIs) in medical images are performed manually by experts for treatment planning and diagnosis. Automated medical image analysis promises to play an important role in this scenario, particularly in overcoming human bias and coping with the sheer volume of available data.

Tumors can exhibit different characteristics in different patients in spite of having originated in the same organ. Moreover, variations within a single tumor can cause marked differences among its imaging features, such as necrosis or contrast enhancement, which are primarily caused by changes in blood flow (perfusion). Upon superimposing multiple MR sequences with prominent glioma regions, it is observed that poorly perfused areas in T1C images exhibit regions of low (or high) water content on T2-weighted images, along with mismatches between perfusion and diffusion in the FLAIR sequence. Cells that are likely to be resistant to therapy lie in regions with poor perfusion and high cell density, which are of particular clinical interest [4].

This highlights the utility of superimposing multiple MR channels, such as contrast-enhanced T1-weighted, T2-weighted and FLAIR images, in identifying and extracting heterogeneous tumor region(s).

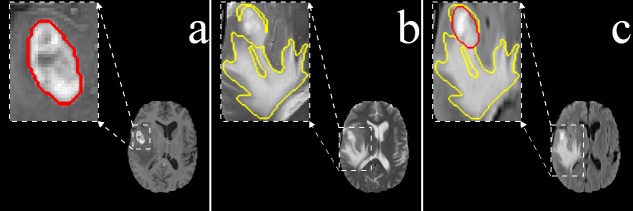

It is observed that radiologists typically delineate the gross tumor core from the T1C MR slices, because the tumor boundary becomes more visible due to the emphasized contrast between gray and white matter (Fig 1(a)). The T2 channel, which provides better contrast between brain tissue and cerebrospinal fluid (CSF), is preferred for delineating the edema region (Fig 1(b)). Although the edema boundary becomes fuzzy in FLAIR, both the tumor and edema regions are clearly visible in it (Fig 1(c)). Each pixel in the tumor is therefore characterized by its intensities in the three sequences, viz. (i) T1C, (ii) T2, and (iii) FLAIR.

Fig 1. Primary MRI sequences of the brain.

(a) T1C exhibiting enhanced tumor structure and boundary (Red), (b) T2 associated with edema or swelling (Yellow), and (c) FLAIR demonstrating both the edema (Yellow) and enhanced solid core (Red).

The subjective assessment of an image depends heavily on identifying the salient region within it. The term “visual saliency” was coined by Ullman and Sha’ashua [5], and extended by Itti et al. [6] towards a computational architecture. The human visual system is sensitive to the salient regions in an image because of their highly discriminative features, which results in early visual arousal. When we view a picture or a scene, our eyes are immediately drawn to its relevant (or salient) parts by the attention mechanism of the human visual system. Bottom-up visual attention is driven by the intrinsic low-level features of a scene, whereas top-down attention is a high-level visual task requiring the search for a specific object. Given an image as input, a computational saliency model typically generates a topographical map indicating how salient, or attention-grabbing, each region is to a viewer, in terms of human eye movements [7].

Visual saliency is defined as the perceptual quality of human vision that grabs a viewer’s attention and makes an object stand out from the rest. It can also be defined as the outcome of comparing a central region with its surroundings in terms of unpredictability, contrast and rarity [8, 9]. Saliency detection methods can be broadly classified into (i) biology-based [6, 10], (ii) fully computational [11, 12] and (iii) hybrid [13, 14] methods. Algorithms that detect saliency using only low-level features, such as color, intensity and orientation, follow the bottom-up strategy, whereas those following the top-down strategy learn the position or shape of a salient object from training data. It has been observed that attention is often drawn immediately to a salient item, in spite of the existence of many other items (or distractors), without any need to scan the image. Since a visually salient region is typically rare in an image and contains highly discriminating information, this concept can be expected to have a major bearing on the fast identification of an ROI or tumor in a medical image.

Existing literature on computational visual saliency models mainly deals with detection in natural scenes. The ultimate aim of this research, on the other hand, is to develop a saliency-based framework for fast and automated detection of the whole tumor from multi-channel brain MRI involving glioma. Abnormality detection is a key step in medical imaging, as radiologists manually search for lesions and/or other abnormalities in the affected organ in order to diagnose and write their report. In this context, Computer-Aided Detection (CADe) plays an important role in assisting doctors and radiologists in interpreting medical images and identifying any lesions. Improving CADe systems is therefore an active area of computer vision research, with particular emphasis on medical imaging.

The role of the visual attention mechanism in the context of medical images is being investigated in the literature, with the objective of modeling the visual search strategies of experts while also assisting them in improving detection. Nodine and Kundel [15] introduced perception studies for medical images. They collected eye (or gaze) tracking data from radiologists observing chest X-ray images containing tumors, to develop a model predicting the sequence of events from the first viewing of the X-ray image up to the diagnostic decision [16]. Perception studies were extended to brain CT images for detecting lesions. Jampani et al. [17] investigated the relevance of computational saliency models for abnormality detection in medical images. They applied three popular methods, extended from the natural scene framework, to obtain saliency maps for finding lesions in color retinal images and chest X-ray images, and validated the results against ground truth provided by medical experts. Automated lesion detection in retinal images based on visual saliency has also been studied [18, 19].

Over the past decade several methods have been developed to automate tumor segmentation, using neural networks, support vector machines (SVM), atlas-based methods, and outlier detection [20]. However, satisfactory results often require either a complex prior model or a large amount of training data, which restricts their range of application. Our algorithm instead offers an intuitive method that integrates multi-channel MR sequences into a pseudo-colored image in order to swiftly detect brain abnormalities without any prior training or supervision phase, following the perception pattern of radiologists through saliency detection. The most powerful aspect of our methodology is that it can be implemented in real time and is robust to changes in parameters, which makes it applicable to a wide range of MRI data.

We propose a novel saliency detection model for brain glioma mapping in MRI, taking a cue from the concept of visual saliency in natural scenes. Since attention is immediately drawn to any salient item, there is no need to scan the entire image; the image can therefore be processed in parallel to orient visual attention very quickly towards the most salient location. Visual saliency is thus employed first, to quickly identify the ROI. Our contribution lies in extending the concept of visual saliency-based object detection from natural images [8] to the medical domain, in order to automatically and simultaneously identify ROIs such as tumor(s) in images. In a bottom-up saliency detection model color is typically considered the most important feature, but this feature is not available in inherently gray-scale MR images. We therefore design a novel pseudo-coloring strategy for MRI, combining the three sequences, to generate saliency maps that provide the saliency strength at every pixel. Finally, a 3D saliency map can be generated by repeating this process over each of the 2D MR slices extracted from the three sequences.

Material and Methods

Ethics Statement

“Brain tumor image data used in this work were obtained from the MICCAI 2012 Challenge on Multimodal Brain Tumor Segmentation (http://www.imm.dtu.dk/projects/BRATS2012) organized by B. Menze, A. Jakab, S. Bauer, M. Reyes, M. Prastawa, and K. Van Leemput. The challenge database contains fully anonymized images from the following institutions: ETH Zurich, University of Bern, University of Debrecen, and University of Utah. All human subjects data was publicly available de-identified data. Therefore, no Institutional Review Board approval was required” [20, 21].

The BRATS database [20], mentioned above, contains four categories of images:

High-grade (HG) glioma cases of 20 real subjects,

Low-grade (LG) glioma cases of 10 real subjects,

High-grade (SimHG) glioma cases of 25 simulated subjects, and

Low-grade (SimLG) glioma cases of 25 simulated subjects.

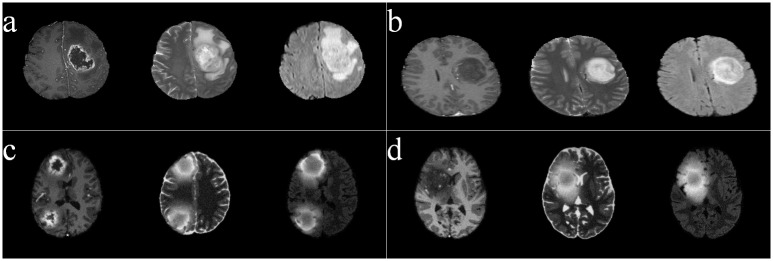

Fig 2 depicts sample images from these four types, with the three adjacent columns (in each case) corresponding to the sequences T1C, T2, and FLAIR, respectively. It is visually obvious that the T1C sequences of the HG images are hyper-intense in the active tumor region, unlike those of the LG type. All images were linearly co-registered and skull stripped.

Fig 2. Sample images, of four categories, from BRATS.

(a) HG, (b) LG, (c) SimHG, and (d) SimLG.

We developed a novel saliency detection model for the whole tumor in brain MR images of glioma, which maps the three channel sequences, viz. FLAIR, T2 and T1C, into a pseudo-color space. The concept of saliency is employed to enable the algorithm to quickly focus on the ROI, and a pseudo-coloring strategy for MR images is used to efficiently generate the saliency map.

Pseudo-coloring

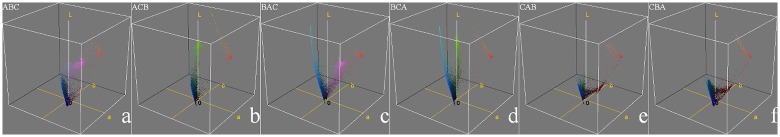

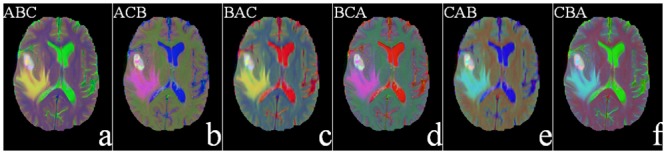

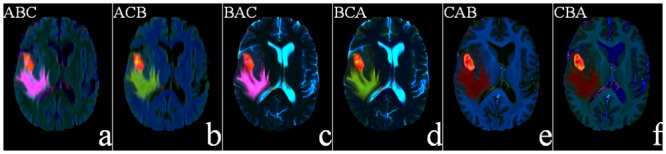

Digital color images are often constructed from three stacked color channels, viz. red, green and blue (RGB). Such an image can be decomposed into three gray-scale images and, conversely, three gray-scale images can be assigned to the R, G and B channels in 3! = 6 ways to compose a color image. For example, consider three gray-scale images A, B and C, with A assigned to Red, B to Green and C to Blue (ABC); the six combinations are then ABC, ACB, BAC, BCA, CAB and CBA.
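The six channel assignments can be enumerated mechanically. The sketch below is a minimal illustration, assuming NumPy and three co-registered gray-scale slices of equal size; the function name `channel_assignments` is hypothetical and not taken from the paper.

```python
from itertools import permutations

import numpy as np


def channel_assignments(images):
    """Return all 3! = 6 RGB stacks of three equal-sized gray-scale images.

    `images` maps a name (e.g. "FLAIR") to a 2-D array; the order of names in
    each permutation gives the (R, G, B) assignment.
    """
    stacks = {}
    for order in permutations(images):
        rgb = np.stack([images[name] for name in order], axis=-1)
        stacks["-".join(order)] = rgb
    return stacks


# Toy 2 x 2 "sequences" standing in for co-registered MR slices A, B and C.
seqs = {"A": np.zeros((2, 2)), "B": np.ones((2, 2)), "C": np.full((2, 2), 0.5)}
combos = channel_assignments(seqs)
print(sorted(combos))  # ['A-B-C', 'A-C-B', 'B-A-C', 'B-C-A', 'C-A-B', 'C-B-A']
```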

The proposed pseudo-coloring scheme assigns the three MR sequences (FLAIR, T2, T1C) to the RGB channels to generate a “color” MR image. These are false colors (or pseudo-colors) and do not correspond to the color of the imaged tissue(s). However, such pseudo-coloring yields a 24-bit MR image that can represent 2^24 distinct values, i.e., 65,536 (2^16) times as many as an 8-bit single-channel gray-scale image. A single pseudo-colored MR image can thereby display the whole tumor region as the ROI, as shown in Fig 3.

Fig 3. Six pseudo-colored brain MR sequences.

(a) FLAIR-T2-T1C, (b) FLAIR-T1C-T2, (c) T2-FLAIR-T1C, (d) T2-T1C-FLAIR, (e) T1C-FLAIR-T2, and (f) T1C-T2-FLAIR, as mapped to the R, G and B channels, respectively.

It is observed from the figure that the tumor appears white in all channel combinations, because it is equally bright in all three channels. The edema, on the other hand, looks yellow in Fig 3(a) and 3(c), because it is bright in the R and G channels, which in these two combinations hold the FLAIR and T2 sequences.

Since our saliency detection algorithm depends on the center-surround difference between a region and its neighbors, based on pixel color values, a perceptually uniform color space (in which the perceived difference between two colors is proportional to their distance in the color space) that decorrelates luminance from chrominance is desirable. RGB is therefore not well suited for delineating the tumor regions. In this context the International Commission on Illumination (CIE) recommended the CIE L* a* b* space for representing color differences, with ΔE76 as its first distance metric. ΔE76 is the Euclidean distance between two color points $V_i = (L^*_i, a^*_i, b^*_i)$ and $V_j = (L^*_j, a^*_j, b^*_j)$, formulated as $\Delta E_{76} = \lVert V_i - V_j \rVert$, with $\lVert \cdot \rVert$ denoting the L2-norm [22].
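As a small worked check of ΔE76, the following sketch (assuming NumPy; `delta_e76` is a hypothetical helper name) computes the L2 distance between two L* a* b* triplets.

```python
import numpy as np


def delta_e76(v_i, v_j):
    """CIE76 color difference: Euclidean (L2) distance between L*a*b* triplets."""
    return float(np.linalg.norm(np.asarray(v_i, float) - np.asarray(v_j, float)))


# Two colors differing by 3 in L*, 4 in a* and 0 in b*.
print(delta_e76((50.0, 10.0, 5.0), (53.0, 14.0, 5.0)))  # 5.0
```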

We next consider the transformation to the CIE L* a* b* color space as a new way of mapping the brain MR images of glioma. Converting an image from RGB into L* a* b* separates it into two layers, luminosity and chromaticity. The L* a* b* converted MR images corresponding to Fig 3 are shown in Fig 4.

Fig 4. Six pseudo-colored (L* a* b*) converted brain MR image sequences, corresponding to the six pseudo-colored (RGB) MR sequences of Fig 3.

It is visually obvious from the figure that in the first two sequences, ABC and ACB (Fig 4(a) and 4(b)), only the tumor and edema regions are highlighted, while all other regions are suppressed. The corresponding color cubes are depicted in Fig 5. From Fig 5(a) and 5(b) we find that the principal components representing the tumor (orange) and edema (violet or green) regions are quite large in both cases. This is because FLAIR reflects both the tumor and edema regions (albeit with a fuzzy boundary), and it occupies the first position in both sequences. Either of these two sequences can therefore be used; in this study we choose the sequence ABC (FLAIR-T2-T1C) for subsequent saliency detection. It may also be noted that T1C suppresses the edema region in Fig 4(e) and 4(f), while T2 also illuminates the CSF in Fig 4(c) and 4(d), as corroborated by Fig 5(c)–5(f). Hence these four sequences are not considered in our study.

Fig 5. 3-D color cubes showing the color distributions of the L* a* b* converted images of Fig 4.

The algorithm for the pseudo-coloring scheme consists of two steps.

Step 1: Create a pseudo-colored RGB image from the FLAIR, T2, T1C MR image.

Step 2: Transform from RGB color space to CIE–L* a* b* color space to enhance local contrast.
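A minimal Python sketch of the two steps follows. It rests on assumptions the text does not fix: each sequence is min-max normalized to [0, 1] before stacking, and the pseudo-RGB values are treated as sRGB with a D65 reference white when converting to L* a* b*. The helper names are hypothetical, and the authors' MATLAB implementation may differ.

```python
import numpy as np

# sRGB (D65) -> CIE XYZ matrix and D65 reference white: assumed conversion settings.
_M = np.array([[0.4124564, 0.3575761, 0.1804375],
               [0.2126729, 0.7151522, 0.0721750],
               [0.0193339, 0.1191920, 0.9503041]])
_WHITE = np.array([0.95047, 1.0, 1.08883])


def normalize(img):
    """Min-max scale one MR slice to [0, 1] (assumed preprocessing)."""
    img = np.asarray(img, dtype=float)
    lo, hi = img.min(), img.max()
    return np.zeros_like(img) if hi == lo else (img - lo) / (hi - lo)


def pseudo_color_rgb(flair, t2, t1c):
    """Step 1: FLAIR -> R, T2 -> G, T1C -> B (the 'ABC' ordering)."""
    return np.stack([normalize(flair), normalize(t2), normalize(t1c)], axis=-1)


def rgb_to_lab(rgb):
    """Step 2: treat pseudo-RGB values in [0, 1] as sRGB and convert to L*a*b*."""
    rgb = np.asarray(rgb, dtype=float)
    lin = np.where(rgb <= 0.04045, rgb / 12.92, ((rgb + 0.055) / 1.055) ** 2.4)
    xyz = lin @ _M.T / _WHITE            # per-pixel XYZ, normalized by the white point
    delta = 6.0 / 29.0
    f = np.where(xyz > delta ** 3, np.cbrt(xyz), xyz / (3 * delta ** 2) + 4.0 / 29.0)
    L = 116.0 * f[..., 1] - 16.0
    a = 500.0 * (f[..., 0] - f[..., 1])
    b = 200.0 * (f[..., 1] - f[..., 2])
    return np.stack([L, a, b], axis=-1)
```

Pixels that are equally bright in all three sequences map to neutral colors (a* ≈ b* ≈ 0), so a pixel at maximum intensity in every channel comes out near L* = 100, consistent with the tumor appearing white in Fig 3.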

This is followed by the generation of local and global contrast-based saliency map for detecting whole tumor regions, as described below.

Localization of ROI through saliency

In an image, a salient region is formed by one (or more) important piece(s) of composition that make it stand out from its surroundings. An L* a* b* image of size M × N is first resized to a square image of size w × w; since the database can contain images of different sizes, they must be converted to one uniform size (here w = 256). The image is then decomposed into non-overlapping blocks (or patches) Ri of size k × k pixels, where w is a multiple of k, and each patch is represented by its mean L* a* b* values. The number of patches, (w/k) × (w/k), equals the number of pixels in the saliency map. Let the ith patch I(Ri) of the image, 1 ≤ i ≤ (w/k) × (w/k), be represented by its mean L* a* b* color values as

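$$I(R_i) = \left(\bar{L}_i,\ \bar{a}_i,\ \bar{b}_i\right) \qquad (1)$$

where $\bar{L}_i$, $\bar{a}_i$ and $\bar{b}_i$ are the means of L*, a* and b* over the k × k pixels of Ri.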
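Eq (1) can be sketched with a reshape-and-average over a k × k grid. This sketch assumes the L* a* b* image has already been resized to w × w (the resizing method is not specified in the text) and takes the patch centre as its mean spatial coordinate, which is needed later for the spatial term; `patch_means` is a hypothetical name.

```python
import numpy as np


def patch_means(lab, k):
    """Mean L*a*b* color and mean (row, col) coordinate of each k x k patch.

    Assumes `lab` is a w x w x 3 array (already resized) with w divisible by k.
    Returns colors of shape (n, 3) and centres of shape (n, 2), n = (w/k)^2,
    with patches listed in row-major order.
    """
    w = lab.shape[0]
    assert lab.shape[:2] == (w, w) and w % k == 0, "expects square image, w multiple of k"
    g = w // k
    # Split into a g x g grid of k x k blocks and average within each block.
    colors = lab.reshape(g, k, g, k, 3).mean(axis=(1, 3)).reshape(-1, 3)
    # The mean pixel coordinate of block (r, c) is its centre: r*k + (k - 1)/2.
    idx = np.arange(g) * k + (k - 1) / 2.0
    rows, cols = np.meshgrid(idx, idx, indexing="ij")
    centres = np.stack([rows.ravel(), cols.ravel()], axis=-1)
    return colors, centres
```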

Next, the saliency of each patch is calculated with respect to all other patches in the image. Color is considered the most important feature in the bottom-up approach, and the simple color difference between regions provides an efficient way to distinguish the salient region(s) from the non-salient patches. The color difference between a pair of patches is defined as the Euclidean distance between their mean L* a* b* color values. Therefore, for patch Ri, the saliency Sc(Ri) is calculated as the sum of the color differences between $(\bar{L}_i, \bar{a}_i, \bar{b}_i)$ and $(\bar{L}_j, \bar{a}_j, \bar{b}_j)$ (Eq (1)), ∀ j ≠ i. It is expressed as

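$$S_c(R_i) = \sum_{j \neq i} \sqrt{(\bar{L}_i - \bar{L}_j)^2 + (\bar{a}_i - \bar{a}_j)^2 + (\bar{b}_i - \bar{b}_j)^2} \qquad (2)$$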
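Eq (2) translates directly into a sum of per-patch Euclidean distances. The sketch below (NumPy; the function name is hypothetical) loops over patches so that memory stays linear in the number of patches, which matters at k = 4, where w = 256 gives 4,096 patches.

```python
import numpy as np


def color_saliency(colors):
    """Eq (2): for each patch, sum the Euclidean L*a*b* distances to all patches.

    `colors` is an (n, 3) array of mean patch colors (Eq (1)); the j == i
    term is zero, so summing over every j equals summing over j != i.
    """
    colors = np.asarray(colors, dtype=float)
    return np.array([np.linalg.norm(colors - c, axis=1).sum() for c in colors])


# Three identical background patches and one distinct patch.
c = np.array([[20.0, 0, 0], [20.0, 0, 0], [20.0, 0, 0], [80.0, 0, 0]])
print(color_saliency(c).tolist())  # [60.0, 60.0, 60.0, 180.0]
```

The distinct patch receives the largest summed difference, which is exactly the behavior Eq (2) relies on to flag salient patches.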

The color differences of a patch with respect to all the other patches in the image are summed; if this sum is large, the patch is considered salient. While most salient patches are typically concentrated in spatially adjacent areas, the non-salient patches may be distributed anywhere over the image. If a region is salient, its surrounding regions are likely to be salient as well, whereas regions located farther away are less likely to be. The influence of adjacent regions should therefore be given more importance when computing the saliency of a region. Keeping this in view, we incorporate the spatial distance between patches as another important factor in computing image saliency. We thus consider (i) the difference of the L* a* b* color values between any two blocks, and (ii) the spatial distance between them. Eq (2) is now redefined as

| (3) |

Here $d(R_i, R_j) = \sqrt{(\bar{x}_i - \bar{x}_j)^2 + (\bar{y}_i - \bar{y}_j)^2}$ is the spatial distance between the patches Ri and Rj of the image, and $(\bar{x}_i, \bar{y}_i)$ refers to the mean spatial coordinates of Ri.
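The exact way Eq (3) combines the two distances is not recoverable from this text. Purely to illustrate the idea that nearby contrasting patches should count more, the sketch below assumes each color difference is divided by (1 + d(Ri, Rj)), with coordinates scaled by w, a weighting form used in some context-aware saliency models. Treat both the form and the scaling as assumptions, not the authors' formula; the function name is hypothetical.

```python
import numpy as np


def spatial_color_saliency(colors, centres, w):
    """Spatially weighted patch saliency; an illustrative stand-in for Eq (3).

    ASSUMPTION (not taken from the paper): each color difference is divided by
    (1 + d), where d is the Euclidean distance between patch centres after the
    coordinates are scaled by the image side w.
    """
    colors = np.asarray(colors, dtype=float)
    pos = np.asarray(centres, dtype=float) / w
    sal = np.empty(len(colors))
    for i in range(len(colors)):
        color_d = np.linalg.norm(colors - colors[i], axis=1)   # Delta E76 to every patch
        spatial_d = np.linalg.norm(pos - pos[i], axis=1)       # d(R_i, R_j), scaled
        sal[i] = np.sum(color_d / (1.0 + spatial_d))            # the j == i term adds 0
    return sal
```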

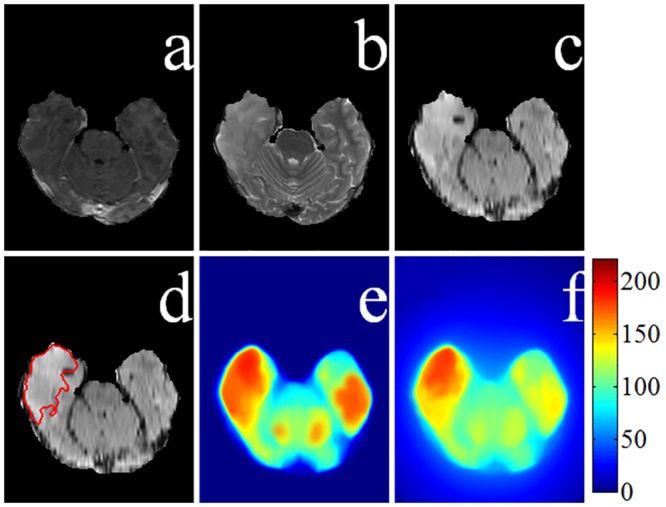

We illustrate the situation with MR sequences of a low-grade glioma in Fig 6. Fig 6(a)–6(c) depict the T1C, T2 and FLAIR sequences, respectively, while Fig 6(d) shows the ground truth of the tumor (including active tumor and edema regions) in the FLAIR mode. Fig 6(f) demonstrates a more accurate (lower false positive) detection of the salient region in the pseudo-colored space after incorporating the spatial distance component by Eq (3), as compared to Fig 6(e). Since the contrast between the tumor and normal tissue regions is not very large in low-grade glioma images, the sum of color differences of a patch with respect to all other patches often remains large at several locations, which results in the incorrect detection of multiple salient regions by Eq (2).

Fig 6. Saliency map generation for a low-grade glioma.

MRI sequences (a) T1C, (b) T2, and (c) FLAIR. (d) Ground truth superimposed on the FLAIR image. Saliency maps (e) without spatial distance, and (f) with spatial distance component.

Now consider an observer viewing a far-off scene: the focus lies on the entire salient region(s). When the same scene is viewed at closer range, the observer tends to pay more attention to the details within the salient region [6, 23]. We incorporate this property of the human visual attention mechanism into our model by evaluating saliency maps at multiple scales. By partitioning an image into smaller patches, the salient object can be highlighted clearly along with its details.

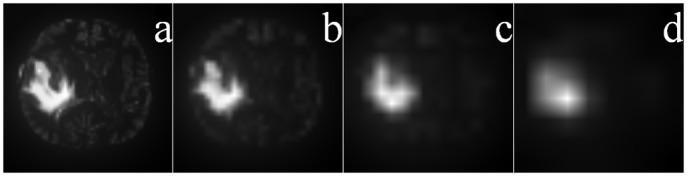

Although the saliency map for a larger patch can help in accurately locating a salient object, the resulting blurring causes most details to disappear. Saliency maps depicting the saliency strength at every pixel over different scales, with patch sizes k = 4, 8, 16 and 32 (i.e., 4 × 4, 8 × 8, 16 × 16 and 32 × 32 pixels), are provided in Fig 7 after rescaling them to their original sizes. These images correspond to the tumor and edema regions of Fig 4(a). Comparing Fig 7(a)–7(d), we observe that as the contour of the ROI becomes gradually blurred with increasing patch size k, the position of the salient region becomes clearer. The block size k thus determines the resolution of the saliency map.

Fig 7. Saliency map of pseudo-colored MR image of the whole tumor region of Fig 4(a) at different scales.

Patch sizes of (a) 4 × 4, (b) 8 × 8, (c) 16 × 16, and (d) 32 × 32.

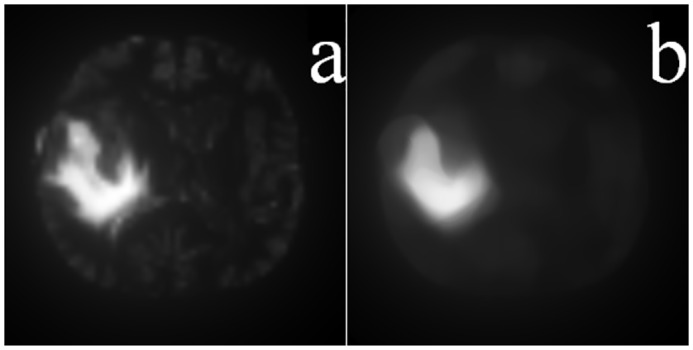

Next, the saliency maps are rescaled to the original image size (M × N) using bilinear interpolation [23]. Let $\tilde{S}_k$ denote the interpolated map at the original size, generated from the saliency map $S_k$ at scale k. Since the properties of a region depend on the pixels within it, saliency prediction is related to the size and scale of the region on which detection is performed. Analogous to [23], our algorithm is employed simultaneously over multiple scales to capture the salient region(s) in the MR image at different levels of resolution. Regions that are consistently highlighted over different resolutions are assumed to be the most likely to be salient. We therefore superimpose the saliency maps corresponding to the different scales to compute the final map. For example, the integrated map over the four scales of Fig 7, depicted in Fig 8(a), contains all the important information. The final saliency map is computed as

$$S = \sum_{k \in \{4,\, 8,\, 16,\, 32\}} r_k\, \tilde{S}_k \qquad (4)$$

where $r_k$ is the weight of the saliency map at patch size k; in the present study we have chosen $r_k = 1/4$ for all k. Finally, a 25 × 25 mean filter is applied to smooth the saliency map S, in order to focus on the core region within the actual ROI in the resized image. The result is depicted in Fig 8(b) and acts as the reference map for subsequent segmentation.
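The multi-scale fusion of Eq (4) and the final smoothing can be sketched as follows. Assumptions: per-scale maps are given as square grids with one value per patch, bilinear interpolation uses pixel-centre alignment, and the 25 × 25 mean filter replicates border pixels; the text specifies none of these details, and all helper names are hypothetical.

```python
import numpy as np


def bilinear_resize(img, out_h, out_w):
    """Bilinear interpolation with pixel-centre alignment (assumed convention)."""
    img = np.asarray(img, dtype=float)
    in_h, in_w = img.shape
    ys = np.clip((np.arange(out_h) + 0.5) * in_h / out_h - 0.5, 0, in_h - 1)
    xs = np.clip((np.arange(out_w) + 0.5) * in_w / out_w - 0.5, 0, in_w - 1)
    y0, x0 = np.floor(ys).astype(int), np.floor(xs).astype(int)
    y1, x1 = np.minimum(y0 + 1, in_h - 1), np.minimum(x0 + 1, in_w - 1)
    wy, wx = (ys - y0)[:, None], (xs - x0)[None, :]
    top = img[np.ix_(y0, x0)] * (1 - wx) + img[np.ix_(y0, x1)] * wx
    bottom = img[np.ix_(y1, x0)] * (1 - wx) + img[np.ix_(y1, x1)] * wx
    return top * (1 - wy) + bottom * wy


def mean_filter(img, size=25):
    """Odd size x size box (mean) filter via a summed-area table; edges replicated."""
    r = size // 2
    p = np.pad(np.asarray(img, dtype=float), r, mode="edge")
    c = np.pad(p.cumsum(axis=0).cumsum(axis=1), ((1, 0), (1, 0)))
    h, w = np.shape(img)
    s = (c[size:size + h, size:size + w] - c[:h, size:size + w]
         - c[size:size + h, :w] + c[:h, :w])
    return s / size ** 2


def fuse_scales(maps, out_shape, weights=None, size=25):
    """Eq (4): weighted sum of upsampled per-scale maps, then mean filtering."""
    weights = weights if weights is not None else [1.0 / len(maps)] * len(maps)
    fused = sum(r * bilinear_resize(m, *out_shape) for r, m in zip(weights, maps))
    return mean_filter(fused, size)
```

With every r_k = 1/4 the fused map is simply the average of the four upsampled maps; a rescaling to [0, 255] may be needed before the 8-bit thresholding used in the evaluation.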

Fig 8. Final saliency map.

(a) Superimposed saliency map corresponding to Fig 7, and (b) its final smoothed version.

In summary, our contribution lies in introducing the L* a* b* pseudo-color space along with the spatial distance, while computing the saliency at multiple scales.

The algorithm extracts saliency maps at multiple scales and combines them into one final saliency map using a fusion strategy. The underlying assumption of spatial coincidence identifies a region as salient only if it is consistently salient over multiple scales.

Experimental Results and Discussion

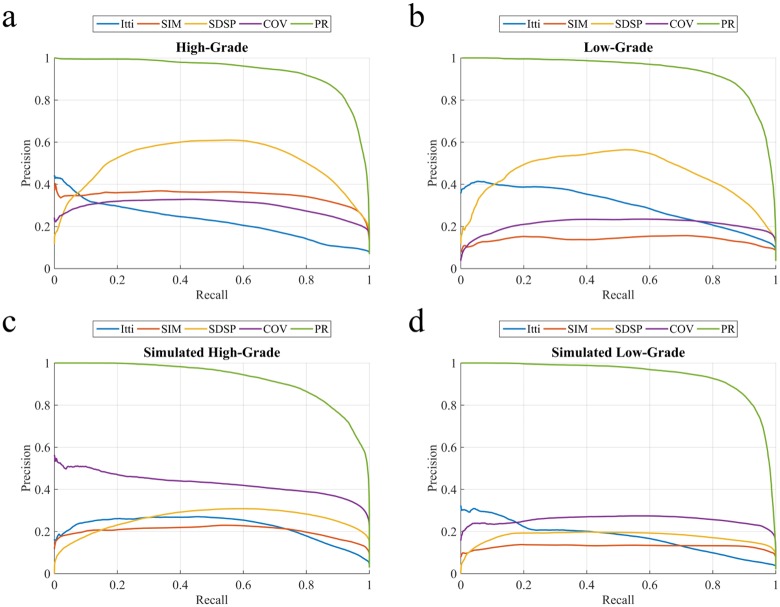

The performance of the proposed saliency detection model (PR), as well as that of four state-of-the-art algorithms from the literature (Itti [6], SIM [12], COV [23] and SDSP [24]), is evaluated both qualitatively and quantitatively, using several performance metrics, by comparing each saliency map with the ground truth of the whole tumor region as demarcated by expert radiologists. The whole tumor region encompasses the intra-tumoral structures, namely “edema”, “non-enhancing (solid) core”, “necrotic (or fluid-filled) core” and “enhancing core”.

A saliency map is represented as a gray-scale image of the same size as the original image, where the intensity of a pixel indicates its importance for belonging to the tumor region in the original image: an intensity of 0 (pure black) indicates least importance, and an intensity of 255 (pure white) corresponds to highest importance. We first generate binary masks for the salient object by thresholding the saliency map at every threshold from 0 to 255, and compare these masks with the ground truth using different metrics.

Precision is the fraction of pixels classified as salient that actually belong to the tumor region in the ground truth, whereas recall is the fraction of ground-truth tumor pixels that are correctly detected. Although precision and recall trade off against each other, both measures are important, and we seek to maximize both. The entire range of gray levels in the saliency map is explored, through exhaustive thresholding, to generate two classes, with the positive class representing the ROI and the negative class the background. The area under the curve (AUC) is estimated by analyzing the receiver operating characteristic (ROC) of these thresholded images. The true positive rate (TPR) is the proportion of ground-truth tumor pixels whose saliency values lie above the threshold, while the false positive rate (FPR) is the proportion of non-tumorous ground-truth pixels that are wrongly classified as tumor.
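The exhaustive thresholding and the metrics described above can be sketched as below, assuming an 8-bit saliency map, a boolean ground-truth mask containing both tumor and background pixels, and the convention that a pixel with saliency ≥ t counts as salient. The AUC is integrated with the trapezoidal rule; the function names are hypothetical.

```python
import numpy as np


def threshold_curves(saliency, truth):
    """Precision, recall (= TPR) and FPR for every threshold t = 0..255."""
    s = np.asarray(saliency, dtype=float).ravel()
    gt = np.asarray(truth, dtype=bool).ravel()
    n_pos, n_neg = gt.sum(), (~gt).sum()        # assumes both classes are present
    precision, tpr, fpr = [], [], []
    for t in range(256):
        pred = s >= t                             # pixels declared salient at threshold t
        tp = np.sum(pred & gt)
        fp = np.sum(pred & ~gt)
        precision.append(tp / (tp + fp) if tp + fp > 0 else 1.0)  # convention: empty mask
        tpr.append(tp / n_pos)
        fpr.append(fp / n_neg)
    return np.array(precision), np.array(tpr), np.array(fpr)


def roc_auc(tpr, fpr):
    """Trapezoidal ROC area. Raising t never increases TPR or FPR, so reversing
    the threshold order gives a curve running from (0, 0) towards (1, 1)."""
    x = np.concatenate([[0.0], fpr[::-1]])
    y = np.concatenate([[0.0], tpr[::-1]])
    return float(np.sum(np.diff(x) * (y[1:] + y[:-1]) / 2.0))
```

A map that is 255 exactly on the tumor and 0 elsewhere yields an AUC of 1.0, while the inverted map yields 0.0.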

The performance of our algorithm is evaluated by computing the precision and recall, along with the TPR and FPR, over these thresholded maps. The precision-recall and ROC curves are plotted by averaging over the set of images in each data group (HG, LG, SimHG and SimLG). The corresponding mean AUC scores (with standard deviation), together with the p-values of independent-samples t-tests comparing our algorithm with each of the four other state-of-the-art methods, are presented in Table 1.

Table 1. Comparison of AUC scores (mean ± SD) for the considered saliency models.

| Data group | Itti [6] | SIM [12] | SDSP [24] | COV [23] | PR |
|---|---|---|---|---|---|
| HG | 0.824 ± 0.121 (p < 0.01) | 0.921 ± 0.041 (p < 0.01) | 0.956 ± 0.032 (p < 0.01) | 0.891 ± 0.069 (p < 0.01) | **0.996 ± 0.002** |
| LG | 0.906 ± 0.080 (p < 0.01) | 0.913 ± 0.066 (p < 0.01) | 0.976 ± 0.019 (p < 0.01) | 0.929 ± 0.032 (p < 0.01) | **0.998 ± 0.001** |
| SimHG | 0.916 ± 0.060 (p < 0.01) | 0.932 ± 0.042 (p < 0.01) | 0.963 ± 0.012 (p < 0.01) | 0.974 ± 0.014 (p < 0.01) | **0.998 ± 0.003** |
| SimLG | 0.892 ± 0.072 (p < 0.01) | 0.925 ± 0.041 (p < 0.01) | 0.938 ± 0.031 (p < 0.01) | 0.966 ± 0.018 (p < 0.01) | **0.999 ± 0.001** |

The four saliency detection methods compared here, viz. Itti [6], SIM [12], COV [23] and SDSP [24], were originally developed for detecting saliency in natural images; here we apply them to the pseudo-colored RGB MR images. Itti et al. [6] generate a saliency map based on feature integration, creating lower-level feature maps by decomposing the visual input and combining all of them into the final saliency map using a weighting scheme. “Saliency Estimation Using a Non-Parametric Low-Level Vision Model” (SIM) [12] uses a wavelet transform for visual attention modelling, and was reported to outperform the models it was compared with. “Visual saliency estimation by nonlinearly integrating features using region covariances” (COV) [23] compares the covariances of non-overlapping neighbouring image regions, using meta-features to estimate the contribution of different feature dimensions to the overall visual saliency. “A Novel Saliency Detection Method by Combining Simple Priors” (SDSP) [24] detects the salient region in an image by integrating three simple priors, viz. frequency, location and color (in the L*a*b* color space), to generate the final saliency map. The experimental results are illustrated for each of the four categories of MR images: Fig 9 depicts the precision-recall plots, and Fig 10 shows the corresponding ROC curves. The proposed model (PR) clearly outperforms the other algorithms. Table 1 presents the comparative AUC scores for each case, with PR again providing the best results (p-value < 0.01, with best scores highlighted).

Fig 9. Comparative study of averaged precision-recall values, with varying thresholds [0, 255] on the saliency map, for four groups of MR images.

(a) HG, (b) LG, (c) SimHG, and (d) SimLG.

Fig 10. Comparative study of ROC curves for averaged TPR-FPR values, with varying thresholds [0, 255] on the saliency map, for four groups of MR images.

(a) HG, (b) LG, (c) SimHG, and (d) SimLG.

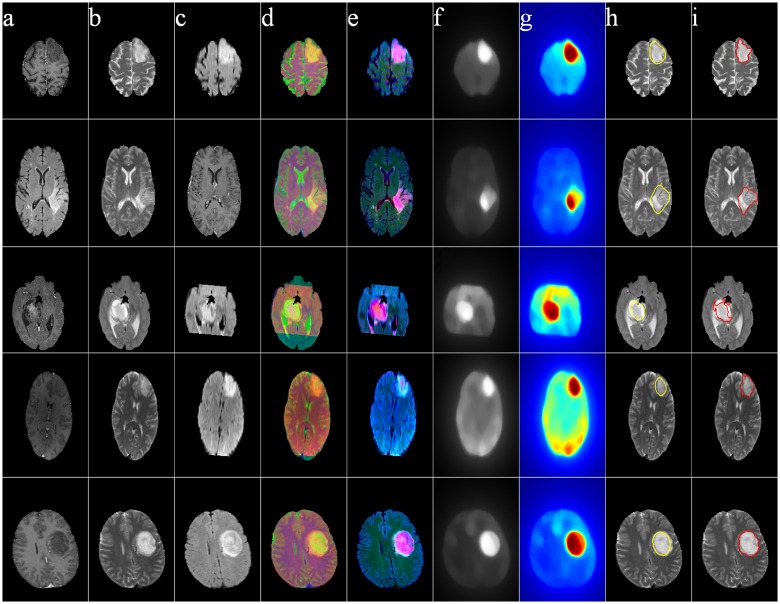

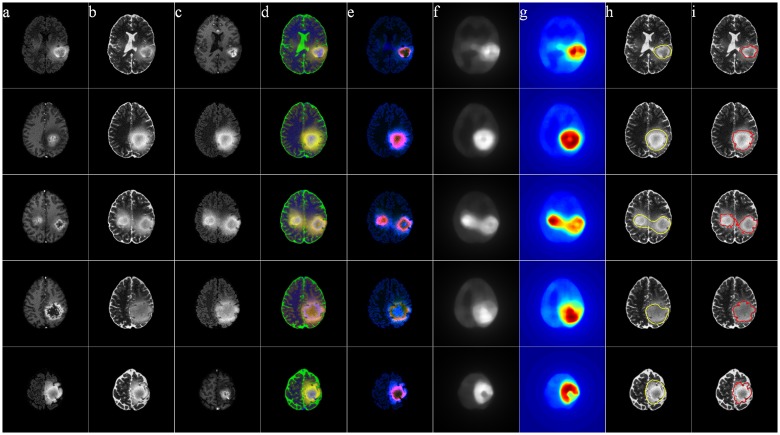

Figs 11–14 illustrate the visual results on 20 patients, five from each of the four groups HG, LG, SimHG and SimLG. The saliency maps S generated by Eq (4) are adaptively thresholded by Ta to generate binary proto-objects, which act as prototypes for subsequent segmentation (by any suitable algorithm, such as region growing or active contours). The threshold is computed as

$$T_a = \frac{\alpha}{M \times N} \sum_{x} \sum_{y} S(x, y) \qquad (5)$$

where S(x, y) denotes the saliency value at location (x, y) of the image, and α is a user-defined parameter, empirically set to 2 for high-grade and 3 for low-grade glioma. Qualitative analysis of the extracted ROIs indicates that our method is robust to tumor size, shape and position, as well as to scanner type.
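Reading the adaptive threshold Ta as α times the mean saliency of S (an assumption about the exact form of Eq (5)), proto-object extraction reduces to a single comparison. In the sketch below, whether the comparison is ≥ or > is also an assumption, and the function name is hypothetical.

```python
import numpy as np


def proto_object(saliency, alpha):
    """Binary proto-object: pixels whose saliency reaches T_a = alpha * mean(S)."""
    s = np.asarray(saliency, dtype=float)
    t_a = float(alpha * s.mean())
    return s >= t_a, t_a


# alpha = 2 corresponds to the high-grade setting quoted in the text.
mask, t_a = proto_object(np.array([[0.0, 0.0], [10.0, 30.0]]), alpha=2)
print(t_a, mask.tolist())  # 20.0 [[False, False], [False, True]]
```

A larger α (3 for low-grade cases) raises the threshold, keeping only the most strongly salient pixels when tumor and normal tissue contrast is weak.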

Fig 11. MR images of five High-Grade Glioma cases.

(a) T1C, (b) T2, (c) FLAIR, (d) pseudo RGB, (e) L* a* b*, (f) saliency map, (g) color-coded saliency map, (h) extracted proto-object, and (i) corresponding ground truth.

Fig 12. MR images of five Low-Grade Glioma cases.

(a) T1C, (b) T2, (c) FLAIR, (d) pseudo RGB, (e) L* a* b*, (f) saliency map, (g) color-coded saliency map, (h) extracted proto-object, and (i) corresponding ground truth.

Fig 13. MR images of five Simulated High-Grade Glioma cases.

(a) T1C, (b) T2, (c) FLAIR, (d) pseudo RGB, (e) L* a* b*, (f) saliency map, (g) color-coded saliency map, (h) extracted proto-object, and (i) corresponding ground truth.

Fig 14. MR images of five Simulated Low-Grade Glioma cases.

(a) T1C, (b) T2, (c) FLAIR, (d) pseudo RGB, (e) L* a* b*, (f) saliency map, (g) color-coded saliency map, (h) extracted proto-object, and (i) corresponding ground truth.

To the best of our knowledge, there is no fully automated algorithm that identifies the ROI without any prior training or supervision. Typically, a supervised identification of the ROI is followed by appropriate operations such as enhancement, feature extraction and/or segmentation. Our algorithm, on the other hand, quickly focuses on the ROI in an automated and unsupervised manner, based on the principle of saliency. Any suitable segmentation algorithm can then be applied to extract the ROI(s). The computational cost of our algorithm is low, i.e.,

| (6) |

from Eq (6), with C a constant. Therefore, it can readily be implemented in real-time or intra-operative settings.

We implemented the method in MATLAB 2014 on an Intel Core i7 CPU (3.40 GHz clock speed) with 16 GB of RAM. Segmentation was then performed over the detected ROI of each 2D slice. A 3D saliency map and its corresponding segmentation can be obtained by repeating this process over every pseudo-colored 2D MR slice.
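The slice-wise extension to 3D can be sketched as follows. This is a minimal NumPy illustration under stated assumptions: the three sequences are co-registered volumes of equal shape, slices are taken along the last axis by default, and `saliency_2d` is a hypothetical placeholder for the per-slice pseudo-coloring and saliency computation described in the paper.

```python
import numpy as np


def saliency_volume(flair, t2, t1c, saliency_2d, axis=2):
    """Build a 3D saliency volume by applying a 2D saliency function slice by slice.

    `saliency_2d` is a hypothetical callable mapping three co-registered 2D
    slices (FLAIR, T2, T1C) to a 2D saliency map of the same shape.
    """
    vols = [np.asarray(v) for v in (flair, t2, t1c)]
    if not (vols[0].shape == vols[1].shape == vols[2].shape):
        raise ValueError("FLAIR, T2 and T1C volumes must have the same shape")
    maps = []
    for k in range(vols[0].shape[axis]):
        # Extract slice k of each sequence along the chosen axis.
        f, t, c = (np.take(v, k, axis=axis) for v in vols)
        maps.append(saliency_2d(f, t, c))
    # Re-insert the slice axis at the position it was taken from.
    return np.stack(maps, axis=axis)
```

Because each slice is processed independently, the loop parallelizes trivially, which fits the low per-slice cost claimed above.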

The yellow contour in column (h) of each of Figs 11–14 is generated by thresholding the saliency maps with Ta. It can serve as the initial contour for active-contour-based segmentation, or as the seed for region-growing techniques. We have not included quantitative measures of segmentation accuracy, since our contribution lies in using saliency to accurately locate the prototype ROI for subsequent segmentation by any existing technique.
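The text leaves the choice of segmentation algorithm open. As one hypothetical illustration of using the proto-object as a seed, the sketch below implements basic 4-connected seeded region growing on a single 2D intensity image (for example a FLAIR slice). The tolerance `tol`, the reference intensity fixed to the seed mean, and the choice of input image are all assumptions, not part of the proposed method.

```python
from collections import deque

import numpy as np


def region_grow(image, seed_mask, tol):
    """Grow a region from a binary seed (e.g. a proto-object) on a 2D image.

    A 4-connected neighbour joins the region when its intensity lies within
    `tol` of the mean intensity of the original seed pixels.
    """
    img = np.asarray(image, dtype=float)
    region = np.asarray(seed_mask, dtype=bool).copy()
    if not region.any():
        return region
    ref = img[region].mean()  # reference intensity, fixed from the seed
    queue = deque(zip(*np.nonzero(region)))
    h, w = img.shape
    while queue:
        y, x = queue.popleft()
        for dy, dx in ((-1, 0), (1, 0), (0, -1), (0, 1)):
            ny, nx = y + dy, x + dx
            if (0 <= ny < h and 0 <= nx < w and not region[ny, nx]
                    and abs(img[ny, nx] - ref) <= tol):
                region[ny, nx] = True
                queue.append((ny, nx))
    return region
```

Fixing the reference intensity at the seed mean makes the result independent of the order in which pixels are visited; an adaptive running mean is a common alternative.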

Conclusions

We have designed a novel saliency detection scheme for delineating whole-tumor regions in multi-channel brain MR images. Pseudo-coloring helped suppress the less relevant regions while enhancing the salient ones. The resulting saliency maps were compared with those of four state-of-the-art saliency detection models, with respect to the ground truth. As evident from the experimental results, our algorithm outperformed the compared models. The average AUC score in the ROC analysis ranged from 0.992 for HG to 0.997 for SimLG images, higher than that of the existing models.

It may be noted [20] that identifying and segmenting the tumor core region(s) in high-grade glioma is comparatively easy, owing to contrast enhancement in the T1C MR sequence. For low-grade glioma, on the other hand, identifying the tumor core is more difficult, because its T1C sequence shows no enhancement. Given that our method yields significantly higher detection scores than related methods for low-grade glioma, it holds promise for better diagnosis and/or prognosis of patients with the disease.

Supporting Information

(PDF)

Acknowledgments

We thank the reviewers for their constructive comments, which helped to enhance the lucidity of the manuscript.

Data Availability

Brain tumor image data used in this work were obtained from the MICCAI 2012 Challenge on Multimodal Brain Tumor Segmentation (http://www.imm.dtu.dk/projects/BRATS2012) organized by B. Menze, A. Jakab, S. Bauer, M. Reyes, M. Prastawa, and K. Van Leemput. The challenge database contains fully anonymized images from the following institutions: ETH Zurich, University of Bern, University of Debrecen, and University of Utah.

Funding Statement

The authors have no support or funding to report.

References

- 1. Mitra S, Uma Shankar B. Medical image analysis for cancer management in natural computing framework. Information Sciences. 2015;306:111–131. doi:10.1016/j.ins.2015.02.015

- 2. Gatenby RA, Grove O, Gillies RJ. Quantitative Imaging in Cancer Evolution and Ecology. Radiology. 2013;269:8–14. doi:10.1148/radiol.13122697

- 3. Holland EC. Glioblastoma multiforme: The terminator. Proceedings of the National Academy of Sciences. 2000;97:6242–6244. doi:10.1073/pnas.97.12.6242

- 4. Zinn PO, Sathyan P, Mahajan B, Bruyere J, Hegi M, Majumder S, et al. A novel Volume-Age-KPS (VAK) Glioblastoma classification identifies a prognostic cognate microRNA-gene signature. PLoS ONE. 2012;7:e41522. doi:10.1371/journal.pone.0041522

- 5. Ullman S, Sha’ashua A. Structural saliency: The detection of globally salient structures using a locally connected network. In: Proceedings of the Second International Conference on Computer Vision; 1988. p. 321–327.

- 6. Itti L, Koch C, Niebur E. A model of saliency-based visual attention for rapid scene analysis. IEEE Transactions on Pattern Analysis and Machine Intelligence. 1998;20:1254–1259. doi:10.1109/34.730558

- 7. Foulsham T, Underwood G. What can saliency models predict about eye movements? Spatial and sequential aspects of fixations during encoding and recognition. Journal of Vision. 2008;8:6. doi:10.1167/8.2.6

- 8. Achanta R, Estrada F, Wils P, Süsstrunk S. Salient region detection and segmentation. In: Computer Vision Systems. Springer; 2008. p. 66–75.

- 9. Ma YF, Zhang HJ. Contrast-based image attention analysis by using fuzzy growing. In: Proceedings of the Eleventh ACM International Conference on Multimedia. ACM; 2003. p. 374–381.

- 10. Walther D, Koch C. Modeling attention to salient proto-objects. Neural Networks. 2006;19:1395–1407. doi:10.1016/j.neunet.2006.10.001

- 11. Zhang L, Tong MH, Marks TK, Shan H, Cottrell GW. SUN: A Bayesian framework for saliency using natural statistics. Journal of Vision. 2008;8:32.

- 12. Rosin PL. A simple method for detecting salient regions. Pattern Recognition. 2009;42:2363–2371. doi:10.1016/j.patcog.2009.04.021

- 13. Bian P, Zhang L. Biological plausibility of spectral domain approach for spatiotemporal visual saliency. In: Advances in Neuro-Information Processing. Springer; 2009. p. 251–258.

- 14. Harel J, Koch C, Perona P. Graph-based visual saliency. In: Advances in Neural Information Processing Systems; 2006. p. 545–552.

- 15. Nodine CF, Kundel HL. Using eye movements to study visual search and to improve tumor detection. Radiographics. 1987;7:1241–1250. doi:10.1148/radiographics.7.6.3423330

- 16. Matsumoto H, Terao Y, Yugeta A, Fukuda H, Emoto M, Furubayashi T, et al. Where do neurologists look when viewing brain CT images? An eye-tracking study involving stroke cases. PLoS ONE. 2011;6:e28928. doi:10.1371/journal.pone.0028928

- 17. Jampani V, Sivaswamy J, Vaidya V, et al. Assessment of computational visual attention models on medical images. In: Proceedings of the Eighth Indian Conference on Computer Vision, Graphics and Image Processing. ACM; 2012. p. 80.

- 18. Quellec G, Russell SR, Abramoff MD. Optimal filter framework for automated, instantaneous detection of lesions in retinal images. IEEE Transactions on Medical Imaging. 2011;30:523–533. doi:10.1109/TMI.2010.2089383

- 19. Deepak KS, Chakravarty A, Sivaswamy J, et al. Visual saliency based bright lesion detection and discrimination in retinal images. In: Proceedings of the 10th IEEE International Symposium on Biomedical Imaging. IEEE; 2013. p. 1436–1439.

- 20. Menze BH, Jakab A, Bauer S, Kalpathy-Cramer J, Farahani K, Kirby J, et al. The Multimodal Brain Tumor Image Segmentation Benchmark (BRATS). IEEE Transactions on Medical Imaging. 2015;34:1993–2024. doi:10.1109/TMI.2014.2377694

- 21. Kistler M, Bonaretti S, Pfahrer M, Niklaus R, Büchler P. The Virtual Skeleton Database: An Open Access Repository for Biomedical Research and Collaboration. Journal of Medical Internet Research. 2013;15(11):e245. doi:10.2196/jmir.2930

- 22. Jain AK. Fundamentals of Digital Image Processing. vol. 3. Englewood Cliffs: Prentice-Hall; 1989.

- 23. Erdem E, Erdem A. Visual saliency estimation by nonlinearly integrating features using region covariances. Journal of Vision. 2013;13:11. doi:10.1167/13.4.11

- 24. Zhang L, Gu Z, Li H. SDSP: A novel saliency detection method by combining simple priors. In: Proceedings of the 20th IEEE International Conference on Image Processing (ICIP). IEEE; 2013. p. 171–175.
