Abstract

Categorization allows organisms to efficiently extract relevant information from a diverse environment. Because of the multidimensional nature of odor space, this ability is particularly important for the olfactory system. However, categorization relies on experience, and the processes by which the human brain forms categorical representations about new odor percepts are currently unclear. Here we used olfactory psychophysics and multivariate fMRI techniques, in the context of a paired-associates learning task, to examine the emergence of novel odor category representations in the human brain. We found that learning between novel odors and visual category information induces a perceptual reorganization of those odors, in parallel with the emergence of odor category-specific ensemble patterns in perirhinal, orbitofrontal, piriform, and insular cortices. Critically, the learning-induced pattern effects in orbitofrontal and perirhinal cortex predicted the magnitude of categorical learning and perceptual plasticity. The formation of de novo category-specific representations in olfactory and limbic brain regions suggests that such ensemble patterns subserve the development of perceptual classes of information, and implies that these patterns are instrumental to the brain's capacity for odor categorization.

Significance Statement

How the human brain assigns novel odors to perceptual classes and categories is poorly understood. We combined an olfactory-visual paired-associates task with multivariate pattern-based fMRI approaches to investigate the de novo formation of odor category representations within the human brain. The identification of emergent odor category codes within the perirhinal, piriform, orbitofrontal, and insular cortices suggests that these regions can integrate multimodal sensory input to shape category-specific olfactory representations for novel odors, and may ultimately play an important role in assembling each individual's semantic knowledge base of the olfactory world.

Keywords: categorization, human, multivariate fMRI, olfaction, perceptual learning

Introduction

A critical function of the brain is to create perceptual representations of external stimuli. Given that humans and other animals are immersed in a highly complex environment, the brain must develop mechanisms for object recognition and identification. Categorization serves as one such strategy by which the brain can organize sensory representations into common perceptual classes that share similar meaning (Seger and Miller, 2010).

Most of our knowledge about the neural basis of object categorization is derived from visual studies. Single-cell recordings in monkeys (Sheinberg and Logothetis, 1997; Sigala et al., 2002) and functional imaging in humans (Gauthier and Tarr, 1997; Ishai et al., 1999; Haxby et al., 2001; Grill-Spector and Weiner, 2014) have highlighted the role of the inferior temporal and fusiform cortices as key regions of visual object processing. Additional studies point to the medial temporal lobe and neighboring regions (Kreiman et al., 2000; Kourtzi and Kanwisher, 2001; Bussey and Saksida, 2005; Litman et al., 2009; Huffman and Stark, 2014) for the synthesis of higher-order visual information, object recognition, and the binding of stimulus features.

In contrast, we know relatively little about how the brain encodes olfactory object categories. Rodent studies suggest that odor-evoked activity takes the form of sparse, distributed pattern representations in piriform cortex (Illig and Haberly, 2003; Rennaker et al., 2007; Stettler and Axel, 2009), and in humans, these piriform patterns encode perceptual information about odor identity (Zelano et al., 2011) and category (Howard et al., 2009). In turn, categorical information about smell can be modulated through learning and experience (Li et al., 2006; Wu et al., 2012). To date, most studies of odor category coding have relied on using familiar and well-established odors, for which category membership is built up over a lifetime. How these representations are acquired, and how the brain assigns novel stimuli to categorical classes, has not yet been explored.

Here we combined a novel cross-modal learning paradigm with fMRI and multivariate pattern-based approaches to characterize the emergence of new odor category representations. Given that odors map loosely onto semantic representations (Lawless and Engen, 1977; Herz, 2005; Olofsson et al., 2014), olfactory perceptual processing typically relies on additional contextual cues (e.g., visual, gustatory, or other) to shape our understanding of odor space (Small et al., 2004; Jadauji et al., 2012; Bensafi et al., 2014). Therefore, to guide odor category learning, we devised an olfactory-visual paired-associates learning task in which novel but category-specific pictures of fruits and flowers were systematically paired with novel odors of ambiguous fruity-floral character (see Fig. 1). Subjects underwent fMRI scanning before and after the paired-associates task, enabling us to assess the de novo emergence of odor-evoked category-specific templates.

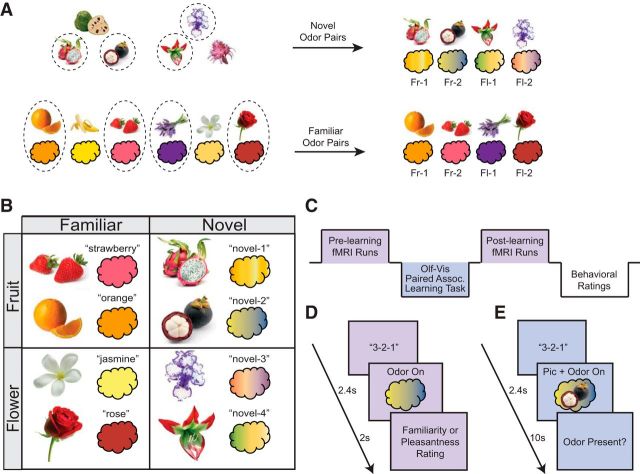

Figure 1.

Stimulus selection and experimental design. A, Top row, Selection of novel pairs. Individual familiarity ratings of six pictures of rare and exotic fruits and flowers were used to select the unfamiliar pictures for each subject. The two most unfamiliar fruit pictures and two most unfamiliar flower pictures for each subject (circled, by way of example) were randomly paired with the four novel odor mixtures (pairings were counterbalanced across subjects). These four pairs constituted the experimental novel odor-picture pairs for that subject. Bottom row, Selection of familiar pairs. Individual odor-picture congruency ratings of six commonly encountered fruits and flowers were used to select the familiar odor-picture pairs. The two most congruent fruit and two most congruent flower pairs for each subject (sample stimuli circled) constituted the experimental familiar odor-picture pairs for that subject. Fr, fruit; Fl, flower. B, Sample set of experimental stimuli. Eight pictures and eight odors were used for each subject, with two pairs in each condition type. C, Experimental timeline. Subjects underwent two identical scanning sessions: one before and one after an odor-picture paired-associates learning task, which took place outside of the scanner. After scanning, subjects made postlearning behavioral ratings on all stimuli. D, fMRI trial sequence. Subjects were cued to sniff at odor delivery. Subjects provided either familiarity or pleasantness ratings on separate trials. Each of the eight odors was presented four times in each of three runs (12 presentations per session). Visual runs were identical, except that a picture was presented instead of an odor. E, Odor-picture paired-associates learning task. On each trial, subjects were cued to sniff at the onset of an odor-picture pair, which was delivered for 10 s so that subjects could make multiple inhalations. Because the task also doubled as an odor detection task, subjects were prompted to indicate whether an odor was present on that trial.

Our main prediction was that, through direct cross-modal association, we could guide the perceptual organization of novel odors into discrete perceptual categories. We also expected that these olfactory perceptual changes would be accompanied by category-specific pattern changes in piriform cortex, as well as in multimodal olfactory-associative regions, including orbitofrontal cortex, insula, and/or medial temporal lobe areas. In particular, the entorhinal cortex receives dense monosynaptic projections from the olfactory bulb (Haberly and Price, 1977; Carmichael et al., 1994; Shipley and Ennis, 1996) and shares reciprocal connections with the perirhinal cortex (Burwell and Amaral, 1998; Pitkanen et al., 2000). In turn, the perirhinal cortex receives input from visual associative regions (Suzuki and Amaral, 1994; Ranganath and Ritchey, 2012) and is implicated in visual object representation (Buckley and Gaffan, 1998; Murray and Richmond, 2001; Clarke and Tyler, 2014), as well as associative and cross-modal learning (Pihlajamäki et al., 2003; Taylor et al., 2006; Haskins et al., 2008). Based on these observations, we hypothesized that entorhinal or perirhinal cortex, along with olfactory associative areas, would support the synthesis of newly formed odor categorical representations.

Materials and Methods

Participants.

Eighteen right-handed, nonsmoking participants (11 women; mean age, 25.3 years) without respiratory dysfunction or history of neurological disorders participated in the main fMRI experiment. Because of excessive head movement or technical difficulties during scanning, three fMRI subjects (all female) were excluded from analysis, leaving a total of 15 subjects. An independent group of 11 subjects (4 women; mean age, 27.2 years) also participated in a visual categorization task, and a second group of 11 subjects (8 women; mean age, 26 years) participated in a behavioral odor-picture matching task. All subjects gave informed consent to participate in the experiment according to protocols approved by the Northwestern University Institutional Review Board.

Stimuli.

All visual stimuli were color images of fruits and flowers. Fruit pictures included orange, strawberry, banana, mangosteen, dragonfruit, and cherimoya. Flower pictures included rose, jasmine, lavender, silver vase plant, Asiatic lily, and bearded iris. Odorants were prepared for testing either in pure form, mixed with other odorants, or diluted with mineral oil. Synthetic and natural odorants were purchased from the following: Sigma-Aldrich (SA), Lhasa Karnak Herb (LK), Old Hickory (OH), or Aroma Workshop (AW). The six familiar odor stimuli were strawberry (93% AW Strawberry fragrance, 7% SA citronellyl acetate), orange (99% AW Sweet Orange Oil, 1% LK Bitter Orange Oil), banana (96% mineral oil, 2% OH Banana Extract, 2% SA amyl acetate), lavender (91% mineral oil, 9% AW Lavender Oil), rose (100% Rose Oil), and jasmine (100% Jasmine Oil). Novel odors, which were mixtures made with fruity and floral components, were novel-1 (67% mineral oil, 20% LK Grapefruit Oil, 13% LK Violet Oil), novel-2 (99% mineral oil, 1% AW Plum Blossom Fragrance), novel-3 (94% mineral oil, 3% AW Acai Fragrance, 3% AW Pikaki Fragrance), and novel-4 (96% mineral oil, 2% AW Honeysuckle Fragrance, 2% SA methyl-3-nonenoate). During both the behavioral ratings and scanning, odorants were delivered using a custom-built, MRI-compatible olfactometer. Odorless air was delivered at 3.2 L/min through Teflon-coated tubes inserted into the headspace of amber bottles containing small volumes of liquid odorants, bringing odorized air to the subject's nose.

Respiratory monitoring.

Subjects were fitted with breathing belts to monitor respiration (Gottfried et al., 2002). Sniff waveforms were extracted for each trial, sorted by condition, and normalized within each scanning session (prelearning and postlearning). Sniff volume as well as sniff duration (time to peak) were calculated for each trial, averaged, and entered into group analysis. Because we found differences in sniff volume between conditions (main effect of familiarity: F(1,14) = 13.074, p = 0.003; time × category interaction: F(1,14) = 9.726, p = 0.008), the trial-by-trial sniff parameters were convolved with a canonical HRF and entered as nuisance regressors into a GLM for fMRI data analysis.
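As an illustration of how trial-by-trial sniff parameters can be turned into a nuisance regressor, the following is a minimal sketch and not the SPM8 implementation used here. The double-gamma shape parameters (peak at 6 s, undershoot at 16 s, ratio of 6) are assumed defaults, and the function names `hrf` and `sniff_regressor` are hypothetical.

```python
import math

def hrf(t, peak=6.0, under=16.0, ratio=6.0):
    """Double-gamma haemodynamic response at time t (s); shape values are assumed."""
    if t <= 0:
        return 0.0
    def gamma_pdf(a):
        return t ** (a - 1) * math.exp(-t) / math.gamma(a)
    return gamma_pdf(peak) - gamma_pdf(under) / ratio

def sniff_regressor(onsets, amplitudes, n_scans, tr):
    """Sum one HRF per trial, scaled by that trial's sniff parameter."""
    reg = [0.0] * n_scans
    for onset, amp in zip(onsets, amplitudes):
        for scan in range(n_scans):
            reg[scan] += amp * hrf(scan * tr - onset)
    return reg

# Hypothetical example: three trials with different sniff volumes, TR = 2.26 s
regressor = sniff_regressor([0.0, 15.0, 30.0], [1.0, 0.6, 1.3], n_scans=25, tr=2.26)
```

Each trial contributes a stick at its onset, weighted by the sniff measure, so larger sniffs produce proportionally larger predicted responses in the regressor.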

Prescanning behavioral ratings.

Day 1 of the experiment was used to select picture and odor stimuli for use in the main experiment, as well as to obtain baseline perceptual ratings of these stimuli. Each subject provided familiarity ratings for a set of 12 pictures, as well as familiarity, intensity, and pleasantness ratings for a set of 10 odors (stimuli listed above). Subjects also rated the congruency of odor-picture pairs, for which a picture and its corresponding odor were presented simultaneously, and provided fruity/floral ratings for all odors on a scale ranging between “Fruity” and “Floral.” All ratings were made on visual analog scales. Finally, subjects made pairwise similarity ratings (2 trials/pair) for all experimental odors. To confirm robust picture category classification, an independent group of subjects (n = 11) was asked to make category ratings for all pictures. Matching accuracy for the novel odors was also collected from a second group of independent subjects (n = 11) to confirm that no odor-picture biases existed.

Experimental stimulus selection.

Experimental stimuli were individually selected for each subject based on the prescanning behavioral ratings to optimize perceptual differences between novel and familiar items (see Fig. 1A). Eight pictures and eight odors were selected for each subject from the pool of 12 pictures and 10 odors (see Fig. 1B). Different approaches were used to select familiar and novel stimuli. Familiar stimuli were selected using each subject's odor-picture congruency ratings, whereby the two fruit and two flower odor-picture pairs (from a total of 3 possible pairs per category) rated as most congruent were assigned as the familiar pairs. This ensured that the final set of 4 familiar odor-picture pairs (2 fruity, 2 floral) was maximally familiar and congruent to each subject. Novel stimuli were selected in a two-step procedure. First, the two most unfamiliar fruit pictures and the two most unfamiliar flower pictures (from a total of 6 unfamiliar pictures) were selected using each subject's familiarity ratings. (Of note, while these specific fruit and flower exemplars were highly unfamiliar, they were explicitly identified as fruits and flowers; see Results.) Second, these 4 unfamiliar pictures were paired with the 4 novel odors, thus constituting the final set of 4 novel odor-picture pairs (2 fruity, 2 floral) for the duration of the experiment. The assignment of the novel odors to the unfamiliar pictures was counterbalanced across subjects.
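The two-step selection of novel pairs can be sketched as follows. This is a minimal illustration under stated assumptions: the familiarity values are invented, the function name `select_novel_pairs` is hypothetical, and a random shuffle stands in for the counterbalanced odor-to-picture assignment across subjects.

```python
import random

def select_novel_pairs(familiarity, category, novel_odors, rng):
    """Step 1: take the two least familiar pictures per category.
    Step 2: pair them with the novel odors."""
    chosen = []
    for cat in ("fruit", "flower"):
        pics = [p for p in familiarity if category[p] == cat]
        pics.sort(key=lambda p: familiarity[p])  # lowest rating = most unfamiliar
        chosen.extend(pics[:2])
    odors = list(novel_odors)
    rng.shuffle(odors)  # assumption: stand-in for counterbalancing across subjects
    return dict(zip(odors, chosen))

# Hypothetical familiarity ratings for one subject (arbitrary units)
familiarity = {"mangosteen": 2.0, "dragonfruit": 1.0, "cherimoya": 0.5,
               "silver vase plant": 0.8, "Asiatic lily": 3.0, "bearded iris": 1.5}
category = {"mangosteen": "fruit", "dragonfruit": "fruit", "cherimoya": "fruit",
            "silver vase plant": "flower", "Asiatic lily": "flower",
            "bearded iris": "flower"}
pairs = select_novel_pairs(familiarity, category,
                           ["novel-1", "novel-2", "novel-3", "novel-4"],
                           random.Random(0))
```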

fMRI scanning experiment.

On the scanning day (day 2), subjects underwent a prelearning (baseline) scan session consisting of three 8 min fMRI odor runs. On each trial, subjects were cued to sniff at odor delivery and then prompted to make either a pleasantness or familiarity rating of the odor. The rating types (pleasantness or familiarity) were randomly paired with the stimuli such that subjects could not predict the type of rating they would be asked to make. Each run contained 32 trials, during which each odor was presented four times in pseudorandom order, once within each block of eight trials. These runs were interspersed with visual runs (identical to odor runs, except that the 8 pictures were presented instead of the odors), which were used to perform visual category decoding for comparison to the olfactory decoding data. After the prelearning scanning session, subjects exited the scanner and underwent a computerized odor-picture paired-associates learning task (see below). After the association task, subjects returned to the scanner and underwent a postlearning scanning session, which was identical to the prelearning scanning session.
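The pseudorandom trial order described above (each odor once within each block of eight trials, four blocks per run) can be generated along these lines. This is a sketch only; the function name `make_run_order` and the odor labels are hypothetical, and any further ordering constraints used in the experiment are not modeled.

```python
import random

def make_run_order(odors, n_blocks=4, rng=None):
    """Return a run-length trial list in which every block of len(odors)
    trials contains each odor exactly once, in shuffled order."""
    rng = rng or random.Random()
    order = []
    for _ in range(n_blocks):
        block = list(odors)
        rng.shuffle(block)
        order.extend(block)
    return order

# Eight odors x four blocks = 32 trials per run
odors = ["odor-%d" % i for i in range(1, 9)]
run_order = make_run_order(odors, rng=random.Random(0))
```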

Olfactory-visual paired-associates learning task.

The paired-associates task took place outside of the scanner, just after the prelearning scanning session, and consisted of two identical 30 min sessions during which each of the eight odor-picture pairs was presented four times in each of three 32-trial blocks (12 repetitions per pair, 96 trials total). On each trial, the start of which was cued with a “3-2-1” countdown, a picture and odor were simultaneously presented for 10 s. To ensure attention to the stimuli, the task doubled as an odor detection test such that, at the end of every trial, subjects had to indicate whether the odor was present or not (in each 32-trial block, one trial of each pair did not contain any odor). Subjects were informed that the odor-picture pairing would remain the same throughout the experiment.

Postscanning behavioral ratings.

Immediately following the postlearning scanning session, subjects were brought out of the scanner and administered an odor-picture matching test. On each trial, one odor was delivered and subjects had to select the matching picture from the group of possible choices. Each odor was delivered twice, once in each half of the test (16 trials total). Subsequently, just as before the prelearning scanning session, subjects also made odor-picture congruency ratings, fruity/floral odor ratings, and odor similarity ratings for comparison with the prescanning data.

fMRI data acquisition.

Gradient-echo T2*-weighted echo planar images were acquired with a Siemens Trio 3T scanner using a 32-channel head coil. Imaging parameters were as follows: TR, 2.26 s; TE, 20 ms; 36 slices per volume; slice thickness, 2 mm; gap, 1 mm; matrix size, 128 × 120 voxels; field of view, 220 × 206 mm; in-plane resolution, 1.72 × 1.72 mm; acquisition angle, tilted 30° from the intercommissural plane (rostral > caudal) to reduce susceptibility artifacts in olfactory regions. A 1 mm³ isotropic T1-weighted MPRAGE structural MRI scan was also acquired.
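The reported in-plane resolution follows directly from the field of view and matrix size, as the short check below shows. The slab coverage figure is an added illustration and assumes that the 1 mm gap applies only between adjacent slices.

```python
# In-plane voxel size = field of view / matrix size, per axis
fov_mm = (220.0, 206.0)
matrix = (128, 120)
in_plane_mm = tuple(round(f / m, 2) for f, m in zip(fov_mm, matrix))

# Slab coverage along the slice axis (assumption: gaps only between slices)
slice_mm, gap_mm, n_slices = 2.0, 1.0, 36
coverage_mm = n_slices * slice_mm + (n_slices - 1) * gap_mm

print(in_plane_mm, coverage_mm)
```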

Category decoding: searchlight-based support vector classification.

The fMRI data were preprocessed with SPM8 software (www.fil.ion.ucl.ac.uk/spm/). Images were spatially realigned, but not normalized or smoothed, to preserve the native data. The realigned functional images were entered into a separate event-related GLM for each run, consisting of four conditions (familiar flower, familiar fruit, novel flower, novel fruit; two exemplars per condition) along with sniff volume and sniff duration as nuisance covariates, modeled with stick functions and convolved with a canonical HRF, and movement nuisance regressors. The runwise β parameter estimates (response amplitudes) generated from this model were used in a support vector machine (SVM) classification analysis. The SVM was implemented through a searchlight procedure, in which classification was performed on the information within a 146-voxel sphere, with accuracy mapped to the center voxel. The sphere was then moved systematically throughout the brain to generate a whole-brain continuous map of classification accuracy. Of note, the searchlight was implemented on spatially realigned, but non-normalized and unsmoothed functional images. Within each session (prelearning and postlearning), the linear SVM classifier (LIBSVM; http://www.csie.ntu.edu.tw/~cjlin/libsvm/) was trained to classify β estimates for familiar fruit versus familiar flower odors, and tested on β estimates for novel fruit versus novel flower odors. Importantly, the classification was performed independently on fMRI data from the prelearning and postlearning scanning sessions. This approach was ideal for several reasons. First, by training on the familiar items only, we ensured that decoding was guided by the subject's existing category structure of fruit versus flower. Second, by training and testing within each session separately, we could eliminate any session-specific effects, particularly those that might affect classifier training. Third, by training and testing on different sets of stimuli, we ensured that training and test datasets were independent. Finally, training and testing on different odor stimuli ensured that we detected category-specific rather than stimulus-specific codes. In other words, category decoding could only be successful if the novel odors came to evoke category-specific (as opposed to stimulus-specific) activity patterns similar to those evoked by the familiar odors of the same category. Accuracy difference maps (postlearning − prelearning session) for each subject were then normalized and smoothed (6 mm FWHM) for statistical group analysis (voxelwise one-sample t tests).
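The logic of training on familiar odors and testing on novel odors can be illustrated with a toy example. This sketch deliberately swaps in a nearest-centroid classifier for the linear SVM (LIBSVM) used in the analysis, and the 3-voxel patterns are invented for illustration rather than taken from a 146-voxel searchlight sphere. The function names are hypothetical, and for brevity the same familiar patterns serve as training data for both sessions.

```python
def centroid(patterns):
    """Voxelwise mean of a list of patterns."""
    return [sum(col) / len(patterns) for col in zip(*patterns)]

def sq_dist(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))

def cross_decode(train, test):
    """Train on one stimulus set, test on another; both map label -> patterns.
    Nearest-centroid stand-in for the linear SVM."""
    centroids = {label: centroid(pats) for label, pats in train.items()}
    correct = total = 0
    for label, pats in test.items():
        for p in pats:
            pred = min(centroids, key=lambda c: sq_dist(p, centroids[c]))
            correct += int(pred == label)
            total += 1
    return correct / total

# Invented patterns: familiar odors define the category structure
familiar = {"fruit": [[1.0, 0.0, 0.0], [0.9, 0.1, 0.0]],
            "flower": [[0.0, 1.0, 0.0], [0.1, 0.9, 0.0]]}
novel_pre = {"fruit": [[0.8, 0.2, 0.0]], "flower": [[0.9, 0.1, 0.0]]}
novel_post = {"fruit": [[0.8, 0.2, 0.1]], "flower": [[0.2, 0.8, 0.1]]}

acc_pre = cross_decode(familiar, novel_pre)    # novel odors not yet category-like
acc_post = cross_decode(familiar, novel_post)  # novel odors now resemble their category
accuracy_difference = acc_post - acc_pre
```

Because the classifier never sees the novel odors during training, above-chance test accuracy can arise only if the novel patterns share structure with the familiar patterns of the same category.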

Two visual decoding analyses were also implemented. The first analysis was performed to serve as a positive control of visual category information within expected regions. In this analysis, the classifier was trained to decode category (fruit vs flower) for all familiar and unfamiliar pictures across all prelearning and postlearning runs. Cross-validation was done on a leave-one-run-out basis, such that, on every iteration, the classifier was trained on five runs and tested on a sixth run (Kriegeskorte et al., 2006; Kahnt et al., 2011). Accuracy scores were then averaged across the six iterations. The second visual decoding analysis was analogous to the main olfactory decoding procedure, whereby visual category classification was assessed at the regions (voxel centroids) that were identified as significant in the olfactory classification analysis. This analysis enabled us to demonstrate whether the olfactory findings were indeed specific to the olfactory modality. Within each session, the classifier was trained to decode familiar fruit versus familiar flower pictures, and then tested on unfamiliar fruit versus unfamiliar flower pictures. As before, accuracy difference maps (postlearning − prelearning session) were generated for each subject.
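The leave-one-run-out scheme for the first visual analysis can be sketched as a fold generator. This is an illustration only; the function name `leave_one_run_out` is hypothetical, and the example assumes one β estimate per condition per run (four per run), consistent with the GLM described above.

```python
def leave_one_run_out(run_ids):
    """Yield (held_out_run, train_indices, test_indices) for each run."""
    for held_out in sorted(set(run_ids)):
        train = [i for i, r in enumerate(run_ids) if r != held_out]
        test = [i for i, r in enumerate(run_ids) if r == held_out]
        yield held_out, train, test

# Six visual runs (three prelearning, three postlearning), four estimates each
run_ids = [run for run in range(6) for _ in range(4)]
folds = list(leave_one_run_out(run_ids))
# Accuracy would be computed per fold and then averaged across the six folds
```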

Statistics.

Statistical significance for behavioral measures was established using repeated-measures ANOVA, one-sample t tests, and paired t tests, as appropriate. A single-linkage algorithm in MATLAB was used to transform the similarity ratings into a hierarchical cluster tree, which was plotted as a dendrogram. To correct for multiple comparisons in the neuroimaging results, family-wise error (FWE) correction was performed for the olfactory decoding maps across small-volume anatomical masks of perirhinal cortex (PrC), posterior piriform cortex (PPC), orbitofrontal cortex (OFC), and insula (INS), manually drawn with reference to a human brain atlas (Mai et al., 2004). Visual decoding maps were thresholded at p < 0.05, FWE-corrected across the whole brain at the cluster level. We extracted decoding accuracy values from regions that showed significant increases in decoding accuracy from prelearning to postlearning using a separate leave-one-subject-out analysis to maintain independent voxel selection. For this, the group-level analysis was performed with one subject left out, and the peak coordinates estimated from this analysis were used to extract accuracy values from the left-out subject. This procedure was repeated iteratively for all 15 subjects, and the resulting accuracy values were submitted to independent group-level analysis. For correlation analyses of behavioral and imaging data, similarity ratings were normalized within-subject before calculation of within- and between-category differences. Spearman's rank correlation coefficients were used for all correlations. Statistical threshold was set at p < 0.05, two-tailed, unless otherwise noted.
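The leave-one-subject-out extraction can be sketched as follows. This is a simplified illustration: the peak is taken as the voxel with the highest mean accuracy difference among the remaining subjects, which stands in for the peak of the group-level t map, and the voxel labels and values are invented. The function name `loso_extract` is hypothetical.

```python
def loso_extract(maps):
    """maps: one dict per subject, {voxel: accuracy difference}.
    For each subject, locate the group peak without that subject,
    then read out the left-out subject's value at that peak."""
    extracted = []
    for i, left_out in enumerate(maps):
        others = maps[:i] + maps[i + 1:]
        peak = max(others[0], key=lambda v: sum(m[v] for m in others) / len(others))
        extracted.append(left_out[peak])
    return extracted

# Invented accuracy-difference maps for three subjects and two voxels
subject_maps = [{"a": 0.3, "b": 0.0},
                {"a": 0.1, "b": 0.5},
                {"a": 0.2, "b": 0.1}]
values = loso_extract(subject_maps)
```

Because the peak is never chosen using the subject whose value is extracted, the extracted values are not biased by the voxel selection step.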

Results

Subjects participated in an olfactory-visual paired-associates learning task outside of the scanner, which was preceded and followed by fMRI scanning (identical prelearning and postlearning scan sessions) (Fig. 1C,D). Each trial of the association task involved the simultaneous presentation of a picture and an odor, and subjects were instructed to learn these pairings (total of eight unique pairs, Fig. 1E; for stimulus selection process, see Fig. 1A). Perceptual ratings of all visual and olfactory stimuli were obtained before the first and after the second scanning session to assess behavioral changes.

Stimulus set validation

We first ensured that sufficient visual category information was present in the pictures to guide changes in olfactory category representations. To confirm picture category membership, an independent group of subjects (n = 11) was asked to rate all experimental pictures. All picture stimuli were accurately classified as fruit or flower, regardless of familiarity (p values <0.001, one-sample t tests) (Fig. 2A). To make sure that the formation of any olfactory categorical representations was truly de novo, we developed a robust set of novel odors. These odors needed to be novel and ambiguous yet carry sufficient ecological relevance to be realistically classified as fruity or floral. To this end, we created a set of olfactory stimuli from a combination of synthetic or natural odorants with fruity and floral elements, ensuring completely original odors (see Materials and Methods). A separate behavioral experiment was also performed in which another independent group of subjects (n = 11) was asked to match the unfamiliar pictures and the novel odors without any prior learning. This revealed a random odor-picture assignment (p = 0.47, one-sample t test vs chance; chance = 12.5%), demonstrating that none of the novel odors was predisposed to be consistently classified as fruity or floral.

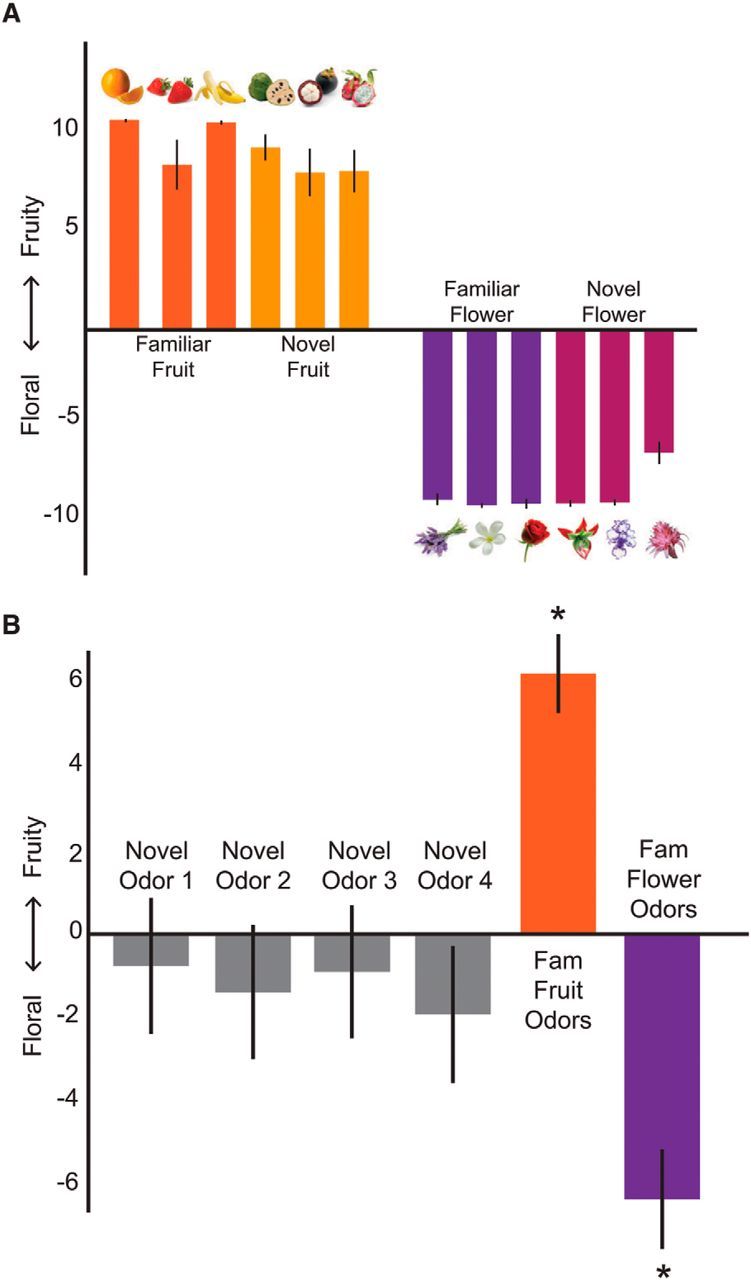

Figure 2.

Preexperimental stimulus set ratings. A, An independent group of subjects rated all possible picture stimuli as accurately belonging to their intended category (p < 0.001, one-sample t tests). B, Preexperimental ratings from experimental subjects confirmed that familiar odors were accurately rated as fruity or floral. *p < 0.01 (one-sample t tests). Importantly, before learning, the novel odors were not consistently rated as belonging to either category (p > 0.26, one-sample t tests). Data are mean ± SEM.

Confirming our assumptions regarding stimulus familiarity for both modalities (visual and olfactory), before the paired-associates learning task, familiar odors and pictures were rated as more familiar than the novel odors and unfamiliar pictures (odor stimuli: p values <0.03, paired t tests, one-tailed; picture stimuli: p values <0.001, paired t tests). Ratings of fruity-ness and floral-ness were used to assess category membership for the odors (Fig. 2B). All familiar odors were accurately classified as fruit or flower (all p values <0.01, one-sample t tests). Importantly, before learning, the novel odors were not consistently rated as belonging to either category (p values >0.26, one-sample t tests).

De novo olfactory perceptual learning

During the paired-associates task, each odor was repeatedly paired with its corresponding picture. As such, the four novel odors were paired with pictures of real but unfamiliar fruits and flowers, such as dragonfruit and silver vase plant (pairings counterbalanced across subjects). We found initial evidence for category learning in subjects' pairwise similarity ratings. Specifically, a hierarchical cluster analysis revealed an emergent category structure for the novel odors, such that from prelearning to postlearning, the similarity structure reorganized to reflect the trained categories (Fig. 3A). This structure mirrored the clustering found for familiar odors in both the prelearning and postlearning sessions.
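To make the clustering step concrete, the following is a minimal pure-Python sketch of single-linkage agglomerative clustering, not the MATLAB routine used for the analysis. The distances are invented and are assumed to have been derived from similarity ratings (for example as 1 − similarity). The function name `single_linkage` is hypothetical.

```python
def single_linkage(labels, dist):
    """Agglomerative clustering with single linkage.
    dist maps frozenset({a, b}) -> distance.
    Returns the merges in order as (cluster_a, cluster_b, distance)."""
    clusters = [frozenset([label]) for label in labels]
    merges = []
    while len(clusters) > 1:
        best = None
        for i in range(len(clusters)):
            for j in range(i + 1, len(clusters)):
                # Single linkage: distance between the closest members
                d = min(dist[frozenset((a, b))]
                        for a in clusters[i] for b in clusters[j])
                if best is None or d < best[0]:
                    best = (d, i, j)
        d, i, j = best
        merges.append((set(clusters[i]), set(clusters[j]), d))
        merged = clusters[i] | clusters[j]
        clusters = [c for k, c in enumerate(clusters) if k not in (i, j)] + [merged]
    return merges

# Invented postlearning distances for four novel odors
labels = ["Fr1", "Fr2", "Fl1", "Fl2"]
dist = {frozenset(("Fr1", "Fr2")): 0.2, frozenset(("Fl1", "Fl2")): 0.3,
        frozenset(("Fr1", "Fl1")): 0.7, frozenset(("Fr1", "Fl2")): 0.8,
        frozenset(("Fr2", "Fl1")): 0.75, frozenset(("Fr2", "Fl2")): 0.9}
merges = single_linkage(labels, dist)
```

In this toy case the two fruit odors merge first and the two flower odors second, with the categories joining only at the final step, which is the dendrogram shape expected when a category structure is present.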

Figure 3.

Perceptual changes across learning. A, Hierarchical cluster analysis was used to characterize effects of paired-associates learning, whereby pairwise ratings of odor similarity were converted to distances. Dendrogram plots represent a preexisting category structure for familiar odors (top) that is sustained from prelearning to postlearning. However, this organization emerges for the novel stimuli (bottom) only after the learning task. Fl, Flower; Fr, fruit. B, Congruency ratings between pictures and odors reveal that both novel fruit and flower pairs were perceived as significantly more congruent after learning, with novel items showing greater increases than familiar items. *p < 0.001, familiarity × session interaction (repeated-measures ANOVA). C, Matching accuracy after learning. Odor-picture matching was significantly above chance (dotted line, 1 of 8 = 12.5%) for all conditions. *p < 0.001 (one-sample t tests).

To quantify these effects, we examined changes in odor-picture congruency ratings. A three-way (familiarity × category × session) repeated-measures ANOVA revealed an increase in perceived congruency between odor-picture pairs from prelearning to postlearning (main effect of session: F(1,14) = 19.433, p = 0.001), and critically, novel pairs showed greater increases than familiar pairs (familiarity × session interaction: F(1,14) = 17.01, p = 0.002) (Fig. 3B). We also observed significant interactions of familiarity × category (F(1,14) = 4.74, p = 0.047) and category × session (F(1,14) = 4.89, p = 0.04), although all stimuli reached the same level of congruency by the postlearning session (no main effect of familiarity, category, or familiarity × category interaction within the postlearning session, p values >0.125). We note that the category interactions may be driven by greater inherent familiarity and knowledge regarding fruits compared with flowers, but given that all stimuli attained an equivalent level of congruency after learning, this difference is unlikely to drive interpretation of our results.

As an additional confirmation of successful categorization, we administered an odor-picture matching test after the postlearning scanning session. Subjects performed significantly above chance on this matching task (mean accuracy = 73.3 ± 12.8%, mean ± SEM, p < 0.001, one-sample t test vs chance; chance = 12.5%) (Fig. 3C). All pairs were significantly above chance, and a two-way (familiarity × category) repeated-measures ANOVA revealed a main effect of familiarity (F(1,14) = 21.07, p < 0.001), but not category (F(1,14) = 0.96, p = 0.34) or a familiarity × category interaction (F(1,14) = 1.05, p = 0.32). Although chance performance is 12.5% (1 of 8), it is worth noting that, for the novel odors, chance level could potentially lie at 25% (1 of 4) if the subject were able to eliminate all familiar items from the potential choices. However, even in this case, matching accuracy was significantly >25% (p < 0.001, one-sample t test vs 25% chance). Accurate picture-odor matching as well as significant increases in congruency, in the context of more global categorical changes as evidenced by the similarity ratings, indicate that subjects were consistent in their choices after learning, reflecting the emergence of new categorical associations.
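The comparison against the two candidate chance levels can be sketched with a one-sample t statistic. This is an illustration only: the accuracy values are invented, the function name `one_sample_t` is hypothetical, and converting t to a p value would require a t distribution (not included here).

```python
import math
import statistics

def one_sample_t(values, mu):
    """Return (t statistic, degrees of freedom) for a test of mean(values) vs mu."""
    n = len(values)
    se = statistics.stdev(values) / math.sqrt(n)
    return (statistics.mean(values) - mu) / se, n - 1

chance_all = 1 / 8    # any of the eight pictures
chance_novel = 1 / 4  # if the four familiar pictures can be ruled out

# Invented matching accuracies for a few subjects
accuracies = [0.5, 0.75, 1.0, 0.75, 0.625]
t_all, df = one_sample_t(accuracies, chance_all)
t_novel, _ = one_sample_t(accuracies, chance_novel)
```

Testing against the stricter 25% level is the more conservative check, since the same mean accuracy yields a smaller t statistic.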

Olfactory and limbic regions support the formation of novel odor category representations

Our behavioral results demonstrate that visual category information successfully guided olfactory perceptual reorganization, such that the novel odors acquired membership to their associated object category. To investigate the neural underpinnings of this olfactory categorical learning, we applied a whole-brain, searchlight-based (Kriegeskorte et al., 2006; Kahnt et al., 2011) SVM classifier to the fMRI dataset. Specifically, we trained an SVM to classify activity patterns evoked by familiar fruit versus familiar flower odors, and tested the SVM on activity patterns evoked by novel fruit versus novel flower odors, both before and after the paired-associates learning task (Fig. 4A; for details, see Materials and Methods). Thus, in this analysis, while prelearning classification accuracy should be at chance, postlearning classification accuracy can only be above chance if the newly established fruit and flower odors come to evoke multivoxel activity patterns similar to those evoked by familiar fruit and flower odors. Accordingly, the central prediction was that classification accuracy for the novel odors would improve significantly from prelearning to postlearning.

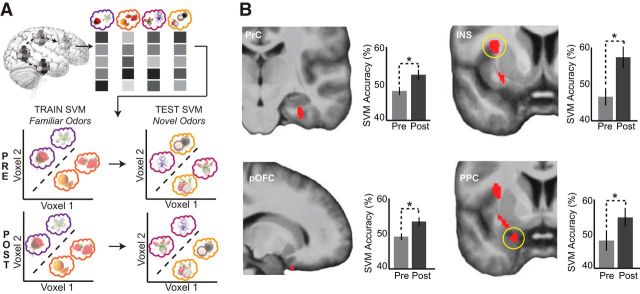

Figure 4.

Searchlight-based SVM category decoding. A, Schematic of the searchlight-based implementation of the SVM classification. Classification was performed within a 146-voxel sphere, with the classifier trained on patterns of fMRI activity for familiar flower versus familiar fruit odors, and then tested on patterns for novel flower versus novel fruit odors, within each session. This analysis was designed to identify brain areas where classification was higher at postlearning versus prelearning. B, Perirhinal cortex (top left, y = −19), posterior orbitofrontal cortex (bottom left, x = 17), insula (top right, y = 5), and posterior piriform cortex (bottom right, y = 3) show significant group-level increases in category decoding accuracy after paired-associates learning. Plots indicate accuracy values extracted from each subject in the leave-one-subject-out analysis, based on the group-level peak coordinate, at prelearning and postlearning, and averaged across subjects. Data are mean ± SEM. *p < 0.05 (paired t tests); display threshold p < 0.001.

Using this analysis, we found significant increases in classification accuracy for novel odors from prelearning to postlearning in the right PrC ([32, −19, −26], Z = 4.34, pFWE-SVC = 0.001) and right posterior orbitofrontal cortex (pOFC, [17, 14, −27], Z = 3.14, pFWE-SVC = 0.024). We also found significant accuracy increases in additional primary and secondary olfactory brain regions: left PPC ([−21, 3, −23], Z = 3.27, pFWE-SVC = 0.016) and left INS ([−33, 5, 12], Z = 3.75, pFWE-SVC = 0.03). To assess the statistical robustness of our imaging effects, we performed a leave-one-subject-out analysis (see Materials and Methods) to obtain independently selected voxels from which to extract accuracy values. Post hoc t tests confirmed that classification accuracy in PrC, pOFC, PPC, and INS was at chance at prelearning (all p values >0.09) and increased to above chance at postlearning (all p values <0.04, one-tailed, one-sample t tests vs chance), with significant improvements in classification accuracy values across learning for all identified regions (all p values <0.04, paired t tests) (Fig. 4B). These findings indicate that in PrC, pOFC, PPC, and INS, originally in a naive state with indistinguishable category-related pattern activity, category-specific patterns emerged following paired-associates learning.
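The leave-one-subject-out idea can be sketched as follows. This is a simplified illustration rather than the procedure detailed in Materials and Methods: the peak is located using the mean postlearning minus prelearning gain of the remaining subjects (a group-level test statistic would typically be used instead), and the accuracy maps, voxel count, and function name are hypothetical.

```python
def loso_extract(pre_maps, post_maps):
    """For each subject, locate the peak learning effect using all OTHER
    subjects, then read out the left-out subject's accuracies at that voxel.

    pre_maps / post_maps: one list of per-voxel decoding accuracies per subject.
    Returns a list of (peak_voxel, pre_accuracy, post_accuracy) tuples.
    """
    n_sub = len(pre_maps)
    n_vox = len(pre_maps[0])
    extracted = []
    for left_out in range(n_sub):
        others = [s for s in range(n_sub) if s != left_out]
        # Mean learning-related gain per voxel, excluding the left-out subject.
        mean_gain = [
            sum(post_maps[s][v] - pre_maps[s][v] for s in others) / len(others)
            for v in range(n_vox)
        ]
        peak = max(range(n_vox), key=lambda v: mean_gain[v])
        extracted.append((peak, pre_maps[left_out][peak], post_maps[left_out][peak]))
    return extracted

# Hypothetical accuracy maps: 3 subjects x 4 voxels.
pre_maps = [[0.5, 0.5, 0.5, 0.5] for _ in range(3)]
post_maps = [[0.5, 0.7, 0.5, 0.5],
             [0.5, 0.6, 0.7, 0.5],
             [0.5, 0.6, 0.5, 1.0]]   # subject 2 has an extreme value at voxel 3

loso_result = loso_extract(pre_maps, post_maps)
print(loso_result)
```

In this toy case, subject 2's extreme value at voxel 3 cannot influence the voxel from which that subject's own data are extracted, which is the circularity the approach is meant to avoid.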

De novo formation of odor categories is specific to the olfactory modality

Although the above findings are suggestive of de novo odor categorization, it remains possible that these effects could have emerged as a general function of the association task, unspecific to the olfactory modality. Therefore, we tested whether multivariate classification of visual fMRI data elicited similar learning-related changes in PrC, pOFC, PPC, and INS. The visual fMRI data allowed us (1) to validate our analytical approach by identifying established regions where visual categorical content is represented, and (2) to perform a symmetric analysis for direct comparison across visual and olfactory domains. Because picture category information was robust even before learning, our first visual analysis involved decoding of fruit versus flower across all six preruns and postruns in a leave-one-run-out cross-validation approach. We identified visual categorization in both right and left lateral occipital complex ([50, −54, −27], Z = 4.28, pFWE-cluster = 0.02; [−50, −51, −14], Z = 3.96, pFWE-cluster = 0.02) (Fig. 5A), consistent with prior studies of visual category coding (Malach et al., 1995; Grill-Spector et al., 2001; Cichy et al., 2011), and serving as a positive control for the identification of visual object processing regions.
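As an illustration of the leave-one-run-out scheme, the sketch below generates the train/test splits only. The run labels (six runs with two trials each) and the function name are hypothetical, and the classifier itself is omitted.

```python
def leave_one_run_out(run_ids):
    """Yield (held_out_run, train_indices, test_indices) for each run.

    Each run serves once as the test set; the classifier would be trained
    on the trials from all remaining runs.
    """
    for held_out in sorted(set(run_ids)):
        train_idx = [i for i, r in enumerate(run_ids) if r != held_out]
        test_idx = [i for i, r in enumerate(run_ids) if r == held_out]
        yield held_out, train_idx, test_idx

# Hypothetical layout: 6 runs, 2 trials per run.
run_ids = [run for run in range(6) for _ in range(2)]
folds = list(leave_one_run_out(run_ids))
for held_out, train_idx, test_idx in folds:
    print(held_out, len(train_idx), len(test_idx))
```

Decoding accuracy would then be averaged across the folds to give a single cross-validated estimate per searchlight.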

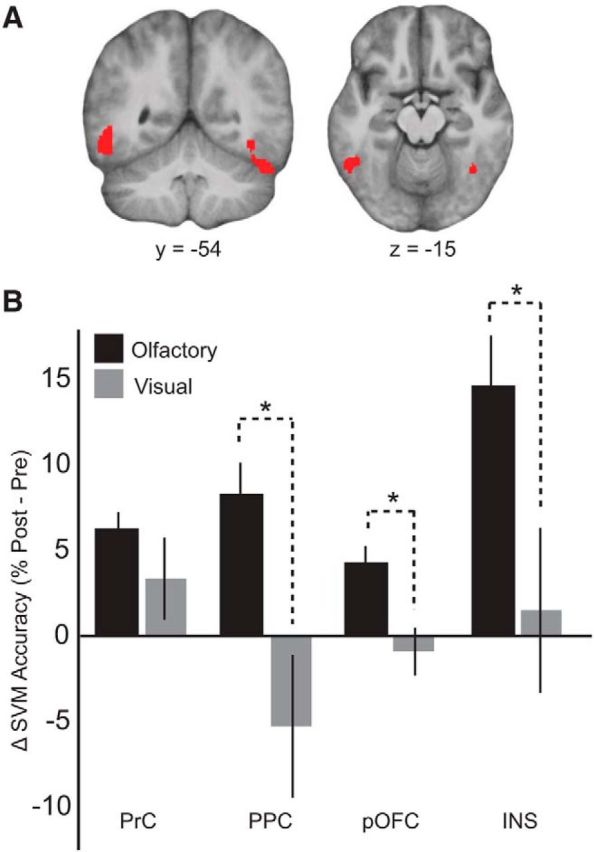

Figure 5.

Learning-related categorical changes are specific to the olfactory modality. A, Visual category decoding across all prelearning and postlearning visual fMRI runs (leave-one-run-out cross-validation) revealed significant category decoding in bilateral lateral occipital complex, serving as a positive control that visual category information was present to guide olfactory perceptual changes. Display threshold pFWE-cluster < 0.05. B, Symmetric category decoding analyses were also performed using the visual fMRI data, revealing significant time × modality interactions in PPC, pOFC, and INS, such that improvements in category decoding accuracy were greater for the olfactory modality than for the visual modality. An interaction was not observed within PrC. Data are mean ± SEM. *p < 0.05, time × modality interaction (repeated-measures ANOVA).

For the second visual decoding analysis, we performed the same imaging analysis as for the olfactory data but now applied to the visual fMRI data, to generate visual category decoding accuracy maps for prelearning and postlearning. We then extracted visual decoding accuracy values from our peak coordinates in PrC, pOFC, PPC, and INS, providing a way to compare classification performance directly between olfactory and visual modalities. Region-wise two-way (time × modality) repeated-measures ANOVA revealed significant time × modality interactions within pOFC (F(1,14) = 5.85, p = 0.03), PPC (F(1,14) = 11.60, p = 0.004), and INS (F(1,14) = 7.38, p = 0.02), such that changes in classification accuracy for the olfactory domain were greater than those observed in the visual domain (Fig. 5B). A time × modality interaction was not observed within PrC (F(1,14) = 1.53, p = 0.24). By comparison, classification accuracy values for visual decoding in pOFC, PPC, and INS were not significantly above chance in either the presession or postsession (p > 0.27, one-sample t tests vs chance). Together, these results suggest that our findings within pOFC, PPC, and INS are not related to mere training-induced or session-dependent changes but are specific to the olfactory domain.
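For a 2 × 2 fully within-subject design, the time × modality interaction is equivalent to a one-sample t test on each subject's difference of differences, with F(1, n − 1) = t². The sketch below computes the interaction this way; the accuracy values (in percent) are hypothetical, and the function name is illustrative. Under this scheme, an F statistic with (1, 14) degrees of freedom would correspond to 15 subjects.

```python
import math
from statistics import mean, stdev

def interaction_f(olf_pre, olf_post, vis_pre, vis_post):
    """Time x modality interaction for a 2 x 2 repeated-measures design.

    Computed as the squared one-sample t statistic on the per-subject
    difference of differences: (olf_post - olf_pre) - (vis_post - vis_pre).
    Returns (F, (df_numerator, df_denominator)).
    """
    d = [(op - o) - (vp - v)
         for o, op, v, vp in zip(olf_pre, olf_post, vis_pre, vis_post)]
    n = len(d)
    t = mean(d) / (stdev(d) / math.sqrt(n))
    return t * t, (1, n - 1)

# Hypothetical decoding accuracies (%), 4 subjects.
olf_pre = [50, 50, 50, 50]
olf_post = [56, 58, 60, 58]
vis_pre = [50, 50, 50, 50]
vis_post = [55, 56, 57, 56]

f_value, dfs = interaction_f(olf_pre, olf_post, vis_pre, vis_post)
print(round(f_value, 3), dfs)
```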

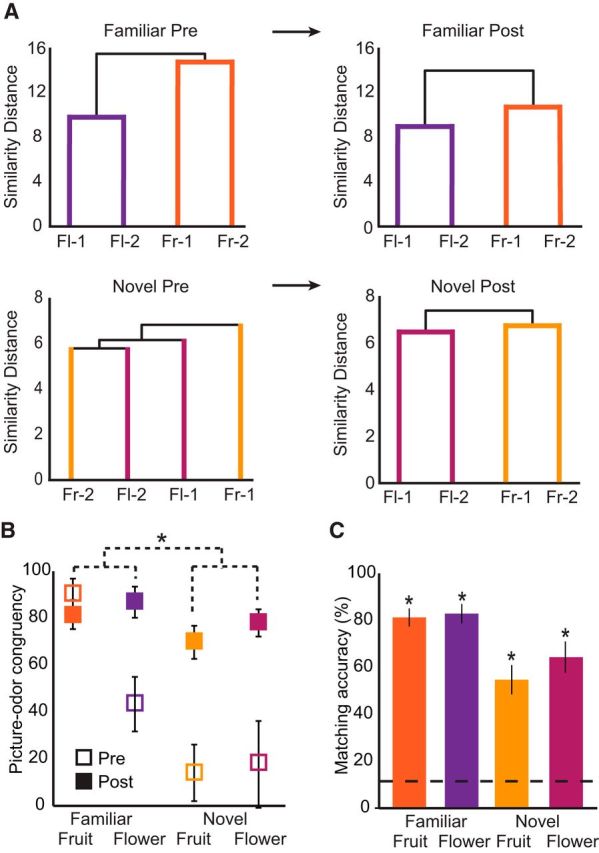

Category-specific fMRI ensemble activity predicts perceptual learning-related behavior

The above findings highlight the key brain regions involved in the formation of category-specific olfactory patterns at the group level. To test whether there was a systematic link between learning-induced regional changes in fMRI pattern coding and subject-specific changes in perceptual ratings, we used two measures of olfactory learning. In one analysis, we examined matching accuracy scores, given that this measure best captures how well subjects learned the correct odor-picture pairs. We found that individual performance on the odor-picture matching test for the unfamiliar pairs was significantly correlated with category decoding accuracy in pOFC (Spearman's ρ = 0.64, p = 0.01) (Fig. 6A), suggesting that subjects with more well-defined category-specific patterns were better able to match the novel odors to their associated visual representations. In contrast, there was no significant correlation with category decoding accuracy in PrC (ρ = −0.14, p = 0.63), PPC (ρ = 0.18, p = 0.54), or INS (ρ = −0.36, p = 0.19).
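The brain-behavior relationship reported here is a rank correlation across subjects. A minimal, dependency-free sketch of Spearman's ρ (Pearson correlation of average ranks, so that ties are handled) is given below; the per-subject decoding and matching values are hypothetical and do not reproduce the reported statistics.

```python
import math

def average_ranks(values):
    """1-based ranks, with tied values sharing their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1          # average rank of the tied block
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks

def pearson(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    vx = sum((a - mx) ** 2 for a in x)
    vy = sum((b - my) ** 2 for b in y)
    return cov / math.sqrt(vx * vy)

def spearman_rho(x, y):
    """Spearman's rho: Pearson correlation computed on the ranks."""
    return pearson(average_ranks(x), average_ranks(y))

# Hypothetical per-subject values (5 subjects).
decoding_acc = [0.52, 0.55, 0.61, 0.63, 0.70]   # postlearning decoding accuracy
matching_acc = [0.60, 0.55, 0.80, 0.75, 0.90]   # odor-picture matching accuracy

rho = spearman_rho(decoding_acc, matching_acc)
print(round(rho, 3))
```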

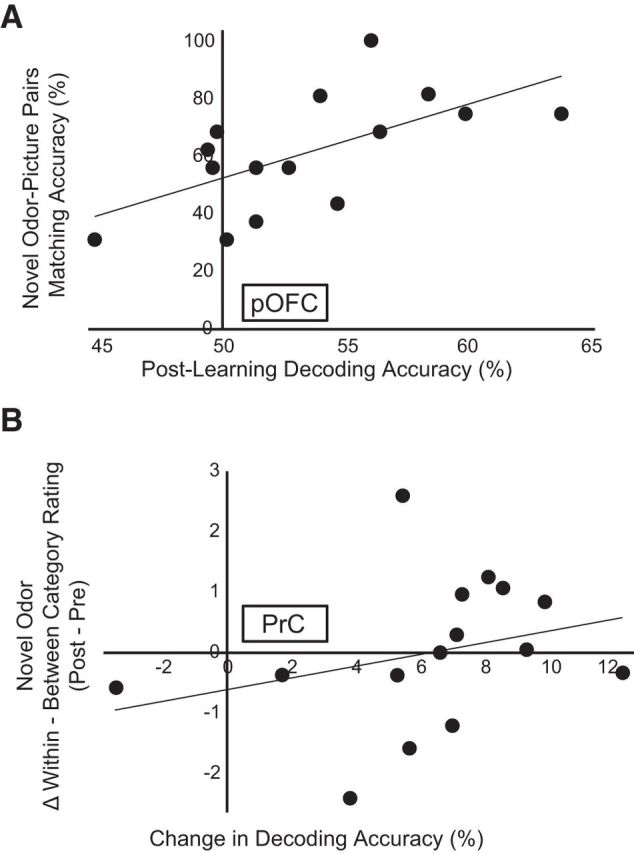

Figure 6.

fMRI magnitude of category decoding accuracy predicts learning-related behavior. A, Across subjects, the magnitude of odor category decoding in pOFC after learning was correlated with matching accuracy for the novel items, such that subjects who exhibited better fMRI odor classification also performed better on the matching test (ρ = 0.64, p = 0.01). B, Subjects showing a greater increase in fMRI decoding accuracy within PrC (from prelearning to postlearning) also showed a greater perceptual change for the novel odors, such that subjects who exhibited stronger improvements in odor classification also showed greater increases in within-category versus between-category similarity ratings (ρ = 0.51, p = 0.03, one-tailed). Each data point represents one subject.

In a second analysis, we examined ratings of odor similarity, given that this measure provides an estimate of categorical perceptual convergence between formerly novel and ambiguous odors. Here we examined category learning more explicitly by regressing pairwise ratings of odor perceptual similarity against the imaging data. To search for changes in category structure across learning, we computed a category index by subtracting the between-category similarity ratings from the within-category similarity ratings. A postlearning increase of within-category ratings and/or a postlearning decrease in between-category ratings would be consistent with an emergent category structure for the novel odors, such that an overall increase in the category index indicates a greater perceptual change. Across subjects, we found that the change in decoding accuracy in PrC from prelearning to postlearning was significantly positively correlated with the change in the category index for novel odors only (Spearman's ρ = 0.51, p = 0.03, one-tailed) (Fig. 6B). This finding indicates that greater categorical discrimination in PrC was associated with greater categorical perceptual differentiation from prelearning to postlearning. On the other hand, a systematic relationship to the category index was not observed within pOFC (ρ = 0.19, p = 0.25), PPC (ρ = −0.13, p = 0.32), or INS (ρ = −0.05, p = 0.43).
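The category index described above can be written out explicitly. In the sketch below, the odor names, the number of novel odors, and the rating values are hypothetical; only the computation (mean within-category similarity minus mean between-category similarity, compared across sessions) follows the text.

```python
def category_index(ratings, category):
    """Mean within-category similarity minus mean between-category similarity.

    ratings:  dict mapping an (odor_a, odor_b) pair to its similarity rating.
    category: dict mapping each odor name to its category label.
    """
    within, between = [], []
    for (a, b), rating in ratings.items():
        if category[a] == category[b]:
            within.append(rating)
        else:
            between.append(rating)
    return sum(within) / len(within) - sum(between) / len(between)

# Hypothetical novel odors and pairwise similarity ratings for one subject.
category = {"F1": "fruit", "F2": "fruit", "L1": "flower", "L2": "flower"}
pairs = [("F1", "F2"), ("L1", "L2"),
         ("F1", "L1"), ("F1", "L2"), ("F2", "L1"), ("F2", "L2")]

pre_ratings = dict(zip(pairs, [5, 5, 5, 5, 5, 5]))    # no category structure
post_ratings = dict(zip(pairs, [8, 7, 3, 4, 3, 2]))   # within up, between down

index_pre = category_index(pre_ratings, category)
index_post = category_index(post_ratings, category)
index_change = index_post - index_pre
print(index_pre, index_post, index_change)
```

The per-subject change in this index is the behavioral quantity that would be correlated with the change in decoding accuracy.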

Discussion

Using an olfactory-visual paired-associates learning task, we induced a behavioral and neural reorganization of previously ambiguous odor representations. Perceptual changes in olfactory categorical ratings (Fig. 3) were observed in parallel with de novo formation of category-specific fMRI ensemble patterns within PrC, pOFC, PPC, and INS (Fig. 4). This remarkable degree of plasticity within the olfactory and limbic systems adds to previous evidence that olfactory perceptual learning can modify both piriform and orbitofrontal activity patterns (Schoenbaum et al., 1999; Sevelinges et al., 2004; Kadohisa and Wilson, 2006; Li et al., 2006; Jones et al., 2007; Roesch et al., 2007; Li et al., 2008; Chapuis et al., 2009), underscoring the idea that odor information content can be dynamically updated in these regions. We also found that category learning-related changes within PPC, pOFC, and INS were specific to the olfactory modality. Olfactory specificity was strongest in PPC, in line with its role in primary olfactory processing. These modality-specific effects may help tease apart the specific unimodal contributions that combine to form a multisensory object category representation.

It is important to emphasize that, during the prelearning session, subjects were in a naive state regarding the novel odors. It was only after the cross-modal paired-associates task that olfactory categorical representations of fMRI ensemble activity emerged, implying the recruitment of two fundamental but complementary computations: enhanced pattern completion for odors newly affiliated with the same category, and enhanced pattern separation between odors newly affiliated with different categories. This framework aligns closely with rodent studies of olfactory recognition and discrimination in piriform cortex (Barnes et al., 2008; Chapuis and Wilson, 2012), and broadly concurs with recent memory-based studies emphasizing pattern completion and separation mechanisms in the medial temporal lobe to encode input similarities and differences across items, facilitating generalization and discrimination among stimuli (LaRocque et al., 2013; Huffman and Stark, 2014; Reagh and Yassa, 2014). Critically, our data extend these principles to the PrC, where pattern convergence and divergence are both engaged to create new categorical structure.

Given that category learning was based on specific odor-picture pairings, it is possible that subjects used arbitrary stimulus-stimulus (S-S) associations to guide their performance on the odor-picture congruency task, rather than relying on conceptual (i.e., category) knowledge. However, our data support the view that subjects were able to abstract from S-S associations to form category representations. First, the similarity distances between previously ambiguous odors converged to form a categorical structure that mirrored the corresponding familiar odors (Fig. 3A). In other words, novel fruit odors became more similar to each other, and novel flower odors became more similar to each other, after the paired-associates task. This change in perceived odor quality suggests that the novel odor representations were transformed in a more knowledge-based, conceptual manner. Second, our SVM was trained on familiar fruit versus flower odors and tested on novel fruit versus flower odors. Therefore, decoding accuracy in the test set could only be above chance if the novel odors came to evoke similar category-specific (as opposed to stimulus-specific) activity patterns. Finally, because we trained and tested the classifier on different odors, the results could not be driven by identity-specific information. Together, these findings suggest that, in addition to simple S-S associations, subjects acquired more abstract, categorical representations.

That the OFC participates in the de novo formation of odor categories aligns closely with its role in olfactory multisensory integration (Gottfried and Dolan, 2003; de Araujo et al., 2003; Small et al., 2004; Osterbauer et al., 2005) and olfactory perceptual learning (Li et al., 2006; Wu et al., 2012). The correlation between odor-picture matching performance and OFC categorical representations can be interpreted in the context of olfactory predictive coding mechanisms. Our earlier work showed that, in an olfactory attention-based search task, odor-specific templates of fMRI ensemble activity emerged in OFC before onset of the actual odor stimulus (Zelano et al., 2011). In the current study, success on the odor-picture matching task requires the formation of a robust representation that can be used to predict its visual associate, and our data suggest that OFC is particularly suited to perform this role (Fig. 6A). Hence, with stronger odor-picture associations, the corresponding ability to categorize those odors will also improve. Although speculative, this account would fit with recent models proposing that the OFC and other prefrontal regions are important for constructing “model-based” representations of environmental features, stimulus-outcome associations, and goal-directed behaviors (Gläscher et al., 2010; Daw et al., 2011; Jones et al., 2012).

Our finding of category representation within the insula provides support for a role of this region in multisensory integration. This is consistent with studies identifying the insula as not only responsive to olfactory stimuli (Cerf-Ducastel and Murphy, 2001; Heining et al., 2003; Plailly et al., 2007), but also important for taste-olfactory integration (de Araujo et al., 2003; Small and Prescott, 2005). Given that half of our odor stimuli were food odors (fruit smells), here the insula may support a mechanism for categorization that differentiates edibility (food vs nonfood) (Simmons et al., 2005). The insula has been well characterized as a center of gustatory processing (Yaxley et al., 1990; Small, 2010; Veldhuizen et al., 2011), and more recent research extends its role to include the convergence of various types of sensory information (de Araujo and Simon, 2009; Kurth et al., 2010). As a key component of the taste-olfactory, or flavor, network, an important capability of the insula would be to form experience-based representations of palatability, or even edibility, all supporting a framework in which this region must code odor identity information to guide adaptive consummatory behavior.

We acknowledge that, as a surrogate marker of neural activity, the fMRI BOLD response is unable to provide a fine-grained mechanistic understanding at the network, cellular, or synaptic level. For example, response patterns observed in pOFC, PrC, and insula could be driven by a common projection from PPC, rather than reflecting unique independent contributions of each region. Whether the fMRI signals reflect excitatory, inhibitory, or modulatory processing, or whether these effects are based on actions at the cell body, synapse, dendrite, or axon, is also unclear (although data suggest that the fMRI signal is largely a reflection of local field potential activity and dendritic processing) (Logothetis, 2002; Goense and Logothetis, 2008). Therefore, we can only interpret our findings in the context of a network of neural structures contributing to olfactory categorical learning. While we may not achieve a complete mechanistic understanding through the current analyses, we provide strong evidence for the specific involvement of PrC, PPC, pOFC, and INS in the network processing of categorical odor information. Moreover, we show olfactory specificity within our findings, and correlations between fMRI ensemble pattern activity and behavioral indices of odor categorization training, which together expand our current knowledge of multimodal associative learning. Future research is necessary to address more mechanistic questions regarding the formation of categorical odor representations as demonstrated here. Such studies may provide a framework for understanding how multimodal associative learning can expand categorical representations of odors, or how the cortical areas identified in the current study work together to create such novel representations.

Intriguingly, the extent to which subjects used visual semantic content to distinguish novel fruity from novel floral odors (from prelearning to postlearning) was associated with stronger pattern decoding accuracy in PrC (Fig. 6B), highlighting a unique role for this region in shaping olfactory information. We suggest that the involvement of PrC in the acquisition of olfactory categorical knowledge centers on the fact that categorical learning relied on visual semantic input. The PrC is a visual associative region that receives dense, confluent input from unimodal and polymodal visual areas (Suzuki and Amaral, 1994; Lavenex et al., 2004; Staresina and Davachi, 2010) and is reciprocally connected with entorhinal cortex, from which information can be transmitted to the hippocampus and piriform cortex. Thus, it is ideally positioned to bind multisensory features into complex representations, and human and animal studies strongly implicate PrC in both memory and perceptual processing, especially for new associations (Murray et al., 1993; Wan et al., 1999; Pihlajamäki et al., 2003; Taylor et al., 2006; Haskins et al., 2008; Holdstock et al., 2009). Insofar as the PrC can use visual associative information to drive perceptual categorization of novel odor items, our findings provide unique insights into its role in supporting configural coding and recognition.

A categorical framework of object perception, encompassing anything from faces and tools to birds of prey or Burgundian white wines, can guide stimulus-based behavior with greater efficiency and flexibility, optimizing generalization to perceptually related stimuli that may not have been previously encountered. Here, we provide one ecological model of how humans may learn about and classify novel odor objects. In particular, we outline a functional framework that could support the integration of sensory information and the formation of novel olfactory object representations in humans. The present results suggest a broader role for the perirhinal cortex, in combination with orbitofrontal, piriform, and insular cortices, in visual-olfactory learning. Future investigations may shed light on the developmental processes behind olfactory categorical representations, including how the specific connections between these regions are modulated throughout learning.

Footnotes

This work was supported by National Science Foundation Graduate Research Fellowship Program Grant DGE-0824162 to L.P.Q. and the National Institute on Deafness and Other Communication Disorders Grant R01DC010014 to J.A.G.

The authors declare no competing financial interests.

References

- Barnes DC, Hofacer RD, Zaman AR, Rennaker RL, Wilson DA. Olfactory perceptual stability and discrimination. Nat Neurosci. 2008;11:1378–1380. doi: 10.1038/nn.2217.

- Bensafi M, Croy I, Phillips N, Rouby C, Sezille C, Gerber J, Small DM, Hummel T. The effect of verbal context on olfactory neural responses. Hum Brain Mapp. 2014;35:810–818. doi: 10.1002/hbm.22215.

- Buckley MJ, Gaffan D. Perirhinal cortex ablation impairs visual object identification. J Neurosci. 1998;18:2268–2275. doi: 10.1523/JNEUROSCI.18-06-02268.1998.

- Burwell RD, Amaral DG. Perirhinal and postrhinal cortices of the rat: interconnectivity and connections with the entorhinal cortex. J Comp Neurol. 1998;391:293–321. doi: 10.1002/(SICI)1096-9861(19980216)391:3<293::AID-CNE2>3.0.CO;2-X.

- Bussey TJ, Saksida LM. Object memory and perception in the medial temporal lobe: an alternative approach. Curr Opin Neurobiol. 2005;15:730–737. doi: 10.1016/j.conb.2005.10.014.

- Carmichael ST, Clugnet MC, Price JL. Central olfactory connections in the macaque monkey. J Comp Neurol. 1994;346:403–434. doi: 10.1002/cne.903460306.

- Cerf-Ducastel B, Murphy C. fMRI activation in response to odorants orally delivered in aqueous solutions. Chem Senses. 2001;26:625–637. doi: 10.1093/chemse/26.6.625.

- Chapuis J, Wilson DA. Bidirectional plasticity of cortical pattern recognition and behavioral sensory acuity. Nat Neurosci. 2012;15:155–161. doi: 10.1038/nn.2966.

- Chapuis J, Garcia S, Messaoudi B, Thevenet M, Ferreira G, Gervais R, Ravel N. The way an odor is experienced during aversive conditioning determines the extent of the network recruited during retrieval: a multisite electrophysiological study in rats. J Neurosci. 2009;29:10287–10298. doi: 10.1523/JNEUROSCI.0505-09.2009.

- Cichy RM, Chen Y, Haynes JD. Encoding the identity and location of objects in human LOC. Neuroimage. 2011;54:2297–2307. doi: 10.1016/j.neuroimage.2010.09.044.

- Clarke A, Tyler LK. Object-specific semantic coding in human perirhinal cortex. J Neurosci. 2014;34:4766–4775. doi: 10.1523/JNEUROSCI.2828-13.2014.

- Daw ND, Gershman SJ, Seymour B, Dayan P, Dolan RJ. Model-based influences on humans' choices and striatal prediction errors. Neuron. 2011;69:1204–1215. doi: 10.1016/j.neuron.2011.02.027.

- de Araujo IE, Simon SA. The gustatory cortex and multisensory integration. Int J Obes (Lond) 2009;33(Suppl 2):S34–S43. doi: 10.1038/ijo.2009.70.

- de Araujo IE, Rolls ET, Kringelbach ML, McGlone F, Phillips N. Taste-olfactory convergence, and the representation of the pleasantness of flavour, in the human brain. Eur J Neurosci. 2003;18:2059–2068. doi: 10.1046/j.1460-9568.2003.02915.x.

- Gauthier I, Tarr MJ. Becoming a “Greeble” expert: exploring mechanisms for face recognition. Vision Res. 1997;37:1673–1682. doi: 10.1016/S0042-6989(96)00286-6.

- Gläscher J, Daw N, Dayan P, O'Doherty JP. States versus rewards: dissociable neural prediction error signals underlying model-based and model-free reinforcement learning. Neuron. 2010;66:585–595. doi: 10.1016/j.neuron.2010.04.016.

- Goense JB, Logothetis NK. Neurophysiology of the BOLD fMRI signal in awake monkeys. Curr Biol. 2008;18:631–640. doi: 10.1016/j.cub.2008.03.054.

- Gottfried JA, Dolan RJ. The nose smells what the eye sees: crossmodal visual facilitation of human olfactory perception. Neuron. 2003;39:375–386. doi: 10.1016/S0896-6273(03)00392-1.

- Gottfried JA, O'Doherty J, Dolan RJ. Appetitive and aversive olfactory learning in humans studied using event-related functional magnetic resonance imaging. J Neurosci. 2002;22:10829–10837. doi: 10.1523/JNEUROSCI.22-24-10829.2002.

- Grill-Spector K, Weiner KS. The functional architecture of the ventral temporal cortex and its role in categorization. Nat Rev Neurosci. 2014;15:536–548. doi: 10.1038/nrn3747.

- Grill-Spector K, Kourtzi Z, Kanwisher N. The lateral occipital complex and its role in object recognition. Vision Res. 2001;41:1409–1422. doi: 10.1016/S0042-6989(01)00073-6.

- Haberly LB, Price JL. The axonal projection patterns of the mitral and tufted cells of the olfactory bulb in the rat. Brain Res. 1977;129:152–157. doi: 10.1016/0006-8993(77)90978-7.

- Haskins AL, Yonelinas AP, Quamme JR, Ranganath C. Perirhinal cortex supports encoding and familiarity-based recognition of novel associations. Neuron. 2008;59:554–560. doi: 10.1016/j.neuron.2008.07.035.

- Haxby JV, Gobbini MI, Furey ML, Ishai A, Schouten JL, Pietrini P. Distributed and overlapping representations of faces and objects in ventral temporal cortex. Science. 2001;293:2425–2430. doi: 10.1126/science.1063736.

- Heining M, Young AW, Ioannou G, Andrew CM, Brammer MJ, Gray JA, Phillips ML. Disgusting smells activate human anterior insula and ventral striatum. Ann N Y Acad Sci. 2003;1000:380–384. doi: 10.1196/annals.1280.035.

- Herz R. The unique interaction between language and olfactory perception and cognition. Trends Exp Psychol Res. 2005;8:91–109.

- Holdstock JS, Hocking J, Notley P, Devlin JT, Price CJ. Integrating visual and tactile information in the perirhinal cortex. Cereb Cortex. 2009;19:2993–3000. doi: 10.1093/cercor/bhp073.

- Howard JD, Plailly J, Grueschow M, Haynes JD, Gottfried JA. Odor quality coding and categorization in human posterior piriform cortex. Nat Neurosci. 2009;12:932–938. doi: 10.1038/nn.2324.

- Huffman DJ, Stark CE. Multivariate pattern analysis of the human medial temporal lobe revealed representationally categorical cortex and representationally agnostic hippocampus. Hippocampus. 2014;24:1394–1403. doi: 10.1002/hipo.22321.

- Illig KR, Haberly LB. Odor-evoked activity is spatially distributed in piriform cortex. J Comp Neurol. 2003;457:361–373. doi: 10.1002/cne.10557.

- Ishai A, Ungerleider LG, Martin A, Schouten JL, Haxby JV. Distributed representation of objects in the human ventral visual pathway. Proc Natl Acad Sci U S A. 1999;96:9379–9384. doi: 10.1073/pnas.96.16.9379.

- Jadauji JB, Djordjevic J, Lundström JN, Pack CC. Modulation of olfactory perception by visual cortex stimulation. J Neurosci. 2012;32:3095–3100. doi: 10.1523/JNEUROSCI.6022-11.2012.

- Jones JL, Esber GR, McDannald MA, Gruber AJ, Hernandez A, Mirenzi A, Schoenbaum G. Orbitofrontal cortex supports behavior and learning using inferred but not cached values. Science. 2012;338:953–956. doi: 10.1126/science.1227489.

- Jones SV, Stanek-Rattiner L, Davis M, Ressler KJ. Differential regional expression of brain-derived neurotrophic factor following olfactory fear learning. Learn Mem. 2007;14:816–820. doi: 10.1101/lm.781507.

- Kadohisa M, Wilson DA. Effect of odor experience on rat piriform cortex plasticity. Chem Senses. 2006;31:E49–E49.

- Kahnt T, Heinzle J, Park SQ, Haynes JD. Decoding the formation of reward predictions across learning. J Neurosci. 2011;31:14624–14630. doi: 10.1523/JNEUROSCI.3412-11.2011.

- Kourtzi Z, Kanwisher N. Representation of perceived object shape by the human lateral occipital complex. Science. 2001;293:1506–1509. doi: 10.1126/science.1061133.

- Kreiman G, Koch C, Fried I. Category-specific visual responses of single neurons in the human medial temporal lobe. Nat Neurosci. 2000;3:946–953. doi: 10.1038/78868.

- Kriegeskorte N, Goebel R, Bandettini P. Information-based functional brain mapping. Proc Natl Acad Sci U S A. 2006;103:3863–3868. doi: 10.1073/pnas.0600244103.

- Kurth F, Zilles K, Fox PT, Laird AR, Eickhoff SB. A link between the systems: functional differentiation and integration within the human insula revealed by meta-analysis. Brain Struct Funct. 2010;214:519–534. doi: 10.1007/s00429-010-0255-z.

- LaRocque KF, Smith ME, Carr VA, Witthoft N, Grill-Spector K, Wagner AD. Global similarity and pattern separation in the human medial temporal lobe predict subsequent memory. J Neurosci. 2013;33:5466–5474. doi: 10.1523/JNEUROSCI.4293-12.2013.

- Lavenex P, Suzuki WA, Amaral DG. Perirhinal and parahippocampal cortices of the macaque monkey: intrinsic projections and interconnections. J Comp Neurol. 2004;472:371–394. doi: 10.1002/cne.20079.

- Lawless H, Engen T. Associations to odors: interference, mnemonics, and verbal labeling. J Exp Psychol Hum Learn. 1977;3:52–59. doi: 10.1037/0278-7393.3.1.52.

- Li W, Luxenberg E, Parrish T, Gottfried JA. Learning to smell the roses: experience-dependent neural plasticity in human piriform and orbitofrontal cortices. Neuron. 2006;52:1097–1108. doi: 10.1016/j.neuron.2006.10.026.

- Li W, Howard JD, Parrish TB, Gottfried JA. Aversive learning enhances perceptual and cortical discrimination of indiscriminable odor cues. Science. 2008;319:1842–1845. doi: 10.1126/science.1152837.

- Litman L, Awipi T, Davachi L. Category-specificity in the human medial temporal lobe cortex. Hippocampus. 2009;19:308–319. doi: 10.1002/hipo.20515.

- Logothetis NK. The neural basis of the blood-oxygen-level-dependent functional magnetic resonance imaging signal. Philos Trans R Soc Lond B Biol Sci. 2002;357:1003–1037. doi: 10.1098/rstb.2002.1114.

- Mai J, Assheuer J, Paxinos G. Atlas of the human brain. Ed 2. London: Elsevier Academic; 2004.

- Malach R, Reppas JB, Benson RR, Kwong KK, Jiang H, Kennedy WA, Ledden PJ, Brady TJ, Rosen BR, Tootell RB. Object-related activity revealed by functional magnetic resonance imaging in human occipital cortex. Proc Natl Acad Sci U S A. 1995;92:8135–8139. doi: 10.1073/pnas.92.18.8135. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Murray EA, Richmond BJ. Role of perirhinal cortex in object perception, memory, and associations. Curr Opin Neurobiol. 2001;11:188–193. doi: 10.1016/S0959-4388(00)00195-1. [DOI] [PubMed] [Google Scholar]

- Murray EA, Gaffan D, Mishkin M. Neural substrates of visual stimulus-stimulus association in rhesus monkeys. J Neurosci. 1993;13:4549–4561. doi: 10.1523/JNEUROSCI.13-10-04549.1993. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Olofsson JK, Hurley RS, Bowman NE, Bao X, Mesulam MM, Gottfried JA. A designated odor-language integration system in the human brain. J Neurosci. 2014;34:14864–14873. doi: 10.1523/JNEUROSCI.2247-14.2014. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Osterbauer RA, Matthews PM, Jenkinson M, Beckmann CF, Hansen PC, Calvert GA. Color of scents: chromatic stimuli modulate odor responses in the human brain. J Neurophysiol. 2005;93:3434–3441. doi: 10.1152/jn.00555.2004. [DOI] [PubMed] [Google Scholar]

- Pihlajamäki M, Tanila H, Hänninen T, Könönen M, Mikkonen M, Jalkanen V, Partanen K, Aronen HJ, Soininen H. Encoding of novel picture pairs activates the perirhinal cortex: an fMRI study. Hippocampus. 2003;13:67–80. doi: 10.1002/hipo.10049. [DOI] [PubMed] [Google Scholar]

- Pitkanen A, Pikkarainen M, Nurminen N, Ylinen A. Reciprocal connections between the amygdala and the hippocampal formation, perirhinal cortex, and postrhinal cortex in rat: a review. Ann N Y Acad Sci. 2000;911:369–391. doi: 10.1111/j.1749-6632.2000.tb06738.x. [DOI] [PubMed] [Google Scholar]

- Plailly J, Radnovich AJ, Sabri M, Royet JP, Kareken DA. Involvement of the left anterior insula and frontopolar gyrus in odor discrimination. Hum Brain Mapp. 2007;28:363–372. doi: 10.1002/hbm.20290. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Ranganath C, Ritchey M. Two cortical systems for memory-guided behaviour. Nat Rev Neurosci. 2012;13:713–726. doi: 10.1038/nrn3338.

- Reagh ZM, Yassa MA. Object and spatial mnemonic interference differentially engage lateral and medial entorhinal cortex in humans. Proc Natl Acad Sci U S A. 2014;111:E4264–E4273. doi: 10.1073/pnas.1411250111.

- Rennaker RL, Chen CF, Ruyle AM, Sloan AM, Wilson DA. Spatial and temporal distribution of odorant-evoked activity in the piriform cortex. J Neurosci. 2007;27:1534–1542. doi: 10.1523/JNEUROSCI.4072-06.2007.

- Roesch MR, Stalnaker TA, Schoenbaum G. Associative encoding in anterior piriform cortex versus orbitofrontal cortex during odor discrimination and reversal learning. Cereb Cortex. 2007;17:643–652. doi: 10.1093/cercor/bhk009.

- Schoenbaum G, Chiba AA, Gallagher M. Neural encoding in orbitofrontal cortex and basolateral amygdala during olfactory discrimination learning. J Neurosci. 1999;19:1876–1884. doi: 10.1523/JNEUROSCI.19-05-01876.1999.

- Seger CA, Miller EK. Category learning in the brain. Annu Rev Neurosci. 2010;33:203–219. doi: 10.1146/annurev.neuro.051508.135546.

- Sevelinges Y, Gervais R, Messaoudi B, Granjon L, Mouly AM. Olfactory fear conditioning induces field potential potentiation in rat olfactory cortex and amygdala. Learn Mem. 2004;11:761–769. doi: 10.1101/lm.83604.

- Sheinberg DL, Logothetis NK. The role of temporal cortical areas in perceptual organization. Proc Natl Acad Sci U S A. 1997;94:3408–3413. doi: 10.1073/pnas.94.7.3408.

- Shipley MT, Ennis M. Functional organization of olfactory system. J Neurobiol. 1996;30:123–176. doi: 10.1002/(SICI)1097-4695(199605)30:1<123::AID-NEU11>3.0.CO;2-N.

- Sigala N, Gabbiani F, Logothetis NK. Visual categorization and object representation in monkeys and humans. J Cogn Neurosci. 2002;14:187–198. doi: 10.1162/089892902317236830.

- Simmons WK, Martin A, Barsalou LW. Pictures of appetizing foods activate gustatory cortices for taste and reward. Cereb Cortex. 2005;15:1602–1608. doi: 10.1093/cercor/bhi038.

- Small DM. Taste representation in the human insula. Brain Struct Funct. 2010;214:551–561. doi: 10.1007/s00429-010-0266-9.

- Small DM, Prescott J. Odor/taste integration and the perception of flavor. Exp Brain Res. 2005;166:345–357. doi: 10.1007/s00221-005-2376-9.

- Small DM, Voss J, Mak YE, Simmons KB, Parrish T, Gitelman D. Experience-dependent neural integration of taste and smell in the human brain. J Neurophysiol. 2004;92:1892–1903. doi: 10.1152/jn.00050.2004.

- Staresina BP, Davachi L. Object unitization and associative memory formation are supported by distinct brain regions. J Neurosci. 2010;30:9890–9897. doi: 10.1523/JNEUROSCI.0826-10.2010.

- Stettler DD, Axel R. Representations of odor in the piriform cortex. Neuron. 2009;63:854–864. doi: 10.1016/j.neuron.2009.09.005.

- Suzuki WA, Amaral DG. Perirhinal and parahippocampal cortices of the macaque monkey: cortical afferents. J Comp Neurol. 1994;350:497–533. doi: 10.1002/cne.903500402.

- Taylor KI, Moss HE, Stamatakis EA, Tyler LK. Binding crossmodal object features in perirhinal cortex. Proc Natl Acad Sci U S A. 2006;103:8239–8244. doi: 10.1073/pnas.0509704103.

- Veldhuizen MG, Albrecht J, Zelano C, Boesveldt S, Breslin P, Lundström JN. Identification of human gustatory cortex by activation likelihood estimation. Hum Brain Mapp. 2011;32:2256–2266. doi: 10.1002/hbm.21188.

- Wan H, Aggleton JP, Brown MW. Different contributions of the hippocampus and perirhinal cortex to recognition memory. J Neurosci. 1999;19:1142–1148. doi: 10.1523/JNEUROSCI.19-03-01142.1999.

- Wu KN, Tan BK, Howard JD, Conley DB, Gottfried JA. Olfactory input is critical for sustaining odor quality codes in human orbitofrontal cortex. Nat Neurosci. 2012;15:1313–1319. doi: 10.1038/nn.3186.

- Yaxley S, Rolls ET, Sienkiewicz ZJ. Gustatory responses of single neurons in the insula of the macaque monkey. J Neurophysiol. 1990;63:689–700. doi: 10.1152/jn.1990.63.4.689.

- Zelano C, Mohanty A, Gottfried JA. Olfactory predictive codes and stimulus templates in piriform cortex. Neuron. 2011;72:178–187. doi: 10.1016/j.neuron.2011.08.010.