Significance

A fundamental tenet of science is that the properties of a material are intimately linked to the nature of the constituent components. Although there are powerful methods to predict such properties for given components, a key challenge for materials design is the inverse process: identifying the required components and their structural configuration for given target properties. This paper presents a new approach to this challenge. A formalism is introduced that generates algorithms for materials design both under equilibrium and under nonequilibrium conditions and operates without the need for user input beyond a design goal. This formalism is broadly applicable, fast, and robust, and it provides a powerful tool for materials optimization as well as discovery.

Keywords: materials design, directed self-assembly, optimization, inverse design

Abstract

Despite the success statistical physics has enjoyed at predicting the properties of materials for given parameters, the inverse problem, identifying which material parameters produce given, desired properties, is only beginning to be addressed. Recently, several methods have emerged across disciplines that draw upon optimization and simulation to create computer programs that tailor material responses to specified behaviors. However, so far the methods developed either involve black-box techniques, in which the optimizer operates without explicit knowledge of the material’s configuration space, or require carefully tuned algorithms with applicability limited to a narrow subclass of materials. Here we introduce a formalism that can generate optimizers automatically by extending statistical mechanics into the realm of design. The strength of this approach lies in its capability to transform statistical models that describe materials into optimizers to tailor them. By comparing against standard black-box optimization methods, we demonstrate how optimizers generated by this formalism can be faster and more effective, while remaining straightforward to implement. The scope of our approach includes possibilities for solving a variety of complex optimization and design problems concerning materials both in and out of equilibrium.

Computer programs that can design material properties have led to exciting, new directions for materials science (1–3). Computational methods have been used to predict crystal (4) and protein (5, 6) structures, yielding the toughest crystals known to mankind (4) and de novo protein configurations unseen in nature (5). Applied to polymers, Monte Carlo methods (7–9) and evolutionary algorithms (10, 11) have paved the way toward optimizing directed self-assembly. Similar methods have been used to identify the crystal structures of patchy, colloidal particles (12). For far-from-equilibrium systems like jammed, metastable aggregates of particles (3), simulation-based optimization has been successfully used to design bulk properties like stiffness (13) and packing density (14) by way of tuning complicated microscale features like particle shape.

However, despite these successes, most of the existing methods work only for narrowly defined classes of materials: Optimization techniques that prove successful at designing one class of materials may struggle or fail on other systems. Thus, designing new materials can require a large investment in trial and error at the level of the algorithm itself, even if, for given parameters, the material’s behavior can be simulated easily.

In black-box approaches, the algorithm tunes the material by adjusting control parameters without considering the likelihood of finding the material in microscale configurations. Instead, the optimizer operates in some auxiliary space, defined outside the physical model, and remains ignorant of the statistics in the physical configuration space. On the other hand, for the overwhelming majority of materials, an accurate description of macroscale behavior comes about by explicitly considering the probability of finding the system in certain microscopic configurations. Several materials optimization approaches exist that take this statistical nature into account, examples include optimizers that design spin configurations (15, 16), patchy colloidal particles (17), and self-assembly driven by short-ranged interactions (18). These approaches build heavily upon the specific model defining the material. As a consequence, it is difficult to extend them beyond the material class they are designed for. Evidently, microscale configurations present key statistical information about a material, which is completely ignored by black-box approaches, yet there is no formalism that generically incorporates this information into materials design.

The question we ask is whether microstate information not only can be used to enhance an optimizer’s speed and range of applicability, but also can become the cornerstone of an approach that automatically transforms a design goal and a statistical model into an optimizer. One can then construct optimizers that can work on material classes that are as broad as those described by statistical mechanics, without the need for ad hoc modification.

Here we take some first steps toward such a framework. We present a formalism that can be used to transform the capacity to predict material behavior into an optimizer that tunes it. Furthermore we find that our formalism often solves optimization problems faster and more reliably than approaches built around black-box methods.

Deriving the Optimization Equations

Our approach starts by assuming a given model that predicts the probability of finding a material in some configuration, x. The model depends on the adjustable parameters and by tuning , a user can impact the emergent, bulk properties, averaged over configuration space. Thus, design proceeds by tweaking to promote a desired, user-specified response.

We work toward this goal, starting from the heuristic equation

| [1] |

where the angle brackets denote an average over configurations weighted by ρ, the overdot denotes a derivative with respect to an artificial time that indexes the optimization steps, and is a function that quantifies how well configuration x represents the user-specified goal. In this way, Eq. 1 attempts to increase the probability of finding the system in states with better than average values of : If a configuration x has a value of greater than the average, then the probability of finding the system near x increases, for average it does not change, and for worse than average it decreases.

As written, Eq. 1 assumes that can be independently set for every possible point in the configuration space, despite the fact that is constrained by the physics behind the material of interest. In actuality, can offer only a limited flexibility through the parameters . Thus, for many problems it will not be possible to exactly satisfy Eq. 1, although it is possible to make a best approximation to Eq. 1, given a particular physical distribution. We achieve this by setting the changes to such that they minimize the average error between the updates implied by Eq. 1 and the actual changes to . Explicitly, we rewrite Eq. 1 as and select the that minimize the average value of the squared error, . The equations of motion for that minimize ε at a given instant in time are found by setting the partial derivatives with respect to equal to zero (Supporting Information):

| [2] |

Eq. 2 is now a closed expression for that depends only on expectation values. Thus, it can function as an algorithm: one can draw samples from , use the samples to evaluate the right-hand side of Eq. 2, and then integrate the equations of motion to generate new, improved parameter settings.

Eq. 2, and its motivating Eq. 1, overlap with a surprising number of different fields. For example, the matrix elements in Eq. 2 resemble kinetic coefficients, suggesting the interpretation that generates a thermodynamic force that pushes the system to solve the design goal (19). Alternatively, Eq. 2 appears in the optimization and mathematics literature as natural gradient descent: It bears the interpretation of a gradient descent method that takes the steepest step such that the change in entropy stays bounded (20–22). Indeed, the matrix in front of Eq. 2 is the Fisher information metric and is constraining the driving force to move in directions of small entropy change (21). This interpretation is also associated with state-of-the-art optimizers like the covariance matrix adaptation evolution strategy (CMA-ES) (22–24); however, here the design parameters are treated as random variables drawn from a Gaussian distribution irrespective of the design problem, and a version of Eq. 2 is used to update the mean and covariance of this auxiliary distribution. This is in contrast to our proposition that should be treated as deterministic variables that evolve according to Eq. 2 with randomness entering only at the level of material configurations. If the task considered is changed to finding the best-fit model parameters to a given set of data, then Eq. 2 represents the direction in parameter space that decreases the fit error most efficiently per unit of behavioral change in the model (25). In this scenario, one can consider regularizing Eq. 2 to produce the Levenburg–Marquart algorithm modified to account for the geometric aspects of the optimization. Finally, one can note that the motivating equation, Eq. 1, is the replicator equation from game theory and evolutionary biology (26–28). Thus, one could also interpret the dynamics as a process of reproduction and competition in a continuous parameter space (26, 27), projected onto .

Whatever the picture, Eq. 2 has a number of powerful properties. In particular, Eq. 2 is invariant to any invertible reparameterization of , including rotations, dilations, and translations in parameter space. If the reward function, , is chosen correctly, the velocity flow is also invariant to rank preserving changes in the design goal (22). Thus, there will be no difference in performance between two design problems that differ by coordinate choice over and/or a rank preserving change in the design goal [e.g., vs. ], provided the initial values of are the same. These invariances also provide stability to the algorithm: by making the search algorithm invariant to both the goal function magnitude and the parameterization, the effect of sampling errors gets bounded in a parameterization invariant way. Thus, errors from sampling parameters in Eq. 2 will not cascade, even if the matrix in Eq. 2 becomes ill-conditioned. Altogether, these features greatly simplify the optimization task because, now, the designer is free from worrying about trivial choices surrounding and the goal function. Details on these points can be found in Supporting Information.

For the task of optimizing materials, we stress one further property: By using Eq. 2 optimization takes place in configuration space, rather than in an auxiliary space introduced to define an optimizer. As we will show, this gives a unique advantage in applying Eq. 2 to materials design: More information is used by the optimizer when updating parameters, without incurring an increase in computational cost. Perhaps best of all, this optimizer is constructed by straightforwardly applying the formalism encoded in Eq. 2 to the relevant statistical model. For example, when is given by the canonical ensemble, , the optimizer follows immediately from Eq. 2 as . Ultimately, Eq. 2 makes the transition from describing a material to designing a material in just one step.

Comparison Against Black-Box Optimizers

To demonstrate these strengths, we test our method against standard approaches that feed simulation parameters into a model by way of a black-box optimizer. We use adaptive simulated annealing (ASA) (29) and the CMA-ES (24). In each test, we allow the optimizers a fixed budget of material simulations, and each simulation requires a fixed amount of computational power. Thus, in our comparisons, computational cost and number of simulations are equivalent and an efficient optimizer is one that solves a design problem simulating as few candidate materials as possible. Implementation details are in Supporting Information.

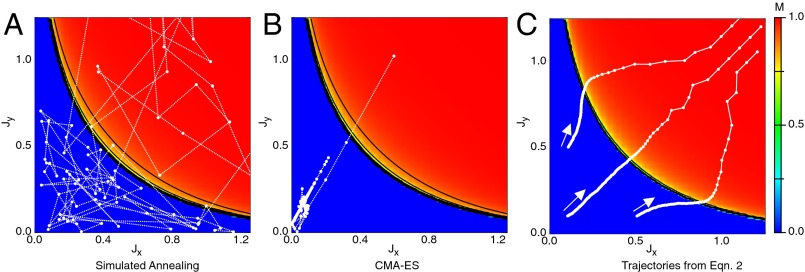

As a first example, we task these two black-box algorithms and our approach with designing a square-lattice Ising model to maximize the magnitude of its magnetization. To do so, each method is allowed to vary the coupling constants that define the energy of spin alignments in the horizontal () and vertical () directions. To implement the black-box methods, we allow each optimizer to guess a set of coupling constants and evaluate the quality of that guess by computing the average magnetization. We find that both ASA and the CMA-ES struggle when searching entirely in the zero magnetization phase. This is an obvious consequence of the fact that the optimizer sees no variation in the quality for each parameter setting. Consequently, it receives no guidance about how to update its parameter guesses and can at best walk randomly until finding the phase boundary (Fig. 1 A and B).

Fig. 1.

Phase transitions and flat landscapes. If a materials design problem features a phase transition as part of the search space, black-box optimizers can struggle or fail due to inefficient use of simulation data. For example, if optimizing the magnetization, M, of a 2D Ising model by changing the coupling constants along the x and y directions ( and , respectively), black-box methods like simulated annealing (A) and the CMA-ES (B) walk randomly if initialized in the zero magnetization state. Our method (C) interprets fluctuations in solution quality against model components as encoded by Eq. 2 and thus can navigate to the magnetized state regardless of initialization.

By contrast, the updates encoded in Eq. 2 navigate a path that links one phase to the other: Fig. 1C shows the flow field generated by Eq. 2 upon taking to be the canonical ensemble with the Ising Hamiltonian, , where denotes summing over nearest neighbors along the x direction, likewise for , and denote the spin variables. Given this statistical model, the control parameters for optimization become and . Finally, the quality function is set to reward states with higher magnetizations (Supporting Information). If, for shorthand, we call the individual energy components and , then Eq. 2 gives the velocity field , where . A similar equation holds for but with the variables x and y appropriately interchanged.

In this form, it is clear that our method will optimize as long as there is covariation between the quality function, f, and the energy components, . Because magnetization and energy are correlated, even if the average magnetization is zero, Eq. 2 can purposefully optimize even when operating in regions of parameter space where the black-box methods fail. The difference between these approaches lies in the fact that black-box methods are trying to solve a problem defined over the space of , whereas our approach is tasked to solve a problem defined on the space of configurations, x, via Eq. 1. The CMA-ES generates multiple guesses of parameters from a Gaussian distributed over all possible λ and ASA samples by assigning an energy value to each choice of λ. These methods associate only one piece of information, the quality function, to full ensembles of configurations defined by each choice of parameters. This is in contrast to our approach that tries to solve the problem of reproducing configurations, x, that are better than average. Consequently, Eq. 2 is able to use information about how fluctuations in configurations correspond to fluctuations in quality because it has been built to exploit the extra fact that the simulation data were generated from a known distribution, ρ.

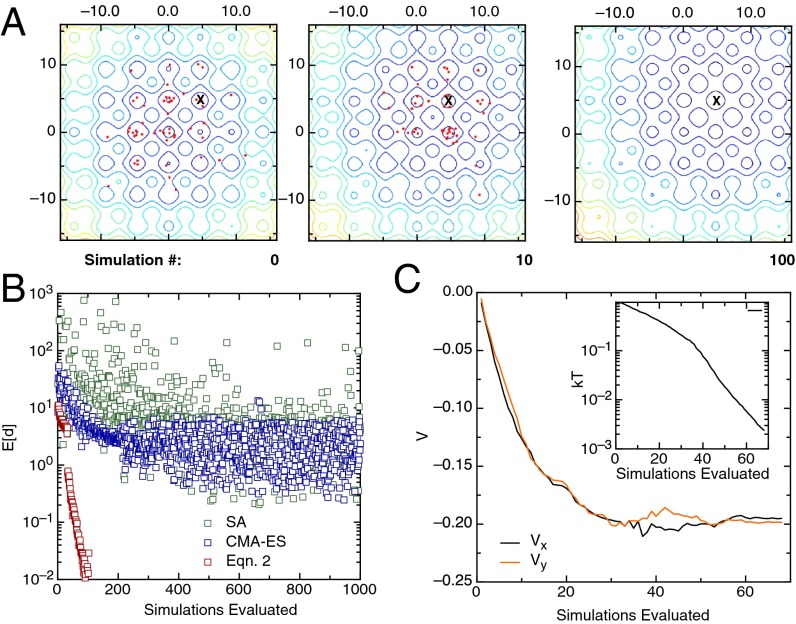

As a second example, we consider a thermalized particle trapped on a 2D substrate, defined on the plane and at thermal equilibrium. The substrate applies a potential to the particle, making some positions more likely than others. We task the optimizer with trapping the particle in a specific potential well. To do so, we give the optimizer the freedom to tune the interaction strength with the substrate, and we give it control over a linear electric field to drive the particle. To make the problem interesting, we use a rough substrate potential: . With the field included, the total Hamiltonian becomes , where and represent the field strength in the two coordinate directions. To simplify the form of Eq. 2, we represent these effects to the optimizer by defining and absorb a factor of into the field coupling constants so that . In discussing the optimizer’s performance, however, we will convert the results to the original, physical variables , , and .

The solution to this problem requires the design engine to make the target well the global minimum, and cool the system to zero temperature to trap the particle. For definiteness, the target well is the point and we initialize the algorithm with the substrate at and the field parameters set to zero.

In Fig. 2A we plot the energy landscapes generated by the optimizer, as well as the points sampled during each iteration. Indeed, the optimizer quickly learns to tilt the landscape, correctly making the target well the global minimum, and then cools the system (Fig. 2B), trapping the particle in the well. By comparing the performance against black-box approaches (Fig. 2C), our approach is both faster and more reliable: It correctly tilts the well after only 35 iterations, whereas it takes simulations for the CMA-ES and ASA. Further, neither of the black-box methods learns to completely cool the system in the allotted 1,000 simulated ensembles.

Fig. 2.

Trapping a particle in a well. Here we treat the problem of a thermalized particle, trapped on a sinusoidal energy landscape superimposed on a quadratic background with a minima at the origin, depicted by the energy contours in A. The optimizer is given control over the system temperature plus a linear field potential in the two coordinates and . Its task is to trap the particle in a specific well located off center at in the plane, marked by a cross in A. The sampled particle locations, given each choice of parameters generated, after evaluating 0, 10, and 100 iterations of Eq. 2 are plotted as red points. The optimizer uses the field to first tilt the potential and make the target well the global minimum, and then it cools the system. In tasking the same problem to black-box optimizers, we find that both ASA and the CMA-ES are able to tilt the well, but never learn to cool the system (B). Comparing how the temperature and field parameters change at each iteration (C) against in B shows that the exponential convergence occurs in concert with an exponential decrease in system temperature. We note that the field parameters and track one another, reflecting the fact that the optimizer is invariant to rotations in the configuration space: The optimizer moves the field along the direction associated with the greatest improvement in solution quality and is insensitive to the fact that the problem was parameterized in the arbitrary coordinates and .

We speculate that this shortcoming is again a consequence of indirect problem representation. We note that when , the particle is almost evenly distributed between both the central well and the four nearest neighbors that surround it. Thus, for black-box methods, noise in the average particle position can play an overpowering role during parameter updates. By contrast, Eq. 2 considers covariances at the finer scale of configuration space. As with our prior example, this extra information makes our method appreciably more robust to flat regions in the search landscape and in this case yields an essentially exponential convergence to the optimized state (Fig. 2B).

Designing a Polymer to Fold into an Octahedron

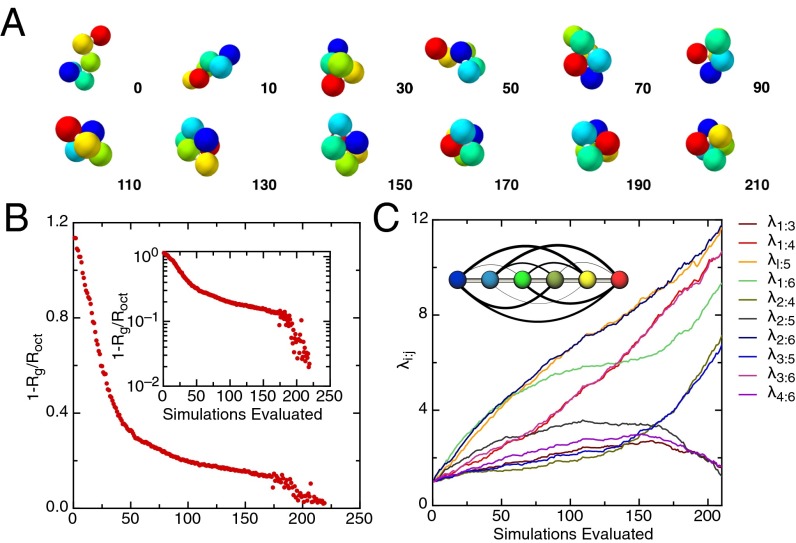

The success of the two simple examples in the previous section invites more complicated design problems. As an example, we consider a basic model for a polymer: a string of hard, colored balls interconnected by rigid rods. The balls are weakly attractive, interaction strengths determined by the colors. For example, red and blue may be attracted more strongly than blue and green.

In principle, by tuning the color interactions, it should be possible to fold the chain into specific, desired shapes. To make a concrete task, we take a chain of six particles and create an optimizer to fold them into an octahedron, defined by minimizing the sum of the distances to the center of mass. Note that the search space is appreciably larger than in the prior examples (dimension 10), and that simply setting all of the interaction strengths to large values will not produce the optimal solution. By choosing the interaction appropriately, the same energy can be given to the octahedral and polytetrahedral configurations for identical coupling constants. Entropic arguments imply that the polytetrahedron will dominate the chain configurations unless the optimizer carefully adjusts the coupling constants to take on unique values (30, 31).

Fig. 3A shows a typical chain configuration from each generation, and Fig. 3B shows the median sum of distances to the center of mass, normalized relative to that of a perfect octahedron. Initially, the coupling constants are set to , and random chain configurations are typical. However, as the optimizer drives the interaction energies to larger values, the shapes become compact and structured. Around 200 generations, virtually every shape generated is octahedral (Fig. 3A).

Fig. 3.

Folding an octahedron out of a linear chain. (A) Typical chain configurations that result after iterating Eq. 2. Numbers indicate the iteration number. Early on, the polymer configurations are dominated by thermal energy and random, yet as the interaction strengths increase and differentiate, more structured objects appear, and ultimately only the octahedron configuration exists (iterations 190–210). (B) Plotting the median percentage of deviation between the polymer’s radius of gyration and the radius of gyration for an octahedron at each iteration shows that the optimizer again produces a monotonic decrease at each step, with an effectively exponential convergence in the last 30 iterations. By the last 10 iterations, the median deviation from a perfect octahedron is roughly 1%. (C) In plotting the coupling constants against iteration number, we find that the optimizer adjusts coupling constants in groups. It is clear that the active coupling constants form the contact network for an octahedron (C, Inset).

By plotting the values of the interaction strengths against iteration number, we find the optimizer’s solution is simple, logical, and arguably optimal. Early on, the optimizer attempts to meet the design goal by simply increasing the coupling strengths to make more compact objects (Fig. 3A). However, as the coupling constants are undifferentiated, the results are predominately polytetrahedral geometries. To compensate, the optimizer deactivates three coupling constants around 100 generations and sends the remainder to infinity (Fig. 3C). The logic behind this maneuver becomes clear by plotting the interactions as a network: The active interactions plus the polymer backbone form the contact graph of an octahedron. This strategy, transforming the contact matrix to an interaction matrix, has been identified as an approach to programing, by hand, the optimal interaction parameters for self-assembly (18). In fact, the specific problem of creating a self-assembling octahedron has been solved using a virtually identical motif (32). Evidently our optimizer can reproduce a well thought-out approach to self-assembly, and it does so automatically, requiring only a model and a design goal.

Optimization of an Out-of-Equilibrium System

Because Eq. 2 holds for any parameterized probability distribution function, it can be used to create optimization schemes beyond the canonical ensemble in the prior examples. The only essential ingredients are a model that predicts the probability of microstates, an engine that samples configurations from said model, and a design goal. As simple extensions, chemical potentials or constraints on pressure could be included as tunable parameters (33). A new theoretical concept, termed “digital alchemy,” extends statistical mechanics to account for microscale geometric parameters, such as the particle shape in a colloidal-nanoparticle assembly (34). Thus, by coupling this approach with our optimization formalism, particle geometry can be tuned to produce optimized bulk responses. One can also note that the range of parameters to design is at the user’s discretion: Eq. 1 can be used to rederive Eq. 2, assuming that some of the model parameters are not controllable by taking them to be time independent. Indeed, for the particle in a well problem, the wavelength of corrugation was taken as a fixed parameter and the resulting optimizer was quite effective. One can also consider optimization for global quality functions that exist over multiple a range of parameters (35). For instance, our approach can optimize the density of a crystal lattice over a range of pressures and system volumes by defining , where is a uniform distribution for V and P over a range of consideration and is the appropriate distribution for microstates given a fixed volume and pressure. Eq. 2 can then be applied to optimize in this extended parameter space to find choices of λ that work well over a range of possible densities and pressures. Abstracting the concept, statistical models could be based on calculations like self-consistent field theory or experiments with the code directly measuring correlation functions in the laboratory and tuning physical parameters.

Moreover, nonequilibrium processes, provided they have a statistical description, are fair game. For example, if one simulates diffusion by adding white noise to a mean drift, then the paths are distributed by a product of Gaussian distributions conditioned on the prior steps. Clearly, the paths are statistical objects, with diffusion and drift as the distribution parameters, λ. Thus, one can build an optimizer that tunes these control parameters using Eq. 2, even if they are time-dependent functions.

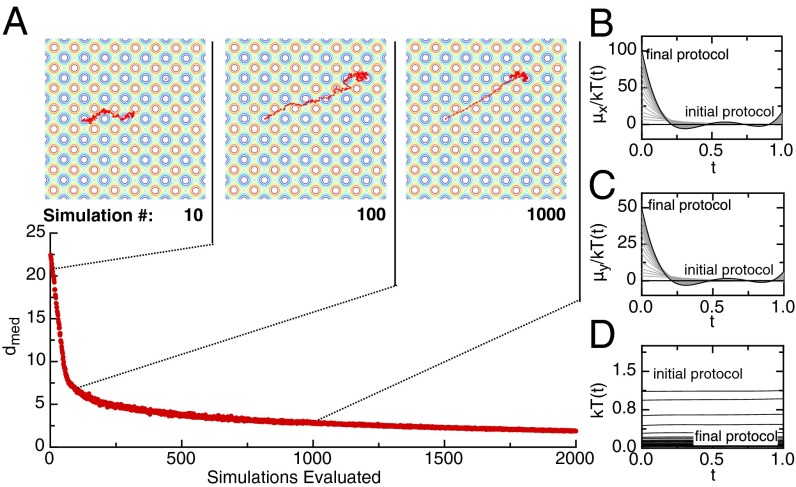

As proof of this point, we return to the problem of a particle trapped on a substrate, but now simulate the particle dynamics. The applied field and the system temperature are treated as functions of time and the optimizer is tasked with moving the particle from one well to another in a given interval.

Fig. 4A shows the median distance to the target well after executing the optimizer’s processing protocol in each generation. In the first 60 generations, the optimizer learns to transport the particle from its starting location to the target well via a large, deterministic driving force. It then spends the remaining iterations monotonically decreasing the system temperature while developing a trapping protocol with the field. After 2,000 iterations, the optimizer seems to traps the particle by oscillating the driving force, changing its direction before the particle can transition to another well. In effect, the optimizer learns to drag the particle to the target and trap it in place, using both the temperature and the field. By 2,000 iterations, of the points in the path fall within the target well. When left to run longer, the optimizer continues to improve the quality of solutions, but at the cost of becoming unphysical, the optimizer generates extremely large fields that move the particle to the well faster and faster. To optimize beyond the proof of concept demonstrated here, one may have to restrict the range of parameters allowed to the optimizer or account for arbitrary velocities by increasing the number of steps in the walk.

Fig. 4.

Optimizing a nonequilibrium process. By using Eq. 2 we can tune processing protocols for a Brownian particle walking on a rough energy landscape controlled by a time-dependent temperature, kT, and linear mean drift components, and . The optimizer has been tasked to adjust to place and trap the random walker in a well located at the x–y coordinates (A) Ensemble median distance to the objective well after executing a processing protocol at each iteration of the algorithm. Callouts show representative paths taken by the particle, and contours in the callouts show lines of constant energy over the substrate potential. The large image represents the protocol executed after 2,000 iterations. Every protocol attempted at 10-iteration intervals is illustrated in B–D, where the temperature, (D) as well as the applied fields in the x direction and y direction normalized by temperature, (B) and (C), are plotted against time. At the particle is released from its initial position at and allowed to wander and the processing protocol is executed until the simulation is stopped at . At each iteration, the optimizer works to monotonically decrease the temperature, while arriving at a field protocol that quickly drives the particle to the target well and then oscillates the fields to trap it there.

Optimization of Directed Self-Assembly

As a final demonstration of our approach we consider the real-world problem of designing the directed self-assembly of block copolymers on a chemically patterned substrate. This represents a task at the forefront of both materials design and sublithographic fabrication (1, 7–11, 36, 37). The goal is to lithographically pattern a substrate with a small number of chemical features such that these features promote block copolymers to self-assemble into a desired target morphology. Here, we consider a task that has been identified as a promising candidate for the manufacture of next-generation semiconductor devices and high-density storage media: self-assembly of AB-diblock copolymers into an ordered striped or lamellar morphology (1, 9–11).

We use a theoretically informed coarse-grain model for block copolymer simulations (36). Polymer chains are simulated as beads that are linked together. The system is considered at fixed temperature and volume, and thus the probability of finding a given microstate configuration is defined in terms of an energy given by three parts. The first is a linear spring bond energy between beads in each polymer chain. The second is a nonbonded interaction energy that characterizes repulsion from unlike species and the material compressibility. Details are in Supporting Information (36). Both the model and the parameters in it represent a polystyrene-block-poly(methyl methacrylate) (PS-b-PMMA) diblock copolymer with a number-averaged molecular weight of 22,000-b-22,000 and a stripe period of 28 nm.

The final contribution to the energy is the substrate interaction. The substrate consists of two regions: the patterned stripes of width w and the background. Both are defined to have short-ranged effects on the polymer beads and assume the form , where defines the decay length of the interaction, z is the distance from the plane of the substrate, and is the interaction strength between the substrate and a bead of type α. Thus, if the particle is over the guiding stripe and of type A, . If the particle is of type A and over the background region, . Following ref. 11, we simplify our model by assuming the interactions are antisymmetric: and . The design problem is to adjust the width of the strips w and the two energy parameters and so the target stripe phase replicates itself m times between two guiding stripes spaced by the polymer period multiplied by m.

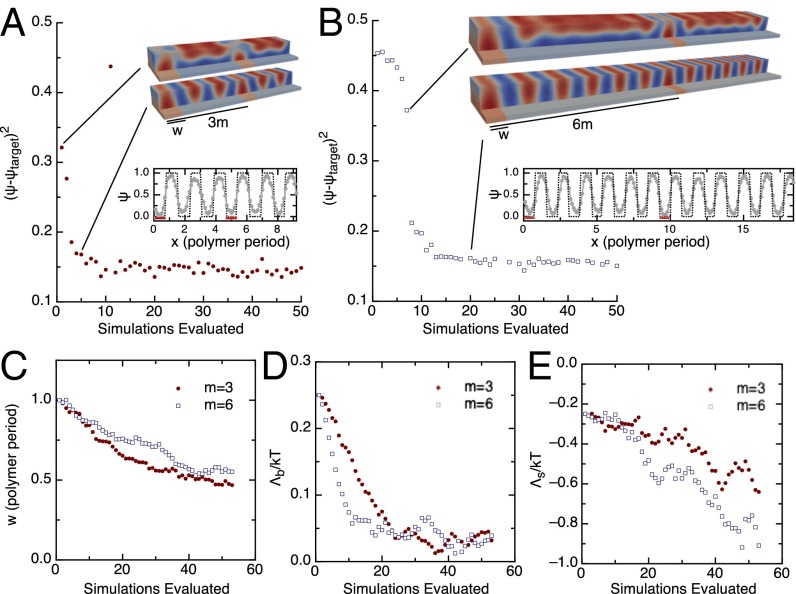

The results for and density multiplication are shown in Fig. 5. For the problem, we ran the optimization four times, varying the time step used in integrating Eq. 2. In every instance, the optimizer not only brought the system to a state that successfully met the design goal, but also, within noise, converged to the same state. The optimized parameters suggest that directed self-assembly is best achieved by setting the stripe width equal to roughly half the polymer period, and . All of these parameters agree with simulation results obtained by a brute-force solution to the problem and experimental verifications performed on the real polymer system (37) and are physically consistent with optimization results obtained for triblock copolymer pattern multiplication (11). These results can be explained by considering the interfacial energies in the system. The background interaction is required to be weak because the background region has roughly equal coverage between the A and B phases and is significantly larger in area than the size of the stripe. Moreover, the interaction strength for the stripe components is larger in the problem (Fig. 5D), because the larger distance between patterned regions requires stronger anchoring to guide assembly.

Fig. 5.

Optimizing directed self-assembly. Here we design the width of the stripe, the strength of its attraction to the red polymer beads , and the attraction strength of the background substrate toward the blue polymer beads, to match the self-assembled phase as closely as possible to the target of alternating stripes. We quantify the success of our optimizer by comparing an order parameter binned along the x axis of the box and averaged over y and z to the target stripe pattern. With Eq. 2, we are able to produce optimized parameters for 3× (A) and 6× (B) density multiplication after simulating 10 and 20 parameter choices. Two characteristic configurations are plotted in A and B, Insets, separated by just a handful of iterations, yet displaying markedly different phases of the polymer. Asymptotic configurations depicting Ψ (solid, marked line) and the target (dashed line) (A and B, Insets) show that the optimized parameters match the desired morphology, typically within or better. In plotting the parameters generated by Eq. 2 (C–E), we find that the interaction with the background brush is the most relevant parameter in directed self-assembly. For both the 6× and 3× problems, the rapid convergence toward the optimized state takes place once the background strength is reduced to . After a weak background is established, the strip width and strength function as fine-tuning parameters that facilitate defect-free assembly.

When the optimizer was run at the most aggressive time step, we were able to achieve convergence for m = 3, (m = 6) in less than 10 (18) iterations, despite the fact that the material required the simulation of roughly 50,000 (100,000) polymer beads. We stress that the performance obtained here is not a consequence of initializing the system too close to an optimal state, but rather evidence of the power behind Eq. 2. Fig. 5 shows that indeed the initial parameters do not produce a solution to the design problem, let alone a stripe pattern of any kind.

This particular problem also has been attempted in some variant using other materials design methods. In fact, our initial conditions were selected to match those given to an implementation of the CMA-ES, solving the same design problem but using triblock copolymers (11). For that problem, the CMA-ES took roughly 50 generations to converge, simulating 32 ensembles in parallel per iteration, each requiring 200,000 Monte Carlo steps. Our approach also used 200,000 Monte Carlo steps per iteration, but required only 10 iterations to converge. If algorithm performance is measured in terms of the number of microstates simulated, then solving directed self-assembly problems by way of the CMA-ES requires at least 5 times as much compute power as the approach proposed here. If it is not possible to run the ensemble simulations in parallel, our approach is roughly 130 times faster than the CMA-ES and completes a full optimization process before the CMA-ES has completed a single iteration. Additionally, inverse Monte Carlo methods have been used to solve directed self-assembly problems involving the placement of guiding posts instead of stripes (8). Although there are relevant physical differences, we note that the results presented for inverse Monte Carlo converge after simulating roughly 30 million microstates. Because this number of microstates simulated is roughly 15 times larger than what was used here, we can speculate that our proposed methods could also be faster for such applications.

Conclusions

To the extent that the goal of materials design is a unified framework that handles a wide range of complex inverse problems, we believe the formalism introduced here represents a significant step forward. By applying Eq. 2, we can solve problems with flat search landscapes (Fig. 1) and multiple-interaction types (Fig. 2), incorporate constraints (Fig. 3), tune processing conditions (Fig. 4), and address application scale design and optimization tasks (Fig. 5). Furthermore, in all of the examples presented, the end result is intuitive even though it was achieved in a complicated search landscape where other optimization schemes struggle or fail. Finally, the fact that processing conditions such as applied fields or temperature protocols and model parameters like internal interaction energies can be optimized with the very same framework presents an unexplored direction for materials design. Because these are the essential aspects that determine the properties of any material, the capacity to tune both simultaneously, one accounting for the other, opens the doors to a more coherent and conceptually complete design program.

Eq. 2 as a Best Approximation

As stated in the main text, Eq. 2 can be derived by choosing parameter updates that minimize the average squared error . In other words, one updates λ so that minimizes ε. This requires that for all of the , which gives the condition

| [S1] |

Multiplying on the left by the pseudoinverse for and moving the second term to the left-hand side, we arrive at Eq. 2:

| [S2] |

We comment briefly on how to select an that leads to a rank-invariant optimizer. Whereas in principle can be anything that rewards solutions that conform well to the design goal, one can gain an added invariance property by designing to reward good configurations based on relative rank rather than absolute value. This makes the optimizer invariant to trivial changes in the design problem that preserve the rank of candidate solutions. To achieve this, we took to equal the probability of selecting another configuration that performs as well as or worse than the current configuration x when drawn randomly from the current distribution, . Explicitly, this requires

| [S3] |

where is a raw quality function that determines how well solutions meet the design goal, and is equal to 1 whenever the inequality in the argument is satisfied and zero otherwise. For example, in the Ising model problem was taken to be the average magnetization of a given configuration, x. Thus, rewards the configurations that have magnetizations that are likely to be larger than others drawn from the same distribution. This rescaling indeed satisfies the requirement that any rank preserving transformation of the raw quality function will not change the flow of parameters. In other words, any rewriting of that preserves the ranking of configurations will not alter the optimizer performance.

We note in passing that by choosing this form for , Eq. 1 in the main text becomes the continuous space replicator equation from evolutionary game theory (26, 27). Thus, we can also interpret in the rank-invariant form as a fitness function that rewards the configuration at x with a unit of fitness for every competitor configuration drawn from the ensemble that performs at a lower or equal quality.

Eq. 2 as a Projection of Eq. 1

Here we derive Eq. 2, starting from the motivating expression without introducing the concept of an error minimization. We begin by noting that has time dependence only through the terms. Thus, via the chain rule the left-hand side can be expanded as

| [S4] |

To isolate update rules for each , we project the equation onto the functions by multiplying both sides by and integrate over configuration space:

| [S5] |

The matrix on the left-hand side is identified as the Fisher information matrix and can be inverted to produce

| [S6] |

As desired, this form is Eq. 2 and the basis of our optimizer.

In this derivation, we essentially multiplied by . Structurally, this is a projection and this gives an alternate interpretation of Eq. 2 as the projection of Eq. 1 onto the basis functions .

Invariance Properties of Eq. 2

As stated in the main text, Eq. 2 hosts a range of invariance properties that yield robust performance over a large class of design problems. One of the most important is that the velocity field generated by Eq. 2 is invariant to any reparameterizations of the design parameters λ, provided the structure of the distribution ρ is left intact. By this we mean that if there is a change of coordinates so that and , then the velocity field generated by Eq. 2 is the same in both parameterizations. This can be shown by direct substitution:

| [S7] |

Rewriting everything in terms of the primed variables requires the substitution , which gives us that

| [S8] |

| [S9] |

Now we use the fact that and the matrix identity to get

| [S10] |

which is the velocity equation that results when initially working from the primed coordinates.

The key idea expressed here is that Eq. 2 assigns a velocity to each possible in the search space that is independent of how is parameterized. Thus, an optimizer starting at a given position will evolve along the same trajectory as another optimizer written in a different coordinate choice. This can provide a big advantage compared with black-box methods. To see why, consider the fact that two different coordinate choices may lead to search spaces that are more or less corrugated in λ space. Because black-box methods will see the search landscapes as distinct, the performance will be better or worse, depending on a trivial reparameterization of ρ. By contrast, optimizers based on statistical mechanics models see no difference whatsoever, provided they are initialized to the same λ point. This means the designer is free to choose any coordinate system without having to worry whether the optimizer performance will degrade.

Error Propagation via Eq. 2

In this section we examine what effect errors in evaluating Eq. 2 can have on the algorithm performance. In particular, the matrix inverse in Eq. 2 might suggest that small errors could be amplified to produce large parameter variations. Here we show that whereas small errors may indeed introduce large variations into the parameters λ, for our algorithm, this occurs only for parameters that have little effect on the simulated material properties. Specifically, we focus on implementations of Eq. 2 that use the rank-based defined in the previous section, integrate Eq. 2 using a small numerical time-step τ, and evaluate expectation values using a Monte Carlo approximation. To assess the fidelity of these approximations, we compare the information lost when representing the true target distribution defined by Eq. 2 with one constructed using the stated assumptions. In bits, this is given by the Kullback–Leibler divergence or , where represents the error due to sampling. If we expand to leading order in the error, we find

| [S11] |

where we have used the notation . Because we are interested in whether an ill-conditioned matrix inverse is problematic, we focus our analysis on the case where the largest contribution in error comes from computing the terms in Eq. 2. Thus, we have that to leading order in the time step, , where is the error on the estimates for . Inserting this relationship into the equation for K and taking the expectation value over sampling realizations for , we find that to leading order in τ,

| [S12] |

where we have used the fact that, averaged over realizations, the mean squared errors for should be given by the covariance on the estimated parameters divided by the number of samples N. We now bound our error estimate, using the fact that

| [S13] |

This equation follows because is a positive definite matrix and so the term appearing in the covariance expression is strictly positive. Inserting this into our expression for K gives

| [S14] |

The final step is to note that the rank-based used to define algorithms in this paper is bounded and the bounds are independent of λ. Specifically, for the system described in the prior section, and for all λ. Thus, we have that , which gives us that

| [S15] |

where d is the number of parameters in the model. Thus, we find the surprising result that to leading order in τ, the information lost by approximating Eq. 2 is bounded and that the bound is independent of both λ and . In other words, to leading order in τ, an approximation to Eq. 2 using the methods described in this paper will lose no more than bits due to sampling noise, for any λ selected by the optimizer.

Methodology for the Ising Model Problem

As discussed in the main text, applying Eq. 2 to the Ising model Hamiltonian gives the optimization equation , where the represent the energy contributions of neighbors along the horizontal and vertical directions. To maximize the average magnetization, we set equal to the probability that another configuration drawn at random from ρ has a lower instantaneous magnetization, , than that of configuration x (Supporting Information, Eq. 2 as a Best Approximation). Specifically, we set , where is unity when the inequality in the argument is satisfied and zero otherwise. With this definition all of the terms in the update equation are expressible as expectation values, and they can be evaluated by making a Monte Carlo approximation with samples drawn from .

To draw these samples from the Ising Hamiltonian at equilibrium, we implemented the Wolff cluster algorithm with variable coupling constants for the horizontal and vertical directions (38). In this Markov chain Monte Carlo method, a single spin is chosen at random and flipped. The neighbors of this site are then flipped randomly based on the energy associated with the old and new configurations. If flipped, any neighbors of the altered site are considered for manipulation, and the process iterates until no new sites are added to the cluster. For our simulations, we used a 25 × 25 grid of spin sites. To minimize correlation between the samples, we ran a burn-in period with 1,000 spin-flip cycles, logging the average number of spins flipped in each iteration. Once this finished, we continued to iterate the spin-flip algorithm and logged samples once every q iterations. We selected q so that the average number of spins flipped per cycle multiplied by the number of cycles between logged samples equals the system size. The process was repeated until 1,000 configurations were saved.

To integrate Eq. 2 we used a modified Euler scheme with a fixed time step of 0.5. We suggest future work could improve this aspect of the algorithm, yet for a proof of concept this simple choice was effective. We note that moving to higher order or variable time-step integrators will have to account for the fact that the Monte Carlo approximation introduces error that can be larger than the truncation error associated to the integration scheme. Thus, using more sophisticated integrators may require a careful analysis of how noise in the velocity terms impacts the optimizer trajectories.

Methodology for the Equilibrium Particle on a Substrate Problem

Following the discussion in the main text, we chose our optimization parameters so that is given by a canonical ensemble of the form , where the represents control parameters for the temperature and is the control parameter for the field contributions. With this choice, Eq. 2 takes the form

| [S16] |

As all of the terms in this equation are expectation values, we approximate them by averaging over samples drawn from ρ. Given a set of interaction strengths , we generated samples for the particle position by running a Metropolis–Hastings random walk. The proposals were generated by adding Gaussian noise to each particle position. For the data shown, the SD of the noise was set equal to . The factor of 5 corresponds to the characteristic spacing between wells in the random walk problem and thus represents the characteristic length scale of the problem. To decrease the correlation between samples, we discarded the first 10,000 steps in the random walk and took 50,000 more steps, recording the position every 1,000 cycles. These 50 particle locations were then sent to the optimizer as samples.

As with the Ising model example, we integrated Eq. 2 with a modified Euler scheme (time step set to 0.5) and used a rank-based quality function for . Specifically, we used the choice , where is the distance between x and the target well and where is unity when the inequality in the argument is satisfied and zero otherwise. With this definition, is the probability that another configuration drawn at random from ρ is farther away from the target well than configuration x.

Methodology for the Polymer Folding Problem

By construction, we required particles to remain a fixed distance D from their neighbors along the chain. Thus, we used a Metropolis–Hasting algorithm with proposal states designed to respect this condition (39, 40). In each iteration, we selected a particle to manipulate at random. If the particle was at the end of the chain, it was given a new position distributed uniformly at random on a unit sphere surrounding its one neighbor. If the particle was in the interior of the chain, then all of the possible new positions for the particle lived on a circle defined by the condition that the particle remains a unit distance from its two neighbors. In this case, we assigned the interior particle a new position drawn at random and uniformly distributed on the circle.

To deal with deep local minima that might trap the Metropolis–Hastings walk, we implemented a parallel tempering scheme (41). We ran two other Monte Carlo simulations in parallel with identical coupling constants, but one with a temperature twice as big and the other with a temperature 10 times as big. The ensembles were allowed to attempt an exchange of configuration with the next closest temperature ensemble every 100 iterations. The probability of exchange was set according to the standard replica-exchange algorithm (41). With these two temperature ratios we found empirically that the probability of accepting an exchange move was roughly 0.30 and always fell between 0.1 and 0.5.

We iterated this process with a burn-in period of 10,000 steps and then took 1,000,000 steps, saving samples every 1,000 cycles. The resulting 1,000 configurations were then used by the optimizer to evaluate the expectation values in Eq. 2. For our quality function, we used a rank-based reward system and defined , where is radius of gyration for the polymer configuration x and where is unity when the inequality in the argument is satisfied and zero otherwise. With this definition, is the probability that another configuration drawn at random from ρ has a larger radius of gyration than the configuration x. With all these parameters in place, Eq. 2 was integrated with a modified Euler scheme, using a time step of 0.25.

We checked that simply setting all of the energy parameters to large, identical values will not produce an octahedron. This comes about by tuning a Lennard-Jones potential with a cutoff distance, , such that for any . We shift the raw Lennard-Jones potential by its value at to keep the potential continuous.

The specific value for was picked by ensuring that, in the octahedron geometry, the only contribution to the particle energy would come from contacting particles. This was achieved by setting the cutoff parameter to be . Because the polytetrahedron geometry has the exact same number of particle contacts (12 contacts) and no two points closer than , these two geometries will have exactly the same energy given the short-ranged potential.

We note, in passing, that there is some residual in our optimizer’s coupling constants. Beyond deactivating the three interactions, some coupling constants have larger values than others. Further, we note that the active coupling constants can be placed in four groups that respect the symmetry around the center of the chain. For instance, the binding energy between the second and the last particle is almost exactly the same as the energy between the first and the second to last particle (Fig. 3 C and D). These variations show that the optimizer is responsive to the chain’s influence on configurations, even though the chain binding the particles together is not programmed explicitly into the structure of the Hamiltonian. In other words, the optimizer can learn constraints programmed implicitly in a model and react using its explicit control parameters.

Methodology for the Nonequilibrium Particle on a Substrate

Here we show how to derive an optimizer that works on an out-of-equilibrium problem. This particular optimizer treats the problem of a particle walking randomly on a substrate and takes the random path traveled by the particle as its configuration space. To model the process, we discretize the path into N steps, each spaced in time by a small interval τ. We pick N and τ so that and set up the problem so that the random walk takes place over a time interval . The path is generated by adding white noise with variance of and a mean drift of to update the particle position at each time step i. Convolving these random additions gives the probability for a full path:

| [S17] |

By expanding the square and absorbing all of the terms independent of into the proportionality constant we get

| [S18] |

For our particular problem, the mean velocity term is made up of a contribution from the substrate, which depends on the particle position , where is the substrate potential, and a contribution from the field that provides a directional bias and depends only on the time index i. Writing these two parts explicitly gives us

| [S19] |

At this stage, we need to assume a particular form for how the control parameters and depend on time. One choice is to expand the parameters, using a set of orthogonal basis functions. We used the Chebyshev polynomials (42) of the first kind, . Specifically, we define for the temperature variables and for the fields. Note the vector notation signifies that there are two sets of , one for the field contributions in the x direction and one for the field component in the y direction. Using this choice of basis functions, we can rewrite the probability function for the path ρ as

| [S20] |

where

| [S21] |

and

| [S22] |

In this form, the distribution is in the same family as all of the prior examples. As far as the optimization engine is concerned, we can treat and as though they were “energy” components and can produce a version of Eq. 2, using covariances between , , and the reward function f:

| [S23] |

Here we use the variable s to denote the optimizer’s time parameter to avoid confusion with the t, the time parameter for the random walk. For the particulars of our optimizer, we again chose a modified Euler integrator with a time step of 0.5 and calculated average values by sampling 100 paths per iteration. We used a rank-based quality function for the in Eq. 2. Specifically, we calculated the distance to the target well averaged over every point in the path and then set equal to the probability that another path drawn at random from the same distribution parameters has a value of greater than or equal to the given path.

Methodology for the Directed Self-Assembly Problem

In this section we provide a brief overview of the key components used to model diblock copolymer directed self-assembly as well as how we implemented Eq. 2 to optimize them. The primary focus of this section is on how one can take a generic Monte Carlo description of a material and convert it into an optimizer via Eq. 2. Because the polymer model has been presented previously in the literature, we provide only a broad level description and, for details, we direct the reader to previously published work (40).

As stated in the main text, the energy for the full system of polymer chains interacting with a chemically modified substrate is given in three parts. The first part is the bonded contribution between polymer beads in a given chain,

| [S24] |

where b is the statistical length segment of the polymer, N is the number of beads in a chain, is the number of polymer chains in the system, and is the position of the ith bead in the jth chain. The second contribution to our model is given by the nonbonded energy between polymer elements of different chains. This contains two parts: one describing compressibility of the polymer melt and the other describing repulsion between different monomers,

| [S25] |

where and are the local densities of the A- and B-type beads, respectively, is the mean squared end-to-end distance, and the invariant degree of polymerization is given by , where is the average bead density. Here the first term characterizes the repulsion between unlike monomers and is scaled by a Flory–Huggins parameter for the model (χ). The second term defines the compressibility and its model parameter κ is inversely proportional to the material’s modulus of compression. Note that the local densities and are evaluated using a particle-to-mesh method.

As noted in the main text, the final energy contribution comes from the particle interaction with the substrate. Each substrate has lithographically patterned stripes of width w and a background material. The two substrate types interact with the particles by way of an energy , where defines the decay length of the interaction, z is the distance from the plane of the substrate, and is the interaction strength between the substrate and a bead of type α. In other words, when a particle of type A is above the patterned region of the substrate, , whereas if it is over the background region, . As stated in the main text, we assume that the model parameters are antisymmetric: and .

Parameters for the simulation model were selected to agree with experiments performed on polystyrene-block-poly(methyl methacrylate) (PS-b-PMMA) diblock copolymers with a number-averaged molecular weight of 22,000-b-22,000 (41). Specifically, we took , , and . The substrate interaction length was set to , in units of the polymer length. Each polymer chain was composed of 32 beads, 16 of type A followed by 16 of type B.

The design goal posed in the main text is to optimize the stripe width, w, and the two interaction energy scales and to promote self-assembly into a striped phase. Because microstate configurations are distributed according to the canonical ensemble, Eq. 2 is given by

| [S26] |

where we have used the fact that .

The partial derivatives involving and are straightforward to evaluate,

| [S28] |

where if the particle is over a stripe and zero otherwise, and the sums and are over all beads of types A and B. To evaluate the partial derivatives with respect to w we decided to approximate the derivatives via a central, finite difference. Because the method already uses a particle-to-mesh approximation, we have a small length cutoff, l. This gave us the approximation

| [S29] |

To finalize the algorithm, we used a Monte Carlo scheme detailed in ref. 36 to draw samples for polymers distributed in a box. The box dimensions, measured in units of the polymer length, were scaled to , where m is the multiplication target for directed self-assembly. Stripes were automatically drawn by the algorithm at and along the y axis. The polymer chains were then randomly initialized in the simulation region to fill to a desired density and were then allowed to equilibrate. In the 3× density multiplication problem, we used 50,000 polymer beads, whereas in the 6× problem this was doubled to roughly 100,000.

We allowed the system a burn-in time of 20,000 Monte Carlo steps before we began sampling. Samples were recorded every 10,000 steps and the full system was run for 200,000 iterations. We decided on 10,000 steps by examining the autocorrelation time for the substrate energy contributions. We found that after roughly 5,000 Monte Carlo steps samples were effectively independent. We drew samples half as frequently to be sure samples remained independent even if the optimizer explored unusual regions of parameter space.

Because this system was appreciably larger than other examples in the text, some care was taken to make integration of Eq. 2 as efficient as possible. Our protocol was to integrate Eq. 2, using a modified midpoint method, initially taking the time step to be 0.5. We allowed this cursory run 100 iterations. We found that it converged in fitness after 80 cycles and had halved the initial fitness value after roughly 20 cycles. This suggested we could dramatically increase the time-step parameter without causing the integration to become unstable. We scaled our time step by a factor of 8 so that the fitness would halve after just 3 iterations and the optimization would complete in 10 cycles. Indeed, we found this was the case in both the 3× and 6× problems as noted in the main text. We then reran the optimizer three times at these settings to find essentially identical results in each case.

Acknowledgments

We thank Arvind Murugan, Jim Sethna, Sid Nagel, Suriyanarayanan Vaikuntanathan, and Tom Witten for many insightful discussions, and the reviewers for highly constructive suggestions. This work was supported by the National Science Foundation through Grant CBET 1334426. We acknowledge additional support through award 70NANB14H012 from the US Department of Commerce, National Institute of Standards and Technology as part of the Center for Hierarchical Materials Design (CHiMaD).

Footnotes

The authors declare no conflict of interest.

This article is a PNAS Direct Submission.

This article contains supporting information online at www.pnas.org/lookup/suppl/doi:10.1073/pnas.1509316112/-/DCSupplemental.

References

- 1.Jain A, Bollinger JA, Truskett TM. Inverse methods for material design. Am Inst Chem Eng J. 2014;60(8):2732–2740. [Google Scholar]

- 2.Torquato S. Inverse optimization techniques for targeted self-assembly. Soft Matter. 2009;5(6):1157–1173. [Google Scholar]

- 3.Jaeger HM. Celebrating Soft Matter’s 10th anniversary: Toward jamming by design. Soft Matter. 2015;11(1):12–27. doi: 10.1039/c4sm01923g. [DOI] [PubMed] [Google Scholar]

- 4.Oganov AR, Glass CW. Crystal structure prediction using ab initio evolutionary techniques: Principles and applications. J Chem Phys. 2006;124(24):244704. doi: 10.1063/1.2210932. [DOI] [PubMed] [Google Scholar]

- 5.Dahiyat BI, Mayo SL. De novo protein design: Fully automated sequence selection. Science. 1997;278(5335):82–87. doi: 10.1126/science.278.5335.82. [DOI] [PubMed] [Google Scholar]

- 6.Kuhlman B, et al. Design of a novel globular protein fold with atomic-level accuracy. Science. 2003;302(5649):1364–1368. doi: 10.1126/science.1089427. [DOI] [PubMed] [Google Scholar]

- 7.Hannon AF, et al. Inverse design of topographical templates for directed self-assembly of block copolymers. ACS Macro Lett. 2013;2(3):251–255. doi: 10.1021/mz400038b. [DOI] [PubMed] [Google Scholar]

- 8.Hannon AF, Ding Y, Bai W, Ross CA, Alexander-Katz A. Optimizing topographical templates for directed self-assembly of block copolymers via inverse design simulations. Nano Lett. 2014;14(1):318–325. doi: 10.1021/nl404067s. [DOI] [PubMed] [Google Scholar]

- 9.Chang J-B, et al. Design rules for self-assembled block copolymer patterns using tiled templates. Nat Commun. 2014;5:3305. doi: 10.1038/ncomms4305. [DOI] [PubMed] [Google Scholar]

- 10.Qin J, et al. Optimizing directed self-assembled morphology. Soft Matter. 2013;9(48):11467–11472. [Google Scholar]

- 11.Khaira GS, et al. Evolutionary optimization of directed self-assembly of triblock copolymers on chemically patterned substrates. ACS Macro Lett. 2014;3(8):747–752. doi: 10.1021/mz5002349. [DOI] [PubMed] [Google Scholar]

- 12.Bianchi E, Doppelbauer G, Filion L, Dijkstra M, Kahl G. Predicting patchy particle crystals: Variable box shape simulations and evolutionary algorithms. J Chem Phys. 2012;136(21):214102. doi: 10.1063/1.4722477. [DOI] [PubMed] [Google Scholar]

- 13.Miskin MZ, Jaeger HM. Adapting granular materials through artificial evolution. Nat Mater. 2013;12(4):326–331. doi: 10.1038/nmat3543. [DOI] [PubMed] [Google Scholar]

- 14.Miskin MZ, Jaeger HM. Evolving design rules for the inverse granular packing problem. Soft Matter. 2014;10(21):3708–3715. doi: 10.1039/c4sm00539b. [DOI] [PubMed] [Google Scholar]

- 15.DiStasio RA, et al. Designer spin systems via inverse statistical mechanics. Phys Rev B. 2013;88:134104. [Google Scholar]

- 16.Solis EOP, Barton PI, Stephanopoulos G. Formation of nanostructures with desired geometries. 1. Robust static structures. Ind Eng Chem Res. 2010;49(17):7728–7745. [Google Scholar]

- 17.Jankowski E, Glotzer SC. Screening and designing patchy particles for optimized self-assembly propensity through assembly pathway engineering. Soft Matter. 2012;8:2852–2859. [Google Scholar]

- 18.Hormoz S, Brenner MP. Design principles for self-assembly with short-range interactions. Proc Natl Acad Sci USA. 2011;108(13):5193–5198. doi: 10.1073/pnas.1014094108. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 19.Landau LD, Lifshitz EM. Statistical Physics. Part 1: Course of Theoretical Physics. 3rd Ed. Pergamon; 1980. pp. 333–368. [Google Scholar]

- 20.Amari S-I. Natural gradient works efficiently in learning. Neural Comput. 1998;10(2):251–276. [Google Scholar]

- 21.Wierstra D, Schaul T, Peters J, Schmidhuber J. 2008. Natural evolution strategies. Evolutionary Computation (IEEE World Congress on Computational Intelligence)(IEEE), pp 3381–3387.

- 22.Ollivier Y, Arnold L, Auger A, Hansen N. 2011. Information-geometric optimization algorithms: A unifying picture via invariance principles. arXiv preprint:1106.3708.

- 23.Akimoto Y, Nagata Y, Ono I, Kobayashi S. Bidirectional relation between CMA evolution strategies and natural evolution strategies. In: Schaefer R, Cotta C, Kołodziej J, Rudolph G, editors. Parallel Problem Solving from Nature, PPSN XI. Springer, Berlin; 2010. pp. 154–163. [Google Scholar]

- 24.Hansen N, Müller SD, Koumoutsakos P. Reducing the time complexity of the derandomized evolution strategy with covariance matrix adaptation (CMA-ES) Evol Comput. 2003;11(1):1–18. doi: 10.1162/106365603321828970. [DOI] [PubMed] [Google Scholar]

- 25.Transtrum MK, Machta BB, Sethna JP. Geometry of nonlinear least squares with applications to sloppy models and optimization. Phys Rev E Stat Nonlin Soft Matter Phys. 2011;83(3 Pt 2):036701. doi: 10.1103/PhysRevE.83.036701. [DOI] [PubMed] [Google Scholar]

- 26.Cressman R. Stability of the replicator equation with continuous strategy space. Math Soc Sci. 2005;50(2):127–147. [Google Scholar]

- 27.Oechssler J, Riedel F. On the dynamic foundation of evolutionary stability in continuous models. J Econ Theory. 2002;107(2):223–252. [Google Scholar]

- 28.Weibull JW. Evolutionary Game Theory. MIT Press; 1997. pp. 69–121. [Google Scholar]

- 29.Ingber L, et al. Adaptive simulated annealing. In: Junior HAeO, Ingber L, Petraglia A, Petraglia MR, Machado MAS., editors. Stochastic Global Optimization and Its Applications with Fuzzy Adaptive Simulated Annealing. Springer, Berlin; 2012. pp. 33–62. [Google Scholar]

- 30.Arkus N, Manoharan VN, Brenner MP. Minimal energy clusters of hard spheres with short range attractions. Phys Rev Lett. 2009;103(11):118303. doi: 10.1103/PhysRevLett.103.118303. [DOI] [PubMed] [Google Scholar]

- 31.Meng G, Arkus N, Brenner MP, Manoharan VN. The free-energy landscape of clusters of attractive hard spheres. Science. 2010;327(5965):560–563. doi: 10.1126/science.1181263. [DOI] [PubMed] [Google Scholar]

- 32.Zeravcic Z, Brenner MP. Self-replicating colloidal clusters. Proc Natl Acad Sci USA. 2014;111(5):1748–1753. doi: 10.1073/pnas.1313601111. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 33.Ferrenberg AM, Swendsen RH. New Monte Carlo technique for studying phase transitions. Phys Rev Lett. 1988;61(23):2635–2638. doi: 10.1103/PhysRevLett.61.2635. [DOI] [PubMed] [Google Scholar]

- 34. van Anders G, Klotsa D, Karas AS, Dodd PM, Glotzer SC (2015) Digital alchemy for materials design and optimization. arXiv preprint:1507.04960. [DOI] [PubMed]

- 35.Jain A, et al. Inverse design of simple pairwise interactions with low-coordinated 3d lattice ground states. Soft Matter. 2013;9(14):3866–3870. [Google Scholar]

- 36.Detcheverry FA, et al. Monte Carlo simulations of a coarse grain model for block copolymers and nanocomposites. Macromolecules. 2008;41(13):4989–5001. [Google Scholar]

- 37.Liu CC, et al. Chemical patterns for directed self-assembly of lamellae-forming block copolymers with density multiplication of features. Macromolecules. 2013;46(4):1415–1424. [Google Scholar]

- 38.Wolff U. Collective Monte Carlo updating for spin systems. Phys Rev Lett. 1989;62(4):361–364. doi: 10.1103/PhysRevLett.62.361. [DOI] [PubMed] [Google Scholar]

- 39.Baumgärtner A. Statics and dynamics of the freely jointed polymer chain with Lennard-Jones interaction. J Chem Phys. 1980;72(2):871–879. [Google Scholar]

- 40.Baumgärtner A, Binder K. Monte Carlo studies on the freely jointed polymer chain with excluded volume interaction. J Chem Phys. 1979;71(6):2541–2545. [Google Scholar]

- 41.Earl DJ, Deem MW. Parallel tempering: Theory, applications, and new perspectives. Phys Chem Chem Phys. 2005;7(23):3910–3916. doi: 10.1039/b509983h. [DOI] [PubMed] [Google Scholar]

- 42.Abramowitz M, Stegun IA. Handbook of Mathematical Functions: With Formulas, Graphs, and Mathematical Tables. 9th Ed Dover; New York: 1972. [Google Scholar]