Introduction

One of the most basic and functionally important aspects of human hearing is the emergence of spatial hearing skills that enable children to localize sound and to segregate speech from background maskers or interferers. A child’s ability to understand speech in noisy conditions is likely to facilitate learning in mainstream classroom environments, as well as socialization in everyday situations. A hallmark of spatial hearing is its dependence on the manner in which the auditory system integrates inputs arriving at the two ears from sound sources in space. This integration, in normal hearing systems, is mediated by intricate neuronal circuitry in the brainstem, with auditory mechanisms that are tuned to spatial information. In children with normal hearing (NH), the spatial system utilizes binaural cues with great precision, and the neural circuitry is fairly refined by 4–5 years of age [1, 2]. This early development of spatial hearing likely depends on experience with normal acoustic cues. Unlike NH children, children who are deaf and hear through cochlear implants (CIs) receive auditory cues that are degraded with regard to numerous stimulus features (for review see [3, 4]). Thus, an important and timely question with regard to children with bilateral CIs (BiCIs) is whether they demonstrate maturation of spatial hearing with additional exposure to bilateral stimulation. To the extent that they do, the data would suggest that they are able to learn to use the information in order to function on tasks that require integration of inputs from the two ears. To the extent that they do not, the data would suggest either that the necessary cues are not available to the children, or that the cues are available but the children are not using them.

In a recent study [5] of 21 children using BiCIs, 11 children had root-mean-square (RMS) localization errors that were smaller when they used both CIs than when a single CI was activated, suggesting that they experienced a bilateral benefit. When using both CIs, performance was highly variable among the 21 children; RMS errors ranged from 19° to 56°, compared with 9° to 29° in the 5-year-old NH group. Similar differences between CI users and NH children were found by Van Deun and colleagues [6]. More recently, Zheng and colleagues [7] investigated the emergence of sound localization skills in a group of 19 bilaterally implanted children, and reported that with additional experience listening through BiCIs, most children improve, as seen in reduced localization errors. The error types were further analyzed to better understand whether the pattern of errors changes with experience. Performance ranged from ‘poor localization’ to ‘hemifield discrimination’ (i.e., ability to discriminate left/right) to ‘good spatial hearing mapping’ (i.e., ability to identify source locations within the right or left hemifield). Notably, children tended to improve on the localization task with added experience, as evidenced by reduced localization errors.

In the present study, the goal was to assess the ability of children with BiCIs to hear speech in the presence of background interference, when the interferers were either co-located with the target speech (both in front), or separated from the target speech. Of the 20 children tested here, 11 were also tested on localization as reported by Zheng and colleagues [7], and for those children some comparisons are made between the speech-in-noise data and the localization results. This study sought to determine whether children demonstrate improvement on speech reception thresholds (SRTs), and/or on their ability to benefit from spatial separation of the target and interferers.

MATERIALS AND METHODS

Participants

Participants were 20 children with BiCIs, all of whom were native English speakers, had no diagnosed developmental disabilities, and used oral communication as their primary mode of communication. The children traveled with an adult to Madison, WI. Children visited the lab at prescribed intervals based primarily on the amount of bilateral experience they had, with care taken to test as close as possible to intervals of 3–6, 12–18, 21–27, 36–42 and 47–51 months post bilateral activation. One child was also tested after 60 months of bilateral experience. The data presented here come from repeated visits by the same children. Biographical data are shown in Table I, including the ages at which each CI was activated, cause of deafness, type of CI devices used and amount of bilateral experience at each of the visits to the lab. Each child visited the lab for 2–3 consecutive days, and was tested on a number of procedures. The child’s own CI processors were always used, and were set to their clinically programmed everyday listening mode, as confirmed by parent and audiologist reports. Before beginning any testing, a subjective loudness balancing procedure was conducted, whereby volume and sensitivity controls were adjusted in order to equalize the perceived loudness between the two CI devices.

Table I.

Biographical data for the 20 participants. Ages and bilateral experience are given as years;months. Children completed two to four visits (V1–V4); cells are blank where no visit is reported.

| Subject | Sex | Etiology | Age at 1st CI (yr;mo) | Age at 2nd CI (yr;mo) | Age at V1 (yr;mo) | Age at V2 (yr;mo) | Age at V3 (yr;mo) | Age at V4 (yr;mo) | BiCI experience at V1 (yr;mo) | BiCI experience at V2 (yr;mo) | BiCI experience at V3 (yr;mo) | BiCI experience at V4 (yr;mo) | First CI (internal device, processor, ear) | Second CI (internal device, processor, ear) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| CIBW | F | Connexin 26 | 1;1 | 3;9 | 5;10 | 7;0 | | | 2;1 | 3;3 | | | N24C, Freedom, R | Freedom, Freedom, L |
| CICA | M | Unknown | 2;5 | 2;5 | 5;6 | 6;6 | | | 3;2 | 4;1 | | | Pulsarci100, Opus2, simultaneous | Pulsarci100, Opus2, simultaneous |
| CICF | F | Meningitis | 1;6 | 2;4 | 5;6 | 6;7 | | | 3;2 | 4;2 | | | Freedom Contour, Freedom, R | Freedom Contour, Freedom, L |
| CIDJ | F | Genetic | 1;8 | 5;1 | 8;1 | 8;11 | | | 3;0 | 3;11 | | | Nucleus24, Freedom, R | Freedom, Freedom, L |
| CIDP | F | Connexin 26 | 0;11 | 2;9 | 4;11 | 5;10 | | | 2;2 | 3;1 | | | V1: Combi40+, Tempo+, L; V2: Combi40+, Opus2, L | V1: Pulsarci100, Tempo+, R; V2: Pulsarci100, Opus2, R |
| CIDR | F | Unknown | 1;9 | 2;8 | 4;6 | 6;1 | | | 1;9 | 3;11 | | | V1: HiRes90K/HiFocus, PSP, R; V2: HiRes90K/HiFocus, Harmony, R | V1: HiRes90K/HiFocus, PSP, L; V2: HiRes90K/HiFocus, Harmony, L |
| CICQ | M | Usher Syndrome Type 1 | 1;2 | 3;7 | 5;0 | 6;10 | | | 0;6 | 3;2 | | | V1: Freedom Contour, Freedom, R; V2: Freedom Contour, N5, R | V1: Freedom Contour, Freedom, L; V2: Freedom Contour, N5, L |
| CIBU | M | Connexin 26 | 1;2 | 5;1 | 6;3 | 7;3 | 8;3 | | 1;1 | 2;2 | 3;1 | | V1: Combi40+, Tempo+, L; V2: Combi40+, Opus2, L; V3: Combi40+, Opus2, L | V1: Pulsarci100, Tempo+, R; V2: Pulsarci100, Opus2, R; V3: Sonata, Opus2, R |
| CICY | M | Unknown | 1;0 | 4;8 | 5;8 | 6;9 | 7;11 | | 1;0 | 2;0 | 3;3 | | HiRes90K/HiFocus, Harmony, R | HiRes90K/HiFocus, Harmony, L |
| CICN | F | Connexin 26 | 1;3 | 2;9 | 4;1 | 4;11 | 5;6 | | 1;2 | 2;1 | 3;0 | | Freedom Contour, Freedom, R | Freedom Contour, Freedom, L |
| CIDQ | F | Connexin 26 | 0;10 | 4;3 | 6;7 | 7;8 | 8;8 | | 2;3 | 3;4 | 4;3 | | V1: N24C, Sprint, R; V2: N24C, Sprint, R; V3: N24C, Freedom, R | V1: Freedom Contour, Freedom, L; V2: Freedom Contour, Freedom, L; V3: Freedom Contour, Freedom, L |
| CIEF | F | Unknown | 1;3 | 4;10 | 5;10 | 6;10 | 7;11 | | 1;0 | 2;0 | 3;1 | | N24C, Freedom, R | Freedom Contour, Freedom, L |
| CIEM | M | Unknown | 2;4 | 2;10 | 4;0 | 4;10 | 5;5 | | 1;1 | 2;0 | 3;1 | | V1–V2: Freedom Contour, Freedom, R; V3: Freedom Contour, N5, R | V1–V2: Freedom Contour, Freedom, L; V3: Freedom Contour, N5, L |
| CIEK | F | LVAS/progressive | 4;9 | 5;2 | 6;4 | 7;4 | 8;4 | | 1;2 | 2;2 | 3;1 | | HiRes/Fidelity120, Harmony, L | HiRes/Fidelity120, Harmony, R |
| CICL | M | Connexin 26 | 1;5 | 2;9 | 4;10 | 5;2 | 6;11 | | 2;2 | 3;2 | 4;2 | | Freedom Contour, Freedom, R | Freedom Contour, Freedom, L |
| CIFA | F | Unknown | 2;1 | 4;3 | 5;7 | 6;5 | 7;6 | | 1;3 | 2;2 | 3;2 | | Freedom Contour, N5, R | CI512, N5, L |
| CIEH | M | Hereditary | 1;1 | 1;1 | 4;1 | 5;0 | 6;2 | | 3;0 | 3;11 | 5;1 | | Freedom Contour, Freedom, R | Freedom Contour, Freedom, L |
| CIEE | M | EVA/progressive | 2;10 | 3;11 | 4;3 | 5;2 | 6;2 | 7;1 | 0;4 | 1;2 | 2;3 | 3;2 | V1–V3: Freedom Contour, Freedom, R; V4: Freedom Contour, N5, R | V1–V3: Freedom Contour, Freedom, L; V4: Freedom Contour, N5, L |
| CIDW | M | Unknown | 2;3 | 5;0 | 5;4 | 6;1 | 7;4 | 8;0 | 0;4 | 1;2 | 2;0 | 3;0 | HiRes90K/HiFocus, Harmony, L | HiRes90K/HiFocus, Harmony, R |
| CIET | M | LVAS (4 years acoustic hearing) | 4;9 | 4;9 | 6;4 | 6;11 | 7;10 | 8;11 | 1;6 | 2;1 | 3;1 | 4;1 | Sonata, Opus2, simultaneous | Sonata, Opus2, simultaneous |

This research was approved by, and carried out in accordance with, the University of Wisconsin-Madison Human Subjects IRB regulations. Prior to commencing testing during each visit, parents signed consent forms, and children who were older than age 7 at the time of testing also signed an assent form.

Experimental Setup

During testing, listeners were seated in the sound booth facing a small table that was covered with foam. They were positioned with the head in the center of a semicircular array of loudspeakers (Cambridge Soundworks, Center/Surround IV), which were approximately at ear level, 1.5 m from the listener and were calibrated prior to each testing session. Subjects faced a computer monitor located at 0° azimuth under the front loudspeaker, and used a computer mouse to provide responses to the stimuli by selecting icons displayed on the computer monitor.

Stimuli

Target stimuli were drawn from a closed set of 25 spondees (two-syllable words with equal stress on both syllables) selected to be within the vocabulary of children ages 4 years and older [8]. The spondees were pre-recorded by a male talker (F0 = 150 Hz), and their root-mean-square (rms) levels were equalized. Interfering sentences were taken from the Harvard IEEE corpus [9] and were pre-recorded separately by a female talker (F0 = 240 Hz). Sentences were filtered to match the long-term average speech spectrum of the target spondees [8, 10, 11]. Two-talker interferers were created by overlaying two recordings from the same talker.

Design and procedure

Testing was identical to the procedures reported in papers by Misurelli and Litovsky [12, 13]. Speech reception thresholds (SRTs) were measured for target spondees in the presence of the interfering speech, which was fixed at 55 dB SPL. SRTs from 3 conditions with interferers are relevant to the results presented here: SRTFront (target and interferers both 0° front), SRT+90°/+90° (target 0° front, interferers placed in an asymmetrical configuration at 90° to one side or another) and SRT+90°/−90° (target 0° front, interferers placed in a symmetrical configuration with one at +90° and one at −90°). In the asymmetrical condition, interferers were placed 90° to the side of the first CI. To minimize any order effects, all conditions were randomized within interferer type. For each child, 2–3 SRTs per condition were collected and were averaged for data analysis, resulting in one SRT per condition. SRTs in children with BiCIs have been shown to be consistent across multiple measurements [12, 13].

Prior to testing, each listener participated in a brief familiarization task to verify that they could accurately identify the visual icon associated with each auditory target spondee. If a listener was unable to identify particular spondees, those target stimuli were not used in the experimental testing (similar approaches were used in previous studies by Litovsky and colleagues). Of the 20 participants, 13 used a target list of fewer than 25 words on one of the visits, with no list shorter than 17 words. For the majority of these participants, the shortened list was used only on the first visit (i.e., at a younger chronological age and with less CI experience). Practice was conducted for all listeners so that each could become familiar with various aspects of the testing (i.e., listener position, computer controls, stimuli).

The experimental test consisted of a 4-alternative forced-choice task [8, 12, 14–16]. Children were given experience hearing the interferer sentences and the male target; subsequently they were instructed to ignore the “lady talkers” and to pay attention to the man’s voice (male target). Trials began with the word “ready,” spoken by the male target, followed by one randomly chosen spondee from the list of 25. The interferers were turned on first, followed by the target, and continued for approximately 1 s after the target was turned off.

Speech reception threshold estimation

SRTs were measured using an adaptive tracking method with a 3-down/1-up procedure. SRTs were calculated using maximum likelihood estimation (MLE), which defines threshold as the target intensity corresponding to 79.4% correct on the psychometric function. This method is identical to that used previously by Litovsky and colleagues [8, 12, 14, 16]. Spatial release from masking (SRM) was calculated for each listener [12, 13] as follows:

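$$\mathrm{SRM}_{\mathrm{Asymmetrical}} = \mathrm{SRT}_{\mathrm{Front}} - \mathrm{SRT}_{+90^\circ/+90^\circ}$$

$$\mathrm{SRM}_{\mathrm{Symmetrical}} = \mathrm{SRT}_{\mathrm{Front}} - \mathrm{SRT}_{+90^\circ/-90^\circ}$$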

Positive SRM values indicate an improvement in identification of the target when it is spatially separated from the interferers compared with when the target and interferers are co-located; a larger SRM indicates greater benefit for speech intelligibility from spatial separation of the sources. Negative SRM indicates that a listener performed worse when the target and interferers were spatially separated than in the co-located condition.
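The threshold and SRM arithmetic described above can be sketched in a few lines of Python. This is an illustrative sketch only, not the analysis code used in the study: the function names and the SRT values are hypothetical, and the MLE threshold fit itself is not reproduced. The helper `staircase_convergence` uses the standard result that an n-down/1-up track converges on the level where the probability of n consecutive correct responses is 0.5, which gives the 79.4% point for the 3-down/1-up rule.

```python
from statistics import mean


def staircase_convergence(n_down):
    """Proportion correct targeted by an n-down/1-up adaptive track.

    The track is in equilibrium where p**n_down == 0.5.
    """
    return 0.5 ** (1.0 / n_down)


def average_srt(measurements):
    """Average the repeated SRT estimates (dB SPL) for one condition."""
    if not measurements:
        raise ValueError("need at least one SRT measurement")
    return mean(measurements)


def spatial_release(srt_front, srt_separated):
    """SRM in dB: co-located (Front) SRT minus spatially separated SRT."""
    return srt_front - srt_separated


# Hypothetical SRTs (dB SPL) for one listener; NOT data from the study.
srts = {
    "front": [56.0, 54.0],
    "asymmetrical": [50.0, 52.0, 51.0],
    "symmetrical": [55.0, 57.0],
}

# One averaged SRT per condition, as described in the procedure.
avg = {condition: average_srt(values) for condition, values in srts.items()}

srm_asym = spatial_release(avg["front"], avg["asymmetrical"])
srm_sym = spatial_release(avg["front"], avg["symmetrical"])

print(f"3-down/1-up target: {staircase_convergence(3):.3f}")
print(f"SRM asymmetrical: {srm_asym:+.1f} dB")
print(f"SRM symmetrical: {srm_sym:+.1f} dB")
```

With these made-up values the asymmetrical SRM is positive (a benefit from separation) and the symmetrical SRM is negative (worse performance when separated), which illustrates the sign convention described above.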

RESULTS

Spatial release from masking

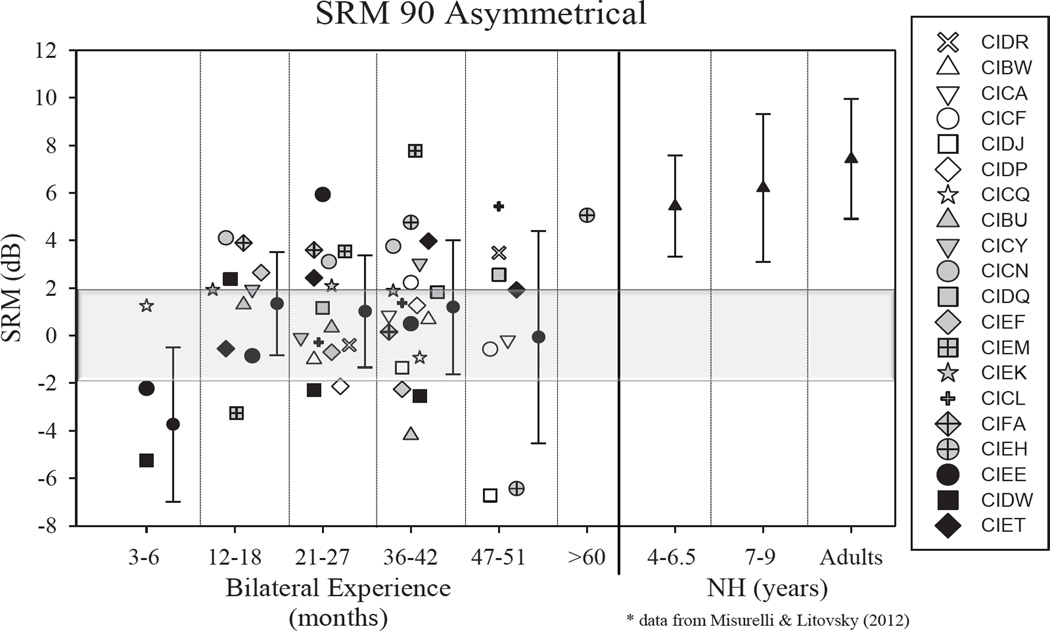

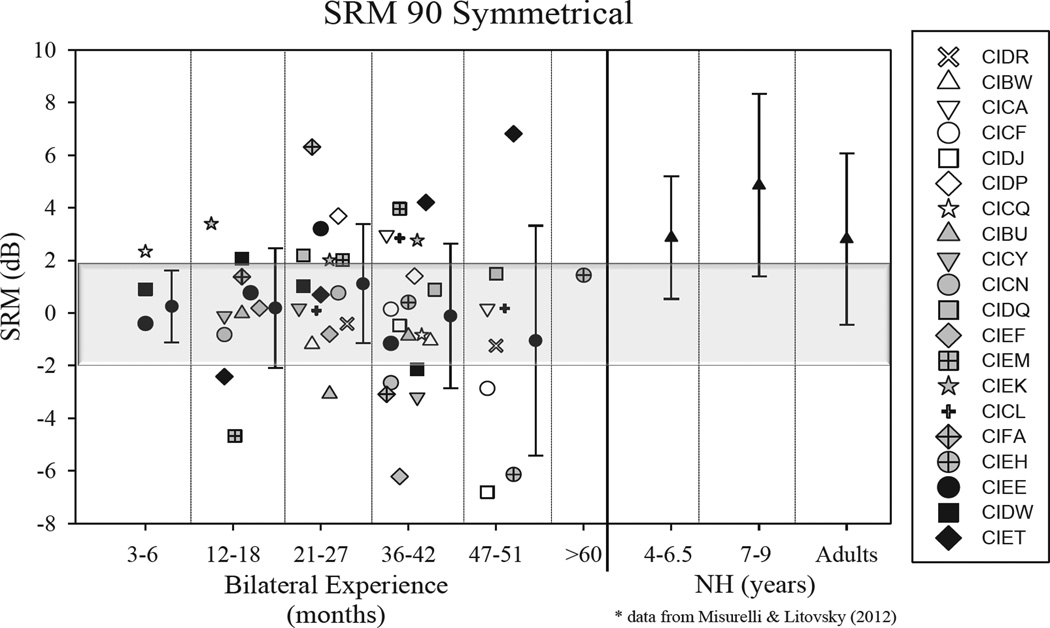

Figures 1 and 2 show SRMAsymmetrical and SRMSymmetrical, respectively. Data are compared for visits 1–4 (with number of months of bilateral experience demarcated), and each child’s data are shown using a different symbol. Data from children and adults with NH (used with permission from [12]) are shown to the right. In general, SRM in the BiCI group was smaller than the average SRM for any of the NH groups, even the youngest, who were ~4–6.5 years old. However, numerous children in the BiCI group showed SRM values that are clearly within the range seen in the NH groups, suggesting that they were able to take advantage of spatial cues to separate target speech from interferers. In Figures 1 and 2, the data in each panel show performance at annual testing intervals, starting a few months after bilateral activation and continuing through 4 years (and in one case >5 years). Some children showed improvement in SRM with additional experience (CIDR, CIDP, CIDQ, CIEM, CICL). Others showed a decline in SRM with additional bilateral experience (CICF, CIDJ, CIBU, CIEF), and others showed no change in SRM (CIBW, CICA, CICQ, CICN, CIEK). Finally, some children fluctuated across the testing intervals (CICY, CIFA, CIEH, CIEE, CIDW, CIET).

Figure 1.

Spatial release from masking (SRM) is shown for the Asymmetrical condition, as the difference in SRTs when the interfering speech sounds were both in front vs. both at 90° to one side. SRM values are plotted in dB, as a function of the number of months of bilateral experience. Each child’s data are shown in one symbol type. The mean (+/− SD) values are shown for each testing interval with the filled circles in each panel. To the right of the vertical line, data are replotted with permission from Misurelli and Litovsky (2012), taken from children with normal hearing (NH).

Figure 2.

Spatial release from masking (SRM) is shown for the Symmetrical condition, as the difference in SRTs when the interfering speech sounds were both in front vs. one on the right and one on the left, at 90°. SRM values are plotted in dB, as a function of the number of months of bilateral experience. Each child’s data are shown in one symbol type. The mean (+/− SD) values are shown for each testing interval with the filled circles in each panel. To the right of the vertical line, data are replotted with permission from Misurelli and Litovsky (2012), taken from children with normal hearing (NH).

Relationship between SRTs and SRM

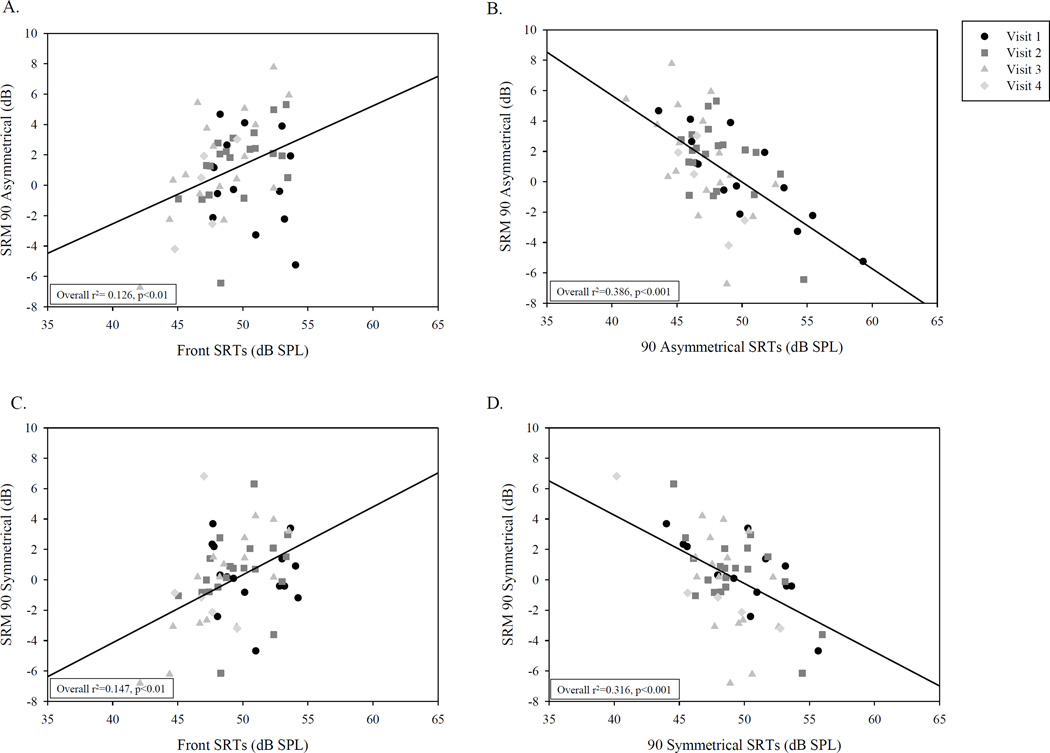

The data from all visits were first entered into overall regression analyses to examine the relationship between SRM (SRMAsymmetrical and SRMSymmetrical) and SRTs in either the co-located or the separated conditions. The overall regression is shown with a solid black line in Figure 3 (panels A–D). There were significant relationships between SRTs in the co-located (Front) condition and SRM for both SRMAsymmetrical (Fig. 3A; r² = .126, p < .01) and SRMSymmetrical (Fig. 3C; r² = .147, p < .01), suggesting that higher SRTs in the co-located condition were associated with larger release from masking. Results further showed that in the separated conditions, SRM was negatively related to SRTs for both SRMAsymmetrical (Fig. 3B; r² = .386, p < .001) and SRMSymmetrical (Fig. 3D; r² = .316, p < .001), suggesting that children who were able to benefit from spatial cues were generally likely to have lower SRTs in the separated conditions, i.e., they were able to hear speech in the presence of spatially separated interferers at lower SNRs. More specifically, for children with SRM values ≥2 dB, SRTs in the separated conditions tended to be <52 dB SPL for SRMAsymmetrical and <51 dB SPL for SRMSymmetrical. In contrast, children with higher SRTs tended to show SRM that was weak, absent or even negative.

Figure 3.

Each panel shows correlations between SRM and SRTs. The top and bottom panels include data from the Asymmetrical and Symmetrical conditions, respectively. The left and right panels include data with SRTs from the Front or side, respectively. Data from the four visits to the lab are shown with different symbol types.

Regression analyses were also conducted separately for the different visits (shown in Figure 3 with different symbols for visits 1–4, denoted as V1, V2, V3, V4). First, for SRMAsymmetrical, on V2 and V3 there was a significant relationship between SRTs in the co-located condition and SRM (V2: r² = .23, p < .05; V3: r² = .4, p < .01), suggesting that higher SRTs in the co-located condition were associated with larger release from masking. Furthermore, when children had higher SRTs in the Asymmetrical condition, there was a negative relationship with SRM, i.e., SRM was lower (V1: r² = .69, p < .01; V2: r² = .3, p = .01; V3: r² = .3, p < .05). This trend was not apparent in the V1 data, possibly because those children typically had 3–6 months of bilateral experience. Second, for SRMSymmetrical, on V3 only there was a significant relationship between SRTs in the co-located condition and SRM (r² = .7, p < .001). In addition, on V1, V2 and V4 there was a significant negative relationship between SRTs in the Symmetrical condition and SRM (V1: r² = .48, p < .01; V2: r² = .4, p < .01; V4: r² = .9, p < .02). The lack of a relationship on V3 is not easy to explain, and may be due to the very small variability in SRTs in the separated condition.
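For readers who wish to run this kind of analysis on their own data, the following Python sketch shows how a least-squares line and r² can be computed for paired SRT and SRM values. It is a hedged illustration, not the authors' analysis: the function name `linear_fit` and the five data points are hypothetical, and the p-values reported above are not computed here (they would require a t-test on the slope, for example via a statistics package).

```python
def linear_fit(x, y):
    """Return least-squares slope, intercept, and r^2 for paired samples."""
    n = len(x)
    if n != len(y) or n < 3:
        raise ValueError("need at least three paired observations")
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    # Sums of squares and cross-products about the means.
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    syy = sum((yi - mean_y) ** 2 for yi in y)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    if sxx == 0 or syy == 0:
        raise ValueError("x and y must both vary")
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    r_squared = sxy ** 2 / (sxx * syy)
    return slope, intercept, r_squared


# Hypothetical values for five listeners; NOT data from the study.
srt_separated = [48.0, 50.0, 52.0, 54.0, 56.0]  # dB SPL
srm = [5.0, 3.0, 3.0, 0.0, -1.0]                # dB

slope, intercept, r_squared = linear_fit(srt_separated, srm)
print(f"slope = {slope:.2f} dB per dB, r^2 = {r_squared:.2f}")
```

A negative slope in this sketch corresponds to the pattern described for the separated conditions, in which higher SRTs go with smaller SRM.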

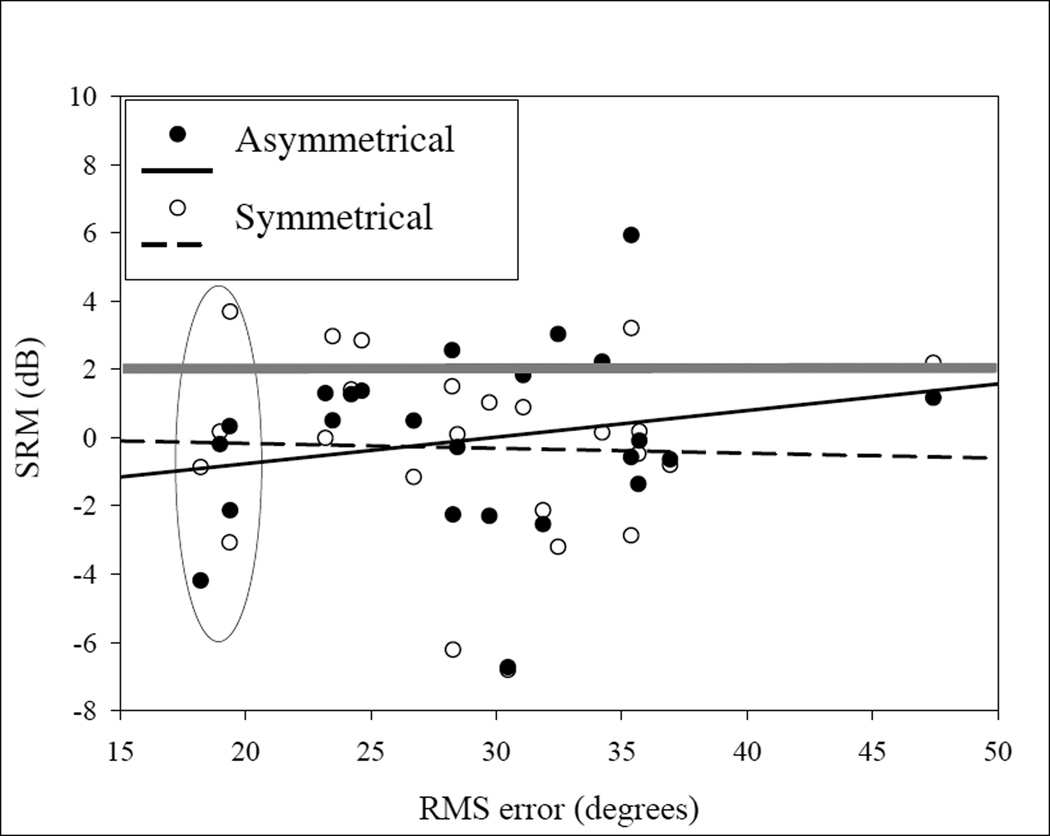

Relationship between SRM and sound localization

The SRM effect involves use of spatial cues. For the Asymmetrical condition, these include both binaural cues and monaural head shadow cues, whereas for the Symmetrical condition listeners must rely more heavily on binaural cues due to the large reduction of monaural cues [12, 13]. The question thus arises regarding the relationship between performance on SRM tasks and on sound localization tasks. That is, do children who demonstrate large benefit from spatial separation of target speech and interferers, as measured with SRM, also show reduced errors on sound localization tasks? If the same spatial hearing mechanisms govern performance on these two tasks, one might predict that performance on these tasks would be related. Figure 4 shows data from children who participated in localization testing as well as the SRM measures, on repeated visits to the lab. A regression analysis did not reveal a significant relationship between the two tasks, which is not surprising. A close examination of the data in Figure 4 reveals several interesting aspects of these data sets. First, note the oval placed around data points with RMS <20°; corresponding SRM values range from −4 dB (negative SRM) to +4 dB. This range of SRM values suggests that, although these children were among the best at localizing sound, they varied greatly in their ability to take advantage of spatial cues for source segregation. Similarly, note the data points above the grey line at SRM >2 dB; corresponding RMS values range from <20° to >37°, showing again that children with the largest SRM varied in localization abilities.

Figure 4.

SRM is plotted as a function of sound localization root-mean-square (RMS) error. Data are shown for SRM Asymmetrical and Symmetrical in different symbols.

Discussion

For over a decade, the fields of Audiology and Otology have embraced the medical practice of providing cochlear implants in both ears to children with bilateral profound deafness. The number of recipients was small to begin with (e.g., [17]), and there were concerns over the potential benefits that BiCIs would provide young children who are deaf. In addition, questions arose regarding the potential benefit of delaying treatment in one of the two ears until alternative forms of treatment for deafness become available. Examples of such treatments included hair cell regeneration [18], stem cells [19] and genetic therapy [20, 21].

Although progress in alternative treatments is being made in numerous animal models, the lack of current clinical trials with human subjects means that parents of young children are searching for treatment options that would maximize their children’s ability to communicate and learn in mainstream auditory environments. These environments include classrooms, playgrounds and cafeterias, as well as situations involving sports and recreational activities. In NH listeners the ability to function in complex, noisy environments is facilitated by the binaural auditory system. Binaural hearing refers to the ability of the auditory system to compare inputs from the two ears and to compute sound source location based on differences between the ears in the time of arrival and intensity of the sound. In addition to enabling accurate sound localization, the binaural system is useful for source segregation, such as hearing speech in noise. A hallmark finding is known as spatial release from masking (SRM), whereby benefits for speech understanding are observed when the target speech and background interferers are spatially separated, compared with when the target and interferers are spatially co-located. The ability to utilize spatial cues results from access to binaural cues, as well as monaural cues. That is, having access to sound in two ears is useful from the perspective of being able to listen with whichever ear has a better signal-to-noise ratio (SNR). For reviews on this topic see [2, 4, 22]. Today’s clinical treatments for providing input to both ears consist primarily of a cochlear implant in each ear; for children with residual hearing in one or both ears, combining implantation with acoustic hearing could have benefits as well [23, 24].

The present study examined SRM in a group of 20 children who were fitted with BiCIs and traveled to Madison, WI to participate in research. All but two of the 20 children were fitted with the first CIs by age 2 years 5 months. The two children who were fitted with the first CI after 4 years experienced a progressive hearing loss. All 20 children received their second CI by age 5 years 2 months. Children visited the lab during prescribed intervals based primarily on the amount of bilateral experience they had. SRM data from the first visit have been previously published ([12, 13]), and the present study focused on whether SRM undergoes significant changes as a result of additional bilateral experience. It must be noted that SRM is a derived measure computed from the differences in SRTs in two conditions: target and interferers co-located (Front) vs. spatially separated (Asymmetrical or Symmetrical). Thus, changes in SRM are complex, as they can potentially be affected by a change in the child’s ability to hear speech while the interferers are either in front, or on one side of the head (Asymmetrical) or on both sides of the head (Symmetrical), and also by a combination of these abilities. Changes in performance on these tasks could potentially be affected by changes in the mapping characteristics of the implant speech processor, the directionality of the microphones, and numerous other factors.

The children tested here showed variable effects when SRM was compared across multiple time intervals. Some children showed improvement (increased SRM), others declined or showed no change, and some of the children fluctuated. This finding is complex, as it suggests that SRM is not a stable factor in the children’s ability to function in complex acoustic environments. The SRTs obtained with the tests reported here are stable within each visit; that is, SRTs are consistent across repeated measures [12]. Thus the fluctuations, reductions or lack of improvement are likely to reflect the reality of how children with BiCIs contend with noisy environments. The causes of variability and fluctuations in SRM are difficult to interpret. One possible factor is that children’s implant speech processors are reprogrammed at various time intervals between testing. Changes are thus made to important settings, including sensitivity and volume, that can affect the dynamic range and thus the intensity at which children can hear speech in the presence of interfering speech. Similar effects may occur due to changes in programming of the microphone characteristics, which can have different effects on the relative levels of the speech and interferers, depending on what type of directional properties are included. In addition, as children become older and spend more time in classroom environments, many are fitted with assistive devices such as FM systems and become accustomed to the improved SNR under those listening conditions, which can affect their ability to hear speech in noise under conditions that do not provide the added benefit of the FM system.

Previous studies on BiCI users have also shown mixed results regarding SRM. In adult listeners, spatial benefits for speech understanding have been primarily attributed to monaural cues, such as ‘head shadow’ or the better-SNR effect [25, 26]. Attempts at restoring binaural benefits have shown weak or absent effects (e.g., [27, 28]). Similarly, previous studies in children with BiCIs have shown small effect sizes [29, 30], with larger variability than that observed in NH children.

The lack of consistent findings using the SRM measure raises a question regarding whether SRM is an appropriate measure for identifying improvements over time. As mentioned above, SRM is a derived value (relative SRTs in two conditions). Thus, one might ask whether stepping back and considering each measure in isolation (SRTs in each condition) might be revealing. The data presented here suggest that this is not the case, as the SRTs in Figure 3 do not show systematic change across annual testing intervals. A further step would then be to regard SRTs themselves as unsuitable for tracking change in performance over time. Future work might therefore consider measuring percent correct at numerous SNR values. The only caution is that these data are cumbersome to collect, in particular in younger children. Thus, perhaps alternative approaches to addressing the questions might arise from considering biological and clinical factors.

One factor not addressed in this study that could have contributed to outcomes is whether changes in performance over time are affected by asymmetry in performance between the ears. Such asymmetry might arise from implanting two ears at different intervals [31]. Also important to consider are potential differences in the neural health of surviving auditory nerve fibers in the two ears. It is also likely that, while the auditory measures are stable over repeated trials, the task engaged specific aspects of executive function that contributed to the results, including individual differences in the ability to inhibit interfering information, switch and/or sustain attention on the task, or other factors such as working memory [32, 33]. The data in this paper do not provide sufficient evidence to directly answer these questions. However, discussion of these issues might open opportunities for future studies that will address these questions more directly.

In this study, a question was asked regarding the relationship between performance on SRM tasks and sound localization; specifically, whether children who demonstrate large SRM are also more likely to show better localization (i.e., smaller RMS errors). There was no evidence of such a relationship, suggesting that the mechanisms involved in performance on these two tasks are not inherently related. This finding is not entirely surprising, as such a relationship has not been a major finding in prior studies on BiCI users. In NH listeners, there appears to be predictability between SRM and performance on binaural unmasking of tones (e.g., [34]). However, those tasks engage a healthy binaural system. This is unlike the situation in BiCI users, for whom there are known limitations regarding the provision of well-controlled binaural cues [4].

Acknowledgment

This research was supported by a grant from the NIH-NIDCD (R01 DC008365), and also in part by a core grant to the Waisman Center from the National Institute of Child Health and Human Development (P30 HD03352). The authors would like to thank lab members for their help in data collection, and logistics related to this project, especially Shelly Godar. We would also like to thank the children and adults who travel across the country, as well as those from the Madison community, for their participation in this research.

References

- 1.Litovsky RY. Review of recent work on spatial hearing skills in children with bilateral cochlear implants. Cochlear Implants Int. 2011;12(Suppl 1):S30–S34. doi: 10.1179/146701011X13001035752372. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 2.Litovsky R. Development of the auditory system. Handb Clin Neurol. 2015;129:55–72. doi: 10.1016/B978-0-444-62630-1.00003-2. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 3.Litovsky RY, et al. Studies on bilateral cochlear implants at the University of Wisconsin's Binaural Hearing and Speech Laboratory. J Am Acad Audiol. 2012;23(6):476–494. doi: 10.3766/jaaa.23.6.9. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 4.Kan A, Litovsky RY. Binaural hearing with electrical stimulation. Hear Res. 2015;322:127–137. doi: 10.1016/j.heares.2014.08.005. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 5.Grieco-Calub TM, Litovsky RY. Sound localization skills in children who use bilateral cochlear implants and in children with normal acoustic hearing. Ear Hear. 2010;31(5):645–656. doi: 10.1097/AUD.0b013e3181e50a1d. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 6.Van Deun L, van Wieringen A, Wouters J. Spatial speech perception benefits in young children with normal hearing and cochlear implants. Ear Hear. 2010;31(5):702–713. doi: 10.1097/AUD.0b013e3181e40dfe. [DOI] [PubMed] [Google Scholar]

- 7.Zheng Y, Godar S, Litovsky RY. Development of sound localization strategies in children with bilateral cochlear implants. PLoS One. 2015 doi: 10.1371/journal.pone.0135790. In press. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 8.Litovsky RY. Speech intelligibility and spatial release from masking in young children. J Acoust Soc Am. 2005;117(5):3091–3099. doi: 10.1121/1.1873913. [DOI] [PubMed] [Google Scholar]

- 9.Rothauser E, et al. IEEE recommended practice for speech quality measurements. IEEE Trans. Audio Electroacoust. 1969;17(3):225–246. [Google Scholar]

- 10. Hawley ML, Litovsky RY, Culling JF. The benefit of binaural hearing in a cocktail party: effect of location and type of interferer. J Acoust Soc Am. 2004;115(2):833–843. doi: 10.1121/1.1639908.

- 11. Hawley ML, Litovsky RY, Colburn HS. Speech intelligibility and localization in a multi-source environment. J Acoust Soc Am. 1999;105(6):3436–3448. doi: 10.1121/1.424670.

- 12. Misurelli SM, Litovsky RY. Spatial release from masking in children with normal hearing and with bilateral cochlear implants: effect of interferer asymmetry. J Acoust Soc Am. 2012;132(1):380–391. doi: 10.1121/1.4725760.

- 13. Misurelli M, Litovsky RY. Spatial release from masking in children with bilateral cochlear implants and with normal hearing: effect of target-interferer similarity. J Acoust Soc Am. 2015. In press. doi: 10.1121/1.4922777.

- 14. Johnstone PM, Litovsky RY. Effect of masker type and age on speech intelligibility and spatial release from masking in children and adults. J Acoust Soc Am. 2006;120(4):2177–2189. doi: 10.1121/1.2225416.

- 15. Litovsky RY, Johnstone PM, Godar SP. Benefits of bilateral cochlear implants and/or hearing aids in children. Int J Audiol. 2006;45(Suppl 1):S78–S91. doi: 10.1080/14992020600782956.

- 16. Garadat SN, Litovsky RY. Speech intelligibility in free field: spatial unmasking in preschool children. J Acoust Soc Am. 2007;121(2):1047–1055. doi: 10.1121/1.2409863.

- 17. Litovsky RY, et al. Bilateral cochlear implants in adults and children. Arch Otolaryngol Head Neck Surg. 2004;130(5):648–655. doi: 10.1001/archotol.130.5.648.

- 18. Rubel EW, Furrer SA, Stone JS. A brief history of hair cell regeneration research and speculations on the future. Hear Res. 2013;297:42–51. doi: 10.1016/j.heares.2012.12.014.

- 19. Ronaghi M, et al. Inner ear hair cell-like cells from human embryonic stem cells. Stem Cells Dev. 2014;23(11):1275–1284. doi: 10.1089/scd.2014.0033.

- 20. Atkinson PJ, et al. Neurotrophin gene therapy for sustained neural preservation after deafness. PLoS One. 2012;7(12):e52338. doi: 10.1371/journal.pone.0052338.

- 21. Richardson RT, Atkinson PJ. Atoh1 gene therapy in the cochlea for hair cell regeneration. Expert Opin Biol Ther. 2015;15(3):417–430. doi: 10.1517/14712598.2015.1009889.

- 22. Middlebrooks JC, Green DM. Sound localization by human listeners. Annu Rev Psychol. 1991;42:135–159. doi: 10.1146/annurev.ps.42.020191.001031.

- 23. Turner CW, et al. Impact of hair cell preservation in cochlear implantation: combined electric and acoustic hearing. Otol Neurotol. 2010;31(8):1227–1232. doi: 10.1097/MAO.0b013e3181f24005.

- 24. Gifford RH, et al. Combined electric and contralateral acoustic hearing: word and sentence recognition with bimodal hearing. J Speech Lang Hear Res. 2007;50(4):835–843. doi: 10.1044/1092-4388(2007/058).

- 25. van Hoesel RJM. Exploring the benefits of bilateral cochlear implants. Audiol Neurootol. 2004;9(4):234–246. doi: 10.1159/000078393.

- 26. van Hoesel RJ, Litovsky RY. Statistical bias in the assessment of binaural benefit relative to the better ear. J Acoust Soc Am. 2011;130(6):4082–4088. doi: 10.1121/1.3652851.

- 27. Loizou PC, et al. Speech recognition by bilateral cochlear implant users in a cocktail-party setting. J Acoust Soc Am. 2009;125(1):372–383. doi: 10.1121/1.3036175.

- 28. van Hoesel RJM, et al. Binaural speech unmasking and localization in noise with bilateral cochlear implants using envelope and fine-timing based strategies. J Acoust Soc Am. 2008;123(4):2249–2263. doi: 10.1121/1.2875229.

- 29. Van Deun L, et al. Earlier intervention leads to better sound localization in children with bilateral cochlear implants. Audiol Neurootol. 2010;15(1):7–17. doi: 10.1159/000218358.

- 30. Sheffield SW, et al. Availability of binaural cues for pediatric bilateral cochlear implant recipients. J Am Acad Audiol. 2015;26(3):289–298. doi: 10.3766/jaaa.26.3.8.

- 31. Gordon KA, Wong DD, Papsin BC. Bilateral input protects the cortex from unilaterally-driven reorganization in children who are deaf. Brain. 2013;136(Pt 5):1609–1625. doi: 10.1093/brain/awt052.

- 32. Houston DM, et al. The ear is connected to the brain: some new directions in the study of children with cochlear implants at Indiana University. J Am Acad Audiol. 2012;23(6):446–463. doi: 10.3766/jaaa.23.6.7.

- 33. Beer J, Pisoni DB, Kronenberger W. Executive function in children with cochlear implants: the role of organizational-integrative processes. Volta Voices. 2009;16(3):18–21.

- 34. Culling JF, Hawley ML, Litovsky RY. The role of head-induced interaural time and level differences in the speech reception threshold for multiple interfering sound sources. J Acoust Soc Am. 2004;116(2):1057–1065. doi: 10.1121/1.1772396.