Abstract

Predictive or treatment selection biomarkers are usually evaluated in a subgroup or regression analysis with focus on the treatment-by-marker interaction. Under a potential outcome framework (Huang, Gilbert and Janes [Biometrics 68 (2012) 687–696]), a predictive biomarker is considered a predictor for a desirable treatment benefit (defined by comparing potential outcomes for different treatments) and evaluated using familiar concepts in prediction and classification. However, the desired treatment benefit is un-observable because each patient can receive only one treatment in a typical study. Huang et al. overcome this problem by assuming monotonicity of potential outcomes, with one treatment dominating the other in all patients. Motivated by an HIV example that appears to violate the monotonicity assumption, we propose a different approach based on covariates and random effects for evaluating predictive biomarkers under the potential outcome framework. Under the proposed approach, the parameters of interest can be identified by assuming conditional independence of potential outcomes given observed covariates, and a sensitivity analysis can be performed by incorporating an unobserved random effect that accounts for any residual dependence. Application of this approach to the motivating example shows that baseline viral load and CD4 cell count are both useful as predictive biomarkers for choosing antiretroviral drugs for treatment-naive patients.

Key words and phrases: Conditional independence, counterfactual, ROC regression, sensitivity analysis, treatment effect heterogeneity, treatment selection

1. Introduction

Much of contemporary medical research is focused on treatment effect heterogeneity, that is, the fact that the same treatment can have different effects on different patients. The increasing awareness of treatment effect heterogeneity has motivated the development of predictive biomarkers for identifying the subpopulation of patients who would actually benefit from a new treatment [e.g., Simon (2008, 2010)]. Classical examples of predictive biomarkers include genetic markers for cancer treatment, such as the OncoType Dx multi-gene score for breast cancer [Paik et al. (2004)] and the K-RAS gene expression level for colorectal cancer [Karapetis et al. (2008)]. This article is motivated by a new and growing interest in the possibility of using baseline viral load or CD4 cell count as a predictive biomarker for treating human immunodeficiency virus type 1 (HIV-1).

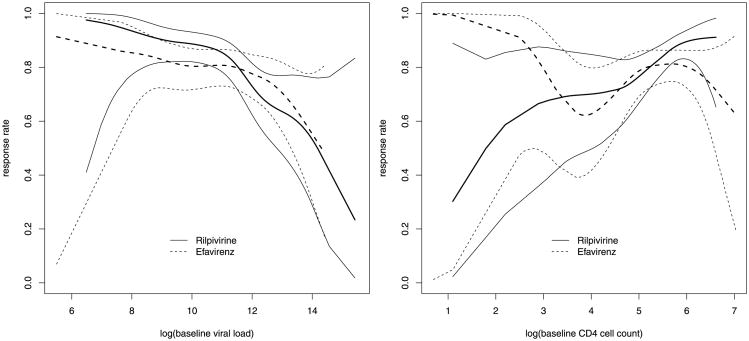

Evaluation of a predictive biomarker is usually based on a subgroup or regression analysis comparing treatment effects on different subpopulations defined by the biomarker [e.g., Gail and Simon (1985), Pocock et al. (2002), Russek-Cohen and Simon (1998)]. Under this approach, the performance of a predictive biomarker is measured by the interaction between marker value and treatment assignment in a regression model for the clinical outcome of interest, which will be referred to as an outcome model. For example, consider the THRIVE study, a phase 3, randomized, noninferiority trial comparing rilpivirine, a newly developed nonnucleoside reverse transcriptase inhibitor, with efavirenz in treatmentnaive adults infected with HIV-1 [Cohen et al. (2011)]. The outcome of primary interest is a binary indicator of virologic response at week 48 of treatment (see Section 4 for details). Our consideration of baseline viral load and CD4 cell count as predictive biomarkers (for choosing between efavirenz and rilpivirine) is motivated by Figure 1, which shows nonparametric estimates of treatment-specific response rates as functions of marker value, separately for each biomarker. Figure 1 suggests a qualitative interaction between treatment and each biomarker, with rilpivirine favored over efavirenz for higher values of baseline CD4 cell count and lower values of baseline viral load. Because the statistical significance in Figure 1 is not straightforward to assess, a simple logistic regression analysis that includes treatment, marker and their interaction is performed (separately for each marker), and the resulting p-value for the treatment-by-marker interaction is 0.059 for viral load and 0.031 for CD4 cell count.

Fig. 1. Nonparametric regression analysis of the THRIVE data: smoothed estimates (thicker lines) and pointwise 95% confidence intervals (thinner lines) for treatment-specific response rates as functions of baseline viral load (left) and CD4 cell count (right).

While informative about possible interactions, Figure 1 is less transparent about the predictive performance of these biomarkers and their comparison. Huang, Gilbert and Janes (2012) point out that a strong interaction is not sufficient for adequate performance of a predictive biomarker, that the scale of the interaction coefficient depends on the functional form of the outcome model, and that the interaction-based approach is ill-suited for developing combination markers. These authors also propose a potential outcome framework where a predictive biomarker is—as the term suggests—treated as a predictor for a desirable treatment benefit. Note that a treatment benefit is necessarily the result of comparing potential outcomes for different treatments applied to the same patient. Under this perspective, predictive biomarkers should be evaluated using familiar measures in prediction and classification [e.g., Pepe (2003), Zhou, Obuchowski and McClish (2002), Zou et al. (2011)]. Specifically, one should consider the true and false positive rates of a binary marker and the receiver operating characteristic (ROC) curve for a continuous marker. This approach allows different markers to be compared on the same scale and facilitates the development of combination markers.

The objective of this article is to evaluate and compare baseline viral load and CD4 cell count as predictive biomarkers under the potential outcome framework. It is important to distinguish this objective from the related problems of identifying potential markers, combining several markers into a hybrid marker, choosing the cutoff point for a given marker and, more generally, developing an individualized treatment strategy. Variable selection techniques such as lasso-based methods have been used to select and combine genetic markers [e.g., Tian et al. (2012)]. Nonparametric multivariate methods [e.g., Foster, Taylor and Ruberg (2011), Qian and Murphy (2011), Su et al. (2008)] and semiparametric methods [e.g., Zhang et al. (2012)] have been used to develop a treatment rule (i.e., a set of criteria for selecting patients), which can be considered a binary hybrid marker obtained by combining multiple markers. A natural question that arises from the THRIVE study is whether or how to combine baseline viral load and CD4 cell count into a hybrid marker with improved predictive accuracy. While that question is beyond the scope of this article, we note that the potential outcome framework and the proposed methods are applicable to any given marker. Once a hybrid marker is developed, it can be evaluated and compared to the individual markers in the same framework using the same methods.

An analytical challenge for the potential outcome framework is that the desired treatment benefit, which involves potential outcomes under different treatments, is usually unobservable because each patient can receive only one treatment in a typical study. A possible exception to this limitation is a cross-over study, which has its own issues [e.g., Poulson, Gadbury and Allison (2012)] and will not be discussed in this article. In a typical clinical study such as the THRIVE study, the standard methodology in prediction and classification is not directly applicable to a predictive biomarker. To address this issue and the resulting identification problem, Huang, Gilbert and Janes (2012) make a monotonicity assumption, namely, that one treatment dominates the other in all individual patients, and suggest a sensitivity analysis for possible departures from the monotonicity assumption. While the monotonicity assumption may be plausible in some situations such as vaccine trials, it may be less appealing as a starting point in other situations. In the THRIVE study, for example, the presence of a qualitative interaction (in the sense that neither treatment has a higher response rate for all realistic marker values) implies that neither treatment is dominant in all patients. Additionally, the approach of Huang, Gilbert and Janes (2012) is developed for a binary outcome and not readily extensible to other types of outcomes.

In this article, we propose alternative methods that do not require monotonicity or assume a binary outcome. Our first step is to account for the dependence between potential outcomes (for different treatments applied to the same patient) by adjusting for relevant covariates, such as demographic variables and baseline characteristics. If the set of measured covariates is sufficient for explaining the dependence between potential outcomes, the aforementioned performance measures can then be identified by assuming conditional independence of potential outcomes given covariates. Possible violations of this assumption can be addressed by introducing a random effect to account for residual dependence. In the next section we formulate the problem in terms of potential outcomes and provide a general rationale for the proposed approach. The proposed methods are then described in Section 3 and applied to the THRIVE study in Section 4. The article ends with a discussion in Section 5. Some technical details are provided in a supplemental article [Zhang et al. (2014)].

2. Notation and rationale

Suppose a randomized clinical trial is conducted to compare an experimental treatment with a control treatment, which may be a placebo or a standard treatment, with respect to a clinical outcome of interest, which may be discrete or continuous. Because there is only one outcome of primary interest in the THRIVE study, we will work with a scalar outcome unless otherwise noted. However, most of our methodology is readily applicable to a vector-valued outcome, with the exception of the sensitivity analysis in Section 3.2 (described fully in Web Appendix B). For a generic patient in the target population, let Y(t) denote the potential outcome that will result if the patient receives treatment t (0 for control; 1 for experimental). Note that the Y(t), t = 0, 1, cannot both be observed at a given time. Let T denote the treatment assigned randomly to a study subject, thus T is a Bernoulli variable independent of all baseline variables. Without considering noncompliance, which is negligible in the THRIVE study, we assume that T is also the actual treatment given to the subject, and write Y = Y(T) for the actual outcome. Should noncompliance become a major issue, we could take an intent-to-treat perspective and compare treatment assignments or use analytical techniques to recover the actual treatment effect [e.g., van der Laan and Robins (2003)]. We assume that large values of Y are desirable. Where necessary, the subscript i = 1, …, n will be attached to random variables to denote individual patients in the trial.

Our interest is in evaluating a predictive biomarker Z, a baseline variable which may be binary or continuous, with higher marker values supporting the use of the new treatment. The biomarker Z is intended to identify the subpopulation of patients who would benefit from the new treatment relative to the control. It can be a continuous variable as in our motivating example or a binary one such as a treatment rule developed using nonparametric multivariate methods. Let the desired treatment benefit be indicated by B = I{(Y(0), Y(1)) ∈ ℬ}, where I{·} is the indicator function and ℬ is the set of desirable outcomes. Note that B is by definition a comparison of the two potential outcomes. For a binary outcome, B might be an indicator for Y(0) < Y(1) or Y(0) ≤ Y(1), depending on which treatment is preferable with identical (efficacy) outcomes. In our example, we set B = I{Y(0) ≤ Y(1)} because rilpivirine is thought to have a better safety profile and will likely be preferred over efavirenz when their efficacy outcomes are identical. For a continuous outcome, we might take ℬ = {(y0, y1) : y1–y0 > δ}, where δ reflects considerations of cost, clinical significance and possibly the safety profiles of the two treatments (if not incorporated into a vector-valued outcome). For an ordered categorical outcome, the definition of B may be more complicated. We shall take the definition of B as given and focus on the evaluation of Z for predicting B. The target B is an intrinsic characteristic of an individual patient, which suggests that Z can be evaluated using well-known quantities in prediction and classification [e.g., Pepe (2003), Zhou, Obuchowski and McClish (2002), Zou et al. (2011)]. For a binary marker, it makes sense to consider the true and false positive rates, defined as TPR = P(Z = 1|B = 1) and FPR = P(Z = 1 |B = 0), respectively. For a continuous marker, it is customary to consider the ROC curve defined as

Here and in the sequel, we use F to denote a generic (conditional) distribution function, with the subscript indicating the random variable(s) concerned. The ROC curve is simply a plot of TPR versus FPR for classifiers of the form I(Z > z), with the threshold z ranging over all possible values.

Because B is never observed, the existing methodology for evaluating predictors, which generally assumes that B can be observed, cannot be used directly to evaluate a predictive biomarker. Nonetheless, we note that TPR, FPR and ROC are all determined by FZ|B, which can be recovered using Bayes' rule from the marginal distribution of Z and the conditional probability πZ(z) = P(B = 1 |Z = z). For a binary marker, 1 – πZ(0) and πZ(1) are negative and positive predictive values, respectively,

| (1) |

where τ = P(Z = 1). For a continuous marker, we have

| (2) |

Since Z is fully observed, the identifiability of FZ|B would follow from that of πZ(z). Once an estimate of πZ(z) is available, it can be substituted into the above displays together with an empirical estimate of τ or FZ, depending on the nature of Z.

Despite its simple appearance, πZ(z) is not straightforward to estimate. In fact, for any conceivable form of ℬ, the quantity πZ(z) = P{(Y(0), Y(1)) ∈ ℬ|Z = z} is not empirically identifiable because it involves the joint distribution of Y(0) and Y(1) given Z = z. Owing to randomization, it is straightforward to identify

for each t ∈ {0, 1}, and to estimate it from a regression analysis for Y given T and Z. However, the dependence structure of Y(0) and Y(1) given Z = z is not identifiable from the data [e.g., Gadbury and Iyer (2000)], which is also known as the fundamental problem of causal inference [Holland (1986)]. Because πZ(z) is not determined by the “marginals” Ft|Z (t = 0, 1), its identification and estimation require additional information or assumptions about the dependence between Y(0) and Y(1) given Z = z. For a binary outcome, this can be achieved by assuming monotonicity [i.e., Y(0) ≤ Y(1) with probability 1] as in Huang, Gilbert and Janes (2012). The monotonicity assumption corresponds to maximal positive dependence of Y(0) and Y(1).

For a general outcome and without assuming monotonicity, we develop alternative methods by adapting the techniques of Dodd and Pepe (2003) and Zhang et al. (2013). To account for the dependence of Y(0) and Y(1), we start by conditioning on relevant covariates that are associated with both outcomes. Let X denote a vector of such covariates measured at baseline, which may include prognostic factors and effect modifiers. In the THRIVE study, X may include gender, race, and baseline age and body mass index. We include Z as a component of X and write X = (Z, W), where W consists of the additional baseline covariates. Writing πX(x) = P{(Y(0), Y(1)) ∈ ℬ|X = x}, a conditioning argument yields

| (3) |

Because FW|Z is empirically identifiable and estimable, the challenge now is to identify and estimate πX(x).

If X is sufficient for explaining the dependence between Y(0) and Y(1), then we can expect that

| (4) |

that is, that Y(0) and Y(1) are conditionally independent given X. This assumption cannot be verified with the observed data and must be based on external information. Under assumption (4), the joint distribution of Y(0) and Y(1) given X is determined by the “marginals”

which are straightforward to identify and estimate. In reality, assumption (4) can be violated because X may not explain all the dependence between Y(0) and Y(1). Such violations can be examined in a sensitivity analysis based on a latent variable that accounts for any residual dependence between Y(0) and Y(1). Under this approach, assumption (4) is relaxed as follows:

| (5) |

where U is a subject-specific latent variable that is independent of X. In other words, U represents what is missing from X that makes assumption (4) break down. Assumption (5) alone is not sufficient to identify πX(x) because U is unobserved. However, by specifying certain quantities related to U, we can perform a sensitivity analysis based on assumption (5), as we demonstrate in the next section.

3. Methodology

We now describe methods for estimating the aforementioned performance measures (TPR, FPR and ROC). As indicated earlier, we will start by estimating πX(x) under assumptions (4) and (5). This can be done using a direct approach and an indirect approach, to be described in Sections 3.1 and 3.2, respectively. The direct and indirect approaches are based on models for πX(x) and FY|T,X(y|t, x), respectively, which we refer to as benefit and outcome models. A benefit model is directly informative about πX(x) and thus more interpretable in the present context, while an outcome model is more familiar to practitioners and easier to estimate and validate using standard techniques. Further comments comparing the two approaches are given at the end of Section 3.2 after the approaches are described and in Section 5. In Section 3.3 we show how to convert an estimate of πX(x) into one of πZ(z). Estimates of the performance measures of interest are given in Section 3.4.

3.1. Direct estimation of πX(x) based on a Benefit model

A benefit model is a parametric model for P(B = 1|X) = P{(Y(0), Y(1)) ∈ ℬ|X}, such as the following generalized linear model (GLM):

| (6) |

where α = (α1, α′X)′ is the regression parameter and ψ is an inverse link function. Since B is binary, the probit and logit links are natural choices.

Suppose the conditional independence assumption (4) holds. To gain some intuition, consider a discrete X taking values in {x1, …, xK}. Within each stratum defined by X = xk, assumption (4) implies that Y(0) and Y(1) are independent of each other, as if they arise from different subjects, which we assume are independent. In other words, given that Xi = Xj, the natural pair (Yi(0), Yi(1)) is identically distributed as the artificial pair (Yi(0), Yj(1)). If Ti = 0 and Tj = 1, then (Yi(0), Yj(1)) is observable as (Yi, Yj), so that πX(xk) = E(B|X = xk) can be estimated by

| (7) |

where Bij = I{(Yi, Yj) ∈ ℬ}, 𝒮tk = {i : Ti = t, Xi = xk}, and ntk denotes the size of 𝒮tk (t = 0, 1; k = 1, …, K).

The question is how to generalize this idea to a nondiscrete X. To follow the logic of (7), one would need to find subjects from different treatment groups with the same value of X, which becomes difficult when X has continuous components (4 in our example). To overcome that problem, we borrow ideas from Dodd and Pepe (2003), who consider semiparametric regression for the area under the ROC curve, and work with an expanded model given by

| (8) |

where β = (β1, β′X, β′dX)′, i ∈ 𝒮0k and j ∈ 𝒮1k. The new features of model (8) relative to model (6) are introduced for the sole purpose of estimating α. Our research question does not pertain to the left-hand side of (8) or the regression coefficient βdX. However, assumption (4) implies that P(Bij = 1|Xi = Xj = x) = P(B = 1|X = x). Thus, when Xi = Xj, model (8) reduces to model (6) with

| (9) |

In that sense, model (8) is a helper model that allows us to estimate model (6) with the observed data. Let 𝒮t = {i : Ti = t} and let nt denote the size of 𝒮t (t = 0, 1). Then the regression parameter β in model (8) can be estimated by solving the equation

| (10) |

where

As suggested by Dodd and Pepe (2003), equation (10) need not include all possible pairs (i, j); it could be based on a subset of pairs such that ‖Xi – Xj‖ < ε, where ‖·‖ denotes Euclidean norm, for some ε > 0. The choice of ε represents a bias-variance trade-off, where a larger ε leads to better efficiency and stability and also more sensitivity to the last component of model (8).

The approach just described relies heavily on the conditional independence assumption (4), which relates model (8) to model (6) through equation (9). Equation (9) does not hold when assumption (4) is violated. However, under alternative assumptions, we have

| (11) |

for a scalar γ. The key assumptions for (11) include assumption (5) and a GLM-like structure analogous to model (6):

| (12) |

where . In Section A of the supplemental article [Zhang et al. (2014)], we state additional assumptions that lead to (11) and give an expression for γ. Since β is identifiable and estimable using the techniques described earlier, α can be estimated as soon as γ is known or estimated. Unfortunately, γ is unidentifiable from the observed data. For the probit and logit links, we show in Section A of the supplemental article [Zhang et al. (2014)] that γ can take any value greater than 2−1/2 ≈ 0.71. Thus, when assumption (4) is in doubt, we can perform a sensitivity analysis based on specified values of γ ∈ (2−1/2, ∞), with γ = 1 corresponding to conditional independence.

3.2. Indirect estimation of πX(x) based on an outcome model

An outcome model is a parametric model, say, FY|T,X(y|t, x; θ), for the conditional distribution of Y given T and X, specified up to a finite-dimensional parameter θ. Let fY|T,X(y|t, x; θ) denote the associated probability density or mass function. A typical outcome model would be a GLM with the following mean structure:

| (13) |

where θ = (θ1, θT, θ′X, θ′TX)′ and ψ is an inverse link function. The parameter θ can be estimated by maximizing the likelihood , and the resulting maximum likelihood estimate (MLE) will be denoted by θ̂. Because of randomization, the outcome model FY|T,X(y|t, x; θ) implies that

which can be estimated by substituting θ̂ for θ.

Under the conditional independence assumption (4), the joint distribution

is identified as

and estimated by replacing θ with θ̂. Write

where the superscript CI stands for conditional independence. The corresponding estimate of πX(x) is then given by

When assumption (4) is in doubt, we can perform a sensitivity analysis based on assumption (5), which implies that

| (14) |

where we generalize the previous notation in an obvious way (with U as an additional conditioning variable). This suggests that we specify a model, say, FY|T,X,U(y|t, x, u; θ*), for the conditional distribution of Y given (T, X, U), with a finite-dimensional parameter θ*. Analogous to the GLM (13), we work with a generalized linear mixed model (GLMM) with

| (15) |

where . The GLMM is not completely identified without additional information, and we propose a sensitivity analysis based on specified values of (or, rather, ), which is described in Section B of the supplemental article [Zhang et al. (2014)].

It is worth mentioning that the random effect U has a different interpretation here than in Section 3.1. In model (15), U represents an unobserved prognostic factor which affects both potential outcomes in the same direction; a change in U may or may not have much effect on the treatment benefit B, depending on the precise definition of B and model (15). Although one could incorporate a random treatment effect into model (15), the resulting method will likely become very complicated. In model (12), U acts like an effect modifier in that a change in U leads directly to a change in the probability of a desirable treatment benefit. (Here we use the term effect modifier in a heuristic sense which may or may not agree with an interaction-based definition.) Thus, aside from modeling assumptions, the direct and indirect approaches also differ in how they deal with departures from assumption (4). The indirect approach is designed to address violations of assumption (4) due to an unmeasured prognostic factor, whereas the direct approach is more appropriate for violations of assumption (4) due to an unmeasured effect modifier.

3.3. Estimation of πZ(z)

Equations (1) and (2) suggest that evaluation of a predictive biomarker Z can be based on πZ(z) = P(B = 1|Z = z) and the marginal distribution of Z. Because the latter is straightforward to estimate, this section is focused on estimation of πZ(z) with a given estimate of πX(x), say, π̂X(x), which may be obtained using any one of the proposed methods. For a binary marker, equation (3) suggests that πZ(z) can be estimated by . We therefore assume that Z is continuous in the rest of this section.

We propose to estimate πZ(z) by substituting an estimate of FW|Z into equation (3). One could specify a parametric model for FW|Z, however, this can be difficult because the dimension of W can be rather large (5 in our example). We therefore exploit the fact that Z is only one-dimensional and employ nonparametric regression techniques in estimating FW|Z. Let ξ : ℝ → [0, ∞) be a kernel function and λ > 0 a bandwidth parameter. Then we can estimate FW|Z(w|z) by

This, together with π̂X(x), can be substituted into equation (3) to estimate πZ(z) as

An important question here is how to choose the bandwidth λ, for which we propose a cross-validation approach. The estimate π̂Z(z) can be regarded as a nonparametric regression of π̂X(Z, W) on Z, and its predictive accuracy can be assessed by comparing the “response” B̃i = π̂X(Xi) with the estimate B̂i = π̂Z(Zi). We propose to partition the sample into a training set and a validation set and choose a value of λ that minimizes the average of (B̃i – B̂i)2 in the validation set with π̂Z(z) estimated from the training set using bandwith λ.

3.4. Estimation of TPR, FPR and ROC

Given π̂Z(z) from Section 3.3, the parameters of interest can be estimated using equations (1) and (2). For a binary marker, this leads to

Where . For a continuous marker, we have

where

An asymptotic analysis of these estimates can be rather tedious, especially because π̂Z(z) involves smoothing and cross-validation. For inference, we recommend the use of bootstrap confidence intervals. To account for all variability in the estimates, the entire estimation procedure, including bandwidth selection based on cross-validation, should be repeated for each bootstrap sample.

4. Application to the THRIVE study

We now apply the methods of Section 3 to the THRIVE study introduced in Section 1, a randomized, double-blind, double-dummy, noninferiority trial at 98 hospitals or medical centers in 21 countries [Cohen et al. (2011)]. The THRIVE study compared rilpivirine with efavirenz for treating HIV-1 in treatment-naive adults, in the presence of common background nucleoside or nucleotide reverse transcriptase inhibitors (N[t]RTIs). The study enrolled 680 adult patients who were naive to antiretroviral therapy, with a screening viral load of at least 5000 copies per ml and viral sensitivity to N(t)RTIs. The patients were randomized in a 1 : 1 ratio to receive oral rilpivirine 25 mg once daily or efavirenz 600 mg once daily, in addition to an investigator-selected regimen of background N(t)RTIs.

The outcome of interest to us is virologic response (viral load below 50 copies/ml) at week 48 of treatment, with patient discontinuation (about 5%) counted as failure. The observed virologic response rates are 86% and 82% in the rilpivirine and efavirenz groups, respectively, and the difference between the two groups (3.5%; 95% CI: −1.7–8.8%) meets a prespecified noninferiority criterion based on a 12% margin (p < 0.0001). Thus, rilpivirine appears comparable to, if not better than, efavirenz in terms of population-level efficacy. However, Figure 1 suggests that individual patients respond differently to the two therapies and that baseline viral load and CD4 cell count could be used as predictive biomarkers. As indicated earlier, we will for safety reasons define individual-level treatment benefit as B = I{Y(0) ≤ Y(1)}, where Y(0) and Y(1) denote potential outcomes for efavirenz and rilpivirine, respectively.

Baseline viral load and CD4 cell count are both log-transformed before entering the benefit and outcome models as covariates. In addition to these biomarkers, the covariate vector X also includes gender, race (black, white or other), age and body mass index at baseline. For the direct approach of Section 3.1, the benefit model is a logistic regression model given by (6), with the aforementioned covariates as linear terms (and no interactions), and the helper model is given by (8) with the same link. For the indirect approach of Section 3.2, the outcome model is a logistic regression model similar to (13) except that interactions of X with T are limited to the two biomarkers. The selection of covariates and interactions in these models is based on subject matter knowledge and not on statistical tests. Estimation of model (8) is based on the 1% of pairs (of control and experimental patients) that are most similar in terms of ‖Xi – Xj‖, as suggested by Dodd and Pepe (2003). (Other proportions, from 0.001 to 1, have been attempted without yielding a material difference.) Under the indirect approach, the simplified method in Web Appendix B is used to estimate θ* for a given . In any case, estimates of πX(x) are converted into estimates of πZ(z) using the kernel smoothing method of Section 3.3, with a Gaussian kernel and a cross-validated bandwidth. The cross-validation is based on a 1 : 1 random partition of the sample into a training set and a validation set, and the bandwidth is chosen among {2l sd(Z) : l = −5, …, 5} using the minimum mean squared error criterion. The formulas of Section 3.4 are used to obtain estimates of ROC curves, and the trapezoidal rule is then employed in calculating the associated AUCs. The above procedure is performed for both biomarkers on the original sample as well as 200 bootstrap samples. Pointwise 90% confidence intervals for ROC curves are obtained using a simple bootstrap percentile method, and bootstrap standard errors are used for inference on AUCs (and AUC differences between the two biomarkers).

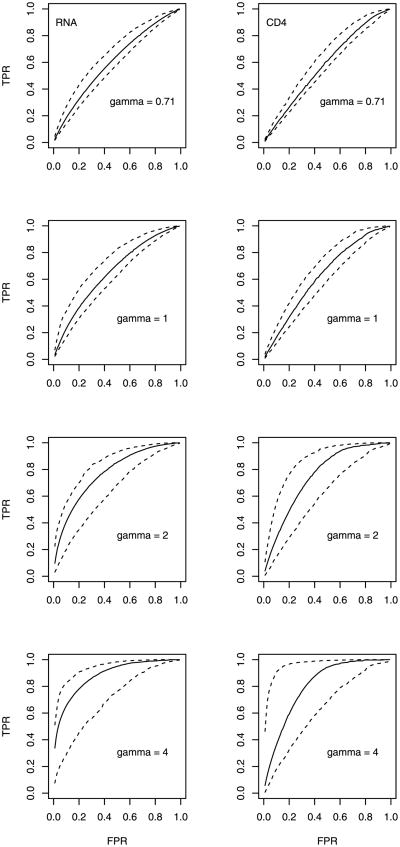

Figure 2 gives a side-by-side comparison of ROC curves for the two biomarkers, estimated using the direct approach of Section 3.1 with γ = 0.71, 1, 2, 4. The value γ = 1 corresponds to assumption (4) of conditional independence, while the value 0.71 is a theoretical lower bound. The associated AUC estimates and standard errors are shown in the upper half of Table 1. Both Figure 2 and the relevant portion of Table 1 show that the two biomarkers are useful as predictive biomarkers, with ROC curves above the diagonal and AUCs greater than 0.5 after accounting for sampling variability. The performance of each biomarker does appear to depend heavily on the value of γ; the AUC estimate increases dramatically with increasing γ. This pattern is confirmed by additional analyses based on other values of γ (results not shown). Given the remarks at the end of Section 3.2, these results suggest that evaluation of a predictive biomarker can be rather sensitive to an unmeasured effect modifier. On the other hand, for each value of γ, the AUC estimate for baseline viral load is higher than that for baseline CD4 cell count, although the difference is not statistically significant. These results suggest that comparison of predictive biomarkers may be less sensitive to the choice of γ, even though this particular data set does not provide strong evidence that baseline viral load is better than baseline CD4 cell count as a predictive biomarker. Whether we are evaluating a single marker or comparing two markers, there is an obvious relationship between increasing γ and greater variability in the estimates, as indicated by widening confidence intervals in Figure 2 and increasing standard errors in Table 1.

Fig. 2. ROC analysis of the THRIVE data using the direct approach: estimated ROC curves (solid lines) and 90% pointwise confidence intervals (dashed lines) for baseline viral load (RNA, left) and CD4 cell count (right), with different values of γ.

Table 1.

AUC analysis of the THRIVE data: point estimates and bootstrap standard errors for the AUCs of baseline viral load (RNA) and CD4 cell count as well as their difference (RNA–CD4), obtained using the direct approach of Section 3.1 and the indirect approach of Section 3.2 with sensitivity parameters γ and , respectively

| Sensitivity parameter | Point estimate | Standard error | |||||

|---|---|---|---|---|---|---|---|

|

|

|

||||||

| RNA | CD4 | Diff. | RNA | CD4 | Diff. | ||

| γ | Direct approach | ||||||

| 0.71 | 0.61 | 0.58 | 0.03 | 0.04 | 0.03 | 0.03 | |

| 1 | 0.65 | 0.63 | 0.03 | 0.05 | 0.04 | 0.05 | |

| 2 | 0.77 | 0.75 | 0.02 | 0.06 | 0.08 | 0.08 | |

| 4 | 0.88 | 0.80 | 0.08 | 0.07 | 0.10 | 0.13 | |

|

|

Indirect approach | ||||||

| 0 | 0.64 | 0.59 | 0.05 | 0.04 | 0.04 | 0.05 | |

| 1.8 | 0.65 | 0.60 | 0.06 | 0.05 | 0.05 | 0.05 | |

| 4 | 0.68 | 0.64 | 0.04 | 0.07 | 0.07 | 0.08 | |

| 8 | 0.72 | 0.70 | 0.02 | 0.09 | 0.09 | 0.11 | |

Figure 3 gives another comparison of the two biomarkers based on ROC curves estimated using the indirect approach of Section 3.2 with , 1.8,4, 8. Here, the value corresponds to conditional independence, and the value 1.8 is a lower confidence bound (to be discussed later). The associated AUC estimates and standard errors are shown in the lower half of Table 1. These results are consistent with those from the direct approach in suggesting that both biomarkers are useful as predictive biomarkers. In particular, the results for conditional independence ( ) are fairly consistent with their counterparts under the direct approach (with γ = 1). Like Figure 2, Figure 3 also shows an increasing trend for the predictive performance of each biomarker as a function of the sensitivity parameter . The trend is confirmed for additional values of , although the results are not shown. Thus, considering the remarks at the end of Section 3.2, it appears that evaluation of a predictive biomarker can also be sensitive to an unmeasured prognostic factor. The results from the indirect approach reinforce the previous observation that baseline viral load appears to perform better than baseline CD4 cell count, although the differences here also fail to reach statistical significance. As is the case with the direct approach, increasing tends to produce greater variability in the estimates.

Fig. 3. ROC analysis of the THRIVE data using the indirect approach: estimated ROC curves (solid lines) and 90% pointwise confidence intervals (dashed lines) for baseline viral load (RNA, left) and CD4 cell count (right), with different values of .

Although the uncertainty in comparing the two biomarkers could potentially be reduced by an increased sample size, some uncertainty will likely remain in evaluating each individual marker, given the apparent dependence on sensitivity parameters. Nonetheless, the increasing trend observed under both approaches suggests that a lower bound for predictive performance may be available under each approach. For the direct approach, the lower bound is given by γ = 0.71, as noted in Section 3.1. For the indirect approach, a lower bound for (and hence for the performance of each biomarker) may be available from longitudinal data (see Web Appendix B). For the THRIVE study, the lower bound for is estimated as 2.5 (95% CI: 1.8–3.7, based on 1000 bootstrap samples) from a GLMM analysis of repeated measurements at 24, 32, 40 and 48 weeks. Although earlier measurements (baseline through 20 weeks) are also available, we restrict our analysis to the later measurements in order to reduce misspecification bias; see Zhang et al. [(2013), Section 4] for a detailed explanation of this strategy. This GLMM analysis suggests that, under the additional assumptions given in Web Appendix B, the value represents the worst case scenario for the indirect approach. The corresponding ROC and AUC estimates are better than those for γ = 0.71 under the direct approach and thus more informative as lower bounds.

Our ROC analyses under both (direct and indirect) approaches also illustrate that measures of predictive accuracy need not correlate with interactions. Although baseline CD4 cell count exhibits a more dramatic interaction in Figure 1, there is no indication (in the same data set) that it outperforms baseline viral load as a predictive biomarker.

5. Discussion

In this article we have proposed new methods for evaluating predictive biomarkers in the potential outcome framework of Huang, Gilbert and Janes (2012). Instead of monotonicity, our starting point is conditional independence of potential outcomes given observed covariates. Possible departures from the latter assumption can be addressed by incorporating a random effect that accounts for any residual dependence between potential outcomes. Because the random effect models are not completely identifiable, we propose a sensitivity analysis approach based on quantities related to the random effect. Our analysis of the THRIVE data reveals a great deal of sensitivity for the performance of each individual biomarker and much less sensitivity for the comparison of the two markers. Despite the uncertainty about individual biomarkers, the lower bounds on their predictive performance, available under both (direct and indirect) approaches, show that they are both useful as predictive biomarkers. For comparing the two markers, our analysis does not show much sensitivity and does not indicate a significant difference in the predictive performance of the two markers.

There does appear to be a lot of sensitivity (particularly in the upper portion) in quantifying the performance of an individual marker. Such sensitivity introduces additional uncertainty into the overall conclusion of the analysis, which is certainly undesirable. We see this as a reminder of the inherent limitation of the observed data for answering certain questions. As in many other statistical problems (e.g., missing data, censoring, confounding), the parameters of interest to us are not identifiable from the observed data without making untestable assumptions. When such assumptions are in doubt, the parameters are partially identified and the associated inference cannot be as sharp as that for point-identified parameters [Manski (2003)]. It may be disappointing to see that different assumptions (about the sensitivity parameter) can lead to a wide range for the parameter of interest. On the other hand, one could argue that the sensitivity analysis serves its purpose well by revealing the limitation of the available data and information.

In theory, point identification is achieved under the conditional independence assumption (6), which requires that the observed covariates be sufficient for explaining the dependence between the potential outcomes. Although we may never be certain that assumption (6) holds, it seems reasonable to believe that the assumption will get close to being true with a growing set of relevant covariates obtained from increasing knowledge of the disease. The more we know about the disease, the more information we have about relevant covariates, the more confidence we should be able to place in assumption (6). In practice, it may be difficult to determine when we have sufficient information to rely on assumption (6) and when we have to perform a sensitivity analysis. One possible solution would be a Bayesian approach with an informative prior on the sensitivity parameter which quantifies our uncertainty about assumption (6). As we become more confident about assumption (6), the prior will become more concentrated at or near that assumption.

Each of the direct and indirect approaches has unique advantages. The direct approach is able to accommodate complex definitions of treatment benefit involving several outcome variables of arbitrary types, as long as they are all observed. The indirect approach is best suited for a single outcome of primary interest. Although the outcome model can include multiple outcomes in principle, their dependence structure can be difficult to specify and estimate. Even for a single outcome, the indirect approach requires a greater amount of modeling (in the sense that an outcome model implies a benefit model) and is therefore more prone to misspecification bias. On the other hand, the indirect approach is able to use all the information in the observed outcome data and therefore may have an efficiency advantage. Finally, for sensitivity analysis, the direct approach is more appropriate for an unmeasured effect modifier, and the indirect approach for an unmeasured prognostic factor. In practice, we recommend that both approaches be used in a complementary fashion, as in our analysis of the THRIVE data.

A reviewer has pointed out that some elements of personal judgment may be involved in choosing among different treatments. While this is not a major issue in the THRIVE data, where the definition of a treatment benefit is quite objective, it can certainly become a major issue in other therapeutic areas such as weight loss. For example, some patients may be willing to accept the extra risks of a surgical procedure (relative to a nonsurgical one) for an additional loss of 20 pounds, while others may not. To incorporate such personal judgment into the proposed approach, we could allow δ to vary across patients, and we would need to be able to measure δ for individual patients or at least be able to predict δ using measurable individual characteristics. In the latter case, the methodology will need to be modified to incorporate a prediction model for δ and the associated variability. It will be of interest to explore that possibility in the context of a suitable application.

Because of the complexity of the proposed methodology, a sample size formula is not yet available; however, for a given application, one could perform a simulation study to gauge the adequacy (in terms of power and precision) of a proposed sample size. Such an assessment should obviously consider the objective of the analysis (e.g., evaluating a single biomarker versus comparing two or more biomarkers). In addition, the nonparametric regression in Section 3.3 may imply a higher requirement on the sample size than do the other parts of the methodology, which are based on parametric regression techniques. A sample size that is inadequate for one-dimensional nonparametric regression may compromise the performance of the methods.

Supplementary Material

Acknowledgments

We thank the Editor (Dr. Karen Kafadar), the Associate Editor and three anonymous reviewers for their insightful and constructive comments, which have greatly improved the manuscript. The views expressed in this article do not represent the official position of the U.S. Food and Drug Administration.

Footnotes

Supplementary Material: Supplement: Technical details (DOI: 10.1214/14-AOAS773SUPP; .pdf). We provide technical details concerning the sensitivity analyses in Sections 3.1 and 3.2.

Contributor Information

Zhiwei Zhang, Email: zhiwei.zhang@fda.hhs.gov.

Lei Nie, Email: lei.nie@fda.hhs.gov.

Guoxing Soon, Email: guoxing.soon@fda.hhs.gov.

Aiyi Liu, Email: liua@mail.nih.gov.

References

- Cohen CJ, Andrade-Villanueva J, Clotet B, Fourie J, Johnson MA, Ruxrungtham K, Wu H, Zorrilla C, Crauwels H, Rimsky LT, Vanveggel S, Boven K THRIVE study group. Rilpivirine versus efavirenz with two background nucleoside or nucleotide reverse transcriptase inhibitors in treatment-naive adults infected with HIV-1 (THRIVE): A phase 3, randomised, non-inferiority trial. Lancet. 2011;378:229–237. doi: 10.1016/S0140-6736(11)60983-5. [DOI] [PubMed] [Google Scholar]

- Dodd LE, Pepe MS. Semiparametric regression for the area under the receiver operating characteristic curve. J Amer Statist Assoc. 2003;98:409–417. MR1995717. [Google Scholar]

- Foster JC, Taylor JMG, Ruberg SJ. Subgroup identification from randomized clinical trial data. Stat Med. 2011;30:2867–2880. doi: 10.1002/sim.4322. MR2844689. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Gadbury GL, Iyer HK. Unit-treatment interaction and its practical consequences. Biometrics. 2000;56:882–885. doi: 10.1111/j.0006-341x.2000.00882.x. [DOI] [PubMed] [Google Scholar]

- Gail M, Simon R. Testing for qualitative interactions between treatment effects and patient subsets. Biometrics. 1985;41:361–372. [PubMed] [Google Scholar]

- Holland PW. Statistics and causal inference (with discussion) J Amer Statist Assoc. 1986;81:945–970. MR0867618. [Google Scholar]

- Huang Y, Gilbert PB, Janes H. Assessing treatment-selection markers using a potential outcomes framework. Biometrics. 2012;68:687–696. doi: 10.1111/j.1541-0420.2011.01722.x. MR3055173. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Karapetis CS, Khambata-Ford S, Jonker DJ, O'Callaghan CJ, Tu D, Tebbutt NC, Simes RJ, Chalchal H, Shapiro JD, Robitaille S, Price TJ, Shepherd L, Au HJ, Langer C, Moore MJ, Zalcberg JR. K-ras mutations and benefit from cetuximab in advanced colorectal cancer. N Engl J Med. 2008;359:1757–1765. doi: 10.1056/NEJMoa0804385. [DOI] [PubMed] [Google Scholar]

- Manski CF. Partial Identification of Probability Distributions. Springer; New York: 2003. MR2151380. [Google Scholar]

- Paik S, Shak S, Tang G, Kim C, Baker J, Cronin M, Baehner FL, Walker MG, Watson D, Park T, Hiller W, Fisher ER, Wickerham DL, Bryant J, Wolmark N. A multigene assay to predict recurrence of tamoxifentreated, node-negative breast cancer. N Engl J Med. 2004;351:2817–2826. doi: 10.1056/NEJMoa041588. [DOI] [PubMed] [Google Scholar]

- Pepe MS. The Statistical Evaluation of Medical Tests for Classification and Prediction Oxford Statistical Science Series. Vol. 28. Oxford Univ. Press; Oxford: 2003. MR2260483. [Google Scholar]

- Pocock SJ, Assmann SE, Enos LE, Kasten LE. Subgroup analysis, covariate adjustment and baseline comparisons in clinical trial reporting: Current practice and problems. Stat Med. 2002;21:2917–2930. doi: 10.1002/sim.1296. [DOI] [PubMed] [Google Scholar]

- Poulson RS, Gadbury GL, Allison DB. Treatment heterogeneity and individual qualitative interaction. Amer Statist. 2012;66:16–24. doi: 10.1080/00031305.2012.671724. MR2934737. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Qian M, Murphy SA. Performance guarantees for individualized treatment rules. Ann Statist. 2011;39:1180–1210. doi: 10.1214/10-AOS864. MR2816351. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Russek-Cohen E, Simon RM. Evaluating treatments when a gender by treatment interaction may exist. Stat Med. 1998;16:455–464. doi: 10.1002/(sici)1097-0258(19970228)16:4<455::aid-sim382>3.0.co;2-y. [DOI] [PubMed] [Google Scholar]

- Simon R. Development and validation of biomarker classifiers for treatment selection. J Statist Plann Inference. 2008;138:308–320. doi: 10.1016/j.jspi.2007.06.010. MR2412589. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Simon R. Clinical trials for predictive medicine: New challenges and paradigms. Clin Trials. 2010;7:516–524. doi: 10.1177/1740774510366454. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Su X, Zhou T, Yan X, Fan J, Yang S. Interaction trees with censored survival data. Int J Biostat. 2008;4:28. doi: 10.2202/1557-4679.1071. Art. 2, 28. MR2383729. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Tian L, Alizadeh AA, Gentles AJ, Tibshirani R. A simple method for detecting interactions between a treatment and a large number of covariates. 2012 doi: 10.1080/01621459.2014.951443. Available at arXiv:1212.2995. [DOI] [PMC free article] [PubMed] [Google Scholar]

- van der Laan MJ, Robins JM. Unified Methods for Censored Longitudinal Data and Causality. Springer; New York: 2003. MR1958123. [Google Scholar]

- Zhang B, Tsiatis AA, Laber EB, Davidian M. A robust method for estimating optimal treatment regimes. Biometrics. 2012;68:1010–1018. doi: 10.1111/j.1541-0420.2012.01763.x. MR3040007. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Zhang Z, Wang C, Nie L, Soon G. Assessing the heterogeneity of treatment effects via potential outcomes of individual patients. JR Stat Soc Ser C Appl Stat. 2013;62:687–704. doi: 10.1111/rssc.12012. MR3118329. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Zhang Z, Nie L, Soon G, Liu A. Supplement to “The use of covariates and random effects in evaluating predictive biomarkers under a potential outcome framework”. 2014 doi: 10.1214/14-AOAS773SUPP. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Zhou XH, Obuchowski NA, McClish DK. Statistical Methods in Diagnostic Medicine. Wiley; New York: 2002. MR1915698. [Google Scholar]

- Zou KH, Liu A, Bandos AI, Ohno-Machado L, Rockette HE. Statistical Evaluation of Diagnostic Performance: Topics in ROC Analysis. CRC Press; Boca Raton, FL: 2011. MR3052764. [Google Scholar]

Associated Data

This section collects any data citations, data availability statements, or supplementary materials included in this article.