Abstract

This paper introduces Projected Principal Component Analysis (Projected-PCA), which applies principal component analysis to the data matrix projected (smoothed) onto a given linear space spanned by covariates. When applied to high-dimensional factor analysis, the projection removes noise components. We show that the unobserved latent factors can be estimated more accurately than by conventional PCA if the projection is genuine, or more precisely, when the factor loading matrices are related to the projected linear space. When the dimensionality is large, the factors can be estimated accurately even when the sample size is finite. We propose a flexible semi-parametric factor model, which decomposes the factor loading matrix into a component that can be explained by subject-specific covariates and an orthogonal residual component. The covariates’ effects on the factor loadings are further modeled by an additive model via sieve approximations. Using the newly proposed Projected-PCA, we obtain rates of convergence for the smooth factor loading matrices that are much faster than those of conventional factor analysis. The convergence is achieved even when the sample size is finite, which is particularly appealing in the high-dimension-low-sample-size situation. This leads us to develop nonparametric tests of whether the observed covariates have explanatory power for the loadings and whether they fully explain the loadings. The proposed method is illustrated with both simulated data and the returns of the components of the S&P 500 index.

Keywords and phrases: semi-parametric factor models, high dimensionality, loading matrix modeling, rates of convergence, sieve approximation

1. Introduction

Factor analysis is one of the most useful tools for modeling common dependence among multivariate outputs. Suppose that we observe data {yit}i≤p,t≤T that can be decomposed as

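$$y_{it} = \sum_{k=1}^{K} \lambda_{ik} f_{tk} + u_{it}, \qquad i = 1, \dots, p,\; t = 1, \dots, T, \tag{1.1}$$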

where {ft1, ···, ftK} are unobservable common factors; {λi1, ···, λiK} are the corresponding factor loadings for variable i; and uit denotes the idiosyncratic component that cannot be explained by the static common component. Here p and T denote the dimension and sample size of the data, respectively.

Model (1.1) has broad applications in the statistics literature. For instance, yt = (y1t, ···, ypt)′ can be expression profiles or blood oxygenation level dependent (BOLD) measurements for the tth microarray, proteomic sample or fMRI image, whereas i represents a gene, a protein or a voxel. See, for example, Desai and Storey (2012); Efron (2010); Fan et al. (2012); Friguet et al. (2009); Leek and Storey (2008). The common factors and idiosyncratic components are separated via a low-rank plus sparse decomposition. See, for example, Cai et al. (2013); Candès and Recht (2009); Fan et al. (2013); Koltchinskii et al. (2011); Ma (2013); Negahban and Wainwright (2011).

The factor model (1.1) has also been extensively studied in the econometric literature, in which yt is the vector of economic outputs at time t or of excess returns for individual assets on day t. The unknown factors and loadings are typically estimated by principal component analysis (PCA), and the common factors and idiosyncratic components are separated via static pervasiveness assumptions. See, for instance, Bai (2003); Bai and Ng (2002); Breitung and Tenhofen (2011); Lam and Yao (2012); Stock and Watson (2002) among others. In this paper, we consider the static factor model, which differs from the dynamic factor model (Forni et al., 2000, 2015; Forni and Lippi, 2001). The dynamic model allows more general infinite-dimensional representations. For this type of model, frequency-domain PCA (Brillinger, 1981) is applied to the spectral density, and a so-called dynamic pervasiveness condition plays a crucial role in achieving consistent estimation of the spectral density.

Accurately estimating the loadings and unobserved factors is very important in statistical applications. In calculating the false-discovery proportion for large-scale hypothesis testing, one needs to adjust accurately for the common dependence by subtracting it from the data in (1.1) (Desai and Storey, 2012; Efron, 2010; Fan et al., 2012; Friguet et al., 2009; Leek and Storey, 2008). In financial applications, we would like to understand accurately how each individual stock depends on unobserved common factors in order to appreciate its relative performance and risks. In the aforementioned applications, the dimensionality is much higher than the sample size. However, existing asymptotic analysis shows that consistent estimation of the parameters in model (1.1) requires a relatively large T. In particular, the individual loadings can be estimated no faster than OP (T−1/2). But large sample sizes are not always available. Even with the availability of “Big Data”, heterogeneity and other issues make direct application of (1.1) with large T infeasible. For instance, in financial applications, to maintain stationarity in model (1.1) with time-invariant loading coefficients, a relatively short time series is often used. To reduce serial correlation, monthly returns are frequently used, yet monthly data over three consecutive years contain merely 36 observations.

1.1. This paper

To overcome the aforementioned problems, when relevant covariates are available, it may be helpful to incorporate them into the model. Let Xi = (Xi1, ···, Xid)′ be a d-dimensional vector of covariates associated with the ith variable. In the seminal papers by Connor and Linton (2007) and Connor et al. (2012), the authors studied the following semi-parametric factor model:

$$y_{it} = \sum_{k=1}^{K} g_k(X_i) f_{tk} + u_{it}, \qquad i = 1, \dots, p,\; t = 1, \dots, T, \tag{1.2}$$

where the loading coefficients in (1.1) are modeled as λik = gk(Xi) for some functions gk(·). For instance, in health studies, Xi can be individual characteristics (e.g., age, weight, clinical and genetic information); in financial applications, Xi can be a vector of firm-specific characteristics (market capitalization, price-earnings ratio, etc.).

The semiparametric model (1.2), however, can be restrictive in many cases, as it requires that the loading matrix be fully explained by the covariates. A natural relaxation is the following semiparametric model

$$\lambda_{ik} = g_k(X_i) + \gamma_{ik}, \qquad i = 1, \dots, p,\; k = 1, \dots, K, \tag{1.3}$$

where γik is the component of the loading coefficient that cannot be explained by the covariates Xi. Let γi = (γi1, ···, γiK)′. We assume that {γi}i≤p have mean zero and are independent of {Xi}i≤p and {uit}i≤p,t≤T. In other words, we impose the following factor structure:

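$$y_{it} = \sum_{k=1}^{K} \{g_k(X_i) + \gamma_{ik}\} f_{tk} + u_{it}, \qquad i = 1, \dots, p,\; t = 1, \dots, T, \tag{1.4}$$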

which reduces to model (1.2) when γik = 0 and to model (1.1) when gk(·) = 0. When Xi genuinely explains a part of the loading coefficients λik, the variability of γik is smaller than that of λik. Hence, the coefficient λik can be estimated more accurately by using regression model (1.3), as long as the functions gk(·) can be accurately estimated.

Let Y be the p×T matrix of yit, F be the T × K matrix of ftk, G(X) be the p × K matrix of gk(Xi), Γ be the p × K matrix of γik, and U be the p × T matrix of uit. Then model (1.4) can be written in the more compact matrix form

$$Y = \{G(X) + \Gamma\}F' + U. \tag{1.5}$$

We treat the loadings G(X) and Γ as realizations of random matrices throughout the paper. This model is also closely related to the supervised singular value decomposition model, recently studied by Li et al. (2015). The authors showed that the model is useful in studying the gene expression and single-nucleotide polymorphism (SNP) data, and proposed an EM algorithm for parameter estimation.

We propose a projected-PCA estimator for both the loading functions and factors. Our estimator is constructed by first projecting Y onto the sieve space spanned by {Xi}i≤p, then applying PCA to the projected data or fitted values. Due to the approximate orthogonality condition of X, U and Γ, the projection of Y is approximately G(X)F′, as the smoothing projection suppresses the noise terms Γ and U substantially. Therefore, applying PCA to the projected data allows us to work directly on the sample covariance of G(X)F′, which is G(X)G(X)′ under normalization conditions. This substantially improves the estimation accuracy, and also facilitates the theoretical analysis. In contrast, the traditional PC method for factor analysis (e.g., Stock and Watson (2002), Bai and Ng (2002)) is no longer suitable in the current context. Moreover, the idea of projected-PCA is also potentially applicable to dynamic factor models of Forni et al. (2000), by first projecting the data onto the covariate space.

The asymptotic properties of the proposed estimators are carefully studied. We demonstrate that as long as the projection is genuine, the consistency of the proposed estimators for the latent factors and loading matrices requires only p → ∞, and T does not need to grow, which is attractive in typical high-dimension-low-sample-size (HDLSS) situations (e.g., Jung and Marron (2009); Shen et al. (2013a,b)). In addition, if both p and T grow simultaneously, then with sufficiently smooth gk(·), using the sieve approximation, the rates of convergence of the estimators are much faster than the existing results for model (1.1). Typically, the loading functions can be estimated at a convergence rate OP ((pT)−1/2), and the factors can be estimated at OP(p−1). Throughout the paper, K = dim(ft) and d = dim(Xi) are assumed to be constant and do not grow.

Let Λ be the p×K matrix (λik)p×K. Model (1.3) implies a decomposition of the loading matrix:

$$\Lambda = G(X) + \Gamma,$$

where G(X) and Γ are orthogonal loading components in the sense that EG(X)Γ′ = 0. We conduct two specification tests for the hypotheses

$$H_0^1: G(X) = 0 \qquad \text{and} \qquad H_0^2: \Gamma = 0.$$

The first problem is to test whether the observed covariates have explanatory power for the loadings. If the null hypothesis is rejected, this provides a theoretical basis for employing the projected PCA, as the projection is then genuine. Our empirical study of asset returns shows that firm market characteristics do have explanatory power for the factor loadings, which lends further support to our projected-PCA method. The second problem is to test whether the covariates fully explain the loadings. The same empirical study also shows that model (1.2), used in the financial econometrics literature, is inadequate and that the more general model (1.5) is necessary. As claimed earlier, even if the second null hypothesis (Γ = 0) does not hold, as long as G(X) ≠ 0, the Projected-PCA can still consistently estimate the factors as p → ∞, whether or not T grows. Our simulation experiments confirm that the gain in estimation accuracy is more significant for small T. This shows one of the benefits of our projected-PCA method over the traditional methods in the literature.

In addition, as a further illustration of the benefits of using projected data, we apply the projected-PCA to consistently estimate the number of factors, using an approach similar to those of Ahn and Horenstein (2013) and Lam and Yao (2012). Unlike these authors, we apply the method to the projected data, and we demonstrate numerically that this can significantly improve the estimation accuracy.
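The idea of estimating K from projected data can be sketched with a generic eigenvalue-ratio rule, in the spirit of Ahn and Horenstein (2013) and Lam and Yao (2012), applied to the eigenvalues of the projected matrix Y′PY. This is an illustrative assumption rather than the exact estimator of Section 6; the helper name, the cap `k_max`, the Legendre sieve and the simulated design are all hypothetical. Note that `k_max` has to stay below the rank of PY, otherwise the ratios involve eigenvalues that are numerically zero.

```python
import numpy as np
from numpy.polynomial import legendre

def eigen_ratio_num_factors(Y, Phi, k_max):
    """Hypothetical helper: argmax_{1 <= k <= k_max} lambda_k / lambda_{k+1},
    where lambda_1 >= lambda_2 >= ... are the eigenvalues of Y' P Y."""
    P = Phi @ np.linalg.solve(Phi.T @ Phi, Phi.T)
    lam = np.sort(np.linalg.eigvalsh(Y.T @ P @ Y))[::-1]
    ratios = lam[:k_max] / lam[1:k_max + 1]
    return int(np.argmax(ratios)) + 1          # convert the 0-based index to k

rng = np.random.default_rng(1)
p, T, K = 1000, 20, 2
x = np.linspace(-1, 1, p)
Phi = legendre.legvander(x, 5)                 # p x 6 sieve: Legendre degrees 0..5
Lam = np.column_stack([1 + x, 2 * x**2 - 1])   # loadings lying in span(Phi)
F = np.sqrt(T) * np.linalg.qr(rng.standard_normal((T, K)))[0]   # F'F/T = I_K
Y = Lam @ F.T + rng.standard_normal((p, T))

K_hat = eigen_ratio_num_factors(Y, Phi, k_max=4)
print(K_hat)
```

Because PY has rank at most six here, the projected noise contributes only a handful of small eigenvalues, which is what makes the ratio at k = K stand out.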

We focus on the case where the observed covariates are time-invariant. When T is small, the covariates are approximately locally constant, so this assumption is reasonable in practice. On the other hand, there may exist individual characteristics that are time-variant (see, e.g., Park et al. (2009)). We expect the conclusions of the current paper to still hold if some smoothness assumptions are imposed on the time-varying components of the covariates. Due to space limitations, we provide a heuristic discussion of this case in the supplementary material (Fan et al., 2015b). In addition, note that in the usual factor model, Λ is assumed to be deterministic. In this paper, however, Λ is mainly treated as stochastic and may depend on a set of covariates. We emphasize, though, that the results presented in Section 3 under the framework of more general factor models hold regardless of whether Λ is stochastic or deterministic. Finally, while some financial applications are presented in this paper, the projected-PCA is expected to be useful in broad areas of statistical applications (see, e.g., Li et al. (2015) for applications in gene expression data analysis).

1.2. Notation and organization

Throughout this paper, for a matrix A, let ||A||F = tr^{1/2}(A′A), ||A|| = λmax^{1/2}(A′A) and ||A||max = maxij |Aij| denote its Frobenius, spectral and max-norms, respectively. Let λmin(·) and λmax(·) denote the minimum and maximum eigenvalues of a square matrix. For a vector v, let ||v|| denote its Euclidean norm.
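The three matrix norms can be computed directly from their definitions; the sketch below assumes the standard definition of the spectral norm as the square root of the largest eigenvalue of A′A, and the matrix A is an arbitrary example.

```python
import numpy as np

A = np.array([[3.0, -1.0], [2.0, 4.0], [0.0, -5.0]])

fro = np.sqrt(np.trace(A.T @ A))                      # ||A||_F = tr^{1/2}(A'A)
spec = np.sqrt(np.linalg.eigvalsh(A.T @ A).max())     # ||A|| = lambda_max^{1/2}(A'A)
max_norm = np.abs(A).max()                            # ||A||_max = max_ij |A_ij|

print(fro, spec, max_norm)
```

The first two agree with NumPy's built-in `np.linalg.norm(A, "fro")` and `np.linalg.norm(A, 2)`, and the spectral norm never exceeds the Frobenius norm.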

The rest of the paper is organized as follows. Section 2 introduces the new projected-PCA method and defines the corresponding estimators for the loadings and factors. Sections 3 and 4 provide asymptotic analysis of the introduced estimators. Section 5 introduces new specification tests for the orthogonal decomposition of the semi-parametric loadings. Section 6 concerns the estimation of the number of factors. Section 7 presents numerical results. Finally, Section 8 concludes. All proofs are given in the appendix and the supplementary material.

2. Projected Principal Component Analysis

2.1. Overview

In the high-dimensional factor model, let Λ be the p×K matrix of loadings. Then the general model (1.1) can be written as

$$Y = \Lambda F' + U. \tag{2.1}$$

Suppose we additionally observe a set of covariates {Xi}i≤p. The basic idea of the projected PCA is to smooth the observations {Yit}i≤p for each given day t against their associated covariates. More specifically, let {Ŷit}i≤p be the fitted values after regressing {Yit}i≤p on {Xi}i≤p for each given t. This results in a smoothed or projected observation matrix Ŷ, which will also be denoted by PY. The projected PCA then estimates the factors and loadings by running PCA on the projected data Ŷ.
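The smoothing step can be sketched in a few lines of NumPy: regressing each column of Y on a covariate design gives the same fitted values as multiplying by the hat matrix P. The quadratic design `Phi` and the random data are illustrative assumptions; any full-column-rank basis of the covariates would do.

```python
import numpy as np

def projection_matrix(Phi):
    """Hat matrix P = Phi (Phi'Phi)^{-1} Phi' onto the column space of Phi."""
    return Phi @ np.linalg.solve(Phi.T @ Phi, Phi.T)   # solve avoids an explicit inverse

def smooth_by_regression(Y, Phi):
    """Regress every column Y[:, t] on Phi and return the p x T matrix of fitted values."""
    coef, *_ = np.linalg.lstsq(Phi, Y, rcond=None)
    return Phi @ coef

rng = np.random.default_rng(0)
p, T, d = 200, 10, 2
X = rng.uniform(-1, 1, size=(p, d))
Phi = np.column_stack([np.ones(p), X, X**2])   # illustrative design: intercept, X_il, X_il^2
Y = rng.standard_normal((p, T))

P = projection_matrix(Phi)
Y_hat = smooth_by_regression(Y, Phi)
print(np.allclose(Y_hat, P @ Y), np.allclose(P @ P, P))
```

Forming P explicitly is convenient for exposition; for very large p one would usually keep the regression form and avoid the p × p matrix.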

Here we heuristically describe the idea of projected PCA; a rigorous analysis is carried out later. Let 𝒳 be a space spanned by X = {Xi}i≤p that is orthogonal to the error matrix U, and let P denote the projection matrix onto 𝒳 (its formal definition is given in (2.6) below). At the population level, P approximates the conditional expectation operator E(·|X), which satisfies E(U|X) = 0. Then P² = P and PU ≈ 0. Hence the projected data Ŷ are approximately noiseless, and their sample covariance has the following approximation:

$$\frac{1}{T}\hat{Y}'\hat{Y} = \frac{1}{T}Y'PY \approx \frac{1}{T}F\Lambda'P\Lambda F'. \tag{2.2}$$

Hence, F and PΛ can be recovered from the projected data Ŷ under some suitable normalization condition.

The normalization conditions we imposed are

$$\frac{1}{T}F'F = I_K, \qquad \Lambda'P\Lambda \text{ is a diagonal matrix}. \tag{2.3}$$

Under this normalization, using (2.2), we conclude that the columns of F are approximately √T times the first K eigenvectors of the T × T matrix (1/T)Y′PY. Therefore, the Projected-PCA naturally defines a factor estimator F̂ using the first K principal components of (1/T)Y′PY.

The projected loading matrix PΛ can also be recovered from the projected data PY in two (equivalent) ways. Given F, from PY ≈ PΛF′ and (2.3), we see that PΛ ≈ (1/T)PYF. Alternatively, consider the p × p projected sample covariance:

$$\frac{1}{T}PYY'P = P\Lambda\Lambda'P + \tilde{\Delta},$$

where Δ̃ is a remaining term depending on PU. Right-multiplying by PΛ and ignoring terms depending on PU, we obtain ((1/T)PYY′P)PΛ ≈ PΛ(Λ′PΛ). Hence the (normalized) columns of PΛ approximate the first K eigenvectors of (1/T)PYY′P, the p×p sample covariance matrix based on the projected data. Therefore, we can estimate PΛ either by (1/T)PYF̂ given F̂, or by the leading eigenvectors of (1/T)PYY′P. In fact, we shall see later that these two estimators are equivalent. If, in addition, Λ = PΛ, that is, the loading matrix belongs to the space 𝒳, then Λ itself can also be recovered from the projected data.

The above arguments form the foundation of the projected-PCA and provide the rationale for our estimators, defined in Section 2.3. We shall make these arguments rigorous by showing that the projected error PU is asymptotically negligible, and therefore the idiosyncratic error term U can be completely removed by the projection step.

2.2. Semiparametric Factor Model

As one of the useful examples of forming the space 𝒳 and the projection operator, this paper considers model (1.4), where Xi’s and yit’s are the only observable data, and {gk(·)}k≤K are unknown nonparametric functions. The specific case (1.2) (with γik = 0) was used extensively in the financial studies by Connor and Linton (2007), Connor et al. (2012) and Park et al. (2009), with Xi’s being the observed “market characteristic variables”. We assume K to be known for now. In Section 6, we will propose a projected-eigenvalue-ratio method to consistently estimate K when it is unknown.

We assume that gk(Xi) does not depend on t, which means that the loadings represent only the cross-sectional heterogeneity. Such a model specification is reasonable since, in many applications of factor models, to maintain the stationarity of the time series, the analysis is conducted within a fixed time window with either a fixed or slowly growing T. Through localization in time, requiring the loadings to be time-invariant is not stringent. This also highlights one of the attractive features of our asymptotic results: under mild conditions, our factor estimates are consistent even if T is finite.

To estimate gk(Xi) nonparametrically without the curse of dimensionality when Xi is multivariate, we assume gk(·) to be additive: for each k ≤ K and i ≤ p, there are d nonparametric functions (gk1, ···, gkd) such that

$$g_k(X_i) = \sum_{l=1}^{d} g_{kl}(X_{il}). \tag{2.4}$$

Each additive component of gk is estimated by the sieve method. Define {ϕ1(x), ϕ2(x), ···} to be a set of basis functions (e.g., B-spline, Fourier series, wavelets, polynomial series), which spans a dense linear space of the functional space for {gkl}. Then for each l ≤ d,

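$$g_{kl}(X_{il}) = \sum_{j=1}^{J} b_{j,kl}\,\phi_j(X_{il}) + R_{kl}(X_{il}), \qquad k \le K,\; i \le p. \tag{2.5}$$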

Here {bj,kl}j≤J are the sieve coefficients of the lth additive component of gk(Xi), corresponding to the kth factor loading; Rkl is a “remaining function” representing the approximation error; J denotes the number of sieve terms which grows slowly as p → ∞. The basic assumption for sieve approximation is that supx |Rkl(x)| → 0 as J → ∞. We take the same basis functions in (2.5) purely for simplicity of notation.

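Define, for each k ≤ K and for each i ≤ p,

$$b_k' = (b_{1,k1}, \dots, b_{J,k1}, \dots, b_{1,kd}, \dots, b_{J,kd}) \in \mathbb{R}^{Jd},$$
$$\phi(X_i)' = (\phi_1(X_{i1}), \dots, \phi_J(X_{i1}), \dots, \phi_1(X_{id}), \dots, \phi_J(X_{id})) \in \mathbb{R}^{Jd}.$$

Then, we can write

$$g_k(X_i) = \phi(X_i)'b_k + \sum_{l=1}^{d} R_{kl}(X_{il}).$$

Let B = (b1, ···, bK) be a (Jd) × K matrix of sieve coefficients, Φ(X) = (ϕ(X1), ···, ϕ(Xp))′ be a p × (Jd) matrix of basis functions, and R(X) be the p×K matrix with (i, k)th element ∑_{l=1}^{d} R_kl(X_il). Then the matrix form of (2.4) and (2.5) is

$$G(X) = \Phi(X)B + R(X).$$

Substituting this into (1.5), we write

$$Y = \Phi(X)BF' + R(X)F' + \Gamma F' + U.$$

We see that the residual term consists of two parts: the sieve approximation error R(X)F′ and the idiosyncratic U. Furthermore, the random-effect assumption on the coefficients Γ makes ΓF′ also behave like noise, and hence negligible when the projection operator P is applied.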
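One way to build Φ(X) for the additive model is to stack, for each covariate, the first J Legendre polynomials evaluated at that coordinate. This sketch assumes every Xil takes values in [−1, 1]; the constant polynomial is dropped from every block to avoid d collinear intercept columns, which is our own choice rather than something the text prescribes. The last lines check that an additive polynomial loading is reproduced exactly, so that R(X) = 0 in the decomposition G(X) = Φ(X)B + R(X).

```python
import numpy as np
from numpy.polynomial import legendre

def additive_legendre_basis(X, J):
    """Hypothetical sieve: returns the p x (J*d) matrix Phi(X) whose l-th block of
    J columns is (P_1(X_il), ..., P_J(X_il)), with P_j the j-th Legendre polynomial.
    The constant P_0 is dropped from every block to avoid collinear intercepts."""
    d = X.shape[1]
    blocks = [legendre.legvander(X[:, l], J)[:, 1:] for l in range(d)]
    return np.hstack(blocks)

rng = np.random.default_rng(3)
X = rng.uniform(-1, 1, size=(500, 2))      # p = 500, d = 2, covariates in [-1, 1]
Phi = additive_legendre_basis(X, J=4)      # shape (500, 8)

# An additive loading g(X_i) = P_1(X_i1) + P_2(X_i2) lies in the sieve space, so
# least squares recovers its coefficients exactly and the remainder R(X) is zero.
g = X[:, 0] + 0.5 * (3 * X[:, 1] ** 2 - 1)
b, *_ = np.linalg.lstsq(Phi, g, rcond=None)
print(Phi.shape, np.round(b, 6))
```

B-splines or Fourier terms could be substituted block by block; only the construction of each p × J block would change.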

2.3. The estimator

Based on the idea described in Section 2.1, we propose a Projected-PCA method, where 𝒳 is the sieve space spanned by the basis functions of X, and P is chosen as the projection matrix onto 𝒳, defined by the p × p projection matrix

$$P = \Phi(X)\{\Phi(X)'\Phi(X)\}^{-1}\Phi(X)'. \tag{2.6}$$

The estimators of the model parameters in (1.5) are defined as follows. The columns of F̂/√T are defined as the eigenvectors corresponding to the K largest eigenvalues of the T × T matrix Y′PY, and

$$\hat{G}(X) = \frac{1}{T}PY\hat{F} \tag{2.7}$$

is the estimator of G(X).
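The estimators (2.6) and (2.7) translate almost line by line into NumPy. The sketch assumes a full-column-rank basis matrix `Phi`, uses a cubic polynomial basis and simulated loadings G(X) + Γ purely for illustration, and `projected_pca` is a hypothetical helper name. The printed checks confirm the normalization F̂′F̂/T = I_K and that Ĝ(X)′Ĝ(X) is diagonal, mirroring (2.3).

```python
import numpy as np

def projected_pca(Y, Phi, K):
    """Hypothetical helper implementing (2.6)-(2.7) for a p x T data matrix Y."""
    T = Y.shape[1]
    P = Phi @ np.linalg.solve(Phi.T @ Phi, Phi.T)      # projection matrix (2.6)
    eigvals, eigvecs = np.linalg.eigh(Y.T @ P @ Y)      # eigenvalues in ascending order
    top = np.argsort(eigvals)[::-1][:K]                 # indices of the K largest
    F_hat = np.sqrt(T) * eigvecs[:, top]                # columns of F_hat/sqrt(T) are eigenvectors
    G_hat = P @ Y @ F_hat / T                           # estimator (2.7)
    return F_hat, G_hat

rng = np.random.default_rng(4)
p, T, K = 400, 8, 2
x = rng.uniform(-1, 1, p)
Phi = np.column_stack([np.ones(p), x, x**2, x**3])      # illustrative cubic sieve
Lam = np.column_stack([1 + x, 2 * x**2 - 1]) + 0.3 * rng.standard_normal((p, K))  # G(X) + Gamma
Y = Lam @ rng.standard_normal((K, T)) + rng.standard_normal((p, T))

F_hat, G_hat = projected_pca(Y, Phi, K)
print(np.round(F_hat.T @ F_hat / T, 10))   # identity matrix, as in (2.3)
print(np.round(G_hat.T @ G_hat, 3))        # diagonal matrix
```

Working with the T × T matrix Y′PY keeps the eigen-decomposition cheap when T is small, which is the regime the method targets.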

The intuition can be readily seen from the discussion in Section 2.1, which also provides an alternative formulation of Ĝ(X) as follows: let D̂ be a K×K diagonal matrix consisting of the K largest eigenvalues of the p×p matrix (1/T)PYY′P, and let Ξ̂ = (ξ̂1, …, ξ̂K) be the p×K matrix whose columns are the corresponding eigenvectors. According to the relation described in Section 2.1, we can also estimate G(X) or PΛ by

$$\hat{G}(X) = \hat{\Xi}\hat{D}^{1/2}.$$

We shall show in Lemma A.1 that this is equivalent to (2.7). The weight matrix P projects the original data matrix onto the sieve space spanned by X. Therefore, unlike the traditional PC method for usual factor models (e.g., Bai (2003), Stock and Watson (2002)), the projected-PCA takes the principal components of the projected data PY. Because the estimator depends on the basis only through P, it is invariant to rotations of the sieve basis: replacing Φ(X) by Φ(X)A for any nonsingular (Jd) × (Jd) matrix A leaves P, and hence the estimator, unchanged.
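The equivalence of the two formulations, and the invariance to the choice of basis, can be checked numerically. The reasoning is short: if v is a unit eigenvector of Y′PY with eigenvalue μ, then PYv/√μ is a unit eigenvector of PYY′P with the same eigenvalue, which gives (1/T)PYF̂ = Ξ̂D̂^{1/2} up to column signs. Because eigenvectors are only determined up to sign, the comparison below uses absolute values. The basis, the simulated data and the rotation matrix `A` are illustrative assumptions.

```python
import numpy as np

def projected_pca(Y, Phi, K):
    """Hypothetical helper: projected-PCA factors F_hat and loadings (1/T) P Y F_hat."""
    T = Y.shape[1]
    P = Phi @ np.linalg.solve(Phi.T @ Phi, Phi.T)
    _, V = np.linalg.eigh(Y.T @ P @ Y)
    F_hat = np.sqrt(T) * V[:, ::-1][:, :K]      # eigh sorts ascending, so reverse
    return F_hat, P @ Y @ F_hat / T, P

rng = np.random.default_rng(5)
p, T, K = 300, 6, 2
x = rng.uniform(-1, 1, p)
Phi = np.column_stack([np.ones(p), x, x**2, x**3])
Y = (np.outer(1 + x, rng.standard_normal(T)) + np.outer(x**2, rng.standard_normal(T))
     + rng.standard_normal((p, T)))

F_hat, G_hat, P = projected_pca(Y, Phi, K)

# Route 2: leading eigenpairs of the p x p matrix (1/T) P Y Y' P.
d_all, Xi_all = np.linalg.eigh(P @ Y @ Y.T @ P / T)
D_hat, Xi_hat = d_all[::-1][:K], Xi_all[:, ::-1][:, :K]
G_alt = Xi_hat * np.sqrt(D_hat)                 # Xi_hat D_hat^{1/2}: scales column k by sqrt(D_k)

# Rotating the basis, Phi -> Phi A with A nonsingular, leaves P and hence F_hat unchanged.
A = rng.standard_normal((4, 4)) + 4 * np.eye(4)
F_rot, _, _ = projected_pca(Y, Phi @ A, K)

# Eigenvectors are defined only up to sign, so compare absolute values.
print(np.allclose(np.abs(G_hat), np.abs(G_alt)), np.allclose(np.abs(F_hat), np.abs(F_rot)))
```

In practice the T × T route is cheaper whenever T is much smaller than p.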

The loading component Γ that cannot be explained by the covariates can be estimated as follows. With the estimated factors F̂, the least-squares estimator of the loading matrix is Λ̂ = YF̂/T, by (2.1) and (2.3). Therefore, by (1.5), a natural estimator of Γ is

$$\hat{\Gamma} = \hat{\Lambda} - \hat{G}(X) = \frac{1}{T}(I_p - P)Y\hat{F}. \tag{2.8}$$
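In code, Γ̂ is the part of the least-squares loadings Λ̂ = YF̂/T left over after projection, Γ̂ = (1/T)(I − P)YF̂, so Λ̂ = Ĝ(X) + Γ̂ holds exactly and Γ̂ is orthogonal to the sieve space. The simulated design below is hypothetical.

```python
import numpy as np

rng = np.random.default_rng(6)
p, T, K = 300, 10, 2
x = rng.uniform(-1, 1, p)
Phi = np.column_stack([np.ones(p), x, x**2])
P = Phi @ np.linalg.solve(Phi.T @ Phi, Phi.T)
Lam = np.column_stack([1 + x, x**2]) + 0.5 * rng.standard_normal((p, K))   # G(X) + Gamma
Y = Lam @ rng.standard_normal((K, T)) + rng.standard_normal((p, T))

_, V = np.linalg.eigh(Y.T @ P @ Y)
F_hat = np.sqrt(T) * V[:, ::-1][:, :K]              # projected-PCA factors
Lambda_hat = Y @ F_hat / T                          # least-squares loadings given F_hat
G_hat = P @ Y @ F_hat / T                           # covariate-explained part, as in (2.7)
Gamma_hat = (np.eye(p) - P) @ Y @ F_hat / T         # unexplained part

print(np.allclose(Lambda_hat, G_hat + Gamma_hat), np.abs(Phi.T @ Gamma_hat).max())
```

The second printed value is zero up to rounding, reflecting Φ(X)′(I − P) = 0.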

2.4. Connection with panel data models with time-varying coefficients

Consider a panel data model with time-varying coefficients as follows:

$$y_{it} = \mu_t + X_i'\beta_t + u_{it}, \qquad i = 1, \dots, p,\; t = 1, \dots, T, \tag{2.9}$$

where Xi is a d-dimensional vector of time-invariant regressors for individual i; μt denotes the unobservable random time effect; uit is the regression error term. The regression coefficient βt is also assumed to be random and time-varying, but is common across the cross-sectional individuals.

The semi-parametric factor model admits (2.9) as a special case. Note that (2.9) can be rewritten as yit = g(Xi)′ft + uit with K = d + 1 unobservable “factors” ft = (μt, βt′)′ and “loadings” g(Xi) = (1, Xi′)′. The model (1.4) considered here, on the other hand, allows more general nonparametric loading functions.
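The rewriting can be verified numerically, assuming (2.9) takes the form yit = μt + Xi′βt + uit: stacking a column of ones with Xi gives the “loadings”, and stacking μt with βt gives the “factors”. All data below are simulated for illustration.

```python
import numpy as np

rng = np.random.default_rng(7)
p, T, d = 50, 6, 3
X = rng.standard_normal((p, d))        # time-invariant regressors X_i
mu = rng.standard_normal(T)            # random time effects mu_t
beta = rng.standard_normal((T, d))     # time-varying coefficients beta_t (row t)
U = rng.standard_normal((p, T))

Y_panel = mu[None, :] + X @ beta.T + U                 # y_it = mu_t + X_i' beta_t + u_it
G = np.column_stack([np.ones(p), X])                   # "loadings" g(X_i) = (1, X_i')'
F = np.column_stack([mu, beta])                        # "factors"  f_t = (mu_t, beta_t')'
Y_factor = G @ F.T + U                                 # y_it = g(X_i)' f_t + u_it

print(np.allclose(Y_panel, Y_factor), F.shape[1])      # K = d + 1 factors
```

Here the loadings are linear in Xi; model (1.4) replaces (1, Xi′)′ by general nonparametric functions gk(Xi).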

3. Projected-PCA in Conventional Factor Models

Let us first consider the asymptotic performance of the projected-PCA in the conventional factor model:

$$Y = \Lambda F' + U. \tag{3.1}$$

In the usual statistical applications of factor analysis, the latent factors are assumed to be serially independent, while in financial applications, the factors are often treated as weakly dependent time series satisfying strong mixing conditions.

We now demonstrate by a simple example that the latent factors F can be estimated at a faster rate of convergence by Projected-PCA than by conventional PCA, and that they can be consistently estimated even when the sample size T is finite.

Example 3.1

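To appreciate the intuition, let us consider a specific case in which K = 1, so that model (1.4) reduces to

$$y_{it} = \{g(X_i) + \gamma_i\}f_t + u_{it}.$$

Assume that g(·) is so smooth that it is in fact a constant β (otherwise, we can use a local constant approximation), where β > 0. Then, the model reduces to

$$y_{it} = (\beta + \gamma_i)f_t + u_{it}.$$

The projection in this case is averaging over i, which yields

$$\bar{y}_{\cdot t} = (\beta + \bar{\gamma}_{\cdot})f_t + \bar{u}_{\cdot t},$$

where ȳ·t, γ̄· and ū·t denote the averages of their corresponding quantities over i. For identification purposes, suppose Eγi = Euit = 0 and (1/T)∑_{t=1}^{T} ft² = 1. Ignoring the last two terms, γ̄·ft and ū·t, we obtain the estimators

$$\hat{\beta} = \Big(\frac{1}{T}\sum_{t=1}^{T}\bar{y}_{\cdot t}^{2}\Big)^{1/2}, \qquad \hat{f}_t = \frac{\bar{y}_{\cdot t}}{\hat{\beta}}. \tag{3.2}$$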

These estimators are special cases of the projected-PCA estimators. To see this, define ȳ = (ȳ·1, …, ȳ·T)′, and let 1p be a p-dimensional column vector of ones. Take a naive basis Φ(X) = 1p; then the projected data matrix is in fact PY = 1pȳ′. Consider the T × T matrix Y′PY = (1pȳ′)′1pȳ′ = pȳȳ′, whose largest eigenvalue is p||ȳ||². From

$$(p\bar{y}\bar{y}')\,\bar{y} = p\|\bar{y}\|^{2}\,\bar{y},$$

we see that the first eigenvector of Y′PY equals ȳ/||ȳ||. Hence the projected-PCA estimator of the factors is F̂ = √T ȳ/||ȳ||. In addition, the projected-PCA estimator of the loading vector β1p is

$$\hat{G}(X) = \frac{1}{T}PY\hat{F} = \frac{1}{T}1_p\bar{y}'\,\frac{\sqrt{T}\,\bar{y}}{\|\bar{y}\|} = \frac{\|\bar{y}\|}{\sqrt{T}}\,1_p.$$

Hence the projected-PCA estimator of β equals ||ȳ||/√T. These estimators match (3.2). Moreover, since the two ignored terms γ̄· and ū·t are of order OP(p−1/2), β̂ and f̂t converge whether or not T is large. Note that this simple example satisfies all the assumptions stated below, and β̂ and f̂t achieve the same rate of convergence as in Theorem 4.1. We present more details about this example in Appendix G of the supplementary material.
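Example 3.1 can be reproduced numerically. The sketch assumes the model yit = (β + γi)ft + uit with (1/T)∑t ft² = 1, and compares the closed-form estimators β̂ = ||ȳ||/√T and f̂t = ȳ·t/β̂ with the generic projected-PCA computed from Φ(X) = 1p. The sample sizes, β = 1.5 and the noise scales are arbitrary choices; T = 5 is deliberately small.

```python
import numpy as np

rng = np.random.default_rng(8)
p, T, beta = 1000, 5, 1.5
gamma = 0.5 * rng.standard_normal(p)                 # E gamma_i = 0
f = rng.standard_normal(T)
f /= np.sqrt(np.mean(f**2))                          # (1/T) sum_t f_t^2 = 1
Y = np.outer(beta + gamma, f) + rng.standard_normal((p, T))   # y_it = (beta + gamma_i) f_t + u_it

# Closed-form estimators: average over i, then rescale.
y_bar = Y.mean(axis=0)                               # (ybar_.1, ..., ybar_.T)
beta_hat = np.linalg.norm(y_bar) / np.sqrt(T)
f_hat = y_bar / beta_hat                             # equals sqrt(T) * y_bar / ||y_bar||

# Generic projected PCA with the naive basis Phi(X) = 1_p, i.e. P = 1_p 1_p' / p.
P = np.full((p, p), 1.0 / p)
_, V = np.linalg.eigh(Y.T @ P @ Y)
F_pp = np.sqrt(T) * V[:, -1]                         # leading eigenvector (eigh sorts ascending)
F_pp *= np.sign(F_pp @ y_bar)                        # resolve the sign ambiguity
G_pp = P @ Y @ F_pp / T                              # every entry equals beta_hat

print(beta_hat, np.allclose(F_pp, f_hat), np.allclose(G_pp, beta_hat))
```

With p = 1000, the ignored averages γ̄· and ū·t are of order p−1/2, so β̂ lands close to 1.5 even though only five time points are used.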

3.1. Asymptotic Properties of Projected-PCA

We now state the conditions and results formally in the more general factor model (3.1). Recall that the projection matrix is defined as

$$P = \Phi(X)\{\Phi(X)'\Phi(X)\}^{-1}\Phi(X)'.$$

The following assumption is the key condition of the projected-PCA.

Assumption 3.1 (Genuine projection)

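There are positive constants cmin and cmax such that, with probability approaching one (as p → ∞),

$$c_{\min} < \lambda_{\min}(p^{-1}\Lambda'P\Lambda) < \lambda_{\max}(p^{-1}\Lambda'P\Lambda) < c_{\max}.$$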

Since the dimensions of Φ(X) and Λ are respectively p × Jd and p × K, Assumption 3.1 requires Jd ≥ K, which is reasonable since we assume K, the number of factors, to be fixed throughout the paper.

Assumption 3.1 is similar to the pervasiveness condition on the factor loadings (Stock and Watson, 2002). In our context, this condition requires that the covariates X have non-vanishing explanatory power for the loading matrix, so that the matrix Λ′PΛ has spiked eigenvalues. Note that it rules out the case where X is completely unassociated with the loading matrix Λ (e.g., when X is pure noise). A typical example that satisfies this assumption is the semi-parametric factor model (model (1.4)). We study this specific type of factor model in Section 4, and prove Assumption 3.1 for it in the supplementary material (Fan et al., 2015b).
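A simulation can illustrate what Assumption 3.1 rules out: it compares the extreme eigenvalues of p⁻¹Λ′PΛ when the loadings are driven by the covariate and when they are pure noise unrelated to X. The basis and both loading designs are hypothetical; in practice Λ is unobserved, so this is only an illustration, not a test one could run on data.

```python
import numpy as np

def projected_loading_eigs(Lam, Phi):
    """Smallest and largest eigenvalues of p^{-1} Lam' P Lam (cf. Assumption 3.1)."""
    p = Lam.shape[0]
    P = Phi @ np.linalg.solve(Phi.T @ Phi, Phi.T)
    ev = np.linalg.eigvalsh(Lam.T @ P @ Lam / p)
    return ev.min(), ev.max()

rng = np.random.default_rng(9)
p = 1000
x = np.linspace(-1, 1, p)
Phi = np.column_stack([np.ones(p), x, x**2])
Lam_genuine = np.column_stack([1 + x, 2 * x**2 - 1])   # loadings driven by the covariate
Lam_noise = rng.standard_normal((p, 2))                # loadings unrelated to the covariate

print(projected_loading_eigs(Lam_genuine, Phi))   # bounded away from zero
print(projected_loading_eigs(Lam_noise, Phi))     # of order 1/p for a fixed basis size
```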

Note that F and Λ are not separately identified, because for any nonsingular H, ΛF′ = ΛH−1HF′. Therefore, we assume:

Assumption 3.2 (Identification)

Almost surely, T−1F′F = IK and Λ′PΛ is a K × K diagonal matrix with distinct entries.

This condition corresponds to the PC1 condition of Bai and Ng (2013), which separately identifies the factors and loadings from their product ΛF′. It is often used in factor analysis for identification, and means that the columns of factors and loadings can be orthogonalized (also see Bai and Li (2012)).

Assumption 3.3 (Basis functions)

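- (i) There are dmin, dmax > 0 so that with probability approaching one (as p → ∞),

$$d_{\min} < \lambda_{\min}(p^{-1}\Phi(X)'\Phi(X)) < \lambda_{\max}(p^{-1}\Phi(X)'\Phi(X)) < d_{\max}.$$

- (ii) maxj≤J,i≤p,l≤d Eϕj(Xil)² < ∞.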

Note that p⁻¹Φ(X)′Φ(X) = p⁻¹∑_{i=1}^{p} ϕ(Xi)ϕ(Xi)′, and ϕ(Xi) is a vector of dimension Jd ≪ p. Thus, condition (i) can follow from the strong law of large numbers; for instance, it holds when {Xi}i≤p are weakly correlated and, at the population level, Eϕ(Xi)ϕ(Xi)′ is well-conditioned. In addition, this condition can be satisfied through proper normalization of commonly used basis functions such as B-splines, wavelets and the Fourier basis. In the general setup of this paper, we allow {Xi}i≤p to be cross-sectionally dependent and non-stationary. Regularity conditions on weak dependence and stationarity are imposed only on {(ft, ut)}, as follows.
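To illustrate the normalization remark, assume the covariates are independent Uniform[−1, 1]. For the Legendre polynomials Pj and U ~ Uniform[−1, 1], orthogonality gives E[Pj(U)Pk(U)] = 0 for j ≠ k, E[Pj(U)²] = 1/(2j + 1) and E[Pj(U)] = 0 for j ≥ 1; so rescaling Pj by √(2j + 1) makes Eϕ(Xi)ϕ(Xi)′ the identity, and the eigenvalues of p⁻¹Φ(X)′Φ(X) concentrate near 1. The basis and the covariate distribution are assumptions made for this sketch.

```python
import numpy as np
from numpy.polynomial import legendre

def normalized_legendre_basis(X, J):
    """Hypothetical sieve: sqrt(2j + 1) * P_j(X_il), j = 1..J, stacked over covariates l.
    For independent Uniform[-1, 1] covariates, E phi(X_i) phi(X_i)' = I_{Jd}."""
    scale = np.sqrt(2 * np.arange(1, J + 1) + 1)
    blocks = [legendre.legvander(X[:, l], J)[:, 1:] * scale for l in range(X.shape[1])]
    return np.hstack(blocks)

rng = np.random.default_rng(10)
X = rng.uniform(-1, 1, size=(20000, 2))
Phi = normalized_legendre_basis(X, J=3)
ev = np.linalg.eigvalsh(Phi.T @ Phi / X.shape[0])
print(ev.min(), ev.max())    # both close to 1, so d_min and d_max can be taken near 1
```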

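We impose the strong mixing condition. Let ℱ_{−∞}^{0} and ℱ_{T}^{∞} denote the σ-algebras generated by {(ft, ut) : t ≤ 0} and {(ft, ut) : t ≥ T}, respectively. Define the mixing coefficient

$$\alpha(T) = \sup_{A \in \mathcal{F}_{-\infty}^{0},\, B \in \mathcal{F}_{T}^{\infty}} |\Pr(A)\Pr(B) - \Pr(AB)|.$$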

Assumption 3.4 (Data generating process)

{ut, ft}t≤T is strictly stationary. In addition, Euit = 0 for all i ≤ p, j ≤ K; {ut}t≤T is independent of {Xi, ft}i≤p,t≤T.

- Strong mixing: There exist r1, C1 > 0 such that for all T > 0,

- Weak dependence: there is C2 > 0 so that

- Exponential tail: there exist r2 ≥ 0, r3 > 0 satisfying and b1, b2 > 0, such that for any s > 0, i ≤ p and j ≤ K,

Assumption 3.4 is standard; in particular, condition (iii) is commonly imposed in high-dimensional factor analysis (e.g., Bai (2003); Stock and Watson (2002)) and requires {uit}i≤p,t≤T to be weakly dependent both serially and cross-sectionally. It is often satisfied when the covariance matrix is sufficiently sparse under the strong mixing condition. We provide primitive conditions for condition (iii) in the supplementary material (Fan et al., 2015b).

Formally, we have the following theorem:

Theorem 3.1

Consider the conventional factor model (3.1) with Assumptions 3.1–3.4. The projected-PCA estimators F̂ and Ĝ(X) defined in Section 2.3 satisfy, as p → ∞ (J, T may either grow simultaneously with p satisfying or stay constant with Jd ≥ K),

Compared with the regular PC method, the convergence rate for the estimated factors is improved for small T. In particular, the projected-PCA does not require T → ∞ and also has a good rate of convergence for the loading matrix up to a projection transformation. Hence we achieve finite-T consistency, which is particularly interesting in the high-dimension-low-sample-size (HDLSS) context considered by Jung and Marron (2009). In contrast, the conventional PC method achieves a rate of convergence of OP (1/p + 1/T²) for estimating factors, and OP (1/T + 1/p) for estimating loadings. See Remarks 4.1 and 4.2 below for additional details.

3.2. Projected-PCA consistency in the HDLSS context

In recent years, substantial work has been done on PCA consistency in the spiked covariance model (e.g., Johnstone (2001) and Paul (2007)), which has been extended to the HDLSS context by Ahn et al. (2007), Jung and Marron (2009) and Shen et al. (2013a). In a high-dimensional factor model yt = Λft + ut, let Σ = cov(yt) be the p × p covariance matrix of yt, and let Ξ = (ξ1, …, ξK) be the leading eigenvectors of Σ. Under the pervasiveness condition, the first K eigenvalues of Σ grow at rate O(p). Due to the presence of these very spiked eigenvalues, Fan et al. (2013) showed that the leading eigenvectors of Σ can be consistently estimated by those of the p × p sample covariance matrix as both p, T → ∞. However, consistency fails to hold when T is finite, and the rate of convergence is slow when T grows slowly, as in the HDLSS context.

With a genuine projection P that satisfies Assumption 3.1, the projected-PCA estimates Ξ using the leading eigenvectors of the sample covariance matrix based on the projected data PY. Specifically, recall that Ξ̂ is a p×K matrix whose columns are the eigenvectors corresponding to the K largest eigenvalues of (1/T)PYY′P, and D̂ is a diagonal matrix consisting of the K largest eigenvalues of (1/T)PYY′P. The consistency of projected-PCA can be achieved up to a projection error even if T is finite, and the rate of convergence is faster when T also grows.

Let Ṽ be an orthogonal matrix whose columns are the eigenvectors of Λ′Λ, corresponding to the eigenvalues in a decreasing order. Let Σu be the p×p covariance matrix of ut. We have the following result on the convergence of eigenspace spanned by the spiked eigenvalues.

Theorem 3.2

Under the conditions of Theorem 3.1, we have, as p → ∞ (J, T may either grow simultaneously with p satisfying or stay constant with Jd ≥ K), for V = Ṽ′(Λ′Λ)1/2D̂−1/2,


Briefly speaking, Ξ̂ approximates the space spanned by the columns of Ξ, which consists of leading eigenvectors of Σ. In addition, in the high-dimensional factor model, we shall prove in the appendix that, for Λ̄ = ΛṼ,

| (3.3) |

As a result, Theorem 3.2 follows from (3.3). There are three sources of estimation error: (i) the error from (3.3); (ii) the error from approximating PΛ by Ξ̂D̂^{1/2}, which depends on the projected noise PU; and (iii) the projection error, arising from the part of Λ outside the space 𝒳. The errors from (i) and (ii) are both asymptotically negligible as p → ∞ and do not require a diverging T. The error of the third type depends on the nature of the loading matrix. In the special case where Λ belongs to the space spanned by X, corresponding to G(X) = Λ, this term is also asymptotically negligible as p → ∞.

4. Projected-PCA in Semi-parametric Factor Models

4.1. Sieve approximations

In the semi-parametric factor model, it is assumed that λik = gk(Xi) + γik, where gk(Xi) is a nonparametric smooth function of the observed covariates, and γik is the unobserved random loading component that is independent of Xi. Hence the model is written as

$$y_{it} = \sum_{k=1}^{K}\{g_k(X_i) + \gamma_{ik}\}f_{tk} + u_{it}.$$

In matrix form,

$$Y = \{G(X) + \Gamma\}F' + U,$$

and G(X) does not vanish (pervasive condition, see Assumption 4.2 below).

The estimators F̂ and Ĝ (X) are the projected-PCA estimators as defined in Section 2.3. We now define the estimator of the nonparametric function gk(·), k = 1, …, K. In the matrix form, the projected data has the following sieve approximated representation:

$$PY = \Phi(X)BF' + \tilde{E}, \tag{4.1}$$

where Ẽ = PΓF′ + PR(X)F′ + PU is “small” because Γ and U are approximately orthogonal to the function space spanned by X, and R(X) is the sieve approximation error. The sieve coefficient matrix B = (b1, …, bK) can be estimated by least squares from the projected model (4.1): ignoring Ẽ, replacing F with F̂ and solving (4.1), we obtain

$$\hat{B} = (\hat{b}_1, \dots, \hat{b}_K) = \frac{1}{T}\{\Phi(X)'\Phi(X)\}^{-1}\Phi(X)'Y\hat{F}.$$

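We then estimate gk(·) by

$$\hat{g}_k(x) = \phi(x)'\hat{b}_k = \sum_{l=1}^{d}\sum_{j=1}^{J}\hat{b}_{j,kl}\,\phi_j(x_l), \qquad x = (x_1, \dots, x_d)' \in \mathcal{X},\; k = 1, \dots, K,$$

where 𝒳 denotes the support of Xi.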
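The sieve step can be sketched as follows: the coefficients are B̂ = (1/T)(Φ′Φ)⁻¹Φ′YF̂, and evaluating ĝ at the sample points reproduces Ĝ(X) exactly, because Φ(X)B̂ = (1/T)PYF̂. The basis (Legendre polynomials per coordinate plus a single intercept column, so it has Jd + 1 columns rather than Jd), the loading functions and the helper names are assumptions. Without the normalization of Assumption 4.1, the estimates ĝk recover the true gk only up to a rotation.

```python
import numpy as np
from numpy.polynomial import legendre

def sieve_basis(X, J):
    """Hypothetical additive sieve: an intercept plus Legendre P_1..P_J for each coordinate."""
    blocks = [legendre.legvander(X[:, l], J)[:, 1:] for l in range(X.shape[1])]
    return np.hstack([np.ones((X.shape[0], 1))] + blocks)

rng = np.random.default_rng(11)
p, T, K, d, J = 500, 10, 2, 2, 4
X = rng.uniform(-1, 1, size=(p, d))
G = np.column_stack([1 + X[:, 0] + X[:, 1] ** 2, np.sin(np.pi * X[:, 1])])   # additive g_1, g_2
Y = G @ rng.standard_normal((K, T)) + rng.standard_normal((p, T))

Phi = sieve_basis(X, J)
P = Phi @ np.linalg.solve(Phi.T @ Phi, Phi.T)
_, V = np.linalg.eigh(Y.T @ P @ Y)
F_hat = np.sqrt(T) * V[:, ::-1][:, :K]                              # projected-PCA factors
B_hat = np.linalg.solve(Phi.T @ Phi, Phi.T @ Y @ F_hat) / T          # sieve coefficients

def g_hat(x_new):
    """Estimated loading functions (g_hat_1, ..., g_hat_K) at the rows of x_new."""
    return sieve_basis(np.atleast_2d(x_new), J) @ B_hat

G_hat = P @ Y @ F_hat / T                                           # estimator (2.7)
print(np.allclose(g_hat(X), G_hat), g_hat(np.array([0.2, -0.5])))
```

Because ĝ is a fixed linear combination of basis functions, it can be evaluated at covariate values outside the sample, which is what makes the loading functions, and not just the loading matrix, estimable.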

4.2. Asymptotic analysis

When Λ = G(X) + Γ, G(X) can be understood as the projection of Λ onto the sieve space spanned by X. Hence the following assumption is a specific version of Assumptions 3.1 and 3.2 in the current context.

Assumption 4.1

Almost surely, T−1F′F = IK and G(X)′G(X) is a K × K diagonal matrix with distinct entries.

- There are two positive constants cmin and cmax so that with probability approaching one (as p → ∞),

In this section, we do not need to assume {γi}i≤p to be i.i.d. for estimation purposes. Cross-sectional weak dependence as in Condition (ii) is sufficient. The i.i.d. assumption will only be needed when we consider specification tests in Section 5. Define γi = (γi1, …, γiK)′, and

Assumption 4.2

(i) Eγik = 0 and {Xi}i≤p is independent of {γik}i≤p.

(ii) maxk≤K,i≤p Egk(Xi)2 < ∞, νp < ∞ and

The following set of conditions concerns the accuracy of the sieve approximation.

Assumption 4.3 (Accuracy of sieve approximation)

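For all l ≤ d and k ≤ K:

(i) the loading component gkl(·) belongs to a Hölder class 𝒢 defined by

$$\mathcal{G} = \{ g : |g^{(r)}(s) - g^{(r)}(t)| \le L |s - t|^{\alpha} \}$$

for some L > 0;

(ii) the sieve coefficients {bk,jl}j≤J satisfy, for κ = 2(r + α) ≥ 4, as J → ∞,

$$\sup_{x \in \mathcal{X}_l} \Big| g_{kl}(x) - \sum_{j=1}^{J} b_{k,jl}\, \phi_j(x) \Big|^2 = O(J^{-\kappa}),$$

where 𝒳l is the support of the lth element of Xi, and J is the sieve dimension.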

Condition (ii) is satisfied by common bases. For example, when {ϕj} is a polynomial basis or a B-spline basis, condition (ii) is implied by condition (i) (see, e.g., Lorentz (1986) and Chen (2007)).

Theorem 4.1

Suppose . Under Assumptions 3.3, 3.4, 4.1–4.3, as p, J → ∞, T can be either divergent or bounded, we have that

In addition, if T → ∞ simultaneously with p and J, then

The optimal choice J* = (p min{T, p})^{1/κ} simultaneously yields the fastest convergence rates for the factors and for the nonparametric loading functions gk(·). It also satisfies the constraint of the theorem, since κ ≥ 4. With J = J*, we have

and Γ̂ = (γ̂1, …, γ̂p)′ satisfies:

Some remarks about these rates of convergence compared with those of the conventional factor analysis are in order.

Remark 4.1

The rates of convergence for factors and nonparametric functions do not require T → ∞. When T = O(1),

The rates still converge quickly when p is large, demonstrating the blessing of dimensionality. This is an attractive feature of the projected-PCA in the HDLSS context, as in many applications the stationarity of a time series and the time-invariance assumption on the loadings hold only for a short period of time. In contrast, in the usual factor analysis, consistency is guaranteed only when T → ∞. For example, according to Fan et al. (2015a) (Lemma C.1), the regular PCA method has the following convergence rate

which does not converge to zero when T is bounded.

Remark 4.2

When both p and T are large, the projected-PCA estimates factors as well as the regular PCA does, and achieves a faster rate of convergence for the estimated loadings when γik vanishes. In this case, λik = gk(Xi), the loading matrix is estimated by Λ̂ = Ĝ(X), and

In contrast, the regular PCA method as in Stock and Watson (2002) yields

Comparing these rates, we see that when gk(·)’s are sufficiently smooth (larger κ), the rate of convergence for the estimated loadings is also improved.

5. Semiparametric Specification Test

The loading matrix always has the following orthogonal decomposition:

$$\Lambda = G(X) + \Gamma,$$

where Γ is interpreted as the loading component that cannot be explained by X. We consider two types of specification tests: testing H0: G(X) = 0 and testing H0: Γ = 0. The former tests whether the observed covariates have explanatory power for the loadings, while the latter tests whether the covariates fully explain the loadings. The former provides a diagnostic tool as to whether or not to employ the projected PCA; the latter tests the adequacy of the semiparametric factor models in the literature.

5.1. Testing G(X) = 0

Testing whether the observed covariates have explanatory power for the factor loadings can be formulated as the following null hypothesis:

$$H_0: G(X) = 0.$$

Due to the approximate orthogonality of X and Γ, we have PΛ ≈ G(X). Hence, the null hypothesis is approximately equivalent to

$$H_0: P\Lambda = 0.$$

This motivates a statistic for a consistent loading estimator Λ̃. Normalizing the test statistic by its asymptotic variance leads to the test statistic

where the K × K matrix W1 is the weight matrix. The null hypothesis is rejected when SG is large.

The projected PCA estimator is inappropriate under the null hypothesis as the projection is not genuine. We therefore use the least squares estimator Λ̃ = YF̃/T, leading to the test statistic

Here, we take F̃ as the regular PC estimator: the columns of F̃/√T are the first K eigenvectors of the T × T data matrix Y′Y.
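The ingredients of SG can be sketched as follows. The weight matrix W1 and the normalization are not reproduced here, so the function below only computes the hypothetical unweighted quantity p−1||PΛ̃||F², whose size reflects how much of the loading matrix lies in the sieve space. It is an illustration of why a large value points against H0: G(X) = 0, not the statistic SG itself. The sieve basis (1, x, x2, x3), the function names, and the toy data are assumptions.

```python
import numpy as np


def regular_pc(Y, K):
    """Regular PC: F_tilde / sqrt(T) = top-K eigenvectors of Y'Y; Lambda_tilde = Y F_tilde / T."""
    T = Y.shape[1]
    evals, evecs = np.linalg.eigh(Y.T @ Y)
    F_tilde = np.sqrt(T) * evecs[:, np.argsort(evals)[::-1][:K]]
    return F_tilde, Y @ F_tilde / T


def projected_loading_size(Lambda_tilde, Phi):
    """Hypothetical raw measure ||P Lambda_tilde||_F^2 / p (no weighting, no standardization)."""
    Q, _ = np.linalg.qr(Phi)
    P_Lambda = Q @ (Q.T @ Lambda_tilde)
    return np.sum(P_Lambda**2) / Lambda_tilde.shape[0]


rng = np.random.default_rng(0)
p, T, K = 400, 50, 2
x = rng.standard_normal(p)
Phi = np.column_stack([np.ones(p), x, x**2, x**3])
F = rng.standard_normal((T, K))
U = rng.standard_normal((p, T))

Lambda_alt = np.column_stack([x, x**2 - 1])   # loadings driven by the covariate
Lambda_null = rng.standard_normal((p, K))     # loadings unrelated to the covariate

stats = {}
for name, Lam in [("alternative", Lambda_alt), ("null", Lambda_null)]:
    _, Lambda_tilde = regular_pc(Lam @ F.T + U, K)
    stats[name] = projected_loading_size(Lambda_tilde, Phi)
print(stats)
```

Regular PC is used for Λ̃ because, as noted above, the projection is not genuine under the null, so the projected-PCA estimator would be inappropriate there.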

5.2. Testing Γ = 0

Connor et al. (2012) applied the semi-parametric factor model to financial returns under the assumption that Γ = 0, that is, that the loading matrix can be fully explained by the observed covariates. It is therefore natural to test the following null hypothesis of specification:

$$H_0: \Gamma = 0.$$

Recall that G(X) ≈ PΛ, so that Λ ≈ PΛ + Γ. Therefore, the specification testing problem is essentially equivalent to testing

$$H_0: \Lambda = P\Lambda.$$

That is, we are testing whether the loading matrix in the factor model belongs to the space spanned by the observed covariates.

A natural test statistic is thus based on the weighted quadratic form

for some p × p positive definite weight matrix W2, where F̂ is the projected-PCA estimator for factors and Λ̂ = YF̂/T. To control the size of the test, we take , where Σu is a diagonal covariance matrix of ut under H0, assuming that (u1t, ···, upt) are uncorrelated.

We replace Σu with its consistent estimator: let Û = Y − Λ̂F̂′. Define

Then the operational test statistic is defined to be

The null hypothesis is rejected for large values of SΓ.

5.3. Asymptotic null distributions

For testing purposes, we assume {Xi, γi} to be i.i.d., and let T, p, J → ∞ simultaneously. The following assumption governs the relation between T and p.

Assumption 5.1

Suppose

(i) {Xi, γi}i≤p are independent and identically distributed;

(ii) T2/3 = o(p), and p(log p)4 = o(T2);

(iii) J and κ satisfy: , and .

Condition (ii) requires a balance between the dimensionality and the sample size. On the one hand, a relatively large sample size is desired (p(log p)4 = o(T2)) so that the effect of estimating is negligible asymptotically. On the other hand, a lower bound on the dimensionality is also required (T2/3 = o(p)) to ensure that the factors are estimated accurately enough. Such a balance is common in high-dimensional factor analysis (e.g., Bai (2003), Stock and Watson (2002)) and in the recent literature on PCA (e.g., Jung and Marron (2009), Shen et al. (2013b)). The i.i.d. assumption on the covariates Xi in Condition (i) can be relaxed under further distributional assumptions on γi (e.g., assuming γi to be Gaussian). The conditions on J in Condition (iii) are consistent with those of the previous sections.

We focus on the case where ut is Gaussian, and show that under H0: G(X) = 0,

and under H0: Γ = 0,

whose conditional distributions (given F) under the null are χ2 with JdK and pK degrees of freedom, respectively. We can derive their standardized limiting distributions as J, T, p → ∞. This is given in the following result.

Theorem 5.1

Suppose Assumptions 3.3, 3.4, 4.2 and 5.1 hold. Then under H0: G(X) = 0,

where K = dim(ft) and d = dim(Xi). In addition, suppose Assumptions 4.1 and 4.3 hold, and {ut}t≤T is i.i.d. N(0, Σu) with a diagonal covariance matrix Σu whose elements are bounded away from zero and infinity. Then under H0: Γ = 0,

In practice, when a relatively small sieve dimension J is used, one can instead use the upper α-quantile of the χ2 distribution with JdK degrees of freedom for pSG.
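The practical advice above can be illustrated with a short Monte Carlo sketch. It assumes that the standardized statistic takes the usual form (X − m)/√(2m) for X distributed as χ2 with m = JdK degrees of freedom, and that the test rejects when this exceeds the upper α normal quantile; the function name and the choice J = 4, d = 4, K = 1 are illustrative. For small m the normal approximation over-rejects somewhat, while for large m its size is close to nominal, which is why the χ2 quantile is preferable when J is small.

```python
import numpy as np
from statistics import NormalDist


def normal_approx_size(df, alpha=0.05, n_draws=200_000, seed=0):
    """Monte Carlo size of the test that rejects when (X - df) / sqrt(2 df) > z_{1-alpha},
    with X ~ chi^2_df, i.e. when the standardized statistic is referred to N(0, 1)."""
    rng = np.random.default_rng(seed)
    draws = rng.chisquare(df, size=n_draws)
    z = NormalDist().inv_cdf(1 - alpha)
    return np.mean((draws - df) / np.sqrt(2 * df) > z)


size_small = normal_approx_size(4 * 4 * 1)   # J = 4, d = 4, K = 1, so JdK = 16
size_large = normal_approx_size(1000)        # a much larger number of degrees of freedom
print(size_small, size_large)
```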

Remark 5.1

We require uit to be independent across t, which ensures that the covariance matrix of the leading term has a simple form. This assumption can be relaxed to allow for weakly dependent {ut}t≤T, but many autocovariance terms will then be involved in the covariance matrix. One may regularize standard autocovariance matrix estimators such as those of Newey and West (1987) and Andrews (1991) to account for the high dimensionality. Moreover, we assume Σu to be diagonal to facilitate estimation; this can also be weakened to allow for a non-diagonal but sparse Σu. Regularization methods such as thresholding (Bickel and Levina (2008)) can then be employed, though they are expected to be more technically involved.

6. Estimating the Number of Factors from Projected Data

We now address the problem of estimating K = dim(ft) when it is unknown. Once a consistent estimator of K is available, all the results above carry over to the unknown-K case using a conditioning argument*. In principle, many consistent estimators of K can be employed, e.g., Bai and Ng (2002), Alessi et al. (2010), Breitung and Pigorsch (2009), Hallin and Liška (2007). More recently, Ahn and Horenstein (2013) and Lam and Yao (2012) proposed to select the largest ratio of adjacent eigenvalues of Y′Y, based on the fact that the K largest eigenvalues of the sample covariance matrix grow fast as p increases, while the remaining eigenvalues either remain bounded or grow slowly.

We extend the theory of Ahn and Horenstein (2013) in two ways. First, when the loadings depend on observable characteristics, it is more desirable to work with the projected data PY. Due to the orthogonality of U and X, the projected data matrix is approximately equal to G(X)F′. The projected matrix PY(PY)′ thus allows us to study the eigenvalues of the principal matrix component G(X)G(X)′, which directly connect with the strengths of those factors. Since the non-vanishing eigenvalues of PY(PY)′ and (PY)′PY = Y′PY are the same, we can work directly with the eigenvalues of the matrix Y′PY. Second, we allow p/T → ∞.

Let λk(Y′PY) denote the kth largest eigenvalue of the projected data matrix Y′PY. We assume 0 < K < Jd/2, which naturally holds if the sieve dimension J grows slowly. The estimator is defined as

$$\hat K = \arg\max_{0 < k \le Jd/2} \frac{\lambda_k(Y'PY)}{\lambda_{k+1}(Y'PY)}.$$

The following assumption is similar to that of Ahn and Horenstein (2013). Recall that U = (u1, ···, uT) is a p × T matrix of the idiosyncratic components, and let Σu denote the p × p covariance matrix of ut.

Assumption 6.1

The error matrix U can be decomposed as

$$U = \Sigma_u^{1/2} E M^{1/2}, \qquad (6.1)$$

where

(i) the eigenvalues of Σu are bounded away from both zero and infinity;

(ii) M is a T × T positive semi-definite non-stochastic matrix whose eigenvalues are bounded away from zero and infinity;

(iii) E = (eit)p×T is a p × T stochastic matrix, where eit is independent in both i and t, and et = (e1t, …, ept)′ are i.i.d. isotropic sub-Gaussian vectors, that is, there is C > 0 such that for all s > 0,

(iv) there are dmin, dmax > 0 such that, almost surely,

This assumption allows the matrix U to be both cross-sectionally and serially dependent. The T × T matrix M captures the serial dependence across t. In the special case of no serial dependence, the decomposition (6.1) is satisfied by taking M = I. In addition, we require ut to be sub-Gaussian in order to apply the random matrix theory of Vershynin (2010). For instance, when ut is N(0, Σu), for any ||v|| = 1, v′et ~ N(0, 1), so condition (iii) is satisfied. Finally, the almost-sure requirement in condition (iv) seems somewhat strong, but it is still satisfied by bounded basis functions (e.g., the Fourier basis) and follows from the strong law of large numbers given that Eϕ(Xi)ϕ(Xi)′ is well conditioned.
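To make the structure of Assumption 6.1 concrete, the sketch below generates an error matrix of the form U = Σu^(1/2) E M^(1/2), which is our reading of decomposition (6.1). The AR(1)-type choice M_st = ρ^|s−t| and the diagonal Σu are hypothetical examples. For 0 < ρ < 1 the eigenvalues of such an M lie strictly inside [(1 − ρ)/(1 + ρ), (1 + ρ)/(1 − ρ)], so they are bounded away from zero and infinity as condition (ii) requires, and Gaussian E is isotropic sub-Gaussian as in condition (iii).

```python
import numpy as np


def sym_sqrt(A):
    """Symmetric square root of a symmetric positive semi-definite matrix."""
    w, V = np.linalg.eigh(A)
    return (V * np.sqrt(np.clip(w, 0.0, None))) @ V.T


rng = np.random.default_rng(2)
p, T, rho = 500, 50, 0.5

# Hypothetical serial-dependence matrix M with entries rho^|s - t| (AR(1) correlations).
lags = np.abs(np.subtract.outer(np.arange(T), np.arange(T)))
M = rho ** lags

# Hypothetical diagonal Sigma_u with standard deviations between 0.5 and 2.
sd = rng.uniform(0.5, 2.0, size=p)
Sigma_u = np.diag(sd**2)

E = rng.standard_normal((p, T))          # i.i.d. N(0, 1) entries
U = sym_sqrt(Sigma_u) @ E @ sym_sqrt(M)  # p x T error matrix

# After rescaling each row by its standard deviation, rows have covariance M,
# so the average lag-1 cross-product should be close to rho.
Z = U / sd[:, None]
lag1 = np.mean(Z[:, :-1] * Z[:, 1:])
eig_M = np.linalg.eigvalsh(M)
print(lag1, eig_M.min(), eig_M.max())
```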

We show in the supplementary material that when Σu is diagonal (uit is cross-sectionally independent), both the sub-Gaussian assumption and condition (iv) can be relaxed.
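A small numerical sketch of the eigenvalue-ratio rule of this section follows; it is not the authors' code. The sieve is a hypothetical basis of probabilists' Hermite polynomials of degree at most 7 (NumPy's hermevander, eight columns including the constant), the search range 1 ≤ k ≤ (number of basis columns)/2 mirrors the requirement K < Jd/2, and the factors are orthonormalized so that F′F/T = IK instead of being drawn from a VAR. The loading functions are those of Design 2 in Section 7.3. Applying the same function to Y′Y gives the unprojected ratio rule of Ahn and Horenstein (2013) for comparison.

```python
import numpy as np
from numpy.polynomial.hermite_e import hermevander


def eigen_ratio_K(M, k_max):
    """Return argmax over 1 <= k <= k_max of lambda_k(M) / lambda_{k+1}(M)."""
    evals = np.sort(np.linalg.eigvalsh(M))[::-1]   # eigenvalues in descending order
    ratios = evals[:k_max] / evals[1:k_max + 1]
    return int(np.argmax(ratios)) + 1              # eigenvalue indices start at 1


rng = np.random.default_rng(1)
p, T, K, sigma = 500, 10, 3, 2.0
x = rng.standard_normal(p)
G = np.column_stack([x, x**2 - 1, x**3 - 2 * x])                # Design 2 loading functions
F = np.sqrt(T) * np.linalg.qr(rng.standard_normal((T, K)))[0]  # F'F/T = I_K
Y = G @ F.T + sigma * rng.standard_normal((p, T))

Phi = hermevander(x, 7)            # hypothetical sieve basis with 8 columns
Q, _ = np.linalg.qr(Phi)
PY = Q @ (Q.T @ Y)
k_max = Phi.shape[1] // 2

K_projected = eigen_ratio_K(PY.T @ PY, k_max)   # (PY)'(PY) = Y'PY
K_ah = eigen_ratio_K(Y.T @ Y, k_max)            # ratio rule on the raw Y'Y
print(K_projected, K_ah)
```

Since Y′PY has rank at most the number of basis columns, restricting k to at most half of that number keeps the denominators λk+1(Y′PY) away from the zero eigenvalues.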

The following theorem is the main result of this section.

Theorem 6.1

Under assumptions of Theorem 4.1 and Assumption 6.1, as p, T → ∞, if J satisfies and K < Jd/2 (J may either grow or stay constant), we have

7. Numerical Studies

This section presents numerical results demonstrating the performance of the projected-PCA method in estimating loadings and factors, using both real and simulated data.

7.1. Estimating loading curves with real data

We collected the S&P 500 index constituents from CRSP that have complete daily closing prices from 2005 through 2013, together with their market capitalization and book value from Compustat. There are 337 stocks in our data set, whose daily excess returns were calculated. Following Connor et al. (2012), we considered four characteristics X for each stock: size, value, momentum and volatility, which were calculated using data prior to the analysis window, so that the characteristics can be treated as known. See Connor et al. (2012) for detailed descriptions of these characteristics. All four characteristics are standardized to have mean zero and unit variance. Note that this construction makes their values independent of the current data.

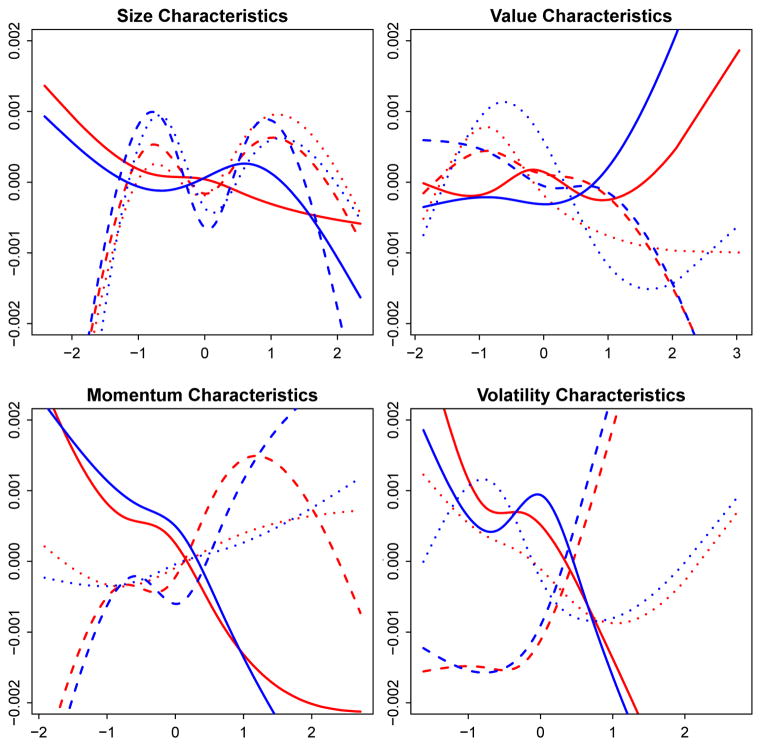

We fix the time window to be the first quarter of 2006, which contains T = 63 observations. Given the excess returns {yit}i≤337,t≤63 and characteristics Xi as input data and setting K = 3, we fit the loading functions gk(·), k = 1, 2, 3, using the projected-PCA method. The four additive components gkl(·) are fitted using cubic splines in the R package “GAM” with sieve dimension J = 4. The four loading functions for each factor are plotted in Figure 3. The contribution of each characteristic to each factor is quite nonlinear.

Fig. 3.

Estimated additive loading functions gkl, l = 1, …, 4, from financial returns of 337 stocks in the S&P 500 index. They are taken as the true functions in the simulation studies. In each panel (fixed l), the true and estimated curves for k = 1, 2, 3 are plotted and compared. The solid, dashed and dotted red curves are the true curves corresponding to the first, second and third factors, respectively. The blue curves are their estimates from one simulation of the calibrated model with T = 50, p = 300.

7.2. Calibrating the model with real data

We now treat the estimated functions gkl(·) as the true loading functions, and calibrate a model for simulations. The “true model” is calibrated as follows:

Take the estimated gkl(·) from the real data as the true loading functions.

For each p, generate {ut}t≤T from N(0, DΣ0D), where D is diagonal and Σ0 is sparse. Generate the diagonal elements of D from Gamma(α, β) with α = 7.06, β = 536.93 (calibrated from the real data), and generate the off-diagonal elements of Σ0 from with μu = −0.0019, σu = 0.1499. Then truncate Σ0 by a correlation threshold of 0.03 to produce a sparse matrix, and make it positive definite using the function nearPD in the R package Matrix.

Generate {γik} from the i.i.d. Gaussian distribution with mean 0 and standard deviation 0.0027, calibrated with real data.

Generate ft from a stationary VAR model ft = Aft−1 + εt where εt ~ N(0, Σε). The model parameters are calibrated with the market data and listed in Table 1.

Finally, generate Xi ~ N(0, ΣX). Here ΣX is a 4×4 correlation matrix estimated from the real data.

Table 1.

Parameters used for the factor generating process, obtained by calibration to the real data.

| Σε (col. 1) | Σε (col. 2) | Σε (col. 3) | A (col. 1) | A (col. 2) | A (col. 3) |
|---|---|---|---|---|---|
| 0.9076 | 0.0049 | 0.0230 | −0.0371 | −0.1226 | −0.1130 |
| 0.0049 | 0.8737 | 0.0403 | −0.2339 | 0.1060 | −0.2793 |
| 0.0230 | 0.0403 | 0.9266 | 0.2803 | 0.0755 | −0.0529 |
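The factor-generating step of the calibrated model can be sketched with the Table 1 values. This is an illustration under assumptions: the left block of Table 1 is read as Σε and the right block as A, with row i of the table giving row i of each matrix; Gamma(α, β) is read with β as a rate, so NumPy's scale parameter is 1/β; the burn-in of 100 periods is our choice; and the sparse correlation step for Σ0 (thresholding plus nearPD) is omitted.

```python
import numpy as np

# Table 1 values.
Sigma_eps = np.array([[0.9076, 0.0049, 0.0230],
                      [0.0049, 0.8737, 0.0403],
                      [0.0230, 0.0403, 0.9266]])
A = np.array([[-0.0371, -0.1226, -0.1130],
              [-0.2339,  0.1060, -0.2793],
              [ 0.2803,  0.0755, -0.0529]])


def simulate_var1(A, Sigma_eps, T, burn_in=100, seed=0):
    """Simulate f_t = A f_{t-1} + eps_t with eps_t ~ N(0, Sigma_eps), dropping a burn-in."""
    rng = np.random.default_rng(seed)
    K = A.shape[0]
    L = np.linalg.cholesky(Sigma_eps)   # eps_t = L z_t with z_t ~ N(0, I)
    f = np.zeros(K)
    out = np.empty((T, K))
    for t in range(burn_in + T):
        f = A @ f + L @ rng.standard_normal(K)
        if t >= burn_in:
            out[t - burn_in] = f
    return out                          # T x K factor matrix F


F = simulate_var1(A, Sigma_eps, T=50)
spectral_radius = np.max(np.abs(np.linalg.eigvals(A)))   # < 1 means the VAR is stationary

rng = np.random.default_rng(1)
# Diagonal of D: Gamma(shape=7.06, rate=536.93), assuming beta is a rate parameter.
d_diag = rng.gamma(shape=7.06, scale=1 / 536.93, size=300)
# Random loading component gamma_ik ~ N(0, 0.0027^2), as described in the text.
Gamma_load = rng.normal(0.0, 0.0027, size=(300, 3))
print(spectral_radius, d_diag.mean())
```

Under the rate reading, the mean of the diagonal of D is 7.06/536.93 ≈ 0.013, a plausible scale for daily return volatility; the scale reading would give implausibly large values.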

We simulate data from the calibrated model and estimate the loadings and factors for T = 10 and 50, with p varying from 20 to 500. The “true” and estimated loading curves are plotted in Figure 3 to demonstrate the performance of projected-PCA. Note that the “true” loading curves in the simulation are taken from the estimates calibrated using the real data. The estimates based on simulated data capture the shape of the true curves, though slight biases are visible at the boundaries. In general, however, projected-PCA fits the model well.

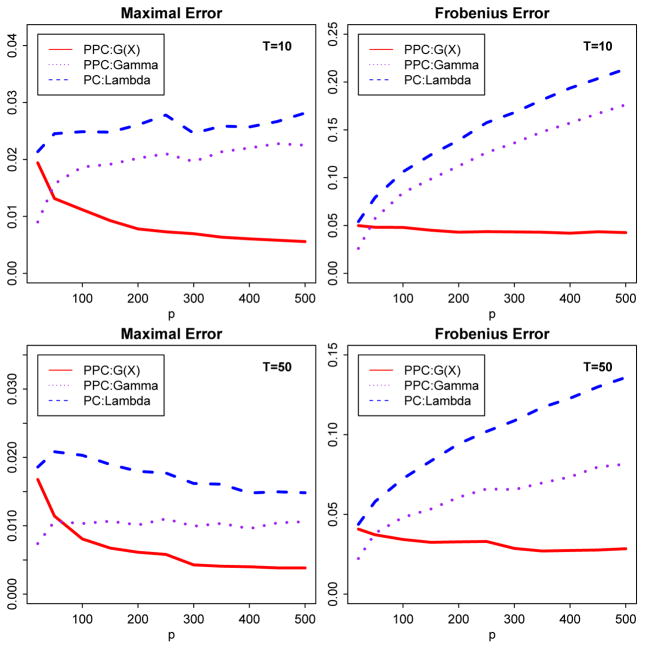

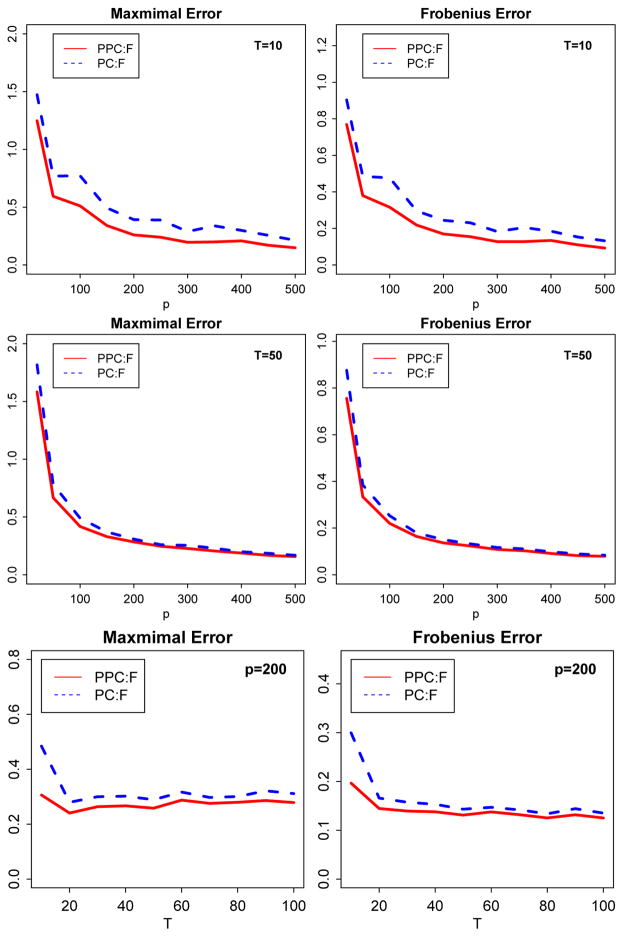

We also compare our method with the regular PC method (e.g., Stock and Watson (2002)). The mean values of ||Λ̂ − Λ||max and ||F̂ − F0||max, together with the corresponding standardized Frobenius-norm errors, are plotted in Figures 1 and 2, where Λ = G0(X) + Γ (see Section 7.3 for the definitions of G0(X) and F0). The breakdown of the error into its G0(X) and Γ components is also depicted in Figure 1. Projected-PCA outperforms PC in estimating both the factors and the loadings, including the nonparametric curves G(X) and the random noise Γ. The estimation errors of projected-PCA for G(X) decrease as the dimension increases, which is consistent with our asymptotic theory.

Fig. 1.

Averaged ||Λ̂ − Λ|| by projected-PCA (PPCA, red solid) and regular PC (dashed blue), and ||Ĝ − G0||, ||Γ̂ − Γ|| by PPCA, over 500 repetitions. Left panel: ||·||max; right panel: standardized Frobenius norm.

Fig. 2.

Averaged ||F̂ − F0||max and standardized Frobenius-norm error over 500 repetitions, by projected-PCA (PPCA, solid red) and regular PC (dashed blue).

7.3. Design 2

Consider a different design with only one observed covariate and three factors. The three characteristic functions are g1 = x, g2 = x2 − 1, g3 = x3 − 2x, with the characteristic X being standard normal. Generate {ft}t≤T from the stationary VAR(1) model ft = Aft−1 + εt, where εt ~ N(0, I). We set Γ = 0.

We simulate the data for T = 10 or 50 and various p ranging from 20 to 500. To ensure that the true factors and loadings satisfy the identifiability conditions, we calculate a transformation matrix H such that H′F′FH/T = IK and H−1G′GH′−1 is diagonal. Let the final true factors and loadings be F0 = FH and G0 = GH′−1. For each p, we run the simulation 500 times.
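One way to compute such an H is sketched below; the text does not say how H was obtained, so this construction is our own. Writing S = F′F/T, take H = S^(−1/2)Q, where the columns of Q are the eigenvectors of S^(1/2)G′GS^(1/2). Then H′F′FH/T = Q′Q = IK and H−1G′GH′−1 = Q′S^(1/2)G′GS^(1/2)Q is diagonal, while the common component G0F0′ = GF′ is unchanged. The VAR coefficient A = 0.5 IK is a hypothetical value, since Design 2 does not report A.

```python
import numpy as np


def sym_pow(A, power):
    """Power of a symmetric positive definite matrix via its eigendecomposition."""
    w, V = np.linalg.eigh(A)
    return (V * w**power) @ V.T


def identify(F, G):
    """Return F0 = F H and G0 = G H'^{-1} with F0'F0/T = I and G0'G0 diagonal."""
    T = F.shape[0]
    S = F.T @ F / T
    S_half, S_neg_half = sym_pow(S, 0.5), sym_pow(S, -0.5)
    _, Q = np.linalg.eigh(S_half @ G.T @ G @ S_half)   # rotation that diagonalizes G0'G0
    H = S_neg_half @ Q
    return F @ H, G @ np.linalg.inv(H.T), H


rng = np.random.default_rng(3)
p, T, burn_in = 300, 50, 100
x = rng.standard_normal(p)
G = np.column_stack([x, x**2 - 1, x**3 - 2 * x])   # Design 2 loading functions

A = 0.5 * np.eye(3)                                # hypothetical VAR(1) coefficient
F = np.zeros((T, 3))
f = np.zeros(3)
for t in range(burn_in + T):
    f = A @ f + rng.standard_normal(3)             # eps_t ~ N(0, I)
    if t >= burn_in:
        F[t - burn_in] = f

F0, G0, H = identify(F, G)
print(np.round(G0.T @ G0, 6))
```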

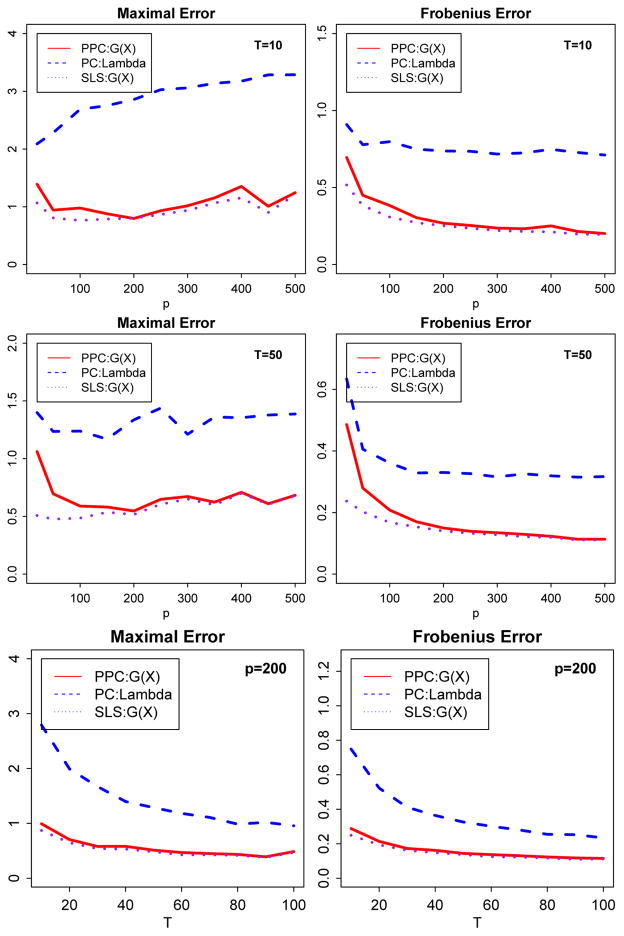

We estimate the loadings and factors using both projected-PCA and PC. For projected-PCA, as in our theorem, we choose J = C(p min(T, p))^{1/κ}, with κ = 4 and C = 3. To estimate the loading matrix, we also compare with a third method, sieve least squares (SLS), which assumes that the factors are observable. In this case, the loading matrix is estimated by PYF0/T, where F0 is the true factor matrix of the simulated data.
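The sieve dimension used here can be computed as follows. The rounding rule (round down, with a minimum of 1) is our assumption, since the text does not specify how J is made an integer. For the smallest dimensions, Jd should also stay well below p; otherwise P becomes the identity and the projection removes nothing.

```python
import math


def sieve_dim(p, T, kappa=4, C=3):
    """J = C * (p * min(T, p))**(1/kappa), rounded down (rounding rule assumed)."""
    return max(1, math.floor(C * (p * min(T, p)) ** (1.0 / kappa)))


for T in (10, 50):
    print(T, [sieve_dim(p, T) for p in (20, 100, 300, 500)])
```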

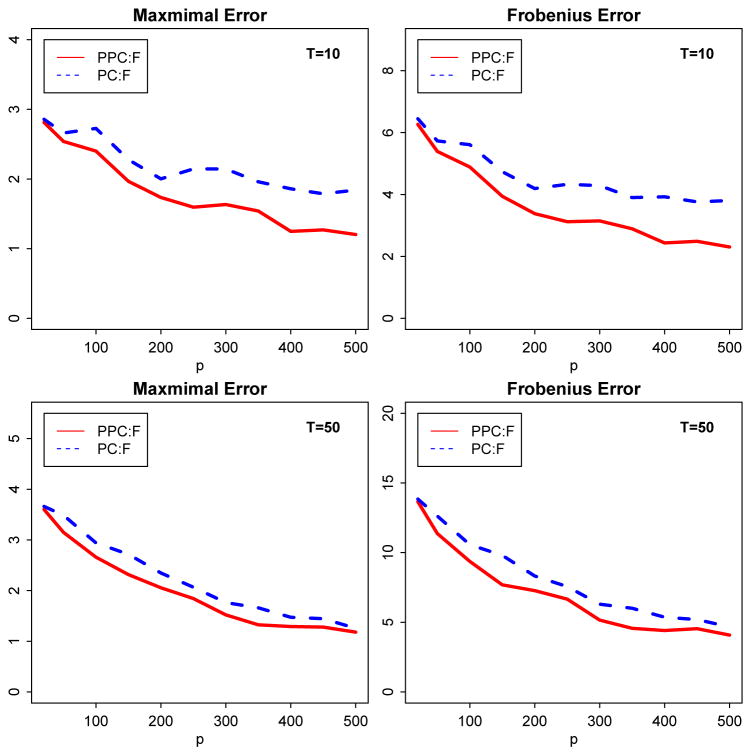

The estimation errors, measured in max and standardized Frobenius norms, for both loadings and factors are reported in Figures 4 and 5. The plots demonstrate the good performance of projected-PCA in estimating both loadings and factors. In particular, it works well when T is small but p is large. In this design Γ = 0, so the accuracy of estimating Λ = G0 is significantly improved by using projected-PCA, which significantly outperforms traditional PCA. Figure 5 shows that the factors are also better estimated by projected-PCA than by traditional PCA, particularly when T is small. It is also clear that when p is fixed, the improvement in estimating the factors is not significant as T grows. This matches our convergence results for the factor estimators.

Fig. 4.

Averaged ||Ĝ − G0||max and standardized Frobenius-norm error over 500 repetitions. PPCA, PC and SLS represent projected-PCA, regular PCA and sieve least squares with known factors, respectively: Design 2. Here Γ = 0, so Λ = G0. Upper two panels: p grows with fixed T; bottom panels: T grows with fixed p.

Fig. 5.

Average estimation error of factors over 500 repetitions, i.e., ||F̂ − F0||max and the standardized Frobenius-norm error, by projected-PCA (solid red) and PC (dashed blue): Design 2. Upper two panels: p grows with fixed T; bottom panels: T grows with fixed p.

It is also interesting to compare projected-PCA with SLS (Sieve Least-Squares with observed factors) in estimating the loadings, which corresponds to the cases of unobserved and observed factors. As we see from Figure 4, when p is small, the projected-PCA is not as good as SLS. But the two methods behave similarly as p increases. This further confirms the theory and intuition that as the dimension becomes larger, the effects of estimating the unknown factors are negligible.

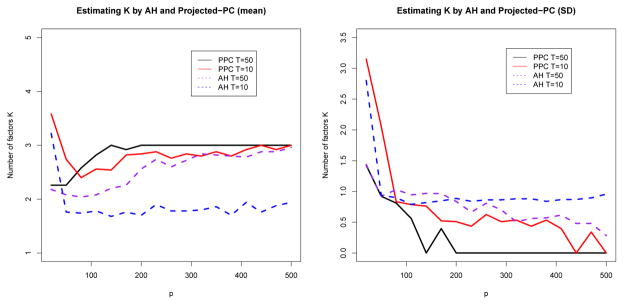

7.4. Estimating number of factors

We now demonstrate the effectiveness of estimating K by the projected-PC eigenvalue-ratio method. The data are simulated in the same way as in Design 2, with T = 10 or 50 and p ranging from 20 to 500. We compare our projected-PC method, based on the projected data matrix Y′PY, with the eigenvalue-ratio test (AH) of Ahn and Horenstein (2013) and Lam and Yao (2012), which works on the original data matrix Y′Y.

For each pair (T, p), we repeat the simulation 50 times and report the mean and standard deviation of the estimated number of factors in Figure 6. Projected-PCA outperforms AH because the projection significantly reduces the impact of idiosyncratic errors. When T = 50, we recover the number of factors almost all the time, especially for large dimensions (p > 200). Even when T = 10, projected-PCA still yields estimates closer to the true number of factors.

Fig. 6.

Mean and standard deviation of the estimated number of factors over 50 repetitions. True K = 3. PPCA and AH respectively represent the methods of projected-PCA and Ahn and Horenstein (2013). Left panel: Mean; Right panel: standard deviation.

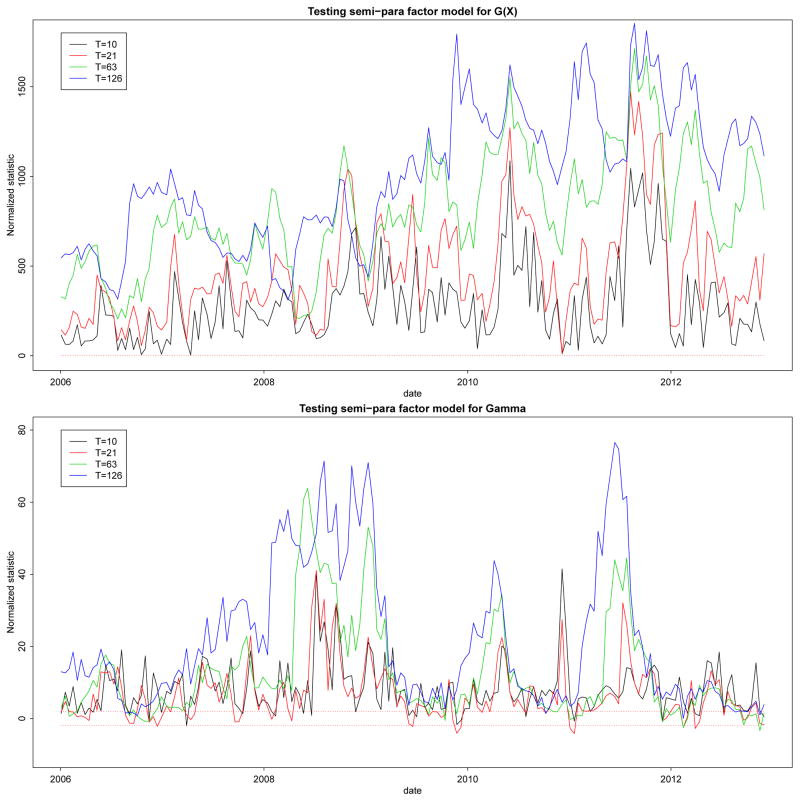

7.5. Loading specification tests with real data

We test the loading specifications on the real data, using the same data set as in Section 7.1, which consists of excess returns from 2005 through 2013. The tests were conducted on rolling windows, with window lengths of 10 days, a month, a quarter, and half a year. For each fixed window length (T), we computed the standardized test statistics SG and SΓ and plotted them along the rolling windows in Figure 7. In almost all cases, the number of factors is estimated to be one for the various combinations of (T, p, J).

Fig. 7.

Normalized SG and SΓ from 2006/01/03 to 2012/11/30. The dotted lines are ±1.96.

Figure 7 suggests that the semi-parametric factor model is strongly supported by the data. Judging from the upper panel (testing H0: G(X) = 0), we have very strong evidence of a non-vanishing covariate effect, which demonstrates the dependence of the market betas on the covariates X. In other words, the market betas can be explained at least partially by the characteristics of the assets. The results also provide a justification for using projected PCA to obtain more accurate estimates.

In the bottom panel of Figure 7 (testing H0: Γ = 0), we see that the null hypothesis is rejected for the majority of the period. In other words, the characteristics of the assets cannot fully explain the market beta, as intuitively expected, and model (1.2) in the literature is inadequate. However, fully nonparametric loadings could be possible in certain time ranges, mostly before the financial crisis. During 2008–2010, the market's behavior was considerably more complex, which leads to more rejections of the null hypothesis. The null hypothesis Γ = 0 is accepted more often from 2012 onward. We also notice that larger T tends to yield larger statistics in both tests, as the evidence against the null hypothesis is stronger with larger T. Overall, the semi-parametric model under consideration provides a flexible way of modeling equity markets and understanding the nonparametric loading curves.

8. Conclusions

This paper proposes and studies a high-dimensional factor model with nonparametric loading functions that depend on a few observed covariates. This model is motivated by the fact that observed variables can partially explain the factor loadings. We propose a projected PCA to estimate the unknown factors, loadings, and number of factors. After projecting the response variable onto the sieve space spanned by the covariates, the projected-PCA yields significantly faster rates of convergence than the regular methods. In particular, consistency can be achieved without a diverging sample size, as long as the dimensionality grows. This demonstrates that the proposed method is useful in typical HDLSS situations. In addition, we propose new specification tests for the orthogonal decomposition of the loadings, which fill a gap in the testing literature for semi-parametric factor models. Our empirical findings show that firm characteristics can partially explain the factor loadings, which provides a justification for employing projected-PCA methods. On the other hand, our empirical study also shows that firm characteristics cannot fully explain the factor loadings, so the proposed generalized factor model is more appropriate.

APPENDIX A: PROOFS FOR SECTION 3

Throughout the proofs, p → ∞, and T may either grow simultaneously with p or stay constant. For two matrices A, B with fixed dimensions, and a sequence aT, by writing A = B + oP(aT), we mean ||A − B||F = oP (aT).

In the regular factor model Y = ΛF′+U, let K denote a K×K diagonal matrix of the first K eigenvalues of . Then by definition, . Let . Then

| (A.1) |

where

We now describe the structure of the proofs for

Note that F̂ − F = F̂ − FM + F(M − I). Hence we need to bound and respectively.


Step 1: prove that .

Due to the equality (A.1), it suffices to bound ||K−1||2 as well as the norm of D1, D2, D3 respectively. These are obtained in Lemmas A.2, A.3 below.


Step 2: prove that .

Still by the equality (A.1), . Hence this step is achieved by bounding ||F′Di||F for i = 1, 2, 3. Note that in this step, we shall not apply a simple inequality ||F′Di||F ≤ ||F||F ||Di||F, which is too crude. Instead, with the help of the result achieved in Step 1, sharper upper bounds for ||F′Di||F can be achieved. We do so in Lemma ?? in the supplementary material.


Step 3: prove that .

This step is achieved in Lemma A.4 below, which uses the result in Step 2.

Before proceeding to Step 1, we first show that the two alternative definitions for Ĝ(X) described in Section 2.3 are equivalent.
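The equivalence can also be checked numerically before reading the proof. The sketch assumes that the two definitions from Section 2.3 are Ĝ(X) = PYF̂/T, with F̂/√T the top-K eigenvectors of Y′PY, and Ĝ(X) = Ξ̂D̂^(1/2), with (Ξ̂, D̂) the top-K eigenpairs of PYY′P/T. This scaling of D̂ is our reading and is what makes the two agree. The stand-in basis matrix Phi and the data are random, and because eigenvectors are determined only up to sign, column signs are aligned before comparing.

```python
import numpy as np

rng = np.random.default_rng(4)
p, T, K = 100, 15, 2
Phi = rng.standard_normal((p, 6))   # stand-in for the sieve basis matrix Phi(X)
Q, _ = np.linalg.qr(Phi)
Y = rng.standard_normal((p, T))
PY = Q @ (Q.T @ Y)                  # projection of Y onto the column space of Phi

# Definition 1: F_hat / sqrt(T) = top-K eigenvectors of Y'PY; G1 = P Y F_hat / T.
w, V = np.linalg.eigh(PY.T @ PY)
F_hat = np.sqrt(T) * V[:, np.argsort(w)[::-1][:K]]
G1 = PY @ F_hat / T

# Definition 2: (Xi_hat, D_hat) = top-K eigenpairs of P Y Y' P / T; G2 = Xi_hat D_hat^{1/2}.
w2, V2 = np.linalg.eigh(PY @ PY.T / T)
top = np.argsort(w2)[::-1][:K]
G2 = V2[:, top] * np.sqrt(w2[top])

# Align the arbitrary eigenvector signs column by column, then compare.
signs = np.sign(np.sum(G1 * G2, axis=0))
print(np.max(np.abs(G1 - G2 * signs)))
```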

Lemma A.1

Proof

Consider the singular value decomposition: , where V1 is a p×p orthogonal matrix, whose columns are the eigenvectors of ; V2 is a T × T matrix whose columns are the eigenvectors of ; S is a p × T rectangular diagonal matrix, with diagonal entries as the square roots of the non-zero eigenvalues of . In addition, by definition, D̂ is a K × K diagonal matrix consisting of the largest K eigenvalues of ; Ξ̂ is a p × K matrix whose columns are the corresponding eigenvectors. The columns of are the eigenvectors of , corresponding to the first K eigenvalues.

With these definitions, we can write V1 = (Ξ̂, Ṽ1), , and

for some matrices Ṽ1, Ṽ2 and D̃. It then follows that

Lemma A.2

||K||2 = OP (1), ||K−1||2 = OP (1), ||M||2 = OP (1).

Proof

The eigenvalues of K are the same as those of

Substituting Y = ΛF′ + U, and F′F/T = IK, we have , where

By Assumption 3.3, ,

Hence

By Lemma ?? in the supplementary material, . Similarly,

Using Weyl's inequality, |λk(W) − λk(W1)| ≤ ||W − W1||2 for the kth eigenvalue, we have |λk(W) − λk(W1)| = OP(T−1/2 + p−1) for k = 1, …, K. Hence it suffices to prove that the first K eigenvalues of W1 are bounded away from both zero and infinity; these are also the first K eigenvalues of . This holds under the theorem's assumption (Assumption 3.1). Thus ||K−1||2 = OP(1) = ||K||2, which also implies ||M||2 = OP(1).
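The eigenvalue perturbation bound used above is Weyl's inequality for symmetric matrices. A quick numerical check with random symmetric matrices, whose size and perturbation scale are chosen arbitrarily, is sketched below.

```python
import numpy as np

rng = np.random.default_rng(5)
n = 8
A = rng.standard_normal((n, n))
W1 = (A + A.T) / 2                        # symmetric "population" matrix
B = rng.standard_normal((n, n))
W = W1 + 0.1 * (B + B.T) / 2              # symmetric perturbation of W1

lam = np.sort(np.linalg.eigvalsh(W))[::-1]    # eigenvalues in descending order
lam1 = np.sort(np.linalg.eigvalsh(W1))[::-1]
gap = np.max(np.abs(lam - lam1))              # max_k |lambda_k(W) - lambda_k(W1)|
bound = np.linalg.norm(W - W1, 2)             # spectral norm ||W - W1||_2
print(gap, bound)
```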

Lemma A.3

(i) , (ii) , (iii) , (iv) .

Proof

It follows from Lemma ?? in the supplementary material that . Also, and Assumption 3.1 implies . So

By Lemma A.2, ||K−1||2 = OP (1). Part (iv) then follows directly from

Lemma A.4

In the regular factor model .

Proof

By Lemma ?? in the supplementary material and the triangle inequality, . Hence

Right multiplying M to both sides . In addition,

Hence

In addition, from ,

Because Λ′PΛ is diagonal, the same arguments as in the proof of Proposition ?? lead to the desired result.

Proof of Theorem 3.1

Proof

It follows from Lemmas A.3 (iv) and A.4 that

As for the estimated loading matrix, note that

where .

By Lemmas ?? and A.4,

By Lemma ??, , and from Lemma ?? . Hence , which implies

Proof of Theorem 3.2

Proof

Since Ĝ(X) = Ξ̂D̂1/2, by Theorem 3.1, . Hence . By Lemma A.2, ||(D̂/p)−1||2 = ||K−1||2 = OP(1), which implies

On the other hand, define Λ̄ = ΛṼ = (Λ̄1, …, Λ̄K). Then Λ̄′Λ̄ is diagonal and , j = 1, …, K. This implies that the columns of Λ̄(Λ̄′Λ̄)−1/2 are the eigenvectors of ΛΛ′ corresponding to the largest K eigenvalues. In addition, in the factor model, we have the following matrix decomposition: for Σu = cov(ut), Σ = ΛΛ′ + Σu. Hence, by the same argument as in the proof of Proposition 2.2 in Fan et al. (2013),

Using ṼṼ′ = I, we have

On the right-hand side, the first term is , as is proved above. Again, since ||(p−1D̂)−1/2||2 = OP(1), the second term is bounded by

Finally, since (Λ̄′Λ̄)1/2 = Ṽ′(Λ′Λ)1/2Ṽ and ṼṼ′ = I, we have (Λ̄′Λ̄)1/2Ṽ′ − Ṽ′(Λ′Λ)1/2 = 0, which implies that the third term is zero. Hence

All the remaining proofs are given in the supplementary material.

Footnotes

* One can first conduct the analysis conditional on the event {K̂ = K} and then argue that the results still hold unconditionally, since P(K̂ = K) → 1.

SUPPLEMENTARY MATERIAL

Supplement: Technical proofs (Fan et al. (2015b)). This supplementary material contains all the remaining proofs.

References

- Ahn J, Marron J, Muller KM, Chi YY. The high-dimension, low-sample-size geometric representation holds under mild conditions. Biometrika. 2007;94:760–766. [Google Scholar]

- Ahn S, Horenstein A. Eigenvalue ratio test for the number of factors. Econometrica. 2013;81:1203–1227. [Google Scholar]

- Alessi L, Barigozzi M, Capassoc M. Improved penalization for determining the number of factors in approximate factor models. Statistics and Probability Letters. 2010;80:1806–1813. [Google Scholar]

- Andrews D. Heteroskedasticity and autocorrelation consistent covariance matrix estimation. Econometrica. 1991;59:817–858. [Google Scholar]

- Bai J. Inferential theory for factor models of large dimensions. Econometrica. 2003;71:135–171. [Google Scholar]

- Bai J, Li Y. Statistical analysis of factor models of high dimension. Annals of Statistics. 2012;40:436–465. [Google Scholar]

- Bai J, Ng S. Determining the number of factors in approximate factor models. Econometrica. 2002;70:191–221. [Google Scholar]

- Bai J, Ng S. Principal components estimation and identification of the factors. Journal of Econometrics. 2013;176:18–29. [Google Scholar]

- Bickel P, Levina E. Covariance regularization by thresholding. Annals of Statistics. 2008;36:2577–2604. [Google Scholar]

- Breitung, Pigorsch A canonical correlation approach for selecting the number of dynamic factors. Oxford Bulletin of Economics and Statistics. 2009;75:23–36. [Google Scholar]

- Breitung, Tenhofen Gls estimation of dynamic factor models. Journal of the American Statistical Association. 2011;106:1150–1166. [Google Scholar]

- Brillinger DR. Time series: data analysis and theory. Vol. 36. Siam; 1981. [Google Scholar]

- Cai TT, Ma Z, Wu Y. Sparse pca: Optimal rates and adaptive estimation. The Annals of Statistics. 2013;41:3074–3110. [Google Scholar]

- Candès EJ, Recht B. Exact matrix completion via convex optimization. Foundations of Computational Mathematics. 2009;9:717–772. [Google Scholar]

- Chen X. Handbook of Econometrics, North Holland. 2007. Large sample sieve estimation of semi-nonparametric models; p. 76. [Google Scholar]

- Connor G, Linton O. Semiparametric estimation of a characteristic-based factor model of stock returns. Journal of Empirical Finance. 2007;14:694–717. [Google Scholar]

- Connor G, Matthias H, Linton O. Efficient semiparametric estimation of the fama-french model and extensions. Econometrica. 2012;80:713–754. [Google Scholar]

- Desai KH, Storey JD. Cross-dimensional inference of dependent high-dimensional data. Journal of the American Statistical Association. 2012;107:135–151. doi: 10.1080/01621459.2011.645777. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Efron B. Correlated z-values and the accuracy of large-scale statistical estimates. Journal of the American Statistical Association. 2010;105:1042–1055. doi: 10.1198/jasa.2010.tm09129. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Fan J, Han X, Gu W. Estimating false discovery proportion under arbitrary covariance dependence (with discussion) Journal of the American Statistical Association. 2012;107:1019–1035. doi: 10.1080/01621459.2012.720478. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Fan J, Liao Y, Mincheva M. Large covariance estimation by thresholding principal orthogonal complements (with discussion) Journal of the Royal Statistical Society, Series B. 2013;75:603–680. doi: 10.1111/rssb.12016. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Fan J, Liao Y, Shi X. Risks of large portfolios. Journal of Econometrics. 2015a;186:367–387. doi: 10.1016/j.jeconom.2015.02.015. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Fan J, Liao Y, Wang W. Supplementary appendix to the paper “Projected principal component analysis in factor models”. 2015b. doi: 10.1214/15-AOS1364.

- Forni M, Hallin M, Lippi M, Reichlin L. The generalized dynamic-factor model: Identification and estimation. Review of Economics and Statistics. 2000;82:540–554.

- Forni M, Hallin M, Lippi M, Zaffaroni P. Dynamic factor models with infinite-dimensional factor spaces: One-sided representations. Journal of Econometrics. 2015;185:359–371.

- Forni M, Lippi M. The generalized dynamic factor model: representation theory. Econometric Theory. 2001;17:1113–1141.

- Friguet C, Kloareg M, Causeur D. A factor model approach to multiple testing under dependence. Journal of the American Statistical Association. 2009;104:1406–1415.

- Hallin M, Liška R. Determining the number of factors in the general dynamic factor model. Journal of the American Statistical Association. 2007;102:603–617.

- Johnstone IM. On the distribution of the largest eigenvalue in principal components analysis. Annals of Statistics. 2001;29:295–327.

- Jung S, Marron J. PCA consistency in high dimension, low sample size context. Annals of Statistics. 2009;37:4104–4130.

- Koltchinskii V, Lounici K, Tsybakov AB. Nuclear-norm penalization and optimal rates for noisy low-rank matrix completion. The Annals of Statistics. 2011;39:2302–2329.

- Lam C, Yao Q. Factor modeling for high dimensional time-series: inference for the number of factors. Annals of Statistics. 2012;40:694–726.

- Leek JT, Storey JD. A general framework for multiple testing dependence. Proceedings of the National Academy of Sciences. 2008;105:18718–18723. doi: 10.1073/pnas.0808709105.

- Li G, Yang D, Nobel AB, Shen H. Supervised singular value decomposition and its asymptotic properties. Journal of Multivariate Analysis. 2015.

- Lorentz G. Approximation of functions. 2nd ed. Rhode Island: American Mathematical Society; 1986.

- Ma Z. Sparse principal component analysis and iterative thresholding. The Annals of Statistics. 2013;41:772–801.

- Negahban S, Wainwright MJ. Estimation of (near) low-rank matrices with noise and high-dimensional scaling. The Annals of Statistics. 2011;39:1069–1097.

- Newey W, West K. A simple, positive semi-definite, heteroskedasticity and autocorrelation consistent covariance matrix. Econometrica. 1987;55:703–708.

- Park B, Mammen E, Härdle W, Borak S. Time series modelling with semiparametric factor dynamics. Journal of the American Statistical Association. 2009;104:284–298.

- Paul D. Asymptotics of sample eigenstructure for a large dimensional spiked covariance model. Statistica Sinica. 2007;17:1617–1642.

- Shen D, Shen H, Marron J. Consistency of sparse PCA in high dimension, low sample size contexts. Journal of Multivariate Analysis. 2013a;115:317–333.

- Shen D, Shen H, Zhu H, Marron J. Surprising asymptotic conical structure in critical sample eigen-directions. Tech. rep. University of North Carolina; 2013b.

- Stock J, Watson M. Forecasting using principal components from a large number of predictors. Journal of the American Statistical Association. 2002;97:1167–1179.

- Vershynin R. Introduction to the non-asymptotic analysis of random matrices. arXiv preprint arXiv:1011.3027. 2010.