Abstract

Recent studies suggest common neural substrates involved in verbal and visual working memory (WM), interpreted as reflecting shared attention-based, short-term retention mechanisms. We used a machine-learning approach to determine more directly the extent to which common neural patterns characterize retention in verbal WM and visual WM. Verbal WM was assessed via a standard delayed probe recognition task for letter sequences of variable length. Visual WM was assessed via a visual array WM task involving the maintenance of variable amounts of visual information in the focus of attention. We trained a classifier to distinguish neural activation patterns associated with high- and low-visual WM load and tested the ability of this classifier to predict verbal WM load (high–low) from their associated neural activation patterns, and vice versa. We observed significant between-task prediction of load effects during WM maintenance, in posterior parietal and superior frontal regions of the dorsal attention network; in contrast, between-task prediction in sensory processing cortices was restricted to the encoding stage. Furthermore, between-task prediction of load effects was strongest in those participants presenting the highest capacity for the visual WM task. This study provides novel evidence for common, attention-based neural patterns supporting verbal and visual WM.

Keywords: attention, fMRI, intraparietal sulcus, multivariate voxel pattern analysis, verbal, visual, working memory

Introduction

A number of studies have highlighted a common involvement of frontoparietal networks during retention of verbal and visual information in working memory (WM) tasks. These common networks have been observed both for overall activation levels during verbal and visual WM tasks (e.g., Nystrom et al. 2000; Rämä et al. 2001; Hautzel et al. 2002; Brahmbhatt et al. 2008; Lycke et al. 2008; Majerus et al. 2010) and for WM load effects. WM load effects, obtained by comparing high-load with low-load retention conditions, have been considered a key index of WM storage capacity (Ravizza et al. 2004; Todd and Marois 2004). Studies exploring load effects in WM have shown that the posterior parietal cortex and intraparietal sulcus (IPS) respond to WM load in both verbal and visual WM tasks, whereas activation levels within sensory cortices do not appear to be sensitive to WM load (Ravizza et al. 2004; Todd and Marois 2004; Todd et al. 2005). This has led to the proposal that common, attention-based principles support retention of information in verbal and visual WM. In many current theoretical accounts of WM, attentional mechanisms such as attentional focalization and selection are considered central to efficient WM performance, allowing temporary representations of WM content to remain active and in the focus of attention (e.g., Cowan 1995; Fuster 1999; Lavie 2005; Gazzaley and Nobre 2012). Cowan (1995) argued that limitations in the scope of attention, defined as the amount of information that can be consciously attended at one time, determine WM capacity. The present study examines the common nature of these presumably attention-based cortical networks in verbal and visual WM tasks, using multivariate analysis techniques to determine to what extent neural patterns associated with WM load not only overlap but can actually predict WM load across WM modalities.

There is increasing, albeit indirect, evidence for shared behavioral and neural mechanisms involved in verbal WM, visual WM, and attention. Behaviorally, verbal and visual WM tasks with stimuli designed to share as few features as possible (spatial visual arrays and word-voice pairings) still show tradeoffs between modalities: having to retain stimulus sets in both modalities reduces performance in each of them compared with unimodal memory maintenance (Saults and Cowan 2007), and the same is true of nonverbal acoustic and visual tasks (Morey et al. 2011). When 2 stimulus sets are to be retained, there is an initial processing phase in which encoding of materials into WM is vulnerable to feature similarity between the sets, followed by a WM maintenance phase in which inter-set similarity has little or no effect; during WM maintenance, however, there is still a tradeoff between the sets compared with control conditions in which 1 set can be ignored (Cowan and Morey 2007). At least the WM maintenance phase therefore appears to fit the profile of an attention-demanding process. For verbal materials, this process can be complemented by a non-attention-demanding process, covert rehearsal (Camos et al. 2011), but rehearsal does not appear to come into play in the retention of spatial arrays of visual objects (Morey and Cowan 2004), which must therefore depend on attention during WM maintenance.

At the neural level, the dorsal attention network, which is involved in task-related attention and encompasses the IPS and the superior frontal cortex, shows increasing activation as a function of verbal and visual WM load, leveling off when WM capacity is reached (Todd and Marois 2004; Xu and Chun 2006); at the same time, the ventral attention network, which is involved in stimulus-driven attention and encompasses the temporo-parietal junction and the orbito-frontal cortex (Corbetta and Shulman 2002; Asplund et al. 2010), is deactivated as a function of verbal and visual WM load (Todd et al. 2005; Majerus et al. 2012). This suggests that attentional networks compete in the context of verbal and visual WM (Johnston et al. 2012; Green and Soto 2014). Further indirect evidence stems from studies investigating modality-independent WM networks. These studies showed that frontoparietal networks centered on the left IPS are activated across different WM task modalities and respond to WM load in verbal, visual, and auditory conditions (Brahmbhatt et al. 2008; Majerus et al. 2010; Chein et al. 2011; Cowan et al. 2011). Neural patterns in the parietal cortex have also been shown to distinguish between different WM task instructions (Riggall and Postle 2012). A similar observation has been made outside the WM domain, where changes in task instruction during simple reasoning tests correlated with activation patterns in the IPS and dorsolateral prefrontal cortex (Dumontheil et al. 2011). These data suggest that the parietal cortex plays a general role in task control across different WM modalities and cognitive domains, a role that can be defined, at the lowest level of control, as attentional focalization on task-relevant information (Cowan 1995; Gazzaley and Nobre 2012; Duncan 2013; Fedorenko et al. 2013). Finally, the IPS has also been highlighted as a hub of attention in perceptual tasks that do not involve WM (e.g., Anderson et al. 2010).

This study aims to provide more direct evidence for shared neural mechanisms in verbal and visual WM and for the role of these mechanisms in task-related attentional focalization during WM. We used multivariate voxel pattern analyses (MVPA) based on machine-learning models to assess the degree of neural pattern concordance between load effects in verbal and visual WM tasks; these methods are more sensitive than standard univariate methods, as they allow us not only to determine the functional overlap of neural activation patterns in different task conditions but also to assess the informative value of this overlap for between-task prediction of condition effects. We focused on between-modality predictions of WM load effects, since WM load effects have been considered a core index of short-term retention capacity (Ravizza et al. 2004; Todd and Marois 2004). Moreover, univariate analyses have shown that WM load effects recruit the dorsal attention network but not sensory cortices. MVPA will allow us to confirm or disconfirm these findings by determining the extent to which the neural patterns associated with WM load effects in the verbal and visual modalities actually contain sufficiently similar information for cross-modal prediction of load effects. If this is not the case, then any overlap of activation levels for verbal and visual WM load may be merely coincidental, with no systematic correspondence between verbal and visual WM load in neural activation levels, pattern distributions, or underlying cognitive processes. For example, outside the WM domain, the IPS shows increased activation in both number and letter comparison tasks, but subsequent MVPA revealed that this overlap was not associated with a systematic similarity of activation patterns between the 2 tasks (Fias et al. 2007; Zorzi et al. 2011).
We will also be able to assess whether regions that do not show common load effects in univariate analyses, including regions in sensory processing cortices, can nevertheless predict load effects using MVPA. Such cross-modal predictions in sensory cortices may be expected particularly at the WM encoding stage, when high- and low-load conditions in both modalities differ in the number of stimuli that are physically present and need to be processed. Note that, given the likely differences in neural activation levels between high- and low-load conditions, cross-modal predictions will be based on the informative value of both differences in pattern activation level and differences in pattern activation distribution; importantly, unlike with univariate methods, neural patterns are compared in a data-driven way. Finally, to rule out that cross-modal predictions merely reflect shared differences in task difficulty between high- and low-load WM conditions, we conducted brain–behavior association analyses. If discrimination and between-task prediction of load effects merely reflect differences in task difficulty between WM loads, then participants with the lowest WM capacity should show the highest discrimination and between-task prediction of load effects, since these participants will be most sensitive to difficulty levels; if, on the other hand, increased cross-modal prediction of load effects reflects stronger recruitment of shared cognitive processes supporting verbal and visual WM performance, then between-task prediction of load effects should be strongest in participants with high WM capacity.

Verbal WM load was assessed using a delayed probe recognition task for letter sequences, contrasting short-term maintenance under low (2 letters), medium (4 letters), and high (6 letters) WM load conditions. This type of task dates back to Sternberg (1966) and is one of the most commonly used tasks for assessing verbal WM in both the behavioral and neuroimaging literature (e.g., Henson et al. 2000; Nystrom et al. 2000; Nee and Jonides 2008, 2011; Majerus et al. 2012). An important body of evidence has shown that even with visual presentation, letter sequences are processed using verbal codes. This is indicated by strong and robust psycholinguistic effects in WM performance for letter sequences, such as the phonological similarity effect: recall of letter sequences is poorer for phonologically similar but visually dissimilar letters than for phonologically and visually dissimilar letters. When visual similarity is manipulated while phonological similarity is held constant, effects of visual similarity can arise, but mainly when memory for visual features is explicitly stressed, such as when letters are presented in mixed lower/upper case and case needs to be maintained (Conrad 1964; Conrad and Hull 1964; Baddeley 1986; Logie et al. 2000). The visual WM task was a commonly used visual array WM task in which arrays of colored squares are presented very briefly, preventing verbal rehearsal, grouping, or other strategic processes. This task depends largely on attentional focalization for further conscious processing and maintenance of stimuli, shows the same set-size relation as purely perceptual selective attention tasks, and shows robust performance tradeoffs with perceptual selective attention tasks (e.g., Luck and Vogel 1997; Todd and Marois 2004; Cowan et al. 2005, 2006; Xu and Chun 2006; Stevanovski and Jolicoeur 2007; Cowan 2001; Anderson et al. 2013; Morey and Bieler 2013).
Verbalization is very unlikely given the very brief presentation time (250 ms), whereas naming the color of a single square typically takes about 500 ms (Stroop 1935). Morey and Cowan (2004) also showed that a verbal rehearsal suppression task had no impact on performance in this type of visual array WM task, indicating that verbal naming strategies are not used in that task. In the original version of this task, participants are instructed to focus their attention on arrays of 2, 4, or 6 colored squares presented for a very brief time, followed by a probe array that is either identical to the initial array or differs by a color change of a single square. This task yields the k estimate of the capacity of the scope of attention, derived from the proportions of correct positive and negative probe decisions; k typically reaches an asymptote between 3 and 5. On average, an adult can consciously hold active no more than about 4 items in the focus of attention, with some individual variation (Cowan 2001). In the present study, this procedure was further adapted to remove any decision component and to reflect attentional focalization and maintenance as directly as possible: the classifier was trained on trials containing arrays of variable set size that were not followed by a probe recognition array or a response.
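The k estimate described above can be illustrated with a short Python sketch. It is a minimal illustration that assumes the single-probe formula stated in the Behavioral Analyses section, k = N × (pHits + pRejections − 1); the function names and the example proportions are hypothetical and are not data from this study.

```python
def cowan_k(set_size: int, p_hits: float, p_correct_rejections: float) -> float:
    """Capacity estimate k = N * (pHits + pRejections - 1) for one load condition.

    Because the false-alarm rate equals 1 - pRejections, this is the same as
    N * (pHits - pFalseAlarms).
    """
    for p in (p_hits, p_correct_rejections):
        if not 0.0 <= p <= 1.0:
            raise ValueError("proportions must lie in [0, 1]")
    return set_size * (p_hits + p_correct_rejections - 1.0)


def cowan_k_from_counts(set_size: int, n_hits: int, n_change_trials: int,
                        n_correct_rejections: int, n_no_change_trials: int) -> float:
    """Same estimate computed from raw trial counts of a change-detection task."""
    return cowan_k(set_size,
                   n_hits / n_change_trials,
                   n_correct_rejections / n_no_change_trials)


if __name__ == "__main__":
    # Hypothetical proportions, for illustration only.
    for n, hits, rejections in [(2, 0.96, 0.96), (4, 0.90, 0.85), (6, 0.80, 0.85)]:
        print(f"N = {n}: k = {cowan_k(n, hits, rejections):.2f}")
```

Chance performance (pHits + pRejections = 1) gives k = 0 and perfect performance gives k = N, which is why k levels off once the set size exceeds a participant's capacity.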

Materials and Methods

Participants

Valid data were obtained for 21 right-handed native French-speaking young adults (9 male; mean age: 22.19 years; age range: 18–33) recruited from the university community, with no history of psychological or neurological disorders. The data of 2 participants had to be discarded due to scanner artifacts; 2 additional participants interrupted the study before complete data acquisition. The study was approved by the Ethics Committee of the Faculty of Medicine of the University of Liège and was performed in accordance with the ethical standards described in the Declaration of Helsinki (1964). All participants gave their written informed consent prior to their inclusion in the study.

Task Description

A practice session outside the MR environment, prior to the start of the experiment, familiarized participants with the specific task requirements and included at least 9 practice trials for the visual array WM task and 12 practice trials for the verbal WM task, both described below. Following practice, the visual array WM task was presented in a single run and always preceded the verbal WM task (also presented in a single run). This order was chosen to avoid carry-over of maintenance strategies between tasks, as such strategies are more likely to be implemented in the verbal WM task, where stimuli were presented more slowly and the maintenance interval was much longer. The visual array WM task was constructed to capture non-strategic, attention-based maintenance mechanisms via brief presentation and maintenance durations; this objective is more likely to be achieved if no other, strategically more demanding task precedes the visual array WM task.

Visual Array WM Task

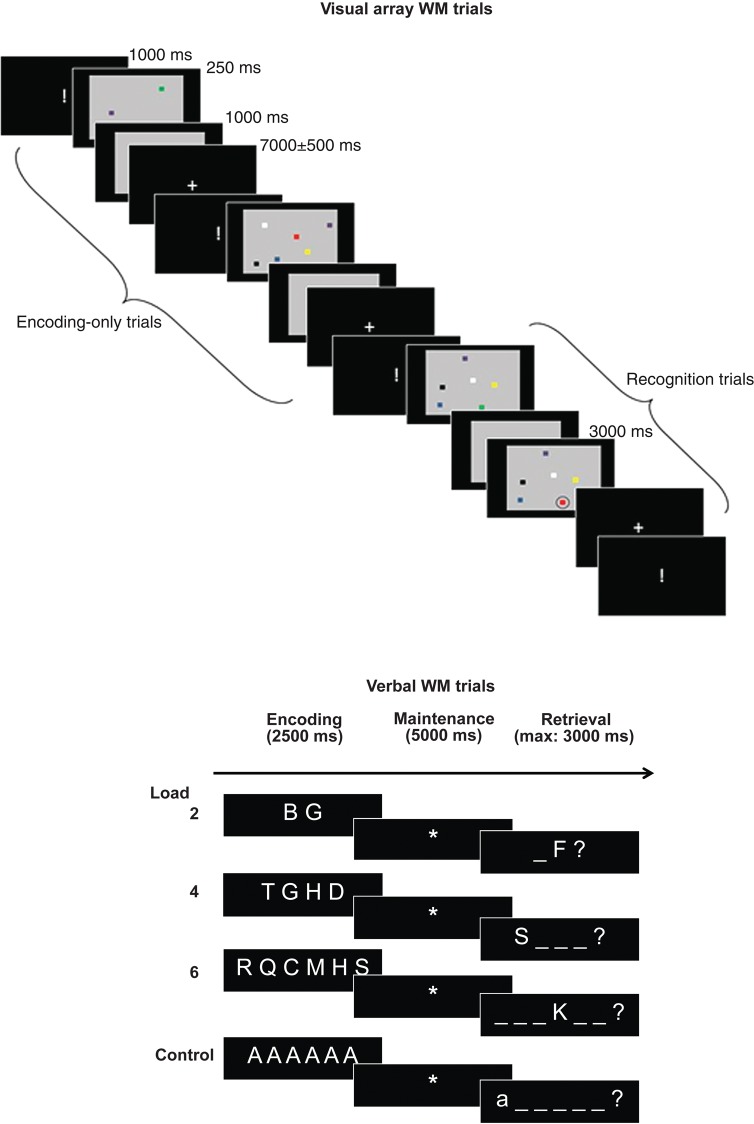

This task, illustrated in Figure 1, was designed to probe neural correlates of the ability to focus attention on simultaneously presented visual stimuli under 3 attentional load conditions. Arrays containing 2, 4, or 6 colored squares were presented for a very brief duration (250 ms) in order to prevent strategic control processes or verbalization. A minority of arrays were followed by probe arrays. Participants were instructed to maintain attention on the array items and were informed that, for a minority of trials, recognition of the arrays would also be tested. The trials used for classifier training, however, did not include these recognition trials, so that the classifier was trained only on array encoding/maintenance events; the recognition trials were administered to ensure that participants maintained their focus of attention on the arrays over the entire task duration. In both kinds of trials, the arrays to be studied were presented on a gray background, which remained on the screen for a further 1000 ms after presentation of the array. For each array, the colors of the squares were sampled without replacement from a set of 7 different colors. The inter-trial interval was of variable duration, following a normal distribution with a mean of 7000 ms and a standard deviation of 500 ms. During the inter-trial interval, a fixation cross was displayed on the screen. Each upcoming trial was announced by the presentation of the sign “!” for 1000 ms. There were 40 encoding-only trials for each load condition.
A small number of additional recognition trials (15 per condition) were also administered, in which the array maintained in the focus of attention was followed by a recognition display showing the target array with 1 circled square for a maximum of 3000 ms; participants had to detect a change in color of the circled square (the color changed in at least 53% of recognition trials, always to a color that had not been present in the array) by pressing the button under their middle finger for “yes” (i.e., there was a change) and the button under their index finger for “no.” The probe display was cleared after the participant's response. The timing was identical for the encoding-only and recognition trials up to the point at which the probe array was inserted on recognition trials. As already noted, recognition trials were not included in classifier training. The different conditions were administered in pseudo-random order.
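The trial structure described above can be made concrete with a small schedule generator. This is a hypothetical sketch, not the stimulus script used in the study: the color names, the function names, the allocation of exactly 8 of 15 recognition trials per load to change trials (about 53%), and the use of a plain shuffle in place of the unspecified pseudo-randomization are all assumptions.

```python
import random

# Hypothetical 7-color palette; the actual colors are not specified in the text.
COLORS = ["red", "green", "blue", "yellow", "magenta", "cyan", "white"]
LOADS = (2, 4, 6)


def make_array(load, rng):
    """Colors of one array, sampled without replacement from the palette."""
    return rng.sample(COLORS, load)


def make_probe(array, change, rng):
    """Circle one square; on change trials give it a color absent from the array."""
    idx = rng.randrange(len(array))
    probe = list(array)
    if change:
        probe[idx] = rng.choice([c for c in COLORS if c not in array])
    return probe, idx


def build_visual_schedule(n_encoding=40, n_recognition=15, p_change=0.53, seed=0):
    rng = random.Random(seed)
    n_change = round(p_change * n_recognition)  # assumed allocation of change trials
    trials = []
    for load in LOADS:
        trials += [{"load": load, "kind": "encoding_only"} for _ in range(n_encoding)]
        trials += [{"load": load, "kind": "recognition", "change": i < n_change}
                   for i in range(n_recognition)]
    rng.shuffle(trials)  # plain shuffle standing in for the pseudo-random order
    for trial in trials:
        trial["array"] = make_array(trial["load"], rng)
        trial["iti_ms"] = rng.gauss(7000.0, 500.0)  # mean 7000 ms, SD 500 ms
        if trial["kind"] == "recognition":
            trial["probe"], trial["probed_index"] = make_probe(
                trial["array"], trial["change"], rng)
    return trials


schedule = build_visual_schedule()
# Only encoding-only trials would enter classifier training.
training_trials = [t for t in schedule if t["kind"] == "encoding_only"]
```

With 7 colors and a set size of 6, exactly one color remains available for a change probe, so the "new color" constraint is always satisfiable.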

Figure 1.

Schematic drawing of the visual array and verbal WM tasks. Note that for the visual array WM task, only the “encoding-only” trials were used for classifier training.

Verbal WM Task

This task assessed load effects in WM by presenting sequences of 2, 4, or 6 consonants sampled without replacement from a pool of 16 different consonants. The letter sequences were presented horizontally in the center of the screen for 2500 ms. They were then replaced by the sign “*”, indicating that the letter sequence had to be maintained in WM for 5000 ms. After the maintenance interval, a probe letter was shown in 1 of the 2, 4, or 6 possible serial positions, indicated by horizontal bars in the center of the screen (see Fig. 1). Participants had to decide within 3000 ms whether the probe letter matched the letter in the indicated serial position of the memory list by pressing the button under their middle finger for “yes” and the button under their index finger for “no.” On 50% of trials, the probe was a mismatch: either a letter not presented in the memory list, or a letter from the memory list shown in a serial position other than its own. The probe display was cleared after the participant's response. There were 42 trials for each WM load condition. Finally, a control condition (20 trials) controlled for letter identification, motor response, and decision processes; it consisted of a sequence containing the vowel A repeated 2, 4, or 6 times, followed by a 5000-ms delay period indicated by the sign “*” and a response display showing the same letter in upper or lower case; participants had to decide whether the case was the same as in the target list by pressing the button under their middle finger for “yes” and the button under their index finger for “no.” For all conditions, the sign “!” appeared in the center of the screen for 1000 ms before each trial, informing the participant that a new trial was about to start.
The inter-trial interval was of variable duration, drawn from a Gaussian distribution (3500 ± 250 ms). The different conditions were administered in pseudo-random order.
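The probe logic of the verbal WM task can be sketched as follows. This is an illustrative sketch only: the 16-letter consonant pool is hypothetical (the actual pool is not listed), the even split of mismatching probes between "new letter" and "wrong position" is an assumption, reading 250 ms as the standard deviation of the inter-trial interval is an assumption, and the control condition is omitted.

```python
import random

# Hypothetical 16-consonant pool; the actual pool is not listed in the text.
CONSONANTS = list("BCDFGHJKLMNPRSTV")


def make_verbal_trial(load, probe_type, rng):
    """One delayed-probe trial; probe_type is 'match', 'new_letter' or 'wrong_position'."""
    sequence = rng.sample(CONSONANTS, load)   # sampled without replacement
    position = rng.randrange(load)            # probed serial position (0-based)
    if probe_type == "match":
        probe = sequence[position]
    elif probe_type == "new_letter":          # letter absent from the memory list
        probe = rng.choice([c for c in CONSONANTS if c not in sequence])
    elif probe_type == "wrong_position":      # list letter belonging to another position
        probe = rng.choice([c for i, c in enumerate(sequence) if i != position])
    else:
        raise ValueError(f"unknown probe type: {probe_type}")
    return {"load": load, "sequence": sequence, "probe_position": position,
            "probe": probe, "answer": "yes" if probe_type == "match" else "no"}


def build_verbal_schedule(n_per_load=42, seed=0):
    rng = random.Random(seed)
    trials = []
    for load in (2, 4, 6):
        n_mismatch = n_per_load // 2                      # 50% mismatching probes
        types = (["match"] * (n_per_load - n_mismatch)
                 + ["new_letter"] * (n_mismatch // 2)     # assumed even split
                 + ["wrong_position"] * (n_mismatch - n_mismatch // 2))
        trials += [make_verbal_trial(load, t, rng) for t in types]
    rng.shuffle(trials)
    for trial in trials:
        trial["iti_ms"] = rng.gauss(3500.0, 250.0)  # assumes 250 ms is the SD
    return trials


verbal_schedule = build_verbal_schedule()
```

Because letters within a sequence are unique, a "wrong position" probe can never accidentally match the letter at the probed position.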

MRI Acquisition

The experiments were carried out on a 3T head-only scanner (Magnetom Allegra) operated with a standard transmit–receive quadrature head coil. Functional MRI data were acquired using a T2*-weighted gradient-echo echo-planar imaging (GE-EPI) sequence with the following parameters: TR = 2040 ms, TE = 30 ms, FoV = 192 × 192 mm², 64 × 64 matrix, 34 axial slices with 3 mm thickness and a 25% inter-slice gap to cover most of the brain. The 3 initial volumes were discarded to avoid T1 saturation effects. Field maps were generated from a double-echo gradient-recalled sequence (TR = 517 ms, TE = 4.92 and 7.38 ms, FoV = 230 × 230 mm², 64 × 64 matrix, 34 transverse slices with 3 mm thickness and 25% gap, flip angle = 90°, bandwidth = 260 Hz/pixel) and used to correct the echo-planar images for geometric distortion due to field inhomogeneities. A high-resolution T1-weighted MP-RAGE image was acquired for anatomical reference (TR = 1960 ms, TE = 4.4 ms, TI = 1100 ms, FoV = 230 × 173 mm², matrix size 256 × 192 × 176, voxel size 0.9 × 0.9 × 0.9 mm³). Between 699 and 960 functional volumes were obtained for the visual array WM task (the lower value reflects 1 participant for whom the scanner stopped prematurely after administration of 74% of trials), and between 951 and 1009 functional volumes for the verbal WM task. Head movement was minimized by restraining the subject's head with a vacuum cushion. Stimuli were displayed on a screen positioned at the rear of the scanner, which the subject could comfortably see through a mirror mounted on the standard head coil.
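The acquisition geometry implied by these parameters can be worked out with a few lines of arithmetic. The sketch below derives an in-plane voxel size of 3 mm, a slice spacing of 3.75 mm, a slab of roughly 127 mm, and run lengths of roughly 24 to 34 min; it assumes the reported volume counts can simply be multiplied by the TR (whether they include the 3 discarded volumes is not stated).

```python
# Acquisition parameters as reported for the GE-EPI sequence.
TR_S = 2.040
FOV_MM = 192.0
MATRIX = 64
N_SLICES = 34
SLICE_MM = 3.0
GAP_FRACTION = 0.25  # 25% inter-slice gap

in_plane_mm = FOV_MM / MATRIX                  # in-plane voxel size
gap_mm = SLICE_MM * GAP_FRACTION               # gap between adjacent slices
slice_spacing_mm = SLICE_MM + gap_mm           # centre-to-centre distance
coverage_mm = N_SLICES * SLICE_MM + (N_SLICES - 1) * gap_mm  # outer edge to outer edge


def run_minutes(n_volumes: int, tr_s: float = TR_S) -> float:
    """Approximate run length implied by a number of volumes."""
    return n_volumes * tr_s / 60.0


if __name__ == "__main__":
    print(f"in-plane voxel: {in_plane_mm:.2f} mm; slice spacing: {slice_spacing_mm:.2f} mm")
    print(f"slab coverage: {coverage_mm:.2f} mm")
    for label, n in [("visual, min", 699), ("visual, max", 960),
                     ("verbal, min", 951), ("verbal, max", 1009)]:
        print(f"{label}: {n} volumes ~ {run_minutes(n):.1f} min")
```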

fMRI Analyses

Image Preprocessing

Data were preprocessed and analyzed using SPM8 software (Wellcome Department of Imaging Neuroscience, http://www.fil.ion.ucl.ac.uk/spm) implemented in MATLAB (Mathworks, Inc.) for univariate analyses. EPI time series were corrected for motion and distortion with “Realign and Unwarp” (Andersson et al. 2001) using the generated field map together with the FieldMap toolbox (Hutton et al. 2002) provided in SPM8. A mean realigned functional image was then calculated by averaging all the realigned and unwarped functional scans, and the structural T1-image was coregistered to this mean functional image (rigid body transformation optimized to maximize the normalized mutual information between the 2 images). The mapping from subject to MNI space was estimated from the structural image with the “unified segmentation” approach. The warping parameters were then separately applied to the functional and structural images to produce normalized images of resolution 2 × 2 × 2 mm³ and 1 × 1 × 1 mm³, respectively. The scans were screened for motion artifacts, and time series with motion peaks exceeding 3 mm (translation) or 3° (rotation) were discarded. Finally, the warped functional images were spatially smoothed with a Gaussian kernel of 4-mm full-width at half maximum (Schrouff et al. 2012).
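Two preprocessing details lend themselves to a short numerical sketch: the 3-mm/3° motion criterion and the relation between a kernel's FWHM and its standard deviation. The sketch assumes that "motion peaks" means absolute displacement relative to the realignment reference, read from a six-column parameter file laid out like SPM's `rp_*.txt` (three translations in mm, then three rotations in radians); a frame-to-frame criterion would be an equally plausible reading. The function names and the file name in the comment are hypothetical.

```python
import numpy as np


def exceeds_motion_limits(rp, max_trans_mm=3.0, max_rot_deg=3.0):
    """True if any scan's realignment parameters exceed the limits.

    rp: (n_scans, 6) array with three translations (mm) followed by three
    rotations (radians), each relative to the realignment reference scan.
    """
    rp = np.asarray(rp, dtype=float)
    if rp.ndim != 2 or rp.shape[1] != 6:
        raise ValueError("expected an (n_scans, 6) array")
    translation_mm = np.abs(rp[:, :3])
    rotation_deg = np.degrees(np.abs(rp[:, 3:]))
    return bool((translation_mm > max_trans_mm).any()
                or (rotation_deg > max_rot_deg).any())


def fwhm_to_sigma(fwhm_mm):
    """Standard deviation of a Gaussian kernel with the given FWHM."""
    return fwhm_mm / (2.0 * np.sqrt(2.0 * np.log(2.0)))


def combined_fwhm(*fwhms_mm):
    """Successive Gaussian smoothing: FWHMs add in quadrature."""
    return float(np.sqrt(np.sum(np.square(fwhms_mm))))


# Example usage (hypothetical file name):
# rp = np.loadtxt("rp_run1.txt"); discard = exceeds_motion_limits(rp)
```

A 4-mm FWHM kernel corresponds to a standard deviation of about 1.7 mm, and images smoothed at 4 mm and then by a further 6 mm for the group-level univariate analyses end up with an effective kernel of about 7.2 mm.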

Univariate Analyses

Univariate analyses first assessed brain activation levels associated with visual and verbal WM load. For each subject, brain responses were estimated at each voxel using a general linear model with event-related and epoch-related regressors. For the visual array WM task, 3 regressors modeled the encoding-only trials (1 per load) as zero-duration events; 3 additional regressors modeled the recognition trials in order to account for variance related to the comparison and response processes specific to these trials. For the verbal WM task, the design matrix included 3 regressors modeling sustained activity over the entire verbal WM trial as a function of verbal WM load; these epoch-related regressors extended from the onset of the encoding period to the end of the recognition period; the sensory and motor control condition was modeled implicitly. For each task, boxcar functions representing each regressor were convolved with the canonical hemodynamic response function. The design matrices also included the realignment parameters to account for any residual movement-related effects. A high-pass filter with a cutoff period of 128 s was applied to remove low-frequency drifts from the time series. Serial autocorrelations were estimated with a restricted maximum likelihood algorithm using a first-order autoregressive model (plus white noise). For each design matrix, linear contrasts were defined for the 3 target load conditions. The resulting set of voxel values constituted a map of t statistics [SPM{T}]. For each task, these contrast images, after additional smoothing with a 6-mm FWHM kernel, were then entered into a second-level random-effects ANOVA to identify load-responsive brain areas. The additional smoothing was applied to reduce noise due to inter-subject anatomical variability and to reach a more conventional filter level for group-based univariate analyses (Mikl et al. 2008).
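The construction of the first-level regressors can be sketched in Python. This is a simplified stand-in for what SPM8 does internally, not its implementation: the double-gamma shape parameters (response delay 6 s, undershoot delay 16 s, undershoot ratio 1/6) follow the commonly used SPM-style defaults, the convolved signal is sampled at the start of each scan, and the cosine drift basis follows, to our understanding, the convention of SPM's DCT high-pass filter. Onsets and durations in the example are hypothetical.

```python
import numpy as np
from scipy.stats import gamma


def canonical_hrf(dt, length_s=32.0):
    """Double-gamma HRF sampled every dt seconds, normalized to unit sum."""
    t = np.arange(0.0, length_s + dt, dt)
    h = gamma.pdf(t, 6) - gamma.pdf(t, 16) / 6.0
    return h / h.sum()


def make_regressor(onsets_s, durations_s, n_scans, tr_s, oversample=16):
    """Convolve a stick (duration 0) or boxcar (duration > 0) input with the HRF.

    The input is built on a fine grid (tr_s / oversample); the convolved signal
    is sampled at the start of each scan (a simplification).
    """
    dt = tr_s / oversample
    n_fine = n_scans * oversample
    u = np.zeros(n_fine)
    for onset, duration in zip(onsets_s, durations_s):
        start = int(round(onset / dt))
        stop = max(start + 1, int(round((onset + duration) / dt)))
        u[start:min(stop, n_fine)] += 1.0
    x = np.convolve(u, canonical_hrf(dt))[:n_fine]
    return x[::oversample]


def dct_highpass_basis(n_scans, tr_s, cutoff_s=128.0):
    """Cosine drift regressors with periods longer than about cutoff_s (no constant)."""
    n_basis = int(np.fix(2.0 * n_scans * tr_s / cutoff_s + 1.0))
    k = np.arange(n_scans)
    cols = [np.sqrt(2.0 / n_scans) * np.cos(np.pi * (2 * k + 1) * j / (2 * n_scans))
            for j in range(1, n_basis)]
    return np.column_stack(cols) if cols else np.zeros((n_scans, 0))


TR = 2.04
N_SCANS = 960
# Hypothetical onsets: zero-duration visual events and one verbal epoch.
visual_load2 = make_regressor([10.0, 40.0], [0.0, 0.0], N_SCANS, TR)
verbal_load6 = make_regressor([100.0], [10.5], N_SCANS, TR)
drift = dct_highpass_basis(N_SCANS, TR)
```

For a 960-volume run at TR = 2.04 s, the 128-s cutoff yields 30 drift regressors.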

Multivariate Analyses

Multivariate analyses were conducted using PRoNTo, a pattern recognition toolbox for neuroimaging (http://www.mlnl.cs.ucl.ac.uk/pronto; Schrouff et al. 2013), to determine the similarity of voxel patterns associated with load effects in the visual array and verbal WM tasks. Using a binary support vector machine (Burges 1998), we trained a classifier to distinguish whole-brain voxel activation patterns associated with high versus low load in the preprocessed, 4-mm-smoothed functional images of the visual array WM encoding-only events, contrasting the lowest load condition (2) with 1 of the other load conditions (4 or 6). We used 2 binary classifiers rather than a single 3-class classifier because capacity limits are known to vary among subjects for this type of task, some subjects reaching their limit at load 4 and others at load 6 (Cowan 2001); a clear separation into 3 distinct classes was therefore not expected. This classifier, trained on a single, short event, was then used to predict the load condition of the preprocessed, 4-mm-smoothed functional images of the verbal WM task. The verbal WM test events were defined by successive 1-s shifts of the onsets of verbal WM events, modeled as 0-duration events. This allowed us to retain an equal number of scans (i.e., 1 scan) for the classifier training and test situations and to perform between-task classification as a function of successive events of the verbal WM task (Riggall and Postle 2012). A 1-s time window was used for the verbal WM task so that events of similarly short duration could be compared across the verbal and visual WM conditions (the duration of visual WM events was <1 s, and these events had also been modeled as 0-duration events).
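The cross-task logic can be illustrated with a minimal Python sketch. It is not PRoNTo (a MATLAB toolbox): scikit-learn's `SVC` with a linear kernel stands in for the binary support vector machine, the data are synthetic patterns with a hypothetical shared "load" direction, and mapping each time-shifted onset to the nearest scan after a 5-s HRF delay is an assumed simplification of how single test scans are selected.

```python
import numpy as np
from sklearn.svm import SVC


def scan_index(onset_s, tr_s, shift_s=0.0, hrf_delay_s=5.0):
    """Scan acquired closest to (event onset + time shift + HRF delay)."""
    return int(round((onset_s + shift_s + hrf_delay_s) / tr_s))


def cross_task_accuracy(X_train, y_train, X_test, y_test, C=1.0):
    """Train a linear SVM on one task's patterns and score it on the other task."""
    clf = SVC(kernel="linear", C=C)
    clf.fit(X_train, y_train)
    return float(np.mean(clf.predict(X_test) == y_test))


def simulate_task(rng, n_events, load_axis, signal, task_offset):
    """Synthetic single-scan patterns; label 1 (high load) adds signal * load_axis."""
    y = np.repeat([0, 1], n_events // 2)
    X = rng.normal(size=(n_events, load_axis.size)) + task_offset
    X += np.outer(y, signal * load_axis)
    return X, y


rng = np.random.default_rng(0)
n_voxels = 200
load_axis = rng.normal(size=n_voxels)  # hypothetical load pattern shared by both tasks
X_vis, y_vis = simulate_task(rng, 80, load_axis, 1.0, 0.3 * rng.normal(size=n_voxels))
X_verb, y_verb = simulate_task(rng, 80, load_axis, 1.0, 0.3 * rng.normal(size=n_voxels))

acc_vis_to_verb = cross_task_accuracy(X_vis, y_vis, X_verb, y_verb)   # visual -> verbal
acc_verb_to_vis = cross_task_accuracy(X_verb, y_verb, X_vis, y_vis)   # verbal -> visual

# With real data, the verbal test scan for a trial at shift s would be
# scans[scan_index(onset, TR, shift_s=s)], repeated for s = 0, 1, 2, ... seconds.
```

Because each task has its own baseline offset, above-chance transfer here depends only on the shared load direction, which is the property the between-task analysis is designed to detect.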
The same procedure was applied to the reverse prediction (verbal WM to visual WM), this time with the training events time-shifted as a function of successive verbal WM events, and with testing on the same, single visual array WM event. Significance of classification accuracy was assessed at the group level by comparing the distribution of classification accuracies with chance level (t-test, P < 0.05 after false discovery rate correction) and at the individual level using a permutation test (1000 permutations; P < 0.05). A standard mask removing voxels outside the brain was applied to all images, and all models included timing parameters for HRF delay (5 s) and HRF overlap (5 s), ensuring that stimuli from different categories falling within the same 5-s window were excluded (Schrouff et al. 2013). In a second stage, a region-of-interest approach was used, repeating the preceding procedures with the voxel space limited to a priori defined volumes-of-interest (see below).
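The two significance tests described above can be sketched in Python as follows. This is an illustrative sketch, not PRoNTo's implementation: the individual-level null distribution is built by shuffling training labels and retraining a linear `SVC`, the p-value uses the common (count + 1) / (n + 1) convention, the group-level test is a one-sided one-sample t-test against 0.5 (the `alternative` argument of `scipy.stats.ttest_1samp` requires SciPy 1.6 or later), and false discovery rate control uses the Benjamini-Hochberg step-up procedure. All data below are synthetic or hypothetical.

```python
import numpy as np
from scipy.stats import ttest_1samp
from sklearn.svm import SVC


def _accuracy(X_train, y_train, X_test, y_test):
    clf = SVC(kernel="linear").fit(X_train, y_train)
    return float(np.mean(clf.predict(X_test) == y_test))


def permutation_pvalue(X_train, y_train, X_test, y_test, n_perm=1000, seed=0):
    """Observed accuracy and its p-value against a null from shuffled training labels."""
    rng = np.random.default_rng(seed)
    observed = _accuracy(X_train, y_train, X_test, y_test)
    null = np.array([_accuracy(X_train, rng.permutation(y_train), X_test, y_test)
                     for _ in range(n_perm)])
    p = (np.sum(null >= observed) + 1) / (n_perm + 1)
    return observed, float(p)


def group_test(accuracies, chance=0.5):
    """One-sided one-sample t-test of subject accuracies against chance."""
    return ttest_1samp(accuracies, chance, alternative="greater")


def fdr_bh(pvals, q=0.05):
    """Benjamini-Hochberg step-up procedure; returns a boolean reject mask."""
    p = np.asarray(pvals, dtype=float)
    m = p.size
    order = np.argsort(p)
    below = p[order] <= q * np.arange(1, m + 1) / m
    reject = np.zeros(m, dtype=bool)
    if below.any():
        k = np.nonzero(below)[0].max()
        reject[order[: k + 1]] = True
    return reject


# Synthetic example: one "subject" with a shared load signal across tasks.
rng = np.random.default_rng(1)
axis = rng.normal(size=100)
y = np.repeat([0, 1], 30)
X_a = rng.normal(size=(60, 100)) + np.outer(y, axis)
X_b = rng.normal(size=(60, 100)) + np.outer(y, axis)
acc, p_subject = permutation_pvalue(X_a, y, X_b, y, n_perm=99)

# Hypothetical subject accuracies for one region or time shift.
subject_acc = np.array([0.62, 0.58, 0.71, 0.55, 0.66, 0.60, 0.64])
p_group = group_test(subject_acc).pvalue
```

In practice the group-level p-values from all regions or time shifts would be passed together to `fdr_bh` before thresholding.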

A Priori Locations of Interest

As a rule, statistical inferences for univariate analyses were performed at the voxel level at P < 0.05, corrected for multiple comparisons across the entire brain volume using Random Field Theory. In addition, we focused on a small set of a priori defined regions-of-interest that have shown interactions between attentional processing and WM in previous studies. These regions included the dorsal attention network, with the bilateral posterior IPS [−25, −64, 43; 27, −62, 38] and the bilateral superior frontal gyrus [−20, −1, 50; 26, −2, 47], as well as the ventral attention network, with the bilateral temporo-parietal junction [−46, −57, 20; 47, −57, 24] and orbito-frontal cortex [−37, 26, −8; 34, 27, −10] (Todd and Marois 2004; Todd et al. 2005; Asplund et al. 2010; Majerus et al. 2012). We also included the left anterior IPS [−43, −46, 40], which has been associated with amodal attentional control processes (Cowan et al. 2011; Majerus et al. 2012). A small volume correction was applied within a 10-mm radius sphere around each of these coordinates-of-interest.
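The 10-mm spherical volumes-of-interest can be built with a few lines of NumPy. This sketch uses the coordinates listed above but a hypothetical 2-mm voxel grid (shape and voxel-to-world affine chosen for illustration); with real data, both should be taken from the header of the normalized images. The function name and ROI labels are assumptions.

```python
import numpy as np


def sphere_mask(center_mm, radius_mm, shape, affine):
    """Voxels whose world coordinates (mm) lie within radius_mm of center_mm."""
    ijk = np.indices(shape).reshape(3, -1)
    homogeneous = np.vstack([ijk, np.ones((1, ijk.shape[1]))])
    xyz = (np.asarray(affine, dtype=float) @ homogeneous)[:3]
    dist = np.linalg.norm(xyz - np.asarray(center_mm, dtype=float)[:, None], axis=0)
    return (dist <= radius_mm).reshape(shape)


# Hypothetical 2-mm grid; take shape and affine from the image header in practice.
SHAPE = (79, 95, 79)
AFFINE = np.array([[-2.0, 0.0, 0.0, 78.0],
                   [0.0, 2.0, 0.0, -112.0],
                   [0.0, 0.0, 2.0, -70.0],
                   [0.0, 0.0, 0.0, 1.0]])

# A priori coordinates listed in the text.
ROI_CENTERS = {
    "left posterior IPS": (-25, -64, 43),
    "right posterior IPS": (27, -62, 38),
    "left superior frontal": (-20, -1, 50),
    "right superior frontal": (26, -2, 47),
    "left temporo-parietal junction": (-46, -57, 20),
    "right temporo-parietal junction": (47, -57, 24),
    "left orbito-frontal": (-37, 26, -8),
    "right orbito-frontal": (34, 27, -10),
    "left anterior IPS": (-43, -46, 40),
}

masks = {name: sphere_mask(c, 10.0, SHAPE, AFFINE) for name, c in ROI_CENTERS.items()}
```

On a 2-mm grid, a 10-mm sphere contains roughly 500 voxels (its volume, about 4189 mm³, divided by 8 mm³ per voxel), which gives a quick sanity check on the masks.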

For multivariate analyses, 10-mm radius volumes-of-interest were created around these same coordinates and used as masks for the training and test phases, allowing us to determine classification accuracy for each area of interest. To assess the selectivity of the classifications in these areas, we also targeted visual and language processing areas where no between-task predictions were expected, except for shared sensory processing of visual form information during encoding, since the verbal and visual stimuli were both presented visually. These additional volumes-of-interest included the bilateral middle occipital gyrus [−32, −79, 9; 29, −82, 8] (Pessoa et al. 2002), involved in color and basic visual form processing, which may be common to encoding of the square and letter stimuli in the visual and verbal WM conditions. They also included the left fusiform gyrus [−43, −55, −18] and left superior temporal gyrus [−58, −36, 10], involved in orthographic and phonological processing, respectively (McCandliss et al. 2003; Majerus et al. 2010), which should not be shared between the verbal and visual WM conditions during stimulus encoding.

Results

Behavioral Analyses

A first within-subjects ANOVA assessed behavioral load effects for the recognition trials of the visual array WM task. These effects were assessed using the k parameter of scope-of-attention capacity measured by this task (Cowan et al. 2005). k combines hits (i.e., correct change detections) and correct rejections (i.e., correct no-change responses) for each load condition via the formula k = N × (pHits + pRejections − 1), where N is the number of stimuli per array. An ANOVA on k showed a main effect of load, F(2, 40) = 42.03, P < 0.001, η² = 0.68 (load 2: 1.86 ± 0.18; load 4: 3.17 ± 0.76; load 6: 4.38 ± 1.63; the corresponding proportions correct were 0.96 ± 0.05, 0.89 ± 0.09, and 0.85 ± 0.13). Bonferroni-corrected comparisons showed significant differences in k between load 2 and load 4 and between load 4 and load 6. Importantly, mean k for the highest load condition was 4.38, indicating that the group-averaged scope of attention was about 4 items in the visual array WM task, in line with previous studies. Similar results were obtained for reaction times: we observed a main effect of load, F(2, 40) = 12.42, P < 0.001, η² = 0.38, with slower responses for higher loads (load 2: 1143 ± 61 ms; load 4: 1234 ± 63 ms; load 6: 1376 ± 96 ms), and a main effect of response type, F(1, 20) = 11.90, P < 0.01, η² = 0.37, with slower responses for rejections than for hits (hits: 1187 ± 65 ms; rejections: 1315 ± 64 ms).
For the verbal WM task, accuracy also varied as a function of load, F(2,40) = 12.20, P < 0.001, η² = 0.38, with reduced accuracy for the highest load condition only (load 2: 0.98 ± 0.03; load 4: 0.98 ± 0.02; load 6: 0.94 ± 0.05). Reaction times showed a highly significant main effect of load, F(2,40) = 311.08, P < 0.001, η² = 0.94, with response times increasing as a function of load (load 2: 1062 ± 51 ms; load 4: 1203 ± 55 ms; load 6: 1388 ± 54 ms). Finally, there was also a small effect of response type, F(1,20) = 7.66, P < 0.05, η² = 0.28, with slower responses for negative probes (positive probes: 1190 ± 55 ms; negative probes: 1246 ± 63 ms). These results confirm the load sensitivity of both the visual array and the verbal WM tasks.
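The k estimate used for the visual array task can be computed directly from hit and correct-rejection rates. The minimal sketch below follows the formula given above. The function name `cowan_k` and the example rates are hypothetical.

```python
def cowan_k(n_items: int, p_hits: float, p_correct_rejections: float) -> float:
    """Cowan's k for single-probe change detection: k = N * (H + CR - 1).

    Equivalent to N * (H - FA), since the false-alarm rate FA = 1 - CR.
    """
    for p in (p_hits, p_correct_rejections):
        if not 0.0 <= p <= 1.0:
            raise ValueError("proportions must lie in [0, 1]")
    return n_items * (p_hits + p_correct_rejections - 1.0)

# Hypothetical participant at load 6: 90% hits, 80% correct rejections
print(round(cowan_k(6, 0.90, 0.80), 2))
```

Here k = 6 × (0.90 + 0.80 − 1) = 4.2 items. k reaches its ceiling of N only when both hits and correct rejections are perfect, so estimates near N at the highest load would signal a ceiling effect.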

Univariate fMRI Analyses

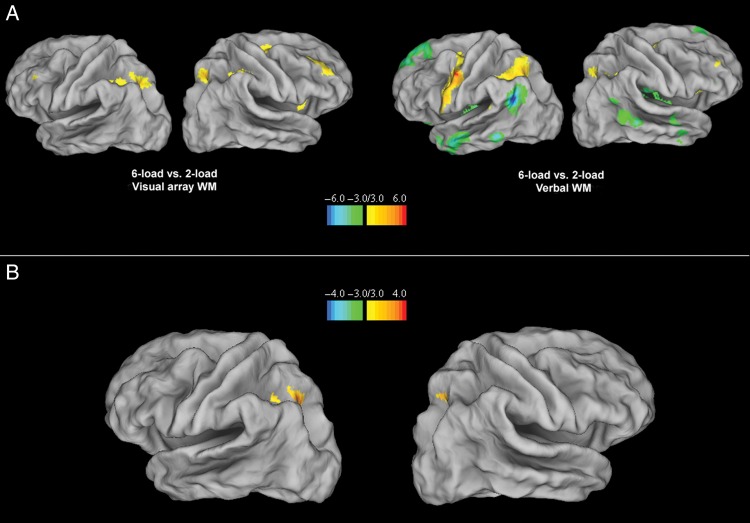

An ANOVA on functional images over the 3 load conditions of the visual array WM task (encoding-only trials) showed a main effect in the dorsal attention network, including the bilateral posterior IPS and the right superior frontal gyrus (see Table 1). These effects were due to significantly higher activation for the 6-load condition relative to the 2-load condition in the bilateral posterior IPS and the bilateral superior frontal gyrus, as well as in the right inferior parietal lobule (see Fig. 2A and Table 1). The 4-load versus 2-load contrast did not lead to significant effects, except for increased activation in a small right posterior IPS area in the 4-load condition.

Table 1.

Peak-level activation foci showing overall load-dependent activity in the visual array and verbal WM tasks

| Anatomical region | BA | Left/right | ANOVA: No. voxels | x | y | z | SPM Z | Load 6 > load 2: No. voxels | x | y | z | SPM Z | Load 2 > load 6: No. voxels | x | y | z | SPM Z |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Visual array |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |
| Dorsal attention network |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |
| Superior frontal gyrus | 6 | L |  |  |  |  |  | 2 | −26 | −6 | 54 | 3.21* |  |  |  |  |  |
| Superior frontal gyrus | 6 | R | 5 | 24 | 4 | 50 | 3.23* | 107 | 24 | 4 | 50 | 3.68* |  |  |  |  |  |
| Intraparietal sulcus (anterior) | 40 |  |  |  |  |  |  | 5 | −36 | −42 | 42 | 3.18* |  |  |  |  |  |
| Intraparietal sulcus (posterior) | 7 | L | 40 | −22 | −64 | 42 | 3.70* | 153 | −22 | −64 | 42 | 4.10* |  |  |  |  |  |
| Intraparietal sulcus (posterior) | 7 | R | 57 | 24 | −62 | 52 | 3.96** | 13 | 22 | −60 | 46 | 3.43* |  |  |  |  |  |
| Verbal WM |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |
| ACC/SMA | 6/32 | B | 350 | −6 | 6 | 60 | 5.02 | 369 | −6 | 6 | 60 | 5.27 |  |  |  |  |  |
| Posterior cingulate | 30 | B |  |  |  |  |  |  |  |  |  |  | 1418 | 4 | −50 | 18 | 4.80 |
| Precentral gyrus | 6 | L | 825 | −52 | 0 | 46 | 5.20 | 1450 | −52 | 0 | 46 | 5.63 |  |  |  |  |  |
| Cerebellum | VI | R | 745 | 36 | −62 | −32 | 5.21 | 2271 | 36 | −62 | −32 | 5.46 |  |  |  |  |  |
| Lingual gyrus | 17 | L | 309 | −14 | −86 | 2 | 5.07 |  | −14 | −86 | 2 | 5.47 |  |  |  |  |  |
| Insula | 13 | R |  |  |  |  |  |  |  |  |  |  | 1428 | 40 | −12 | −4 | 4.82 |
| Middle temporal gyrus | 21 | L |  |  |  |  |  |  |  |  |  |  | 1302 | −52 | −18 | −18 | 4.87 |
| Dorsal attention network |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |
| Superior frontal gyrus | 6 | L | 27 | −24 | 2 | 54 | 3.65* | 132 | −24 | 2 | 54 | 3.63* |  |  |  |  |  |
| Superior frontal gyrus | 6 | R | 25 | 28 | 2 | 54 | 3.70* | 1 | 30 | 2 | 56 | 3.11* |  |  |  |  |  |
| Intraparietal sulcus (anterior) | 40 | L | 156 | −38 | −40 | 38 | 4.07* | 73 | −36 | −40 | 40 | 3.83* |  |  |  |  |  |
| Intraparietal sulcus (posterior) | 7 | L | 184 | −26 | −62 | 48 | 3.95* | 236 | −26 | −62 | 48 | 4.21* |  |  |  |  |  |
| Intraparietal sulcus (posterior) | 7 | R | 2 | 26 | −62 | 48 | 3.45* | 6 | 26 | −62 | 48 | 3.51* |  |  |  |  |  |
| Ventral attention network |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |
| Orbito-frontal cortex | 11/47 | L | 2245 | −10 | 54 | 18 | 5.07 |  |  |  |  |  | 3993 | −10 | 54 | 18 | 5.47 |
|  | 47 |  |  | −34 | 32 | −14 | 3.75* |  |  |  |  |  | 49 | −36 | 32 | −14 | 4.22* |
|  | 47 |  |  | 28 | 30 | −14 | 3.73* |  |  |  |  |  | 71 | 28 | 30 | −14 | 4.28* |
| Temporo-parietal junction | 39 | L | 604 | −50 | −64 | 28 | 5.15 |  |  |  |  |  | 978 | −50 | −64 | 28 | 5.46 |
| Temporo-parietal junction | 39 | R |  |  |  |  |  |  |  |  |  |  | 76 | 52 | −58 | 26 | 3.52* |

Note: If not otherwise stated, regions are significant at P < 0.05, with voxel-level FWE corrections for whole-brain volume.

*P < 0.05, small volume corrections, for regions-of-interest; **P < 0.001, uncorrected.

Figure 2.

(A) Brain areas showing load-sensitive activations for the 6-load versus 2-load conditions in the visual array (left panel) and verbal WM tasks (right panel), displayed at thresholds of 3 ≤ T ≤ 6 and −6 ≤ T ≤ −3 on a 3D template of the cortical surface (Van Essen et al. 2001). (B) Common load-sensitive areas in the visual array and verbal WM tasks for the 2-load versus 6-load conditions (null conjunction analysis), displayed at thresholds of 3 ≤ T ≤ 4 and −4 ≤ T ≤ −3 on a 3D template of the cortical surface (Van Essen et al. 2001).

An ANOVA on functional images of the verbal WM task also showed a main effect of verbal WM load in the dorsal attention network. This effect included the bilateral posterior IPS and the bilateral superior frontal cortex, with more extensive involvement, in terms of voxel numbers, of the left than of the right posterior IPS (see Table 1). Load effects were also present in the ventral attention network, including the temporo-parietal junction and the orbito-frontal cortex. Further effects were observed in the anterior part of the left IPS, the anterior cingulate, the left precentral gyrus, the left lingual gyrus, and the right cerebellum (CrI). Except for the regions in the ventral attention network, all effects were due to higher activation in the 6-load condition relative to the 2-load condition (see Fig. 2A and Table 1). In the ventral attention network, the bilateral temporo-parietal junction and orbito-frontal cortex showed higher activation in the 2-load condition than in the 6-load condition, in line with previous studies showing deactivation of this network for higher verbal WM load (see Fig. 2A and Table 1). The same was true for regions in the posterior cingulate, the bilateral inferior temporal gyrus, and the left superior temporal gyrus. This reflects further deactivation in the default mode network, of which these regions are part (Buckner et al. 2008). The 2-load versus 4-load contrast did not yield any significant activation.

Overall, the overlap between the 2-load versus 6-load contrasts of the visual array and verbal WM tasks was most pronounced in the dorsal attention network, especially in the left posterior IPS. To test this overlap statistically, we conducted a conservative null conjunction analysis on the 6-versus-2 load effects in the visual array and verbal WM tasks. This analysis confirmed statistically significant overlap in the left posterior IPS and, to a lesser extent, in the right posterior IPS and the left anterior IPS (see Table 2 and Fig. 2B).
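A conjunction null test requires each contrast to be significant on its own. It can therefore be implemented by thresholding the voxelwise minimum of the two T-maps, which is the minimum-statistic approach behind SPM's conjunction null option. The minimal sketch below assumes two T-maps on a common voxel grid and a single threshold. The function name, the toy T values, and the threshold are hypothetical, and the study's small-volume-corrected thresholds are not reproduced.

```python
import numpy as np

def conjunction_null(t_map_a, t_map_b, t_threshold):
    """Minimum-statistic conjunction of two T-maps.

    A voxel survives only if BOTH contrasts exceed t_threshold,
    i.e. min(t_a, t_b) > t_threshold. Returns (t_min, mask)."""
    t_a = np.asarray(t_map_a, dtype=float)
    t_b = np.asarray(t_map_b, dtype=float)
    if t_a.shape != t_b.shape:
        raise ValueError("T-maps must share the same voxel grid")
    t_min = np.minimum(t_a, t_b)
    return t_min, t_min > t_threshold

# Toy 4-voxel example: 6 > 2 T-values for the visual array and verbal WM tasks
t_visual = [4.0, 3.5, 1.0, 5.0]
t_verbal = [3.8, 2.0, 4.5, 3.2]
t_min, common = conjunction_null(t_visual, t_verbal, t_threshold=3.0)
print(t_min, common)
```

Voxels 2 and 3 each pass the threshold in one task but fail it in the other, so they drop out. This is why the conjunction null is the conservative choice for claiming shared load sensitivity.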

Table 2.

Peak-level activation foci showing common load sensitivity for 6-load-versus-2-load conditions in the visual array and verbal WM tasks (null conjunction)

| Anatomical region | No. voxels | Left/right | x | y | z | BA | SPM Z-value |
|---|---|---|---|---|---|---|---|
| Intraparietal sulcus (anterior) | 7 | L | −38 | −38 | 40 | 40 | 3.30* |
| Intraparietal sulcus (posterior) | 155 | L | −22 | −64 | 44 | 7 | 3.75* |
| Intraparietal sulcus (posterior) | 1 | R | 26 | −62 | 48 | 7 | 3.12* |

*P < 0.05, small volume corrections for regions-of-interest.

Multivariate fMRI Analyses

Whole-Brain Multivariate Analyses

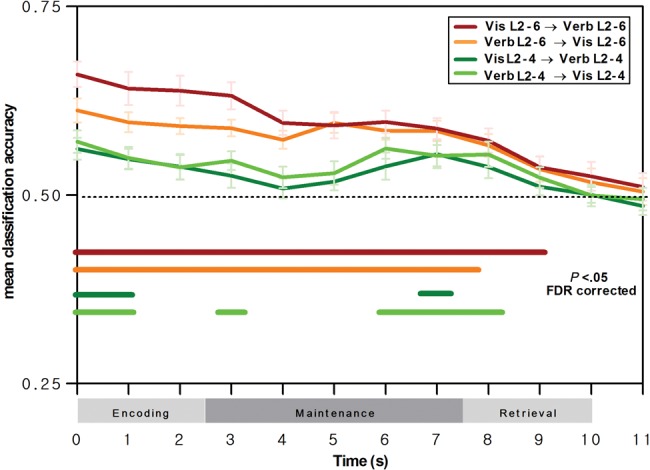

A first set of multivariate analyses assessed between-task prediction of load effects at the whole-brain level. A first classifier was trained to distinguish the 6-load from the 2-load condition in functional images of the encoding-only trials of the visual array WM task. A second classifier was trained to distinguish the 4-load from the 2-load condition. These classifiers were then tested on the successive events of the verbal WM trials, to determine how well each classifier (e.g., 6-vs.-2 visual array load) decoded the corresponding load conditions in the verbal WM task (e.g., 6-vs.-2 verbal WM load). Figure 3 presents mean whole-brain classification accuracy for the 2 classifiers, together with the group-level significance of classification against chance level (t-test, P < 0.05, with false-discovery rate correction for multiple testing). Between-task mean classification accuracy for the 6-versus-2 load classifier (Fig. 3, red curve) was significantly above chance throughout the WM trial and fell to chance level only at the end of the retrieval stage. The high initial values of the classification curves in Figure 3 and subsequent figures are due to the 5-s HRF delay implemented in the MVPA. In other words, the events used for the classifications occur 5 s later than the time points indicated on the x-axis. For the 4-versus-2 load classifier (Fig. 3, dark green curve), between-task classification was significantly above chance during the early encoding phase and during the late maintenance/early retrieval phase. A second set of analyses assessed between-task prediction in the reverse direction. Here, 6-versus-2 load classifiers were trained on the verbal WM task, with a separate classifier for each event of the verbal WM trial, and were then used to predict the load conditions of the single event of the visual array WM task.
As shown in Figure 3 (orange curve), every event of the verbal WM trial except those of the retrieval phase contained enough information to predict WM load in the single-event visual array WM task. Importantly, this included classifiers trained to distinguish verbal WM load during the maintenance phase, when no stimuli were physically present. Between-task classification was therefore based on neural events associated with internal WM load. We ran the same type of analysis to predict 4-versus-2 load conditions in the visual array WM task from 4-versus-2 load classifiers trained on verbal WM events. Between-task predictions were overall less robust (Fig. 3, light green curve). Importantly, however, between-task classification was above chance for classifiers trained on early as well as late maintenance events.
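The between-task logic can be illustrated with a minimal sketch. A classifier is fit on 6- versus 2-load patterns from one task and then scored, without refitting, on patterns from the other task. With balanced classes, chance accuracy is 0.5. The study's actual classifier, feature handling, and HRF-shifted event extraction are described in Materials and methods. Here a nearest-centroid rule is swapped in so that the example needs only NumPy. The simulated data, function names, and parameters (`n_vox`, `shared_load_pattern`) are hypothetical.

```python
import numpy as np

def fit_nearest_centroid(X, y):
    """Mean voxel pattern (centroid) for class 0 (2-load) and class 1 (6-load)."""
    X, y = np.asarray(X, dtype=float), np.asarray(y)
    return np.stack([X[y == c].mean(axis=0) for c in (0, 1)])

def predict_nearest_centroid(centroids, X):
    """Label each pattern with the class whose centroid is closest (Euclidean)."""
    X = np.asarray(X, dtype=float)
    dist = np.linalg.norm(X[:, None, :] - centroids[None, :, :], axis=-1)
    return dist.argmin(axis=1)

def cross_task_accuracy(X_train, y_train, X_test, y_test):
    """Fit on one task's patterns and score on the other task's patterns.

    The classifier is never refit on the test task, so accuracy above 0.5
    (balanced classes) requires a load pattern shared by both tasks."""
    centroids = fit_nearest_centroid(X_train, y_train)
    return float(np.mean(predict_nearest_centroid(centroids, X_test) == np.asarray(y_test)))

# Hypothetical simulation: both tasks carry the same load-related voxel pattern plus noise
rng = np.random.default_rng(0)
n_vox = 50
shared_load_pattern = rng.normal(size=n_vox)

def simulate_task(n_per_class, load_pattern, noise_sd=1.0):
    y = np.repeat([0, 1], n_per_class)  # 0 = 2-load, 1 = 6-load
    X = noise_sd * rng.normal(size=(2 * n_per_class, n_vox)) + np.outer(y, load_pattern)
    return X, y

X_visual, y_visual = simulate_task(40, shared_load_pattern)  # training task
X_verbal, y_verbal = simulate_task(40, shared_load_pattern)  # one verbal WM event
acc = cross_task_accuracy(X_visual, y_visual, X_verbal, y_verbal)
print(f"visual-to-verbal accuracy: {acc:.2f}")
```

The test task's patterns never enter training, so above-chance accuracy can only arise from a load-related pattern present in both tasks. Swapping the roles of the two tasks gives the reverse training-prediction direction.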

Figure 3.

Whole-brain classification results for prediction of voxel patterns associated with verbal WM load by voxel patterns associated with attentional load in the visual array WM task, and vice versa, as a function of verbal WM phase. Curves indicate mean between-task classification accuracy and SEM for the 6-versus-2 load classifier (red curve for prediction of verbal WM load by visual WM load; orange curve for prediction of visual WM load by verbal WM load) and for the 4-versus-2 load classifier (dark green curve for prediction of verbal WM load by visual WM load; light green curve for prediction of visual WM load by verbal WM load). Horizontal lines indicate classifications significantly higher than chance-level classification at the group level (t-tests with false-discovery rate correction for multiple testing, P < 0.05). Note that the classifications were conducted by considering a 5-s delay of the hemodynamic response function, and hence the classification events displayed here are shifted by +5 s relative to trial time (see the section Materials and methods for further details).
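The group-level significance marks rest on one test per verbal WM event followed by false-discovery rate correction. The sketch below assumes that one p-value per event is already available. Such a p-value could come, for example, from `scipy.stats.ttest_1samp(acc, popmean=0.5, alternative="greater")` applied to the participants' accuracies at that event; whether the original tests were one- or two-sided is not stated here. The sketch implements the standard Benjamini–Hochberg step-up procedure. That the study used exactly this FDR variant is an assumption, and the function name is hypothetical.

```python
import numpy as np

def benjamini_hochberg(p_values, q=0.05):
    """Boolean mask of hypotheses rejected by the Benjamini-Hochberg step-up procedure.

    p_values -- one p-value per verbal WM event (e.g., group t-test vs. 0.5)
    q        -- target false-discovery rate
    """
    p = np.asarray(p_values, dtype=float)
    m = p.size
    order = np.argsort(p)                    # indices of p sorted ascending
    critical = q * np.arange(1, m + 1) / m   # i/m * q for the i-th smallest p
    passes = p[order] <= critical
    reject = np.zeros(m, dtype=bool)
    if passes.any():
        k = np.nonzero(passes)[0].max()      # largest rank meeting its criterion
        reject[order[: k + 1]] = True        # step-up: reject all ranks up to k
    return reject

print(benjamini_hochberg([0.02, 0.025, 0.04]))
```

In the usage line, all 3 events are declared significant even though 0.02 on its own exceeds its critical value of 0.05/3 ≈ 0.0167. This is the step-up property: a larger p-value that passes its own criterion carries all smaller ones with it.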

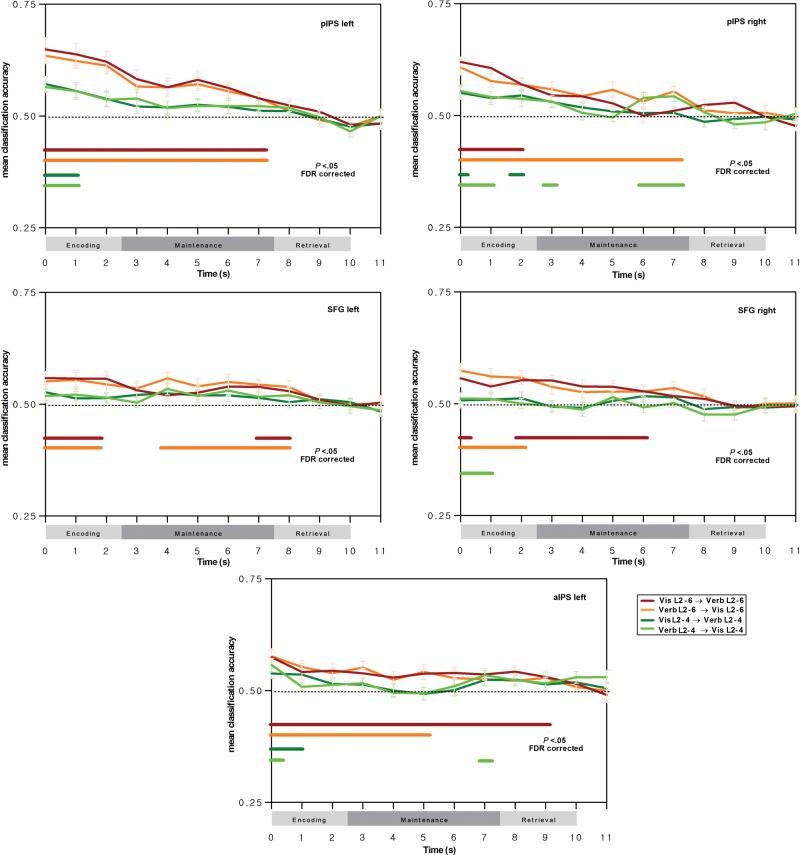

Region-of-Interest Multivariate Analyses

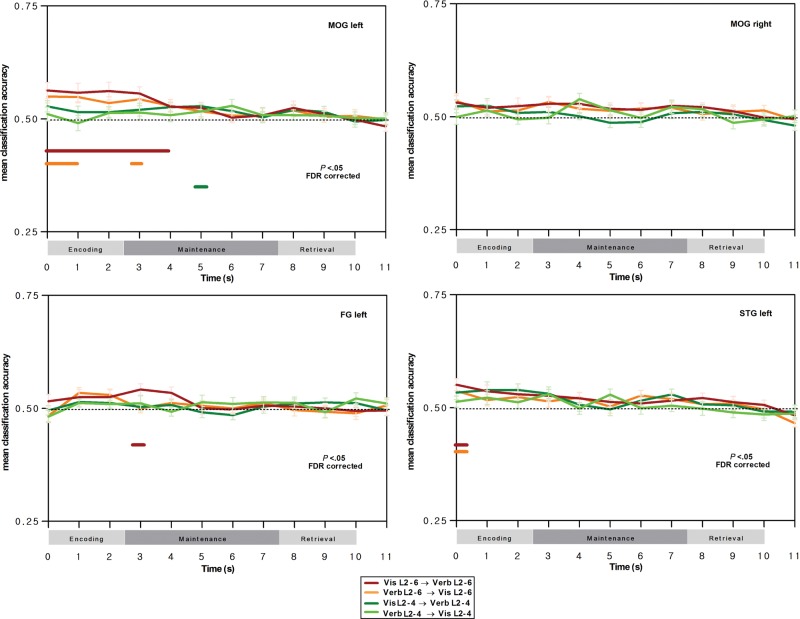

Next, we determined between-task classification accuracy within different regions-of-interest, focusing in particular on the bilateral posterior IPS. This region is part of the dorsal attention network and, in the univariate conjunction analyses of the previous section, had been shown to support load effects in both the visual array and verbal WM tasks. When classification was restricted to the posterior IPS regions-of-interest, the profile closely resembled that of the whole-brain classifications. The 6-versus-2 load classifier showed significant, above-chance classification accuracy during encoding and during maintenance, again both for prediction of verbal WM load by visual WM load and vice versa (see Fig. 4, red and orange curves, respectively). This held for both the left and right posterior IPS regions. The one exception was the visual WM-to-verbal WM training-prediction direction in the right posterior IPS, where classification was significant only during the encoding phase. The same robust between-task prediction of WM load was also observed in the more anterior IPS region-of-interest, for both encoding and maintenance events of the verbal WM task and for both training-prediction directions. For the 4-versus-2 load classifiers, between-task classification was overall less robust. In the left posterior IPS, significant between-task predictions were observed for events of the early verbal WM encoding stage, for both training-prediction directions. In the right posterior IPS, they were observed for early encoding and late maintenance events, particularly for the verbal WM-to-visual WM training-prediction direction.
For the superior frontal gyrus regions-of-interest of the dorsal attention network, reliable between-task predictions were observed for the 6-versus-2 load classifiers in both training-prediction directions, for events of both the verbal WM encoding and maintenance stages. This finding is noteworthy because these regions had not been identified as supporting cross-modal load effects in the univariate analyses. Finally, as shown in Figure 5, no reliable between-task predictions were observed in the regions-of-interest supporting phonological, orthographic, and visual processing. The only somewhat robust between-task predictions were restricted to the encoding events in the left middle occipital gyrus, associated with visual stimulus processing. This is in line with our prediction of shared visual load effects during encoding in the verbal WM and visual array WM tasks, when the stimuli are physically present.

Figure 4.

Classification results in the dorsal attention network for prediction of voxel patterns associated with verbal WM load by voxel patterns associated with attentional load in the visual array WM task, and vice versa, as a function of verbal WM phase. Curves indicate mean between-task classification accuracy and SEM for the 6-versus-2 load classifier (red curve for prediction of verbal WM load by visual WM load; orange curve for prediction of visual WM load by verbal WM load) and for the 4-versus-2 load classifier (dark green curve for prediction of verbal WM load by visual WM load; light green curve for prediction of visual WM load by verbal WM load). Horizontal lines indicate classifications significantly higher than chance-level classification at the group level (t-tests with false-discovery rate correction for multiple testing, P < 0.05). Note that the classifications were conducted by considering a 5-s delay of the hemodynamic response function, and hence, the classification events displayed here are shifted by +5 s relative to trial time (see the section Materials and methods for further details).

Figure 5.

Classification results in sensory, orthographic, and phonological processing regions-of-interest for prediction of voxel patterns associated with verbal WM load by voxel patterns associated with attentional load in the visual array WM task, and vice versa, as a function of verbal WM phase. Curves indicate mean between-task classification accuracy and SEM for the 6-versus-2 load classifier (red curve for prediction of verbal WM load by visual WM load; orange curve for prediction of visual WM load by verbal WM load) and for the 4-versus-2 load classifier (dark green curve for prediction of verbal WM load by visual WM load; light green curve for prediction of visual WM load by verbal WM load). Horizontal lines indicate classifications significantly higher than chance-level classification at the group level (t-tests with false-discovery rate correction for multiple testing, P < 0.05). Note that the classifications were conducted by considering a 5-s delay of the hemodynamic response function, and hence, the classification events displayed here are shifted by +5 s relative to trial time (see the section Materials and methods for further details).

We next tested whether the dorsal attention network regions-of-interest were statistically more sensitive than the left middle occipital gyrus to between-task classification during verbal WM maintenance events. To do so, we ran a repeated-measures ANOVA on mean classification accuracies with the factors region (left middle occipital gyrus, left posterior IPS) and verbal WM event. The left posterior IPS was selected because this region-of-interest showed the most robust classification over the entire verbal WM task. For the visual WM-to-verbal WM training-prediction direction, we observed a main effect of region, F(1,20) = 8.71, P < 0.01, a main effect of event, F(11,220) = 13.24, P < 0.001, and a significant interaction, F(11,220) = 4.23, P < 0.001. Planned comparisons showed overall higher classification accuracy for load conditions in the left posterior IPS. This advantage was particularly strong for the early encoding stage (first 2 events) and the late maintenance phase (events 6 and 7) (P < 0.05, Bonferroni-corrected). The same analysis for the verbal WM-to-visual WM training-prediction direction yielded very similar results, with a main effect of region, F(1,20) = 18.75, P < 0.001, a main effect of event, F(11,220) = 12.47, P < 0.001, and a significant interaction, F(11,220) = 4.84, P < 0.001. The superiority of classification accuracy in the left posterior IPS was particularly marked for the encoding and late maintenance stages (events 1, 2, 3, and 6) (P < 0.05, Bonferroni-corrected).

Brain–Behavior Associations

Next, we explored how between-task classification related to behavior, by assessing the association between individual classification consistency and performance in the verbal WM and visual array WM tasks. Behavioral performance in the visual array WM task was based on the encoding + retrieval trials. For each participant, we determined the consistency of classification accuracy over the encoding, maintenance, and retrieval stages for the whole-brain classifications (visual WM-to-verbal WM training-prediction direction). Individual classification consistency was defined as the number of time points of the verbal WM trial at which the individual classification accuracy was significant, as determined by permutation tests (P < 0.05). Individual classification consistency scores were then correlated with verbal WM and visual WM performance scores. A first correlation analysis assessed the association between individual classification consistency and verbal WM behavioral measures. The classification consistency score correlated neither with the 6-versus-2 load verbal WM accuracy difference score, r = −0.02, P = 0.93, nor with the 6-versus-2 load verbal WM RT difference score, r = 0.16, P = 0.48. In other words, verbal WM performance did not vary with the consistency of between-task classification. However, when the same analysis was performed on visual WM performance, as estimated by the maximum k score, the correlation with the individual classification consistency score was significant, r = 0.49, P < 0.05.
Similar results were obtained for individual classification consistency scores in the verbal WM-to-visual WM prediction direction. The correlation with the maximum k score was significant, r = 0.44, P < 0.05. The correlations with the 6-versus-2 load verbal WM accuracy difference score, r = −0.01, P = 0.99, and with the 6-versus-2 load verbal WM reaction time difference score, r = 0.13, P = 0.58, were not. In other words, the participants with the most consistent between-task classifications were those with the highest visual WM capacity. This asymmetric finding is of theoretical importance because visual WM capacity is considered to reflect scope of attention capacity more directly than verbal WM capacity, as we will discuss later. Finally, the direct correlation between verbal and visual WM performance was positive and of medium size, although non-significant, r = 0.35, P = 0.12. These correlations were computed on accuracy scores for the 6-load condition of each task, where performance showed the greatest variability.
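Individual classification consistency, as defined above, counts the verbal WM events at which a participant's own between-task accuracy is significant by permutation. The minimal sketch below assumes that the permutation scheme shuffles the test-set labels while the cross-task classifier's predictions stay fixed. This is one common choice when training and test data come from different tasks; the exact scheme used in the study is given in Materials and methods. The function names and the 1000-permutation default are hypothetical.

```python
import numpy as np

def permutation_p(y_true, y_pred, n_perm=1000, seed=0):
    """One-sided permutation p-value for classification accuracy.

    The cross-task classifier (and hence y_pred) stays fixed; shuffling y_true
    destroys any label-prediction link while preserving class proportions."""
    rng = np.random.default_rng(seed)
    y_true, y_pred = np.asarray(y_true), np.asarray(y_pred)
    observed = np.mean(y_true == y_pred)
    null = np.array([np.mean(rng.permutation(y_true) == y_pred) for _ in range(n_perm)])
    # The +1 terms count the observed labelling as one member of the null set
    return (np.sum(null >= observed) + 1) / (n_perm + 1)

def consistency_score(per_event, alpha=0.05, **perm_kwargs):
    """Number of trial events with individually significant accuracy.

    per_event -- list of (y_true, y_pred) pairs, one per verbal WM event."""
    return int(sum(permutation_p(yt, yp, **perm_kwargs) < alpha for yt, yp in per_event))

# Hypothetical participant: 3 events, with uninformative predictions at the middle event
y = np.repeat([0, 1], 20)
always_two_load = np.zeros(40, dtype=int)
print(consistency_score([(y, y), (y, always_two_load), (y, y)]))
```

A classifier that always predicts the same label scores exactly 0.5 under every permutation, so its p-value is 1. In contrast, perfect predictions are essentially never matched by a shuffled labelling. The resulting count per participant is the score that was then correlated with WM capacity.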

One could argue that these asymmetric findings reflect task order and associated fatigue effects, since the visual array WM task was always presented before the verbal WM task. This is, however, unlikely. First, performance in the verbal WM task remained high even for high-load trials (accuracy: 94%). Mean response times were also very similar for the visual and verbal WM tasks, with no evidence of slowing in the verbal WM task (1218 and 1251 ms for the verbal and visual WM tasks, respectively), and the same was true for the variability of reaction times (see the section Behavioral Analyses). Furthermore, the accuracy and reaction time data for the verbal WM task are virtually identical to those of other studies in which a very similar version of this task was administered at the very beginning of the fMRI session (see Majerus et al. 2012). Second, behavioral performance was correlated here with between-task classification scores, which integrate neural patterns associated with both modalities. Hence, if fatigue had made the neural patterns associated with the verbal WM task unreliable, overall between-task predictions of load effects should also have become unreliable, and associations with both verbal and visual WM performance should have been affected.

Finally, we examined whether the robust classification accuracy for the 6-versus-2 load conditions reflects the increase of information held in WM rather than the greater difficulty or cognitive effort of maintaining 6 versus 2 items. To this end, we performed a second type of correlation analysis, examining the direction of the association between overall classification accuracy and visual and verbal WM capacity. If heightened classification accuracy merely reflected the increased difficulty and cognitive effort between the 2 conditions, then participants with "low" k capacity should show heightened classification accuracy. This should be especially true in conditions where capacity limits are reached (i.e., the 6-load condition as compared with the 2-load condition). We tested this by averaging individual classification accuracies over the first 8 events of the verbal WM trials, so as not to bias the results with non-informative end-of-trial events. These mean classification accuracies were then correlated with the maximum k score of the visual WM task and with the accuracy score of the verbal WM task, restricted to 6-load trials, where performance was most variable. For the visual WM-to-verbal WM prediction direction and the 6-versus-2 classifiers, mean classification accuracy correlated positively and significantly with maximum k capacity, r = 0.45, P < 0.05. In other words, the higher the classification accuracy, the higher the visual WM capacity. This rules out increased task difficulty as the source of the increased between-task classifications. A non-significant positive correlation was also observed with the verbal WM performance score, r = 0.26, P = 0.25.
The same analyses for the verbal-to-visual prediction direction gave similar results. Both the visual and verbal WM scores correlated significantly and strongly positively with mean classification accuracy for the 6-versus-2 load classifiers (r = 0.71, P < 0.001, and r = 0.50, P < 0.05, for visual and verbal WM scores, respectively). We also ran the same analysis on individual classification accuracies for the left posterior IPS region-of-interest, which had yielded the most robust classification pattern in the dorsal attention network. The correlations were again positive but weaker. For the visual WM-to-verbal WM prediction, the values were r = 0.27, P = 0.24, and r = 0.08, P = 0.72, for visual and verbal WM scores, respectively. For the verbal WM-to-visual WM prediction, they were r = 0.46, P < 0.05, and r = 0.21, P = 0.37, for visual and verbal WM scores, respectively. In sum, these analyses show that classification accuracy was reduced in individuals with lower WM performance. This was particularly the case for the visual WM scores, which, as we will discuss, most directly reflect attention-based WM maintenance processes. Note, however, that the brain–behavior correlations reported here should be interpreted cautiously, given the relatively small sample size (N = 21) for analyses of interindividual differences in neural and behavioral patterns.
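The summary score and the direction test described above can be sketched as follows. Each participant's accuracy is averaged over the first 8 events and then correlated with capacity. A difficulty account predicts a negative r, whereas an information account predicts a positive one. The function names are hypothetical. For p-values, one could use `scipy.stats.pearsonr`, or refer the t statistic returned here to a t distribution with N − 2 = 19 degrees of freedom for N = 21.

```python
import numpy as np

def mean_early_accuracy(acc_by_event, n_events=8):
    """Per-participant mean accuracy over the first n_events trial events.

    acc_by_event -- array of shape (n_participants, n_events_total); later,
    non-informative end-of-trial events are excluded from the average."""
    acc = np.asarray(acc_by_event, dtype=float)
    return acc[:, :n_events].mean(axis=1)

def pearson_r(x, y):
    """Pearson r and its t statistic on n - 2 degrees of freedom."""
    x, y = np.asarray(x, dtype=float), np.asarray(y, dtype=float)
    r = float(np.corrcoef(x, y)[0, 1])
    if np.isclose(abs(r), 1.0):
        return r, float(np.copysign(np.inf, r))  # perfect correlation: t is unbounded
    t = r * np.sqrt((x.size - 2) / (1.0 - r**2))
    return r, float(t)

# Toy check with 5 hypothetical participants
r, t = pearson_r([1, 2, 3, 4, 5], [2, 1, 4, 3, 5])
print(f"r = {r:.2f}, t = {t:.2f}")
```

With per-participant arrays such as a 21 × 12 accuracy matrix `acc_6v2` and a vector of maximum k scores `k_max` (both hypothetical names), `pearson_r(mean_early_accuracy(acc_6v2), k_max)` gives the sign and strength of the association. Only its sign distinguishes the two accounts.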

Discussion

We show here that neural activation patterns differentiating high- and low-verbal WM load can be predicted by neural patterns dissociating high and low load in a visual array WM task, and vice versa. A region-of-interest approach showed that this was the case more particularly for the bilateral posterior IPS, which had also been identified in univariate conjunction analyses as supporting cross-modal load effects, and for the bilateral superior frontal cortex, which had not been identified in univariate conjunction analyses. These regions define the dorsal attention network. Furthermore, these cross-modal predictions of WM load effects in these regions were observed during maintenance when stimuli were not physically present, as well as during encoding and retrieval. Multivariate analyses also identified cross-modal predictions of load effects in sensory cortices, but these were limited to the encoding and very early maintenance stage. Finally, cross-modal classification accuracy for 6-versus-2 load conditions was highest and most reliable in those participants presenting the highest visual WM capacity.

The present results provide novel evidence for an increasingly influential account, according to which the neural substrates involved in retention in verbal and visual WM reflect a common involvement of attentional processes supported by the dorsal attention network (Todd and Marois 2004; Todd et al. 2005; Majerus et al. 2012). We show that verbal WM load-sensitive neural patterns during the maintenance phase can actually be predicted by neural patterns sensitive to WM load in a visual WM task, and vice versa. This was specifically the case in regions of the dorsal attention network, whereas cross-modal predictions in sensory cortices were limited to the encoding and very early maintenance stages. This is also in line with a recent study by Emrich et al. (2013) showing that load effects in sensory cortices during maintenance, if present, are actually reversed, with informative value decreasing as memory load increases. Furthermore, the visual WM task used here maximized attention-based retention processes, since the brief presentation rates prevented strategic control and verbal recoding. This task measured the participants' ability to hold a variable amount of visual information in WM. This ability has been defined as reflecting the scope of attention and is typically limited to about 4 ± 2 items (Cowan 2001). The present study shows that neural substrates associated with the visual scope of attention predict neural patterns associated with verbal WM load, and vice versa.
This is further supported by the brain–behavior associations. Higher scope of attention capacity was associated with higher cross-modal classification accuracy for the highest load condition relative to the low-load condition. Participants with a higher scope of attention, that is, a focus of attention capacity above 4, could hold more information in the focus of attention in the highest load condition. This led to higher classification accuracy than in participants with a lower scope of attention, that is, a focus of attention capacity of 4 or less. It also shows that the overall higher classification accuracies for the 6-versus-2 classifier relative to the 4-versus-2 classifier do not result from the greater difficulty and cognitive effort of the highest load condition relative to the other 2 conditions. If they did, we would have expected a negative relationship between classification accuracy and behavioral performance. It is also unlikely that other factors, such as differences in the recruitment of interference control, monitoring, or motivational processes between high- and low-load conditions, account for our results. These processes are supported by neural networks distinct from those highlighted in this study (anterior cingulate cortex for monitoring, Roelofs et al. 2006; dorsolateral and inferior prefrontal cortex for resistance to interference, Schnur et al. 2009; Thompson-Schill et al. 2002; posterior cingulate, angular gyrus, and insular cortex for external and internal motivation, Farrer and Frith 2002; Lee et al. 2012). Moreover, while some of these regions were activated for high- versus low-load contrasts in the verbal WM task, none of them was activated for these contrasts in the visual WM task.

Previous studies have highlighted the role of attentional processes in verbal and visual WM mainly by manipulating attentional factors within WM tasks, and by showing that attentional and WM processes compete for the same resources. Lavie and colleagues showed that when WM and selective visual attention tasks are performed concurrently, the number of erroneous detections in the visual attention task increases under high WM load (load theory of attention) (Lavie et al. 2004; Lavie 2005; Kelley and Lavie 2011). These results suggest that high WM load conditions consume attentional resources that are shared with visual selective attention. Other studies have manipulated the orientation of the focus of attention within WM tasks. Participants maintained stimuli varying along several dimensions and were instructed to focus attention, during the maintenance period, on 1 of these dimensions or on 1 specific stimulus; alternatively, the attentional focus was disrupted during WM encoding (Kuo et al. 2012; Lewis-Peacock et al. 2012; Lewis-Peacock and Postle 2012; Riggall and Postle 2012; Majerus et al. 2013). The posterior parietal cortex appears to be critically involved in these attentional (re)orientation processes on WM content, since the attended stimulus dimension can be predicted from neural activation patterns in the posterior parietal cortex (Riggall and Postle 2012). Lewis-Peacock et al. (2012) argued that sustained neural activation patterns during the WM maintenance period reflect the attentional focus and its reorientation rather than heightened activation of the information maintained in WM. They based this on the observation that neural patterns during the maintenance phase are sensitive to shifts of attention rather than to WM content (see also LaRocque et al. 2013).
However, because these studies directly manipulated attentional processes and orientation during the WM tasks, it is difficult to determine whether these attentional parameters are a defining property of WM functioning. They may instead arise from the need to implement attentional control processes elicited by changes in WM task-set instructions, or from the need to coordinate WM and attentional tasks in a dual-task situation. The present study goes an important step further by focusing on neural patterns associated with basic verbal and visual WM processes, without any requirement to redirect or divide attention as a function of task instructions. We show that load-sensitive neural patterns in this core short-term retention situation can be reliably predicted from neural patterns associated with attentional load in a separate and independent visual array WM task, and vice versa. One question raised by our study design is whether the visual presentation format of the verbal and visual WM tasks could have contributed to the reliable between-task classifications we observed. As already noted in the Introduction, the letters were presented visually. However, a large body of evidence shows that visually presented letter sequences are immediately recoded into phonological codes, and that verbal WM performance for visually presented letters is sensitive to phonological rather than visual similarity unless maintenance of visual features is explicitly stressed (Baddeley 1986; Logie et al. 2000).
Furthermore, an ERP study comparing WM for auditory and visual presentations of letter sequences observed phonological similarity effects in both conditions. However, the origin of the ERP marker of phonological similarity differed between the 2 presentation modalities, with a frontotemporal focus for the auditory condition and a temporo-occipital focus for the visual condition (Martín-Loeches et al. 1998). A similar finding was reported by a study comparing auditory and visual presentations of N-back WM tasks for letter stimuli. Both modalities led to common activation of the bilateral frontoparietal cortex, with modality differences restricted to inferior temporal, inferior occipital, and middle occipital regions, which were more strongly activated in the visual modality (Rodriguez-Jimenez et al. 2005). This finding can be related to the significant between-modality classifications we observed during encoding for the middle occipital region of interest. We attributed these classifications to the processing of visual sensory features shared by the stimuli of the 2 tasks. Importantly, the modality effects reported by Martín-Loeches et al. and Rodriguez-Jimenez et al. for auditorily and visually presented letter sequences did not involve the frontoparietal networks in which we observed the most reliable between-task classifications at all WM stages. At the same time, it remains to be shown whether the present results generalize to auditorily presented verbal WM tasks; we predict that they will.
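The between-task prediction logic discussed in this section can be illustrated with a toy sketch. A classifier is trained on high- versus low-load patterns from one task and tested on the other, in both directions. This is a hedged illustration under stated assumptions, not the analysis pipeline of this study. The data are synthetic. The shared load pattern (`shared`) and the helper names (`simulate_task`, `train_nearest_centroid`) are hypothetical. A simple nearest-centroid classifier stands in for whatever classifier is used in practice (linear support vector machines are a common choice in multivariate voxel pattern analysis).

```python
import random
from statistics import mean


def centroid(patterns):
    """Voxel-wise mean of a list of equal-length activation patterns."""
    return [mean(values) for values in zip(*patterns)]


def train_nearest_centroid(patterns, labels):
    """Store one centroid per load label (e.g. "high" / "low")."""
    return {
        lab: centroid([p for p, l in zip(patterns, labels) if l == lab])
        for lab in set(labels)
    }


def predict(model, pattern):
    """Return the label whose centroid is closest in squared Euclidean distance."""
    def sq_dist(c):
        return sum((x - y) ** 2 for x, y in zip(pattern, c))
    return min(model, key=lambda lab: sq_dist(model[lab]))


def accuracy(model, patterns, labels):
    """Proportion of trials whose predicted label matches the true label."""
    hits = sum(predict(model, p) == l for p, l in zip(patterns, labels))
    return hits / len(labels)


def simulate_task(load_signal, n_trials=40, noise=1.0, seed=0):
    """Hypothetical synthetic data, not data from this study:
    high-load trials = load_signal + Gaussian noise; low-load trials = noise only."""
    rng = random.Random(seed)
    patterns, labels = [], []
    for i in range(n_trials):
        lab = "high" if i % 2 == 0 else "low"
        base = load_signal if lab == "high" else [0.0] * len(load_signal)
        patterns.append([b + rng.gauss(0.0, noise) for b in base])
        labels.append(lab)
    return patterns, labels


# Hypothetical load-related pattern assumed to be shared by both tasks (20 voxels).
shared = [1.0] * 20
visual_X, visual_y = simulate_task(shared, seed=1)
verbal_X, verbal_y = simulate_task(shared, seed=2)

# Train on one task and test on the other, then reverse the direction.
vis_to_verb = accuracy(train_nearest_centroid(visual_X, visual_y), verbal_X, verbal_y)
verb_to_vis = accuracy(train_nearest_centroid(verbal_X, verbal_y), visual_X, visual_y)
print(f"visual -> verbal: {vis_to_verb:.2f}; verbal -> visual: {verb_to_vis:.2f}")
```

Suppose the load-related component were not shared between tasks, for example if `verbal_X` were simulated with a signal on different voxels. Cross-task accuracy would then be expected to fall toward chance (0.5). This is why above-chance between-task prediction can be read as evidence for a common load-sensitive pattern.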

The present results further imply that the attentional focus has a variable capacity (Cowan 2001; Morrison et al. 2014). Some studies showed that at the moment of retrieval, only the most recent item was associated with enhanced activity or functional connectivity in the posterior parietal cortex, suggesting that only the most recent item is held in the focus of attention (Talmi et al. 2005; Öztekin et al. 2009, 2010; Nee and Jonides 2011). A more recent study, however, suggested that these findings may have been induced by specific task requirements. These included very long encoding lists (up to 12 items) or task cues presented only at retrieval, which may have made it difficult to hold the entire memory list in the focus of attention (Morrison et al. 2014). On the basis of their multivariate classification results, Lewis-Peacock et al. (2012) also showed that attention can be focused on at least 2 items at once. Further convergent evidence comes from a behavioral experiment showing that attention in a perceptual search task can be guided by multiple WM items at the same time (Beck et al. 2012). The present study shows that high (6-item) and low (2-item) attentional load conditions can be reliably distinguished in the posterior parietal cortex. It also shows that the strength of this distinction varies with participants' scope of attention capacity. Together, these results indicate that the capacity of the attentional focus exceeds 1 item and is flexible, that is, it varies between individuals.

The present findings raise the more general question of the functional relevance of shared neural substrates between verbal and visual WM. We showed that higher and more reliable between-task classification accuracy was associated with higher scope of attention capacity. However, variability in classification accuracy was less reliably associated with variability in behavioral verbal WM performance. It may be that the visual scope of attention, although involved in the maintenance of information in verbal WM, is not necessarily associated with verbal WM retrieval success. This possibility is supported by the findings of Lewis-Peacock et al. (2012), who showed that WM success did not differ for items actively held in the focus of attention or not. This suggests that recognition based on distributed neural activation patterns in sensory cortex, that is, in activated long-term memory, can be sufficient (see also Riggall and Postle 2012; Emrich et al. 2013; Rahm et al. 2014). A second possibility concerns the maintenance strategies available for each type of material. Attentional focalization and refreshing are the only way to maintain nonverbal visual items, whereas verbal items can be maintained via 2 different processes. Verbal information can be maintained either by attentional refreshing of neural representations, as for visual information (Raye et al. 2007), or through covert verbal rehearsal, a much less attention-demanding strategy. Camos et al. (2011) showed that for verbal stimuli, participants can be induced to use 1 strategy or the other. Given these 2 possible strategies for verbal WM maintenance, participants showing the strongest cross-modal classification accuracies may have relied more on the attentional strategy because their higher scope of attention capacity allowed them to do so. Those with weaker cross-modal classifications may have relied to a larger extent on the verbal rehearsal strategy for the verbal materials.

To conclude, this study provides new evidence for shared, attention-based neural substrates during retention of verbal and visual information in WM. It demonstrates that univariate neural responses associated with verbal and visual WM load overlap in the posterior parietal cortex. It further demonstrates that multivariate neural patterns in a larger part of the dorsal attention network are sufficiently similar to allow cross-modal prediction of WM load, particularly in participants with the strongest scope of attention capacity. Future studies need to investigate the functional consequences of these findings for understanding behavioral WM capacity limitations.

Funding

This work was supported by grants F.R.S.-FNRS N°1.5.056.10 (Fund for Scientific Research FNRS, Belgium) and PAI-IUAP P7/11 (Belgian Science Policy).

Notes

Conflict of Interest: None declared.

References

- Anderson DE, Vogel EK, Awh E. 2013. A common discrete resource for visual working memory and visual search. Psychol Sci. 24:929–938. [Retracted]

- Anderson JS, Ferguson MA, Lopez-Larson M, Yurgelun-Todd D. 2010. Topographic maps of multisensory attention. PNAS. 107:20110–20114.

- Andersson JL, Hutton C, Ashburner J, Turner R, Friston K. 2001. Modeling geometric deformations in EPI time series. NeuroImage. 13:903–919.

- Asplund CL, Todd JJ, Snyder AP, Marois R. 2010. A central role for the lateral prefrontal cortex in goal-directed and stimulus-driven attention. Nat Neurosci. 13:507–512.

- Baddeley AD. 1986. Working Memory. Oxford, UK: Oxford University Press.

- Beck VM, Hollingworth A, Luck SJ. 2012. Simultaneous control of attention by multiple working memory representations. Psychol Sci. 23:887–898.

- Brahmbhatt SB, McAuley T, Barch DM. 2008. Functional developmental similarities and differences in the neural correlates of verbal and nonverbal working memory tasks. Neuropsychologia. 46:1020–1031.

- Buckner RL, Andrews-Hanna JR, Schacter DL. 2008. The brain's default network: anatomy, function, and relevance to disease. The Year in Cognitive Neuroscience. Ann N Y Acad Sci. 1124:1–38.

- Burges CJC. 1998. A tutorial on support vector machines for pattern recognition. Boston: Kluwer Academic Publishers.

- Camos V, Mora G, Oberauer K. 2011. Adaptive choice between articulatory rehearsal and attentional refreshing in verbal working memory. Mem Cognit. 39:231–244.

- Chein JM, Moore AB, Conway ARA. 2011. Domain-general mechanisms of complex working memory span. NeuroImage. 54:550–559.

- Conrad R. 1964. Acoustic confusion in immediate memory. Br J Psychol. 55:75–84.

- Conrad R, Hull AJ. 1964. Information, acoustic confusion and memory span. Br J Psychol. 55:429–432.

- Corbetta M, Shulman GL. 2002. Control of goal-directed and stimulus-driven attention in the brain. Nat Rev Neurosci. 3:201–215.

- Cowan N. 1995. Attention and Memory: An Integrated Framework. New York: Oxford University Press.

- Cowan N. 2001. The magical number 4 in short-term memory: A reconsideration of mental storage capacity. Behav Brain Sci. 24:87–185.

- Cowan N, Elliott EM, Saults JS, Morey CC, Mattox S, Hismjatullina A, Conway ARA. 2005. On the capacity of attention: Its estimation and its role in working memory and cognitive aptitudes. Cogn Psychol. 51:42–100.

- Cowan N, Fristoe NM, Elliott EM, Brunner RP, Saults JS. 2006. Scope of attention, control of attention, and intelligence in children and adults. Mem Cognit. 34:1754–1768.

- Cowan N, Li D, Moffitt A, Becker TM, Martin EA, Saults JS, Christ SE. 2011. A neural region of abstract working memory. J Cogn Neurosci. 23:2852–2863.

- Cowan N, Morey CC. 2007. How can dual-task working memory retention limits be investigated? Psychol Sci. 18:686–688.

- Dumontheil I, Thompson R, Duncan J. 2011. Assembly and use of new task rules in the frontoparietal cortex. J Cogn Neurosci. 23:168–182.

- Duncan J. 2013. The structure of cognition: attentional episodes in mind and brain. Neuron. 80:35–50.

- Emrich S, Riggall AC, LaRocque JJ, Postle BR. 2013. Distributed patterns of activity in sensory cortex reflect the precision of multiple items maintained in visual short-term memory. J Neurosci. 33:6516–6523.

- Farrer C, Frith CD. 2002. Experiencing oneself versus another person as being the cause of an action: the neural correlates of the experience of agency. NeuroImage. 15:596–603.

- Fedorenko E, Duncan J, Kanwisher N. 2013. Broad domain generality in focal regions of frontal and parietal cortex. PNAS. doi:10.1073/pnas.1315235110.

- Fias W, Lammertyn J, Caessens B, Orban GA. 2007. Processing of abstract ordinal knowledge in the horizontal segment of the intraparietal sulcus. J Neurosci. 27:8592–8596.

- Fuster JM. 1999. Memory in the Cerebral Cortex: An Empirical Approach to Neural Networks in the Human and Nonhuman Primate. Cambridge, MA: MIT Press; 372 p.

- Gazzaley A, Nobre AC. 2012. Top-down modulation: bridging selective attention and working memory. Trends Cogn Sci. 16:129–135.

- Green S, Soto D. 2014. Functional connectivity between ventral and dorsal frontoparietal networks underlies stimulus-driven and working memory-driven sources of visual distraction. NeuroImage. 84:290–298.

- Hautzel H, Mottaghy FM, Schmidt D, Zemb M, Shah NJ, Müller-Gärtner H-W, Krause BJ. 2002. Topographic segregation and convergence of verbal, object, shape and spatial working memory in humans. Neurosci Lett. 323:156–160.

- Henson RNA, Burgess N, Frith CD. 2000. Recoding, storage, rehearsal and grouping in verbal short-term memory: an fMRI study. Neuropsychologia. 38:426–440.

- Hutton C, Bork A, Josephs O, Deichmann R, Ashburner J, Turner R. 2002. Image distortion correction in fMRI: a quantitative evaluation. NeuroImage. 16:217–240.

- Johnston SJ, Linden DE, Shapiro KL. 2012. Functional imaging reveals working memory and attention interact to produce the attentional blink. J Cogn Neurosci. 24:28–38.

- Kelley TA, Lavie N. 2011. Working memory load modulates distractor competition in primary visual cortex. Cereb Cortex. 21:659–665.

- Kuo BC, Stokes MG, Nobre AC. 2012. Attention modulates maintenance of representations in visual short-term memory. J Cogn Neurosci. 24:51–60.

- LaRocque JJ, Lewis-Peacock JA, Drysdale AT, Oberauer K, Postle BR. 2013. Decoding attended information in short-term memory: an EEG study. J Cogn Neurosci. 25:127–142.

- Lavie N. 2005. Distracted and confused? Selective attention under load. Trends Cogn Sci. 9:75–82.

- Lavie N, Hirst A, De Fockert JW, Viding E. 2004. Load theory of selective attention and cognitive control. J Exp Psychol Gen. 133:339–354.

- Lee W, Reeve J, Xue Y, Xiong J. 2012. Neural differences between intrinsic reasons for doing versus extrinsic reasons for doing: an fMRI study. Neurosci Res. 73:68–72.

- Lewis-Peacock JA, Drysdale AT, Oberauer K, Postle BR. 2012. Neural evidence for a distinction between short-term memory and the focus of attention. J Cogn Neurosci. 24:61–79.

- Lewis-Peacock JA, Postle BR. 2012. Decoding the internal focus of attention. Neuropsychologia. 50:470–478.

- Logie RH, Della Sala S, Wynn V, Baddeley A. 2000. Visual similarity effects in immediate verbal serial recall. Q J Exp Psychol. 53A:626–646.