Abstract

Background

Although the efficacy of computerized clinical decision support (CCDS) for acute kidney injury (AKI) remains unclear, the wider literature includes examples of limited acceptability and equivocal benefit. Our single-centre study aimed to identify factors promoting or inhibiting use of in-patient AKI CCDS.

Methods

Targeting medical users, the CCDS was triggered by a serum creatinine rise of ≥25 μmol/L/day and linked to guidance and test ordering. User experience was evaluated through retrospective interviews, conducted and analysed according to Normalization Process Theory. Initial experience on pilot wards allowed the tool to be refined. Assessments continued after CCDS activation across all adult, non-critical care wards.

Results

Thematic saturation was achieved with 24 interviews. Many trainees accepted the alert as a potentially useful prompt to early clinical reassessment. Senior staff were more sceptical, tending to view it as a hindrance. ‘Pop-ups’ and mandated engagement before alert dismissal were universally unpopular because they disrupted workflow. Users were driven to close the alert as soon as possible so that they could review historical creatinine results and continue with their intended workflow.

Conclusions

Our study revealed themes similar to those previously described in non-AKI settings. Systems intruding on workflow, particularly involving complex interactions, may be unsustainable even if there has been a positive impact on care. The optimal balance between intrusion and clinical benefit of AKI CCDS requires further evaluation.

Keywords: acute kidney injury, clinical decision support systems

Introduction

Acute kidney injury (AKI) is common [1], dangerous [2–5] and costly [1]. Unfortunately, AKI care is also often poor [6, 7]. Education must play a role in addressing this, but our previous work has shown that knowledge gaps are still evident even when AKI teaching is established [8]. The heterogeneity and complexity of AKI may compound difficulties in establishing good practice [9, 10].

Computerized clinical decision support (CCDS) has been promoted as a solution to these problems [11]. Unfortunately, there is a paucity of evidence of efficacy, and the wider literature has demonstrated problems with implementation and integration of other CCDS systems [12–14], with previous initiatives failing due to lack of user engagement in development and implementation [13].

There is a pressing need to evaluate not just quantitative outcomes but also user acceptability and its place within clinical workflows. Our study sought to identify those factors promoting or inhibiting use of an in-patient AKI CCDS system through end-user interviews and quantitative evaluation of their interaction with it. This study was part of a broader, mixed-methods evaluation that also included assessments of impact on practice and outcomes.

Materials and methods

AKI CCDS context and functionality

The study was conducted within our 1800-bed, multiple-site hospital. The renal unit, based at one of two acute sites, provides consultative input for AKI patients across the region.

In-patient records comprised written bedside documentation and an electronic patient record (EPR) for medicines management and test ordering and resulting (Cerner Millennium, Cerner Corporation, USA). The EPR already generated alerts interruptive of workflow (e.g. drug review prompts) and non-intrusive, background flags (e.g. noting previous Clostridium difficile infection).

An AKI CCDS was developed within the EPR by two co-authors (A.H., N.S.K.) to highlight in-patient AKI and link to guidance. An intrusive alert was triggered when the individual patient's EPR was first accessed by medical staff after a serum creatinine (Cr) rise of ≥25 μmol/L/day. Correcting to a daily rate of rise allowed for instances when Cr testing was less frequent. Setting the trigger above diagnostic thresholds [9] was intended to minimize alert fatigue and pre-dated a national mandate [11]. In this exploratory study, only one AKI episode was triggered per admission, although the alert itself might be presented multiple times depending on user interaction with it.
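The trigger rule above can be sketched as follows. This is a minimal illustration, not the EPR implementation: it assumes the daily rate is simply the Cr difference between a reference result and the current result divided by the elapsed time in days, and the names `CrResult`, `daily_rate_of_rise` and `should_trigger` are hypothetical. The text does not state how the reference result was chosen.

```python
from dataclasses import dataclass
from datetime import datetime

# Threshold stated in the text: a serum Cr rise of >= 25 umol/L/day.
THRESHOLD_UMOL_L_PER_DAY = 25.0


@dataclass
class CrResult:
    """Hypothetical container for one serum creatinine result."""
    taken_at: datetime
    value_umol_l: float


def daily_rate_of_rise(reference: CrResult, current: CrResult) -> float:
    """Cr change between two results, expressed in umol/L per day.

    Assumption: simple difference divided by elapsed days; how the real
    tool selected its reference result is not described.
    """
    elapsed_days = (current.taken_at - reference.taken_at).total_seconds() / 86400
    if elapsed_days <= 0:
        raise ValueError("current result must be later than the reference result")
    return (current.value_umol_l - reference.value_umol_l) / elapsed_days


def should_trigger(reference: CrResult, current: CrResult) -> bool:
    """True if the normalized rise meets the alert threshold."""
    return daily_rate_of_rise(reference, current) >= THRESHOLD_UMOL_L_PER_DAY


if __name__ == "__main__":
    ref = CrResult(datetime(2014, 4, 8, 6, 0), 80.0)
    # 60 umol/L over 2 days = 30 umol/L/day, so this alerts.
    print(should_trigger(ref, CrResult(datetime(2014, 4, 10, 6, 0), 140.0)))
    # 40 umol/L over 2 days = 20 umol/L/day, so this does not.
    print(should_trigger(ref, CrResult(datetime(2014, 4, 10, 6, 0), 120.0)))
```

Dividing by elapsed time also means that a small rise between closely spaced samples (e.g. 15 μmol/L over 12 h) crosses the threshold. If the tool normalized in this way, that would be consistent with the later need to emphasize clinical judgement about minor rises in very frequent Crs.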

The initial alert box highlighted possible AKI, noted the availability of clinical decision support (CDS) and hyperlinked to the local AKI protocol. A mandatory interaction then opened a menu window that required users to choose:

(i) to bypass the alert (if the EPR was accessed in error or by a clinician outside the home team),

(ii) an exclusion (if receiving dialysis or end-of-life care), or

(iii) ‘None of the above’.

The alert could then be signed off, although optional links to the local protocol and to easy-reference lists of essential assessments and investigations were available. The latter allowed quick ordering of test panels (e.g. essential bloods, urgent renal ultrasound). Closing out from the initial alert required at least four clicks. Option (i) made the alert dormant until the EPR was next accessed; the others deactivated it for the remainder of the admission. All choices left a visible log on the biochemistry flowchart.
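The effect of each menu choice on whether the alert reappears can be summarized as a small state model. The class and method names below are hypothetical, and the model deliberately ignores the guidance links, test panels and later Phase 2 changes. It encodes only what the paragraph states: option (i) leaves the alert to reappear at the next EPR access, options (ii) and (iii) silence it for the admission, and every choice is logged.

```python
from enum import Enum


class Choice(Enum):
    """The three menu-window options described in the text."""
    BYPASS = "i"           # EPR accessed in error or by a non-home-team clinician
    EXCLUSION = "ii"       # e.g. receiving dialysis or end-of-life care
    NONE_OF_ABOVE = "iii"  # acknowledged; no exclusion applies


class AkiAlertForm:
    """Hypothetical per-admission alert state (illustrative only)."""

    def __init__(self) -> None:
        self.deactivated = False
        # Stands in for the visible entry on the biochemistry flowchart.
        self.log: list[Choice] = []

    def shows_on_epr_access(self) -> bool:
        # A bypassed alert is only dormant, so it reappears on the next access.
        return not self.deactivated

    def complete(self, choice: Choice) -> None:
        self.log.append(choice)  # every choice leaves a log entry
        if choice is not Choice.BYPASS:
            # Options (ii) and (iii) silence the alert for the rest of the admission.
            self.deactivated = True
```

Under this model, a patient whose alert is repeatedly bypassed accumulates several completed forms, which may help explain the multiple bypasses per patient reported in Table 3.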

The following were excluded:

The renal unit (due to erroneous alerts with dialysis),

Critical care, coronary care and transplantation areas (due to dialysis and the local spectrum of AKI),

Patients <18 years old.

Triggers were suppressed for patients who had died or been discharged between the trigger Cr and first EPR access.
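The exclusions listed above can be expressed as a single eligibility check. This is a sketch under stated assumptions: the ward-type labels, the `Admission` fields and the function name are hypothetical, and real exclusions would have been keyed to EPR location codes that are not described here.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import Optional

# Hypothetical ward-type labels for the excluded areas listed in the text.
EXCLUDED_WARD_TYPES = {"renal", "critical_care", "coronary_care", "transplantation"}


@dataclass
class Admission:
    """Hypothetical admission record (illustrative fields only)."""
    age_years: int
    ward_type: str
    already_triggered: bool                      # only one AKI episode per admission
    left_hospital_at: Optional[datetime] = None  # death or discharge time, if any


def eligible_for_trigger(adm: Admission, trigger_cr_at: datetime,
                         first_access_at: datetime) -> bool:
    """Apply the exclusions described in the text (sketch, not the EPR rule)."""
    if adm.age_years < 18:
        return False
    if adm.ward_type in EXCLUDED_WARD_TYPES:
        return False
    if adm.already_triggered:
        return False
    # Suppress if the patient died or was discharged between the trigger Cr
    # and the first EPR access.
    if (adm.left_hospital_at is not None
            and trigger_cr_at <= adm.left_hospital_at <= first_access_at):
        return False
    return True
```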

Implementation

Our study comprised three phases:

Phase 1 (16 weeks): rolling implementation of the CCDS system across three pilot wards (Elderly Medicine, General Surgery, Internal Medicine) with usual practice continuing across the rest of the organization,

A planned 8-week hiatus to allow CCDS refinement,

Phase 2 (16 weeks): activation of the revised CCDS across all 64 target wards,

Further CCDS refinement, if required,

Phase 3 (4 weeks): to assess the impact of the revised tool across all 64 target wards.

Publicity was undertaken through:

On-site distribution of help sheets to Phase 1 wards,

E-mail cascades, including the revised help sheet, 6 weeks prior to Phase 2,

Incorporation within the standard training delivered to newly qualified doctors starting within the organization, 4 days prior to Phase 3.

Study timelines were as follows:

Phase 1: 12 August–2 December 2013,

Phase 2: 8 April–29 July 2014,

Phase 3: 8 August–5 September 2014.

The longer-than-anticipated hiatus before Phase 2 (about 18 weeks rather than the planned 8) was required to reconfigure the tool following feedback and to refine the diagnostic algorithms.
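As a quick arithmetic check of the timelines above, the following sketch computes each phase length in weeks (end date minus start date) and the gap between the end of Phase 1 and the start of Phase 2. It uses only the dates given in the text; the helper name `weeks_between` is illustrative.

```python
from datetime import date

# Phase start and end dates as given in the text.
PHASES = {
    "Phase 1": (date(2013, 8, 12), date(2013, 12, 2)),
    "Phase 2": (date(2014, 4, 8), date(2014, 7, 29)),
    "Phase 3": (date(2014, 8, 8), date(2014, 9, 5)),
}


def weeks_between(start: date, end: date) -> float:
    """Elapsed weeks from start to end (end date not counted as an extra day)."""
    return (end - start).days / 7


for name, (start, end) in PHASES.items():
    print(f"{name}: {weeks_between(start, end):.0f} weeks")

hiatus = weeks_between(PHASES["Phase 1"][1], PHASES["Phase 2"][0])
print(f"Hiatus before Phase 2: {hiatus:.1f} weeks (planned: 8)")
# Phase 1: 16 weeks
# Phase 2: 16 weeks
# Phase 3: 4 weeks
# Hiatus before Phase 2: 18.1 weeks (planned: 8)
```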

Qualitative evaluation

Procedures followed were in accordance with the ethical standards of the Local Research Ethics Committee (Ref. 12/NE/0278) and with the Helsinki Declaration of 1975, as revised in 2000.

Recruits were purposively sampled from those who had had experience of the alert. After informed consent, face-to-face interviews of ∼60 min duration were conducted on hospital premises by one of the co-authors, M.T.B. (an experienced qualitative researcher), adopting an open-ended approach (i.e. avoiding leading questions, allowing the informant to take the lead and probing emerging themes in depth). Interviews were audio-recorded, transcribed using QSR NVivo qualitative data analysis software (QSR International, Victoria, Australia) and analysed for identifiable themes according to the standard procedures of rigorous qualitative analysis (inductive coding, constant comparison, deviant case analysis) [15]. Recruitment continued in each phase until thematic saturation had occurred (i.e. when no new themes were emerging at successive interviews). The interviews were conducted and findings categorized within the framework of Normalization Process Theory (NPT) [16, 17]. NPT proposes that complex interventions only become integrated into existing practice through individual and collective implementation. This requires ongoing investment by individuals and groups to ensure continued use, and occurs via four mechanisms:

Coherence: how people make sense of what needs to be done.

Cognitive participation: how relationships with others influence outcomes.

Collective action: how people work together to make practices work.

Reflexive monitoring: how people assess the impact of the new intervention.

Each interview theme was therefore assigned to one of these four NPT mechanisms with participant statements extracted to highlight issues of significance for both end users and developers.

Phase 3 interviewees were limited to doctors who had no prior experience of the AKI CCDS. Inconvenience payments were offered based on hourly locum rates.

Quantitative evaluation of end-user interactions

Three patient cohorts (n = 280 each) were identified for a separate evaluation of outcomes and practice. These comprised consecutively triggering patients from: (i) immediate go-live, Phase 2; (ii) 12 weeks post-go-live, Phase 2; and (iii) immediate go-live, Phase 3. Alert bypass rates were compared across patient cohorts in R using a two-sample test for equality of proportions, with a significance level of 5%. The use of exclusions and test panel ordering were recorded.
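For readers wishing to reproduce the comparison, the sketch below implements a pooled two-sample test for equality of proportions and applies it to the form-level Phase 2 bypass counts reported in Table 3. It is not the authors' R code: we assume the default continuity correction of R's `prop.test()` was applied, and the function name `two_proportion_test` is hypothetical.

```python
import math


def two_proportion_test(x1: int, n1: int, x2: int, n2: int, correct: bool = True):
    """Two-sided test for equality of two proportions (pooled normal approximation).

    With correct=True, a continuity correction of 0.5 * (1/n1 + 1/n2) is
    subtracted from the absolute difference; this is our understanding of
    the default behaviour of R's prop.test() for two groups.
    """
    p1, p2 = x1 / n1, x2 / n2
    pooled = (x1 + x2) / (n1 + n2)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n1 + 1 / n2))
    diff = abs(p1 - p2)
    if correct:
        diff = max(0.0, diff - 0.5 * (1 / n1 + 1 / n2))
    z = diff / se
    p_value = math.erfc(z / math.sqrt(2))  # two-sided tail of the standard normal
    return p1, p2, p_value


# Bypass counts from Table 3: immediate go-live vs 12 weeks post-go-live, Phase 2.
p1, p2, p = two_proportion_test(788, 998, 918, 1101)
print(f"{p1:.0%} vs {p2:.0%}, P = {p:.2f}")  # prints: 79% vs 83%, P = 0.01
```

Without the continuity correction (`correct=False`) the P value is slightly smaller (about 0.0095), which also rounds to the reported P = 0.01.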

Results

Pre-Phase 2 CCDS changes

The alert text was reconfigured to include the trigger Cr and up to two preceding results, depending on which was recognized as the reference value. The need to exercise clinical judgement about minor rises in very frequent Crs was emphasized. Guidance links were given greater prominence within the menu window, which now also included a free-text option for exclusions and a link to the drug chart, interaction with which was made mandatory.

Pre-Phase 3 CCDS changes

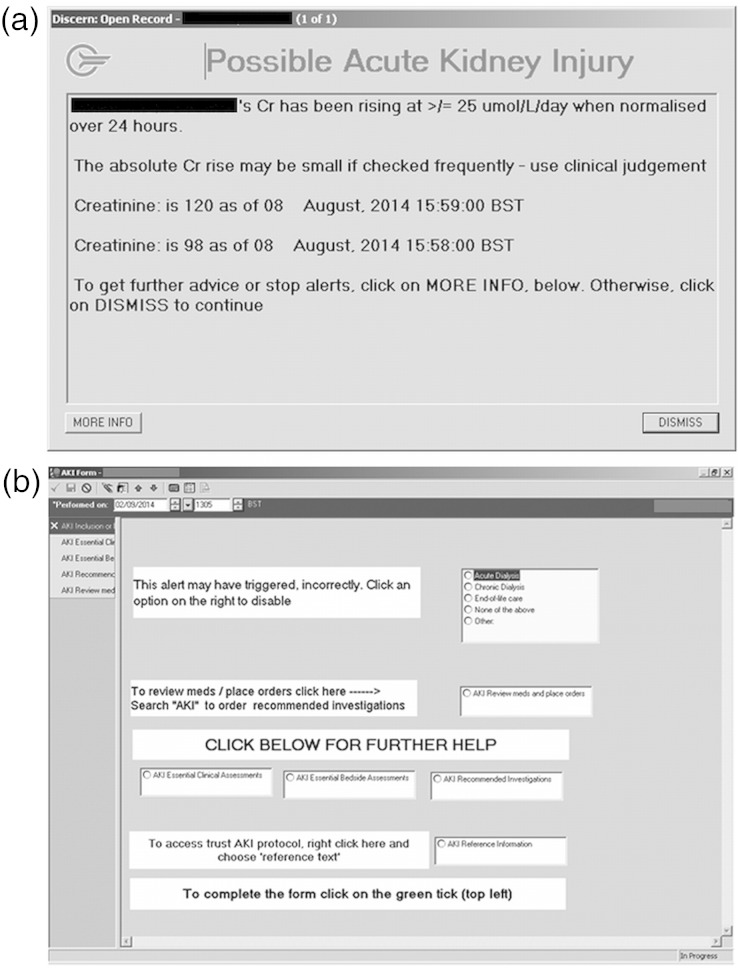

Following Phase 2 feedback, alert interaction was made non-mandatory. Alert appearance was limited to five per admission, with no repeat alert to any individual user within 4 h. The alert text was further simplified (Figure 1a; Figure 1b shows the subsequent menu window).

Fig. 1.

(a) Final, Phase 3 alert text. (b) Subsequent menu window.
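The Phase 3 display limits (at most five presentations per admission, and no repeat to the same user within 4 h) can be sketched as a per-admission throttle. The class name and user identifiers are hypothetical, and we assume the 4 h window is measured from the last presentation to that user, with a presentation at exactly 4 h allowed.

```python
from datetime import datetime, timedelta
from typing import Dict

MAX_ALERTS_PER_ADMISSION = 5
PER_USER_QUIET_PERIOD = timedelta(hours=4)


class AlertThrottle:
    """Hypothetical per-admission limiter for the Phase 3 display rules."""

    def __init__(self) -> None:
        self.shown = 0
        self.last_shown_to: Dict[str, datetime] = {}

    def should_show(self, user_id: str, now: datetime) -> bool:
        if self.shown >= MAX_ALERTS_PER_ADMISSION:
            return False  # admission-wide cap reached
        last = self.last_shown_to.get(user_id)
        if last is not None and now - last < PER_USER_QUIET_PERIOD:
            return False  # same user saw it less than 4 h ago (assumed strict)
        self.shown += 1
        self.last_shown_to[user_id] = now
        return True
```

Keeping one throttle per admission means a second clinician opening the record during the 4 h window still sees the alert, while the admission-wide cap bounds the total number of interruptions.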

Qualitative interview findings

Thematic saturation was achieved with 24 interviews (Table 1).

Table 1.

Numbers interviewed, broken down by study phase, specialism and gradeᵃ

| | Phase 1 | Phase 2 | Phase 3 | Total |
|---|---|---|---|---|
| Medicine | | | | |
| Foundation traineeᵇ | 2 | 1 | 3 | 6 |
| Speciality traineeᶜ | 0 | 1 | 0 | 1 |
| Consultantᵈ | 0 | 4 | 0 | 4 |
| Surgery | | | | |
| Foundation trainee | 4 | 1 | 0 | 5 |
| Speciality trainee | 0 | 1 | 1 | 2 |
| Consultant | 0 | 0 | 0 | 0 |
| Care of the elderly | | | | |
| Foundation trainee | 4 | 2 | 0 | 6 |
| Speciality trainee | 0 | 0 | 0 | 0 |
| Consultant | 0 | 0 | 0 | 0 |
| Total | 10 | 10 | 4 | 24 |

ᵃNo participant was interviewed more than once. Forty-six staff were approached about participation. The most common reason for refusal was lack of time.

ᵇTrainees within 2 years of graduation.

ᶜSenior trainees.

ᵈSenior clinicians, equivalent to attending/staff clinicians.

Trainees, in particular, found a coherent link between the alert and patient care, investing it with greater importance than other alerts due to its perceived relevance. Because of this, they were more likely to undertake earlier patient reassessment. A typical response is shown in Table 2 (Quote 3.1.1—Phase 3, Respondent 1, Quote 1).

Table 2.

Interviewee quotes

| Quote | Statement |
|---|---|
| 3.1.1 | “It is quick and it is simple, it highlights something you should be looking at anyway but it is, you know, always nice to safety net yourself and make sure you definitely review even if it is something you are aware of at the time.” |
| 2.3.1 | “I couldn't bypass it and I haven't had any training or been told about this form. There was no way I could bypass the form. I couldn't. I was so irritated by it I tried to bypass it and proceed and don't have the answers to subsequent questions … I closed the whole system and went on to another system and then I asked the juniors what they had been doing.” |
| 2.6.1 | “I think it's not that you don't trust it, it's just it's difficult to see the severity of it without seeing the blood results. The creatinine may have gone up by thirty or by one hundred and you still get the same warning. Obviously one needs a lot faster action than the other one really … so I think that is why I want to see the blood results.” |
| 2.6.2 | “So it pops up I look down the patient list see kind of how long ago their operation was and what they've come in with, and then I go through and write down a list of the bloods that we haven't just done on the patient, then I click through the blood results and I like to look through their notes to see if they have changed anything, any medications and then I go and see them and look at the urine output. That is how it usually goes, then I send off the bloods or order the ultrasound.” |
| 1.7.1 | “I didn't really go [to see the patient]. I just looked back to the last week and her U&Es were normal so I just ignored it [laughs]. I don't know if it was an earlier warning that hadn't been properly dealt with but it kept flagging up, but, yeah and in that instance there wasn't an AKI.” |
| 3.2.1 | “To be completely honest it looks just like all the pop-ups we get. A lot of them are about medications we prescribed and they have been one off doses and they have stayed on the system and you deleted them. So … and I have to admit some of them I have gotten used to just clicking dismiss and carrying on … but I have noticed when I've had this one and I have gone and actually looked at their bloods.” |
| 3.2.2 | “I thought it was great … it just means you definitely look at it twice and make sure it wasn't something you needed to do intervention wise.” |

Actions prompted by the alert were not always explicitly documented as such, because they were perceived as routine medical activities.

Mandated interaction clearly irritated users, with the strongest emotional response coming from senior staff. Unlike trainees, who worked together to navigate the CCDS, senior staff struggled to use it. Besides requiring ‘too many clicks’, it was cited as an insult to their knowledge, and suspicion was expressed of a clandestine audit process (Table 2, Quote 2.3.1).

This response illustrated the impact on workflow of alerts that could not be immediately dismissed or minimized to allow the primary activity to continue. Because they used the system more frequently, trainees often found ways to bypass the alert. However, bypassing did not prevent the alert from appearing again; it simply hid it until the next time the patient's chart was accessed. Workflow interruption was the dominant reason for disliking the alert, but this also reflected interviewees' antipathy to EPR pop-ups in general.

A major driver for alert dismissal was users' need to view historical biochemistry to inform clinical decision-making and prioritization. This came at the expense of engagement with guidance and test ordering. Even when Cr data were introduced into the Phase 2 alert, users still referred back to more comprehensive data to assess alert credibility. Technical limitations prevented the presentation of detailed Cr data within the alert box (Table 2, Quote 2.6.1).

In the main, the alert was seen as credible and enhanced cognitive participation (Table 2, Quote 2.6.2). Its credibility was sometimes strained, though, when it was felt to be too sensitive. Some interviewees were evidently unaware that the diagnostic thresholds for AKI are lower still than the alert trigger, but interviewees also identified instances when an alert appeared not to be associated with an elevation in serum Cr (Table 2, Quote 1.7.1). This may have been explained by unaccounted-for anomalies in the alert (e.g. patient transfer from a non-alerting venue to a study ward) or by a recurring alert that had just been bypassed and ignored by others.

Participants openly expressed concern about alert fatigue (Table 2, Quote 3.2.1). The only new interview finding in Phase 3 was that the revised alert proved to be more acceptable because of increased ease of dismissal (Table 2, Quote 3.2.2).

Finally, it was evident that at least some users were unaware of CDS functionality downstream of the initial alert (e.g. test ordering, best practice checklists); when this was pointed out to them, they felt that it was potentially useful.

Quantitative evaluation of end-user interactions

Bypass rates were high and increased as Phase 2 progressed (Table 3). Free-text exclusion comments often queried the trigger threshold (e.g. ‘no rise’), suggested prior knowledge of AKI (e.g. ‘known acute renal failure post CABG’) or offered an alternative explanation (e.g. ‘previous renal transplant’, ‘pregnancy/postnatal’). Test panel ordering was minimal in all three patient cohorts: (i) immediate go-live, Phase 2 (5.4%); (ii) 12 weeks post-go-live, Phase 2 (7.5%); and (iii) immediate go-live, Phase 3 (1.1%).

Table 3.

End-user use of bypass function across study phases

| | Immediate go-live, Phase 2 | 12 weeks post-go-live, Phase 2 |
|---|---|---|
| Total number of alert forms completed | 998 | 1101 |
| Total number of bypasses | 788 | 918 |
| Alert form bypass rate (%) | 79* | 83* |
| Median number of bypasses per patient | 3 | 3 |
| Median number of bypasses per doctor (range) | 2 (1–22) | 2 (1–20) |

*P = 0.01.

Each alert form could be completed (i.e. closed out) by bypassing, recording an exclusion or recording an acknowledgment of the form (by clicking option (iii): ‘None of the above’—see the text for details).

In Phase 3, immediate alert dismissal avoided the need for subsequent interaction with the CCDS (the AKI form was completed in only 3/280 alerting patients).

Discussion

CCDS for AKI has been widely promoted [11, 18]. Although a uniform detection algorithm is now mandated across England and Wales [11], the end-user interface may still vary, for instance, in terms of alert intrusion, its mode of delivery and mandation of interaction. Even a single, uniform interface may not suit all circumstances. There is, therefore, a pressing and well-recognized [1, 18] need for evaluation of implementation, function and impact on practice. The evidence, however, remains limited and conflicting.

One prospective, single-centre, intensive care study found that interruptive text alerts led to more timely intervention and more rapid resolution of AKI [19]. However, over 90% of alerts were generated by changes in urine output alone, suggesting that the intervention was addressing an early, pre-renal response. Moreover, mortality and the need for renal support were unaffected. Another single-centre, parallel-group study [20] randomized patients with serum Cr-based AKI to usual care or an interruptive text alert to their clinician and found no difference in outcome, but some evidence of increased resource utilization. Neither study explored end-user acceptability or the social context of their alerts.

Even when beneficial [21–23], other CCDS systems may have negative impacts, including delayed treatment [14]. The limited evidence of patient benefit was illustrated by one review [24], which found that only 57% of studies affected user behaviour and only 30% were able to demonstrate an effect on patient outcomes. The negative impact of top-down solutions, without ‘end-user pull’ in tailoring CCDS, has been emphasized [13, 25], and specific points of end-user dissatisfaction include alert fatigue [12, 26–29], mandated responses (which are frequently bypassed if workflows are disrupted [30]) and the complexity of clinical decision-making: far from facilitating patient management, CCDS may complicate it by forcing users to interpret the solution they have been presented with [13]. Without understanding how technologies, people and organizations dynamically interact [31, 32] and the importance of pre-existing attitudes [33], it will remain unclear how to tailor and integrate more complex CCDS (such as for AKI) within existing practice.

We believe our study is the first to undertake qualitative evaluation of an AKI CCDS system and has revealed precisely those themes warned of in the generic literature—alert fatigue, user dissatisfaction and antipathy to mandatory interactions and intrusion.

Development of our system included extensive pilot work, but the broader picture of discontent was only revealed later. Additionally, our preconceived belief in the advantages of a visible audit trail within the biochemistry flowsheet, and of recording exclusions to block further alerts, was undermined by users' antipathy to the mandated interaction that these apparent benefits required. Although we were mindful of the risks of top-down solutions, our study has revealed the need for an end-user-responsive process that, we feel, must extend well beyond initial scoping and implementation.

We found limited engagement with CDS functionality downstream of the original alert (e.g. test ordering). Lack of awareness was evident, but we speculate that the drive for rapid alert dismissal was an important explanatory factor: when dismissal was made easier in Phase 3, test panel ordering and the rate of form completion fell sharply.

An impression of over-sensitive alerting undermined the tool's credibility for some users. Although this may have reflected poor awareness of current diagnostic thresholds, many patients may never reach a Cr higher than the one that initially triggered even a Stage 1 AKI alert [20]. If alerts are generated only when AKI is already resolving, one can speculate about the negative impact on even initially positive end users.

Our study has a number of caveats.

Firstly, our tool was developed in-house and required experiential learning to meet some user demands. Although this was not an explicit interview finding, the resulting delays may have affected the tool's credibility. Secondly, the pre-Phase 2 educational roll-out proved to have limited reach, requiring a supplementary cascade after 10 weeks; this, we feel, probably reflected a shortfall in the educational resources needed to inform a large, multiple-site organization. Thirdly, our CCDS was not intended as an exhaustive surveillance tool. Missed episodes of AKI (due to the higher-than-diagnostic trigger threshold or because an episode was a repeat within the same admission) might have affected credibility, although this was not apparent in interviews. Fourthly, users were interviewed only once, so temporal changes in experience may have been missed. Finally, we acknowledge that user culture in Phase 2 might have been affected by discussion with pilot ward participants, although we feel this would have been difficult to avoid in a single-centre study.

We believe that our study offers important lessons about the implementation, integration and acceptability of AKI CCDS. The tool's main strength was its congruence with medical practice, appearing to prompt earlier patient reassessment at least amongst some trainees. Our study has, however, also revealed those themes that have affected other CCDS implementations. Systems intruding on workflow, particularly involving complex or mandatory interactions, may not be sustainable even if there has been a positive impact on care. Although conducted in a single institution within the National Health Service of England and Wales, we believe that our study has relevance to other healthcare communities, all of whom will face common challenges of clinical prioritization, efficiency of workflow and effective team-working, even if the solutions to these may differ. Further evaluation is needed of the optimal balance between intrusion and clinical benefit, as well as of the applicability of our findings across different information technology capabilities and clinical settings.

Conflict of interest statement

None declared. This work was presented in abstract form at the joint meeting of the ERA-EDTA and UK Renal Association, London, May 2015.

Acknowledgements

We are extremely grateful to study participants for the generous use of their time and to the Northern Counties Kidney Research Fund, NHS Kidney Care, the Newcastle Health Care Charity and the Newcastle upon Tyne Hospitals NHS Charity for funding of our work. We acknowledge the support of the National Institute for Health Research, through the Local Clinical Research Network.

References

1. NICE. Acute kidney injury: prevention, detection and management of acute kidney injury up to the point of renal replacement therapy. NICE clinical guideline 169: National Institute for Health and Care Excellence, 2013

2. Lassnigg A, Schmidlin D, Mouhieddine M, et al. Minimal changes of serum creatinine predict prognosis in patients after cardiothoracic surgery: a prospective cohort study. J Am Soc Nephrol 2004; 15: 1597–1605

3. Uchino S, Bellomo R, Goldsmith D, et al. An assessment of the RIFLE criteria for acute renal failure in hospitalized patients. Crit Care Med 2006; 34: 1913–1917

4. Kuitunen A, Vento A, Suojaranta-Ylinen R, et al. Acute renal failure after cardiac surgery: evaluation of the RIFLE classification. Ann Thorac Surg 2006; 81: 542–546

5. Ahlstrom A, Kuitunen A, Peltonen S, et al. Comparison of 2 acute renal failure severity scores to general scoring systems in the critically ill. Am J Kidney Dis 2006; 48: 262–268

6. Stewart J, Findlay G, Smith N, et al. Adding Insult to Injury: A Review of the Care of Patients Who Died in Hospital with a Primary Diagnosis of Acute Kidney Injury (Acute Renal Failure). London: National Confidential Enquiry into Patient Outcome and Death, 2009

7. Stevens PE, Tamimi NA, Al-Hasani MK, et al. Non-specialist management of acute renal failure. QJM 2001; 94: 533–540

8. Muniraju TM, Lillicrap MH, Horrocks JL, et al. Diagnosis and management of acute kidney injury: deficiencies in the knowledge base of non-specialist, trainee medical staff. Clin Med 2012; 12: 216–221

9. Lewington A, Kanagasundaram S. Renal Association Clinical Practice Guidelines on acute kidney injury. Nephron Clin Pract 2011; 118 (Suppl. 1): c349–c390

10. KDIGO. KDIGO Clinical Practice Guideline for Acute Kidney Injury. Kidney Int Suppl 2012; 2: 1–138

11. Patient safety alert on standardising the early identification of Acute Kidney Injury. https://www.england.nhs.uk/2014/06/09/psa-aki/ (24 April 2015, date last accessed)

12. Gurwitz JH, Field TS, Rochon P, et al. Effect of computerized provider order entry with clinical decision support on adverse drug events in the long-term care setting. J Am Geriatr Soc 2008; 56: 2225–2233

13. Rousseau N, McColl E, Newton J, et al. Practice based, longitudinal, qualitative interview study of computerised evidence based guidelines in primary care. BMJ 2003; 326: 314–318

14. Strom BL, Schinnar R, Aberra F, et al. Unintended effects of a computerized physician order entry nearly hard-stop alert to prevent a drug interaction: a randomized controlled trial. Arch Intern Med 2010; 170: 1578–1583

15. Strauss AL. Qualitative Analysis for Social Scientists. Cambridge: Cambridge University Press, 1987

16. May C, Finch T. Implementation, embedding, and integration: an outline of Normalization Process Theory. Sociology 2009; 43: 535–554

17. May CR, Mair F, Finch T, et al. Development of a theory of implementation and integration: Normalization Process Theory. Implementation Sci 2009; 4: 29

18. Feehally J, Gilmore I, Barasi S, et al. RCPE UK consensus conference statement: management of acute kidney injury: the role of fluids, e-alerts and biomarkers. J R Coll Physicians Edinb 2013; 43: 37–38

19. Colpaert K, Hoste EA, Steurbaut K, et al. Impact of real-time electronic alerting of acute kidney injury on therapeutic intervention and progression of RIFLE class. Crit Care Med 2012; 40: 1164–1170

20. Wilson FP, Shashaty M, Testani J, et al. Automated, electronic alerts for acute kidney injury: a single-blind, parallel-group, randomised controlled trial. Lancet 2015; 385: 1966–1974

21. Kucher N, Koo S, Quiroz R, et al. Electronic alerts to prevent venous thromboembolism among hospitalized patients. N Engl J Med 2005; 352: 969–977

22. Finlay-Morreale HE, Louie C, Toy P. Computer-generated automatic alerts of respiratory distress after blood transfusion. J Am Med Inform Assoc 2008; 15: 383–385

23. Dexter PR, Perkins S, Overhage JM, et al. A computerized reminder system to increase the use of preventive care for hospitalized patients. N Engl J Med 2001; 345: 965–970

24. Jaspers MW, Smeulers M, Vermeulen H, et al. Effects of clinical decision-support systems on practitioner performance and patient outcomes: a synthesis of high-quality systematic review findings. J Am Med Inform Assoc 2011; 18: 327–334

25. Cresswell K, Majeed A, Bates DW, et al. Computerised decision support systems for healthcare professionals: an interpretative review. Inform Prim Care 2012; 20: 115–128

26. Kesselheim AS, Cresswell K, Phansalkar S, et al. Clinical decision support systems could be modified to reduce ‘alert fatigue’ while still minimizing the risk of litigation. Health Aff (Millwood) 2011; 30: 2310–2317

27. Embi PJ, Leonard AC. Evaluating alert fatigue over time to EHR-based clinical trial alerts: findings from a randomized controlled study. J Am Med Inform Assoc 2012; 19 (e1): e145–e148

28. Lee EK, Mejia AF, Senior T, et al. Improving patient safety through medical alert management: an automated decision tool to reduce alert fatigue. AMIA Annu Symp Proc 2010; 2010: 417–421

29. Bates DW, Kuperman GJ, Wang S, et al. Ten commandments for effective clinical decision support: making the practice of evidence-based medicine a reality. J Am Med Inform Assoc 2003; 10: 523–530

30. Litzelman DK, Tierney WM. Physicians’ reasons for failing to comply with computerized preventive care guidelines. J Gen Intern Med 1996; 11: 497–499

31. Kaplan B. Evaluating informatics applications—some alternative approaches: theory, social interactionism, and call for methodological pluralism. Int J Med Inform 2001; 64: 39–56

32. Wears RL, Berg M. Computer technology and clinical work: still waiting for Godot. JAMA 2005; 293: 1261–1263

33. Kitson A, Harvey G, McCormack B. Enabling the implementation of evidence based practice: a conceptual framework. Qual Health Care 1998; 7: 149–158