Abstract

Background

Human decision making involves the deliberate formulation of hypotheses and plans as well as the use of subconscious means of judging probability, likely outcome, and proper action.

Rationale

There is a growing recognition that intuitive strategies such as use of heuristics and pattern recognition described in other industries are applicable to high-acuity environments in medicine. Despite the applicability of theories of cognition to the intensive care unit, a discussion of decision-making strategies is currently absent in the critical care literature.

Content

This article provides an overview of known cognitive strategies, as well as a synthesis of their use in critical care. By understanding the ways by which humans formulate diagnoses and make critical decisions, we may be able to minimize errors in our own judgments as well as build training activities around known strengths and limitations of cognition.

Keywords: Critical care, Medical decision making, Heuristics, Intuition, Diagnosis

Critical care practitioners need to make accurate and appropriate decisions in a timely manner in situations where there is a high degree of stress and uncertainty and when patients possess little physiologic reserve. Diagnostic decisions in acute care disciplines often commit patients to therapeutic strategies that can be harmful if not correct. Diagnoses and related decisions surround our practice at every moment; however, the schemes our minds use to make these judgments are probably the aspect of medical care that we understand the least. Diagnostic reasoning involves the use of both conscious and subconscious processes, the blend of which depends on the experience of the clinician and the familiarity of the situation. Factors such as overconfidence, fatigue, and time pressure can create an overreliance on intuition when there may be insufficient expertise to justify its use.1,2 Patient harm can result from both errors of commission (for example, wrong diagnosis made, improper therapy given) and omission (not fully appreciating the extent of a patient’s illness and failing to act quickly). Thus, diagnostic reasoning involves both the making of formal diagnoses and the formulation of general impressions (rapid deterioration, poor respiratory reserve, etc.) that form the basis of action. This paper will review some of the current concepts of reasoning and their applicability to critical care. With a better understanding of cognitive processes, we can take further steps by designing better error prevention strategies and assessing them experimentally.

Diagnostic Error in Medicine and Critical Care

Is diagnostic reasoning a patient safety problem in critical care? Studies in the United States, United Kingdom, and Australia indicate that errors or adverse events occur in the range of 1.1% to 16.6% of all hospital admissions,3–6 with many showing a greater incidence with age, complexity, and acuity of the patient.3,7–11 Analysis of malpractice claims indicates that diagnostic error is both the leading cause of paid claims and the most costly category.12,13 However, it is not possible to connect error types to actual error rates using audits, self-reports, and other databases that lack a known denominator.

The frequency of diagnostic error is probably not fully appreciated in medical practice, especially by the physicians involved. Chart audits found that clinicians report mistakes at about half the frequency reported by the affected patients.14 A study on resident teaching conferences found that the reporting and discussion of error varied markedly between medical and surgical departments; a lack of standards for reporting and discussing errors was noted, along with a paucity of meaningful discussion of errors and their sources in educational activities.15 Residents further admit that errors are sporadically reported to patients (24% of the time), and also infrequently to supervising physicians (50%).16 Self-detection of error is likely poor, as individuals are notoriously unaware of knowledge deficits and generally overrate their performance of difficult clinical tasks.17,18

The concentration of errors in critical care is likely higher than that reported for inpatients in general. A live observational study in Israel documented a rate of 1.7 errors per patient per day.19 In another series, 31% of intensive care unit (ICU) admissions experienced an iatrogenic complication, wherein almost half were considered major complications where human error was a major contributor.9 A prospective incident reporting system in Australia found that adverse events occur in 5% to 25% of patients admitted to the ICU,20 while errors occurred in 20% of patients in a single center study in the United States.21 Finally, a German study collected incident reports from 216 consecutive admissions and found that 15% were victims of error, and 73% of the errors were due to human failures.10 Despite what appears to be a persistence of errors and mishaps, the fraction attributable to diagnostic error is not as clear. Diagnostic reasoning is not always explored in error analysis, and there is little standardization of categories and definitions across investigations.12 Retrospective analyses of patients who experienced unplanned ICU admissions from regular wards found suboptimal care present in 32% to 50% of cases. In these patients, non-recognition of the problem or its severity, lack of supervision, inexperience, and inappropriate treatment were key factors underlying patient morbidity and mortality.22,23

What We Know about Decision Making – General Models for Cognition in Diagnostic Reasoning

When one thinks about diagnostic reasoning in medicine, the strategy that comes to mind is the hypothetical-deductive model. This method is typified by case-based discussions led by senior physicians where complex patients are analyzed and distilled down to one or two possible diagnoses and then differentiated by a key test, maneuver, or response to therapy. Particular strengths, weaknesses, and evidentiary contexts of tests are considered when using this method. In contrast, intuitive methods involve comparing a set of conditions with previously learned patterns or prototypes of disease states; use of intuition occurs almost instantly, but requires a great deal of experience to perform optimally. These two approaches will be expanded upon in the sections below.

The Hypothetical-Deductive Model (HD)

Upon encountering a patient, a hypothesis regarding his condition is generated and then further addressed by physical examination and diagnostic testing. As information becomes available, the hypotheses can be revised or additional hypotheses generated. Typically, recall of prior experiences as well as consideration of disease prevalence figure into hypothesis generation. The dominant hypothesis then creates a framework for subsequent data gathering and analysis, for which confirmation and elimination tend to be major strategies.24 Confirmation is a search for information that confirms a hypothesis, while elimination is a strategy used to rule out a process, often a competing diagnosis or the worst-case scenario for a given constellation of symptoms. Through this process, hypotheses are further refined, and ideally, verified for plausibility prior to commencing a course of action. Understanding salient features of competing diagnoses and key differentiating factors can improve the selection and interpretation of diagnostic tests and is ideally a key component of hypothesis evaluation.

The scientific foundation of the hypothetical-deductive method is Bayes’ theorem on conditional probability. The theorem states that the probability of a diagnosis after a test (the post-test probability) depends upon the probability of the diagnosis prior to the test (the pretest probability) and the strength of evidence added by the test or maneuver. In practice, the pretest probability is converted to odds and multiplied by the likelihood ratio of the test result, which expresses the strength of evidence; the resulting post-test odds are then converted back to a probability. The prevalence of a condition in the population is often a good estimate of the pretest probability.
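The odds form of Bayes’ theorem can be illustrated with a short calculation. The following is a minimal sketch rather than a clinical tool: the function name `post_test_probability` and the example values (a pretest probability of 20% and a likelihood ratio of 10) are hypothetical and chosen only for illustration.

```python
def post_test_probability(pretest_probability: float, likelihood_ratio: float) -> float:
    """Update a pretest probability with a likelihood ratio (Bayes' theorem, odds form)."""
    if not 0.0 < pretest_probability < 1.0:
        raise ValueError("pretest_probability must lie strictly between 0 and 1")
    if likelihood_ratio < 0.0:
        raise ValueError("likelihood_ratio must be non-negative")
    # Step 1: convert the pretest probability to odds.
    pretest_odds = pretest_probability / (1.0 - pretest_probability)
    # Step 2: multiply by the likelihood ratio of the observed test result.
    post_test_odds = pretest_odds * likelihood_ratio
    # Step 3: convert the post-test odds back to a probability.
    return post_test_odds / (1.0 + post_test_odds)


# Hypothetical example: pretest probability 20%, positive test with likelihood ratio 10.
example = post_test_probability(0.20, 10.0)
print(f"Post-test probability: {example:.2f}")
```

A likelihood ratio of 1 leaves the probability unchanged, which is why a test that does not discriminate between hypotheses adds no diagnostic information.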

Despite its implicit status as the standard diagnostic method, the hypothetical-deductive method can be inefficient or error prone and will not yield the same results for all practitioners. At its best, the hypothetical-deductive method should promote a dynamic and probabilistic view of potential diagnoses and seek information that adjusts the relative probabilities of competing hypotheses. However, in the ICU we often see the opposite, as junior trainees become lost in the acquisition and presentation of data (believing that it will improve diagnostic accuracy) but without using it to generate testable hypotheses or a meaningful course of action.25 Some of this unfocused activity can be attributed to inexperience in medicine and not knowing what evidence to seek when faced with certain conditions.

Intuitive Methods

The nature of illnesses encountered in the emergency department and ICU often necessitates actions in the absence of a comprehensive battery of data and lack of time for iterative testing of hypotheses. With experience, the clinician is able to compare the problem at hand with mental templates or scripts of disease processes stored away from prior experiences.26,27 As will be described, the nature of what we remember is based on an entirely subjective set of experiences including our own clinical cases, diagnoses that we failed to make in the past, and images created by stories from our colleagues and the popular press. Psychologists Daniel Kahneman and Amos Tversky28 explored the cognitive basis for such judgments in a series of influential studies. They found that rather than extensive algorithmic processing of information, humans making judgments resort to a number of simplifying shortcuts or “heuristics,” the use of which is often beyond the awareness of the decision-maker. The psychologists found that each heuristic had specific vulnerabilities that could be exploited in controlled experiments.

This work has been expanded upon in a medical context by Pat Croskerry,25 who found that the pace and volume of decisions made in Emergency Medicine create a high reliance on pattern recognition and heuristics. Interest in heuristics has led to research in Internal Medicine and Anesthesiology, where experimental work has verified their use.29,30 Heuristic judgments can serve as stand-alone judgments of causality or serve as an entry point to the hypothetical-deductive method by identifying diagnoses warranting further evaluation. Some elements of intuition-based decisions are contrasted with deductive methods in Table 1. Below is a description of the most common heuristics likely used in medical decision making and some of the systematic biases and weaknesses associated with their use. It is important to note that these are recurrent patterns more than discrete categories; the reader will find degrees of overlap between a number of heuristics.

Table 1.

Contrast between qualities of analytic and intuitive models of decisions in critical care.

| | Analytic methods | Intuitive methods |
|---|---|---|
| Basis | Serial hypothesis testing based on probabilities derived from clinical information | Recognition of clinical patterns |
| Role of probability | Explicit; estimates and models can rank probabilities of multiple options | Implied; pattern match leads to single diagnosis with greatest probability |
| Strengths | Mathematical, promise of reproducible results; portability of results: evidence-based | Experience based, specific and rapid |
| Pitfalls | Results of robust models can be skewed by incorrect estimates of pretest probability and strength of evidence; not useful in stressful events, accuracy is dependent upon effort and time | Overconfidence may cause one to overestimate experience and underestimate uncertainty |
| Role of information | Requires more information to minimize uncertainty | Uncertainty is accepted and does not slow down decision making |
| Role of biases | Information gathering may follow biases and incorrectly skew the output of supposedly “objective” mathematical models | Heuristics may be associated with biases that skew views toward likelihood and probability |
| Role of experience | Provides workable tools for novices; also benefits experienced providers when clinical patterns are not easily recognized | Not reliable for novices; allows experience to improve efficiency |

Representativeness is used to judge the likelihood of an event “A” belonging to a condition “B,” due to similarities between the two. The shortcut is based on prior experiences or well-accepted definitions of B. The heuristic makes no attempt to judge whether A may be a manifestation of some other process that is much more common than B. Thus, a classic weakness of representativeness is its neglect of base rate frequency of the diagnosis considered and failure to consistently compare it with more common options.
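The cost of neglecting base rates can be made concrete with a small calculation. The sketch below is illustrative only: the function `relative_posteriors`, the two candidate diagnoses, and all numbers are hypothetical, and it assumes the candidates are mutually exclusive and are the only explanations under consideration.

```python
def relative_posteriors(candidates: dict[str, tuple[float, float]]) -> dict[str, float]:
    """Rank candidate diagnoses by base rate times fit to the findings.

    candidates maps a diagnosis name to (base_rate, p_findings_given_diagnosis).
    Returns posterior probabilities normalized over the listed candidates only.
    """
    weights = {name: base * fit for name, (base, fit) in candidates.items()}
    total = sum(weights.values())
    if total <= 0.0:
        raise ValueError("at least one candidate needs a positive weight")
    return {name: weight / total for name, weight in weights.items()}


# Hypothetical numbers: the rare condition matches the presentation very well
# (high representativeness), yet the common condition remains far more likely.
posterior = relative_posteriors({
    "rare condition": (0.001, 0.90),    # base rate 0.1%, findings present in 90%
    "common condition": (0.050, 0.30),  # base rate 5%, findings present in 30%
})
for name, probability in posterior.items():
    print(f"{name}: {probability:.2f}")
```

Judging by resemblance alone corresponds to comparing only the second number in each pair; including the base rate reverses the ranking in this example.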

The original laboratory studies on representativeness found subjects repeatedly making flawed inferences of likelihood and ignoring information that would have led to more accurate predictions. From this work, it was taken that systematic errors such as these revealed a common fallibility of human decision making. Heller et al31 took a more detailed look by having pediatric residents estimate probabilities in both medical and non-medical scenarios and found that estimates of likelihood more accurately reflected underlying probabilities in the non-medical problems and that statistically incorrect judgments were more common in the residents’ responses to problems based on medical issues. The difference was generally greater when more senior residents were compared to interns.31 These findings suggested that heuristics might not serve as a general strategy for all decisions but tend to be used where familiarity (or perceived familiarity) with a subject leads to confidence that a shortcut can substitute for the more laborious analytic approach. The implication of Heller’s work is that as one gains more experience in a subject area, use of heuristics may increase.

Certainly, representativeness seems to be a tool used by experienced physicians to match the salient features of a patient’s presentation to a database of prior experiences. For the very experienced, a lack of representativeness probably plays an important role in the discovery of new medical entities, for example when a sporadic cluster of young healthy males with pneumocystis pneumonia led to the discovery of AIDS.

The Availability Heuristic is the tendency to assess the likelihood of an event by the ease with which such events are recalled. As high frequency events will be more easily recalled than the less frequent, availability does have the tendency of keeping assumptions of likelihood closer to the base rates of various conditions. A middle-aged man presenting to the emergency department with substernal chest pain should bring to mind numerous instances of coronary artery disease (CAD). However, availability is dependent on what is memorable, and it has been found that since unusual events, famous people, or familiar locations are more memorable, they tend to skew impressions of frequency and likelihood.28 For example, a report of a missed diagnosis (say, aortic dissection) may cause an emergency physician to consider the same diagnosis in situations where he or she would normally suspect myocardial infarction. Likewise, vivid images of adverse events can elevate impressions of risk beyond the actual frequency of those events. Following the terrorist attacks of 2001 in the United States, fear of flying caused more people to take vacations by car; this change in behavior led a greater number of people to use a statistically more dangerous form of travel.32

Unpacking and Support Theory describes the tendency to favor diagnoses and interventions that contain some description of content over categorical descriptions.33 For example, a radiologist may be more likely to approve a computed tomography (CT) scan of the head if the rationale is to “evaluate for tumor, spontaneous bleed, or abscess,” rather than to “exclude central nervous system pathology,” even though the latter category actually encompasses a greater set of findings. The act of listing these diagnoses seems to strengthen the case for obtaining the scan, and in experimental settings, enumerating contributing etiologies tends to elevate the perceived probability of the condition in question.

Search Satisficing combines the terms “satisfy” and “suffice,” and was first used by Economics Nobel Prize winner Herbert Simon34 in his description of the human tendency to settle for adequate, but not perfect, solutions. This is sometimes called discounting, because discovery of one possible explanation tends to discount the possibility of alternate diagnoses.31 For example, a physician may fail to recognize a second, subtle fracture present on a radiograph if a more obvious primary fracture was first detected. This heuristic can lead to premature closure, a failure to consider other diagnostic possibilities in situations where there is still uncertainty.

A common finding in the ICU is a tendency to find and act upon some recognizable element amongst the sea of findings in a complicated patient. For example, delirium may be recognized as a problem without being connected to the more serious problem that is driving it (sepsis or hypercapnia, for example), so that the change in mental state is treated as a stand-alone condition to the exclusion of understanding the primary problem.

Confirmation Bias describes the tendency to collect redundant information that seeks to confirm an existing hypothesis rather than obtaining studies that differentiate it from competing possibilities. Often the subsequent tests contribute little gain in information and do not alter the probability of disease by Bayesian analysis.35 In the presence of multiple lab studies and other findings, confirmation bias expresses itself as the tendency to filter information in a way that places greater importance on confirmatory evidence and less on findings that may contradict an existing impression.
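The difference between a redundant confirmatory test and a differentiating test can be shown numerically. This is a minimal sketch under stated assumptions: the helper names and all probabilities are hypothetical, and the likelihood ratio here compares how often a given result occurs under the leading hypothesis versus a single competing hypothesis.

```python
def likelihood_ratio(p_result_given_leading: float, p_result_given_competitor: float) -> float:
    """How much more often the result occurs under the leading hypothesis than under its competitor."""
    if p_result_given_competitor <= 0.0:
        raise ValueError("p_result_given_competitor must be positive")
    return p_result_given_leading / p_result_given_competitor


def update_odds(prior_odds: float, ratio: float) -> float:
    """Bayesian update in odds form: posterior odds = prior odds x likelihood ratio."""
    return prior_odds * ratio


# Hypothetical starting point: the leading diagnosis is favored 3:2 over its competitor.
prior_odds = 1.5

# Redundant test: the result is expected in 90% of leading-diagnosis cases
# but also in 85% of competitor cases, so it barely discriminates.
redundant_lr = likelihood_ratio(0.90, 0.85)

# Differentiating test: the result is expected in 90% of leading-diagnosis cases
# but in only 10% of competitor cases.
discriminating_lr = likelihood_ratio(0.90, 0.10)

print(f"Odds after redundant test:       {update_odds(prior_odds, redundant_lr):.2f}")
print(f"Odds after differentiating test: {update_odds(prior_odds, discriminating_lr):.2f}")
```

A test that is positive under both hypotheses feels confirmatory but leaves the odds almost where they started, whereas a test chosen to separate the competitors shifts them substantially.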

Sources of this bias may include other mistaken assumptions such as anchoring (discussed below) or the psychological tendency to seek comfort in a decision, rather than admitting an assumption is incorrect and starting over.25 Patient empathy or other influence from the affective sphere may motivate one to want or not want a certain diagnosis to be present in a patient and to seek evidence to confirm this belief.

The longer one is able to hold onto a decision, the more difficult it may be to give up.36 Thus, diagnostic information that trickles in slowly rather than all at once may unconsciously strengthen an initial belief or hypothesis. Receiving a patient with a provisional diagnosis from another physician may likewise incline one to confirm rather than reformulate the diagnosis.36 The latter is a huge problem particular to critical care, as information is constantly being passed back and forth between operative teams, consultants, on and off shift nurses, and physicians. We frequently admit patients who come attached to a long trail of impressions and existing care plans, both of which have been shown to constrain diagnostic thinking.37,38

Anchoring and Adjustment, and the Power of Suggestion: One of the most reproducible findings in experimental psychology is the influence of an external suggestion on the content of a decision.39 One form of suggestion is anchoring, the ability of a supplied numerical value (the anchor) to skew the estimation of another value. For example, if 100 subjects are asked whether California has 50 major earthquakes each year (all would say false), and then asked to make a more realistic prediction of the rate, the prediction would be significantly higher than one made by a group of 100 subjects who are asked whether California has a major earthquake every 5 years.

Adjustment refers to the process of consciously moving the estimated value away from the anchor toward one thought to be more accurate. Even when subjects are presented with an absurdly abnormal anchor value, final values continue to reflect the bias introduced by the anchor, with adjustments moving the estimate to the edge of the range of uncertainty, but never as far as one would without the anchor.39,40

The quantitative demonstration of anchoring in controlled experiments may not reflect the struggles of critical care as much as the tendency to anchor to qualitative or named features of a problem. This is especially problematic in dynamic medical situations where labels such as ‘hypovolemic’, ‘vasoplegic’, or ‘depressed left ventricle’ appropriately generated in one situation may be incorrectly attached to subsequent episodes of care where they may not be true. Many postoperative and critically ill patients pass through a number of different physiologic states as they deteriorate and convalesce; maintaining an ability to perceive changes in patients without the constraint of prior treatment decisions is an important aspect of cognition in critical care. Recognizing the strength of anchoring, a well-regarded author and diagnostician remarked, “when a case first arrives, I don’t want to hear anyone else’s diagnosis. I look at the primary data.”41

Omission or Status Quo Bias describes the tendency to take a course of inaction or observation over intervention. Typical “intervene or not” decisions in critical care include watchful waiting versus exploratory laparotomy and a decision to minimize fluid administration and accept declining renal function versus infusing fluids and risking an abdominal compartment syndrome. Omission may derive from cultural factors such as the maxim to “first do no harm,” or aversion to the perceived legal consequences of being the last physician to intervene on a patient with a poor outcome.25 Omission bias was addressed in a survey of critical care physicians in which three clinical scenarios were presented, and respondents were queried about what they would do when presented with options of either maintaining the status quo (a CT scan is already ordered, do you want to cancel it?) or performing a new action (physician asked whether s/he wanted to obtain a CT scan for same patient). Physicians’ responses showed a greater inclination to act on a plan that was already in motion rather than change the course in pursuit of the ideal decision.37

Multiple Process Models

Despite a longstanding interest in how individuals make difficult decisions and the elucidation of reproducible areas of weakness, it was not until the late 1980s that the line of investigation moved from controlled laboratory investigations of cognitive predispositions to direct observation of experienced practitioners in natural settings. Gary Klein and associates42 followed fire chiefs, military commanders, and similarly skilled members of high-risk professions and investigated the thought processes utilized when making “tough decisions under difficult conditions such as limited time, uncertainty, high stakes, vague goals, and unstable conditions.” The initial expectation was that key actions resulted from comparison of leading hypotheses or options and that some type of normative analysis of risk/benefit would yield the best choice. In reality, expert decisions were most commonly reached without this deliberation and were based on the subconscious ability to recognize and act on complex patterns—an ability developed through years of experience.43 From such naturalistic analysis of decisions, Klein developed the model of recognition-primed decision making. 
This model has two main features: (1) perceiving the situational variables and characterizing the situation based on prior experience and (2) mentally simulating how a course of action would play out and simulating additional plans if the first does not seem workable.43 The model suggests that in making important decisions, humans have managed to incorporate some of the best elements of the cognitive traditions described above: an initial use of representativeness and pattern recognition; satisficing, or recognizing that a workable solution is the main goal without feeling the necessity to find the best solution; and some mediation by conscious rational processes (mental simulation) without the paralysis and inactivity brought by over-deliberation.42 Klein’s model came from extensive post-event probing of his expert subjects, where a recurrent finding was a lack of awareness on the part of the decision-makers that they were even making any decisions!

The dual process model of reasoning postulates that human decisions involve use of both analytic and non-analytic processes that operate in parallel, with pattern recognition being the main mode for familiar situations and decisions and analytic thinking taking over when the situation does not match up with prior experience.44,45 Kahneman39 elaborates on these parallel processes in a recent book entitled Thinking, Fast and Slow, where he uses System I and System II, respectively, to refer to intuitive and analytic processing of information. Accordingly, intuition is frugal, and analytic thinking is costly in terms of energy and time consumption and the level of concentration required to be effective. The hypothetical-deductive method is an example of an analytic method used in medical practice. A unique characteristic of System II is its potential ability to monitor the activity of System I.46 Like the recognition-primed decision model, dual processing has the potential to examine the impact of certain actions and may protect the decision maker from suboptimal choices. Both models seem to describe different formulations of a common mental framework.

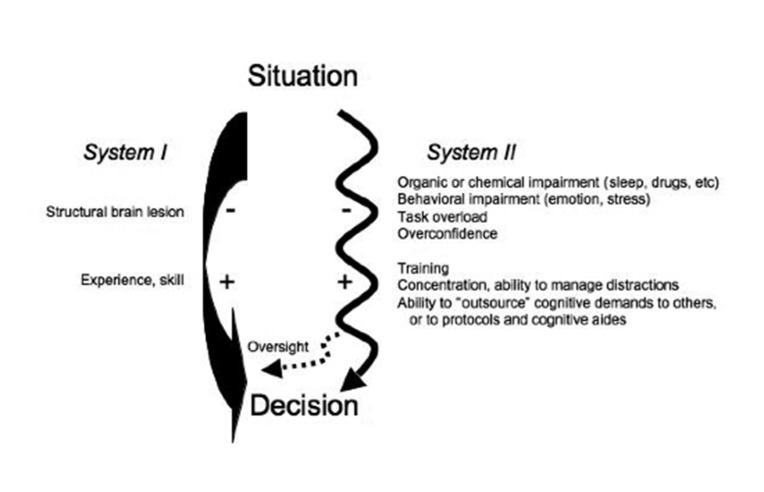

A general model for dual process thinking is presented in figure 1. While more elaborate depictions have been offered,44,47 we believe that a model analogous to fast and slow AV nodal conduction pathways captures the essence of current thinking. A number of external and internal factors influence the overall function of these systems; each can slow use of a particular pathway and dictate that more processing occur via the other. Internal factors include affect, stress, fatigue, emotion, and level of confidence, while external factors are uncontrolled variables such as patient acuity, location, availability of resources, and attention given to other thoughts and tasks. Factors such as sleep deprivation, substance use, anger, and emotion compromise the function of deliberate and rational decision making (System II) and create a higher reliance on intuitive judgment. This construct gains additional support from functional magnetic resonance imaging studies showing distinct anatomic loci for both deductive and intuitive processing48 and selective absence of these faculties in patients with related brain lesions.44

Figure 1.

A schematic of dual cognitive processes in clinical decision making is shown. A situation or problem requiring a decision activates both pathways simultaneously; however, a path of least resistance is taken. Familiarity with the situation favors the faster non-analytic pathway on the left (System I), while novelty and complexity call for greater analysis and likely use of System II. The thicker arrow on System I is used to indicate a more direct connection and path of lesser resistance than that for System II. Plus and minus signs are shown alongside factors that have been shown to positively or negatively influence the overall quality and output of each system. The dashed arrow, whereby System II provides oversight of tentative conclusions reached by System I, represents metacognition.

Decision Making in Critical Care

Despite the overall importance of understanding diagnostic reasoning in critical care, work in this area has not accompanied the longer-standing traditions of exploring the ethical, affective, and legal dimensions of critical care decisions. The daily variation in personnel, work intensity, and patient acuity seen in the ICU creates a unique environment where decisions and their underlying processes can be examined and perfected.

The practice of critical care often involves the need to make rapid judgments in high-risk and dynamic situations. Recognition of disease patterns and their diversity, as well as institution of empiric therapy for unclear conditions, is a typical start to a patient encounter. Situations calling for quick action are almost entirely guided by pattern recognition. Less commonly, patients present with a variety of findings that are hard to assemble into a coherent single diagnosis, and problems are worked on individually while more deliberate acquisition and organization of information takes place. In the latter case, System II is being used with a much higher attentional demand.

It is worth emphasizing that diagnostic reasoning in intensive care is an ongoing process that involves not only the identification of the underlying disorder in a patient (pneumococcal sepsis) but also the ability to recognize changes in a number of physiologic sub-states (ventricular dysfunction, fluid mobilization, microvascular thrombosis) that will alter outcome if not acted upon properly.

There is a general sense that diagnostic errors are more likely to arise from occasional faults in the use of System I and that with a proper command of clinical evidence and its significance (use of System II), errors may be prevented. In critical care, there may be an unappreciated opportunity to improve diagnostic accuracy by conscious review of both systems. For example, if an initial intervention creates a period of stability, this may “buy time” to double-check assumptions, review primary data sources, and invite consultants and others to share their views. In teaching centers, team rounds and sign-overs provide multiple opportunities to re-review a case.

The diagnostic process described in the paragraph above is a general overview of what one would likely see if surveying experienced practitioners in critical care. In contrast, trainees may be overwhelmed during fast-paced encounters in which novel patient problems are combined with stress and urgency. Without adequate experience, decision making may be flawed on a number of fronts. Newer trainees are likely to make better decisions through comparing and contrasting the most common conditions that give rise to the patient’s problem, but this requires time and concentration, neither of which is in sufficient supply. There should be concern that flawed use of heuristics may dominate the diagnostic process when novices experience cognitive overload. Urgency creates a state of arousal that can facilitate information processing; however, higher degrees of stress drastically reduce the number of external cues that can be properly perceived and utilized.49,50 Accordingly, actions based on reasoning or computations may be flawed or impossible to execute with too much stress. Expert practitioners can use intuition to arrive at workable solutions, but with inadequate exposure to prototypic cases and their variants, it is highly doubtful that novices can function in this manner.

Flawed Diagnostic Reasoning: a True Case

A 65-year-old man with symptomatic CAD and congestive heart failure (CHF) was admitted to the ICU after a routine stenting of the right iliac artery. Postoperative ICU admission was requested due to a history of stable CAD and CHF. His ejection fraction was reported to be 28%; he had two admissions in the prior year for CHF exacerbations.

Eight hours after the procedure, the ICU team was called to the patient’s bedside for a drop in systolic blood pressure to less than 90 mm Hg, after being in the 120s previously. Assuming a cardiac etiology, the intern and fellow placed a pulmonary artery catheter to facilitate hemodynamic monitoring and therapy for presumed heart failure. The blood pressure was 85/45 mm Hg, central venous and pulmonary artery pressures were 4 mm Hg and 25/8 mm Hg respectively; the cardiac output (CO) was 3 L/min.

Had the resident used the hypothetical-deductive model in our patient, he would perhaps have entertained a wider differential diagnosis of shock in post-catheterization patients and may have decided to perform right heart catheterization as an empiric means of evaluating hemodynamic collapse. Accordingly, he would have recognized that the systemic vascular resistance of 1250 was inconsistent with distributive shock and also noted that the low CO with low filling pressures was inconsistent with cardiogenic shock in a patient with CHF. With an understanding of the salient features of different shock states, the resident would have hopefully recognized the prototypic findings of hypovolemic shock. With some healthy skepticism of catheter pressures, he would have looked for tests or maneuvers that differentiate cardiogenic and hypovolemic shock. A change in blood pressure with passive leg raising, inspection of jugular venous pressure, or an echocardiographic evaluation of the heart would have differentiated between fluid overload and hypovolemia and established the diagnosis of hypovolemic shock. Further evaluation for blood loss would have identified the proximate cause.

In this case, cardiogenic shock provided a highly available and easy way to explain hypotension in a patient with a history of CAD and CHF. The precautionary ICU admission was based on this premise and probably primed the house staff to look for this problem. Assuming this diagnosis, the resident failed to notice the abundance of refuting evidence. Subsequently, he pursued confirmation of the diagnosis by ordering an electrocardiogram (ECG) and cardiac enzymes, and then ordered an infusion of epinephrine to help with the low cardiac output.

The resident believed the patient’s history, along with a low cardiac index, was sufficient grounds to make a diagnosis of cardiogenic shock. Satisfied with this etiology, an objective consideration of the patient’s data and basic categories of shock did not take place until morning rounds. When presenting the case during rounds, the on-call resident reasserted his initial hypothesis of cardiogenic shock multiple times—even after being asked about the low cardiac filling pressures. Other trainees were subsequently asked about their impression of the patient’s problem, and all believed that the story was consistent with cardiogenic shock.

Comment: The diagnostic strategy used in this case is remarkable for a number of reasons. First may be an undeveloped appreciation for the prototypic shock states and their differentiating factors. Equally apparent is a reliance on faulty heuristics. Availability bias is apparent in the initial diagnostic impression. The intern and resident were not experienced in postoperative care, so they took the history of heart failure and precautionary admission as evidence for this diagnosis without questioning its veracity. Search satisficing and early closure were apparent when, even after a broader set of information was present, only the single piece of evidence consistent with cardiogenic shock (the low CO) was incorporated into their thinking and believed to affirm the diagnosis. The house staff was apparently blind to evidence contradicting heart failure as a primary disturbance. It was never addressed whether obtaining the ECG and troponins was a diagnostic maneuver arising from confirmation bias or part of a precompiled care routine for suspected CAD. Other team members seemed to be subject to either availability or anchoring, as they failed to recognize contradictory evidence when presented. Even when told that the patient was probably bleeding from his catheterization site, the presenting intern revised his diagnosis to “cardiogenic shock with hypovolemia,” further demonstrating the power of anchoring, and the absence of analytical thought!

How Can We Improve Our Decision Making?

The discussion of decision making has so far focused on the apparent rift between the rational and methodical approach to diagnostic reasoning that typifies our teaching (System II) and the subconscious nature of decision making that predominates most reasoning under uncertainty (System I). Intuition can be a source of flawed judgment and performance or a means of demonstrating true mastery. Proper use of System II is dependent upon the user’s understanding of diseases and their distinguishing features plus the time to plan and reason through a diagnosis, and therefore, cannot be regarded as the solution to all diagnostic problems. As there are no determinants of when one’s intuition becomes reliable—especially at intermediate levels of experience—it seems important to consider means to offset areas of potential weakness in this aspect of diagnostic reasoning. The section below will introduce strategies that can be employed in critical care units and related educational programs to improve diagnostic accuracy, promote reflective practice, and reduce harm from critical decisions.

Metacognition–Thinking about How We Think

Metacognition refers to the deliberate monitoring of cognitive processes and their impact. In critical care, at least two distinct benefits can emerge from a better understanding of our thinking. First, by identifying the cognitive strategy used to reach a conclusion, the appropriateness of the strategy in a particular context can be evaluated. In this way, errors may be trapped earlier in their evolution, before they cause harm. Authors in several fields have promoted this view.30,45,51–53 Second, an awareness and appreciation of thought processes may provide extra motivation to follow up on their accuracy in novel and unclear situations. With periodic feedback on cognitive performance, the ability to build expertise from experience is bound to be greater.52,54

As a metacognitive strategy, Klein42,43 proposes the “crystal ball” technique wherein an individual or group imagines they are looking into a crystal ball that says that their assessment is wrong, then asks: “if this is the case, then what would be the right answer?” This is a particularly useful strategy in critical care, as many episodes of care fluctuate between periods of action and planning. Once action has led to some stability, critical examination of earlier impressions may lead one to arrive at a more accurate idea of the patient’s problem. The “crystal ball” is another way of granting permission to ask: “why do I not feel right about this decision?” or “what are some leading diagnoses that we left out?” The authors found this technique a useful group exercise after new admissions, as team members begin to commit to important decisions such as making the diagnosis, prescribing a high-risk test, or deciding on an operation. The exercise also sends an important cultural message that constructive dissent is important and that team members are expected to speak up and put the patient’s interests first and not to take it personally when their ideas are challenged.

Groupthink and avoidance of group conflict are forms of sociologic bias that restrict independent thought and can be remedied in part with an insistence on hearing divergent views.55 This problem has not yet been explored in critical care; however, the general desire to achieve group consensus on diagnoses and plans and other aspects of team building in the ICU may actually promote conformity rather than protection against early closure or other biases.

A key attribute of the analytic decision-making pathway described above and in figure 1 is its ability to monitor the results of conclusions reached by intuition. Awareness that a decision was reached subconsciously, however valid it may be, may prompt the cautious clinician to revisit the same decision with a different set of tools or to seek a second opinion. However, just because more methodical consideration of a clinical situation can potentially offset the pitfalls of intuitive judgment does not necessarily mean that it will. Forces such as stress and fatigue that cause an initial reliance on System I will also impair its oversight.

Flawed Diagnostic Reasoning: an Earlier Escape?

The case of perceived cardiogenic shock was discussed on morning rounds, and correction of misjudgment was accomplished fairly rapidly. After dispatching a fellow to revise the care plan, team members were asked whether any other impressions crossed their mind. None were offered, so the team was led through Klein’s crystal ball exercise where they were told that the crystal ball was telling us that the patient had a different problem, and given this fact, what could be the right diagnosis? Quickly, other ideas were offered, with hemorrhage being the overall consensus. Hemodynamic data were revisited in a manner that compared the patient’s findings with the prototypic forms of shock.

Cognitive Forcing Strategies

Forcing strategies involve the obligate pairing of one action to another. Cognitive forcing strategies (CFS) refer to developing the ability to self-monitor and recognize the use of specific heuristics in decision making, and then invoking predetermined actions to counteract known pitfalls or vulnerabilities.52 For example, a portable echocardiogram is obtained to evaluate hemodynamic instability, and severe systolic dysfunction is instantly apparent. Discovery of poor cardiac function may lead to termination of the exam prior to finding that a severely stenotic aortic valve was really the key driver of hemodynamic compromise. An example of a forcing strategy would be to insist that use of echocardiography involve an evaluation of all relevant structures rather than “spot examinations.”

Similar approaches to debiasing judgments made from other heuristics are presented in table 2. Other generic forcing strategies have been proposed, such as ruling out the worst-case scenario, deliberate consideration of three different diagnoses or explanations of a problem, and a forced consideration of new diagnoses if three interventions fail to create improvement (the rule of “3s”).30,52 There has been limited experimental evaluation of forcing strategies, none of which has used a native clinical environment. The goal of the forcing strategies was for trainees to expand their differential diagnoses prior to making final judgment. Both uncontrolled and controlled studies have failed to demonstrate clinically or mathematically significant degrees of improvement following this type of intervention.56–58 One interpretation of the results is that the experimental subjects (all students) may not necessarily use non-analytic processing as a primary decision strategy, and if using System II, a “ceiling effect” may exist where further analysis may fail to improve accuracy. Indeed, for both control and interventional arms of one CFS study, diagnostic accuracy was inversely related to the time spent on the diagnosis.58 In contrast, a series of studies conducted by Mamede have shown that structured reflection (systematically listing the supportive, contradictory, and absent findings of initial and alternate diagnoses for a written case) improved both learning and categorization of illness scripts in medical students.59 The reflective intervention was similarly tested in internal medicine residents, where it had some success in overcoming availability bias and improving diagnostic accuracy.29

Table 2.

Heuristics and biases discussed in the text are presented alongside strategies for overcoming the pitfalls inherent to each.

| Heuristic | Strategies to overcome systemic errors | Reference |
|---|---|---|
| Representativeness | Compare disease with prototypes of the condition; be suspicious when there is not a good match | 25 |
| | Be aware of relevant prevalence data | 25,80 |
| | Incorporate discussion of common versus variant cases into educational practices | |
| Availability | Seek base rate of a diagnosis | |
| | Be aware of external influences in diagnostic process | 25 |
| Premature closure | Use tests or other exercises to refute diagnosis | 25,43 |
| | Differentiate working vs. final diagnoses | 25 |
| Anchoring | Insist on first hearing the “story” rather than someone else’s diagnosis | 25,41 |
| | Assess all key elements of the case | 41 |
| | Avoid confirmation and early closure; make point of lab tests to “prove” other leading diagnoses. “Crystal ball” exercise | 43 |
| Confirmation | Use hypothetical-deductive method to assess value and role of contemplated tests | |
| | Try to disprove your diagnosis, consider conditions of higher prevalence | 25,43 |
| | Stick to institutional protocols or standard methods when applicable | 36 |
| | Be aware of affective, ethical and social influences | |
| | Reaffirm role of intensivist as independent consultant; avoid influence of referring physicians | |
| Unpacking or support theory | Be aware that alternate descriptions of the same situation may elicit different estimates of likelihood. | 33 |
| | Unpack broad categories and compare alternatives at similar levels of specificity | |
| Satisficing | Perform secondary searches once it appears the answer is at hand. | |
| | Complete standard protocols where applicable. | 25 |
| Omission or status quo bias | Think of what others would do. Consider existing clinical evidence. | 37 |
| | Trust intuition: if you thought of it, you should probably do it | 25 |
| | Try to approach each case as a clean slate, as in de-anchoring | |

Why is This a Good Decision?

Intensive care rounds are filled with situations where a practitioner or trainees need to anticipate and treat problems. Expert-level guidance in this process is helpful for learning what are expected and unexpected findings in the course of a critical illness and how to act upon findings. For example, a 38.5°C fever in a patient who underwent an open repair of an aortic aneurysm should trigger different thoughts and actions depending on whether it is the first postoperative night or 3 days after the operation. Rounds also present an important opportunity to explore the basis for anticipated actions. “If he gets hypotensive, we will start norepinephrine.” Statements along these lines should be followed with a query into why a particular choice was made. For this particular example, we have heard explanations including “because this is what worked last night on Mr. X,” or “I think he is septic, and norepinephrine is the drug of choice,” or “because all of the clinical data including central venous pressure, mixed venous oxygen saturation, and my physical exam suggest he is vasoplegic.” Was it availability (ie, the previous cardiac surgery patient was given norepinephrine when she became hypotensive)? Was it anchoring (the resident heard the patient needed norepinephrine in the operating room)? Or was it representativeness or physiologic reasoning, in which the patient’s overall physiologic profile looked like vasoplegia, so norepinephrine was chosen? In this example, discussion of the decision strategy would reinforce that deciding on a vasoactive agent is not an act of pairing a disease process and agent, but an act of defining a physiologic picture and adding what is missing. It has been advocated that clinical conferences and other educational activities should be expanded to include an exploration of decision strategies that are encountered in the course of caring for a patient.60

Instructors should identify critical decisions or junctures in care, essentially triggers such as new vasoactive drug, higher oxygen requirement, new metabolic abnormality or accelerated deterioration, where a quick exploration of assumptions and key data sources is warranted. Group demonstration of metacognition may facilitate development of this important ability.

Exhaustive Strategies

Given the risks of early closure, could we protect against misdiagnosis and achieve a broader perspective by obtaining a more comprehensive battery of laboratory and diagnostic tests? Studies that have looked at this question suggest that this may be an overly optimistic view.61,62 Why is this the case? First, most clinicians can form a diagnostic impression early on in an encounter, and adding additional information rarely changes the diagnosis but prolongs evaluation and is financially costly. Acquiring “objective information” in the form of diagnostic tests may reflect confirmation bias more than a true hypothetical-deductive strategy.62,63 Exhaustive strategies often lack the guidance of experience or intuition and, hence, the necessary perspective to assemble all findings into a sensible picture. However, even experienced ICU physicians have exhibited great variability in how they approach the same patient in terms of information gathering, interpretation, and eventual decisions.64

Diagnostic impressions based on pattern recognition classically neglect the base-rate probabilities of candidate diagnoses. Ironically, an exhaustive strategy that strives to eliminate bias through completeness can utilize tests in an improper manner. For example, in populations with a low prevalence of a disease in question, laboratory tests will yield more false positives than true positives.65 Kirch and Schafii66 reported that in a series of autopsies, the history and physical examination led to the correct diagnosis 60% to 70% of the time, in contrast to only 35% of diagnoses being possible with the use of imaging techniques. It is attractive to think that if biased thinking led to an erroneous conclusion, means to expand the differential diagnosis, and essentially place the correct answer before our eyes, should improve accuracy. Even with a broader information set, clinicians remain attached to the first diagnosis considered (even if wrong)67,68 over diagnoses that were suggested by others.38
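The base-rate effect described above can be made concrete with a short calculation. The following is a minimal sketch, not a clinical tool: the function name and the sensitivity, specificity, and prevalence values are assumptions chosen for illustration and are not taken from the cited studies.

```python
def positive_predictive_value(sensitivity: float, specificity: float, prevalence: float) -> float:
    """Probability that a positive result reflects true disease (Bayes' theorem)."""
    for name, value in (
        ("sensitivity", sensitivity),
        ("specificity", specificity),
        ("prevalence", prevalence),
    ):
        if not 0.0 <= value <= 1.0:
            raise ValueError(f"{name} must be between 0 and 1")
    # Fraction of the whole population that tests positive and has the disease.
    true_positives = sensitivity * prevalence
    # Fraction of the whole population that tests positive without the disease.
    false_positives = (1.0 - specificity) * (1.0 - prevalence)
    total_positives = true_positives + false_positives
    if total_positives == 0.0:
        raise ValueError("this test never returns a positive result")
    return true_positives / total_positives


# Hypothetical test that is 95% sensitive and 95% specific (assumed values).
for prevalence in (0.01, 0.10, 0.50):
    ppv = positive_predictive_value(0.95, 0.95, prevalence)
    print(f"prevalence {prevalence:.0%}: positive predictive value {ppv:.0%}")
```

Under these assumed test characteristics, a prevalence of 1% gives a positive predictive value of about 16%, so false positives outnumber true positives roughly five to one even though the test itself appears accurate.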

The exhaustive strategy is often tolerated as an initial step in learning to care for medical patients. It is perhaps believed that hypothesis development must wait until further experience is gained. Proper guidance regarding the relevance and use of specific studies is essential in preventing “getting lost in the numbers.” Also, without the proper experience base, one may run the risk of falling into the trap of “pseudodiagnosticity.” The latter term refers to building a diagnosis on a laboratory or historical finding that may not address the patient’s primary problem.63 For example, a resident recently was operating under the belief that an elevated lactate level was “diagnostic” for severe sepsis in a patient who, in fact, had a large gastrointestinal bleed. Because obtaining lactate levels is part of the workup for sepsis, the resident misapplied this association to arrive at an incorrect diagnosis. Thus, there seems to be no proof that expanding the information set induces a more deliberative approach to decision making or that doing so will overcome the biases of non-analytic judgment.

Demonstrate Proper Use of the Hypothetical-Deductive Method

When ordering diagnostic tests and imaging, it is important for practitioners and trainees to understand and articulate both the proper use and pitfalls of the studies. Most diagnostic studies cannot deliver omniscient pronouncements of a disease’s presence or absence. Rather, most are deployed clinically after cut-off values for positive and negative results have been established and have demonstrated some utility in modifying pre-test probabilities in specific patient populations.69 Failing to understand these statistical factors in ordering tests may lead to false conclusions, thereby adding more confusion than clarity to the diagnostic process. Thus a hypothetical-deductive strategy can theoretically overcome some of the problems arising from intuitive judgments; however, its use can be overridden with biases just as strong as those it attempts to overcome. In acquiring diagnostic tests, careful attention to test characteristics and disease probability will provide the greatest yield.
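How a test result modifies a pre-test probability can be sketched with the odds form of Bayes' theorem. The example below is illustrative only: the function name, the pre-test probabilities, and the likelihood ratios are assumed values, not characteristics of any particular test discussed in this article.

```python
def post_test_probability(pre_test_probability: float, likelihood_ratio: float) -> float:
    """Update a pre-test probability using a likelihood ratio (odds form of Bayes' theorem)."""
    if not 0.0 <= pre_test_probability < 1.0:
        raise ValueError("pre_test_probability must be at least 0 and less than 1")
    if likelihood_ratio < 0.0:
        raise ValueError("likelihood_ratio cannot be negative")
    # Convert probability to odds, scale by the likelihood ratio, convert back.
    pre_test_odds = pre_test_probability / (1.0 - pre_test_probability)
    post_test_odds = pre_test_odds * likelihood_ratio
    return post_test_odds / (1.0 + post_test_odds)


# Assumed values: a positive result with a likelihood ratio of 10,
# applied to a moderate and to a low pre-test probability.
for pre_test in (0.20, 0.02):
    updated = post_test_probability(pre_test, 10.0)
    print(f"pre-test {pre_test:.0%} -> post-test {updated:.0%}")
```

With these assumed numbers, the same positive result raises a 20% pre-test probability to about 71% but a 2% pre-test probability to only about 17%, which is why the clinical hypothesis that precedes the test matters as much as the test itself.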

The physical examination may languish as a detached set of routines in some trainees’ practice. Showing the interplay between hypothesis formation, gathering of information, and its ability to change probabilities may help validate the use of the history and physical as an efficient diagnostic tool, and not an exhaustive and antiquated exercise.70,71 Finally, many critical care trainees organize thoughts and data into organ-based categories that serve the interests of completeness but may oppose reflection upon key problems and findings. Demanding clarity in data acquisition and synthesis during all phases of patient care is likely to strengthen both analytic and intuitive modes of thought.

Acknowledge the Use and Value of Intuition

In many situations, the System I process provides the most effective starting point for diagnosis and action. For example, an experienced critical care physician may walk into the room of a patient with variceal bleeding and launch right into intubation and transfusion of blood products, while a resident in the same room may be at a loss for the specific criteria supporting these actions. As such, we owe it to our trainees to reconstruct our actions as completely as we can and to show how largely non-analytic judgments result from understanding the significance and trajectory of seemingly unrelated signals.

It is our belief that some basic distinguishing patterns for common critical care and postoperative care problems can be taught and can serve as reference points for comparison with variants and to contrast with other disease prototypes.26,72 Revisitation of the different physiologic patterns of shock states and their correspondence to different trends and findings on bedside monitors and physical examination is one example of building intuition and pattern recognition around an accepted theoretical framework.73 Support for use of pattern recognition as an instructional strategy comes from a study involving the interpretation of ECGs by psychology graduate students, wherein students were taught different patterns and used these to form hypotheses for the ECG’s principal abnormality. Participants, all of whom lacked domain-specific knowledge, were able to produce more accurate results when operating from a combined analytic/non-analytic method than those who were taught a more deliberate form of analysis.74,75 This study, along with Mamede’s use of “reflection” in diagnosis, was designed with a dual processing framework of reasoning in mind, which may offer a more robust approach to understanding the diagnostic process than what Norman criticizes as “the didactic presentation of a series of cognitive biases in which the instructional goal can easily be misinterpreted as one of remembering definitions.”56

Establish the Best Conditions for Advanced Learning

Clearly, some situations in critical care call for decisions that emerge with little deliberation, while others require an analytic strategy. For intuition to develop properly, Kahneman and Klein46 both agree that the context for learning must be one of “high validity,” as defined by generally predictable elements and the ability to receive feedback on the quality and outcome of decisions. Thus, if we expect our trainees to advance in their problem solving and decisional skills, they first must be grounded in some sense of what is typical and expected for a particular disease state. Faculty, therefore, may have to exercise greater oversight into how cases are assigned and see that students and early residents initially take care of patients that have prototypical presentations of common problems or routine postoperative courses. To this foundation, more complex cases or classic variants can be added as experience increases.26

The methods underlying diagnostic reasoning should be deliberately explored, as already noted. Insistence on reviewing primary data is also important for general skill development, as well as for providing some safeguard against early anchoring and closure. While managing acute situations, the practice of thinking out loud is important for generating a shared mental model amongst other team members and often invites others to contribute their thoughts and impressions. Group leaders should also find means of promoting constructive questioning of key assumptions and conclusions; generating the ethic that this is important backup for innate errors in cognition rather than “being right” is absolutely essential. Patient sign-overs may present an additional opportunity to revisit diagnostic reasoning. Recently Dhaliwal and Hauer76 proposed that the re-presentation of cases admitted by night shift or other cross-covering physicians become a time to review the diagnostic reasoning of the admitting physician. This practice would expose trainees and their supervisors to current thoughts on cognition and cognitive error. Success of this intervention again is dependent on a positive view of dissent, and according to the authors, is dependent upon the attending physician’s ability to “model how such commentary can unfold constructively and professionally.”76

Patient simulators offer yet another tool for exploring decision making in critical care.77,78 Standardized scenarios of known problems can be presented to trainees, and their actions can be compared with what instructors consider ideal approaches to the condition. The time and place for different diagnostic strategies (ie, pattern recognition versus physiologic principles versus probabilistic thinking) can be explored in a controlled setting. When errors are encountered, the evolution can be elucidated from participants’ recollections, and unlike the ICU, true actions can be captured with video recordings and reviewed. Simulation exercises guarantee that a cohort of standing practitioners or trainees will be confronted with the same challenges and opportunities to understand decisions and decision-making strategies. Simulation facilities also carry the potential of creating a safe atmosphere for learning, wherein challenging situations and adverse outcomes can be explored without evoking the defensive behavior that may accompany poor outcomes in real patients.

Review Errors; Learn From Them

Intensive care admissions can result from errors made in the course of patient evaluation and treatment elsewhere. For all phases of care, identification of errors offers the opportunity to explore decision-making strategies and their relationship to outcomes. To maximize the yield of analyzing near misses and mistakes, it is important that critical care leaders create an atmosphere of inquiry (rather than blame) that allows for understanding the cognitive and systematic roots of errors.

Conclusion: Towards a Better Understanding of Diagnostic Reasoning in Critical Care

The role of cognition in diagnostic reasoning has been an area of interest in Emergency Medicine for over 15 years and is gaining attention in anesthesiology and critical care; however, examination of the use and validity of different decision pathways is in its infancy. Studies should be conducted to understand the cognitive processes used by skilled and seasoned physicians in critical care and to understand how stressors and other variables impact their performance. The latter will help overcome some of the questions raised from studies on students and early residents.

Rather than regarding different strategies as either friend or foe, it may be more worthwhile starting with an open-ended approach to understand what differentiates high and low levels of performance. Exposure of a cohort of trainees to a standardized scenario of septic shock and development of a validated scoring tool revealed a wide range of technical and behavioral performances.79 Similar studies can be conducted with emphasis on evaluating what aspects of decision making underlie variations in performance. Creation of educational programs around the strategies associated with the best diagnostic performance will provide trainees with models of expertise and its nature at an earlier part of their training.

The concentration of errors in critical care certainly mirrors the high burden of illness and number of interventions experienced by this population but also generates some questions of whether physician factors synergize with the patients’ states in a manner that amplifies risk and whether there are means by which this can be minimized. The face validity of the psychological concepts of judgment under uncertainty to critical care seems high; however, naturalistic studies to back up these assumptions are essential to any effort designed to improve patient safety in the ICU.

References

- 1.Croskerry P, Norman G. Overconfidence in clinical decision making. Am J Med 2008;121:S24–S29. [DOI] [PubMed] [Google Scholar]

- 2.Berner ES, Graber ML. Overconfidence as a cause of diagnostic error in medicine. Am J Med 2008;121:S2–S23. [DOI] [PubMed] [Google Scholar]

- 3.Brennan TA, Leape LL, Laird NM, Hebert L, Localio AR, Lawthers AG, Newhouse JP, Weiler PC, Hiatt HH. Incidence of adverse events and negligence in hospitalized patients: results of the Harvard Medical Practice Study I. N Engl J Med 1991;324:370–376. [DOI] [PubMed] [Google Scholar]

- 4.Leape LL, Brennan TA, Laird N, Lawthers AG, Localio AR, Barnes BA, Hebert L, Newhouse JP, Weiler PC, Hiatt H. The nature of adverse events in hospitalized patients. Results of the Harvard Medical Practice Study II. N Engl J Med 1991;324:377–384. [DOI] [PubMed] [Google Scholar]

- 5.Vincent C, Neale G, Woloshynowych M. Adverse events in British hospitals: preliminary retrospective record review. BMJ 2001;322:517–519. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 6.Wilson RM, Runciman WB, Gibberd RW, Harrison BT, Newby L, Hamilton JD. The Quality in Australian Health Care Study. Med J Aust 1995;163:458–471. [DOI] [PubMed] [Google Scholar]

- 7.Weingart SN, Wilson RM, Gibberd RW, Harrison B. Epidemiology of medical error. BMJ 2000;320:774–777. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 8.Bates DW, Miller EB, Cullen DJ, et al. Patient risk factors for adverse drug events in hospitalized patients. ADE Prevention Study Group. Arch Intern Med 1999;159:2553–2560. [DOI] [PubMed] [Google Scholar]

- 9.Giraud T, Dhainaut JF, Vaxelaire JF, Joseph T, Journois D, Bleichner G, Sollet JP, Chevret S, Monsallier JF. Iatrogenic complications in adult intensive care units: a prospective two-center study. Crit Care Med 1993;21:40–51. [DOI] [PubMed] [Google Scholar]

- 10.Graf J, von den Driesch A, Koch KC, Janssens U. Identification and characterization of errors and incidents in a medical intensive care unit. Acta Anaesthesiol Scand 2005;49:930–939. [DOI] [PubMed] [Google Scholar]

- 11.Thomas EJ, Brennan TA. Incidence and types of preventable adverse events in elderly patients: population based review of medical records. BMJ 2000;320:741–744. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 12.Graber ML. The incidence of diagnostic error in medicine. BMJ Qual Saf 2013;22 Suppl 2:ii21–ii27. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 13.Saber Tehrani AS, Lee H, Mathews SC, Shore A, Makary MA, Pronovost PJ, Newman-Toker DE. 25-Year summary of US malpractice claims for diagnostic errors 1986–2010: an analysis from the National Practitioner Data Bank. BMJ Qual Saf 2013;22:672–680. [DOI] [PubMed] [Google Scholar]

- 14. Weissman JS, Schneider EC, Weingart SN, Epstein AM, David-Kasdan J, Feibelmann S, Annas CL, Ridley N, Kirle L, Gatsonis C. Comparing patient-reported hospital adverse events with medical record review: do patients know something that hospitals do not? Ann Intern Med 2008;149:100–108.

- 15. Pierluissi E, Fischer MA, Campbell AR, Landefeld CS. Discussion of medical errors in morbidity and mortality conferences. JAMA 2003;290:2838–2842.

- 16. Wu AW, Folkman S, McPhee SJ, Lo B. Do house officers learn from their mistakes? JAMA 1991;265:2089–2094.

- 17. Kruger J, Dunning D. Unskilled and unaware of it: how difficulties in recognizing one’s own incompetence lead to inflated self-assessments. J Pers Soc Psychol 1999;77:1121–1134.

- 18. Spencer FC. Human error in hospitals and industrial accidents: current concepts. J Am Coll Surg 2000;191:410–418.

- 19. Donchin Y, Gopher D, Olin M, Badihi Y, Biesky M, Sprung CL, Pizov R, Cotev S. A look into the nature and causes of human errors in the intensive care unit. Crit Care Med 1995;23:294–300.

- 20. Hart GK, Baldwin I, Gutteridge G, Ford J. Adverse incident reporting in intensive care. Anaesth Intensive Care 1994;22:556–561.

- 21. Osmon S, Harris CB, Dunagan WC, Prentice D, Fraser VJ, Kollef MH. Reporting of medical errors: an intensive care unit experience. Crit Care Med 2004;32:727–733.

- 22. McGloin H, Adam SK, Singer M. Unexpected deaths and referrals to intensive care of patients on general wards. Are some cases potentially avoidable? J R Coll Physicians Lond 1999;33:255–259.

- 23. McQuillan P, Pilkington S, Allan A, Taylor B, Short A, Morgan G, Nielsen M, Barrett D, Smith G, Collins CH. Confidential inquiry into quality of care before admission to intensive care. BMJ 1998;316:1853–1858.

- 24. Kovacs G, Croskerry P. Clinical decision making: an emergency medicine perspective. Acad Emerg Med 1999;6:947–952.

- 25. Croskerry P. Achieving quality in clinical decision making: cognitive strategies and detection of bias. Acad Emerg Med 2002;9:1184–1204.

- 26. Bowen JL. Educational strategies to promote clinical diagnostic reasoning. N Engl J Med 2006;355:2217–2225.

- 27. van Schaik P, Flynn D, van Wersch A, Douglass A, Cann P. Influence of illness script components and medical practice on medical decision making. J Exp Psychol Appl 2005;11:187–199.

- 28. Tversky A, Kahneman D. Judgment under uncertainty: heuristics and biases. Science 1974;185:1124–1131.

- 29. Mamede S, van Gog T, van den Berge K, Rikers RM, van Saase JL, van Guldener C, Schmidt HG. Effect of availability bias and reflective reasoning on diagnostic accuracy among internal medicine residents. JAMA 2010;304:1198–1203.

- 30. Stiegler MP, Neelankavil JP, Canales C, Dhillon A. Cognitive errors detected in anaesthesiology: a literature review and pilot study. Br J Anaesth 2012;108:229–235.

- 31. Heller RF, Saltzstein HD, Caspe WB. Heuristics in medical and non-medical decision-making. Q J Exp Psychol A 1992;44:211–235.

- 32. Sargent G. Driving versus flying: the debate is settled. The New York Observer 1998.

- 33. Redelmeier DA, Koehler DJ, Liberman V, Tversky A. Probability judgement in medicine: discounting unspecified possibilities. Med Decis Making 1995;15:227–230.

- 34. Simon HA. Rational choice and the structure of the environment. Psychol Rev 1956;63:129–138.

- 35. Elstein AS. Heuristics and biases: selected errors in clinical reasoning. Acad Med 1999;74:791–794.

- 36. Pines JM. Profiles in patient safety: confirmation bias in emergency medicine. Acad Emerg Med 2006;13:90–94.

- 37. Aberegg SK, Haponik EF, Terry PB. Omission bias and decision making in pulmonary and critical care medicine. Chest 2005;128:1497–1505.

- 38. van den Berge K, Mamede S, van Gog T, Romijn JA, van Guldener C, van Saase JL, Rikers RM. Accepting diagnostic suggestions by residents: a potential cause of diagnostic error in medicine. Teach Learn Med 2012;24:149–154.

- 39. Kahneman D. Thinking, Fast and Slow. New York: Farrar, Straus and Giroux; 2011.

- 40. Englich B, Mussweiler T, Strack F. Playing dice with criminal sentences: the influence of irrelevant anchors on experts’ judicial decision making. Pers Soc Psychol Bull 2006;32:188–200.

- 41. Groopman J. How Doctors Think. New York: Houghton Mifflin Co; 2007.

- 42. Klein G. Naturalistic decision making. Hum Factors 2008;50:456–460.

- 43. Klein G. Sources of Power: How People Make Decisions. Cambridge, MA: MIT Press; 1998.

- 44. Croskerry P. Clinical cognition and diagnostic error: applications of a dual process model of reasoning. Adv Health Sci Educ Theory Pract 2009;14 Suppl 1:27–35.

- 45. Elstein AS, Schwartz A. Clinical problem solving and diagnostic decision making: selective review of the cognitive literature. BMJ 2002;324:729–732.

- 46. Kahneman D, Klein G. Conditions for intuitive expertise: a failure to disagree. Am Psychol 2009;64:515–526.

- 47. Stiegler MP, Tung A. Cognitive processes in anesthesiology decision making. Anesthesiology 2014;120:204–217.

- 48. Epstein S. Integration of the cognitive and the psychodynamic unconscious. Am Psychol 1994;49:709–724.

- 49. Ozel F. Time pressure and stress as a factor during emergency egress. Saf Sci 2001;38:95–107.

- 50. Wickens C, Stokes A, Barnett B, Hyman F. The effects of stress on pilot judgment in a MIDIS simulator. In: Svenson O, Maule J, eds. Time pressure and stress in human judgment and decision making. New York: Plenum Press; 1991. 271–292.

- 51. Croskerry P. The cognitive imperative: thinking about how we think. Acad Emerg Med 2000;7:1223–1231.

- 52. Croskerry P. Cognitive forcing strategies in clinical decisionmaking. Ann Emerg Med 2003;41:110–120.

- 53. Graber M. Metacognitive training to reduce diagnostic errors: ready for prime time? Acad Med 2003;78:781.

- 54. Flavell J. Metacognition and cognitive monitoring: a new area of cognitive-developmental inquiry. Am Psychol 1979;34:906–911.

- 55. Janis IL. Groupthink: Psychological studies of policy decisions and fiascoes. 2nd ed. Boston: Houghton Mifflin Co; 1983.

- 56. Norman GR, Eva KW. Diagnostic error and clinical reasoning. Med Educ 2010;44:94–100.

- 57. Sherbino J, Dore KL, Wood TJ, Young ME, Gaissmaier W, Kreuger S, Norman GR. The relationship between response time and diagnostic accuracy. Acad Med 2012;87:785–791.

- 58. Sherbino J, Kulasegaram K, Howey E, Norman G. Ineffectiveness of cognitive forcing strategies to reduce biases in diagnostic reasoning: a controlled trial. CJEM 2014;16:34–40.

- 59. Mamede S, van Gog T, Moura AS, de Faria RM, Peixoto JM, Rikers RM, Schmidt HG. Reflection as a strategy to foster medical students’ acquisition of diagnostic competence. Med Educ 2012;46:464–472.

- 60. Kassirer JP. Teaching clinical reasoning: case-based and coached. Acad Med 2010;85:1118–1124.

- 61. Redelmeier DA, Shafir E, Aujla PS. The beguiling pursuit of more information. Med Decis Making 2001;21:376–381.

- 62. Gruppen LD, Wolf FM, Billi JE. Information gathering and integration as sources of error in diagnostic decision making. Med Decis Making 1991;11:233–239.

- 63. Bordage G. Why did I miss the diagnosis? Some cognitive explanations and educational implications. Acad Med 1999;74:S138–S143.

- 64. Kostopoulou O, Wildman M. Sources of variability in uncertain medical decisions in the ICU: a process tracing study. Qual Saf Health Care 2004;13:272–280.

- 65. Sackett D, Haynes R, Guyatt G, Tugwell P. Clinical Epidemiology, a basic science for clinical medicine. Boston: Little, Brown & Co.; 1991. 69–152.

- 66. Kirch W, Schafii C. Misdiagnosis at a university hospital in 4 medical eras. Medicine (Baltimore) 1996;75:29–40.

- 67. Cunnington JP, Turnbull JM, Regehr G, Marriott M, Norman GR. The effect of presentation order in clinical decision making. Acad Med 1997;72:S40–S42.

- 68. Leblanc VR, Norman GR, Brooks LR. Effect of a diagnostic suggestion on diagnostic accuracy and identification of clinical features. Acad Med 2001;76:S18–S20.

- 69. Soreide K, Korner H, Soreide JA. Diagnostic accuracy and receiver-operating characteristics curve analysis in surgical research and decision making. Ann Surg 2011;253:27–34.

- 70. Trowbridge RL. Twelve tips for teaching avoidance of diagnostic errors. Med Teach 2008;30:496–500.

- 71. Vazquez R, Gheorghe C, Kaufman D, Manthous CA. Accuracy of bedside physical examination in distinguishing categories of shock: a pilot study. J Hosp Med 2010;5:471–474.

- 72. Marcum JA. An integrated model of clinical reasoning: dual-process theory of cognition and metacognition. J Eval Clin Pract 2012;18:954–961.

- 73. Lighthall G. Use of physiologic reasoning to diagnose and manage shock states. Crit Care Res Pract 2011;2011:105348.

- 74. Ark TK, Brooks LR, Eva KW. Giving learners the best of both worlds: do clinical teachers need to guard against teaching pattern recognition to novices? Acad Med 2006;81:405–409.

- 75. Eva KW, Hatala RM, Leblanc VR, Brooks LR. Teaching from the clinical reasoning literature: combined reasoning strategies help novice diagnosticians overcome misleading information. Med Educ 2007;41:1152–1158.

- 76. Dhaliwal G, Hauer KE. The oral patient presentation in the era of night float admissions: credit and critique. JAMA 2013;310:2247–2248.

- 77. Lighthall GK, Barr J, Howard SK, Gellar E, Sowb Y, Bertacini E, Gaba D. Use of a fully simulated intensive care unit environment for critical event management training for internal medicine residents. Crit Care Med 2003;31:2437–2443.

- 78. Bond WF, Deitrick LM, Arnold DC, Kostenbader M, Barr GC, Kimmel SR, Worrilow CC. Using simulation to instruct emergency medicine residents in cognitive forcing strategies. Acad Med 2004;79:438–446.

- 79. Ottestad E, Boulet JR, Lighthall GK. Evaluating the management of septic shock using patient simulation. Crit Care Med 2007;35:769–775.

- 80. Klein G. Streetlights and shadows: the key to adaptive decision making. Cambridge, MA: MIT Press; 2009.