Abstract

Objectives

Current US medical students have begun to rely on electronic information repositories (such as UpToDate, AccessMedicine, and Wikipedia) for their pre-clerkship medical education. However, it is unclear whether these resources are appropriate for this level of learning due to factors involving information quality, level of evidence, and the requisite knowledge base. This study evaluated the appropriateness of electronic information resources from a novel perspective: the amount of mental effort learners invest in interactions with these resources and the effects of the experienced mental effort on learning.

Methods

Eighteen first-year medical students read about three previously unstudied diseases in the above-mentioned resources (a total of fifty-four observations). Their eye movement characteristics (i.e., fixation duration, fixation count, visit duration, and task-evoked pupillary response) were recorded and used as psychophysiological indicators of the experienced mental effort. After reading, students' learning was assessed with multiple-choice tests. Eye metrics and test results constituted quantitative data analyzed according to the repeated Latin square design. Students' perceptions of interacting with the information resources were also collected. Participants' feedback during semi-structured interviews constituted qualitative data and was transcribed, reviewed, and open coded for emergent themes.

Results

Compared to AccessMedicine and Wikipedia, UpToDate was associated with significantly higher values of eye metrics, suggesting learners experienced higher mental effort. Despite these differences in mental effort, no statistically significant difference in learning outcomes was found. Moreover, descriptive statistical analysis of the knowledge test scores suggested similar levels of learning regardless of the information resource used.

Conclusions

Judging by the learning outcomes, all three information resources were found appropriate for learning. UpToDate, however, when used alone, may be less appropriate for first-year medical students' learning as it does not fully address their information needs and is more demanding in terms of cognitive resources invested.

Keywords: Medical Education, Problem-Based Learning, Information-Seeking Behavior, Hypermedia, Reading, Information Science

Many medical schools in the United States have adopted learner-centered, pre-clerkship curricula in the form of problem-based learning (PBL) [1]. PBL highlights learning by solving real-life medical problems and is intended to inspire students to ask questions in search of feasible solutions [2]. The PBL approach in medicine also acknowledges that no person can retain all the information that constitutes medicine. Thus, it emphasizes the importance of research skills that help learners find the information necessary to address their information needs [3].

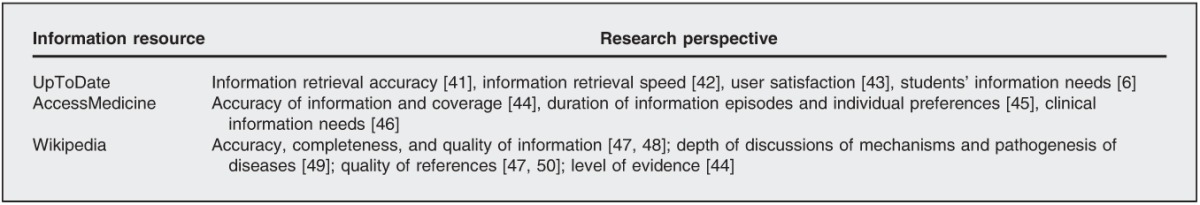

Another distinguishing feature of PBL is the self-directed and self-regulated learning environment [4]. By strategically planning and taking responsibility for their own learning, medical students address their information needs by using a variety of resources, most frequently electronic information resources [5, 6] that they self-select [7, 8]. Among the most commonly used electronic information resources are electronic textbooks (e.g., Harrison's Online) and textbook collections (e.g., AccessMedicine) [5, 6], textbook-like resources (e.g., UpToDate) [6, 9], and electronic encyclopedias (e.g., Wikipedia) [10, 11]. Previous research has provided evidence as to the appropriateness of these information resources for learning and medical practice from several perspectives (Table 1). This body of evidence, however, remains sporadic and somewhat controversial.

Table 1.

Examples of research on the appropriateness of UpToDate, AccessMedicine, and Wikipedia

We propose using the amount of mental effort that learners invest in acquiring and processing information from electronic information resources as a measure of resource appropriateness. We demonstrate the feasibility of the proposed approach with the examples of UpToDate, AccessMedicine, and Wikipedia, the three information resources most commonly used among medical students [5, 6, 9, 10, 11].

Mental effort refers to the cognitive capacity allocated by the learner when working on a task [12]. Mental effort is a measurable dimension of cognitive load, the central construct of cognitive load theory (CLT), which was first introduced by John Sweller in the late 1980s in the context of problem-solving tasks. The main assumption of CLT is the limited processing capacity of human working memory [13], which means that learning is likely to be successful when mental effort is spent predominantly on knowledge acquisition. During task performance, mental effort can be expended in three major ways [14]:

1. Mental effort can be expended on overcoming the intrinsic cognitive load imposed by the inherent complexity of the learning material.

2. Mental effort can be expended on dealing with the germane cognitive load associated with the efforts of constructing new meaning.

3. Mental effort can be expended on overcoming extraneous cognitive load imposed by the format and manner of information organization and presentation.

In other words, mental effort can be spent figuring out the lesson to be learned, figuring out what it means, and overcoming barriers from organization and presentation, which are extraneous to the content of the lesson itself.

The three types of cognitive load are considered additive [15]: they draw on the same limited working memory capacity, so an increase in one type leaves less capacity for the other two. This means that whether the amount of mental effort invested in overcoming extraneous cognitive load will be detrimental for learning depends on the amount of mental effort invested in overcoming intrinsic or germane cognitive load. If learning requires a significant amount of mental effort for understanding difficult material, mental effort invested in overcoming the barriers associated with its presentation should be reduced to a minimum. Otherwise, overall working memory capacity will be exceeded, which may result in inferior learning. That is why extraneous cognitive load is considered to interfere the most with efficient information processing and to be the most detrimental for learning [15].
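The additivity assumption can be written compactly. The following is only an illustrative sketch: the symbols $L_I$, $L_E$, and $L_G$ (intrinsic, extraneous, and germane load) and $C_{\mathrm{WM}}$ (working memory capacity) are shorthand introduced here for illustration, not notation taken from the cited sources.

```latex
L_{\mathrm{total}} = L_I + L_E + L_G \le C_{\mathrm{WM}}
\quad\Longrightarrow\quad
L_G \le C_{\mathrm{WM}} - L_I - L_E
```

Read this way, for a fixed capacity and difficult material (large $L_I$), every unit of extraneous load $L_E$ directly reduces the room left for the germane load $L_G$ associated with constructing new meaning.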

With the growing popularity of electronic learning environments, CLT, with its concepts of cognitive load and mental effort, has become one of the leading theories used to explore the utility of these environments for learning. Hypermedia is a specific example of an electronic learning environment where CLT is highly relevant [16, 17], because structural and functional peculiarities of hypermedia carry potential risks of imposing high mental effort necessary to overcome sources of extraneous cognitive load. Among these sources are the presence of network-like information structures that are interconnected via hyperlinks [18]; multiple formats of content presentation (e.g., text, audio, graphics, and animation) [18]; and prevalence of learner rather than system control over the order, selection, and presentation of information [19]. In keeping with the proposed research direction, we consider the electronic information resources included in this study to be examples of hypermedia. The features and functionality of all three information resources are consistent with the features and functionality of hypermedia described above.

Several studies have demonstrated how hypermedia features can serve as sources of extraneous cognitive load and affect learners' mental effort. In certain instances, deciding which hyperlinks to follow may require additional mental effort. Conklin referred to this phenomenon as “cognitive overhead” and explained that navigation planning and information retrieval occupy cognitive resources of working memory, making them unavailable for efficient information processing [18].

Despite the prevalence of sources of high extraneous cognitive load in hypermedia, features like multiple formats of information presentation and hyperlinks present excellent learning opportunities. By taking advantage of hyperlinks, learners can construct their own paths to understanding [20]. By exploring hypermedia on their own, learners get a chance to gradually integrate new and existing information and, thus, construct new knowledge [21].

MEASUREMENT OF MENTAL EFFORT

A variety of modalities allow measurement of a learner's mental effort, including assessment of behavior, physiology, psychology, and learning outcomes. Another widely used method for measuring mental effort during task performance is eye tracking, which represents a combination of physiology and psychology. Eye tracking (i.e., recording eye movement data while participants are working on a task) is an accurate and unobtrusive psychophysiologic measure of cognitive processes that can provide insight into the learner's allocation of attention and cognitive capacity during task performance [22].

Two basic components of measurable eye movement during information acquisition are saccades and fixations [23]. Fixations, when the eye is held still, occur during new information acquisition. Saccades are quick, short eye movements or jerks. During saccades, information acquisition is typically impossible. Fixation duration, fixation count, and total visit duration are some of the many characteristics of eye movements helpful in interpreting cognitive processes [24]. When reading involves longer words and words that are less familiar to the learner, fixation frequency increases as a proxy of increased cognitive demands [25, 26]. As text becomes conceptually more difficult and requires more mental effort from the learner to understand, fixation duration increases as well [27]. Unlike learners with prior expertise and knowledge, novices take longer to fixate on task-relevant information [28], which prolongs their total visit duration on a task.

Task-evoked pupillary response (TEPR) is another reliable indicator of fluctuations in cognitive processes during task performance [29, 30]. TEPR may be represented by, but is not limited to, metrics like mean pupil dilation (MPD), the average pupil diameter over a given interval of time [31]; lability, the difference between the maximum and the minimum values of pupil size during the task [32]; and percent change in pupil size (PCPS) [29]. Although it is most common to observe an increase in pupil size in response to increased workload [29], it has been suggested that pupil constriction can also be an indication of increased mental effort associated with rigorous information processing demands [30].
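The three TEPR metrics named above can be illustrated with a minimal sketch. It is not the study's analysis code: the function names, the use of `None` for missing samples (e.g., blinks), and the sample values are all hypothetical, and PCPS is assumed here to be the percent difference between the mean task pupil size and a resting baseline.

```python
from statistics import mean

def clean(samples):
    """Drop missing samples (assumed to be recorded as None, e.g., during blinks)."""
    return [s for s in samples if s is not None]

def mean_pupil_dilation(samples):
    """MPD: average pupil diameter (mm) over the interval."""
    return mean(clean(samples))

def lability(samples):
    """Lability: maximum minus minimum pupil size (mm) during the task."""
    valid = clean(samples)
    return max(valid) - min(valid)

def percent_change_in_pupil_size(samples, baseline):
    """PCPS (assumed definition): percent change of mean task pupil size from baseline."""
    return (mean_pupil_dilation(samples) - baseline) / baseline * 100.0

# Hypothetical pupil diameters in mm; a real 60 Hz recording would have 60 samples per second.
task = [3.25, None, 3.5, 3.75, 3.5]
baseline = 4.0

print(mean_pupil_dilation(task))
print(lability(task))
print(percent_change_in_pupil_size(task, baseline))
```

A negative PCPS in this sketch corresponds to constriction relative to baseline, which is the direction discussed in the text as a possible sign of increased mental effort.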

STUDY HYPOTHESES

We hypothesized that different resources would differ in the amount of extraneous cognitive load they impose, and this difference could be measured using the eye metrics outlined above. We anticipated these differences would affect learners' mental effort invested in overcoming this type of cognitive load.

Further, according to the principles of additivity of cognitive load, in situations requiring both high mental effort to be expended on understanding the learning material and high mental effort invested in overcoming the sources of extraneous cognitive load, there is usually little room for mental effort associated with knowledge construction (i.e., germane [relevant] cognitive load, the work necessary to actually store the knowledge). Accordingly, we hypothesized that learner interactions with information resources that require higher mental effort toward overcoming the sources of extraneous cognitive load would negatively affect learning.

Finally, we wanted to understand whether or not students' own accounts of interacting with the information resources were consistent with the psychophysiological measurements.

METHODS

The experiment was conducted during the summer semester of the 2013/14 academic year. Participants included eighteen first-year medical students, representing the class of 2017 at University of Missouri School of Medicine. The sample included eleven males and seven females. Fifteen participants were between twenty-two and twenty-six years old, with bachelor's degrees. Three students were twenty-seven years or older, with master's degrees. Five students indicated that English was their second language. Participants were approached via email and invited to participate in the research voluntarily with a monetary compensation for their time.

Three electronic information resources—UpToDate, AccessMedicine, and Wikipedia—were included in the experiment based on their popularity among medical students for learning [5, 6, 9, 10, 11]. Students' mental effort during information acquisition and processing from these resources was approximated through the combination of eye metrics such as total fixation duration (in seconds), fixation count (in counts), total visit duration (in seconds), and TEPR (in mm). These were recorded with a Tobii X2-60 eye tracker (Tobii Technology, Sweden), which captures 60 eye samples per second.

We intentionally chose learning material that we believed required similar mental effort to understand (i.e., similar intrinsic cognitive load), which allowed us to focus on measuring mental effort associated primarily with overcoming the sources of extraneous cognitive load. Specifically, we chose three diseases that were relatively novel to and complex for the students: idiopathic pulmonary fibrosis (IPF), primary sclerosing cholangitis (PSC), and neurosarcoidosis (NS).

To understand whether or not students' own experiences of interacting with the three information resources reflected psychophysiological measurements, we conducted semi-structured, end-of-session interviews (Appendix, online only).

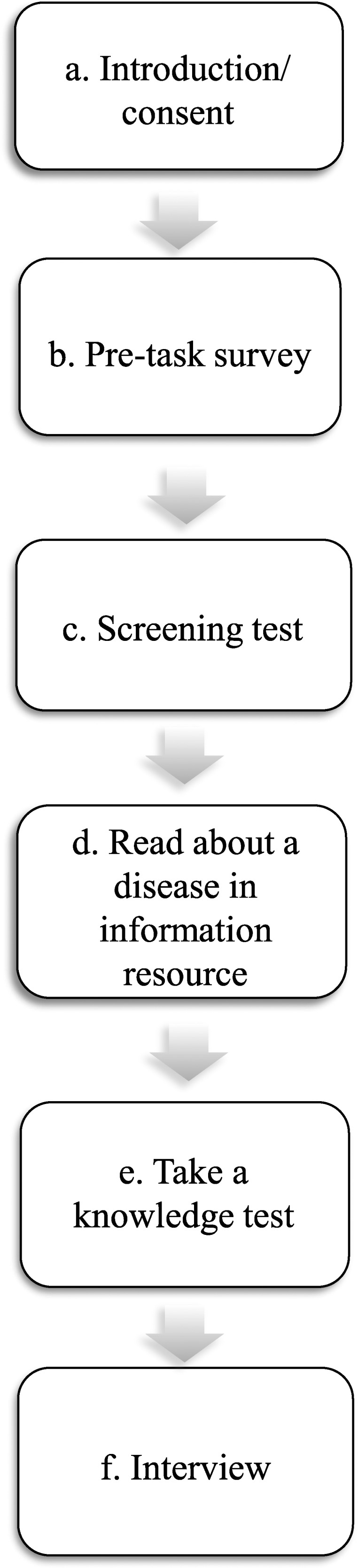

Approval for research was obtained through the governing university's institutional review board. The experimental procedure included several steps (Figure 1). The measured task consisted of each student reading about 3 diseases from the assigned information resources. A repeated Latin square design (LSD) [33] was used to assign diseases and resources to each participant. (A Latin square is an n-by-n table in which each treatment appears exactly once in each row and each column.) The permutations of the 3 information resources were assigned as treatment types, while the permutations of the 3 diseases and participants' IDs were assigned as 2 nuisance factors (Table 2, online only). Because the number of treatment variations was small (n=3) but considerable variability among them was expected, replicate Latin squares were used [34]. To accommodate the conditions of the experiment, a total of 6 Latin squares were randomly generated. Inclusion of six 3-by-3 Latin squares ensured a total of 54 observations of students' interactions with information resources; each resource was used 6 times for each of the 3 diseases.
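The assignment scheme described above can be sketched in a few lines. This is an illustrative reconstruction under stated assumptions, not the procedure actually used in the study: the randomization method (shuffling the rows, columns, and labels of a cyclic square), the seed, and all function names are hypothetical. Rows stand for participants, columns for diseases, and cell values for the assigned resource.

```python
import random
from collections import Counter

RESOURCES = ["UpToDate", "AccessMedicine", "Wikipedia"]
DISEASES = ["IPF", "PSC", "NS"]

def random_latin_square(symbols, rng):
    """Return a randomized n-by-n Latin square over `symbols`."""
    n = len(symbols)
    # Cyclic base square: every symbol index occurs once per row and per column.
    base = [[(r + c) % n for c in range(n)] for r in range(n)]
    rows = list(range(n))
    cols = list(range(n))
    labels = list(symbols)
    # Shuffling rows, columns, and labels preserves the Latin property.
    rng.shuffle(rows)
    rng.shuffle(cols)
    rng.shuffle(labels)
    return [[labels[base[r][c]] for c in cols] for r in rows]

def build_assignments(n_squares=6, seed=0):
    """Stack replicate squares into (participant, disease, resource) triples."""
    rng = random.Random(seed)
    assignments = []
    participant = 1
    for _ in range(n_squares):
        for row in random_latin_square(RESOURCES, rng):
            for disease, resource in zip(DISEASES, row):
                assignments.append((participant, disease, resource))
            participant += 1
    return assignments

assignments = build_assignments()
pair_counts = Counter((disease, resource) for _, disease, resource in assignments)
print(len(assignments), "observations")
for pair, count in sorted(pair_counts.items()):
    print(pair, count)
```

With six squares of three participants each, the sketch yields 18 participants and 54 observations, and every disease-resource pair occurs six times, matching the counts reported in the text. It does not model the order in which a participant read the three diseases, which the text does not specify.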

Figure 1.

Experimental procedure

Before the reading task, students were surveyed about their level of medical knowledge about each disease. Eight students reported being familiar with PSC, five with IPF, and two with NS; however, only one student reported having a good medical knowledge of IPF. After the reading task, students were given a ten-question, multiple-choice knowledge test to assess their learning about each disease. Test questions were generated from peer-reviewed journal publications. Structurally, each question was formatted in accordance with the manual on constructing written test questions for the basic and clinical sciences [35] and developed with seven answer options: one correct, four distractors, and two more answer options of “I don't know”/“I can't remember reading about it” to prevent participants from randomly guessing. All prepared materials were independently reviewed by two medical school curriculum designers to ensure their coverage in all three resources and appropriateness for the experiment.

During data collection, both quantitative and qualitative data were obtained. Eye movements constituted quantitative data and were analyzed with the repeated LSD. Qualitative data from semi-structured interviews were transcribed verbatim, reviewed, and open coded for emergent themes in QDA Miner qualitative software.

RESULTS

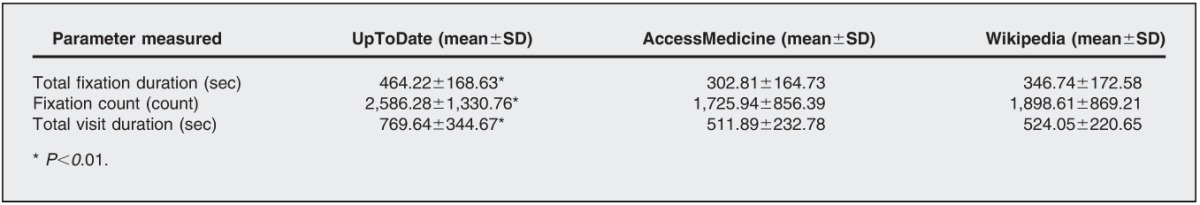

Results of the repeated LSD revealed statistically significant differences in total fixation duration, fixation count, and total visit duration among the 3 information resources (F(2, 32)=7.364, P=0.002), which suggested differences in mental effort experienced by learners when interacting with each resource. Post hoc Fisher's least significant difference test indicated that UpToDate was associated with significantly higher values of all 3 eye movement characteristics (P<0.01), suggesting the highest mental effort (Table 3). Results of the repeated LSD analysis, however, yielded no statistically significant differences in TEPR among the 3 information resources (P>0.05).

Table 3.

Significance of the results

Students' feedback provided additional insight into why UpToDate could have required more mental effort. According to students, when they used UpToDate, they encountered a large volume of information, which was often irrelevant to their information needs. Students explained that background information was not available in UpToDate; therefore, they encountered a lot of unfamiliar medical terminology, which required rereading and sometimes led to incomplete understanding of sentences. Further, UpToDate's layout—high text density, small headings, continuous flow of text rather than its partitioning into sections, and long sentences containing several ideas at a time—was also named as an obstacle to efficient information processing.

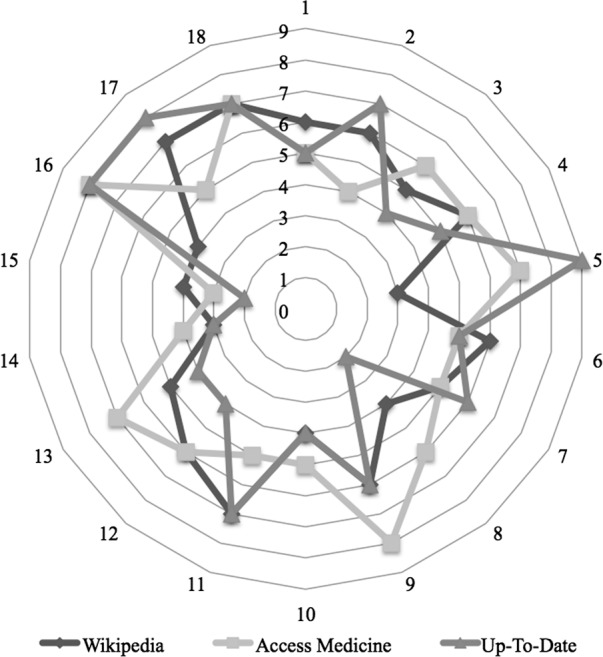

Despite the significant differences in eye metrics suggesting differences in learners' mental effort, results of the repeated LSD showed no statistically significant difference in learning outcomes as measured by the knowledge test (P>0.05). In view of these nonsignificant findings, we conducted additional descriptive statistical analysis of the knowledge test scores, which suggested that, on average, participants were able to answer about half of the ten test questions correctly when using UpToDate (M=5.33, SD=2.06), AccessMedicine (M=5.72, SD=1.36), or Wikipedia (M=5.22, SD=1.31) (Figure 2).

Figure 2.

Results of the knowledge test

Students' feedback might explain the absence of significant differences in learning outcomes among the three resources. As noted, students found UpToDate overwhelming due to the large volume of information and lack of relevance to their information needs. To manage information overload, participants reported using a few coping strategies for information seeking. These included:

1. Students reported using CTRL+F, a shortcut that finds a specific word, to target specific pieces of sought information.

2. They refrained from using hyperlinks primarily because of their format. Most hyperlinks were sentence-long, which interfered with reading continuity and did not allow students an opportunity to look up just an unfamiliar word. If followed, hyperlinks took students to other pages that were similarly dense with content, which created the danger of being lost in the information resource.

3. Use of media elements (e.g., images) was scarce. Media could only be accessed via hyperlinks and viewed in a separate pop up window. This often resulted in additional mental effort required to reinstate in their working memory the meaning of text they read before they viewed the media.

Students found Wikipedia to be extremely helpful for obtaining background understanding of a topic and determining the direction of further research. Among Wikipedia's features that, according to students, promoted information acquisition and processing were (1) readable font, visible headings, clearly demarcated sections, and an abundance of easy-to-use hyperlinks that allowed students to look up the meaning of unknown words (this use of hyperlinks is different from using hyperlinks to obtain related information); (2) topic summaries at the beginning of each article; and (3) images embedded in the content pages.

AccessMedicine was named by students as the most appropriate information resource for learning. The majority of students perceived this resource to be written in a more accessible, but still scientific, manner compared to UpToDate, which facilitated their comprehension of the material. Some students suggested that AccessMedicine resembled physical textbooks (e.g., in its chapters and index) and was therefore intuitive to use. Among other features that they cited for facilitating efficient information processing were clear partitioning of information into expandable sections, visible headings, and white space on both sides of the text that promoted faster reading.

DISCUSSION

In this experiment, we evaluated the appropriateness of three electronic information resources (UpToDate, AccessMedicine, and Wikipedia) for learning in the pre-clerkship curriculum. We measured learners' mental effort invested in interactions with each information resource. As a measurement of mental effort, we relied on a combination of eye metrics (total fixation duration, fixation count, total visit duration, and task-evoked pupillary response) that reflected concurrent cognitive processes.

Significant differences in eye metrics indicated mental effort fluctuations among the evaluated resources. UpToDate was associated with significantly higher values of mental effort, as measured in three categories: total fixation duration, fixation count, and total visit duration. Student feedback indicated that content organization and presentation in UpToDate coincided with the sources of extraneous cognitive load typically found in hypermedia and known to increase mental effort associated with overcoming them, for example, deciphering of meaning during the reading processes [36], page length, and amount of text [37].

Analysis of PCPS showed a larger percent of participants' pupil constriction from baseline when using UpToDate, which also indicated a higher experienced mental effort when using this resource [30]. Students identified learning with AccessMedicine and Wikipedia, on the other hand, as requiring less mental effort, most likely due to the formats of material presentation that facilitated efficient information acquisition and processing.

Sweller argued that mental effort expended toward overcoming a combination of high intrinsic and extraneous cognitive load might overwhelm the limited capacity of the working memory needed for constructing meaning and would thus impair learning [38]. Accordingly, we expected that significantly higher values of mental effort associated with information acquisition and processing in UpToDate would result in inferior learning. Expected differences, however, were not confirmed by the statistical analysis. Instead, participants achieved similar learning outcomes when using all three resources, even though their indicators of mental effort varied significantly.

Differences among resources

Our results suggest that learning with a resource like Wikipedia was not inferior compared to learning with traditionally recognized information resources like UpToDate and AccessMedicine, possibly because of the way learning material was organized and presented to the students. In other words, this particular case demonstrated that any allegedly questionable quality of information in Wikipedia [39, 40] could be counterbalanced by efficient access to a broad range of information. The findings about the appropriateness of Wikipedia for learning are important for medical educators and librarians as they attempt to reconcile classical textbook medical education with newer digital resources. As medical education becomes deliberately learner centered, educators should strive to deliver materials that maximize student learning. Librarians can and should play a major role in selecting materials and making them available.

The most significant lessons are the features that students find most appealing about user-friendly websites such as Wikipedia. According to the student feedback, maximizing information connectedness with hyperlinks, improving navigation, and making the writing accessible to a general audience stood out as positive factors. It could be argued that these factors might also promote reduced mental effort (even when learning novel and difficult material) and facilitate learning.

The results also indicate that, although students achieved similar learning outcomes, they did so at a higher cost of invested cognitive resources when using UpToDate. This finding demonstrates that, in this particular case, obstacles to efficient use of information, be they visual complexity or unfamiliar terminology, could possibly overshadow the quality of information.

Limitations

The sample was small (eighteen students) and drawn from one class at one medical school, so it might not be representative. During the tasks, students were limited in their interactions to just one information resource at a time and given only about twenty minutes to learn about each disease, which may be considered an unnatural learning behavior. In addition, even though the disease topics were carefully chosen for the study, the outcomes might be different if other diseases were used or if students' baseline knowledge of the diseases was established through formal testing.

Future research

Given the importance of information literacy and self-directed learning in PBL, future studies about the appropriateness of information resources are warranted. These studies could include exploring how and which particular interface design characteristics and functionality features of electronic information resources affect students' information behavior and eye movement patterns. In addition, providing students with opportunities for cued retrospective reflection on their own information behavior could help explain the underlying motivations of the observed actions. Finally, introducing instruments to measure long-term learning could shed more light on the appropriateness of electronic information resources. Librarians can play a role in such studies and certainly should use the results to help design and select information resources for medical students. It is likely that similar characteristics pertain to nursing and allied health students as well.

Biography

Dinara Saparova, MA, PhD (ABD) (corresponding author), ds754@mail.missouri.edu, School of Information Science and Learning Technologies, University of Missouri, 111 London Hall, Columbia, MO 65211; Nathanial S. Nolan, BS, NSNolan@mizzou.edu, School of Medicine, University of Missouri, One Hospital Drive, MA204, DC018.00, Columbia, MO 65212

Footnotes

This article has been approved for the Medical Library Association's Independent Reading Program <www.mlanet.org/page/independent-reading-program>.

A supplemental appendix and supplemental Table 2 are available with the online version of this journal.

REFERENCES

- 1.Barrows HS. Problem-based learning applied to medical education. Springfield, IL: Southern Illinois University Press; 2000. [Google Scholar]

- 2.Savery JR. Overview of problem-based learning: definitions and distinctions. Interdiscip J Probl Learn. 2006;1(1):9–20. [Google Scholar]

- 3.Woods DR. Problem-based learning: how to gain the most from PBL. Hamilton, ON, Canada: McMaster University; 1994. 176. p. [Google Scholar]

- 4.Blumberg P, Michael JA. Development of self-directed learning behaviors in a partially teacher-directed problem-based learning currculum. Teach Learn Med. 1992;4(1):3–8. [Google Scholar]

- 5.Chen KN, Lin PC, Chang SS, Sun HC. Library use by medical students: a comparison of two curricula. J Librariansh Inf Sci [Internet] 2011 Sep;43(3):176–84. [cited 16 Mar 2014]. < http://lis.sagepub.com/cgi/doi/10.1177/0961000611410928>. [Google Scholar]

- 6.Peterson MW, Rowat J, Kreiter C, Mandel J. Medical students' use of information resources: is the digital age dawning. Acad Med. 2004 Jan;79(1):89–95. doi: 10.1097/00001888-200401000-00019. [DOI] [PubMed] [Google Scholar]

- 7.Rankin JA. Problem-based medical education: effect on library use. Bull Med Lib Assoc. 1992 Jan;80(1):36–43. (Available from: < http://www.pubmedcentral.nih.gov/articles/PMC225613/>. [cited 28 Sep 2015].) [PMC free article] [PubMed] [Google Scholar]

- 8.Dodd L. The impact of problem-based learning on the information behavior and literacy of veterinary medicine students at University College Dublin. J Acad Librariansh [Internet] 2007 Mar;33(2):206–16. [cited 28 Sep 2015]. < http://linkinghub.elsevier.com/retrieve/pii/S0099133306002266>. [Google Scholar]

- 9.Cooper AL, Elnicki DM. Resource utilisation patterns of third-year medical students. Clin Teach. 2011 Mar;8(1):43–7. doi: 10.1111/j.1743-498X.2010.00393.x. [DOI] [PubMed] [Google Scholar]

- 10.Allahwala UK, Nadkarni A, Sebaratnam DF. Wikipedia use amongst medical students-new insights into the digital revolution. Med Teach. 2013 Apr;35(4):337. doi: 10.3109/0142159X.2012.737064. [DOI] [PubMed] [Google Scholar]

- 11.Judd T, Kennedy G. Expediency-based practice? medical students' reliance on Google and Wikipedia for biomedical inquiries. Br J Educ Technol. 42(2):351–60. [Internet] 21 Mar 2011. [cited 18 Mar 2014]. DOI: http://dx.doi.org/10.1111/j.1467-8535.2009.01019.x. [Google Scholar]

- 12.Paas FGWC, Van Merriënboer JJG. Instructional control of cognitive load in the training of complex cognitive tasks. Educ Psychol Rev. 1994;6(4):351–71. [Google Scholar]

- 13.Sweller J. Cognitive load during problem solving: effects on learning. Cogn Sci [Internet] 1988 Jun;12(2):257–85. DOI: http://dx.doi.org/10.1016/0364-0213(88)90023-7. [Google Scholar]

- 14.Sweller J, van Merriënboer JJG, Paas FGWC. Cognitive architecture and instructional design. Educ Psychol Rev. 1998;10(3):251–96. [Google Scholar]

- 15.Van Merriënboer JJG, Sweller J. Cognitive load theory in health professional education: design principles and strategies. Med Educ. 2010 Jan;44(1):85–93. doi: 10.1111/j.1365-2923.2009.03498.x. [DOI] [PubMed] [Google Scholar]

- 16.DeStefano D, LeFevre JA. Cognitive load in hypertext reading: a review. Comput Human Behav [Internet] 2007 May;23(3):1616–41. [cited 25 Nov 2014]. < http://linkinghub.elsevier.com/retrieve/pii/S0747563205000658>. [Google Scholar]

- 17.Gerjets P, Scheiter K. Goal configurations and processing strategies as moderators between instructional design and cognitive load: evidence from hypertext-based instruction. Educ Phychologist. 2003;38(1):33–41. [Google Scholar]

- 18.Conklin J. Hypertext: an introduction and survey. Computer (Long Beach, CA) 1987;20(9):17–41. [Google Scholar]

- 19.Scheiter K, Gerjets P. Learner control in hypermedia environments. Educ Psychol Rev [Internet] 2007 Sep;19(3):285–307. [cited 24 Nov 2014]. < http://link.springer.com/10.1007/s10648-007-9046-3>. [Google Scholar]

- 20.Jacobson MJ, Spiro RJ. Hypertext learning environments, cognitive flexibility, and the transfer of complex knowledge: an empirical investigation. J Educ Comput Res. 1995;12(4):301–33. [Google Scholar]

- 21.Jonassen DH. Designing constructivist learning environments. In: Reigeluth CM, editor. Instructional design theories and models: a new paradigm of instructional theory. New York, NY: Routledge; 1999. pp. 215–39. In. p. [Google Scholar]

- 22.Paas FGWC, Ayres P, Pachman M. Assessment of cognitive load in multimedia learning: theory, methods, and applications. In: DH Robinson, Schraw G., editors. Recent innovations in educational technology that facilitate student learning. Information Age Publishing; 2008. pp. 11–35. In. eds. p. [Google Scholar]

- 23.Rayner K. Eye movements and attention in reading, scene perception, and visual search. Q J Exp Psychol [Internet] 2009 Aug;62(8):1457–506. doi: 10.1080/17470210902816461. [DOI] [PubMed] [Google Scholar]

- 24.Poole A, Ball LJ. Eye tracking in human-computer interaction and usability research: current status and future prospects. In: Ghaoui C, editor. Encyclopedia of human-computer interaction. Pennsylvania: Idea Group; 2005.

- 25.Rayner K, McConkie GW. What guides a reader's eye movement. Vision Res. 1976;16:829–37. doi: 10.1016/0042-6989(76)90143-7.

- 26.Rayner K, Sereno SC, Raney GE. Eye movement control in reading: a comparison of two types of models. J Exp Psychol. 1996;22(5):1188–200. doi: 10.1037//0096-1523.22.5.1188.

- 27.Jacobson JZ, Dodwell PC. Saccadic eye movements during reading. Brain Lang. 1979;8(3):303–14. doi: 10.1016/0093-934x(79)90058-0.

- 28.Haider H, Frensch PA. Eye movement during skill acquisition: more evidence for the information-reduction hypothesis. J Exp Psychol Learn Mem Cogn. 1999;25(1):172–90.

- 29.Iqbal ST, Zheng XS, Bailey BP. Task-evoked pupillary response to mental workload in human-computer interaction. In: Proceedings of the 2004 Conference on Human Factors and Computing Systems, CHI '04 [Internet]. New York, NY: ACM Press; 2004. p. 1477. [cited 28 Sep 2015]. <http://portal.acm.org/citation.cfm?doid=985921.986094>.

- 30.Poock GK. Information processing vs pupil diameter. Perceptual Motor Skills. 1973;37:1000–2. doi: 10.1177/003151257303700363.

- 31.Beatty J, Lucero-Wagoner B. The pupillary system. In: Cacioppo JT, Tassinary LG, Berntson GG, editors. Handbook of psychophysiology. 2nd ed. Cambridge University Press; 2000. pp. 142–62.

- 32.Metalis SA, Rhoades BK, Hess EH, Petrovich SB. Pupillometric assessment of reading using materials in normal and reversed orientations. J Appl Psychol. 1980 Jun;65(3):359–63.

- 33.Natrella M. NIST/SEMATECH e-handbook of statistical methods. National Institute of Standards and Technology. 2010.

- 34.Replicated Latin squares, STAT 503 design of experiments [Internet]. Pennsylvania State University Online Courses; 2015 [cited 28 Sep 2015]. <https://onlinecourses.science.psu.edu/stat503/node/22>.

- 35.Case SM, Swanson DB. Constructing written test questions for the basic and clinical sciences [Internet]. 3rd ed. Philadelphia, PA: National Board of Medical Examiners; 2002 [cited 28 Sep 2015]. <http://www.nbme.org/publications/item-writing-manual-download.html>.

- 36.Antonenko PD, Niederhauser DS. The influence of leads on cognitive load and learning in a hypertext environment. Comput Human Behav [Internet] 2010 Mar;26(2):140–50. [cited 22 Jan 2015]. <http://linkinghub.elsevier.com/retrieve/pii/S0747563209001691>.

- 37.Geissler G, Zinkhan G, Watson R. Web home page complexity and communication effectiveness. J Assoc Inf Syst. 2001;2(2).

- 38.Sweller J. Cognitive load theory, learning difficulty, and instructional design. Learn Instr. 1994;4:295–312.

- 39.Kupferberg N, Protus BM. Accuracy and completeness of drug information in Wikipedia: an assessment. J Med Lib Assoc. 2011 Oct;99(4):310–3. doi: 10.3163/1536-5050.99.4.010.

- 40.Hasty RT, Garbalosa RC, Barbato VA, Valdes PJ, Powers DW, Hernandez E, John JS, Suciu G, Qureshi F, Popa-Radu M, San Jose S, Drexler N, Patankar R, Paz JR, King CW, Gerber HN, Valladares MG, Somji AA. Wikipedia vs peer-reviewed medical literature for information about the 10 most costly medical conditions. J Am Osteopath Assoc. 2014;114(5):368–73. doi: 10.7556/jaoa.2014.035.

- 41.Fenton SH, Badgett RG. A comparison of primary care information content in UpToDate and the National Guideline Clearinghouse. J Med Lib Assoc. 2007 Jul;95(3):255–9. doi: 10.3163/1536-5050.95.3.255. Correction: J Med Lib Assoc. 2007 Oct;95(4):473.

- 42.Ahmadi SF, Faghankhani M, Javanbakht A, Akbarshahi M, Mirghorbani M, Safarnejad B, Baradaran H. A comparison of answer retrieval through four evidence-based textbooks (ACP PIER, Essential Evidence Plus, First Consult, and UpToDate): a randomized controlled trial. Med Teach [Internet] 2011;33(9):724–30. doi: 10.3109/0142159X.2010.531155.

- 43.Campbell R, Ash J. An evaluation of five bedside information products using a user-centered, task-oriented approach. J Med Lib Assoc. 2006 Oct;94(4):435–41, e206–7.

- 44.Pender MP, Lassere BA, Kruesi L, Del Mar C, Anuradha S. Putting Wikipedia to the test: a case study. Presented at: Special Libraries Association Annual Conference; Seattle, WA; 2008.

- 45.Hayward RS, El-Hajj M, Voth TK, Deis K. Patterns of use of decision support tools by clinicians. Annual Symposium Proceedings/AMIA Symposium. 2006:329–33.

- 46.Esparza JM, Shi R, McLarty J, Comegys M, Banks DE. The effect of a clinical medical librarian on in-patient care outcomes. J Med Lib Assoc. 2013 Jul;101(3):185–91. doi: 10.3163/1536-5050.101.3.007.

- 47.Lavsa SM, Corman SL, Culley CM, Pummer TL. Reliability of Wikipedia as a medication information source for pharmacy students. Curr Pharm Teach Learn. 2011;3(2):154–8.

- 48.Wood A, Struthers K. Pathology education, Wikipedia and the Net generation. Med Teach. 2010 Jan;32(7):618. doi: 10.3109/0142159X.2010.497719.

- 49.Azer SA. Evaluation of gastroenterology and hepatology articles on Wikipedia: are they suitable as learning resources for medical students. Eur J Gastroenterol Hepatol. 2014;26(2):155–63. doi: 10.1097/MEG.0000000000000003.

- 50.Haigh C. Wikipedia as an evidence source for nursing and healthcare students. Nurse Educ Today. 2011 Feb;31(2):135–9. doi: 10.1016/j.nedt.2010.05.004.
