Abstract

Evidence-based practices (EBPs) reach consumers slowly because practitioners are slow to adopt and implement them. We hypothesized that giving psychotherapists a tool + training intervention that was designed to help the therapist integrate the EBP of progress monitoring into his or her usual way of working would be associated with adoption and sustained implementation of the particular progress monitoring tool we trained them to use (the Depression Anxiety Stress Scales on our Online Progress Tracking tool) and would generalize to all types of progress monitoring measures. To test these hypotheses, we developed an online progress monitoring tool and a course that trained psychotherapists to use it, and we assessed progress monitoring behavior in 26 psychotherapists before, during, immediately after, and 12 months after they received the tool and training. Immediately after receiving the tool + training intervention, participants showed statistically significant increases in use of the online tool and of all types of progress monitoring measures. Twelve months later, participants showed sustained use of any type of progress monitoring measure but not the online tool.

Keywords: dissemination, adoption, implementation, evidence-based practice, progress monitoring

The burden of mental illness in the United States is high, in part because clinicians are slow to adopt and implement evidence-based practices (EBPs). Seventeen years typically elapse before a research finding is translated into patient care (Weingarten, Garb, Blumenthal, Boren, & Brown, 2000). Even when they do adopt new EBPs, clinicians often fail to sustain their implementation over time (Stirman et al., 2012) or to generalize their use of the EBP to domains outside the one in which they were trained (Rosen & Pronovost, 2014).

The implementation science literature indicates that to increase adoption and implementation of an EBP, it is essential to provide implementation support that addresses clinicians’ barriers to adopting and implementing it (Baker et al., 2010; Damschroder et al., 2009). That is, trainers cannot simply present the details of the EBP; they must select or design an EBP that is not too complex or difficult, and they must actively help the clinician overcome impediments to using the EBP in his or her daily workflow.

Several types of data support this notion. Clinicians report that they are more willing to adopt a new EBP if they can integrate it into what they are already doing without changing other aspects of their practice (Cook, Schnurr, Biyanova, & Coyne, 2009; Stewart, Chambless, & Baron, 2012). Borntrager, Chorpita, Higa-McMillan, and Weisz (2009) showed that psychotherapists are more receptive to adopting modules than whole treatments, presumably at least in part because it is easier to integrate a module than an entire treatment into what the therapist is already doing. Casper (2007) showed that clinicians are more likely to adopt a new skill if they can report their reservations about the skill, and the impediments to using it, to a trainer who adapts the training to address those reservations and impediments. Other strategies for helping clinicians integrate a skill into their workflow include teaching the skill one step at a time, with opportunities to practice and receive feedback on performance at each step (Pronovost, Berenholtz, & Needham, 2008), and encouraging clinicians to reflect after a training workshop on what they learned and how they can use it in their practice (Bennett-Levy & Padesky, 2014). A final strategy involves providing reminders and accountability mechanisms in the work environment. Pronovost and colleagues (2006; see also Gawande, 2007) developed and studied a multifaceted intervention to increase physicians’ use of evidence-based procedures for inserting catheters in hospital intensive care units. The intervention included using a checklist to monitor and verify physicians’ implementation of the evidence-based procedures and stopping the provider when the procedures were not being followed. The intervention led to large decreases in hospital infection rates.

Together, these findings support the notion that increasing clinicians’ use of EBPs requires more than teaching the EBP; it also requires explicit and systematic efforts to help the clinician integrate the EBP into his or her daily workflow.

Thus, two factors that promote adoption and implementation of an EBP are that the clinician can adopt the EBP without modifying other aspects of his or her practice, and that the training explicitly and actively helps the clinician integrate the practice into his or her daily workflow. We addressed both factors as we selected an EBP and carried out a multifaceted intervention to train psychotherapists to use it.

We selected the EBP of progress monitoring, which we defined as using a written or online tool at the beginning of every session to monitor changes in a patient’s symptoms or functioning, and using that information to inform the treatment. We selected progress monitoring because it is a relatively straightforward evidence-based practice (see reviews by Carlier et al. (2012) and Goodman, McKay, and DePhilippis (2013)) that the clinician can add to any type of psychotherapy he or she is doing without changing that psychotherapy.

Our intervention had two parts: (1) an online progress monitoring tool that allowed clients to go online to complete the Depression Anxiety Stress Scales (DASS; Lovibond & Lovibond, 1995), and (2) a series of four online classes that trained clinicians to use the online tool. We expected each part of the intervention (the tool and the training) to promote participants’ use of progress monitoring in different ways. The online tool was designed to overcome many obstacles that our pilot work had identified as impediments to clinicians’ use of progress monitoring. It overcame the obstacle of selecting a measure by providing one (the DASS) that assesses symptoms common in most adult patients seeking psychotherapy. It also overcame the obstacles of obtaining a measure, getting it to the patient, scoring and plotting it, and interpreting the scores with reference to clinical norms, and it addressed clinicians’ reluctance to spend session time administering the measure (clients completed the measure online before the session). The classes taught clinicians ways to overcome the obstacles of forgetting to assign the measure, patient noncompliance, and uncertainty about how to use the data in the session. The four-class series allowed trainers to break the skill into steps and teach them one at a time (e.g., introducing the measure to the client, asking the client to complete the measure), and to give clinicians practice using the skill during and between classes, so they could get feedback and help improving their performance and overcoming obstacles. Many of these skills were applicable to progress monitoring tools of all sorts, not just the online tool, and thus promoted generalization from using the online progress monitoring tool to using all types of progress monitoring measures.

Based on the literature reviewed above, we hypothesized that giving psychotherapists a tool + training intervention that emphasized helping them integrate the EBP of progress monitoring into their daily workflow would be associated with adoption and increased implementation of the EBP. To test these hypotheses, we developed an online progress monitoring tool that allowed clients to complete the DASS online, along with a training intervention to help psychotherapists do progress monitoring, and we collected data from psychotherapists before, during, immediately after, and 3, 6, and 12 months after they received the tool + training intervention. We predicted that psychotherapists’ use of the online tool would increase immediately after they received it and again after they received training in progress monitoring, and that similar increases would occur in their use of all types of progress monitoring measures, reflecting generalization of training in the online tool to all types of progress monitoring. We predicted that the therapists’ increased use of progress monitoring would persist during the 12 months after the intervention. Because using any type of progress monitoring measure requires fewer changes to the clinician’s practice (the clinician need not use a computer or adopt a new measure such as the DASS), we predicted that use of any type of progress monitoring measure would show more sustained implementation than use of the online tool.

Method

Participants

We recruited practitioner participants by advertising on professional e-mail distribution lists (e.g., Association for Behavioral and Cognitive Therapies, Society of Clinical Psychology (Division 12) of the American Psychological Association) and in the co-principal investigators’ (J. B. P. and K. K.) professional networks.

We invited participants who met the following criteria: living in the USA; treating at least five adult patients weekly in outpatient psychotherapy; able to participate in research without needing permission from any administrative authority or able to get such permission quickly; able to attend all sessions of the progress monitoring training; having access to a computer and an internet connection in the office where they saw clients; conducting therapy in English; and not regularly using the online tool’s main assessment scale (the Depression Anxiety Stress Scales; DASS).

Twenty-six clinicians responded to our recruitment efforts and participated in the study. Most participants were female (65.4%) and Caucasian (96.2%). Just over half were Ph.D. psychologists (53.8%); the remainder were master’s-level providers. Participants’ mean age was 45.8 years (SD = 9.8). The majority (69.2%) worked in a private practice setting; 19.1% worked in a mental health clinic, and 11.5% worked in another setting. Participants had practiced psychotherapy for a mean of 12.7 years (SD = 8.8) and spent 22.6 hours/week (SD = 11.2) providing psychotherapy.

Of the 26 psychotherapists who agreed to participate, four dropped out (two due to work and family stressors, one because our online tool was not compatible with his iPad, and one for unknown reasons). Twenty-two completed the training and provided at least some data after the training. Two of these 22 participants were lost to follow-up during the post-training follow-up period.

Intervention

The intervention we delivered had two parts: (1) an online tool and (2) training to use the online tool and all types of progress monitoring.

Online tool

We developed an online tool named Online Progress Tracking (OPT) that allowed clients to go online to complete the 21-item version of the Depression Anxiety Stress Scales (DASS), a widely used scale with good psychometric properties (Brown, Chorpita, Korotitsch, & Barlow, 1997) that assesses symptoms of depression, anxiety, and stress (Lovibond & Lovibond, 1995). With the permission of the measure’s developer, we added two items to the DASS that assessed suicidality. The tool displayed to the therapist (but not to the patient) the scores on the three subscales (Depression, Anxiety, Stress), the scores on each of the two suicide items, and a plot of the patient’s subscale scores over time. OPT also provided alerts and assistance to the therapist when patients were not making progress and when they endorsed symptoms of suicidality.
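To illustrate the kind of scoring OPT automated, here is a minimal Python sketch; it is not OPT’s actual code. It assumes the standard DASS-21 scoring key (seven items per subscale, each rated 0 to 3) and the common convention of doubling DASS-21 subscale sums so they are comparable with the 42-item DASS. Whether OPT applied that doubling is not stated here, and the two added suicide items are not modeled. The names `DASS21_KEY` and `score_dass21` are hypothetical.

```python
# Hypothetical sketch of DASS-21 scoring (not OPT's actual code).
# Assumes the standard DASS-21 item key, items rated 0-3, and doubling
# of each subscale sum to the 42-item DASS metric. The two suicide
# items added for OPT are not modeled.

DASS21_KEY: dict[str, tuple[int, ...]] = {
    "Depression": (3, 5, 10, 13, 16, 17, 21),
    "Anxiety": (2, 4, 7, 9, 15, 19, 20),
    "Stress": (1, 6, 8, 11, 12, 14, 18),
}


def score_dass21(responses: dict[int, int]) -> dict[str, int]:
    """Map item number (1-21) -> rating (0-3) to doubled subscale scores."""
    if sorted(responses) != list(range(1, 22)):
        raise ValueError("expected a rating for each of items 1-21")
    if any(rating not in (0, 1, 2, 3) for rating in responses.values()):
        raise ValueError("ratings must be 0, 1, 2, or 3")
    return {
        scale: 2 * sum(responses[item] for item in items)
        for scale, items in DASS21_KEY.items()
    }


# One weekly administration in which every item is rated 1.
print(score_dass21({item: 1 for item in range(1, 22)}))
```

For the example administration, each subscale score is 2 × 7 = 14. Calling the function once per weekly administration would yield the series of subscale scores that a tool like OPT could plot over time.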

Training to use the online tool

We taught a series of four weekly online classes in which we introduced participants to OPT, trained them to use it, and helped them integrate the tool into their daily workflow.

We trained participants in small groups of four to seven individuals. Group assignment was determined based on class scheduling; the schedule was set in advance and participants registered for whichever class best fit their schedule. Classes were taught by Persons and Eidelman (two classes), Persons and Hong (one class) and Koerner (one class).

We presented the audio portion of the course via a telephone conference call system that allowed us to create smaller breakout groups in which participants could practice the skills. We presented the video portion via a web hosting platform that allowed us to show PowerPoint (PP) slides and allowed participants to see the teachers and the PP slides (but not each other). We presented video demonstrations of progress monitoring skills via YouTube clips that participants watched during and/or after class. We sent participants handouts via e-mail before each class. We posted all the class materials (handouts, video demonstrations, PP slides, transcripts of the classes) on a webpage that participants could access at any time. We gave participants access to a listserv that provided a forum to get help from the instructors and their classmates. We used e-mail to introduce classmates to one another at the beginning of the course, and occasionally at other points in the course as needed.

The training consisted of an initial 60-minute orientation meeting and four 90-minute classes. In the orientation meeting, participants met each other, learned how to access the audio and video elements of the class, and learned how to complete the Therapist Diary Card so they could begin collecting baseline data on their use of progress monitoring. The Therapist Diary Card (described below) was used to collect data for the study, and it also contributed to the training by increasing participants’ awareness of their progress monitoring behavior (or lack of it).

Three weeks following the orientation meeting, participants attended the first class. During that class and in a narrated PP slide show they watched shortly before the first class, participants learned the nuts and bolts of using the online tool, learned about the DASS, received passwords giving them access to the tool, and learned the consent process their clients would follow to get access to the tool.

In classes 2, 3, and 4, participants received training designed to help them identify and overcome impediments to using the tool, as well as training in skills applicable to any type of progress monitoring (e.g., handling noncompliance and using the assessment data to guide clinical decision-making, including when the progress monitoring data indicated that the client was not making progress). The last class included a section in which the trainers helped participants identify strategies they would use to maintain the skills they had learned in the training course.

Measures

Participants’ demographic, training, and practice characteristics

These were assessed via self-report on a questionnaire we designed for this purpose, which participants completed online using SurveyMonkey.

Progress monitoring

We assessed progress monitoring in two ways: by examining OPT system data to count the number of times that OPT was used, and by collecting a Therapist Diary Card from participants on which they recorded their requests to clients to complete a progress monitoring measure.

OPT system data

For each participant at each time point, we counted the number of clients who used the DASS on OPT.

Therapist Diary Card

On the Therapist Diary Card, participants listed every session for every adult individual psychotherapy client they saw during a one-week period and indicated, for each session, whether they asked the client to complete (a) the Depression Anxiety Stress Scales (DASS); (b) Other Standardized Measures; (c) an Idiographic Log; or (d) no progress monitoring measure. When participants indicated on the Therapist Diary Card that they assigned the DASS, in the vast majority of cases they assigned the DASS on OPT, although in a handful of cases they used a paper-and-pencil version of the DASS. When participants assigned other progress monitoring measures, these were paper-and-pencil tools that participants obtained and scored on their own. Therapists assigned a wide variety of Other Standardized Measures (including the Beck Depression Inventory, the Outcome Rating Scale (Miller, Duncan, Brown, Sparks, & Claud, 2003), the OQ-45 (M. J. Lambert et al., 2004), and the Burns Anxiety Inventory (Burns & Eidelson, 1998)) and Idiographic Logs (including the Dialectical Behavior Therapy Diary Card, a sleep diary, and a panic log).

On the Therapist Diary Card, participants reported which measures they assigned to each client, not whether the client actually completed the measure. Thus, we assessed use of the DASS on OPT in two ways: by examining data inside the OPT system that logged which of each participant’s clients actually used the DASS, and by examining each participant’s report on the Therapist Diary Card of the clients he or she asked to use the DASS.

To assess use of Any Type of Progress Monitoring Measure, we coded each session the therapist listed on the Therapist Diary Card that week according to whether the therapist assigned any type of progress monitoring measure (the DASS on OPT, Another Standardized Measure, or an Idiographic Log) or no progress monitoring measure. If the therapist assigned any of these three, the therapist was coded as having assigned Any Type of Progress Monitoring Measure in that session.

To assess use of the DASS on the Therapist Diary Card, we counted the number of clients the participant asked to complete the DASS, whereas to assess use of Any Type of Progress Monitoring Measure, we counted the number of sessions in which the participant assigned Any Type of Progress Monitoring Measure. The rationale for this client/session distinction is that the DASS is designed for weekly use because it assesses symptoms over the past week, whereas Any Type of Progress Monitoring Measure can be used in every session. To adjust for the fact that some participants saw more clients than others, we converted these counts to percentages: the percentage of clients the participant asked to complete the DASS, and the percentage of sessions in which the participant assigned Any Type of Progress Monitoring Measure. Similarly, we expressed the OPT system count as a percentage of the number of clients the participant reported seeing that week on the Therapist Diary Card.
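The session coding and percentage calculations described in the two paragraphs above can be made concrete with a minimal Python sketch for one participant-week. It assumes (our assumption, not a description of the study’s actual scripts) that each diary-card session is recorded as an anonymized client code plus the set of measure categories checked for that session, and that the OPT system count for the week is supplied separately. The names `Session` and `weekly_percentages` are hypothetical.

```python
from dataclasses import dataclass, field

# Measure categories from the Therapist Diary Card; an empty set means
# "no progress monitoring measure" was assigned in that session.
DASS = "DASS"
OTHER = "Other Standardized Measure"
IDIOGRAPHIC = "Idiographic Log"


@dataclass
class Session:
    client_id: str                                   # hypothetical anonymized client code
    measures: set[str] = field(default_factory=set)  # categories assigned this session


def weekly_percentages(sessions: list[Session], opt_clients: int) -> dict[str, float]:
    """Percentages for one participant-week (diary card plus OPT system count)."""
    if not sessions:
        raise ValueError("no sessions recorded for this week")
    clients_seen = {s.client_id for s in sessions}
    # The DASS is counted per client, because it covers the past week.
    dass_clients = {s.client_id for s in sessions if DASS in s.measures}
    # Any Type of Progress Monitoring Measure is counted per session.
    any_pm_sessions = sum(1 for s in sessions if s.measures)
    return {
        "reported_dass_pct_of_clients": 100 * len(dass_clients) / len(clients_seen),
        "any_pm_pct_of_sessions": 100 * any_pm_sessions / len(sessions),
        # OPT count divided by the number of clients reported seen that week.
        "opt_pct_of_clients": 100 * opt_clients / len(clients_seen),
    }


week = [
    Session("c1", {DASS}),
    Session("c1"),                 # second session with c1, nothing assigned
    Session("c2", {IDIOGRAPHIC}),
    Session("c3"),
]
print(weekly_percentages(week, opt_clients=1))
```

In this made-up week, three clients are seen across four sessions, so the sketch gives roughly 33% of clients for reported DASS use, 50% of sessions for any progress monitoring, and roughly 33% of clients for OPT use. Counting distinct clients for the DASS and sessions for any measure mirrors the client/session distinction explained above.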

During the baseline period, participants recorded the Therapist Diary Card data in an Excel spreadsheet. After participants got access to OPT (in the first class), they were given the option to use OPT to complete the Therapist Diary Card.

Examination of diary card data from the three baseline weeks indicated that our Therapist Diary Card had good temporal stability: Cronbach’s alpha was 0.78 for reported use of the DASS, 0.92 for reported use of Other Standardized Measures, 0.94 for reported use of Idiographic Logs, and 0.90 for reported use of Any Type of Progress Monitoring Measure.
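For readers less familiar with Cronbach’s alpha used as a stability index, the standard formula is α = k/(k − 1) × (1 − Σ week variances / variance of participants’ totals). The minimal Python sketch below implements it under an assumption the text does not spell out: that each participant is a case and each of the three baseline weeks is an “item” (for example, that week’s percentage of sessions with any progress monitoring). The helper name `cronbach_alpha` is hypothetical.

```python
from statistics import variance


def cronbach_alpha(scores: list[list[float]]) -> float:
    """Cronbach's alpha; scores[p][w] is participant p's value in baseline week w."""
    k = len(scores[0])
    if k < 2 or len(scores) < 2 or any(len(row) != k for row in scores):
        raise ValueError("need at least 2 participants and 2 equal-length weeks")
    # Sample variance of each week ("item") across participants.
    week_vars = [variance([row[w] for row in scores]) for w in range(k)]
    # Sample variance of each participant's total across weeks.
    total_var = variance([sum(row) for row in scores])
    if total_var == 0:
        raise ValueError("total scores have zero variance; alpha is undefined")
    return k / (k - 1) * (1 - sum(week_vars) / total_var)
```

Scores that rank participants identically every week, such as `[[10, 10, 10], [20, 20, 20], [30, 30, 30]]`, give α = 1; when participants’ relative standing shifts from week to week, α falls.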

Procedure

Study procedures were reviewed and approved by the Behavioral Health Research Collective Institutional Review Board. After consenting to participate in the research, participants completed the survey of their demographic, training, and practice characteristics. After they attended the orientation meeting, therapists completed a weekly Therapist Diary Card for three baseline weeks.

Then they attended four weekly classes; each week they completed a Therapist Diary Card and turned it in before class. The lesson plan for each class, including the amount of time to be spent on each section of the class, was specified in a course manual that all instructors followed. All participants saw the same PP slides, handouts, and video materials, and participated in the same practice exercises. Because different instructors presented the material, didactic presentations varied slightly from one class to another; class discussion, of course, differed in each class.

After the fourth class, participants had three weeks of unstructured learning, during which they had access to the class website and listserv; the training then formally concluded. At that post-intervention point, participants provided a week of Therapist Diary Card data and were given access to the larger learning community of participants from other progress monitoring classes and to OPT for 1 year. Three months, six months, and 12 months after the intervention ended, participants completed a Therapist Diary Card for one week.

Missing Data

We report in Table 1 the number of participants who provided data on the Therapist Diary Card at each of the 11 assessment points (three baseline weeks, four class weeks, the completion of the full training period, and 3, 6, and 12 months after the intervention ended). Completion rates for the Therapist Diary Card were high. Among the intent-to-treat sample (n = 26), we received 242 (84.6%) of the 286 Therapist Diary Cards we requested. Among the intervention completers (n = 22), we received 232 (95.9%) of the 242 Therapist Diary Cards we requested. OPT system data were available for all participants who remained in the study at each time point.
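As a short worked check of these completion rates, the number of cards requested follows from 11 assessment points per participant:

```latex
\begin{aligned}
\text{Intent-to-treat } (n = 26)&: \quad 26 \times 11 = 286 \text{ requested}, \quad 242 / 286 \approx 84.6\% \\
\text{Completers } (n = 22)&: \quad 22 \times 11 = 242 \text{ requested}, \quad 232 / 242 \approx 95.9\%
\end{aligned}
```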

Table 1.

Proportion of participants using progress monitoring across the study period

| | Pre-training week 1 | Pre-training week 2 | Pre-training week 3 | Week following class 1 | Week following class 2 | Week following class 3 | Week following class 4 | Week following full training period | 3 months after training | 6 months after training | 12 months after training |
|---|---|---|---|---|---|---|---|---|---|---|---|
| N for OPT system data | 25 | 25 | 25 | 23 | 23 | 23 | 23 | 23 | 23 | 23 | 23 |
| OPT System Useᵃ | 0 (0) | 0 (0) | 0 (0) | 11.04 (12.68) | 27.00 (31.90) | 32.22 (45.78) | 35.80 (39.64) | 39.95 (46.85) | 21.03 (38.55) | 14.17 (21.47) | 11.07 (19.92) |
| N for self-report data | 25 | 25 | 24 | 23 | 21 | 22 | 21 | 21 | 19 | 19 | 18 |
| Reported DASS Useᵇ | 0.84 (3.10)ᶜ | 1.44 (5.39)ᶜ | 0.49 (2.40)ᶜ | 29.98 (29.18) | 38.95 (34.27) | 45.01 (36.06) | 43.89 (27.66) | 43.77 (32.76) | 28.44 (31.89) | 21.17 (25.32) | 20.26 (28.29) |
| Reported Use of Any Progress Monitoringᵇ | 41.51 (38.26) | 41.41 (35.18) | 40.10 (33.80) | 58.45 (30.89) | 61.57 (31.08) | 68.47 (32.69) | 63.97 (28.56) | 65.32 (30.05) | 60.00 (30.28) | 53.87 (30.03) | 56.96 (32.78) |

Note: The values displayed above are mean percentages with standard deviations in parentheses.

ᵃ OPT System Use is based on a count, on the OPT system, of the number of clients who used the DASS on OPT, expressed as a percentage of the clients the participant reported seeing that week.

ᵇ As reported on the Therapist Diary Card.

ᶜ Values are greater than 0 because one participant reported using a paper-and-pencil version of the DASS before gaining access to OPT.

Data Analysis and Predictions

To test the hypothesis that our tool + training intervention would be associated with participants’ adoption and implementation of progress monitoring, we used omnibus ANOVAs followed by planned t-tests. Our analyses were focused on four time points: before the intervention (average of the three baseline weeks of data), after participants received the online tool (after the first class), after participants received all of the training (average of data collected after the last class and the week following completion of the full training period), and 12 months after the intervention ended (data collected 12 months after the intervention). We conducted three sets of ANOVAs and follow-up t-tests: the first using OPT system data, the second using DASS data from the Therapist Diary Card, and the third using Any Type of Progress Monitoring data from the Therapist Diary Card.

We did not impute missing data, because the typical imputation strategy of carrying the last data point forward would bias the results in favor of finding that participants maintained their use of progress monitoring over time. Instead, we conducted the ANOVAs using data from the 18 participants (82% of the 22 completers) who provided data at all four of the time points in the ANOVAs, and we conducted t-tests using all data available at the relevant time points.
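The analysis plan described in the two paragraphs above can be sketched in Python using only the standard library. This is a minimal illustration, not the authors’ analysis code, and it rests on an assumption the text does not state outright: that the omnibus test is a one-way repeated-measures ANOVA. That assumption is consistent with the reported degrees of freedom, because 18 complete cases observed at 4 time points give df = 4 − 1 = 3 and (18 − 1)(4 − 1) = 51, matching F(3, 51). The follow-up paired t-test keeps every participant observed at both time points being compared, mirroring the “all data available” rule. The function names are hypothetical, and p-values (which would come from the F and t distributions, for example via a statistics package) are omitted.

```python
from math import sqrt
from statistics import mean, stdev
from typing import Optional


def rm_anova(data: list[list[float]]) -> tuple[float, int, int]:
    """One-way repeated-measures ANOVA on complete cases.

    data[i][j] is participant i's score at time point j.
    Returns (F, df_time, df_error).
    """
    n, k = len(data), len(data[0])
    grand = mean(x for row in data for x in row)
    time_means = [mean(row[j] for row in data) for j in range(k)]
    subject_means = [mean(row) for row in data]
    ss_time = n * sum((m - grand) ** 2 for m in time_means)
    # Between-participant variability is removed from the error term.
    ss_subjects = k * sum((m - grand) ** 2 for m in subject_means)
    ss_total = sum((x - grand) ** 2 for row in data for x in row)
    ss_error = ss_total - ss_time - ss_subjects
    df_time, df_error = k - 1, (n - 1) * (k - 1)
    f = (ss_time / df_time) / (ss_error / df_error)
    return f, df_time, df_error


def paired_t(a: list[Optional[float]], b: list[Optional[float]]) -> tuple[float, int]:
    """Paired t statistic (b minus a), using participants observed at both times."""
    diffs = [y - x for x, y in zip(a, b) if x is not None and y is not None]
    n = len(diffs)
    t = mean(diffs) / (stdev(diffs) / sqrt(n))
    return t, n - 1
```

With only two time points, F from `rm_anova` equals the square of the paired t, which offers a quick sanity check: for the scores `[[1, 2], [2, 4], [3, 3]]`, F = 3.0 and t = √3. Because `paired_t` subtracts the earlier time point from the later one, a decline yields a negative t, as in the 12-month comparisons reported below.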

We predicted that use of the DASS on OPT and of Any Type of Progress Monitoring Measure would increase after participants received access to the DASS on OPT and again after they completed the training, and we predicted that participants would maintain their use of the DASS on OPT, and especially of Any Type of Progress Monitoring Measure, 12 months after the intervention ended.

Results

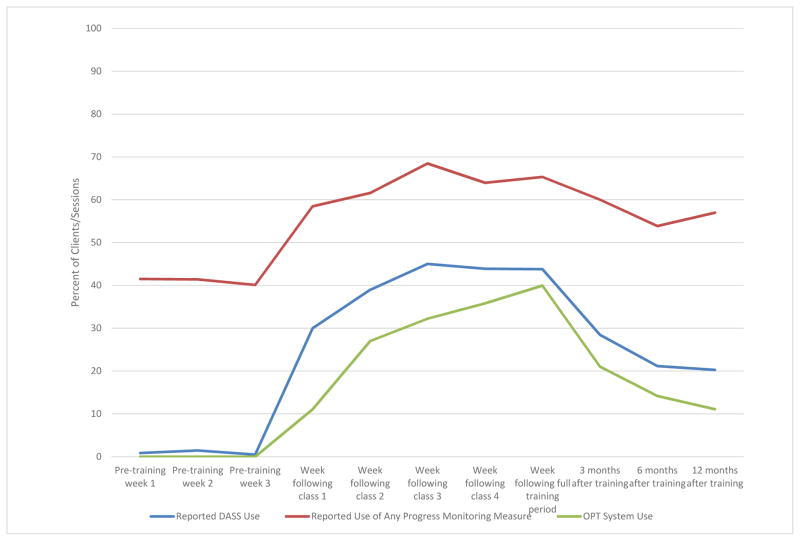

Table 1 and Figure 1 present data on participants’ average use of progress monitoring at each of the 11 time points at which we collected data. The table and figure present all the data available at each time point.

Figure 1.

Use of Progress Monitoring at Six Time Points.

OPT System Data

The ANOVA examining OPT system data indicated a significant effect of time, F(3, 51) = 10.32, p<.001. As Table 1 shows, participants significantly increased their use of OPT after receiving access to it as compared to before receiving the tool, t = 4.18, p<.001. Participants again significantly increased their use of OPT after the training as compared to after receiving the tool but before the training, t = 3.10, p=.005. Twelve months after the tool + training intervention, participants’ use of OPT was significantly greater than before the intervention, t = 2.36, p<.05, but, contrary to prediction, was significantly smaller than immediately after the intervention, t = −3.31, p<.005.

Use of the DASS Reported on the Therapist Diary Card

The model examining use of the DASS as reported on the Therapist Diary Card showed the same pattern of results. The model showed a significant effect of time, F(3,51) = 13.81, p<.001. As Table 1 shows, use of the DASS as reported on the Therapist Diary Card was slightly different from zero during the baseline period; this was because one participant reported using a paper-and-pencil version of the DASS during the baseline period. Participants significantly increased their reported use of the DASS after receiving access to OPT as compared to before receiving access to OPT, t = 4.87, p<.001. Participants again significantly increased their reported use of the DASS after the training as compared to after receiving access to OPT but before the training, t = 2.63, p<.05. Twelve months after the tool + training intervention, participants’ reported use of the DASS on OPT was significantly greater than before the intervention, t = 2.78, p<.05, but, contrary to prediction, was significantly smaller than immediately after the intervention, t = −3.53, p<.005.

Use of Any Type of Progress Monitoring Measure Reported on the Therapist Diary Card

The model examining use of Any Type of Progress Monitoring as reported on the Therapist Diary Card indicated a significant effect of time, F(3, 51) = 10.41, p<.001. Participants significantly increased their reported use of Any Type of Progress Monitoring after receiving access to OPT as compared to before receiving access to OPT, t = 2.91, p<.01. Contrary to our prediction, reported use of Any Type of Progress Monitoring did not increase significantly after the training as compared to after receiving access to OPT but before the training, t = 1.82, p>.10. Twelve months after the intervention, participants’ reported use of Any Type of Progress Monitoring was significantly greater than before the tool + training intervention, t = 5.28, p<.001. Consistent with our prediction, reported use of Any Type of Progress Monitoring 12 months after the intervention remained high and did not differ significantly from use immediately after the intervention, t = −1.78, p>.05.

Discussion

After we delivered an intervention consisting of an online tool (OPT) for administering a standardized progress monitoring scale and four sessions of training to use it, psychotherapists adopted OPT and increased their use of any type of progress monitoring measure. Twelve months after the intervention, the therapists had maintained their gains using any type of progress monitoring measure, but were not using OPT as much as they had immediately following the intervention.

Changes were most impressive for use of any type of progress monitoring measure. Increases in participants’ use of any type of progress monitoring measure following our intervention were statistically significant and appeared to be large and sustained. Participants reported using any type of progress monitoring in 41% of their sessions before the intervention, in 68% of their sessions after class 3 of the training, and in 57% of sessions 12 months after the intervention. Thus, 12 months after our intervention, participants’ average use of any type of progress monitoring was about 16 percentage points higher than before the intervention, a relative increase of nearly 40% (57/41 ≈ 1.39). We were especially gratified by the increased and sustained use of any type of progress monitoring measure because our tool + training intervention explicitly taught participants about only one progress monitoring measure, the DASS on OPT. Thus, the increased and sustained use of any type of progress monitoring measure suggests that clinicians generalized what we taught them about using the DASS on OPT to the use of other progress monitoring tools. We designed our training to promote generalization, as many of the skills we taught for using the DASS on OPT, such as handling client noncompliance, were directly applicable to the use of other progress monitoring tools.

Results were more modest for OPT, the online tool. Participants did adopt the tool: on the Therapist Diary Card, they reported assigning the DASS (nearly always on OPT) to 45% of clients after the third training class. However, 12 months after the intervention, they reported assigning it to only 20% of clients, a small figure that is statistically significantly greater than before the intervention but statistically significantly smaller than immediately after the intervention.

We hypothesize that clinicians had difficulty sustaining use of the online tool for several reasons. First, therapists may persist in their use of a new EBP while they have some degree of supportive accountability and then tend to drift (Waller, 2009). As the support and accountability of our class receded into the distance, participants’ use of progress monitoring with OPT decreased; support and accountability may have been particularly important as the therapists struggled with the challenges of using a new online tool. Second, although the online tool was designed to reduce obstacles to progress monitoring, and did so in several ways, it also introduced impediments: for example, when the clinician was not accustomed to using a computer during a psychotherapy session, when clients were reluctant to use an online system for collecting personal data, or when the DASS, the only measure available on OPT, was not suitable for monitoring a particular client’s progress. Because we did not collect information about the participants’ patients, we do not know the degree to which the patients’ presenting problems matched the phenomena assessed by the DASS (symptoms of depression, anxiety, and stress), but the DASS is certainly not suitable for monitoring progress for all patients (e.g., those seeking treatment for ADHD or psychosis). An important direction for future work is to improve the online tool so that it provides a suite of assessment tools that clinicians can use to monitor progress, and to make the tool easier to use (e.g., by adding an e-mail reminder system). This work is already underway as part of an NIMH-funded project (2R44MH093993-02A1) that integrates progress monitoring with computer-assisted therapy to help therapists learn, implement, and sustain use of EBPs (see www.willow.technology).

Another impediment may have been that participants did not know for certain whether they would have access to OPT after the 12-month follow-up point of the study, and so they may have been reluctant to commit fully to the online tool and to shift their progress monitoring behavior to rely on it.

These impediments to sustained use of the online tool may help explain why participants were more likely to sustain their increased use of any type of progress monitoring than their use of the online tool. First, using any type of progress monitoring did not require therapists to do anything different from what they were already doing. Consistent with our training's focus on helping clinicians integrate progress monitoring into their daily workflow, it is likely easier to continue doing something one is already doing than to continue doing something one has just learned. Even before our intervention began, participants were using some type of progress monitoring measure in 41% of sessions. Thus, participants had already learned many of the basic skills of progress monitoring, and could use our intervention to increase their adherence to progress monitoring and to expand the range of patients and settings in which they used it. Some qualitative reports from our participants suggest that this is indeed how they used our intervention.
One participant stated that she attended the training in order to “increase adherence and accountability to self and clients with weekly standardized monitoring.” Another stated, “I do progress monitoring with many of my patients, but I would like to become more systematic with how I use it.” Another reported: “The fact that I am not using some standardized measure with all my clients has bothered me and I was in the process of trying to re-think the way I do outcome and process evaluation in practice when the email about this study came out.” Another stated: “I do weekly progress monitoring in my BPD [borderline personality disorder] client groups and would like to explore expanding this to other clients as is relevant,” and another reported that she was using progress monitoring in an agency where she worked, and wanted to use the intervention to help her use progress monitoring in her private practice.

Second, the ability to select any type of progress monitoring measure gave participants the flexibility to select the measure that integrated most smoothly into their practice and met the needs of each patient. Clinicians could use the DASS on OPT, standardized measures other than the DASS, or an idiographic scale, such as the Diary Card used in DBT.

A limitation of our study is the lack of a control group, which prevents us from concluding definitively that the increases in progress monitoring we observed were due to our intervention rather than to other factors, such as the placebo effects of participating in our training or of focusing one’s attention on one’s progress monitoring behavior. To control for some of these factors, we did include a within-subject no-intervention control condition by collecting data from participants about their progress monitoring behavior during a three-week baseline period.

Another limitation is that we have relatively little information about the psychometric properties of the Therapist Diary Card, which was our main measure of participants’ progress monitoring behavior. We do report good temporal stability of the Diary Card scales during the baseline period, and its validity receives some support from the fact that the pattern of change of the Use of the DASS reported on the Therapist Diary Card was similar to the pattern of change seen in the OPT System Data, as Figure 1 shows.

Another limitation is that our repeated monitoring of participants' progress monitoring behavior with the Therapist Diary Card may have increased their awareness and use of progress monitoring both before the intervention and after the training ended, thus biasing our study against finding immediate effects of our intervention and in favor of finding persistent effects. One way we addressed this issue was to measure clinicians' use of the DASS on OPT in two ways: via clinician self-report on the Therapist Diary Card, and by counting data from the OPT system. As shown in Figure 1, the two measures exhibit the same pattern of change.¹ We are continuing to develop and elaborate OPT so that researchers can collect information nonintrusively about practitioners' use of progress monitoring and other EBPs.

Although we asked participants to self-monitor their behavior in order to assess their use of progress monitoring, we did not collect self-monitoring data solely for research purposes. In fact, we viewed self-monitoring as a key element of the training. It was designed to give participants immediate feedback about the effects of their efforts to use progress monitoring, feedback that they could use to adjust their behavior in the desired direction (Ericsson, 2006). Self-monitoring has been shown to have important effects on behavior (Latner & Wilson, 2002; Nelson & Hayes, 1981), and is likely to be an especially important aspect of efforts to obtain sustained implementation of any clinical practice, as in the work by Pronovost and colleagues (2008) on the effects of the physician checklist.

Another limitation is that we studied a group of clinicians who were not randomly selected and who were already using some type of progress monitoring measure at a much higher rate than most clinicians, who do not use progress monitoring at all (Hatfield & Ogles, 2004). This selection may have been an inadvertent result both of the stringent selection criteria we adopted for research reasons (see above) and of the extensive commitment we required (participation in an orientation and four training sessions at particular times, and submission of a large amount of data, especially weekly self-reports of progress monitoring at multiple time points over more than a year). To address this issue in future studies, we are designing prerecorded online tutorials that clinicians can access at their convenience, as well as nonintrusive means of monitoring clinicians' behavior. At the same time, selecting this group allowed us to focus on the important issue of sustained implementation of an EBP in clinicians who were already using it frequently.

An additional limitation is that the psychotherapists in our sample were all Americans, most were Ph.D. psychologists in private practice, and we studied only progress monitoring with individual adult clients; thus, our results may not generalize to therapists in other countries, from other disciplines, or in other settings, or to therapists who work with couples, children, families, or groups. In particular, our results may not be typical of therapists working in the large Improving Access to Psychological Therapies (IAPT) program in England, where session-by-session progress monitoring has been incorporated into the provision of evidence-based psychological therapies. Clark (2011) reported that 99% and 88% of patients in two demonstration sites provided at least two progress monitoring measures during the course of their treatment. Although these numbers are impressively high, they are difficult to compare with the rates of progress monitoring we report here: the English rates are percentages of patients who provided at least two progress monitoring measures over the entire course of treatment, whereas our rates (see Table 1 and Figure 1) are percentages of clients who provided progress monitoring data during each week of the study.
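To make the difference between these two kinds of rates concrete, the Python sketch below computes both from the same invented records for four hypothetical clients over six weeks. The records, client names, and the functions `pct_clients_with_two_or_more` and `mean_weekly_pct` are hypothetical illustrations only; they are not data from this study or from IAPT, and not the analysis code used for Table 1 or Figure 1.

```python
# Hypothetical illustration only: invented records, not data from this study or IAPT.
# Each client maps to one entry per week the client was seen:
# True = a progress monitoring measure was collected that week, False = it was not.
records = {
    "client_a": [True, False, False, True, False, False],
    "client_b": [False, True, False, False, True, False],
    "client_c": [True, False, False, False, False, False],
    "client_d": [False, False, True, False, False, True],
}


def pct_clients_with_two_or_more(records):
    """Per-treatment rate: % of clients with at least two measures over treatment."""
    qualifying = sum(1 for weeks in records.values() if sum(weeks) >= 2)
    return 100 * qualifying / len(records)


def mean_weekly_pct(records):
    """Per-week rate: mean across weeks of the % of seen clients who were measured."""
    n_weeks = max(len(weeks) for weeks in records.values())
    weekly = []
    for w in range(n_weeks):
        # Only clients with an entry for week w count toward that week's denominator.
        seen = [weeks[w] for weeks in records.values() if w < len(weeks)]
        if seen:
            weekly.append(100 * sum(seen) / len(seen))
    return sum(weekly) / len(weekly)


print(pct_clients_with_two_or_more(records))  # 75.0
print(round(mean_weekly_pct(records), 1))     # 29.2
```

With these invented records, 75% of clients meet the at-least-two-measures criterion even though a measure was collected in only about 29% of client-weeks, which illustrates why a per-treatment rate and a per-week rate cannot be compared directly.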

A final limitation is that although we worked very hard to obtain data from all participants over the more than one-year term of the study, we inevitably lost some data; therefore, our ANOVAs are based on data from only the 18 participants who provided Therapist Diary Card data for all of the study periods included in the analysis. The fact that the Therapist Diary Card data show the same pattern of change over time as the data obtained directly from the OPT system strengthens the conclusions we draw from the Therapist Diary Card data.

A novel feature of our study is that we chose to focus our efforts to increase clinicians' use of EBPs on a measurement feedback system rather than a treatment (see also Gibbons et al., 2015; M. J. Lambert & Shimokawa, 2011; Miller, Duncan, Sorrell, & Brown, 2005). Most of the literature on dissemination of evidence-based practices focuses on treatments. We focused instead on a discrete clinical strategy, what Embry and Biglan (2008) and Weisz, Ugueto, Herren, Afienko, and Rutt (2011) have called a kernel (in contrast to an "ear," that is, a complete treatment). We did this because we aimed to make implementation easier by teaching an EBP that clinicians could integrate into what they were already doing without changing it. Progress monitoring also appealed to us because we view it as an essential element of evidence-based clinical practice. Said another way, we do not think clinicians are really doing evidence-based practice if they are not monitoring the progress of each client they are treating in order to evaluate whether the client is benefiting from the treatment the clinician is offering.

To advance our work on our measurement feedback system, we plan to strengthen the online tool, as described above (see www.willow.technology), and to develop other tools (e.g., Persons & Eidelman, 2010, posted at www.cbtscience.com/training) to help clinicians make effective use of progress monitoring data to improve their patients' outcomes. Clinicians appear to struggle most when their patient is responding poorly to treatment (Kendall, Kipnis, & Otto-Salaj, 1992; Stewart & Chambless, 2008). To assist in these situations, Michael Lambert and his colleagues (e.g., Harmon et al., 2007) have developed a clinical support tool that they have shown contributes to improved outcomes, especially for clients who have a poor initial response to treatment. A key advantage of an online tool is that it can provide the clinician with support and assistance at any time and instantly, and can also allow the researcher to study how the clinician uses the tool to guide decision-making.

Our findings and many of those reported by others (Bennett-Levy & Padesky, 2014; Pronovost et al., 2008) support the notion that addressing implementation obstacles and helping clinicians integrate an EBP into their daily workflow can contribute to increased adoption and sustained implementation of EBPs that can reduce the burden of mental illness.

Highlights.

We studied the evidence-based practice of monitoring psychotherapy progress.

We developed an intervention that consisted of an online tool and training to use it.

We emphasized helping therapists overcome obstacles to using the new practice.

We assessed therapists’ behavior before, during, and after the intervention.

Therapists showed sustained use of any progress monitoring tool.

Acknowledgments

This work was supported by Grant 1R43MH093993-01, awarded by NIMH to Kelly Koerner and Jacqueline B. Persons. We thank Peter Lovibond for giving permission to use the Depression Anxiety Stress Scales, and Janie Hong for her contributions to the development of the training course. Bruce Arnow, Debra Hope, Robert Reiser, Katie Patricelli, Gareth Holman, Shireen Rizvi, and Lauren Steffel made important contributions. We thank research assistants Kaitlin Fronberg, Gening Jin, Nicole Murman, Emma P. Netland, Stephanie Soultanian, and Lisa Ann Yu.

Footnotes

A comparison of the two measures of use of the DASS on OPT shows that use as assessed by OPT system data is consistently somewhat lower than use reported on the Therapist Diary Card (see Table 1 and Figure 1). Possible reasons for this difference include the following: (1) completing the Diary Card reminded the therapist to use the DASS; (2) on the Therapist Diary Card, participants report the measures they ask their clients to complete, whereas the OPT system data reflect actual use of the tool; (3) Therapist Diary Card data are self-report data, whereas the OPT system data do not depend on self-report; and (4) although in our analyses we assume that a Therapist Diary Card report that the therapist assigned the DASS indicates a request to use the DASS on OPT, in a handful of cases we learned that therapists had actually assigned a paper-and-pencil version of the DASS.

Publisher's Disclaimer: This is a PDF file of an unedited manuscript that has been accepted for publication. As a service to our customers we are providing this early version of the manuscript. The manuscript will undergo copyediting, typesetting, and review of the resulting proof before it is published in its final citable form. Please note that during the production process errors may be discovered which could affect the content, and all legal disclaimers that apply to the journal pertain.

References

- Baker R, Camosso-Stefinovic J, Gillies C, Shaw EJ, Cheater F, Flottorp S, Robertson N. Tailored interventions to overcome identified barriers to change: effects on professional practice and health care outcomes. Cochrane Database of Systematic Reviews. 2010;(3).

- Bennett-Levy J, Padesky CA. Use it or lose it: Post-workshop reflection enhances learning and utilization of CBT skills. Cognitive and Behavioral Practice. 2014;21(1):12–19. doi: 10.1016/j.cbpra.2013.05.001.

- Borntrager CF, Chorpita BF, Higa-McMillan CK, Weisz JR. Provider attitudes toward evidence-based practices: Are the concerns with the evidence or with the manuals? Psychiatric Services. 2009;60(5):677–681. doi: 10.1176/appi.ps.60.5.677.

- Brown TA, Chorpita BF, Korotitsch W, Barlow DH. Psychometric properties of the Depression Anxiety Stress Scales (DASS) in clinical samples. Behaviour Research and Therapy. 1997;35(1):79–89. doi: 10.1016/S0005-7967(96)00068-X.

- Burns DD, Eidelson R. Why are measures of depression and anxiety correlated? I. A test of tripartite theory. Journal of Consulting and Clinical Psychology. 1998;66(3):461–473. doi: 10.1037//0022-006x.66.3.461.

- Carlier IVE, Meuldijk D, Van Vliet IM, Van Fenema E, Van der Wee NJA, Zitman FG. Routine outcome monitoring and feedback on physical or mental health status: Evidence and theory. Journal of Evaluation in Clinical Practice. 2012;18(1):104–110. doi: 10.1111/j.1365-2753.2010.01543.x.

- Casper ES. The theory of planned behavior applied to continuing education for mental health professionals. Psychiatric Services. 2007;58(10):1324–1329. doi: 10.1176/appi.ps.58.10.1324.

- Clark DM. Implementing NICE guidelines for the psychological treatment of depression and anxiety disorders: The IAPT experience. International Review of Psychiatry. 2011;23(4):318–327. doi: 10.3109/09540261.2011.606803.

- Cook JM, Schnurr PP, Biyanova T, Coyne JC. Apples don't fall far from the tree: Influences on psychotherapists' adoption and sustained use of new therapies. Psychiatric Services. 2009;60(5):671–676. doi: 10.1176/appi.ps.60.5.671.

- Damschroder LJ, Aron DC, Keith RE, Kirsh SR, Alexander JA, Lowery JC. Fostering implementation of health services research findings into practice: A consolidated framework for advancing implementation science. Implementation Science. 2009;4:50. doi: 10.1186/1748-5908-4-50.

- Embry DD, Biglan A. Evidence-based kernels: Fundamental units of behavioral influence. Clinical Child and Family Psychology Review. 2008;11(3):75–113. doi: 10.1007/s10567-008-0036-x.

- Ericsson KA. The influence of experience and deliberate practice on the development of superior expert performance. In: Ericsson KA, Charness N, Feltovich PJ, Hoffman RR, editors. The Cambridge handbook of expertise and expert performance. New York: Cambridge University Press; 2006. pp. 683–703.

- Gawande A. The checklist. The New Yorker. 2007 Dec 10:86–95.

- Gibbons MBC, Kurtz JE, Thompson DL, Mack RA, Lee JK, Rothbard A, Crits-Christoph P. The effectiveness of clinician feedback in the treatment of depression in the community mental health system. Journal of Consulting and Clinical Psychology. 2015;83(4):748–759. doi: 10.1037/a0039302.

- Goodman JD, McKay JR, DePhilippis D. Progress monitoring in mental health and addiction treatment: A means of improving care. Professional Psychology: Research and Practice. 2013;44(4):231–246. doi: 10.1037/a0032605.

- Harmon SC, Lambert MJ, Smart DM, Hawkins E, Nielsen SL, Slade K, Lutz W. Enhancing outcome for potential treatment failures: Therapist-client feedback and clinical support tools. Psychotherapy Research. 2007;17(4):379–392.

- Hatfield DR, Ogles BM. The use of outcome measures by psychologists in clinical practice. Professional Psychology: Research and Practice. 2004;35(5):485–491. doi: 10.1037/0735-7028.35.5.485.

- Kendall PC, Kipnis D, Otto-Salaj L. When clients don't progress: Influences on and explanations for lack of therapeutic progress. Cognitive Therapy and Research. 1992;16:269–281. doi: 10.1007/BF01183281.

- Lambert MJ, Morton JJ, Hatfield D, Harmon C, Hamilton S, Reid RC, Burlingame GM. Administration and scoring manual for the Outcome Questionnaire-45. Orem, UT: American Professional Credentialing Services; 2004.

- Lambert MJ, Shimokawa K. Collecting client feedback. Psychotherapy. 2011;48(1):72–79. doi: 10.1037/a0022238.

- Latner JD, Wilson GT. Self-monitoring and the assessment of binge eating. Behavior Therapy. 2002;33:465–477.

- Lovibond SH, Lovibond PF. Manual for the Depression Anxiety Stress Scales. 2nd ed. Sydney: Psychology Foundation; 1995.

- Miller SD, Duncan BL, Brown J, Sparks J, Claud D. The outcome rating scale: A preliminary study of the reliability, validity, and feasibility of a brief visual analog measure. Journal of Brief Therapy. 2003;2:91–100.

- Miller SD, Duncan BL, Sorrell R, Brown GS. The partners for change outcome management system. Journal of Clinical Psychology. 2005;61(2):199–208. doi: 10.1002/jclp.20111.

- Nelson RO, Hayes SC. Theoretical explanations for reactivity in self-monitoring. Behavior Modification. 1981;5(1):3–14.

- Persons JB, Eidelman P. Lack of Progress Worksheet. Unpublished manuscript; 2010.

- Pronovost P, Berenholtz S, Needham D. Translating evidence into practice: A model for large scale knowledge translation. British Medical Journal. 2008;337:963–965. doi: 10.1136/bmj.a1714.

- Pronovost P, Needham D, Berenholtz S, Sinopoli D, Chu H, Cosgrove S, Goeschel C. An intervention to decrease catheter-related bloodstream infections in the ICU. New England Journal of Medicine. 2006;355(26):2725–2732. doi: 10.1056/NEJMoa061115.

- Rosen MA, Pronovost PJ. Advancing the use of checklists for evaluating performance in health care. Academic Medicine. 2014;89(7):963–965. doi: 10.1097/acm.0000000000000285.

- Stewart RE, Chambless DL. Treatment failures in private practice: How do psychologists proceed? Professional Psychology: Research and Practice. 2008;39:176–181. doi: 10.1037/0735-7028.39.2.176.

- Stewart RE, Chambless DL, Baron J. Theoretical and practical barriers to practitioners' willingness to seek training in empirically supported treatments. Journal of Clinical Psychology. 2012;68(1):8–23. doi: 10.1002/jclp.20832.

- Stirman SW, Kimberly J, Cook N, Calloway A, Castro F, Charns M. The sustainability of new programs and innovations: a review of the empirical literature and recommendations for future research. Implementation Science. 2012;7:17. doi: 10.1186/1748-5908-7-17.

- Waller G. Evidence-based treatment and therapist drift. Behaviour Research and Therapy. 2009;47:119–127. doi: 10.1016/j.brat.2008.10.018.

- Weingarten S, Garb CT, Blumenthal D, Boren SA, Brown GD. Improving preventive care by prompting physicians. Archives of Internal Medicine. 2000;160:301–308. doi: 10.1001/archinte.160.3.301.

- Weisz JR, Ugueto AM, Herren J, Afienko AR, Rutt C. Kernels vs. ears and other questions for a science of treatment dissemination. Clinical Psychology: Science and Practice. 2011;18(1):41–46. doi: 10.1111/j.1468-2850.2010.01233.x.