Abstract

Objective

Interictal high frequency oscillations (HFOs) in intracranial EEG are a potential biomarker of epilepsy, but current automated HFO detectors require human review to remove artifacts. Our objective is to automatically redact false HFO detections, facilitating clinical use of interictal HFOs.

Methods

Intracranial EEG data from 23 patients were processed with automated detectors of HFOs and artifacts. HFOs not concurrent with artifacts were labeled quality HFOs (qHFOs). Methods were validated by human review on a subset of 2,000 events. The correlation of qHFO rates with the seizure onset zone (SOZ) was assessed via 1) a retrospective asymmetry measure and 2) a novel quasi-prospective algorithm to identify SOZ.

Results

Human review estimated that less than 12% of qHFOs are artifacts, whereas 78.5% of redacted HFOs are artifacts. The qHFO rate was more correlated with SOZ (p=0.020, Wilcoxon signed rank test) and resected volume (p=0.0037) than baseline detections. Using qHFOs, our algorithm was able to determine SOZ in 60% of the ILAE Class I patients, with all algorithmically-determined SOZs fully within the resected volumes.

Conclusions

The algorithm reduced false-positive HFO detections, improving the precision of the HFO-biomarker.

Significance

These methods provide a feasible strategy for HFO detection in real-time, continuous EEG with minimal human monitoring of data quality.

Keywords: Seizure onset zone, high frequency oscillation, ripple, automated detector, artifact detector

1. Introduction

Epileptologists have been using intracranial monitoring to identify seizure networks since the 1950s. Although technology has changed drastically since that time, clinical procedures to identify these networks still focus on frequency bands restricted to the capabilities of the early pen-and-ink recording systems, i.e., less than 30 Hz. Resective surgeries based on these determinations are successful in only 50–60% of patients undergoing the procedure (Edelvik et al., 2013), with significantly lower outcome rates for certain types of epilepsies (Noe et al., 2013; Yu et al., 2014). High frequency oscillations (HFOs), occurring roughly in the 80–500 Hz range, have been studied (Bragin et al., 2002a; Jirsch et al., 2006; Worrell et al. 2008; Engel et al., 2009) as a potential new biomarker to increase the specificity of the determination of the seizure onset and potentially improve surgery outcomes (Ochi et al., 2007; Jacobs et al., 2008; Crépon et al. 2010; Jacobs et al., 2010; Wu et al., 2010; Andrade-Valenca et al., 2011; Akiyama et al., 2011; Modur et al., 2011; Nariai et al., 2011; Usui et al., 2011; Zijlmans et al., 2011; Jacobs et al., 2012; Park et al., 2012; Haegelen et al., 2013; Cho et al, 2014; Kerber et al., 2014; Okanishi et al., 2014; Malinowska et al., 2014; Dümpelmann et al., 2015). However, a significant challenge to the clinical use of HFOs is the difficulty identifying them in intracranial EEG recordings: they are brief (<100 ms), low amplitude, uncommon (occurring < 0.1% of the time in a given channel), and require significant data processing.

The most well-studied means of identifying HFOs is based on human identification (Haegelen et al., 2013; Kerber et al., 2014; van Diessen et al., 2013; Jacobs et al., 2010; Zelmann et al., 2009). This requires specially trained personnel and is very labor intensive, taking an hour to review 10 minutes of data from a single channel (Zelmann et al., 2009). The feasibility of translating HFO biomarkers into clinical practice is quite low unless automated methods are employed (Worrell et al., 2012).

A variety of automated HFO detection algorithms have been available for some time (Crépon et al., 2010; Blanco et al., 2010; Gardner et al., 2007; Staba et al., 2002; Worrell et al., 2008; Zelmann et al., 2012). Most of these detectors were specifically tuned to handpicked subsets of EEG data. The first, or “Staba” detector, uses a band-pass filter and then searches for oscillations of sufficient length that are significantly different from the background (Staba et al., 2002). This detector is highly sensitive and was designed for use with short, “clean” datasets, as it is quite prone to identifying artifacts as HFOs, particularly fast transients that produce false oscillations due to the Gibbs phenomenon (Bénar et al., 2010). Later work using this detector on long term human EEG required a complicated, multi-step process to eliminate certain obvious artifacts and group the rest as an “artifact cluster” for later processing (Blanco et al., 2010). That process allowed the automated processing of over 200,000 HFOs in nine patients, a task unfeasible for human processing. Importantly, those automated detections had high concordance with the clinically-determined seizure onset zone (SOZ) and with human HFO markings; in fact, they were indistinguishable from markings of human reviewers (Blanco et al., 2011). However, the method was not generalizable to a larger patient population, requiring multiple steps and specific patient tuning. The lack of generalizable HFO algorithms that automatically address false detections has been one of the challenges to translating HFOs into clinical practice.

The main goal of the current work is to provide a reliable algorithm capable of identifying HFOs in long-term intracranial EEG data without any per-patient tuning or operator intervention. The key to this algorithm is an automated means to reject false-positive HFO detections due to artifacts in a manner that generalizes across patients. Our approach is to start with a highly sensitive HFO detector applied to data with a common average reference, redact HFOs associated with detected artifacts, and label the remaining HFOs as “quality HFOs” (qHFOs).

Any automated HFO detector would be suitable for the initial detections; we chose the highly sensitive Staba detector, which has already been validated on brief datasets (Staba et al., 2002), as well as prolonged datasets after multi-step artifact rejection (Blanco et al., 2010). We analyzed 23 patients who underwent surgical implantation with traditional intracranial EEG electrodes and then received resective surgery. The total amount of interictal data spans over 32 days, during which nearly 1.5 million interictal quality HFOs were identified.

We validated this algorithm by human review of a subset of events. We then sought to demonstrate the clinical utility of these detections. It is now established that high rates of manually-selected HFOs are correlated with the epileptogenic zone (Wu et al., 2010; Haegelen et al., 2013; Kerber et al., 2014; Okanishi et al., 2014), and removal of them may correlate better with patient outcome than the clinically-determined SOZ (Jacobs et al., 2010). Thus a practical validation of our automated method is to show similar results, which we accomplished in two ways. First, we retrospectively compared the qHFO rates inside and outside the resected volume (RV) and clinically-determined SOZ, and found a strong correlation between high HFO rates and both SOZ and RV. Second, we developed a prospective algorithm capable of identifying the SOZ automatically with interictal HFO data. A novel algorithm was necessary because previous prospective methods resulted in incorrect predictions in our dataset. Our algorithm determines which electrodes have anomalously higher rates than the rest, but makes no prediction if the data do not suggest a clear enough difference. This flexibility gives the algorithm the option to avoid identifying any SOZ in cases where incorrect identifications are likely.

2. Methods

2.1. Patient population

EEG data from patients who underwent intracranial EEG monitoring were selected from the IEEG Portal (www.ieeg.org; Wagenaar et al., 2015) and from the University of Michigan. From the IEEG Portal, all patient data available in May 2014 were searched for the following inclusion criteria: sampling rate of at least 2,700 Hz, a recording time of at least one hour, data recorded with traditional intracranial electrodes, and available metadata regarding the RV or SOZ. Patients who had both macro- and microelectrode recordings were included, but the microelectrode data were not analyzed herein. This yielded 19 patients, all of whom had been recorded at the Mayo Clinic using a Neuralynx (Bozeman, MT) amplifier sampled at 32 kHz with a 9 kHz antialiasing filter (Worrell et al., 2008), then later down-sampled to either 2,713 Hz (MC-02, MC-04, MC-05, MC-07, MC-08) or 5,000 Hz (the remaining MC patients) when stored on the Portal. Of these 19 patients, eight have been analyzed in previous publications (Blanco et al., 2010; Blanco et al., 2011; Pearce et al., 2013). Additionally, data from four patients at the University of Michigan were recorded at 30 kHz (Blackrock, Salt Lake City, antialiasing filter 10 kHz) and down-sampled to 3,000 Hz, resulting in a total patient population of 23. All patients were adults with refractory epilepsy undergoing long-term monitoring in preparation for resective surgery. All data were acquired with approval of local IRB and all patients consented to share their de-identified data. Further details about the patient population and attribution for studies on the IEEG portal are provided in Table 1. Note, the human review of HFOs and artifacts utilized a subset of data randomly sampled from all 23 patients. However, eight of the 23 patients were excluded from further analysis due to missing or ambiguous information regarding seizure times, which precluded restricting the analysis to interictal data.
Of the remaining 15 patients, ten patients were considered to be the gold standard because they had ILAE Class I outcomes after surgical resections (i.e. their true epileptogenic zone is known to be within the RV). One additional patient with Class I outcome was not included in the “gold standard set” because the entire region spanned by the electrodes was resected, so it was impossible to compare with unresected areas.

Table 1.

Patient data table. Note that patients MC-01 to MC-08 correspond to patients SZ01 to SZ08 in a previous publication (Blanco et al., 2011). MC patients were recorded with a Neuralynx amplifier, and down-sampled to 2,713 Hz (MC-02, MC-04, MC-05, MC-07, MC-08) or 5 kHz (the remaining MC patients). UM patients were recorded with a Blackrock amplifier, down-sampled to 3 kHz. Note, only 12 hours per UM patient are included on the IEEG portal. Age for the MC patients is based on Blanco et al. (2011). Time per patient and number of qHFOs are the amounts within interictal segments.

| Subject | IEEG.org Identifier | Age | Sex | Num. Elec. (Surf., Dep.) | Time [hrs:min] | Num. of qHFOs | qHFO Rate [min−1] | ILAE Score | Seizure Focus |
|---|---|---|---|---|---|---|---|---|---|
| MC-01 | I001_P034_D01 | 35 | F | 40, 0 | 12:30 | 4,024 | 5.37 | 1 | FL |
| MC-02 | I001_P011_D01 | 24 | M | 22, 0 | 10:10 | 388 | 0.64 | 1 | FL |
| MC-03 | I001_P012_D01 | 21 | F | 28, 8 | 1:40 | 308 | 3.08 | 5 | TL |
| MC-04 | I001_P015_D01 | 39 | F | 51, 8 | 4:10 | 101 | 0.40 | 1 | TL |
| MC-05 | I001_P003_D01 | 21 | F | 44, 8 | 4:00 | 1,080 | 4.50 | 2 | TL |
| MC-06 | I001_P016_D01 | 42 | M | 23, 0 | 88:00 | 2,733 | 0.52 | 1 | PL |
| MC-07 | I001_P014_D01 | 42 | F | 44, 0 | 36:20 | 14,893 | 6.83 | 1 | FL |
| MC-08 | I001_P017_D01 | 38 | F | 36, 52 | 5:40 | 1,498 | 4.41 | 1 | TL |
| MC-10 | I001_P001_D01 | † | M | 55, 7 | 119:20 | 98,860 | 13.81 | 5 | TL |
| MC-12 | I001_P005_D01 | † | M | 28, 8 | 34:50 | 5,745 | 2.75 | 1 | TL |
| MC-13 | I001_P006_D01 | † | F | 0, 8 | ‡ | ‡ | ‡ | † | TL |
| MC-14 | I001_P007_D01 | † | M | 62, 4 | ‡ | ‡ | ‡ | NA | TL |
| MC-15 | I001_P008_D01 | † | F | 12, 0 | ‡ | ‡ | ‡ | NA | TL |
| MC-16 | I001_P010_D01 | † | F | 48, 8 | ‡ | ‡ | ‡ | † | TL |
| MC-17 | I001_P013_D01 | † | F | 72, 0 | 87:20 | 10,337 | 1.97 | 1 | PL |
| MC-18 | I001_P019_D01 | † | M | 0, 16 | ‡ | ‡ | ‡ | † | TL |
| MC-19 | I001_P020_D01 | † | M | 84, 8 | ‡ | ‡ | ‡ | † | TL |
| MC-20 | I001_P021_D01 | † | F | 112, 0 | ‡ | ‡ | ‡ | NA | FL/PL |
| MC-21 | Study 036 | † | M | 88, 8 | ‡ | ‡ | ‡ | † | TL |
| UM-01 | UMich-001-01 | 23 | M | 75, 0 | 85:50 | 515,469 | 100.09 | 5 | FL/PL |
| UM-02 | UMich-002-01 | 46 | M | 95, 0 | 115:20 | 429,648 | 62.09 | 1 | OL |
| UM-03 | UMich-003-01 | 43 | M | 41, 0 | 131:20 | 321,536 | 40.80 | 1 | FL |
| UM-04 | UMich-004-01 | 26 | F | 61, 20 | 40:00 | 61,422 | 25.59 | 1 | TL |

† unknown.

‡ cannot be determined due to ambiguous or missing information regarding seizure times.

Num. Elec.: number of electrodes. Surf.: surface. Dep.: depth. qHFOs: quality high frequency oscillations. FL: frontal lobe. PL: parietal lobe. OL: occipital lobe. TL: temporal lobe.

For each patient, the clinically-determined SOZ was determined from the official final clinical report, written by the treating physicians. Although it is well known that this determination of SOZ is reviewer-dependent, this was the actual clinical evaluation used for each patient, and thus it represents the true medical practice in each case. All electrodes designated in the clinical report were included. In patients with multiple seizure foci identified, the clinically-determined SOZ was the set of all electrodes in all foci. The RV and surgery outcomes are also based on the available clinical metadata. Patients UM-02 and UM-03 had multiple subpial transections in addition to resection, as the clinical seizure onset zone was found to extend to eloquent areas. For the purposes of this paper and identifying the SOZ, these regions are considered part of the RV as they represent surgically modified regions. Seizure times were also obtained from the clinical reports and/or the authors of previous publications on those patients (Pearce et al., 2013).

2.2. Data selection

All interictal data from all patients were analyzed, without any human review of data quality or awake/sleep stage. This mimics potential clinical settings where a real-time algorithm would not have any input from treating clinicians about which data to analyze. Data were quantized into 10-minute epochs, based on the Staba detector (Staba et al., 2002); see Section 2.3.1. Interictal periods were defined as any 10-minute epochs more than 30 minutes from a seizure onset (Pearce et al., 2013). To be conservative, data between seizures less than two hours apart were also not considered interictal, and small amounts of data with ambiguous information regarding seizure times were also not analyzed.
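The epoch selection rule can be illustrated with a minimal sketch. The function name, the use of minutes as the time unit, and the treatment of an epoch lying exactly 30 minutes from an onset as non-interictal are assumptions made for illustration; the handling of data with ambiguous seizure times is not modeled.

```python
from typing import List


def interictal_epoch_starts(duration_min: int,
                            seizure_onsets_min: List[float],
                            epoch_min: int = 10,
                            guard_min: float = 30.0,
                            cluster_gap_min: float = 120.0) -> List[int]:
    """Return start times (minutes) of epochs treated as interictal.

    Hypothetical helper: an epoch is kept only if every seizure onset is
    more than `guard_min` from it, and it does not fall between two
    seizures that are less than `cluster_gap_min` apart.
    """
    onsets = sorted(seizure_onsets_min)
    # Spans between consecutive seizures that are less than two hours apart.
    clusters = [(a, b) for a, b in zip(onsets, onsets[1:])
                if b - a < cluster_gap_min]
    kept = []
    for start in range(0, duration_min - epoch_min + 1, epoch_min):
        end = start + epoch_min
        near_onset = any(start - guard_min <= t <= end + guard_min
                         for t in onsets)
        in_cluster = any(start < b and end > a for a, b in clusters)
        if not near_onset and not in_cluster:
            kept.append(start)
    return kept
```

For a four-hour recording with a single seizure at minute 100, this sketch keeps the epochs starting at minutes 0 to 50 and 140 to 230.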

2.3. Data processing

2.3.1. HFO detectors

The goal of the qHFO algorithm is to provide an automated method to reduce the number of false HFO detections, thereby improving the quality and specificity so that automated HFO detections could be used for prolonged recordings in clinical settings. The basic structure of the qHFO detector is to a) compute a preliminary set of HFO detections using a sensitive automated HFO detector, b) directly detect artifacts in the data, and c) redact any HFO detections which are coincident with artifact detections. After this process, the remaining HFOs are labeled qHFOs. The general data flow diagram is shown in Fig. 1.

Figure 1.

The data-flow diagram for qHFO detections. Each box represents a detector or major processing step. The final qHFO detection restricts the preliminary Staba HFO detections to those which are not coincident with artifacts.
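The redaction step of this data flow can be sketched as follows. This is a minimal illustration, not the authors' C++/Matlab implementation: it assumes that detections on one channel are represented as (start, end) pairs in seconds and that "coincident" means any temporal overlap. The names `overlaps` and `redact_hfos` are hypothetical.

```python
from typing import List, Tuple

Interval = Tuple[float, float]  # (start_s, end_s) on a single channel


def overlaps(a: Interval, b: Interval) -> bool:
    """True if two closed time intervals share at least one instant."""
    return a[0] <= b[1] and b[0] <= a[1]


def redact_hfos(hfos: List[Interval],
                artifacts: List[Interval]) -> Tuple[List[Interval], List[Interval]]:
    """Split preliminary HFO detections into (qHFOs, redacted HFOs).

    Assumption: an HFO is redacted when it overlaps any artifact
    detection on the same channel.
    """
    qhfos, redacted = [], []
    for hfo in hfos:
        if any(overlaps(hfo, art) for art in artifacts):
            redacted.append(hfo)
        else:
            qhfos.append(hfo)
    return qhfos, redacted
```

In this sketch the artifact list for a channel would be the union of the detections from both artifact detectors described in Section 2.3.2.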

In this work, the preliminary HFOs are determined by applying the Staba HFO detector (Staba et al., 2002) to data using a common average reference with separate references for the grid and depth channels. The Staba HFO detector, as published, processes 10-minute epochs of data at a time, therefore the basic quantization of data throughout this manuscript is chosen to be 10-minute epochs. Example qHFO detections are shown in Fig. 2A. Note, our method is applicable to any HFO detector with high sensitivity; this detector was chosen due to its familiarity and previous validation.

Figure 2.

Example waveforms. A: quality HFOs (qHFOs). B: HFOs detected using the Staba detector with common average reference, but rejected as they were coincident with artifact detections. Note, the horizontal scale is consistent within a given panel (A or B), but varies across panels. The vertical scale is consistent for panel A and subpanels B3-B5, and is separately consistent for subpanels B1-B2. Redacted false-positive HFO detections include fast DC shifts (B1, B3), fast transients (B2, B4) and data with very low signal to noise ratio (B5).

Common average reference is a well-known technique when recording high resolution intracranial EEG (Ludwig et al., 2009), has been used in other automated HFO research (Matsumoto et al., 2013), and is analogous to the traditional average reference montage in surface EEG. The purpose of this reference is to remove widespread effects such as line noise, which can produce false HFOs (Stacey et al. 2013). It also allows normalization of studies that use different instrument references (e.g. in this case the Mayo Clinic uses an intracranial reference, while University of Michigan uses an Fz1 surface reference). However, it does not remove focal artifacts, which must be removed separately (see Section 2.3.2).
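As a minimal sketch of re-referencing, the function below subtracts the sample-wise mean over a group of channels from each channel in that group. The data layout (a dictionary of equal-length sample lists) and the function name are assumptions; in the procedure described here it would be applied separately to the grid channels and to the depth channels.

```python
from typing import Dict, List


def common_average_reference(channels: Dict[str, List[float]]) -> Dict[str, List[float]]:
    """Subtract the sample-wise mean across channels from every channel.

    Assumption: all channels in the group have the same length and
    sampling rate.
    """
    n_channels = len(channels)
    n_samples = len(next(iter(channels.values())))
    average = [sum(sig[i] for sig in channels.values()) / n_channels
               for i in range(n_samples)]
    return {name: [x - m for x, m in zip(sig, average)]
            for name, sig in channels.items()}
```

A signal that is identical on every channel of the group, such as line noise picked up by the instrument reference, is removed entirely by this subtraction, whereas an artifact confined to one channel is only slightly attenuated.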

For comparison of the effect of the qHFO algorithm, a set of nominal HFO detections was computed using the Staba detector applied to data with instrumental reference. The Staba detector was not designed for prolonged, uncontrolled data, and it is well-known that it will detect false positives. However, “noisy” data is precisely what is generated under normal clinical conditions, so this comparison allows us to demonstrate the effect of the artifact reduction by showing how a baseline detector would perform. This comparison is necessary because, to our knowledge, there is no fully automated detector for general clinical data prior to this work.

2.3.2. Artifact detectors

Our experience has been that it is easier to identify artifacts directly from the raw EEG than it is to determine whether a given HFO is likely caused by an artifact. Our goal is to remove signals that are unequivocally caused by non-neural sources. This is accomplished via two artifact detectors that mark each channel individually. Example artifact detections are shown in Fig. 2B. It is important to note that the same set of artifact detectors and parameter settings are used for all patients, with values determined by training on a single patient. Parameters were set to be conservative, i.e. to detect artifacts with high sensitivity.

PopDet

This is a detector of fast transients or fast DC-shifts. Such fast activity is wideband, whereas standard clinical macroelectrodes attenuate neural activity at high frequencies. Clinicians typically easily identify this activity as artifact, but when filtered for HFO processing it can appear identical to an HFO. This detector thus looks at the raw signal for major excursions from baseline in a high frequency band, which are very unlikely to be caused by neural activity. Specifically, this detector identifies when the line-length (sum of the absolute values of the sample-to-sample differences) of a 0.1 second window of 850–990 Hz band-pass filtered data exceeds the mean by a threshold number of standard deviations. The mean and standard deviation are computed using a five second window ending five seconds previous to the window being evaluated. Each detection is 0.5 seconds long. We optimized the threshold parameter to maximize the HFO-rate asymmetry between electrodes inside versus outside the RV. This was done by training on only one patient (MC-07) in which there was an observable distinction between the distribution of neural and artifactual HFOs. Optimal results were present over a broad range of parameters (i.e., similar results for 5 to 15 standard deviations); we chose the conservative parameters to have higher sensitivity.
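The line-length criterion can be sketched as below. Several details are assumptions rather than statements from the text: the input is taken to be already band-pass filtered, line length is evaluated on consecutive non-overlapping 0.1 second windows, the baseline mean and standard deviation are taken over the line-length values of the windows in the five-second baseline, and the threshold `n_sd` is left as a required argument because its value is not fixed here. The function names are hypothetical.

```python
from statistics import mean, stdev
from typing import List


def line_length(x: List[float]) -> float:
    """Sum of absolute sample-to-sample differences."""
    return sum(abs(b - a) for a, b in zip(x, x[1:]))


def popdet(filtered: List[float], fs: float, n_sd: float,
           win_s: float = 0.1, base_s: float = 5.0,
           gap_s: float = 5.0) -> List[float]:
    """Return start times (s) of windows flagged as fast transients.

    `filtered` is assumed to be one channel of 850-990 Hz band-pass
    filtered data. Per the text, each flagged window would be reported
    as a 0.5 s artifact detection; that widening is not modeled here.
    """
    win = int(round(win_s * fs))
    n_base = int(round(base_s * fs)) // win   # windows in the baseline
    n_gap = int(round(gap_s * fs)) // win     # windows skipped before the test window
    ll = [line_length(filtered[i:i + win])
          for i in range(0, len(filtered) - win + 1, win)]
    flagged = []
    for k in range(n_base + n_gap, len(ll)):
        baseline = ll[k - n_gap - n_base:k - n_gap]
        if ll[k] > mean(baseline) + n_sd * stdev(baseline):
            flagged.append(k * win / fs)
    return flagged
```

Placing the baseline five seconds before the evaluated window keeps a slowly developing artifact from inflating its own reference statistics.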

BkgStabaDet

If an artifact is present in the reference itself, it will propagate to all recorded channels. In a common average reference, this would occur when a signal is diffuse over all common electrodes. Although there are some physiological diffuse signals, this scenario is not consistent with a focal HFO (Bragin et al., 2002b; Bragin et al., 2011). Thus, we determined that if an “HFO” were detected in the common average reference, it should be considered an artifact. We ran the Staba algorithm directly on the common average reference and marked a likely artifact on all channels involved in that average (all grids or depths) for each HFO detection on the average reference (along with an extra 100 msec before and after to be conservative). This detector required no tuning.
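A minimal sketch of how detections on the common average reference could be turned into per-channel artifact marks is given below. The function name and data layout are hypothetical; the detections themselves are assumed to come from running the HFO detector on the averaged signal.

```python
from typing import Dict, List, Tuple

Interval = Tuple[float, float]  # (start_s, end_s)


def car_artifact_marks(car_hfos: List[Interval],
                       channel_names: List[str],
                       pad_s: float = 0.1) -> Dict[str, List[Interval]]:
    """Mark every channel in the averaged group for each CAR detection.

    Each detection is widened by `pad_s` (100 ms in the text) on both
    sides, clipped at the start of the recording.
    """
    padded = [(max(0.0, start - pad_s), end + pad_s) for start, end in car_hfos]
    return {name: list(padded) for name in channel_names}
```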

2.3.3. Implementation details

The qHFO detection procedure was implemented using custom C++ code and Matlab (Mathworks, Natick, MA) scripts. The entire qHFO detection procedure was much faster than actual recording time, thus facilitating clinical use. For example, patient UM-04 had 81 electrodes. Applying the qHFO procedure to the 3 kHz data (post-downsampling) using a single core on a desktop PC (Dell XPS 8700) took about 25 seconds per 10-minute epoch. Thus, the entire procedure could easily run real-time in the clinic on a standard PC.

It should be noted that in this report, HFO detections are not stratified by frequency or any other feature: all HFOs are treated equally, with no attempt to isolate types of HFOs (e.g., ripples or fast ripples) that might have stronger association with epilepsy. The rationale is that this report focuses solely on mitigating the effects of false positive detections, a needed preliminary step for future work analyzing the features of qHFOs. Further analysis of HFO features should also address possible bias to the distribution of features, which can be caused by instrumental effects as well as by the automated HFO detection algorithm—all of this is outside the scope of this work.

2.3.4. Detector Validation

Human review of a random sample of detections was used to validate that the qHFOs are indeed mostly neural and that the rejected HFOs are indeed mostly artifacts. Three expert reviewers (clinical epileptologists with experience viewing HFOs) were each presented a random order of 2,000 randomly selected events: 1,000 qHFOs, and 1,000 preliminary HFOs redacted due to being coincident with detected artifacts (“artifactual HFOs”). Events were sampled randomly from all 23 patients. Each event was viewed by showing three seconds of single-channel data, using instrumental reference, with the detected event marked in red. Reviewers marked each event as either “neural”, “artifact”, or “unclear.” The pairwise kappa scores were computed to assess consistency between reviewers. Conglomerate labels were formed by majority vote, with “unclear” being chosen in case of a tie. The percentages of events marked as neural or artifact were then determined separately for events marked as qHFOs or artifactual HFOs by the algorithm. Unclear events were not considered in computing these percentages.
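The labeling logic can be sketched as follows, assuming that the pairwise kappa is Cohen's kappa (the text does not name the variant). Function names are hypothetical.

```python
from collections import Counter
from typing import List, Sequence


def consensus_label(labels: Sequence[str]) -> str:
    """Majority vote over reviewer labels; a tie yields "unclear"."""
    ranked = Counter(labels).most_common()
    if len(ranked) > 1 and ranked[0][1] == ranked[1][1]:
        return "unclear"
    return ranked[0][0]


def cohen_kappa(a: List[str], b: List[str]) -> float:
    """Cohen's kappa for two reviewers labeling the same events.

    Undefined (division by zero) if chance agreement equals 1.
    """
    n = len(a)
    observed = sum(x == y for x, y in zip(a, b)) / n
    count_a, count_b = Counter(a), Counter(b)
    expected = sum(count_a[k] * count_b[k] for k in count_a) / (n * n)
    return (observed - expected) / (1 - expected)
```

With three reviewers and three possible labels, a tie arises only when all three reviewers disagree.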

2.4. Correlations between qHFOs and Epileptic Tissue

2.4.1. Seizure onset zone standards

A challenge of studies involving HFOs in human patients is that there exists no gold standard determination of the true SOZ, also called the epileptogenic zone (Luders, 2008). This zone, formally, is the region of tissue that causes the seizure to initiate, though in practice the only way to verify the location of that zone is to resect a region and see if the seizures stop. There are two clinical zones that estimate the epileptogenic zone: 1) the clinically-determined SOZ, i.e., the region where the seizures first become visible to clinicians on the intracranial EEG recording, and 2) the RV, the region actually removed in surgery. Importantly, the clinically-determined SOZ is subjective, has known inter-rater variability, and is not necessarily the same as the RV. Previous HFO papers (Jacobs et al., 2012) suggest that the high-HFO region better corresponds with RV than clinically-determined SOZ. For the direct asymmetry measure, we compare the qHFO rates with both the clinically-determined SOZ and the RV, although in terms of a gold standard the RV provides the only completely objective measure of the epileptogenic zone. For the algorithmically-determined SOZ, the results focus on just the comparison with the RV.

2.4.2. HFO-rate asymmetry

In order to quantify the correlation between HFOs and the clinically-determined SOZ or RV in a direct manner, we assessed the difference in HFO rates (asymmetry) inside and outside each region of interest. The asymmetry was computed by first determining the average HFO rate over all channels in the region of interest, denoted $r_{\mathrm{in}}$, and over all channels not in the region of interest, denoted $r_{\mathrm{out}}$. The asymmetry was then computed as the difference divided by the sum,

$$A = \frac{r_{\mathrm{in}} - r_{\mathrm{out}}}{r_{\mathrm{in}} + r_{\mathrm{out}}} \tag{1}$$

The ratio had to be constructed this way to account for instances when $r_{\mathrm{in}}$ or $r_{\mathrm{out}}$ is zero. As the HFO rates can vary significantly from patient to patient, it is important to use a normalized value, instead of the simple difference. The asymmetry is also bounded between −1 and 1. This method results in one asymmetry value per patient for each choice of HFO detection-type (nominal Staba or qHFO) and choice of region of interest (clinically-determined SOZ or RV).

The asymmetry can be interpreted as follows. Positive values of the asymmetry indicate a higher rate of HFOs within the region of interest than outside the region, whereas negative values indicate higher rates outside the region than inside. A value of zero indicates the rates are equal inside and out, and |A| = 1 occurs only when all of the HFOs are either outside (A = −1) or inside (A = 1) the region of interest.
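A short sketch of the asymmetry computation follows. The function name, the dictionary layout for per-channel rates, and the choice to return `None` when no HFOs were detected on any channel are assumptions made for illustration.

```python
from statistics import mean
from typing import Dict, Optional, Set


def hfo_asymmetry(rates: Dict[str, float], region: Set[str]) -> Optional[float]:
    """Asymmetry of mean HFO rate inside versus outside a region of interest.

    `rates` maps channel name to HFO rate; `region` holds the channels in
    the clinically-determined SOZ or the RV. Both the region and its
    complement must contain at least one channel.
    """
    r_in = mean(r for ch, r in rates.items() if ch in region)
    r_out = mean(r for ch, r in rates.items() if ch not in region)
    if r_in + r_out == 0:
        return None  # no HFOs anywhere: the asymmetry is undefined
    return (r_in - r_out) / (r_in + r_out)
```

For example, a mean rate of 3 per minute inside the region and 1 per minute outside gives an asymmetry of (3 − 1)/(3 + 1) = 0.5.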

The main result of this measure is the comparison of the asymmetry for nominal Staba HFO detections versus qHFO detections relative to each region (clinically-determined SOZ and RV). An increase in the asymmetry value indicates an improvement in the correlation. The statistical significance of the number of patients for whom the asymmetry increased is determined using the Wilcoxon signed rank test, which considers both the direction and magnitude of the change.

It should be noted that such an asymmetry is not expected to be consistent across patients. It is possible that the clinically-determined SOZ and the RV may not exactly match the true epileptogenic zone. There is also no reason to expect the actual HFO rates to be consistent across patients. Therefore, the average of the asymmetry value across the patient population is not interpretable. The quantity of interest is whether or not the asymmetry increases when using qHFOs over nominal Staba HFO detections. Thus, the statistical test performed (Wilcoxon signed rank test) addresses the statistical significance of the number of patients who had the asymmetry value increase, not any mean or aggregate asymmetry value. Clinically, it is also more relevant to consider the number of patients for which the asymmetry improves rather than changes in the average asymmetry.
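For a small cohort, the signed rank test on paired asymmetry values can be computed exactly by enumerating all sign assignments. The sketch below assumes a two-sided test and drops zero differences; neither choice is stated in the text, and the function name is hypothetical.

```python
from itertools import product
from typing import List, Tuple


def wilcoxon_signed_rank(before: List[float],
                         after: List[float]) -> Tuple[float, float]:
    """Return (W+, exact two-sided p-value) for paired samples.

    Exhaustive enumeration costs 2**n evaluations, so this is only
    practical for small n (e.g. the 15 patients analyzed here).
    """
    diffs = [a - b for b, a in zip(before, after) if a != b]
    order = sorted(range(len(diffs)), key=lambda i: abs(diffs[i]))
    ranks = [0.0] * len(diffs)
    i = 0
    while i < len(order):
        j = i
        # Extend j over a run of tied absolute differences.
        while j + 1 < len(order) and abs(diffs[order[j + 1]]) == abs(diffs[order[i]]):
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1  # average rank for ties
        i = j + 1
    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    totals = [sum(r for r, s in zip(ranks, signs) if s)
              for signs in product((0, 1), repeat=len(diffs))]
    p_high = sum(t >= w_plus for t in totals) / len(totals)
    p_low = sum(t <= w_plus for t in totals) / len(totals)
    return w_plus, min(1.0, 2 * min(p_high, p_low))
```

Here `before` would hold the per-patient asymmetry for nominal Staba detections and `after` the asymmetry for qHFOs.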

2.4.3. Prospective, automated identification of SOZ

In order to assess the clinical impact of the qHFO procedure, we tested how well the qHFOs could be used prospectively to identify the SOZ in patients who became seizure free after surgery. We utilized three quasi-prospective algorithms: the channel with the highest HFO-rate, Tukey’s Upper Fence, and our own prospective method. In each case, we compared the identified putative SOZ with the RV.

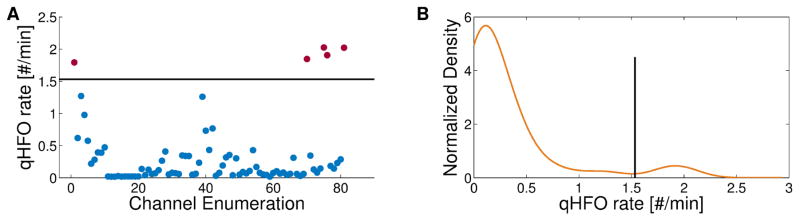

The “highest rate” method simply chooses the single channel with highest HFO rate. Tukey’s upper fence is designed to automatically identify a threshold rate for each patient. After calculating rates in all channels, it sets the threshold at the upper quartile plus 1.5 times the interquartile range. All electrodes with rates higher than threshold would be considered SOZ. Our method (the “Michigan method”) also seeks a unique threshold in each patient, but is designed to assure that there is a population of channels with anomalously higher rates, not simply outside a fixed range. The goal of this method is to avoid predicting channels outside the epileptogenic zone, which in a true clinical scenario would lead to resecting the wrong tissue. The strategy is to use the distribution of HFO rates to set the threshold at a natural division in the HFO rates, and to avoid identifying any SOZ if there is no such natural division. We estimate the distribution using Kernel Density Estimation (KDE) with a Gaussian kernel (bandwidth set to 0.94 times Silverman’s Rule of Thumb (Silverman, 1986)) as it is both non-parametric and smooth. Channels are identified as SOZ if there exist multiple peaks in the estimated HFO rate distribution, and if there exists at least one peak above 0.2 HFOs/min with the peak height more than 1.8 times at least one of the two neighboring valley heights. Additionally, we observed that when the overall HFO rate is too large, the HFO rates were less predictive of SOZ. Thus, no prediction is made if the sum of the mean and median HFO rate is above 0.944 HFOs/min. Fig. 3A shows the qHFO rates per channel for an example patient (UM-04), and Fig. 3B shows the KDE of those rates. The determined threshold (1.53 qHFOs/min) is shown on both panels.

Figure 3.

Example SOZ identification using the Michigan method. The qHFO rate per channel is plotted (A) for patient UM-04. The horizontal line represents the threshold as determined by the procedure. The KDE of the distribution of rates from (A) is shown in the second panel (B). The threshold is depicted as a vertical line and separates the peak near 1.9 qHFOs/min from the main portion of the distribution.
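The thresholding ideas above can be sketched as follows. This is an illustrative reading, not the authors' implementation. Assumptions: Tukey's upper fence uses its standard definition (upper quartile plus 1.5 times the interquartile range); Silverman's rule is taken as 0.9 · min(σ, IQR/1.34) · n^(−1/5); peaks are searched on a fixed grid; and the threshold is placed at the density minimum just below the first qualifying peak. All function names are hypothetical.

```python
from math import exp, pi, sqrt
from statistics import mean, median, quantiles, stdev
from typing import List, Optional


def tukey_upper_fence(rates: List[float]) -> float:
    """Upper quartile plus 1.5 times the interquartile range."""
    q1, _, q3 = quantiles(rates, n=4, method="inclusive")
    return q3 + 1.5 * (q3 - q1)


def silverman_bandwidth(rates: List[float], factor: float = 0.94) -> float:
    """Scaled rule-of-thumb bandwidth (assumed form, see lead-in)."""
    q1, _, q3 = quantiles(rates, n=4, method="inclusive")
    spread = min(stdev(rates), (q3 - q1) / 1.34)
    return factor * 0.9 * spread * len(rates) ** -0.2


def gaussian_kde(rates: List[float], grid: List[float], h: float) -> List[float]:
    """Gaussian kernel density estimate evaluated on `grid`."""
    norm = 1.0 / (len(rates) * h * sqrt(2 * pi))
    return [norm * sum(exp(-0.5 * ((x - r) / h) ** 2) for r in rates)
            for x in grid]


def natural_threshold(rates: List[float], factor: float = 0.94,
                      min_peak: float = 0.2, ratio: float = 1.8,
                      max_mean_plus_median: float = 0.944,
                      n_grid: int = 512) -> Optional[float]:
    """Rate threshold at a natural division, or None for no prediction."""
    if mean(rates) + median(rates) > max_mean_plus_median:
        return None  # overall rate too high to be predictive
    h = silverman_bandwidth(rates, factor)
    if h <= 0:
        return None
    lo, hi = min(rates) - 3 * h, max(rates) + 3 * h
    grid = [lo + (hi - lo) * i / (n_grid - 1) for i in range(n_grid)]
    dens = gaussian_kde(rates, grid, h)
    peaks = [i for i in range(1, n_grid - 1)
             if dens[i - 1] < dens[i] >= dens[i + 1]]
    for k in range(1, len(peaks)):
        p = peaks[k]
        if grid[p] <= min_peak:
            continue
        # Neighboring valleys: density minima toward the adjacent peaks.
        left = min(range(peaks[k - 1], p), key=dens.__getitem__)
        valleys = [dens[left]]
        if k + 1 < len(peaks):
            right = min(range(p, peaks[k + 1]), key=dens.__getitem__)
            valleys.append(dens[right])
        if any(dens[p] > ratio * v for v in valleys):
            return grid[left]
    return None
```

Channels whose rate exceeds the returned threshold would form the putative SOZ; a return value of `None` corresponds to declining to make a prediction.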

To tune the four parameters above (mean plus median threshold, KDE bandwidth factor, minimum peak position and peak/valley ratio), we used hold-out-one validation with all ten “gold standard” patients described in Section 2.1. This method mitigates the risk of in-sample tuning, and also provides an estimation of how the algorithm would perform in other patients. In this method, nine patients are used for training and one patient is used for testing, repeated for each possible choice of the patient held out for testing. Thus, the same data is never used for both testing and training. This is a standard method to optimize the usage of limited available data, limit over-training, and estimate the generalizability of a method to novel data (Lachenbruch and Mickey, 1968).

The full relevant four-dimensional parameter space was scanned, involving over 650,000 sample points. For each choice of nine training patients, we considered the set of parameter values that resulted in no identified SOZ outside of RV and maximized the number of patients for which SOZ was identified. This resulted in a large set of equally optimal values for the nine training patients. All parameters in this set were then evaluated on the one held-out, testing patient. The fractions of these parameters yielding either no identification, identification fully within the RV, or identification not fully within the RV were recorded. The method was repeated for each choice of held-out patient. In only one case (holding out MC-07) did any trained parameters result in a falsely identified SOZ in the testing patient (i.e. predicted that a channel was SOZ that was outside the RV). Those parameters were excluded, leaving a region of parameter space, including nearly 5,000 sample points, that was optimal for all choices of training/testing data. One of these sample points near the median was selected as the final parameters. Additionally, the fraction of parameters yielding each type of identification was averaged over the ten choices of held-out patient to estimate how the algorithm may respond to novel data. Such averaging of “error rates” over the different held-out data is a standard procedure to estimate generalizability when using hold-out-one validation (Lachenbruch and Mickey, 1968).
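The hold-out-one scan can be sketched generically as below. The `evaluate` callback and its three outcome strings ("none", "inside", "outside") are hypothetical stand-ins for running the SOZ identification with one parameter set on one patient and comparing the result with the RV.

```python
from collections import Counter
from typing import Callable, Dict, Hashable, List


def hold_out_one(patients: List[str],
                 param_grid: List[Hashable],
                 evaluate: Callable[[Hashable, str], str]) -> Dict[str, Dict[str, float]]:
    """For each held-out patient, report outcome fractions over the
    parameter sets that are optimal on the remaining patients.

    "Optimal" here means: no training patient with an identification
    outside the RV, and the largest number of training patients with an
    identification inside the RV.
    """
    results = {}
    for held_out in patients:
        train = [p for p in patients if p != held_out]
        safe = [prm for prm in param_grid
                if all(evaluate(prm, p) != "outside" for p in train)]
        if not safe:
            results[held_out] = {}
            continue
        scores = {prm: sum(evaluate(prm, p) == "inside" for p in train)
                  for prm in safe}
        best = max(scores.values())
        optimal = [prm for prm in safe if scores[prm] == best]
        outcomes = Counter(evaluate(prm, held_out) for prm in optimal)
        results[held_out] = {k: v / len(optimal) for k, v in outcomes.items()}
    return results
```

Averaging the returned fractions over all held-out patients gives the kind of generalization estimate described above.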

2.5. Data sharing

A compiled version of the C++ and Matlab code is available from the authors upon request. Raw data for the MC patients and samples of the UM patient data are available on www.ieeg.org and can be obtained using the patient identifiers in Table 1.

3. Results

3.1. Human Validation

For the human validation, the reviewers’ instructions were to view raw, unfiltered EEG and mark the segment “neural” if it was potentially physiologically generated, “artifact” if it was likely to be artifactual, or “unclear”. The three human reviewers had similar results: the pairwise kappa scores were 0.76, 0.88, and 0.89. In the consensus labels, only 9.3% of the 2,000 events were marked unclear. Among reviewed qHFOs not marked as unclear, 88.5% were marked as neural. Among redacted (artifactual) HFOs not marked as unclear, 78.5% were marked as artifacts. To estimate the effect of this on the whole dataset, note that about 630,000 HFOs were rejected as artifacts while nearly 1.5 million were accepted as qHFOs. Thus, extrapolating the human review percentages to the full dataset, we estimate that potentially 136,000 HFOs were incorrectly redacted. This results in a reduction in sensitivity of about 10%, but increases the specificity from 68.8% to 88.5% (a relative gain of 28.7%). These results were concordant with the primary goal of the qHFO algorithm, which was to maximize specificity.
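The extrapolation can be checked with a short calculation. The sketch below uses only the rounded figures quoted in this section, so it reproduces the reported values approximately rather than exactly. It also assumes that the “specificity” quoted here is the fraction of detections judged neural (a precision-like quantity); that reading, and all variable names, are assumptions of this example.

```python
# Rounded inputs taken from the text of this section.
n_redacted = 630_000            # HFOs rejected as artifacts
n_qhfo = 1_500_000              # HFOs accepted as qHFOs
frac_qhfo_neural = 0.885        # reviewed qHFOs marked neural
frac_redacted_artifact = 0.785  # reviewed redacted HFOs marked artifact

# Neural events kept, and neural events lost to redaction.
neural_kept = n_qhfo * frac_qhfo_neural
neural_lost = n_redacted * (1.0 - frac_redacted_artifact)

# Fraction of neural events that survive redaction (retained sensitivity).
sensitivity_retained = neural_kept / (neural_kept + neural_lost)

# Fraction of detections that are neural, before and after redaction.
neural_fraction_before = (neural_kept + neural_lost) / (n_qhfo + n_redacted)
neural_fraction_after = frac_qhfo_neural
relative_gain = neural_fraction_after / neural_fraction_before - 1.0

print(f"incorrectly redacted: {neural_lost:,.0f}")
print(f"sensitivity retained: {sensitivity_retained:.1%}")
print(f"neural fraction: {neural_fraction_before:.1%} -> {neural_fraction_after:.1%}")
print(f"relative gain: {relative_gain:.1%}")
```

With these rounded inputs the results land close to, but not exactly on, the reported 136,000, 68.8%, and 28.7%; the small differences are expected because the event counts in the text are themselves approximate.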

3.2. Correlation of nominal and qHFO detections with SOZ and RV

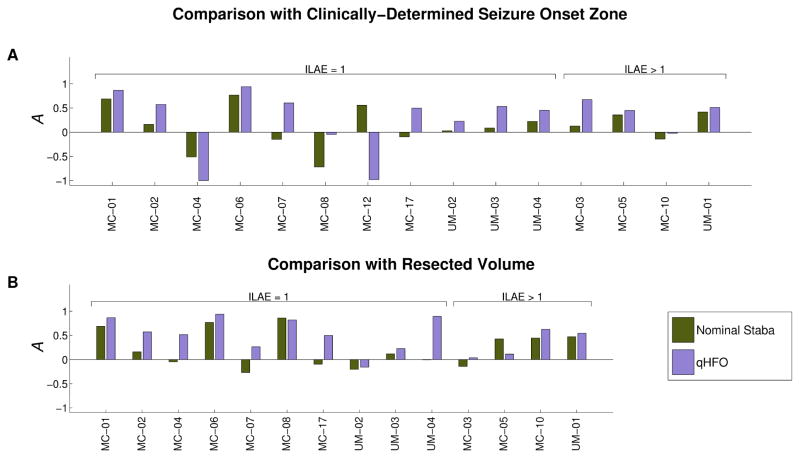

The qHFO detection algorithm was performed with the same parameters on all patients, after training on a single patient. The nominal Staba detector was also independently applied to all data. As a first assessment of our method, we determined whether qHFOs improved the specificity towards epileptic tissue, using the HFO rate asymmetry measure and nominal Staba detections as a baseline. This analysis was performed in all 15 patients that had appropriately-labeled interictal data. In Fig. 4, the asymmetry measure comparing nominal Staba HFO detections and qHFOs is shown for each patient, considering both the clinically-determined SOZ (Fig. 4A) and RV (Fig. 4B) as regions of interest. Recall that asymmetry values greater than zero indicate that the HFO rates are higher in electrodes within the region of interest (clinically-determined SOZ or RV) than in electrodes outside it.

Figure 4.

Comparison of the asymmetry. Values of A=1 imply all HFOs occur in the region of interest, values of A=−1 imply that no HFOs occur in the region of interest, and values of A=0 imply an identical HFO rate inside and outside of the region of interest. Two regions of interest are considered: the seizure onset zone (A) and the resected volume (B). Two methods of detecting HFOs are also compared: the nominal Staba detector (green) and qHFOs (purple). The qHFOs are more specific to both SOZ and RV than nominal Staba HFOs in nearly every patient.
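As a reading aid for the caption, one functional form that reproduces the three limiting cases (A=1, A=−1, A=0) is the normalized rate difference shown below. This is an assumption made for illustration; the definition given in the Methods governs, and the function name and inputs are hypothetical.

```python
def asymmetry(rate_in, rate_out):
    """Normalized difference between HFO rates inside and outside a region.

    rate_in  : HFO rate over electrodes inside the region of interest
    rate_out : HFO rate over electrodes outside the region of interest
    Assumed form: A = (rate_in - rate_out) / (rate_in + rate_out).
    """
    total = rate_in + rate_out
    if total == 0:
        raise ValueError("asymmetry is undefined when no HFOs were detected")
    return (rate_in - rate_out) / total

all_inside = asymmetry(4.0, 0.0)    # every HFO inside the region
none_inside = asymmetry(0.0, 4.0)   # no HFO inside the region
equal_rates = asymmetry(2.5, 2.5)   # identical rates inside and outside
```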

Figure 4A compares the results with respect to the clinically-determined SOZ. To assess significance, we evaluated the response in the ten gold standard patients. The asymmetry for qHFOs was higher than for the nominal Staba algorithm in the SOZ in 9/10 patients (p=0.020, Wilcoxon signed rank test), though several of the asymmetries were close to or below zero. We next compared HFO rates with the resected volume, shown in Fig. 4B. While the RV is usually larger than the clinically-determined SOZ, it is also the best standard for determining the true epileptogenic zone: in any patient that has a Class I outcome, by definition the epileptogenic zone is a subset of the RV. Again, the qHFO algorithm improved the specificity to the RV in 9/10 patients with good surgery outcome, but the significance was greater due to the larger differences in magnitude (p=0.0037). Thus, one can conclude that the qHFO detection procedure significantly improved the specificity of HFOs to epileptogenic tissue, compared with the nominal Staba detector.
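For readers unfamiliar with the paired test used here, the following sketch computes an exact two-sided Wilcoxon signed rank p-value by enumerating all sign assignments, which is feasible for ten patients. It assumes no zero differences and no tied magnitudes. The asymmetry values are hypothetical, chosen only so that nine of ten differences are positive; they are not the study data.

```python
from itertools import product

def wilcoxon_signed_rank_exact(x, y):
    """Exact two-sided Wilcoxon signed rank test for paired samples.

    Assumes no zero differences and no ties among absolute differences.
    Returns (W, p), where W is the smaller of the two signed-rank sums.
    """
    diffs = [a - b for a, b in zip(x, y)]
    n = len(diffs)
    # Rank the differences by absolute magnitude (1 = smallest).
    order = sorted(range(n), key=lambda i: abs(diffs[i]))
    ranks = [0] * n
    for rank, i in enumerate(order, start=1):
        ranks[i] = rank
    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    w_minus = sum(r for r, d in zip(ranks, diffs) if d < 0)
    w = min(w_plus, w_minus)
    # Under the null hypothesis each rank is negative with probability 1/2,
    # so count the sign assignments whose rank sum is at most W.
    count = sum(
        1
        for signs in product((0, 1), repeat=n)
        if sum(r for r, s in zip(range(1, n + 1), signs) if s) <= w
    )
    return w, min(1.0, 2.0 * count / 2 ** n)

# Hypothetical per-patient asymmetry values (baseline detector vs. qHFOs).
baseline = [0.10, 0.00, -0.20, 0.30, 0.40, 0.10, -0.10, 0.20, 0.00, 0.25]
qhfo = [0.15, 0.10, -0.05, 0.50, 0.15, 0.40, 0.25, 0.60, 0.45, 0.75]
w_stat, p_value = wilcoxon_signed_rank_exact(qhfo, baseline)
```

Because the test uses ranks of the differences, the p-value depends on how large the single unfavorable difference is relative to the favorable ones, not just on the 9/10 count. That is why two comparisons with the same 9/10 split can yield different p-values.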

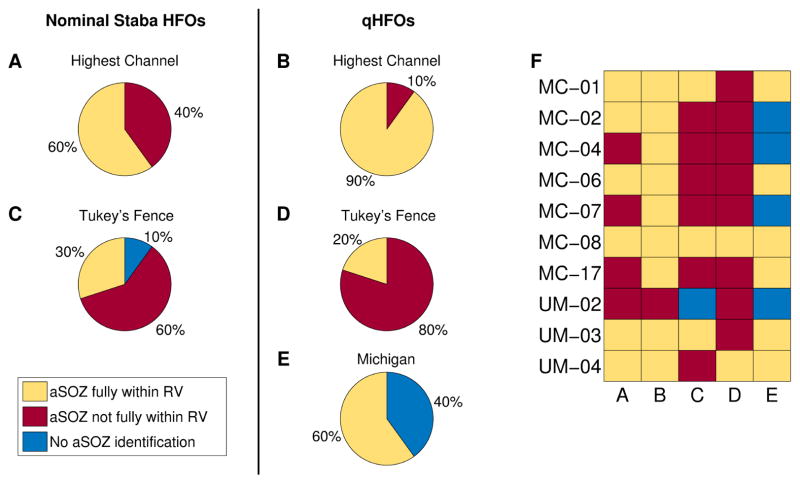

3.3. Algorithmically-determined SOZ results

As past studies have suggested that HFO rates can be used to identify SOZ, a practical method of assessing the potential clinical impact of the qHFO method is to determine how well the qHFOs correlate with SOZ in a clinically-relevant scenario. In doing so, it is crucial to use the same prospective algorithm and determine the clinical implications in each patient. We considered three algorithms to prospectively determine SOZ based on HFO rates, as described in Section 2.4.3, which were then compared with the RV. Results per patient were classified as either a) the algorithm did not identify any tissue as SOZ, b) the algorithmically-determined SOZ was fully within the RV, or c) the algorithmically-determined SOZ was not fully within the RV. These three outcomes have direct clinical interpretations: “fully within RV” means the algorithm correctly identified a subset of the RV from interictal data; “not fully within” means the algorithm would have suggested resecting tissue that was not within the true epileptogenic zone (i.e., a false positive prediction); and “no SOZ identified” means that the algorithm made no prediction at all for that patient. This categorization scheme places high emphasis on the avoidance of false positives, as even one false positive would imply the resection of tissue that should have been left intact. Patient MC-12 was not included in the following study as the entire region spanned by electrodes was resected.
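The three-way categorization above amounts to a subset test on channel labels, as the following sketch shows. The function name and the channel labels are hypothetical.

```python
def classify_prediction(predicted_soz, resected_volume):
    """Classify an algorithmically-determined SOZ against the resected volume.

    Both arguments are collections of channel labels. A single predicted
    channel outside the RV is enough to count as a false positive.
    """
    predicted = set(predicted_soz)
    if not predicted:
        return "no SOZ identified"
    if predicted <= set(resected_volume):
        return "fully within RV"
    return "not fully within RV"

rv = {"G1", "G2", "G3", "G9"}                         # hypothetical resected channels
abstained = classify_prediction([], rv)
correct = classify_prediction(["G2", "G3"], rv)
false_positive = classify_prediction(["G2", "D4"], rv)
```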

The algorithmically-determined SOZ using either the nominal Staba HFO rates (Figs. 5A and 5C) or qHFO rates (Figs. 5B, 5D and 5E) is compared with RV for the ten gold standard patients. For the Highest Channel algorithm (A, B), using qHFOs rather than nominal Staba detections improved the outcome (three additional patients correctly identified) but still left one patient with a false positive. The Tukey’s upper fence method (C, D) results in a large number of patients having SOZ identified outside of the RV (false positives), consistent with previous publications (Cho et al., 2014). Tukey’s method actually shows worse performance with qHFOs, because it ignores the shape of the HFO rate distribution. The Michigan method, using qHFOs, is able to identify SOZ in a majority of the patients (6/10). However, its method of avoiding false positives causes it to abstain from identifying the SOZ in 4/10 patients. There was no set of parameters that could make a correct prediction in all patients using a universal algorithm. The best example of this is UM-02, in which the single highest rate channel is outside the RV, so HFO rate cannot be used in that patient. In other words, making no prediction based upon HFO rate was actually the most correct result. It is important to note that the Michigan algorithm correctly identified more than one channel in half of the patients in which it identified SOZ (two channels in two patients, and five channels in one patient). Thus it has the versatility to identify a more complete SOZ than simply choosing a single highest-rate channel. In contrast, choosing the highest 2 or 3 channels in every patient produced even more false positives than the Highest Channel (not shown).
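The two comparison methods can be sketched as follows. The fence here is the conventional Tukey form, Q3 + 1.5 × IQR, with Python's default quartile interpolation; both are assumptions of this example, and the definitions actually used are those of Section 2.4.3. The Michigan method is not sketched because its details lie outside this section. Channel names and rates are hypothetical.

```python
from statistics import quantiles

def highest_channel(rates):
    """Return the channel with the highest HFO rate (always returns one)."""
    return max(rates, key=rates.get)

def tukey_upper_fence_channels(rates, k=1.5):
    """Return channels whose rate exceeds Q3 + k * IQR (conventional k = 1.5)."""
    q1, _, q3 = quantiles(list(rates.values()), n=4)
    fence = q3 + k * (q3 - q1)
    return sorted(ch for ch, rate in rates.items() if rate > fence)

# Hypothetical rates: one channel is clearly anomalous.
peaked = {"ch1": 1.0, "ch2": 1.2, "ch3": 0.9, "ch4": 1.1, "ch5": 1.0, "ch6": 8.0}
# Hypothetical rates: no channel stands out.
flat = {"a": 1.0, "b": 1.1, "c": 0.9, "d": 1.05}

peaked_fence = tukey_upper_fence_channels(peaked)
flat_fence = tukey_upper_fence_channels(flat)
flat_highest = highest_channel(flat)
```

The `flat` case illustrates the weakness of the Highest Channel rule: it names a channel even when no channel is anomalous. A fixed fence can abstain, but it looks only at quartiles and not at the overall shape of the rate distribution.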

Figure 5.

Comparison of the algorithmically-determined SOZ (aSOZ) with the resected volume (RV) in patients with ILAE Class I surgical outcome. Methods used were the channel with highest HFO rate (A and B), Tukey’s upper fence (C and D) and the Michigan method (E). Results are shown using both nominal Staba detections (A and C) and qHFOs (B, D, and E). Panel F presents the results per patient.

The results of the hold-out-one training procedure estimate the likely result of applying the method to a novel patient’s data, as described in Section 2.4.3. The results were a 52% chance of identifying SOZ within the RV, a 38% chance of not identifying any SOZ, and a 10% chance of a false identification. These results are similar to the final results shown in Fig. 5E, where a single optimal parameter choice is applied to the ten patients with Class I outcomes.

Though it is impossible to fully interpret our qHFO and Michigan algorithms in the four patients with imperfect outcomes, we also compared the results to identify trends. Even in this small dataset, there were four disparate situations in which surgery did not cure the seizures: the algorithmically-determined SOZ was not fully resected (MC-10); it was fully resected (MC-03); no prediction was made because no electrodes had anomalously high rates (MC-05); and no prediction was made because all electrodes had rates that were too high (UM-01). We note that, of these four patients, only in the case of MC-03 was the prediction potentially incorrect (i.e., resecting the predicted electrodes did not help). This may have been because of incomplete EEG coverage or because HFO rate simply was not a helpful biomarker in this patient.

4. Discussion

This automated algorithm efficiently identifies artifact-free HFOs in long-term EEG. We have validated the results in three distinct ways. First, the human reviewers showed significant agreement with the algorithm and with each other in identifying neural HFOs and rejecting artifacts. As expected, sensitivity was slightly lower than specificity. This was by design: the goal of the algorithm is to remove as many false positive detections as possible, as these might lead to unnecessary surgery.

Two additional methods of validation were automated. The results using both the direct measure (asymmetry) and the quasi-prospective, clinically relevant procedure (automated SOZ identification) showed a consistent picture: qHFO detections are highly correlated with epileptogenic tissue, and improved upon the baseline of nominal Staba HFO detections. It is important to note that the original Staba detector was not designed for the “noisy” recordings seen in long-term EEG; it is therefore remarkable how well its detections correlate with epileptic tissue even before removing artifacts with our method. However, the qHFO algorithm clearly improves upon that correlation with high statistical significance. Thus, qHFOs represent a fully automated method to identify a physiologically-relevant sample of HFOs. A single set of parameters, tuned on only a single patient’s data, was used for all patients. Regarding the universal nature of this algorithm, it is important to note that the human validation step only included 13/2000 HFOs from that patient (MC-07), so that validation was a legitimate evaluation of the generalizability of this algorithm.

One potential limitation of this algorithm is that it only rejected two types of noise: oscillations in the common average and large sharp transients (“pops”). Clearly, there are many other types of noise which can cause false HFO detections, and which are not addressed by this algorithm. One area for improvement would be to develop additional noise rejection methods, both to remove false positives and to provide an assessment of the accuracy of the detector. However, the results herein demonstrate that the two given artifact detectors do generalize across many patients and provide clinically-relevant qHFOs.

Though our comparisons with RV and SOZ were primarily done to show that the qHFOs were clinically important, this analysis also leads to other clinical questions. The results of Fig. 4 demonstrate that, in patients who became seizure free, the high HFO rate regions often had much better correlation with the RV than with the clinically-determined SOZ. In particular, two patients with good outcomes (MC-04 and MC-12) have asymmetry values of -1 for the clinically-determined SOZ, meaning that no qHFO detections were within that region. Both patients had low nominal HFO rates therein, all of which were redacted as artifacts. Asymmetry versus RV could not be assessed for MC-12 because the RV included all electrodes, but in MC-04 most qHFOs were within the RV. These results raise the question of whether HFOs may be better predictors of outcome than clinically-determined SOZ, as has been suggested previously (Jacobs et al., 2010). However, it is premature to make such a statement definitively, and proving it will require prospective clinical trials. This comparison is also somewhat unfair statistically, since the RV typically is larger than the clinically-determined SOZ, and it is not surprising that larger resections have a greater chance of seizure freedom. Nevertheless, these results lead to the question of whether clinical practice adequately identifies the epileptogenic zone. Theoretically, it is not required that the region causing the seizures be the first region to show epileptic activity on traditional EEG, just as a smoldering tree in a forest may initiate a fire but not be the first to show flames. New techniques may eventually establish better biomarkers of epileptogenic tissue, possibly HFOs. For instance, other work with high resolution electrodes suggests that the epileptogenic zone may be visible only at high frequencies on microelectrodes, and that traditional EEG signals may simply be an “inhibitory surround” in response to that signal (Schevon et al., 2012).

One important aspect of this study is that it included HFOs from every patient state, not just slow wave sleep. One of the primary reasons for limiting past studies to slow wave sleep was to have “clean” EEG signals and avoid artifacts; however, a major consequence is that HFOs from other brain states are not evaluated, and there are important differences among various brain states (Staba et al., 2004; Bagshaw et al., 2009; Dümpelmann et al. 2015). Our data included all HFOs equally from every brain state over the entire recording, and showed that there are useful correlations between HFOs and epilepsy even when averaging over all states of vigilance.

A comment regarding HFOs and interictal spikes is warranted. Although HFOs have garnered much attention as novel epilepsy biomarkers, they were originally identified as being associated with spikes, which have a much richer history as biomarkers of epilepsy. This leads to the question of whether HFO detection is any better than simple spike detection. Spike detection algorithms are notoriously inaccurate, but for this comparison they are not needed. In practice, clinicians perform a visual analysis quite similar to the Michigan method described herein: they ignore obvious artifacts and identify channels with anomalously high rates of spikes, which they report as a list of abnormal channels. Metadata regarding the clinically identified channels with high spike rates was available for only six of the ten patients with good surgery outcomes (MC-02, MC-12, MC-17, UM-02, UM-03, and UM-04). In all six of these patients, the high spike rate region included areas outside of the clinically-determined SOZ, and in four of the six, it extended beyond the RV. Only MC-12 and UM-04 had the entire high spike rate region within the RV, but MC-12 had the entire region spanned by electrodes resected. Thus, as has been accepted for many years, interictal spikes were inaccurate identifiers of the epileptogenic zone. The high HFO rate channels seem to be more specific to ictal onset tissue and yield more information than spikes alone, consistent with previous studies (Jacobs et al., 2008; Crépon et al., 2010; Jacobs et al., 2012).

Much of the HFO research has focused on retrospective comparisons of rates within and without the RV and/or SOZ, similar to Fig. 4. This is a very different question than designing an algorithm to identify SOZ prospectively. Although our method to identify SOZ prospectively was not the main focus of this paper, it shows potential of being highly specific and generally applicable. The challenge with such an algorithm is three-fold. First, every patient will always have a channel with the highest HFO rate—even those without epilepsy (Blanco et al., 2011). Second, it is imperative to determine how many electrodes are abnormal, a number that will obviously vary from patient to patient. Third, the clinical consequence of a false positive prediction is drastic—unnecessary surgical removal of tissue. Our algorithm was tuned specifically to address each of these concerns, especially to avoid false-positives. We compared it with two alternative methods: the highest rate channel and Tukey’s upper fence method. Both of those had false positive predictions. The highest rate method will force a choice, regardless of whether the epileptogenic zone is present within the electrode coverage. The Tukey method was recently used for just this purpose (Cho et al., 2014), but in that paper as well it identified channels outside the RV (i.e. false positive predictions). Thus, we deem both of these methods unacceptable for clinical translation, as prospective use would result in unnecessary resections. In contrast, our method used a single parameter set to identify the potential epileptogenic zone in six of ten patients. In the remaining four patients, the data were too uncertain to make a determination. The ability to avoid a faulty prediction is a feature, rather than a weakness, of this algorithm. 
The hold-out-one validation used to optimize the parameters, where no data was used for both testing and training, yielded similar results to the application of the optimal parameters to the full cohort. This suggests that the method will generalize well to novel patients. However, this tuning was performed on a small cohort and needs to be tested on a larger set of patients. Overall, the results demonstrated that it is possible for a real-time, universal HFO algorithm to detect HFOs reliably and identify SOZ without any per patient tuning in long-term EEG recordings.

5. Conclusions

The main objective of this paper has been the presentation and validation of an automated, generalizable method to improve the quality of HFO detections: reducing detections from artifacts without applying any per patient tuning or human review of data quality. The resulting detections are called qHFOs. The redaction of artifacts was validated by human review, and the effect of this method was assessed using two methods: 1) asymmetry values, a direct but retrospective method, and 2) prospective algorithms to determine SOZ based upon HFO rate. This required development of a novel method that estimates the distribution of HFO rate among electrodes to identify those that are likely to be within the epileptogenic zone. This method is both prospective and directly clinically relevant. These algorithms allowed analysis of a cohort of 23 patients, and detected nearly 1.5 million qHFOs. According to both measures, the qHFOs improved the correlation of HFO rates with epileptogenic tissue, with high statistical significance. These methods can be used in both research and clinical settings, moving HFO-biomarkers one step closer to clinical translation.

HIGHLIGHTS.

An unsupervised, automated detector runs faster than real time and identified nearly 1.5 million HFOs that were spatially correlated with epileptic tissue.

An artifact-rejection method improves precision of the HFO detector and allows continuous recording from all patient states.

A novel automated method to identify Seizure Onset Zone based upon interictal HFO rate avoids false positives in a pseudo-prospective analysis.

Acknowledgments

Research reported in this publication was supported by the NIH National Center for Advancing Translational Sciences (NCATS) under grant number 2-UL1-TR000433 and the NIH National Institute of Neurological Disorders and Stroke (NINDS) under grant number K08-NS069783. The IEEG Portal is supported by the NINDS under grant number 1-U24-NS063930-01.

LIST OF ABBREVIATIONS

- HFO: high frequency oscillation

- qHFO: quality HFO

- SOZ: seizure onset zone

- RV: resected volume

Footnotes

Conflict of Interest

None of the authors have any conflict of interest to be disclosed.


Contributor Information

Stephen V. Gliske, Email: sgliske@umich.edu.

Zachary T. Irwin, Email: irwinz@umich.edu.

Kathryn A. Davis, Email: Kathryn.davis@uphs.upenn.edu.

Kinshuk Sahaya, Email: ksahaya@uams.edu.

Cynthia Chestek, Email: cchestek@umich.edu.

References

- Akiyama T, McCoy B, Go CY, Ochi A, Elliott IM, Akiyama M, Donner EJ, Weiss SK, Snead OC 3rd, Rutka JT, Drake JM, Otsubo H. Focal resection of fast ripples on extraoperative intracranial EEG improves seizure outcome in pediatric epilepsy. Epilepsia. 2011;52:1802–11. doi: 10.1111/j.1528-1167.2011.03199.x.

- Andrade-Valença L, Mari F, Jacobs J, Zijlmans M, Olivier A, Gotman J, Dubeau F. Interictal high frequency oscillations (HFOs) in patients with focal epilepsy and normal MRI. Clin Neurophysiol. 2012;123:100–5. doi: 10.1016/j.clinph.2011.06.004.

- Bagshaw AP, Jacobs J, LeVan P, Dubeau F, Gotman J. Effect of sleep stage on interictal high-frequency oscillations recorded from depth macroelectrodes in patients with focal epilepsy. Epilepsia. 2009;50:617–28. doi: 10.1111/j.1528-1167.2008.01784.x.

- Bénar CG, Chauvière L, Bartolomei F, Wendling F. Pitfalls of high-pass filtering for detecting epileptic oscillations: a technical note on “false” ripples. Clin Neurophysiol. 2010;121:301–310. doi: 10.1016/j.clinph.2009.10.019.

- Blanco JA, Stead M, Krieger A, Viventi J, Marsh WR, Lee KH, Worrell GA, Litt B. Unsupervised classification of high-frequency oscillations in human neocortical epilepsy and control patients. J Neurophysiol. 2010;104:2900–2912. doi: 10.1152/jn.01082.2009.

- Blanco JA, Stead M, Krieger A, Stacey W, Maus D, Marsh E, Viventi J, Lee KH, Marsh R, Litt B, Worrell GA. Data mining neocortical high-frequency oscillations in epilepsy and controls. Brain. 2011;134:2948–2959. doi: 10.1093/brain/awr212.

- Bragin A, Wilson CL, Staba RJ, Reddick M, Fried I, Engel J Jr. Interictal high-frequency oscillations (80–500 Hz) in the human epileptic brain: entorhinal cortex. Ann Neurol. 2002;52:407–415. doi: 10.1002/ana.10291.

- Bragin A, Mody I, Wilson CL, Engel J Jr. Local generation of fast ripples in epileptic brain. J Neurosci. 2002;22:2012–21. doi: 10.1523/JNEUROSCI.22-05-02012.2002.

- Bragin A, Benassi SK, Kheiri F, Engel J Jr. Further evidence that pathologic high-frequency oscillations are bursts of population spikes derived from recordings of identified cells in dentate gyrus. Epilepsia. 2011;52:45–52. doi: 10.1111/j.1528-1167.2010.02896.x.

- Cho JR, Koo DL, Joo EY, Seo DW, Hong SC, Jiruska P, Hong SB. Resection of individually identified high-rate high-frequency oscillations region is associated with favorable outcome in neocortical epilepsy. Epilepsia. 2014;55:1872–83. doi: 10.1111/epi.12808.

- Crépon B, Navarro V, Hasboun D, Clemenceau S, Martinerie J, Baulac M, Adam C, Le Van Quyen M. Mapping interictal oscillations greater than 200 Hz recorded with intracranial macroelectrodes in human epilepsy. Brain. 2010;133:33–45. doi: 10.1093/brain/awp277.

- Dümpelmann M, Jacobs J, Schulze-Bonhage A. Temporal and spatial characteristics of high frequency oscillations as a new biomarker in epilepsy. Epilepsia. 2015;56:197–206. doi: 10.1111/epi.12844.

- Edelvik A, Rydenhag B, Olsson I, Flink R, Kumlien E, Källén K, Malmgren K. Long-term outcomes of epilepsy surgery in Sweden: a national prospective and longitudinal study. Neurology. 2013;81:1244–1251. doi: 10.1212/WNL.0b013e3182a6ca7b.

- Engel J Jr, Bragin A, Staba R, Mody I. High-frequency oscillations: What is normal and what is not? Epilepsia. 2009;50:598–604. doi: 10.1111/j.1528-1167.2008.01917.x.

- Gardner AB, Worrell GA, Marsh E, Dlugos D, Litt B. Human and automated detection of high-frequency oscillations in clinical intracranial EEG recordings. Clin Neurophysiol. 2007;118:1134–1143. doi: 10.1016/j.clinph.2006.12.019.

- Haegelen C, Perucca P, Châtillon CE, Andrade-Valença L, Zelmann R, Jacobs J, Collins DL, Dubeau F, Olivier A, Gotman J. High-frequency oscillations, extent of surgical resection, and surgical outcome in drug-resistant focal epilepsy. Epilepsia. 2013;54:848–857. doi: 10.1111/epi.12075.

- Jacobs J, LeVan P, Chander R, Hall J, Dubeau F, Gotman J. Interictal high-frequency oscillations (80–500 Hz) are an indicator of seizure onset areas independent of spikes in the human epileptic brain. Epilepsia. 2008;49:1893–907. doi: 10.1111/j.1528-1167.2008.01656.x.

- Jacobs J, Zijlmans M, Zelmann R, Chatillon CE, Hall J, Olivier A, Dubeau F, Gotman J. High-frequency electroencephalographic oscillations correlate with outcome of epilepsy surgery. Ann Neurol. 2010;67:209–220. doi: 10.1002/ana.21847.

- Jacobs J, Staba R, Asano E, Otsubo H, Wu JY, Zijlmans M, Mohamed I, Kahane P, Dubeau F, Navarro V, Gotman J. High-frequency oscillations (HFOs) in clinical epilepsy. Prog Neurobiol. 2012;98:302–15. doi: 10.1016/j.pneurobio.2012.03.001.

- Jirsch JD, Urrestarazu E, LeVan P, Olivier A, Dubeau F, Gotman J. High-frequency oscillations during human focal seizures. Brain. 2006;129:1593–608. doi: 10.1093/brain/awl085.

- Kerber K, Dümpelmann M, Schelter B, Le Van P, Korinthenberg R, Schulze-Bonhage A, Jacobs J. Differentiation of specific ripple patterns helps to identify epileptogenic areas for surgical procedures. Clin Neurophysiol. 2014;125:1339–1345. doi: 10.1016/j.clinph.2013.11.030.

- Lachenbruch PA, Mickey MR. Estimation of the error rates in discriminant analysis. Technometrics. 1968;10:1–11.

- The International Epilepsy Electrophysiology Portal. Available at: https://www.ieeg.org. [Accessed May, 2014].

- Lüders HO. Textbook of Epilepsy Surgery. London: Informa Healthcare; 2008.

- Ludwig KA, Miriani RM, Langhals NB, Joseph MD, Anderson DJ, Kipke DR. Using a common average reference to improve cortical neuron recordings from microelectrode arrays. J Neurophysiol. 2009;101:1679–1689. doi: 10.1152/jn.90989.2008.

- Malinowska U, Bergey GK, Harezlak J, Jouny CC. Identification of seizure onset zone and preictal state based on characteristics of high frequency oscillations. Clin Neurophysiol. 2014. doi: 10.1016/j.clinph.2014.11.007. Epub 2014.

- Matsumoto A, Brinkmann BH, Stead SM, Matsumoto J, Kucewicz MT, Marsh WR, Meyer F, Worrell G. Pathological and physiological high-frequency oscillations in focal human epilepsy. J Neurophysiol. 2013;110:1958–64. doi: 10.1152/jn.00341.2013.

- Modur PN, Zhang S, Vitaz TW. Ictal high-frequency oscillations in neocortical epilepsy: implications for seizure localization and surgical resection. Epilepsia. 2011;52:1792–801. doi: 10.1111/j.1528-1167.2011.03165.x.

- Nariai H, Nagasawa T, Juhász C, Sood S, Chugani HT, Asano E. Statistical mapping of ictal high-frequency oscillations in epileptic spasms. Epilepsia. 2011;52:63–74. doi: 10.1111/j.1528-1167.2010.02786.x.

- Noe K, Sulc V, Wong-Kisiel L, Wirrell E, Van Gompel JJ, Wetjen N, Britton J, So E, Cascino GD, Marsh WR, Meyer F, Horinek D, Giannini C, Watson R, Brinkmann BH, Stead M, Worrell GA. Long-term outcomes after nonlesional extratemporal lobe epilepsy surgery. JAMA Neurol. 2013;70:1003–1008. doi: 10.1001/jamaneurol.2013.209.

- Ochi A, Otsubo H, Donner EJ, Elliott I, Iwata R, Funaki T, Akizuki Y, Akiyama T, Imai K, Rutka JT, Snead OC 3rd. Dynamic changes of ictal high-frequency oscillations in neocortical epilepsy: using multiple band frequency analysis. Epilepsia. 2007;48:286–96. doi: 10.1111/j.1528-1167.2007.00923.x.

- Okanishi T, Akiyama T, Tanaka S, Mayo E, Mitsutake A, Boelman C, Go C, Snead OC 3rd, Drake J, Rutka J, Ochi A, Otsubo H. Interictal high frequency oscillations correlating with seizure outcome in patients with widespread epileptic networks in tuberous sclerosis complex. Epilepsia. 2014;55:1602–10. doi: 10.1111/epi.12761.

- Park SC, Lee SK, Che H, Chung CK. Ictal high-gamma oscillation (60–99 Hz) in intracranial electroencephalography and postoperative seizure outcome in neocortical epilepsy. Clin Neurophysiol. 2012;123(6):1100–10. doi: 10.1016/j.clinph.2012.01.008.

- Pearce A, Wulsin D, Blanco JA, Krieger A, Litt B, Stacey WC. Temporal changes of neocortical high-frequency oscillations in epilepsy. J Neurophysiol. 2013;110:1167–79. doi: 10.1152/jn.01009.2012.

- Schevon CA, Weiss SA, McKhann G Jr, Goodman RR, Yuste R, Emerson RG, Trevelyan AJ. Evidence of an inhibitory restraint of seizure activity in humans. Nat Commun. 2012;3:1060. doi: 10.1038/ncomms2056.

- Silverman BW. Density Estimation for Statistics and Data Analysis. New York: Chapman & Hall; 1986.

- Staba RJ, Wilson CL, Bragin A, Fried I, Engel J Jr. Quantitative analysis of high-frequency oscillations (80–500 Hz) recorded in human epileptic hippocampus and entorhinal cortex. J Neurophysiol. 2002;88:1743–1752. doi: 10.1152/jn.2002.88.4.1743.

- Staba RJ, Wilson CL, Bragin A, Jhung D, Fried I, Engel J Jr. High-frequency oscillations recorded in human medial temporal lobe during sleep. Ann Neurol. 2004;56:108–15. doi: 10.1002/ana.20164.

- Stacey WC, Kellis S, Greger B, Butson CR, Patel PR, Assaf T, Mihaylova T, Glynn S. Potential for unreliable interpretation of EEG recorded with microelectrodes. Epilepsia. 2013;54(8):1391–401. doi: 10.1111/epi.12202.

- Usui N, Terada K, Baba K, Matsuda K, Nakamura F, Usui K, Yamaguchi M, Tottori T, Umeoka S, Fujitani S, Kondo A, Mihara T, Inoue Y. Clinical significance of ictal high frequency oscillations in medial temporal lobe epilepsy. Clin Neurophysiol. 2011;122:1693–700. doi: 10.1016/j.clinph.2011.02.006.

- Wagenaar JB, Worrell GA, Ives Z, Matthias D, Litt B, Schulze-Bonhage A. J Clin Neurophysiol. 2015;32:235–9. doi: 10.1097/WNP.0000000000000159.

- Worrell GA, Gardner AB, Stead SM, Hu S, Goerss S, Cascino GJ, Meyer FB, Marsh R, Litt B. High-frequency oscillations in human temporal lobe: simultaneous microwire and clinical macroelectrode recordings. Brain. 2008;131:928–37. doi: 10.1093/brain/awn006.

- Worrell GA, Jerbi K, Kobayashi K, Lina JM, Zelmann R, Le Van Quyen M. Recording and analysis techniques for high-frequency oscillations. Prog Neurobiol. 2012;98:265–278. doi: 10.1016/j.pneurobio.2012.02.006.

- Wu JY, Sankar R, Lerner JT, Matsumoto JH, Vinters HV, Mathern GW. Removing interictal fast ripples on electrocorticography linked with seizure freedom in children. Neurology. 2010;75:1686–94. doi: 10.1212/WNL.0b013e3181fc27d0.

- Yu S, Lin Z, Liu L, Pu S, Wang H, Wang J, Xie C, Yang C, Li M, Shen H. Long-term outcome of epilepsy surgery: A retrospective study in a population of 379 cases. Epilepsy Res. 2014;108:555–564. doi: 10.1016/j.eplepsyres.2013.12.004.

- Zelmann R, Zijlmans M, Jacobs J, Châtillon CE, Gotman J. Improving the identification of High Frequency Oscillations. Clin Neurophysiol. 2009;120:1457–64. doi: 10.1016/j.clinph.2009.05.029.

- Zelmann R, Mari F, Jacobs J, Zijlmans M, Dubeau F, Gotman J. A comparison between detectors of high frequency oscillations. Clin Neurophysiol. 2012;123:106–116. doi: 10.1016/j.clinph.2011.06.006.

- Zijlmans M, Jacobs J, Kahn YU, Zelmann R, Dubeau F, Gotman J. Ictal and interictal high frequency oscillations in patients with focal epilepsy. Clin Neurophysiol. 2011;122:664–71. doi: 10.1016/j.clinph.2010.09.021.