Abstract

Background

Informed consent for clinical research includes two components: informed consent documents (ICD) and informed consent conversations (ICC). Readability software has been used to help simplify the language of the ICD, but rarely employed to assess the language during the ICC, which may influence the quality of informed consent. This analysis was completed to determine if length and reading levels of transcribed ICCs are lower than their corresponding ICDs for selected clinical trials, and to assess whether investigator experience affected use of simpler language and comprehensiveness.

Methods

In this prospective study, ICCs were audio-recorded at 6 institutions when families were offered participation in pediatric phase I oncology trials. Word count, Flesch-Kincaid Grade Level (FKGL) and Flesch Reading Ease Score (FRES) of ICCs were compared to those of the corresponding ICDs. We also assessed how frequently investigators addressed 8 pre-specified critical consent elements during the ICC.

Results

Sixty-nine unique physician/protocol pairs were identified. Overall, ICCs contained fewer words (4,677 vs. 6,364; p=0.0016), had lower FKGL (6 vs. 9.7; p<0.0001) and higher FRES (77.8 vs. 56.7; p<0.0001) than their respective ICDs, but were more likely to omit critical consent elements, such as voluntariness (55%) and dose limiting toxicities (26%). Years of investigator experience was not correlated with reliably covering critical elements or decreased linguistic complexity.

Conclusions

Clinicians use more understandable language during ICCs than is found in the corresponding ICDs, but less reliably cover elements critical to fully informed consent. Focused efforts at providing communication training for clinician-investigators should be undertaken to optimize the synergy between the ICD and the conversation.

Keywords: ethics, informed consent, pediatric, oncology, clinical trials, consent documents, phase 1

Introduction

Informed consent is essential for the ethical conduct of clinical research.1 However, an extensive body of literature catalogs the many known deficiencies in the consent process, which involves holding a consent conversation and providing a consent document. Patients do not always understand the nature of clinical trials after having the consent conversation, even when “enhanced” adjuncts such as multimedia aids are used.2,3 Consent documents are frequently criticized for their excessive length, lack of organization and complex language that exceeds the reading ability of most Americans.4–8 While there is consensus that these forms should be at or below an eighth grade reading level, studies have shown the readability of an average consent document to be above a 10th grade reading level, with a mean range of 13–17 pages.9

Many of these shortcomings have been tolerated because the document, while important, is meant to complement the more crucial conversation, which lies at the heart of the informed consent process.2,10 However, how much more clearly and plainly clinician-investigators communicate via the spoken word during consent conversations compared to the written word of the document is unknown.

Therefore, we transcribed consent conversations between investigators and parents of children with cancer who were eligible for phase I studies and performed readability analysis on the investigator-articulated component of the transcript of the consent conversation. We then compared the readability and length of these conversations with those of their corresponding consent documents. We also assessed how frequently clinician-investigators discussed critical elements of consent with parents. Finally, we analyzed whether increasing years of experience of the clinician-investigators correlated with the simplicity of the language they used during the consent conversation as well as their comprehensiveness. We hypothesized that increasing years of experience would correlate with a higher likelihood of using simpler language and being more comprehensive.

Materials and Methods

Results discussed in this study are part of a larger multisite study, the Phase I Informed Consent (POIC) project, designed to understand communication between physicians and families as well as comprehension and decision-making of parents and older pediatric patients.11 Six pediatric cancer phase I centers participated. This study was approved by Institutional Review Boards at each data collection site and at Cleveland Clinic, Cleveland, Ohio (administrative home).

English-speaking families considering participation in an open phase I pediatric (age 0 to 21 years) cancer trial were eligible. Participating families agreed to observation and audio-recording of consent conversations regarding phase I research. A total of 85 families consented to participate in the study. Of the 85 cases, 34 clinician-investigators conducted consent conversations for 33 distinct protocols at the 6 participating sites. If the same clinician-investigator presented the same clinical trial more than once, then only the first conversation was included in the analysis, while the others were excluded. As a result, 69 cases of unique physician/protocol pairs were identified and included in this report.

Informed consent conferences were audiotaped and transcribed according to a predetermined transcription guide, then verified by a second study team member. For this analysis, only the investigator-spoken words were counted; questions or words spoken by the patient or family were excluded. The transcribed conversation was analyzed using the Microsoft Word 2010® grammar check software, from which the following variables were collected: (1) word count; (2) Flesch-Kincaid Grade Level (FKGL); and (3) Flesch Reading Ease Score (FRES). The FKGL estimates the grade level of education required to understand a document. The FRES measures the ease of reading; a higher score reflects easier readability. These readability tools are validated instruments and are often used in the educational realm as well as in the assessment of insurance documents.12,13 All values reported here were generated by the software; the authors did not manually convert any of the grade level numbers to reading ease scores, or vice versa. The corresponding IRB-approved informed consent document (ICD) for each clinical trial was also analyzed for these three parameters using identical software and then compared to the transcribed conversation.
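For readers unfamiliar with these indices, the standard published formulas can be sketched as follows. This is a minimal illustration and not the procedure used in this study: the values reported here were generated by Microsoft Word, whose rules for counting sentences and syllables may differ. The function names `count_syllables` and `readability`, and the vowel-group syllable heuristic, are assumptions introduced only for this example.

```python
import re


def count_syllables(word: str) -> int:
    """Rough heuristic (an assumption, not Word's rule): count vowel groups."""
    word = word.lower()
    count = len(re.findall(r"[aeiouy]+", word))
    # Discount a trailing silent 'e' (e.g. "dose"), but not "-le" or "-ee".
    if word.endswith("e") and not word.endswith(("le", "ee")) and count > 1:
        count -= 1
    return max(count, 1)


def readability(text: str) -> dict:
    """Return word count, FRES and FKGL using the standard Flesch formulas."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    words = re.findall(r"[A-Za-z']+", text)
    if not sentences or not words:
        raise ValueError("text must contain at least one sentence and one word")
    syllables = sum(count_syllables(w) for w in words)
    words_per_sentence = len(words) / len(sentences)
    syllables_per_word = syllables / len(words)
    return {
        "word_count": len(words),
        # Higher FRES means easier text.
        "fres": 206.835 - 1.015 * words_per_sentence - 84.6 * syllables_per_word,
        # FKGL approximates a U.S. school grade level.
        "fkgl": 0.39 * words_per_sentence + 11.8 * syllables_per_word - 15.59,
    }


if __name__ == "__main__":
    # Hypothetical sentences, not taken from any study transcript or document.
    spoken = "The cat sat on the mat."
    written = "Participation necessitates comprehensive understanding."
    print(readability(spoken))
    print(readability(written))
```

Both indices depend on the same two quantities (words per sentence and syllables per word) with different weights, which is why shorter sentences and shorter words raise the FRES and lower the FKGL at the same time.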

Years of experience, obtained by self-report, was available for 30 of the 34 clinician-investigators. Critical consent elements were defined as a selection of topics that are uniformly included in ICDs and are believed to be sufficiently fundamental to the informed consent process for phase I oncology trials that their omission would connote a suboptimal consent process. These included: study purpose; study design, including dosing cohorts and dose escalation procedures; potential side effects; dose limiting toxicities; voluntariness; and withdrawal rights. Level of parental education was ascertained in 47 of 69 cases, and was divided into two groups for analysis (those who had not attended or completed college vs. those who had completed college or graduate degrees) based on their distribution.

The paired sign test was used to compare the length and readability of the transcribed conversations with their corresponding documents. Years of investigator experience was correlated with these outcomes using Spearman correlation coefficients and with how frequently investigators covered critical consent elements using logistic regression analysis. The Mann-Whitney test was used to compare readability scores by level of parental education.
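The two simplest of these procedures can be sketched in a few lines. The block below is a hedged illustration using only the Python standard library; it is not the statistical software used in this study, and the function names and example values are hypothetical. The sign test is implemented as an exact two-sided binomial test on the signs of the paired differences (ties dropped), and the Spearman coefficient as a Pearson correlation of average ranks.

```python
from math import comb


def sign_test(pairs):
    """Exact two-sided paired sign test; returns the p-value."""
    diffs = [a - b for a, b in pairs if a != b]  # ties carry no sign
    n = len(diffs)
    if n == 0:
        raise ValueError("all pairs are tied")
    positives = sum(d > 0 for d in diffs)
    k = min(positives, n - positives)
    # P(X <= k) for X ~ Binomial(n, 0.5), doubled for a two-sided test.
    tail = sum(comb(n, i) for i in range(k + 1)) / 2 ** n
    return min(1.0, 2 * tail)


def average_ranks(values):
    """Rank values from 1..n, giving tied values their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks


def spearman_rho(x, y):
    """Spearman correlation: Pearson correlation of the average ranks."""
    rx, ry = average_ranks(x), average_ranks(y)
    n = len(rx)
    mx, my = sum(rx) / n, sum(ry) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    sx = sum((a - mx) ** 2 for a in rx) ** 0.5
    sy = sum((b - my) ** 2 for b in ry) ** 0.5
    return cov / (sx * sy)  # undefined if either variable is constant


if __name__ == "__main__":
    # Hypothetical (document FKGL, conversation FKGL) pairs for illustration.
    fkgl_pairs = [(9.7, 6.0), (10.1, 5.5), (8.9, 7.2), (9.4, 6.8), (11.0, 6.1)]
    print(sign_test(fkgl_pairs))
    # Hypothetical years of experience vs. conversation FKGL.
    print(spearman_rho([2, 8, 15, 20, 30], [5.5, 6.0, 6.1, 6.8, 7.2]))
```

The sign test uses only the direction of each paired difference, which makes it robust to the skewed word counts seen in conversation transcripts, at the cost of some statistical power.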

Results

Of the 69 patients included in this study, 42 (61%) were male, and the median age was 10.6 years. The most common tumor types were brain and central nervous system, followed by soft tissue and bone tumors (Table 1). Of the 30/34 clinician-investigators for whom we had years of experience data, 16 were women (53%) and 27 were attending physicians whereas 3 were fellows. The median years of clinical experience for these investigators was 15 (range, 1–32).

Table 1.

Patient and Trial Characteristics (N=69)

| Variable | Number (%) |
|---|---|
| Mean Patient Age (range) | 10.62 (1–20) |
| Patient Gender | |
| • Male | 42 (60.9%) |
| • Female | 27 (39.1%) |
| Patient Diagnosis | |
| • Bone and Soft Tissues | 19 (27.5%) |
| • Brain and CNS | 24 (34.8%) |
| • Leukemia (Blood) | 7 (10.1%) |
| • Neuroblastoma | 12 (17.4%) |
| • Other | 7 (10.1%) |
| Type of Agent Studied | |
| • Receptor/signal transduction modifier | 20 (61%) |
| • Cytotoxic chemotherapy | 7 (21%) |
| • Immunomodulator | 5 (15%) |
| • Antiangiogenic | 1 (3%) |
| Trial Information | |
| • Unique phase I trials discussed across study sites | 33 |
| • Unique clinician-investigators discussing phase I trials | 35 |

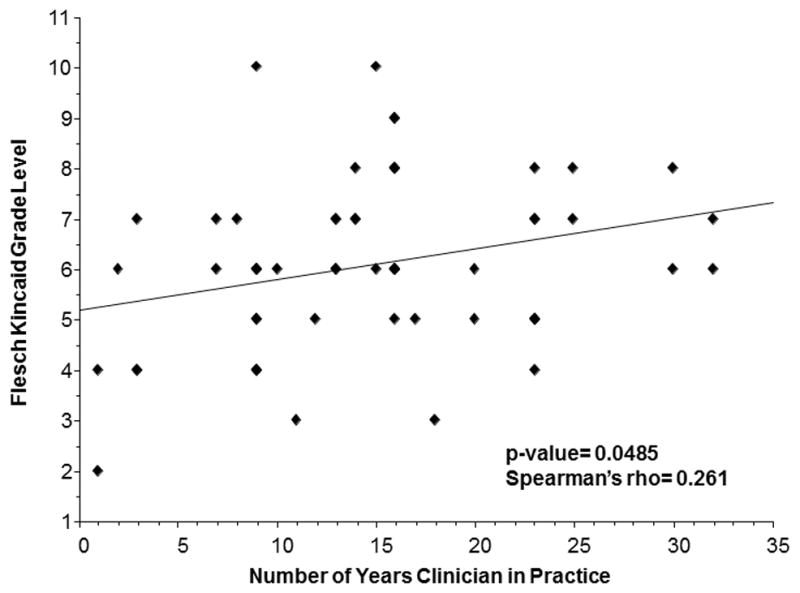

While the investigators nearly always discussed potential side effects (91%), study purpose (86%), and safety mechanisms (87%), they less reliably discussed other critical elements of consent, including explanation of voluntariness (55%), withdrawal rights (58%), dosing cohorts (44%), dose limiting toxicities (26%) and dose escalation (52%). Clinician-investigators with more years of clinical experience appeared to use more complex language with a higher FKGL than those who were less experienced (Figure 1). This increasing linguistic complexity was not accounted for by the conversation being more comprehensive, as investigators who omitted the critical consent elements had higher median years of prior clinical experience than those that did discuss those topics during the conversation. On univariate analysis, however, none of these differences was statistically significant aside from dose limiting toxicity (Table 2).

Figure 1.

Flesch Kincaid Grade Level of Informed Consent Conversation by Number of Years Clinician in Practice (N=58)

Table 2.

Association of Clinical Experience of Clinician-Investigators and Frequency of Discussing Critical Elements of Consent during Informed Consent Conversations

| Critical Element of Consent (CEC) | Median years of experience of investigators who discussed CEC during ICC | Median years of experience of investigators who did not discuss CEC during ICC | P-value |
|---|---|---|---|
| Study Purpose | 14 | 20 | 0.48 |
| Risk Side effects | 15 | 16 | 0.27 |
| Voluntariness | 14 | 16 | 0.24 |
| Withdrawal rights | 14.5 | 16.5 | 0.10 |
| Confidentiality | 13.5 | 17 | 0.09 |
| Dosing Cohorts | 13 | 16 | 0.07 |
| Dose escalation | 14 | 16 | 0.96 |
| Dose limiting Toxicity | 9 | 16 | 0.006 |

Overall, the transcribed conversations were significantly easier to read and used fewer words than the corresponding documents (Table 3). The median FKGL for the conversations was nearly four grades lower than that for the corresponding document (6 vs. 9.7; p<0.0001) and the corresponding median FRES was significantly higher (77.8 vs. 56.7; p<0.0001). Table 4 provides context for these values by comparing them to other familiar sample texts. No significant differences were observed for the median FKGL (6 vs. 6; p=0.45) or the FRES (78.5 vs. 77.8; p=0.84) for parents with a college degree or higher vs. those with less than a college education, respectively. However, clinician-investigators used significantly more words during the ICC with parents who had less than a college education than with those who had a college degree or more (5,673 vs. 3,825; p=0.02).

Table 3.

Comparison of length and readability of the informed consent document vs. the transcribed informed consent conversation

| Source | Median Word Count (Range) | Median Flesch Reading Ease Score (Range) | Median Flesch-Kincaid Grade Level (Range) |
|---|---|---|---|
| ICD | 6,364 (4,185 – 8,873) | 56.7 (49.2 – 69.4) | 9.7 (5.8 – 11.7) |
| ICC | 4,677 (1,876 – 15,680) | 77.8 (66.3 – 96) | 6 (2 – 10) |
| p-value* | 0.0016 | <0.0001 | <0.0001 |

Table 4.

Readability Statistics of the ICC and ICD compared to Sample Texts

| Sample Texts | Flesch Reading Ease Score (FRES)* | Flesch-Kincaid Grade Level (FKGL)+ |
|---|---|---|
| Median ICD | 56.7 | 9.7 |
| Median ICC | 77.8 | 6 |
| Buzzy Bee and Friends (a children’s book) | 88.6 | 2.0 |
| Reader's Digest | 65 | ---- |
| Time Magazine | 52 | ---- |
| Text of this article | 30.8 | 15.1 |
| Harvard Law Review | Low 30s | ---- |

The highest achievable score for the FRES is 121 (text with only one syllable words). General range: 90–100 = Very easy (4th grade); 80–90 = Easy (5th); 70–80 = Fairly Easy (6th); 60–70 = Standard (7–8th grade); 50–60 = Fairly Difficult (Some H.S.); 30–50 = Difficult (H.S.-College); 0–30 = Very Difficult (College and more).

The FKGL uses the same inputs as the FRES (average sentence length and average syllables per word) to produce a number corresponding to the approximate grade level of education required to understand the text.

Easy reading range is 6–10. The average person reads at the level 9. Anything above 17th level can be difficult for university students.
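The FRES bands listed in the Table 4 footnote can be expressed as a simple lookup. The sketch below is illustrative only; the function name `fres_band` is hypothetical, and treating each lower bound as inclusive is an assumption, since the footnote does not say how boundary scores are classified.

```python
# (lower bound, label) pairs taken from the Table 4 footnote, highest first.
FRES_BANDS = [
    (90, "Very easy (4th grade)"),
    (80, "Easy (5th)"),
    (70, "Fairly Easy (6th)"),
    (60, "Standard (7-8th grade)"),
    (50, "Fairly Difficult (Some H.S.)"),
    (30, "Difficult (H.S.-College)"),
]


def fres_band(score: float) -> str:
    """Map a Flesch Reading Ease Score to its descriptive band.

    Assumption: each band includes its lower bound; scores below 30
    (including negative scores) fall in the lowest band.
    """
    for lower_bound, label in FRES_BANDS:
        if score >= lower_bound:
            return label
    return "Very Difficult (College and more)"


if __name__ == "__main__":
    # Median values reported in Table 3.
    print("ICC:", fres_band(77.8))  # Fairly Easy (6th)
    print("ICD:", fres_band(56.7))  # Fairly Difficult (Some H.S.)
```

Applied to the medians in Table 3, the conversations fall two bands above the documents.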

Discussion

Obstacles to obtaining informed consent are inherent to both components of the consent process: the document and the conversation. This study examines both of these critical consent tools and reveals that clinician-investigators use simpler and more understandable language during the consent conversation than that used in the consent document and that this is independent of parental education level. This may occur at the expense of covering all of the critical elements of consent as required in the consent document for regulatory approval. Moreover, and perhaps counter-intuitively, increasing experience of the clinician-investigators did not correlate with more frequent use of simpler language or a higher likelihood of covering the critical elements of consent. These findings underscore the complex roles that the consent document and conversation play in the informed consent process and highlight some of the relative advantages and shortcomings of each.

Complex language used in both the document and conversation has been recognized as a major obstacle to achieving patient understanding.5 Substandard health literacy is common in the United States, with half the population reading at or below an 8th grade reading level.14 While substantial efforts have been made to mitigate barriers to patient understanding in routine clinical care,15,16 in the research setting in general, and oncology clinical trials in particular, the need to ensure fully informed consent in trial patients becomes all the more acute and crucial.1 To compare the linguistic complexity of consent documents and conversations, we used validated readability tools that have been extensively studied in the context of consent documents. We applied them in a novel fashion to transcripts of the consent conversation of phase I pediatric oncology trials to ascertain the length and linguistic complexity of the language used by clinician-investigators. The results demonstrate that investigators can, and do, provide informed consent using significantly simpler language and fewer words than that used in consent documents.

To our knowledge, this is the first study in the oncology informed consent literature that sought to apply readability measures designed for the written word to the transcribed spoken word. While methodologically imperfect, this approach has been used before in other contexts. McCarthy and colleagues recorded resident physicians providing informed consent to standardized patients in a simulated clinical scenario, transcribed the conversation and analyzed the data using the FKGL and the FRES.17 They found significant differences in these values between the language of the consenting resident physician and the simulated patient spokesperson. Factors such as the use of passive voice, increasing number of syllables per word and increasing number of words per sentence all lead to inferior scores by these validated readability calculators. It follows that even when applied to transcripts of the spoken word, these instruments should detect varying levels of linguistic complexity. Our data show that in this sample, simpler language and sentence constructs are used during the consent conversation when compared to the corresponding document.

However, a closer comparison of the content covered in the documents and conversations reveals that a number of critical elements, even those as fundamental to research ethics as voluntariness and the rights of withdrawal, were far too frequently omitted from the conversation. The document for each trial, by contrast, invariably covers these critical elements. This highlights an important potential pitfall of the consent conversation. While clinician-investigators use simpler and more comprehensible language during the conversation, it may not be as comprehensive. Simplicity and comprehensiveness are both important goals for informed consent.

Another important question that arose in interpreting these findings relates to the possibility that the experience of the clinician-investigator could influence the complexity of language used and how reliably he or she discusses the major critical elements of consent. Our data suggest that less experienced clinician-investigators used language at a lower grade level, and may have also been more likely to cover the critical elements of consent than their more seasoned colleagues. While the latter was not a statistically significant finding, the apparent trend raises the possibility that clinicians more recently trained may have an easier time being both comprehensive and simple due to more formal communication-related education that has been incorporated into postgraduate curricula in recent years.18

In synthesizing these results, the following perspective emerges. Fundamentally, clinician-investigators appear to be able to provide informed consent in easy-to-understand language with the spoken word more reliably than the written word. Clinician-investigators who conduct consent conferences on a routine basis would agree that the consent discussion overshadows the consent document as the primary method of educating and informing patients about the nature and details of a clinical study. However, the language used during consent conversations varies considerably from one investigator to another, and this appears to be independent of experience or parental education level. It follows that the ultimate determinant of how investigators carry out informed consent is likely a blend of their intrinsic communication abilities and how they first learned and observed the consent process.

These observations may provide a key to understanding one of the most impactful ways to improve the informed consent process. We have previously shown that investigators can be taught how to follow a specific systematic approach to presenting clinical studies and can enhance the quality of their consent with training.19 Training investigators, perhaps during their years of residency/fellowship, may be the best way to hone their communication skills and establish good consenting habits (i.e., being simple and comprehensive) early in their career. Our data suggest that clinician-investigators are likely to continue to use those skills as their career continues.

Optimizing the informed consent process requires that we improve the synergy between the document and conversation. The heart of consent should remain with the conversation, where clinician-investigators appear to speak in language that is understandable to patients. Clinician-investigators should make better use of the document during the conversation, which can serve as an indispensable tool that helps to ensure that all critical elements are discussed and a resource that can be reviewed and revisited as a comprehensive supplement to the conversation. Enhancing this synergistic role will require training clinician-investigators how to best communicate during the conversation, and simplifying the corresponding documents to read more like executive outlines than business contracts. Perhaps most importantly, merely communicating the information in a simple and clear way does not guarantee that the participant/parent will understand the content of that conversation. Ultimately, quality informed consent relies on adequate participant understanding which often requires enhanced interviewing techniques (e.g. “teachback”) as part of the ICC – elements that are not addressed in this paper.

There are several limitations to this study. Firstly, applying the FRES and FKGL to transcripts of spoken words, while intuitively reasonable, uses these tools differently than how they were designed. Moreover, consent documents tend to be more comprehensive in their scope than a conversation, which likely explains some of the differences in length between the two. We also limited this analysis to unique physician-study pairs and did not include second and/or third ICCs that a single investigator had with multiple participants for the same study. While we therefore were unable to account for intra-investigator variability, the small number of such repeat conversations precluded any meaningful analysis in this regard, and they were excluded to maintain a homogeneous dataset. Perhaps the most substantive limitation of this analysis is its narrow focus on language complexity, which is just one parameter that factors into the complex endpoint of patient understanding.20,21 The Institute of Medicine recognized in its 2004 report that health-related oral literacy (i.e., the spoken word) is as vital as, or more vital than, health-related print literacy (i.e., the written word) to patients being fully informed.22 Oral literacy involves more than just vocabulary skills. As measured by the Oral Literacy Demand framework described by Roter and colleagues, the pace, interactivity, and density of the consent conversation can significantly impact patient understanding independent of the language used.17,23,24 Ensuring clear, simplified language may also be overshadowed by whether investigators provided ample opportunity for questions and clarifications, elicited feedback to identify knowledge gaps, and were attentive to the psychosocial state of the patient.25 While our study did not capture these elements of the conversation, it did examine the starting point for any dialogue being understandable, namely the language used. Moreover, our finding that linguistic complexity varies significantly among investigators and is not better among more seasoned clinician-investigators may have meaningful implications for other oral communication skills vital to providing high quality consent.

Moving forward, good communication skills, which are the bedrock of good informed consent, are teachable, and implementing systematic training programs for future investigators may be the best way to cultivate their capabilities and assure high quality consenting skills. At the same time, better incorporating the document into the conversation to be used as a “consent prop” to ensure all critical elements pertaining to informed consent are addressed, as well as a safeguard to ensure patients can refer back to a standard information source, can also help improve the overall quality of informed consent in clinical research. Ultimately, additional research is needed to validate whether these interventions would translate into improved participant understanding of the research content, which lies at the heart of informed consent.

Conclusions

Investigators in pediatric oncology trials conducted consent conversations using fewer words and language that was easier to understand than that used in the corresponding written consent documents, but more often omitted critical elements of the consent process. Increasing years of experience of investigators was not associated with reduced linguistic complexity or fewer omissions of critical elements of consent. Focused efforts at providing communication training for clinician-investigators early in their careers, coupled with enhanced integration of the consent document into the consent conversation, hold promise as a means to optimize the synergy between these two components of informed consent.

Acknowledgments

Funding: This work was supported by National Institutes of Health (NIH) Grant No. R01CA122217 via the National Cancer Institute and the Eunice Kennedy Shriver National Institute of Child Health and Human Development.

The authors would like to thank Doctors Steve Joffe and Paul Appelbaum for their helpful comments on an earlier version of the manuscript. We are also grateful to Amy Moore for assistance with medical editing. Finally, we would like to thank the clinicians, parents, and children who participated in this research.

Footnotes

Conflicts of Interest: None

References

- 1. Emanuel EJ, Wendler D, Grady C. What makes clinical research ethical? JAMA. 2000;283:2701–11. doi: 10.1001/jama.283.20.2701.

- 2. Flory J, Emanuel E. Interventions to improve research participants’ understanding in informed consent for research: a systematic review. JAMA. 2004;292:1593–601. doi: 10.1001/jama.292.13.1593.

- 3. Hoffner B, Bauer-Wu S, Hitchcock-Bryan S, Powell M, Wolanski A, Joffe S. “Entering a Clinical Trial: Is it Right for You?”: a randomized study of The Clinical Trials Video and its impact on the informed consent process. Cancer. 2012;118:1877–83. doi: 10.1002/cncr.26438.

- 4. Grossman SA, Piantadosi S, Covahey C. Are informed consent forms that describe clinical oncology research protocols readable by most patients and their families? J Clin Oncol. 1994;12:2211–5. doi: 10.1200/JCO.1994.12.10.2211.

- 5. Jefford M, Moore R. Improvement of informed consent and the quality of consent documents. Lancet Oncol. 2008;9:485–93. doi: 10.1016/S1470-2045(08)70128-1.

- 6. Kodish E, Eder M, Noll RB, et al. Communication of randomization in childhood leukemia trials. JAMA. 2004;291:470–5. doi: 10.1001/jama.291.4.470.

- 7. Sharp SM. Consent documents for oncology trials: does anybody read these things? Am J Clin Oncol. 2004;27:570–5. doi: 10.1097/01.coc.0000135925.83221.b3.

- 8. Paasche-Orlow MK, Taylor HA, Brancati FL. Readability standards for informed-consent forms as compared with actual readability. N Engl J Med. 2003;348:721–6. doi: 10.1056/NEJMsa021212.

- 9. Koyfman SA, Agre P, Carlisle R, et al. Consent form heterogeneity in cancer trials: the cooperative group and institutional review board gap. J Natl Cancer Inst. 2013;105:947–53. doi: 10.1093/jnci/djt143.

- 10. Nishimura A, Carey J, Erwin PJ, Tilburt JC, Murad MH, McCormick JB. Improving understanding in the research informed consent process: a systematic review of 54 interventions tested in randomized control trials. BMC medical ethics. 2013;14:28. doi: 10.1186/1472-6939-14-28.

- 11. Cousino MK, Zyzanski SJ, Yamokoski AD, et al. Communicating and understanding the purpose of pediatric phase I cancer trials. J Clin Oncol. 2012;30:4367–72. doi: 10.1200/JCO.2012.42.3004.

- 12. Flesch R. A new readability yardstick. Journal of Applied Psychology. 1948;32:221–33. doi: 10.1037/h0057532.

- 13. Glinsky A. How valid is the Flesch readability formula? American Psychologist. 1948;3:261.

- 14. National Assessment of Adult Literacy. National Center for Educational Statistics, US Department of Education; [accessed 3/18/14]. http://nces.ed.gov/naal/

- 15. Badarudeen S, Sabharwal S. Assessing readability of patient education materials: current role in orthopaedics. Clinical orthopaedics and related research. 2010;468:2572–80. doi: 10.1007/s11999-010-1380-y.

- 16. Cherla DV, Sanghvi S, Choudhry OJ, Jyung RW, Eloy JA, Liu JK. Readability assessment of Internet-based patient education materials related to acoustic neuromas. Otology & neurotology: official publication of the American Otological Society, American Neurotology Society [and] European Academy of Otology and Neurotology. 2013;34:1349–54. doi: 10.1097/MAO.0b013e31829530e5.

- 17. McCarthy DM, Leone KA, Salzman DH, Vozenilek JA, Cameron KA. Language use in the informed consent discussion for emergency procedures. Teaching and learning in medicine. 2012;24:315–20. doi: 10.1080/10401334.2012.715257.

- 18. Green JA, Gonzaga AM, Cohen ED, Spagnoletti CL. Addressing health literacy through clear health communication: A training program for internal medicine residents. Patient education and counseling. 2014. doi: 10.1016/j.pec.2014.01.004.

- 19. Yap TY, Yamokoski A, Noll R, Drotar D, Zyzanski S, Kodish ED. A physician-directed intervention: teaching and measuring better informed consent. Acad Med. 2009;84:1036–42. doi: 10.1097/ACM.0b013e3181acfbcd.

- 20. Davis TC, Williams MV, Marin E, Parker RM, Glass J. Health literacy and cancer communication. CA Cancer J Clin. 2002;52:134–49. doi: 10.3322/canjclin.52.3.134.

- 21. Joffe S, Cook EF, Cleary PD, Clark JW, Weeks JC. Quality of informed consent in cancer clinical trials: a cross-sectional survey. Lancet. 2001;358:1772–7. doi: 10.1016/S0140-6736(01)06805-2.

- 22. Health Literacy: A Prescription to End Confusion. The National Academies Press; 2004.

- 23. Roter DL, Erby LH, Larson S, Ellington L. Assessing oral literacy demand in genetic counseling dialogue: preliminary test of a conceptual framework. Social science & medicine. 2007;65:1442–57. doi: 10.1016/j.socscimed.2007.05.033.

- 24. Tait AR, Voepel-Lewis T, Nair VN, Narisetty NN, Fagerlin A. Informing the uninformed: optimizing the consent message using a fractional factorial design. JAMA pediatrics. 2013;167:640–6. doi: 10.1001/jamapediatrics.2013.1385.

- 25. Tamariz L, Palacio A, Robert M, Marcus EN. Improving the informed consent process for research subjects with low literacy: a systematic review. Journal of general internal medicine. 2013;28:121–6. doi: 10.1007/s11606-012-2133-2.