Abstract

A hotly debated question in word learning concerns the conditions under which newly learned words compete or interfere with familiar words during spoken word recognition. This has recently been described as a key marker of the integration of a new word into the lexicon and was thought to require consolidation (Dumay & Gaskell, Psychological Science, 18, 35–39, 2007; Gaskell & Dumay, Cognition, 89, 105–132, 2003). Recently, however, Kapnoula, Packard, Gupta, and McMurray (Cognition, 134, 85–99, 2015) showed that interference can be observed immediately after a word is first learned, implying very rapid integration of new words into the lexicon. It is an open question whether these kinds of effects derive from episodic traces of novel words or from more abstract and lexicalized representations. Here we addressed this question by testing inhibition for newly learned words using training and test stimuli presented in different talker voices. During training, participants were exposed to a set of nonwords spoken by a female speaker. Immediately after training, we assessed the ability of the novel word forms to inhibit familiar words, using a variant of the visual world paradigm. Crucially, the test items were produced by a male speaker. An analysis of fixations showed that even with a change in voice, newly learned words interfered with the recognition of similar known words. These findings show that lexical competition effects from newly learned words spread across different talker voices, which suggests that newly learned words can be sufficiently lexicalized, and abstract with respect to talker voice, without consolidation.

Keywords: Word learning, Lexical integration, Episodic memory, Interlexical inhibition, Visual world paradigm

It is well known that words inhibit each other during spoken word recognition (Dahan, Magnuson, Tanenhaus, & Hogan, 2001; Gaskell & Dumay, 2003; Leach & Samuel, 2007; Luce & Pisoni, 1998; McQueen, Norris, & Cutler, 1994). As listeners hear a word, such as sandal, it inhibits similar words, such as sandwich and Santa. This raises an important question concerning word learning: Under what conditions do these inhibitory links form? This property of lexical inhibition is considered a strong test of whether a new word is integrated into the existing lexicon; if a newly learned word is integrated, it should compete with other, previously known words (see also Leach & Samuel, 2007).

Various studies have used measures of lexical competition and interference to examine the necessary conditions for novel words to become integrated in the lexicon. Gaskell and Dumay (2003) used a phoneme-monitoring task to implicitly teach participants novel words (e.g., cathedruke) that were highly similar to known words (e.g., cathedral). After learning, Gaskell and Dumay assessed whether the novel words were integrated into the lexicon by looking for inhibitory effects of the novel word on the recognition of the corresponding known word, using lexical decision and pause detection reaction times. They found that participants could accurately recognize the novel words immediately after training; however, lexical integration was only observed several days after the first training session.

These results suggest that the initial stage of word-form learning may be qualitatively distinct from the subsequent, full integration of a word into the lexicon. They also suggest that the first stage can be reached immediately after initial exposure to a novel word, but sleep or some form of consolidation may be necessary for the latter stage to be reached. Consistent with this, Dumay and Gaskell (2007, 2012) found that novel-word integration, in the form of lexical competition, is only observed after a period of sleep (controlling for the amount of time between training and test). In addition, Tamminen, Payne, Stickgold, Wamsley, and Gaskell (2010) found that overnight lexical integration is correlated with spindle activity observed during sleep.

One way to account for these results is by assuming that the two stages of word learning described above are subserved by different learning mechanisms involving different representations. The early phase of word learning may rely on episodic memory traces of words, which permit words to be recognized without being integrated with the existing lexicon. However, consolidation leads to truly lexicalized representations, which permit newly learned words to interfere with familiar words (Bakker, Takashima, van Hell, Janzen, & McQueen, 2014; Davis & Gaskell, 2009; Gaskell & Dumay, 2003). Dumay and Gaskell (2012) provided direct support for this hypothesis. They showed that participants were faster to spot embedded words (e.g., muck) in newly learned words (e.g., lirmuckt) immediately after learning, but this pattern reversed the next day. This reversal was taken as evidence of facilitation from episodic memory traces early on, turning into interference from postconsolidation lexicalized items (see also Dumay, Damian, & Bowers, 2012).

Consistent with this, Bakker, Takashima, van Hell, Janzen, and McQueen (2014) examined the intermodal transfer of integration, testing whether newly learned orthographic word forms inhibit familiar words during auditory presentation (and vice versa). They found that when words were acquired via auditory presentation, they interfered with known words during both auditory and orthographic testing, but only after a 24-hr consolidation period. However, when items were trained only orthographically, an even longer consolidation period was required for cross-modal (orthographic→auditory) inhibition effects to arise. These results suggest that consolidation is a gradual process, while still being consistent with the dual-stage model described above.

Other studies, however, have shown evidence of more immediate integration. Fernandes, Kolinsky, and Ventura (2009) demonstrated that words learned via a statistical-learning paradigm (which included more word repetitions and demanded deeper perceptual analysis to extract them) can interfere with familiar words (measured with lexical decision) without sleep. Lindsay and Gaskell (2013) also showed that interference can be observed without sleep when the to-be-learned words are interleaved with familiar words during training. These studies, along with studies of semantic integration measured by event-related potentials (Borovsky, Elman, & Kutas, 2012; Borovsky, Kutas, & Elman, 2010), point to a converging story: New words can be integrated into the lexicon immediately in certain training regimes.

However, these findings could also be based on limitations of the testing regimes; immediate integration could be achieved more often than thought, but it might be difficult to measure. For example, the lexical decision task, a commonly used measure of lexical competition, can be insensitive to competition among familiar words (Marslen-Wilson & Warren, 1994). Other measures, including pause detection and word spotting, exhibit a complex relationship with interference; they can be sensitive to the overall activation in the system or to differing response thresholds, and as a result may not directly capture interference (Mattys & Clark, 2002). This raises the possibility that the null effects for lexical integration commonly found before sleep may not constitute evidence for lack of integration (cf. Kapnoula, Packard, Gupta, & McMurray, 2015).

Kapnoula et al. (2015) thus assessed lexical competition effects from novel words using a variant of the visual world paradigm that is known to be much more sensitive to inhibition (Dahan et al., 2001). This paradigm employs a splicing manipulation to temporarily boost competitor activation. This strengthens its inhibitory effects on the target, making inhibition easier to detect. Activation specifically for the target (as opposed to processing difficulties more broadly) can then be measured fairly precisely using eye movements to its referent (Dahan et al., 2001). Using this paradigm, Kapnoula et al. found evidence for lexical competition immediately after training across two experiments. This is inconsistent with the two-stage model of word learning described earlier, since it suggests that lexicalization can be reached immediately after the first exposure, without the need for consolidation. These results do not rule out the possibility that consolidation plays an important role in word learning (a point we will return to later); however, they demonstrate that consolidation is not a uniquely necessary condition for competition effects to arise.

Whereas Kapnoula et al. (2015) proposed that integration can be achieved immediately, such results could also be achieved in a two-stage model if competition also arises within episodic storage. Episodic traces of the words could be stored without consolidation, be activated at test during word recognition, and compete with known words for the ultimate response. One way to test this relies on the fact that episodic memory is highly sensitive to idiosyncratic details, such as the talker voice. For example, in research on familiar-word recognition, exposure to a set of words in a specific talker’s voice leads to better recognition and memory if the test stimuli are presented in the same voice (as opposed to in a novel one; Bradlow, Nygaard, & Pisoni, 1999; Creel, Aslin, & Tanenhaus, 2008, Exp. 1; Goldinger, 1996, 1998; Palmeri, Goldinger, & Pisoni, 1993). This predicts that the early stages of episodic encoding may be highly context-bound (e.g., talker-specific) and will not abstract across acoustic (talker) variation.

In line with these ideas, Brown and Gaskell (2014) reported talker-specific effects on a recognition memory task immediately after exposure to a set of novel words; however, the following day, both talker-specificity and lexical-competition effects were observed. This makes it clear that early memory representations for new words can include talker voice information; however, because lexical competition was assessed with lexical decision, it is unclear whether immediate integration might have been observed with the more sensitive subphonemic mismatch measure proposed by Kapnoula et al. (2015).

We tested the hypothesis that immediate integration is driven by episodic representations, using a design motivated by classic work on indexical effects on memory and speech perception. Participants were exposed to novel words using two implicit-learning tasks. They were then tested using the cross-splicing/visual world paradigm of Kapnoula et al. (2015) to determine whether these newly learned words competed with known words. Crucially, our testing stimuli were recorded by a different talker (with a different gender) than those used in training. A lack of evidence for interference under these circumstances would suggest that Kapnoula et al.’s findings were due to episodic traces engaging in competition. However, a continued competition effect would suggest that sufficiently lexicalized and abstract representations can be formed immediately and without the need for consolidation.

Method

Participants

A total of 68 native speakers of English participated and received either a gift card, a check, or course credit as compensation. Fourteen of them were excluded from the analyses due to problems with the eyetracking.

Design

The training stimuli consisted of 20 nonwords (the same used in Kapnoula et al., 2015). Each participant was trained on ten nonwords, and the rest of the nonwords were used as control stimuli for testing. Across participants, each nonword served as both a trained and an untrained (control) item. The nonwords were consonant–vowel–consonant (CVC), CCVC, or CVCC¹ monosyllabic items ending in a stop consonant. Within each triplet (a nonword grouped with two real words, as described below), all final stop consonants had the same voicing and manner and only differed in place of articulation.

Immediately after training, we evaluated the competition induced by the newly learned words on previously known words using a visual world paradigm (VWP) task based on that of Dahan et al. (2001). Each nonword was grouped with two real words for testing: a target and a real-word competitor. This resulted in 20 triplets of one nonword and two words (e.g., jod, job, jog). To construct the auditory stimuli for testing, we cross-spliced the target word in each triplet (e.g., job) with either a different recording of the same word (jobb: matching splice), the other word in the triplet (jogb: other-word splice), or the nonword (jodb: nonword splice), creating three versions of the target word, each of which served as the auditory stimulus in a different trial in the VWP task.

During testing, participants saw four pictures and heard an auditory stimulus. The target word was presented in one of the three versions described above (matching splice, other-word splice, or nonword splice). Since the nonword used for the nonword splice could be either trained or untrained, there were four conditions in testing: matching splice, other-word splice, trained-nonword splice, and untrained-nonword splice. Fixations to the picture of the target (e.g., job) were used as a measure of the lexical activation of the target. Our primary hypothesis was that if the trained items were lexicalized, activation of the target would be less in the trained-nonword (since the activated newly learned word would inhibit the target) than in the untrained-nonword splice condition.
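The four test conditions follow mechanically from the item that supplied the spliced stem and from the participant's training list. The mapping can be sketched as below; this is an illustration only, and the function name, argument names, and the example training set are hypothetical rather than taken from the study materials.

```python
def splice_condition(splice_source, target, competitor, nonword, trained_nonwords):
    """Label a test trial by the item whose stem was spliced onto the target's release.

    All names here are illustrative assumptions, not the authors' code.
    """
    if splice_source == target:
        return "matching"
    if splice_source == competitor:
        return "other-word"
    if splice_source == nonword:
        # The same nonword splice counts as trained or untrained
        # depending on the participant's training list.
        return "trained-nonword" if nonword in trained_nonwords else "untrained-nonword"
    raise ValueError(f"{splice_source!r} is not part of this triplet")


# Triplet from the text: nonword jod, target job, competitor jog.
trained = {"jod"}  # hypothetical: this participant was trained on jod
labels = [
    splice_condition(source, "job", "jog", "jod", trained)
    for source in ("job", "jog", "jod")
]
print(labels)
```

For a participant who was not trained on jod, the same nonword-spliced stimulus would instead be labeled as the untrained-nonword condition, which is what makes the trained versus untrained comparison a within-item contrast.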

Training: Procedure and stimuli

During training, participants performed two tasks with the trained nonwords. Training was split into 11 epochs, each containing a block of ten listen-and-repeat trials, followed by a block of ten stem-completion trials (10 nonwords × 11 epochs × 2 blocks = 220 trials). During listen and repeat, participants listened to each nonword and then repeated it out loud. During stem completion, they listened to the first part of each nonword (e.g., jo- for the nonword jod) and repeated the whole word. Each nonword was presented once per block in a random order. Training lasted approximately 10–20 min.
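The structure of the training session can be sketched as a simple schedule generator. This is a minimal illustration of the epoch and block layout described above; the nonword labels are placeholders, and the function name and the seeding are assumptions, not details of the original experiment software.

```python
import random


def build_training_schedule(nonwords, n_epochs=11, seed=None):
    """Return a list of (epoch, task, nonword) trials.

    Each epoch holds one listen-and-repeat block followed by one
    stem-completion block; every nonword appears once per block
    in a freshly shuffled order.
    """
    rng = random.Random(seed)
    schedule = []
    for epoch in range(1, n_epochs + 1):
        for task in ("listen-and-repeat", "stem-completion"):
            block = list(nonwords)
            rng.shuffle(block)
            schedule.extend((epoch, task, word) for word in block)
    return schedule


# Placeholder labels standing in for the ten trained nonwords.
trained_nonwords = [f"nonword_{i:02d}" for i in range(1, 11)]
schedule = build_training_schedule(trained_nonwords, seed=0)
print(len(schedule))  # 10 nonwords x 11 epochs x 2 blocks = 220
```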

The training stimuli were recorded by a female native speaker of American English in a sound-attenuated room, sampling at 44100 Hz. Four different recordings were used for the listen-and-repeat stimuli, and four for the stem completion. All stimuli were delivered over high-quality headphones.

Testing: Procedure and stimuli

Immediately after training, the testing began. After familiarization with the pictures, participants were calibrated with an SR Research EyeLink II head-mounted eyetracker and given instructions, and then they began the task. During each trial, participants saw four pictures (the four items in a VWP set), heard the label for one, and clicked the corresponding picture.

Twenty VWP stimulus sets² were constructed by grouping each target word from each triplet (e.g., job) with three semantically unrelated filler words, one of which shared the initial phoneme with the target word (e.g., jet, duck, book). Each of the 80 items was equally likely to be the target word across trials. The experimental target words were presented in three different spliced forms (matching splice, other-word splice, or nonword splice). Since each participant was trained on half of the nonwords, the nonword splice created two conditions (trained/untrained). Filler words were spliced with another token of the same word or with one of two nonwords. All words were recorded in a sound-attenuated room at 44100 Hz by a different talker (a male, native speaker of American English) than the one used for the training stimuli, and splicing was always at the zero-crossing closest to the onset of the release. The visual stimuli were drawings of each target item, developed using a standard lab procedure (Apfelbaum, Blumstein, & McMurray, 2011; McMurray, Samelson, Lee, & Tomblin, 2010) in which several candidates are compared by a focus group to determine the best referent of a given word, and this is subsequently edited to ensure uniform color and style and to remove distracting elements.

Eyetracker recording and analysis

We used an SR Research EyeLink II head-mounted eyetracker to record eye movements at 250 Hz. Participants were calibrated with the standard 9-point display. The real-time record of gaze in screen coordinates was automatically parsed into saccades and fixations using the default psychophysical parameters, and adjacent saccades and fixations were combined into a single “look” starting at the onset of a saccade and ending at the offset of the fixation. Boundaries of the ports containing visual stimuli were extended by 100 pixels in order to account for noise and/or head-drift in the eyetracking record.
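The two processing steps described above (merging a saccade with the fixation that follows it into a "look", and assigning a look to a picture using ports widened by 100 pixels) can be sketched as follows. This is an illustrative sketch, not the analysis code used in the study: the class names, the screen coordinates, and the assumption that the widened ports do not overlap are all hypothetical.

```python
from dataclasses import dataclass

PORT_MARGIN_PX = 100  # extension of the port boundaries reported in the text


@dataclass(frozen=True)
class Look:
    """A saccade plus the fixation it lands in, treated as one event."""
    saccade_onset_ms: int
    fixation_offset_ms: int
    x: float  # gaze position in screen coordinates
    y: float

    @property
    def duration_ms(self):
        return self.fixation_offset_ms - self.saccade_onset_ms


@dataclass(frozen=True)
class Port:
    """Rectangular screen region holding one picture."""
    name: str
    left: float
    top: float
    right: float
    bottom: float

    def contains(self, x, y, margin=PORT_MARGIN_PX):
        return (self.left - margin <= x <= self.right + margin
                and self.top - margin <= y <= self.bottom + margin)


def fixated_port(x, y, ports, margin=PORT_MARGIN_PX):
    """Return the name of the first port whose widened bounds contain (x, y), else None."""
    for port in ports:
        if port.contains(x, y, margin):
            return port.name
    return None


# Hypothetical layout with two of the four pictures.
ports = [Port("target", 100, 100, 400, 400), Port("competitor", 880, 100, 1180, 400)]
look = Look(saccade_onset_ms=612, fixation_offset_ms=900, x=450, y=95)
print(fixated_port(look.x, look.y, ports), look.duration_ms)
```

In this example the gaze position falls just outside the picture itself but inside the widened port, so the look still counts toward the target.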

Results

Accuracy and reaction times (RTs)

Participants’ accuracy was 87.04 % (SD = 10.89 %) in training (on the novel words) and 99.35 % (SD = 1.55 %) in testing (for familiar words), suggesting that participants learned the words and performed the tasks without difficulty. Accuracy at test was at ceiling for all splice conditions, so we did not conduct further analyses (see Table 1 for accuracy and RT results per splice condition). For all of the subsequent analyses, we excluded incorrect trials.

Table 1.

Accuracy and reaction time mean values and standard deviations per splice condition at test

| Splice condition | Accuracy, Mean | Accuracy, SD | RT (ms), Mean | RT (ms), SD |
|---|---|---|---|---|
| Matching | 1 | .01 | 1,253 | 213 |
| Untrained-nonword | .99 | .04 | 1,299 | 245 |
| Trained-nonword | .99 | .03 | 1,384 | 224 |
| Other-word | .99 | .02 | 1,395 | 234 |

RTs were evaluated as a function of splice condition with three linear mixed-effects models, fit using the lme4 package (version 1.1-7; Bates, Maechler, & Dai, 2009) in R (R Development Core Team, 2009). All p values were computed using the Satterthwaite approximation for degrees of freedom, as implemented in lmerTest (version 2.0-11; Kuznetsova, Brockhoff, & Christensen, 2013). Before examining the fixed effects, we evaluated models with various random-effect structures and found that, across models, the most complex structure supported by the data was the one with only random intercepts for both subjects and items.
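A model with random intercepts for subjects and items, as described above, has the following general form. The notation is introduced here for illustration and is the standard textbook form of such a model, not a transcription of the original analysis scripts: $y_{si}$ is the response of subject $s$ to item $i$, and $c_{k,si}$ is the value of the $k$-th contrast code on that trial.

```latex
y_{si} = \beta_0 + \sum_{k} \beta_k \, c_{k,si} + u_s + w_i + \varepsilon_{si},
\qquad
u_s \sim \mathcal{N}(0, \sigma_u^2), \quad
w_i \sim \mathcal{N}(0, \sigma_w^2), \quad
\varepsilon_{si} \sim \mathcal{N}(0, \sigma^2)
```

Here $u_s$ and $w_i$ shift the intercept for each subject and each item, while the effect of each contrast, $\beta_k$, is assumed to be the same across subjects and items.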

The first model evaluated interference among familiar words using a scheme similar to that of Dahan et al. (2001), excluding the trained-nonword splice condition and coding the remaining three splice conditions with two contrasts: (1) matching versus untrained-nonword splice (−.5/+.5) and (2) untrained-nonword versus other-word splice (−.5/+.5). This analysis showed no RT difference between the matching (M = 1,253 ms) and untrained-nonword splice conditions (M = 1,299 ms), F(1, 1536.3) = 2.07, p = .15. Participants were, however, faster in the untrained-nonword than in the other-word splice condition (M = 1,395 ms), F(1, 1533.5) = 8.61, p = .003, suggesting an effect of lexical inhibition among the familiar words.

The second model examined the effect of training by comparing the two nonword splice conditions with a single contrast (−.5/+.5 for untrained vs. trained). We found that the RTs in the trained-nonword splice condition (M = 1,384 ms) were significantly slower than those in the untrained-nonword splice condition, F(1, 997.5) = 6.65, p = .01, suggesting significant interference from the trained word on the familiar word.
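The −.5/+.5 coding used for this comparison has a convenient interpretation: the slope on the contrast equals the difference between the two condition means, and the intercept equals their midpoint. The sketch below shows this with an ordinary least-squares fit on made-up RTs. It deliberately ignores the random intercepts for subjects and items, so it illustrates the coding scheme only and does not reproduce the reported model; the data values and function name are hypothetical.

```python
# Contrast code from the text: untrained = -.5, trained = +.5
CONTRAST = {"untrained-nonword": -0.5, "trained-nonword": +0.5}


def ols_line(x, y):
    """Ordinary least-squares slope and intercept for one predictor."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


# Made-up RTs (ms), three trials per condition, for illustration only.
conditions = ["untrained-nonword"] * 3 + ["trained-nonword"] * 3
rts = [1280.0, 1300.0, 1320.0, 1360.0, 1380.0, 1400.0]

codes = [CONTRAST[c] for c in conditions]
slope, intercept = ols_line(codes, rts)
print(slope, intercept)  # slope = trained mean - untrained mean; intercept = midpoint
```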

Finally, in the third model, we excluded the matching splice and untrained-nonword splice conditions and compared the trained-nonword and other-word splice condition, using a similar coding scheme. These conditions were not significantly different, F < 1, suggesting that newly learned words behave similarly to previously known words. Overall, the RT analyses suggest that the same items were exerting different effects on familiar words as a function of whether they were trained. This offers some evidence for immediate integration of newly learned words.

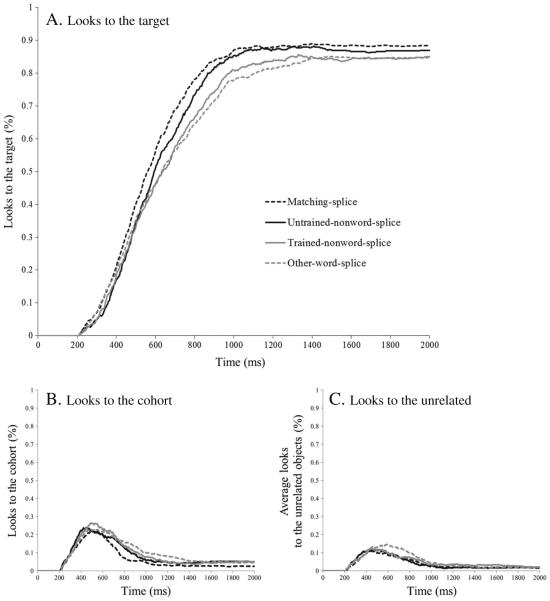

Analyses of fixations

We next examined the fixation data from the testing phase. We first computed the proportion of trials on which participants were fixating the target at each 4-ms time slice as a function of splicing condition (Fig. 1). Target fixations built faster for the matching splice condition, followed by the untrained-nonword, trained-nonword, and other-word splice conditions. The difference between the untrained-nonword and trained-nonword splice conditions is consistent with the hypothesis that the newly learned words interfered with familiar-word recognition immediately after training, and might therefore have been integrated into the lexicon.

Fig. 1.

Proportions of trials on which participants fixated the target (panel a), the picture for a word sharing the target’s initial letter (panel b), and either of the unrelated objects (panel c) at each 4-ms time slice, as a function of splice condition

To evaluate the effect of splice condition statistically, we computed the average proportion of fixations to the target between 600 and 1,600 ms post-stimulus-onset³ and then transformed it using the empirical logit transformation. This was our dependent variable in linear mixed-effects models using contrast codes similar to those in our RT analyses. As before, we conducted three analyses. First, we left out the newly learned items to examine competition between known words. Second, we looked at the effect of training by comparing the untrained-nonword to the trained-nonword splice condition. Finally, the third model compared the other-word splice and trained-nonword splice conditions. For all three models, the most complex random-effects structure supported by the data was the one with only random intercepts for subjects and items.
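The dependent variable described above can be sketched in two steps: count the 4-ms samples on the target within the 600 to 1,600 ms window, then apply an empirical logit. The text does not state which variant of the transformation was used, so the form below, ln((y + 0.5) / (n − y + 0.5)), is an assumption (it is a commonly used version), as are the inclusive window boundaries, the function names, and the example trial.

```python
import math


def empirical_logit(n_target, n_total):
    """Empirical logit of n_target hits out of n_total samples.

    Assumed form: ln((y + 0.5) / (n - y + 0.5)). The 0.5 keeps the value
    finite when the proportion is exactly 0 or 1.
    """
    return math.log((n_target + 0.5) / (n_total - n_target + 0.5))


def target_samples_in_window(samples, start_ms=600, end_ms=1600):
    """Count on-target and total samples inside the analysis window.

    samples: iterable of (time_ms, on_target) pairs, one per 4-ms slice.
    """
    in_window = [on_target for t, on_target in samples if start_ms <= t <= end_ms]
    return sum(in_window), len(in_window)


# Hypothetical trial: gaze reaches the target at 800 ms and stays there.
samples = [(t, t >= 800) for t in range(0, 2001, 4)]
n_target, n_total = target_samples_in_window(samples)
print(n_target, n_total, empirical_logit(n_target, n_total))
```

Unlike the raw proportion, the transformed value is unbounded, which makes it better suited to a linear model when proportions sit near 0 or 1.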

Our first analysis showed that participants looked marginally more to the target in the matching than in the untrained-nonword splice condition, F(1, 1536.7) = 3.36, p = .067. Therefore, participants were somewhat sensitive to the subphonemic mismatch. Target fixations were significantly higher in the untrained-nonword than in the other-word splice condition, F(1, 1533.9) = 16.04, p < .001, replicating the inhibition between known words reported by Dahan et al. (2001) and Kapnoula et al. (2015).

In the second analysis, we examined the effect of training and found that participants made fewer fixations to the target in the trained-nonword than in the untrained-nonword splice condition, F(1, 997.6) = 11.26, p < .001. Given that the training and testing voices differed in gender, this offers strong evidence that the novel lexical representations were abstract enough immediately after training to interfere with the recognition of words spoken by a different talker. Finally, in a third model the trained-nonword splice condition did not differ significantly from the other-word splice condition, F < 1 (as in the RT analyses).⁴

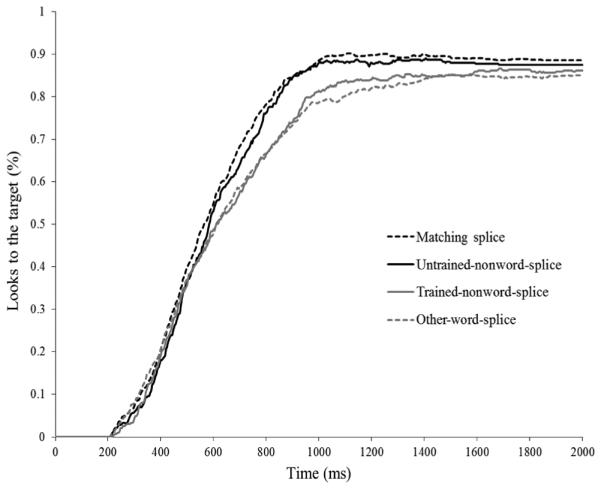

One concern was that during training, participants were exposed not only to the voice of our speaker, but also to the sound of their own voice producing the words, since they had to say the nonwords during both tasks. This could have led to stored exemplars of the novel words in both the trained voice and the participant’s own voice. The training voice was female, and the testing voice was male, making this particularly problematic for male participants; if male participants had episodic representations of the new words in their own voice, they might show abstraction effects, despite an episodic mode of encoding. In contrast, we might expect a weaker and possibly nonexistent interference effect for female participants, since their own voice would be dissimilar to the testing voice, preventing newly learned words from being activated sufficiently to interfere with the familiar words. This predicts a significant training-by-gender interaction, with weaker or no evidence for inhibition among female participants. In contrast to this prediction, Fig. 2 shows that female participants exhibited robust effects of splice condition. To test this statistically, we added gender and Gender × Competition as fixed effects to the second model (comparing the trained-nonword and untrained-nonword splice conditions). The main effect of gender was not significant, F(1, 52.21) = 2.38, p = .129, nor was the Gender × Competition interaction, F < 1.

Fig. 2.

Proportions of trials on which only female participants fixated the target at each 4-ms time slice, as a function of splice condition

Discussion

Our goal was to test whether interference effects from newly learned words immediately after training (Kapnoula et al., 2015) involve episodic or lexicalized representations. We used the same design as Kapnoula et al. (2015), but with different talkers for the training and test stimuli. If participants have only episodic memory traces of the new items, then competition effects should be minimized when the familiar words at test are presented in an acoustically dissimilar voice. In contrast to this prediction, we once again found robust evidence for competition between newly learned and previously known words immediately after training.

This contradicts prior work suggesting that such inhibition can only arise with sleep (Davis, Di Betta, Macdonald, & Gaskell, 2009; Dumay & Gaskell, 2007, 2012; but see Fernandes, Kolinsky, & Ventura, 2009; Lindsay & Gaskell, 2013), and extends the study of Kapnoula et al. (2015) to show that relatively talker-invariant representations engage in this very immediate competition. One of the most important differences between our studies and the prior work on consolidation is our use of manipulated (cross-spliced) stimuli coupled with the visual world paradigm to evaluate interlexical inhibition between specific pairs of words. By experimentally enhancing activation for the competing word (with the cross-splicing) and focusing our testing on the target activation (with the visual world paradigm), our approach permits a more direct measure of interlexical inhibition than have prior techniques, such as lexical decision and pause detection, in which inhibition must be inferred from processing costs (RTs; see Kapnoula et al., 2015, for a longer discussion of the differences between our paradigm and previous studies). This may have allowed us to detect an interference effect immediately after training when other tasks have not consistently done so.

This is not to suggest that consolidation plays no role in word learning; the evidence is clear and convincing in that regard (McMurray, Kapnoula, & Gaskell, in press). Our study, however, suggests that consolidation may not be a necessary condition for such inhibitory links to be formed. Consequently, the role of consolidation may be to strengthen, stabilize, and/or make more efficient connections that have already been established during online learning (see Takashima, Bakker, van Hell, Janzen, & McQueen, 2014, for fMRI evidence for this; and McMurray et al., in press, for a theoretical proposal). Related to this, it remains to be seen how our effects may change with time (they could decay or be consolidated) and with offline consolidation. That said, our findings provide strong evidence that even though consolidation clearly has a role to play, interference (and thus integration) can be observed without it.

Our results may seem contradictory to previously reported indexical effects on word recognition. These studies have shown poorer memory and recognition for familiar and novel words when there is a mismatch between the voices used in training and testing (Brown & Gaskell, 2014; Creel et al., 2008, Exp. 2), whereas we showed robust interference both when the talker voices matched (Kapnoula et al., 2015) and when they did not (present study). However, our study focused on interference and integration and was not designed to detect indexical effects. There may very well be indexical effects that we failed to observe, and we are not making strong claims that either immediate or integrated lexical representations are fully abstract with respect to talker voice. Rather, our work suggests that novel words are abstract enough to be activated across changes in talkers and to show interference with familiar words.

These results are important for four reasons. First, they provide strong evidence that early competition effects from unconsolidated words, such as those reported by Kapnoula et al. (2015), do not rely on episodic memory traces; rather, they are most likely to be based on adequately integrated lexical representations. This verifies the hypothesis that newly learned words do not need to be consolidated over a long period of time, involving sleep, in order to become integrated within the lexical system, even though consolidation clearly alters (in some way) the way that newly learned words are processed. Second, our findings suggest that from the earliest moments, newly learned words are not only lexicalized, but have some degree of talker invariance or abstraction. Third, consistent with Gaskell and Dumay (2003; Dumay & Gaskell, 2007) and Bakker et al. (2014), they suggest that inhibition among words may take place among more abstract word-form representations, since none of our newly learned items had semantic representations.

Finally, these findings inform our understanding of the lexicalization of newly acquired words. Bakker et al. (2014) reported gradual development of lexicalization, with an initial stage supporting only intramodal competition (for orthographically learned words), and a subsequent stage in which cross-modal competition arises. The delay of the cross-modal competition effect (as compared to the intramodal one) raises two important points: (1) lexicalization may be a gradual, not an all-or-nothing process, and (2) competition can arise before full (i.e., amodal) lexicalization has been achieved. Our findings not only provide strong evidence for both of these points, but they also extend Bakker et al.’s results by showing that some form of early, preconsolidation lexicalization is also sufficient to yield competition effects.

These results also have important implications for the two-stage model of learning. They suggest that even early (preconsolidated) representations can abstract across talker voices. Considering that indexical effects on word recognition and memory can also persist for several days or longer, this suggests that episodic or abstract encodings of words are not unique signatures of a particular stage of learning and may be fundamental to lexical encoding at every stage (Goldinger, 1998). That being acknowledged, we do not dismiss the wide array of results showing changes in lexical processing with (overnight) consolidation (Bakker et al., 2014; Dumay & Gaskell, 2007, 2012; Tamminen et al., 2010). Our results are not incompatible with the possibility of multiple learning systems, and it is clear that many domains of learning are supported by both hippocampal and nonhippocampal systems (Ashby, Alfonso-Reese, Turken, & Waldron, 1998; Duff, Hengst, Tranel, & Cohen, 2006; Ullman & Pierpont, 2005). That is, even though the integration of newly learned words starts immediately after exposure, it may also be further strengthened by a slow consolidation process (see also Takashima et al., 2014).

Thus, our data do not speak to the complementary-learning-systems proposal; they do, however, suggest that it should not be conceptualized as a two-stage model. If there are two systems, they may not be well distinguished by what is learned (e.g., episodic vs. abstract encoding, or isolated vs. integrated word forms). Both systems may be “striving” for a complete lexical representation (including both episodic and abstractionist components). Instead, they may differ in other factors, such as the timescale over which learning occurs, or the mechanisms by which they improve the lexical pathways that support automatic word recognition.

Acknowledgments

The authors thank Gareth Gaskell and Prahlad Gupta for helpful discussions leading up to this study, Stephanie Packard for assistance with stimulus development, Hannah Rigler and Marcus Galle for recording the stimuli, Jamie Klein and the students of the MACLab for assistance with data collection, and Ira Hazeltine for showing us the true meaning of competition. This project was supported by Grant Number DC008089 awarded to B.M.

Footnotes

When coda clusters were used (CVCC words), the first consonant in the coda was always an approximant.

Note that the VWP stimuli were the same as those used in Kapnoula et al. (2015).

The stem (i.e., presplice sequence) of the experimental stimuli had an average duration of ~400 ms. To account for the additional 200 ms needed to plan an eye movement, we chose 600 ms as the onset of the time window used for our analyses. To choose the time window offset, we took into account participants’ RTs: The average participant RT was ~1,330 ms; however, some participants had an average RT of ~1,050 ms, and others had averages as high as ~2,000 ms. Therefore, we used a broad time window to ensure that we captured the effects in both fast and slow participants.
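The onset arithmetic in this footnote can be illustrated with a minimal sketch. The function names, the sample times, and the offset value in the usage note are hypothetical and chosen only for illustration; this is not the analysis code used in the study.

```python
STEM_DURATION_MS = 400      # approximate average presplice duration
OCULOMOTOR_DELAY_MS = 200   # time allowed for planning an eye movement


def window_onset(stem_ms=STEM_DURATION_MS, delay_ms=OCULOMOTOR_DELAY_MS):
    """Earliest time at which fixations can reflect postsplice input."""
    return stem_ms + delay_ms


def in_window(sample_times_ms, onset_ms, offset_ms):
    """Keep only the sample times that fall inside [onset_ms, offset_ms)."""
    return [t for t in sample_times_ms if onset_ms <= t < offset_ms]
```

For example, `in_window(times, window_onset(), offset_ms)` would retain fixation samples from 600 ms up to whatever offset is chosen; the offset is left as a parameter because its value is not stated in this footnote.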

We acknowledge that interference from newly learned words could temporarily be boosted due to the recent exposure to the novel words during training, thus possibly creating an advantage for the trained-nonword splice over the other-word splice. That is, the lack of a difference between these two conditions might not reflect that the trained nonwords were fully word-like. On the other hand, familiar words would have a lifelong advantage over the newly learned words. More importantly, our argument rests primarily on the significant difference between splice conditions, not on the nonsignificant difference between trained and familiar words.

References

- Apfelbaum K, Blumstein SE, McMurray B. Semantic priming is affected by real-time phonological competition: Evidence for continuous cascading systems. Psychonomic Bulletin & Review. 2011;18:141–149. doi:10.3758/s13423-010-0039-8.

- Ashby FG, Alfonso-Reese LA, Turken AU, Waldron EM. A neuropsychological theory of multiple systems in category learning. Psychological Review. 1998;105:442–481. doi:10.1037/0033-295X.105.3.442.

- Bakker I, Takashima A, van Hell JG, Janzen G, McQueen JM. Competition from unseen or unheard novel words: Lexical consolidation across modalities. Journal of Memory and Language. 2014;73:116–130.

- Bates D, Maechler M, Dai B. lme4: Linear mixed-effects models using S4 classes (version 1.1-7) [Software]. 2009. Retrieved from http://CRAN.R-Project.org/package=lme4.

- Borovsky A, Elman JL, Kutas M. Once is enough: N400 indexes semantic integration of novel word meanings from a single exposure in context. Language Learning and Development. 2012;8:278–302. doi:10.1080/15475441.2011.614893.

- Borovsky A, Kutas M, Elman J. Learning to use words: Event-related potentials index single-shot contextual word learning. Cognition. 2010;116:289–296. doi:10.1016/j.cognition.2010.05.004.

- Bradlow AR, Nygaard LC, Pisoni DB. Effects of talker, rate, and amplitude variation on recognition memory for spoken words. Perception & Psychophysics. 1999;61:206–219. doi:10.3758/BF03206883.

- Brown H, Gaskell MG. The time-course of talker-specificity and lexical competition effects during word learning. Language, Cognition and Neuroscience. 2014;29:1163–1179.

- Creel S, Aslin R, Tanenhaus M. Heeding the voice of experience: The role of talker variation in lexical access. Cognition. 2008;106:633–664. doi:10.1016/j.cognition.2007.03.013.

- Dahan D, Magnuson JS, Tanenhaus MK, Hogan EM. Subcategorical mismatches and the time course of lexical access: Evidence for lexical competition. Language & Cognitive Processes. 2001;16:507–534. doi:10.1080/01690960143000074.

- Davis MH, Di Betta AM, Macdonald MJE, Gaskell MG. Learning and consolidation of novel spoken words. Journal of Cognitive Neuroscience. 2009;21:803–820. doi:10.1162/jocn.2009.21059.

- Davis MH, Gaskell MG. A complementary systems account of word learning: Neural and behavioural evidence. Philosophical Transactions of the Royal Society B. 2009;364:3773–3800. doi:10.1098/rstb.2009.0111.

- Duff MC, Hengst J, Tranel D, Cohen NJ. Development of shared information in communication despite hippocampal amnesia. Nature Neuroscience. 2006;9:140–146. doi:10.1038/nn1601.

- Dumay N, Damian MF, Bowers JS. The impact of neighbour acquisition on phonological retrieval. 53rd Annual Meeting of the Psychonomic Society; Minneapolis, MN. 2012. p. 62.

- Dumay N, Gaskell MG. Sleep-associated changes in the mental representation of spoken words. Psychological Science. 2007;18:35–39. doi:10.1111/j.1467-9280.2007.01845.x.

- Dumay N, Gaskell MG. Overnight lexical consolidation revealed by speech segmentation. Cognition. 2012;123:119–132. doi:10.1016/j.cognition.2011.12.009.

- Fernandes T, Kolinsky R, Ventura P. The metamorphosis of the statistical segmentation output: Lexicalization during artificial language learning. Cognition. 2009;112:349–366. doi:10.1016/j.cognition.2009.05.002.

- Gaskell MG, Dumay N. Lexical competition and the acquisition of novel words. Cognition. 2003;89:105–132. doi:10.1016/S0010-0277(03)00070-2.

- Goldinger SD. Words and voices: Episodic traces in spoken word identification and recognition memory. Journal of Experimental Psychology: Learning, Memory, and Cognition. 1996;22:1166–1183. doi:10.1037/0278-7393.22.5.1166.

- Goldinger SD. Echoes of echoes? An episodic theory of lexical access. Psychological Review. 1998;105:251–279. doi:10.1037/0033-295X.105.2.251.

- Kapnoula EC, Packard S, Gupta P, McMurray B. Immediate lexical integration of novel word forms. Cognition. 2015;134:85–99. doi:10.1016/j.cognition.2014.09.007.

- Kuznetsova A, Brockhoff PB, Christensen RHB. lmerTest: Tests in linear mixed effects models (R Package Version 2.0-11). 2013. Retrieved from http://cran.r-project.org/web/packages/lmerTest/index.html.

- Leach L, Samuel AG. Lexical configuration and lexical engagement: When adults learn new words. Cognitive Psychology. 2007;55:306–353. doi:10.1016/j.cogpsych.2007.01.001.

- Lindsay S, Gaskell MG. Lexical integration of novel words without sleep. Journal of Experimental Psychology: Learning, Memory, and Cognition. 2013;39:608–622. doi:10.1037/a0029243.

- Luce PA, Pisoni DB. Recognizing spoken words: The neighborhood activation model. Ear and Hearing. 1998;19:1–36. doi:10.1097/00003446-199802000-00001.

- Marslen-Wilson W, Warren P. Levels of perceptual representation and process in lexical access: Words, phonemes, and features. Psychological Review. 1994;101:653–675. doi:10.1037/0033-295X.101.4.653.

- Mattys SL, Clark JH. Lexical activity in speech processing: Evidence from pause detection. Journal of Memory and Language. 2002;47:343–359.

- McMurray B, Kapnoula EC, Gaskell MG. Learning and integration of new word-forms: Consolidation, pruning and the emergence of automaticity. In: Gaskell MG, Mirković J, editors. Speech perception and spoken word recognition. Psychology Press; in press.

- McMurray B, Samelson VM, Lee SH, Tomblin JB. Individual differences in online spoken word recognition: Implications for SLI. Cognitive Psychology. 2010;60:1–39. doi:10.1016/j.cogpsych.2009.06.003.

- McQueen JM, Norris D, Cutler A. Competition in spoken word recognition: Spotting words in other words. Journal of Experimental Psychology: Learning, Memory, and Cognition. 1994;20:621–638. doi:10.1037/0278-7393.20.3.621.

- Palmeri TJ, Goldinger SD, Pisoni DB. Episodic encoding of voice attributes and recognition memory for spoken words. Journal of Experimental Psychology: Learning, Memory, and Cognition. 1993;19:309–328. doi:10.1037/0278-7393.19.2.309.

- R Development Core Team. R: A language and environment for statistical computing. R Foundation for Statistical Computing; Vienna, Austria: 2009. Retrieved from www.R-project.org.

- Takashima A, Bakker I, van Hell JG, Janzen G, McQueen JM. Richness of information about novel words influences how episodic and semantic memory networks interact during lexicalization. NeuroImage. 2014;84:265–278. doi:10.1016/j.neuroimage.2013.08.023.

- Tamminen J, Payne JD, Stickgold R, Wamsley EJ, Gaskell MG. Sleep spindle activity is associated with the integration of new memories and existing knowledge. Journal of Neuroscience. 2010;30:14356–14360. doi:10.1523/JNEUROSCI.3028-10.2010.

- Ullman MT, Pierpont EI. Specific language impairment is not specific to language: The procedural deficit hypothesis. Cortex. 2005;41:399–433. doi:10.1016/s0010-9452(08)70276-4.