Abstract

Background and Objective

Probabilistic topic models provide an unsupervised method for analyzing unstructured text. These models discover semantically coherent combinations of words (topics) that could be integrated into a clinical automatic summarization system for primary care physicians performing chart review. However, the human interpretability of topics discovered from clinical reports is unknown. Our objective is to assess the coherence of topics and their ability to represent the contents of clinical reports from a primary care physician’s point of view.

Methods

Three latent Dirichlet allocation models (50 topics, 100 topics, and 150 topics) were fit to a large collection of clinical reports. Topics were manually evaluated by primary care physicians and graduate students. Wilcoxon Signed-Rank Tests for Paired Samples were used to evaluate differences between topic models, while differences in performance between students and primary care physicians (PCPs) were tested using Mann-Whitney U tests for each of the tasks.

Results

While the 150-topic model produced the best log likelihood, participants were most accurate at identifying words that did not belong in topics learned by the 100-topic model, suggesting that 100 topics provides the most appropriate granularity of discovered semantic themes for the data set used in this study. Models were comparable in their ability to represent the contents of documents. Primary care physicians significantly outperformed students in both tasks.

Conclusion

This work establishes a baseline of interpretability for topic models trained with clinical reports, and provides insights on the appropriateness of using topic models for informatics applications. Our results indicate that PCPs find discovered topics more coherent and representative of clinical reports relative to students, warranting further research into their use for automatic summarization.

Keywords: Topic modeling, Primary care, Clinical reports

Introduction

The primary care physician’s (PCP) role is to deliver comprehensive care to their patients. Irrespective of the complexity of a patient’s medical history, the PCP is responsible for organizing and understanding relevant problems to make informed decisions regarding care. Unfortunately, PCPs have high time demands, with a large portion of time involved in indirect patient care (reading, writing, and searching for data in support of patient care) [1]. While the use of automated tools and overviews/summaries of patient records has been studied to facilitate this time-consuming process, efforts have been limited to a narrow range of tasks and basic, superficial temporal representations [2–6]. As physicians continue to struggle with how much control they have over their time to deliver an increasing number of services and patient-centered care in managerially driven organizations, they would benefit from utilities that expedite the medical chart review process by providing meaningful automated summarization that assists in answering clinical questions [7]. The development of a model that captures the expression of key concepts could help alleviate some of the time burden felt by PCPs.

Automatic summarization of documents is an active area of research, both in general [8, 9] and specifically in the clinical domain [10]. A key component of developing an automatic summarization system is finding concept similarity, which represents abstract connections between different words beyond their usage and meaning [10]. In small, well-defined domains, ontology-based methods have been shown to work well [11, 12], but concept similarity remains an open problem in broader domains and has generally not been incorporated into most summarization systems [10]. Topic modeling is a method designed for identifying such abstract connections, so it could potentially be leveraged to achieve concept similarity for summarization systems in the clinical domain.

Probabilistic topic models for language have been widely explored in the literature as unsupervised, generative methods for quantitatively characterizing unstructured free-text with semantic topics. These models have been largely discussed for general corpora (e.g., newspaper articles), and have been developed for many uses, including word-sense disambiguation [13], topic correlation [14], learning information hierarchies [15], and tracking themes over time [16, 17]. In the biomedical domain, work has investigated the use of topic models to evaluate the impact of copy-and-pasted text on topic learning [18], to better understand and predict Medical Subject Headings (MeSH) applied to PubMed articles [19], and to explore the correlation between Food and Drug Administration (FDA) research priorities and topics in research articles funded under those priorities [20]. Recently, topic models have been employed in the clinical domain in problems such as case-based retrieval [21]; characterizing clinical concepts over time [22]; and predicting patient satisfaction [23], depression [24], infection [25], and mortality [26]. Additional work has used topic modeling methods to search for relationships between themes discovered in clinical notes and underlying patient genetics [27].

Exposing topics directly to PCPs through an integrated visualization is a possible mechanism for automatic summarization and information filtering of clinical records [28]. However, such a system would require that topics are human-interpretable and accurately reflect the contents of the medical record. While there has been work to evaluate the interpretability of topic models for general text collections [29, 30], no work has investigated the ability of a topic model to extract human-interpretable topics from clinical free-text. Clinical documents pose additional challenges in that they contain specialized information that requires significant training and experience to understand. As a result, using lay people as evaluators is probably insufficient for a clinical topic model as they would underperform due to a lack of domain knowledge rather than a lack of topic coherence.

In this paper, we present such an evaluation and compare the results of a topic model at several levels of granularity as interpreted by PCPs and lay people. While previous studies have had physicians evaluate topics from clinical text [24], to the best of our knowledge, no work has sought to compare topic interpretability between target users (PCPs) and baseline lay persons as a method for evaluating the ability of a topic model to capture specialized themes. Our goal is to establish that a basic topic model is capable of discovering coherent topics that are representative of clinical reports.

Background

Seminal work in exploring latent semantics in free-text includes latent semantic indexing (LSI) [31], which applies singular value decomposition (SVD) to a weighted term-document matrix to arrive at a lower-rank factorization that can be used to compare the similarity of terms or documents. Through the contextual co-occurrence patterns of words in the matrix, the technique can overcome the problems of synonymy and polysemy. Probabilistic LSI (PLSI) [32] models the joint distribution of documents and words using a set of latent classes. Each document is represented as a mixture model of latent classes (“topics”) that are defined as multinomial distributions over words. Thus, generating a word requires selecting a latent class based on its proportion in the document and then sampling a word from that latent class’s word distribution. The model is fit using the Expectation Maximization (EM) algorithm [33].

Latent Dirichlet allocation (LDA) [34] is a bag-of-words model that is similar to PLSI in that documents are mixtures of word-generating topics. However, LDA goes a step further and places a Dirichlet prior on each document’s topic distribution, yielding a full generative model for document-topic mixtures. Under LDA, each document’s multinomial topic mixture is drawn from a Dirichlet distribution; to generate each word, a topic is sampled from that mixture, and the word is then drawn from the selected topic’s multinomial distribution over words. The inclusion of a Dirichlet prior has the benefit of mitigating overfitting, which is a limitation of PLSI [18].
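The generative story above can be sketched in a few lines of Python. This is a minimal illustration rather than the authors’ code: the function names, the toy topic-word matrix `phi`, and the hyperparameter values are assumptions chosen for exposition. The Dirichlet draw for `theta` is what distinguishes LDA from PLSI, where a document’s topic mixture is a fixed per-document parameter rather than a random variable.

```python
import random


def sample_dirichlet(alpha, rng):
    """Draw one sample from Dirichlet(alpha) by normalizing independent Gamma(alpha_k, 1) draws."""
    draws = [rng.gammavariate(a, 1.0) for a in alpha]
    total = sum(draws)
    return [d / total for d in draws]


def sample_categorical(probs, rng):
    """Return index k with probability proportional to probs[k]."""
    return rng.choices(range(len(probs)), weights=probs, k=1)[0]


def generate_document(phi, alpha, length, rng):
    """Generate one bag-of-words document under the LDA generative process.

    phi    -- list of topics; phi[k][w] is the probability of word w under topic k
    alpha  -- Dirichlet hyperparameters for the document's topic mixture
    length -- number of tokens to generate
    """
    theta = sample_dirichlet(alpha, rng)      # document-level topic mixture (fixed in PLSI)
    words = []
    for _ in range(length):
        k = sample_categorical(theta, rng)                 # choose a topic for this token
        words.append(sample_categorical(phi[k], rng))      # draw a word from that topic
    return theta, words


# Toy example: two hypothetical topics over a four-word vocabulary.
rng = random.Random(42)
phi = [[0.45, 0.45, 0.05, 0.05], [0.05, 0.05, 0.45, 0.45]]
theta, doc = generate_document(phi, alpha=[0.5, 0.5], length=12, rng=rng)
```

Fitting LDA runs this story in reverse: given only the words, inference recovers plausible values of `theta` for each document and `phi` for each topic.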

Topic evaluation

LDA models are typically evaluated by computing the likelihood that a held-out document set was generated by a model fit to training documents [35]. Such likelihood metrics are objective and generalize well to different model configurations and data collections. In addition, they provide performance feedback during parameter fitting. Indirect evaluation of topic models may be performed in combination with a classifier, such as a support vector machine (SVM) model, trained on topic-model-generated features for a particular task.

While the above evaluations inform how well a topic model fits the data (under model assumptions) and the utility of learned topics for subsequent tasks, they do not explicitly measure the human interpretability of discovered topics. Judging the human interpretability of topics is more challenging, as each person may have different notions of how words interrelate and the meaning they convey. Such subjectivity makes the direct use of topics difficult, as it is uncertain whether users will interpret them to mean the same thing when using a system. There has been some work to explore the quality of the semantic representations discovered by topic models [15]. Notably, [29] used Amazon’s Mechanical Turk, an online service to scale task completion by human workers [36], to compare interpretability across several models using two tasks: word intrusion and topic intrusion. These tasks measure a topic model’s ability to generate coherent topics and representative document topic mixtures.

Methods

Data Collection

Our data collection consisted of medical reports for patients with brain cancer, lung cancer, or acute ischemic stroke, collected by identifying patients from an IRB-approved disease-coded research database. In total, the data set consisted of 936 patients, with a total of 84,201 medical reports. As our use case of interest for the topic models was to support an automatic summarization system for PCPs, we filtered this collection by report type to select those reports composed primarily of uncontrolled free-text that summarize a patient’s episode of care. When evaluating a new patient, PCPs perform a process of information summarization through chart review using these types of reports, selectively drilling down to more specific information when needing to address a specific clinical question. We therefore selected Progress Notes, Consultation Notes, History and Physicals (H&Ps), Discharge Summaries, and Operative Reports/Procedures/Post-op Notes from our total set of reports, resulting in 20,161 reports from 924 patients (12 patients did not have a report of interest) that we used to fit topic models. The median number of reports per patient was 13; the minimum was one report and the maximum was 260 reports. These reports were preprocessed to remove punctuation, stop words, words that occurred in fewer than five documents, and words that occurred in every document. Protected health information (PHI) and numbers were also removed automatically using regular expressions. The resulting dataset consisted of 17,993 unique tokens and 5,820,160 total tokens.
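The document-frequency filtering described above can be sketched as follows. This is a hypothetical illustration, not the study’s pipeline: PHI scrubbing rules and the stop-word list are site-specific and are not reproduced, and the `[a-z]+` tokenizer (which drops punctuation and numbers in one step) and the tiny stop list in the example are assumptions.

```python
import re
from collections import Counter


def preprocess(documents, stop_words, min_doc_freq=5):
    """Tokenize reports and keep only tokens that pass document-frequency filters.

    Tokens are lowercase alphabetic runs (an assumed tokenizer), which drops
    punctuation and numbers. A token is kept if it is not a stop word, appears
    in at least `min_doc_freq` documents, and does not appear in every document.
    Returns (filtered token lists, vocabulary set).
    """
    tokenized = [re.findall(r"[a-z]+", doc.lower()) for doc in documents]
    doc_freq = Counter()
    for tokens in tokenized:
        doc_freq.update(set(tokens))          # count each token once per document
    n_docs = len(documents)
    vocab = {
        tok for tok, df in doc_freq.items()
        if tok not in stop_words and min_doc_freq <= df < n_docs
    }
    filtered = [[tok for tok in tokens if tok in vocab] for tokens in tokenized]
    return filtered, vocab


# Hypothetical toy corpus: "patient" occurs in every report, "lobe" in only one.
docs = ["The patient is stable 120"] * 5 + ["The patient has a lobe mass."]
filtered, vocab = preprocess(docs, stop_words={"the", "is", "a", "has"})
```

Here only "stable" survives: "patient" is removed for appearing in every report, and "lobe", "mass", and the number are removed by the frequency threshold and the tokenizer.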

Topic Models

In this work, we sought to establish a baseline level of topic interpretability using a general form of topic model, LDA. To compare the interpretability of LDA topics with differing degrees of granularity, three models were fit: one with 50 topics, one with 100 topics, and one with 150 topics. Models were fit with 4,000 iterations of Gibbs sampling using MALLET software [37], which was configured to use hyperparameter optimization, a 200-iteration burn-in period, and a lag of 10.
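To make the role of Gibbs sampling concrete, the sketch below implements a generic collapsed Gibbs sampler for LDA in plain Python. It is an illustration under stated assumptions, not a reproduction of the MALLET configuration: it uses fixed symmetric priors (`alpha=0.1`, `beta=0.01`, assumed values) instead of hyperparameter optimization, and it interprets burn-in and lag in the generic sense of discarding early iterations and then keeping one sample every `lag` iterations. MALLET’s own options may apply these settings differently (for example, to the schedule of hyperparameter optimization).

```python
import random


def gibbs_lda(docs, n_topics, vocab_size, iterations, burn_in, lag,
              alpha=0.1, beta=0.01, seed=0):
    """Collapsed Gibbs sampling for LDA with fixed symmetric priors.

    docs -- list of documents, each a list of word indices in [0, vocab_size)
    Returns topic-word distributions averaged over the samples retained
    after `burn_in` iterations, keeping one sample every `lag` iterations.
    """
    if iterations - burn_in < lag:
        raise ValueError("no samples would be retained after burn-in")
    rng = random.Random(seed)
    ndk = [[0] * n_topics for _ in docs]                # document-topic counts
    nkw = [[0] * vocab_size for _ in range(n_topics)]   # topic-word counts
    nk = [0] * n_topics                                 # tokens assigned to each topic
    z = []
    for d, doc in enumerate(docs):                      # random initial assignments
        zd = [rng.randrange(n_topics) for _ in doc]
        for w, k in zip(doc, zd):
            ndk[d][k] += 1
            nkw[k][w] += 1
            nk[k] += 1
        z.append(zd)

    phi_sum = [[0.0] * vocab_size for _ in range(n_topics)]
    n_samples = 0
    for it in range(1, iterations + 1):
        for d, doc in enumerate(docs):
            for i, w in enumerate(doc):
                k = z[d][i]                             # remove this token from the counts
                ndk[d][k] -= 1
                nkw[k][w] -= 1
                nk[k] -= 1
                # Full conditional P(z = t | all other assignments), up to a constant.
                weights = [(ndk[d][t] + alpha) * (nkw[t][w] + beta)
                           / (nk[t] + vocab_size * beta) for t in range(n_topics)]
                k = rng.choices(range(n_topics), weights=weights, k=1)[0]
                z[d][i] = k                             # add it back under the new topic
                ndk[d][k] += 1
                nkw[k][w] += 1
                nk[k] += 1
        if it > burn_in and (it - burn_in) % lag == 0:  # retain a thinned sample
            for t in range(n_topics):
                denom = nk[t] + vocab_size * beta
                for w in range(vocab_size):
                    phi_sum[t][w] += (nkw[t][w] + beta) / denom
            n_samples += 1
    return [[v / n_samples for v in row] for row in phi_sum]


# Hypothetical toy corpus with two clearly separated word groups.
toy_docs = [[0, 1, 0, 1], [2, 3, 2, 3], [0, 1, 1, 0], [3, 2, 3, 2]]
phi = gibbs_lda(toy_docs, n_topics=2, vocab_size=4, iterations=50, burn_in=20, lag=10)
```

Averaging `phi` across retained samples assumes topic labels stay stable within a single chain; a production sampler would also optimize the priors, as the MALLET configuration above does.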

Model Likelihood

To compare goodness-of-fit across models, the document collection was randomly split, with 80% of all documents used for training and 20% held out for testing. After training, the held-out log-likelihood was computed for each model using “left-to-right” sampling [35], which estimates $P(\mathbf{W}_{\text{test}} \mid \alpha, \beta)$. Resulting likelihoods were normalized by the number of tokens in their respective set. The split into train and test sets was used only to compute predictive likelihood for comparison across models; the subsequent word and topic intrusion tasks were performed using models fit to all documents.
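A compact version of left-to-right sampling [35] is sketched below, followed by the per-token normalization mentioned above. It is a simplified illustration, assuming the trained topic-word distributions `phi` and per-topic hyperparameters `alpha` are held fixed; the particle count of 20 and the function names are illustrative choices. For each token, every particle first resamples the topics of the tokens already seen, then adds the predictive probability of the current word, and finally samples a topic for it.

```python
import math
import random


def left_to_right_log_likelihood(doc, phi, alpha, n_particles=20, seed=0):
    """Estimate log P(doc | phi, alpha) with left-to-right sequential sampling.

    doc   -- list of word indices
    phi   -- fixed topic-word distributions; phi[t][w] = P(w | topic t)
    alpha -- Dirichlet hyperparameters, one per topic
    """
    rng = random.Random(seed)
    n_topics = len(phi)
    alpha_sum = sum(alpha)
    assignments = [[] for _ in range(n_particles)]            # z_<n for each particle
    counts = [[0] * n_topics for _ in range(n_particles)]     # topic counts in z_<n
    log_lik = 0.0
    for n, w in enumerate(doc):                # n = number of tokens already seen
        p_n = 0.0
        for z, c in zip(assignments, counts):
            for i in range(n):                 # resample earlier assignments
                c[z[i]] -= 1
                weights = [phi[t][doc[i]] * (c[t] + alpha[t]) for t in range(n_topics)]
                z[i] = rng.choices(range(n_topics), weights=weights, k=1)[0]
                c[z[i]] += 1
            # P(w_n | z_<n) = sum_t P(w_n | t) * P(t | z_<n, alpha)
            weights = [phi[t][w] * (c[t] + alpha[t]) / (n + alpha_sum)
                       for t in range(n_topics)]
            p_n += sum(weights)
            k = rng.choices(range(n_topics), weights=weights, k=1)[0]
            z.append(k)                        # extend this particle with z_n
            c[k] += 1
        log_lik += math.log(p_n / n_particles)
    return log_lik


def per_token_log_likelihood(docs, phi, alpha, **kwargs):
    """Total held-out log-likelihood divided by the number of held-out tokens."""
    total = sum(left_to_right_log_likelihood(d, phi, alpha, **kwargs) for d in docs)
    return total / sum(len(d) for d in docs)


# Sanity check with a single hypothetical topic: the estimate is exact.
single_topic = [[0.5, 0.25, 0.25]]
ll = left_to_right_log_likelihood([0, 1, 2, 0], single_topic, alpha=[1.0])
per_token = per_token_log_likelihood([[0, 1, 2, 0]], single_topic, alpha=[1.0])
```

With one topic, every particle assigns all tokens to that topic, so the estimate reduces to the sum of the word log-probabilities, which makes a convenient correctness check.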

Word Intrusion Task

To gauge the human interpretability of topics, we used the tasks of word intrusion and topic intrusion. In the word intrusion task, subjects view a list of six randomly ordered words and are asked to select the word that “does not belong.” Five of the words are the most probable words given the topic, and the sixth (the intruder) has a low probability in the topic. Intruders are selected by randomly choosing from the 20 least probable words in a topic, with the constraint that the selected word must also be among the five most probable words of a different topic. Table 1 shows five example word intrusion questions from each topic model. The precision of a topic model is then the fraction of subjects who correctly identify the intruding word. For model $m$ and topic $t$, let $w_t^m$ be the index of the intruding word and $i_{t,s}^m$ be the index of the intruder selected by subject $s$, with $S$ representing the total number of subjects. Model precision (MP) may then be calculated as:

$$\mathrm{MP}_t^m = \frac{1}{S} \sum_{s=1}^{S} \mathbb{1}\left(i_{t,s}^m = w_t^m\right)$$

where $\mathbb{1}(\cdot)$ equals 1 when its argument is true and 0 otherwise.

Table 1.

Top five words from five topics for each model and the random intruder word used in the word intrusion task.

| Model | Topic # | Top words | Intruder |
|---|---|---|---|
| 50 topics | 24 | intact, scan, mri, normal, ph | liver |
| | 26 | radiation, treatment, therapy, oncology, dose | bid |
| | 33 | allergies, disease, family, cancer, social | lobe |
| | 34 | lung, lobe, upper, lymph, surgery | abdominal |
| | 39 | prn, mca, bid, iv, daily | family |
| 100 topics | 2 | brain, ct, mca, infarct, mri | sodium |
| | 6 | assistance, daily, rehabilitation, mobility, activities | night |
| | 8 | trial, daily, bevacizumab, treatment, irinotecan | problems |
| | 23 | count, blood, sodium, potassium, hemoglobin | prostate |
| | 61 | femoral, lower, vascular, extremity, foot | recurrence |
| 150 topics | 3 | effusion, pleural, pericardial, daily, moderate | abdominal |
| | 11 | operation, placed, surgeon, incision, using | clot |
| | 28 | pain, emergency, room, episode, blood | cath |
| | 116 | liver, hepatitis, colon, cirrhosis, post | commands |
| | 118 | rate, exercise, stress, heart, normal | clear |
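The intruder-selection rule and the model precision score described for the word intrusion task can be sketched as below. The function names and the toy `phi`/`vocab` are hypothetical; in practice `phi` would be a trained model’s topic-word matrix with `n_low=20`. Ties in probability are broken by vocabulary order, which is an assumption of this sketch.

```python
import random


def make_intrusion_question(topic, phi, vocab, n_top=5, n_low=20, seed=0):
    """Build one word-intrusion question for `topic`.

    The intruder is drawn at random from the topic's `n_low` least probable
    words, restricted to words that rank among the `n_top` most probable
    words of at least one other topic. Returns (shuffled options, intruder).
    """
    rng = random.Random(seed)
    ranked = [sorted(range(len(vocab)), key=lambda w: row[w], reverse=True)
              for row in phi]
    top = ranked[topic][:n_top]
    top_elsewhere = {w for t, order in enumerate(ranked) if t != topic
                     for w in order[:n_top]}
    candidates = [w for w in ranked[topic][-n_low:]
                  if w in top_elsewhere and w not in top]
    if not candidates:
        raise ValueError("no eligible intruder for this topic")
    intruder = rng.choice(candidates)
    options = [vocab[w] for w in top] + [vocab[intruder]]
    rng.shuffle(options)                      # present the six words in random order
    return options, vocab[intruder]


def model_precision(selections, intruder):
    """MP for one topic: fraction of subjects who selected the true intruder."""
    return sum(choice == intruder for choice in selections) / len(selections)


# Hypothetical two-topic model over an eight-word vocabulary.
vocab = list("abcdefgh")
phi = [[0.30, 0.20, 0.15, 0.10, 0.10, 0.05, 0.05, 0.05],
       [0.02, 0.02, 0.02, 0.02, 0.02, 0.30, 0.30, 0.30]]
options, intruder = make_intrusion_question(0, phi, vocab, n_top=5, n_low=3)
mp = model_precision(["f", "f", "a", "g"], "f")
```

Requiring the intruder to be prominent in another topic keeps it from being trivially rare, so a correct choice reflects the coherence of the five top words rather than word frequency.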

Topic Intrusion Task

In the topic intrusion task, subjects are shown a report and four randomly ordered topics. Three of the topics are the most probable topics for the displayed report, and one of the topics has a low probability in the report (selected similarly to the intruder in the word intrusion task). Subjects are asked to select the topic that “does not belong” in the report. As seen in Table 2, each topic is displayed as its five most probable words (i.e., there are four lists, each with five words). For model $m$, let $\hat{\theta}_d^m$ represent the point estimate of the topic proportions vector for document $d$, let $j_{d,s}^m$ be the intruding topic selected by subject $s$, and let $j_{d,*}^m$ be the true intruding topic, with $S$ representing the total number of subjects. The topic log odds (TLO) may then be calculated as:

$$\mathrm{TLO}_d^m = \frac{1}{S} \sum_{s=1}^{S} \left( \log \hat{\theta}_{d,\,j_{d,*}^m}^m - \log \hat{\theta}_{d,\,j_{d,s}^m}^m \right)$$

Because the true intruder has the lowest probability of the four displayed topics, TLO is at most 0, with 0 reached when every subject identifies the intruder.

Table 2.

Topic intrusion task example. Participants are asked to pick the topic that does not belong in the accompanying report. In this example, topic 7 is the intruder.

Report (truncated):

The patient is a YY-year-old female seen for continuing care following aortic valve replacement. Today, she has had a low cardiac index although interestingly, she is not acidotic, is mentating well, and is making good urine without the use of diuretics. Thus, this appears to be acceptable for her. We have given the patient aggressive fluid resuscitation in order to help improve her left ventricular outflow tract obstruction. We have gingerly started a small dose of afterload reduction to see if this will help, although this may be problematic in the setting of LVOT obstruction. The patient will be observed carefully. We will likely be able to discontinue her Swan later on today or tomorrow. The patient has been extubated. We will obtain a swallowing…

Randomly ordered topics:

| Topic 93 | Topic 78 | Topic 7 | Topic 23 |
|---|---|---|---|
| pulmonary | clear | mca | count |
| status | rate | cx | blood |
| respiratory | extremities | artery | sodium |
| failure | abdomen | infarct | potassium |
| intubated | nontender | trach | hemoglobin |
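The topic log odds score can be computed as sketched below, following the definition used in the intrusion-task literature [29]: for each subject, the log proportion of the true intruding topic minus the log proportion of the topic the subject selected, averaged over subjects. The function name and the toy topic proportions are hypothetical.

```python
import math


def topic_log_odds(theta_d, true_intruder, selections):
    """TLO for one document and model.

    theta_d       -- point estimate of the document's topic proportions
    true_intruder -- index of the intruding topic shown to subjects
    selections    -- topic index chosen by each subject
    Each term is log theta[true] - log theta[selected]: 0 for a correct choice,
    and negative when a more probable topic is picked instead.
    """
    log_true = math.log(theta_d[true_intruder])
    return sum(log_true - math.log(theta_d[j]) for j in selections) / len(selections)


# Hypothetical proportions: topics 0-2 describe the report, topic 3 is the intruder.
theta_d = [0.5, 0.3, 0.15, 0.05]
all_correct = topic_log_odds(theta_d, 3, [3, 3])
one_wrong = topic_log_odds(theta_d, 3, [3, 2])
```

Because the penalty grows with the gap between the intruder and the chosen topic, mistaking the intruder for a weakly represented relevant topic costs less than mistaking it for the dominant one.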

Topic Evaluators

To detect whether the topic model is learning specialized clinical themes, we compared the performance of PCPs with that of baseline lay persons who had no formal training in medicine. PCPs and informatics students were therefore surveyed on the interpretability of topics from each of the three models using the word intrusion and topic intrusion tasks. For the word intrusion task, each subject evaluated 10 randomly selected topics from each model; for the topic intrusion task, each subject evaluated five randomly selected reports. Subjects were recruited via email, and evaluations were performed using a web interface, with each subject receiving identical instructions on how to complete the tasks.

Statistical Analysis

Model precision and topic log odds were averaged over trials and subjects. Additionally, the time taken to select an answer was recorded for each subject, and the median time was computed for each model size. For each task, performance across models was compared using Wilcoxon Signed-Rank Tests for Paired Samples with α = 0.05. Differences in performance between students and PCPs were tested using Mann-Whitney U tests for each of the tasks.
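The text does not state which software performed these tests. As a dependency-free sketch of what each test computes, the code below implements the Wilcoxon signed-rank test (paired scores, e.g., one subject’s results on two models) and the Mann-Whitney U test (independent groups, e.g., PCPs vs. students) using their normal approximations with tie corrections. This is an assumption-laden illustration: for samples as small as those in this study, exact p-values (as offered by, for example, SciPy’s `scipy.stats.wilcoxon` and `scipy.stats.mannwhitneyu`) can differ, so the sketch should not be used to reproduce the reported p-values.

```python
import math
from collections import Counter


def average_ranks(values):
    """1-based ranks; tied values receive the mean of the ranks they span."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return ranks


def two_sided_p(z):
    """Two-sided p-value for a standard normal test statistic."""
    return math.erfc(abs(z) / math.sqrt(2))


def wilcoxon_signed_rank(x, y):
    """Paired test. Returns (W+, p) via the tie-corrected normal approximation.

    Zero differences are discarded before ranking.
    """
    d = [a - b for a, b in zip(x, y) if a != b]
    n = len(d)
    if n == 0:
        raise ValueError("all paired differences are zero")
    ranks = average_ranks([abs(v) for v in d])
    w_plus = sum(r for r, v in zip(ranks, d) if v > 0)
    ties = sum(t ** 3 - t for t in Counter(abs(v) for v in d).values())
    var = n * (n + 1) * (2 * n + 1) / 24 - ties / 48
    z = (w_plus - n * (n + 1) / 4) / math.sqrt(var)
    return w_plus, two_sided_p(z)


def mann_whitney_u(x, y):
    """Independent-samples test. Returns (U for x, p) via the normal
    approximation with a tie-corrected variance and no continuity correction."""
    n1, n2 = len(x), len(y)
    pooled = list(x) + list(y)
    ranks = average_ranks(pooled)
    u = sum(ranks[:n1]) - n1 * (n1 + 1) / 2
    n = n1 + n2
    ties = sum(t ** 3 - t for t in Counter(pooled).values())
    var = n1 * n2 / 12 * ((n + 1) - ties / (n * (n - 1)))
    if var == 0:
        raise ValueError("all observations are identical")
    z = (u - n1 * n2 / 2) / math.sqrt(var)
    return u, two_sided_p(z)


# Hypothetical scores, for illustration only.
u, p_u = mann_whitney_u([1, 2, 3], [4, 5, 6])
w, p_w = wilcoxon_signed_rank([5, 6, 7, 8, 9], [1, 2, 3, 4, 5])
```

The paired test is appropriate across models because the same subjects rated every model, whereas PCPs and students are separate groups, which calls for the independent-samples test.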

Automated Topic Coherence

To investigate whether student and PCP judgments of interpretability correspond with automatic measures of topic coherence, we calculated the pointwise mutual information (PMI) for each topic. PMI has been used previously in evaluating topic models [30, 38] and measures the statistical dependence between observing two words in close proximity within a text collection. Following previous work, we analyzed the top 10 words within each topic and determined their co-occurrence using a sliding window of 10 words within the corpus of clinical reports. PMI is then calculated over the 10-word windows as follows:

$$\mathrm{PMI}(w_i, w_j) = \log \frac{P(w_i, w_j)}{P(w_i)\,P(w_j)}$$

where $P(w_i, w_j)$ is the probability that both words occur in the same window and $P(w_i)$ is the probability that $w_i$ occurs in a window.

Using the top 10 words from each topic results in $\binom{10}{2}$ (i.e., 45) PMI scores per topic, which were then averaged and compared to average word intrusion results for PCPs and students using the Spearman correlation coefficient.
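The windowed PMI averaging described above can be sketched as follows. Two details are assumptions of this sketch rather than statements from the text: a report shorter than the window counts as a single window, and a small constant `eps` is added to the joint probability so that pairs which never co-occur receive a large negative score instead of an undefined log(0).

```python
import math
from collections import Counter
from itertools import combinations


def topic_pmi(top_words, documents, window=10, eps=1e-12):
    """Mean pairwise PMI of a topic's top words.

    documents -- tokenized reports (lists of tokens)
    Probabilities are the fraction of sliding windows of `window` tokens
    that contain a word (or both words of a pair).
    """
    targets = set(top_words)
    n_windows = 0
    single = Counter()
    pair = Counter()
    for tokens in documents:
        if not tokens:
            continue
        for start in range(max(1, len(tokens) - window + 1)):
            present = targets.intersection(tokens[start:start + window])
            n_windows += 1
            single.update(present)
            pair.update(frozenset(p) for p in combinations(present, 2))
    if n_windows == 0:
        raise ValueError("no non-empty documents")
    scores = []
    for wi, wj in combinations(top_words, 2):   # 45 pairs for 10 top words
        p_i, p_j = single[wi] / n_windows, single[wj] / n_windows
        if p_i == 0 or p_j == 0:
            continue                             # PMI undefined for unseen words
        p_ij = pair[frozenset((wi, wj))] / n_windows
        scores.append(math.log((p_ij + eps) / (p_i * p_j)))
    if not scores:
        raise ValueError("no top-word pair could be scored")
    return sum(scores) / len(scores)


# Hypothetical corpus: "a" and "b" always co-occur, "c" appears alone.
corpus = [["a", "b"], ["a", "b"], ["c"], ["c"]]
score = topic_pmi(["a", "b"], corpus)
```

A positive score means the top words co-occur more often than independence would predict, which is the sense in which PMI serves as an automated proxy for coherence.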

Results

Models required approximately 75–90 minutes to train on a desktop-grade computer. Table 3 details the results of the held-out log-likelihood analysis. Held-out log-likelihood improved as the number of topics increased, a trend also observed in the literature [29]. We also observed that, as expected, topics became more granular as the number of topics increased. For example, the 50-topic model has a single topic for lung disease (pulmonary, pneumonia, pleural, lung, effusion), while the 150-topic model has separate topics for pleural effusion (effusion, pleural, pericardial, daily, moderate) and pneumonia (pulmonary, lung, pneumonia, lobe, cough). The 150-topic model also has separate topics for pacemaker (pacemaker, lead, atrial, ventricular, pacing) and valve repair (valve, aortic, repair, post, endocarditis), while the 50-topic model combines the two (repair, pacemaker, valve, closure, post).

Table 3.

Held-out log-likelihood scores for different LDA models fit to clinical text collection.

| #Topics | Held-out log-likelihood (per token) |
|---|---|
| 50 | −7.1951 |
| 100 | −7.0906 |
| 150 | −7.0360 |

Word Intrusion Task

For the word intrusion task, there were 28 respondents: 17 PCPs and 11 informatics students. Table 4 shows example topics and their precision results from each model for PCPs and students. Both PCPs and students performed best in terms of both time and precision on the 100-topic model. The median precision of PCPs using the 100-topic model was 70%, and the median time to make a selection was 9.5 seconds. This performance was significantly better than the 50- and 150-topic models in terms of precision (p=0.001 and p=0.01, respectively), but the improvement was not statistically significant in terms of time (p=0.06 and p=0.20, respectively) (Figure 1a,c).

Table 4.

Example PCP and student topic precision results with median PMI score for each model.

| # Topics | PCP precision | Student precision | Median PMI | Topic | Top words | Intruding word |
|---|---|---|---|---|---|---|
| 50 | 0.94 | 0.81 | 0.31 | 18 | coronary, disease, artery, pressure, cardiac | intact |
| | 0.58 | 0.27 | 0.88 | 34 | lung, lobe, upper, lymph, surgery | abdominal |
| | 0 | 0.1 | −0.08 | 19 | prn, mca, bid, iv, daily | course |
| 100 | 1 | 0.63 | 0.97 | 61 | femoral, lower, vascular, extremity, foot | recurrence |
| | 0.65 | 0.45 | −0.09 | 68 | continue, plan, bid, bp, hr | scan |
| | 0 | 0 | −0.90 | 52 | intact, scan, mri, ph, normal | large |
| 150 | 0.94 | 0.64 | 1.05 | 12 | surgery, risks, discussed, surgical, benefits | cxr |
| | 0.53 | 0.45 | 0.25 | 28 | pain, emergency, room, episode, blood | cath |
| | 0.18 | 0 | −0.10 | 120 | respiratory, blood, normal, neurologic, care | mri |

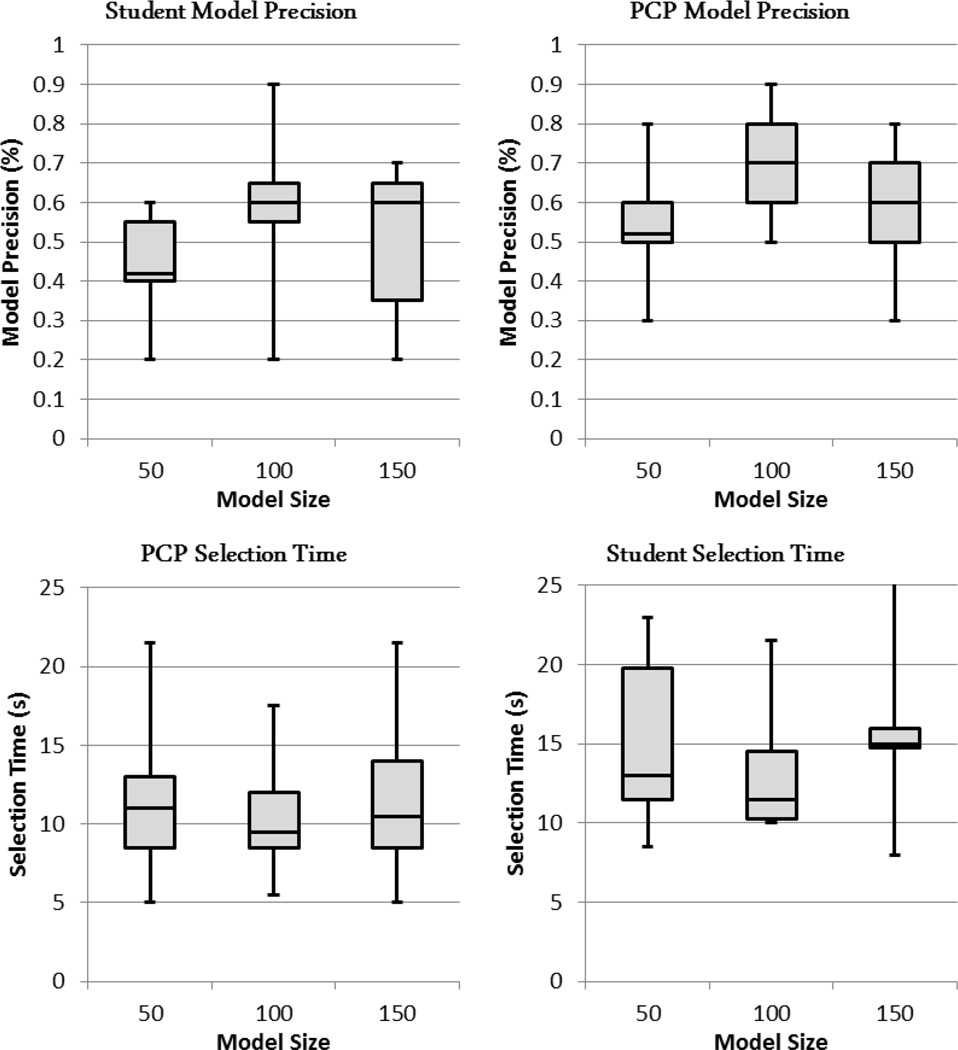

Figure 1.

Time and precision box plots for word intrusion task. Median selection times and precisions were found for each topic model size for both primary care physicians (a,c) and informatics students (b,d) performing the word intrusion task.

Students took a median time of 11.5 seconds and achieved a median precision of 60% using the 100 topic model. This performance was significantly better than the 50 and 150 topic models in terms of time (p=0.045 and p=0.045, respectively) (Figure 1b,d). In terms of precision, it was significantly better than the 50 topic model (p=0.025), but not the 150 topic model (p=0.091). PCPs performed significantly better than students in terms of both time (p=0.027) and model precision (p=0.015).

Topic Intrusion Task

For the topic intrusion task, there were 20 respondents: 9 PCPs and 11 informatics students. Because the topic intrusion task is more time-consuming than the word intrusion task, fewer PCPs participated. Both students and PCPs spent the least time making selections when using the 100-topic model. The median time required for PCPs to make selections using the 100-topic model was lower than that for the 50-topic model (64 vs. 74 seconds), and the log odds achieved was higher (−1.06 vs. −1.48), but neither difference was significant (p=0.47 and p=0.21, respectively) (Figure 2a,c). The median selection time using the 150-topic model was slightly higher (69 seconds) and the median log odds was higher (−0.87), but neither differed significantly from the PCP results for the 100-topic model.

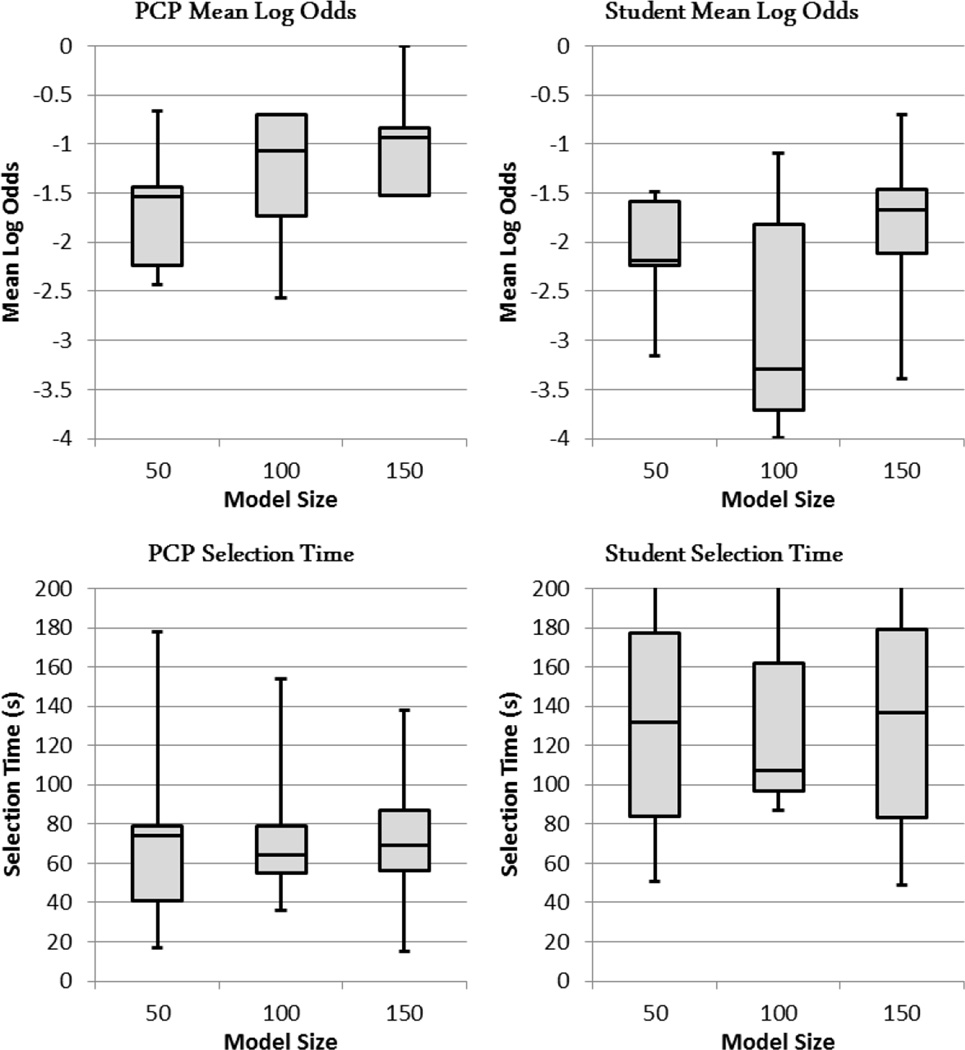

Figure 2.

Time and log odds box plots for topic intrusion task. Median selection times and mean log odds were found for each topic model size for both primary care physicians (a,c) and informatics students (b,d) performing the topic intrusion task.

Students also had the lowest median selection time using the 100-topic model (107 seconds), but it was not significantly different from the selection times using the 50-topic model (132 seconds, p=0.72) or the 150-topic model (137 seconds, p=0.92) (Figure 2d). However, students also had the lowest log odds for the 100-topic model (−3.29), which was significantly lower than that achieved using the 50-topic model (−2.23, p=0.04) and the 150-topic model (−1.67, p=0.02) (Figure 2b). Again, PCPs performed significantly better than students in terms of both time and log odds (p=0.0028 and p=0.0039, respectively).

Results of the topic intrusion task were highly dependent on the probability of the least probable relevant topic (i.e., the third most probable topic in the report). When this probability was low, subjects had more difficulty distinguishing that topic from the intruding topic. Combining results across models, physicians had a median log odds of −0.18 for the eight documents whose third topic had a probability greater than 0.1, compared to a median log odds of −1.50 for the seven documents whose third topic had a probability less than 0.1. Students showed a similar trend, with a median log odds of −1.80 for documents with a high third-topic probability and −2.81 for documents with a low third-topic probability.

Automated Topic Coherence

Table 5 shows the results of the automated topic coherence analysis broken down by model and evaluator. The 100-topic model shows the strongest correlation between model precision and PMI for both PCPs and students, while the 50-topic model shows the weakest (negative) correlation for both groups. Example PMI scores for individual topics may be seen in Table 4.

Table 5.

Pearson correlation coefficients between model precision and PMI scores for each model and category of evaluator.

| #Topics | PCP precision vs. PMI | Student precision vs. PMI |
|---|---|---|
| 50 | −0.17 | −0.30 |
| 100 | 0.49 | 0.39 |
| 150 | 0.16 | 0.07 |

Discussion

Although the models performed better in terms of log-likelihood with increasing numbers of topics, more topics did not translate to increased human interpretability. In the word intrusion task, the 100-topic model yielded significantly higher precision than both other models for PCPs, and, for students, significantly higher precision than the 50-topic model and significantly faster selections than both other models. These findings were reinforced by the automated coherence analysis, in which human precision correlated most strongly with PMI for the 100-topic model. On inspection of the topics, we noticed differences in topic granularity that help to explain these results. The 50-topic model learned broad topics that at times contained common words that were not related in any obvious way. In contrast, the 150-topic model learned topics that were more focused, and grouped less-common words that together were not always distinguishable from the random intruder.

We observed that PCPs performed significantly better than students on both the word and topic intrusion tasks, and required significantly less time. As students and PCPs should achieve similar results interpreting general-language topics, the observed differences between the groups in this study are most likely a result of the PCPs’ specialized clinical knowledge and experience. Our results thus indicate that the topics learned by the models capture the specialized concepts specific to clinical documents. We believe these topics warrant further investigation for use in an automatic summarization system, for example by plotting each topic’s prevalence across a patient’s medical reports over discrete time periods (e.g., the average topic probability over all reports, discretized by day). In such a system, a user could review a historical timeline for each topic, which would give a PCP a temporal orientation to medical events and guide them to relevant documents.
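The per-day aggregation suggested above can be sketched in a few lines. The input format, a sequence of `(report_date, theta)` pairs where `theta` is a report’s inferred topic-proportion vector, and the function name are hypothetical choices for this illustration.

```python
from collections import defaultdict
from datetime import date


def daily_topic_timeline(reports, topic):
    """Average probability of `topic` across a patient's reports, per day.

    reports -- iterable of (report_date, theta) pairs, where theta is the
               report's inferred topic-proportion vector (hypothetical format)
    Returns a list of (date, mean probability) pairs sorted by date.
    """
    by_day = defaultdict(list)
    for day, theta in reports:
        by_day[day].append(theta[topic])
    return [(day, sum(vals) / len(vals)) for day, vals in sorted(by_day.items())]


# Hypothetical reports for one patient, two topics each.
reports = [
    (date(2015, 1, 2), [0.2, 0.8]),
    (date(2015, 1, 1), [0.6, 0.4]),
    (date(2015, 1, 2), [0.4, 0.6]),
]
timeline = daily_topic_timeline(reports, topic=0)
```

Averaging within a day keeps days with many notes (for example, an inpatient stay) from dominating the timeline simply by volume.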

A previous study by Resnik et al. presented topic model output to a clinician to evaluate the quality of topics trained on clinical text [24]. Their model was trained on Twitter data from depression patients, and the resulting topics were evaluated by a clinical psychologist to determine which would be relevant in assessing a patient’s level of depression. The evaluation used in their study was more targeted, as the clinician was looking for topics that were not only cohesive but also related to the target medical condition. While their approach is qualitatively useful, it relies wholly on a single clinician’s intuition. As noted by Lau et al. [30], intuitive ratings of topics do not always correspond with the ability to perform intrusion tasks. We therefore believe that the current approach provides a more rigorous evaluation of the coherence of topics derived from clinical text.

We found that when fit to a collection of clinical reports, LDA yielded less interpretable topics than in previous experiments performed with general text collections [29]. This result may be because topics in our work are learned over a highly specialized collection composed of a large vocabulary of related medical terms. For example, reports detailing different surgeries can share a large number of words (e.g., incision, using, surgeon) and, from a bag-of-words perspective, may only be distinguishable by a small number of anatomical or procedure-specific terms. One possible way to increase the interpretability of topics could be to extend the basic LDA model to better suit the clinical reporting environment. For example, maintaining word ordering with n-grams and including structured variables that capture report contents, patient characteristics, and temporal relationships (e.g., report type, demographics, lab results) could produce topics more reflective of clinical care. While this information was not explicitly included in the current model, some of it is reflected in the text and is therefore captured in the topics generated (see supplemental material). Nevertheless, it is possible that some report metadata could improve the quality of the topics generated, and prior work on general text collections could be adapted for such a model [17, 39].

A limitation of this work is the relatively small sample size. Clinical reports contain information that requires specialized training to understand, as demonstrated by the significant difference in performance between students and PCPs. Because we needed to accommodate PCPs’ clinical schedules, we sought to limit the amount of time required from subjects to one hour with the hope of maximizing participation while still acquiring enough data to establish significance. The topic intrusion task required interpreting reports and was therefore more time consuming, and thus fewer PCPs participated.

While the results presented here indicate that topic models can learn interpretable clinical concepts, work remains to translate them into a complete automatic summarization system. In a real-time system, it would be impractical to relearn topics every time a new document was added to the database. The topics, therefore, would not necessarily be optimal for new documents. For instance, if a new treatment is introduced for a given disease, it might not exist in the vocabulary of the documents used to train the model, leaving the topics unable to reflect an association between the treatment and the disease. A system could address this issue by periodically relearning topics using new documents, but would need to balance consistency and plasticity. Future studies should investigate the progression of topics over time and the effect on a summarization system.
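One common way to score a new report without retraining (an assumption here, since the text does not specify a mechanism) is to "fold it in": hold the trained topics fixed and Gibbs-sample only the new report’s topic assignments. The hypothetical sketch below also makes the vocabulary problem concrete, because a term absent from the training vocabulary is simply dropped and cannot contribute to any topic. Function names, the iteration count, and the toy model are illustrative.

```python
import random


def fold_in(tokens, word_index, phi, alpha, iterations=200, seed=0):
    """Estimate a new report's topic proportions with the trained topics held fixed.

    tokens     -- tokens of the new report
    word_index -- maps each training-vocabulary word to its column in phi
    phi        -- fixed topic-word distributions from the trained model
    alpha      -- per-topic Dirichlet hyperparameters
    Words missing from the training vocabulary are dropped.
    Returns (topic proportions from the final sample, number of tokens used).
    """
    rng = random.Random(seed)
    words = [word_index[t] for t in tokens if t in word_index]
    n_topics = len(phi)
    z = [rng.randrange(n_topics) for _ in words]
    counts = [0] * n_topics
    for k in z:
        counts[k] += 1
    for _ in range(iterations):
        for i, w in enumerate(words):
            counts[z[i]] -= 1                       # remove the token's current topic
            weights = [phi[t][w] * (counts[t] + alpha[t]) for t in range(n_topics)]
            z[i] = rng.choices(range(n_topics), weights=weights, k=1)[0]
            counts[z[i]] += 1
    total = len(words) + sum(alpha)
    return [(counts[t] + alpha[t]) / total for t in range(n_topics)], len(words)


# Hypothetical two-topic model; "newdrugname" was never seen during training.
phi = [[0.9, 0.1], [0.1, 0.9]]
word_index = {"warfarin": 0, "stent": 1}
theta, n_used = fold_in(["warfarin", "newdrugname", "warfarin"], word_index,
                        phi, alpha=[0.1, 0.1])
```

In this example only two of the three tokens are used, illustrating why periodic retraining would be needed for topics to absorb newly introduced treatments.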

Conclusion

In this work we have established a baseline for the interpretability of topics learned from clinical text. While the clinical relevance of the topics was not tested, clinicians significantly outperformed lay subjects, indicating that interpretable topics capturing specialized medical information can be discovered, an important first step in utilizing them in an automatic summarization system. These results were obtained using a general topic model without any modifications for clinical reporting or additional information from clinical knowledge sources. This is an encouraging result as models tailored to clinical reporting would likely provide even greater interpretability. Our future work includes developing models that account for the various forms of data (e.g., free-text, numeric, coded) and temporal dependencies that exist in the medical record, and measuring their performance in an automatic summarization system.

Highlights

Latent Dirichlet allocation models with 50, 100, and 150 topics are fit to a large collection of clinical reports.

The interpretability of discovered topics is evaluated by clinicians and lay persons.

Clinicians are significantly more capable of interpreting topics than lay persons.

Topics hold potential for applications in automatic summarization.

Acknowledgments

Funding Statement:

This work was supported by a grant from the National Library of Medicine of the National Institutes of Health under award number R21LM011937 (PI Arnold). The content is solely the responsibility of the authors and does not necessarily represent the official views of the National Institutes of Health.

Footnotes

Publisher's Disclaimer: This is a PDF file of an unedited manuscript that has been accepted for publication. As a service to our customers we are providing this early version of the manuscript. The manuscript will undergo copyediting, typesetting, and review of the resulting proof before it is published in its final citable form. Please note that during the production process errors may be discovered which could affect the content, and all legal disclaimers that apply to the journal pertain.

Competing Interests Statement:

The authors have no competing interests to declare.

References

1. Pizziferri L, et al. Primary care physician time utilization before and after implementation of an electronic health record: a time-motion study. Journal of Biomedical Informatics. 2005;38(3):176–188. doi: 10.1016/j.jbi.2004.11.009.

2. Plaisant C, et al. LifeLines: using visualization to enhance navigation and analysis of patient records. In: Proceedings of the AMIA Annual Symposium. Lake Buena Vista, FL; 1998.

3. Cousins SB, Kahn MG. The visual display of temporal information. Artificial Intelligence in Medicine. 1991;3(6):341–357.

4. Feblowitz JC, et al. Summarization of clinical information: a conceptual model. Journal of Biomedical Informatics. 2011;44(4):688–699. doi: 10.1016/j.jbi.2011.03.008.

5. Shahar Y, et al. KNAVE-II: A distributed architecture for interactive visualization and intelligent exploration of time-oriented clinical data. In: Proceedings of Intelligent Data Analysis in Medicine and Pharmacology. Protaras, Cyprus; 2003.

6. Hirsch JS, et al. HARVEST, a longitudinal patient record summarizer. Journal of the American Medical Informatics Association. 2015;22(2):263–274. doi: 10.1136/amiajnl-2014-002945.

7. Konrad TR, et al. It’s about time: physicians’ perceptions of time constraints in primary care medical practice in three national healthcare systems. Medical Care. 2010;48(2):95. doi: 10.1097/MLR.0b013e3181c12e6a.

8. Mishra R, et al. Text summarization in the biomedical domain: a systematic review of recent research. Journal of Biomedical Informatics. 2014;52:457–467. doi: 10.1016/j.jbi.2014.06.009.

9. Nenkova A, McKeown K. A survey of text summarization techniques. In: Mining Text Data. Springer; 2012. pp. 43–76.

10. Pivovarov R, Elhadad N. Automated Methods for the Summarization of Electronic Health Records. Journal of the American Medical Informatics Association. 2015. doi: 10.1093/jamia/ocv032.

11. Shahar Y, et al. Distributed, intelligent, interactive visualization and exploration of time-oriented clinical data and their abstractions. Artificial Intelligence in Medicine. 2006;38(2):115–135. doi: 10.1016/j.artmed.2005.03.001.

12. Hsu W, et al. Context-based electronic health record: toward patient specific healthcare. IEEE Transactions on Information Technology in Biomedicine. 2012;16(2):228–234. doi: 10.1109/TITB.2012.2186149.

13. Boyd-Graber JL, Blei DM, Zhu X. A Topic Model for Word Sense Disambiguation. In: Proceedings of the Joint Meeting of the Conference on Empirical Methods in Natural Language Processing and the Conference on Computational Natural Language Learning. Prague, Czech Republic; 2007.

14. Blei D, Lafferty J. A correlated topic model of science. Annals of Applied Statistics. 2007;1(1):17–35.

15. Blei D, et al. Hierarchical topic models and the nested Chinese restaurant process. In: Advances in Neural Information Processing Systems 16: Proceedings of the 2003 Conference. 2003. pp. 17–24.

16. Wang C, Blei D, Heckerman D. Continuous time dynamic topic models. In: Proceedings of the 24th Conference on Uncertainty in Artificial Intelligence. Helsinki, Finland; 2008. pp. 579–586.

17. Wang X, McCallum A. Topics over time: a non-Markov continuous-time model of topical trends. In: Proceedings of the 12th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining. Philadelphia, PA: ACM; 2006. pp. 424–433.

18. Cohen R, et al. Redundancy-Aware Topic Modeling for Patient Record Notes. PLoS ONE. 2014;9(2):e87555. doi: 10.1371/journal.pone.0087555.

19. Newman D, Karimi S, Cavedon L. Using topic models to interpret MEDLINE’s medical subject headings. In: AI 2009: Advances in Artificial Intelligence. Springer; 2009. pp. 270–279.

20. Li D, et al. A bibliometric analysis on tobacco regulation investigators. BioData Mining. 2015;8(1):11. doi: 10.1186/s13040-015-0043-7.

21. Arnold C, et al. Clinical Case-based Retrieval Using Latent Topic Analysis. In: Proceedings of the AMIA Annual Symposium. Washington, DC; 2010. pp. 26–31.

22. Arnold C, Speier W. A Topic Model of Clinical Reports. In: Proceedings of the 35th International ACM SIGIR Conference on Research and Development in Information Retrieval. Portland, OR; 2012.

23. Howes C, Purver M, McCabe R. Investigating topic modelling for therapy dialogue analysis. In: Proceedings of the IWCS Workshop on Computational Semantics in Clinical Text (CSCT); 2013.

24. Resnik P, et al. Beyond LDA: Exploring Supervised Topic Modeling for Depression-Related Language in Twitter. In: Proceedings of the 2nd Workshop on Computational Linguistics and Clinical Psychology (CLPsych); 2015.

25. Halpern Y, et al. A comparison of dimensionality reduction techniques for unstructured clinical text. In: ICML 2012 Workshop on Clinical Data Analysis; 2012.

26. Ghassemi M, et al. Unfolding physiological state: Mortality modelling in intensive care units. In: Proceedings of the 20th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining. ACM; 2014.

27. Chan KR, et al. An Empirical Analysis of Topic Modeling for Mining Cancer Clinical Notes. In: 2013 IEEE 13th International Conference on Data Mining Workshops (ICDMW). IEEE; 2013.

28. Iwata T, Yamada T, Ueda N. Probabilistic latent semantic visualization: topic model for visualizing documents. In: Proceedings of the 14th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining. ACM; 2008.

29. Chang J, et al. Reading tea leaves: How humans interpret topic models. In: Advances in Neural Information Processing Systems 22: Proceedings of the 2009 Conference; 2009.

30. Lau JH, Newman D, Baldwin T. Machine reading tea leaves: Automatically evaluating topic coherence and topic model quality. In: Proceedings of the Association for Computational Linguistics; 2014.

31. Deerwester SC, et al. Indexing by latent semantic analysis. Journal of the American Society for Information Science. 1990;41(6):391–407.

32. Hofmann T. Probabilistic latent semantic indexing. In: Proceedings of the 22nd International ACM SIGIR Conference on Research and Development in Information Retrieval. Berkeley, CA; 1999.

33. Dempster AP, Laird NM, Rubin DB. Maximum likelihood from incomplete data via the EM algorithm. Journal of the Royal Statistical Society. Series B (Methodological). 1977:1–38.

34. Blei D, Ng A, Jordan M. Latent Dirichlet allocation. Journal of Machine Learning Research. 2003;3(5):993–1022.

35. Wallach HM, et al. Evaluation methods for topic models. In: Proceedings of the 26th Annual International Conference on Machine Learning; 2009.

36. Buhrmester M, Kwang T, Gosling SD. Amazon's Mechanical Turk: A new source of inexpensive, yet high-quality, data? Perspectives on Psychological Science. 2011;6(1):3–5. doi: 10.1177/1745691610393980.

37. McCallum AK. MALLET: A Machine Learning for Language Toolkit. 2002.

38. Newman D, et al. Automatic evaluation of topic coherence. In: Human Language Technologies: The 2010 Annual Conference of the North American Chapter of the Association for Computational Linguistics. Association for Computational Linguistics; 2010.

39. Mimno D, McCallum A. Topic models conditioned on arbitrary features with Dirichlet-multinomial regression. In: Proceedings of the 24th Conference on Uncertainty in Artificial Intelligence. Helsinki, Finland; 2008. pp. 411–418.