Abstract

Background

Previous research suggests that speakers are especially likely to produce manual communicative gestures when they have relative ease in thinking about the spatial elements of what they are describing, paired with relative difficulty organizing those elements into appropriate spoken language. Children with specific language impairment (SLI) exhibit poor expressive language abilities together with within-normal-range nonverbal IQs.

Aims

This study investigated whether weak spoken language abilities in children with SLI influence their reliance on gestures to express information. We hypothesized that these children would rely on communicative gestures to express information more often than their age-matched typically developing (TD) peers, and that they would sometimes express information in gestures that they do not express in the accompanying speech.

Methods & Procedures

Participants were 15 children with SLI and 18 age-matched TD controls, aged 5;6–10;0 across both groups. Children viewed a wordless cartoon and retold the story to a listener unfamiliar with the story. Children's gestures were identified and coded for meaning using a previously established system. Speech–gesture combinations were coded as redundant if the information conveyed in speech and gesture was the same, and non-redundant if the information conveyed in speech was different from the information conveyed in gesture.

Outcomes & Results

Children with SLI produced more gestures than children in the TD group; however, the likelihood that speech–gesture combinations were non-redundant did not differ significantly across the SLI and TD groups. In both groups, younger children were significantly more likely to produce non-redundant speech–gesture combinations than older children.

Conclusions & Implications

The gesture–speech integration system functions similarly in children with SLI and TD children, but children with SLI rely more on gesture to help formulate, conceptualize or express the messages they want to convey. This provides motivation for future research examining whether interventions focusing on increasing manual gesture use facilitate language and communication in children with SLI.

Keywords: Gesture, speech–gesture redundancy, specific language impairment, language ability, children aged 5–10 years, narrative

Introduction

Nonverbal communication, including the manual gestures people produce along with speech, has long been of interest to clinicians who serve individuals with various communication disorders. Research on individual differences in co-speech gestures can inform clinical practices. Although people commonly gesture when they speak, there is wide individual variability in how much they do so. This individual variability has been attributed to a variety of factors including culture (for a review, see Kita 2009), personality (e.g., Hostetter and Potthoff 2012), and cognitive and language abilities (e.g., Hostetter and Alibali 2007). For example, Hostetter and Alibali (2007) found that typical adult speakers whose spatial abilities outstrip their verbal abilities gesture at a higher rate than other speakers. It appears that speakers are particularly likely to produce gestures when they have relative ease in thinking about the spatial elements of what they are describing, paired with relative difficulty organizing those elements into appropriate spoken language.

One group of individuals for whom verbal expression is particularly difficult is children diagnosed with specific language impairment (SLI). By definition, children with SLI have within-normal-range nonverbal intelligence and language abilities that are below age-level expectations, in the absence of any frank neurological damage, intellectual deficit, hearing impairment, or emotional or neurodevelopmental disorders such as autism (Leonard 1998, Tomblin et al. 1996). The language impairments seen in children with SLI include delayed onset and slower acquisition of lexical and grammatical forms, smaller lexicons, and particular difficulty with comprehending and producing inflectional morphology and complex syntax.

It has been hypothesized that children with SLI gesture more than typically developing (TD) children, perhaps as a result of their verbal deficits. A handful of previous studies have examined this question. Iverson and Braddock (2011) found that children with language impairments produced more gestures than TD children as they described a wordless storybook. However, the majority of the gestures produced by children in this study were deictic (e.g., pointing to objects on the page) or conventional (e.g., shrugging the shoulders to signal `I don't know'). Very few representational gestures, or gestures that act out or depict the meaning of speech (e.g., moving the hand in small circles with the words `he spun around'), were observed in this study. In another study, Blake et al. (2008) asked participants to perform cartoon retell and classroom description tasks. A majority of the observed gestures were representational, but there was no significant difference in the frequency of representational gestures produced by children with SLI and their TD peers. Similar results have been reported by Botting et al. (2010). One goal of the present study is to further explore the possibility that children with SLI produce more representational gestures than their TD peers.

In addition to differences in gesture frequency, speakers also differ in the amount of overlap between the information they express in gesture and the information they express in speech. Speakers most often express the same information in gestures as in words. There are instances, however, when speakers convey meanings in gestures that they do not express in the accompanying speech. As one example, McNeill (1992) described a speaker who, in retelling a Sylvester and Tweety cartoon, said, `she chases him out again', and swung her arm as if wielding a weapon. There is nothing in the verbal portion of the utterance about swinging arms or weapons. However, in the original cartoon, Granny had chased Sylvester while swinging an umbrella. The speaker thus expressed an element of the scene in gesture (swinging the umbrella) that was not present at all in speech. In past research, we have referred to such gesture–speech combinations as non-redundant (e.g., Alibali et al. 2009).

When speakers encounter difficulties in communicating information in speech, they sometimes express that information in gestures (de Ruiter 2006). The difficulties may stem from conceptual planning for speech (e.g., Alibali et al. 2000) or from difficulty accessing appropriate lexical items (e.g., Rauscher et al. 1996). Alibali et al. (2009) postulated that, within TD populations, individuals with smaller vocabularies or relatively weaker verbal abilities should more frequently produce gestures that are not redundant with speech than individuals who have larger vocabularies or better verbal abilities. In support of this claim, they presented evidence that children produce non-redundant speech–gesture combinations more frequently than adults. It is unknown, however, whether these age-related differences represent global, qualitative differences between children and adults, or whether there is a more gradual, continuous change in the frequency of non-redundant combinations with age, such that younger children are more likely to produce non-redundant speech–gesture combinations than older children. If the change is gradual, an increase in redundant gesture–speech combinations with age might be predicted because children's expressive language abilities increase with age, resulting in greater facility in accessing appropriate linguistic forms and packaging the information required for speech.

Further evidence for the view that people express information in gestures when they have difficulties communicating in speech comes from a study of healthy adult speakers who have stronger spatial abilities than verbal abilities. These individuals also produce non-redundant gesture–speech combinations more frequently than speakers who have stronger verbal abilities than spatial abilities (Hostetter and Alibali 2011).

A few studies have examined whether children with SLI produce more non-redundant gestures (i.e., express content in their gesture that is not present in speech) when compared with TD peers. These studies are based on the idea that expressive language deficits in children with SLI may lead them to rely more on non-redundant speech–gesture patterns, relative to their TD peers. Both Blake et al. (2008) and Iverson and Braddock (2011), who examined gestures produced in narrative tasks, observed that children with SLI produced more representational gestures in the absence of words than did their TD peers. However, Iverson and Braddock did not find significant group differences in gestures that provided additional or disambiguating content.

One study (Evans et al. 2001) has documented increased production of non-redundant gestures in children with SLI using a speech–gesture coding system developed for Piagetian conservation task explanations (Church and Goldin-Meadow 1986). Evans et al. (2001) reported that children with SLI expressed information uniquely in gesture (and not in the accompanying speech) more often than younger conservation-knowledge-matched TD controls.

On the surface, it seems likely that the weaker verbal abilities of the SLI group may have led to their increased use of non-redundant gesture–speech combinations. There is, however, a second possible interpretation of the increased rate of non-redundant gesture–speech combinations observed in the SLI group in this particular study. The pattern of frequent gesture–speech `mismatches', in which children express knowledge in gestures but not in their speech, is also characteristic of children who have emerging knowledge of conservation. In particular, previous work has shown that TD children go through a transitional developmental phase in which they frequently express conservation understanding in their gestures but not in their speech. Children frequently produce such gesture–speech mismatches just prior to showing evidence of conservation understanding (Church and Goldin-Meadow 1986). Thus, in this context, non-redundant gesture–speech combinations appear to signal the emergence of understanding of conservation.

Given that frequent mismatches between gesture and speech in children's conservation explanations have been shown to presage the acquisition of conservation knowledge in TD children, it is possible that the children with SLI in the Evans et al. study used more non-redundant gesture–speech combinations than the TD children because they were closer to acquiring the concept of conservation than the TD children. Although the children were matched for their conservation knowledge (as expressed in their same/different judgments), they may not have been matched for their `readiness to learn' the conservation concept. Indeed, given that the children with SLI were older, they may have been closer to acquiring the concept of conservation than the TD children. Thus, it is unclear why the children with SLI in the Evans et al. (2001) study produced non-redundant gesture–speech combinations: because of their weak expressive language abilities or as a signal of their emerging understanding of conservation.

To establish whether children with SLI use non-redundant gesture–speech combinations more often than their TD peers, we need a different type of task that, unlike the conservation task, does not involve emerging knowledge. We therefore selected a narrative task. Such tasks have been widely used in research on gesture in typical populations (e.g., McNeill 1992).

Current study

In the current study, we examined use of representational gestures among children with SLI. We were interested both in the frequency of gestures children produce during a narrative task, and in the redundancy of their gesture–speech combinations. To this end, we compared the gestures of children with SLI to those of age-matched, TD children in a simple narrative retell task. Based on findings for individuals with typical language abilities, we hypothesized that, relative to their age-matched peers with typical language development, children with SLI would produce more representational gestures overall, and they would also produce a higher proportion of non-redundant gesture–speech combinations.

As a secondary question, we also examined whether age had an impact on gesture redundancy. In light of previous findings that TD children produce non-redundant speech–gesture combinations more frequently than adults (Alibali et al. 2009), we explored whether there might also be developmental differences in use of non-redundant gesture–speech combinations among children, such that younger children would be more likely to produce non-redundant speech–gesture combinations than older children. An increase in redundant gesture–speech combinations with age might be predicted because children's expressive language abilities increase with age.

Method

Participants

Participants were 15 children with SLI (aged 6;2–9;5) and 18 TD children (aged 5;6–10;0). All children met the following inclusion criteria: (1) Performance Intelligence Quotient above 85, as measured by the Leiter International Performance Scale (LIPS) (Roid and Miller 1997), the Test of Nonverbal Intelligence (Brown et al. 1990), or the Columbia Mental Maturity Scale (Burgemeister et al. 1972); (2) passed a pure tone hearing screening at 500, 1000, 2000, and 4000 Hz at 20 dB HL; (3) normal oral and speech motor abilities, as observed by a trained clinician; and (4) a monolingual, English-speaking home environment. Children also did not meet any of the following exclusion criteria: (1) neurodevelopmental disorders other than SLI; (2) emotional or behavioural disturbances; (3) motor deficits or frank neurological signs; or (4) seizure disorders or use of medication to control seizures. Parental report was used to ensure that the children had not been diagnosed with any of these conditions. Attention deficit hyperactivity disorder (ADHD) was not considered an exclusionary criterion for this study; however, none of the parents reported current use of medication to treat ADHD. The children were recruited from public and private schools in a medium-sized Midwestern US city. All children with SLI, and none of the TD children, had a reported history of services to treat speech, language, or learning disabilities provided by school-based speech–language pathologists.
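The inclusion and exclusion criteria above can be expressed as a single screening check. The sketch below is illustrative only: the `Screening` record, its field names and the condition labels are hypothetical, and in the study these judgements came from standardized tests, a clinician's observation and parental report rather than from code.

```python
from dataclasses import dataclass, field

# Hypothetical record of one child's screening results (field names are
# illustrative, not taken from the study's materials).
@dataclass
class Screening:
    performance_iq: float                 # LIPS, TONI or Columbia standard score
    hearing_pass: dict                    # frequency in Hz -> passed at 20 dB HL?
    normal_oral_motor: bool               # clinician's observation
    monolingual_english_home: bool
    conditions: set = field(default_factory=set)  # parent-reported diagnoses

SCREEN_FREQUENCIES_HZ = (500, 1000, 2000, 4000)

# Hypothetical labels for the exclusion criteria. ADHD is deliberately absent,
# because it was not exclusionary in this study.
EXCLUSIONARY = {
    "other neurodevelopmental disorder",
    "emotional or behavioural disturbance",
    "motor deficit or frank neurological signs",
    "seizure disorder or anti-seizure medication",
}

def eligible(s: Screening) -> bool:
    """True if the child meets every inclusion criterion and no exclusion criterion."""
    included = (
        s.performance_iq > 85
        and all(s.hearing_pass.get(hz, False) for hz in SCREEN_FREQUENCIES_HZ)
        and s.normal_oral_motor
        and s.monolingual_english_home
    )
    return included and not (s.conditions & EXCLUSIONARY)
```

For example, a child with a performance IQ of 92, passes at all four frequencies and a parent-reported ADHD diagnosis would still be eligible, whereas a child with an IQ of exactly 85 would not, because the criterion is strictly above 85.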

Children's language abilities were assessed using the Clinical Evaluation of Language Fundamentals—Revised (CELF-R) (Semel et al. 1987). All children in the SLI group exhibited expressive language deficits, as evidenced by CELF-R Expressive Language index scores more than 1.00 SD below the mean. Ten of the 15 children with SLI also exhibited receptive language deficits, as evidenced by CELF-R Receptive Language index scores more than 1.00 SD below the mean. All TD children received standard scores higher than 1.00 SD below the mean on the CELF-R Expressive Language index and the CELF-R Oral Directions receptive subtest. The results of the standardized testing are presented in table 1. As can be seen in table 1, children with SLI scored significantly lower than their TD peers on the CELF-R Expressive Language index, the CELF-R Oral Directions subtest, and IQ. The children in the TD group are the same children whose data we reported in a previous paper (Alibali et al. 2009); the data from the children in the SLI group are original and previously unreported.
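Because every cut-off above is stated relative to a normative distribution, it may help to see the arithmetic. The following is a minimal sketch, assuming the norms listed in the notes to table 1 (CELF-R indices: mean = 100, SD = 15; Oral Directions subtest: mean = 10, SD = 3); the function names are hypothetical. `More than 1.00 SD below the mean' corresponds to a z-score below −1, that is, an index score below 85.

```python
# Norms as given in the notes to table 1.
NORMS = {
    "index": (100.0, 15.0),   # CELF-R Expressive / Receptive Language indices
    "subtest": (10.0, 3.0),   # CELF-R Oral Directions subtest
}

def z_score(score: float, scale: str = "index") -> float:
    """Distance of a standard score from the normative mean, in SD units."""
    mean, sd = NORMS[scale]
    return (score - mean) / sd

def more_than_1sd_below(score: float, scale: str = "index") -> bool:
    """Deficit criterion used in the text: z < -1 (an index score below 85)."""
    return z_score(score, scale) < -1.0

print(round(z_score(70.47), 2))                          # -1.97 (SLI group mean ELS)
print(more_than_1sd_below(84), more_than_1sd_below(85))  # True False
print(round(z_score(8, "subtest"), 2))                   # -0.67 (lowest TD Oral Directions score)
```

Note that a score of exactly 85 sits at z = −1 and therefore does not meet a `more than 1.00 SD below' criterion.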

Table 1.

Means, standard deviations and ranges for ages and standardized test scores

| Group | Statistic | Age (months) | IQ (a) | ELS (b) | RLS (c) | OD (d) |
|---|---|---|---|---|---|---|
| SLI | Mean | 97.07 | 104* | 70.47* | 80.47 | 6.87* |
| | SD | 11.90 | 8.77 | 10.31 | 17.41 | 2.50 |
| | Range | 74–116 | 89–122 | 54–84 | 50–107 | 3–9 |
| TD | Mean | 96.28 | 121.56* | 104.67* | n.a. | 11.72* |
| | SD | 13.86 | 7.76 | 10.68 | n.a. | 2.1 |
| | Range | 76–120 | 110–136 | 91–130 | n.a. | 8–15 |

Notes:

(a) IQ standard score from the Columbia Mental Maturity Scale, the Leiter International Performance Scale or the Test of Nonverbal Intelligence (mean = 100, SD = 15).

(b) CELF-R Expressive Language Index (mean = 100, SD = 15).

(c) CELF-R Receptive Language Index (mean = 100, SD = 15).

(d) CELF-R Oral Directions subtest (mean = 10, SD = 3).

* p < 0.05 for the SLI versus TD comparison.

Materials

The stimulus was a 90-s episode of the German children's cartoon Die Sendung mit der Maus, which has been used in previous research on gesture in children's narratives (e.g., Alibali and Don 2001). The cartoon features a tiny elephant and a large mouse, and it includes music but has no words. At the outset of the cartoon, the mouse jumps up onto a high bar, swings back and forth, flips around, and then dismounts. Next, the elephant jumps onto the bar, but the bar bends down, presumably because the elephant is too heavy. The mouse attempts to fix the bar by pushing it up, but is not successful. Next, a green leprechaun with a tall hat enters the scene and walks beneath the bar. As the leprechaun passes under the bar, his hat pushes up on the bar and fixes it.

Procedure

Each child viewed the cartoon stimulus twice, and then retold the story to an experimenter who waited outside the room while the child viewed the cartoon. To encourage children to include more information in their narrations, they were told that the experimenter had not seen the cartoon. After their initial narrations, children received four prompts to encourage them to tell more about the story: (1) tell a little bit more about what happened when the mouse was first on the bar, (2) tell a little bit more about when the mouse's friend tried to jump on the bar, (3) tell a little bit more about what the mouse did to try to fix the bar, and (4) tell a little bit more about the man with the hat.

Coding

Participants' speech was transcribed. All gestures were identified and the words that coincided with each gesture were noted.

Coding gesture meaning

Gestures were assigned meanings using a coding system developed specifically for this cartoon (Alibali et al. 2009). As seen in table 2, the system consists of formal criteria for identifying 13 categories of meanings expressed in gestures. These categories were developed based on TD children's gestures, such that these categories can be assigned from viewing the gestures without listening to the accompanying speech. For example, any gesture produced in the context of retelling this particular cartoon that includes a back and forth trajectory was coded as meaning `swing'. Gestures that met the formal criteria for one of the 13 categories were identified as gestures with codable meanings; these gestures were further coded in terms of the relationship between gesture and speech (see below). Gestures that did not meet the criteria for one of the 13 meaning categories were of three types: (1) beat gestures, which are motorically simple gestures that do not express semantic content, (2) representational gestures that conveyed meanings not represented in our lexicon (e.g., TRUNK), and (3) representational gestures that expressed meanings represented in our lexicon, but that did so in a way that did not meet our formal criteria for interpreting the gestures without the accompanying speech as defined in our previous study (Alibali et al. 2009).
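The key property of this lexicon is that meanings are assigned from gesture form alone, without listening to speech. The sketch below illustrates that idea as an ordered set of rules over a few entries from table 2, where the first matching rule wins. The feature names (`trajectory`, `hands`, `palms`, `location`, `beat`) and the rules themselves are hypothetical simplifications; the actual coding was done by trained human coders applying the full formal criteria.

```python
from typing import Optional

# Hypothetical, simplified rules for a few entries of the table 2 lexicon.
# Each rule inspects form features only; speech is never consulted.
RULES = [
    ("HAT", lambda g: g.get("location") == "on head"),
    ("SWING", lambda g: g.get("trajectory") == "back-and-forth"),
    ("SPIN", lambda g: g.get("trajectory") == "circular"),
    ("PUSH BAR UP", lambda g: (g.get("trajectory") == "up"
                               and g.get("hands") == 2
                               and g.get("palms") in {"up", "out"})),
    ("UP", lambda g: g.get("trajectory") == "up" and g.get("hands") == 1),
]

def code_meaning(gesture: dict) -> Optional[str]:
    """Return the first matching lexicon meaning, or None if uncodable.

    None covers beat gestures and representational gestures whose meaning
    is outside the lexicon or that do not meet the formal criteria.
    """
    if gesture.get("beat"):
        return None  # motorically simple, no semantic content
    for meaning, matches in RULES:
        if matches(gesture):
            return meaning
    return None
```

Only gestures for which a meaning is returned would go on to the gesture–speech redundancy coding; the others correspond to the three uncodable types listed above.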

Table 2.

Gesture lexicon: meanings and descriptions of gesture forms (from Alibali et al. 2009)

| Gesture meaning | Description of gesture form |
|---|---|
| Swing | Gesture that includes a back and forth motion; may be produced with hands or legs |
| Spin | Gesture that includes a circular motion, typically repeated and in neutral space; may be produced with one or both hands |
| Bar | Gesture that traces or takes the form of the bar; one or both hands may point, flatten, or form O's to represent round shape of bar |
| Stand | Gesture that traces or takes the form of the stand; one or both hands (typically both) point or flatten to represent upright stand for bar |
| Bar + stand | Gesture that traces or takes the form of the bar and stand; typically produced with both hands; points may trace shape of stand and bar, or hands (with fingertips together) bend at knuckles or wrists so fingers represent bar and palms or arms represent stand |
| Grab bar | Gesture in which hands hover in parallel, sometimes with grasping motion; typically produced with both hands, either in neutral space or above head |
| Bent bar | Gesture in which hands trace shape of bent bar or half of bent bar, or gesture in which hands hover while holding shape of bent bar; may be produced with one or both hands |
| Dismount | Gesture in which hand makes a downward arcing motion; may include a slight upward motion before the downward motion; typically produced with one hand |
| Hat | Gesture made on or above head, in which hands either trace hat shape, form hat shape with hands or point to (imaginary) hat; may be produced with one or both hands using either points or flat hand shapes |
| Jump | Gesture in which hands move up and down several times; typically produced in neutral space or in lap; may be produced with one or both hands, using either flat or curved open hand shapes |
| Push bar up | Gesture in which both hands, palms face up or out, move up; typically produced in high neutral space or above head |
| Up | Gesture in which one hand moves up, in either point, flat, or curved open hand shape; typically produced in neutral space |
| Walk | Gesture that includes alternate stomping motion; can be produced with feet or hands |

Source: Used with permission of John Benjamins publishers.

Coding gesture–speech pairs

For each gesture with a codable meaning, we determined the relationship between gesture and speech: we assessed whether the exact words that co-occurred with the gesture conveyed the meaning that had been assigned to the gesture. For example, one child said `And he did some flips real fast' while producing a gesture meaning SPIN, which co-occurred with the words `flips real fast'. This speech–gesture pair contains a redundant gesture, as both the gesture and the coinciding words expressed the meaning `spin'. Another child produced the utterance `But then he broke it' together with a gesture meaning HAT. This pair contains a non-redundant gesture because the speech does not contain any words expressing the meaning `hat'. In a few cases, participants expressed the meaning conveyed in the gesture in speech, but not at the same moment, that is, not in the words that co-occurred with the gesture. For example, one child said `He reached up and swinged' and produced a gesture meaning SWING while saying the words `reached up'. This example was coded as non-redundant because the coinciding words (reached up) did not express the same meaning as the accompanying gesture (swing).¹ Examples are presented in table 3.
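The redundancy decision depends only on the words that overlap the gesture in time, not on the utterance as a whole. The following sketch makes that scoping rule explicit. The keyword lists in `MEANING_WORDS` are hypothetical stand-ins for the coders' judgement of whether the co-occurring words express the gesture's meaning; they are not part of the published coding system.

```python
import string

# Hypothetical keyword lists standing in for the coders' judgement of whether
# words express a gesture's meaning (not part of the published coding system).
MEANING_WORDS = {
    "SPIN": {"spin", "spun", "spinning", "flip", "flips", "flipped"},
    "SWING": {"swing", "swung", "swinging", "swinged"},
    "HAT": {"hat", "cap"},
}

def is_redundant(meaning: str, cooccurring_words: str) -> bool:
    """Redundant iff the words spoken *during* the gesture express its meaning.

    Only the co-occurring words are passed in, so a meaning expressed
    elsewhere in the utterance still yields a non-redundant code.
    """
    tokens = {w.strip(string.punctuation).lower() for w in cooccurring_words.split()}
    return bool(tokens & MEANING_WORDS.get(meaning, set()))

print(is_redundant("SPIN", "flips real fast"))   # True
print(is_redundant("HAT", "then he broke it"))   # False
print(is_redundant("SWING", "reached up"))       # False, although `swinged' follows
```

The third call reproduces the `He reached up and swinged' case: because only `reached up' overlaps the SWING gesture, the pair is coded as non-redundant.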

Table 3.

Examples of different patterns of gesture–speech integration

| Participant group | Speech | Gesture | Gesture meaning | Redundant with words? |
|---|---|---|---|---|
| Child with TD | He was [doing flips around the poles] | Right hand point, circular motion | SPIN | Yes |
| Child with SLI | But [then he broke it] | Places hands on head at temples | HAT | No |

Note: Brackets [ ] indicate when the gesture occurred in relationship to the speech.

Reliability of coding

A second coder rescored 20% of the SLI data and 16% of the TD data to assess reliability. Agreement for the SLI group was 89% for the total number of gestures produced, and 91% for assigning meaning to the gestures. Cohen's kappa for determining if gestures with meaning were redundant with speech for the SLI group was 0.82. Agreement for the TD group was 92% for the total number of gestures produced, and 91% for assigning meaning to the gestures. Cohen's kappa for determining if gestures with meaning were redundant with speech for the TD group was 0.72.
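Percent agreement and Cohen's kappa differ in that kappa discounts the agreement two coders would reach by chance, given how often each coder uses each code. A minimal sketch of both, using their standard definitions; the coder data in the example are invented for illustration.

```python
from collections import Counter

def percent_agreement(a, b):
    """Proportion of items to which two coders assigned the same code."""
    return sum(x == y for x, y in zip(a, b)) / len(a)

def cohens_kappa(a, b):
    """Cohen's kappa: agreement corrected for chance, (p_o - p_e) / (1 - p_e)."""
    if len(a) != len(b) or not a:
        raise ValueError("need two non-empty code lists of equal length")
    n = len(a)
    p_o = percent_agreement(a, b)
    freq_a, freq_b = Counter(a), Counter(b)
    # Chance agreement: sum over codes of the product of the coders' marginal proportions.
    p_e = sum(freq_a[c] * freq_b[c] for c in freq_a.keys() | freq_b.keys()) / n**2
    if p_e == 1:
        raise ValueError("kappa is undefined when chance agreement is 1")
    return (p_o - p_e) / (1 - p_e)

# Invented codes for ten gestures: R = redundant, N = non-redundant.
coder_1 = list("RRRNNRNRRR")
coder_2 = list("RRNNNRNRRR")
print(percent_agreement(coder_1, coder_2))        # 0.9
print(round(cohens_kappa(coder_1, coder_2), 2))   # 0.78
```

In this invented example the coders agree on 90% of items, but because both use `redundant' most of the time, chance agreement is already 0.54, so kappa is noticeably lower than raw agreement.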

Results

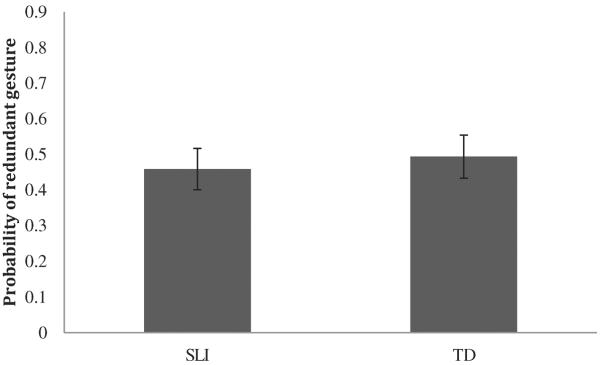

We first compared overall gesture rates for children with SLI and children with typical development. Using the R statistical environment, we entered the number of gestures each child produced into a Poisson regression model with the number of words produced as an offset variable. The offset allowed us to model the rate of gesturing relative to the amount of speech each child produced, rather than the raw number of gestures. The data are presented in figure 1. We calculated incidence rate ratios (IRR) to express the relative increase in gesture rate for children with SLI compared with TD children. Children with SLI produced gestures at a higher rate than TD children on all three gesture measures: all gestures, IRR = 1.48, β = −0.40, SE = 0.12, z = −3.24, p < 0.001; gestures that could be coded for meaning, IRR = 1.35, β = −0.30, SE = 0.14, z = −2.11, p = 0.03; and non-redundant gestures, IRR = 1.58, β = −0.46, SE = 0.18, z = −2.47, p = 0.01. In other words, children with SLI produced gestures at 1.48 times the TD rate, gestures that could be coded for meaning at 1.35 times the TD rate, and non-redundant gestures at 1.58 times the TD rate. Thus, children with SLI produced gestures (considering all kinds of gestures together) at a higher rate than their TD peers, and they also produced non-redundant gestures at a higher rate than their TD peers.
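For a model with a single binary predictor (group) and, as is conventional, the logarithm of words produced as the offset, the Poisson maximum-likelihood estimates have a closed form: exp(β) is the ratio of the two groups' pooled rates (total gestures divided by total words), and the standard error of β is √(1/G_a + 1/G_b), where G is each group's total gesture count. The sketch below uses that closed form with invented counts. It is not the authors' R code, it ignores overdispersion, and its sign convention (group A relative to group B, so IRR > 1 means a higher rate in group A) may differ from the one behind the β values reported above.

```python
import math

def poisson_rate_ratio(gestures_a, words_a, gestures_b, words_b):
    """IRR of group A relative to group B, from a Poisson model with offset.

    Assumes the model log E[gestures] = log(words) + b0 + b1 * in_group_a.
    Its MLE has a closed form: exp(b1) is the ratio of pooled rates, and
    SE(b1) = sqrt(1/G_a + 1/G_b), where G is each group's total gesture count.
    """
    g_a, w_a = sum(gestures_a), sum(words_a)
    g_b, w_b = sum(gestures_b), sum(words_b)
    b1 = math.log((g_a / w_a) / (g_b / w_b))
    se = math.sqrt(1 / g_a + 1 / g_b)
    ci95 = (math.exp(b1 - 1.96 * se), math.exp(b1 + 1.96 * se))
    return {"irr": math.exp(b1), "beta": b1, "se": se, "z": b1 / se, "ci95": ci95}

# Invented per-child counts (gestures, words) for two small groups; not the study's data.
result = poisson_rate_ratio([12, 8, 20], [300, 250, 400], [6, 10, 4], [280, 420, 300])
print(round(result["irr"], 3), round(result["se"], 3))  # 2.105 0.274
```

Adding further covariates (for example age) removes this closed form, at which point a general-purpose Poisson regression routine is needed.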

Figure 1.

Mean number of gestures per 100 words produced by children in the SLI and TD groups, including (1) all gestures, (2) gestures in the lexicon for this task developed by Alibali et al. (2009), and (3) non-redundant gestures. Error bars represent standard errors.

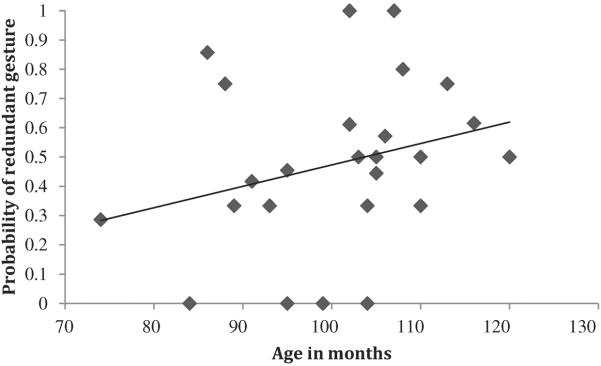

We next asked whether children with SLI were more likely than children with typical development to produce non-redundant gesture–speech combinations. Given that children with SLI produced gestures at higher rates, it is of interest to consider whether the likelihood of producing non-redundant gesture–speech combinations was similar across the groups. In other words, given that a gesture–speech combination was produced, how likely was it that the gesture conveyed information that was non-redundant with the accompanying speech? To address this question, all codable gestures were entered into a mixed logistic regression with participant and gesture meaning as random factors. The binomial dependent variable was whether the gesture was redundant or non-redundant. This analysis was chosen because it accounts for the random effects associated with individual participants and individual gesture meanings. Children who produced no codable gestures (two children with SLI and six children with typical development) were excluded from this analysis, because their proportion of non-redundant gestures would have had a zero denominator.
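The mixed model treats each codable gesture, not each child, as an observation, so the data are naturally stored in long format, one row per gesture. The sketch below shows that layout and computes a simple descriptive summary in the spirit of figure 2 (the mean per-child proportion of redundant gestures, with its standard error). It does not fit the mixed logistic regression itself, which requires dedicated mixed-model software, and the rows are invented.

```python
import math
from collections import defaultdict

# Invented long-format data: one row per codable gesture.
# Columns: participant, group, gesture meaning, redundant with speech?
rows = [
    ("S01", "SLI", "SPIN", False),
    ("S01", "SLI", "HAT", True),
    ("S02", "SLI", "SWING", True),
    ("T01", "TD", "BAR", True),
    ("T01", "TD", "UP", True),
]

def per_child_proportions(rows):
    """Proportion of each child's codable gestures that were redundant."""
    tally = defaultdict(lambda: [0, 0])  # child -> [redundant, total]
    group_of = {}
    for child, group, _meaning, redundant in rows:
        tally[child][0] += int(redundant)
        tally[child][1] += 1
        group_of[child] = group
    # A child with no codable gestures has no rows and so never appears here,
    # mirroring the exclusion of children with a zero denominator.
    return {c: (group_of[c], r / n) for c, (r, n) in tally.items()}

def group_mean_se(per_child):
    """Mean and standard error of the per-child proportions in each group."""
    by_group = defaultdict(list)
    for group, p in per_child.values():
        by_group[group].append(p)
    summary = {}
    for group, ps in by_group.items():
        k = len(ps)
        mean = sum(ps) / k
        sd = math.sqrt(sum((p - mean) ** 2 for p in ps) / (k - 1)) if k > 1 else 0.0
        summary[group] = (mean, sd / math.sqrt(k))
    return summary

print(group_mean_se(per_child_proportions(rows)))
```

Keeping gesture meaning as a column is what allows a mixed model to include it as a random factor alongside participant.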

To test whether group (SLI or TD) affected the likelihood that gestures were redundant, we compared models with and without group as a factor. We built two models: (1) a random-effects-only model that included only the random factors (participant and gesture meaning), and (2) a model that included group (SLI versus TD) as a fixed factor in addition to the random factors of participant and gesture meaning. We then tested whether model (2) fit the data significantly better than model (1), using the chi-square statistic from an analysis of variance (ANOVA) comparison of the two models' log-likelihoods, that is, a likelihood-ratio test.
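The model comparison reduces to a likelihood-ratio statistic, χ² = 2(LL_full − LL_reduced), referred to a chi-square distribution whose degrees of freedom equal the number of added parameters (one here, for group). With 1 df the upper-tail probability has the closed form erfc(√(χ²/2)), so the sketch below needs only Python's standard library. The log-likelihood values in the example are hypothetical, chosen so that χ² = 0.65; the function then returns p ≈ 0.42, matching the value reported for the group comparison.

```python
import math

def chi2_sf_1df(x: float) -> float:
    """Upper-tail probability of a chi-square variable with 1 degree of freedom."""
    return math.erfc(math.sqrt(max(x, 0.0) / 2.0))

def likelihood_ratio_test(loglik_reduced: float, loglik_full: float):
    """LR test for nested models that differ by a single parameter."""
    chi2 = 2.0 * (loglik_full - loglik_reduced)
    return chi2, chi2_sf_1df(chi2)

# Hypothetical log-likelihoods for the random-effects-only and group models.
chi2, p = likelihood_ratio_test(-210.400, -210.075)
print(round(chi2, 2), round(p, 2))  # 0.65 0.42
```

Comparisons that add more than one parameter at once would need the general chi-square survival function rather than this 1-df shortcut.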

This modelling revealed that group membership (SLI versus TD) did not significantly predict gesture redundancy. The β-estimate for group was 0.22, SE = 0.32, and adding group did not significantly improve model fit compared with the random-effects-only model, χ² = 0.65, p = 0.42. Thus, contrary to our predictions, the likelihood of producing non-redundant gestures was not significantly higher in the SLI group than in the TD group (figure 2).

Figure 2.

Average probability of a gesture being redundant for the SLI and TD groups. Error bars represent standard errors.

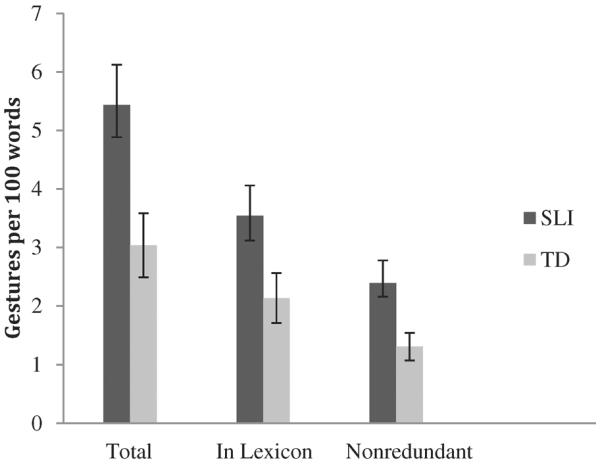

Finally, we addressed the secondary question of whether older children in both the SLI and TD groups were more likely than younger children to produce redundant gesture–speech combinations. We hypothesized that the likelihood of producing redundant gesture–speech combinations might increase as expressive language abilities increase. To test this hypothesis, we extended the mixed logistic regression model (participant and gesture meaning as random factors, group membership as a fixed factor) by first adding age and then adding the age × group interaction. Age was treated as a continuous variable.

Age significantly predicted gesture redundancy: the β-estimate for age was −0.03, SE = 0.01, and adding age to the model resulted in a better model fit compared with the model with group membership as the only fixed predictor, χ² = 3.72, p = 0.05. The corresponding odds ratio (1.03) indicated that with each additional month of age, the odds that a given gesture was redundant with the accompanying speech were about 1.03 times higher. Thus, consistent with our predictions, older children were more likely to produce redundant gestures than younger children (figure 3). Adding the group × age interaction term yielded a β-estimate of −0.03, SE = 0.03, and a model fit that was not significantly better than that of the model without the interaction term, χ² = 0.93, p = 0.34. Thus, gesture redundancy increased with age similarly in the SLI and TD groups.
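In a logistic model, exponentiating a coefficient gives an odds ratio, and effects compound multiplicatively over larger age differences. The sketch below shows the arithmetic for an age coefficient of magnitude 0.03 per month, treated here as the change in the log-odds that a gesture is redundant; the sign depends on how the outcome is coded, so this direction is an assumption. The baseline probability of 0.5 in the example is hypothetical.

```python
import math

def odds_ratio(beta_per_unit: float, units: float = 1.0) -> float:
    """Multiplicative change in the odds for a change of `units` in the predictor."""
    return math.exp(beta_per_unit * units)

def shifted_probability(p0: float, beta_per_unit: float, units: float) -> float:
    """Probability after moving `units` along the predictor from baseline p0."""
    odds = p0 / (1.0 - p0) * odds_ratio(beta_per_unit, units)
    return odds / (1.0 + odds)

BETA_AGE = 0.03  # magnitude reported above; direction assumed to be toward redundancy
print(round(odds_ratio(BETA_AGE), 2))                    # 1.03 per month
print(round(odds_ratio(BETA_AGE, 12), 2))                # 1.43 per year
print(round(shifted_probability(0.5, BETA_AGE, 12), 2))  # 0.59 (hypothetical 0.5 baseline)
```

Because odds ratios are not probability ratios, a 1.43-fold increase in the odds over a year moves a 50% baseline to about 59%, not to about 72%.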

Figure 3.

Probability of a gesture being redundant as a function of age (months).

Discussion

This study tested the hypothesis that children who differ in their language abilities also differ in their use of manual representational gestures when communicating. Consistent with this hypothesis, we found that children with SLI, who exhibit weak expressive language abilities, produced representational gestures at higher rates than their TD peers. This finding is consistent with findings reported by Iverson and Braddock (2011) for deictic and conventional gestures, and extends this work to representational gestures, which depict meanings iconically. This finding is important in light of research suggesting that children with SLI have subtle motor deficits (reviewed in Hill 2001): studies of limb praxis suggest that children with SLI tend to be impaired in the motor execution of representational gestures. The results of this study suggest that even given these hypothesized subtle difficulties in motor execution of manual gestures, children with SLI produce representational gestures with interpretable meanings at higher rates than peers.

The increased use of gesture by children with SLI could reflect their difficulties with language production. Representational gestures have been shown to facilitate lexical access (e.g., Rauscher et al. 1996) and conceptual planning of speech (e.g., Alibali et al. 2000). Thus, children with SLI may use gestures as a means to help themselves formulate or conceptualize the messages they want to convey. Note that in the present study, we did not measure message effectiveness. In the future, it would be interesting to investigate whether children with SLI formulate more effective spoken messages when they produce gestures compared with when they do not produce gestures.

Alternatively, children with SLI may produce gestures at particularly high rates due to a preference for representing information in an embodied manner. In this study, children experienced the cartoon story in the highly visual–spatial medium of video. The children with SLI were then especially likely to use a visual–spatial modality, gesture, in communicating about that information. This suggests that children with SLI may represent information in a grounded or embodied manner, which bears traces of the way that information was acquired, rather than `translating' that information into the more abstract code of speech (Evans et al. 2001). Perhaps the visual–spatial representations of the events on the video activated embodied action representations easily expressed in gestures, but children had difficulty activating the corresponding linguistic representations. For children with SLI, reasoning with more grounded, embodied representations may be an area of relative strength.

It is worth noting that, although children with SLI gestured more than TD children on average, not every child with SLI gestured more than every TD child: the distributions of gesture rates in the two groups overlapped substantially, and rates of gesture production varied considerably among children with SLI.

In addition to gesture rates, we examined gesture–speech redundancy. We predicted that children with SLI would produce more non-redundant gesture–speech combinations than their TD peers. Children with SLI did produce such combinations at a higher rate, just as they produced more gestures in general. However, we found no evidence that the gestures of children with SLI were more likely to be non-redundant than those of their TD peers: the likelihood that a given gesture–speech combination was non-redundant was similar in the two groups. This pattern suggests that, even though children with SLI gestured more, the overall functioning of the gesture–speech system is similar in the two groups. These results further suggest that the earlier finding that children with SLI produced more non-redundant gestures in a Piagetian conservation task (Evans et al. 2001) may have reflected these children's conceptual understanding of conservation, rather than a functionally different speech–gesture system. In the current study, which used a conceptually simpler narrative task, the likelihood of non-redundant gesturing was comparable in children with SLI and TD children. To examine this issue directly, future studies should manipulate task difficulty within a single study and examine its effect on non-redundant gesture use by children with SLI.
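The distinction drawn above, between the rate of non-redundant combinations and the likelihood that any given combination is non-redundant, can be illustrated with a brief sketch in Python. The counts below are hypothetical and are not data from this study; the class name, field names and the per-100-words normalization are illustrative assumptions, not the coding or scoring scheme used here. Two speakers who produce the same number of words but gesture at different frequencies can differ in the rate of non-redundant gestures while having the same proportion of non-redundant gestures.

```python
from dataclasses import dataclass


@dataclass
class GestureCounts:
    """Hypothetical per-speaker tallies; names are illustrative only."""
    words: int          # words produced in the narrative
    gestures: int       # representational gestures coded
    non_redundant: int  # gestures conveying information absent from co-occurring speech

    def non_redundant_rate(self) -> float:
        """Non-redundant gestures per 100 words (assumed normalization)."""
        if self.words == 0:
            raise ValueError("rate undefined for a speaker who produced no words")
        return 100 * self.non_redundant / self.words

    def non_redundant_proportion(self) -> float:
        """Share of all gestures that are non-redundant."""
        if self.gestures == 0:
            raise ValueError("proportion undefined for a speaker who produced no gestures")
        return self.non_redundant / self.gestures


# Two hypothetical speakers with equal speech output but different gesture frequency.
low_gesturer = GestureCounts(words=500, gestures=20, non_redundant=5)
high_gesturer = GestureCounts(words=500, gestures=40, non_redundant=10)

print(low_gesturer.non_redundant_rate(), high_gesturer.non_redundant_rate())  # 1.0 2.0
print(low_gesturer.non_redundant_proportion(), high_gesturer.non_redundant_proportion())  # 0.25 0.25
```

Under these assumptions, the speaker who gestures more often shows a higher rate of non-redundant combinations with no change in the proportion of gestures that are non-redundant, which mirrors the group pattern described above for children with SLI relative to TD children.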

The present findings also differ from those of Hostetter and Alibali (2011), who showed that adults whose spatial abilities are stronger than their verbal abilities were more likely to produce non-redundant gesture–speech combinations than speakers who show the reverse pattern. We had expected children with SLI to be especially likely to produce non-redundant gestures, like adults with relatively stronger spatial than verbal abilities. One reason our results may differ is the diagnostic criteria commonly used in studies of SLI, including the current study. These criteria require children to exhibit significant difficulties in oral language together with nonverbal IQs in the normal range, but they do not require a significant discrepancy between verbal and nonverbal abilities, a criterion that was applied in some early SLI research (Leonard 1998). Our results may also differ because of the verbal measures used: the current study used a comprehensive language test as its measure of language ability, whereas Hostetter and Alibali (2011) used a verbal fluency test. Future studies should examine whether children with significant discrepancies between verbal and performance IQs, or children whose language weaknesses are more specific to verbal fluency, differ in the likelihood of producing non-redundant gestures, as the adults studied by Hostetter and Alibali did.

We also found that younger children were more likely than older children to produce non-redundant gesture–speech combinations. It appears that children, whether or not they have SLI, become better at integrating the meanings expressed in gesture and speech as they get older. Because this age effect held in both groups, it further suggests that the overall functioning of the gesture–speech system is similar in children with SLI and TD children. It is also consistent with the findings of Alibali et al. (2009), who showed that children are less redundant than adults. In the present study, younger children were less likely than older children to produce gestures that communicated exactly the same information as the words they were producing at that moment. Coordinating meaning in gesture and speech at the word level may be a difficult skill that develops over time and with language experience.

In conclusion, children with SLI produced significantly more representational gestures in a narrative task than their TD peers. However, when they did gesture, the likelihood that their gestures were non-redundant was similar for children with SLI and TD children. That children with SLI produce so many gestures suggests that they find gesture a natural and perhaps useful way to communicate their spatial and embodied knowledge. Future research is needed to determine whether their increased use of gesture fosters their ability to talk about this information, their ability to be understood by others, or both. Children with SLI may benefit from interventions that encourage them to use gestures as a means of communicating more effectively. Because gestures are hypothesized to facilitate lexical access (e.g., Rauscher et al. 1996) and the conceptual planning of utterances (e.g., Alibali et al. 2000), it is also conceivable that promoting gesture in these children may facilitate word finding and the conceptual packaging of spatial information.

What this paper adds

What is already known on the subject

Adult speakers whose spatial abilities outstrip their verbal abilities gesture more frequently than other speakers, and more often express content in gesture that is not present in their speech. Studies examining how often children with SLI (who exhibit weak verbal abilities in the absence of intellectual disability) gesture, and what content their gestures express, have yielded inconsistent results.

What this paper adds

In this sample of children retelling a wordless cartoon story, children with SLI produced gestures significantly more often than age-matched peers. This suggests that these children rely on gestures when communicating, perhaps to facilitate lexical access and the conceptual planning of speech. Alternatively, they may represent information in an embodied manner that bears traces of the way the information was acquired, rather than 'translating' it into the more abstract code of language.

Acknowledgements

This research was supported by the Spencer Foundation (Grant Number S133-DK59, with Julia Evans and Martha Alibali as co-principal investigators). The authors thank Kristin Ryan for work on coding the data. They are most grateful to the parents and children who participated in the study.

Footnotes

Declaration of interest: The authors report no conflicts of interest. The authors alone are responsible for the content and writing of the paper.

These cases were coded as redundant at the clause level in Alibali et al. (2009). In the current study, we chose not to report separately the speech–gesture pairs that were redundant at the clause level, but non-redundant at the word level, because they occurred infrequently in this sample.

References

- Alibali MW, Don LS. Children's gestures are meant to be seen. Gesture. 2001;1:113–127.

- Alibali MW, Evans JL, Hostetter AB, Ryan K, Mainela-Arnold E. Gesture–speech integration in narrative: are children less redundant than adults? Gesture. 2009;9:290–311. doi: 10.1075/gest.9.3.02ali.

- Alibali MW, Kita S, Young AJ. Gesture and the process of speech production: we think, therefore we gesture. Language and Cognitive Processes. 2000;15:593–613.

- Blake J, Myszczyszyn D, Jokel A, Bebiroglu N. Gestures accompanying speech in specifically language-impaired children and their timing with speech. First Language. 2008;28:237–253.

- Botting N, Riches N, Gaynor M, Morgan G. Gesture production and comprehension in children with specific language impairment. British Journal of Developmental Psychology. 2010;28:51–69. doi: 10.1348/026151009x482642.

- Brown L, Sherbenou R, Johnsen S. Test of Nonverbal Intelligence–2. PRO-ED; Austin, TX: 1990.

- Burgemeister B, Hollander Blum L, Lorge I. Columbia Mental Maturity Scale. Harcourt Brace Jovanovich; New York: 1972.

- Church RB, Goldin-Meadow S. The mismatch between gesture and speech as an index of transitional knowledge. Cognition. 1986;23:43–71. doi: 10.1016/0010-0277(86)90053-3.

- De Ruiter J-P. Can gesticulation help aphasic people speak, or rather, communicate? Advances in Speech Language Pathology. 2006;8:124–127.

- Evans JL, Alibali MW, McNeil NM. Divergence of verbal expression and embodied knowledge: evidence from speech and gesture in children with specific language impairment. Language and Cognitive Processes. 2001;16:309–331.

- Hill EL. Non-specific nature of specific language impairment: a review of the literature with regard to concomitant motor impairments. International Journal of Language and Communication Disorders. 2001;36:149–171. doi: 10.1080/13682820010019874.

- Hostetter AB, Alibali MW. Raise your hand if you're spatial: relations between verbal and spatial skills and representational gestures. Gesture. 2007;7:73–95.

- Hostetter AB, Alibali MW. Cognitive skills and gesture–speech redundancy. Gesture. 2011;11:40–60.

- Hostetter AB, Potthoff AL. Effects of personality and social situation on representational gesture production. Gesture. 2012;12:62–83.

- Iverson JM, Braddock BA. Gesture and motor skill in relation to language in children with language impairment. Journal of Speech, Language, and Hearing Research. 2011;54:72–86. doi: 10.1044/1092-4388(2010/08-0197).

- Kita S. Cross-cultural variation of speech-accompanying gesture: a review. Language and Cognitive Processes. 2009;24:145–167.

- Leonard LB. Children with Specific Language Impairment. MIT Press; Cambridge, MA: 1998.

- McNeill D. Hand and Mind: What Gestures Reveal about Thought. University of Chicago Press; Chicago, IL: 1992.

- Rauscher FH, Krauss RM, Chen Y. Gesture, speech and lexical access: the role of lexical movements in speech production. Psychological Science. 1996;7:226–231.

- Roid GH, Miller LJ. Leiter International Performance Scale, Revised. Stoelting; Wood Dale, IL: 1997.

- Semel E, Wiig E, Secord W. Clinical Evaluation of Language Fundamentals–Revised (CELF-R). Psychological Corporation; San Antonio, TX: 1987.

- Tomblin JB, Records NL, Zhang XY. A system for the diagnosis of specific language impairment in kindergarten children. Journal of Speech and Hearing Research. 1996;39:1284–1294. doi: 10.1044/jshr.3906.1284.