Abstract

Background:

This study evaluates the use of in-person focus groups and online engagement within the context of a large public engagement initiative conducted in rural Newfoundland.

Methods:

Participants were surveyed about their engagement experience and demographic information. Pre- and post-initiative key informant interviews were also conducted with organizers of the initiative.

Results:

Of the 111 participants in the focus groups, 97 (87%) completed evaluation surveys, as did 23 (88%) of the 26 online engagement participants. Overall, focus group participants were positive about their involvement, with 87.4% reporting that they would participate in a similar initiative. Online participation was below expectations, and these participants viewed their experience less positively than in-person participants. Organizers viewed the engagement initiative and the combined use of online and in-person engagement positively.

Conclusions:

This study presents a real-world example of the use of two methods of engagement. It also highlights the importance of the successful execution of whatever engagement mechanism is selected.


Introduction

Frameworks are available that identify the features organizers of public engagement initiatives need to consider (Chafe et al. 2009), but there is often little evidence to determine which options for structuring an initiative are preferable in which context. Despite the relative lack of empirical evidence for their effectiveness, electronic and Internet-based methods represent a new frontier in public engagement mechanisms. Online engagement can be a cost-effective method of engaging citizens in policy discussions (Weber et al. 2003). It has the potential to give greater numbers of people, or those who find it difficult to attend in-person engagement exercises, the ability to participate. However, given the lack of nonverbal cues, it has been suggested that online discussion may be less effective than face-to-face discussion (Min 2007). Other potential difficulties include the inaccessibility of online surveys to those without Internet service or with poor communication skills (Van Selm and Jankowski 2006), survey designs that are not always user-friendly (Nair and Adams 2009) and an inability to directly engage respondents in discussion to address potential misunderstandings (Puleston 2011).

This study evaluates two popular mechanisms of public engagement – a series of in-person, deliberative focus groups and an online survey – used within the Central Region Citizen Engagement Initiative (CRCEI). We evaluated the CRCEI for a number of reasons. The organizers were interested in having their initiative evaluated and were open to working closely with us. Because the initiative was structured by a third party, we could not implement an experimental design. However, the use of two types of engagement within a single real-world initiative offered the opportunity to gather evidence about the experience of designing and implementing these mechanisms within a similar context. Our analysis also provides a detailed account of the challenges and achievements of an engagement initiative conducted in rural Canada, and of the organizers' views on the effectiveness of the mechanisms used, which is likely to be useful for others planning similar initiatives.

Central Region Citizen Engagement Initiative

The CRCEI was developed and run by the CRCEI Working Group, which included members of Central Health, the local Regional Health Authority; the Government of Newfoundland and Labrador's Rural Secretariat, which is responsible for advancing the sustainability of rural regions of the province; Memorial University, which provided advice on the planning and evaluative components of the initiative; the College of the North Atlantic, a public college with campus locations throughout Newfoundland and Labrador; and the Gander-New-Wes-Valley Regional Council of the Rural Secretariat, a citizen-based advisory council. The CRCEI was precipitated by these partners' perceived need to learn more about citizens' perspectives on regional healthcare and the allocation of public resources across sectors in relation to rural sustainability. The CRCEI focused in particular on capturing the values of citizens in the region as they relate to resource allocation and priority-setting decisions.

The CRCEI had two components:

1. eleven in-person focus groups held throughout the region between February and March 2013; and

2. an online survey made available to every member of the public in central Newfoundland between May 1 and July 4, 2013.

For the CRCEI focus groups, participants were recruited by local employees of Central Health in each community where focus groups were held. The manner of recruitment varied slightly by facilitator, but usually included a personal invitation to selected members of the community. These local employees, with support from a person with training in public engagement from the province's Rural Secretariat, also served as the focus group facilitators. At the start of the focus group sessions, participants were provided with a conversation guide, which included various facts about health and education services in the Central region, information about general infrastructure and public services offered in the region and an overview of the region's demographics. The guide also included information about the various organizational values used in decision-making and provided participants with two scenarios, one in education and one in health, to enable them to deliberate in a small group about what choices they would make and why. During the focus group, participants were asked individually to list the values they considered most important in decision-making around the use of public resources. Participants were also asked what perspectives or concerns they thought public sector decision-makers should use to allocate services in the region.

The online survey was designed to mirror the focus group sessions. It was available to residents through the Central Health website (Central Health 2015) and consisted of a downloadable conversation guide and a survey with the same questions about values and perspectives/concerns as those used in the focus group sessions. The online survey was mentioned at the focus group sessions and was available on the front page of the Central Health website; however, no formal public outreach was conducted to inform the public about it.

Methods

The research team worked with the CRCEI Working Group to incorporate our evaluation into the CRCEI. While there is a clear need for increased evaluation of public engagement initiatives, the development and use of evaluative tools often lag behind (Abelson and Gauvin 2006). Many reasons have been cited for this deficiency, including the lack of rigorous and validated evaluative frameworks and the tendency for organizers to overlook the importance of evaluation (Abelson and Gauvin 2006; Mitton et al. 2009; Rowe and Frewer 2005). Among the most recognizable frameworks is one developed by Rowe and Frewer (Abelson and Gauvin 2006; Abelson et al. 2010; Rowe and Frewer 2000), which lists nine evaluative criteria for public engagement evaluation: independence, representativeness, early involvement, influence, transparency, resource accessibility, task definition, structured decision-making and cost-effectiveness. Faced with limited time for administering our evaluation within the CRCEI, the research team, in consultation with the CRCEI Working Group, modified the Rowe and Frewer framework to focus on five key elements and included two additional criteria: likelihood to participate again and expectations of the organizers. Participants' likelihood of participating in a similar initiative correlates with increased public confidence in their own ability to participate in a public engagement initiative (Warburton et al. 2007). It also reflects the overall feeling participants have about the initiative (Gregory et al. 2008). Organizers' expectations, and whether they were met, illustrate how the organizers viewed the initiative and provide an indication of its potential organizational impact (Kathlene and Martin 1991; Rowe and Frewer 2000). If the initiative is well run, the organizers will rate the process favourably and be more likely to embrace the recommendations stemming from the engagement (Rowe and Frewer 2000; Warburton 2008). 
Despite the unsystematic recruitment process used in the CRCEI and the potential for an unrepresentative sample of participants, the criterion of representativeness was included for its importance in understanding the impacts of the different mechanisms for engagement.

The CRCEI Working Group preferred the use of a survey incorporated into the in-person and online sessions over more resource-intensive qualitative methodologies when collecting data from the participants in the CRCEI. The research team developed surveys administered to all participants based on the five elements identified by the Rowe and Frewer framework and two additional elements identified by the research team. Surveys were reviewed and approved by both the research team and the CRCEI Working Group prior to being used.

Surveys had two components, focusing on:

• participants' experience with the engagement initiative; and

• demographic information.

The surveys completed by focus group and online participants were similar, except for minor wording differences to reflect the different contexts (Appendix 1). For participants in focus group sessions, surveys were administered via the TurningPoint 5.0 polling technology (Turning Technologies 2013) with the assistance of the focus group facilitator. This polling technology enables each participant to anonymously register their survey responses via a wireless transmitter. Online participants completed a survey instrument hosted on the FluidSurveys™ website (FluidSurveys 2014).

The criterion of “representativeness” was evaluated by comparing demographic data reported by participants with available census data for the region (Community Accounts 2013, 2008; Statistics Canada 2013). The criteria of “task definition,” “independence,” “resource accessibility,” “fairness” and “likelihood to participate again” were evaluated using survey responses. The criterion of the “expectations of the organizers” was evaluated based on key informant interviews conducted with members of the CRCEI Working Group.

All members of the Working Group were asked to participate in an interview before the start of the initiative. Those who completed a pre-initiative interview were also asked to complete another interview after the CRCEI was complete. Similar questions were discussed in both interviews (Appendix 2), which allowed for an examination of any changes in response over the course of the CRCEI (Hermanowicz 2013). All interviews were conducted by one researcher (PW) and were recorded and professionally transcribed. Field notes were also taken during and after each interview and included in the analysis. The data were analyzed using a thematic content analysis approach (Green and Thorogood 2009), with the aim of identifying issues and themes that the interview participants discussed. 
Initially, the interview transcripts were reviewed and notes and general codes were developed by the primary author (PW). Codes were then further refined and sub-categories were developed to represent the various themes present in the interviews. The coding analysis was regularly reviewed by another author (RC) to validate consistency. Analysis of the interview data was then discussed by all authors to confirm relevant findings. Ethics approval for the project was obtained from the Newfoundland and Labrador Health Research Ethics Authority (2013).

Results

Table 1 lists the communities of participants in the CRCEI in either the focus group or online sessions. For the 111 focus group participants, the survey response rate varied, with 108 (97%) completing the participant experience component and 97 (87%) completing both the participant experience and demographic components. Out of the 26 people who completed the CRCEI's online survey, 23 (88%) completed both the participant experience and demographic components of our evaluation survey.
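The completion rates quoted above follow directly from the reported counts. As a minimal sketch (the helper name `response_rate` is ours for illustration and is not part of the study's tooling), the arithmetic can be reproduced as follows:

```python
def response_rate(completed: int, total: int) -> float:
    """Return the completion rate as a percentage, rounded to one decimal."""
    if total <= 0:
        raise ValueError("total must be positive")
    return round(100 * completed / total, 1)


# Counts taken from the Results section above.
focus_experience = response_rate(108, 111)  # experience component only
focus_both = response_rate(97, 111)         # experience + demographic components
online_both = response_rate(23, 26)         # online, both components

print(focus_experience, focus_both, online_both)
```

The text reports these figures rounded to whole percentages.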

Table 1.

Communities and number of focus group and online participants

| Community | Number of focus group participants | Number of online participants |
|---|---|---|
| Baie Verte | 10 | |
| Botwood | 5 | |
| Eastport | 10 | |
| Fogo Island | 13 | |
| Gander | 5 | 3 |
| Glovertown | No focus group | 1 |
| Grand Falls-Windsor | 10 | 4 |
| Greenspond | No focus group | 1 |
| Harbour Breton | No focus group | 1 |
| Lewisporte | 20 | 1 |
| New Wes Valley | No focus group | 2 |
| New-Wes-Valley | 8 | |
| Springdale | 11 | 7 |
| St. Alban's | 12 | |
| Twillingate | 7 | |
| Not identified | | 6 |
| Total | 111 | 26 |

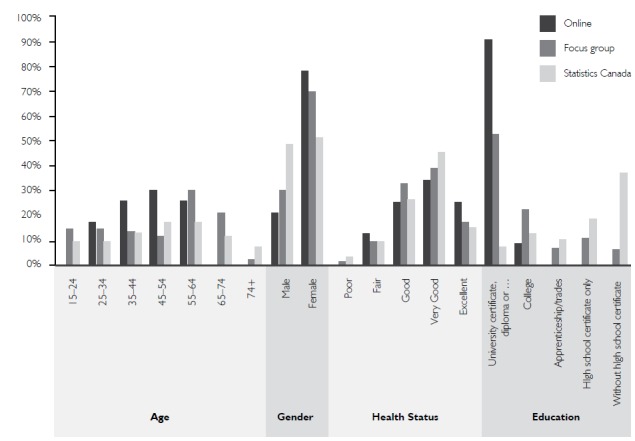

Representativeness

Comparing demographic survey results with data from Statistics Canada, we found that both online and focus group participants were fairly unrepresentative of the adult population in the central region of Newfoundland (Figure 1). While age breakdown and self-reported health status were comparable, noticeable differences emerged in participants' level of education and gender. Participants in both mechanisms, and online participants in particular, were much better educated than the population average: 91.3% of online participants and 52.6% of focus group participants reported a university education, compared with 7.8% of the region's adult residents. Both engagement mechanisms also showed a female bias, with 78.3% of online participants and 69.7% of focus group participants being female, compared with 51.0% of the population according to Statistics Canada data. It is also of note that no one under the age of 24 or over the age of 64 completed the online survey, even though these age groups make up 10.2% and 19.5% of the region's population, respectively.

Figure 1.

Demographic results from online and focus group sessions compared with Statistics Canada data
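To make the size of these differences concrete, the gaps between each sample and the regional figures can be expressed in percentage points. The sketch below uses only the percentages reported in the text; the dictionary keys and the helper `gap_vs_region` are illustrative names, not part of the study.

```python
# Percentages reported in the Representativeness paragraph.
# Keys and helper names are illustrative assumptions.
reported = {
    "university_education": {"online": 91.3, "focus_group": 52.6, "region": 7.8},
    "female": {"online": 78.3, "focus_group": 69.7, "region": 51.0},
}


def gap_vs_region(measure: str, group: str) -> float:
    """Percentage-point difference between a participant group and the region."""
    row = reported[measure]
    return round(row[group] - row["region"], 1)


gaps = {
    (measure, group): gap_vs_region(measure, group)
    for measure in reported
    for group in ("online", "focus_group")
}

for (measure, group), gap in gaps.items():
    print(f"{measure:22s} {group:12s} {gap:+.1f} points")
```

This is a descriptive comparison only; it is not a statistical test of representativeness.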

Participant Experience Criteria

Table 2 displays the percentage agreement (the sum of “agree” and “strongly agree” responses) and the percentage disagreement (the sum of “disagree” and “strongly disagree” responses) for the other evaluative components. Focus group participants were more positive about their engagement experience than online participants across all of the evaluative criteria measured by the participant experience components of our surveys. Focus group participants' positive ratings ranged from 75% to 96.2% for the five components of their experience. In contrast, the highest positive rating given to any component by online participants was 43.4%, for the independence of the process. Online participants gave two components, task definition and resource accessibility, higher negative scores than positive scores, highlighting their poor experience.

Table 2.

Participant experience for focus group and online participants

| Evaluative component | Statement to which participants were asked to respond | Focus group percentage agreement (percentage disagreement) | Online percentage agreement (percentage disagreement) |
|---|---|---|---|
| Task definition | I feel that the nature and scope of this citizen engagement session has been well-defined | 75 (10.6) | 30.5 (52.2) |
| Independence | I feel that today's session was run in an unbiased way | 96.2 (0.96) | 43.4 (26.1) |
| Resource accessibility | I feel that the sponsors of today's session provided me with enough time and information, to enable me to take part in the discussion | 92.3 (0.96) | 30.5 (47.8) |
| Fairness | I feel that this citizen engagement session allowed me equal opportunity to provide input | 92.3 (3.9) | 39.1 (21.8) |
| Likelihood to participate again | I would participate in a similar exercise such as today's session again if the opportunity arises | 87.4 (5.8) | 39.1 (21.7) |
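The observation that online participants rated two components more negatively than positively can be checked directly against Table 2. The following is a minimal sketch that transcribes the table's (agreement, disagreement) pairs; the variable and function names are our own illustrative choices.

```python
# (percentage agreement, percentage disagreement) pairs transcribed from Table 2.
table2 = {
    "Task definition": {"focus_group": (75.0, 10.6), "online": (30.5, 52.2)},
    "Independence": {"focus_group": (96.2, 0.96), "online": (43.4, 26.1)},
    "Resource accessibility": {"focus_group": (92.3, 0.96), "online": (30.5, 47.8)},
    "Fairness": {"focus_group": (92.3, 3.9), "online": (39.1, 21.8)},
    "Likelihood to participate again": {"focus_group": (87.4, 5.8), "online": (39.1, 21.7)},
}


def net_negative_components(group: str) -> list[str]:
    """Components where disagreement exceeds agreement for the given group."""
    return [
        component
        for component, scores in table2.items()
        if scores[group][1] > scores[group][0]
    ]


online_negative = net_negative_components("online")
focus_negative = net_negative_components("focus_group")
print(online_negative)
print(focus_negative)
```

For the online group this returns task definition and resource accessibility; for the focus groups it returns an empty list.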

Expectations of the Organizers

Six members of the CRCEI Working Group completed pre- and post-interviews. In interviews before the start of the engagement initiative, several dominant themes emerged for the organizers. Most believed that the online process was more of an experiment and would not yield the same depth of discussion as the focus group sessions.

“I'm thinking, in my head, that you would get more of that [useful information] from that dialogue between people, than you would get when an individual is just thinking about their own … their own thoughts on the issue.” (Study Participant 5)

Key informants generally anticipated that the online component would be more representative than the focus group sessions, based on the assumption that the online technology would be accessible to more citizens. It was also generally felt that there would be more participants in the online survey than in the in-person focus groups.

“Well, from an online perspective, my expectation is that we'll get a broad overview of public … public input.” (Study Participant 4)

Key informants brought up the idea of learning and building on the initiative several times during the interviews. This was important, as many of the partners involved in the initiative had limited experience with public engagement and saw the CRCEI as an opportunity for their organization to further develop this ability. They also viewed the CRCEI as an opportunity to develop and foster a relationship with the public through information sharing.

“One of our objectives was to do somewhat of education or awareness to the public about decision-making and the difficulty and how decisions are made.” (Study Participant 5)

During the post-initiative interviews, key informants' views changed regarding representativeness and the online component. This change was most likely owing to the lower than expected number of people who completed the online survey; this point was discussed by one participant who noted the readiness of the population to use the online technology.

“Is it just at this point in time a reflection of our population and readiness for this sort of activity?” (Study Participant 2)

Despite these concerns, one remedy suggested for the unrepresentativeness of the participating public was the use of social media to increase awareness among youth.

“I think you'd need to use more of a social media, things like Twitter and Facebook and tweets and all this different kind of stuff that kids are into, because there's a lot of people out there that we're not reaching and we know that.” (Study Participant 2)

Nonetheless, they felt that there were still strengths worth discussing and that there were lessons learned from the initiative. Of the major strengths discussed by interviewees, the success of the focus group format was dominant.

“When you have a situation where you can sit one-on-one in person with people, and have a round of discussions around things that you know, sort of occur to them as they are listening to others speak, you end up getting richer and deeper insights into, you know, what may be happening.” (Study Participant 2)

Key informants also viewed the collaboration between the various partners involved in the CRCEI as a major success worth touting. Several described the CRCEI as a rare successful instance of regional collaboration.

“The strength I think of the entire initiative was that, um, it was a partnership approach. Um … we had multi partners throughout this process.” (Study Participant 3)

However, many weaknesses were also discussed by key informants, including the usability of the information collected from the online component owing to the limited number of participants and the issue of representativeness.

“The actual deliverable, in terms of what the true values that citizens have and all those types of things that were of interest questions to the partners … I'm reserving judgment yet on whether or not we could or probably should utilize that information because I don't personally feel it is representative of the population.” (Study Participant 3)

Overall, several of the key informants were happy with the way the initiative proceeded, even if they were slightly hesitant regarding the use of online engagement.

“I think we got some good engagement, some good feedback, some themes. I'm really happy about that, but I really know we'd have been a lot richer if we could have gotten more online [participants] to have a more representative sample and more input, to add to the data.” (Study Participant 1)

Discussion

This study evaluated two mechanisms of engagement, online and in-person, used in the CRCEI. We found that participants in both mechanisms were unrepresentative of the population of Central Newfoundland in certain respects, particularly level of education and gender. This result is not surprising, given the recruitment strategy and the fact that public engagement initiatives often include an unrepresentative sample of the public in terms of gender, age, income and employment in the sector being engaged (Lomas and Veenstra 1995). Other online surveys have also shown, as in our study, an over-representation of highly educated participants (Duda and Nobile 2010; Rowe et al. 2006). In fairness, the organizers of the CRCEI did not explicitly attempt to ensure that the initiative was representative, using a direct invitation to certain members of the community and an online survey open to everyone. However, it is interesting that, after the online survey drew far fewer responses than expected, some of the organizers pointed to the lack of representativeness resulting from the small number of respondents as a reason to question the results. It may be that being as representative as possible is as important for deflecting criticism of, or attempts to undermine, the findings of an engagement initiative as it is for addressing concerns about democratic need.

Participants in the focus groups were much more satisfied with their participation than those who participated online across all five criteria. These results are somewhat surprising, given some of the suspected benefits of engaging people online and that the information and tasks given to both sets of participants were closely modelled after each other. In terms of task definition, 75% of focus group participants, but only 30.5% of online participants, agreed with the statement that the scope of the initiative was well-defined. The instructions and information given to each group were designed to be the same. The difference here may be access to the focus group facilitator and other focus group participants, and the rather non-interactive presentation of the information online, which may play a strong role in how participants feel about online surveying (Puleston 2011). It is important that the issues during a public engagement initiative are framed in a manner easy for the public to understand (Sheedy 2008); this may be a particularly pertinent consideration when using online engagement where participants are without access to a facilitator.

Resources for a public engagement initiative can include information, material, time and human resources (Rowe and Frewer 2000). While focus group participants were provided with a copy of the conversation guide prior to the session, online participants were able to access the conversation guide beforehand and spend as much time as they wished reviewing the material and giving their responses. The fact that 92.3% of focus group participants, but only 30.5% of online participants, felt that they were given enough time and information likely reflects either that the online participants were unsure about the tasks they were being asked to perform and had no one to turn to for clarification, or that there was a “halo effect,” in which raters simply select the same evaluation category throughout an evaluation (McLaughlin et al. 2009); in this case, rating all of the evaluative criteria negatively to reflect their overall negative experience.

Given the rural context of the CRCEI, it was important to reach citizens across vast geographical distances. The organizers felt that, although the online process was an experiment in online engagement, the online technology would give everyone the opportunity to participate, an important consideration in a rural context. However, owing to the lower than expected number of online participants, their expectations around the use of online engagement changed, as reflected in the second round of interviews. After the initiative, organizers recognized that minimal advertising and recruitment efforts had been made for the online survey. They discussed innovative recruitment strategies, including the use of social media, as ways to address this issue. Social media has been used successfully in a similar online engagement initiative in Northern Ontario (Shields et al. 2010). While the focus groups of the CRCEI were viewed as a success by participants and organizers, it was noted during the interviews that it would have been impractical to provide a focus group for every area. For this reason, organizers recommended using both mechanisms of engagement if they were to conduct a similar initiative in the future.

This research project had a number of limitations. Evaluations of public engagement initiatives can be categorized as either process- or outcome-based. Process evaluations focus on how the initiative was conducted. Outcome evaluations focus on the impact of the public's input (Abelson and Gauvin 2006; Weiss 1998). While evaluating the separate and combined impacts of the two mechanisms would have added an important component to our understanding of them, the evaluation of the CRCEI in this project was limited to a process-based evaluation, owing partially to time constraints of the research team and delays in the public reporting of results. Similarly, while our evaluation would have been further strengthened by extending the qualitative interviews to participants in the focus groups and online sessions, the organizers felt that surveys were sufficient to be incorporated into the CRCEI. The project was conducted within a particular social and institutional context. While nothing arose within the project to suggest that the conclusions would not apply to other contexts, particularly in rural areas, caution is needed in generalizing the findings. Finally, the small number of online participants limited the ability to conduct any statistical analysis on the focus group and online results.

Conclusion

This study offers insight into the use of concurrent engagement mechanisms and provides lessons for organizers of similar initiatives in the future. The use of the two engagement mechanisms allowed the organizers to use focus groups to reach citizens near larger centres, while the online component was designed partly so that residents in hard-to-reach locales, or those who were not invited to the focus groups, would also have an opportunity to provide input. Organizers, who were initially hopeful that online engagement would allow a greater proportion of a rural population to participate, ultimately questioned whether the results would be used by decision-makers because of the low participation. While the organizers interviewed were disappointed with some aspects of the initiative, they discussed ways of improving the online experience and reiterated their support for using two mechanisms of engagement in future initiatives.

Contributor Information

Peter Wilton, Public Engagement Advisor, Nova Scotia Health Authority, Halifax, NS.

Doreen Neville, Associate Professor, Division of Community Health and Humanities, Memorial University of Newfoundland, St. John's, NL.

Rick Audas, Associate Professor, Division of Community Health and Humanities, Memorial University of Newfoundland, St. John's, NL.

Heather Brown, Vice President, Rural Health, Central Health.

Roger Chafe, Associate Professor, Division of Pediatrics, Memorial University of Newfoundland, St. John's, NL.

References

- Abelson J., Forest P.-G., Casebeer A., Mackean G., Maloff B., Musto R. et al. 2004. “Towards More Meaningful, Informed and Effective Public Consultation”. Final Report to the Canadian Health Services Research Foundation. Retrieved November 21, 2005. <www.chsrf.ca/final_research/index_e.php>.

- Abelson J., Gauvin FP. 2006. Assessing the Impacts of Public Participation: Concepts, Evidence, and Policy Implications. Ottawa: Canadian Policy Research Networks. Retrieved May 15, 2014. <http://skat.ihmc.us/rid=1JGD4CQS2-16X32NW-1KMD/SEMINAL%20Assessing%20the%20Impacts%20%20OF%20 PUBLIC%20PARTICIPATION%20Concepts,%20Evidence,%20and.pdf>.

- Abelson J., Montesanti S., Li K., Gauvin FP., Martin E. 2010. “Effective Strategies for Interactive Public Engagement in the Development of Healthcare Policies and Programs”. Canadian Health Services Research Foundation. Retrieved May 23, 2014. <www.cfhi-fcass.ca/Libraries/Commissioned_Research_Reports/Abelson_EN_FINAL.sflb.ashx>.

- Central Health. 2015. “Welcome to Central Health”. Retrieved May 27, 2015. <http://centralhealth.nl.ca/>.

- Chafe R., Neville D., Rathwell T., Deber R. 2009. “A Framework for Involving the Public in Healthcare Coverage and Resource Allocation Decisions”. Healthcare Management Forum 21(4): 6–13.

- Community Accounts. 2008. “Central Health Authority: Census 2006: Highest Level of Schooling”. Retrieved June 5, 2014. <http://nl.communityaccounts.ca/table.asp?_=vb7En4WVgaSzyHNYnJvJwKCus6SfY8W4q5OruZKoh4yDXGeAyb2atJ7Skb2Iz8Kf>.

- Community Accounts. 2013. “Central Health Authority: Canadian Community Health Survey, 2009–2010: Health Status of Individuals”. Retrieved June 5, 2014. <http://nl.communityaccounts.ca/table.asp?_=vb7En4WVgaSzyHNYnJvJwKCus6SfY8W4q5OruZCnkM2Ei2OKiZM_>.

- Duda M.D., Nobile J.L. 2010. “The Fallacy of Online Surveys: No Data Are Better Than Bad Data.” Human Dimensions of Wildlife: An International Journal 15(1): 55–64.

- FluidSurveys. 2014. “About FluidSurveys”. Retrieved May 28, 2014. <http://fluidsurveys.com/about/>.

- Green J., Thorogood N. 2009. Qualitative Methods for Health Research, 2nd ed., Thousand Oaks, CA: Sage.

- Gregory J., Hartz-Karp J., Watson R. 2008. “Using Deliberative Techniques to Engage the Community in Policy Development.” Australia and New Zealand Health Policy 5(16). doi:10.1186/1743-8462-5-16.

- Hermanowicz J.C. 2013. “The Longitudinal Qualitative Interview.” Qualitative Sociology 36(2): 189–208.

- Kathlene L., Martin J.A. 1991. “Enhancing Citizen Participation: Panel Designs, Perspectives, and Policy Formation.” Journal of Policy Analysis and Management 10(1): 46–63.

- Lomas J., Veenstra G. 1995. “If You Build It, Who Will Come?” Policy Options 16(9): 37–40.

- McLaughlin K., Vitale G., Coderre S., Violato C., Wright B. 2009. “Clerkship Evaluation–What Are We Measuring?” Medical Teacher 31(2): e36–39.

- Min S.J. 2007. “Online vs. Face-to-Face Deliberation: Effects on Civic Engagement.” Journal of Computer-Mediated Communication 12(4): 1369–87.

- Mitton C., Smith N., Peacock S., Evoy B., Abelson J. 2009. “Public Participation in Health Care Priority Setting: A Scoping Review.” Health Policy 91(3): 219–28.

- Nair C.S., Adams P. 2009. “Survey Platform: A Factor Influencing Online Survey Delivery and Response Rate.” Quality in Higher Education 15(3): 291–96.

- Puleston J. 2011. “Improving Online Surveys.” International Journal of Market Research 53(4): 557–60.

- Rowe G., Frewer L.J. 2000. “Public Participation Methods: A Framework for Evaluation.” Science, Technology, & Human Values 25(1): 3–29.

- Rowe G., Marsh R., Frewer L.J. 2004. “Evaluation of a Deliberative Conference.” Science, Technology, & Human Values 29(1): 88–121.

- Rowe G., Frewer L.J. 2005. “A Typology of Public Engagement Mechanisms.” Science, Technology, & Human Values 30(2): 251–90.

- Rowe G., Poortinga W., Pidgeon N. 2006. “A Comparison of Responses to Internet and Postal Surveys in a Public Engagement Context.” Science Communication 27(3): 352–75.

- Sheedy A. 2008. Handbook on Citizen Engagement: Beyond Consultation. Ottawa: Canadian Policy Research Networks. Retrieved May 15, 2014. <www.cprn.org/documents/49583_EN.pdf>.

- Shields K., DuBois-Wing G., Westwood E. 2010. “Share Your Story, Shape Your Care: Engaging the Diverse and Disperse Population of Northwestern Ontario in Healthcare Priority Setting.” Healthcare Quarterly 13(3): 86–90.

- Statistics Canada. 2013. “Table 109-5325 – Estimates of Population (2006 Census and Administrative Data), by Age Group and Sex for July 1st, Canada, Provinces, Territories, Health Regions (2013 boundaries) and Peer Groups, Annual (number), CANSIM (database).” Retrieved June 5, 2014. <www5.statcan.gc.ca/cansim/a05?lang=eng&id=1095325>.

- Turning Technologies. 2013. “TurningPoint”. Retrieved May 28, 2014. <www.turningtechnologies.com/polling-solutions/turningpoint>.

- Van Selm M., Jankowski N.W. 2006. “Conducting Online Surveys.” Quality & Quantity 40(3): 435–56.

- Warburton D., Wilson R., Rainbow E. 2007. “Making a Difference: A Guide to Evaluating Public Participation in Central Government”. Retrieved May 15, 2014. <http://participationcompass.org/media/68-Making_a_Difference_.pdf>.

- Warburton D. 2008. “Evaluation of Defra's Public Engagement Process on Climate Change.” Shared Practice. Retrieved May 23, 2014. <www.sharedpractice.org.uk/Downloads/Defra_CC_evaluation_report.pdf>.

- Weber L.M., Loumakis A., Bergman J. 2003. “Who Participates and Why?: An Analysis of Citizens on the Internet and the Mass Public.” Social Science Computer Review 21(1): 26–42.

- Weiss C.H. 1998. Evaluation, 2nd ed., Upper Saddle River: Prentice Hall.