Abstract

Objectives

To evaluate an external quality assessment (EQA) program for human immunodeficiency virus (HIV) rapid diagnostic testing run by the Haitian National Public Health Laboratory (French acronym: LNSP). Acceptable performance was defined as a proficiency testing (PT) score of 80% or above.

Methods

The PT database was reviewed and analyzed to assess the testing performance of the participating laboratories and the impact of the program over time. A total of 242 laboratories participated in the EQA program from 2006 through 2011; participation increased from 70 laboratories in 2006 to 159 in 2011.

Results

In 2006, 49 (70%) laboratories had a PT score of 80% or above; by 2011, 155 (97.5%) laboratories were proficient (P < .05).

Conclusions

The EQA program for HIV testing helps ensure the quality of testing and has allowed the LNSP to document improvements in the quality of HIV rapid testing over time.

Keywords: HIV rapid tests, External quality assessment, Proficiency testing

Access to human immunodeficiency virus (HIV) diagnosis and treatment has expanded rapidly in Haiti. National efforts to scale up care and treatment services for individuals with HIV depend on efficient laboratory services, including HIV testing, which is the main entry point to care and treatment. Despite increased access to HIV counseling and testing, most people living with HIV in low- and middle-income countries remain unaware of their serostatus.1 Although available rapid diagnostic tests (RDTs) for HIV have high sensitivity and specificity when performed correctly, incorrect use can produce erroneous results with serious consequences. Even assuming an error rate as low as 0.5%, an estimated 430,000 of the 86 million people who received HIV testing through the US President's Emergency Plan for AIDS Relief would have received erroneous results.2 Errors can occur at any stage of the testing process: the preanalytical stage (eg, storage of test kits outside the recommended temperatures, incorrect specimen collection, and use of expired test kits), the analytical stage (eg, deviation from testing procedures, misinterpretation of results, and poor test performance), and the postanalytical stage (eg, documentation errors).3,4
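The 430,000 estimate is a single multiplication of the number of people tested by an assumed constant per-test error rate. A minimal Python check (the helper name `expected_erroneous_results` is hypothetical) makes the arithmetic explicit and shows how quickly the count grows with the error rate:

```python
def expected_erroneous_results(people_tested: int, error_rate: float) -> int:
    """Expected number of erroneous results, assuming the same error rate for every test."""
    return round(people_tested * error_rate)


# Figures quoted in the text: 86 million people tested, 0.5% error rate.
print(expected_erroneous_results(86_000_000, 0.005))  # 430000

# Illustrative sensitivity check at other (hypothetical) error rates.
for rate in (0.001, 0.005, 0.01):
    print(f"{rate:.1%}: {expected_erroneous_results(86_000_000, rate):,}")
```

Because the relationship is linear, every tenth of a percentage point of error among 86 million tests corresponds to 86,000 people with an incorrect result.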

External quality assessment (EQA) is critical for assessing laboratory performance and ensuring the accuracy and reliability of laboratory results.5 The World Health Organization recommends a three-phase approach to implementing HIV RDTs: (1) evaluating HIV rapid test kits, (2) piloting the selected algorithm, and (3) monitoring the quality of testing through an EQA program. Under these guidelines, implementation of a national HIV testing algorithm should be accompanied by ongoing EQA to monitor performance.6 The key components of HIV EQA are (1) retesting, (2) onsite technical assistance, and (3) a proficiency testing (PT) program that evaluates technical competence.7 A PT program is a periodic check on testing processes and laboratory performance,8 in which an external provider sends samples of unknown status to a set of laboratories for testing; the results from all laboratories are then analyzed, compared with those of the reference laboratory, and reported back.
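Scoring a PT panel amounts to counting how many reported results agree with the reference results. The sketch below assumes a score expressed as percent concordance with the reference, and uses a hypothetical six-sample panel (three positive, three negative); the function name and result labels are illustrative only.

```python
from typing import Sequence


def pt_score(reported: Sequence[str], reference: Sequence[str]) -> float:
    """Percent of panel samples whose reported result matches the reference result."""
    if not reference or len(reported) != len(reference):
        raise ValueError("reported and reference results must be non-empty and equal in length")
    matches = sum(r == ref for r, ref in zip(reported, reference))
    return 100 * matches / len(reference)


# Hypothetical six-sample panel: three HIV-positive, three HIV-negative samples.
reference = ["pos", "pos", "pos", "neg", "neg", "neg"]
one_miss = ["pos", "pos", "neg", "neg", "neg", "neg"]   # one false negative
two_miss = ["pos", "pos", "neg", "neg", "neg", "pos"]   # one false negative, one false positive

print(round(pt_score(reference, reference), 1))  # 100.0
print(round(pt_score(one_miss, reference), 1))   # 83.3
print(round(pt_score(two_miss, reference), 1))   # 66.7
```

With six samples, possible scores move in steps of about 16.7 percentage points, so an 80% pass threshold allows at most one discordant result per panel.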

In Haiti, most HIV testing is performed by trained technicians in a laboratory setting using RDTs. The first step in expanding HIV RDTs was to implement and validate a national algorithm. In 2006, Haiti adopted a testing algorithm based on two sequential rapid tests.9 Three tests were approved: two rapid immunochromatographic assays—Abbott Determine HIV1/2 (Abbott Laboratories, Abbott Park, IL) or OraQuick ADVANCE Rapid HIV-1/2 (OraSure Technologies, Bethlehem, PA)—for use as a screening test and one rapid latex agglutination assay, Trinity Biotech Plc Capillus HIV-1/HIV-2 (Trinity Biotech Plc, Wicklow, Ireland), for use as a confirmatory test. In 2011, the national algorithm was reviewed, and Capillus was replaced by a rapid immunochromatographic assay, HIV (1+2) Antibody (Colloidal Gold) (KHB; Shanghai Kehua Bio-engineering, Shanghai, China).
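The decision logic of a two-test serial algorithm can be sketched as follows. This is a minimal illustration, assuming the standard serial convention that the confirmatory test is run only on specimens that are reactive on the screening test. How Haiti's algorithm resolved discordant results is not described here, so the `inconclusive` outcome is an assumption, and the names `Result` and `interpret_serial` are hypothetical.

```python
from enum import Enum
from typing import Optional


class Result(Enum):
    REACTIVE = "reactive"
    NONREACTIVE = "nonreactive"


def interpret_serial(screening: Result, confirmatory: Optional[Result] = None) -> str:
    """Interpret a two-test serial HIV rapid testing algorithm.

    Test 1 (screening; Determine or OraQuick in the 2006 algorithm) is run on every
    specimen. Test 2 (confirmatory; Capillus, replaced by KHB in 2011) is run only
    when test 1 is reactive.
    """
    if screening is Result.NONREACTIVE:
        return "negative"  # no confirmatory test needed
    if confirmatory is None:
        raise ValueError("a reactive screening result requires a confirmatory test")
    if confirmatory is Result.REACTIVE:
        return "positive"
    # ASSUMPTION: the text does not say how discordant results were resolved;
    # this sketch simply reports them as inconclusive.
    return "inconclusive"


print(interpret_serial(Result.NONREACTIVE))                   # negative
print(interpret_serial(Result.REACTIVE, Result.REACTIVE))     # positive
print(interpret_serial(Result.REACTIVE, Result.NONREACTIVE))  # inconclusive
```

Running the second test only on screening-reactive specimens is what makes a serial algorithm economical: in settings where most specimens are nonreactive, most need only a single test.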

Following validation by the National Public Health Laboratory (French acronym: LNSP), the HIV RDTs were made available throughout the 10 departments (states/provinces) of the country. In accordance with its essential role in ensuring the quality of HIV tests nationally, the LNSP developed guidelines for test performance, provided rigorous training to guarantee high quality and standardized testing, and established an EQA program for laboratories within the network.

We reviewed the implementation of the EQA program and analyzed data from 2006 through 2011 to evaluate the quality of HIV testing and changes in PT performance over time.

Materials and Methods

Implementation of the PT Program

In 2006, the LNSP conducted an initial training on the implementation of the national algorithm for HIV testing, the importance of the HIV RDT EQA, and the procedures for testing the PT panels. Laboratories from all sectors (public, private, and mixed public/private) in the country were invited to participate in the training and EQA program. Following the initial training, PT panels (described in detail below) were sent out free of charge to the laboratories using cold chain transport. Laboratories were instructed to complete the PT testing within 4 weeks of receiving the PT panels. Each participating laboratory recorded the results of proficiency testing on paper forms along with information about the date of testing and the tests performed (eg, test kits used and number of tests done). These PT results were collected by LNSP staff and recorded in a central database at the LNSP. Panels were distributed once a year in 2006, 2007, and 2008. In 2009, the distribution frequency was increased to twice a year, but because of logistical constraints, panels were distributed once per year in 2010 and 2011. Laboratories with PT performance less than 80% were visited by senior technicians and assessed (eg, for availability of test kits and proper test performance) and received follow-up training on RDT use, the importance of following the national algorithm for HIV RDTs, and Good Laboratory Practices.
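The two operational rules in this paragraph, testing within 4 weeks of receiving the panel and follow-up for scores below 80%, can be expressed as a simple review step over returned PT forms. This is a hypothetical sketch: the record fields, laboratory identifiers, dates, and flag wording are invented for illustration and do not describe the LNSP database.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import List

PASS_THRESHOLD = 80.0                # scores below this trigger a follow-up visit
TESTING_WINDOW = timedelta(weeks=4)  # panels should be tested within 4 weeks of receipt


@dataclass
class PTReturn:
    lab_id: str      # hypothetical identifier
    received: date   # date the panel arrived at the laboratory
    tested: date     # date of testing recorded on the paper form
    score: float     # percent concordance with reference results


def review(record: PTReturn) -> List[str]:
    """Return follow-up flags for one returned PT form (empty list = no action)."""
    flags = []
    if record.tested - record.received > TESTING_WINDOW:
        flags.append("tested outside 4-week window")
    if record.score < PASS_THRESHOLD:
        flags.append("onsite visit and retraining")
    return flags


returns = [
    PTReturn("LAB-A", date(2010, 3, 1), date(2010, 3, 15), 100.0),
    PTReturn("LAB-B", date(2010, 3, 1), date(2010, 4, 12), 83.3),
    PTReturn("LAB-C", date(2010, 3, 1), date(2010, 3, 20), 66.7),
]
for r in returns:
    print(r.lab_id, review(r) or "no action")
```

Keeping the threshold and window as named constants makes it easy to see which rule produced each flag if either criterion changes in a later round.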

PT Panel Description

In 2006 and 2007, panels of six HIV seroconversion serum samples (three positive and three negative) were purchased (seroconversion panels; ZeptoMetrix, Buffalo, NY) and distributed nationally by the LNSP. These panels were nonreactive for hepatitis B surface antigen, human T-lymphotropic viruses 1 and 2, and syphilis. In 2008, to reduce transport costs and in line with evolving recommendations, the liquid PT panels were replaced by panels of dried tube specimens (DTS) prepared at the LNSP according to the method described by Parekh et al.10 Briefly, discarded and deidentified units of blood from the national blood transfusion center were characterized using an enzyme-linked immunosorbent assay, and panels of six DTS (three HIV positive and three HIV negative) were prepared by transferring 20 μL of plasma, premixed with 0.1% (v/v) green dye, into 2-mL Sarstedt tubes (Sarstedt Group, Nümbrecht, Germany). The tubes were allowed to dry overnight at room temperature in a biosafety cabinet and were stored at 4°C until rehydration before testing. Ten percent of the panels were retested at the LNSP for quality control using the HIV rapid test algorithm. The PT panels were then packaged and shipped to the participating laboratories with the required buffer and instructions for reconstitution.
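The quantities in this protocol scale linearly with the number of panels. A back-of-the-envelope sketch follows, assuming that the 0.1% (v/v) dye is measured relative to the plasma volume, that the 10% quality-control sample is rounded up to a whole panel, and ignoring pipetting dead volume; the function name `dts_batch` and the batch size of 200 panels are hypothetical.

```python
TUBE_VOLUME_UL = 20        # plasma per DTS tube (from the protocol)
DYE_FRACTION = 0.001       # 0.1% (v/v) green dye; ASSUMPTION: relative to plasma volume
POSITIVE_PER_PANEL = 3
NEGATIVE_PER_PANEL = 3
QC_PERCENT = 10            # share of panels retested at the LNSP


def dts_batch(n_panels: int) -> dict:
    """Tubes, plasma, dye, and QC panels needed for a batch of six-tube DTS panels."""
    positive_ul = n_panels * POSITIVE_PER_PANEL * TUBE_VOLUME_UL
    negative_ul = n_panels * NEGATIVE_PER_PANEL * TUBE_VOLUME_UL
    return {
        "tubes": n_panels * (POSITIVE_PER_PANEL + NEGATIVE_PER_PANEL),
        "positive_plasma_uL": positive_ul,
        "negative_plasma_uL": negative_ul,
        "dye_uL": (positive_ul + negative_ul) * DYE_FRACTION,
        # ASSUMPTION: round the QC sample up; integer ceiling division avoids float error
        "qc_panels": -(-n_panels * QC_PERCENT // 100),
    }


print(dts_batch(200))
```

For 200 panels this comes to 1,200 tubes, 12 mL each of positive and negative plasma, about 24 μL of dye in total, and 20 panels set aside for retesting.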

Analysis of PT Data

Participation rates and PT scores were compared across laboratory categories and departments over the 6-year period using analysis of variance. Acceptable performance was defined as a PT score of 80% or above. All data were analyzed using SPSS version 13.0 (SPSS, Chicago, IL). We also reviewed the PT forms to identify possible reasons for nonconcordant results and evaluated the impact of training and other corrective actions by assessing follow-up PT scores for laboratories that were not proficient in the initial round, analyzing changes with the Student t test.
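For readers unfamiliar with analysis of variance, the F statistic compares the spread of group means with the spread of scores within groups. The pure-Python sketch below computes it for hypothetical PT scores grouped by institution type; it is not the SPSS procedure used in the study, and in practice `scipy.stats.f_oneway` returns both F and the P value.

```python
from statistics import mean
from typing import Dict, List


def one_way_anova_f(groups: Dict[str, List[float]]) -> float:
    """F = between-group mean square / within-group mean square."""
    values = [x for g in groups.values() for x in g]
    grand_mean = mean(values)
    k, n = len(groups), len(values)
    ss_between = sum(len(g) * (mean(g) - grand_mean) ** 2 for g in groups.values())
    ss_within = sum((x - mean(g)) ** 2 for g in groups.values() for x in g)
    return (ss_between / (k - 1)) / (ss_within / (n - k))


# Hypothetical average PT scores (%) for three laboratories of each type.
scores = {
    "private": [90.0, 95.0, 100.0],
    "public": [85.0, 90.0, 95.0],
    "mixed": [95.0, 97.5, 100.0],
}
print(round(one_way_anova_f(scores), 3))  # 2.333
```

With 2 and 6 degrees of freedom, the 5% critical value of F is about 5.14, so differences of this size among these nine hypothetical laboratories would not be significant (P > .05).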

Results

Participating Laboratories

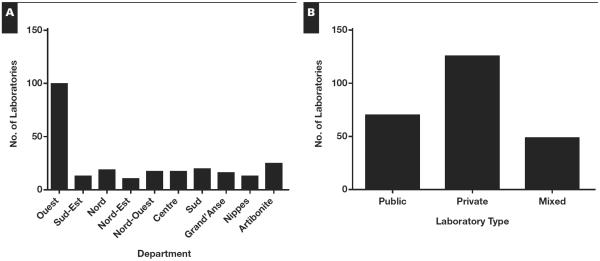

From 2006 through 2011, 263 unique laboratories enrolled in the program and received PT panels (Table 1). Overall, 242 laboratories reported results at least once; response rates per distribution ranged from 86% to 98% (Table 1). Most laboratories participated in the EQA program more than once during the 6-year period; 27 (11%) participated only once. The 242 participating laboratories came from all 10 geographic departments (Figure 1A). The Ouest department had the most participating laboratories (99; 41% of the total). Of the 242 participating laboratories, 125 (52%) were private institutions, 69 (28%) were public laboratories, and 48 (20%) were mixed facilities, that is, facilities with private management and personnel employed by the Ministry of Health (Figure 1B and Table 1).

Table 1.

Participation in Haiti's National Human Immunodeficiency Virus External Quality Assessment Program From 2006 Through 2011

| Year | No. of Laboratories Enrolled | No. of Laboratories Responding | Response Rate, % |
|---|---|---|---|
| 2006 | 76 | 70 | 92 |
| 2007 | 128 | 122 | 95 |
| 2008 | 76 | 73 | 96 |
| 2009 (Distribution 1) | 179 | 175 | 98 |
| 2009 (Distribution 2) | 209 | 189 | 90 |
| 2010 | 146 | 129 | 88 |
| 2011 | 185 | 159 | 86 |
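The response rates in Table 1 are the number of responding laboratories divided by the number enrolled, expressed as a whole percentage. A short consistency check in Python, assuming conventional rounding to the nearest percent, reproduces the tabulated values and the 86% to 98% range:

```python
# (enrolled, responding) for each distribution, transcribed from Table 1.
TABLE_1 = {
    "2006": (76, 70),
    "2007": (128, 122),
    "2008": (76, 73),
    "2009 (Distribution 1)": (179, 175),
    "2009 (Distribution 2)": (209, 189),
    "2010": (146, 129),
    "2011": (185, 159),
}


def response_rate(enrolled: int, responding: int) -> int:
    """Responding laboratories as a whole-number percentage of those enrolled."""
    return round(100 * responding / enrolled)


rates = {label: response_rate(*counts) for label, counts in TABLE_1.items()}
for label, rate in rates.items():
    print(f"{label}: {rate}%")
print(f"range: {min(rates.values())}%-{max(rates.values())}%")  # range: 86%-98%
```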

Figure 1.

Number of laboratories participating in Haiti's national human immunodeficiency virus external quality assessment program by (A) department and (B) institution type.

PT Performance

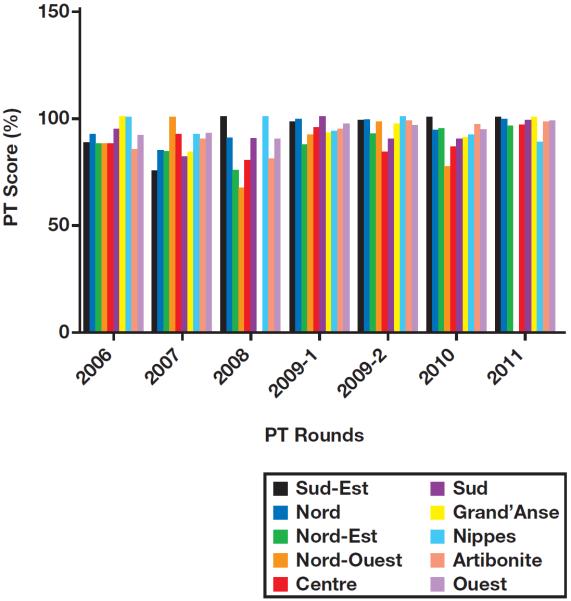

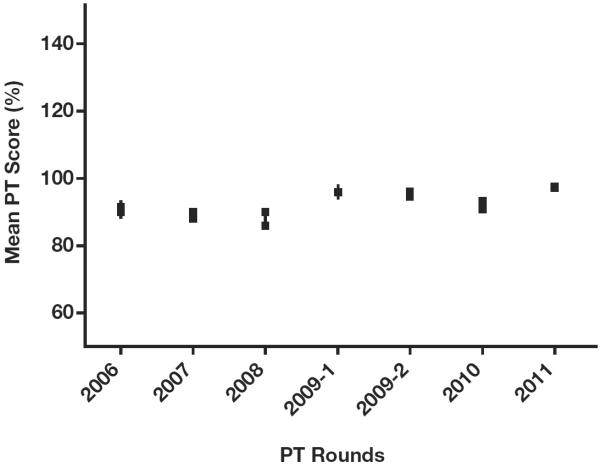

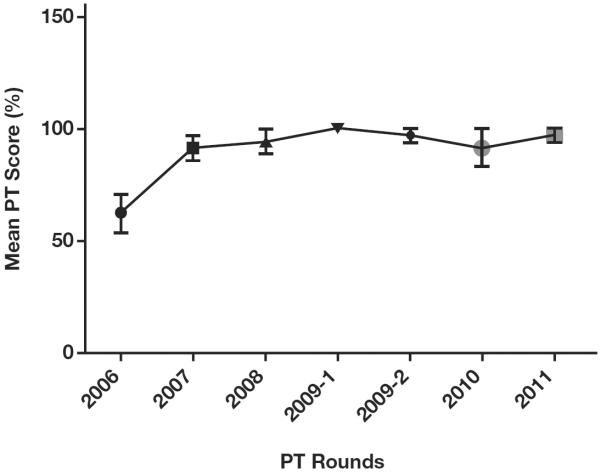

Across all PT rounds from 2006 through 2011, 93% of PT results were concordant with reference results. Laboratories in the Nord-Ouest department had the lowest average PT score (87%), whereas laboratories in Nippes averaged 95% concordance with reference results; differences by department were not significant (P > .05) (Figure 2). Overall, PT scores increased from 2006 through 2011, with the lowest average score (87.7%) in 2008 and the highest (97.8%) in 2011 (Figure 3). In 2006 and 2007, 49 (70%) and 73 (60%) participating laboratories, respectively, scored 100% in PT; by 2011, 145 laboratories (91.2%) scored 100% (P < .05). In 2006, 49 (70%) laboratories had a PT score of 80% or above; by 2011, 155 (97.5%) laboratories were proficient (P < .05). Despite this overall upward trend, average PT scores declined in 2008 and 2010 compared with the preceding years. PT scores did not differ significantly among the three categories of laboratories (private, public, and mixed; P > .05).

The main reasons for discordant results were misinterpretation of Capillus results and use of a single test to diagnose HIV. Laboratories that scored below 80% in the first PT round in 2006 and continued to participate improved their PT scores significantly (P < .05) after training and onsite technical assistance by senior LNSP laboratory technicians; these laboratories maintained an average PT score of at least 91% in subsequent rounds (Figure 4).
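The article does not name the test behind the P values for these proportions. As an illustration only, a pooled two-proportion z test, one standard choice, can be applied to the share of responding laboratories that scored 100% in 2006 (49 of 70) and 2011 (145 of 159); the helper `two_proportion_z` is hypothetical and is not the authors' analysis.

```python
import math
from typing import Tuple


def two_proportion_z(k1: int, n1: int, k2: int, n2: int) -> Tuple[float, float]:
    """Pooled two-proportion z test; returns (z, two-sided P value)."""
    p1, p2 = k1 / n1, k2 / n2
    pooled = (k1 + k2) / (n1 + n2)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n1 + 1 / n2))
    z = (p2 - p1) / se
    p_value = math.erfc(abs(z) / math.sqrt(2))  # two-sided tail of the standard normal
    return z, p_value


# Laboratories scoring 100%, out of those responding (Table 1 denominators).
print(f"2006: {100 * 49 / 70:.1f}%  2011: {100 * 145 / 159:.1f}%")  # 2006: 70.0%  2011: 91.2%
z, p = two_proportion_z(49, 70, 145, 159)
print(f"z = {z:.2f}, two-sided P = {p:.1e}")
```

Under these assumptions z is a little above 4, consistent with the reported P < .05; a chi-square test without continuity correction on the same 2 × 2 table is equivalent, since its statistic equals z squared.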

Figure 2.

Average proficiency testing (PT) score for laboratories participating in Haiti's national human immunodeficiency virus external quality assessment program by department and PT round. P > .05, analysis of variance.

Figure 3.

Performance of all participating laboratories on the national human immunodeficiency virus external quality assessment program from 2006 through 2011. P < .05, analysis of variance. PT, proficiency testing.

Figure 4.

Proficiency testing (PT) scores over time for laboratories scoring less than 80% in 2006. P < .001, Student t test.

Discussion

During the recent rapid expansion of HIV diagnostic and treatment programs in Haiti, it has been imperative to optimize the quality of testing using HIV RDTs. We report the successful implementation of an EQA program for HIV testing in Haiti and overall high rates of proficiency. While PT proficiency is only one measure of testing quality, our results provide some assurance that laboratories in Haiti are providing accurate results using the rapid diagnostics platform.

We observed generally increasing participation in the EQA program from 2006 through 2011, which suggests growing interest and positive response to the national EQA program from HIV testing laboratories. Private laboratories participated extensively in the national HIV RDT EQA program. Since no national laboratory certification policy has been implemented to date, participation in the national EQA program may be considered a means for private laboratories to ensure quality of their testing and increase client confidence in their institutions. The greatest number of participating laboratories was in the Ouest department, which was expected since this department has the largest population, and more than 50% of all laboratories nationally are located in this department.

The declines in PT scores in 2008 and 2010 were likely related to staff turnover following two major natural disasters (the 2008 Atlantic hurricane season and the 2010 earthquake). Among laboratories that initially scored below 80%, PT performance improved significantly after training and technical assistance, suggesting that these interventions were effective.

Our results reinforce previous observations that experience and training significantly affect the accuracy of rapid HIV test results.11–14 We believe the implementation of the EQA program for HIV testing had additional benefits, such as strengthening relationships within the laboratory network and improving communication between the network and the national reference laboratory, as has been observed elsewhere.15

As Haiti scales up the number of hospitals and clinics with laboratory capacity that provide HIV antiretroviral therapy, quality assurance of testing will remain a challenge. Although increasing efforts have been made to assess and evaluate the quality of HIV RDTs nationwide, poor infrastructure and the lack of national policies for the laboratory system still need to be addressed. In addition, EQA needs to be expanded to other laboratory programs, and the frequency of panel distribution needs to be standardized. A national laboratory network plan developed in 2010 organizes laboratories into a tiered network, with the LNSP as the national reference laboratory, and specifies a menu of tests for each level. Next steps include implementing standard laboratory policies and developing a laboratory certification system. Decentralization of laboratory services is also an important priority: as this evaluation shows, almost half of the laboratories enrolled in the national HIV testing EQA program are in the Ouest department. Strengthening laboratory infrastructure and testing capacity at the departmental level is key to increasing access to quality laboratory services in Haiti. With the ultimate goal of achieving international accreditation, four laboratories in the country, including the LNSP, are enrolled in the Strengthening Laboratory Management Toward Accreditation program, a stepwise process for improving quality management based on the model of the World Health Organization's Regional Office for Africa.16 Throughout this process, continuous reinforcement of training and updating of national guidelines to keep pace with rapid technological advances will also be essential.17 The path toward broad access to high-quality laboratory services in Haiti is long, but substantial progress has been made since the LNSP was inaugurated in 2006.

The national EQA program for HIV RDTs is a successful model that should be replicated for the diagnosis of other pathogens, to extend the laboratory network and ensure the quality of results and patient satisfaction.

Upon completion of this activity you will be able to:

- describe the importance of ensuring quality for laboratory testing.
- outline the three-phase process recommended by the World Health Organization and the three key components necessary to implement an external quality assessment program for human immunodeficiency virus testing.
- discuss the potential impact of training and technical assistance on proficiency testing performance.

Acknowledgment

We thank John Ho, MD, from CDC-Haiti for his critical review and helpful suggestions.

Footnotes

The ASCP is accredited by the Accreditation Council for Continuing Medical Education to provide continuing medical education for physicians. The ASCP designates this journal-based CME activity for a maximum of 1 AMA PRA Category 1 Credit™ per article. Physicians should claim only the credit commensurate with the extent of their participation in the activity. This activity qualifies as an American Board of Pathology Maintenance of Certification Part II Self-Assessment Module.

The authors of this article and the planning committee members and staff have no relevant financial relationships with commercial interests to disclose.

Questions appear on p 915. Exam is located at www.ascp.org/ajcpcme.

References

- 1. World Health Organization (WHO). Global HIV/AIDS Response: Epidemic Update and Health Sector Progress Towards Universal Access. www.who.int/hiv/pub/progress_report2011/en/index.html. Accessed May 20, 2013.

- 2. Yao K, Wafula W, Bile EC, et al. Ensuring the quality of HIV rapid testing in resource-poor countries using a systematic approach to training. Am J Clin Pathol. 2010;134:568–572. doi: 10.1309/AJCPOPXR8MNTZ5PY.

- 3. Wesolowski LG, Ethridge SF, Martin EG, et al. Rapid human immunodeficiency virus test quality assurance practices and outcomes among testing sites affiliated with 17 public health departments. J Clin Microbiol. 2009;47:3333–3335. doi: 10.1128/JCM.01504-09.

- 4. Greenwald JL, Burstein GR, Pincus J, et al. A rapid review of rapid HIV antibody tests. Curr Infect Dis Rep. 2006;8:125–131. doi: 10.1007/s11908-006-0008-6.

- 5. World Health Organization (WHO)/UNAIDS. 1996 Guidelines for Organizing National External Quality Assessment Schemes for HIV Serological Testing. www.who.int/diagnostics_laboratory/quality/en/EQAS96.pdf. Accessed May 20, 2013.

- 6. Centers for Disease Control and Prevention (CDC). Guideline for Appropriate Evaluations of HIV Testing Technologies in Africa. www.afro.who.int/en/clusters-a-programmes/dpc/acquired-immune-deficiency-syndrome/aids-publications.html. Accessed June 6, 2013.

- 7. Centers for Disease Control and Prevention (CDC). Guidelines for Assuring the Accuracy and Reliability of HIV Rapid Testing. www.cdc.gov/dls/ila/documents/HIVRapidTest%20Guidelines%20(Final-Sept%202005).pdf. Accessed June 6, 2013.

- 8. Dax EM, Arnott A. Advances in laboratory testing for HIV. Pathology. 2004;36:551–560. doi: 10.1080/00313020400010922.

- 9. Plate DK. Evaluation and implementation of rapid HIV tests: the experience in 11 African countries. AIDS Res Hum Retroviruses. 2007;23:1491–1498. doi: 10.1089/aid.2007.0020.

- 10. Parekh BS, Anyanwu J, Patel H, et al. Dried tube specimens: a simple and cost-effective method for preparation of HIV proficiency testing panels and quality control materials for use in resource-limited settings. J Virol Methods. 2010;163:295–300. doi: 10.1016/j.jviromet.2009.10.013.

- 11. Learmonth KM, McPhee DA, Jardine DK, et al. Assessing proficiency of interpretation of rapid human immunodeficiency virus assays in nonlaboratory settings: ensuring quality of testing. J Clin Microbiol. 2008;46:1692–1697. doi: 10.1128/JCM.01761-07.

- 12. Peeling RW, Smith PG, Bossuyt PM. A guide for diagnostic evaluations. Nat Rev Microbiol. 2006;4(suppl):S2–S6. doi: 10.1038/nrmicro1568.

- 13. Chang D, Learmonth K, Dax EM. HIV testing in 2006: issues and methods. Expert Rev Anti Infect Ther. 2006;4:565–582. doi: 10.1586/14787210.4.4.565.

- 14. Chiu YH, Ong J, Walker S, et al. Photographed rapid HIV test results pilot novel quality assessment and training schemes. PLoS One. 2011;6:e18294. doi: 10.1371/journal.pone.0018294.

- 15. Chalermchan W, Pitak S, Sungkawasee S. Evaluation of Thailand national external quality assessment on HIV testing. Int J Health Care Qual Assur. 2007;20:130–140. doi: 10.1108/09526860710731825.

- 16. Yao K, McKinney B, Murphy A, et al. Improving quality management systems of laboratories in developing countries: an innovative training approach to accelerate laboratory accreditation. Am J Clin Pathol. 2010;134:401–409. doi: 10.1309/AJCPNBBL53FWUIQJ.

- 17. Parekh BS, Kalou MB, Alemnji G, et al. Scaling up HIV rapid testing in developing countries: comprehensive approach for implementing quality assurance. Am J Clin Pathol. 2010;134:573–584. doi: 10.1309/AJCPTDIMFR00IKYX.