Abstract

Addiction is the continuation of a habit in spite of negative consequences. A vast literature gives evidence that this poor decision-making behavior in individuals addicted to drugs also generalizes to laboratory decision-making tasks, suggesting that the impairment in decision-making is not limited to decisions about taking drugs. In the current experiment, opioid-addicted individuals and matched controls with no history of illicit drug use were administered a probabilistic classification task that embeds both reward-based and punishment-based learning trials, and a computational model of decision making was applied to understand the mechanisms describing individuals’ performance on the task. Although behavioral results showed that opioid-addicted individuals performed as well as controls on both reward- and punishment-based learning, the modeling results suggested subtle differences in how decisions were made between the two groups. Specifically, the opioid-addicted group showed a decreased tendency to repeat prior responses, meaning that they were more likely to “chase reward” when expectancies were violated, whereas controls were more likely to stick with a previously-successful response rule, despite occasional expectancy violations. This tendency to chase short-term reward, potentially at the expense of developing rules that maximize reward over the long term, may be a contributing factor to opioid addiction. Further work is indicated to better understand whether this tendency arises as a result of brain changes in the wake of continued opioid use/abuse, or might be a pre-existing factor that may contribute to risk for addiction.

Keywords: addiction, reward learning, punishment learning

Introduction

Addiction is a special case of impaired decision-making in which individuals continue to seek and use addictive substances despite negative consequences. In the extreme, these consequences can include loss of income, family and friends, as well as illegal activity in acquiring and using illicit substances. Addiction can involve physical dependence, but also psychological dependence, as individuals continue to pursue and use drugs, even when they are well aware of these negative consequences and wish to stop the drug use. Thus, an important component of addiction is an abnormality in decision-making, and specifically how rewarding and punishing feedback are used to optimize behavior. One interpretation is that addicted individuals “chase” short-term reward, which may include both the acute drug effects as well as relief from withdrawal symptoms, at the expense of developing behavioral patterns that maximize reward over a longer time window.

Of particular societal concern are the highly-addictive opioid drugs including heroin, morphine, and a number of other medically-prescribed pain-killers such as oxycodone and hydrocodone. Due to an increasing use of opioids for pain management, accidental addiction leading to opioid abuse is currently a major issue. It is estimated that over 2 million people in the US alone have substance abuse disorders related to prescription opioid drugs, and abuse of these drugs may lead to abuse of heroin because it is cheaper and easier to obtain than prescription opioids (National Institute on Drug Abuse, 2014). Opioid addiction is notoriously difficult to overcome, even when the addicted individual strongly desires to stop using the drug; for example, one study reported a 90% relapse rate for opioid-addicted individuals who had undergone detoxification treatment (Smyth, Barry, Keenan, & Ducray, 2010). An alternate and widely-preferred approach is maintenance therapy involving medically-supervised use of opioids such as methadone and buprenorphine; however, a recent review of outcomes following buprenorphine maintenance therapy reported that, in every study examined, rates of relapse to illicit opioid use exceeded 50% at 1 month following discontinuation of treatment (Bentzley, Barth, Back, & Book, 2015). Given this bleak outlook, it is of great importance to better understand the mechanisms underlying impaired decision-making in opioid-addicted individuals, in order to be able to develop more effective therapies to promote and support these individuals in overcoming their dependence.

In previous work, we and others have used probabilistic reward-and-punishment learning tasks to better understand decision-making impairments in various psychiatric and neurological patient groups (Bódi et al., 2009; Chase et al., 2010; Frank, Seeberger, & O'Reilly, 2004; Herzallah et al., 2013; Myers et al., 2013; Piray et al., 2014; Prevost, McCabe, Jessup, Bossaerts, & O'Doherty, 2011). For example, a widely-used paradigm interleaves reward-learning trials and punishment-learning trials (Bódi et al., 2009). On the reward-learning trials, correct responses are often (but not always) rewarded with point gain, while incorrect responses trigger no feedback; on punishment-learning trials, incorrect responses are often (but not always) punished with point loss, while correct responses trigger no feedback. Thus, the task allows evaluation of the relative speed at which individuals learn to obtain reward vs. avoid punishment; it also allows investigation of how individuals deal with violation of expectancies (since the probabilistic nature of the task means that a response which is usually optimal may nevertheless be incorrect on any specific trial).

A number of prior studies have applied this task to patient populations. For example, Parkinson’s disease (PD) involves progressive death of dopamine-producing neurons in the substantia nigra. Never-medicated PD patients perform similarly to matched controls on punishment-learning trials but are severely impaired at reward-learning trials, consistent with the idea that dopamine plays a key role in reward signaling; however, PD patients treated with dopaminergic drugs showed the reverse pattern: remediated reward learning but impaired punishment learning (Bódi et al., 2009). These results were recently replicated in a second study, which also considered a third group: PD patients who develop impulse control disorders (ICD) following treatment with dopaminergic medication (Piray et al., 2014). Behaviorally, the PD-ICD group showed facilitated reward learning but impaired punishment learning. Other studies with this and similar tasks have documented facilitated reward-based learning in male veterans with severe post-traumatic stress disorder (PTSD) symptoms (Myers et al., 2013), facilitated reward and punishment learning in individuals with anxiety vulnerability (Sheynin et al., 2013), and correlation between reward-based (but not punishment-based) learning and negative (but not positive) symptoms in schizophrenia (Gold et al., 2012; Somlai, Moustafa, Keri, Myers, & Gluck, 2011). Thus, this type of task is able to dissociate qualitative patterns of behavior among different patient populations, presumably reflecting different nodes of dysfunction in the brain for the different disorders.

One way of understanding the mechanisms behind reward-and-punishment learning in these various groups is through reinforcement learning (RL) theories. RL theories suggest that actions are chosen to best maximize rewards (or reward value) in a given state (Barto, Sutton, & Anderson, 1983; Sutton, 1988; Widrow, Gupta, & Maitra, 1973). RL models typically incorporate a concept of prediction error (PE), calculated by comparing actual outcomes (e.g. reward or punishment) against expected outcomes. One class of RL models, the actor-critic models (Barto et al., 1983; Dayan & Balleine, 2002) separates this prediction error from the action selection process. While one module (“critic”) learns to calculate PE, and uses it to predict the value of the current environmental state, a second module (“actor”) uses the PE to learn to select between competing possible actions. Specific parameters in the model can determine the rate at which an individual learns from rewarding or punishing feedback, the explore/exploit tradeoff (the degree to which an individual chooses previously-successful responses vs. occasionally trying new ones) and recency bias (the degree to which an individual simply repeats the most recent prior responses, regardless of past success).

Numerous studies suggest that the actor-critic model may provide a reasonable explanation of feedback-based learning in the brain. For example, in animals dopamine (DA) neurons respond especially to unexpected rewards (Hollerman & Schultz, 1998; Schultz, 1998), as well as to stimuli that signal upcoming or predicted reward (Fiorillo, Tobler, & Schultz, 2003), and reduce firing in response to omission of expected reward (Hollerman & Schultz, 1998). Similarly, in humans, healthy young males who underwent functional neuroimaging while performing the Bódi et al. (2009) task showed activity in the dorsal caudate that was consistent with PE calculations (Mattfeld, Gluck, & Stark, 2011). Further, Piray et al. (2014) applied several RL models to data obtained from PD patients with and without ICDs, described above, and determined the data were best described by an actor-critic model that allowed separate learning rates for reward and punishment trials in both the actor and the critic, a dissociation that appears to have plausible neural substrates (Frank, Moustafa, Haughey, Curran, & Hutchison, 2007; Rutledge et al., 2009). Results from this model suggested that, while PD is associated with reduced reward-based learning in the actor, ICDs are associated with reduced learning in the critic, resulting in an underestimation of adverse consequences associated with stimuli that predict punishment.

Here, we ask whether the same evaluation of probabilistic reward-and-punishment learning, paired with RL modeling using an actor-critic model, can provide new insights into the mechanisms underlying the impairment in decision-making in opioid-addicted individuals, and could also suggest how this may be processed on a neural level. We applied the Bódi et al. (2009) task and RL modeling to a group of individuals addicted to opioids (specifically, heroin-addicted patients on opioid maintenance treatment), and a group of individuals who had never abused illicit drugs. Observing evidence for different RL parameters in the opioid-addicted group, relative to non-drug-using controls, would promote understanding of the underlying mechanisms affecting decision-making in opioid-addicted individuals, potentially providing insight into how addiction is maintained and how it might be remediated.

Methods

Participants

Forty-five participants (mean age: 41.2 (SD 10.3) years; 53% female; years of education: 10 (SD 1.8)) with a history of opioid (heroin) addiction were recruited from the Drug Health Services and Opioid Treatment Program at the Royal Prince Alfred Hospital (Sydney, Australia). Opioid dependence was confirmed using DSM-IV criteria and urine and drug screening. All patients were currently being treated with opioid medication (methadone or buprenorphine), and experimental sessions were scheduled 1-6 hours after daily dose. One patient was transferred to another site after testing, and his prior medical record was not available. For all remaining patients, mean duration of treatment at the clinic was 4.61 years (SD 3.7), with patients reporting a mean duration of heroin addiction of 12.1 years (SD 9.29), and a current daily dose of 3.49 mg (SD 2.16). One patient reported having used heroin in the past week; this patient’s data were not excluded from analysis. However, past or current abuse of other substances, including ethanol, cocaine, amphetamines, marijuana, or other opioids, was an exclusion criterion. Some patients had concurrent DSM-IV axis I disorders, possibly related to their substance dependence, including 9 participants with schizophrenia (or schizophrenia-related disorders), 5 with depression, 1 with bipolar disorder, as well as 1 participant with a panic disorder and another with a cluster B personality disorder.

As a control group, 35 healthy participants (mean age: 39.0 (SD 11.6) years; 28.6% female; years of education: 12.8 (SD 2.1)) were recruited via word of mouth from the community. Exclusion criteria included history of substance dependence or any other DSM-IV axis I disorders. Although the opioid-addicted and control groups did not differ in age (independent-samples t-test, t(78)=0.88, p=.382), the groups were imbalanced for gender (chi-square test with Yates correction for 2×2 table, χ2=3.98, p=.046) and education (t(78)=6.42, p<.001). Because of this imbalance, as well as prior results suggesting that age affects reward and punishment learning (e.g., Bauer et al., 2013; Simon, Howard, & Howard, 2010), age, gender and education were considered as covariates in the analyses of behavioral data.
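The gender comparison can be checked from the reported percentages. The sketch below assumes cell counts of 24 of 45 female participants in the opioid-addicted group and 10 of 35 in the control group; these counts are inferred from the percentages (53% and 28.6%) and are not stated in the text. The function name is illustrative, and only the Python standard library is used.

```python
import math

def yates_chi_square(a, b, c, d):
    """Chi-square with Yates continuity correction for the 2x2 table [[a, b], [c, d]]."""
    n = a + b + c + d
    numerator = n * max(abs(a * d - b * c) - n / 2, 0.0) ** 2
    denominator = (a + b) * (c + d) * (a + c) * (b + d)
    chi2 = numerator / denominator
    # With 1 degree of freedom, the upper-tail probability is erfc(sqrt(chi2 / 2)).
    p = math.erfc(math.sqrt(chi2 / 2))
    return chi2, p

# Rows: opioid-addicted, control. Columns: female, male.
# Counts are inferred from the reported percentages (an assumption).
chi2, p = yates_chi_square(24, 21, 10, 25)
print(f"chi2 = {chi2:.2f}, p = {p:.3f}")  # chi2 = 3.98, p = 0.046
```

Under these assumed counts the result agrees with the reported χ2=3.98, p=.046.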

The experiment was conducted in accordance with guidelines for the protection of human subjects established by the Declaration of Helsinki, and approved by the Royal Prince Alfred Hospital Ethics Committee and the University of Western Sydney Ethics Committee. All participants provided written informed consent before the initiation of any testing.

Behavioral Task

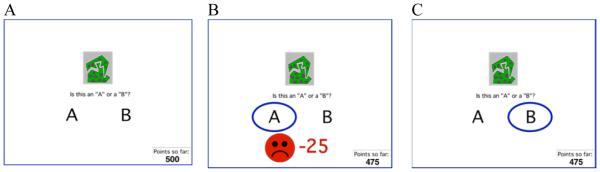

The reward and punishment learning task has been previously described (Bódi et al., 2009), and consisted of interleaved reward-learning and punishment-learning trials. On each trial, the participant viewed one of four images (S1, S2, S3, S4), and was asked to guess whether it belonged to category “A” or category “B” (Figure 1A). Stimuli S1 and S3 belonged to category A with 80% probability and to category B with 20% probability, while stimuli S2 and S4 belonged to category B with 80% probability and to category A with 20% probability (Table 1). Stimuli S1 and S2 were used for reward-learning trials. On these trials, if the participant correctly guessed category membership, a reward of +25 points was received; if the participant guessed incorrectly, no feedback appeared. Stimuli S3 and S4 were used for punishment-learning trials. On these trials, if the participant guessed incorrectly, a punishment of −25 points was received (Figure 1B); correct guesses received no feedback (Figure 1C). The no-feedback outcome was thus ambiguous, as it could signal lack of reward (if received during a trial with S1 or S2) or lack of punishment (if received during a trial with S3 or S4).

Figure 1.

Trial events in the behavioral task (Bódi et al., 2009). (A) On each trial, the participant saw one of four abstract shapes and was asked whether this shape belonged to category A or B. For punishment-based trials, incorrect responses were punished with point loss (B) while correct responses received no feedback (C). For reward-based trials (not shown), correct responses were rewarded with point gain while incorrect responses received no feedback.

Table 1.

Category and feedback structure of the probabilistic classification task.

| Stimulus | Probability class A | Probability class B | Feedback (ø = no feedback) |
|---|---|---|---|
| S1 | 80% | 20% | If correct: +25; if incorrect: ø |
| S2 | 20% | 80% | If correct: +25; if incorrect: ø |
| S3 | 80% | 20% | If correct: ø; if incorrect: −25 |
| S4 | 20% | 80% | If correct: ø; if incorrect: −25 |

The experiment was conducted on a Macintosh iBook, programmed in the SuperCard language (Allegiant Technologies, San Diego, CA). The participant was seated in a quiet testing room at a comfortable viewing distance from the screen. The keyboard was masked except for two keys, labelled “A” and “B”, which the participant could use to enter responses. Before the experiment, the participant received the following instructions: In this experiment, you will be shown pictures, and you will guess whether those pictures belong to category “A” or category “B.” A picture does not always belong to the same category each time you see it. If you guess correctly, you may win points. If you guess wrong, you may lose points. You will see a running total of your points as you play. We will start you off with a few points now. Press the mouse button to begin practice.

Participants first completed a short practice phase during which the participant was instructed to press keys so as to observe both punishment and no-feedback outcomes on a punishment trial, and to observe both reward and no-feedback outcomes on a reward trial. The score was initialized to 500 points at the start of practice. Results from these practice trials were not included in the analysis.

After these practice trials, a summary of instructions appeared: So… For some pictures, if you guess CORRECTLY, you WIN points (but, if you guess incorrectly, you win nothing). For other pictures, if you guess INCORRECTLY, you LOSE points (but, if you guess correctly, you lose nothing). Your job is to win all the points you can – and lose as few as you can. Remember that the same picture does not always belong to the same category. Press the mouse button to begin the experiment.

From here, the experiment began. The task contained 160 trials, divided into 4 blocks of 40 trials. Within a block, trial order was randomized. Reward-learning trials (S1 and S2) and punishment-learning trials (S3 and S4) were intermixed. Within each block, each stimulus appeared 10 times, 8 times with the more common outcome (e.g. category “A” for S1 and S3 and “B” for S2 and S4) and 2 times with the less common outcome. Trials were separated by an interval of 2 seconds, during which time the screen was blank. On each trial, the computer recorded whether the participant made the optimal response (i.e. category A for S1 and S3, and category B for S2 and S4), regardless of actual outcome.
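The block structure described above can be sketched as follows. This is an illustrative reconstruction in Python, not the original SuperCard program; the function names, the dictionary layout, and the fixed random seed are assumptions.

```python
import random

# Stimulus -> (more common category, trial type), following Table 1.
STIMULI = {
    "S1": ("A", "reward"),
    "S2": ("B", "reward"),
    "S3": ("A", "punishment"),
    "S4": ("B", "punishment"),
}

def make_block(rng):
    """One block of 40 trials: each stimulus 10 times, 8 common and 2 rare outcomes."""
    trials = []
    for stimulus, (common, trial_type) in STIMULI.items():
        rare = "B" if common == "A" else "A"
        for category in [common] * 8 + [rare] * 2:
            trials.append({
                "stimulus": stimulus,
                "category": category,   # category that counts as correct on this trial
                "optimal": common,      # response scored as optimal regardless of outcome
                "type": trial_type,
            })
    rng.shuffle(trials)                 # trial order is randomized within a block
    return trials

def make_session(seed=0, n_blocks=4):
    """Full session: 4 blocks of 40 trials, 160 trials in total."""
    rng = random.Random(seed)
    return [trial for _ in range(n_blocks) for trial in make_block(rng)]

def feedback(trial, response):
    """Points for a response: +25 or 0 on reward trials, 0 or -25 on punishment trials."""
    correct = response == trial["category"]
    if trial["type"] == "reward":
        return 25 if correct else 0
    return 0 if correct else -25

session = make_session()
print(len(session))
```

Keeping `optimal` separate from `category` mirrors the scoring rule in the text: a response is counted as optimal when it matches the more common category, even on the 20% of trials where that response is not the correct one.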

Computational Modeling

We fit each subject’s behavioral data to the actor-critic model (Barto et al., 1983; Dayan & Balleine, 2002), using a variant that allows different learning rates for positive and negative prediction errors in the actor and the critic (Piray et al., 2014).

On each trial $t$, the actor chooses the current action $a_t$ (choosing category A or B) given the current input stimulus $s_t$, based on the expected outcomes $Q_t(s_t, A)$ and $Q_t(s_t, B)$ if each action is chosen:
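A softmax choice rule consistent with the parameter definitions that follow can be written as below. The exact form is an assumption: it takes β to scale the Q-values and φ to scale the response trace, as is common in this model family.

$$
P(a_t = A \mid s_t) = \frac{\exp\big(\beta\, Q_t(s_t, A) + \varphi\, T_t(s_t, A)\big)}{\exp\big(\beta\, Q_t(s_t, A) + \varphi\, T_t(s_t, A)\big) + \exp\big(\beta\, Q_t(s_t, B) + \varphi\, T_t(s_t, B)\big)}
$$

with $P(a_t = B \mid s_t) = 1 - P(a_t = A \mid s_t)$.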

All Q-values are initialized to 0 at $t=0$. Here, β is an explore/exploit parameter that governs the likelihood of choosing the action with the highest Q-value (i.e., exploiting previously-successful behaviors) vs. trying a different action (i.e., exploring new ones). $T_t(s_t, A)$ and $T_t(s_t, B)$ represent a short-term trace of the last response to the current stimulus: $T_t(s_t, A)=1$ and $T_t(s_t, B)=0$ if category A was chosen at the previous presentation of $s_t$, and otherwise $T_t(s_t, A)=0$ and $T_t(s_t, B)=1$. The recency parameter, φ, determines how much recent actions, independent of reward history, affect the current response: positive values of φ represent a tendency to repeat previous actions, and negative values represent a tendency toward spontaneous alternation.

At the end of each trial, the actual outcome $o_t$ is received: $o_t=1$ if reward, $o_t=-1$ if punishment, and $o_t=0$ if no feedback. The critic module computes the prediction error $\delta_t$ as the discrepancy between actual and predicted outcome:
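Written with the symbols defined in the surrounding text, and assuming the discrepancy is a simple difference, the prediction error is:

$$
\delta_t = o_t - V_t(s_t)
$$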

where $V_t(s_t)$ is the critic’s valuation of the current stimulus $s_t$. All V-values are initialized to 0 at the start of training, and updated thereafter based on the prediction error:
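A critic update consistent with this description is sketched below. The treatment of $\delta_t = 0$ (grouped here with negative errors) is an assumption; it has no effect on the update, because the change in value is then zero.

$$
V_{t+1}(s_t) = V_t(s_t) + \alpha_c\, \delta_t, \qquad
\alpha_c = \begin{cases} \alpha_c^{+} & \text{if } \delta_t > 0 \\ \alpha_c^{-} & \text{otherwise} \end{cases}
$$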

where $\alpha_c^{+}$ and $\alpha_c^{-}$ are the critic’s learning rates for positive and negative prediction errors, respectively. The prediction error is also conveyed to the actor for updating values for the selected action in the current state:
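By analogy with the critic, the actor update for the selected action can be sketched as follows; the value of the unselected action is assumed to stay unchanged on that trial.

$$
Q_{t+1}(s_t, a_t) = Q_t(s_t, a_t) + \alpha_a\, \delta_t, \qquad
\alpha_a = \begin{cases} \alpha_a^{+} & \text{if } \delta_t > 0 \\ \alpha_a^{-} & \text{otherwise} \end{cases}
$$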

where $\alpha_a^{+}$ and $\alpha_a^{-}$ are the actor’s learning rates for positive and negative prediction errors, respectively. Thus, in total the model has six free parameters: β, φ, and the four learning rates.
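To make the trial-by-trial logic concrete, the following Python sketch computes the negative log likelihood of a sequence of choices under a six-parameter actor-critic model of this kind. It is a minimal illustration rather than the fitting code used in the study. Three points are assumptions: the softmax form (β scaling Q-values, φ scaling the trace), the trace being 0 for both actions before the first response to a stimulus, and all function and argument names.

```python
import math

def prob_choose_a(q, trace, beta, phi):
    """Probability of choosing category A under the assumed softmax rule."""
    drive_a = beta * q["A"] + phi * trace["A"]
    drive_b = beta * q["B"] + phi * trace["B"]
    return 1.0 / (1.0 + math.exp(drive_b - drive_a))

def neg_log_likelihood(trials, beta, phi, ac_pos, ac_neg, aa_pos, aa_neg):
    """Negative log likelihood of observed choices.

    trials: list of (stimulus, action, outcome), with action "A" or "B"
    and outcome 1 (reward), -1 (punishment), or 0 (no feedback).
    """
    Q, V, last = {}, {}, {}
    nll = 0.0
    for stimulus, action, outcome in trials:
        q = Q.setdefault(stimulus, {"A": 0.0, "B": 0.0})   # actor values start at 0
        v = V.get(stimulus, 0.0)                           # critic value starts at 0
        # Assumption: both traces are 0 before the first response to a stimulus.
        trace = {"A": float(last.get(stimulus) == "A"),
                 "B": float(last.get(stimulus) == "B")}
        p_a = prob_choose_a(q, trace, beta, phi)
        nll -= math.log(p_a if action == "A" else 1.0 - p_a)
        delta = outcome - v                                # critic's prediction error
        positive = delta > 0
        V[stimulus] = v + (ac_pos if positive else ac_neg) * delta
        q[action] += (aa_pos if positive else aa_neg) * delta
        last[stimulus] = action
    return nll

# Hypothetical four-trial sequence, for illustration only.
trials = [("S1", "A", 1), ("S1", "A", 0), ("S3", "B", -1), ("S3", "A", 0)]
nll_no_bias = neg_log_likelihood(trials, 1.0, 0.0, 0.0, 0.0, 0.0, 0.0)
nll_full = neg_log_likelihood(trials, 0.7, 0.5, 0.3, 0.3, 0.3, 0.3)
print(nll_no_bias, nll_full)
```

With all learning rates and φ set to 0, every choice has probability 0.5, so the negative log likelihood reduces to the number of trials times ln 2; this gives a convenient chance-level baseline against which fitted values can be compared.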

We used a hierarchical Bayesian procedure for fitting models to participants’ choices (Piray et al., 2014). All parameters were assumed to be free and could vary between 0 and 1, except the recency bias parameter φ, which could vary between −2 and +2. The mean and standard deviation of the distribution for the parameter values were estimated using participants’ choices through the expectation-maximization algorithm (Dempster, Laird, & Rubin, 1977). This algorithm alternates between an expectation step and a maximization step; we used Laplace approximation (MacKay, 2003) for the expectation step on each iteration. In addition to generating estimated parameters for each subject, the algorithm also generates a negative log likelihood estimate (negLLE) as a measure of “goodness of fit” of the model to each subject’s data (with values of negLLE closer to zero indicating better fit). For additional details of the hierarchical fitting procedure, see Huys et al. (2012).

In addition to the full, six-parameter actor-critic model, we also fitted the behavioral data using several simpler models. First, we considered models where β was held constant at a default value of 1 (leaving 5 free parameters), and where φ was held constant at a default value of 0 (leaving 5 free parameters). We also examined a model where there was no critic, thereby leaving $V_t(s_t)$ to be updated based solely on the difference between the actual outcome and the predicted outcome, irrespective of whether this results in positive or negative feedback (leaving 4 free parameters: αa+, αa−, β, φ).

Lastly, we fit the data to two simpler models: first, a q-learning model (Frank et al., 2007; Sutton & Barto, 1998; Watkins & Dayan, 1992) where there is only 1 learning rate, α. The prediction error is therefore calculated based on the values of the stimulus-selected action pair, as opposed to the value of the stimulus independent of the selected action (as in the actor-critic model). This model therefore has 3 free parameters: α, β, φ. Second, we considered a win-stay/lose-shift (WSLS) model where each action is dependent on the outcome of the previous action: if the previous action was successful (a win) then that action is chosen again, otherwise a different option is chosen. This can be described by the following function:

where $w_t = -1$ if the previous presentation of $s_t$ resulted in a loss, and $w_t = W$ otherwise. W describes the weight of a win relative to a loss: the larger W, the greater the effect of a win on future actions compared with a loss (for W < 1, the effect of a loss grows as W approaches 0). The second free parameter, g, represents decision noise. Thus, this model has two free parameters (W and g). Table 2 summarizes these models.

Table 2.

Summary of models investigated, with free parameters explored in each. Model names are used in Figure 3.

| Model name | Description | Free parameters |
|---|---|---|
| Actor-critic | Full actor-critic model | 6 (αc+, αc−, αa+, αa−, β, φ) |
| No β | Actor-critic model with β=1 | 5 (αc+, αc−, αa+, αa−, φ) |
| No φ | Actor-critic model with φ=0 | 5 (αc+, αc−, αa+, αa−, β) |
| Actor only | No critic | 4 (αa+, αa−, β, φ) |
| q-learning | Q-learning model | 3 (α, β, φ) |
| WSLS | Probabilistic win-stay/lose-shift | 2 (W, g) |

After estimating model parameters for each participant under each model, we used a Bayesian model selection approach that balances the fit of the model to individual subjects’ data against the complexity of that model (as described in Piray et al. 2014).

Data Analysis

Behavioral data were analyzed via ANOVA on total score with factor of group (control vs. opioid-addicted), and mixed ANOVA on percent optimal responding with trial type (reward and punishment) as the within-subject factor and group (control vs. opioid-addicted) as the between-subject factor. Similarly, to examine specific responding strategies, we used mixed ANOVA and analyzed percentage of win-stay (trials on which the subject repeated a response that had received reward or non-punishment on the prior trial with that stimulus) and lose-shift (trials on which the subject did not repeat a response that had received punishment or non-reward on the prior trial with that stimulus) responses (Moustafa, Gluck, Herzallah, & Myers, 2015). In all analyses, age, gender and education were entered as covariates.
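The win-stay and lose-shift definitions above can be expressed as a short scoring routine. The sketch below is illustrative: the tuple layout and function name are assumptions, as is the choice of denominator (win-stay as a percentage of trials that follow a win on the same stimulus, and lose-shift as a percentage of trials that follow a loss).

```python
def win_stay_lose_shift(trials):
    """Return (percent win-stay, percent lose-shift).

    trials: sequence of (stimulus, trial_type, response, points) in presentation
    order, with trial_type "reward" or "punishment" and points in {25, 0, -25}.
    """
    previous = {}   # stimulus -> (last response, whether it was a win)
    counts = {"win": 0, "win_stay": 0, "lose": 0, "lose_shift": 0}
    for stimulus, trial_type, response, points in trials:
        if stimulus in previous:
            prev_response, prev_win = previous[stimulus]
            if prev_win:
                counts["win"] += 1
                counts["win_stay"] += int(response == prev_response)
            else:
                counts["lose"] += 1
                counts["lose_shift"] += int(response != prev_response)
        # A win is a reward on a reward trial, or no feedback on a punishment trial.
        is_win = points > 0 if trial_type == "reward" else points == 0
        previous[stimulus] = (response, is_win)

    def percent(hits, total):
        return 100.0 * counts[hits] / counts[total] if counts[total] else float("nan")

    return percent("win_stay", "win"), percent("lose_shift", "lose")

# Hypothetical response sequence, for illustration only.
example = [
    ("S1", "reward", "A", 25),       # win
    ("S1", "reward", "A", 0),        # stays after win; non-reward counts as a loss
    ("S1", "reward", "B", 0),        # shifts after loss
    ("S3", "punishment", "A", -25),  # loss
    ("S3", "punishment", "B", 0),    # shifts after loss; non-punishment counts as a win
    ("S3", "punishment", "A", 0),    # shifts after win
]
win_stay, lose_shift = win_stay_lose_shift(example)
print(win_stay, lose_shift)  # 50.0 100.0
```

Tracking the previous response per stimulus, rather than per trial, matches the definition in the text, which refers to the prior trial with the same stimulus.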

Turning to the modeling data, negLLE measures on the full actor-critic model (and, indeed, on all models examined) were non-normal for both control and opioid-addicted groups (Shapiro-Wilk, all p<.010), and estimated parameters were derived based on Gaussian distribution. Accordingly, non-parametric statistics were used to analyze model results.

For all statistical analyses, the threshold for significance was set at alpha=0.05 (two-tailed), and Bonferroni correction was used to protect against inflated risk of Type I error under multiple comparisons; corrected alpha is reported only where p-values approach uncorrected threshold. For parametric tests, Levene’s test was used to check assumptions of equality of variance (ANOVA) and Box’s test was used to check assumptions of equality of covariance (mixed ANOVA); where Box’s test failed, Greenhouse-Geisser correction was used to adjust df calculations.

Results

Behavioral Task

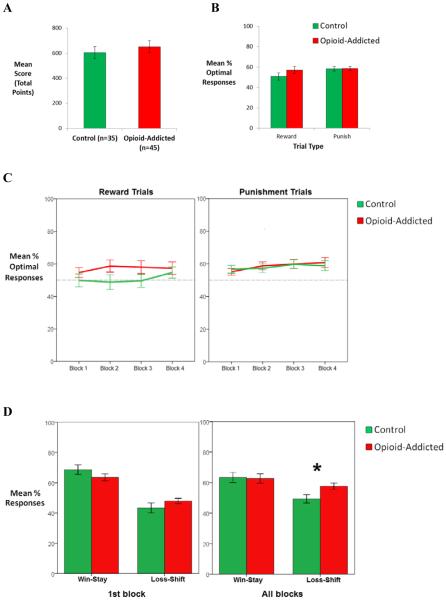

Figure 2A shows that the opioid-addicted group slightly outscored the control group in total points; however this difference was not significant (F(1,75)=1.55, p=0.217). Figure 2B shows performance on reward-based and punishment-based trials, for each group. Again, there was no significant effect of trial type or group and no interaction (all p>0.100).

Figure 2.

Behavioral results. There were no differences between control and opioid-addicted groups in (A) total points scored, (B) percent optimal responding on reward-based and punishment-based trials, or (C) learning curve for each trial type across blocks of 20 trials (all F<2.00, all p>0.200). However, (D) the opioid-addicted group showed greater lose-shift behavior in the first block of 20 trials, and across all blocks in the experiment (p=0.001). Error bars represent SEM.

When optimal performance was analyzed across blocks of 20 trials (Figure 2C), no main effects or interactions were found (all p>.100).

Lastly, when we analyzed win-stay and lose-shift responding, a response type × group interaction was found across all trials (F(1,75)=6.01, p=0.017). Post-hoc analysis revealed that the opioid-addicted group showed more lose-shift behavior than the control group (F(1,75)=11.07, p=0.001; Figure 2D).

Computational Modeling

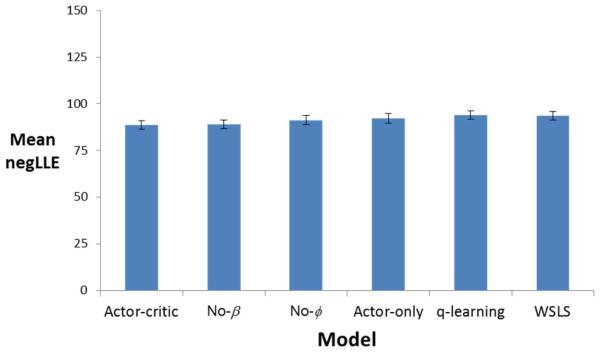

Average model fit, defined in terms of negLLE, is shown for each model in Figure 3. Although all models were similarly successful in fitting individual subject data, as indicated by comparable negLLE, Bayesian model selection determined that the full actor-critic model (6 free parameters) was the best fit to the data, closely followed by the no-β (5-parameter) model (with model evidence of 10546.31 and 10575.49 respectively, where the lower value represents the better fit). As a result, we focused on the full actor-critic model in subsequent analyses.

Figure 3.

Model fit, in terms of negative log likelihood estimate (negLLE), for the six models examined, averaged across subjects.

The actor-critic model fit data from control and opioid-addicted groups equally well, as indicated by no significant difference between groups in negLLE (Mann-Whitney U=730, p=.577). Indeed, this was true for all models examined (all p>.500).

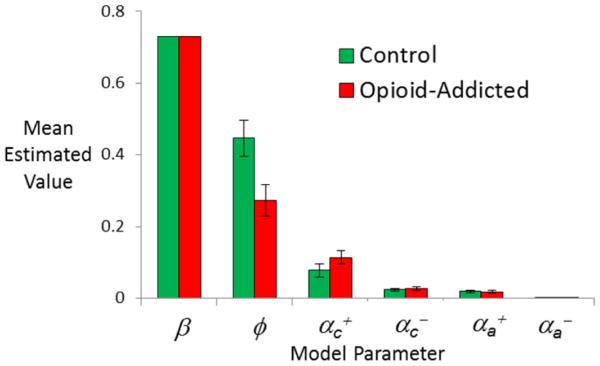

However, there were group differences in estimated parameters derived for each subject in the control and opioid-addicted groups under the full actor-critic model, as shown in Figure 4. Specifically, the control group had significantly greater values of the recency bias φ than the opioid-addicted group (Mann-Whitney U=500, p=.005); the groups did not differ on any other estimated parameter value (all p>.500 except αc+, p=.167).
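The reported p-value for φ can be checked against the U statistic with the large-sample normal approximation. The sketch below assumes group sizes of 45 and 35, with no tie correction or continuity correction; the text does not state which variant the analysis software applied, so this is an approximate cross-check and the function name is illustrative.

```python
import math

def mann_whitney_normal_p(u, n1, n2):
    """Two-tailed p-value for a Mann-Whitney U via the normal approximation."""
    mean_u = n1 * n2 / 2
    sd_u = math.sqrt(n1 * n2 * (n1 + n2 + 1) / 12)   # no tie correction (assumption)
    z = (u - mean_u) / sd_u
    p = math.erfc(abs(z) / math.sqrt(2))             # two-tailed normal probability
    return z, p

z, p = mann_whitney_normal_p(500, 45, 35)
print(f"z = {z:.2f}, p = {p:.4f}")
```

Under these assumptions the approximation gives z of about −2.79 and p of about .005, in line with the value reported for the group difference in φ.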

Figure 4.

Estimated parameter values for the full actor-critic model, with six free parameters, applied to individual subject data. Estimated values for the recency bias φ were significantly smaller in the opioid-addicted than in the control group (p=.005); no other group differences approached significance (all p>.500 except αc+, p=.167). Error bars represent SEM.

We also considered whether estimated parameter values from the full actor-critic model were related to behavior. In particular, estimated values of the recency bias φ were negatively correlated with performance on reward-based trials (Pearson’s r= −.34, p=.002) and positively correlated with performance on punishment-based trials (Pearson’s r=.429, p<.001), indicating that participants with high estimated values of φ tended to perform well on punishment-based trials and worse on reward-based trials. This is generally consistent with the trend shown in Figure 2B for participants in the control group, who typically had higher values of φ, to perform somewhat worse on reward-based trials compared to the opioid-addicted group or compared to their own performance on punishment-based trials. These relationships between φ and performance remained even after partialling out the effects of group (all p<.005). Performance on punishment-based trials was also positively correlated with all the other parameters except αc+(β, αc+, αc+, αc+: all r>.400, all p<.001; αc+: r=−.189, p=.092). There were no other correlations between estimated parameters and performance on reward-based trials, except the negative correlation with φ noted above.

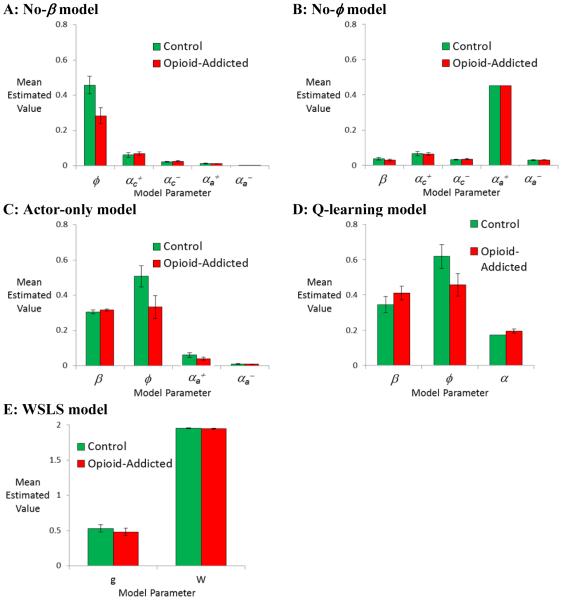

Finally, although the full actor-critic model provided the best description of the subject data, it is nevertheless interesting to consider whether the other models investigated also identified the recency bias as an important source of between-group differences. Figure 5 shows estimated parameters obtained for the control and opioid-addicted groups under each model examined.

Figure 5.

Among the simpler models tested, in all those that included a recency bias parameter φ (the (A) no-β model, (C) actor-only model, and (D) q-learning model), estimated values of φ are higher in the control than in the opioid-addicted group; this group difference is significant in (A) and (C), p<.05, and approaches significance in (D), p=.052. No other group differences approach significance in these models (all p>.200). In the no-φ model (B), which does not include a recency bias parameter, there are no group differences in estimated parameters (all p>.200). Finally, in the win-stay/lose-shift (WSLS) model (E), there are no group differences (all p>.200).

As shown in Figure 4, the full actor-critic model produced very little variability in estimated values of β, which ranged only from 0.726 to 0.743; this is consistent with the finding noted above that the no-β model (where β was fixed at the default value of 1) was nearly as successful at fitting the data as the full actor-critic model. In fact, under the no-β model, the remaining estimated parameter values were very similar to those obtained under the full actor-critic model, with a group difference in recency bias φ but no group differences in other parameters (Figure 5A).

Similarly, the q-learning and actor-only models, which also included a recency bias parameter, both showed greater estimated values of φ in the control than in the opioid-addicted group; this difference approached significance in the q-learning model (Figure 5D; U=587, p=.052) and reached significance in the actor-only model (Figure 5C; U=527, p=.011), but no group differences in the other estimated parameters approached significance in either model (all U>670, all p>.250). On the other hand, in the no-φ model, with no recency bias (φ=0), there were no differences in values of estimated parameters (all p>.200). Finally, there were no group differences in the WSLS model (all p>.200), with both the control and opioid-addicted groups generating large estimated values of W, indicating larger weight of win compared to loss feedback (Figure 5E). In summary, then, among those models which included an explicit recency bias parameter (separate from one or more learning rate parameters), all showed a similar pattern to that in the full actor-critic model, with the control group tending to have larger estimated values of φ than the opioid-addicted group.

Discussion

This study attempted to use a behavioral task, combined with computational modeling, to better understand possible mechanisms that could differentiate decision-making processes in opioid-addicted individuals vs. never-addicted controls. The results indicate that, on a task where control and opioid-addicted groups were equated for behavioral performance, there may be underlying differences in how the task is performed. Specifically, using a modified actor-critic model, estimated parameter values in the opioid-addicted group were similar to those in the control group for five parameters: the learning rate parameters and the explore/exploit parameter β. However, the opioid-addicted group had significantly lower estimated values of φ compared to the control group. A range of different models that also included a recency bias parameter (separate from learning rate parameter(s)) showed similar patterns in the control vs. opioid groups, increasing our confidence that this is not merely an artifact of focusing our examination on a particular RL model.

In the RL model, φ is a recency bias that governs how likely a subject is to repeat previous responses to a stimulus, regardless of prior success with that response. At first glance, it may appear paradoxical that participants with a history of opioid addiction would show less recency bias than controls, since a tendency to repeat recent responses (independent of whether those recent responses provoked desirable outcomes) seems similar to the habit maintenance that has been implicated in other forms of addiction, such as cocaine addiction (Woicik et al., 2011), smoking, and problem gambling (de Ruiter et al., 2009).

However, a (modest) recency bias can be beneficial in a probabilistic task, where outcomes vary from trial to trial; even after subjects have acquired the optimal response rule – i.e. the response to a stimulus that most often produces the optimal outcome – sometimes outcomes will occur that violate expectations. A higher value of φ will permit subjects to continue to execute the rule regardless of recent outcomes, rather than modifying the response every time an unexpected punishment or omission of reward occurs. In effect, subjects with a higher value of φ can take the long view, determining what responses are optimal in the long run, despite occasional evidence to the contrary; subjects with a lower φ are more likely to “chase” immediate feedback. At an extreme, the no-φ model, where φ is held to zero, did not provide a better description of the actual subject data than the full actor-critic model, where φ was allowed to take on positive values, indicating that subjects did indeed exhibit a recency bias.
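The stabilizing effect of φ can be illustrated with a small numerical sketch. The code below assumes a softmax choice rule in which a recency bonus φ·T is added to each response's learned value before selection, with β acting as a temperature. This functional form, the function name `choice_probabilities`, and all numbers are assumptions made for illustration and may differ from the equations actually used in the model.

```python
import math


def choice_probabilities(values, trace, phi, beta=1.0):
    """Softmax over learned action values plus a recency bonus phi * trace."""
    drive = [(v + phi * t) / beta for v, t in zip(values, trace)]
    top = max(drive)  # subtract the maximum for numerical stability
    weights = [math.exp(d - top) for d in drive]
    total = sum(weights)
    return [w / total for w in weights]


# Hypothetical situation: response 0 was just chosen (trace = 1), but its
# learned value has dipped below that of response 1 after one unexpected
# punishment or omitted reward.
values = [0.4, 0.5]
trace = [1.0, 0.0]

for phi in (0.0, 0.5, 1.0):
    p_repeat = choice_probabilities(values, trace, phi)[0]
    print(f"phi={phi:.1f}  P(repeat previous response)={p_repeat:.3f}")
```

Under these assumptions, a simulated subject with φ = 0 is slightly more likely to abandon the previously chosen response than to repeat it after a single expectancy violation, whereas larger values of φ shift the balance toward repeating it.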

Although the group difference in estimated values of φ was significant, it was modest in size, and did not result in detectable group differences on task performance; in fact, if anything, the opioid-addicted group numerically outscored the control group (Figure 2A). Nevertheless, increased sensitivity to violations of expectancy could help explain why addicts continue to pursue the short-term rewards of drug use at the expense of negative (and primarily long-term) consequences. Crucially, the win-stay/lose-shift analyses (Figure 2D) support such an interpretation and show that subjects in the addicted group modified their responding after non-confirmatory feedback (“lose-shift”) more often than subjects in the control group, while demonstrating similar responding after confirmatory feedback (“win-stay”).
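As an illustration of how win-stay and lose-shift rates of this kind can be derived from trial-level data, the following sketch scores successive presentations of a single stimulus. The function name `wsls_rates`, the coding of feedback as a boolean (True for confirmatory feedback), and the example sequence are hypothetical; how each trial outcome is classified as confirmatory or non-confirmatory is an assumption that depends on the task definition.

```python
def wsls_rates(responses, outcomes):
    """Compute (win-stay rate, lose-shift rate) for one stimulus.

    responses: chosen response on successive presentations of the stimulus.
    outcomes:  True if feedback on that presentation was confirmatory.
    """
    win_n = stay_after_win = lose_n = shift_after_lose = 0
    # Pair each presentation with the next one; the final presentation has
    # no following response, so it is not scored.
    for prev, cur, won in zip(responses, responses[1:], outcomes):
        if won:
            win_n += 1
            stay_after_win += (cur == prev)
        else:
            lose_n += 1
            shift_after_lose += (cur != prev)
    win_stay = stay_after_win / win_n if win_n else float("nan")
    lose_shift = shift_after_lose / lose_n if lose_n else float("nan")
    return win_stay, lose_shift


# Hypothetical sequence for one stimulus, NOT data from the study.
responses = ["A", "A", "A", "B", "A", "A"]
outcomes = [True, False, False, True, True, True]

win_stay, lose_shift = wsls_rates(responses, outcomes)
print(f"win-stay={win_stay:.2f}  lose-shift={lose_shift:.2f}")
```

In this scoring, a higher lose-shift rate with an unchanged win-stay rate corresponds to the pattern described above for the opioid-addicted group.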

Interestingly, there is additional evidence for this idea in other tasks designed to test learning and decision-making in addicted individuals. For example, addicts also score significantly lower on the Stanford Time Perception Inventory and Future Time Perspective tests, and this hypersensitivity to reward appears to be biased towards immediate rewards (Petry, Bickel, & Arnett, 1998). In addition, patients who have recently received their daily methadone dose show a reduction in perseverative responding on the Wisconsin Card Sorting Task, compared with patients in early methadone withdrawal (Lyvers & Yakimoff, 2003); this may be generally consistent with the reduced recency bias in our addicted patients, who were tested within a few hours after their daily methadone dose. Another recent study applied a very different computational model to data from the Iowa Gambling Task, and found that estimated values for a recency parameter were lower in women with a history of drug use (heroin and/or crack cocaine) than in female peers with no history of drug use (Vassileva et al., 2013). Thus, there seems to be converging evidence across a number of tasks, patient populations, and modeling techniques that opioid addiction is associated with a decreased tendency to repeat prior responses. However, further research is clearly needed to investigate whether this tendency in laboratory studies is linked to the addictive power of drugs and other behaviors in real-world contexts.

Another way to understand the effect of lower φ in the model is in terms of working memory. This parameter governs the degree to which the short-term memory trace T modulates action selection; lower values of φ therefore approximate the effect of degraded working memory traces. In fact, there is evidence from other studies that opioid-addicted individuals show poor performance on various tests of working memory (Liang et al., 2014; Vo, Schacht, Mintzer, & Fishman, 2014), as do patients given long-term opioid treatment for chronic pain (Schiltenwolf et al., 2014). Indeed, one recent meta-analysis suggests that the only neuropsychological domains that are reliably impaired across studies of chronic opioid exposure are those involving working memory, cognitive impulsivity, and cognitive flexibility (Baldacchino, Balfour, Passetti, Humphris, & Matthews, 2012). Thus, the finding from the RL model that the opioid-addicted group shows reduced φ is consistent with other interpretations of cognitive deficit in this population.

Importantly, RL models of this task applied to data from other patient populations identify different mechanisms characterizing those populations. Thus, for example, RL models applied to patients with dopamine dysfunction (e.g. PD, PD with ICD) tend to identify abnormalities in the relative rates of learning from rewarding vs. punishing feedback (Piray et al., 2014), while RL models applied to data from veterans with PTSD or healthy young adults with anxiety vulnerability suggest abnormalities in the relative valence of different types of feedback (Myers et al., 2013; Sheynin, Moustafa, Beck, Servatius, & Myers, 2015). However, in the current study, φ was the only parameter that differed significantly between groups. Thus, RL may provide a way to understand how different decision-making mechanisms are affected in different patient populations, even under conditions where overall learning (assessed as total points or as percent optimal responding) is unimpaired or minimally impaired.

In the current study, as in prior studies, the lack of group differences on behavior is unlikely to be due to a ceiling effect, since, as shown in Figure 2, group performance did not approach the theoretical maximum of 100%; rather, there was considerable variability in individual performance on reward-based and punishment-based learning, with some individuals performing extremely well on punishment-based trials but below chance on reward-based trials, and others showing better reward-based than punishment-based learning. This variability suggests that individuals allocated resources differently, but the lack of interaction between group and trial type in Figure 2 indicates that the relative rate of reward-based and punishment-based learning was not significantly different between opioid-addicted individuals and controls. This is consistent with the conclusion that the significant difference between groups had to do with recency bias, which would apply equally to reward-based and punishment-based trials.

On the other hand, although only the recency bias parameter was significantly different between groups in the current study, Figure 4 shows that the addicted group did have slightly higher estimated learning rate for reward trials in the critic, although this did not approach significance (p>.500). Such a difference would be plausible, given that reward-based prediction error signals in the critic have been linked to dopamine, and given that the ‘high’ induced by addictive drugs is directly proportional to increased DA (Volkow, Fowler, Wang, & Swanson, 2004). Further, the hedonic effect of both endogenous and exogenous opioids is specifically linked to their action on opioid receptors in the brain (van Ree, Gerrits, & Vanderschuren, 1999), including μ-opioid receptors located on inhibitory interneurons that disinhibit DA neurons (Spanagel, Herz, & Shippenberg, 1992). Thus the current study cannot definitively rule out the possibility that opioid-addicted individuals also have abnormalities in reward processing, and it is possible that a larger study or a more homogeneous sample of addicted individuals might produce a significant group difference on this variable.

Similarly, although the current study found no sex differences on any behavioral or modeling measure (data not shown), our prior study with a sample of opioid-addicted patients drawn from the same population as the current study found an interaction with sex on an avoidance learning task (Sheynin et al., 2016/in press). In that prior study, male – but not female – opioid addicts showed increased avoidance learning on a task that included competing approach-avoidance components. The current results suggest that sex differences among opioid addicts are not necessarily always observed in tasks that assess the ability to learn to avoid aversive outcomes; future work should assess whether it is specifically the feature of competing approach-avoidance components, or some other difference between the tasks, that determines whether sex differences occur.

Obviously, a key limitation of the current study is that it cannot dissociate between the long-term effects of opioid (heroin) addiction vs. the effects of current maintenance treatment on decision-making; in addition, it would be important to know whether the group differences in recency emerge following chronic opioid exposure leading to dysregulation of the endogenous opioid receptor system, or represent pre-existing biases that perhaps confer risk of becoming addicted in the first place. Thus, future studies should examine this issue further, possibly controlling for the acute effects of opioid administration, as well as comparing with individuals who are addicted to other, non-opioid drugs. Additional studies are also indicated to investigate whether the same pattern of altered recency bias occurs in other forms of addiction, or is a unique feature of opioid addiction.

Another key limitation of the current study is that our opioid-addicted group included individuals reporting comorbid DSM-IV axis I disorders, particularly schizophrenia or schizophrenia-related disorders (9 of 45, 20%), but also mood, anxiety, and personality disorders. Such comorbidity rates are comparable to, or a little lower than, rates observed in other studies of treatment-seeking opioid users (Brooner, King, Kidorf, Schmidt, & Bigelow, 1997; Milby et al., 1996; Strain, 2002), or indeed in our own prior study with a sample drawn from the same population as the current study (Sheynin et al., 2016/in press). Despite this low rate of inclusion of individuals with comorbidity, since feedback-based learning and decision-making are affected in schizophrenia, depression, and mood disorders (for good recent reviews, see Haber & Behrens, 2014; Whitton, Treadway, & Pizzagalli, 2015), these comorbidities could potentially have affected behavior in the current study. Further, the medications used to treat these disorders could affect behavior; in fact, a prior study using this same probabilistic task has shown effects of antidepressant medication on task performance (Herzallah et al., 2013). Although the current study was underpowered to examine comorbidities explicitly, comparison of patients reporting presence vs. absence of comorbidities revealed no differences approaching statistical significance on any behavioral or modeling result. Similarly, when analyses were repeated for only those subjects without comorbidity, all the reported group differences were unchanged. Still, future studies could profitably target groups of opioid-addicted individuals with vs. without different diagnosed comorbidities.

Other limitations of the current study include demographic characteristics that often differ between patients and healthy controls and might have an effect on their cognition (e.g., Le Carret, Lafont, Mayo, & Fabrigoule, 2003). Indeed, in the current study addicts had lower education levels than the controls. While we controlled for this variable in our analysis, future studies should collect additional information (e.g., socioeconomic status) to better address such differences. Lastly, the optimal performance (particularly on reward trials) demonstrated in the current study was fairly low (Figure 2B-C). While such performance is consistent with most of the previous reports which examined putatively healthy individuals on this task (Sheynin et al., 2013; Myers et al., 2013; Piray et al., 2014; Moustafa et al., 2015), it is not always the case (Bódi et al., 2009). As this is the first study to test Australian subjects on this task, the discrepancy could result from testing-site variability. Future studies should follow up on this idea, possibly by conducting a multi-site comparison.

Overall, the results of this study suggest that, on a task where opioid-addicted individuals do not show behavioral impairment, they nevertheless differ from controls in the underlying mechanisms of decision-making. Specifically, on a probabilistic task where outcomes sometimes violate expectancies, opioid-addicted individuals are more likely to “chase reward” rather than “sticking by” response rules that have proven optimal over the long term. This tendency may be linked with the addictive power of opioid drugs; however, further evidence is required in order to clarify the exact nature of the interrelation between decision-making and addiction.

Acknowledgments

This work was partially supported by the NSF/NIH Collaborative Research in Computational Neuroscience (CRCNS) Program and by NIAAA (R01 AA018737). The views in this paper are those of the authors and do not represent the official views of the Department of Veterans Affairs or the U.S. Government.

References

- Baldacchino A, Balfour DJ, Passetti F, Humphris G, Matthews K. Neuropsychological consequences of chronic opioid use: A quantitative review and meta-analysis. Neuroscience & Biobehavioral Reviews. 2012;36(9):2056–2068. doi: 10.1016/j.neubiorev.2012.06.006.

- Barto AG, Sutton RS, Anderson CW. Neuronlike adaptive elements that can solve difficult learning control problems. IEEE Transactions on Systems, Man and Cybernetics. 1983;SMC-13(5):834–846.

- Bauer AS, Timpe JC, Edmonds EC, Bechara A, Tranel D, Denburg NL. Myopia for the future or hypersensitivity to reward? Age-related changes in decision making on the Iowa Gambling Task. Emotion. 2013;13(1):19–24. doi: 10.1037/a0029970.

- Bentzley BS, Barth KS, Back SE, Book SW. Discontinuation of buprenorphine maintenance therapy: Perspectives and outcomes. Journal of Substance Abuse Treatment. 2015;52:48–57. doi: 10.1016/j.jsat.2014.12.011.

- Bódi N, Kéri S, Nagy H, Moustafa A, Myers CE, Daw N, Gluck MA. Reward-learning and the novelty-seeking personality: a between- and within-subjects study of the effects of dopamine agonists on young Parkinson's patients. Brain. 2009;132(Pt 9):2385–2395. doi: 10.1093/brain/awp094.

- Brooner RK, King VL, Kidorf M, Schmidt CW, Bigelow GE. Psychiatric and substance use comorbidity among treatment-seeking opioid abusers. Archives of General Psychiatry. 1997;54:71–80. doi: 10.1001/archpsyc.1997.01830130077015.

- Chase HW, Frank MJ, Michael A, Bullmore ET, Sahakian BJ, Robbins TW. Approach and avoidance learning in patients with major depression and healthy controls: Relation to anhedonia. Psychological Medicine. 2010;40:433–440. doi: 10.1017/S0033291709990468.

- Dayan P, Balleine BW. Reward, motivation, and reinforcement learning. Neuron. 2002;36(2):285–298. doi: 10.1016/s0896-6273(02)00963-7.

- de Ruiter MB, Veltman DJ, Goudriaan AE, Oosterlaan J, Sjoerds Z, van den Brink W. Response perseveration and ventral prefrontal sensitivity to reward and punishment in male problem gamblers and smokers. Neuropsychopharmacology. 2009;34(4):1027–1038. doi: 10.1038/npp.2008.175.

- Dempster AP, Laird NM, Rubin DB. Maximum likelihood from incomplete data via the EM algorithm. Journal of the Royal Statistical Society: Series B (Statistical Methodology). 1977;39:1–38.

- Fiorillo C, Tobler P, Schultz W. Discrete coding of reward probability and uncertainty by dopamine neurons. Science. 2003;299:1898–1902. doi: 10.1126/science.1077349.

- Frank MJ, Moustafa AA, Haughey HM, Curran T, Hutchison KE. Genetic triple dissociation reveals multiple roles for dopamine in reinforcement learning. Proceedings of the National Academy of Sciences USA. 2007;104(41):16311–16316. doi: 10.1073/pnas.0706111104.

- Frank MJ, Seeberger L, O'Reilly R. By carrot or by stick: Cognitive reinforcement learning in Parkinsonism. Science. 2004;306(5703):1940–1943. doi: 10.1126/science.1102941.

- Gold JM, Waltz JA, Matveeva TM, Kasanova Z, Strauss GP, Herbener ES, Frank MJ. Negative symptoms and the failure to represent the expected reward value of actions. Archives of General Psychiatry. 2012;69(2):129–138. doi: 10.1001/archgenpsychiatry.2011.1269.

- Haber SN, Behrens TE. The neural network underlying incentive-based learning: Implications for interpreting circuit disruptions in psychiatric disorders. Neuron. 2014;83:1019–1039. doi: 10.1016/j.neuron.2014.08.031.

- Herzallah MM, Moustafa AA, Natsheh JY, Abdellatif SM, Taha MB, Tayem YI, Gluck MA. Learning from negative feedback in patients with major depressive disorder is attenuated by SSRI antidepressants. Frontiers in Integrative Neuroscience. 2013;7:67. doi: 10.3389/fnint.2013.00067.

- Hollerman J, Schultz W. Dopamine neurons report an error in the temporal prediction of reward during learning. Nature Neuroscience. 1998;1(4):304–309. doi: 10.1038/1124.

- Huys QJ, Eshel N, O'Nions E, Sheridan L, Dayan P, Roiser JP. Bonsai trees in your head: How the Pavlovian system sculpts goal-directed choices by pruning decision trees. PLoS Computational Biology. 2012;8:e1002410. doi: 10.1371/journal.pcbi.1002410.

- Le Carret N, Lafont S, Mayo W, Fabrigoule C. The effect of education on cognitive performances and its implication for the constitution of the cognitive reserve. Developmental Neuropsychology. 2003;23:317–337. doi: 10.1207/S15326942DN2303_1.

- Liang CS, Ho PS, Yen CH, Kuo SC, Huang CC, Chen CY, Huang SY. Reduced striatal dopamine transporter density associated with working memory deficits in opioid-dependent male subjects: a SPECT study. Addiction Biology. 2014 Dec 1. doi: 10.1111/adb.12203. [Epub ahead of print]

- Lyvers M, Yakimoff M. Neuropsychological correlates of opioid dependence and withdrawal. Addictive Behaviors. 2003;28(3):605–611. doi: 10.1016/s0306-4603(01)00253-2.

- MacKay DJC. Information theory, inference, and learning algorithms. Cambridge University Press; Cambridge, UK: 2003.

- Mattfeld AT, Gluck MA, Stark CEL. Functional specialization within the striatum along both the dorsal/ventral and anterior/posterior axes during associative learning via reward and punishment. Learning and Memory. 2011;18:703–711. doi: 10.1101/lm.022889.111.

- Milby JB, Sims MK, Khuder S, Schumacher JE, Huggins N, McLellan AT, Haas N. Psychiatric comorbidity: Prevalence in methadone maintenance treatment. American Journal of Drug and Alcohol Abuse. 1996;22:95–107. doi: 10.3109/00952999609001647.

- Moustafa AA, Gluck MA, Herzallah MM, Myers CE. The influence of trial order on learning from reward vs. punishment in a probabilistic categorization task: Experimental and computational analysis. Frontiers in Behavioral Neuroscience. 2015;9:153. doi: 10.3389/fnbeh.2015.00153.

- Myers CE, Moustafa AA, Sheynin J, VanMeenen K, Gilbertson MW, Orr SP, Servatius RJ. Learning to obtain reward, but not avoid punishment, is affected by presence of PTSD symptoms in male veterans: Empirical data and computational model. PLoS One. 2013;8(8):e72508. doi: 10.1371/journal.pone.0072508.

- National Institute on Drug Abuse. Prescription and over-the-counter medications. 2014.

- Petry NM, Bickel WK, Arnett M. Shortened time horizons and insensitivity to future consequences in heroin addicts. Addiction. 1998;93(5):729–738. doi: 10.1046/j.1360-0443.1998.9357298.x.

- Piray P, Zeighami Y, Bahrami F, Eissa AM, Hewedi DH, Moustafa AA. Impulse control disorders in Parkinson's disease are associated with dysfunction in stimulus valuation but not action valuation. Journal of Neuroscience. 2014;34(23):7814–7824. doi: 10.1523/JNEUROSCI.4063-13.2014.

- Prevost C, McCabe JA, Jessup RK, Bossaerts P, O'Doherty JP. Differential contributions of human amygdalar subregions in the computations underlying reward and avoidance learning. European Journal of Neuroscience. 2011;34:134–145. doi: 10.1111/j.1460-9568.2011.07686.x.

- Rutledge RB, Lazzaro SC, Lau B, Myers CE, Gluck MA, Glimcher PW. Dopaminergic drugs modulate learning rates and perseveration in Parkinson's patients in a dynamic foraging task. Journal of Neuroscience. 2009;29:15104–15114. doi: 10.1523/JNEUROSCI.3524-09.2009.

- Schiltenwolf M, Akbar M, Hug A, Pfüller U, Gantz S, Neubauer E, Wang H. Evidence of specific cognitive deficits in patients with chronic low back pain under long-term substitution treatment of opioids. Pain Physician. 2014;17(1):9–20.

- Schultz W. Predictive reward signal of dopamine neurons. Journal of Neurophysiology. 1998;80:1–27. doi: 10.1152/jn.1998.80.1.1.

- Sheynin J, Moustafa AA, Beck KD, Servatius RJ, Casbolt P, Haber P, Myers CE. Exaggerated acquisition and resistance to extinction of avoidance behavior in heroin-dependent males (but not females). Journal of Clinical Psychiatry. 2016/in press. doi: 10.4088/JCP.14m09284.

- Sheynin J, Moustafa AA, Beck KD, Servatius RJ, Myers CE. Testing the role of reward and punishment sensitivity in avoidance behavior: A computational modeling approach. Behavioural Brain Research. 2015;283:121–138. doi: 10.1016/j.bbr.2015.01.033.

- Sheynin J, Shikari S, Gluck MA, Moustafa AA, Servatius RJ, Myers CE. Enhanced avoidance learning in behaviorally-inhibited young men and women. Stress. 2013;16(3):289–299. doi: 10.3109/10253890.2012.744391.

- Simon JR, Howard JH, Howard DV. Adult age differences in learning from positive and negative probabilistic feedback. Neuropsychology. 2010;24(4):534–541. doi: 10.1037/a0018652.

- Smyth BP, Barry J, Keenan E, Ducray K. Lapse and relapse following inpatient treatment of opiate dependence. Irish Medical Journal. 2010;103(6):176–179.

- Somlai Z, Moustafa AA, Keri S, Myers CE, Gluck MA. General functioning predicts reward and punishment learning in schizophrenia. Schizophrenia Research. 2011;127(1-3):131–136. doi: 10.1016/j.schres.2010.07.028.

- Spanagel R, Herz A, Shippenberg TS. Opposing tonically active endogenous opioid systems modulate the mesolimbic dopaminergic pathway. Proceedings of the National Academy of Sciences of the United States of America. 1992;89(6):2046–2050. doi: 10.1073/pnas.89.6.2046.

- Strain EC. Assessment and treatment of comorbid psychiatric disorders in opioid dependent patients. Clinical Journal of Pain. 2002;18(4 Suppl):S14–S27. doi: 10.1097/00002508-200207001-00003.

- Sutton RS. Learning to predict by the methods of temporal differences. Machine Learning. 1988;3:9–44.

- Sutton RS, Barto AG. Reinforcement learning: An introduction. MIT Press; Cambridge, MA: 1998.

- van Ree JM, Gerrits MA, Vanderschuren LJ. Opioids, reward and addiction: An encounter of biology, psychology, and medicine. Pharmacological Reviews. 1999;51(2):341–396.

- Vassileva J, Ahn WY, Weber KM, Busemeyer JR, Stout JC, Gonzalez R, Cohen MH. Computational modeling reveals distinct effects of HIV and history of drug use on decision-making processes in women. PLoS One. 2013;8(8):e68962. doi: 10.1371/journal.pone.0068962.

- Vo HT, Schacht R, Mintzer M, Fishman M. Working memory impairment in cannabis- and opioid-dependent adolescents. Substance Abuse. 2014;35(4):387–390. doi: 10.1080/08897077.2014.954027.

- Volkow ND, Fowler JS, Wang JJ, Swanson JM. Dopamine in drug abuse and addiction: Results from imaging studies and treatment implications. Molecular Psychiatry. 2004;9(6):557–569. doi: 10.1038/sj.mp.4001507.

- Watkins CJCH, Dayan P. Q-learning. Machine Learning. 1992;8:279–292.

- Whitton AE, Treadway MT, Pizzagalli DA. Reward processing dysfunction in major depression, bipolar disorder and schizophrenia. Current Opinion in Psychiatry. 2015;28:7–12. doi: 10.1097/YCO.0000000000000122.

- Widrow B, Gupta N, Maitra S. Punish/reward: Learning with a critic in adaptive systems. IEEE Transactions on Systems, Man and Cybernetics. 1973;SMC-3(5):455–465.

- Woicik PA, Urban C, Alia-Klein N, Henry A, Maloney T, Telang F, Goldstein RZ. A pattern of perseveration in cocaine addiction may reveal neurocognitive processes implicit in the Wisconsin Card Sorting Test. Neuropsychologia. 2011;49(7):1660–1669. doi: 10.1016/j.neuropsychologia.2011.02.037.