Abstract

A common statistical situation concerns inferring an unknown distribution Q(x) from a known distribution P(y), where X (dimension n) and Y (dimension m) have a known functional relationship. Most commonly, n ≤ m, and the task is relatively straightforward for well-defined functional relationships. For example, if Y1 and Y2 are independent random variables, each uniform on [0, 1], one can determine the distribution of X = Y1 + Y2; here m = 2 and n = 1. However, biological and physical situations can arise where n > m and the functional relation Y→X is non-unique. In general, in the absence of additional information, there is no unique solution for Q in those cases. Nevertheless, one may still want to draw some inferences about Q. To this end, we propose a novel maximum entropy (MaxEnt) approach that estimates Q(x) based only on the available data, namely, P(y). The method has the additional advantage that one does not need to explicitly calculate the Lagrange multipliers. In this paper, we develop the approach for both discrete and continuous probability distributions and demonstrate its validity. We also give an intuitive justification and illustrate the approach with examples.

Keywords: maximum entropy, joint probability distribution, microbial ecology

1. Introduction

We are often interested in quantities that are difficult or even impossible to measure directly. In many cases, we may be fortunate enough to find measurable quantities that are related to the variables of interest. Such examples are abundant in nature. Consider a community of microbes coexisting in humans or other metazoan species [1,2]. It is possible to measure the relative abundances of different species in the microbial community in individual hosts, but it can be difficult to directly measure the parameters that regulate interspecies interactions in these diverse communities. Knowing the quantitative values of the parameters representing microbial interactions is of great interest, both for developing therapeutic strategies against diseases such as colitis and for basic understanding, as we have discussed in [3].

Inference of such unknown variables from the available data is the subject of a vast literature in diverse disciplines, including statistics, information theory, and machine learning [4–7]. In this paper, we are interested in a specific problem in which a large number of unknown variables are related to a smaller number of variables whose joint probability distribution is known from measurements.

In the above example, parameters describing microbial interactions could represent such unknown variables, and their number could be substantially larger than the number of measurable variables, such as abundances of distinct microbial species. The distribution of abundances of microbial species in a host population can be calculated from measurements performed on a large number of individual subjects. The challenge is to estimate the distribution of microbial interaction parameters using the distribution of microbial abundances.

These inference problems can be dealt with by Maximum Entropy (MaxEnt)-based methods, which maximize an entropy function subject to constraints provided by expectation values calculated from measured data [4,5,7,8]. In standard applications of MaxEnt, averages, covariances, and sometimes higher-order moments calculated from the data are used to infer such distributions [4,5,7]. Including a larger number of constraints in the MaxEnt formalism requires calculating a large number of Lagrange multipliers by solving an equal number of nonlinear equations, which can pose a great computational challenge [9]. Here we propose a novel MaxEnt-based method to infer the distribution of the unknown variables. Our method uses the distribution of the measured variables and provides an elegant MaxEnt solution that bypasses direct calculation of the Lagrange multipliers. Instead, the inferred distribution is expressed in terms of a degeneracy factor, given by a closed-form expression, which depends only on the symmetry properties of the relation between the measured and the unknown variables.

More generally, the above problem relates to calculating a probability function of X from the probability function of Y, where X and Y are both random variables with a functional relationship between them. This could involve either discrete or continuous random variables. Standard textbooks [10] in probability theory usually deal with cases where (a) the X variables are related to the Y variables by a well-defined functional relationship, x = g(y), with the distribution of the Y variables known, and (b) X resides in a space (dimension n) whose dimension is no higher than that of the Y space (dimension m). However, it is not clear how to extend these standard calculations to cases where multiple values of the X variables are associated with the same value of the Y variables. This situation easily arises when n is greater than m. We address this problem here, estimating Q(x) from P(y) when n > m, i.e., inferring the distribution of the higher-dimensional variable from that of the lower-dimensional one. We show that when the variables are discrete, no unique solution exists for Q(x), as the system is underdetermined. However, the MaxEnt-based method provides a solution in this situation that is constrained only by the available information (P(y) in this case) and is free from any additional assumptions. We then extend the results to continuous variables.

2. The Problem

We state the problem, illustrating it in this section with discrete random variables. Consider a case in which n different random variables, x1, …, xn, are related to m (n > m) different variables, y1, …, ym, as {yi = fi(x1, …, xn)}, i = 1, …, m (f⃗: ℝⁿ → ℝᵐ). We know the probabilities for the y variables and want to reach some conclusion about the probabilities of the x variables.

We introduce a few terms and notations borrowed from physics that we will use to simplify the mathematical description [11]. A state in the x (or y) space refers to a particular set of values of the variables x1, …, xn (or y1, …, ym). We denote the set of these states as {x1, …, xn} or {y1, …, ym}. The vector notations x⃗ = (x1, ⋯, xn) and y⃗ = (y1, ⋯, ym) will be used to describe expressions compactly when required. For the same reason, when we use f without a subscript, it will refer to a vector of f values, i.e., y⃗ = f⃗(x⃗) = (f1(x⃗), …, fm(x⃗)). In standard textbook examples in elementary probability theory and physics, we are provided with the probability distribution function P(y⃗), where X is related to Y by a well-defined function, x⃗ = g⃗(y⃗). Such cases are common when Y resides in a space of higher or equal dimension than X (m ≥ n). Then Q(x⃗), with lower dimension n, is calculated using

$$Q(x_1,\dots,x_n) = \sum_{\{y_1,\dots,y_m\}:\ \vec g(\vec y) = \vec x} P(y_1,\dots,y_m) \tag{1a}$$

The summation in Equation (1a) is performed over only those states {y1, …, ym} that correspond to the specified state x⃗. However, Equation (1a) does not apply, even when m ≥ n, if multiple values of the X variables are associated with the same values of the Y variables, e.g., x² = y, where −∞ < x < ∞ and 0 ≤ y < ∞. The MaxEnt formalism developed here can be used to estimate Q(x⃗) from P(y⃗) in such cases (see Appendix A1).

Here we are interested in the inverse problem: we are still provided with the probability distribution P(y1, …, ym) and need to estimate the probability distribution Q(x1, …, xn), but now m < n. In this situation, multiple values of the unknown variable X are associated with the same values of observable Y variables and no unique solution for Q(x1, …, xn) exists as the system is underdetermined. Instead of Equation (1a), we use this equation:

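$$P(y_1,\dots,y_m) = \sum_{\{x_1,\dots,x_n\}:\ \vec f(\vec x) = \vec y} Q(x_1,\dots,x_n) = \sum_{\{x_1,\dots,x_n\}} Q(x_1,\dots,x_n)\prod_{i=1}^{m}\delta_{y_i,\,f_i(\vec x)} \tag{1b}$$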

The constraints imposed on the summation in the last term by the relations y⃗ = f⃗(x⃗) between the states in x and y are incorporated using the Kronecker delta function $\delta_{a,b}$, where $\delta_{a,b} = 1$ when a = b and $\delta_{a,b} = 0$ when a ≠ b. For pedagogical reasons, we elucidate the problem of non-uniqueness in the solutions using a simple example, which can be easily generalized.

Example 1

We start with a discrete random variable y with known distribution P(y) = 1/3 for y = 0, 1, 2. Then assume that discrete random variables x1 and x2 are related to y as y = f(x1,x2) = x1 + x2. We restrict x1 and x2 to nonnegative integers; hence, x1 and x2 can each take only the three values 0, 1, and 2.

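It follows from Equation (1b) that Q(x1,x2) is related to P(y) as

$$P(y) = \sum_{x_1=0}^{2}\sum_{x_2=0}^{2} Q(x_1,x_2)\,\delta_{y,\,x_1+x_2}.$$

Hence

$$\begin{aligned} P(0) &= Q(0,0) = 1/3,\\ P(1) &= Q(1,0) + Q(0,1) = 1/3,\\ P(2) &= Q(2,0) + Q(1,1) + Q(0,2) = 1/3. \end{aligned} \tag{2}$$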

The above relation provides three independent linear equations for six unknowns: Q(0,0), Q(1,0), Q(0,1), Q(1,1), Q(2,0), and Q(0,2). Note that the normalization condition $\sum_{x_1,x_2} Q(x_1,x_2) = 1$ is satisfied by these linear equations, because their left-hand sides sum to one. Since probabilities are nonnegative, this also forces Q(1,2) = Q(2,1) = Q(2,2) = 0. Therefore, the linear system in Equation (2) is underdetermined, and Q(x1,x2) cannot be found uniquely from it. For example, Q(0,1) and Q(1,0) could each equal 1/6, or Q(0,1) could equal 1/12 with Q(1,0) = 1/4, and so on.
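The underdetermination can be checked directly. The sketch below uses exact rational arithmetic from Python's `fractions` module. It encodes Equation (1b) for Example 1 and confirms that the two assignments mentioned above both reproduce P(y). The helper names `implied_P` and `matches` are illustrative only. The value 1/9 for each y = 2 state is just one feasible choice among many.

```python
from fractions import Fraction
from itertools import product

# Example 1: y = x1 + x2 with x1, x2 in {0, 1, 2}; P(y) = 1/3 for y = 0, 1, 2.
P = {0: Fraction(1, 3), 1: Fraction(1, 3), 2: Fraction(1, 3)}
STATES = list(product(range(3), repeat=2))  # all nine (x1, x2) pairs

def implied_P(Q):
    """Equation (1b): P(y) = sum over x of Q(x) * delta_{y, x1 + x2}."""
    out = {}
    for x in STATES:
        y = x[0] + x[1]
        out[y] = out.get(y, Fraction(0)) + Q.get(x, Fraction(0))
    return out

def matches(Q):
    """True if Q reproduces P(y) for y = 0..4 (P is zero for y = 3, 4)."""
    implied = implied_P(Q)
    return all(implied[y] == P.get(y, 0) for y in range(5))

# Two different distributions that both satisfy Equation (2).
Q_a = {(0, 0): Fraction(1, 3), (0, 1): Fraction(1, 6), (1, 0): Fraction(1, 6),
       (2, 0): Fraction(1, 9), (1, 1): Fraction(1, 9), (0, 2): Fraction(1, 9)}
Q_b = dict(Q_a)
Q_b[(0, 1)], Q_b[(1, 0)] = Fraction(1, 12), Fraction(1, 4)

print(matches(Q_a), matches(Q_b), Q_a == Q_b)
```

Exact fractions are used so that the equality checks in `matches` are not affected by floating-point rounding.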

This issue of non-uniqueness is general and holds whenever the number of constraints imposed by P(y1, …, ym) is smaller than the number of unknowns Q(x1, …, xn). For example, suppose each component of y (or x) can take L (or L₁) discrete values, every state in x maps to a state in y, and every state in y is reached. Then the system is underdetermined whenever Lᵐ < L₁ⁿ.

3. A MaxEnt Based Solution (Discrete)

In this section we propose a solution of this problem using a Maximum Entropy based principle, for discrete variables. We can define Shannon’s entropy [4,5,7], S, given by

$$S = -\sum_{\{x_1,\dots,x_n\}} Q(\vec x)\,\ln Q(\vec x) \tag{3}$$

and then maximize S with the constraint that Q(x⃗) should generate the distribution P(y⃗) in Equation (1b).

Equation (1b) describes the set of constraints spanning the distinct states in the y space. For example, when each element of the y vector takes binary values (+1 or −1), there are 2ᵐ distinct states in the y space, providing 2ᵐ constraint equations. We introduce a Lagrange multiplier for each of the constraint equations, which we denote compactly as a function, λ(y1, …, ym) or λ(y⃗), describing a map from ℝᵐ → ℝ. That is, every possible y vector is associated with a unique value of λ. Also note that when P(y⃗) is normalized, Q(x⃗) is normalized as a consequence of Equation (1b); therefore, we do not use an additional Lagrange multiplier for the normalization condition of Q(x⃗).

The distribution Q̂(x⃗) that maximizes S subject to the constraints can be calculated as follows. Perturb Q(x⃗) slightly from Q̂(x⃗), i.e., Q(x⃗) = Q̂(x⃗) + δQ(x⃗). Expand S in Equation (3) and the constraints in Equation (1b) in terms of δQ(x⃗), and set the terms proportional to δQ(x⃗) to zero (the optimization condition). This yields Q̂(x⃗) in terms of the Lagrange multipliers, i.e.,

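$$\sum_{\{x_1,\dots,x_n\}} \delta Q(\vec x)\left[-\ln\hat Q(\vec x) - 1 - \sum_{\{y_1,\dots,y_m\}} \lambda(\vec y)\prod_{i=1}^{m}\delta_{y_i,\,f_i(\vec x)}\right] = 0 \tag{4}$$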

One can confirm that this stationary point is a maximum. The second derivative of S with respect to Q(x⃗) at Q(x⃗) = Q̂(x⃗) is −1/Q̂(x⃗) < 0, while the constraints, being linear in Q, contribute no terms of order (δQ)². Thus, Q̂(x⃗) maximizes S. The method used here for maximizing S subject to the constraints is a standard one [4,11].

Since δQ(x⃗) is arbitrary, the bracket in Equation (4) must vanish, which gives the solution

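$$\hat Q(\vec x) = \exp\left[-1 - \sum_{\{y_1,\dots,y_m\}} \lambda(\vec y)\prod_{i=1}^{m}\delta_{y_i,\,f_i(\vec x)}\right] = e^{-1-\lambda(\vec f(\vec x))} \tag{5}$$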

Note that the partition function (usually denoted as Z in textbooks [4,11]) does not arise in the above solution, because the normalization condition for Q(x⃗) is incorporated in the constraint equations in Equation (1b). For pedagogical reasons, we show the derivation of Equation (5) for Example 1 in Appendix A2. From the above solution (Equation (5)), we immediately observe two main features of Q̂(x⃗):

The values of Q̂(x⃗) for the states {x1, …, xn} that map to the same state y1, …, ym via {fi(x⃗)} are equal to each other. In the simple example above, this implies Q̂(1,0) = Q̂(0,1) and Q̂(1,1) = Q̂(0,2) = Q̂(2,0).

Q̂(x⃗) inherits all the symmetry properties present in the relation {yi = fi(x1, …, xn)}. In the simple example, the relation between y and x is symmetric under permutation of x1 and x2, implying Q̂(x1,x2) = Q̂(x2,x1).

We will take advantage of the above properties to avoid direct calculation of the Lagrange multipliers in Equation (4). For the states {x̃1, ⋯, x̃n} in the x space that map to the same state ỹ1, ⋯, ỹm in the y space, Equation (1b) can be rewritten as

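$$P(\tilde y_1,\dots,\tilde y_m) = \sum_{\{\tilde x_1,\dots,\tilde x_n\}} \hat Q(\tilde x_1,\dots,\tilde x_n) = k(\tilde y_1,\dots,\tilde y_m)\,\hat Q(\tilde x_1,\dots,\tilde x_n), \tag{6a}$$

$$\hat Q(\tilde x_1,\dots,\tilde x_n) = \frac{P(\tilde y_1,\dots,\tilde y_m)}{k(\tilde y_1,\dots,\tilde y_m)}, \tag{6b}$$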

where k(ỹ1, ⋯, ỹm), or k(ỹ⃗), gives the total number of distinct states {x̃1, ⋯, x̃n} in the x space that correspond to the state ỹ1, ⋯, ỹm. Since all the states in {x̃1, ⋯, x̃n} have the same probability, the second step in Equation (6a) replaces the summation with k(ỹ⃗) multiplied by the common probability Q̂(x̃1, ⋯, x̃n) of any state in {x̃1, ⋯, x̃n}. We call k(ỹ⃗) the degeneracy factor, borrowing similar terminology from physics. k(ỹ⃗) can be expressed in terms of Kronecker delta functions as

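$$k(\tilde y_1,\dots,\tilde y_m) = \sum_{\{x_1,\dots,x_n\}} \prod_{i=1}^{m} \delta_{\tilde y_i,\,f_i(\vec x)} \tag{7}$$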

Note that the degeneracy factor in Equation (7) depends only on the relationship between {x⃗} and {y⃗} and does not depend on the probability distributions P and Q. In our simple example above, since y = x1 + x2, the states (0,1) and (1,0) both correspond to y = 1; therefore, k(1) = 2.

Equation (6b) is the main result of this section. It describes the inferred distribution Q̂(x⃗) in terms of the known probability distribution P(y⃗) and k(y⃗), which can be calculated from the given relation between y and x. Thus, the calculation of Q̂(x⃗) in Equation (6b) does not involve direct evaluation of the Lagrange multipliers λ(y⃗). The multipliers are related to P(y⃗) and k(y⃗), following Equations (5), (6b), and (7), as

$$\lambda(\vec y) = \ln\frac{k(\vec y)}{P(\vec y)} - 1 \tag{8}$$
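Equations (6b) and (7) translate directly into a brute-force procedure for small discrete problems. The sketch below counts the degeneracy factor by enumerating every x state and then forms Q̂ = P/k without any Lagrange multipliers. The function names `degeneracy` and `maxent_Q` are hypothetical. Enumeration is only practical when the x state space is small. For larger problems, the numerical or Monte Carlo approaches mentioned in the Discussion would be needed.

```python
from collections import Counter
from fractions import Fraction
from itertools import product

def degeneracy(f, domains):
    """Equation (7): k(y) = number of states x (in the product of the
    given finite domains) with f(x) = y, found by brute-force enumeration."""
    return Counter(f(x) for x in product(*domains))

def maxent_Q(P, f, domains):
    """Equation (6b): Q(x) = P(f(x)) / k(f(x)); no Lagrange multipliers needed.
    States whose y has P(y) = 0 (or is absent from P) get probability zero."""
    k = degeneracy(f, domains)
    return {x: Fraction(P.get(f(x), 0)) / k[f(x)] for x in product(*domains)}

# Usage on Example 1: f(x1, x2) = x1 + x2, each x_i in {0, 1, 2}.
domains = [range(3), range(3)]
f = lambda x: x[0] + x[1]
P = {0: Fraction(1, 3), 1: Fraction(1, 3), 2: Fraction(1, 3)}
k = degeneracy(f, domains)
Q_hat = maxent_Q(P, f, domains)
print(dict(k))
print(Q_hat)
```

Because `f` may return a tuple, the same functions apply unchanged when y is multidimensional.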

Example 1, continued

We provide a solution for Example 1 presented above. By simple counting, the degeneracy factors are k(0) = 1, k(1) = 2, and k(2) = 3.

Thus, following Equation (6b), Q̂(0,0) = P(0) = 1/3, Q̂(0,1) = Q̂(1,0) = P(1)/2 = 1/6, and Q̂(2,0) = Q̂(1,1) = Q̂(0,2) = P(2)/3 = 1/9. For more complex problems, the degeneracy factors can be calculated numerically. It is the maximization of the entropy S that makes all the Q̂ values associated with a given y equal.
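To illustrate that the equal split is the entropy maximizer, the sketch below compares S of the MaxEnt solution with S of many other feasible solutions of Equation (2). The alternatives are generated by splitting each P(y) randomly among its states. This is only a numerical spot check under these assumptions, not a proof. The random-splitting scheme and the seed are arbitrary choices.

```python
import math
import random

def entropy(Q):
    """Equation (3): S = -sum Q ln Q, skipping zero-probability states."""
    return -sum(q * math.log(q) for q in Q.values() if q > 0)

# Example 1: states grouped by y = x1 + x2, and P(y) for each group.
groups = {0: [(0, 0)], 1: [(0, 1), (1, 0)], 2: [(2, 0), (1, 1), (0, 2)]}
P = {0: 1 / 3, 1: 1 / 3, 2: 1 / 3}
k = {y: len(xs) for y, xs in groups.items()}  # degeneracy factors 1, 2, 3

# MaxEnt solution, Equation (6b): Q(x) = P(y) / k(y).
Q_hat = {x: P[y] / k[y] for y, xs in groups.items() for x in xs}

def random_feasible(rng):
    """Split each P(y) randomly among its states, so Equation (2) still holds."""
    Q = {}
    for y, xs in groups.items():
        w = [rng.random() for _ in xs]
        total = sum(w)
        for x, wi in zip(xs, w):
            Q[x] = P[y] * wi / total
    return Q

rng = random.Random(0)
S_hat = entropy(Q_hat)
S_others = [entropy(random_feasible(rng)) for _ in range(1000)]
print(S_hat, max(S_others))
```

Analytically, S_hat equals (1/3) ln 162. Every randomly split alternative has smaller entropy, as expected from the concavity of S.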

4. Results for Continuous Variables

The above results can be extended to the case where {Xi} and {Yi} are continuous variables. However, one issue makes a straightforward extension of the discrete calculations to the continuum limit difficult. The issue is related to the continuum limit of the entropy function S in Equation (3). Suppose the summation in Equation (3) is replaced by an integral in the limit of a large number of states, with the step size separating adjacent states decreased to zero. The discrete entropy then diverges, and the differential entropy that remains after the divergent term is removed is unbounded below and can be negative [12]. This problem can be ameliorated by defining a relative entropy, RE, as

$$RE = \int dx_1\cdots dx_n\; q(\vec x)\,\ln\frac{q(\vec x)}{u(\vec x)} \tag{9}$$

where u is a uniform probability density function defined on the same domain as q. RE is always nonnegative and equals zero only when q = u. The results obtained by maximizing S in the previous section can also be derived by minimizing the relative entropy defined above between the discrete distribution Q and a uniform distribution U, with the integral in Equation (9) replaced by a summation over the states in the x space. RE in Equation (9) quantifies the difference between the distribution q(x1, …, xn) and the corresponding uniform distribution.
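A one-line identity makes this equivalence concrete. Assume, for illustration, a finite set of N states in the x space and a uniform U(x⃗) = 1/N on them. Using the normalization of Q, the discrete relative entropy then differs from S only by a constant:

$$RE = \sum_{\{x_1,\dots,x_n\}} Q(\vec x)\,\ln\frac{Q(\vec x)}{1/N} = \ln N + \sum_{\{x_1,\dots,x_n\}} Q(\vec x)\ln Q(\vec x) = \ln N - S.$$

Because ln N does not depend on Q, minimizing RE subject to Equation (1b) is the same problem as maximizing S subject to Equation (1b).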

The definition of RE in Equation (9) still has an issue of defining the uniform distribution when the x variables are unbounded. In some cases, it may be possible to solve the problem by introducing finite upper and lower bounds and then analyzing the results in the limit where the upper (or lower) bound approaches ∞ (or −∞). We will illustrate this approach in Example 4, below. Also, see Example 3 for a comparison.

In the continuum limit, the constraints on q(x1, …, xn), or q(x⃗), imposed by the probability density function (pdf) p(y1, …, ym), or p(y⃗), are given by

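$$p(y_1,\dots,y_m) = \int dx_1\cdots dx_n\; q(\vec x)\prod_{i=1}^{m}\delta\big(y_i - f_i(\vec x)\big) \tag{10}$$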

The Dirac delta function for a single variable x is defined as,

$$\delta(x) = 0 \ \text{ for } x \neq 0, \qquad \int_R \delta(x)\,dx = 1, \tag{11}$$

where the region R contains the point x = 0.

Since the pdf p(y⃗) resides in a lower dimension than q(x⃗), estimating q(x⃗) from p(y⃗) requires solving an underdetermined system.

For continuous variables, we can proceed by minimizing the relative entropy using functional calculus [13,14]. The calculation follows the same logic as in the discrete case, but we show the steps explicitly for clarity and pedagogy.

The relative entropy (RE) in Equation (9) is a functional of q(x⃗). As in the discrete case, if p(y⃗) is normalized, i.e., ∫ dy1 ⋯dym p(y⃗) = 1, then Equations (10) and (11) imply q(x⃗) is normalized as well, i.e.,

$$\int dx_1\cdots dx_n\; q(\vec x) = 1 \tag{12}$$

We introduce a Lagrange multiplier function, λ(y⃗), and construct a functional, Sλ[q], whose minimization minimizes Equation (9) subject to the constraints in Equation (10). Since the normalization condition in Equation (12) follows from Equation (10), we do not treat Equation (12) as a separate constraint.

Sλ[q] is given by,

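$$S_\lambda[q] = \int dx_1\cdots dx_n\; q(\vec x)\ln\frac{q(\vec x)}{u(\vec x)} + \int dy_1\cdots dy_m\;\lambda(\vec y)\left[\int dx_1\cdots dx_n\; q(\vec x)\prod_{i=1}^{m}\delta\big(y_i - f_i(\vec x)\big) - p(\vec y)\right] \tag{13}$$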

To minimize Sλ, we set its functional derivative with respect to q(x⃗) to zero:

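$$\frac{\delta S_\lambda[q]}{\delta q(\vec x)} = \ln\frac{q(\vec x)}{u(\vec x)} + 1 + \lambda\big(\vec f(\vec x)\big) = 0 \tag{14}$$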

In deriving Equation (14), we used the standard relation $\delta q(x')/\delta q(x) = \delta(x' - x)$. For multiple dimensions, this generalizes to $\delta q(\vec x')/\delta q(\vec x) = \prod_{i=1}^{n}\delta(x_i' - x_i)$. The chain rule for derivatives of functions can be easily generalized to functional derivatives [14]. Equation (14) provides the solution that minimizes Equation (13):

$$\hat q(\vec x) = u_0\, e^{-1-\lambda(\vec f(\vec x))} \tag{15}$$

where u0 is the constant density of the uniform distribution u. Note that the {xi} dependence of the solution q̂(x⃗) arises only through f⃗(x⃗).

Substituting Equation (15) in Equation (10),

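$$p(\vec y) = u_0\,e^{-1-\lambda(\vec y)}\,\kappa(\vec y), \qquad\text{so that}\qquad \hat q(\vec x) = \frac{p\big(\vec f(\vec x)\big)}{\kappa\big(\vec f(\vec x)\big)}, \tag{16}$$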

where,

$$\kappa(\vec y) = \int dx_1\cdots dx_n \prod_{i=1}^{m}\delta\big(y_i - f_i(\vec x)\big) \tag{17}$$

The second derivative gives,

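$$\frac{\delta^2 S_\lambda[q]}{\delta q(\vec x)\,\delta q(\vec x')} = \frac{1}{q(\vec x)}\prod_{i=1}^{n}\delta(x_i - x_i') \tag{18}$$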

The second derivative of Sλ in Equation (18) is always positive, since q is positive. Therefore, q̂(x⃗) minimizes the relative entropy in Equation (9) subject to the constraints. Equations (16) and (17) are the main results of this section; they are the counterparts of Equations (6b) and (7) in the discrete case.

We apply the above results for two examples below.

Example 2

Consider a linear relationship between y and x, e.g., y = x1 + x2, where 0 ≤ y < ∞, 0 ≤ x1 < ∞, and 0 ≤ x2 < ∞. Suppose the pdf of y is known to be p(y) = (1/μ) exp(−y/μ). We would like to find the corresponding pdf q(x1,x2), where p and q are related by Equation (10), i.e.,

$$p(y) = \int_0^\infty dx_1\int_0^\infty dx_2\; q(x_1,x_2)\,\delta(y - x_1 - x_2).$$

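According to Equation (17), the degeneracy factor in this case is

$$\kappa(y) = \int_0^\infty dx_1\int_0^\infty dx_2\;\delta(y - x_1 - x_2) = \int_0^y dx_1\int_0^\infty dx_2\;\delta(y - x_1 - x_2) = \int_0^y dx_1\int_{-\infty}^{y-x_1} dz\;\delta(z) = \int_0^y dx_1 = y.$$

The second equality holds because the Dirac delta function is zero outside the region 0 ≤ x1 ≤ y: for x1 > y, the argument y − x1 − x2 is negative for every x2 ≥ 0. The third equality substitutes z = y − x1 − x2. The fourth uses the property of the delta function in Equation (11), $\int_R \delta(z)\,dz = 1$, which applies because the interval (−∞, y − x1] contains z = 0.

Therefore, by Equation (16), $\hat q(x_1,x_2) = \dfrac{p(x_1+x_2)}{\kappa(x_1+x_2)} = \dfrac{e^{-(x_1+x_2)/\mu}}{\mu\,(x_1+x_2)}$.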
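One way to see what q̂ looks like is to sample from it. Because κ(y) = y is the length of the segment {x1 + x2 = y, x1, x2 ≥ 0} measured along x1, the sampling procedure is as follows. First draw y from p. Then place x1 uniformly on [0, y] and set x2 = y − x1. The resulting joint density is p(x1 + x2)/(x1 + x2), i.e., q̂. The sketch below uses this construction. The function name `sample_q_hat` and the value μ = 2 are illustrative assumptions. The reference value 0.8515 for Pr(x1 ≤ μ) comes from the identity (1 − e⁻¹) + E1(1), where E1 is the exponential integral.

```python
import math
import random

def sample_q_hat(mu, n, seed=0):
    """Draw (x1, x2) from q_hat(x1, x2) = exp(-(x1 + x2)/mu) / (mu (x1 + x2)).

    Draw y from p(y) = exp(-y/mu)/mu, then place x1 uniformly on the fibre
    {x1 + x2 = y, x1, x2 >= 0}, whose length in x1 is kappa(y) = y.
    """
    rng = random.Random(seed)
    samples = []
    for _ in range(n):
        y = rng.expovariate(1.0 / mu)  # expovariate takes the rate, 1/mu
        x1 = rng.uniform(0.0, y)
        samples.append((x1, y - x1))
    return samples

mu = 2.0
pts = sample_q_hat(mu, 200_000)
mean_y = sum(a + b for a, b in pts) / len(pts)            # close to mu
frac_x1_below_mu = sum(a <= mu for a, _ in pts) / len(pts)  # close to 0.8515
print(mean_y, frac_x1_below_mu)
```

The same "draw y, then spread uniformly over the fibre" idea reflects the intuition that MaxEnt spreads the uncertainty evenly over all x compatible with a given y.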

Example 3

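Let y = x1² + x2², with 0 ≤ y < ∞ and 0 ≤ x1, x2 < ∞. Then κ(y), as given by Equation (17), is

$$\kappa(y) = \int_0^\infty dx_1\int_0^\infty dx_2\;\delta(y - x_1^2 - x_2^2) = \int_0^{\pi/2} d\theta\int_0^\infty r\,dr\;\delta(y - r^2) = \frac{\pi}{2}\cdot\frac{1}{2}\int_0^\infty ds\;\delta(y - s) = \frac{\pi}{4},$$

using polar coordinates x1 = r cos θ, x2 = r sin θ and the substitution s = r².

Therefore, according to Equation (16),

$$\hat q(x_1,x_2) = \frac{p(x_1^2 + x_2^2)}{\kappa(x_1^2 + x_2^2)} = \frac{4}{\pi}\,p(x_1^2 + x_2^2).$$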
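The same sampling idea works here in polar coordinates. On the fibre x1² + x2² = y, the delta-function measure is (1/2) dθ, which is uniform in θ on [0, π/2]. So one can draw y from p, draw θ uniformly, and set r = √y. The sketch below does this. The example leaves p unspecified, so the choice p(y) = e⁻ʸ is purely an illustrative assumption, as is the function name `sample_example3`. For that choice, E[x1² + x2²] = 1 and E[x1] = Γ(3/2)·(2/π) = 1/√π.

```python
import math
import random

def sample_example3(sample_y, n, seed=0):
    """Draw (x1, x2) from q_hat = (4/pi) p(x1^2 + x2^2), given a sampler for p.

    Draw y from p, then place the point uniformly in angle on the quarter
    circle x1^2 + x2^2 = y (the delta-function measure there is (1/2) dtheta).
    """
    rng = random.Random(seed)
    pts = []
    for _ in range(n):
        y = sample_y(rng)
        theta = rng.uniform(0.0, math.pi / 2)
        r = math.sqrt(y)
        pts.append((r * math.cos(theta), r * math.sin(theta)))
    return pts

# Illustrative choice of p (not from the text): p(y) = exp(-y).
pts = sample_example3(lambda rng: rng.expovariate(1.0), 200_000)
mean_y = sum(a * a + b * b for a, b in pts) / len(pts)  # close to 1
mean_x1 = sum(a for a, _ in pts) / len(pts)             # close to 1/sqrt(pi)
print(mean_y, mean_x1)
```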

In our final example, we illustrate solving the problem by taking the limit when the upper and/or lower bound(s) approach ± ∞, as mentioned near the beginning of this section of the paper.

Example 4

Let y = x1² + x2², with 0 ≤ y ≤ 2L² and 0 ≤ x1, x2 ≤ L. First we calculate κ(y) as given in Equation (17); that is, we need to evaluate the integral

$$\kappa(y) = \int_0^L dx_1\int_0^L dx_2\;\delta(y - x_1^2 - x_2^2).$$

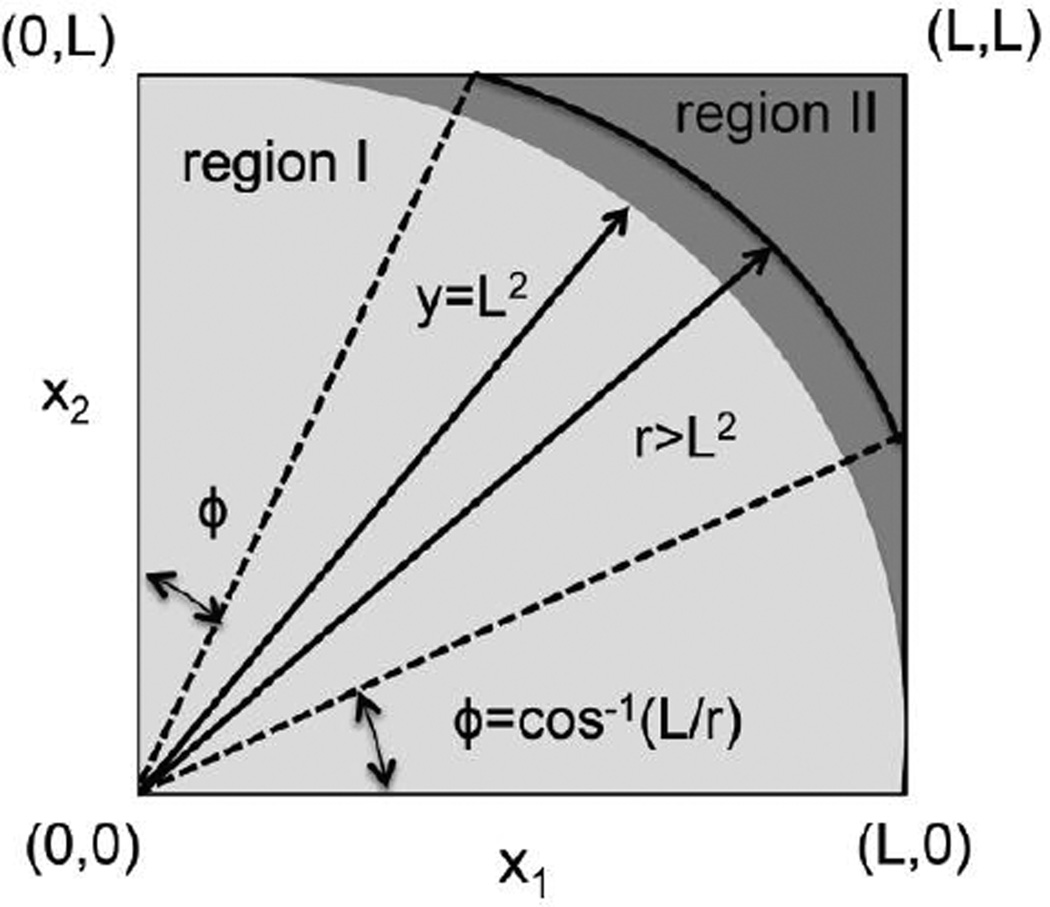

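We divide the region of integration (0 ≤ x1, x2 ≤ L) into two parts, region I (lighter shade) and region II (darker shade), as shown in Figure 1. Region I contains the x1 and x2 values with x1² + x2² ≤ L², and region II contains the remainder of the domain of integration. The integrals over these regions are given by the first and second terms after the second equality sign in the equation below:

$$\kappa(y) = \int_0^L dx_1\int_0^L dx_2\;\delta(y - x_1^2 - x_2^2) = \int_{\mathrm{I}} dx_1\,dx_2\;\delta(y - x_1^2 - x_2^2) + \int_{\mathrm{II}} dx_1\,dx_2\;\delta(y - x_1^2 - x_2^2).$$

In region I, where y ≤ L², only the first term contributes. In polar coordinates (x1 = r cos θ, x2 = r sin θ),

$$\kappa(y) = \int_0^{\pi/2} d\theta\int_0^{L} r\,dr\;\delta(y - r^2) = \frac{\pi}{4}.$$

In region II, where L² ≤ y ≤ 2L², only the second term contributes. The arc r = √y lies inside the square only for cos⁻¹(L/√y) ≤ θ ≤ π/2 − cos⁻¹(L/√y), so

$$\kappa(y) = \frac{1}{2}\int_{\cos^{-1}(L/\sqrt{y})}^{\pi/2-\cos^{-1}(L/\sqrt{y})} d\theta = \frac{\pi}{4} - \cos^{-1}\frac{L}{\sqrt{y}}.$$

Figure 1.

The regions used in calculating the integral for κ(y) in Example 4: region I (lighter shade), where x1² + x2² ≤ L², and region II (darker shade), the remainder of the square 0 ≤ x1, x2 ≤ L.

Here cos⁻¹(L/√y) varies between 0 (on the arc x1² + x2² = L²) and π/4 (at x1 = x2 = L). Note that κ(y) = 0 at x1 = x2 = L (y = 2L²). This is a single point without any degeneracy; therefore, Equation (16) is not valid at this point. Elsewhere, as in Examples 2 and 3,

$$\hat q(x_1,x_2) = \frac{p(x_1^2+x_2^2)}{\kappa(x_1^2+x_2^2)}.$$

Limit L → ∞: When y ≤ L², κ(y) = π/4. Thus, as L → ∞, as long as y remains in region I, we correctly recover the result of Example 3. If y is in region II, we can expand κ(y) in a series in the small parameter ε = (y − L²)/L². This follows from the expansion of cos⁻¹(L/√y) in region II. We can write

$$\frac{L}{\sqrt{y}} = (1+\varepsilon)^{-1/2},$$

where 0 < ε ≤ 1. Using the series expansion of cos⁻¹(x) [15], we find cos⁻¹[(1 + ε)^{−1/2}] = √ε + O(ε^{3/2}). Thus κ(y) = π/4 − √ε + O(ε^{3/2}), which approaches the region I value π/4 as ε → 0.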
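The Discussion mentions Monte Carlo sampling and delta-function discretization as ways to obtain κ(y) when no closed form is available. The sketch below combines the two in the simplest possible way and checks the result against the closed form of Example 4. It replaces δ(z) by a box of width h (height 1/h on |z| < h/2), which is an illustrative assumption and not the specific scheme of [19]. It then estimates the integral in Equation (17) by uniform sampling over the bounded domain. The names `kappa_mc` and `kappa_example4`, the width h = 0.02, the sample count, and L = 1 are hypothetical choices. The estimate carries both statistical error and a small smoothing bias from the finite h.

```python
import math
import random

def kappa_mc(f, y, lows, highs, h=0.02, n_samples=1_000_000, seed=1):
    """Monte Carlo estimate of Equation (17) with delta(z) replaced by a box
    kernel of width h (1/h on |z| < h/2, zero elsewhere):
    kappa(y) ~ volume * Pr(|y - f(x)| < h/2) / h, with x uniform on the box."""
    rng = random.Random(seed)
    volume = math.prod(b - a for a, b in zip(lows, highs))
    hits = 0
    for _ in range(n_samples):
        x = [rng.uniform(a, b) for a, b in zip(lows, highs)]
        if abs(y - f(x)) < h / 2:
            hits += 1
    return volume * hits / (n_samples * h)

def kappa_example4(y, L):
    """Closed form from Example 4: pi/4 in region I, pi/4 - arccos(L/sqrt(y)) in region II."""
    if y <= L * L:
        return math.pi / 4
    return math.pi / 4 - math.acos(L / math.sqrt(y))

L = 1.0
f = lambda x: x[0] ** 2 + x[1] ** 2  # Example 4: y = x1^2 + x2^2 on [0, L]^2
estimates = {y: kappa_mc(f, y, [0.0, 0.0], [L, L]) for y in (0.5, 1.5)}
for y, est in estimates.items():
    print(f"y = {y}: Monte Carlo {est:.4f}, closed form {kappa_example4(y, L):.4f}")
```

The point y = 0.5 lies in region I and y = 1.5 in region II. The same `kappa_mc` function applies to any bounded domain and any `f`, which is where such an estimator would be useful.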

5. Discussion

The problem we have attacked here arose from our work with microbial communities [3], but it also has broader statistical applications. For example, the responses of immune cells to external stimuli involve protein interaction networks, where protein-protein interactions, described by biochemical reaction rates, are not directly accessible for measurement in vivo. Recent developments in single-cell measurement techniques allow many protein abundances to be measured in single cells, making it possible to evaluate the distribution of protein abundances in a cell population [16]. However, it is a challenge to characterize the protein-protein interactions underlying a cellular response, because the number of these interactions could be substantially larger than the number of measured protein species [17]. These problems involve determining the distribution of a random variable X, where Y is another random variable and X and Y have a functional relationship. In the more common situation, X has dimensionality less than or equal to that of Y, and there is often a unique solution. In contrast, we considered here the case where the dimensionality of X is greater than that of Y, so there is no unique solution to the problem.

Since there is no unique solution, we propose taking a MaxEnt approach, as a way of "spreading out the uncertainty" as evenly as possible. In the discrete case, intuition suggests that if k values of Q sum to a given value of P, then the solution that makes the fewest additional assumptions is for each Q to equal P/k. Our MaxEnt results for the discrete case confirm this intuition. In the continuous case, the intuition is not as obvious, but the MaxEnt solution captures the same idea. Instead of dividing P by the integer k(y⃗), we divide p by κ(y⃗). When y has dimension 1, κ is given by $\kappa(y) = \int dx_1\cdots dx_n\,\delta\big(y - f(\vec x)\big)$, and more generally by Equation (17). This use of the Dirac delta function has a similar effect of spreading out the uncertainty evenly.

Estimating the distribution Q(x) does not require explicit calculation of the Lagrange multipliers or the partition sum. Rather, Q(x) is evaluated directly from Equation (6b) (or Equation (16) in the continuous case). The only inputs are the measured P(y) (or p(y)) and k(y) (or κ(y)), which depends only on the relationship y = f(x). In standard MaxEnt applications, where constraints are imposed by the average values and other moments of the data, inference of probability distributions requires evaluating the Lagrange multipliers and the partition sum Z. This involves solving a set of nonlinear equations that relate the Lagrange multipliers, through Z, to the measured moments. These quantities are usually calculated numerically, which can pose a technical challenge when the variables reside in high dimensions. In our case, we avoid these calculations and provide a solution for Q(x) as a closed analytical expression, which is general and thus applicable to any well-behaved example. A limitation is that calculating the degeneracy factor k(y) (or κ(y) in the continuous case) can be challenging in higher dimensions and for complicated relations between y and x. Monte Carlo sampling techniques [18] and discretization schemes for Dirac delta functions [19] can be helpful in that regard.

Acknowledgments

The work is supported by a grant from NIGMS (1R01GM103612-01A1) to Jayajit Das. Jayajit Das is also partially supported by The Research Institute at the Nationwide Children’s Hospital and a grant from the Ohio Supercomputer Center (OSC). Susan E. Hodge is supported by The Research Institute at the Nationwide Children’s Hospital. Jayajit Das and Sayak Mukherjee thank Aleya Dhanji for carrying out preliminary calculations related to the project.

The funding sponsors had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript; or in the decision to publish the results.

Appendix

A1. An example of MaxEnt for y = f(x) when n ≤ m

Consider the relation y = x², where y and x are integers, 0 ≤ y ≤ 1, and −1 ≤ x ≤ 1. Thus, both x = ±1 are associated with y = 1. The distribution of X, Q(x), is related to the distribution of Y, P(y), as

$$P(0) = Q(0), \tag{A1a}$$

$$P(1) = Q(1) + Q(-1). \tag{A1b}$$

Therefore, if P(y) is known, Equation (A1) cannot be used to uniquely determine Q(x), as the above system is underdetermined. We can use the MaxEnt scheme developed here to estimate Q(x). Equation (A1a) determines Q(0) = P(0), and Equation (6b), with k(1) = 2, gives Q(1) = Q(−1) = P(1)/2.

A2. Details of the MaxEnt Calculations for Example 1

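The Lagrange multipliers for the three constraints corresponding to P(y) at y = 0, y = 1, and y = 2 are denoted by λ1, λ2, and λ3, respectively. Only the six states with x1 + x2 ≤ 2 carry nonzero probability (Section 2). Therefore, Equation (4) in this case is given by

$$\sum_{x_1+x_2\le 2}\delta Q(x_1,x_2)\Big[-\ln\hat Q(x_1,x_2) - 1 - \lambda_1\,\delta_{0,\,x_1+x_2} - \lambda_2\,\delta_{1,\,x_1+x_2} - \lambda_3\,\delta_{2,\,x_1+x_2}\Big] = 0.$$

The solution for Q̂ can be found by equating the coefficient of each δQ to zero, since δQ is arbitrary:

$$\hat Q(0,0) = e^{-1-\lambda_1},\qquad \hat Q(1,0) = \hat Q(0,1) = e^{-1-\lambda_2},\qquad \hat Q(2,0) = \hat Q(1,1) = \hat Q(0,2) = e^{-1-\lambda_3}.$$

Using the above solution and Equation (2), we can easily find

$$\hat Q(0,0) = \tfrac{1}{3},\qquad \hat Q(1,0) = \hat Q(0,1) = \tfrac{1}{6},\qquad \hat Q(2,0) = \hat Q(1,1) = \hat Q(0,2) = \tfrac{1}{9}.$$

Q̂ is normalized as expected.

Substituting the above equations into the constraint equations in Equation (2) (or into Equation (8)) gives the values of the Lagrange multipliers, i.e.,

$$\lambda_1 = \ln 3 - 1,\qquad \lambda_2 = \ln 6 - 1,\qquad \lambda_3 = \ln 9 - 1.$$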
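As a quick numerical consistency check, the sketch below evaluates the Lagrange multipliers for Example 1 from P(y) and k(y). It uses the sign convention Q̂ = exp[−1 − λ(y)], so the multipliers are λ(y) = ln[k(y)/P(y)] − 1. It then confirms that feeding them back through the exponential recovers Q̂ = P/k and a normalized distribution. This only verifies internal consistency under that assumed sign convention. As in the main text, the multipliers are never needed to obtain Q̂.

```python
import math

# Example 1 data: P(y) and the degeneracy factors k(y) found by counting.
P = {0: 1 / 3, 1: 1 / 3, 2: 1 / 3}
k = {0: 1, 1: 2, 2: 3}

# Lagrange multipliers, assuming the convention Q_hat = exp(-1 - lambda(y)).
lam = {y: math.log(k[y] / P[y]) - 1 for y in P}

# Common probability of every state x with f(x) = y.
Q_hat = {y: math.exp(-1 - lam[y]) for y in P}

for y in P:
    print(y, round(lam[y], 4), Q_hat[y], P[y] / k[y])
```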

Footnotes

Author Contributions

Jayajit Das, Sayak Mukherjee and Susan E. Hodge planned the research, carried out the calculations, and wrote the paper. All the authors have read and approved the manuscript.

Conflicts of Interest

The authors declare no conflict of interest.

Contributor Information

Sayak Mukherjee, Email: mukherjee.39@osu.edu.

Susan E. Hodge, Email: Susan.Hodge@nationwidechildrens.org.

References

1. Human Microbiome Project Consortium. Structure, Function and Diversity of the Healthy Human Microbiome. Nature. 2012;486:207–214. doi: 10.1038/nature11234.

2. Ley RE, Hamady M, Lozupone C, Turnbaugh PJ, Ramey RR, Bircher JS, Schlegel ML, Tucker TA, Schrenzel MD, Gordon JI. Evolution of Mammals and Their Gut Microbes. Science. 2008;320:1647–1651. doi: 10.1126/science.1155725.

3. Mukherjee S, Weimer KE, Seok SC, Ray WC, Jayaprakash C, Vieland VJ, Edward Swords W, Das J. Host-to-Host Variation of Ecological Interactions in Polymicrobial Infections. Phys. Biol. 2015;12:016003. doi: 10.1088/1478-3975/12/1/016003.

4. Bialek WS. Biophysics: Searching for Principles. Princeton, NJ, USA: Princeton University Press; 2012.

5. Jaynes ET. Information Theory and Statistical Mechanics. Phys. Rev. 1957;106:620–630.

6. MacKay DJC. Information Theory, Inference, and Learning Algorithms. Cambridge, UK: Cambridge University Press; 2003.

7. Presse S, Ghosh K, Lee J, Dill KA. Principles of Maximum Entropy and Maximum Caliber in Statistical Physics. Rev. Mod. Phys. 2013;85:1115–1141.

8. Caticha A. Towards an Informational Pragmatic Realism. Mind Mach. 2014;24:37–70.

9. Mora T, Bialek W. Are Biological Systems Poised at Criticality? J. Stat. Phys. 2011;144:268–302.

10. Rényi A. Probability Theory. Mineola, NY, USA: Dover Books on Mathematics; Dover; 2007.

11. Reif F. Fundamentals of Statistical and Thermal Physics. Long Grove, IL, USA: Waveland Press; 2008.

12. Cover TM, Thomas JA. Elements of Information Theory. 2nd ed. Hoboken, NJ, USA: Wiley; 2006.

13. Ryder LH. Quantum Field Theory. 2nd ed. New York, NY, USA: Cambridge University Press; 1996.

14. Greiner W, Reinhardt J, Bromley DA. Field Quantization. Berlin, Germany: Springer; 1996.

15. Abramowitz M, Stegun IA. Handbook of Mathematical Functions, with Formulas, Graphs, and Mathematical Tables. Mineola, NY, USA: Dover; 1965.

16. Krishnaswamy S, Spitzer MH, Mingueneau M, Bendall SC, Litvin O, Stone E, Pe'er D, Nolan GP. Systems Biology. Conditional Density-Based Analysis of T Cell Signaling in Single-Cell Data. Science. 2014;346:1250689. doi: 10.1126/science.1250689.

17. Eydgahi H, Chen WW, Muhlich JL, Vitkup D, Tsitsiklis JN, Sorger PK. Properties of Cell Death Models Calibrated and Compared Using Bayesian Approaches. Mol. Syst. Biol. 2013;9:644. doi: 10.1038/msb.2012.69.

18. Press WH, Teukolsky SA, Vetterling WT, Flannery BP. Numerical Recipes: The Art of Scientific Computing. 3rd ed. New York, NY, USA: Cambridge University Press; 2007.

19. Smereka P. The Numerical Approximation of a Delta Function with Application to Level Set Methods. J Comput Phys. 2006;211:77–90.