Abstract

Objective

To determine the effect of inter-hospital patient sharing via transfers on the rate of Clostridium difficile infections (CDI) in a hospital.

Design

Retrospective cohort

Methods

Using data from the Healthcare Cost and Utilization Project California State Inpatient Database, 2005–2011, we identified 2,752,639 transfers. We then constructed a series of networks detailing the connections formed by hospitals. We computed two measures of connectivity, indegree and weighted indegree, measuring the number of hospitals from which transfers into a hospital arrive, and the total number of incoming transfers, respectively. We estimated a multivariate model of CDI cases using the log-transformed network measures as well as covariates for hospital fixed effects, log median length of stay, log fraction of patients aged 65 or older, quarter and year indicators as predictors.

Results

We found an increase of one in the log indegree was associated with a 4.8% increase in incidence of CDI (95% CI: 2.3–7.4) and an increase of one in log weighted indegree was associated with a 3.3% increase in CDI incidence (95% CI: 1.5–5.2). Moreover, including measures of connectivity in the models greatly improved their fit.

Conclusions

Our results suggest infection control is not under the exclusive control of a given hospital but is also influenced by the connections and number of connections that hospitals have with other hospitals.

Background

Risk factors for CDI include advanced age,1–4 prior antimicrobial use,5–11 underlying severity of illness,12,13 and the patient’s environment.14–16 In terms of environmental risk factors for CDI, longer lengths of stay6 and hospital units with more colonization pressure (higher rates of cases) are associated with CDI.17 When considering environmental CDI risk factors, the environment is usually defined at a local level, as a unit17 or a room.14,18 For example, the risk for CDI is higher for a patient occupying a room where a previous occupant had CDI.18 However, patients travel to different rooms, to different units, and also to different hospitals. Thus, a more expansive definition of the environment, a health-system-level risk factor, may be needed to take into account how hospitals are connected to other hospitals via patient transfers.

Hospitals commonly share patients with other hospitals via direct transfers and indirectly via readmissions. Thus, patient sharing between hospitals may serve as a means for the dissemination of CDI and other healthcare-associated infections. Alternatively, patient transfers may indicate a level of increased severity of illness and thus hospitals with more transfers may be associated with a greater percentage of patients at risk for CDI, due to their underlying disease state. Although simulation studies have helped support the notion that healthcare-associated infections disseminate via patient transfers,19–23 to date, there has been less empirical work focused on measuring the potential for spreading healthcare-associated infections via patient transfers or specifically how the structure of transfer networks affects health care infection rates at included hospitals. The purpose of this paper is to determine if and to what extent the burden of CDI at hospitals is related to the hospital’s connectivity to other hospitals.

Methods

Data

The Healthcare Cost and Utilization Project (HCUP) State Inpatient Database (SID) for California was the primary data source for this project. HCUP is a project of the Agency for Healthcare Research and Quality, and the SID provides event-level information for every inpatient stay in a non-federal hospital for a given state and year. We used the seven years 2005–2011, which include a set of variables for tracking and identifying revisits/readmissions for a given patient. In total, the analyzed database comprises 24,110,945 records representing 9,652,292 unique patients in 480 unique hospitals.

Transfer Identification and Graph Construction

We consider patients with at least two records because patients with only one stay will not have a defined interval between stays. The records were ordered by admission date, and discharge date was computed using the length of stay. For each record, we compute the interval between the current record’s admission date and the discharge date of the most recent, non-overlapping stay.

Transfers were identified as a pair of stays with zero days between the discharge date of the first stay and the admission date of the second, and where the hospital in the first stay and second stay differed. We constructed 28 networks, one for each of the quarters from 2005 to 2011. We connected hospitals that shared patients via transfers and constructed a network graph. The connections between hospitals were weighted by the number of transfers between the two hospitals during the given quarter.
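The identification rule above can be sketched in a few lines of Python. This is a minimal illustration, not the study's code: the record layout, the names `find_transfers` and `quarterly_edge_weights`, and the sample stays are all hypothetical, and the sketch compares only consecutive stays per patient rather than handling overlapping stays.

```python
from collections import Counter, defaultdict
from datetime import date, timedelta

# Hypothetical stay records: (patient_id, hospital_id, admission_date, length_of_stay_days).
stays = [
    ("p1", "A", date(2005, 1, 3), 4),
    ("p1", "B", date(2005, 1, 7), 2),   # admitted the day p1 left A: transfer A -> B
    ("p1", "B", date(2005, 2, 1), 1),   # same hospital, weeks later: not a transfer
    ("p2", "C", date(2005, 1, 10), 3),
    ("p2", "A", date(2005, 1, 13), 5),  # transfer C -> A
    ("p3", "A", date(2005, 1, 20), 2),  # single stay: no interval is defined
]

def find_transfers(stays):
    """Return (from_hospital, to_hospital, admission_date) for zero-day gaps
    between consecutive stays at different hospitals."""
    by_patient = defaultdict(list)
    for pid, hosp, admit, los in stays:
        # Discharge date is derived from admission date plus length of stay.
        by_patient[pid].append((admit, admit + timedelta(days=los), hosp))
    transfers = []
    for records in by_patient.values():
        records.sort()  # order each patient's stays by admission date
        for (_, prev_discharge, prev_hosp), (admit, _, hosp) in zip(records, records[1:]):
            if (admit - prev_discharge).days == 0 and hosp != prev_hosp:
                transfers.append((prev_hosp, hosp, admit))
    return transfers

def quarterly_edge_weights(transfers):
    """Count transfers per ((year, quarter), from_hospital, to_hospital)."""
    weights = Counter()
    for src, dst, admit in transfers:
        quarter = (admit.year, (admit.month - 1) // 3 + 1)
        weights[(quarter, src, dst)] += 1
    return weights

transfers = find_transfers(stays)
edge_weights = quarterly_edge_weights(transfers)
```

Each key of `edge_weights` corresponds to one weighted, directed connection in one quarterly network.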

The connections between hospitals were used to construct a force-layout graph. The force-layout graph treats each hospital like a magnet that repels other magnets and each connection as a spring that draws them together. On the basis of the connections, we used a community detection technique to identify network-based clusters.24

Network Properties

The network location of a given hospital was characterized by the hospital’s centrality in the network for that quarter. Specifically, we are interested in indegree (the number of unique hospitals from which transfers originate) and weighted indegree (the number of total patients transferred into a hospital) as predictors. We compute these two measures for each of the 28 quarters between 2005 and 2011.
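As a small illustration of the two measures, the sketch below computes them from a dictionary of directed transfer counts for a single quarter. The function name `indegree_measures` and the sample counts are hypothetical and chosen only to make the definitions concrete.

```python
from collections import defaultdict

# Hypothetical directed transfer counts for one quarter:
# (from_hospital, to_hospital) -> number of transfers.
edge_weights = {
    ("A", "B"): 5,
    ("C", "B"): 2,
    ("B", "A"): 1,
    ("C", "A"): 4,
}

def indegree_measures(edge_weights):
    """Return {hospital: (indegree, weighted_indegree)} for every hospital seen."""
    sources = defaultdict(set)   # distinct sending hospitals per receiving hospital
    incoming = defaultdict(int)  # total incoming transfers per receiving hospital
    hospitals = set()
    for (src, dst), n in edge_weights.items():
        hospitals.update((src, dst))
        if n > 0:
            sources[dst].add(src)
            incoming[dst] += n
    return {h: (len(sources[h]), incoming[h]) for h in sorted(hospitals)}

measures = indegree_measures(edge_weights)
```

Here hospital B receives patients from two hospitals (indegree 2) for a total of seven transfers (weighted indegree 7), while hospital C only sends patients and has zero for both measures.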

Modeling

We model the mean number of cases of CDI for each hospital by quarter. We used a negative binomial generalized linear model (GLM) with a log link. The negative binomial distribution accommodates the unique properties of a discrete count response variable. Moreover, unlike the more restrictive Poisson distribution, the negative binomial distribution allows the variance to exceed the mean, which may be important for the accurate assessment of standard errors. We consider three models, one that is “network naive” and two that incorporate network information.
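The difference between the two distributions can be written compactly. The expression below assumes the common NB2 parameterization with dispersion parameter θ; both the symbols and the choice of parameterization are assumptions for illustration, as the text does not state which form was used.

```latex
\operatorname{Var}_{\text{Poisson}}(Y) = \mu,
\qquad
\operatorname{Var}_{\text{NB}}(Y) = \mu + \frac{\mu^{2}}{\theta}, \quad \theta > 0 .
```

As θ grows large the extra term vanishes and the negative binomial variance approaches the Poisson variance.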

All models included three categories of predictor variables. First, we included variables that represent a hospital’s CDI risk stemming from patient mix, namely the log of the fraction of patients over 65 years of age and the log of the hospital’s median length of stay. In addition, we included a set of 479 indicator variables as hospital identifier fixed-effects and 27 indicators for the number of quarters, starting with the first quarter of 2005. These two sets of indicators were included to account for unobserved differences between hospitals, namely practice style and residual heterogeneity in patient populations, and temporal patterns in the CDI rate, respectively. Finally, in order to control for hospital size, each model included an offset defined as the log-transformed number of admissions to the hospital in a particular quarter. In the GLM framework, an offset is simply a variable included as a predictor with the corresponding regression coefficient fixed at one. Note that an offset differs from a usual predictor in that the coefficient is fixed a priori and is not estimated from the data. In our GLMs, the inclusion of the offset permits the interpretation of the outcome as a rate, defined in terms of the mean CDI count relative to admissions for a particular quarter.
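Written out, the role of the offset is easier to see. In the sketch below the notation is assumed for illustration: i indexes hospitals, t indexes quarters, N is the number of admissions, A is the fraction of patients over 65, L is the median length of stay, and α and γ are the hospital and quarter fixed effects.

```latex
\log \mu_{it} = \log N_{it} + \alpha_i + \gamma_t + \beta_1 \log A_{it} + \beta_2 \log L_{it}
\quad\Longleftrightarrow\quad
\log\!\left(\frac{\mu_{it}}{N_{it}}\right) = \alpha_i + \gamma_t + \beta_1 \log A_{it} + \beta_2 \log L_{it}
```

Because the coefficient on the log of admissions is fixed at one, moving that term to the left-hand side turns the modeled quantity into the log of a rate (mean CDI cases per admission).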

The first model (Model 1) was simply based on the aforementioned covariates, while the other two models added centrality estimates as predictors. Specifically, Model 2 added log indegree and Model 3 added log weighted indegree to the basic set of predictors in Model 1. We did not fit a model with both log indegree and log weighted indegree due to the extremely high correlation between these measures (r=0.91).

We compared the Akaike information criterion (AIC) of each fitted model. AIC is a measure that evaluates the quality of fit for a proposed model, penalizing for the number of predictors. While it does not provide evidence of global lack-of-fit, AIC does provide a useful means for comparing fitted models based on how well they balance the competing objectives of fidelity to the data and parsimony. As a general rule of thumb, a difference of two or more AIC units is considered meaningful in evaluating fitted model quality.
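The comparison described above follows from the standard definition of AIC, twice the number of estimated parameters minus twice the maximized log-likelihood. The sketch below uses hypothetical log-likelihoods and parameter counts purely for illustration; none of the numbers come from the fitted models.

```python
def aic(log_likelihood, n_params):
    """Akaike information criterion: 2k - 2*ln(L). Lower is better."""
    return 2 * n_params - 2 * log_likelihood

def aic_differences(aics):
    """Difference between each model's AIC and the smallest AIC in the set."""
    best = min(aics.values())
    return {name: value - best for name, value in aics.items()}

# Hypothetical fits: adding one predictor raises the log-likelihood by 5.
fits = {
    "baseline": aic(-1000.0, 10),
    "plus_predictor": aic(-995.0, 11),
}
deltas = aic_differences(fits)
```

In this toy case the extra predictor costs two AIC units in penalty but gains ten through improved fit, so the larger model is preferred by eight units, well beyond the rule-of-thumb threshold of two.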

Results

We identified 2,752,639 pairs of records separated by zero days. This amounts to 11% of all discharges. Over the seven years of the study period, 21,256 unique connections, or 9.2% of all possible connections, were realized at least once. When aggregated to the hospital-quarter level, there are 12,490 observations. This is slightly fewer than the expected 13,356 if all hospitals were included for each of the 28 quarters. The difference between the expected and the actual number of observations occurs due to openings, closings and other gaps in a hospital’s operation.

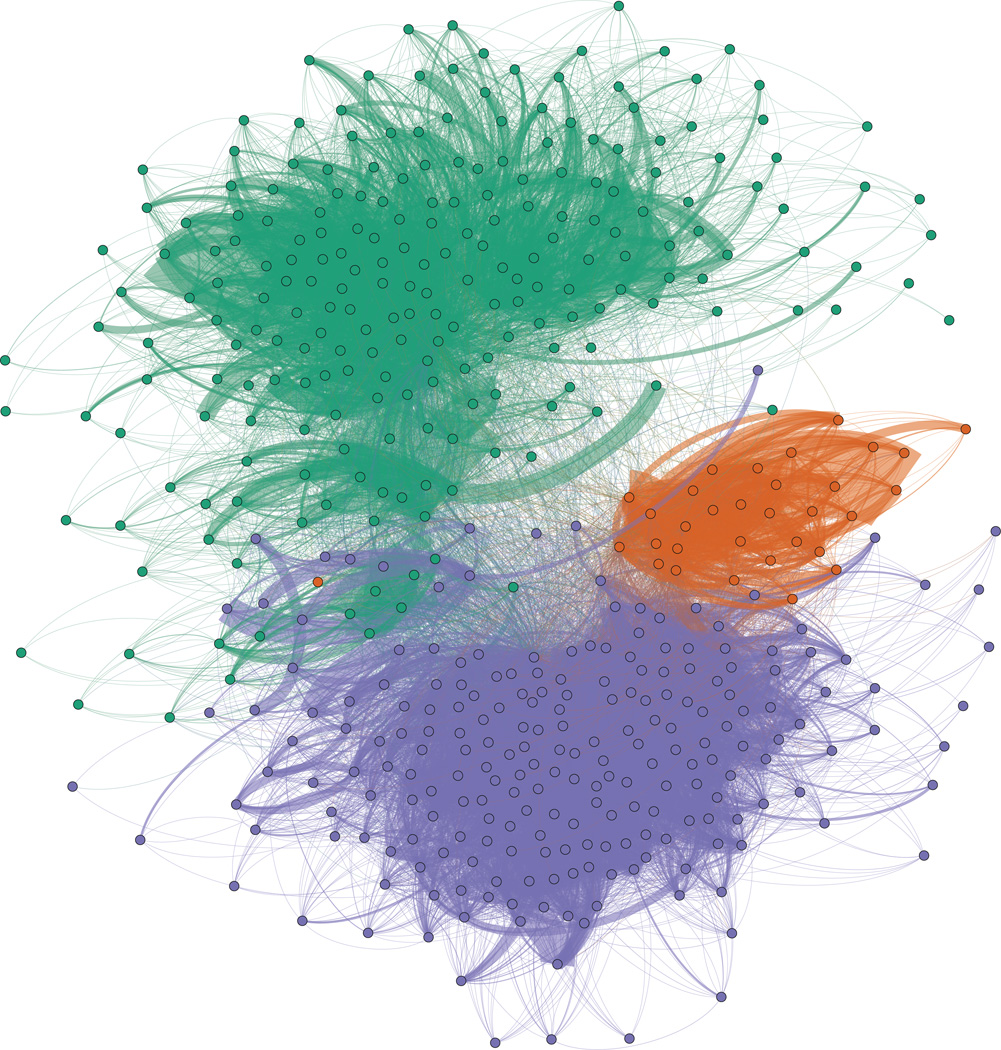

A force layout graph showing these connections is shown in Figure 1. The force layout graph shows the clusters that emerge from the interconnections of the hospitals. Each hospital is represented as a colored circle; the color reflects the “cluster membership.” The solid lines that connect the circles are transfers and act to draw hospitals closer together. This layout is an equilibrium state between the hospital’s tendency to repel other hospitals and the transfer’s tendency to draw them together. As such, the most highly connected hospitals are located toward the center of each cluster’s mass.

Figure 1.

Map of hospitals (dots) and transfers (dark lines) in a force layout projection of the CA data. Three major clusters in the CA network, colored separately (San Diego in orange, LA in purple and San Francisco and Northern CA in green), were detected using network modularity clustering. This graph shows how tightly connected hospitals in CA are.

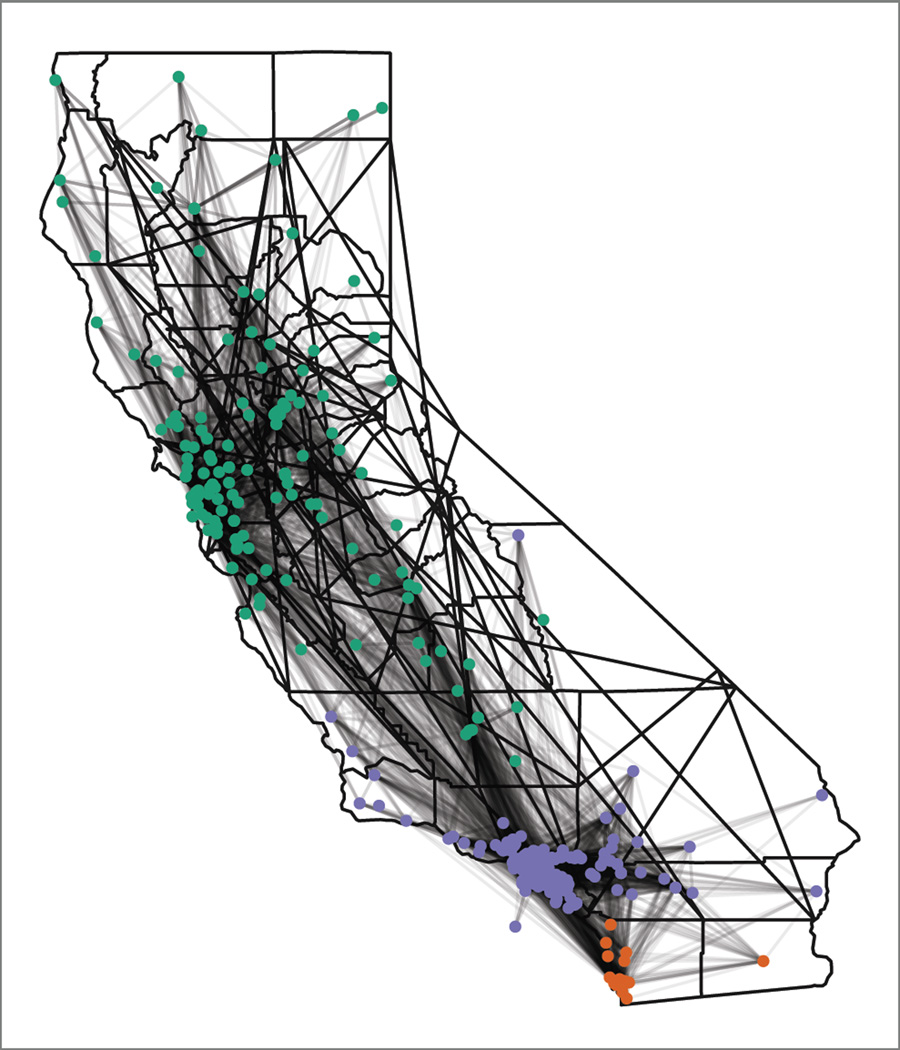

Figure 2 shows the same cluster membership and connections but on a map of the state. The clusters intuitively map to the large urban areas of California (San Diego, Los Angeles and San Francisco and Northern California). However, the map does clearly show that these clusters are not strictly isolated from each other and, in fact, share many connections.

Figure 2.

Map of hospitals (dots) and transfers (dark lines) on a map of CA. The same connections from Figure 1 are projected onto a map of CA. Major clusters are colored separately (San Diego in orange, LA in purple and San Francisco and Northern CA in green), and the map shows how transfers closely connect hospitals, despite geographic distance.

Summary values for the number of cases, stays and predictors are provided in Table 1. All of the variables exhibit highly skewed distributions, with a propensity toward low numbers. The degree of skew, especially in the network variables, is readily evident by comparing the mean and median numbers. The vast majority of hospitals accept a very small number of transfers per quarter (median: 13) from a small number of hospitals (median: 6). However, nearly 15% of hospitals have more than 30% of their admissions in the form of patient transfers and bring in hundreds of transfers from a large set of hospitals.

Table 1.

Hospital–level summary statistics for response and predictor variables

| Variable | Mean | Minimum | 1st Quartile | Median | 3rd Quartile | Maximum |
|---|---|---|---|---|---|---|
| Cases of CDI | 17.6 | 0 | 1 | 10 | 27 | 268 |
| Number of Admissions | 1919.7 | 1 | 391.5 | 1222 | 3055.5 | 14426 |
| Median LOS | 5.5 | 1 | 3 | 3 | 4 | 356 |
| Fraction of Patients 65 or Older | 0.37 | 0 | 0.23 | 0.38 | 0.50 | 1 |
| Indegree | 8.9 | 0 | 2 | 6 | 12 | 85 |
| Weighted Indegree | 26.2 | 0 | 3 | 13 | 33 | 356 |

Note: The mean, minimum and maximum value of each of the key variables is included. Because many of these variables are skewed, the 1st quartile (25th percentile) and 3rd quartile (75th percentile) are also given.

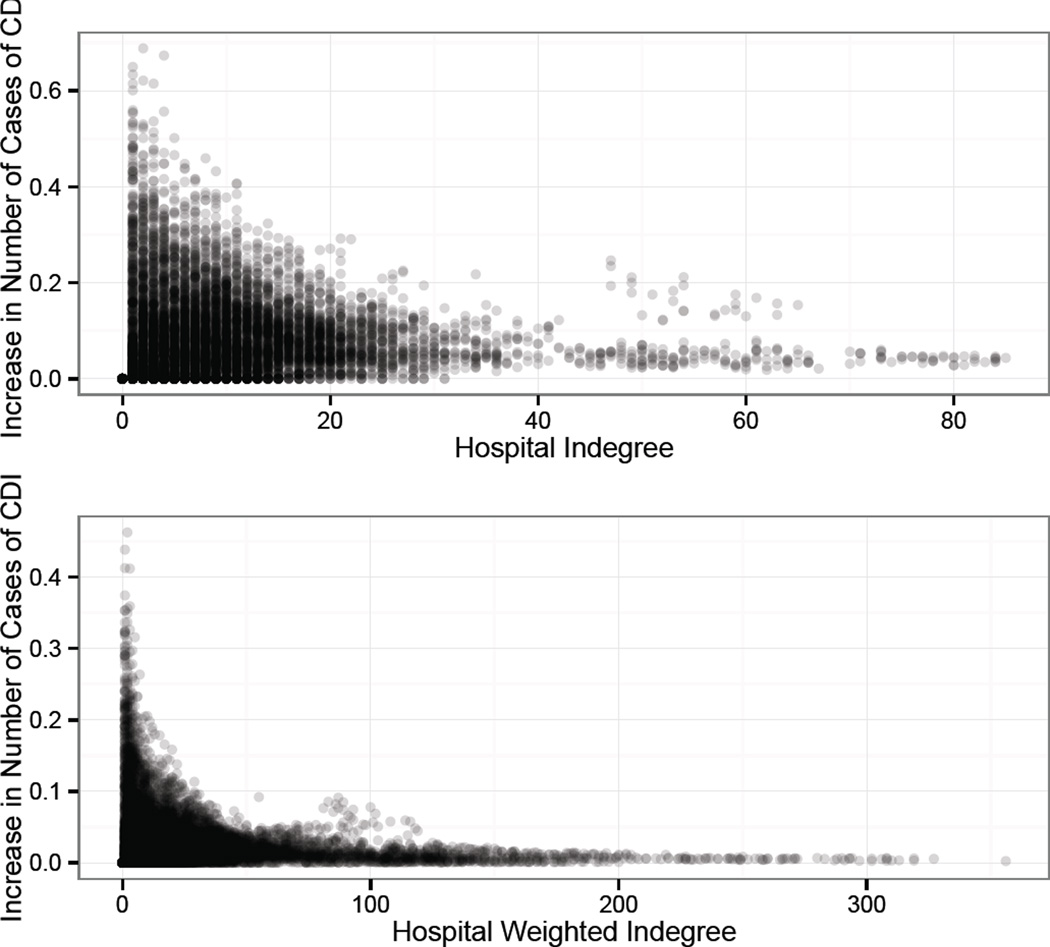

The effect estimates of increasing indegree or weighted indegree are both statistically and clinically significant. Model 2 indicates that a one-unit increase in the log of the indegree, after controlling for other factors, is associated with a 4.8% (95% CI: 2.3–7.4%) increase in the mean number of CDI cases. Likewise, using Model 3, a one-unit increase in the log of the weighted indegree is associated with a 3.3% (95% CI: 1.5–5.2%) increase in the mean number of CDI cases. To transform these results to a more interpretable form, for each observed hospital over each of the 28 quarters, Figure 3 shows the expected increase in the number of cases of CDI corresponding to an increase of one in the indegree (one additional connected hospital) and weighted indegree (one additional transfer). Because of the log link, we are predicting a multiplicative change in the incidence of a hospital (e.g., a 3.3% increase). Consequently, the effect of an additive single-unit increase in a predictor is not a constant increase in the mean number of cases but rather a constant multiplier of the mean baseline incidence. As such, when considering various baseline incidence values for different hospitals over the 28 quarters, there is a distribution of possible increases for a single-unit increase as opposed to one fixed value.
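A rough sketch of this conversion is given below. It rests on assumptions not stated in the text: that the predictors were transformed with the natural log, that the starting value is at least one (the handling of hospitals with zero indegree is not described), and that all other covariates are held fixed. The function name `expected_increase` and the example baselines are hypothetical; only the two rate ratios are taken from the reported estimates.

```python
import math

# Reported multiplicative effects per one-unit increase in the log predictor.
RATE_RATIO_LOG_INDEGREE = 1.048
RATE_RATIO_LOG_WEIGHTED_INDEGREE = 1.033

def expected_increase(baseline_cases, start_value, rate_ratio):
    """Extra expected cases when the predictor rises from start_value to start_value + 1.

    Assumes a natural-log predictor and start_value >= 1.
    """
    beta = math.log(rate_ratio)  # coefficient on the log-transformed predictor
    # Change in the log predictor is log((s + 1) / s), so the mean is
    # multiplied by ((s + 1) / s) ** beta.
    multiplier = ((start_value + 1) / start_value) ** beta
    return baseline_cases * (multiplier - 1)

# Same hypothetical baseline of 10 cases per quarter, different starting indegree.
low = expected_increase(10, 2, RATE_RATIO_LOG_INDEGREE)
high = expected_increase(10, 12, RATE_RATIO_LOG_INDEGREE)
```

Under these assumptions, one additional connected hospital adds more expected cases for a hospital starting at indegree 2 than for one starting at indegree 12, and the increase scales with the baseline count, which is why a distribution of increases arises rather than a single value.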

Additionally, a fitted model that does not consider the network connections has an AIC of 63,543. Adding log indegree or log weighted indegree reduces the AIC to 63,531 and 63,534, respectively. This reflects decreases of 12 and 9 AIC units when the network variables are added to the model. Including information about the hospital’s connectivity improves the quality of the fitted model relative to a naive model. This result suggests that network parameters, in the form of indegree and weighted indegree, contain information about a hospital’s CDI rate that is not already contained in the hospital fixed effects, quarter fixed effects, patient age or length of stay.

Discussion

Our results demonstrate that CDI burden at a hospital level can be better understood by knowing how a hospital is connected to other hospitals in terms of patient transfers. Specifically, we find that hospital-level CDI is related to two measures of patient transfers – one that considers the number of hospitals (indegree) and one that considers number of patients or volume of flow (weighted indegree). The purpose of this paper was to demonstrate that transfers are a significant predictor of CDI, and not to compute an effect size for a specific hospital. Nevertheless, associated with increases in indegree and weighted indegree, we estimate increases in mean CDI that are both statistically and clinically significant. The partial test p-values for the estimated effects from these models, combined with the model selection information from the AIC, strongly suggest that CDI incidence is related to transfer volume.

In practice it may not be either possible or desirable to decrease the number or direction of transfers for the sole purpose of preventing CDI. However, our results have broader implications for health policy and infection control. Recently, reimbursement rates have been tied to quality metrics with the assumption that healthcare-associated infections are in part preventable. However, if CDI rates are affected by connectivity, then risk adjustment models that consider only the factors at a given hospital will produce inaccurate results. If the connectivity and the behavior at the connections are outside of the influence of a hospital, imposition of penalties on that hospital may not bring about the desired result. In other words, if healthcare-associated infection rates are, in part, “regionally” determined as opposed to exclusively “locally” determined, these policies may need to consider a hospital’s region and connectivity to avoid inaccurately rewarding or penalizing hospitals.

Our results also have implications for infection control for infections beyond CDI. Hospital transfers can be a mechanism for the transmission of a broad range of infectious agents across healthcare facilities. MRSA is likely spread routinely through patient transfers.20,25 In addition, the spread of multidrug-resistant gram-negative bacteria has been documented26,27 and will continue to be an infection-control threat. Emerging infections can quickly spread through populations via healthcare environments, especially when patients who are colonized and/or in an asymptomatic phase of the disease are transferred. In addition, transferred patients may be symptomatic but undiagnosed. Transfers may dramatically affect the spread of diseases that are difficult to treat, e.g., Ebola. In 2003, SARS spread across hospitals in Toronto, and the transmission of the disease was maintained in healthcare facilities.28

Understanding how healthcare workers move and interact with patients within hospitals can help inform both disease surveillance approaches and infection-control interventions.29–32 Similarly, understanding how patients move throughout a state will help inform both disease surveillance approaches and infection-control interventions for a wide range of infections. For example, in our hospital graph, the clustering identified three distinct clusters, yet all the clusters are also linked by hospital transfers. Some of the hospitals in our network are more likely than others to facilitate transfers that connect clusters. Thus, these are the hospitals that may be especially important to consider in terms of state-wide infection-control efforts. Also, our results suggest that hospitals that are more connected are important to consider for surveillance. Thus, understanding the transfer pattern may help allocate scarce surveillance and infection-control resources more effectively.

Although it is possible that transfers and CDI are related mechanistically (i.e., CDI is introduced by transferred patients), it is also quite possible that we are measuring something else. Indeed, the hospitals that are more connected may have higher CDI rates for some other reason. For example, highly connected hospitals may have patients with a much higher severity of illness. Accordingly, higher CDI rates could be caused by a greater proportion of patients at a higher risk of developing CDI due to their underlying severity of illness. However, we did control for differences among hospitals with hospital identifier fixed effects. Furthermore, even if our results simply represent an association, and transfers themselves are not directly causing higher CDI rates, our results are still important for infection control: hospital infection rates are related to a hospital’s position in the network.

As with any empirical work, our models are not without limitations. First, the HCUP dataset does not include information about specific nursing homes or long-term care facilities. These facilities may act as intermediates between hospitals and may serve to strongly connect otherwise weakly connected hospitals via indirect transfers.25 Second, we are relying on administrative data, which do not include any microbiologic data or drug data (e.g., antibiotics). However, our hospital identifier fixed effects partially serve to control for differences in diagnosing CDI and antibiotic use among hospitals. Third, we are only able to measure CDI cases that are either identified on admission or during a hospital stay. Clearly, many cases occur after a patient’s stay.33 Future work should explore alternative data sources with post-discharge information in an attempt to correctly attribute possible community cases to institutions and further investigate the role of a hospital’s centrality as a risk factor for CDI. Fourth, network centrality is a risk factor for CDI primarily in only the more-connected hospitals. Finally, our modeling framework accommodates the heterogeneity among hospitals using fixed effects as opposed to random effects. Mixed effects models, based on the latter approach, were considered. However, mixed effects modeling requires that the random effects be uncorrelated with the predictors. It is unlikely that the random effects are truly uncorrelated with the indegree of a hospital and, as such, mixed effects models would not accurately estimate the effect of indegree. The fixed effects approach avoids this potential problem, since the correlation of the hospital indicator fixed effects with the predictors is permitted.

Despite the limitations of our work, we demonstrate that hospital transfers are associated with CDI rates. These results have implications for understanding how CDI is spread and for conceptualizing how to attribute CDI risk to hospitals. This may facilitate the design of better infection-control programs, not only for CDI, but for other hospital-associated infections. Although most infection-control programs are promoted at the hospital level, our results provide evidence that infection-control issues related to CDI cross hospital boundaries. In order to understand how CDI is spread and ultimately how the disease can be better controlled, it is important to explore how the interconnectedness of hospitals affects the spread of CDI and other infectious diseases. Finally, our approach has implications for designing hospital-based surveillance and may ultimately lead to state-wide infection-surveillance-and-control programs.

Figure 3.

Increase in expected CDI incidence with a one unit increase in indegree (top) or weighted indegree (bottom), adjusted for the observed hospital characteristics. The x-axis represents the initial indegree/weighted indegree and the y-axis represents the increase in the number of expected cases of CDI per quarter for that hospital. The value is the difference between the predicted number at the starting value of indegree/weighted indegree and the predicted number of cases at 1 plus the starting value.

Acknowledgments

Financial Support. This work was, in part, supported by a dissertation fellowship from The American Foundation for Pharmaceutical Education (J.E.S.), The National Heart, Lung and Blood Institute, grant #1K25HL122305-01A1 (L.A.P.), and the UI Health Care eHealth and eNovation Center (P.M.P.).

Footnotes

Presentations: The results in this manuscript were presented at IDWeek 2013, San Francisco, CA, October 2013, the 5th Biennial Conference of the American Society of Health Economists, University of Southern California, June 2014, and the Midwest Social and Administrative Pharmacy Conference, Purdue University, July 2014.

Potential conflicts of Interest. All authors report no conflicts of interest relevant to this article.

Contributor Information

Jacob E. Simmering, University of Iowa, Department of Pharmacy Practice and Science, Iowa City, IA 52242, USA.

Linnea A. Polgreen, University of Iowa, Department of Pharmacy Practice and Science, Iowa City, IA 52242, USA.

David R. Campbell, University of Iowa, Department of Computer Science, Iowa City, IA 52242, USA.

Joseph E. Cavanaugh, University of Iowa, Department of Biostatistics, Iowa City, IA 52242, USA.

Philip M. Polgreen, University of Iowa, Departments of Internal Medicine and Epidemiology, Iowa City, IA 52242, USA.

References

- 1. Halabi WJ, Nguyen VQ, Carmichael JC, Pigazzi A, Stamos MJ, Mills S. Clostridium difficile colitis in the United States: a decade of trends, outcomes, risk factors for colectomy, and mortality after colectomy. Journal of the American College of Surgeons. 2013;217(5):802–812. doi: 10.1016/j.jamcollsurg.2013.05.028.

- 2. McDonald LC, Owings M, Jernigan DB. Clostridium difficile infection in patients discharged from US short-stay hospitals, 1996–2003. Emerging infectious diseases. 2006;12(3):409. doi: 10.3201/eid1203.051064.

- 3. Pepin J, Valiquette L, Cossette B. Mortality attributable to nosocomial Clostridium difficile-associated disease during an epidemic caused by a hypervirulent strain in Quebec. CMAJ : Canadian Medical Association journal = journal de l'Association medicale canadienne. 2005;173(9):1037–1042. doi: 10.1503/cmaj.050978.

- 4. Campbell RR, Beere D, Wilcock GK, Brown EM. Clostridium difficile in acute and long-stay elderly patients. Age and ageing. 1988;17(5):333–336. doi: 10.1093/ageing/17.5.333.

- 5. Centers for Disease Control and Prevention. Vital signs: preventing Clostridium difficile infections. MMWR. Morbidity and mortality weekly report. 2012;61(9):157.

- 6. Palmore TN, Sohn S, Malak SF, Eagan J, Sepkowitz KA. Risk factors for acquisition of Clostridium difficile-associated diarrhea among outpatients at a cancer hospital. Infection Control and Hospital Epidemiology. 2005;26(8):680–684. doi: 10.1086/502602.

- 7. Stevens V, Dumyati G, Fine LS, Fisher SG, van Wijngaarden E. Cumulative antibiotic exposures over time and the risk of Clostridium difficile infection. Clinical infectious diseases : an official publication of the Infectious Diseases Society of America. 2011;53(1):42–48. doi: 10.1093/cid/cir301.

- 8. Thomas C, Stevenson M, Riley TV. Antibiotics and hospital-acquired Clostridium difficile-associated diarrhoea: a systematic review. The Journal of antimicrobial chemotherapy. 2003;51(6):1339–1350. doi: 10.1093/jac/dkg254.

- 9. Wistrom J, Norrby SR, Myhre EB, et al. Frequency of antibiotic-associated diarrhoea in 2462 antibiotic-treated hospitalized patients: a prospective study. The Journal of antimicrobial chemotherapy. 2001;47(1):43–50. doi: 10.1093/jac/47.1.43.

- 10. Gurwith MJ, Rabin HR, Love K. Diarrhea associated with clindamycin and ampicillin therapy: preliminary results of a cooperative study. The Journal of infectious diseases. 1977;(135 Suppl):S104–S110. doi: 10.1093/infdis/135.supplement.s104.

- 11. Brown KA, Khanafer N, Daneman N, Fisman DN. Meta-analysis of antibiotics and the risk of community-associated Clostridium difficile infection. Antimicrobial agents and chemotherapy. 2013;57(5):2326–2332. doi: 10.1128/AAC.02176-12.

- 12. Kyne L, Sougioultzis S, McFarland LV, Kelly CP. Underlying disease severity as a major risk factor for nosocomial Clostridium difficile diarrhea. Infection control and hospital epidemiology : the official journal of the Society of Hospital Epidemiologists of America. 2002;23(11):653–659. doi: 10.1086/501989.

- 13. Loo VG, Bourgault AM, Poirier L, et al. Host and pathogen factors for Clostridium difficile infection and colonization. The New England journal of medicine. 2011;365(18):1693–1703. doi: 10.1056/NEJMoa1012413.

- 14. Weber DJ, Anderson D, Rutala WA. The role of the surface environment in healthcare-associated infections. Current opinion in infectious diseases. 2013;26(4):338–344. doi: 10.1097/QCO.0b013e3283630f04.

- 15. Kim KH, Fekety R, Batts DH, et al. Isolation of Clostridium difficile from the environment and contacts of patients with antibiotic-associated colitis. The Journal of infectious diseases. 1981;143(1):42–50. doi: 10.1093/infdis/143.1.42.

- 16. McFarland LV, Mulligan ME, Kwok RY, Stamm WE. Nosocomial acquisition of Clostridium difficile infection. The New England journal of medicine. 1989;320(4):204–210. doi: 10.1056/NEJM198901263200402.

- 17. Dubberke ER, Reske KA, Olsen MA, et al. Evaluation of Clostridium difficile–associated disease pressure as a risk factor for C difficile–associated disease. Archives of internal medicine. 2007;167(10):1092–1097. doi: 10.1001/archinte.167.10.1092.

- 18. Shaughnessy MK, Micielli RL, DePestel DD, et al. Evaluation of hospital room assignment and acquisition of Clostridium difficile infection. Infection Control and Hospital Epidemiology. 2011;32(3):201–206. doi: 10.1086/658669.

- 19. Karkada UH, Adamic LA, Kahn JM, Iwashyna TJ. Limiting the spread of highly resistant hospital-acquired microorganisms via critical care transfers: a simulation study. Intensive Care Med. 2011;37(10):1633–1640. doi: 10.1007/s00134-011-2341-y.

- 20. Lesosky M, McGeer A, Simor A, Green K, Low DE, Raboud J. Effect of patterns of transferring patients among healthcare institutions on rates of nosocomial methicillin-resistant Staphylococcus aureus transmission: a Monte Carlo simulation. Infect Control Hosp Epidemiol. 2011;32(2):136–147. doi: 10.1086/657945.

- 21. Ciccolini M, Donker T, Grundmann H, Bonten MJ, Woolhouse ME. Efficient surveillance for healthcare-associated infections spreading between hospitals. Proc Natl Acad Sci U S A. 2014;111(6):2271–2276. doi: 10.1073/pnas.1308062111.

- 22. Donker T, Wallinga J, Slack R, Grundmann H. Hospital networks and the dispersal of hospital-acquired pathogens by patient transfer. PLoS One. 2012;7(4):e35002. doi: 10.1371/journal.pone.0035002.

- 23. Donker T, Wallinga J, Grundmann H. Patient referral patterns and the spread of hospital-acquired infections through national health care networks. PLoS computational biology. 2010;6(3):e1000715. doi: 10.1371/journal.pcbi.1000715.

- 24. Blondel VD, Guillaume J-L, Lambiotte R, Lefebvre E. Fast unfolding of communities in large networks. Journal of Statistical Mechanics: Theory and Experiment. 2008;2008(10):P10008.

- 25. Lee BY, Bartsch SM, Wong KF, et al. The importance of nursing homes in the spread of methicillin-resistant Staphylococcus aureus (MRSA) among hospitals. Med Care. 2013;51(3):205–215. doi: 10.1097/MLR.0b013e3182836dc2.

- 26. Kay RS, Vandevelde AG, Fiorella PD, et al. Outbreak of healthcare-associated infection and colonization with multidrug-resistant Salmonella enterica serovar Senftenberg in Florida. Infect Control Hosp Epidemiol. 2007;28(7):805–811. doi: 10.1086/518458.

- 27. Hobson RP, MacKenzie FM, Gould IM. An outbreak of multiply-resistant Klebsiella pneumoniae in the Grampian region of Scotland. J Hosp Infect. 1996;33(4):249–262. doi: 10.1016/s0195-6701(96)90011-0.

- 28. Svoboda T, Henry B, Shulman L, et al. Public health measures to control the spread of the severe acute respiratory syndrome during the outbreak in Toronto. The New England journal of medicine. 2004;350(23):2352–2361. doi: 10.1056/NEJMoa032111.

- 29. Curtis DE, Hlady CS, Kanade G, Pemmaraju SV, Polgreen PM, Segre AM. Healthcare worker contact networks and the prevention of hospital-acquired infections. PloS one. 2013;8(12):e79906. doi: 10.1371/journal.pone.0079906.

- 30. Hornbeck T, Naylor D, Segre AM, Thomas G, Herman T, Polgreen PM. Using sensor networks to study the effect of peripatetic healthcare workers on the spread of hospital-associated infections. The Journal of infectious diseases. 2012;206(10):1549–1557. doi: 10.1093/infdis/jis542.

- 31. Fries J, Segre AM, Thomas G, Herman T, Ellingson K, Polgreen PM. Monitoring hand hygiene via human observers: how should we be sampling? Infection control and hospital epidemiology : the official journal of the Society of Hospital Epidemiologists of America. 2012;33(7):689–695. doi: 10.1086/666346.

- 32. Polgreen PM, Tassier TL, Pemmaraju SV, Segre AM. Prioritizing healthcare worker vaccinations on the basis of social network analysis. Infection control and hospital epidemiology : the official journal of the Society of Hospital Epidemiologists of America. 2010;31(9):893–900. doi: 10.1086/655466.

- 33. Kuntz JL, Polgreen PM. The Importance of Considering Different Healthcare Settings When Estimating the Burden of Clostridium difficile. Clinical infectious diseases : an official publication of the Infectious Diseases Society of America. 2015;60(6):831–836. doi: 10.1093/cid/ciu955.