Abstract

This paper proposes an improved cuckoo search (ICS) algorithm to estimate the parameters of chaotic systems. In order to improve the optimization capability of the basic cuckoo search (CS) algorithm, the orthogonal design and simulated annealing operation are incorporated into the CS algorithm to enhance its exploitation search ability. The proposed algorithm is then used to estimate the parameters of the Lorenz chaotic system and the Chen chaotic system under noiseless and noisy conditions. The numerical results demonstrate that the algorithm can estimate parameters with high accuracy and reliability. Finally, the results are compared with those of the CS algorithm, the genetic algorithm, and the particle swarm optimization algorithm, and the comparison demonstrates that the method is efficient and superior.

1. Introduction

Chaos is a universal complex dynamical phenomenon that arises in many nonlinear systems, such as communication systems and meteorological systems. The control and synchronization of chaos have been widely studied [1–4]. Parameter estimation is a prerequisite for the control and synchronization of chaos. In recent years many parameter estimation methods have been proposed, such as particle swarm optimization (PSO) [5–8], genetic algorithms (GA) [9–12], and the mathematical method of multiple shooting [13]. However, the GA and PSO algorithms are easily trapped in locally optimal solutions, which degrades solution quality, and the precision of PSO, GA, and multiple shooting is not high enough. Recently, a novel and robust metaheuristic method called the cuckoo search (CS) algorithm was proposed by Yang and Deb [14–16]. The algorithm proved to be very promising and could outperform existing algorithms such as GA and PSO [14]. However, its relatively poor local search ability is a drawback, and the performance of the CS algorithm needs further improvement to obtain higher-quality solutions. The basic principle of the ICS algorithm proposed here is to integrate the orthogonal design and simulated annealing operation into CS to enhance its exploitation capacity.

The remaining sections of this paper are organized as follows. In Section 2, a brief formulation of chaotic system parameter estimation is described. Section 3 elaborates the ICS algorithm, and the results obtained with the proposed algorithm and with the algorithms used for comparison are given in Section 4. The paper ends with conclusions in Section 5.

2. Problem Formulation

A problem of parameter estimation can be converted into a problem of multidimensional optimization by constructing the proper fitness function.

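Let the following equation be a continuous nonlinear $n$-dimensional chaotic system:

$$\dot{X} = F(X, X_0, \theta), \tag{1}$$

where $X = (x_1, x_2, \ldots, x_n)^T \in R^n$ denotes the state vector of the chaotic system, $\dot{X}$ is the derivative of $X$, $X_0 = (x_{10}, x_{20}, \ldots, x_{n0})^T \in R^n$ denotes the initial state of the system, and $\theta = (\theta_1, \theta_2, \ldots, \theta_d)^T$ is the set of original parameters. Suppose the structure of system (1) is known; then the estimated system can be written as

$$\dot{\hat{X}} = F(\hat{X}, X_0, \hat{\theta}), \tag{2}$$

where $\hat{X} = (\hat{x}_1, \hat{x}_2, \ldots, \hat{x}_n)^T \in R^n$ denotes the state vector of the estimated system and $\hat{\theta} = (\hat{\theta}_1, \hat{\theta}_2, \ldots, \hat{\theta}_d)^T$ is the set of estimated parameters. In order to convert the parameter estimation problem into an optimization problem, the following objective (fitness) function is defined:

$$J = \frac{1}{M} \sum_{i=1}^{M} \left\| X_i - \hat{X}_i \right\|^2, \tag{3}$$

where $i = 1, 2, \ldots, M$ indexes the sampling time points, $X_i$ and $\hat{X}_i$ are the states of the original and estimated systems at the $i$th sampling point, and $M$ denotes the length of the data used for parameter estimation. The parameter estimation of system (1) is achieved by searching for the value of $\hat{\theta}$ that globally minimizes the objective function (3).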
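As an illustration of how such a fitness value can be evaluated, the following minimal sketch assumes the objective is the mean squared Euclidean distance between the sampled states of the original system and of the estimated system. The function name `fitness` and the toy data are hypothetical and are not taken from the paper.

```python
import numpy as np

def fitness(observed, estimated):
    """Mean squared Euclidean distance between two (M, n) state trajectories.

    Assumed form of the objective: (1/M) * sum_i ||X_i - X_hat_i||^2.
    """
    observed = np.asarray(observed, dtype=float)
    estimated = np.asarray(estimated, dtype=float)
    if observed.shape != estimated.shape:
        raise ValueError("trajectories must have the same shape")
    # Sum squared errors over the n state components, then average over M samples.
    return float(np.mean(np.sum((observed - estimated) ** 2, axis=1)))

# Toy data (hypothetical): M = 3 samples of a 2-dimensional state.
X = np.array([[0.0, 0.0], [1.0, 1.0], [2.0, 2.0]])
X_hat = X + np.array([[0.0, 0.0], [0.1, 0.0], [0.0, 0.2]])
print(fitness(X, X_hat))
```

A perfect estimate gives a fitness of zero, so any optimizer that minimizes this value is driving the estimated trajectory toward the observed one.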

It can be found that (3) is a multidimensional nonlinear function with multiple local optima; a search is easily trapped in a local optimum and the computational cost is high, so it is difficult to find the global optimum effectively and accurately using traditional methods. In this paper an improved CS algorithm is proposed to solve this optimization problem.

3. Improved CS Algorithm

3.1. Basic CS Algorithm

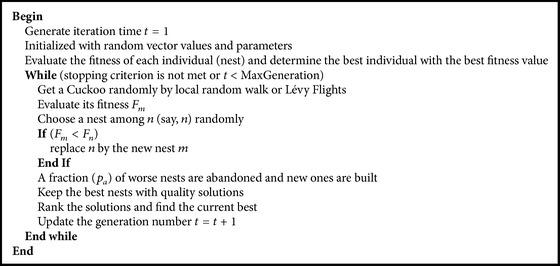

The basic CS algorithm is based on the brood parasitism of some cuckoo species, which lay their eggs in the nests of other host birds. For simplicity in describing the basic CS, the following three idealized rules are used [14]: (1) each cuckoo lays one egg at a time and dumps it in a randomly chosen nest; (2) the best nests with high-quality eggs will be carried over to the next generations; (3) the number of available host nests is fixed, and the egg laid by a cuckoo is discovered by the host bird with a probability $p_a \in [0, 1]$. In this case, the host bird can either get rid of the egg or simply abandon the nest and build a completely new nest. Based on the above rules, the basic CS algorithm is described in Algorithm 1 [14].

Algorithm 1. Basic cuckoo search algorithm.

Furthermore, the algorithm uses a balanced combination of a local random walk and a global explorative random walk, controlled by a switching parameter $p_a$. The local random walk can be written as

$$x_i^{t+1} = x_i^t + \alpha s \otimes H(p_a - \varepsilon) \otimes (x_j^t - x_k^t), \tag{4}$$

where $x_j^t$ and $x_k^t$ are two different solutions selected randomly by random permutation, $H$ is the Heaviside function, $\varepsilon$ is a random number drawn from a uniform distribution, $s$ is the step size, and $\otimes$ denotes entrywise multiplication.

On the other hand, the global random walk is carried out by using Lévy flights [14–17]:

$$x_i^{t+1} = x_i^t + \alpha \, \text{Lévy}(s, \lambda). \tag{5}$$

Here, $\alpha > 0$ is the step size scaling factor, and Lévy$(s, \lambda)$ denotes step lengths drawn from the probability distribution shown in (6), which has an infinite variance with an infinite mean:

$$\text{Lévy}(s, \lambda) \sim \frac{\lambda \Gamma(\lambda) \sin(\pi \lambda / 2)}{\pi} \frac{1}{s^{1+\lambda}}, \quad s \gg s_0 > 0. \tag{6}$$
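The two random walks can be sketched as follows. This is an illustrative sketch rather than the authors' implementation: the Lévy steps are drawn with Mantegna's algorithm, which is a commonly used sampler and an assumption here, the step size $s$ of the local walk is taken as uniform in [0, 1) with any scaling factor folded into it, and the defaults `alpha=0.01`, `lam=1.5`, and `pa=0.25` are hypothetical.

```python
import math
import numpy as np

def levy_steps(size, lam=1.5, rng=None):
    """Draw heavy-tailed step lengths with Mantegna's algorithm (assumed sampler)."""
    rng = np.random.default_rng() if rng is None else rng
    sigma_u = (math.gamma(1 + lam) * math.sin(math.pi * lam / 2)
               / (math.gamma((1 + lam) / 2) * lam * 2 ** ((lam - 1) / 2))) ** (1 / lam)
    u = rng.normal(0.0, sigma_u, size)
    v = rng.normal(0.0, 1.0, size)
    return u / np.abs(v) ** (1 / lam)

def global_walk(nests, alpha=0.01, lam=1.5, rng=None):
    """Global move: x <- x + alpha * Levy(s, lambda)."""
    rng = np.random.default_rng() if rng is None else rng
    return nests + alpha * levy_steps(nests.shape, lam, rng)

def local_walk(nests, pa=0.25, rng=None):
    """Local move: x_i <- x_i + s * H(pa - eps) * (x_j - x_k)."""
    rng = np.random.default_rng() if rng is None else rng
    n = nests.shape[0]
    xj = nests[rng.permutation(n)]       # solutions picked by random permutation
    xk = nests[rng.permutation(n)]
    s = rng.random(nests.shape)          # step size, uniform in [0, 1)
    mask = rng.random(nests.shape) < pa  # Heaviside H(pa - eps): 1 where eps < pa
    return nests + s * mask * (xj - xk)
```

Setting `pa` to zero switches the local walk off entirely, which makes the role of the switching parameter easy to check in isolation.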

3.2. ICS Algorithm

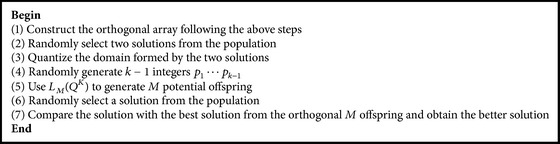

In order to further improve the searching ability of the algorithm, the orthogonal design and simulated annealing operation are integrated into the CS algorithm. The basic idea of the orthogonal design is to utilize the properties of the fractional experiment to efficiently determine the best combination of levels [17]. An orthogonal array of $K$ factors with $Q$ levels and $M$ combinations is denoted as $L_M(Q^K)$, where $Q$ is a prime number, $M = Q^J$, and $J$ is a positive integer satisfying $K = (Q^J - 1)/(Q - 1)$. The brief procedure of constructing the orthogonal array $L_M(Q^K) = [a_{i,j}]_{M \times K}$ is given in Procedure 1.

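Procedure 1: Procedure for constructing the orthogonal array ($a_j$ denotes the $j$th column of $[a_{i,j}]_{M \times K}$).

Step 1. Construct the basic columns

For $k = 1$ to $J$

$j = (Q^{k-1} - 1)/(Q - 1) + 1$

For $i = 1$ to $Q^J$

$a_{i,j} = \lfloor (i - 1)/Q^{J-k} \rfloor \bmod Q$

End for

End for

Step 2. Construct the non-basic columns

For $k = 2$ to $J$

$j = (Q^{k-1} - 1)/(Q - 1) + 1$

For $s = 1$ to $j - 1$

For $t = 1$ to $Q - 1$

$a_{j+(s-1)(Q-1)+t} = (a_s \times t + a_j) \bmod Q$

End for

End for

End for

Step 3. Increment $a_{i,j}$ by one for $1 \le i \le M$, $1 \le j \le K$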
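The construction above can be sketched in a few lines. This is an illustrative sketch that follows the standard orthogonal-array construction of [19]; the function name `orthogonal_array` is hypothetical, and the code uses 0-based column indices internally while keeping the 1-based index $j$ of the procedure.

```python
import numpy as np

def orthogonal_array(Q, J):
    """Build L_M(Q^K) with M = Q**J rows and K = (Q**J - 1)/(Q - 1) columns."""
    M = Q ** J
    K = (Q ** J - 1) // (Q - 1)
    a = np.zeros((M, K), dtype=int)
    rows = np.arange(M)  # plays the role of i - 1 in the procedure
    # Step 1: basic columns.
    for k in range(1, J + 1):
        j = (Q ** (k - 1) - 1) // (Q - 1) + 1
        a[:, j - 1] = (rows // Q ** (J - k)) % Q
    # Step 2: non-basic columns, built from earlier columns.
    for k in range(2, J + 1):
        j = (Q ** (k - 1) - 1) // (Q - 1) + 1
        for s in range(1, j):
            for t in range(1, Q):
                col = j + (s - 1) * (Q - 1) + t
                a[:, col - 1] = (a[:, s - 1] * t + a[:, j - 1]) % Q
    # Step 3: shift the levels from 0..Q-1 to 1..Q.
    return a + 1

# Example: the array with 9 combinations of 4 factors at 3 levels.
print(orthogonal_array(3, 2))
```

In the resulting array every pair of columns contains each pair of levels equally often, which is the property that lets a small number of combinations represent the full factorial design.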

The procedure of the orthogonal design algorithm is elaborated as shown in Algorithm 2 and for more detailed information on the orthogonal design strategy, please refer to [17–19].

Algorithm 2. Orthogonal design algorithm.

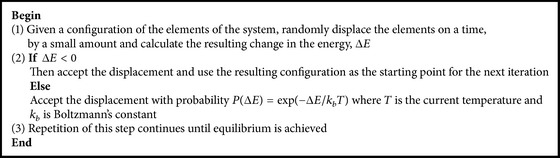

The procedure of simulated annealing algorithm is simply stated as shown in Algorithm 3 [20], and for more detailed information on the simulated annealing, please refer to [20–22].

Algorithm 3. Simulated annealing algorithm.

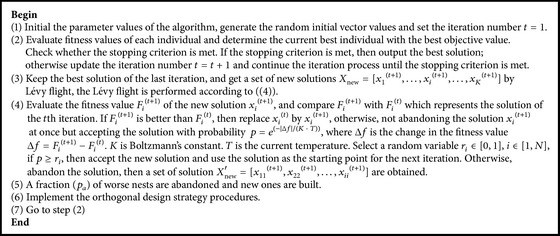
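The core of a simulated annealing operation is the Metropolis acceptance rule of [20], sketched below. The geometric cooling schedule, the cooling rate, the neighbor function, and the names `accept` and `anneal` are assumptions made for illustration only; the annealing mode actually used in the experiments is the one given in (8).

```python
import math
import random

def accept(delta, temperature, rng=random):
    """Metropolis rule: always accept improvements, sometimes accept worse moves."""
    if delta <= 0:
        return True
    return rng.random() < math.exp(-delta / temperature)

def anneal(f, x0, neighbor, t0=100.0, cooling=0.95, steps=200, seed=0):
    """Minimize f starting from x0; returns the best point found and its value."""
    rng = random.Random(seed)
    x, fx = x0, f(x0)
    best, fbest = x, fx
    t = t0
    for _ in range(steps):
        y = neighbor(x, rng)
        fy = f(y)
        if accept(fy - fx, t, rng):
            x, fx = y, fy
            if fx < fbest:
                best, fbest = x, fx
        t *= cooling  # assumed geometric cooling, not the schedule of (8)
    return best, fbest

# Toy usage (hypothetical): minimize (x - 3)^2 with uniform random perturbations.
best, fbest = anneal(lambda x: (x - 3.0) ** 2, 0.0,
                     lambda x, rng: x + rng.uniform(-1.0, 1.0))
print(best, fbest)
```

At high temperature almost every move is accepted, which helps the search leave local optima; as the temperature falls the rule becomes greedy and the search settles into refinement.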

Based on the above description of the orthogonal design strategy and simulated annealing operation, the detailed procedures for parameter estimation with the ICS algorithm can be summarized as shown in Algorithm 4.

Algorithm 4. Improved cuckoo search algorithm.

4. Simulation Results

To demonstrate the effectiveness of the improved algorithm, the algorithm is used to estimate parameters of Lorenz chaotic system [23] and Chen chaotic system [24].

4.1. Lorenz Chaotic System

The Lorenz chaotic system [23] is expressed as follows:

$$\dot{x} = \sigma_1 (y - x), \quad \dot{y} = \sigma_2 x - xz - y, \quad \dot{z} = xy - \sigma_3 z, \tag{7}$$

where $(x, y, z)$ are the state variables and $\sigma_1$, $\sigma_2$, $\sigma_3$ are the unknown chaotic system parameters which need to be estimated. The real parameters of the system are $\sigma_1 = 10$, $\sigma_2 = 28$, and $\sigma_3 = 8/3$, which ensure chaotic behavior. In order to obtain the values of the state variables, the fourth-order Runge-Kutta algorithm is used to solve (7) with the integration step $h = 0.01$. A series of state variable values is thus obtained, and 100 states at different times ($\{(x(n), y(n), z(n)), n = 1, 2, \ldots, 100\}$) are chosen as the sample data. The parameters of the algorithm are set as follows: the maximum iteration number is $N = 200$, the sample size is $M = 100$, the annealing mode is shown in (8), where $n$ is the iteration number, and the initial temperature is $T_0 = 100$. Consider

| (8) |

The objective (fitness) function $H$ is shown in (9), where $(x(n), y(n), z(n))$ is the $n$th state variable that corresponds to the true system parameters and $(\hat{x}(n), \hat{y}(n), \hat{z}(n))$ is the $n$th state variable that corresponds to the estimated system parameters:

| (9) |
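The data generation and fitness evaluation described above can be sketched as follows, using the standard Lorenz equations with parameters $(\sigma_1, \sigma_2, \sigma_3)$ and a fixed-step fourth-order Runge-Kutta integrator. The initial state `x0`, the choice to record the state after each step, and the mean squared error form of `objective` are assumptions for illustration, since the text does not specify them here.

```python
import numpy as np

def lorenz(state, s1, s2, s3):
    """Right-hand side of the Lorenz system with parameters (s1, s2, s3)."""
    x, y, z = state
    return np.array([s1 * (y - x), s2 * x - x * z - y, x * y - s3 * z])

def rk4_trajectory(rhs, x0, params, h=0.01, n_samples=100):
    """Integrate with classical RK4 and record the state after each step."""
    state = np.asarray(x0, dtype=float)
    out = np.empty((n_samples, state.size))
    for n in range(n_samples):
        k1 = rhs(state, *params)
        k2 = rhs(state + 0.5 * h * k1, *params)
        k3 = rhs(state + 0.5 * h * k2, *params)
        k4 = rhs(state + h * k3, *params)
        state = state + (h / 6.0) * (k1 + 2 * k2 + 2 * k3 + k4)
        out[n] = state
    return out

def objective(params, data, x0, h=0.01):
    """Assumed fitness: mean squared distance between data and a re-simulation."""
    est = rk4_trajectory(lorenz, x0, params, h, len(data))
    return float(np.mean(np.sum((data - est) ** 2, axis=1)))

true_params = (10.0, 28.0, 8.0 / 3.0)
x0 = (1.0, 1.0, 1.0)  # hypothetical initial state
data = rk4_trajectory(lorenz, x0, true_params)
print(objective((9.5, 28.0, 8.0 / 3.0), data, x0))
```

Any of the compared optimizers can then be applied to `objective` as a black-box function of the three parameters.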

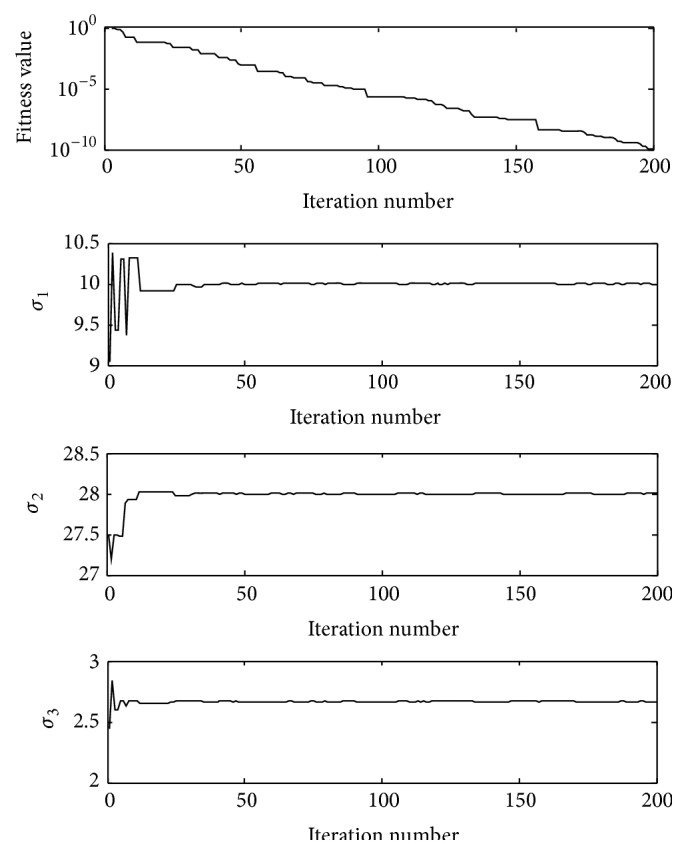

Figure 1 shows the convergence process of the fitness values and the three parameters ($\sigma_1$, $\sigma_2$, $\sigma_3$) during the iterations in a single experiment.

Figure 1. The convergence process of the fitness function value and the three parameters ($\sigma_1$, $\sigma_2$, $\sigma_3$) during the iterations in a single experiment under the noiseless condition.

In order to reduce the run-to-run variation, the algorithm is executed 50 times and the mean value of the 50 experiments is taken as the final estimated value; the mean value and best value of the 50 experiments are listed in Table 1. The results based on CS (the best parameter setting is $p_a = 0.25$, $p_a = 0.01$), PSO (the best parameter setting is $w = 0.8$, $c = 1.5$, where $w$ is the inertia weight and $c$ is the acceleration factor), and GA (the best parameter setting is $c_r = 0.8$, $m_u = 0.1$, where $c_r$ is the crossover rate and $m_u$ is the mutation rate) are also listed in Table 1.
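The aggregation over repeated runs can be sketched as below. The helper `summarize_runs` and the stand-in optimizer `toy_run` are hypothetical; in particular, taking the "best value" as the run with the lowest fitness is an assumption about how the tables were compiled.

```python
import numpy as np

def summarize_runs(run_once, n_runs=50):
    """Call run_once(seed) -> (params, fitness) n_runs times and aggregate."""
    params, fits = [], []
    for seed in range(n_runs):
        p, f = run_once(seed)
        params.append(p)
        fits.append(f)
    params = np.asarray(params, dtype=float)
    fits = np.asarray(fits, dtype=float)
    best = int(np.argmin(fits))  # run with the lowest fitness
    return {
        "mean_params": params.mean(axis=0),
        "mean_fitness": float(fits.mean()),
        "best_params": params[best],
        "best_fitness": float(fits[best]),
    }

def toy_run(seed):
    """Stand-in for one optimizer run: true parameters plus small random error."""
    rng = np.random.default_rng(seed)
    target = np.array([10.0, 28.0, 8.0 / 3.0])
    p = target + rng.normal(0.0, 1e-3, 3)
    return p, float(np.sum((p - target) ** 2))

summary = summarize_runs(toy_run)
print(summary["mean_params"], summary["best_fitness"])
```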

Table 1. The statistical results based on different methods in the noiseless condition.

| Parameter | Mean (ICS) | Mean (CS) | Mean (PSO) | Mean (GA) | Best (ICS) | Best (CS) | Best (PSO) | Best (GA) |
|---|---|---|---|---|---|---|---|---|
| $\sigma_1$ | 10.000000 | 9.998736 | 9.985012 | 10.082051 | 10.000000 | 9.999927 | 9.995510 | 10.026911 |
| $\sigma_2$ | 28.000000 | 28.000005 | 28.014411 | 27.881034 | 28.000000 | 28.000002 | 28.001304 | 28.004702 |
| $\sigma_3$ | 2.666667 | 2.666661 | 2.668102 | 2.681882 | 2.666667 | 2.666665 | 2.666802 | 2.669018 |
| $H$ | 1.1822e-010 | 3.7614e-004 | 0.069517 | 0.331901 | 1.2933e-011 | 2.9556e-005 | 0.011377 | 0.113969 |

It can be seen from Table 1 that the best fitness value obtained by the ICS algorithm is several orders of magnitude smaller than those of the other algorithms. The mean values of the estimated parameters are also more precise than those of the other algorithms, and the ICS estimates agree with the true values to the six decimal places reported. It can be concluded in general that the ICS algorithm performs best, CS performs next best, PSO is better than GA, and GA performs worst.

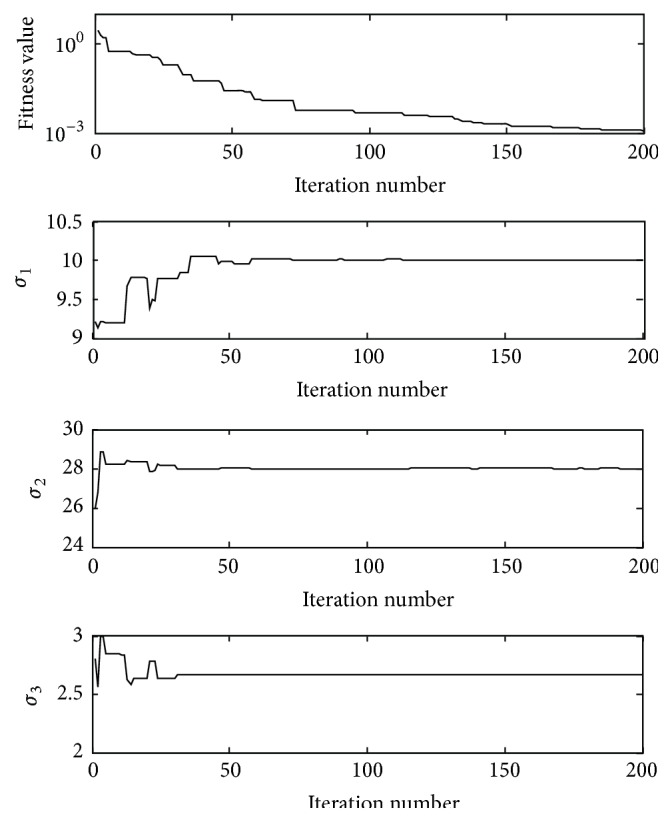

As actual chaotic systems are always accompanied by noise, noise sequences are added to the original sample data in order to test the performance of parameter estimation in the noise condition. White noise is added to the state variables $\{(x(n), y(n), z(n)), n = 1, 2, \ldots, 100\}$; the range of the noise sequences is from −0.1 to 0.1. Figure 2 shows the convergence process of the fitness values and the three parameters ($\sigma_1$, $\sigma_2$, $\sigma_3$) during the iterations in a single experiment under the noise condition.

Figure 2. The convergence process of the fitness function value and the three parameters ($\sigma_1$, $\sigma_2$, $\sigma_3$) during the iterations in a single experiment under the noise condition.
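The noise injection used for the noise condition can be sketched as follows. The text states only the range of the noise, so drawing it from a uniform distribution on [−0.1, 0.1] is an assumption, and the function name `add_bounded_noise` is hypothetical.

```python
import numpy as np

def add_bounded_noise(samples, amplitude=0.1, seed=None):
    """Add independent noise in [-amplitude, amplitude] to every sampled state."""
    rng = np.random.default_rng(seed)
    samples = np.asarray(samples, dtype=float)
    noise = rng.uniform(-amplitude, amplitude, size=samples.shape)
    return samples + noise

# Toy usage: 100 samples of a three-dimensional state (zeros as a stand-in).
clean = np.zeros((100, 3))
noisy = add_bounded_noise(clean, seed=1)
print(np.abs(noisy).max())
```

The noisy samples then replace the clean ones in the fitness function, while the estimated trajectory is still simulated without noise.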

In order to reduce the run-to-run variation, the algorithm is executed 50 times, the mean value of the 50 experiments is taken as the final estimated value, and the corresponding results are listed in Table 2.

Table 2. The statistical results by different algorithms in the noise condition.

| Parameter | Mean (ICS) | Mean (CS) | Mean (PSO) | Mean (GA) | Best (ICS) | Best (CS) | Best (PSO) | Best (GA) |
|---|---|---|---|---|---|---|---|---|
| $\sigma_1$ | 9.996110 | 10.080014 | 9.844606 | 10.217998 | 9.998941 | 10.001565 | 9.881002 | 10.044011 |
| $\sigma_2$ | 28.002272 | 27.980212 | 27.860013 | 27.661201 | 28.000099 | 28.001995 | 28.022441 | 27.900189 |
| $\sigma_3$ | 2.666590 | 2.658890 | 2.700198 | 2.659880 | 2.666675 | 2.667704 | 2.675596 | 2.670228 |
| $H$ | 0.008402 | 0.0390389 | 0.221401 | 0.500227 | 0.000908 | 0.001228 | 0.050931 | 0.255996 |

It can be seen from Table 2 that all four algorithms have a certain capability for parameter identification, but the performance of ICS is better than that of the other algorithms: it supplies more robust and precise results. Although the precision of the estimated parameters declines compared with the results in the noiseless condition, it is still satisfactory. It can therefore be concluded that the ICS algorithm possesses a powerful capability for parameter identification in the noise condition.

4.2. Chen Chaotic System

Chen chaotic system equation [24] is expressed as follows:

| (10) |

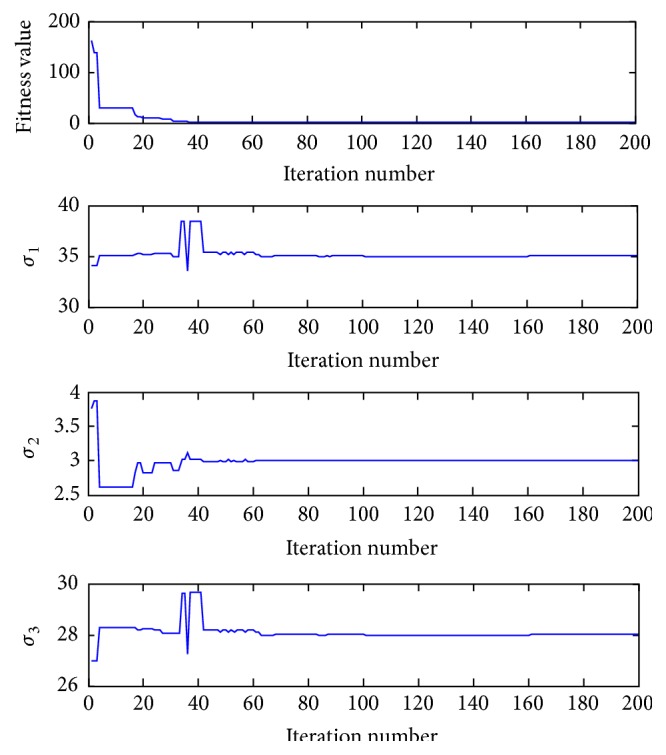

where (x, y, z) is the state variables; σ 1, σ 2, σ 3 are the unknown chaotic system parameters which need to be estimated. The real parameters of the system are σ 1 = 35, σ 2 = 3, and σ 3 = 28 which ensure a chaotic behavior, the fourth-order Runge-Kutta algorithm is used to solve (10), and the integral step is h = 0.01. Then a series of state variables values are obtained and 100 state variables of different times ({(x(n), y(n), z(n)), n = 1, 2, …, 100}) are chosen to be the sample data. The parameters of the algorithm are set as follows: the max iteration number is N = 200, the sample size is M = 100, the annealing mode is shown in (8) where n is the iteration number, and the initial temperature is T 0 = 100. The convergence process of the fitness values and three parameters (σ 1, σ 2, σ 3) during the iterations in a single experiment is shown in Figure 3. In order to eliminate the difference of each experiment, the algorithm is executed 50 times, then the mean value of the 50 experiments is taken as the final estimated value, and the corresponding results are listed in Table 2.

Figure 3. The convergence process of the fitness function value and the three parameters ($\sigma_1$, $\sigma_2$, $\sigma_3$) during the iterations in a single experiment under the noiseless condition.
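To illustrate why the fitness function responds to parameter errors, the sketch below integrates the Chen system for the true parameters and for a slightly perturbed $\sigma_1$ and measures how far the two trajectories drift apart over the 100 samples. The assignment of $\sigma_1$, $\sigma_2$, $\sigma_3$ to the terms of the standard Chen equations is inferred from the stated values (35, 3, 28), and the initial state and the size of the perturbation are hypothetical.

```python
import numpy as np

def chen(state, s1=35.0, s2=3.0, s3=28.0):
    """Right-hand side of the Chen system (parameter mapping is an assumption)."""
    x, y, z = state
    return np.array([s1 * (y - x), (s3 - s1) * x - x * z + s3 * y, x * y - s2 * z])

def simulate(params, x0=(1.0, 1.0, 1.0), h=0.01, n_samples=100):
    """Fixed-step classical RK4 integration; returns an (n_samples, 3) array."""
    state = np.asarray(x0, dtype=float)
    out = np.empty((n_samples, 3))
    for n in range(n_samples):
        k1 = chen(state, *params)
        k2 = chen(state + 0.5 * h * k1, *params)
        k3 = chen(state + 0.5 * h * k2, *params)
        k4 = chen(state + h * k3, *params)
        state = state + (h / 6.0) * (k1 + 2 * k2 + 2 * k3 + k4)
        out[n] = state
    return out

reference = simulate((35.0, 3.0, 28.0))
perturbed = simulate((35.1, 3.0, 28.0))  # hypothetical error in sigma_1
gap = np.max(np.abs(reference - perturbed))
print(gap)
```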

It can be seen from Table 3 that the best fitness value obtained by the ICS algorithm is several orders of magnitude smaller than those of the other algorithms. The mean values of the estimated parameters are also more precise than those of the other algorithms, and the estimated values are very close to the true values. It can be concluded in general that the ICS algorithm performs best, CS performs next best, PSO is better than GA, and GA performs worst.

Table 3. The statistical results by different algorithms in the noiseless condition.

| Parameter | Mean (ICS) | Mean (CS) | Mean (PSO) | Mean (GA) | Best (ICS) | Best (CS) | Best (PSO) | Best (GA) |
|---|---|---|---|---|---|---|---|---|
| $\sigma_1$ | 34.999438 | 35.089675 | 34.844278 | 33.535396 | 34.999945 | 34.996661 | 34.782290 | 35.102699 |
| $\sigma_2$ | 2.999951 | 2.999081 | 3.012977 | 3.005031 | 2.999999 | 2.999907 | 2.998694 | 2.991955 |
| $\sigma_3$ | 27.999757 | 28.043810 | 27.917648 | 27.291109 | 27.999974 | 27.998427 | 27.895888 | 28.053975 |
| $H$ | 4.6438e-007 | 4.6628e-004 | 0.027312 | 0.115259 | 2.9658e-010 | 3.2051e-006 | 0.003312 | 0.010562 |

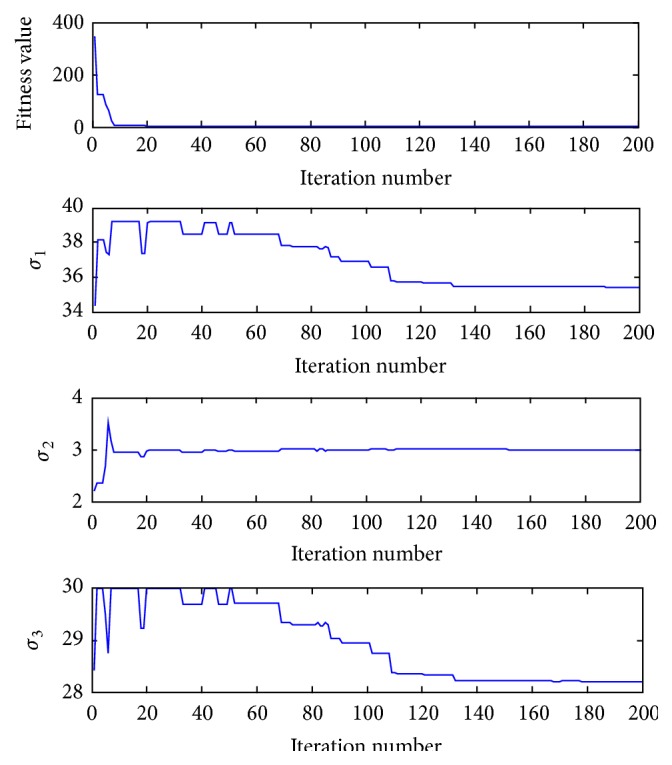

As actual chaotic systems are always accompanied by noise, noise sequences are added to the original sample data in order to test the performance of parameter estimation in the noise condition. White noise is added to the state variables $\{(x(n), y(n), z(n)), n = 1, 2, \ldots, 100\}$; the range of the noise sequences is from −0.1 to 0.1. Figure 4 shows the convergence process of the fitness values and the three parameters ($\sigma_1$, $\sigma_2$, $\sigma_3$) during the iterations in a single experiment under the noise condition.

Figure 4. The convergence process of the fitness function value and the three parameters ($\sigma_1$, $\sigma_2$, $\sigma_3$) during the iterations in a single experiment under the noise condition.

It can be seen from Table 4 that all four algorithms have a certain capability for parameter identification, but the performance of ICS is better than that of the other algorithms: it supplies more robust and precise results. Although the precision of the estimated parameters declines compared with the results in the noiseless condition, it is still satisfactory. It can therefore be concluded that the ICS algorithm possesses a powerful capability for parameter identification in the noise condition.

Table 4. The statistical results by different algorithms in the noise condition.

| Parameter | Mean (ICS) | Mean (CS) | Mean (PSO) | Mean (GA) | Best (ICS) | Best (CS) | Best (PSO) | Best (GA) |
|---|---|---|---|---|---|---|---|---|
| $\sigma_1$ | 35.421272 | 35.859540 | 34.108398 | 35.962547 | 34.970096 | 35.112205 | 35.313896 | 10.044011 |
| $\sigma_2$ | 2.996796 | 2.993281 | 2.970277 | 3.052984 | 2.999311 | 2.997309 | 2.980455 | 27.900189 |
| $\sigma_3$ | 28.204815 | 28.418425 | 27.588450 | 28.434651 | 27.985940 | 28.055617 | 28.162608 | 2.670228 |
| $H$ | 0.009334 | 0.038112 | 0.244276 | 0.591957 | 1.5986e-004 | 0.001495 | 0.062901 | 0.255996 |

5. Conclusion

In this paper, an efficient and superior ICS algorithm is proposed to estimate chaotic system parameters. The estimated results demonstrate the strong capabilities and effectiveness of the proposed algorithm: compared with the CS, PSO, and GA algorithms, the ICS algorithm supplies more robust and precise results. Besides, the algorithm also shows stronger noise immunity. In general, the proposed ICS algorithm is a feasible, efficient, and promising method for parameter estimation of chaotic systems.

Acknowledgment

This work is supported by National Natural Science Foundation of China, 61271106.

Conflict of Interests

The authors declare that there is no conflict of interests regarding the publication of this paper.

References

1. Sitz A., Schwarz U., Kurths J., Voss H. U. Estimation of parameters and unobserved components for nonlinear systems from noisy time series. Physical Review E: Statistical, Nonlinear, and Soft Matter Physics. 2002;66(1):016210. doi: 10.1103/physreve.66.016210.

2. Guo L. X., Hu M. F., Xu Z. Y., Hu A. Synchronization and chaos control by quorum sensing mechanism. Nonlinear Dynamics. 2013;73(3):1253–1269. doi: 10.1007/s11071-013-0769-z.

3. Parlitz U. Estimating model parameters from time series by autosynchronization. Physical Review Letters. 1996;76(8):1232–1235. doi: 10.1103/physrevlett.76.1232.

4. Agiza H. N., Yassen M. T. Synchronization of Rossler and Chen chaotic dynamical systems using active control. Physics Letters A. 2001;278(4):191–197. doi: 10.1016/s0375-9601(00)00777-5.

5. Gao F., Tong H.-Q. Parameter estimation for chaotic system based on particle swarm optimization. Acta Physica Sinica. 2006;55(2):577–582.

6. He Q., Wang L., Liu B. Parameter estimation for chaotic systems by particle swarm optimization. Chaos, Solitons and Fractals. 2007;34(2):654–661. doi: 10.1016/j.chaos.2006.03.079.

7. Garcia-Nieto J., Olivera A. C., Alba E. Optimal cycle program of traffic lights with particle swarm optimization. IEEE Transactions on Evolutionary Computation. 2013;17(6):823–839. doi: 10.1109/tevc.2013.2260755.

8. Salahi M., Jamalian A., Taati A. Global minimization of multi-funnel functions using particle swarm optimization. Neural Computing and Applications. 2013;23(7-8):2101–2106. doi: 10.1007/s00521-012-1158-0.

9. Tao C., Zhang Y., Jiang J. J. Estimating system parameters from chaotic time series with synchronization optimized by a genetic algorithm. Physical Review E. 2007;76(1):016209. doi: 10.1103/physreve.76.016209.

10. Wang J., Sheng Z., Zhou B. H., Zhou S. D. Lightning potential forecast over Nanjing with denoised sounding derived indices based on SSA and CS-BP neural network. Atmospheric Research. 2014;137:245–256. doi: 10.1016/j.atmosres.2013.10.014.

11. Wang J., Zhou B. H., Zhou S. D., Sheng Z. Forecasting nonlinear chaotic time series with function expression method based on an improved genetic-simulated annealing algorithm. Computational Intelligence and Neuroscience. 2015;2015: Article ID 341031, 10 pages. doi: 10.1155/2015/341031.

12. Szpiro G. G. Forecasting chaotic time series with genetic algorithms. Physical Review E. 1997;55(3):2557.

13. Peifer M., Timmer J. Parameter estimation in ordinary differential equations for biochemical processes using the method of multiple shooting. IET Systems Biology. 2007;1(2):78–88. doi: 10.1049/iet-syb:20060067.

14. Yang X.-S., Deb S. Cuckoo search via Lévy flights. Proceedings of the World Congress on Nature & Biologically Inspired Computing (NaBIC '09); December 2009; Coimbatore, India. IEEE; pp. 210–214.

15. Yang X.-S., Deb S. Engineering optimisation by cuckoo search. International Journal of Mathematical Modelling and Numerical Optimisation. 2010;1(4):330–343. doi: 10.1504/IJMMNO.2010.035430.

16. Gandomi A. H., Yang X.-S., Alavi A. H. Cuckoo search algorithm: a metaheuristic approach to solve structural optimization problems. Engineering with Computers. 2013;29(1):17–35. doi: 10.1007/s00366-011-0241-y.

17. Hicks C. R. Fundamental Concepts in the Design of Experiments. Austin, Tex, USA: Saunders College Publishing; 1993.

18. Montgomery D. C. Design and Analysis of Experiments. New York, NY, USA: Wiley; 1991.

19. Leung Y.-W., Wang Y. An orthogonal genetic algorithm with quantization for global numerical optimization. IEEE Transactions on Evolutionary Computation. 2001;5(1):41–53. doi: 10.1109/4235.910464.

20. Kirkpatrick S., Gelatt C. D., Vecchi M. P. Optimization by simulated annealing. Science. 1983;220(4598):671–680. doi: 10.1126/science.220.4598.671.

21. Kim M. S., Feldman M. R., Guest C. C. Optimum encoding of binary phase-only filters with a simulated annealing algorithm. Optics Letters. 1989;14(11):545–547. doi: 10.1364/ol.14.000545.

22. Golden B. L., Skiscim C. C. Using simulated annealing to solve routing and location problems. Naval Research Logistics Quarterly. 1986;33(2):261–279. doi: 10.1002/nav.3800330209.

23. Lorenz E. N. Deterministic nonperiodic flow. Journal of the Atmospheric Sciences. 1963;20(2):130–141.

24. Lü J., Chen G. A new chaotic attractor coined. International Journal of Bifurcation and Chaos. 2002;12(3):659–661. doi: 10.1142/s0218127402004620.