Abstract

One key question in neurolinguistics is the extent to which the neural processing system for language requires linguistic experience during early life to develop fully. We conducted a longitudinal anatomically constrained magnetoencephalography (aMEG) analysis of lexico-semantic processing in 2 deaf adolescents who had no sustained language input until 14 years of age, when they became fully immersed in American Sign Language. After 2 to 3 years of language, the adolescents' neural responses to signed words were highly atypical, localizing mainly to right dorsal frontoparietal regions and often responding more strongly to semantically primed words (Ferjan Ramirez N, Leonard MK, Torres C, Hatrak M, Halgren E, Mayberry RI. 2014. Neural language processing in adolescent first-language learners. Cereb Cortex. 24 (10): 2772–2783). Here, we show that after an additional 15 months of language experience, the adolescents' neural responses remained atypical in terms of polarity. While their responses to less familiar signed words still showed atypical localization patterns, the localization of responses to highly familiar signed words became more concentrated in the left perisylvian language network. Our findings suggest that the timing of language experience affects the organization of neural language processing; however, even in adolescence, language representation in the human brain continues to evolve with experience.

Keywords: age of acquisition, anatomically constrained magnetoencephalography, critical period, plasticity, sign language

Introduction

The architectures of neural circuits are commonly modified by experience during a specific time window in development (Zhou and Merzenich 1993; Makinodan et al. 2012). In humans, reorganization of the brain after damage is greater in children than in adults (Stiles et al. 2012). In healthy development, language is acquired during this early period of heightened plasticity, but it is unknown whether this timing is essential: to what extent does the neural processing system for language require language experience during early life to develop fully? This is a difficult question to answer because infants who hear normally experience language patterns even before birth (Moon and Fifer 2000; Weikum et al. 2012). However, it is possible to investigate the question by studying individuals who are born profoundly deaf. Some deaf children, unlike those who hear, experience little language during childhood because they can neither hear the language spoken around them nor see sign language if it is absent from their environment. In some cases, deaf children experience little or no language until they have the opportunity to interact with other deaf children through sign in school or social settings (Newport 1990; Mayberry 1993; Morford 2003; Berk and Lillo-Martin 2012). These unique developmental circumstances offer a means to ask whether the neural architecture of the human language system is affected by the timing of linguistic experience in relation to age.

The present study is a longitudinal investigation of neural language processing in 2 deaf adolescents (cases) who experienced no childhood language until the age of 14 years when they became fully immersed in American Sign Language (ASL). ASL, like other sign languages, has a linguistic architecture similar to that of spoken languages and obeys linguistic rules at the level of phonology, morphology, syntax and semantics (Klima and Bellugi 1979; Sandler and Lillo-Martin 2006). When children experience sign language from birth, the trajectory and content of their language acquisition parallels the acquisition of spoken languages (Anderson and Reilly 2002; Mayberry and Squires 2006). Under these typical developmental circumstances, sign language in the mature brain is processed in a left frontotemporal brain network, similar to the network used by hearing subjects to understand speech (Hickok et al. 1996; Corina et al. 1999; Petitto et al. 2000; McCullough et al. 2005; Sakai et al. 2005; MacSweeney et al. 2006). Interestingly, this may not be the case for hearing native and non-native signers (Newman et al. 2002; Rönnberg et al. 2004). However, deaf individuals who acquire sign language at a late age, but after a successful acquisition of a spoken and/or written language (as indicated by their reading scores; MacSweeney et al. 2008), also process sign language mainly in the left frontotemporal network.

This is not the case for those deaf individuals who are not exposed to any natural language during childhood. Specifically, late first-language (L1) acquisition of sign has been linked to decreased hemodynamic activity in the classical left-hemisphere language areas and increased activity in the occipital cortex (Mayberry et al. 2011), suggesting a fundamental difference in the neural correlates of language when acquisition is delayed. Moreover, late L1 acquisition of sign has been associated with low language proficiency in adulthood and lifelong language processing difficulties across all levels of linguistic structure (phonology, lexical processing, morphosyntax, and semantics; Mayberry and Fischer 1989; Newport 1990; Mayberry and Eichen 1991; Emmorey et al. 1995; Mayberry et al. 2002; Boudreault and Mayberry 2006).

The negative effects of delayed language experience are especially prominent in those deaf individuals for whom no language experience was available during the first several years of life, and sometimes not until adolescence. However, research in this area is limited in quantity and scope, mainly because most North American and European deaf children begin receiving special services and experiencing natural language (spoken or signed) by the time they enter school. Deaf individuals without childhood language experience are commonplace in countries where they grow up in isolation from one another and services for deaf people are limited, as for example in Cambodia or Nepal (Hoffmann-Dilloway 2010; Dittmeier 2014). Furthermore, most studies on late L1 learners have used a retrospective paradigm studying adults whose onset of L1 acquisition began at a variety of ages, but who have used sign language for many years. The early stages of late L1 acquisition and its neural correlates, on the other hand, have not been studied extensively.

In North America, some otherwise healthy deaf individuals do not attend school and/or receive any special services until adolescence, due to various circumstances in their upbringing related to social and educational factors (see Morford 2003; Ferjan Ramirez et al. 2013a). In the present study, we investigate the neural correlates of language of 2 such adolescent cases, Carlos and Shawna. They had not been in contact with any spoken or signed language until the age of approximately 14 years when they began to acquire ASL through full immersion, upon placement into the same group home for deaf children.

Prior to ASL immersion, the adolescents lived with their hearing biological parents (Carlos) or guardians (Shawna) who did not use any sign language. At initial ASL immersion, they were observed to rely on behavior and limited use of gesture to communicate. Shawna was kept at home and not sent to school until the age of 12 years and did not receive any special services until age 14;7. At that point, she had received a total of 16 months of schooling, during which she was switched among a number of deaf and hearing schools. Carlos immigrated to the United States of America at the age of 11 years. In his home country, he lived with his hearing family and reportedly had received only a few months of schooling. Upon arrival to the United States of America at the age of 11, he was misplaced in a classroom for cognitively impaired children where the use of sign language was limited. When he was placed in the group home at the age of 13;8, he knew only a few ASL signs and, like Shawna, was illiterate and unable to use or comprehend spoken or signed language. For a more detailed description of their backgrounds, see Ferjan Ramirez et al. (2013a, 2014).

Thus, the 2 individuals studied here each had a unique childhood background, but what they had in common was a lack of language experience throughout childhood. Importantly, Carlos and Shawna are unlike the previously described cases of social isolation and abuse of children who hear normally (Koluchova 1972; Fromkin et al. 1974; Curtiss 1976) in that they were neither emotionally nor nutritionally deprived throughout childhood and, apart from a lack of linguistic experience and schooling, had a healthy upbringing. It is also important to emphasize that the cases' backgrounds are unlike some of the previously described US and Taiwanese deaf children reported to create a systematic gesture system, known as homesign (Morford and Hänel-Faulhaber 2010). Unlike these deaf children, who received special services, attended school by the age of 5, and developed varying levels of literacy, the present cases had received little schooling or special services, were not observed to have created a systematic gesture system, and were illiterate. Their backgrounds may thus resemble those of first-generation homesigners in Latin American countries (Senghas and Coppola 2001) except that the cases had little contact with other deaf children. After 2 to 3 years of full ASL immersion, the language expression of both adolescents consisted primarily of short and simple utterances. Consistent with their level of syntactic development, their vocabularies were limited in size but included words from all syntactic categories (nouns, verbs, adjectives, and grammatical words; see also Ferjan Ramirez et al. 2013a).

We previously conducted an anatomically constrained magnetoencephalography (aMEG) study with the cases to investigate lexico-semantic processing after only 2–3 years of ASL exposure. Only those ASL lexical items that were part of their vocabularies were used as experimental stimuli. The cases' neural responses to ASL signs were compared with those of 12 deaf native signers and 11 hearing second language (L2) learners of ASL. At the time of the study (Ferjan Ramirez et al. 2014), both adolescents showed semantically modulated activity that localized primarily to the right superior parietal, anterior occipital, and dorsolateral prefrontal areas. They also exhibited limited semantically modulated activity in parts of the classical left-hemisphere language network; however, the left-hemisphere responses were increased rather than decreased by semantic priming. The neural activation patterns of the cases differed markedly from those of the 2 sets of controls, individuals born deaf who experienced sign language as infants (native learners), and individuals born with normal hearing who experienced spoken language as infants and learned sign language as a second language as young adults (L2 learners). In agreement with previous research, both control groups showed semantically modulated activity that localized primarily to the classical left-lateralized frontotemporal network, and which decreased when words were semantically primed (Leonard et al. 2012), consistent with the well-characterized N400 response (Kutas and Federmeier 2011).

These aMEG results suggested that the cases' neural processing of word meaning after 2 to 3 years of language experience was atypical, both in terms of localization and polarity of the semantically modulated neural activity. We speculated that the unique patterns of right superior parietal and right dorsolateral prefrontal activity observed in Carlos and Shawna may be specifically related to their lack of language exposure during childhood. The next important question we ask here is whether and how these neural responses change as a consequence of continued language experience.

One possibility is that Carlos' and Shawna's neural representations of word meaning will remain unchanged, which would suggest that their having grown up without language experience has permanently altered the way in which their brains process language. Half a century ago, Penfield and Roberts proposed that after early childhood, the human brain becomes “stiff and rigid” (Penfield and Roberts 1959) and language acquisition is no longer possible. A decade later, Lenneberg (1967) proposed that a gradual left-hemisphere specialization for language, completed by puberty, limited language acquisition after adolescence. Research since then has found that the left hemisphere is specialized for language from a very early age (Dehaene-Lambertz et al. 2002; Imada et al. 2006; Travis et al. 2011). For example, Travis and colleagues found that the lexico-semantic neural responses to English words in 12- to 18-month-olds are mostly adult-like and localize primarily to the left frontotemporal areas, although some additional right-hemisphere activity was noted when infants were directly compared with adults. However, the degree to which neural activation patterns in infants are contingent upon language experience is unknown, because nearly all hearing children experience language from before birth.

The speculations as to why learning a language after adolescence often results in less than native-like proficiency typically do not distinguish between the radically different situations of first (L1) versus second (L2) language acquisition. Research with the population of deaf signers where both kinds of language learners co-exist indicates that the effects of age of acquisition are qualitatively different and much more severe for L1 compared with L2. A number of studies with late L1 learners indicate that delayed exposure to natural language has severe and lifelong effects on language proficiency and processing. Specifically, older ages of L1 acquisition have been associated with decreased phonological and morpho-syntactic abilities, as well as a decline in sentence and narrative comprehension (Newport 1990; Emmorey et al. 1995; Mayberry et al. 2002). Late age of L1 acquisition in sign has also been associated with atypical localization of language processing in the brain (Meyer et al. 2007; Mayberry et al. 2011). Our previous MEG study of the same cases described here (Ferjan Ramirez et al. 2014) found atypical localization after 2 to 3 years of L1 learning. Here we ask whether these atypical patterns are stable, or if they change as the cases experience additional language in both formal educational and natural settings. Our results bear on the question of the extent to which language representations are plastic when they are formed for the first time in adolescence.

Materials and Methods

Participants

Carlos' and Shawna's backgrounds were summarized earlier and have been described in detail in our previous publications (Ferjan Ramirez et al. 2013a, 2014). In brief, these 2 adolescents experienced their first language, ASL, at the age of ∼14 years. The present neuroimaging data were collected after they had experienced 39 (Shawna) and 51 (Carlos) months of ASL immersion and are a follow-up investigation of our initial study, henceforth Visit 1 (Ferjan Ramirez et al. 2014), at which Shawna had experienced 24 months and Carlos 36 months of ASL immersion. The present study, Visit 2, was thus conducted after 15 months of additional language exposure. During this time, both cases continued to live in the same group home for deaf adolescents where they interacted with their peers and staff members exclusively through ASL. They also continued to attend the same school, where their main language of communication was ASL. The adolescents' results from Visit 2 are compared with those from Visit 1, as well as with 2 control groups: 12 young deaf adults who acquired sign language from birth (native signers; 6 females, 17–36 years), and 11 young hearing adults who studied ASL in college (L2 signers; 10 females; 19–33 years). All control participants were right-handed adults with no history of neurological or psychological impairment. The native signers were all profoundly deaf and acquired ASL from birth from their deaf parents. The L2 learners were hearing native English speakers who had received 40 to 50 weeks of college-level ASL instruction and used ASL on a regular basis at the time of the study. The cases' results from Visit 1 and the results from the control groups have been reported in detail elsewhere (Leonard et al. 2012, 2013; Ferjan Ramirez et al. 2014) and are reported here only insofar as they are relevant and necessary to the interpretation of the results of the 2 adolescents at Visit 2.
For a summary of participant background characteristics, see Table 1.

Table 1.

Participants' background information and task performance: mean (SD)

| Participant(s) | Age | Age of ASL acquisition | Picture-sign matching accuracy (%) | Response time (ms) |
|---|---|---|---|---|
| Native signers (n = 12) | 30 (6;4) | Birth | 94% (4%) | 619.1 (97.5) |
| L2 learners (n = 11) | 22;5 (3.8) | 20 (3;9) | 89% (5%) | 719.5 (92.7) |
| Carlos | 16;10 (Visit 1) / 18;1 (Visit 2) | 13;8 | 85% (Visit 1) / 89% (Visit 2) | 733.1 (Visit 1) / 650.6 (Visit 2) |
| Shawna | 16;9 (Visit 1) / 18 (Visit 2) | 14;7 | 84% (Visit 1) / 87% (Visit 2) | 811.4 (Visit 1) / 569.4 (Visit 2) |

Stimuli and Task

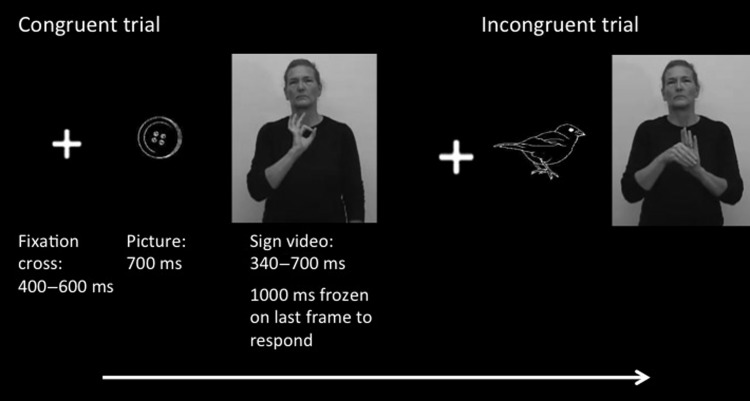

The same stimuli and task from Visit 1 were used for Visit 2. In brief, we used an N400 picture-priming paradigm, similar to what has previously been used in a number of developmental studies (Friedrich and Friederici 2005, 2010; Travis et al. 2011). The N400 (N400m in MEG) is an event-related brain response that peaks about 400 ms after the presentation of a word (or other meaningful stimulus) in multiple modalities and is modulated by the degree of difficulty of contextual integration, word frequency and repetition, and other factors (Kutas and Hillyard 1980; Kutas and Federmeier 2011). We recorded MEG as the participants viewed pictures of objects followed by ASL signs that either matched (congruent; for example, “book-book”) or did not match (incongruent; for example, “book-dog”) the picture in meaning (Fig. 1). Each sign appeared in both the congruent and incongruent conditions, thus permitting averages to be constructed that were exactly matched on a sensory level between conditions. Special care was taken so that when a trial was rejected for a particular stimulus in 1 condition, the corresponding trial to the same stimulus in the other condition was also rejected. Consequently, the incongruent–congruent differences described here cannot be due to uncontrolled sensory differences in the ASL signs. All stimulus signs were concrete nouns and were already part of Carlos' and Shawna's vocabularies at Visit 1. The task was to press a button when the sign matched the picture; response hand was counterbalanced across blocks within participants. The cases and native signers saw 6 blocks of 102 trials each, and the L2 signers saw 3 blocks of 102 trials each. Prior to scanning, all participants performed a short practice run that used a separate set of ASL signs. For a more detailed description of stimuli and task, see Ferjan Ramirez et al. (2014).
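The paired trial rejection described above can be illustrated with a minimal sketch. It assumes, for simplicity, one trial per stimulus per condition; the function name `paired_rejection` and the example stimuli are hypothetical and are not taken from the authors' analysis code.

```python
def paired_rejection(rejected_congruent, rejected_incongruent, all_stimuli):
    """Return the stimuli whose trials are kept in BOTH conditions.

    A stimulus is dropped from both conditions as soon as its trial was
    rejected in either one, so the two averages stay matched stimulus
    for stimulus.
    """
    rejected_any = set(rejected_congruent) | set(rejected_incongruent)
    return [s for s in all_stimuli if s not in rejected_any]


# Hypothetical example: "dog" was rejected in the congruent condition and
# "ball" in the incongruent condition, so both are removed from both averages.
stimuli = ["book", "dog", "cat", "ball"]
kept = paired_rejection({"dog"}, {"ball"}, stimuli)
print(kept)
```

Because the same stimulus list feeds both condition averages, any remaining incongruent–congruent difference cannot come from one condition containing signs that the other lacks.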

Figure 1.

Schematic diagram of task design. Each picture and sign appeared in both the congruent and incongruent conditions. Averages of congruent versus incongruent trials thereby compared responses with exactly the same stimuli.

Anatomically Constrained MEG (aMEG) Analysis

In the above-described picture-priming paradigm, we expect to see a difference between congruent and incongruent trials (N400m), which we localized with aMEG, a noninvasive neurophysiological technique that constrains the MEG activity to the cortical surface as determined by high-resolution MRI. This noise-normalized linear inverse technique has been used extensively to characterize the spatiotemporal dynamics of spoken, written, and signed word processing (Halgren et al. 2002; Marinkovic et al. 2003; Travis et al. 2011; Leonard et al. 2012) and has been validated by direct intracranial recordings (Halgren, Baudena, Heit, Clarke, Marinkovic, Clarke 1994; Halgren, Baudena, Heit, Clarke, Marinkovic, Chauvel et al. 1994; McDonald et al. 2010). However, it must always be borne in mind that source estimation from extracranial MEG is an ill-posed problem (Dale et al. 2000).

In our previous work using the same picture-priming paradigm and stimuli with typically developing hearing and deaf adults, and with the cases, we have observed that the strongest N400 semantic effect tends to occur between 300 and 350 ms postword onset; however, the results are similar when a broader time window is used (for example, 200–400 ms; see Ferjan Ramirez et al. 2014). For the purposes of comparison between Visit 1 and Visit 2, the current study uses the 300- to 350-ms time-window. Analyses using a broader time-window are shown as supplemental figures (Supplementary Figs S1 and S2). Shawna's and Carlos' aMEG responses were studied at the individual level and compared with their own aMEG responses at Visit 1, as well as with the aMEG responses of both control groups. The group data (native signers and L2 learners) represent an average of F-values from individual subjects. All between-subject statistics were performed on the individual F-values. Statistical comparisons are made on region of interest (ROI) time courses, which were selected based on information from the average incongruent–congruent subtraction across all subjects (cases, native signers, and L2 signers).

MEG was recorded in a magnetically shielded room (IMEDCO-AG, Switzerland), using a 306-channel (102 magnetometers and 204 planar gradiometers) Neuromag Vectorview system (Elekta AB). The main fiducial positions, including the nose, nasion, preauricular points, and additional head points, were digitized to allow for later co-registration with high-resolution MRI images. Data were collected at a continuous sampling rate of 1000 Hz with minimal filtering (0.1 to 200 Hz). Visually identified bad channels (channels with excessive noise, no signal, or unexplained artifacts) were excluded from further analyses, as were trials with large transients (>3000 fT/cm for gradiometers). Blink artifacts were removed using independent component analysis by pairing each MEG channel with the electro-oculogram channel and removing the independent component that contained the blink (Delorme and Makeig 2004).

A T1-weighted structural MRI was acquired on a GE 1.5-T EXCITE HG scanner, and participants were allowed to sleep or rest during MRI acquisition. Using FreeSurfer (http://surfer.nmr.mgh.harvard.edu/), the cortex was reconstructed from each individual participant's MRI. A boundary element method forward solution was derived from the inner skull boundary (Oostendorp and Van Oosterom 1992), and the cortical surface was tiled with ∼2500 dipole locations per hemisphere (Dale et al. 1999; Fischl et al. 1999). The orientation-unconstrained MEG activity of each dipole was estimated every 4 ms, and the noise sensitivity at each location was estimated from the average pre-stimulus baseline from −190 to −20 ms. Individual subject aMEG movies were constructed from the averaged data in the trial epoch for each condition using only data from the gradiometers. Where group analyses were conducted (native signers and L2 signers), data were combined across subjects by taking the mean activity at each vertex on the cortical surface and plotting it on an average FreeSurfer brain (version 450) at each latency. Vertices were matched across participants by morphing the reconstructed cortical surfaces into a common sphere, optimally matching gyral-sulcal patterns and minimizing shear (Sereno and Dale 1996; Fischl et al. 1999). These aMEG maps can be interpreted as estimates of signal-to-noise at each point on the cortical surface; as such, they are analogous to the “z-score maps” often displayed in fMRI analyses (Dale et al. 2000). Statistical comparisons were made on ROI time courses, which were identical to those defined for our previous analyses of Visit 1 data (Ferjan Ramirez et al. 2014), where they were based on maps made from the average incongruent–congruent subtraction across all subjects (12 native signers, 11 L2 signers, and the 2 cases).

Results

Behavioral Results

Both control groups performed with high accuracy and fast reaction times (RTs) (Table 1). The cases showed improvement in task performance between the 2 visits, especially in RT. At Visit 1, Carlos and Shawna performed at 85% (RT: 733 ms) and 84% (RT: 811 ms), respectively, which was about 2.5 standard deviations away from the native signers' group mean, and within 1 standard deviation of the L2 group. At Visit 2, Carlos' accuracy improved by 4 percentage points and his RT decreased by 11%. Shawna's accuracy improved by 3 percentage points, and her RT decreased by 30%. These behavioral results show that the adolescents' familiarity with the stimulus signs increased with the additional 15 months of ASL experience between Visits 1 and 2. Their accuracy levels were now similar to those of the hearing L2 learners and approximated that of the deaf native signers, and their RTs were now within 1 standard deviation of both control groups.
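The relative RT changes and the distances from the control-group means quoted above can be recomputed from the values in Table 1. The sketch below is only an illustration of that arithmetic; the helper names `percent_change` and `sd_distance` are hypothetical.

```python
# Values copied from Table 1: accuracy in %, RT in ms.
controls = {
    "native": {"acc_mean": 94.0, "acc_sd": 4.0, "rt_mean": 619.1, "rt_sd": 97.5},
    "L2": {"acc_mean": 89.0, "acc_sd": 5.0, "rt_mean": 719.5, "rt_sd": 92.7},
}
cases = {
    "Carlos": {"acc": (85.0, 89.0), "rt": (733.1, 650.6)},  # (Visit 1, Visit 2)
    "Shawna": {"acc": (84.0, 87.0), "rt": (811.4, 569.4)},
}


def percent_change(before, after):
    """Relative change from Visit 1 to Visit 2, in percent."""
    return 100.0 * (after - before) / before


def sd_distance(value, mean, sd):
    """Signed distance of a case from a control-group mean, in SD units."""
    return (value - mean) / sd


for name, data in cases.items():
    rt_change = percent_change(*data["rt"])
    acc_gain = data["acc"][1] - data["acc"][0]  # percentage points
    print(f"{name}: RT change {rt_change:.1f}%, accuracy gain {acc_gain:.0f} points")
    for group, ctrl in controls.items():
        for visit, acc in zip(("Visit 1", "Visit 2"), data["acc"]):
            d = sd_distance(acc, ctrl["acc_mean"], ctrl["acc_sd"])
            print(f"  {visit} accuracy vs {group}: {d:+.2f} SD")
```

The same `sd_distance` helper can be applied to the RT columns by substituting `rt_mean` and `rt_sd`.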

aMEG Results

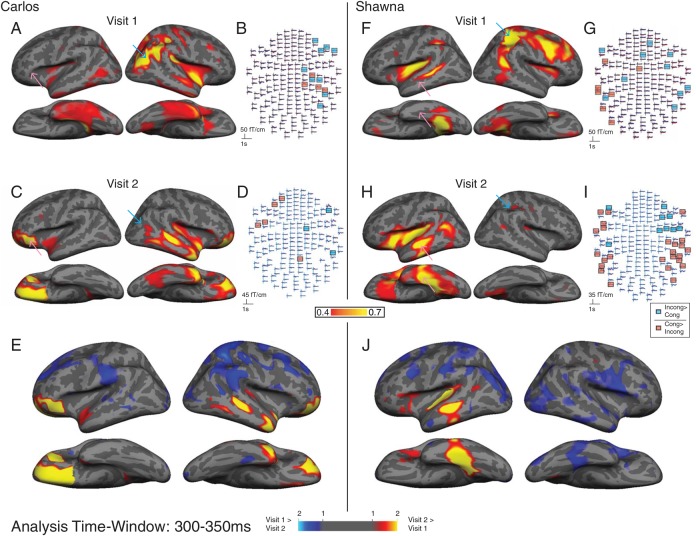

Figure 2 shows the aMEG maps of the strength of the incongruent–congruent activity for Carlos (A–D) and Shawna (F–I). Results from Visit 1 are shown on the top, and results from Visit 2 are shown right below Visit 1 (Fig. 2A, F are reprinted from Ferjan Ramirez et al. 2014). The aMEG maps are a measure of signal-to-noise ratio or the F-ratio of explained variance over unexplained variance. The areas shown in yellow and red represent the strongest neural activity relative to baseline. We also examined whether the differences between incongruent and congruent conditions were due to larger signals in one or the other direction by examining the MEG sensor-level data directly. Panels B, D, G, and I correspond to Panels A, C, F, and H, respectively, but indicate the effects of semantic priming on the estimated brain activity in individual sensors, and in particular whether the activity patterns in the aMEG maps are caused by the expected N400 responses (incongruent > congruent), or a response in the opposite direction (congruent > incongruent). Using a random-effects resampling procedure (Maris and Oostenveld 2007), we found the MEG channels where the semantic effects were significant (P < 0.01) for incongruent > congruent (Panels B, D, G, and I, blue channels) or congruent > incongruent (Panels B, D, G, and I, red channels).
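As a rough illustration of the resampling idea behind such sensor-level tests, the sketch below runs a simple label-permutation test on single-trial amplitudes from one hypothetical channel. It is a deliberately simplified stand-in: it does not implement the procedure of Maris and Oostenveld (2007) as used in the study (for example, it handles neither multiple time points nor multiple channels), and the function name, trial values, and number of permutations are assumptions.

```python
import random


def permutation_p_value(cond_a, cond_b, n_permutations=2000, seed=0):
    """Two-sided permutation test on the difference of condition means.

    Condition labels are shuffled across trials; the p-value is the share
    of shuffles whose absolute mean difference is at least as large as
    the observed one (with the usual +1 correction).
    """
    rng = random.Random(seed)
    n_a = len(cond_a)
    n_b = len(cond_b)
    observed = abs(sum(cond_a) / n_a - sum(cond_b) / n_b)
    pooled = list(cond_a) + list(cond_b)
    extreme = 0
    for _ in range(n_permutations):
        rng.shuffle(pooled)
        diff = abs(sum(pooled[:n_a]) / n_a - sum(pooled[n_a:]) / n_b)
        if diff >= observed:
            extreme += 1
    return (extreme + 1) / (n_permutations + 1)


# Hypothetical single-trial amplitudes (arbitrary units) for one channel.
incongruent = [5.1, 4.8, 5.5, 6.0, 5.2, 4.9, 5.7, 5.3]
congruent = [1.2, 0.8, 1.5, 1.1, 0.9, 1.3, 1.0, 1.4]
p = permutation_p_value(incongruent, congruent)
print(f"p = {p:.4f}")
```

The sign of the observed mean difference, checked separately, would indicate whether a channel belongs to the incongruent > congruent or the congruent > incongruent set.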

Figure 2.

Contrasting semantic activation patterns to signs for Carlos and Shawna at Visit 1 and Visit 2. aMEG maps (A, C, F, H): The right parietal activation prominent in Visit 1 (cyan arrows, A, F) is no longer present in Visit 2 (cyan arrows, C, H). Conversely, the left posteroventral prefrontal activation in Carlos and the left anterior temporal activation in Shawna in Visit 2 (pink arrows, C, H) are greatly increased compared with Visit 1 (pink arrows, A, F). MEG sensor data (B, D, G, I): At both visits, a large proportion of the cases' neural responses to words are increased (congruent > incongruent, red channels) rather than decreased (incongruent > congruent, blue channels) by semantic priming. Statistical significance was determined by a random-effects resampling procedure (Maris and Oostenveld 2007) and reflects time periods where incongruent and congruent conditions diverge at P < 0.01. Z-score maps (E, J): brain areas where semantic modulation in Carlos (E) and Shawna (J) is greater in Visit 1 compared with Visit 2 (blue) and areas where semantic modulation is greater in Visit 2 compared with Visit 1 (yellow and red). Maps are thresholded at 1 < z < 2.

At Visit 1 (A, B, F, and G), Carlos' and Shawna's signature of word comprehension as indicated by the expected N400 responses (incongruent > congruent; channels highlighted in blue) primarily localized to right superior parietal, anterior occipital, and dorsolateral prefrontal areas. Carlos' aMEG showed minimal left-hemisphere activity (A), and there were no significant incongruent–congruent left-hemisphere effects at the individual sensor level (B). Shawna's aMEG exhibited some effects in parts of the classical left-hemisphere language network (F), but these were predominantly due to congruent > incongruent activity (G, channels highlighted in red).

The cases' neural responses to signs at Visit 2 showed a qualitatively different pattern compared with Visit 1. Most notably, the semantically modulated activity in the right superior parietal and dorsolateral prefrontal areas decreased in magnitude compared with the first visit (Visit 1: A and F; Visit 2: C and H). At the same time, the left-hemisphere semantic effects increased in magnitude compared with the first visit and localized to the superior and inferior temporal cortex, and to perisylvian and orbitofrontal areas. Shawna's sensor-level data (I) still suggest significant right hemisphere activity at Visit 2; however, these activations are weaker than those in her left hemisphere, as indicated by the fact that they do not appear in the aMEG maps at the chosen threshold. Carlos, whose left hemisphere exhibited no significant channels at Visit 1 (B), showed a bilateral distribution of semantically related activity at Visit 2. Together, these results suggest that the neural language processing of both cases has undergone a leftward shift over time. Furthermore, with increasing language experience, their semantic processing appeared to shift from being mainly in parietal and dorsolateral prefrontal regions to temporal and ventral frontal regions.

It is important to note, however, that a large proportion of the cases' semantically modulated activity, particularly in the left hemisphere, still shows an unexpected polarity (i.e., shows increases instead of decreases to semantic priming; Fig. 2D, I). Such responses were also present at Visit 1 and have previously been observed in an ERP study with infants prior to the emergence of the canonical N400 patterns (Friedrich and Friederici 2005, 2010). We have also observed such responses in relatively inexperienced L2 users of ASL and have previously suggested that they may represent a neural signature of language learning (Ferjan Ramirez et al. 2014).

We next mapped the z-scores of the aMEG for Carlos' and Shawna's Visit 1 and compared them with their z-scores at Visit 2 (Fig. 2E, J). These maps were constructed by converting aMEG values of the difference between conditions (incongruent–congruent trials) to z-scores separately in Visit 1 and 2. The difference in z-scores between Visits was calculated at each vertex on the model cortex and plotted separately for Carlos (Fig. 2E) and Shawna (Fig. 2J). These z-score maps show the brain areas where semantic modulation in Carlos and Shawna is greater in Visit 1 compared with Visit 2 (shown in blue) and areas where semantic modulation is greater in Visit 2 compared with Visit 1 (shown in yellow and red). For Carlos (Fig. 2E), the activity at Visit 1 was greater than that at Visit 2 in the right superior parietal cortex and in the left parietal cortex and superior frontal cortex. His activity at Visit 2 was greater than that at Visit 1 in the left posteroventral prefrontal cortex, in the right frontal and temporal pole, and in the right superior temporal cortex. For Shawna (Fig. 2J), the activity at Visit 1 was greater than that at Visit 2 in the left superior parietal and parieto-occipital cortex, as well as in the right occipital and right parietal cortex. Her activity at Visit 2 was greater than that at Visit 1 in the left superior and inferior temporal cortex and in the left planum temporale (PT).
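The construction of the between-visit maps can be sketched as follows. The text does not specify the reference distribution used for the z-scoring, so this sketch assumes that values are standardized across vertices within each visit; the function names and the three-vertex example are hypothetical.

```python
from statistics import mean, pstdev


def zscore(values):
    """Standardize a list of per-vertex values (assumed: across vertices)."""
    mu = mean(values)
    sigma = pstdev(values)
    return [(v - mu) / sigma for v in values]


def visit_difference_map(visit1, visit2):
    """Per-vertex z-score difference, Visit 2 minus Visit 1.

    Positive entries mark vertices where semantic modulation is relatively
    greater at Visit 2, negative entries where it is greater at Visit 1.
    """
    z1 = zscore(visit1)
    z2 = zscore(visit2)
    return [b - a for a, b in zip(z1, z2)]


# Hypothetical incongruent-congruent aMEG values at three vertices.
visit1_values = [1.0, 2.0, 3.0]
visit2_values = [3.0, 2.0, 1.0]
diff_map = visit_difference_map(visit1_values, visit2_values)
print(diff_map)
```

Standardizing each visit separately before subtracting puts the two sessions on a common scale, so that overall differences in signal strength between recordings do not dominate the map.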

To assess the statistical significance of the changes in activity patterns between Visits 1 and 2, we conducted repeated-measures analysis of variance (ANOVA) tests on single-trial brain responses, separately for Carlos and for Shawna. Our statistical analyses were conducted in 9 bilateral regions of interest (ROIs) that were defined in our previous analyses of Visit 1 data (Ferjan Ramirez et al. 2014) by considering the aMEG movies of grand-average activity across the whole brain of all 12 native signers, all 11 L2 signers, and the 2 cases. The strongest clusters of neural activity across all the subjects were selected for statistical comparison.

In addition to this z-score comparison of the cases to the control groups, activity was compared across individual trials in each subject to evaluate the effects of visit, hemisphere and ROI, and especially their interactions. Specifically, repeated-measures ANOVA tests were conducted with a 9 (ROI) × 2 (Hemisphere) × 2 (Visit) design, using the normalized aMEG values of the difference between each congruent trial and its incongruent counterpart as the dependent variable. Measurements were conducted in the previously defined N400 time-window (300–350 ms). Results confirmed that the cortical distribution of semantically modulated activity changed with language experience in both Carlos and Shawna. While Carlos' overall lateralization did not change significantly between Visits (Visit × Hemisphere: F(1,539) = 1.4, P = 0.2; see also Fig. 2A,C,E), his cortical reorganization was reflected in a significant Visit × ROI interaction (F(8,532) = 21.2, P < 0.001) and a Visit × Hemisphere × ROI interaction (F(8,532) = 20.3, P < 0.001). Shawna's semantic responses underwent a shift into left-hemisphere canonical language areas (see Fig. 2F,H,J), which was reflected by interaction effects between Visit and all other factors (Visit × ROI: F(8,512) = 24.6, P < 0.001; Visit × Hemisphere: F(1,519) = 118.6, P < 0.001; Visit × ROI × Hemisphere: F(8,512) = 54.6, P < 0.001).

Further analyses were conducted to find out which ROIs in Carlos and Shawna showed the effect of Visit. At each ROI, in each hemisphere (a total of 18 ROIs), an unpaired two-tailed t-test was conducted, using the normalized single-trial difference waves as inputs, and testing whether the N400 effect of congruency in Visit 1 differed from that in Visit 2. Because there were 18 t-tests per subject, a Bonferroni-corrected alpha level of 0.0028 was used. In accordance with the analyses presented in Figure 2 (A–E), Carlos showed significantly greater activity in Visit 2 compared with Visit 1 in a number of left and right perisylvian areas (df = 539; left inferior frontal gyrus (IFG) t = 4.7, P < 0.001; left PT t = 3.7, P < 0.001; left superior temporal sulcus (STS) t = 4.0, P < 0.001; left temporal pole (TP) t = 5.8, P < 0.001; right anterior insula (AI) t = 3.6, P < 0.001; right IFG t = 7.6, P < 0.001; right inferior temporal lobe (IT) t = 4.4, P < 0.001; right lateral occipitotemporal cortex (LOT) t = 3.7, P < 0.001). Also in agreement with her dSPM and z-score maps (Fig. 2F–J), Shawna showed significantly greater activity in Visit 2 compared with Visit 1 in many perisylvian areas, almost exclusively in the left hemisphere (df = 519; left AI t = 9.9, P < 0.001; left IFG t = 9.6, P < 0.001; left intraparietal sulcus (IPS) t = 4.8, P < 0.001; left IT t = 8.3, P < 0.001; left STS t = 7.5, P < 0.001; left TP t = 4.0, P < 0.001; left pSTS t = 5.0, P < 0.001; right PT t = 3.4, P = 0.001), and reduced activity in Visit 2 compared with Visit 1 in some right hemisphere areas (right IFG t = 5.9, P < 0.001; right IT t = 5.6, P < 0.001; right TP t = 5.9, P < 0.001).
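The arithmetic behind the corrected alpha level, and the form of the per-ROI comparison, can be illustrated with a short sketch. It assumes a pooled-variance (Student) t-test, which is consistent with the reported degrees of freedom (n1 + n2 − 2) but is not stated explicitly in the text; the function names and the toy inputs are hypothetical.

```python
from math import sqrt
from statistics import mean, variance


def bonferroni_alpha(family_alpha, n_tests):
    """Per-test alpha level under a Bonferroni correction."""
    return family_alpha / n_tests


def pooled_t(sample_a, sample_b):
    """Unpaired pooled-variance t statistic and its degrees of freedom.

    Assumption: equal variances in the two samples (Student's t-test).
    """
    na, nb = len(sample_a), len(sample_b)
    df = na + nb - 2
    pooled_var = ((na - 1) * variance(sample_a)
                  + (nb - 1) * variance(sample_b)) / df
    t = (mean(sample_a) - mean(sample_b)) / sqrt(pooled_var * (1 / na + 1 / nb))
    return t, df


# 18 tests per subject (9 ROIs x 2 hemispheres) at a family-wise alpha of 0.05.
alpha_per_test = bonferroni_alpha(0.05, 18)  # about 0.0028

# Toy single-trial values for one ROI (made-up numbers, not study data).
t_stat, dof = pooled_t([1.0, 2.0, 3.0], [2.0, 3.0, 4.0])
```

A test would count as significant in this scheme only if its two-tailed P-value fell below `alpha_per_test`.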

The next step of our analysis was to directly compare the spatial pattern of statistically significant semantically modulated neural activity in Carlos and Shawna with that of the native and L2 signers. Statistical analyses were conducted in the same 9 bilateral ROIs. As in our previous analyses of Visit 1 (Ferjan Ramirez et al. 2014), significant ROIs were defined as those where Shawna's or Carlos' aMEG values were more than 2.5 standard deviations away from the mean value of each control group. Such a strict threshold was applied (a z-score of 2.5 corresponds to a two-tailed P-value of 0.0124) because comparisons were conducted in multiple ROIs.
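As a worked illustration of this criterion, the sketch below computes the two-tailed P-value that corresponds to a z-score of 2.5 under a normal distribution and applies the threshold to two values from Table 2. The function names are hypothetical, and the calculation uses the rounded means and standard deviations printed in the table rather than the unrounded data.

```python
from math import erfc, sqrt


def two_tailed_p(z):
    """Two-tailed P-value of a z-score under the standard normal."""
    return erfc(abs(z) / sqrt(2))


def exceeds_threshold(case_value, group_mean, group_sd, threshold=2.5):
    """True if the case lies more than `threshold` SDs from the group mean."""
    z = (case_value - group_mean) / group_sd
    return abs(z) > threshold


p_at_threshold = two_tailed_p(2.5)  # about 0.0124

# Shawna, left STS, Visit 2 (0.65) against each control group (Table 2).
shawna_vs_native = exceeds_threshold(0.65, 0.43, 0.08)  # z = 2.75
shawna_vs_l2 = exceeds_threshold(0.65, 0.32, 0.09)      # z is about 3.67

# Carlos, left STS, Visit 2 (0.26) against native signers: z is about -2.1.
carlos_vs_native = exceeds_threshold(0.26, 0.43, 0.08)
```

With these inputs, Shawna's left STS value exceeds the threshold relative to both control groups, whereas Carlos' does not.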

Table 2 presents normalized aMEG values for the subtraction of incongruent–congruent trials for both control groups (A) and for Carlos and Shawna at Visit 2 (B) and Visit 1 (C). At Visit 1, the statistically significant differences between the cases and the control groups were all localized to the right hemisphere ROIs. Carlos showed greater activity than native signers in right LOT and posterior superior temporal sulcus (pSTS), and greater activity than the L2 signers in the right IPS. Similarly, Shawna showed greater activity than the natives in right IFG, IPS, and pSTS, and greater activity than the L2 signers in the right IPS. All of these differences between the cases and the control groups have disappeared by Visit 2, with the only statistically significant effect now localizing to the left STS for Shawna, where neural activity is stronger than that of both control groups. These results thus again suggest that the cases' distribution of semantically related neural activity in response to words has undergone a leftward shift.

Table 2.

Normalized aMEG values for the subtraction of incongruent–congruent trials

(A) Control groups

| ROI | Native LH, mean (sd) | Native RH, mean (sd) | L2 LH, mean (sd) | L2 RH, mean (sd) |
|---|---|---|---|---|
| AI | 0.39 (0.14) | 0.40 (0.18) | 0.33 (0.12) | 0.36 (0.13) |
| IFG | 0.29 (0.12) | 0.30 (0.12) | 0.26 (0.12) | 0.28 (0.14) |
| IPS | 0.37 (0.10) | 0.32 (0.13) | 0.36 (0.13) | 0.28 (0.08) |
| IT | 0.43 (0.12) | 0.35 (0.11) | 0.36 (0.13) | 0.34 (0.18) |
| LOT | 0.29 (0.12) | 0.29 (0.10) | 0.30 (0.16) | 0.32 (0.15) |
| PT | 0.54 (0.14) | 0.45 (0.17) | 0.45 (0.16) | 0.43 (0.18) |
| STS | 0.43 (0.08) | 0.41 (0.18) | 0.32 (0.09) | 0.36 (0.16) |
| TP | 0.45 (0.16) | 0.46 (0.15) | 0.34 (0.14) | 0.38 (0.16) |
| pSTS | 0.33 (0.09) | 0.27 (0.07) | 0.34 (0.16) | 0.32 (0.15) |

(B) Cases time 2

| ROI | Carlos LH | Carlos RH | Shawna LH | Shawna RH |
|---|---|---|---|---|
| AI | 0.42 | 0.35 | 0.60 | 0.24 |
| IFG | 0.28 | 0.28 | 0.26 | 0.16 |
| IPS | 0.20 | 0.21 | 0.26 | 0.26 |
| IT | 0.24 | 0.39 | 0.62 | 0.16 |
| LOT | 0.31 | 0.40 | 0.46 | 0.19 |
| PT | 0.26 | 0.48 | 0.59 | 0.33 |
| STS | 0.26 | 0.62 | 0.65 (a, b) | 0.17 |
| TP | 0.29 | 0.59 | 0.41 | 0.18 |
| pSTS | 0.24 | 0.30 | 0.48 | 0.27 |

(C) Cases time 1

| ROI | Carlos LH | Carlos RH | Shawna LH | Shawna RH |
|---|---|---|---|---|
| AI | 0.27 | 0.43 | 0.38 | 0.43 |
| IFG | 0.26 | 0.31 | 0.34 | 0.60 (a) |
| IPS | 0.31 | 0.54 (b) | 0.41 | 0.66 (a, b) |
| IT | 0.50 | 0.43 | 0.41 | 0.27 |
| LOT | 0.43 | 0.57 (a) | 0.17 | 0.29 |
| PT | 0.33 | 0.57 | 0.52 | 0.33 |
| STS | 0.26 | 0.40 | 0.47 | 0.23 |
| TP | 0.42 | 0.51 | 0.27 | 0.20 |
| pSTS | 0.26 | 0.47 (a) | 0.35 | 0.54 (a) |

LH, left hemisphere; RH, right hemisphere.

(a) 2.5 standard deviations (P = 0.0124) from native mean.

(b) 2.5 standard deviations (P = 0.0124) from L2 mean.

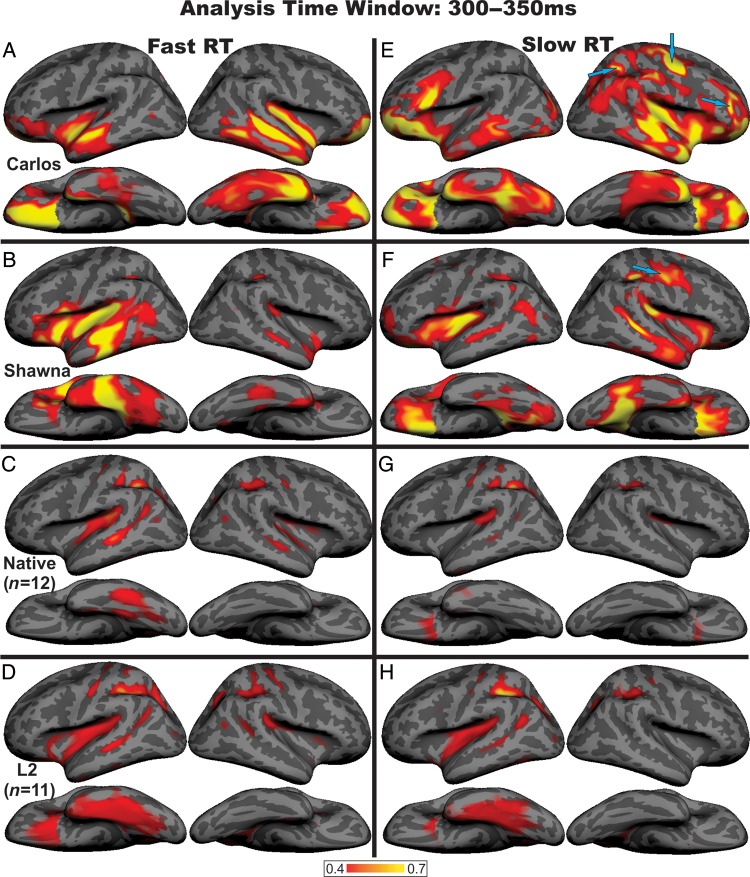

Finally, we asked whether this emerging leftward shift in Carlos and Shawna can be specifically related to their growing familiarity with ASL. If this is indeed the case, their neural responses to those stimulus words with which they are most familiar should be more left-lateralized than the neural responses to those words with which they are less familiar. To test this hypothesis, we split their responses by median RT, the rationale being that those words with fast RTs are the ones with which they are most familiar, whereas those with slower RTs are words with which they may be less familiar. For each case, 2 aMEG maps were created, 1 for the faster RT words and 1 for the slower RT words. The same analyses were conducted with each control participant; these individual aMEG maps were then combined across all subjects within each group, creating 2 group average aMEG maps for each control group, 1 for the fast RT words and another for the slow RT words.
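The median split itself is simple to express in code. The sketch below is illustrative only: the trial representation (word, RT in ms) and the function name `median_rt_split` are hypothetical, and assigning trials that fall exactly at the median to the fast half is an assumption, since the text does not say how ties were handled.

```python
from statistics import median


def median_rt_split(trials):
    """Split (word, rt_ms) trials into fast and slow halves by median RT.

    Assumption: trials with RT equal to the median go to the fast half.
    """
    cutoff = median(rt for _, rt in trials)
    fast = [trial for trial in trials if trial[1] <= cutoff]
    slow = [trial for trial in trials if trial[1] > cutoff]
    return fast, slow


# Toy trials (made-up words and RTs, not study data).
toy_trials = [("a", 400), ("b", 900), ("c", 500), ("d", 1200)]
fast_trials, slow_trials = median_rt_split(toy_trials)
```

Each half would then be averaged separately to produce the fast-RT and slow-RT aMEG maps described above.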

The results presented in Figure 3 indicate that Shawna's and Carlos' neural responses to fast RT words are either left-lateralized (Shawna) or bilaterally distributed (Carlos). In contrast, their responses to slow RT words show strong right hemisphere activity, some of which localizes to superior parietal, anterior occipital, and dorsolateral prefrontal cortex (Fig. 3E,F, blue arrows). These were the areas that showed the strongest activity for Visit 1 (see Fig. 2A,E). The RT median split analyses for the control groups show a markedly different pattern (native signers, C and G; L2 signers, D and H). Both the fast- and slow-RT words are processed by the same brain areas, predominantly localizing to the left-hemisphere superior and inferior temporal sulcus, PT, and inferior parietal sulcus. Together these results show that the cases' neural responses to words with which they are becoming the most familiar, as indicated by RT, look more typical than their neural responses to words with which they are less familiar. These results indicate that the observed changes in neural language processing these 2 individuals show over time are specifically related to language learning.

Figure 3.

Reaction time (RT) analyses. In Carlos and Shawna, the fast RT words are either bilaterally distributed (A, Carlos), or left-lateralized (B, Shawna), whereas the responses to slow RT words are right lateralized or bilaterally distributed (E, Carlos; F, Shawna) and localize to anterior occipital, superior parietal, and dorsolateral prefrontal cortex (E, F, blue arrows). In contrast, for the control participants, semantically modulated activity in response to both fast and slow RTs localizes to a common left-lateralized network (C, G, native signers; D, H, L2 signers). RT ranges for correct trials: Shawna, Visit 1: fast = 381–821 ms, slow = 823–1493 ms; Visit 2: fast = 316–524 ms, slow = 524–1560 ms; Carlos, Visit 1: fast = 413–681 ms, slow = 681–1651 ms; Visit 2: fast = 338–591 ms, slow = 594–1958 ms; native signers: fast = 312–794 ms, slow = 523–1903 ms; L2 signers: fast = 337–812 ms, slow = 601–1897 ms.

Discussion

The present study is a longitudinal examination of neural language processing in adolescent L1 learners and as such provides novel insights concerning the relation between language experience in early life and the neural architecture for language. Carlos and Shawna had little language until age 14 years when they became fully immersed in ASL. Previous studies with other late L1 learners of sign language have shown that delayed L1 acquisition is associated with lifelong low language proficiency, as well as anomalous patterns of language processing in the brain (Newport 1990; Mayberry 1993; Mayberry et al. 2011; Emmorey et al. 1995). However, most late L1 learners so far have been studied after years of language use. The current longitudinal examination asks how the brain begins and continues to process language when formal language input first becomes available in adolescence.

Our initial aMEG studies with Shawna and Carlos indicated that their neural processing of words after 2–3 years of language immersion was atypical, both in terms of brain localization and in terms of the polarity of the semantic priming effect. The specific question under investigation here was whether and how these neural word processing patterns change as language experience increases over time. One possible outcome was that the brain's language system, being permanently altered by language deprivation in early life, would continue to show atypical neural processing patterns for words, despite relatively small but real improvements in both receptive and productive linguistic behavior. Alternatively, if some plasticity has been preserved, then the neural correlates of the cases' language processing would become more typical as they experienced more language.

Our findings support the second hypothesis. The cases' task performance shows improvements both in terms of RT and accuracy. Their semantically modulated neural activity, which previously localized predominantly to the right superior parietal, anterior occipital, and dorsolateral prefrontal cortex, is now either bilaterally distributed (Carlos) or left-lateralized (Shawna). Importantly, the leftward shift is particularly evident in their neural responses to those words with which they are most familiar; the localization of neural responses to less familiar words, on the other hand, remains atypical. This suggests that the observed changes are emerging specifically in response to language learning. While the polarity of the adolescents' brain responses also remains somewhat atypical, our results nevertheless suggest that the human brain is capable of changing its response to words with prolonged language exposure, even when language is first experienced in adolescence.

The observed changes in neural language processing are in agreement with findings in typically developing children and adults. Neural activations outside of the classical left-hemisphere language areas have previously been associated with the processing of a less proficient or a later-acquired language; however, it should be emphasized that, for example, infants' responses to language stimuli, which are predominantly left-lateralized from a young age, show some additional right hemisphere activity when directly compared with adult responses (Travis et al. 2011). Along similar lines, neural responses to words in bilinguals' less dominant language exhibited increased right hemisphere activity compared with the more dominant language (Leonard et al. 2010, 2011). We have recently observed a similar pattern in a group of hearing English speakers who were beginning L2 learners of ASL (Leonard et al. 2013). Their responses to auditory and written English words, as well as to ASL signs were mainly left-lateralized, but the less proficient ASL additionally engaged the right hemisphere. Taken together, the evidence from infant and adult studies seems to suggest that language is less lateralized to the left hemisphere in the early stages of linguistic and biological development when a language has been or is being learned from birth. It should be noted, however, that the noncanonical localization of neural activity in infants and L2 learners is typically not as extensive as what we observed in the cases.

The left frontotemporal network is well established to be the main site of neural generators of the N400 response in typically developing subjects across different language modalities (Halgren, Baudena, Heit, Clarke, Marinkovic and Clarke 1994; Halgren, Baudena, Heit, Clarke, Marinkovic, Chauvel et al. 1994; Marinkovic et al. 2003) and is also involved in the processing of word meaning in infants and L2 learners (Dehaene-Lambertz et al. 2002; Imada et al. 2006; Travis et al. 2011; Leonard et al. 2010, 2013). Neurons in parts of this network are known to respond to the semantic categories of words across modalities (Chan et al. 2011) and are hypothesized to function as “semantic hubs” (Patterson et al. 2007) where word knowledge is stored at the abstract level. Thus, the increasingly prominent neural activity in the left frontotemporal areas that we observed in Carlos and Shawna across Visits 1 and 2 may suggest that they are representing word meaning in a more abstract manner.

Despite the above-described leftward shift, some aspects of Carlos' and Shawna's neural word processing remain atypical. For example, the semantic priming effect for the less familiar words (words to which they responded with slower RTs) still localizes to the right superior parietal areas and is similar to what we observed at their initial visit. In addition, many of their responses are still increased rather than decreased by semantic priming (congruent > incongruent, rather than incongruent > congruent). Similar responses with reversed polarity have previously been observed in 12-month-old children but disappear soon thereafter as the semantic priming mechanisms mature and the canonical N400 emerges (Friedrich and Friederici 2005, 2010). In a group of 12- to 18-month-olds, Travis et al. (2011) did not find such effects but instead found the N400 to be mostly adult-like. It may be that in typically developing infants, the congruent > incongruent effect is present only for a brief period of time. In Carlos and Shawna, these effects are still present after 39 and 51 months of input, suggesting that the robust neuroplasticity associated with infant language learning is reduced in adolescence. It remains to be seen whether their polarity will ever change, as is suggested to occur in infants, and whether their neural processing for words will become as left-lateralized as that of native signers.

In addition to atypical patterns of lexico-semantic processing, it is also important to note that Carlos' and Shawna's overall language comprehension and production remain at relatively low levels. Studying these 2 individuals over a period of 4 years, we have observed slowed language development (Ferjan Ramirez et al. 2013a, 2013b). There is no evidence of the accelerated vocabulary and morpho-syntactic learning characteristic of very young children that might have been expected to occur with increased language experience. Similarly, the syntactic complexity of their utterances shows only a modest increase over time and remains at a low level despite 4 years of language experience (Ferjan Ramirez et al. 2013b).

The fact that Carlos' and Shawna's language acquisition is slow is not surprising. Other studies have indicated that delays in onset of first-language acquisition affect language acquisition and processing (Curtiss 1976; Newport 1990; Emmorey et al. 1995; Mayberry et al. 2002; Morford 2003; Berk and Lillo-Martin 2012). One well-known case of late first-language acquisition is Genie, a victim of severe social isolation and abuse who was physically isolated from the outside world until 13.5 years old. Although Genie was able to use limited vocabulary and form simple utterances, her grammatical structures remained atypical even after 8 years of language use (Curtiss 1976; Fromkin et al. 1974). Morford (2003) studied 2 deaf homesigners who first began to acquire ASL at the age of 13. Although they quickly replaced their gestures with ASL signs and were able to describe narrative pictures after 3 years of learning, their comprehension of ASL utterances was barely above chance after 7 years of language use.

Other studies report atypical brain activation patterns in response to language stimuli when the onset of language acquisition is delayed. For example, Genie was tested on a dichotic listening paradigm and showed a marked left ear advantage (right hemisphere) in response to linguistic, but not to nonverbal stimuli (Fromkin et al. 1974). In a study on German Sign Language, deaf non-native signers exhibited a variety of neural activation patterns, likely reflecting the fact that age of acquisition was not controlled (Meyer et al. 2007). More follow-up studies with the present individuals and other late L1 learners are needed to determine whether and how the neural correlates of language processing become more typical with increased language experience. One fMRI study using simple ASL sentences has found that the neural patterns of language processing in late L1 learners of ASL, although left-lateralized, tended not to localize to the anterior language areas as in native signers, even after 20 or more years of language use (Mayberry et al. 2011). In the current study, we observed some change toward a more native-like brain response, but it is important to emphasize that we looked exclusively at the processing of word meaning.

Finally, it is important to note that Carlos' and Shawna's neural activation patterns were not identical to one another either at Visit 1 or at Visit 2. Given the uniqueness of their backgrounds, these differences are somewhat expected and make the commonalities in the observed experience-related neural changes even more remarkable. Particularly intriguing is the finding that, for both participants, the activity related to semantic congruency observed in the right dorsal stream (i.e., right anterior occipital and superior parietal) is reduced over time and is being replaced by left frontotemporal activity. One possibility is that this shift in neural activation pattern is driven by a decrease in the attentional resources required (i.e., more left-hemisphere activity when word processing requires less attention; for example, in response to fast RT words in Visit 2); however, it should be noted that no such shift is evident in control participants when their responses are split by median RT.

We have previously proposed that right dorsal stream activations may be related to the cases' use of articulatory remapping and visual-to-motor transformations to access lexical meaning. These alternative strategies may have initially been used due to the unique way in which they began to acquire their first language. While typically developing infants learn the basic phonetic structure of native language and become specifically tuned to its recurrent sublexical patterns before they are able to produce their first words (Werker and Tees 1984), Shawna and Carlos did not spend a year observing phonological patterns and babbling with their hands but rather began to use ASL for referential communication as soon as it became available. One hypothesis is that the tuning to the phonetic structure of words enables the specific neural configuration for language processing in the left frontotemporal brain network (see Kuhl 2004), parts of which are known to be specifically involved in phonological encoding (Indefrey and Levelt 2004). If form-meaning relationships are established without the discovery of recurring phonological patterns of native language (Morford and Mayberry 2000), word meaning may initially have to be recognized through the dorsal stream, due to the use of mechanisms that are less dependent on deconstructing the words into subparts because the subparts have not yet been learned.

If the above-mentioned hypothesis is correct, our current data would suggest that as more language is experienced, the alternative mechanisms of lexical access that may be used in the initial stages of language learning are eventually replaced by the more canonical mechanisms, which rely on the classical left-hemisphere system for lexical access and semantic integration. The observed leftward shift in neural lexical processing is accompanied by improvements in task performance, suggesting that language learning itself enables more efficient processing, perhaps by creating more abstract linguistic representations and allowing for greater automaticity in accessing lexical meaning (Mayberry and Eichen 1991).

Taken together, the present results suggest that the human brain remains at least partly sensitive to novel language input throughout adolescence, even after a childhood of language deprivation. The 2 adolescents studied here initially showed atypical neural activation patterns in response to words, which, in some aspects, became much more typical as they experienced more language. Despite these changes, however, some aspects of the cases' neural word representations remain atypical. It may be that the observed leftward shift in the neural responses to word meaning is limited to a set of single words that they have known and used for years. It thus remains to be seen whether the cases' neural responses to all words will ever look completely native-like and whether they will exhibit native-like responses to sentence-level stimuli.

Supplementary Material

Supplementary material can be found at: http://www.cercor.oxfordjournals.org/.

Funding

The research reported in this publication was supported in part by NIH grant RO1DC012797, NSF grant BCS-0924539, NIH grant T-32 DC00041, and an innovative research award from the Kavli Institute for Mind and Brain.

Notes

The content is solely the responsibility of the authors and does not necessarily represent the official views of the National Institutes of Health. We thank M. Hatrak, D. Hagler, A. Lieberman, A. Dale, B. Rosen, and C. Dubinsky for assistance. Conflict of Interest: None declared.

References

- Anderson D, Reilly J. 2002. The MacArthur communicative development inventory: normative data for American Sign Language. J Deaf Stud Deaf Educ. 7:83–106. [DOI] [PubMed] [Google Scholar]

- Berk S, Lillo-Martin D. 2012. Two word stage: motivated by linguistic or cognitive constraints? Cogn Psychol. 65(1):118–140. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Boudreault P, Mayberry RI. 2006. Grammatical processing in American Sign Language: age of first-language acquisition effects in relation to syntactic structure. Lang Cogn Proc. 21:608–635. [Google Scholar]

- Chan AM, Baker JM, Eskandar E, Schomer D, Ulbert I, Marinkovic K, Cash SS, Halgren E. 2011. First-pass selectivity for semantic categories in the human anteroventral temporal lobe. J Neurosci. 31(49):18119–18129. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Corina D, McBurney S, Dodrill C, Hinshaw K, Brinkley J, Ojemann G. 1999. Functional roles of Broca's area and SMG: evidence from cortical stimulation mapping in a deaf signer. NeuroImage. 10:570–581. [DOI] [PubMed] [Google Scholar]

- Curtiss S. 1976. Genie: A Psycholinguistic Study of a Modern-Day ‘wild child’. New York: Academic Press. [Google Scholar]

- Dale AM, Fischl BR, Sereno MI. 1999. Cortical surface-based analysis. I. Segmentation and surface reconstruction. NeuroImage. 9:179–194. [DOI] [PubMed] [Google Scholar]

- Dale AM, Liu AK, Fischl B, Buckner RL. 2000. Dynamic statistical parametric mapping: combining fMRI and MEG for high-resolution imaging of cortical. Neuron. 26:55–67. [DOI] [PubMed] [Google Scholar]

- Dehaene-Lambertz G, Dehaene S, Hertz-Pannier L. 2002. Functional neuroimaging of speech perception in infants. Science. 298:2013–2015. [DOI] [PubMed] [Google Scholar]

- Delorme A, Makeig S. 2004. EEGLAB: an open source toolbox for analysis of single-trial EEG dynamics including independent component analysis. J Neurosci Methods. 134:9–21. [DOI] [PubMed] [Google Scholar]

- Dittmeier C. 2014. Maryknoll/Deaf Ministry/Cambodia. Retrieved from http://parish-without-boarders.net. [Google Scholar]

- Emmorey K, Bellugi U, Friederici AD, Horn P. 1995. Effects of age of acquisition on grammatical sensitivity: evidence from on-line and of-line tasks. Appl Psycholinguist. 16:1–23. [Google Scholar]

- Ferjan Ramirez N, Lieberman A, Mayberry RI. 2013a. The initial stages of first-language acquisition begun in adolescence: when late looks early. J Child Lang. 40(2):391–414. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Ferjan Ramirez N, Lieberman A, Mayberry RI. 2013b. How far and how fast? A longitudinal study of ASL acquisition in adolescent homesigners. Presented at the Theoretical Issues in Sign Language Research (TISLR) Conference 11 London, UK. [Google Scholar]

- Ferjan Ramirez N, Leonard MK, Torres C, Hatrak M, Halgren E, Mayberry RI. 2014. Neural language processing in adolescent first-language learners. Cereb Cortex. 24:2772–2783. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Fischl B, Sereno MI, Tootell RB, Dale AM. 1999. High-resolution intersubject averaging and a coordinate system for the cortical surface. Hum Brain Mapp. 8(4):272–284. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Friedrich M, Friederici AD. 2010. Maturing brain mechanisms and developing behavioral language skills. Brain Lang. 114(2):66–71. [DOI] [PubMed] [Google Scholar]

- Friedrich M, Friederici AD. 2005. Phonotactic knowledge and lexical-semantic processing in one-year-olds: brain responses to words and nonsense words in picture contexts. J Cogn Neurosci. 17(11):1785–1802. [DOI] [PubMed] [Google Scholar]

- Fromkin V, Krashen S, Curtiss S, Rigler D, Rigler M. 1974. The development of language in genie: a case of language acquisition beyond the “Critical Period.” Brain Lang. 1:81–107. [Google Scholar]

- Halgren E, Baudena P, Heit G, Clarke JM, Marinkovic K, Chauvel P, Clarke M. 1994. Spatio-temporal stages in face and word processing. II. Depth-recorded potentials in the human frontal and Rolandic cortices. J Physiol. 88:51–80. [DOI] [PubMed] [Google Scholar]

- Halgren E, Baudena P, Heit G, Clarke JM, Marinkovic K, Clarke M. 1994. Spatio-temporal stages in face and word processing. I. Depth-recorded potentials in the human occipital, temporal, and parietal lobes. J Physiol. 88:1–50. [DOI] [PubMed] [Google Scholar]

- Halgren E, Dhond RP, Christenson N, Van Petten C, Marinkovic K, Lewine JD, Dale AM. 2002. N400-like magnetoencephalography responses modulated by semantic context, word frequency, and lexical class in sentences. NeuroImage. 17:1101–1116. [DOI] [PubMed] [Google Scholar]

- Hickok G, Bellugi U, Klima ES. 1996. The neurobiology of signed language and its implications for the neural organization of language. Nature. 381:699–702. [DOI] [PubMed] [Google Scholar]

- Hoffmann-Dilloway E. 2010. Many names for mother: the ethno-linguistic politics of deafness in Nepal. S Asia. 33(3):421–441. [Google Scholar]

- Imada T, Zhang Y, Cheour M, Taulu S, Ahonen A, Kuhl P. 2006. Infant speech perception activates Broca's area: a developmental magnetoencephalography study. Neuroreport. 17(10):957–962. [DOI] [PubMed] [Google Scholar]

- Indefrey P, Levelt WMJ. 2004. The spatial and temporal signatures of word production components. Cognition. 92:101–144. [DOI] [PubMed] [Google Scholar]

- Klima ES, Bellugi U. 1979. The Signs of Language. Cambridge, MA: Harvard University Press. [Google Scholar]

- Koluchova J. 1972. Severe deprivation in twins: a case study. J Child Psychol Psychiatry. 13:107–114. [DOI] [PubMed] [Google Scholar]

- Kuhl PK. 2004. Early language acquisition: cracking the speech code. Nat Rev Neurosci. 5(11):831–843. [DOI] [PubMed] [Google Scholar]

- Kutas M, Federmeier KD. 2011. Thirty years and counting: finding meaning in the N400 component of the event-related brain potential (ERP). Annu Rev Psychol. 62:621–647. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Kutas M, Hillyard SA. 1980. Reading senseless sentences: brain potentials reflect semantic incongruity. Science. 207:203–208. [DOI] [PubMed] [Google Scholar]

- Lenneberg E. 1967. Biological Foundation of Language. New York: John Wiley & Sons. [Google Scholar]

- Leonard MK, Brown TT, Travis KE, Gharapetian L, Hagler DJ, Jr, Dale AM, Elman J, Halgren E. 2010. Spatiotemporal dynamics of bilingual word processing. NeuroImage. 49(4):3286–3294. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Leonard MK, Ferjan Ramirez N, Torres C, Hatrak M, Mayberry RI, Halgren E. 2013. Neural stages of spoken, written, and signed word processing in beginning second language learners. Front Hum Neurosci. 7:322. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Leonard MK, Ferjan Ramirez N, Torres C, Travis KE, Hatrak M, Mayberry RI, Halgren E. 2012. Signed words in the congenitally deaf evoke typical late lexico-semantic responses with no early visual responses in left superior temporal cortex. J Neurosci. 32(28):9700–9705. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Leonard MK, Torres C, Travis KE, Brown TT, Hagler DJ, Jr, Dale AM, Elman J, Halgren E. 2011. Language proficiency modulates the recruitment of non-classical language areas in bilinguals. PLoS One. 6(3):e18240. [DOI] [PMC free article] [PubMed] [Google Scholar]

- MacSweeney M, Campbell R, Woll B, Brammer MJ, Giampietro V, Davis AS, Calvert GA, McGuire PK. 2006. Lexical and sentential processing in British Sign Language. Hum Brain Mapp. 27:63–76. [DOI] [PMC free article] [PubMed] [Google Scholar]

- MacSweeney M, Waters D, Brammer MJ, Woll B, Goswami U. 2008. Phonological processing in deaf signers and the impact of age of first language acquisition. NeuroImage. 40:1369–1379. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Makinodan M, Rosen KM, Ito S, Corfas G. 2012. A critical period for social experience-dependent oligodendrocyte maturation and myelination. Science. 337:1357. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Marinkovic K, Dhond RP, Dale AM, Glessner M, Carr V, Halgren E. 2003. Spatiotemporal dynamics of modality-specific and supramodal word processing. Neuron. 38(3):487–497. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Maris E, Oostenveld R. 2007. Nonparametric statistical testing of EEG- and MEG-data. J Neurosci Methods. 164(1):177–190.

- Mayberry RI. 1993. First-language acquisition after childhood differs from second-language acquisition: the case of American Sign Language. J Speech Hear Res. 36:1258–1270.

- Mayberry RI, Chen J-K, Witcher P, Klein D. 2011. Age of acquisition effects on the functional organization of language in the adult brain. Brain Lang. 119:16.

- Mayberry RI, Eichen E. 1991. The long-lasting advantage of learning sign language in childhood: another look at the critical period for language acquisition. J Mem Lang. 30:486–512.

- Mayberry RI, Fischer S. 1989. Looking through phonological shape to sentence meaning: the bottleneck of non-native sign language processing. Mem Cognit. 17:740–754.

- Mayberry RI, Lock E, Kazmi H. 2002. Linguistic ability and early language exposure. Nature. 417:38.

- Mayberry RI, Squires B. 2006. Sign language: acquisition. In: Brown K, editor. Encyclopedia of Language and Linguistics. Vol. 11, 2nd ed. Oxford: Elsevier, p. 739–743.

- McCullough S, Emmorey K, Sereno M. 2005. Neural organization for recognition of grammatical and emotional facial expressions in deaf ASL signers and hearing non-signers. Cogn Brain Res. 22:193–203.

- McDonald CR, Thesen T, Carlson C, Blumberg M, Girard HM, Trongnetrpunya A, Sherfey JS, Devinsky O, Kuzniecky R, Doyle WK, et al. 2010. Multimodal imaging of repetition priming: using fMRI, MEG, and intracranial EEG to reveal spatiotemporal profiles of word processing. NeuroImage. 53(2):707–717.

- Meyer M, Toepel U, Keller J, Nussbaumer D, Zysset S, Friederici AD. 2007. Neuroplasticity of sign language: implications from structural and functional brain imaging. Restor Neurol Neurosci. 25:335–351.

- Moon C, Fifer WP. 2000. Evidence of transnatal auditory learning. J Perinatol. 20:S37–S44.

- Morford J. 2003. Grammatical development in adolescent first-language learners. Linguistics. 41:681–721.

- Morford JP, Hänel-Faulhaber B. 2010. Homesigners as late learners: connecting the dots from delayed acquisition in childhood to sign language processing in adulthood. Lang Ling Compass. 5/8:525–537.

- Morford JP, Mayberry RI. 2000. A reexamination of “early exposure” and its implications for language acquisition by eye. In: Chamberlain C, Morford JP, Mayberry RI, editors. Language Acquisition by Eye. Mahwah, NJ: Lawrence Erlbaum Associates, p. 111–127.

- Newman AJ, Bavelier D, Corina D, Jezzard P, Neville HJ. 2002. A critical period for right hemisphere recruitment in American Sign Language processing. Nat Neurosci. 5(1):76–80.

- Newport E. 1990. Maturational constraints on language learning. Cogn Sci. 14:11–28.

- Oostendorp TF, Van Oosterom A. 1992. Source parameter estimation using realistic geometry in bioelectricity and biomagnetism. In: Nenonen J, Rajala HM, Katila T, editors. Biomagnetic Localization and 3D Modeling. Helsinki: Helsinki Univ. of Technology; Report TKK-F-A689.

- Patterson K, Nestor PJ, Rogers TT. 2007. Where do you know what you know? The representation of semantic knowledge in the human brain. Nat Rev Neurosci. 8(12):976–987.

- Penfield W, Roberts WL. 1959. Speech and Brain Mechanisms. Princeton, NJ: Princeton University Press.

- Petitto LA, Zatorre RJ, Gauna K, Nikelski EJ, Dostie D, Evans AC. 2000. Speech-like cerebral activity in profoundly deaf people processing signed languages: implications for the neural basis of human language. Proc Natl Acad Sci USA. 97:13961–13966.

- Rönnberg J, Rudner M, Ingvar M. 2004. Neural correlates of working memory for sign language. Cogn Brain Res. 20:165–182.

- Sakai KL, Tatsuno Y, Suzuki K, Kimura H, Ichida Y. 2005. Sign and speech: amodal commonality in left hemisphere dominance for comprehension of sentences. Brain. 128:1407–1417.

- Sandler W, Lillo-Martin D. 2006. Sign Language and Linguistic Universals. Cambridge: Cambridge University Press.

- Senghas A, Coppola M. 2001. Children creating language: how Nicaraguan Sign Language acquired a spatial grammar. Psychol Sci. 12(4):323–328.

- Sereno MI, Dale AM. 1996. A surface-based coordinate system for a canonical cortex. NeuroImage. 3:S252.

- Stiles J, Reilly JS, Levine SC, Trauner DA, Nass R. 2012. Neural Plasticity and Cognitive Development. New York: Oxford University Press.

- Travis KE, Leonard MK, Brown TT, Hagler DJ, Jr, Curran M, Dale AM, Elman JL, Halgren E. 2011. Spatiotemporal neural dynamics of word understanding in 12- to 18-month-old-infants. Cereb Cortex. 21(8):1832–1839.

- Weikum WM, Oberlander TF, Hensch TK, Werker JF. 2012. Prenatal exposure to antidepressants and depressed maternal mood alter trajectory of infant speech perception. Proc Natl Acad Sci USA. doi:10.1073/pnas.1121263109.

- Werker JF, Tees RC. 1984. Cross language speech perception: evidence for perceptual reorganization. Infant Behav Develop. 7:49–63.

- Zhou X, Merzenich MM. 1993. Enduring effects of early structured noise exposure on temporal modulation in the primary auditory cortex. Proc Natl Acad Sci USA. 105:4423.
