Abstract

Audition and vision both convey spatial information about the environment, but much less is known about mechanisms of auditory spatial cognition than visual spatial cognition. Human cortex contains >20 visuospatial map representations but no reported auditory spatial maps. The intraparietal sulcus (IPS) contains several of these visuospatial maps, which support visuospatial attention and short-term memory (STM). Neuroimaging studies also demonstrate that parietal cortex is activated during auditory spatial attention and working memory tasks, but prior work has not demonstrated that auditory activation occurs within visual spatial maps in parietal cortex. Here, we report both cognitive and anatomical distinctions in the auditory recruitment of visuotopically mapped regions within the superior parietal lobule. An auditory spatial STM task recruited anterior visuotopic maps (IPS2–4, SPL1), but an auditory temporal STM task with equivalent stimuli failed to drive these regions significantly. Behavioral and eye-tracking measures rule out task difficulty and eye movement explanations. Neither auditory task recruited posterior regions IPS0 or IPS1, which appear to be exclusively visual. These findings support the hypothesis of multisensory spatial processing in the anterior, but not posterior, superior parietal lobule and demonstrate that recruitment of these maps depends on auditory task demands.

Keywords: attention, intraparietal sulcus, retinotopy, short-term memory, working memory

Introduction

Short-term memory (STM) of spatial position supports coding of multiple target locations and offers flexibility in planning and executing actions in the absence of sensory information. Spatial information can be obtained via multiple sensory modalities; however, the cortical mechanisms supporting auditory spatial cognition are much less well understood than those supporting visuospatial cognition. The visual processing stream preserves the spatial organization of the retina, and human cortex contains >20 visuospatial maps (Sereno et al. 1995; Swisher et al. 2007; Silver and Kastner 2009). In contrast, auditory spatial information must be binaurally reconstructed from interaural time and level differences between the cochleae, and no auditory spatial maps have been identified in human cortex. Given the lack of spatial maps, how does the auditory system keep track of complex spatial information?

Prior neuroimaging work demonstrates that visual attention and STM recruit both parietal visuotopic maps and neighboring parietal regions that lack identified spatial maps (e.g., Sheremata et al. 2010; Szczepanski et al. 2010). Both spatial and nonspatial auditory tasks also recruit lateral parietal areas (e.g., Zatorre et al. 2002; Alain et al. 2010), with stronger recruitment for spatial tasks. In nonhuman primates, neurons in the lateral intraparietal area (LIP) and ventral intraparietal area (VIP) can code auditory, visual, or bimodal spatial information, although auditory responses in area LIP often depend on the salience of the auditory spatial information (Stricanne et al. 1996; Cohen et al. 2005; Schlack et al. 2005; Cohen 2009). Based on overlapping activity in human neuroimaging studies of auditory and visual spatial tasks (Lewis et al. 2000; Jiang and Kanwisher 2003; Krumbholz et al. 2009; Tark and Curtis 2009; Smith et al. 2010) and on dual coding in nonhuman primate electrophysiology (Cohen et al. 2005; Schlack et al. 2005; Cohen 2009), many researchers have proposed shared multisensory spatial maps. However, these studies are inconclusive regarding whether auditory inputs recruit visual maps in humans, because they did not identify the visuotopic maps of individual subjects. Previously, our laboratory reported that sustained auditory spatial attention failed to activate visuotopically mapped intraparietal sulcus (IPS) regions, but activated abutting regions lateral and anterior to the visuotopic maps (Kong et al. 2012); however, this negative finding leaves open the possibility that the earlier auditory task lacked sufficient spatial demands to recruit parietal visual maps.

Here, we tested the hypothesis that auditory spatial STM flexibly recruits parietal visuospatial maps by employing demanding spatial and temporal auditory STM tasks that required remembering multiple targets per trial. For each participant, we identified visuotopic IPS regions and compared activity across the spatial task, the temporal task, and a sensorimotor control condition, while controlling for task difficulty and eye movements. The anterior parietal maps (IPS2–4, SPL1) were significantly activated in the auditory spatial STM task, but not in the auditory temporal task, suggesting that these regions can be flexibly recruited under high auditory spatial demands. Neither auditory task recruited posterior regions IPS0 and IPS1, which appear to be exclusively visual.

Materials and Methods

Participants and Paradigm

Eleven healthy, right-handed adults with normal or corrected-to-normal vision and hearing participated, received monetary compensation, and gave informed consent (Institutional Review Boards at Boston University and Partners Healthcare). One participant was excluded due to excessive head movements, leaving 10 participants for analysis (age 22–31 years; 5 females; 2 authors: S.W.M. and M.L.R.).

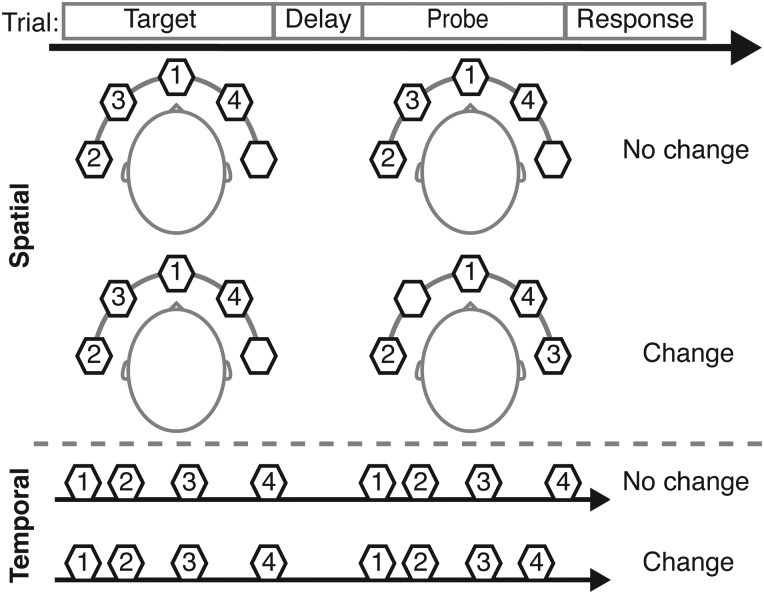

Participants were instructed to attend to either the locations or the onset-timing patterns of a series of auditory stimuli in a change-detection task. A verbal cue indicated the relevant feature (location or onset-timing pattern) at the beginning of each block (8 trials). Each trial comprised a target stimulus, a delay, a probe (which, with 50% probability, differed from the target stimulus in the attended feature and was otherwise identical to it), and a response period (Fig. 1). Tasks were contrasted with a sensorimotor control condition, in which participants were instructed to refrain from doing the task and to respond with a random button press after each trial (changes never occurred). Each run lasted 7 min 37.6 s and contained two 59.8-s blocks each of the spatial task, temporal task, and sensorimotor control conditions, plus a 28.6-s block of fixation (no stimuli or button press). A 2.6-s cue preceded each block, and a 10.4-s fixation period began and ended each run. These task data (without retinotopy analysis) were also analyzed in the frontal lobe, and those results were included in a separate report (Michalka et al. 2015); this is the first presentation of the parietal lobe results.

Figure 1.

Auditory spatial and temporal change-detection tasks. Each trial included a target stimulus (2350 ms), a 900-ms delay, and a probe stimulus (2350 ms). Each stimulus comprised 4 sequentially presented, spatialized, complex tones (370 ms duration) with irregular between-tone intervals (BTIs; 120–420 ms). Subjects attempted to detect a change in the location (spatial task) or onset-timing pattern (temporal task) of the tones.

Each stimulus comprised a sequence of 4 complex tones, with each tone containing a selection of the first 3 harmonics of 3 fundamental frequencies (130.81, 174.61, and 207.65 Hz) at equal intensity, ramped on and off with a 16-ms cosine-squared window. Each tone's duration was 370 ms; tones were separated by between-tone intervals (BTIs) randomly assigned to have 3 of 4 possible lengths: 120, 165–185, 230–280, and 320–420 ms. Tones were spatially localized along the azimuth using interaural time differences (ITDs) of −1000, −350, 0, 350, and 1000 µs. The first tone in the sequence was always located centrally (0 µs ITD), whereas the subsequent 3 tones were randomly assigned 3 of the 4 remaining ITDs (thus both hemifields contained target stimuli on every trial). In spatial task “change” trials, 1 of the 3 tones after the initial tone was played with the unused ITD. In temporal task “change” trials, one of the probe BTIs either increased or decreased by at least 50 ms compared with the target stimulus. In both conditions, the task-irrelevant dimension (location in the temporal task; timing in the spatial task) was identical for target and probe. In the sensorimotor control condition, the target and probe were always identical along both dimensions.
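The trial-generation rules in the preceding paragraph can be expressed as a short sampler. The Python sketch below is illustrative only and is not the authors' code (their stimuli were produced in MATLAB): the uniform draws within each BTI class, the 100-ms ceiling on timing changes (the text states only the 50-ms minimum), and all function names are assumptions.

```python
import random

ITDS_US = (-1000, -350, 0, 350, 1000)  # ITDs listed in the Methods (microseconds)
BTI_CLASSES_MS = ((120, 120), (165, 185), (230, 280), (320, 420))  # BTI length classes (ms)
MIN_TIMING_CHANGE_MS = 50   # minimum BTI change stated in the text
MAX_TIMING_CHANGE_MS = 100  # hypothetical upper bound, not given in the text


def sample_target(rng):
    """Tone 1 is central; tones 2-4 take 3 of the 4 lateral ITDs.

    The 3 BTIs are drawn (uniformly, by assumption) from 3 of the 4 length classes.
    """
    lateral = [itd for itd in ITDS_US if itd != 0]
    itds = [0] + rng.sample(lateral, 3)
    btis = [rng.uniform(lo, hi) for lo, hi in rng.sample(BTI_CLASSES_MS, 3)]
    return itds, btis


def make_probe(itds, btis, task, change, rng):
    """Copy the target and, on change trials, alter only the attended dimension."""
    itds, btis = list(itds), list(btis)
    if change and task == "spatial":
        # exactly one of the 5 ITDs is unused by the target
        unused = next(itd for itd in ITDS_US if itd not in itds)
        itds[rng.randrange(1, 4)] = unused  # never the first (central) tone
    elif change and task == "temporal":
        shift = rng.uniform(MIN_TIMING_CHANGE_MS, MAX_TIMING_CHANGE_MS)
        btis[rng.randrange(3)] += rng.choice((-1, 1)) * shift
    return itds, btis


def make_block(task, rng, n_trials=8):
    """One block of trials; sensorimotor control blocks never contain changes."""
    trials = []
    for _ in range(n_trials):
        change = task != "control" and rng.random() < 0.5
        target = sample_target(rng)
        probe = make_probe(*target, task, change, rng)
        trials.append((target, probe, change))
    return trials
```

Because the probe is copied from the target before a single dimension is altered, the task-irrelevant dimension is identical by construction, as the design requires.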

Stimulus presentation and response collection were conducted using the Psychophysics Toolbox (www.psychtoolbox.org) for MATLAB (www.mathworks.com). Auditory stimuli were presented via S14 fMRI-Compatible Insert Earphones (Sensimetrics); prior to data collection, participants adjusted the sound intensity to a comfortably audible level in the presence of scanner noise (i.e., 60–80 dB sound pressure level). Responses were collected on a custom-built 5-button box. Subjects participated in a behavioral training session prior to fMRI data collection to ensure that they were capable of performing the task.
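For readers reimplementing the stimuli, the sketch below renders one spatialized complex tone as a left/right buffer of the kind delivered through insert earphones. It is a hedged Python/NumPy illustration, not the authors' MATLAB code: the 44.1-kHz sample rate, the use of all 3 harmonics of every fundamental (the text says only "a selection"), and the convention that a positive ITD means the right ear leads are assumptions. Because each tone is a sum of sinusoids, the lagging ear can be computed by evaluating the same waveform at t − ITD, which gives an exact sub-sample delay.

```python
import numpy as np

FS = 44100  # sample rate in Hz (assumed; not stated in the text)
F0S = (130.81, 174.61, 207.65)  # fundamental frequencies from the Methods (Hz)
TONE_DUR = 0.370  # tone duration (s)
RAMP = 0.016  # cosine-squared on/off ramp duration (s)


def envelope(t):
    """Unit gain with cosine-squared onset/offset ramps; zero outside the tone."""
    env = np.where((t >= 0) & (t <= TONE_DUR), 1.0, 0.0)
    rise = (t >= 0) & (t < RAMP)
    fall = (t > TONE_DUR - RAMP) & (t <= TONE_DUR)
    env[rise] = np.sin(0.5 * np.pi * t[rise] / RAMP) ** 2
    env[fall] = np.sin(0.5 * np.pi * (TONE_DUR - t[fall]) / RAMP) ** 2
    return env


def complex_tone(t):
    """Equal-amplitude harmonics 1-3 of each fundamental (assumed harmonic selection)."""
    wave = sum(np.sin(2 * np.pi * h * f0 * t) for f0 in F0S for h in (1, 2, 3))
    return wave / (3 * len(F0S)) * envelope(t)


def spatialized_tone(itd_us, fs=FS):
    """Return an (n_samples, 2) array of (left, right); positive ITD = right ear leads (assumed)."""
    lag_s = abs(itd_us) * 1e-6
    t = np.arange(int(np.ceil((TONE_DUR + lag_s) * fs))) / fs
    leading = complex_tone(t)
    lagging = complex_tone(t - lag_s)  # exact fractional delay of the lagging ear
    left, right = (lagging, leading) if itd_us > 0 else (leading, lagging)
    return np.column_stack([left, right])
```

The sketch sets no absolute level; in the study, presentation level was adjusted by each participant.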

Visuotopic Mapping (Retinotopy) and Region-of-Interest Definition

A phase-encoded retinotopy protocol was used to map the visual field representations within the IPS for each participant [see Swisher et al. (2007) for a detailed description]. Participants were asked to maintain fixation while a flickering chromatic radial wedge checkerboard (72° arc; 4 Hz flashing rate; 55.47 s sweep period) rotated around a central fixation point (12 cycles per run; 665.6 s per run; separate clockwise and counterclockwise runs; 4–6 runs per subject). Visuotopic map regions of interest (ROIs) were drawn based on reversals in the polar angle phases analyzed in FreeSurfer 4.0.2.
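The core of a phase-encoded analysis is the phase of each vertex's response at the stimulus frequency (here 12 cycles per run). One common way to combine clockwise and counterclockwise runs, sketched below under stated assumptions, exploits the fact that the hemodynamic delay adds the same phase in both directions while polar angle enters with opposite signs, so the delay cancels. This is not FreeSurfer's implementation; the function names, the sign conventions, and the assumption that the delay is shorter than a quarter cycle (about 13.9 s for a 55.47-s period) are ours.

```python
import numpy as np


def response_phase(ts, n_cycles):
    """Phase (rad) of the Fourier component at the stimulus frequency.

    Sign is flipped so that a response cos(w t - a) yields phase a (a delay-like convention).
    """
    spectrum = np.fft.rfft(ts - ts.mean())
    return -np.angle(spectrum[n_cycles])


def polar_angle(ts_cw, ts_ccw, n_cycles=12):
    """Estimate polar angle and hemodynamic delay (both in rad) for one vertex.

    Model (assumed): phi_cw = theta + d and phi_ccw = -theta + d.
    """
    phi_cw = response_phase(ts_cw, n_cycles)
    phi_ccw = response_phase(ts_ccw, n_cycles)
    # The sum isolates 2d; halving is unambiguous if |d| < pi/2 (quarter cycle).
    delay = np.angle(np.exp(1j * (phi_cw + phi_ccw))) / 2
    # Remove the delay and wrap the angle to (-pi, pi].
    theta = np.angle(np.exp(1j * (phi_cw - delay)))
    return theta, delay
```

As a usage example, a 665.6-s run sampled at an assumed 2.6-s TR gives 256 time points, so the stimulus frequency falls exactly on Fourier bin 12.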

Visuotopic mapping defined 6 regions per hemisphere in individual subjects: IPS0, IPS1, IPS2, IPS3, IPS4, and SPL1 (Swisher et al. 2007; Silver and Kastner 2009). We defined 4 additional neighboring parietal ROIs per hemisphere for each subject using an atlas constructed from resting-state functional connectivity (Yeo et al. 2011; https://surfer.nmr.mgh.harvard.edu/fswiki/CorticalParcellation_Yeo2011). Lateral IPS (latIPS) corresponds to the parietal region of the frontoparietal control network. The other 3 regions were defined by excluding the visuotopically defined ROIs from the parietal component of the dorsal attention network, resulting in lateral fundus (funIPS), anterior (antIPS), and dorsomedial (mSPL) ROIs for each subject. We then masked our visuotopic ROIs to include only vertices with a contralateral phase preference and a significance threshold of P < 0.05 in the visuotopy data, in order to avoid false-positive findings in our visuotopic maps due to adjacency effects. Under these constraints, we were unable to define SPL1 for one subject and IPS4 for another, thus reducing the N for those ROIs.
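The contralateral-preference and significance masking described above amounts to a vertex-wise Boolean filter. In this hypothetical sketch, polar angle is in radians with 0 on the right horizontal meridian, so the right visual hemifield (contralateral to the left hemisphere) corresponds to cos θ > 0; the per-vertex P-values are assumed to come from the retinotopy analysis, and the function name is illustrative.

```python
import numpy as np


def mask_visuotopic_roi(theta, pvals, hemi, alpha=0.05):
    """Keep vertices that prefer the contralateral hemifield and pass the retinotopy threshold.

    theta: preferred polar angle per vertex (rad, 0 = right horizontal meridian; assumed).
    pvals: retinotopy P-value per vertex. hemi: "lh" or "rh".
    """
    theta = np.asarray(theta, dtype=float)
    pvals = np.asarray(pvals, dtype=float)
    if hemi == "lh":
        contralateral = np.cos(theta) > 0  # right visual hemifield
    elif hemi == "rh":
        contralateral = np.cos(theta) < 0  # left visual hemifield
    else:
        raise ValueError("hemi must be 'lh' or 'rh'")
    return contralateral & (pvals < alpha)
```

Vertices lying exactly on the vertical meridian (cos θ = 0) are excluded in both hemispheres, which is a conservative choice.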

Oculomotor Control

We instructed subjects to maintain fixation on a white “plus” sign (∼1°) centered on a dark gray background throughout each run. Eight participants were eye-tracked in the scanner using an EyeLink system (http://www.sr-research.com); technical issues prevented proper eye-tracking in the remaining 2 participants, both experienced subjects with a proven ability to hold fixation. All nonexcluded participants averaged <2 saccades (>2°) during the entire data collection period.
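The saccade criterion reported above (amplitude > 2°) can be applied to a gaze trace with a simple velocity-threshold detector. The sketch below is hypothetical and is not the EyeLink parser: the 500-Hz sampling rate and 30°/s velocity threshold are illustrative values rather than the study's settings, and the trace is assumed to be already converted to degrees of visual angle with blinks removed. Amplitude is measured between the samples immediately before and after each supra-threshold run.

```python
import numpy as np


def count_large_saccades(x_deg, y_deg, fs=500.0, vel_thresh=30.0, min_amp=2.0):
    """Count saccades larger than min_amp degrees in a gaze trace (degrees of visual angle).

    fs (Hz) and vel_thresh (deg/s) are illustrative assumptions.
    """
    x = np.asarray(x_deg, dtype=float)
    y = np.asarray(y_deg, dtype=float)
    speed = np.hypot(np.gradient(x), np.gradient(y)) * fs  # deg/s
    fast = (speed > vel_thresh).astype(int)
    edges = np.diff(fast, prepend=0, append=0)
    starts = np.flatnonzero(edges == 1)  # first supra-threshold sample of each run
    stops = np.flatnonzero(edges == -1)  # first sample after each run
    count = 0
    for start, stop in zip(starts, stops):
        before = max(start - 1, 0)
        after = min(stop, len(x) - 1)
        amplitude = np.hypot(x[after] - x[before], y[after] - y[before])
        if amplitude > min_amp:
            count += 1
    return count
```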

Neuroimaging Methods and Analysis

Each subject participated in 3 sets of scans across multiple sessions to collect anatomical, functional task, and visuotopic mapping data. A high-resolution (1.0 × 1.0 × 1.3 mm) magnetization-prepared rapid gradient-echo (MPRAGE) structural scan was acquired for each subject to computationally reconstruct the cortical surface of each hemisphere using FreeSurfer software (http://surfer.nmr.mgh.harvard.edu/). For the functional auditory task data, T2*-weighted gradient-echo echo-planar images were collected using forty-two 3-mm axial slices (no gap; echo time [TE] 30 ms; repetition time [TR] 2600 ms; in-plane resolution 3.125 × 3.125 mm). Imaging for all auditory data was performed at the Center for Brain Science at Harvard University on a 3-T Siemens Tim Trio scanner with a 32-channel matrix coil. For 6 subjects, structural and visuotopic mapping scans were collected on an identically equipped scanner (i.e., same model and same pulse sequences) at the Martinos Center for Biomedical Imaging at Massachusetts General Hospital.

Each run of functional data was independently registered to the individual's anatomical data using the mean of the functional data; motion-corrected; slice-time corrected; intensity normalized (by dividing by the mean across all voxels and time points inside the brain mask, then multiplying by 100); resampled onto the individual's cortical surface; and spatially smoothed on the cortical surface with a 3-mm full-width at half-maximum Gaussian kernel. Surface-based statistical analysis employed a general linear model (GLM), which modeled the 3 types of task block (spatial, temporal, and sensorimotor control), the cue period preceding each block, and the 6 motion-correction parameters (3 translations and 3 rotations). The default FS-FAST hemodynamic response function [gamma (γ) function; delay δ = 2.25 s; decay time constant τ = 1.25 s; Dale and Buckner 1997] was convolved with the modeled time course to create regressors before fitting. In the ROI analysis, the regressor beta-weights (see Supplementary Table 1) for the task conditions were used to calculate the percent signal change for the spatial and temporal conditions relative to the sensorimotor control condition. The GLM and ROI analyses were conducted using FreeSurfer FS-FAST (version 5.1.0).
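To make the regressor construction concrete, the sketch below convolves block boxcars with a gamma hemodynamic response using the δ and τ values quoted above and fits an ordinary least-squares GLM. It is a simplified stand-in for FS-FAST, not its implementation: the exponent of 2 follows the Dale and Buckner (1997) gamma form, cue and motion regressors are omitted, the function names are hypothetical, and expressing percent signal change as 100 × (β_task − β_control) / β_intercept is an assumed formula, since the exact computation is not spelled out here.

```python
import numpy as np

TR = 2.6  # repetition time (s), from the acquisition parameters
DELTA, TAU = 2.25, 1.25  # gamma delay and decay time constant quoted in the text (s)


def gamma_hrf(t, delta=DELTA, tau=TAU):
    """((t - delta)/tau)^2 * exp(-(t - delta)/tau) for t > delta, else 0; peak-normalized.

    The exponent of 2 is an assumption based on the Dale and Buckner (1997) form.
    """
    s = np.clip((np.asarray(t, dtype=float) - delta) / tau, 0.0, None)
    h = s ** 2 * np.exp(-s)
    return h / h.max()


def block_regressor(onsets, duration, n_scans, tr=TR, dt=0.1):
    """Boxcar for the given block onsets (s), convolved with the HRF and sampled once per TR."""
    t_hi = np.arange(0.0, n_scans * tr, dt)
    box = np.zeros_like(t_hi)
    for onset in onsets:
        box[(t_hi >= onset) & (t_hi < onset + duration)] = 1.0
    hrf = gamma_hrf(np.arange(0.0, 30.0, dt))
    conv = np.convolve(box, hrf)[: len(t_hi)] * dt
    scan_idx = np.round(np.arange(n_scans) * tr / dt).astype(int)
    return conv[scan_idx]


def percent_signal_change(y, regressors, task, control):
    """OLS fit with an intercept; returns 100 * (beta_task - beta_control) / intercept (assumed)."""
    names = list(regressors)
    X = np.column_stack([np.ones(len(y))] + [regressors[name] for name in names])
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    b = dict(zip(names, beta[1:]))
    return 100.0 * (b[task] - b[control]) / beta[0]
```

With a 2.6-s TR, one 7 min 37.6 s run corresponds to 176 volumes (457.6 s / 2.6 s), which is a convenient length for trying the functions on simulated data.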

Statistical Analysis

To accommodate the 2 subjects with incomplete ROI definitions (which made the design unbalanced), we used a linear mixed model with fixed effects of task (2 levels), ROI (10 levels), and hemisphere (2 levels) plus their interactions. This linear mixed model compared the percent signal change relative to the sensorimotor control condition in each ROI. To align with a traditional repeated-measures analysis of variance (ANOVA), the model included a full factorial random structure plus intercept, using a scaled identity covariance with repeated subjects. This approach yields noninteger degrees of freedom, which we round to the nearest integer in the Results. Based on our hypotheses, we were primarily interested in the interaction between ROI and task. When this interaction was significant, we conducted a two-tailed paired t-test for each ROI to test the effect of task. We conducted 2 additional two-tailed t-tests per ROI to determine whether the spatial and temporal conditions each showed a significant percent signal change relative to the sensorimotor control condition. P-values from post hoc t-tests were corrected for multiple comparisons using the Holm–Bonferroni method. Behavioral data were compared using two-tailed paired t-tests across conditions. SPSS (www.ibm.com/software/analytics/spss/) was used to fit the linear mixed model, and R (http://CRAN.R-project.org) was used for t-tests and corrections for multiple comparisons.
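The post hoc procedure can be sketched in a few lines of standard-library Python: a paired t statistic computed on per-subject differences, followed by Holm's step-down adjustment. This mirrors the definition used by R's `p.adjust(p, method = "holm")` but is an illustrative re-implementation, not the authors' script; converting t to a P-value requires the t distribution (for example, `scipy.stats.ttest_rel` returns both).

```python
import math
import statistics


def paired_t(a, b):
    """Paired t statistic on per-subject differences and its degrees of freedom (n - 1)."""
    diffs = [x - y for x, y in zip(a, b)]
    n = len(diffs)
    t = statistics.mean(diffs) / (statistics.stdev(diffs) / math.sqrt(n))
    return t, n - 1


def holm_bonferroni(pvals):
    """Holm step-down adjusted P-values, returned in the input order.

    Sort ascending, multiply the k-th smallest (0-based) by (m - k),
    enforce monotonicity with a running maximum, and cap at 1.
    """
    m = len(pvals)
    order = sorted(range(m), key=lambda i: pvals[i])
    adjusted = [0.0] * m
    running_max = 0.0
    for k, i in enumerate(order):
        running_max = max(running_max, min(1.0, (m - k) * pvals[i]))
        adjusted[i] = running_max
    return adjusted
```

For example, raw P-values of 0.01, 0.04, and 0.03 become 0.03, 0.06, and 0.06: the running maximum keeps an adjusted value from falling below that of a smaller raw P-value.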

Results

Both the spatial and temporal change-detection tasks were intended to be difficult, with high STM demands. Although the tasks were designed to yield equivalent performance and were piloted outside of the scanner, performance during fMRI scanning showed a trend toward lower performance in the timing condition (66.6 ± 10.1%) than in the spatial condition [77.4 ± 11.1%; t(9) = 2.22, P = 0.054, paired t-test]. Because the timing task was, if anything, the harder of the two, greater blood oxygen level-dependent (BOLD) activation for the spatial condition than for the timing condition cannot be attributed to task difficulty. Nor can BOLD signal differences be attributed to eye movements: subjects held fixation on over 98% of trials, and eye-tracking revealed no difference between the spatial (98.5 ± 2.4%) and temporal (98.8 ± 2.3%) conditions [t(7) = 0.47, P = 0.65].

We restricted our fMRI data analysis to the parietal lobe, evaluating 6 parietal visuotopic regions (IPS0–4 and SPL1) and 4 neighboring nonvisuotopic parietal regions (latIPS, antIPS, funIPS, and mSPL; see Materials and Methods). A linear mixed model revealed an interaction between ROI and task (F(9,318) = 4.79, P = 5.31 × 10⁻⁶), but no main effect of hemisphere (F(1,50) = 0.17, P = 0.68) and no interactions involving hemisphere (hemisphere × task: F(1,113) = 0.001, P = 0.97; hemisphere × ROI: F(9,318) = 1.78, P = 0.07, trending; hemisphere × task × ROI: F(9,97) = 0.32, P = 0.97). Because hemisphere did not interact with the other factors, we combined data across hemispheres for further analysis (Table 1).

Table 1.

Montreal Neurological Institute (MNI) coordinates and statistics for auditory spatial versus sensorimotor control (Spatial), auditory temporal versus sensorimotor control (Temporal), and auditory spatial versus temporal (Spatial vs. Temporal), reporting the t-statistic (t) and P-value (P) after Holm–Bonferroni correction for multiple comparisons

| ROI | Left hemisphere MNI, mean (x, y, z) | Left hemisphere MNI, SD | Right hemisphere MNI, mean (x, y, z) | Right hemisphere MNI, SD | Spatial t | Spatial P | Temporal t | Temporal P | Spatial vs. Temporal t | Spatial vs. Temporal P |
|---|---|---|---|---|---|---|---|---|---|---|
| *Visuotopic* | | | | | | | | | | |
| IPS0 | 24, −81, 20 | 4, 4, 6 | 25, −77, 24 | 3, 6, 5 | −2.124 | 0.626 | −1.718 | 0.839 | −0.154 | 0.881 |
| IPS1 | −18, −79, 38 | 3, 4, 5 | 23, −70, 37 | 4, 3, 7 | −0.004 | 1.000 | −1.880 | 0.743 | 2.256 | 0.101 |
| IPS2 | −16, −69, 47 | 3, 7, 6 | 17, −65, 50 | 4, 3, 7 | 5.024 | 0.010* | 1.251 | 0.970 | 6.180 | 0.002* |
| IPS3 | −21, −61, 55 | 5, 5, 7 | 23, −57, 56 | 5, 5, 7 | 6.020 | 0.003* | 1.569 | 0.906 | 5.146 | 0.005* |
| IPS4 | −29, −53, 50 | 6, 7, 9 | 29, −53, 50 | 6, 7, 7 | 7.594 | 0.001* | 2.145 | 0.626 | 4.462 | 0.011* |
| SPL1 | −11, −61, 55 | 2, 4, 4 | 10, −60, 57 | 3, 5, 4 | 5.166 | 0.011* | 0.574 | 1.000 | 4.218 | 0.012* |
| *Nonvisuotopic* | | | | | | | | | | |
| Anterior IPS (antIPS) | −37, −38, 38 | 5, 5, 4 | 36, −36, 39 | 5, 2, 1 | 9.360 | 0.0001* | 4.004 | 0.034* | 5.632 | 0.003* |
| Lateral IPS (latIPS) | −43, −55, 37 | 0, 0, 0 | 45, −52, 42 | 0, 0, 0 | 5.585 | 0.005* | 4.523 | 0.017* | 3.532 | 0.019* |
| Fundus IPS (funIPS) | −24, −62, 38 | 2, 1, 6 | 28, −61, 39 | 2, 2, 6 | 5.694 | 0.005* | 1.240 | 0.970 | 4.869 | 0.006* |
| Medial superior parietal lobule (mSPL) | −15, −55, 57 | 7, 4, 8 | 11, −55, 60 | 2, 5, 4 | 6.594 | 0.002* | 1.527 | 0.906 | 4.903 | 0.006* |

Note: Statistics have 9 degrees of freedom, except IPS4 and SPL1, which have 8. * indicates P < 0.05, Holm–Bonferroni corrected.

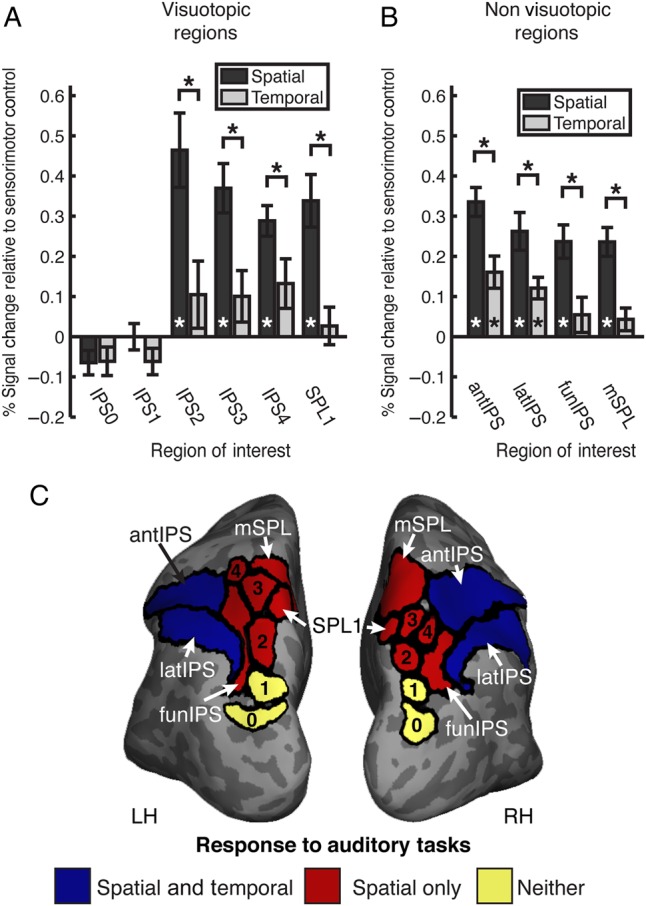

The anterior parietal visuotopic maps (IPS2, IPS3, IPS4, and SPL1) were flexibly recruited under high auditory spatial demands (Fig. 2A and Table 1). Each region demonstrated a stronger response in the auditory spatial compared with temporal condition. Additionally, these regions were recruited for the auditory spatial task, but not for the auditory temporal task when compared with the sensorimotor control. In contrast to the anterior regions, the posterior visuotopic parietal ROIs (IPS0 and IPS1) showed no significant response in either the spatial or temporal task and did not respond differently in the 2 conditions. Thus, IPS0 and IPS1 are visuotopic regions that do not appear to be driven by auditory processing. Similarly, a post hoc analysis of early visual cortex (V1–V3) revealed no significant modulation in either auditory task versus sensorimotor control (both P > 0.5).

Figure 2.

Comparison of auditory spatial and temporal task activations. Average percent signal change relative to sensorimotor control within (A) visuotopic ROIs and (B) nonvisuotopic parietal dorsal attention network ROIs for the spatial (dark gray) and temporal (light gray) tasks. N = 10 for IPS0, IPS1, IPS2, IPS3, latIPS, antIPS, funIPS, and mSPL; N = 9 for IPS4 and SPL1. Error bars reflect SEM. (C) Summary of functional organization of posterior parietal cortex with respect to auditory spatial and temporal processing.

In regions adjacent to these visuotopic maps, we observed a different pattern of task responses (Fig. 2B). All 4 regions showed a stronger response for the spatial task than for the temporal task. However, latIPS and antIPS showed significant activity in both the spatial and temporal tasks, whereas mSPL and funIPS were recruited for the spatial but not the temporal task. Thus, mSPL and funIPS exhibit the same response pattern as visuotopic regions IPS2–4 and SPL1, whereas latIPS and antIPS are nonvisuotopic regions recruited for both auditory tasks (note that all 30 fMRI statistical relationships in Table 1 hold whether error trials are included in or excluded from the analysis). An additional post hoc analysis showed no differences between “change” and “no change” trials in either the spatial or temporal condition for any of the parietal ROIs. This functional organization of posterior parietal cortex with regard to auditory spatial and temporal processing is summarized in Figure 2C.

Discussion

The fMRI findings from this auditory STM study provide evidence that anterior visuospatial maps of the superior parietal lobule (IPS2, IPS3, IPS4, and SPL1) are recruited under high auditory spatial demands but not under similarly difficult auditory temporal demands. In contrast, posterior/inferior visuotopic IPS regions (IPS0 and IPS1) did not show a significant response for either the spatial or temporal task relative to a sensorimotor control. These results demonstrate that auditory recruitment of anterior visuotopic parietal regions is flexible and dependent on the spatial task demands. In the absence of high spatial task demands, auditory processing failed to recruit any of the visuotopic parietal regions. This study demonstrates a functional dissociation within the visuotopic maps of IPS and, when combined with prior parietal research, suggests that IPS maps may become progressively more multisensory from posterior to anterior. Our findings also mirror white matter tractography findings demonstrating that IPS0 and IPS1 exhibit robust and retinotopically specific structural connectivity with V1, V2, and V3, whereas more anterior regions of IPS exhibit no more than weak structural connectivity with early visual cortex (Greenberg et al. 2012). Figure 2C summarizes responses to the spatial and temporal auditory STM tasks for all parietal ROIs.

Adjacent to the visuospatial maps of IPS0–4 and SPL1, parietal regions in which visuotopic maps have not been consistently identified demonstrate a different functional dissociation in auditory STM. All 4 nonvisuotopic regions exhibited stronger responses for the spatial task relative to the temporal task; however, the more lateral regions—latIPS and antIPS—showed significant activity in both tasks, whereas the more medial regions—mSPL and funIPS—were recruited only for the spatial task. AntIPS and latIPS are driven by both auditory tasks and can also be driven in visual attention tasks (e.g., Sheremata et al. 2010), suggesting that they may be multisensory regions that play a more general, nonspatial role in attention and cognitive control. In contrast, funIPS and mSPL display the same response pattern as the superior visuotopic maps: recruitment for high auditory spatial but not temporal task demands. As shown in Figure 2C, these 2 regions share large borders with the superior visuotopic maps. This study suggests that funIPS and mSPL support spatial processing, but further investigation is warranted regarding the mechanisms.

Prior neuroimaging studies of the parietal lobe have reported overlap between visual and auditory tasks (Lewis et al. 2000; Jiang and Kanwisher 2003; Krumbholz et al. 2009; Smith et al. 2010), but such overlap might have occurred outside of the parietal visual maps. The present results demonstrate that auditory STM drove several nonvisuotopic parietal regions that abut the visuotopic regions. The question of auditory processing in visuotopic parietal cortex was only explicitly addressed in one prior study (Kong et al. 2012). In that study, we found that auditory attention drove nonvisuotopic regions anterior and lateral to the visuotopic regions of IPS, but failed to recruit visuotopic IPS. The task in our prior study required attending to 1 of 2 left/right spatialized auditory speech streams, whereas the present auditory spatial task differs in 2 important respects: multiple spatial locations were held in STM, and finer spatial resolution was required. Consistent with this past study, here we find that an auditory temporal task elicits no significant activity in visuotopic IPS; however, an auditory spatial task drives anterior visuotopic IPS. These differences demonstrate that task demands affect whether auditory tasks recruit IPS. Note also that in the present study, changes occurred only in the attended domain (space or time); otherwise, stimuli were equivalent across tasks. Taken together, these results suggest that auditory representations lacking spatial maps are sufficient to support single targets with minimal localization demands (e.g., Stecker et al. 2005), but that visuotopic representations may be recruited to support coding of multiple spatial targets and/or finer spatial coding; this may reflect implicit and/or explicit visuospatial recoding.
Since each trial presented auditory stimuli at multiple locations, spanning both hemifields, we were not able to examine the spatial specificity of the auditory recruitment of IPS maps, but this will be an important question for future research. Additionally, the relative contributions of the encoding, delay, and response phases of the spatial working memory task could not be addressed in this block-design study.

Previous work observed auditory spatial processing within visuotopic frontal eye fields in the absence of eye movements (Tark and Curtis 2009). Similarly, the effects observed here cannot be attributed to eye movements: eye-tracking indicated no difference in eye movements between the spatial and temporal tasks, and neither task showed a significant response compared with the sensorimotor control in 2 regions driven by saccades: IPS0 and IPS1 (Schluppeck et al. 2005; Konen and Kastner 2008). Mental imagery typically recruits IPS, but also recruits adjacent dorsal occipital lobe regions (e.g., Ishai et al. 2000; Ganis et al. 2004; Slotnick et al. 2005); therefore, it is unlikely that spatial task activation reflects strong mental imagery influences, since posterior IPS and early visual cortex were not recruited. We cannot rule out a contribution from a very abstract mental imagery (e.g., consisting of simple spatial representations) that may not activate occipital regions; on the other hand, abstract spatial mental imagery could be considered to be a form of spatial working memory.

We have recently reported that visual-biased and auditory-biased attentional networks extend into the lateral frontal cortex (Michalka et al. 2015). The visual-biased network includes 2 nodes, superior precentral sulcus (sPCS) and inferior precentral sulcus (iPCS), which are separated by one of the nodes of the auditory-biased network, the transverse gyrus of the precentral sulcus. Similar to the results reported here for anterior IPS, we observed that sPCS and iPCS were much more strongly recruited for the auditory spatial task than for the auditory temporal task, while the auditory network nodes were not more strongly driven by one auditory task or the other. The responses of the visually biased frontal lobe nodes differ from the parietal results reported here in that sPCS and iPCS also exhibited significant (but weaker) activation in the auditory temporal task, whereas the visuotopic parietal areas did not. Although sPCS and iPCS did not exhibit stimulus-driven retinotopic maps in the vast majority of our subjects, other fMRI studies employing working memory and/or saccade tasks have revealed visual maps in sPCS and iPCS (Hagler and Sereno 2006; Kastner et al. 2007; Jerde et al. 2012). Taken together, these results demonstrate that the most anterior (and presumably highest-order) visual cortical maps can be recruited to support highly demanding auditory spatial cognition, but that the posterior visual maps remain unisensory.

The nonhuman primate literature provides evidence of responsiveness to auditory stimuli in at least 2 parietal areas thought to have homologs to the regions investigated here. In macaque electrophysiology, neurons in the LIP and VIP show overlap in their spatial receptive fields for auditory and visual stimuli. Some LIP neurons respond to auditory stimuli, but these responses depend on both the task and stimulus salience (Mullette-Gillman et al. 2005; Cohen 2009). Relative to LIP, VIP exhibits more robust and consistent multisensory responses with stronger firing rates and a greater proportion of neurons responding to auditory stimuli (Schlack et al. 2005). Based on these stronger auditory responses in monkey VIP than in LIP, we propose that VIP may be homologous to human antIPS and latIPS, which were recruited in both auditory tasks, and that monkey LIP may be homologous to human IPS2–4, which were only recruited during our highly demanding auditory spatial task.

Several lines of previous work support our proposed interpretation. The human homolog of monkey LIP appears to shift from lateral to medial banks of IPS (Grefkes and Fink 2005; Swisher et al. 2007), whereas the VIP homolog may be less shifted, lying anterior to the LIP homolog (Sereno and Huang 2014). Other evidence regarding posterior parietal lobe homologies comes from consideration of spatial reference frames, priority maps, eye movements, and responses to other sensory modalities. The native reference frames of vision and audition differ: visual information is initially encoded in eye-centered coordinates, whereas auditory information is initially encoded in head-centered coordinates. In nonhuman primates, area LIP has long been thought to code spatial information in eye-centered coordinates, whereas area VIP codes spatial information in head-centered coordinates (e.g., Colby and Goldberg 1999; Grefkes and Fink 2005). Note, however, that hybrid coordinates have been observed for motor-related responses in LIP (Cohen 2009; Mullette-Gillman et al. 2009). Visuotopic areas IPS0–4 appear to code visual information in eye-centered rather than head-centered coordinates (Golomb and Kanwisher 2012) and thus are candidate LIP homologs. Area LIP has been suggested to contain a priority map of space across attention, working memory, and intentional paradigms (e.g., Bisley and Goldberg 2010); recent human studies indicate that IPS2 also meets the priority map criteria and suggest that IPS2 is a homolog of LIP (Jerde et al. 2012). Studies of saccades and smooth pursuit eye movements suggest that IPS1–2 correspond to LIP, whereas a region beyond IPS4 (Levy et al. 2007; Konen and Kastner 2008), likely located in our antIPS, corresponds to VIP. VIP in monkeys also contains many tactile-visual neurons. Combined tactile-visual studies in humans report a somatosensory body map that overlaps visual maps observed with wide-field stimuli; these regions appear to lie superior and anterior to IPS2–4 (Sereno and Huang 2014). Thus, multiple lines of evidence suggest that human IPS2, and perhaps one or more of its visuotopic neighbors, may be homologous to LIP, whereas antIPS and possibly latIPS may be homologous to VIP.

Although further investigation of parietal lobe homologs is warranted, the current finding of auditory processing in visuotopic IPS extends our understanding of multisensory processing in the human parietal lobe.

Supplementary Material

Supplementary material can be found at http://www.cercor.oxfordjournals.org/.

Funding

This work was supported by CELEST, a National Science Foundation Science of Learning Center (NSF SMA-0835976 to B.G.S.-C.), and the National Institutes of Health (NIH R01EY022229 to D.C.S. and 1F31MH101963 to S.W.M.). Funding to pay the Open Access publication charges for this article was provided by CELEST, a National Science Foundation Science of Learning Center (NSF SMA-0835976).

Notes

Sam Ling, Abigail Noyce, Kathryn Devaney, and David Osher provided helpful comments on this manuscript. Conflict of Interest: None declared.

References

- Alain C, Shen D, Yu H, Grady C. 2010. Dissociable memory- and response-related activity in parietal cortex during auditory spatial working memory. Front Psychol. 1:202.

- Bisley J, Goldberg M. 2010. Attention, intention, and priority in the parietal lobe. Annu Rev Neurosci. 33:1–21.

- Cohen Y. 2009. Multimodal activity in the parietal cortex. Hear Res. 258:100–105.

- Cohen Y, Russ B, Gifford G. 2005. Auditory processing in the posterior parietal cortex. Behav Cogn Neurosci Rev. 4:218–231.

- Colby C, Goldberg M. 1999. Space and attention in parietal cortex. Annu Rev Neurosci. 22:319–349.

- Dale AM, Buckner RL. 1997. Selective averaging of rapidly presented individual trials using fMRI. Hum Brain Mapp. 5:329–340.

- Ganis G, Thompson WL, Kosslyn SM. 2004. Brain areas underlying visual mental imagery and visual perception: an fMRI study. Cogn Brain Res. 20(2):226–241.

- Golomb J, Kanwisher N. 2012. Higher level visual cortex represents retinotopic, not spatiotopic, object location. Cereb Cortex. 22:2794–2810.

- Greenberg AS, Verstynen T, Chiu Y-C, Yantis S, Schneider W, Behrmann M. 2012. Visuotopic cortical connectivity underlying attention revealed with white-matter tractography. J Neurosci. 32(8):2773–2782.

- Grefkes C, Fink G. 2005. The functional organization of the intraparietal sulcus in humans and monkeys. J Anat. 207:3–17.

- Hagler DJ, Sereno MI. 2006. Spatial maps in frontal and prefrontal cortex. Neuroimage. 29(2):567–577.

- Ishai A, Ungerleider L, Haxby J. 2000. Distributed neural systems for the generation of visual images. Neuron. 28:979–990.

- Jerde T, Merriam E, Riggall A, Hedges J, Curtis C. 2012. Prioritized maps of space in human frontoparietal cortex. J Neurosci. 32:17382–17390.

- Jiang Y, Kanwisher N. 2003. Common neural substrates for response selection across modalities and mapping paradigms. J Cogn Neurosci. 15:1080–1094.

- Kastner S, DeSimone K, Konen CS, Szczepanski SM, Weiner KS, Schneider KA. 2007. Topographic maps in human frontal cortex revealed in memory-guided saccade and spatial working-memory tasks. J Neurophysiol. 97(5):3494–3507.

- Konen C, Kastner S. 2008. Representation of eye movements and stimulus motion in topographically organized areas of human posterior parietal cortex. J Neurosci. 28:8361–8375.

- Kong L, Michalka SW, Rosen ML, Sheremata S, Swisher J, Shinn-Cunningham BG, Somers DC. 2012. Auditory spatial attention representations in the human cerebral cortex. Cereb Cortex. 24:773–784.

- Krumbholz K, Nobis E, Weatheritt R, Fink G. 2009. Executive control of spatial attention shifts in the auditory compared to the visual modality. Hum Brain Mapp. 30:1457–1469.

- Levy I, Schluppeck D, Heeger D, Glimcher P. 2007. Specificity of human cortical areas for reaches and saccades. J Neurosci. 27:4687–4696.

- Lewis J, Beauchamp M, DeYoe E. 2000. A comparison of visual and auditory motion processing in human cerebral cortex. Cereb Cortex. 10:873–888.

- Michalka SW, Kong L, Rosen ML, Shinn-Cunningham BG, Somers DC. 2015. Short-term memory for space and time flexibly recruit complementary sensory-biased frontal lobe attention networks. Neuron. 87(4):882–892.

- Mullette-Gillman O, Cohen Y, Groh J. 2005. Eye-centered, head-centered, and complex coding of visual and auditory targets in the intraparietal sulcus. J Neurophysiol. 94:2331–2352.

- Mullette-Gillman O, Cohen Y, Groh J. 2009. Motor-related signals in the intraparietal cortex encode locations in a hybrid, rather than eye-centered reference frame. Cereb Cortex. 19:1761–1775.

- Schlack A, Sterbing-D'Angelo S, Hartung K, Hoffmann K-P, Bremmer F. 2005. Multisensory space representations in the macaque ventral intraparietal area. J Neurosci. 25:4616–4625.

- Schluppeck D, Glimcher P, Heeger D. 2005. Topographic organization for delayed saccades in human posterior parietal cortex. J Neurophysiol. 94:1372–1384.

- Sereno M, Dale A, Reppas J, Kwong K, Belliveau J, Brady T, Rosen B, Tootell R. 1995. Borders of multiple visual areas in humans revealed by functional magnetic resonance imaging. Science. 268:889–893.

- Sereno M, Huang R. 2014. Multisensory maps in parietal cortex. Curr Opin Neurobiol. 24:39–46.

- Sheremata S, Bettencourt K, Somers D. 2010. Hemispheric asymmetry in visuotopic posterior parietal cortex emerges with visual short-term memory load. J Neurosci. 30:12581–12588.

- Silver M, Kastner S. 2009. Topographic maps in human frontal and parietal cortex. Trends Cogn Sci. 13:488–495.

- Slotnick SD, Thompson WL, Kosslyn SM. 2005. Visual mental imagery induces retinotopically organized activation of early visual areas. Cereb Cortex. 15(10):1570–1583.

- Smith D, Davis B, Niu K, Healy E, Bonilha L, Fridriksson J, Morgan P, Rorden C. 2010. Spatial attention evokes similar activation patterns for visual and auditory stimuli. J Cogn Neurosci. 22:347–361.

- Stecker G, Harrington I, Middlebrooks J. 2005. Location coding by opponent neural populations in the auditory cortex. PLoS Biol. 3:e78.

- Stricanne B, Andersen RA, Mazzoni P. 1996. Eye-centered, head-centered, and intermediate coding of remembered sound locations in area LIP. J Neurophysiol. 76:2071–2076.

- Swisher J, Halko M, Merabet L, McMains S, Somers D. 2007. Visual topography of human intraparietal sulcus. J Neurosci. 27:5326–5337.

- Szczepanski S, Konen C, Kastner S. 2010. Mechanisms of spatial attention control in frontal and parietal cortex. J Neurosci. 30:148–160.

- Tark K, Curtis C. 2009. Persistent neural activity in the human frontal cortex when maintaining space that is off the map. Nat Neurosci. 12:1463–1468.

- Yeo B, Krienen F, Sepulcre J, Sabuncu M, Lashkari D, Hollinshead M, Roffman J, Smoller J, Zollei L, Polimeni J, et al. 2011. The organization of the human cerebral cortex estimated by intrinsic functional connectivity. J Neurophysiol. 106:1125–1165.

- Zatorre R, Bouffard M, Ahad P, Belin P. 2002. Where is “where” in the human auditory cortex? Nat Neurosci. 5:905–909.
