Abstract

In the last decade, dendrites of cortical neurons have been shown to nonlinearly combine synaptic inputs by evoking local dendritic spikes. It has been suggested that these nonlinearities raise the computational power of a single neuron, making it comparable to a 2-layer network of point neurons. But how these nonlinearities can be incorporated into synaptic plasticity to optimally support learning remains unclear. We present a theoretically derived synaptic plasticity rule for supervised and reinforcement learning that depends on the timing of the presynaptic, the dendritic and the postsynaptic spikes. For supervised learning, the rule can be seen as a biological version of the classical error-backpropagation algorithm applied to the dendritic case. When modulated by a delayed reward signal, the same plasticity is shown to maximize the expected reward in reinforcement learning for various coding scenarios. Our framework makes specific experimental predictions and highlights the unique advantage of active dendrites for implementing powerful synaptic plasticity rules that have access to downstream information via backpropagation of action potentials.

Author Summary

Error-backpropagation is a successful algorithm for supervised learning in neural networks. Whether and how this technical algorithm is implemented in cortical structures, however, remains elusive. Here we show that this algorithm may be implemented within a single neuron equipped with nonlinear dendritic processing. An error, expressed as a mismatch between somatic firing and membrane potential, may be backpropagated to the active dendritic branches, where it modulates synaptic plasticity. This changes the classical view that learning in the brain is realized by rewiring simple processing units, as formalized by neural network theory. Instead, these processing units can themselves learn to implement much more complex input-output functions than previously thought. While the original algorithm only considered firing rates, the biological implementation enables learning for both a firing-rate and a spike-timing code. Moreover, when modulated by a reward signal, the synaptic plasticity rule maximizes the expected reward in a reinforcement learning framework.

Introduction

One of the fascinating and still enigmatic aspects of cortical organization is the widespread dendritic arborization of neurons. These dendrites have been shown to generate dendritic spikes [1–3] that support local dendritic processing [4–7], but the nature of this computation remains elusive. An interesting view is that dendritic nonlinearities endow the neuron with the structure of a 2-layer neural network of point neurons, in particular if the dendrites themselves generate step-like dendritic spikes, but also if the dendritic nonlinearities remain continuous [8–11]. Here we show that the dendritic morphology offers a substantial additional benefit over the 2-layer network: it allows for the implementation of powerful learning algorithms that rely on the backpropagation of somatic information along the dendrite, which would not be possible in this form in a network of point neurons.

Error-backpropagation has become the classical algorithm for adapting the connection strengths in artificial neural networks [12, 13]. In this algorithm, an error at an output unit is assessed by comparing the self-generated activity with a target activity. Plasticity in hidden units is driven by this error, which propagates backwards along the connections of the network. Synapses, however, transmit information in only one direction, making it difficult to implement error-backpropagation in biological neuronal circuits. Dendritic trees are different: in the 2-layer structure of a dendritic tree, information at the output site may be physically backpropagated across the intermediate computational layer to the synapses targeting the tree.
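To make the classical algorithm concrete, here is a minimal rate-based sketch of error-backpropagation in a 2-layer "dendritic neuron". It assumes sigmoidal branch nonlinearities as the hidden layer, a fixed branch-to-soma coupling `alpha`, a somatic threshold `theta` and a squared-error loss; all names and values are illustrative assumptions, not the spiking model of this paper. The point is only that the somatic error reaches synapse i on branch d multiplied by the local branch derivative and the presynaptic activity.

```python
import math
import random

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def forward(w, x, alpha=1.0, theta=2.0):
    """Hypothetical rate-based dendritic neuron.
    w[d][i]: weight from input i onto branch d; x[i]: presynaptic rate.
    Branches act as sigmoidal hidden units; the soma sums them with a fixed
    coupling alpha and applies a sigmoidal output nonlinearity."""
    u_d = [sum(wi * xi for wi, xi in zip(row, x)) for row in w]
    f_d = [sigmoid(u) for u in u_d]
    y = sigmoid(alpha * sum(f_d) - theta)
    return u_d, f_d, y

def loss(w, x, target, alpha=1.0, theta=2.0):
    """Squared error between the somatic output and the target activity."""
    y = forward(w, x, alpha, theta)[2]
    return 0.5 * (y - target) ** 2

def backprop_grad(w, x, target, alpha=1.0, theta=2.0):
    """Gradient of the loss with respect to every dendritic weight w[d][i]."""
    _, f_d, y = forward(w, x, alpha, theta)
    delta_soma = (y - target) * y * (1.0 - y)  # error at the output unit
    grad = []
    for d in range(len(w)):
        # error backpropagated to branch d, scaled by the branch derivative
        delta_d = delta_soma * alpha * f_d[d] * (1.0 - f_d[d])
        grad.append([delta_d * xi for xi in x])  # times presynaptic activity
    return grad

# One gradient-descent step on a random example (illustrative values).
random.seed(0)
w = [[random.gauss(0.0, 1.0) for _ in range(4)] for _ in range(3)]
x = [1.0, 0.0, 1.0, 0.5]
g = backprop_grad(w, x, target=1.0)
w_new = [[wi - 0.5 * gi for wi, gi in zip(wr, gr)] for wr, gr in zip(w, g)]
```

A finite-difference check confirms that `backprop_grad` is the exact gradient of `loss`; in a network of point neurons the same computation would require the error to travel backwards across synapses.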

While the suggested dendritic error-backpropagation is a plasticity rule for supervised learning, it is also suitable for reinforcement learning. Instead of imposing pre-assigned target spike timings on the soma, the somatic spikes can be generated by the dendritic inputs alone, while learning is driven by a delayed reward signal. The synapse itself can be agnostic about the coding and the learning scenario; it learns by continuously adapting its strength according to molecular mechanisms that are identical in the different scenarios.

Various experimental studies revealed that synaptic plasticity depends on the precise timing between pre- and postsynaptic action potentials [14, 15] and on the postsynaptic voltage [16]. It has further been shown that the specific form of this spike-timing-dependent plasticity (STDP) may vary with the synaptic location on the dendritic tree [17–19], and that synaptic plasticity in general is modulated by dendritic spikes [20–22]. Yet, no coherent view on the impact of dendritic nonlinearities on plasticity has emerged. Correspondingly, besides an early attempt to assign a fitness score to dendritic synapses [23] and the suggestion of a Hebbian-type plasticity rule for synapses on active dendrites [24], no computational framework for synaptic plasticity with regenerative dendritic events exists that could guide its experimental exploration. In our previous study, we derived a reward-maximizing plasticity rule that incorporates dendritic spikes, but no online implementation was presented [25]. Here, starting from biophysical properties of NMDA conductances [26], we consider an integrated somato-dendritic spiking model that captures the main biological ingredients of dendritic spikes and that is simple enough to derive an online plasticity rule for different coding schemes in the context of both supervised and reinforcement learning.

Results

Neuron model

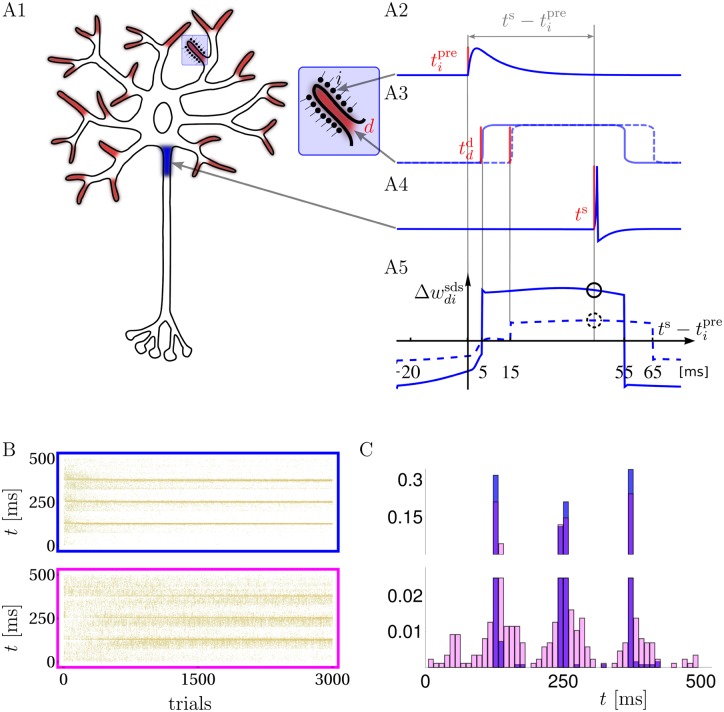

We model a multi-compartment neuron with several active dendritic branches, each directly linked to a somatic compartment (Fig 1A1). The subthreshold dendritic voltage in branch d is the weighted sum of normalized postsynaptic potentials (PSPs) triggered by the presynaptic spikes in the afferents projecting to that branch, $u_d(t) = \sum_i w_{di}\,\mathrm{PSP}_i(t)$. Here, wdi represents the strength of the synapse from afferent i onto branch d, which scales the PSP amplitude. The dendritic branches can generate temporally extended NMDA-spikes of a fixed amplitude, similar to experimental observations in vitro [1, 3, 5] and in vivo [7]. In our model an NMDA-spike is represented by a square voltage pulse of amplitude a and duration Δ = 50 ms (Fig 1A2–1A3). It is stochastically elicited with a rate that is an increasing function of the local subthreshold membrane potential and, implicitly, of the local glutamate level. In fact, in an in vivo scenario the joint voltage and glutamate condition for triggering an NMDA-spike effectively reduces to a single condition on the local voltage alone. This is because the depolarization required to activate the NMDA receptors is only reached when enough glutamate has been released, so that the glutamate condition is automatically satisfied at sufficiently high voltages (see S1 Text).
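The branch dynamics just described can be sketched in discrete time as follows. Only the square pulse of amplitude a and duration Δ = 50 ms comes from the text; the exponential PSP kernel, the exponential onset rate `nmda_rate` and its parameters, the 1 ms time step, and the rule that no new NMDA-spike can start during an ongoing plateau are assumptions for illustration.

```python
import math
import random

DT = 1.0      # ms, simulation time step (assumption)
DELTA = 50.0  # ms, NMDA-spike duration (from the text)

def psp_trace(spike_times, n_steps, tau=10.0):
    """Normalized PSP trace of one afferent; exponential kernel with peak 1 (assumption)."""
    return [sum(math.exp(-(k * DT - s) / tau) for s in spike_times if s <= k * DT)
            for k in range(n_steps)]

def nmda_rate(u, r0=0.01, beta=1.0, theta=5.0):
    """Hypothetical NMDA-spike onset rate (1/ms), increasing in the dendritic voltage u."""
    return r0 * math.exp(min(beta * (u - theta), 50.0))  # exponent capped to avoid overflow

def simulate_branch(weights, psps, a=1.0, rng=random):
    """Return u_d(t), the square-pulse trace NMDA_d(t), and the NMDA-spike onset steps."""
    n_steps = len(psps[0])
    # u_d(t) = sum_i w_di * PSP_i(t)
    u_d = [sum(w * p[k] for w, p in zip(weights, psps)) for k in range(n_steps)]
    nmda, onsets = [0.0] * n_steps, []
    remaining = 0  # steps left in the ongoing plateau
    for k in range(n_steps):
        if remaining > 0:  # inside a plateau: clamp to amplitude a
            nmda[k] = a
            remaining -= 1
        elif rng.random() < 1.0 - math.exp(-nmda_rate(u_d[k]) * DT):
            onsets.append(k)  # stochastic onset, probability 1 - exp(-rate * dt)
            nmda[k] = a
            remaining = int(DELTA / DT) - 1
    return u_d, nmda, onsets
```

Passing a seeded `random.Random` instance as `rng` makes a run reproducible.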

Fig 1. Neuron model, synaptic plasticity rule and learning of spike timings.

A: Synaptic inputs targeting dendritic NMDA activation zones (A1, red endings with enlargement) propagate, together with possible NMDA-spikes, to the somatic spike trigger zone (A1, blue). Individual postsynaptic potentials in a dendritic branch (PSPs, arriving e.g. at time $t_{\mathrm{pre}}$, A2) may trigger NMDA-spikes, e.g. 5 ms (solid) or 15 ms (dashed) after $t_{\mathrm{pre}}$, forming a local dendritic plateau potential of 50 ms duration (A3). A somatic spike triggered at ts during the ongoing NMDA-spike (A4) causes a synaptic weight change that is large/small depending on whether the NMDA-spike was triggered 5/15 ms after the presynaptic spike (A5, solid/dashed circle, respectively). A5: the somato-dendro-synaptic weight change $\Delta w^{\mathrm{sds}}_{di}$ as a function of ts for an NMDA-spike at 5 ms (solid) and 15 ms (dashed). B: Raster plots of freely generated somatic spikes from test trials that are interleaved with learning trials. For the full somato-dendritic synaptic plasticity rule (sdSP) the somatic spikes converge to the 3 target times with a precision of ±3 ms (top), while the rule neglecting the dendritic spikes (i.e. suppressing the term $\dot{w}^{\mathrm{sds}}_{di}$) achieves a precision of only ±14 ms (bottom). C: The two spike distributions from B after 3000 presentations.

The subthreshold dendritic voltage $u_d(t)$ and the dendritic spike train NMDAd(t) in branch d propagate with an attenuation factor α to the soma, where they add up with inputs from other branches to form the somatic voltage $u_s(t) = \alpha \sum_d \big(u_d(t) + \mathrm{NMDA}_d(t)\big)$. This voltage is also modulated by a spike reset kernel κ(t) incorporating the transient hyperpolarisation caused by each somatic spike (Fig 1A4). For supervised learning, the somatic spikes S(t) are imposed by an external input, whereas in reinforcement learning they are stochastically triggered with an instantaneous rate ρs(t) that is an increasing function of the somatic potential us (see Online Methods).
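A minimal sketch of the somatic voltage and of stochastic somatic spiking in the reinforcement scenario follows. The attenuated sum of branch voltages follows the text; treating the reset as an additive sum of kernels, the exponential shape of `reset_kernel`, and the exponential escape rate `somatic_rate` with its parameters are hypothetical choices (the text only states that κ is hyperpolarizing and that ρs increases with us).

```python
import math
import random

def reset_kernel(s, amp=-5.0, tau=10.0):
    """Hypothetical reset kernel kappa(s): hyperpolarization s >= 0 ms after a somatic spike."""
    return amp * math.exp(-s / tau) if s >= 0 else 0.0

def somatic_voltage(t, u_dend, nmda, spike_times, alpha=0.5):
    """u_s(t) = alpha * sum_d (u_d(t) + NMDA_d(t)) + sum_k kappa(t - t_k).
    u_dend[d] and nmda[d] are the values of branch d at time t (ms)."""
    dend = alpha * sum(u + n for u, n in zip(u_dend, nmda))
    reset = sum(reset_kernel(t - tk) for tk in spike_times if tk <= t)
    return dend + reset

def somatic_rate(u_s, r0=0.001, beta=1.0, theta=10.0):
    """Hypothetical escape rate rho_s (1/ms), increasing in u_s."""
    return r0 * math.exp(min(beta * (u_s - theta), 50.0))

def simulate_soma(u_dend, nmda, dt=1.0, alpha=0.5, rng=random):
    """Draw somatic spikes from rho_s(t); u_dend[d][k], nmda[d][k] sampled every dt ms."""
    spikes, trace = [], []
    for k in range(len(u_dend[0])):
        t = k * dt
        u_s = somatic_voltage(t, [u[k] for u in u_dend], [n[k] for n in nmda], spikes, alpha)
        trace.append(u_s)
        if rng.random() < 1.0 - math.exp(-somatic_rate(u_s) * dt):
            spikes.append(t)  # the reset takes effect from the next time step
    return trace, spikes
```

In the supervised scenario one would skip the sampling step and feed the imposed spike train S(t) into `somatic_voltage` instead.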

Learning rule

We first consider a supervised learning scenario where somatic spikes S are enforced by one modality (e.g. a visual stimulus) while the synaptic inputs to the dendritic branches are caused by another modality (e.g. representing an auditory stimulus [27]). The strengths of the synapses on the dendrites, wdi, are adapted in order to reproduce the somatic spike train S(t) from the dendritic input alone, without direct somatic drive. This can be achieved by ongoing synaptic weight changes, $\dot{w}_{di}(t)$, that together maximize the likelihood of observing S in response to this dendritic input. According to the two types of contributions to the somatic voltage, the sub- and suprathreshold dendritic voltages, $u_d$ and NMDAd, the synaptic weight change can also be decomposed into a sub- and a suprathreshold contribution, $\dot{w}_{di} = \dot{w}^{\mathrm{ss}}_{di} + \dot{w}^{\mathrm{sds}}_{di}$, that take into account the subthreshold somato-synaptic (ss) and the suprathreshold somato-dendro-synaptic (sds) drive. We also refer to $\dot{w}_{di}$ as somato-dendritic synaptic plasticity (sdSP).

The somato-synaptic contribution is proportional to the postsynaptic error term (S − ρs) times the local postsynaptic potential PSPi induced by synapse i on that dendritic branch,

$$\dot{w}^{\mathrm{ss}}_{di}(t) \propto \big(S(t) - \rho_s(t)\big)\,\mathrm{PSP}_i(t) \tag{1}$$

This corresponds to the gradient learning rule that was previously derived for a single-compartment neuron [28] and that was shown to be consistent with experimentally observed STDP (see e.g. [29]). The error term ensures that the weight is increased if the rate ρs is too small for generating S and decreased if the rate is too high, so that, on average, 〈S〉 = ρs at equilibrium.
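Discretizing Eq (1) with a time step dt and summing over a trial gives the sketch below; the learning rate `eta`, the binning and the Monte Carlo check are illustrative assumptions. It reproduces the sign behaviour described above: an observed spike where ρs is low potentiates, a missing spike where ρs is high depresses, and if S is actually generated with rate ρs the update averages to zero.

```python
import random

def somato_synaptic_update(spike_bins, rho_s, psp_i, dt=1.0, eta=1.0):
    """Discretized Eq (1), integrated over a trial:
    eta * sum_t (S_t - rho_s(t) * dt) * PSP_i(t),
    with S_t in {0, 1} the somatic spike count in bin t and rho_s in 1/ms."""
    return eta * sum((s - r * dt) * p for s, r, p in zip(spike_bins, rho_s, psp_i))

# If somatic spikes are generated with exactly the rate rho_s, the update
# vanishes on average: the rule is at equilibrium when <S> = rho_s.
rng = random.Random(0)
rho, psp = [0.2] * 100, [1.0] * 100
updates = [somato_synaptic_update([1 if rng.random() < 0.2 else 0 for _ in rho], rho, psp)
           for _ in range(2000)]
mean_update = sum(updates) / len(updates)
```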

The main sdSP-effect stems from the somato-dendro-synaptic contribution $\dot{w}^{\mathrm{sds}}_{di}$. The instantaneous synaptic weight change at time t is induced by the dendritic activity Dend in branch d during the interval Δ prior to t. Any NMDA-spike elicited in this interval affects the somatic voltage at time t, and the likelihood of a dendritic spike is itself influenced by the local synaptic potentials PSPi arriving in this interval and a few milliseconds before (Fig 1A2–1A5). Overall, we obtain an expression of the form

$$\dot{w}^{\mathrm{sds}}_{di}(t) \propto E_d(t)\,\big(\mathrm{Dend} * \mathrm{PSP}_i\big)(t), \tag{2}$$

where Dend ∗ PSPi captures the impact of synapse i on the triggering of an NMDA-spike in the preceding interval Δ, and $E_d(t)$ is a somatic error term that compares the somatic spike train S(t) with the instantaneous somatic firing rate ρs(t) and with $\bar{\rho}^{\,d}_s(t)$, the instantaneous somatic firing rate in the absence of a dendritic spike in branch d (see Online Methods for its explicit form). A positive error term tells the synapses on branch d how worthwhile it is to increase their weights in order to trigger a local NMDA-spike; a negative error term suggests decreasing the weights instead, since even without an NMDA-spike from that branch the somatic firing rate is, on average, too high. When only dendritic nonlinearities without spiking are present, the rule of Eq (2) simplifies to a pure 3-factor rule composed of a somatic difference factor, a dendritic factor, and a presynaptic factor that can be applied to dendrites showing supra- or sublinear dendritic summation (see Eq (9) in Online Methods).
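The windowed factor Dend ∗ PSPi can be sketched as a running sum, over the last Δ, of the product of a per-step dendritic factor and PSPi. Both the box-shaped window and the form of the dendritic factor are assumptions: here it is taken as the standard point-process score (NMDA-spike onset indicator minus its expected value, times β, which equals φ′/φ for an exponential onset rate φ). The somatic error term of Eq (2) is passed in as a precomputed sequence `error`, because its explicit form is not reproduced in this section.

```python
def dendritic_factor(onsets, phi, dt=1.0, beta=1.0):
    """Hypothetical per-step dendritic factor for branch d:
    beta * (onset indicator - expected number of onsets phi * dt).
    For an exponential onset rate phi(u) ~ exp(beta * u), beta = phi'/phi."""
    return [beta * (o - p * dt) for o, p in zip(onsets, phi)]

def windowed_trace(dend, psp_i, window=50):
    """(Dend * PSP_i)(t) as a running sum of Dend(t') * PSP_i(t') over the last `window` steps."""
    prod = [d * p for d, p in zip(dend, psp_i)]
    out, acc = [], 0.0
    for t, v in enumerate(prod):
        acc += v
        if t >= window:
            acc -= prod[t - window]  # drop the term that has left the window
        out.append(acc)
    return out

def somato_dendro_synaptic_update(error, trace, dt=1.0, eta=1.0):
    """Eq (2) integrated over a trial: eta * sum_t error(t) * (Dend * PSP_i)(t) * dt."""
    return eta * dt * sum(e * c for e, c in zip(error, trace))
```

With `window` equal to Δ/dt (50 steps at 1 ms), a presynaptic contribution to an NMDA-spike onset keeps influencing the update for exactly as long as that NMDA-spike can affect the somatic voltage.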

The learning rule of Eq (2) can be interpreted as error-backpropagation for spiking neurons, where a somatic error signal is propagated back to the dendrites that represent the nonlinear hidden units. These hidden units further modulate the error signal depending on their impact on the output unit. Classical error-backpropagation would also adapt the weights from the hidden units to the output unit. This would correspond to adapting the impact of NMDA-spikes on the somatic voltage and could be modeled as dendritic branch strength plasticity [24, 30]. For conceptual clarity we refrain from this extension, but the gradient calculations could also be applied to infer an optimal learning rule for these branch strengths.

Supervised learning with active dendrites

The overall synaptic modification, Δwdi, induced by sdSP is obtained by integrating the instantaneous changes over the stimulus duration, $\Delta w_{di} = \int_0^T \dot{w}_{di}(t)\,dt$. Using the decomposition $\dot{w}_{di} = \dot{w}^{\mathrm{ss}}_{di} + \dot{w}^{\mathrm{sds}}_{di}$, we may also write $\Delta w_{di} = \Delta w^{\mathrm{ss}}_{di} + \Delta w^{\mathrm{sds}}_{di}$ and take a closer look at the somato-dendro-synaptic contribution $\Delta w^{\mathrm{sds}}_{di}$ (Fig 1A2–1A5). We fixed a presynaptic spike at time $t_{\mathrm{pre}}$ and plotted $\Delta w^{\mathrm{sds}}_{di}$ as a function of the somatic spike time ts for an NMDA-spike at td = 5 ms and 15 ms after $t_{\mathrm{pre}}$. A dendritic spike immediately after a presynaptic spike considerably extends the classical time window for causal ‘pre-post’ potentiation to a ‘pre-dend-post’ potentiation. In fact, a presynaptic spike that took part in triggering an NMDA-spike may also contribute indirectly to a postsynaptic spike more than 50 ms later. In turn, synaptic depression is induced in an acausal configuration where the somatic spike comes either before the presynaptic spike or after the NMDA-spike has already decayed.

Endowed with sdSP, a neuron is able to learn precise output spike timings, as shown in Fig 1B and 1C (blue), where 3 somatic spike times were imposed during learning. The dendritic input consisted of 100 frozen presynaptic Poisson spike trains with frequency 6 Hz and duration T = 500 ms. The dendritic tree had 20 branches, each targeted by a random subset of the 100 afferents with a connection probability of 0.5. After repeated pattern presentations with the somatic output clamped to the target spikes, the neuron learned to generate the target output from the synaptic input alone with a precision of a few milliseconds. The high spike-time precision is lost when synapses are modified only by the somato-synaptic contribution (Fig 1B and 1C; pink). Because this plasticity contribution is blind to dendritic activity, small synaptic weight changes may cause the undesired appearance or disappearance of NMDA-spikes. In this case dendritic spikes arise as unpredicted knock-on effects of synaptic plasticity. Note that the somato-synaptic contribution alone, being identical to the gradient rule [28], would be able to learn the precise spiking (as would the rules in [29, 31]) if the neuron were not endowed with the dendritic spiking mechanism and instead showed only linear summation with passive voltage propagation.
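The input statistics of this experiment (100 afferents at 6 Hz for 500 ms, 20 branches, connection probability 0.5) can be reproduced with the sketch below. Sampling spike times via exponential inter-spike intervals and fixing random seeds to "freeze" a pattern are implementation choices of this sketch, not details given in the text.

```python
import random

def frozen_poisson_pattern(n_afferents=100, rate_hz=6.0, duration_ms=500.0, seed=0):
    """Draw Poisson spike times (ms) for each afferent once; the fixed seed
    makes the pattern 'frozen', i.e. identical on every presentation."""
    rng = random.Random(seed)
    pattern = []
    for _ in range(n_afferents):
        t, spikes = 0.0, []
        while True:
            t += rng.expovariate(rate_hz / 1000.0)  # inter-spike interval in ms
            if t >= duration_ms:
                break
            spikes.append(t)
        pattern.append(spikes)
    return pattern

def random_connectivity(n_branches=20, n_afferents=100, p=0.5, seed=1):
    """Each branch receives a random subset of afferents, each with probability p."""
    rng = random.Random(seed)
    return [[i for i in range(n_afferents) if rng.random() < p] for _ in range(n_branches)]
```

On average each afferent emits 3 spikes per pattern and each branch receives about 50 afferents.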

Reward-modulated somato-dendritic plasticity

We next considered a reinforcement learning scenario where synaptic modifications are modulated by a binary feedback signal R = ±1 that is applied at the end of the stimulus presentation and that assesses the appropriateness of the somatic firing pattern. While this feedback is itself an external quantity, it is assumed to induce an internal signal, e.g. in the form of a neuromodulator, that globally modulates the previously induced synaptic changes. To control the balance between reward and punishment, the internal feedback modulates the past plasticity induction by a factor (R − R∘) with a constant reward bias R∘.

When deriving a plasticity rule that maximizes the expected reward we again obtain the same sdSP (see Eqs (1) and (2)), but now integrated across the stimulus interval and then modulated by the feedback signal,

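$$\Delta w_{di} \propto (R - R_\circ) \int_0^T \big(\dot{w}^{\mathrm{ss}}_{di}(t) + \dot{w}^{\mathrm{sds}}_{di}(t)\big)\,dt, \tag{3}$$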

see S1C Text. We refer to this rule as reward-modulated somato-dendritic synaptic plasticity (R-sdSP). Due to the dendritic factor Dend ∗ PSPi in the somato-dendro-synaptic contribution, it is effectively a 4-factor rule of the form ‘Δw = Rwrd⋅som⋅dend⋅pre’. The intuition is that intrinsic neuronal stochasticity generates fluctuations in the somatic spiking that deviate from the prediction made by the dendritic input and thereby cause an ‘error’ expressed in the somatic factor of the rule. These fluctuations are reinforced or suppressed by the feedback signal. As before, the synaptic modification is strengthened if a presynaptic spike contributed to a dendritic NMDA-spike that in turn affected the somatic voltage.
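The trial-level structure of Eq (3) can be sketched as follows: the sdSP contributions are accumulated over the stimulus into a per-synapse eligibility and applied only once the feedback R arrives. The learning rate `eta`, the default reward bias and the function names are illustrative assumptions.

```python
def trial_eligibility(w_dot_ss, w_dot_sds, dt=1.0):
    """Integrate the instantaneous sdSP changes of one synapse over the trial."""
    return dt * sum(a + b for a, b in zip(w_dot_ss, w_dot_sds))

def r_sdsp_update(weights, eligibility, reward, reward_bias=0.0, eta=0.1):
    """Eq (3) sketch: w_di <- w_di + eta * (R - R0) * e_di,
    applied once per trial when the (possibly delayed) feedback R arrives."""
    factor = eta * (reward - reward_bias)
    return [[w + factor * e for w, e in zip(w_row, e_row)]
            for w_row, e_row in zip(weights, eligibility)]
```

Keeping the eligibility separate from the weights is what allows the same induction machinery to serve both the supervised and the reward-modulated scenario.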

Reinforcement learning with active dendrites

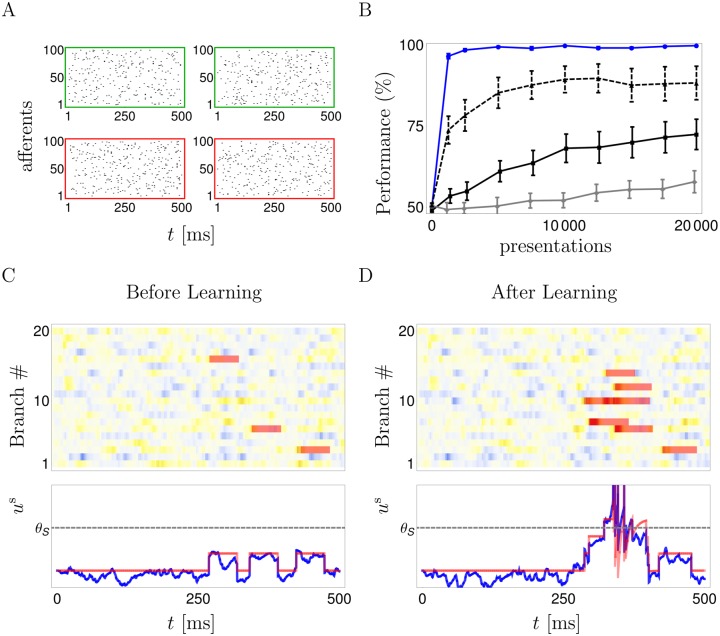

We tested R-sdSP for various coding schemes. First, we considered a standard binary classification of frozen Poisson spike patterns by a postsynaptic spike / no-spike code (Fig 2A). Each input pattern is defined as above (6 Hz in 100 afferents for 500 ms) and belongs to one of two classes. For one class the soma is required to fire at least one spike, while for the other class it should be silent. After repeated presentations, each followed by a reward signal, R-sdSP perfectly learned the correct classification of 4 random patterns. In contrast, reward-modulated STDP (R-STDP, [32]) implemented in its best performing version (see Online Methods and [33]) did not (Fig 2B). To achieve an appropriate alignment of dendritic spikes (Fig 2C and 2D), any successful learning rule needs to take into account the causal chain linking presynaptic spikes to dendritic and somatic spikes, the latter deciding upon reward or punishment. R-sdSP, derived from maximizing the expected reward, captures this causal relationship, but R-STDP does not, neither with a 10 ms (Fig 2B) nor with a 50 ms learning window (S2 Fig), and hence fails. Interestingly, R-STDP improves when NMDA-spike generation is suppressed (Fig 2B, dashed). This shows that the increased flexibility in neuronal information processing provided by dendritic nonlinearities will in fact impede learning when a rule is used that does not take the nonlinearities into account.
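The feedback for this spike / no-spike task can be written in a few lines; the function names are ours, and `fraction_correct` is one simple way to score a set of responses.

```python
def classification_reward(n_somatic_spikes, should_spike):
    """R = +1 if the spike / no-spike response matches the pattern's class, else R = -1."""
    fired = n_somatic_spikes >= 1
    return 1 if fired == should_spike else -1

def fraction_correct(responses, classes):
    """Fraction of patterns whose spike / no-spike response is correct."""
    rewards = [classification_reward(n, c) for n, c in zip(responses, classes)]
    return sum(r == 1 for r in rewards) / len(rewards)
```

Note that the reward only depends on whether the soma fired, so any credit assignment to individual synapses must come from the eligibility accumulated during the trial.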

Fig 2. Binary classification of frozen input spike patterns by a somatic spike / no-spike code for the reward-modulated somato-dendritic synaptic plasticity (R-sdSP).

A: Four input patterns; the two patterns in the top row should elicit no somatic spikes, the patterns in the bottom row should. B: R-sdSP perfectly learns the classification after roughly 1000 presentations (blue solid). In contrast, classical R-STDP fails when applied to the presynaptic–somatic (‘pre-som’, solid black) or the presynaptic–dendritic (‘pre-den’, gray) spike pairs. R-STDP improves when dendritic spike generation is suppressed (black dashed), although it does not reach the high performance of R-sdSP. C,D: Dendritic and somatic voltages in response to an input pattern that requires spiking, before (C) and after (D) learning. The initially sparse dendritic spikes (NMDAd(t), red bars, overlaid on an intensity plot) become more numerous, co-align and sum up in the soma to enable somatic firing. Yellow indicates depolarization. Bottom: Time course of the somatic voltage us(t) (blue) with the contribution of the NMDA-spikes and the somatic spike reset kernel (red).

R-sdSP still learns the classification correctly when the spike timings are jittered by up to 100 ms, or when the somatic voltage modulating the synaptic plasticity (via ρs and the somatic rate in the absence of a dendritic spike) is low-pass filtered to mimic the dilution of information backpropagating to the synaptic site (S2 Fig).
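A first-order low-pass filter is one simple way to model this dilution. The filter type and the time constant `tau` are assumptions, since the filter is not specified in this section.

```python
def low_pass(signal, tau=20.0, dt=1.0):
    """First-order (exponential) low-pass filter, y <- y + (dt / tau) * (x - y),
    initialized at the first sample; a hypothetical model of the somatic
    voltage as seen from a distal synapse."""
    if not signal:
        return []
    out, y = [], signal[0]
    for x in signal:
        y += (dt / tau) * (x - y)
        out.append(y)
    return out
```

Applied to us(t) before it enters the somatic error term, the filter removes fast fluctuations while preserving slow trends.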

Incidentally, the task from Fig 2 can also be solved in a supervised scenario, e.g. with the tempotron, where, besides being told whether it should spike or not in response to a stimulus, the neuron is assumed to have access to the time of the voltage maximum within the stimulus interval [0, T] (see e.g. [34, 35]). Although these additional assumptions can in principle speed up learning, such rules again suffer from ignoring NMDA-spikes and the possible acausality between a presynaptic spike and an immediately following somatic spike.

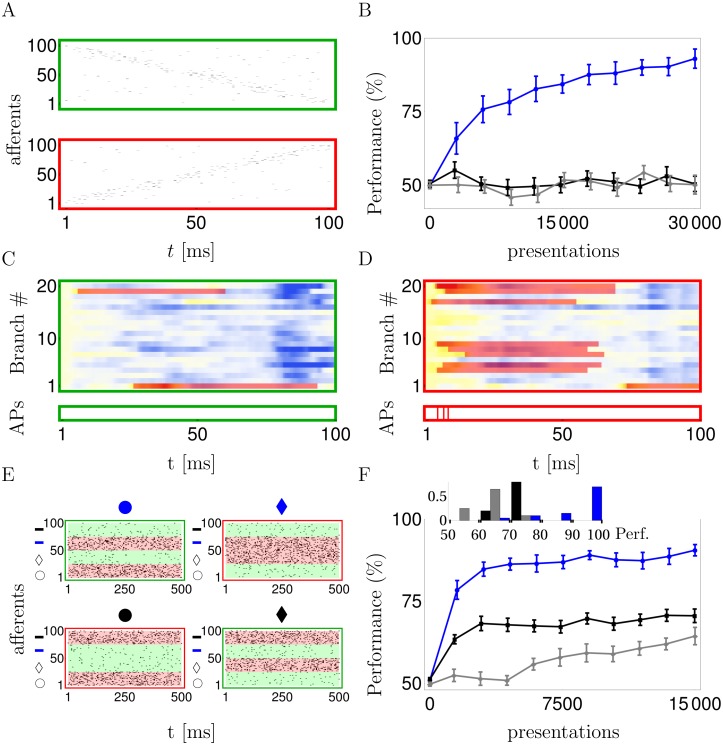

Learning direction selectivity and nonlinear separation

To apply dendritic learning to a biological example, we consider the direction selectivity of pyramidal neurons that was found to be mediated by nonlinear dendritic processing in vitro [5] and in vivo [7]. To mimic directional inputs moving in the stimulus space from right to left and from left to right, we randomly enumerated the synapses across the whole dendritic tree and stochastically activated these synapses once in increasing and once in decreasing order (Fig 3A). After the stimulation, a positive reward signal was applied to the synapses when at least one somatic spike was elicited during a left-to-right pattern, or no somatic spike was elicited during a right-to-left pattern. A negative reward signal was applied in the other cases. R-sdSP, but not R-STDP, could learn such direction selectivity (Fig 3B). Individual dendritic branches may become selective to the synaptic activation order and learn to generate NMDA-spikes that, after summation in the soma, eventually trigger somatic action potentials (Fig 3C and 3D). Hence, the neuron learned to employ the dendritic nonlinearities to achieve direction selectivity, even though solving the task does not require them.
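The stimulus protocol can be sketched as below: one random enumeration of the synapses is shared by both directions, and each synapse is activated once, in forward or reverse order. The evenly spaced activation times, the Gaussian jitter and its 5 ms default are assumptions; this section does not give these details.

```python
import random

def directional_pattern(order, duration_ms=500.0, jitter_ms=5.0, reverse=False, rng=None):
    """Activate every synapse once, following the random enumeration `order`
    (or its reverse for the opposite direction). Evenly spaced activation
    times plus Gaussian jitter are hypothetical choices.
    Returns {synapse_index: activation_time_ms}."""
    if rng is None:
        rng = random.Random()
    seq = list(reversed(order)) if reverse else list(order)
    step = duration_ms / len(seq)
    times = {}
    for k, syn in enumerate(seq):
        t = (k + 0.5) * step + rng.gauss(0.0, jitter_ms)
        times[syn] = min(max(t, 0.0), duration_ms)  # keep inside the stimulus
    return times

# One random enumeration of all synapses, shared by both directions.
order = list(range(200))
random.Random(3).shuffle(order)
left_to_right = directional_pattern(order, rng=random.Random(4))
right_to_left = directional_pattern(order, reverse=True, rng=random.Random(5))
```

Both directions activate the same synapses the same number of times, so only the temporal order distinguishes them; a point neuron summing PSPs linearly receives nearly the same total drive in both cases.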

Fig 3. R-sdSP can exploit the representational power endowed by active dendrites.

A: Example of a presynaptic firing pattern that requires the neuron to be silent (green) or to elicit at least one somatic spike (red). B: R-sdSP (blue), but not R-STDP, learns to become direction selective (black: ‘pre-som’; grey: ‘pre-den’). C, D: The subthreshold dendritic voltages and NMDA traces NMDAd(t) in response to the two input patterns shown in A (color code as in Fig 2). Individual branches developed direction selectivity (green). Bottom: action potentials are only generated for one direction. E: The 4 input patterns of the linearly non-separable feature-binding problem combine one of two shapes (‘circle’ or ‘diamond’) with one of two fill colors (‘blue’ or ‘black’). Each of the four features is represented by 25 afferents (next to the corresponding symbol on the y-axes) that encode its presence or absence by a high (40 Hz) or low (5 Hz) Poisson firing rate, respectively. F: R-sdSP learns the correct response to the 4 inputs, R-STDP does not (line code as above). Inset: average performance of each run after learning.

A classical task that exceeds the representational power of a point neuron is the XOR (exclusive-or) problem, which is equivalent to the linearly non-separable feature-binding problem [24]. In this task, the neuron has to respond exclusively to two disjoint pairs of features (e.g. to black & circle and to blue & diamond), but not to the cross combinations of these features (black & diamond and blue & circle). The presence and absence of a feature was encoded by a high and a low Poisson firing rate, respectively, of a subpopulation of afferents projecting to our classifying neuron (Fig 3E). R-sdSP on the active dendrites could learn the correct responses, although failures occurred in some runs due to the intrinsic stochasticity. Classical R-STDP also failed to solve the feature-binding problem on the dendritic tree, whether applied to pre-dend or to pre-som spike pairings (Fig 3F).
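The input encoding of Fig 3E and the target responses can be sketched as follows (names are ours). It also makes the non-separability argument explicit: with linear summation, responding to circle & black and diamond & blue requires w_circle + w_black > θ and w_diamond + w_blue > θ, while rejecting the cross combinations requires w_circle + w_blue < θ and w_diamond + w_black < θ. Adding each pair of inequalities gives the same total on both sides, which is a contradiction.

```python
FEATURES = ("circle", "diamond", "blue", "black")
PATTERNS = [("circle", "black"), ("circle", "blue"), ("diamond", "black"), ("diamond", "blue")]

def feature_rates(shape, color, n_per_feature=25, high_hz=40.0, low_hz=5.0):
    """Poisson rate of each of the 4 x 25 afferents: high if its feature is present."""
    present = {shape, color}
    rates = []
    for feature in FEATURES:
        rates += [high_hz if feature in present else low_hz] * n_per_feature
    return rates

def should_respond(shape, color):
    """Target response: spike for black & circle and for blue & diamond only."""
    return (shape, color) in {("circle", "black"), ("diamond", "blue")}
```

A neuron with active branches can escape the contradiction above, for example by dedicating one branch to each rewarded feature pair.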

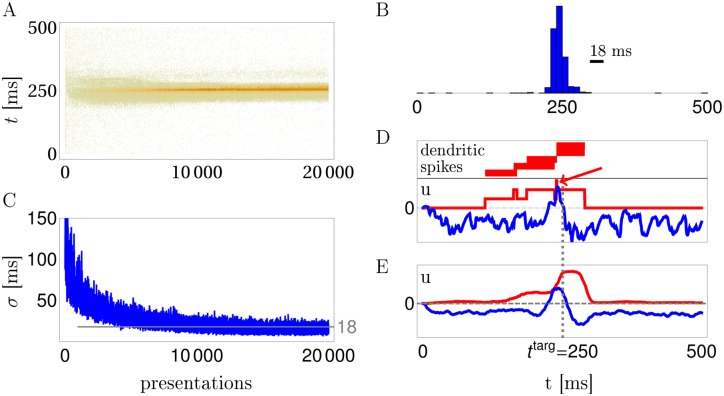

Learning spike timings with delayed reward

Besides learning a spike / no-spike code or a firing-rate code, R-sdSP can also learn a spike-timing code, i.e. to fire only at specific times. In a first task showing this, the neuron had to learn to spike at a target time ttarg = 250 ms in response to a frozen Poisson spike pattern. Deviations from this time were punished at the end of the stimulus, using a graded feedback signal whose magnitude increases with the magnitude of the deviation (Online Methods). During repeated pattern presentations with R-sdSP and the delayed punishing signal, the postsynaptic spiking becomes concentrated in a narrow time window around the target time (Fig 4A–4C). To understand the role of the active dendrites, we separated the time course of the somatic voltage into the contributions from the subthreshold dendritic potentials and from the NMDA-spikes (Fig 4D and 4E). After successful learning, the averaged NMDA-spikes form a broad ridge around ttarg on top of which the subthreshold dendritic voltages act as ‘scorers’. Before and immediately after ttarg the subthreshold voltage is hyperpolarized to prevent somatic spikes from coming too early or too late. Notice that in an individual run the summed NMDA-spikes can form plateaus that are much shorter than the NMDA-spike duration of 50 ms. In the example shown, this arises because just 5 ms after the initiation of an NMDA-spike in one branch another NMDA-spike ends in a second branch, cutting the somatic plateau down to 5 ms (Fig 4D). By virtue of the backpropagated somatic activity, R-sdSP learns to coordinate the timing of the NMDA-spikes in the different branches, creating a narrow window for a somatic spike around the target time.
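The text only states that the punishment grows with the deviation from ttarg. The linear, clipped form below, the scale of 250 ms, scoring the mean deviation of all somatic spikes, and maximal punishment for a silent neuron are hypothetical choices that merely illustrate such a graded signal.

```python
T_TARG = 250.0  # ms, target spike time (from the text)

def timing_feedback(spike_times, t_targ=T_TARG, scale=250.0):
    """Hypothetical graded feedback in [-1, 0]: 0 for spikes exactly at t_targ,
    increasingly negative with the mean absolute deviation, -1 if silent."""
    if not spike_times:
        return -1.0
    mean_dev = sum(abs(t - t_targ) for t in spike_times) / len(spike_times)
    return -min(mean_dev / scale, 1.0)
```

Because the signal is only delivered at the end of the stimulus, the synapses must rely on their trial-long eligibility to assign credit.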

Fig 4. R-sdSP learns exact somatic spike timing.

A: Somatic spike trains during 20000 trials in a reward-based scenario. B: The distribution of somatic spikes after learning of the target time at 250 ms has a Gaussian half-width of 18 ms. C: Evolution of the width (σ) of the spike-time distribution during training. D,E: Separation of the somatic voltage into a contribution from the NMDA-spikes (red) and the subthreshold dendritic potentials (blue) for a single run (D) and averaged across 20 runs (E). Note that after learning the summed NMDA-spikes can form a narrow depolarization at the target time that is much shorter than the duration of an individual NMDA-spike (arrow in D).

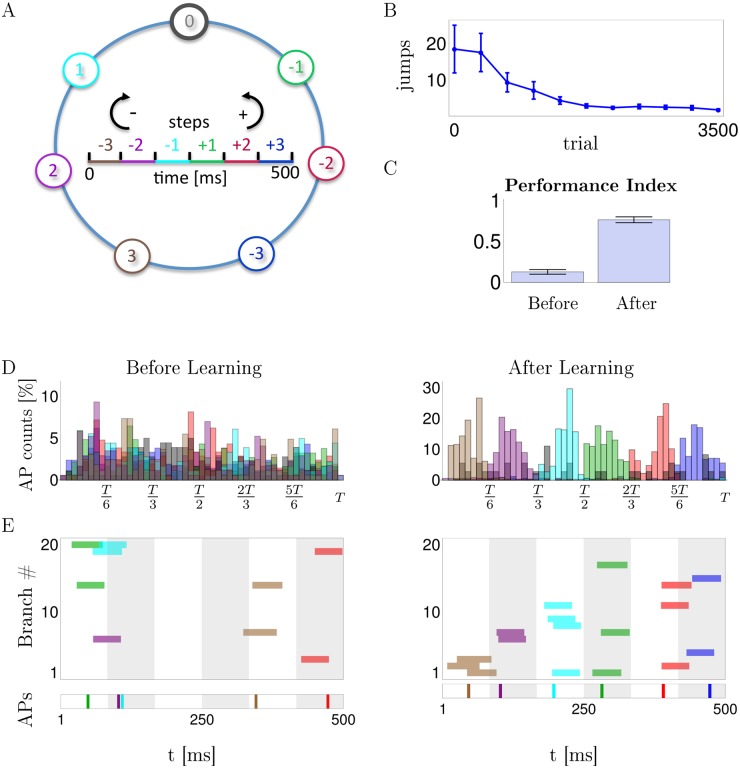

Learning a spike-timing code is also possible if the rewarding / punishing signal is binary and potentially delayed by several stimulus durations. We conceived a spatial navigation task where each of 7 positions on a circle is encoded by a frozen 500 ms spike pattern in 100 afferents projecting to the dendritic branches of the model neuron as before. The task is to jump to position 0 from any of the other 6 positions and, after reaching position 0, to stay there (Fig 5A). Actions consisted of either no jump or jumps of 1, 2 or 3 steps clockwise or counterclockwise. No jump is encoded by the absence of somatic spikes, and a jump of n steps in the clockwise or counterclockwise direction is encoded by the first somatic spike arising in the n’th time bin to the left or right of the center, respectively (Fig 5A, inset). A positive reward signal R = 1 is delivered when the agent, being in a non-target position, directly jumps to the target, or when it is at the target position and stays there; otherwise R = −1. After initially needing on average 20 random actions to reach the target position, the R-sdSP-modulated agents eventually learned to reach the target with a single action and to stay there (Fig 5B and 5C). While the first somatic spike times of our model neuron were initially uniformly distributed across the 500 ms stimulus interval, the neuron eventually learned to respond in the appropriate time bin of 83 ms duration that encoded the correct jump (Fig 5D). During learning, the dendritic branches develop a shared selectivity for the patterns until their first NMDA-spikes become properly aligned in the correct time bin (Fig 5E).
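The action decoding and the binary reward of this task can be sketched as follows. Six bins of 500/6 ≈ 83 ms, three on each side of the 250 ms center, and the reward rule follow the text; mapping clockwise to decreasing position indices and the function names are assumptions.

```python
N_POSITIONS = 7
T_MS = 500.0
BIN_MS = T_MS / 6  # six bins of ~83 ms, three on each side of the center

def decode_jump(first_spike_ms):
    """Map the first somatic spike time to a jump: None (no spike) -> 0;
    n-th bin left of the center -> -n (clockwise), right -> +n (counterclockwise)."""
    if first_spike_ms is None:
        return 0
    center = T_MS / 2
    # min(..., 3) guards against floating-point rounding at the stimulus edges
    n = min(int(abs(first_spike_ms - center) // BIN_MS) + 1, 3)
    return -n if first_spike_ms < center else n

def navigate(position, jump):
    """Apply a jump on the circle and return (new_position, binary reward R)."""
    new_position = (position + jump) % N_POSITIONS
    if position == 0:
        reward = 1 if jump == 0 else -1  # at the target: reward for staying
    else:
        reward = 1 if new_position == 0 else -1  # elsewhere: reward for a direct jump to 0
    return new_position, reward
```

For example, from position 3 the correct action is a first spike in the leftmost bin (a jump of −3), while from position 4 it is a spike in the rightmost bin (+3, wrapping around the circle).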

Fig 5. R-sdSP learns the correct spike-timing in a navigational task with binary and delayed feedback.

A: At each position a fixed spike pattern is presented, and the timing of the first somatic spike determines how many steps in the clockwise (−) or counterclockwise (+) direction are taken. The color code of the time bins indicates the preferred spike timing for directly jumping to target position 0 when being at the correspondingly colored circle position (see text). B: Evolution of the mean number of jumps needed from a randomly chosen circle position until 0 is reached. C: Performance index, defined as the probability of directly jumping from any of the 6 circle positions to the target, and of staying there if already at 0. Before learning this probability is 0.13, after learning it is 0.78. D: Histogram of first somatic spikes at the various positions before and after learning, averaged across patterns and learning runs (color code as in A). E: Timing of the first NMDA-spike in each of the 20 branches (upper panel) and of the first somatic spike (lower panel) when stimulated with the patterns associated with the 6 circle positions (colors encode positions as in A). After learning, NMDA-spikes in 2–4 branches co-align and trigger a somatic spike in the appropriate time bin.

Discussion

We derived a synaptic plasticity rule for synapses on active dendrites that minimizes errors in the supervised and maximizes reward in the reinforcement learning scenario. More precisely, the rule follows the gradient of (a lower bound of) the log-likelihood of reproducing a given spike train for supervised learning, and the gradient of the expected reward for reinforcement learning. The rule specifies the optimal timing between the presynaptic, dendritic and somatic spikes, including the time course of the postsynaptic voltages. We showed that neurons can only exploit the increased representational power of active dendrites when synaptic plasticity is modulated by both the somatic and the dendritic spiking. The suggested somato-dendritic spike-dependent synaptic plasticity (sdSP) learns to respond correctly to synaptic input patterns encoded by either frozen spike times or firing rates, while classical STDP fails. It is remarkable that the same plasticity induction that supports the learning of precise spiking in the supervised scenario also maximizes the expected reward when modulated by an internal and possibly delayed reward signal, irrespective of whether the postsynaptic code is based on spike times or firing rates.

The neuron model for which we derived the gradient rules considers dendritic spikes as saturating square-shaped depolarizations triggered by the crossing of a dendritic voltage threshold. We showed that, in the presence of balanced excitation and inhibition, the dual voltage-glutamate criterion for NMDA-spikes reduces to a pure voltage criterion, because the glutamate condition is always satisfied when the voltage threshold is reached. The resulting dendritic spike scenario also covers dendritic sodium [36] or calcium spikes [37], which differ in their voltage threshold, duration and amplitude. In the supervised learning scenario, the general plasticity rule we derived consists of a somatic error term that measures the difference between the actual spiking and the instantaneous spike rate, a dendritic rate- and spike-term, and a presynaptic spike term. Potentiation is triggered if the presynaptic spike is followed by a postsynaptic spike within roughly 10 ms, and this time window is stretched to roughly 50 ms if an additional dendritic spike occurs between the pre- and the postsynaptic spike. Plasticity, be it potentiation or depression, can also be boosted by mere nonlinear dendritic depolarization without dendritic spikes, linking the rule also to computational models considering nonlinear but continuous dendritic processing [8–11, 24]. In the reinforcement learning scenario, the same plasticity rule is modulated by a global reward signal.

As learning is driven by a somatic error term, the synapses must be able to read out this error by disentangling the backpropagating spike and the somatic voltage (or at least a low-pass filtered version of it, see S2C–S2F Fig). Synapses must also read out the local dendritic spike and potential, and the synapse-specific postsynaptic potential (PSP). The PSP may be inferred from the concentration of local glutamate released by the presynaptic bouton. The somatic and dendritic spikes may be determined from their characteristic voltage upstrokes and sustained depolarizations, and the NMDA-spike can further be detected by a rapid increase in the local calcium concentration. Finally, the (subthreshold) somatic and dendritic depolarizations may be distinguished by co-sensing local ion concentrations. In fact, the synaptically induced dendritic depolarization goes together with an increased local sodium concentration, while the backpropagating somatic voltage does not cause such an ion influx. We assume that synapses have developed a molecular machinery to extract these quantities and to infer approximate estimates of the terms occurring in our plasticity rules.

Our computational framework for active dendrites contributes to the debate on whether plasticity on dendritic branches should depend on the dendritic rather than the somatic spike [38], or whether it subserves synaptic clustering [23, 39] or homeostatic adaptation [40]. In fact, when seen in the light of learning, synaptic plasticity is predicted to depend on all the postsynaptic quantities. Based on the model of dendritic NMDA receptor conductances in an in vivo stimulation scenario, the learning rule provides guidelines for experimental tests. For instance, it is in line with the observed synaptic depression induced by a synaptically generated dendritic spike alone ([41], but see [42]), and with the extended time window for plasticity induction involving NMDA-spikes [21]. It predicts that an NMDA-spike within roughly 50 ms after an excitatory synaptic input always enhances the synaptic modification: while the sign of the modification is determined by the presence or absence of a somatic spike following the synaptic input, an additional synaptically evoked NMDA-spike only amplifies the change and never reverses its sign.

Dendritic structures that have been suggested to form a 2-layer network [8] offer the additional advantage of easily backpropagating information about the output to the synaptic sites 2 layers upstream. From a computational perspective it is interesting to note that, on the one hand, 2-layer networks are universal function approximators [43] while, on the other hand, networks with more than two layers are difficult to train [13]. For the dendritic implementation this suggests limiting the internal nonlinearities to a single layer of active dendritic branches. Because stacking dendritic nonlinearities across multiple layers would cause additional cross-talk, the restriction to a single dendritic nonlinearity may be nature’s solution to the trade-off between gaining representational power and paying the signaling costs required for efficient learning.

Online Methods

Neuron parameters

The postsynaptic potentials are defined by , where the sum extends across all presynaptic spike times of afferent i. The spike reset is defined by κ(t) = ∑ts κ∘(t − ts), where the sum extends across all somatic spike times ts. The two kernels are defined as

with τm = 10 ms and τs = 1.5 ms. Here, Θ(t) = 1 for t ≥ 0 and Θ(t) = 0 for t < 0. The instantaneous rate for generating an NMDA-spike in dendrite d is , and the instantaneous rate of generating a somatic spike is ρs(t) ≡ ρs(us(t)) with

| (4) |

This model of NMDA-spike generation can be derived from the biophysical properties of NMDA receptors in a roughly balanced input scenario, in which the glutamate level required to activate the NMDA receptors is always reached at those voltages that also relieve their magnesium block (see S1A Text). The choice of a saturating rate function for NMDA-spike generation was motivated both by stability considerations and by the fact that dendritic nonlinearities saturate [11].
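The quantities defined above can be sketched in code under stated assumptions. The kernel definitions and Eq (4) are not reproduced in this text, so the forms below are assumptions, not the authors' formulas: a difference-of-exponentials PSP kernel normalized to unit area (with τm = 10 ms and τs = 1.5 ms), an exponential somatic rate ρs(u) = exp(βs(u − θs)) (consistent with the exponential somatic spike rate referred to for Eq (4)), and a sigmoidal NMDA-spike rate that saturates at rD. All function names are hypothetical.

```python
import math

TAU_M = 10.0               # membrane time constant (ms), from the text
TAU_S = 1.5                # synaptic time constant (ms), from the text
BETA_S = BETA_D = 5.0      # rate slopes, from the text
THETA_S, THETA_D = 2.0, 2.4
R_D = 5.0                  # saturation level of the dendritic rate (units assumed)


def eps_kernel(t):
    """ASSUMED PSP kernel: Theta(t) * (exp(-t/tau_m) - exp(-t/tau_s)) / (tau_m - tau_s).
    Returns 0 for t < 0 before evaluating exponentials (avoids overflow)."""
    if t < 0:
        return 0.0
    return (math.exp(-t / TAU_M) - math.exp(-t / TAU_S)) / (TAU_M - TAU_S)


def psp(t, pre_spike_times):
    """PSP_i(t): the kernel summed over all presynaptic spike times of afferent i."""
    return sum(eps_kernel(t - tf) for tf in pre_spike_times)


def rho_s(u):
    """ASSUMED exponential somatic escape rate."""
    return math.exp(BETA_S * (u - THETA_S))


def rho_d(u):
    """ASSUMED saturating (sigmoidal) NMDA-spike rate, bounded above by R_D.
    Written in a numerically stable form for large |u|."""
    x = BETA_D * (u - THETA_D)
    if x >= 0:
        return R_D / (1.0 + math.exp(-x))
    z = math.exp(x)
    return R_D * z / (1.0 + z)
```

The saturating ρD mirrors the stability argument above: however strongly a branch is driven, its NMDA-spike rate cannot exceed rD, whereas the assumed somatic rate grows without bound.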

The neuronal parameters are rD = 5, βD = βs = 5, θs = 2 and θD = 2.4. We considered n = 20 branches and a dendritic attenuation factor α = 0.06. Each of the 100 afferents was connected to a given branch with probability p = 0.5. The dendritic NMDA-spike has amplitude a = 6 and duration Δ = 50 ms. Different NMDA-spikes in the same branch add in time but not in amplitude, yielding a dendritic plateau potential in branch d of the form NMDAd(t) = a if at least one NMDA-spike was triggered within the interval Δ before t, and NMDAd(t) = 0 otherwise. For simplicity we assumed the two parameters α and a to be identical for all branches, but they may vary across branches or even be treated as adaptable dendritic ‘coupling strengths’ that could be learned by analogous gradient rules, as suggested by experimental work [30].
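The plateau rule (amplitude a, duration Δ, NMDA-spikes adding in time but not in amplitude) and the random branch connectivity can be sketched as follows. The discrete-time Bernoulli approximation of the stochastic NMDA-spike generation (trigger probability rate·dt per time step, with the rate assumed to be per ms) and all helper names are illustrative assumptions, not the authors' simulation code.

```python
import random

A_NMDA = 6.0       # plateau amplitude a
DELTA = 50.0       # plateau duration Delta (ms)
N_BRANCHES = 20
N_AFFERENTS = 100
P_CONNECT = 0.5


def nmda_plateau(t, onset_times, a=A_NMDA, delta=DELTA):
    """NMDA_d(t) = a if at least one NMDA-spike was triggered in [t - delta, t], else 0.
    Overlapping NMDA-spikes extend the plateau in time but never add in amplitude."""
    return a if any(t - delta <= ts <= t for ts in onset_times) else 0.0


def random_connectivity(rng, n_afferents=N_AFFERENTS, n_branches=N_BRANCHES, p=P_CONNECT):
    """Hypothetical helper: afferent i contacts branch d independently with probability p.
    Returns a branch-by-afferent boolean matrix (list of lists)."""
    return [[rng.random() < p for _ in range(n_afferents)] for _ in range(n_branches)]


def sample_nmda_onsets(u_d, rate_fn, dt, rng):
    """ASSUMED Bernoulli discretization of the NMDA-spike rate process.
    u_d: dendritic voltages sampled every dt ms; rate_fn: rate per ms as a function of u.
    New onsets are allowed during a plateau; they simply extend it."""
    onsets = []
    for k, u in enumerate(u_d):
        if rng.random() < min(1.0, rate_fn(u) * dt):
            onsets.append(k * dt)
    return onsets
```

For example, onsets at 0 ms and 30 ms give a plateau of height a from 0 to 80 ms, not a plateau of height 2a.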

The learning rule

To obtain an online rule that is identical in the supervised and reinforcement scenarios up to reward modulation, we consider an additional low-pass filtering of the instantaneous synaptic changes. Plasticity is then triggered either when the stimulus ends or when reward is delivered. We introduce the instantaneous synaptic eligibilities for the somato-synaptic and the somato-dendro-synaptic contributions, respectively, by

| (5) |

| (6) |

with S(t) = ∑ts δ(t − ts) representing the somatic spike train and the instantaneous somatic escape rate without contribution of the putative NMDA-spike from branch d at time t,

| (7) |

Here, . Note that a low-pass filtered version of ρs and could be extracted at the synaptic site by using the local ionic concentrations to disentangle the local, synaptically generated voltage and NMDA-spike from the backpropagated somatic voltage (see also Discussion). The factor Dend∗PSPi expresses a modulation of the synaptic signal PSPi by the presence or absence of an NMDA-spike in branch d before t, i.e. within the time interval t − Δ to t. If there is such a spike, the last NMDA-spike initiation time at branch d in the interval [t − Δ, t] is denoted by . We then set

| (8) |

where represents the derivative of with respect to .

The upper line in Eq (8) can be understood as a sampling version of the lower line: let Dd(t) be the sum of delta functions centered at the triggering times of NMDA-spikes in branch d. If NMDA-spikes in the same dendritic branch added up, the upper line would become , and this averages out to the lower line. Since NMDA-spike triggers are rare in our case, the two versions of the upper line are roughly equal. We further approximated the integral in the lower line by ςdi, defined as a low-pass filtered version of the integrand with filtering time constant . It is also possible to define Dend∗PSPi(t) by any convex combination of the two lines in Eq (8), but an equal weighting of the two yielded the best performance in our simulations.
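The statement that the sampling version averages out to the integral is an instance of Campbell's theorem for Poisson processes: for events with rate ρ(t), the expected sum of a function f over the event times equals ∫ρ(t) f(t) dt. The sketch below checks this numerically for a constant rate and an arbitrary test function; the rate, window and f are illustrative assumptions and are not the specific quantities appearing in Eq (8).

```python
import math
import random


def poisson_event_times(rate, t_max, rng):
    """Homogeneous Poisson process on [0, t_max) via exponential inter-event intervals."""
    times, t = [], rng.expovariate(rate)
    while t < t_max:
        times.append(t)
        t += rng.expovariate(rate)
    return times


def sampled_sum(f, rate, t_max, rng):
    """'Sampling version': sum of f over the random event times of one realization."""
    return sum(f(tk) for tk in poisson_event_times(rate, t_max, rng))


def expected_integral(f, rate, t_max, n=10_000):
    """'Averaged version': integral of rate * f over [0, t_max) by the midpoint rule."""
    dt = t_max / n
    return sum(rate * f((k + 0.5) * dt) for k in range(n)) * dt


if __name__ == "__main__":
    rng = random.Random(0)
    f = lambda t: math.exp(-t / 10.0)   # illustrative test function
    rate, t_max = 0.05, 50.0            # illustrative: 0.05 events/ms in a 50 ms window
    trials = 20_000
    mc = sum(sampled_sum(f, rate, t_max, rng) for _ in range(trials)) / trials
    print(mc, expected_integral(f, rate, t_max))
```

Individual realizations fluctuate strongly (most windows contain only a few events), which is why a single-sample estimate is paired with, or replaced by, its averaged counterpart in the rule.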

It is illustrative to deduce from the above formulas the limit in which dendritic spiking disappears and merely a dendritic nonlinearity remains. Recall that in deriving these formulas we allowed NMDA spikes to add up; since NMDA spikes rarely overlap at biological frequencies, this assumption is justified. If NMDA spikes are still allowed to add up (although not to infinity), we may formally scale the NMDA amplitude, the NMDA duration and the NMDA rate function by a factor λ, i.e. replacing a → λa, Δ → λΔ and , and taking the limit λ → 0. The time course of the dendritic spikes in branch d, NMDAd(t), is then replaced by the nonlinearly summed dendritic voltage , and the somato-dendro-synaptic contribution for synapse i on branch d (Eqs (2) and (6), respectively) becomes the 3-factor rule

| (9) |

This rule could be seen as a gradient version of the pair-based rule in [24] and applies to the dendritic nonlinearities considered in [8–10]. An alternative derivation of the rule Eq (9) is to consider the deterministic somatic voltage and derive the learning rule as in [28] for the case of the exponential somatic spike rate ρs(us) defined in Eq (4). The direct gradient calculation leads to the two plasticity components in Eqs (5) and (9), respectively, corresponding to the linear and nonlinear summation of the dendritic potentials .

Coming back to the case with dendritic spikes, the two instantaneous eligibilities for sdSP (Eqs (5) and (6)) are again weighted and low-pass filtered,

| (10) |

with and filtering time constant τE = T/2, T = 500 ms. The supervised learning rule (sdSP) is obtained by clamping the somatic output to the target spike train and updating the weights after each stimulus by the synaptic eligibility trace at that time,

with optimized learning rate η (see below). In reinforcement learning the synaptic weights are updated at the times tRew when a reward signal is applied, i.e. when a stimulus ends (tRew = T in Figs 2–5). For R-sdSP we obtain

The reward signal R depends on the input spike pattern and the somatic spike train S, and R0 is a baseline set to R0 = 1 for all simulations with R-sdSP. Setting R0 to a running average of the reward would speed up learning, but for simplicity we refrained from this optimization (see, however, the implementation of R-STDP in Eq (11), where R0 must even depend on the stimulus). For a derivation of sdSP and R-sdSP as gradients of a target function, see S1C Text and [25].
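The supervised update (clamp the somatic output to the target, low-pass filter the eligibility with τE = T/2, apply the weight change at the end of the stimulus) can be sketched for the somato-synaptic contribution alone. The instantaneous eligibility is assumed here to take the standard escape-rate form (ρs′/ρs)(S − ρs)·PSPi, which for the assumed exponential rate ρs = exp(βs(us − θs)) reduces to βs(S − ρs)·PSPi, in line with the somatic error term described in the Discussion. The dendritic term of Eq (6), the weighting in Eq (10) and the reward factor of R-sdSP are omitted; rates are assumed to be per ms, and the function name is hypothetical.

```python
import math

BETA_S, THETA_S = 5.0, 2.0
T = 500.0          # stimulus duration (ms), from the text
TAU_E = T / 2.0    # eligibility filter time constant, from the text


def sdsp_somatic_update(psp, u_s, target_spikes, dt, eta):
    """Hypothetical sketch of the somato-synaptic part of sdSP for one synapse.

    psp, u_s:      PSP_i(t_k) and somatic voltage u_s(t_k), sampled every dt ms
    target_spikes: 0/1 per time step (the clamped target spike train S)
    Returns the weight change eta * E(T) at the end of the stimulus.
    """
    trace = 0.0
    for p, u, s in zip(psp, u_s, target_spikes):
        rho = math.exp(BETA_S * (u - THETA_S))   # assumed exponential escape rate
        inst = BETA_S * (s - rho * dt) * p       # eligibility integrated over one step
        trace += (-trace * dt + inst) / TAU_E    # Euler step of the low-pass filter
    return eta * trace
```

A target spike at a time of low predicted rate yields a positive change, while predicted firing without a target spike yields a (small) negative change, which is the sign structure of the somatic error term.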

Simulation details

For all tasks and learning rules we optimized the learning rate η such that the performance for the optimized value η = η∘ is better than for the adjacent values η = 1.5 η∘ and η = η∘/1.5. In all the simulations involving R-sdSP we used the binary reward signal R = ±1, except for learning the very precise spike timing in Fig 4 that required a graded reward. There, R = 1 − ∑tsom g(tsom − ttarg) with ttarg = 250 ms, , and the sum extending across all somatic spike times tsom (setting tsom = 0 when no somatic spike was emitted).
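The learning-rate criterion (η∘ performs better than both 1.5 η∘ and η∘/1.5) amounts to finding a local optimum on a geometric grid with ratio 1.5. A minimal sketch, assuming a hypothetical `performance(eta)` callback that runs a full simulation and returns a score to be maximized; the starting value and the step budget are also assumptions.

```python
def optimize_learning_rate(performance, eta0, ratio=1.5, max_steps=50):
    """Hill-climb on the grid eta0 * ratio**k (k integer) until the current eta
    scores strictly better than both neighbours eta * ratio and eta / ratio."""
    cache = {}

    def score(k):
        if k not in cache:                 # each evaluation may be an expensive simulation
            cache[k] = performance(eta0 * ratio ** k)
        return cache[k]

    k = 0
    for _ in range(max_steps):
        up, here, down = score(k + 1), score(k), score(k - 1)
        if here > up and here > down:
            return eta0 * ratio ** k       # better than 1.5 * eta and eta / 1.5
        k = k + 1 if up >= down else k - 1 # move towards the better neighbour
    raise RuntimeError("no local optimum found within max_steps")
```

Indexing the grid by the integer exponent k (rather than by the float η) lets the cache reuse earlier evaluations exactly, since repeated multiplication and division by 1.5 would not reproduce identical floats.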

The R-STDP plasticity rule was implemented in its best performing version found in [33]. More precisely, Frémaux et al. defined the eligibility

with being the presynaptic spike train in afferent i. They set A− = 0 and A+ = 1, and τ+ matched the membrane time constant τm = 10 ms. In our case the postsynaptic signal post(s) is either the somatic spike train S(t) or the local dendritic spike train Dd(t) in branch d. As pointed out in [33], the constant baseline reward R0 used in gradient rules must be replaced by a running mean across pattern presentations that depends on the identity of the input pattern x, . The history length constant is set to τp = 5. The weight update after each stimulus presentation is

| (11) |

where is a low-pass version of the eligibility with time constant τE (see Eq (10)).
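The R-STDP variant described above can be sketched under stated assumptions. With A− = 0 only pre-before-post pairings contribute, each weighted by exp(−Δt/τ+); the eligibility is low-pass filtered with τE and multiplied by the reward minus a per-pattern running mean. The exact running-mean formula is not reproduced in this text, so the update R̄x ← R̄x + (R − R̄x)/τp (computed after taking R − R̄x) is an assumption, as are all class and function names.

```python
import math
from collections import defaultdict

TAU_PLUS = 10.0    # STDP time constant tau_+ (ms)
TAU_E = 250.0      # eligibility filter time constant tau_E = T/2 (ms)
TAU_P = 5.0        # history length of the reward mean (pattern presentations)
A_PLUS = 1.0
A_MINUS = 0.0      # A_- = 0: post-before-pre pairs are ignored (term omitted below)


def rstdp_eligibility(pre_spikes, post_spikes, t_end):
    """Low-pass filtered eligibility at t_end, solving tau_E dE/dt = -E + e(t) exactly
    for delta-shaped post events. Each post spike adds A_+ * sum over earlier pre
    spikes of exp(-(t_post - t_pre)/tau_+). post_spikes may be S(t) or D_d(t) times."""
    e_bar = 0.0
    for t_post in post_spikes:
        if t_post > t_end:
            continue
        pre_trace = sum(math.exp(-(t_post - t_pre) / TAU_PLUS)
                        for t_pre in pre_spikes if t_pre < t_post)
        e_bar += A_PLUS * pre_trace * math.exp(-(t_end - t_post) / TAU_E) / TAU_E
    return e_bar


class RewardBaseline:
    """ASSUMED per-pattern running mean of the reward, R_bar_x, with history tau_p."""

    def __init__(self, tau_p=TAU_P):
        self.tau_p = tau_p
        self.mean = defaultdict(float)     # R_bar_x starts at 0 for every pattern x

    def advantage(self, pattern_id, reward):
        """Return R - R_bar_x, then update R_bar_x <- R_bar_x + (R - R_bar_x)/tau_p."""
        adv = reward - self.mean[pattern_id]
        self.mean[pattern_id] += adv / self.tau_p
        return adv


def rstdp_update(eta, baseline, pattern_id, reward, pre_spikes, post_spikes, t_end):
    """Weight change eta * (R - R_bar_x) * E_bar(t_end) after one stimulus presentation."""
    adv = baseline.advantage(pattern_id, reward)
    return eta * adv * rstdp_eligibility(pre_spikes, post_spikes, t_end)
```

Keeping a separate baseline per pattern x reflects the point made in [33]: with a single global baseline, reward-modulated STDP would drift whenever patterns differ in their average reward.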

Supporting Information

A: From the biophysics to a stochastic NMDA-spike model. B: Additional analysis and simulation results. C: Mathematical derivations. D: Appendix.

(PDF)

A: Top: AMPA (full line), NMDA (dashed) and GABAA (dotted) currents, at the peak conductance level, as a function of u defined in Eqns S1 and S2 of the Supporting Information (α = β = 0.05; excitatory currents with positive sign). Bottom: Voltage traces u(t) for 6 different synaptic drives g∘ = 0, 25, 50, 75, 100, 125 nS (curves from light to dark, Eq S3), with NMDA-spikes elicited by the 2 strongest g∘. B: I(u) (‘I–V curves’) defined by the right-hand side of Eq S4 for the 6 synaptic drives g∘ used in A. C: Zero crossings I(u) = 0 of the family of curves parametrized by g∘, 6 of which are shown in B, for different inhibitory-excitatory balancing ratios β = 0.05 (red), β = 0.10 (blue) and β = 0.15 (green); AMPA/NMDA ratio: α = 0.05. D: The 6 voltage traces u(t) from A plotted against the glutamate time course at the NMDA receptors, g∘ εNMDA(t), overlaid on the red zero-crossing curve shown in C. E: Whenever the Gaussian noise (red cloud) added to the mean (g∘, u) on the red line (center of cloud) drops into the green area, an NMDA-spike is elicited. F: The probability of eliciting an NMDA-spike at a given voltage (P(spike|u), top) is almost the same for the three different balancing ratios β, which vary by a factor of 3; it is therefore roughly proportional to the instantaneous spike rate φ(u) ∝ P(spike|u), which is a function of u alone. Yet, because u saturates as a function of g∘ (C), plotting P(spike|u) versus g∘ may still give deviating curves (bottom).

(EPS)

A, B: When Gaussian jitter is introduced into the spike timings of the 4 frozen 6 Hz Poisson spike patterns (A), their classification into a spike / no-spike code degrades only gradually (B). Standard deviation of spike jitter: 10 ms (blue), 20 ms (red), 50 ms (green) and 100 ms (brown). C: The classification is still learnable by R-sdSP when the somatic voltage us(t) is low-pass filtered with different time constants: 5 ms (blue), 10 ms (red), 20 ms (green) and 40 ms (brown). D: The performance barely changes when only the somato-dendro-synaptic contribution of the rule is considered (Eq (6) in Online Methods, blue dashed). In contrast, when learning is based only on the somato-synaptic contribution (Eq (5) in Online Methods), the performance degrades (magenta). Inset: performance over the first 1000 presentations. E, F: Learning curves for R-STDP when the time constant τ+ matches the NMDA-spike duration Δ = 50 ms. E: Even so, R-STDP cannot learn a binary classification of 4 randomized spatio-temporal spike patterns, whether applied to the presynaptic–somatic spikes (solid black; dashed: performance when the NMDA-spikes are suppressed) or to the presynaptic–dendritic spikes (gray). F: Similarly, R-STDP is not able to learn the XOR problem (curve legend as in E). Inset: average performance after each of the 20 runs.

(EPS)

Acknowledgments

We thank J.P. Pfister for discussions and feedback on an earlier version of the manuscript.

Data Availability

All relevant data are within the paper and its Supporting Information files.

Funding Statement

The work was supported by the Swiss National Science Foundation (personal grant of WS, 31003A_133094 and 310030L_156863) and the Human Brain Project funded by the European Research Council. The funders had no role in study design, data collection and analysis, decision to publish, or preparation of the manuscript.

References

- 1. Schiller J, Major G, Koester H.J, and Schiller Y. NMDA spikes in basal dendrites of cortical pyramidal neurons. Nature, 404(6775):285–289, 2000. 10.1038/35005094

- 2. Polsky A, Mel B.W, and Schiller J. Computational subunits in thin dendrites of pyramidal cells. Nat. Neurosci., 7(6):621–627, 2004. 10.1038/nn1253

- 3. Nevian T, Larkum M.E, Polsky A, and Schiller J. Properties of basal dendrites of layer 5 pyramidal neurons: a direct patch-clamp recording study. Nat. Neurosci., 10:206–214, February 2007. 10.1038/nn1826

- 4. Larkum M.E, Nevian T, Sandler M, Polsky A, and Schiller J. Synaptic integration in tuft dendrites of layer 5 pyramidal neurons: a new unifying principle. Science, 325(5941):756–760, 2009. 10.1126/science.1171958

- 5. Branco T, Clark B.A, and Hausser M. Dendritic discrimination of temporal input sequences in cortical neurons. Science, 329(5999):1671–1675, September 2010. 10.1126/science.1189664

- 6. Jia H, Rochefort N.L, Chen X, and Konnerth A. Dendritic organization of sensory input to cortical neurons in vivo. Nature, 464(7293):1307–1312, 2010. 10.1038/nature08947

- 7. Lavzin M, Rapoport S, Polsky A, Garion L, and Schiller J. Nonlinear dendritic processing determines angular tuning of barrel cortex neurons in vivo. Nature, 2012. 10.1038/nature11451

- 8. Poirazi P, Brannon T, and Mel B.W. Pyramidal neuron as two-layer neural network. Neuron, 37:989–999, 2003. 10.1016/S0896-6273(03)00149-1

- 9. Caze R.D, Humphries M, and Gutkin B. Passive dendrites enable single neurons to compute linearly non-separable functions. PLoS Comput. Biol., 9(2):e1002867, 2013. 10.1371/journal.pcbi.1002867

- 10. Breuer D, Timme M, and Memmesheimer R.M. Statistical Physics of Neural Systems with Nonadditive Dendritic Coupling. Physical Review X, 4(011053):1–23, 2014.

- 11. Tran-Van-Minh A, Caze R.D, Abrahamsson T, Cathala L, Gutkin B.S, and DiGregorio D.A. Contribution of sublinear and supralinear dendritic integration to neuronal computations. Front Cell Neurosci, 9:67, 2015. 10.3389/fncel.2015.00067

- 12. Rumelhart D.E, Hinton G.E, and Williams R.J. Learning representations by back-propagating errors. Nature, 323:533–536, 1986. 10.1038/323533a0

- 13. Hinton G.E and Salakhutdinov R.R. Reducing the dimensionality of data with neural networks. Science, 313(5786):504–507, 2006. 10.1126/science.1127647

- 14. Markram H, Lübke J, Frotscher M, and Sakmann B. Regulation of synaptic efficacy by coincidence of postsynaptic APs and EPSPs. Science, 275:213–215, 1997. 10.1126/science.275.5297.213

- 15. Bi G.Q and Poo M.M. Synaptic modifications in cultured hippocampal neurons: dependence on spike timing, synaptic strength, and postsynaptic cell type. J. Neurosci., 18(24):10464–10472, December 1998.

- 16. Sjostrom P.J, Turrigiano G.G, and Nelson S.B. Rate, timing, and cooperativity jointly determine cortical synaptic plasticity. Neuron, 32(6):1149–1164, 2001. 10.1016/S0896-6273(01)00542-6

- 17. Froemke R.C, Poo M.M, and Dan Y. Spike-timing-dependent synaptic plasticity depends on dendritic location. Nature, 434(7030):221–225, 2005. 10.1038/nature03366

- 18. Letzkus J.J, Kampa B.M, and Stuart G.J. Learning rules for spike timing-dependent plasticity depend on dendritic synapse location. J. Neurosci., 26(41):10420–10429, 2006. 10.1523/JNEUROSCI.2650-06.2006

- 19. Sjostrom P.J and Hausser M. A cooperative switch determines the sign of synaptic plasticity in distal dendrites of neocortical pyramidal neurons. Neuron, 51(2):227–238, 2006. 10.1016/j.neuron.2006.06.017

- 20. Golding N.L, Staff N.P, and Spruston N. Dendritic spikes as a mechanism for cooperative long-term potentiation. Nature, 418(6895):326–331, 2002. 10.1038/nature00854

- 21. Gordon U, Polsky A, and Schiller J. Plasticity compartments in basal dendrites of neocortical pyramidal neurons. J. Neurosci., 26(49):12717–12726, 2006. 10.1523/JNEUROSCI.3502-06.2006

- 22. Remy J and Spruston N. Dendritic spikes induce single-burst long-term potentiation. Proc. Natl. Acad. Sci. U.S.A., 104(43):17192–17197, 2007. 10.1073/pnas.0707919104

- 23. Poirazi P and Mel B.W. Impact of active dendrites and structural plasticity on the memory capacity of neural tissue. Neuron, 29(3):779–796, 2001. 10.1016/S0896-6273(01)00252-5

- 24. Legenstein R and Maass W. Branch-specific plasticity enables self-organization of nonlinear computation in single neurons. J. Neurosci., 31(30):10787–10802, 2011. 10.1523/JNEUROSCI.5684-10.2011

- 25. Schiess M.E, Urbanczik R, and Senn W. Gradient estimation in dendritic reinforcement learning. The Journal of Mathematical Neuroscience, 2(2), 2012. 10.1186/2190-8567-2-2

- 26. Major G, Polsky A, Denk W, Schiller J, and Tank D.W. Spatiotemporally graded NMDA spike/plateau potentials in basal dendrites of neocortical pyramidal neurons. J. Neurophysiol., 99:2584–2601, May 2008. 10.1152/jn.00011.2008

- 27. Gerstner W, Kempter R, van Hemmen J.L, and Wagner H. A neuronal learning rule for sub-millisecond temporal coding. Nature, 383(6595):76–81, September 1996. 10.1038/383076a0

- 28. Pfister J, Toyoizumi T, Barber D, and Gerstner W. Optimal spike-timing-dependent plasticity for precise action potential firing in supervised learning. Neural Computation, 18:1318–1348, 2006. 10.1162/neco.2006.18.6.1318

- 29. Brea J, Senn W, and Pfister J.P. Matching recall and storage in sequence learning with spiking neural networks. J. Neurosci., 33(23):9565–9575, June 2013. 10.1523/JNEUROSCI.4098-12.2013

- 30. Losonczy A, Makara J.K, and Magee J.C. Compartmentalized dendritic plasticity and input feature storage in neurons. Nature, 452(7186):436–441, March 2008. 10.1038/nature06725

- 31. Memmesheimer R.M, Rubin R, Olveczky B.P, and Sompolinsky H. Learning precisely timed spikes. Neuron, 82(4):925–938, May 2014. 10.1016/j.neuron.2014.03.026

- 32. Izhikevich E. Solving the distal reward problem through linkage of STDP and dopamine signaling. Cerebral Cortex, 17:2443–2452, 2007. 10.1093/cercor/bhl152

- 33. Frémaux N, Sprekeler H, and Gerstner W. Functional requirements for reward-modulated spike-timing-dependent plasticity. J. Neurosci., 30:13326–13337, October 2010. 10.1523/JNEUROSCI.6249-09.2010

- 34. Gütig R and Sompolinsky H. The tempotron: a neuron that learns spike timing-based decisions. Nature Neurosci., 9:420–428, 2006. 10.1038/nn1643

- 35. Urbanczik R and Senn W. A gradient learning rule for the tempotron. Neural Comput., 21:340–352, 2009. 10.1162/neco.2008.09-07-605

- 36. Golding N.L and Spruston N. Dendritic sodium spikes are variable triggers of axonal action potentials in hippocampal CA1 pyramidal neurons. Neuron, 21(5):1189–1200, November 1998. 10.1016/S0896-6273(00)80635-2

- 37. Cichon J and Gan W.B. Branch-specific dendritic Ca(2+) spikes cause persistent synaptic plasticity. Nature, 520(7546):180–185, April 2015. 10.1038/nature14251

- 38. Kampa B.M, Letzkus J.J, and Stuart G.J. Dendritic mechanisms controlling spike-timing-dependent synaptic plasticity. Trends Neurosci., 30(9):456–463, September 2007. 10.1016/j.tins.2007.06.010

- 39. Larkum M.E and Nevian T. Synaptic clustering by dendritic signalling mechanisms. Curr. Opin. Neurobiol., 18(3):321–331, June 2008. 10.1016/j.conb.2008.08.013

- 40. Goldberg J, Holthoff K, and Yuste R. A problem with Hebb and local spikes. Trends Neurosci., 25(9):433–435, 2002. 10.1016/S0166-2236(02)02200-2

- 41. Holthoff K, Kovalchuk Y, Yuste R, and Konnerth A. Single-shock LTD by local dendritic spikes in pyramidal neurons of mouse visual cortex. J. Physiol. (Lond.), 560(Pt 1):27–36, 2004. 10.1113/jphysiol.2004.072678

- 42. Gambino F, Pages S, Kehayas V, Baptista D, Tatti R, Carleton A, and Holtmaat A. Sensory-evoked LTP driven by dendritic plateau potentials in vivo. Nature, 515(7525):116–119, November 2014. 10.1038/nature13664

- 43. Hornik K, Stinchcombe M, and White H. Multilayer feedforward networks are universal approximators. Neural Networks, 2:359–366, 1989. 10.1016/0893-6080(89)90020-8