Abstract

Learning new words is difficult. In any naming situation, there are multiple possible interpretations of a novel word. Recent approaches suggest that learners may solve this problem by tracking co-occurrence statistics between words and referents across multiple naming situations (e.g. Yu & Smith, 2007), overcoming the ambiguity in any one situation. Yet, there remains debate around the underlying mechanisms. We conducted two experiments in which learners acquired eight word-object mappings using cross-situational statistics while eye-movements were tracked. These addressed four unresolved questions regarding the learning mechanism. First, eye-movements during learning showed evidence that listeners maintain multiple hypotheses for a given word and bring them all to bear in the moment of naming. Second, trial-by-trial analyses of accuracy suggested that listeners accumulate continuous statistics about word/object mappings, over and above prior hypotheses they have about a word. Third, consistent, probabilistic context can impede learning, as false associations between words and highly co-occurring referents are formed. Finally, a number of factors not previously considered in prior analysis impact observational word learning: knowledge of the foils, spatial consistency of the target object, and the number of trials between presentations of the same word. This evidence suggests that observational word learning may derive from a combination of gradual statistical or associative learning mechanisms and more rapid real-time processes such as competition, mutual exclusivity and even inference or hypothesis testing.

Keywords: Observational learning, cross-situational learning, associative learning, word learning, statistical learning, eye movements

1.1 Observational learning and referential ambiguity

Early in language acquisition, children are often assumed to learn the mapping between words and objects largely from observation (Gleitman, 1990) without reliable feedback. However, a fundamental problem for observational learning is referential ambiguity (Quine, 1960): in any naming event, there is a vast array of possible interpretations for a novel word. Consequently, learners may require strategies or biases to cope with this ambiguity (Golinkoff, Hirsh-Pasek, Bailey, & Wenger, 1992; Markman, 1990). Recently, Yu and Smith (2007; see also Siskind, 1996) argued that the problem of referential ambiguity may in part be an artificial consequence of restricting the analysis of word learning to one encounter with a word. Across multiple situations, there may be sufficient statistical information to support learning. For example, many words (e.g., object names) are more likely to co-occur with their referents than with other objects.

Yu and Smith (2007) tested this in adults (and later in infants, Smith & Yu, 2008): On each trial, participants saw a number of novel objects and heard novel names for each of them, creating considerable ambiguity. Across multiple trials, a word and its referent always co-occurred while its co-occurrence with other objects was lower. After a short training, participants showed above-chance accuracy for selecting the words’ referents, suggesting statistics were sufficient to support learning. This raises the possibility that learners have powerful mechanisms for inferring the words’ meanings across multiple situations, even if any given situation is ambiguous.

1.2 How do people learn words in the cross-situational paradigm?

There has since been a large number of experiments examining how mostly adults learn words in observational paradigms (Medina, Snedeker, Trueswell, & Gleitman, 2011; Trueswell et al., 2013; Vouloumanos, 2008; Yurovsky, Yu, & Smith, 2013). This has led to a debate over the mechanism underlying such learning.

Originally, Yu and Smith (2007, 2012) described cross-situational learning as a process of tracking co-occurrence statistics between words and objects across many situations. This is a form of statistical or associative learning in which the word-object pairs with the highest co-occurrence are the correct mapping. However, more recent accounts suggest people could harness cross-situational information using propositional logic (Medina et al., 2011; Trueswell et al., 2013): The most prominent theory of this sort is “propose-but-verify”, in which learners form a single explicit hypothesis after encountering a novel word, which is carried forward unless disconfirmed by later encounters.

Others have proposed hybrid accounts: For example, there are memory-based accounts in which such inferences are made over stored episodes of situations in long-term memory (Dautriche & Chemla, 2014). Bayesian accounts take a hypothesis-testing approach, but evaluate multiple probabilistic hypotheses simultaneously to find the most likely mapping given the data (Frank, Goodman, & Tenenbaum, 2009). Finally, McMurray, Horst and Samuelson (2012) propose that gradual associative learning may be buttressed with real-time decision making to account for both cross-situational learning and other developmental phenomena. These real-time processes may allow the system to engage in more inferential processes in the moment (e.g., mutual exclusivity), while long-term statistics are tracked via associations.

These theories are still developing with newer iterations of purely statistical accounts (Yu & Smith, 2012), propose-but-verify (Koehne, Trueswell, & Gleitman, 2014) and the dynamic associative account (McMurray, Zhao, Kucker, & Samuelson, 2013). While these theories may exhibit stark differences in their core commitments (e.g., whether learning is propositional or associative), they appear flexible in how these commitments get implemented. Consequently, it may be premature to experimentally disentangle them.

However, there are crucial open questions about the basic properties of observational learning, which may constrain how these theories are developed. Thus, we identified four such questions that have played (or may play) a crucial role in these debates and critically evaluated them across two experiments. These questions include the issues of 1) whether participants maintain multiple hypotheses for a given word¹; 2) whether information is gradually accumulated; 3) the role of context, and 4) other factors that may shape learning.

1.2.1 Do learners maintain multiple hypotheses about the meaning of a word?

The first question is how many hypotheses learners maintain for a given word. For example, in a dinner table event, when fork is heard for the first time, do learners form a single hypothesis for fork (positing that it refers to either the fork or the spoon), or do they note that this word co-occurred with both objects (but not with a car or boat)? In an associative account, learners track the co-occurrence of multiple objects with a word (e.g. Yu & Smith, 2007), relying on the accumulation of data to resolve any ambiguity. At the dinner table, for example, the learner will eventually hear the word fork when no spoon is present, pushing the word's co-occurrence with the fork above its co-occurrence with the spoon. Consequently, learners must maintain multiple hypotheses with different degrees of strength. In contrast, early versions of propose-but-verify suggested learners posit a single hypothesis about a word, which can be updated on future encounters. However, more recent propositional accounts also admit multiple hypotheses: For example, learners may recall previously considered hypotheses in the face of memory failure or disconfirming evidence (e.g. Koehne, Trueswell, & Gleitman, 2014).

As an empirical issue, whether learners track one or many hypotheses remains unresolved. This is largely because most studies address this issue indirectly using trial-by-trial autocorrelation analyses. Such analyses infer what a learner may have learned about a word from previous trials’ accuracy, and measure how it predicts performance on subsequent encounters (Trueswell et al., 2013): In propositional accounts, if learners previously selected the correct object, they must have arrived at the right hypothesis and should continue to select the correct object on present trials. However, if they were incorrect on a previous trial, they likely had the wrong hypothesis, and should now be at chance. In contrast, in statistical accounts, even on an incorrect trial, they accumulate more “data” and could show a benefit on subsequent trials. Autocorrelation analyses conducted by Trueswell et al. (2013) supported a single-hypothesis account, and even an analysis of participants’ eye-movements (a potentially more sensitive measure) showed little evidence for any learning after an incorrect trial.

Dautriche and Chemla (2014) pointed out that prior incorrect trials may function differently depending on the information on the current trial: If the prior incorrect selection is present, people may continue to select it and be below chance. Trueswell et al.'s (2013) choice of four foils made it likely that prior selections were repeated, leading them to potentially underestimate what people were learning from incorrect trials. Dautriche and Chemla (2014) decreased the number of foils, and found that people were now above chance in selecting the correct referent even after an incorrect previous encounter.

This offers tentative evidence that learners track multiple hypotheses for a given word. However, these experiments have several shortcomings: First, as Dautriche and Chemla (2014) point out, indirectly inferring what people might know on a previous trial from their overt response(s) oversimplifies the complex mapping between prior and present trials. Moreover, these analyses assume that prior accuracy is a robust (and uniform) index of knowledge. However, early in training a correct response may be due to chance, and response accuracy is therefore confounded by a trial’s position in the learning curve. Thus, the trial-by-trial analyses of Trueswell et al. (2013) and Dautriche and Chemla (2014) may not be sufficient to evaluate the claim of multiple hypotheses.

Moreover, this approach fails to address a second important question. If listeners are retaining multiple hypotheses, what do they do with them? Prior studies focus on whether multiple hypotheses are retained, but they do not address whether these hypotheses are also simultaneously activated in the moment when a novel word is processed.

What is needed is a more direct measure that addresses what listeners bring to bear in the moment: On a single trial, are multiple objects under active consideration as referents for a word? The present study achieves this by examining eye-movements to potential referents relative to the participant’s response. If the participant clicks on the correct referent but simultaneously fixates a second referent (more than some baseline), this offers strong evidence that multiple hypotheses are not only tracked, but influence behavior simultaneously.

1.2.2 What do learners carry forward from prior encounters with a word?

A related issue is whether listeners gradually accumulate information across trials. For example, after a third and fourth encounter with fork, do listeners have stronger associations or more confidence than after the first (even if all encounters favored the correct interpretation)? If so, learners may even accumulate evidence from ambiguous encounters (e.g., the fork/spoon example).

Evidence for this comes from Yurovsky, Fricker, Yu and Smith (2014), in which adults first learned a small set of words. Then, words that were still at chance received additional training in a second phase along with new words. This initial exposure, even though it seemed to yield no measurable learning for the original words, improved learning in the second phase for all words. This suggests that learners must have acquired some partial knowledge about the original words during the first phase despite at-chance performance.

This contrasts with Trueswell et al.'s (2013) autocorrelation analyses, which showed that if learners were incorrect on a prior encounter with a word, they were at chance on subsequent trials (though see Dautriche and Chemla, 2014). However, this style of analysis focuses largely on a single type of information that could be retained: the prior responses to a word (and, by inference, hypotheses).

The converse of this question, whether listeners gradually accumulate statistics about words and objects, has not been properly examined for two reasons. First, the training paradigms themselves were very short (e.g., five repetitions of each word in Trueswell et al., 2013). Statistical and associative accounts are most likely to be accurate when the contribution of any given trial is small (to avoid over-committing to an erroneous prediction). Consequently, under these theories, any single trial’s contribution could be small, and a few repetitions may not be sufficient to see these effects.

Second, many of these studies did not include analyses that tested for gradual learning. There is a statistical confound with most versions of the autocorrelation analyses: Trials in which participants were incorrect most likely came from early portions of training, while correct trials were most likely later in training. Thus, a comparison of accuracy as a function of last-encounter performance would be heavily confounded with position in the learning curve. Again, this makes it very difficult to see any effects of gradual learning.

The solution is to simultaneously examine the effect of last-encounter performance and the effect of the number of prior exposures to the word (the contribution of gradual learning) (cf. Wasserman, Brooks, & McMurray, 2015). This latter factor serves as a covariate to account for where the participant is in the learning curve, and simultaneously offers a statistical test of gradual learning. The only example of such an analysis we are aware of is Experiment 3 of Trueswell et al. (2013), which found no effect of the number of encounters with a word (though there was an interaction with last-encounter performance). But, as noted earlier, five trials may not be sufficient to observe gradual learning effects. By coupling a positive statistical test of gradual learning with a longer training period, we may find clearer evidence for the influence of the gradual accumulation of statistics on single-trial accuracy.

Our goal here was to evaluate whether the amount of exposure predicts accuracy, over and above prior-trial performance (while simultaneously accounting for the statistical confound in the influence of prior-trial accuracy). Thus, we replicated Trueswell et al.'s (2013) analyses, adding the number of trials as a factor.
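As a rough illustration of the two predictors described above, the following Python sketch annotates each trial with the number of prior encounters with its word and with accuracy on the last encounter. The trial-log format (the keys `word` and `correct`) and the function name are hypothetical and chosen only for illustration; this is not the analysis code used in the study, and the resulting columns would still need to be entered into an appropriate statistical model.

```python
from collections import defaultdict


def add_history_predictors(trials):
    """Annotate each trial with its word's history.

    `trials` is a chronologically ordered list of dicts with the
    hypothetical keys "word" (str) and "correct" (bool).
    """
    n_seen = defaultdict(int)  # encounters with each word so far
    last_correct = {}          # accuracy on the most recent encounter
    annotated = []
    for trial in trials:
        word = trial["word"]
        annotated.append({
            **trial,
            # Covariate for position in the learning curve.
            "n_prior_encounters": n_seen[word],
            # None on the first encounter: there is no prior response.
            "last_encounter_correct": last_correct.get(word),
        })
        n_seen[word] += 1
        last_correct[word] = trial["correct"]
    return annotated


# Toy log, invented for illustration only.
log = [
    {"word": "mefa", "correct": False},
    {"word": "goba", "correct": True},
    {"word": "mefa", "correct": True},
    {"word": "mefa", "correct": True},
]
for row in add_history_predictors(log):
    print(row)
```

Keeping both predictors on every row makes it possible to estimate the effect of last-encounter accuracy while holding the number of exposures constant, which is the logic of the covariate approach described above.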

1.2.3 How does context influence observational word learning?

An additional factor of recent interest is context. Many words appear in consistent contexts (the kitchen, a farm, etc.), and while context does not tell the listener what object a word refers to, it can have complex effects on learning: For example, if the learner knows that fork is a kitchen word, he or she can rule out referents that do not fall into that category (e.g., a dog), even if they do not precisely know which of the remaining ones is a fork. Under a statistical account, however, context may also impede learning by raising the likelihood of spurious correlations. The fact that forks and spoons frequently appear together, for instance, means that the word fork may be linked to both objects.

Dautriche and Chemla (2014) manipulated contextual consistency by presenting a set of word-referent pairs as a consistent context in the first block of trials. For example, trials 1-4 may have included the objects dog, cat, rabbit, cow, with each one the target on a trial. This established a set (all four are animals) that then served as context. This contextual manipulation improved learning on subsequent blocks when words were presented “out of context” (with other competitors). Later experiments made the grouping of the objects into contexts completely arbitrary, with similar results. This suggests context serves as a memory cue for ruling out competing hypotheses in the moment. Moreover, this appears to challenge associative or statistical accounts.

However, Dautriche and Chemla’s (2014) context manipulation creates potential benefits for context without the statistical costs. Context only occurred on one block of trials with the same four competitors. Subsequent presentations of a word had random foils, meaning that context was very salient and did not create spurious correlations that could have hurt learning. It is unclear how behavior would be affected if contextual consistency was manipulated across the whole experiment in a more natural, probabilistic manner. Thus, it is possible that context serves as a source of information that can be used in real time to eliminate competitors, even as it simultaneously exerts a cost on learning. Our third goal therefore was to investigate the potential associative costs that come with context. This was done by comparing learning when highly co-occurring competing objects were present throughout learning with learning when they were not.

1.2.4 Do other factors from prior encounters with a word play a role in performance?

Finally, the autocorrelation analysis used until now focused on factors that bear directly on propositional inference and/or associative learning: whether the learner knew the word on the previous trial and the number of encounters. However, there may be considerably more information on a given encounter that learners could use and that could reveal aspects of the mechanism. For example, in the kitchen example above, the spatial arrangement of the fork and spoon could match the current encounter or differ. Would such information matter for learning?

This was examined in an animal learning study by Wasserman et al. (2015). They taught pigeons to map 16 different categories onto 16 unique responses (a problem analogous to word learning) using an operant learning paradigm. Their detailed autocorrelation analyses showed both the same effect of last-trial accuracy as Trueswell et al. (2013) and unambiguous evidence for gradual learning. However, they also included a number of other factors not considered by prior work in their autocorrelation analyses: the spatial location of the target response (and foils) on the last encounter, how many trials elapsed between repetitions of a category, and the learner’s knowledge of the foils. All of these played a role in pigeon learning.

Given the similarities they report between pigeons’ learning and human word learning (as well as the obvious differences), our fourth goal here is to investigate these issues by examining a range of other factors in our autocorrelation analysis. Such findings may unify cross-situational learning with other forms of learning and memory. For example, well-established effects of spacing of training trials (e.g. Ebbinghaus, 1992; Pavlik Jr & Anderson, 2005; Smith, Smith, & Blythe, 2011) predict that the number of trials between successive presentations of the target impacts learning. Similarly, recent studies indicate that children’s word representations are initially bound in space (e.g. Samuelson, Smith, Perry, & Spencer, 2011), suggesting the consistency of the spatial arrangement may matter. Identifying such factors at work in cross-situational learning may thus show how this paradigm maps onto broader ideas in learning and development.

1.3 The present study

The present study addresses these four questions across two experiments. Experiment 1 primarily asks whether people maintain multiple hypotheses and activate them in a given moment during learning (Question 1). Learners were exposed to a set of word-object mappings in which each word had two additional objects that served as highly co-occurring foils (though they were the named target on other trials). We used a variant of the visual world paradigm (VWP) (Tanenhaus, Spivey-Knowlton, Eberhard, & Sedivy, 1995) during cross-situational learning to measure simultaneous activation of multiple hypotheses. Our logic was that if participants click on one object but simultaneously fixate another object on the same trial, this offers strong evidence that both hypotheses were not only retained (as suggested by Dautriche and Chemla, 2014), but also simultaneously activated in the moment as potential referents. Such an analysis was not possible in prior eye-tracking designs (e.g., Trueswell et al., 2013), which neither manipulated co-occurrence nor conditioned eye-movement analyses on the response. It is important to note that generally in the VWP, looks to competitors are driven by auditory ambiguity (e.g., words that overlap phonologically) or semantic similarity. However, neither of these factors is present here, and we are filtering trials to only analyze those in which the correct object was chosen. Thus, we expected to see very small differences in looking between the high-co-occurrence competitors and the control foils.
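The logic of conditioning the eye-movement analysis on the response can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions: the trial format (the keys `clicked` and `look_prop`), the role labels, and the use of a simple difference score are all hypothetical and are not taken from the study's actual analysis pipeline.

```python
def competitor_advantage(trials):
    """Mean looking to the HC competitor minus the random object,
    computed only over trials on which the target was clicked.

    Each trial is a dict with the hypothetical keys:
      "clicked":   "target", "hc", or "random"
      "look_prop": dict mapping those roles to a proportion of looking time
    """
    diffs = [
        t["look_prop"]["hc"] - t["look_prop"]["random"]
        for t in trials
        if t["clicked"] == "target"  # keep only correct responses
    ]
    if not diffs:
        return None  # no correct trials to analyze
    return sum(diffs) / len(diffs)


# Toy data, invented for illustration only.
example_trials = [
    {"clicked": "target", "look_prop": {"target": 0.70, "hc": 0.20, "random": 0.10}},
    {"clicked": "hc",     "look_prop": {"target": 0.20, "hc": 0.70, "random": 0.10}},
    {"clicked": "target", "look_prop": {"target": 0.80, "hc": 0.10, "random": 0.10}},
]
print(competitor_advantage(example_trials))
```

A positive value on correct trials would indicate that the high co-occurrence competitor attracts more looks than a control foil even when it is not the object selected, which is the pattern the multiple-hypotheses question turns on.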

We addressed the second question (concerning gradual learning) by replicating the Trueswell et al. (2013) analyses, and then looking for an effect of gradual learning above and beyond that. This analysis was carried out for both Experiments 1 and 2.

Comparing Experiments 1 and 2 addresses the third question, the issue of whether context can impede learning. In Experiment 2, foil co-occurrence was completely random. Here, the high-co-occurrence competitors of Experiment 1 could have either served as a contextual cue and speeded learning (as in Dautriche and Chemla, 2014) or created statistical uncertainty and slowed it. However, unlike Dautriche and Chemla (2014), this co-occurrence was repeated throughout the experiment to potentially magnify any interfering effects. By comparing the experiments, we address the possibility that consistent context may also interfere with a slower statistical learning process.

Finally, the fourth question (what information is carried forward across trials) is addressed by conducting an autocorrelation analysis on the combined data from Experiments 1 and 2. We consider a range of additional exploratory factors highlighted by Wasserman et al. (2015), such as the distance between presentations of a word, spatial layout, and foil knowledge.

In both experiments, the learning paradigm was similar to other cross-situational experiments with three objects presented on each trial, one of which was named. As in Trueswell et al. (2013), participants made an overt response on each trial (and they received no feedback). As described, this was crucial for both the fixation and trial-by-trial analyses. Unlike prior studies supporting propositional or memory-based accounts of learning, words were mapped to novel objects, rather than photographs of known objects, to discourage a paired-associate process (linking novel names to existing names).

One of the most important differences between our experiments and previous studies is that we included significantly more trials (60 encounters with each word). This was motivated by one of our questions: the contribution of the gradual accumulation of information. In unsupervised statistical learning paradigms, the changes from trial to trial are likely to be very small. Hence, we were concerned that with only a few repetitions, the effect of number of exposures would be too difficult to observe. Moreover, a longer experiment was also necessary for our eye movement design: As we wanted to look at correct trials for evidence of activation of alternative hypotheses, learners needed to show very high levels of accuracy for a substantial proportion of the experiment. This is also why we only included eight words to be learned. Finally, the higher number of trials also gave us more power to carry out the extended autocorrelation analyses we used to examine our fourth question.

We acknowledge that this highly repetitive and simplified learning paradigm may be unrepresentative of everyday word learning, which likely features more ambiguity, more items and more variability. However, these decisions were based on theoretical and methodological considerations. In that regard, most cross-situational word learning studies (or word learning studies in general) make similarly reductionist assumptions, though perhaps in different ways (use of a small number of trials, mapping words to already known objects, e.g. Trueswell et al., 2013; Yu & Smith, 2007). Given these sorts of simplifications, we do not claim that these types of experiments capture the phenomenon of word learning as a whole; rather, they isolate and distill critical learning mechanisms that were not possible to investigate in previous experiments and that are likely involved in various forms of observational word learning.

2. Experiment 1

2.1 Method

2.1.1 Participants

Thirty-two native English speakers took part in this experiment. Participants were students at the University of Iowa. Thirty received course credit as compensation; two received gift cards worth $15. Participants underwent informed consent in accord with an IRB-approved protocol.

2.1.2 Design and materials

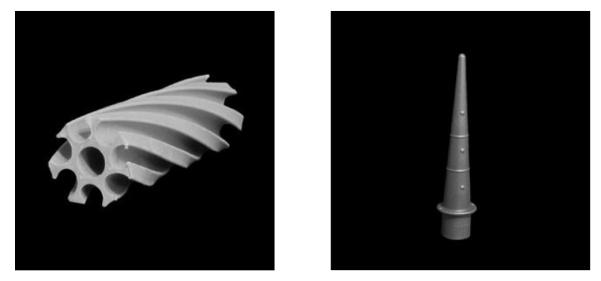

Participants learned eight word-referent pairs over approximately 40 minutes. Referents were novel objects, presented on a black background (see Figure 1 for examples). Words were two-syllable CVCV pseudowords that were phonologically legal in English. There was no phonological overlap among any words at onset (Table 1). The specific mapping between each word and its referent was randomized for each subject at the beginning of the experiment.

Figure 1.

Examples of the novel, differently colored objects used in Experiment 1.

Table 1.

Novel words used

| Written form | IPA |
|---|---|
| Mefa | /meɪfɑ/ |
| Goba | /goubɑ/ |
| Jifei | /dʒifeɪ/ |
| Bure | /buɹeɪ/ |
| Naida | /nɑɪdɑ/ |
| Zati | /zæti/ |
| Lubou | /lubo/ |
| Pacho | /pɑtʃou/ |

During training trials, each word was strongly correlated with a single target referent (co-occurring on 100% of trials). To build “spurious” associations between the word and incorrect competitor referents, high and low co-occurrence competitors were also included. The high co-occurrence (HC) competitor was 60% likely to be seen with the target word; the low co-occurrence (LC) competitor was 40% likely to co-occur with the target word (see Table 2). HC and LC competitors were neither phonologically nor visually related to the target word/object pairs. The remaining five objects were randomly selected from trial to trial, each with a co-occurrence rate of approximately 20%. All words and objects were equally likely to appear throughout the experiment. The random objects (ROs) for each trial were chosen without replacement to avoid spuriously increasing the co-occurrence of an RO with a word.

Table 2.

Example of co-occurrence statistics over all four blocks.

| | Object 1 | Object 2 | Object 3 | Object 4 | Object 5 | Object 6 | Object 7 | Object 8 |
|---|---|---|---|---|---|---|---|---|
| Mefa | 60 | 36 | 24 | 12 | 12 | 12 | 12 | 12 |
| Goba | 12 | 60 | 36 | 24 | 12 | 12 | 12 | 12 |
| Jifei | 12 | 12 | 60 | 36 | 24 | 12 | 12 | 12 |
| Bure | 12 | 12 | 12 | 60 | 36 | 24 | 12 | 12 |
| Naida | 12 | 12 | 12 | 12 | 60 | 36 | 24 | 12 |
| Zati | 12 | 12 | 12 | 12 | 12 | 60 | 36 | 24 |
| Lubou | 24 | 12 | 12 | 12 | 12 | 12 | 60 | 36 |
| Pacho | 36 | 24 | 12 | 12 | 12 | 12 | 12 | 60 |

Each trial included three objects and a single target word. To manipulate the co-occurrence of targets and competitors, we controlled the frequency of four trial-types, defined by which competitors were on the screen relative to the target word (Table 3). In HCLC trials, the target referent, the high co-occurrence competitor and the low co-occurrence competitor were present. In HC trials, the target referent, the high co-occurrence competitor and a randomly chosen object were included. In LC trials, the target referent, the low co-occurrence competitor and a randomly chosen object were present. In RO trials, the target referent was accompanied by two randomly chosen objects. The number of trials of each type was manipulated to obtain the desired co-occurrence frequencies (Table 3).

Table 3.

The four trial-types and the number of times each is repeated in a single block and over the course of the experiment.

| Trial-type | Object types on screen | Repetitions / block and word | Repetitions (whole experiment) |
|---|---|---|---|
| HCLC | Target, HC competitor, LC competitor | 2 | 64 |
| HC | Target, HC competitor, Random object | 7 | 224 |
| LC | Target, LC competitor, Random object | 4 | 128 |
| RO | Target, Random object 1, Random object 2 | 2 | 64 |

Participants responded at the end of each trial by clicking on the object that they thought mapped onto the word they heard. There was no separate testing phase at the end of training. The experiment was separated into four blocks of 120 trials (480 trials overall). Trial-types were randomized within each block; the interval between learning instances for one word was thus completely random within a block. Each block consisted of 16 HCLC trials, 56 HC trials, 32 LC trials, and 16 RO trials.
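The arithmetic linking the trial-type frequencies in Table 3 to the co-occurrence counts in Table 2 can be checked with a short Python sketch. The variable and function names are illustrative, and the even split of random slots across the five remaining objects is an assumption about the expected value (the text describes the random-object rate only as approximately 20%).

```python
from collections import Counter

WORDS = 8
BLOCKS = 4
# Repetitions per block and word, taken from Table 3.
REPS_PER_BLOCK = {"HCLC": 2, "HC": 7, "LC": 4, "RO": 2}
# Number of on-screen slots each trial-type gives to each object role.
SLOTS = {
    "HCLC": {"target": 1, "HC": 1, "LC": 1, "random": 0},
    "HC":   {"target": 1, "HC": 1, "LC": 0, "random": 1},
    "LC":   {"target": 1, "HC": 0, "LC": 1, "random": 1},
    "RO":   {"target": 1, "HC": 0, "LC": 0, "random": 2},
}
N_RANDOM_OBJECTS = WORDS - 3  # objects that are neither target, HC, nor LC


def cooccurrence_per_word():
    """Expected number of trials on which each object role appears with a word."""
    counts = Counter()
    for trial_type, reps in REPS_PER_BLOCK.items():
        for role, n_slots in SLOTS[trial_type].items():
            counts[role] += reps * BLOCKS * n_slots
    # Assumption: random slots are shared evenly among the five remaining objects.
    counts["each_random_object"] = counts.pop("random") / N_RANDOM_OBJECTS
    return dict(counts)


encounters_per_word = sum(REPS_PER_BLOCK.values()) * BLOCKS
total_trials = encounters_per_word * WORDS
counts = cooccurrence_per_word()
rates = {role: n / encounters_per_word for role, n in counts.items()}

print("encounters per word:", encounters_per_word)
print("total trials:", total_trials)
print("co-occurrence counts:", counts)
print("co-occurrence rates:", rates)
```

Under these assumptions the counts reproduce one row of Table 2 (60 for the target, 36 for the HC competitor, 24 for the LC competitor, and 12 for each random object), and the totals match the 60 encounters per word and 480 trials reported in the text.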

2.1.3 Procedure

Participants were told that their task was to discover which object goes with what word, and that on each trial, they were to indicate their best guess by clicking on that picture. Participants were also told that while we expected them to guess at the beginning, their response should become more informed over time.

At the start of each trial, three pictures were presented on a 19” monitor operating at 1280 × 1024 resolution. Simultaneously, a small blue circle appeared at the center of the screen. Participants were given 1050 msec to inspect the objects. Afterwards, the circle turned red, prompting the participant to click on it to start the auditory stimulus. When the participant clicked on it, the red circle disappeared and the target word was played via headphones. Participants then clicked on the picture corresponding to the word, and the trial ended. Trials were not time-limited and participants were told to take their time and perform accurately.

Pilot results suggested that participants made fewer eye-movements over the course of the experiment as they learned to identify the objects, and as they learned the exact positions the objects could be in. To minimize this reduction in fixations, objects were presented in a triangle configuration, and the orientation of this triangle was randomly selected on each trial (either two objects on top or one object on top). The location of each object was randomized across the three possible locations of a given triangle on each trial.

2.1.4 Eye-tracking Recording and Analysis

Eye-movements were recorded throughout the experiment using an SR Research Eyelink II head-mounted eye-tracker operating at 250 Hz. Both corneal reflection and pupil were used to obtain point of gaze whenever possible, though for some participants only good pupil readings could be obtained. At the start of the experiment, participants were calibrated with the standard 9-point display. The Eyelink II compensates for head-movements using infra-red light emitters on the edge of the computer screen and a camera on the head to track head position. This yields a real-time record of gaze in screen coordinates. The resulting fixation record was automatically parsed into saccades and fixations using the default “psychophysical” parameter set. We then combined adjacent saccades and fixations into a single “look”, which started at the onset of the saccade and ended at the offset of the fixation, as in prior studies (McMurray, Aslin, Tanenhaus, Spivey, & Subik, 2008; McMurray, Samelson, Lee, & Tomblin, 2010). To account for noise and/or head-drift in the eye-track record, the boundaries of the ports containing the objects were extended by 100 pixels when computing the point of gaze. No overlap between the objects resulted from this.
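The two processing steps described above (merging each saccade with the following fixation into a look, and assigning gaze to an object using ports padded by 100 pixels) can be sketched as follows. The event tuples, port coordinates, and function names are hypothetical; this is an illustration of the described logic, not the software used in the study or any part of the eye-tracker's own API.

```python
def merge_into_looks(events):
    """Pair each saccade with the fixation that follows it.

    `events` is a time-ordered list of (kind, start_ms, end_ms, x, y) tuples,
    where kind is "saccade" or "fixation" and (x, y) is the fixation position
    (ignored for saccades). Returns a list of (start_ms, end_ms, x, y) looks.
    """
    looks = []
    pending_saccade_start = None
    for kind, start, end, x, y in events:
        if kind == "saccade":
            # Keep the onset of the first saccade in a run of saccades.
            if pending_saccade_start is None:
                pending_saccade_start = start
        elif kind == "fixation":
            look_start = start if pending_saccade_start is None else pending_saccade_start
            looks.append((look_start, end, x, y))
            pending_saccade_start = None
    return looks


def object_at(x, y, ports, padding=100):
    """Return the name of the port containing (x, y) after padding, else None.

    `ports` maps a name to (left, top, right, bottom) in screen pixels.
    """
    for name, (left, top, right, bottom) in ports.items():
        inside_x = left - padding <= x <= right + padding
        inside_y = top - padding <= y <= bottom + padding
        if inside_x and inside_y:
            return name
    return None


# Toy event record and port layout, invented for illustration only.
events = [
    ("saccade", 0, 40, None, None),
    ("fixation", 40, 300, 250, 260),
    ("saccade", 300, 330, None, None),
    ("fixation", 330, 600, 900, 700),
]
ports = {"target": (300, 300, 500, 500), "hc": (780, 524, 980, 724)}

for start, end, x, y in merge_into_looks(events):
    print(start, end, object_at(x, y, ports))
```

Padding the ports trades a small loss of spatial precision for robustness to calibration drift; it is only safe when, as reported above, the padded regions do not overlap.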

2.2 Results: Overview

For Experiment 1, we first examined overall accuracy and how performance differed across trial-types to partially address Question 1 (multiple hypotheses). We then turned to the eye movements, using both the standard statistical paradigm and a ratio measure that avoids common statistical issues when analyzing eye movements in the VWP. Finally, in the last part of the results section, we replicated Trueswell et al.’s (2013) autocorrelation analyses (Question 2: gradual learning).

Three participants were excluded from all analyses, as their learning plateaued at 45% correct (chance = 33.33%), with no improvement in performance over time. This left 29 participants for analysis.²

Data were analyzed separately within each trial-type for the eye movement analyses. The analyses we report here focus on the two most important trial-types, those involving the HC competitor (the HC and HCLC trials). This is because the HC trials are experimentally the most important condition, as there is the largest difference in co-occurrence between the spuriously associated object and the random object. Moreover, as such trials made up almost half of the experiment (a natural consequence of the co-occurrence manipulation) they had the greatest power to reveal any effects. We also ran an extensive set of analyses for the LC and RO trials which are presented in supporting online material and generally showed no differences (see supplement).

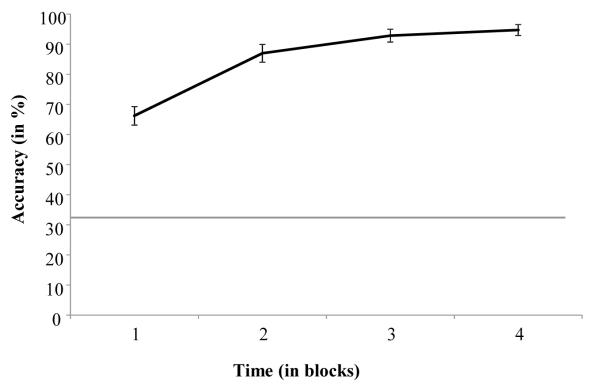

2.2.1 Accuracy

Figure 2 shows the accuracy of the 29 participants’ overt responses (across the four trial-types). Participants’ accuracy was well above chance in each block of the experiment, and 79% of the participants performed above 90% correct by block 3. Overall, accuracy between the different trial-types did not differ substantially (HCLC = 83.35%; HC = 84.67%; LC = 86.66%; RO = 85.51%). Participants’ reaction time (RT) decreased over blocks (overall average RT = 1480.74 msec).

Figure 2.

Average accuracy across blocks. Error bars mark the standard error of the mean.

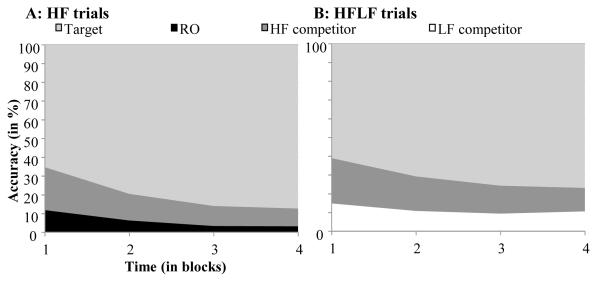

Figure 3 shows the distribution of responses (both correct responses and the distribution of errors) for the HC and HCLC trials. In the HC trials (Panel A), even though participants were highly accurate (the large light gray region), when they made an error, they were more likely to select the high co-occurrence competitor (dark grey) than a randomly chosen object (black), suggesting sensitivity to the co-occurrence manipulation. A similar pattern was observed in the HCLC trials (Panel B). In summary, participants learned the word-object mappings successfully, and there were hints in their pattern of overt responses (and errors) that they were sensitive to the co-occurrence manipulation.

Figure 3.

The distribution of overt responses (indicated by shading) as a function of block. Target (light grey) is correct.

We next turn to the primary analysis addressing Question 1 (maintenance of multiple hypotheses): We examined the fixation record to determine if there is evidence that participants are activating two hypothesized referents for a given word on the same trial. Next, we report a focused autocorrelation analysis designed to replicate the analysis of Trueswell et al. (2013) to determine whether prior choice and number of encounters influence trial-by-trial behavior (Question 2).

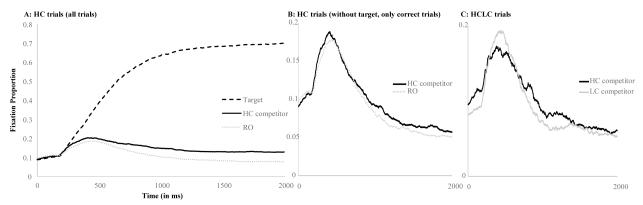

2.3 Analysis of Fixations

Figure 4A shows the typical time course of fixations on the HC trials: after a brief period of initial uncertainty, participants’ looks rapidly converge on the target. More importantly, when we consider looks to the competitors, participants are more likely to look at the HC competitor than the RO. However, this figure combines trials with different responses, potentially conflating different kinds of eye-movements. Some of the increased looking to the HC competitor is likely due to trials in which participants clicked on that competitor (and hence would have fixated it heavily). Thus, we restricted our analysis of the eye movements to only trials in which participants clicked on the correct object. This is extremely conservative for two reasons. First, eye-movements reflect motor planning as well as activation dynamics (Salverda, Brown, & Tanenhaus, 2011), and we have restricted ourselves to trials in which this motor plan reflects the correct referent; this should significantly inflate target looking. Second, and perhaps more importantly, this eliminates the trials with the most robust evidence that the HC competitor was under consideration; as a result the absolute magnitude of the effect will be quite small. However, if participants are still fixating that object more than chance even as they click the target, it offers the strongest evidence that multiple hypotheses were not only maintained but that both referents were under consideration at least partially on the same trial.

Figure 4.

Time course graphs for HC trials with looks to the target for all trials (Panel A) and without looks to the target (Panel B) and for HCLC trials (Panel C) including only correct trials.

Figure 4B and C show the time course of fixations after excluding these trials. They display just the looks to the competitor objects as a function of the two trial-types containing HC competitors (for correct trials only). In both the HC and HCLC trials there are somewhat more looks to the HC than the random object (or the LC object). In both cases, this difference appears largely late in the time course of processing.

2.3.1 Statistical Analysis

To examine this statistically, we conducted two mixed effects models examining the HC and HCLC trials separately. For each, we computed the average proportion of fixations to the HC competitor and to either the random object or the LC competitor in the time window between 250 and 2000 ms (Figure 5). These were submitted to a linear mixed effects model using the lme4 (version 1.1-5; Bates & Maechler, 2009) and lmerTest (version 2.0-6) packages in R (R Development Core Team, 2011). The independent variables included block (1-4, centered) and object-type (HC vs. RO or the LC, contrast coded as ±0.5). Significance for the coefficients was established using the reported t-statistics with degrees of freedom computed by the Satterthwaite approximation. Random effects included both participant and stimulus. We computed effect sizes (d) by doubling the t-value and dividing it by the square root of the estimated degrees of freedom.
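The effect-size conversion described above, d = 2t / √df, can be written as a small sketch. The function name is hypothetical; the inputs in the usage lines are t-values and degrees of freedom reported for the HC trials, and the absolute value is taken on the assumption that d is reported as a magnitude.

```python
import math


def effect_size_from_t(t: float, df: float) -> float:
    """Effect size d = 2|t| / sqrt(df), following the description in the text."""
    return 2.0 * abs(t) / math.sqrt(df)


# Object-type effect on HC trials: t(71) = 2.17
d_object = effect_size_from_t(2.17, 71)

# Block effect on HC trials: |t|(26.8) = 6.80
d_block = effect_size_from_t(6.80, 26.8)

print(f"{d_object:.3f} {d_block:.3f}")
```

With these inputs the values come out close to the reported d = 0.52 and d = 2.63.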

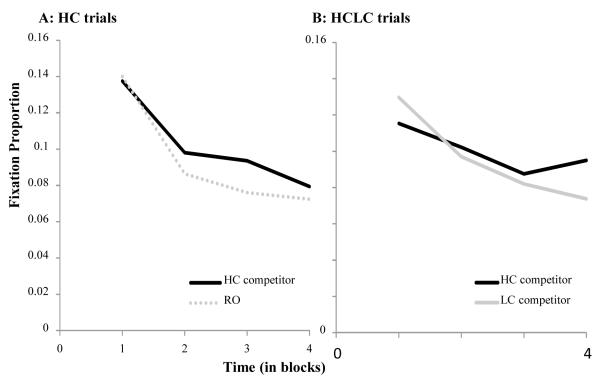

Figure 5.

Looks to the competitors in HC (Panel A) and HCLC trials (Panel B) (separated by block).

Before examining the fixed effects, we compared several models that differed on how these two random effects were implemented to determine the most appropriate one for our data. We compared a series of models with random intercepts for participant and stimulus or with random slopes of the fixed effects on participant. With only eight items, it was difficult to estimate random slopes on item, and this often resulted in very high correlations between random slopes, suggesting the model was overfitting the data; thus only models with intercepts on item were considered. The model containing random slopes of block and object-type on participant (but not their interaction), and random intercepts on stimulus, was the most conservative model (following Barr, Levy, Scheepers, & Tily, 2013) that converged; thus, it was used for analysis.

For the HC trials, we found a significant effect of block (B = −.020, SE = .0029, t(26.8) = −6.80, p < .001, d = 2.63). This was due to the fact that as the experiment progressed, participants were less likely to make eye movements at all as they got better at recognizing the objects and were better able to perform the task with peripheral vision (see Figure 5A). Crucially, there was a main effect of object-type (B = .0085, SE = .0039, t(71) = 2.17, p = .033, d = 0.52) with more fixations to the HC than the RO. The interaction between block and object-type was not significant (p = .278).

For the HCLC trials, the most conservative model included object-type and block as well as their interaction as random slopes on participant. We found a significant effect of block (B = −.013, SE = .0040, t(28) = −3.19, p = .0035, d = 1.20). No significant effect of object-type was observed (p = .446). However, the interaction of block and object-type was marginally significant (B = .011, SE = .005, t(135.8) = 1.93, p = .056, d = 0.33),³ suggesting that participants looked more at the HC competitor (in comparison to the LC competitor) the longer the experiment lasted (cf. Figure 5). Figure 5 suggests that the interaction is largely driven by an effect of object-type in block 4 of the experiment. Thus, to examine this interaction, we conducted post-hoc tests in which we split the data into blocks 1-3 and block 4 and examined the effect of object-type only. On blocks 1-3, there was no effect of object-type (p = .917). On block 4, the effect of object-type was marginally significant (B = .021, SE = .011, t(25.9) = 1.79, p = .086, d = 0.70), though it is worth noting its large effect size.

2.3.2 Fixation Odds-Ratios

While the analytic approach used above is fairly standard for the VWP, it is not ideal for two reasons. First, the data come from an underlying binomial distribution, and particularly when the values are near zero (or one), such data may not meet the assumptions of linear models. The empirical logit transformation is often applied to transform the proportions of interest into log-odds ratios, which scale more linearly. However, this ignores a second factor which is commonly overlooked in the VWP. Looks to the HC and to the RO are not independent of each other: If the participant is looking at one, they cannot be looking at the other. Conventional analyses like the one just presented generally compare the proportion of looks to one object to the proportion of looks to the other. Since these are not independent of each other, this is problematic. In this case, the empirical logit transformation is insufficient to solve the problem: it simply allows us to compare two appropriately scaled variables that are still not independent.

The solution is to collapse the two looking measures into one (e.g., a difference score of some sort). In order to construct such a measure, but still respect the need for a more Gaussian distributed variable, we developed a new transformation based on the empirical logit. We replaced the odds (p/[1−p]) with the odds ratio of looks to the HC over looks to the random object (or the LC competitor, whichever object-type served as the baseline on that trial-type), as shown in (1).

$$\log\left(\frac{p_{hc}}{p_{ro}}\right) \tag{1}$$

This can also be written in terms of the absolute number of looks as

$$\log\left(\frac{M_{hc}/N_{hc}}{M_{ro}/N_{ro}}\right) \tag{2}$$

Here, Mhc is the number of looks to the HC object, and Nhc is the number of total looks. Since Nhc = Nro, this term can be dropped. However, one problem is that of 0s in either the numerator or the denominator (a log of 0 is negative-infinity). In the empirical logit transformation, this is solved by adding .5 (half a success) to the numerator and denominator when computing the probabilities from the counts. However, doing this created highly skewed distributions, since eye-movements do not occur every four milliseconds. Thus, we added the equivalent of half of a fixation as the correction factor (C). Half a look was calculated by taking the average fixation duration within each trial type (for correct trials only) and then dividing it by four (since the eye-tracker sampled every 4 msec) and by two (to create half a look). This resulted in a correction of 45.33 for HC trials and 45.08 for HCLC trials. The denominator is the same with respect to the random object.

$$\log\left(\frac{M_{hc} + C}{M_{ro} + C}\right) \tag{3}$$

As a whole, this is the log of the odds ratio of looks to the HC over looks to the RO, with a positive log-odds-ratio indicating more looks to the HC object. If this ratio was found to be significantly above 0, this would be evidence for participants looking more often at the HC competitor than baseline. This eliminates the non-independence issue since the two values are combined into one, and creates a more linear scaling.
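As a rough illustration of the corrected log-odds ratio, the sketch below computes the half-look correction and the resulting measure. It assumes a natural logarithm and that looks are counted in 4-msec samples; the function names and the mean fixation duration in the usage lines are hypothetical (the duration is chosen only so that the correction matches the 45.33 reported for HC trials).

```python
import math

SAMPLE_MS = 4  # one eye-tracker sample every 4 msec (250 Hz)


def half_look_correction(mean_fixation_ms: float) -> float:
    """Half of an average fixation, expressed in samples."""
    return mean_fixation_ms / SAMPLE_MS / 2


def log_odds_ratio(m_hc: float, m_ro: float, c: float) -> float:
    """log((M_hc + C) / (M_ro + C)); positive values mean more looks to the HC object.

    Natural log is an assumption; the text does not state the base.
    """
    return math.log((m_hc + c) / (m_ro + c))


# Hypothetical mean fixation duration (msec), back-computed for illustration only.
c_hc = half_look_correction(362.64)

# Hypothetical sample counts for one trial.
print(log_odds_ratio(m_hc=120, m_ro=60, c=c_hc))
print(log_odds_ratio(m_hc=0, m_ro=0, c=c_hc))  # no looks to either object
```

Because C is added to both counts, a trial with no looks to either competitor yields exactly zero rather than an undefined value, and trials with few looks are pulled toward zero.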

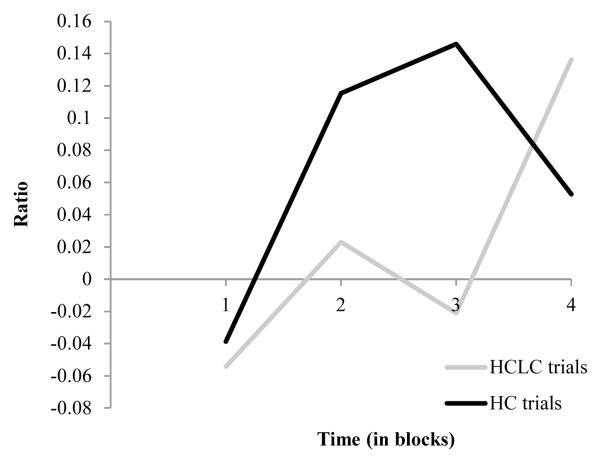

Figure 6 shows the mean log-odds ratio as a function of block and trial-type, and confirms the results from the previous analysis. This measure was above zero for both trial-types on the later blocks, indicating more looks to the HC competitor than the baseline items (the RO or LC items). Data from the two trial-types were combined and examined with a linear mixed effects model with block (centered) and trial-type (HCLC vs. HC, +/−0.5) as fixed factors and subject and item intercepts. More complex (conservative) random effects structures showed a very high correlation (r=−1.00) between random slopes and intercepts, suggesting they may be overfitting the data. This model found that the intercept was significant (B=.046, SE= .022, t(28.5) = 2.05, p = .049, d = 0.80), indicating that this ratio was above 0 (equal looking for the HC competitor and the baseline comparison). This can be seen as further evidence that participants looked more at the HC competitor than the random object or the LC objects across the whole experiment. We also observed a significant effect of block (B=.042, SE= .019, t(1700.6) = 2.16, p = .031, d = 0.11). However, neither trial-type (p = .265) nor the trial-type by block interaction (p = .563) reached significance.

Figure 6.

The mean log-odds ratio as a function of block.

2.3.3 Visual Co-Occurrence

The analyses thus far suggest that learners fixate the HC competitors more than other objects (even as they are clicking on the target). However, one final concern is that this could be driven by purely visual co-occurrence (ignoring which object was named) since visual targets appeared with their HC competitors more frequently than with other objects. If participants were sensitive to co-occurrence at a purely visual level, they may direct eye-movements to these objects independently of the auditory stimulus (and these in turn could mirror the effects of mapping the name onto each object). To rule this out, we investigated fixations to the objects before the stimulus was heard (from −1000 to 0 msec, during the pre-scanning period) on the HC trials. We used raw proportions of fixations as the dependent variable, and included all three objects (target, HC and RO) in the analysis, as at this point the participant should have no information as to the eventual target. The fixed effects included block (centered) and object-type (two dummy codes). As in our prior analyses, we used random slopes of block and object-type for participant, and random intercepts for items. This analysis found no significant effect of object-type⁴ (F(2, 147.8) = 0.60, p = .551), no significant effect of block (F(1, 28) = .18, p = .67), and no interaction (F(2, 2722) = .38, p = .682) for HC trials. Thus, participants were not biased to look at one object-type or the other as a result of the co-occurrence manipulation.

We replicated these analyses by combining raw fixations from both the pre-scanning and the post-stimulus periods of the trial and adding trial-period as a factor (pre- or post- stimulus, centered), along with block and object-type. For maximum sensitivity to the hypothesized HC vs. RO difference, the target object was dropped from this analysis and object-type was coded as HC=+.5, RO=−.5. Again, models with random slopes of all three fixed effects and their interactions did not converge; as we were interested primarily in the trial period × object-type interaction, we used random slopes of both of these factors and their interaction on participant (but no slopes of block). There was a main effect of trial-period with more fixations post-stimulus than during the pre-scanning period (B=.038, SE=.0089, t(28)=4.24, p=.0002, d=1.6). While there was no main effect of object-type (B = .0029, SE= .0025, t(181)=1.18, p=.24, d=.18), we did find a significant interaction between object-type and trial period (B=.011, SE=.0054, t(74)=2.04, p=.045, d=.47). Given the prior analysis on just the pre-scanning period, this suggests the effect of object-type was only present during the post-stimulus period. This finding is further evidence for our assertion that participants’ looks were driven by the auditory stimulus and not visual co-occurrence. (We did not investigate other trial types given that if such visual co-occurrence effect existed, the HC trials would have had most power to reveal it.)

2.3.4 Summary of Fixation Analyses

The foregoing analyses present strong evidence that even on the trials on which participants were selecting the correct referent, they are nonetheless considering the HC competitors more than chance. While this effect was numerically small (as expected), it was robust across two analyses and the effect size was moderate. We also showed that it could not be attributed to visual co-occurrence. Thus, learners are clearly maintaining two hypothesized referents for a given word. Our next set of analyses turns to Question 2, i.e. what information participants are carrying forward from trial to trial.

2.4 Autocorrelation analysis

We next examined the trial-by-trial accuracy data using an autocorrelation analysis similar to the approach of Trueswell et al. (2013). This analysis examined performance on the current trial as a function of what happened the last time the same target was seen (we conduct a more complete analysis to address Question 4 more thoroughly after Experiment 2).

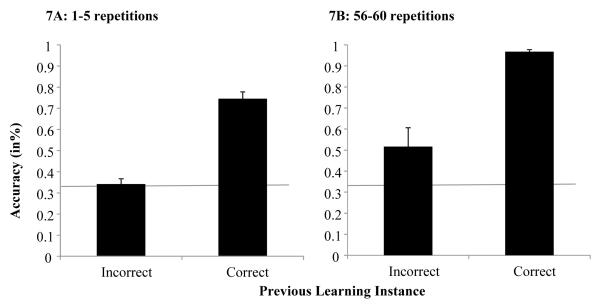

We started with the simplest model, in order to replicate these prior approaches (Figure 7). This analysis started by including only the first five repetitions of each word in order to achieve the same number of repetitions used in Trueswell et al. (2013). Here, the outcome variable was binary, indicating whether the participant clicked on the correct target on the current trial. The only fixed effect was a dummy coded variable indicating if the participant had been correct the last time they heard that word (last-target-correct). The first trial with each target was excluded since there was no prior trial with the same target.

Figure 7.

Accuracy as a function of how participants responded on previous trials with the same target for five target replications (as used by Trueswell et al., 2013) at beginning of experiment (7A) and at the end of the experiment (7B). Error bars indicate the standard error of the mean.

This model was constructed in a mixed models framework, using the binomial link function. P-values were computed directly from the Wald Z statistics; there are no commonly accepted measures of effect size for logistic models. As before, we first explored the random effect structure that best fit the data using the full data set and a more complete model. Here, we found that random slopes of last-target-correct on participant and word, along with a random intercept of target object, best fit the data. (Note that this model also included a fixed effect for trial-type to account for potential additional difficulties of some of the trial-types.) In addition, it should be highlighted that while the typical statistical tests on the intercept for a logistic model compare the coefficient to 0, this assumes a chance level of .5, whilst chance here was .333. Thus, to evaluate against .333, we subtracted −ln(2) from the original intercept (this will be reported as B33 or the adjusted intercept), and then computed a Wald Z-statistic by dividing this modified intercept by the original SE estimated from the model.
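The chance adjustment can be sketched as follows. The logit of a chance level of 1/3 is ln(.333/.667) = −ln(2), so subtracting it shifts the intercept upward by about 0.693. The function name is hypothetical, and the usage line plugs in an unadjusted intercept of −0.618 with SE = .210 as an example.

```python
import math


def chance_adjusted_intercept(b: float, se: float, chance: float = 1 / 3):
    """Re-express a logistic intercept relative to `chance` instead of .5.

    Returns the adjusted intercept and its Wald Z (adjusted intercept / original SE).
    """
    logit_chance = math.log(chance / (1 - chance))  # equals -ln(2) when chance = 1/3
    b_adjusted = b - logit_chance
    return b_adjusted, b_adjusted / se


b33, z = chance_adjusted_intercept(b=-0.618, se=0.210)
print(f"B33 = {b33:.3f}, Z = {z:.3f}")
```

An adjusted intercept near zero therefore corresponds to performance near 33% correct rather than 50%.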

This model replicated the effects of Trueswell et al. (2013). We found a significant effect of last-target-correct (B = 1.74, SE = .240, Z = 7.22, p < .001), with much better performance after a correct trial than after an incorrect one. The adjusted intercept was not significantly different from 0 (B = −0.618, B33 = .075, SE = .210, Z = 0.355, p = .723). Since last-target-correct is dummy coded, the intercept tells us the degree to which performance is above chance when the last target was incorrect, indicating that performance was at chance after an incorrect trial (Figure 7A).

However, Figure 7B (which shows repetitions 56-60 at the end of training) suggests this finding may not hold if we allow more time for gradual learning to unfold. Here, when we run the same analysis on the entire set of trials, we again see a highly significant effect of last-target-correct (B= 3.02, SE= .205, Z= 14.72, p < .001), but now the intercept was significantly different from 0 (B=0.10, B33=.79, SE= .141, Z= 5.605, p < .001), indicating that overall performance was above chance even if people responded incorrectly. Thus, by the end of training, while we replicated an effect of last-target-correct, we also find that performance was above chance even when learners were incorrect on the prior encounter, contradicting Trueswell et al. (2013). Thus, the difference in findings between our and Trueswell et al.’s (2013) results is a difference in the number of trials considered. This confirms our intuition that the gradual learning effect may simply be too small to be observable with a small number of trials.

More importantly, as we described, this statistical model (as well as many of those used previously) may underestimate effects of gradual learning, as it confounds position in the learning curve with last-target-correct. Incorrect prior trials are more likely to come from the early portion of the learning curve, and correct trials from later points. Moreover, it cannot assess whether any gradual learning effect can be seen over and above last-trial performance. Thus, we extended the prior analysis to ask if there was a further effect of accumulated experience over and above the prior trial behavior. To do this, we added the centered log of the number of exposures to that word up to the current trial (log-target-count) and its interaction with last-target-correct to the model (both were also added as random slopes on participant and word). For this model, we centered last-target-correct to facilitate the interpretation of the included interactions.
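One way to construct a predictor like log-target-count is sketched below. This is an illustration under stated assumptions, not the authors' code: it assumes a natural log, that exposures are counted inclusively from 2 (the first encounter has no prior trial), and that centering subtracts the mean over the analyzed trials. The function name is hypothetical.

```python
import math


def centered_log_target_count(counts):
    """Return log(count) minus the mean log(count) over the supplied trials."""
    logs = [math.log(c) for c in counts]
    mean_log = sum(logs) / len(logs)
    return [value - mean_log for value in logs]


# Exposures 2..60 for a single word (assumed counting scheme).
predictor = centered_log_target_count(range(2, 61))

# The log compresses late exposures: early steps are larger than late ones.
print(predictor[1] - predictor[0], predictor[-1] - predictor[-2])
```

The log scale means that the step from the second to the third exposure counts for far more than the step from the 59th to the 60th, which matches the idea of a decelerating learning curve.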

When we used this new model on only the first five repetitions of each word, it did not provide a better fit to the data (p = .937). However, using our whole data set, the new model including log-target-count offered a significantly better fit than the prior model (χ²(16) = 735.65, p < .001), providing robust evidence for an influence of a gradual element to learning, over and above the effect of last-encounter responding. In this model, there was a main effect of log-target-count (B = 1.18, SE = 0.15, Z = 8.13, p < .001), indicating that performance was better with more experience, independently of prior trial behavior. There also continued to be a significant main effect of last-target-correct (B = 2.40, SE = 0.26, Z = 9.25, p < .001) with again better performance if the participant was correct on the prior encounter. This reveals that even when accounting for position in the learning curve, there was unique variance associated with the participants’ behavior on prior trials with that target. Finally, the interaction between last-target-correct and log-target-count was significant (B = 0.45, SE = 0.22, Z = 2.05, p = .04).⁵

There are two equally accurate descriptions for this interaction: First, the effect of amount of exposure (log-target-count) may depend on whether one was correct on the previous encounter (last-target-correct), and in the extreme, there is only an effect of exposure for trials on which the learner was correct on the prior encounter with that word (e.g., Trueswell et al., 2013). That is, people are building more confidence with each correct response, but not accumulating anything from an incorrect one. Alternatively, the effect of last-encounter performance (last-target-correct) may depend on the amount of exposure. The first interpretation (particularly the extreme version) accords with a propositional account, whilst the latter would concur with associative learning.

To investigate this statistically, we conducted a post-hoc test, in which we asked whether the effect of exposure persisted even for trials that followed an incorrect response; if so, this would indicate that the effect of log-target-count cannot completely depend on previous performance. For this purpose, we split the data by last-target-correct, and only analyzed trials that followed an incorrect response on the previous encounter with the same target word. We used the same model as before, but dropped last-target-correct as a main effect and slope on participant and target word. In this model, there was a main effect of log-target-count (B = 0.66, SE = 0.17, Z = 4.0, p < .001), indicating that an effect of exposure remained even for trials that had not followed a correct response.

The interaction thus indicates that the effect of prior trials increased over the course of training, but that the effect of exposure was present in both types of trials. This suggests that the last-target-correct effect may have been a product of learning, not the cause of it. That is, if the effect of last-target-correct grows with more exposure, it appears more the result of the accumulation of evidence. In contrast, if the last-target-correct effect derives from a sudden inference that drives learning, one would have expected much bigger effects early (when there was more learning to do) than later. Thus, this analysis suggests that the number of repetitions of a word (gradual learning) accounts for variability in participants’ accuracy in addition to the contributions of last-encounter performance (proposing / verifying): participants must bring forward more information from prior encounters with a word.

2.5 Discussion

Experiment 1 revealed two primary findings. First, the eye-tracking results demonstrated that participants were simultaneously considering both the correct (target) referent and a competitor (e.g., the HC competitor) during the same naming event. Specifically, on trials in which participants ultimately selected the correct target, they still fixated the HC competitor more than other objects. This effect was numerically quite small, which was expected given the lack of ambiguity in the displays and the fact that we filtered out the trials on which listeners were considering this competitor so strongly they actually clicked it. Nonetheless, it was significant across several analyses and had a moderate effect size, suggesting that at least sometimes and on some trials, both possible referents were being considered. For HCLC trials, this was only seen at the end of the experiment (reflected in the marginally significant interaction between object-type and block). This offers some tentative evidence that these associations may grow over training. No such differences were observed in the LC trials (see supplement): This may indicate that these differences in co-occurrence (LC competitor versus RO) were too subtle to result in observable differences in association strength. However, for the HC competitors, there was clear evidence for the simultaneous consideration of multiple hypotheses.

It should also be noted that the difference in looks to HC over RO/LC competitors was driven by eye movements generated late in the trial. This suggests that participants may have had difficulty fully suppressing the HC competitor. In addition, this indicates that a consistent context throughout learning (i.e. the presence of the HC competitor) may impede performance; however, this will become clearer when comparing Experiments 1 and 2 (see below).

Our autocorrelation analyses addressed our second question. They replicated previous work showing an influence of prior responding on current trial accuracy (Dautriche & Chemla, 2014; Trueswell et al., 2013). However, more importantly, we found that adding a gradual component to the statistical model accounted for variance in accuracy over and above performance on the previous learning instance with that word. This indicates that participants do not simply retain hypotheses in an all-or-nothing fashion: they are also sensitive to the gradual accumulation of co-occurrence statistics. This was found even considering only trials in which participants responded incorrectly on their last encounter with a word. This also provides converging evidence for the question of multiple hypotheses (Question 1): even when participants did not respond correctly on a prior encounter (they had the wrong hypothesis under a propose-but-verify account), they were still accumulating information relevant to the correct mappings. Thus, learning appears to be shaped both by last-encounter performance and by overall exposure. Moreover, the fact that the effect of last-encounter performance grows with more learning suggests that this effect, argued to be the hallmark of a propose-but-verify strategy, may be a product of learning, not a mechanism of it (echoing the description of classic fast-mapping offered by McMurray et al., 2012).

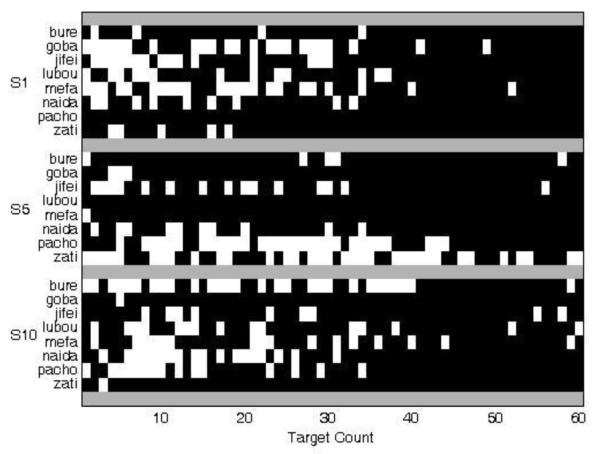

The combined influence of both gradual learning and last-encounter performance is quite clear when examining individual subject data. Figure 8 shows the trial-by-trial accuracy for three participants for each word. Here we see that some words seem to start correct and stay that way (e.g., pacho for S1, goba for S10), seemingly confirming a propose-but-verify strategy. However, other words show a much more sporadic pattern (e.g., goba for S1, zati for S5) and a substantial number of words show continued oscillation even out to repetition 30 or 40. Yet other words show a fairly robust run of accurate responding (suggesting a correct proposal) that is then lost for a time (e.g., mefa for S10 around repetitions 12-20; pacho for S5 from blocks 1-6). Thus, while the individual learning profiles appear to fit a range of descriptions, there appears to be a strong gradual or probabilistic component to learning.

Figure 8.

Accuracy per item for three exemplary participants (S1, S5, S10) (black = correct trials; white = incorrect trials).

Experiment 2

Experiment 2 investigated one way in which context may influence cross-situational word learning (Question 3). As highlighted before, Dautriche and Chemla (2014) found that providing a stable context at the beginning of training helped learning on subsequent encounters. However, with no exposure to the context after these initial trials, it was impossible to tell if the spurious correlations created by consistent contexts may have hindered learning (since they were never encountered later). In Experiment 1, the HC competitors also offered a form of context, but one that was present probabilistically throughout the experiment, and may therefore be more likely to form spurious associations that impede learning. Thus, to determine whether this aided or hindered learning, Experiment 2 included only RO trials as a comparison.

Moreover, given the apparently conflicting results of our autocorrelation analysis and Trueswell et al.’s (2013), it was important to replicate Experiment 1 under experimental conditions that were more similar to their study; this was the case in Experiment 2 (there were no competitors with enhanced co-occurrence statistics). Thus, Experiment 2 was carried out both to establish a baseline of learning to compare accuracy of Experiment 1 against, and to replicate our autocorrelation analyses.

3. Experiment 2

3.1 Method

3.1.1 Participants

Nineteen native English speakers took part in this experiment. All were students at the University of Iowa and received course credit as compensation. Participants were consented in accord with an IRB approved protocol.

3.1.2 Design and materials

The same stimuli were used as in Experiment 1. However, the design differed, as only RO trials were included. Thus, every trial included a target referent and two randomly chosen objects. Again, the co-occurrence rate of a word and its target was 100%. The two other objects on each trial were randomly selected, without replacement, from the remaining seven objects, and each therefore co-occurred with the target word at a rate of approximately 28%. As in Experiment 1, the 480 trials were separated into four blocks of 120 trials.
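The co-occurrence structure of this design can be illustrated with a short simulation. This is only a sketch under stated assumptions, not the experimental script: it assumes that each of the eight words is presented equally often (60 times, i.e., 480/8) and that "without replacement" applies within a trial. The function names are hypothetical. Under these assumptions, each non-target object is expected to appear on 2/7 (about 28.6%) of a word's trials, in line with the approximate rate given above.

```python
import random
from collections import Counter

def make_ro_trials(n_words=8, reps_per_word=60, n_foils=2, seed=0):
    """Build random-only (RO) trials: a target plus n_foils other objects.

    Assumption: words and objects share indices (word w names object w),
    and every word is presented reps_per_word times.
    """
    rng = random.Random(seed)
    trials = []
    for word in range(n_words):
        others = [o for o in range(n_words) if o != word]
        for _ in range(reps_per_word):
            # Foils are drawn without replacement within a single trial.
            foils = rng.sample(others, n_foils)
            trials.append((word, foils))
    rng.shuffle(trials)  # randomize presentation order
    return trials

def cooccurrence_rates(trials, n_words=8):
    """rates[word][obj] = proportion of the word's trials showing obj."""
    shown = {w: Counter() for w in range(n_words)}
    n_trials = Counter()
    for word, foils in trials:
        n_trials[word] += 1
        shown[word][word] += 1          # the target is always displayed
        for f in foils:
            shown[word][f] += 1
    return {
        w: {o: shown[w][o] / n_trials[w] for o in range(n_words)}
        for w in range(n_words)
    }

trials = make_ro_trials()
rates = cooccurrence_rates(trials)
foil_rates = [rates[0][o] for o in range(8) if o != 0]
print("target rate:", rates[0][0])
print("mean foil rate:", sum(foil_rates) / len(foil_rates))
```

Individual word-foil rates fluctuate around 2/7 from one simulated run to the next, but no foil is systematically paired with any word, which is what distinguishes RO trials from the HC and LC trials of Experiment 1.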

3.1.3 Procedure

The same procedure was used as in Experiment 1, with the exception that no eye movements were recorded, as our primary hypotheses concerned accuracy.

3.2 Results Overview

Our analysis was conducted in two parts. First, we examined overall accuracy and compared it to Experiment 1 to determine how the co-occurring competitors (i.e., a consistent context) affected learning. Second, we turned to our autocorrelation analysis to replicate the prior results with a study design closer to those of Trueswell et al. (2013) and Dautriche and Chemla (2014).

3.2.1 Accuracy

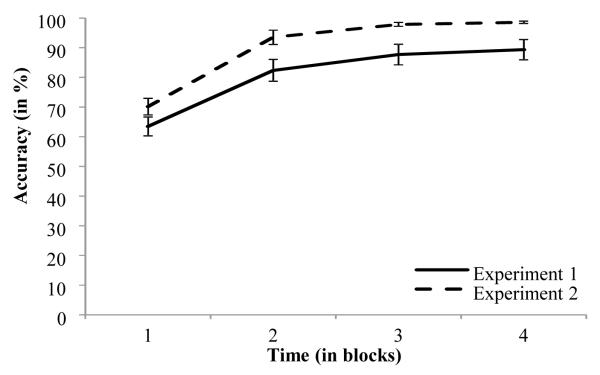

One participant was excluded from the analysis due to a computer error in the experimental script. Note that as the purpose of this analysis was to compare accuracy in Experiments 1 and 2, the three participants who were excluded in Experiment 1 were included again to correctly reflect overall performance.

Figure 9 shows the average accuracy across blocks for both Experiments 1 and 2. It suggests that participants performed better in the absence of high and low co-occurrence competitors. This pattern of results remains if one only compares RO trials of both experiments, suggesting that the presence of HC and LC competitors affected overall performance, not just accuracy in trials that included competitors with enhanced statistics. To evaluate this difference statistically, a binomial mixed model was conducted with participant, target (the object that was the correct referent) and stimulus as random effects. Experiment and block were the fixed effects (coded similarly to the prior analysis). The dependent variable was accuracy. Model selection suggested that a model with random intercepts for stimulus, target and participant as well as random slopes of block on participant, target and participant offered the best fit to the data. Using this model, the effect of block was highly significant (B = 1.55, SE = .165, Z = 9.425, p < .001), suggesting improvement over time. Crucially, the effect of experiment was significant (B = .990, SE = .503, Z = 1.969, p = .0489), as performance in Experiment 2 was better than in Experiment 1. The interaction of experiment and block was marginally significant (B = .468, SE = .274, Z = 1.709, p = .087), and may indicate that participants in Experiment 2 not only learned better but also learned more quickly. Thus, highly co-occurring competitors, which form a probabilistic context, appear to impede learning.

Figure 9.

Average accuracy across blocks for Experiments 1 and 2.

One concern is that these differences may not reflect differences in the overall quality of learning, but the fact that some of the trials in Experiment 1 (those with an HC or LC competitor) were simply harder (though they would have been harder only because people had formed spurious associations). Thus, we controlled for this in a second analysis, by comparing only the RO trials of Experiment 1 to all of the trials from Experiment 2 (which were all RO). We found that a model that included random intercepts for stimulus, target and participant with a random slope of block on participant was best supported by the data. Using this model, the effect of block was still highly significant (B = 1.57, SE = .140, Z = 11.30, p < .001). Interestingly, the effect of experiment was still marginally significant (B = 0.90, SE = .50, Z = 1.81, p = .07), suggesting tentatively that the difference in accuracy across the two experiments was not simply driven by lower accuracy in HC, HCLC and LC trials in Experiment 1, but that the inclusion of high co-occurrence competitors impeded overall learning. However, given this marginal significance, this result needs to be confirmed in further research. The interaction between experiment and block did not reach significance, p = .191.
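For readers who wish to check how the reported test statistics relate to the coefficients, the following sketch recomputes Z and the two-sided p-value from B and SE, assuming that the reported Z values are Wald statistics (Z = B/SE) evaluated against a standard normal distribution. That assumption, and the helper name `wald_test`, are ours; small discrepancies from the reported values are expected because B and SE are rounded in the text.

```python
import math

def wald_test(b, se):
    """Return (z, two-sided p) for a coefficient, assuming a Wald test
    against a standard normal reference distribution."""
    z = b / se
    # Two-sided tail area of N(0, 1): P(|Z| > |z|) = erfc(|z| / sqrt(2))
    p = math.erfc(abs(z) / math.sqrt(2))
    return z, p

# Coefficients and standard errors as reported in the text above.
reported = [
    ("experiment, all trials", 0.990, 0.503),
    ("experiment x block, all trials", 0.468, 0.274),
    ("experiment, RO trials only", 0.90, 0.50),
]
for label, b, se in reported:
    z, p = wald_test(b, se)
    print(f"{label}: Z = {z:.2f}, p = {p:.3f}")
```

The recomputed values fall close to those reported, which illustrates why the effect of experiment sits right at the conventional .05 threshold in the full analysis and just above it when only RO trials are compared.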

3.2.2 Autocorrelation Analysis

Our autocorrelation analyses used the same general statistical approach as in Experiment 1. This model included last-target-correct (centered), log-target-count (centered) and their interaction as fixed effects. In addition, random intercepts for participant, word and target object were added. We also included random slopes for last-target-correct, log-target-count and their interaction term on both participant and word.
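To make the two predictors concrete, the sketch below derives them from one participant's trial sequence. It is an illustration under assumptions rather than the analysis code: we assume that last-target-correct is the accuracy on the most recent earlier trial with the same word (undefined on the first encounter) and that log-target-count is the natural log of the number of encounters with that word so far. The function and field names are hypothetical.

```python
import math

def add_history_predictors(trials):
    """Add last_target_correct and log_target_count to each trial.

    trials: list of dicts with 'word' and 'correct' (0 or 1), in
    presentation order for a single participant.
    """
    last_correct = {}  # word -> accuracy on its most recent earlier trial
    count = {}         # word -> number of encounters so far
    out = []
    for t in trials:
        w = t["word"]
        count[w] = count.get(w, 0) + 1
        row = dict(t)
        # None marks the first encounter, where no prior response exists.
        row["last_target_correct"] = last_correct.get(w)
        # Assumption: natural log of the encounter number (1, 2, 3, ...).
        row["log_target_count"] = math.log(count[w])
        out.append(row)
        last_correct[w] = t["correct"]
    return out

def center(values):
    """Subtract the mean so that the predictor has mean zero."""
    mean = sum(values) / len(values)
    return [v - mean for v in values]

example = [
    {"word": "goba", "correct": 0},
    {"word": "pacho", "correct": 1},
    {"word": "goba", "correct": 1},
    {"word": "goba", "correct": 0},
]
for row in add_history_predictors(example):
    print(row)
```

Trials with an undefined last-target-correct value would need to be dropped before centering and model fitting; how first encounters were handled in the actual analysis is not specified here.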

We found that (as in Experiment 1) the model which included log-target-count and its interaction with last-target-correct (both were added as random slopes on participant and word) offered a significantly better fit than a model with last-target-correct alone (χ²(16) = 544.85, p < .001), replicating the previously reported influence of a gradual element in unsupervised learning. As in the previous analyses, there was a significant effect of last-target-correct (B = 2.16, SE = 0.25, Z = 8.49, p < .001) and of log-target-count (B = 1.33, SE = 0.17, Z = 8.21, p < .001), indicating that both accuracy on the previous learning instance and experience (time) positively predicted accuracy on a current trial. Similar to Experiment 1, the interaction between last-target-correct and log-target-count reached significance (B = .45, SE = 0.19, Z = 2.39, p = .017), suggesting that last-target-correct had a greater impact on performance later in the experiment.
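The model comparison above is a likelihood ratio test: twice the difference in log-likelihood between nested models is referred to a chi-square distribution whose degrees of freedom equal the number of added parameters. As a hedged illustration (not the software used for the reported analyses), the sketch below computes the upper-tail chi-square probability for integer degrees of freedom using only the Python standard library. The function names are hypothetical.

```python
import math

def chi2_sf(x, df):
    """Upper-tail probability P(X > x) for a chi-square variable with
    integer df >= 1."""
    if x <= 0:
        return 1.0
    half = x / 2.0
    if df % 2 == 0:
        q, k = math.exp(-half), 2             # Q(x; 2) = exp(-x/2)
    else:
        q, k = math.erfc(math.sqrt(half)), 1  # Q(x; 1) = erfc(sqrt(x/2))
    while k < df:
        # Recurrence on df:
        # Q(x; k+2) = Q(x; k) + (x/2)^(k/2) * exp(-x/2) / Gamma(k/2 + 1)
        q += math.exp((k / 2.0) * math.log(half) - half
                      - math.lgamma(k / 2.0 + 1.0))
        k += 2
    return q

def likelihood_ratio_test(loglik_reduced, loglik_full, df):
    """Return (chi-square statistic, p) for two nested models."""
    stat = 2.0 * (loglik_full - loglik_reduced)
    return stat, chi2_sf(stat, df)

# Statistic and degrees of freedom as reported in the text above.
print(chi2_sf(544.85, 16) < 0.001)
```

With a statistic of 544.85 on 16 degrees of freedom, the tail probability is far below .001, so the conclusion does not depend on the exact value of the threshold.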

3.3 Discussion

This experiment offered a clear answer to our third question, showing that participants learned more poorly in the presence of competitors that co-occur with a target word than in the presence of purely random competitors. This suggests an important caveat to our understanding of context. In natural situations, contexts like a kitchen or a park create sets of objects that frequently co-occur with each other and with their names. If such contexts are repeated across exposure (not just in the beginning of the experiment), this may create spurious associations between words and incorrect referents that can impede learning. It is likely that the statistical difference between Experiments 1 and 2 would have been more pronounced if learning in general had been more difficult, as most participants in Experiment 2 reached ceiling by block 2 (Figure 9).

Indirectly, this offers further evidence that people track more than one word-referent mapping hypothesis at a time, even if this approach to learning has the potential to impair overall performance. Finally, we replicated the autocorrelation results from Experiment 1 (in the absence of HC and LC competitors), demonstrating that a gradual element significantly increased the fit of the model to the data, even in the presence of a strong effect of the last response. This highlights the need for a theory of word learning that assumes that both factors influence learning, i.e., that participants must bring more information forward from prior trials (Questions 2 and 4).

4. Further Effects on Learning

4.1 Overview

Our previous autocorrelation analyses indicated that at least two factors influence learning on a trial-by-trial basis (the prior choice behavior and the gradual effect of number of exposures). We next extended our investigation to determine what other variables from prior trials may influence performance (Question 4). In part this is motivated by the previously described animal learning work (Wasserman et al., 2015), which suggests that a rich tapestry of information on prior encounters with a word can shape responding. This includes the distance between the last encounter with an object and the present trial, the learners’ knowledge of the foils, and the spatial arrangement of the items on prior trials (relative to that on the present trial). In this analysis, we asked whether such variables play a role in human cross-situational word learning over and above the previously examined number of exposures and/or prior accuracy.

For this purpose, we combined the data from Experiments 1 and 2 in order to obtain more power to detect small effects. As our baseline, we started with the more complex model including last-target-correct, log-target-count (both centered) and the interaction term as fixed effects, and the same random effect structure (random intercepts for participant, word and target object; random slopes for last-target-correct, log-target-count and their interaction on participant and word). During initial explorations, we also examined models which added experiment and its interactions (with the other terms) as fixed effects. However, this did not improve the fit of the model to the data over the baseline model (p = .104), so these terms were not included in the analyses presented here. It should be noted that the baseline effects of last-target-correct and log-target-count were similar in all cases to the prior analyses. Thus, we do not discuss them here and focus on the most important new findings.

We investigated the following factors: The delay between repetitions (last-trial-distance) may distinguish unsupervised from supervised learning (Carvalho & Goldstone, 2014), or indicate the involvement of a decaying working memory. We also assessed learners’ knowledge of the foils on the last encounter with the target (last-foil-accuracy). This could implicate some form of mutual-exclusivity or competition process that helps in correctly identifying the target (McMurray et al., 2012; Yurovsky, Yu, & Smith, 2013). Finally, we examined the degree to which visual-spatial factors may be involved by assessing whether the target object appeared in the same location on the last encounter (last-target-same-location). This may implicate a spatially organized working memory (Samuelson et al., 2011), a fairly naïve associative learner that had not yet determined the relevant features (Wasserman et al., 2015), or some kind of episodic memory. Each was examined in a separate model, adding one of these three factors along with its interactions to the baseline model (last-target-correct × log-target-count).
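The three factors described above can be made concrete with a sketch that derives them from one participant's trial sequence. The exact coding used in the analysis is not given here, so everything in this block is an assumption: last-trial-distance is taken as the number of trials intervening since the word was last the target, last-target-same-location compares the target's position with its position on that earlier trial, and last-foil-accuracy is the mean accuracy on the most recent trials on which each current foil was itself the target. The function and field names are hypothetical.

```python
def add_context_predictors(trials):
    """Add three history-based predictors to each trial.

    trials: list of dicts in presentation order for one participant with
    'word' (target), 'foils' (other displayed objects, identified by
    their words), 'target_pos' (screen location) and 'correct' (0 or 1).
    """
    # word -> (trial index, target position, accuracy) on the last
    # trial where that word was the target
    last_seen = {}
    out = []
    for i, t in enumerate(trials):
        row = dict(t)
        prev = last_seen.get(t["word"])
        if prev is None:
            # First encounter: no prior trial to refer back to.
            row["last_trial_distance"] = None
            row["last_target_same_location"] = None
        else:
            # Assumption: distance counts the intervening trials.
            row["last_trial_distance"] = i - prev[0] - 1
            row["last_target_same_location"] = int(prev[1] == t["target_pos"])
        # Accuracy on the last trial where each current foil was the target.
        foil_hist = [last_seen[f][2] for f in t["foils"] if f in last_seen]
        row["last_foil_accuracy"] = (
            sum(foil_hist) / len(foil_hist) if foil_hist else None
        )
        out.append(row)
        last_seen[t["word"]] = (i, t["target_pos"], t["correct"])
    return out

example = [
    {"word": "goba", "foils": ["pacho", "zati"], "target_pos": 0, "correct": 1},
    {"word": "pacho", "foils": ["goba", "mefa"], "target_pos": 1, "correct": 0},
    {"word": "zati", "foils": ["mefa", "pacho"], "target_pos": 2, "correct": 1},
    {"word": "goba", "foils": ["pacho", "zati"], "target_pos": 0, "correct": 1},
]
for row in add_context_predictors(example):
    print(row["word"], row["last_trial_distance"],
          row["last_target_same_location"], row["last_foil_accuracy"])
```

Other codings are equally defensible, for example treating last-foil-accuracy as a binary indicator of whether at least one foil was answered correctly, and the choice could affect the size of the estimated effects.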

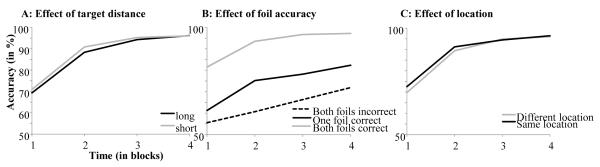

We started by considering the number of trials between the prior encounter with the word and the current trial. Figure 10A shows a small effect of last-trial-distance such that a short distance between the current and prior encounter led to better learning, though this was most pronounced early in training. A model including last-trial-distance (and its interactions) significantly improved the fit to the data (χ²(4) = 36.77, p < .001) over the baseline model. This was due to a significant main effect of last-trial-distance on accuracy (B = −.013, SE = .004, Z = −3.02, p = .002). This indicates that an increase in target distance decreases accuracy (regardless of whether participants selected the target or not), potentially suggesting some form of recent memory that may influence performance. Last-trial-distance also interacted with last-target-correct (B = −.03, SE = .01, Z = −3.45, p < .001) as well as with log-target-count (B = .01, SE = .005, Z = 2.51, p = .01), and the three-way interaction was also significant (B = .02, SE = .008, Z = 2.19, p = .03). Earlier in the experiment, the effect of last-target-correct depended on last-trial-distance, with a higher distance increasing the probability of an accurate trial if one was incorrect beforehand. This may indicate that participants’ response on a current trial was influenced by what they remembered having selected when they last encountered that target. This memory effect may decrease if the distance is higher or when participants have already acquired a majority of the mappings (late in the experiment). However, the main effect (which persisted alongside the interactions) also suggests some kind of momentum: learning was better if items were repeated nearby, regardless of the response.

Figure 10.

Main effects of target distance (Panel A), foil accuracy (B) and location of target in prior trial (C). Target distance was separated by a median split, median = 6.

We next examined the effect of foil accuracy (Figure 10B). This effect is very pronounced, as accuracy is substantially higher if participants had previously responded correctly to the foil objects of a current trial. When we added last-foil-accuracy and its interactions to the model, this significantly improved the fit of the model over and above the baseline (χ²(3) = 12.785, p = .005). This was due to a significant main effect of last-foil-accuracy: Learners were more likely to be correct if they had been correct on a trial on which either of the foils had been the target (B = .34, SE = .122, Z = 2.76, p = .006). This also significantly interacted with log-target-count (B = .34, SE = .11, Z = 3.09, p = .002), as participants were more likely to be accurate if they knew one or both foils late in the experiment. This would seem to implicate some sort of eliminative process by which increasing knowledge of the foils can be used to rule them out for a target word. This also parallels the last-target-correct × log-target-count interaction, suggesting that the influence of foil knowledge is also a product of learning.

Finally, we examined whether learning benefited when the target appeared in the same location on two successive encounters (last-target-same-location, Figure 10C). This prediction easily falls out of associative or exemplar learning accounts in which words are not just associated with the objects, but perhaps with the whole context, or with co-occurring (irrelevant) factors such as spatial location. As Figure 10C shows, there was a small benefit when the target reappeared in the same spatial location as on the last encounter, though like target distance, this effect waned over training. We found that adding the main effect of last-target-same-location (but not its interactions) improved the fit of the model (χ²(1) = 4.1, p = .04). Accuracy was significantly increased if the target had appeared in the same location on the previous encounter (independently of whether one was correct on that trial; B = .15, SE = .07, Z = 2.03, p = .042). The fact that participants’ learning is influenced by a target’s position even if they did not click on it within that trial is strong evidence that they must be sensitive to variables beyond their current response (or hypothesis). This factor did not interact with any of our other measures (adding the interactions did not increase the fit of the model), suggesting a locus in the dynamics of learning and/or memory.

4.2 Discussion