Abstract

Successful speech perception requires that listeners map the acoustic signal to linguistic categories. These mappings are not only probabilistic, but change depending on the situation. For example, one talker’s /p/ might be physically indistinguishable from another talker’s /b/ (cf. lack of invariance). We characterize the computational problem posed by such a subjectively non-stationary world and propose that the speech perception system overcomes this challenge by (1) recognizing previously encountered situations, (2) generalizing to other situations based on previous similar experience, and (3) adapting to novel situations. We formalize this proposal in the ideal adapter framework: (1) to (3) can be understood as inference under uncertainty about the appropriate generative model for the current talker, thereby facilitating robust speech perception despite the lack of invariance. We focus on two critical aspects of the ideal adapter. First, in situations that clearly deviate from previous experience, listeners need to adapt. We develop a distributional (belief-updating) learning model of incremental adaptation. The model provides a good fit to known and novel phonetic adaptation data, including perceptual recalibration and selective adaptation. Second, robust speech recognition requires that listeners learn to represent the structured component of cross-situation variability in the speech signal. We discuss how these two aspects of the ideal adapter provide a unifying explanation for adaptation, talker-specificity, and generalization across talkers and groups of talkers (e.g., accents and dialects). The ideal adapter provides a guiding framework for future investigations into speech perception and adaptation, and more broadly language comprehension.

Keywords: speech perception, generalization, adaptation, statistical learning, hierarchical structure, lack of invariance, non-stationarity

In order to understand speech, listeners have to map a continuous, transient signal onto discrete meanings. This process is widely assumed to involve the recognition of discrete linguistic units, such as phonetic categories, words, and sentences. The relative stability with which we usually seem to recognize these units belies the formidable computational challenge that is posed by even the recognition of the smallest meaning-distinguishing sound units (such as a /b/ or /p/). In this paper, we characterize this computational problem and propose how our speech processing system overcomes one of its most challenging aspects, the variability of the signal across different situations (e.g., talkers). This problem is not unique to speech recognition, but is a general property of inferring underlying categories and intentions in a changing (i.e., subjectively non-stationary) world (see references in Qian, Jaeger, & Aslin, 2012). The framework that we propose here thus has broad relevance for understanding how people manage changes in the statistical properties of stimuli across different perceptual and cognitive tasks.

The recognition of phonetic categories is broadly assumed to involve the extraction and combination of acoustic and, if present, visual cues. This is a complex task for several reasons. The speech signal is both transient and typically unfolds at speeds not under the listener’s control. Additionally, perceptual cues to phonetic categories are often asynchronously distributed across the speech signal. That means that some cues to a phonetic category contrast are detectable several syllables in advance of the phonetic segment, while at the same time cues following a segment can still be informative (e.g., rhoticity, Heid & Hawkins, 2000; Tunley, 1999). Beyond the extraction of acoustic cues from the speech signal, there are two problems which have puzzled researchers for decades. First, the mapping from cues to phonetic features or phonetic categories is non-deterministic: from the perspective of a listener, phonetic categories form distributions over multiple cue dimensions, and these distributions overlap with those of other categories. Notably, even multiple instances of the same phonetic category produced by the same talker in the same phonetic context will have different physical properties (Allen, Miller, & DeSteno, 2003; Newman, Clouse, & Burnham, 2001). One cause for these distributions is noise in the biological systems underlying linguistic production (e.g., motor noise in the articulators). Similarly, the perceptual system itself is noisy: neurons that respond to certain acoustic features do not deterministically fire when that feature is present (Ma, Beck, Latham, & Pouget, 2006). Additionally, acoustic properties of the environment, such as background noise, can further alter the linguistic signal.

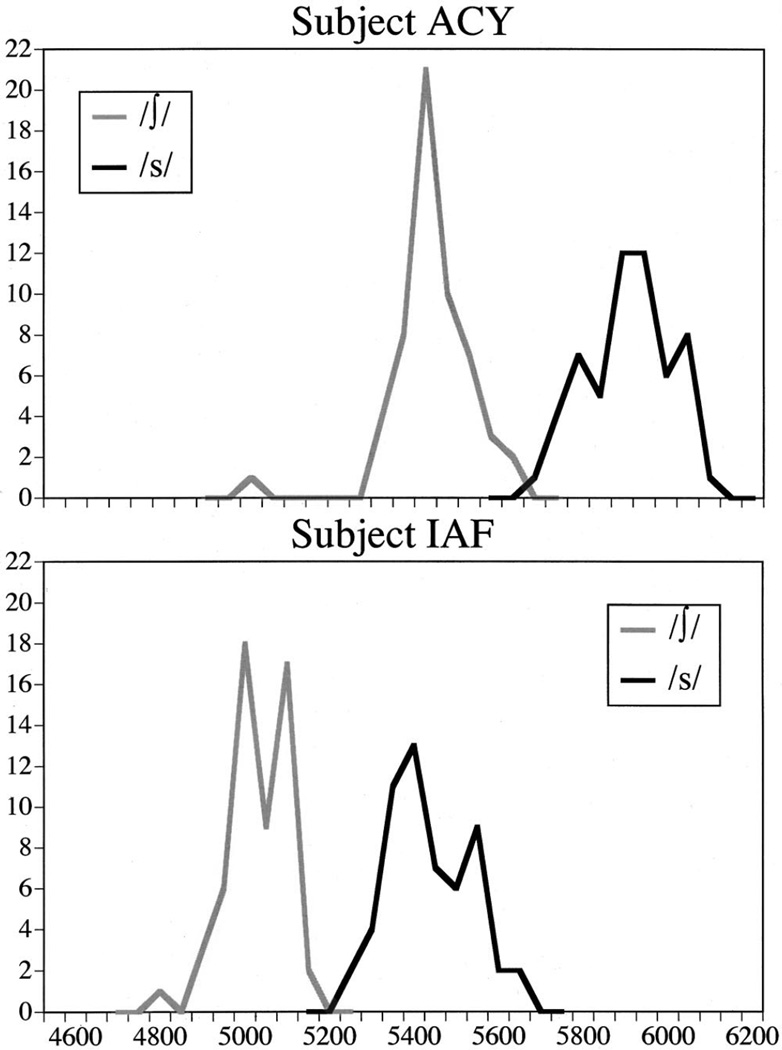

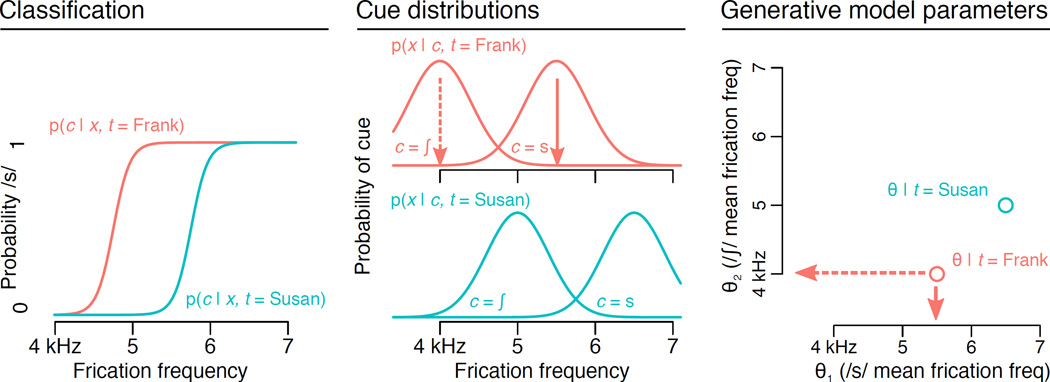

However, arguably the biggest challenge to speech perception is that the mapping from acoustic cues to phonetic categories can vary across situations. A ‘situation’ could be characterized in terms of an individual talker or a group of talkers with a similar way of speaking, or other aspects of the environment which lead to systematic changes in speaking style (like a noisy bar). For example, different talkers sometimes realize even the same phonetic categories, in the same phonetic context, with dramatically different cue distributions (e.g., Allen et al., 2003; McMurray & Jongman, 2011; Newman et al., 2001). These differences might arise from fixed, physical differences in, for instance, vocal tract size, but they also arise from variable or stylistic factors like language, dialect, or sociolect (e.g., Babel & Munson, 2014; Johnson, 2006; Labov, 1972; Pierrehumbert, 2003). These differences in the cue-to-category mapping can be substantial. Figure 1 shows the distributions of one of the primary cues distinguishing between /s/ and /ʃ/ as produced by two different talkers. Such between-talker variability means that one talker’s “ship” is physically more like another’s “sip”. This problem is known as the lack of invariance and is one of the oldest problems in speech perception (Liberman, Cooper, Shankweiler, & Studdert-Kennedy, 1967). The focus of this article is how listeners manage to accommodate such systematic variability and achieve robust speech recognition.

Figure 1.

Distribution of frication frequency centroids, a crucial cue to the contrast between /s/ and /ʃ/, from two talkers (reproduced with permission from Newman et al., 2001, copyright 2001 Acoustical Society of America).

Overcoming the lack of invariance: The proposal

In the face of the sort of variability between situations—talkers, in this case—seen in Figure 1, it is natural to wonder how we can understand each other at all. We propose that the answer to this question is three-fold:

1. recognize the familiar,
2. generalize to the similar, and
3. adapt to the novel.

As we discuss below, at least the first and the last of these have been more or less explicitly assumed in previous work, and there is at least preliminary evidence for the second. In a familiar situation, the speech recognition system has a great deal of previous experience to draw on, and by recognizing a familiar situation it can take advantage of this previous experience. Recognition of the familiar underlies, for example, talker-specific interpretation of the acoustic signal (Creel, Aslin, & Tanenhaus, 2008; Eisner & McQueen, 2005; Goldinger, 1998; Kraljic & Samuel, 2007; Nygaard & Pisoni, 1998). Similarly, generalizing to a novel situation based on similar previous experience means the speech recognition system doesn’t have to start from scratch each time a new situation is encountered. For example, such generalization allows us to recognize an accent and adjust our interpretations based on previous experience with similar talkers (Baese-Berk, Bradlow, & Wright, 2013; Bradlow & Bent, 2008; Sidaras, Alexander, & Nygaard, 2009). At the same time, novel situations might require adaptation beyond what is expected based on previous experience. For example, when encountering a talker with a novel dialect or accent, the speech recognition system must be prepared to adapt rapidly and flexibly.

We propose that all three of these strategies arise from the function that the speech recognition system fulfills (i.e., the typical goals of speech recognition), and that the basic design of this system reflects the fact that it must function efficiently under normal circumstances. Specifically, we propose that the three strategies emerge from the organizational constraints on the speech recognition system imposed by the presence of variability both within a single situation and between situations. These constraints lead naturally to a few conceptual components for the proposed framework. First, because there is variability within a situation, the mapping between cues and categories is inevitably probabilistic. This makes speech recognition a problem of inference under uncertainty and implies that a robust speech recognition system must use distributional (statistical) knowledge (Clayards, Tanenhaus, Aslin, & Jacobs, 2008; Feldman, Griffiths, & Morgan, 2009; Norris & McQueen, 2008).

Second, because cue distributions themselves vary—sometimes unpredictably—across situations, the system must be prepared, when necessary, to engage in distributional/statistical learning. This is closely related to the notion of life-long implicit learning (Botvinick & Plaut, 2004; Elman, 1990; Chang, Dell, & Bock, 2006), as well as statistical learning theories of language acquisition (Feldman, Griffiths, Goldwater, & Morgan, 2013; McMurray, Aslin, & Toscano, 2009; Vallabha, McClelland, Pons, Werker, & Amano, 2007), a connection we return to below.

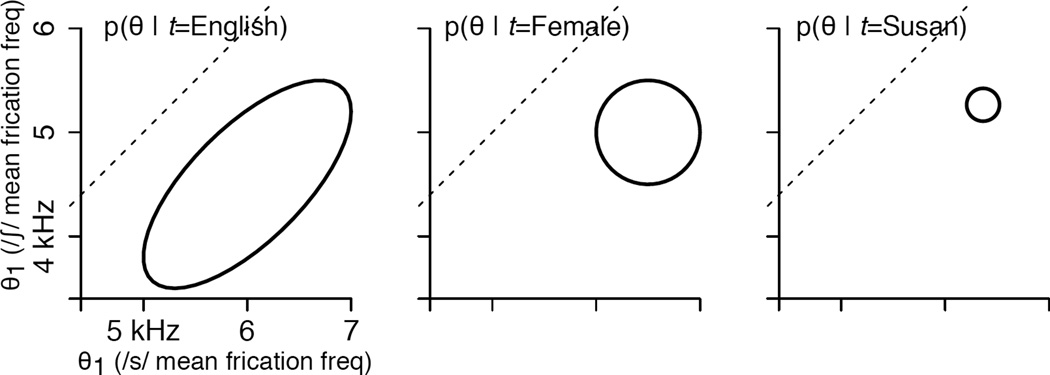

Third, cue distributions do not vary arbitrarily across situations. Rather, the world is structured. For instance, a listener is more likely to encounter a particular familiar talker’s cue distributions again, relative to any arbitrary cue distributions, and likewise they are more likely to encounter cue distributions that are similar to those encountered in the past, because of regularities in how talkers vary within a language or more specific grouping like gender, dialect, accent, etc. We propose that in order to take advantage of such structured variability, the speech perception system does not only engage in distributional learning. In its most basic sense, this is demonstrated by our ability to recognize previously encountered talkers, and use talker-specific experience to guide speech perception. Going beyond talker-specificity, we will discuss evidence that argues for sensitivity to structure over groups of talkers or situations. In a world where speech statistics vary in structured ways, life-long adaptation alone is not sufficient for robust speech perception. A robust speech perception system should take advantage of structure in the world that allows previous experience to inform current processing (for similar reasoning applied to other cognitive domains, cf. Qian et al., 2012). It is, we propose, sensitivity to this structure in the world that underlies recognition of familiar situations and generalization to similar ones.

In this paper, we elaborate on this proposal, review the relevant literature, and develop a framework in the tradition of ideal observer models and normative/Bayesian inference (Anderson, 1990) that, we hope, will help guide future work on speech perception. As we detail below, the proposed framework, which we dub the ideal adapter, understands all of (1) to (3) above (i.e., recognition, generalization, and distributional learning) as the result of selecting and adapting the appropriate generative model for the current situation based on the integration of prior and present experience (hence, the name for the proposed framework). This brings a unifying and—at least in parts—formalized computational framework to a set of ideas that have been assumed—more or less explicitly—by others before us. For example, it is widely assumed that speech perception is talker-specific (e.g., Creel & Bregman, 2011; Pardo & Remez, 2006; Pisoni & Levi, 2007) and recent work has begun to investigate our ability to generalize across talkers (e.g., Bradlow & Bent, 2008; Eisner & McQueen, 2005; Kraljic & Samuel, 2007; Sidaras et al., 2009). The ideal adapter framework ties together these different lines of work, emphasizing the crucial roles of both the structure of listeners’ prior knowledge and their ability to learn the statistics of novel situations. It has so far not been fully recognized, we submit, just how far-reaching the consequences of these two aspects of speech recognition are. Laying out the consequences of these two aspects of the framework thus forms the core of this article.

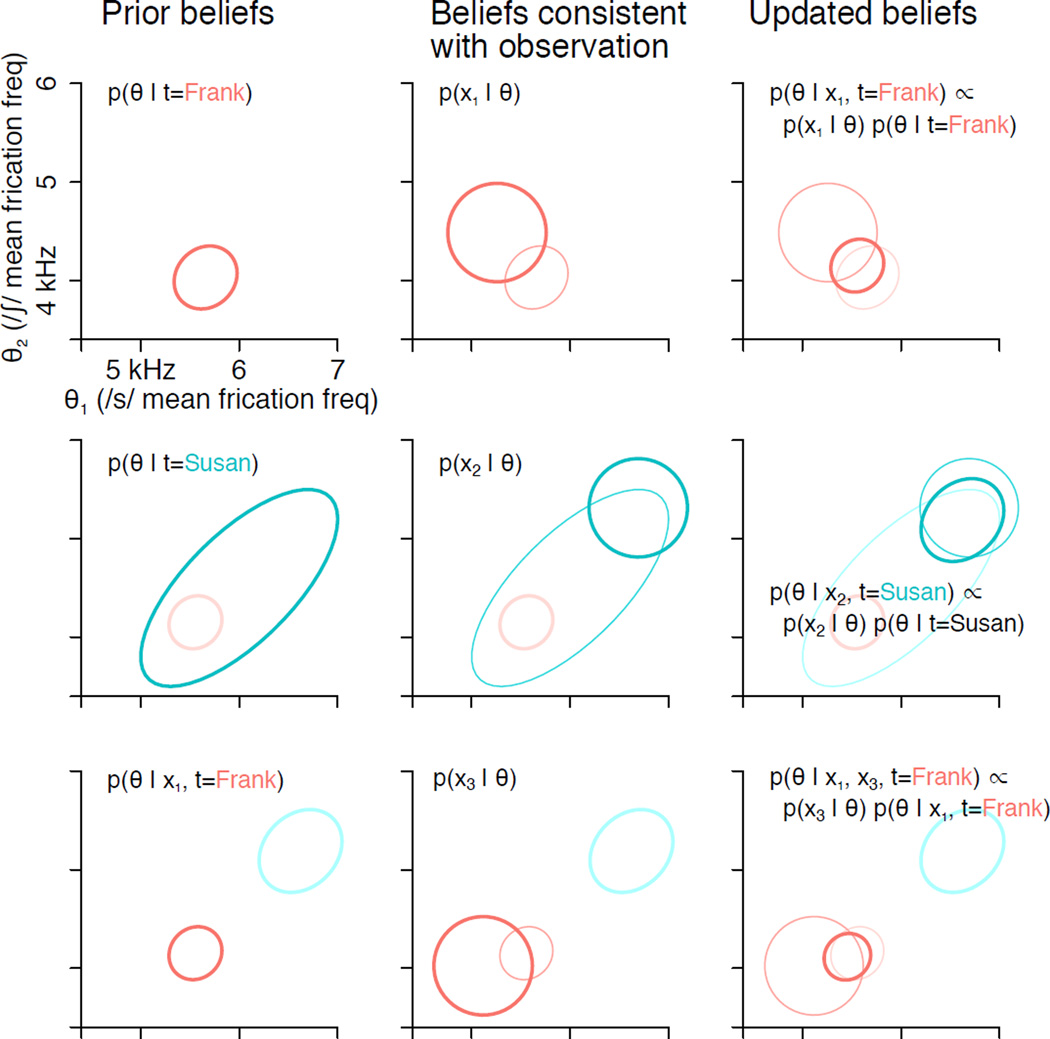

We begin our exposition in Part I with adaptation to the novel. For this, we focus on situations in which listeners have high certainty (i.e., ‘know’) that they need to adapt. We formalize the problem of adaptation and test the predictions of the ideal adapter framework through an implemented model. We focus on two well-studied phonetic adaptation phenomena: the first where listeners recalibrate one phonetic category in response to auditorily ambiguous stimuli labeled as one category (perceptual recalibration or phonetic adaptation, Bertelson, Vroomen, & de Gelder, 2003; Kraljic & Samuel, 2005; Norris, McQueen, & Cutler, 2003), and the second where listeners change their classification behavior after repeated exposure to the same prototypical stimulus (selective adaptation, Eimas & Corbit, 1973; Samuel, 1986). This leads us to develop and test novel predictions of the proposed framework. In this part of the paper, we spend a substantial amount of time developing intuitions about the mechanics of how—in the ideal adapter framework—listeners can update their beliefs about the cue distributions in the current situation based on direct experience. In doing so, we illustrate how the proposed perspective relates to and diverges from standard accounts of speech recognition.

In Part II, we turn to situations where previous knowledge is crucial for robust speech perception: recognition of familiar situations and generalization to similar novel situations. In contrast to the flexibility demanded by novel situations, in familiar situations listeners can benefit from stable representations of past experience. The ideal adapter framework provides a natural link between the distribution of speech statistics in the world—at the level of individual talkers and groups (e.g., dialect, gender, language)—and different strategies for how listeners can achieve robust speech perception in the face of the lack of invariance. In Part II we will discuss what structure there is in the world that listeners can take advantage of and review the evidence that they do take advantage of it. In doing so, we identify directions for future research and isolate a number of specific questions that we consider particularly critical for our understanding of the human speech recognition system.

Finally, we close in Part III by putting the framework we have developed into broader perspective. In particular, we will address how our approach relates to other approaches to the problem of the lack of invariance. Following that, we will discuss how our framework might inform broader issues in speech perception, language comprehension, and more domain-general learning and adaptation. Our approach is a computational-level one and as such compares only indirectly to mechanistic- or algorithmic-level approaches (Marr, 1982), but it nevertheless provides a set of tools for reasoning about speech perception (and language comprehension more generally) which can help sharpen questions for research at other levels. For example, the questions raised by the ideal adapter framework also speak to the debate between episodic, exemplar-based or more abstract phonetic representations (Johnson, 1997a; Goldinger, 1998; McClelland & Elman, 1986; Norris & McQueen, 2008; Pierrehumbert, 2003). They also relate to the acquisition of phonetic categories, which can be seen as another type of distributional learning problem (Maye, Werker, & Gerken, 2002; McMurray et al., 2009; Vallabha et al., 2007), and to language processing at higher levels (e.g., Fine, Jaeger, Farmer, & Qian, 2013; Grodner & Sedivy, 2011; Kamide, 2012; Kurumada, Brown, & Tanenhaus, 2012; Kurumada, Brown, Bibyk, Pontillo, & Tanenhaus, 2014). We also discuss recent research that has found adaptive behavior in language processing above the level of speech perception. At its most basic, the ideal adapter framework also contributes to the burgeoning literature on learning in a variable world (e.g., change detection, Gallistel, Mark, King, & Latham, 2001; hierarchical reinforcement learning, Botvinick, 2012; motor learning, Körding, Tenenbaum, & Shadmehr, 2007). 
Along with other recent approaches, the ideal adapter stresses that the cross-situational statistics of the world, though variable, are structured, and that our cognitive systems have evolved to take advantage of this structure.

Part I

The ideal adapter framework

Adaptation in speech perception has received a great deal of attention recently. For example, when listeners initially encounter accented speech, they process it more slowly and less accurately, but this disadvantage dissipates within a matter of minutes (Bradlow & Bent, 2008; Clarke & Garrett, 2004 and references therein). Similarly, listeners rapidly adapt to synthesized and otherwise distorted speech (e.g., Davis, Johnsrude, Hervais-Adelman, Taylor, & McGettigan, 2005). Adaptation is not limited to cases of highly unusual pronunciation, such as foreign accents. Even relatively subtle divergences from standard cue distributions can lead to adaptation. For example, listeners adapt to a talker who produces cue distributions with a typical mean value but less variability than normal (Clayards et al., 2008). This suggests that continuous and implicit adaptation to subtle deviations from auditory expectations is a pivotal component of the human speech perception system.

What is lacking thus far, however, is a better understanding of how and when we adapt. Specifically, how do listeners detect that their current linguistic representations are inadequate for the current situation, and how is evidence from the currently processed speech stream integrated with previous experience in order to achieve adaptation? Despite the central importance of the lack of invariance problem to speech perception and language understanding (Liberman et al., 1967; Pardo & Remez, 2006), to date there are few cognitive models of adaptation, and as we discuss below, those that do exist do not link it to other strategies for dealing with the lack of invariance. State of the art models of speech perception have begun to address the non-determinism inherent in the mapping from cues to categories, but ignore or abstract away from the specific contributions of the lack of invariance (Feldman et al., 2009; Feldman, Griffiths, et al., 2013; Norris & McQueen, 2008).1

We propose that the first important step is to ask why phonetic adaptation occurs at all, or rather why one would expect speech perception to exhibit adaptive properties. To that end it is helpful to understand speech perception as a problem of inference under uncertainty. The acoustic cues that provide information about the talker’s intended message are variable and ambiguous, and thus each individual cue is only partially informative. In order to effectively infer the underlying message, information must be integrated from many sources, and as we will discuss below, this must be guided by knowledge of the distribution of cues associated with each linguistic unit. However, because of the lack of invariance, these distributions differ across situations (e.g., talkers).

Adaptation through belief updating

Our central proposal is twofold. First, listeners do not have direct access to the true distributions but rather uncertain beliefs about them based on a limited number of observations. Second, inaccurate beliefs about the underlying distributions can lead to slowed or inaccurate phonetic categorization, and in order to achieve robust speech perception across situations, listeners must adapt. In this view, adaptation reflects a sort of incremental distributional learning, and such distributional learning can be computationally characterized as belief updating. This incremental distributional learning has to integrate recent experience with a novel situation with prior knowledge and assumptions about the language. In this sense, the proposed account builds and expands on the general idea of life-long implicit learning (Botvinick & Plaut, 2004; Chang et al., 2006; Elman, 1990) and the idea that the processing of language input is inevitably tied to implicit learning (e.g., Clark, 2013; Dell & Chang, 2014; Jaeger & Snider, 2013). Unlike much of this work, however, we will argue that the implicit beliefs listeners hold based on previous experience are not unstructured. Rather, they reflect higher-level knowledge (beliefs) about different talkers, groups of talkers, dialects and accents, and so on. We return to this in the second part of the paper.

The first proposition of our framework is that human speech perception relies on a generative model, or the listener’s knowledge of how linguistic units (words, syllables, biphones, phonetic categories, etc.) are realized by different distributions of acoustic cues. Such knowledge allows for speech perception to proceed by comparing how well each possible explanation—higher-level linguistic unit—predicts the currently observed signal. The proposal that language comprehension proceeds via prediction of the signal accounts for a variety of properties of language understanding beyond the ones discussed here (cf. Dell & Chang, 2014; Farmer, Brown, & Tanenhaus, 2013; Jaeger & Snider, 2013; MacDonald, 2013; Pickering & Garrod, 2013) and is closely related to similar proposals from visual perception and other domains (Clark, 2013; Friston, 2005; Hinton, 2007; Y. Huang & Rao, 2011; Rao & Ballard, 1999).

Our second proposition is that the cue values predicted from a given linguistic unit depend not only on what is being said (the phonetic category, biphone, word, etc.) but also on who is saying it, and good speech perception depends on using an appropriate generative model for the current talker, register, dialect, etc. The listener never has access to the true generative model, but rather only their uncertain beliefs about that generative model. Thus, adaptation can be thought of as an update in the listener’s talker- or situation-specific beliefs about the linguistic generative model. The idea that speech adaptation reflects learning about the linguistic generative model is not in and of itself novel, but it has largely been implicit in the empirical literature thus far, and our proposal provides an explicit framework and formalization for understanding the link between learning and processing in speech perception.

Our goal is to provide a framework for understanding, on the one hand, how listeners might best represent past experience with different situations, and on the other hand how listeners can integrate that previous experience with evidence from the currently processed speech signal in order to infer an appropriate generative model for each situation. As we discuss below, the listener needs to bring their beliefs about the distribution of cue values for each category into alignment with the actual distribution that the talker is producing. Because the speech signal unfolds over time, and because the fine-grained acoustic information fades rapidly, this belief updating must be done incrementally. However, this is difficult because each individual speech sound is corrupted by the intrinsic variability of the speech production, transmission, and perception process, and hence not an unambiguous cue to the underlying distribution. That is, when a listener hears a cue value that they do not expect, it could be due either to a change in the underlying distributions, or because deviations from prototypical cue values happen for a variety of other reasons (muscle fatigue, coarticulation, background noise, etc.). The question is thus how the listener should incorporate each new piece of evidence into their beliefs. We address this question by developing an ideal adapter framework, which, in the tradition of computational-level/rational analysis (Anderson, 1990; Marr, 1982), sets out the statistically optimal way to do this integration. By this, we mean that adaptation reflects an inference process which combines prior beliefs and recent experience proportional to the degree of confidence in each.2,
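To build intuition for what it means to combine prior beliefs and recent experience in proportion to the confidence in each, the following minimal Python sketch updates a Gaussian belief about the mean cue value of a single category, one observation at a time. It is a deliberate simplification and not the model developed in this paper: it assumes that the within-category variance (`noise_var`) is known, that the category label of each token is known, and that all numerical values (F2 locus in Hz) are hypothetical. The function name `update_mean_belief` is likewise an illustrative choice.

```python
def update_mean_belief(prior_mean, prior_var, observations, noise_var):
    """Incrementally update a Gaussian belief about a category's mean cue value.

    Assumes (for illustration only) a known within-category variance `noise_var`
    and a Gaussian prior belief with mean `prior_mean` and variance `prior_var`.
    """
    mean, var = prior_mean, prior_var
    for x in observations:
        # The weight on the new observation grows with uncertainty in the
        # current belief and shrinks with the variability of single tokens.
        weight = var / (var + noise_var)
        mean = mean + weight * (x - mean)  # move the belief toward the observation
        var = (1.0 - weight) * var         # each observation reduces uncertainty
    return mean, var


# Hypothetical scenario: the listener expects a /b/ mean of 1100 Hz,
# but the current talker consistently produces /b/ near 1300 Hz.
PRIOR_MEAN, PRIOR_VAR = 1100.0, 100.0 ** 2
NOISE_VAR = 150.0 ** 2

mean_1, var_1 = update_mean_belief(PRIOR_MEAN, PRIOR_VAR, [1300.0], NOISE_VAR)
mean_20, var_20 = update_mean_belief(PRIOR_MEAN, PRIOR_VAR, [1300.0] * 20, NOISE_VAR)
```

After a single token the belief moves only part of the way toward the talker's productions, because one unexpected cue value could also reflect ordinary within-category variability. With more tokens the belief approaches the talker's actual mean and its variance shrinks.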

The ideal listener or phonetic categorizer

Our ideal adapter framework builds on a foundation of ideal listener models, which describe the problem of speech perception as statistical inference of the talker’s intentions (Clayards et al., 2008; Feldman et al., 2009; Norris & McQueen, 2008; Sonderegger & Yu, 2010). Such inference includes inferring intermediate linguistic units, like phonetic categories, either as a means to another end or as an end in itself, as in the case of explicit experimental phonetic categorization tasks. Because we focus specifically on the problem of inferring phonetic categories, for our purposes an ideal listener model is better characterized as an ideal phonetic categorizer model. For the broader goal of understanding speech perception, it should, however, be kept in mind that the human listener is not just a phonetic categorization machine, and phonetic categorization typically serves other ends (such as lexical access, Norris & McQueen, 2008, or even the successful inference of communicative intentions, Jaeger & Ferreira, 2013).

Because of the inherent variability of how a phonetic category is realized acoustically, any particular cue value is in principle ambiguous, and thus phonetic categorization is a problem of inference under uncertainty. Such inference can be formally expressed in the language of Bayesian statistics. In the general case, the posterior probability of each category $C = c_i$ after observing cue value $x$ is related to the prior probability of $c_i$, $p(C = c_i)$, and the likelihood of $x$ under category $c_i$, $p(x \mid C = c_i)$, according to Bayes rule:

$$p(C = c_i \mid x) = \frac{p(x \mid C = c_i)\, p(C = c_i)}{\sum_j p(x \mid C = c_j)\, p(C = c_j)}$$

Because the denominator of the fraction does not depend on the specific category $c_i$ and only serves to ensure that all of the posterior probabilities $p(C = c_i \mid x)$ sum up to one, it is often omitted and the relationship is written as a proportionality:

$$p(C = c_i \mid x) \propto p(x \mid C = c_i)\, p(C = c_i)$$

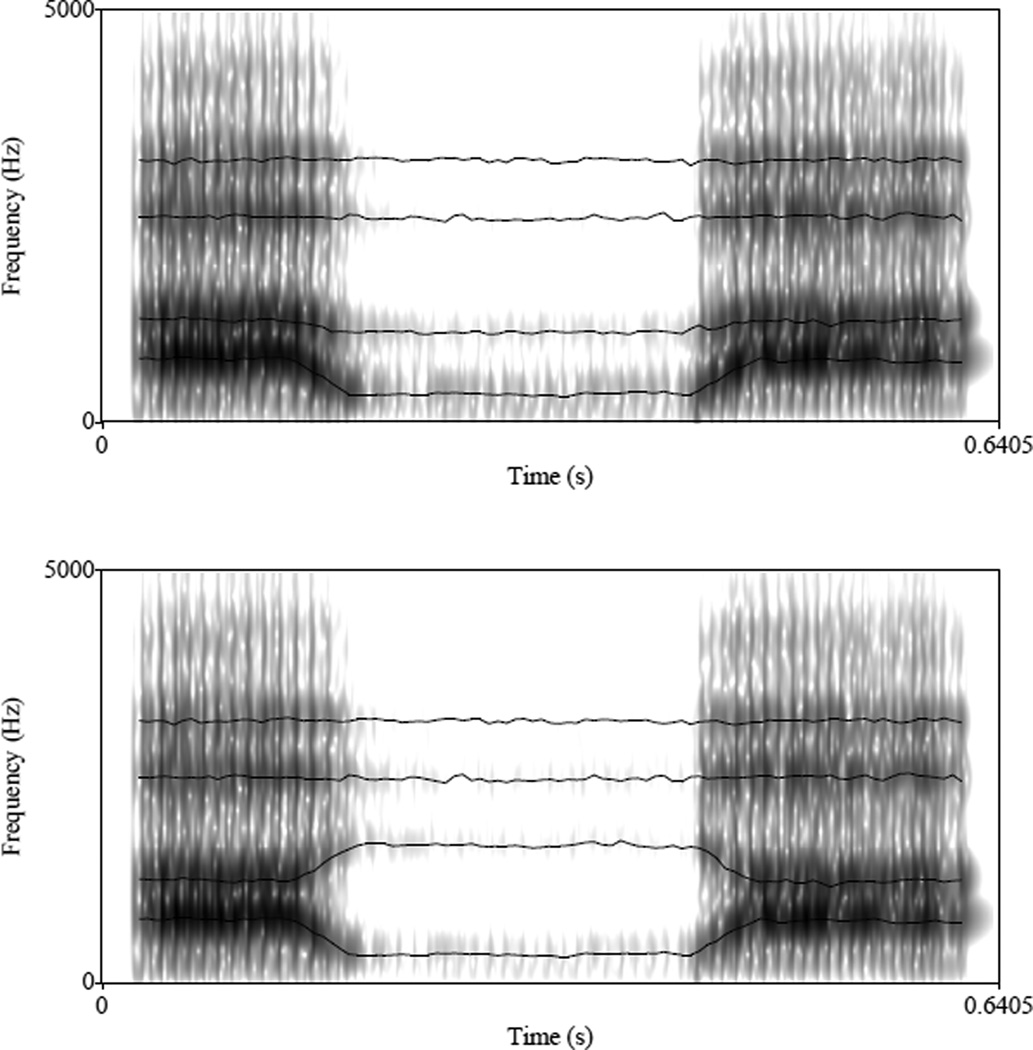

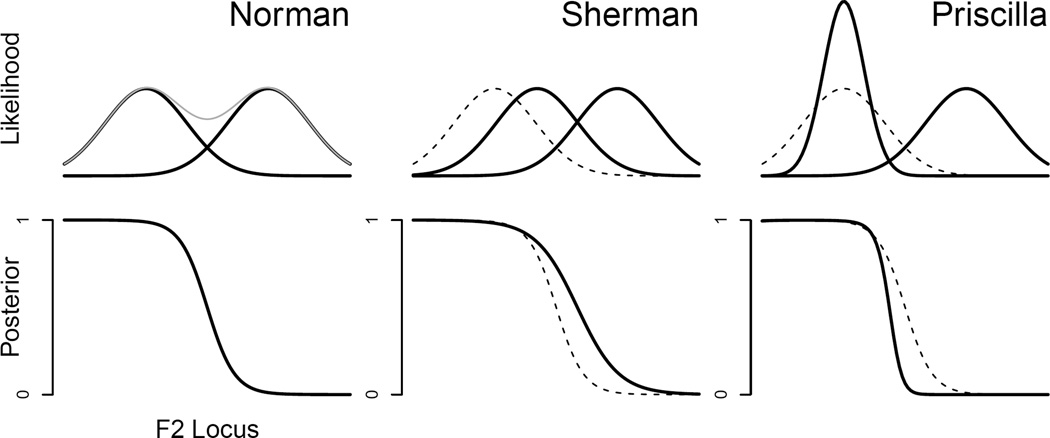

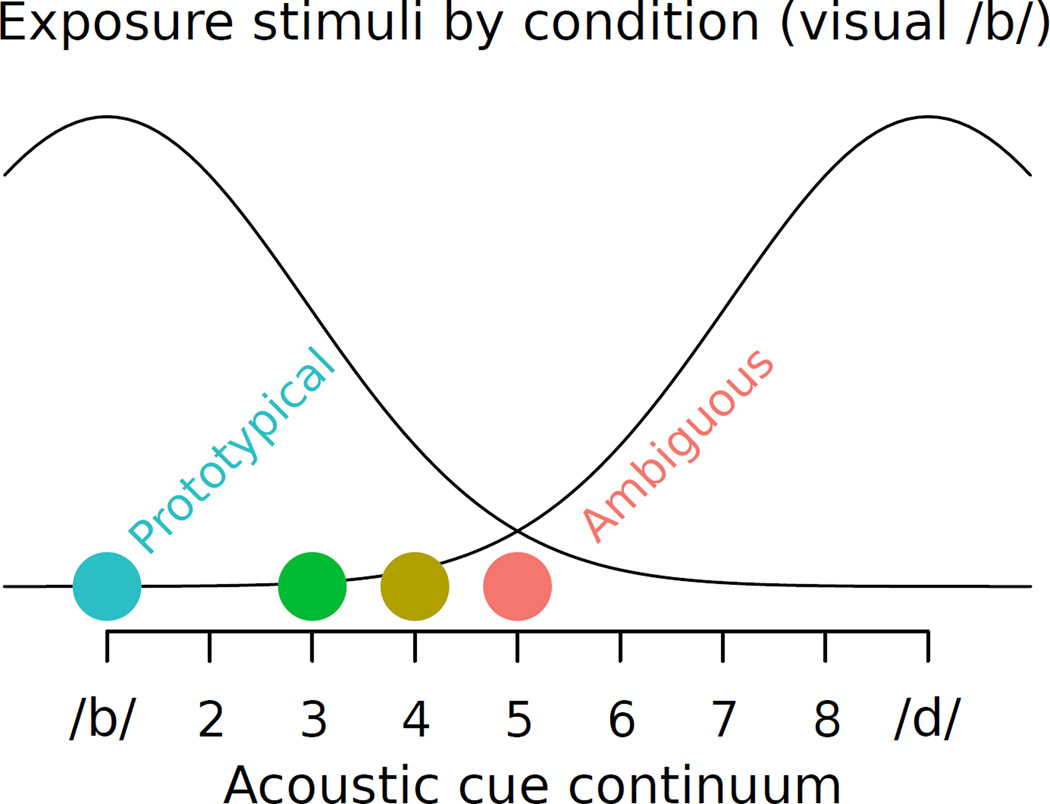

In this paper, we will begin by addressing a very simplified phonetic categorization problem, in which the listener is trying to decide whether a given cue value is a /b/ or /d/, and later discuss how the approach we develop applies in general. One important cue to this phonetic contrast is the F2 locus, or “target” of the second formant transition (Delattre, Liberman, & Cooper, 1955). Figure 2 shows the spectrograms corresponding to synthesized /aba/ and /ada/ tokens (synthesized by Vroomen, van Linden, Keetels, de Gelder, & Bertelson, 2004). Figure 3 (left panel) shows schematically how the distributions of F2 loci differ for /b/ and /d/: /b/ typically has a lower F2 locus, but there is some variability for both /b/ and /d/. There is thus a continuum from /b/-like to /d/-like F2 locus values.

Figure 2.

Spectrograms for /aba/ (top) and /ada/ (bottom) with formant tracks (synthesized as described in Vroomen et al. (2004) and provided by Jean Vroomen). Note the higher second formant (F2) locus for the transitions into and out of the closure for /ada/.

Figure 3.

Relationship between F2 locus likelihood functions p(x|c) (top) and posterior probability of /b/, or classification function p(c|x) (bottom; assuming p(c) = 0.5 for both c =/b/ and /d/), for three different talkers: a ‘normal’ talker (Norman), a ‘shifted’ talker (Sherman), and a ‘precise’ talker (Priscilla). Dashed lines show the /b/ likelihood function and classification function corresponding to the ‘normal’ talker. Light gray line in top left shows the marginal likelihood, p(x) = Σ p(x|c)p(c), which corresponds to the overall distribution of cue values, regardless of which category they came from, and is the sum of the two likelihood functions.

Let’s assume for simplicity’s sake that the listener is only considering F2 locus as a cue to the /b/-/d/ contrast. Given an observed F2 locus value, the listener must infer how likely it is that the talker intended to produce the phonetic category C =/b/. That is, what is the posterior probability p(C = b | F2 locus)?3 This quantity is found via Bayes rule:

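$$
\begin{align}
p(C = b \mid \text{F2 locus}) &= \frac{p(\text{F2 locus} \mid b)\, p(b)}{p(\text{F2 locus})} \tag{1} \\
&= \frac{p(\text{F2 locus} \mid b)\, p(b)}{p(\text{F2 locus} \mid b)\, p(b) + p(\text{F2 locus} \mid d)\, p(d)} \tag{2} \\
&\propto p(\text{F2 locus} \mid b)\, p(b) \tag{3}
\end{align}
$$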

Bayes rule captures the fact that the posterior probability depends on three things. First, it depends on the prior probability of the hypothesis, p(b), which could be higher if /b/ is more frequent in the language than /d/, or if there are other contextually available sources of information that make /b/ more likely, like lexical, visual, or coarticulatory cues. Second, it depends on the likelihood p(F2 locus | b), which is the probability of the observed F2 locus value given that /b/ was intended by the talker. Finally, it also depends on how credible other hypotheses are, which is really a consequence of requiring that the posterior probabilities of all hypotheses add up to one. This is equivalent to the overall probability of the observed F2 locus value, regardless of which hypothesis is true, and since this quantity is the same for all potential hypotheses it is frequently omitted, as in (3).
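The three ingredients just described (prior, likelihood, and normalization over competing hypotheses) can be made concrete with a short Python sketch of an ideal phonetic categorizer for the /b/-/d/ contrast. The sketch assumes Gaussian likelihood functions over F2 locus; the category means, standard deviations, and priors in `CATEGORIES` are hypothetical values chosen for illustration, not estimates from any data set.

```python
import math


def gaussian_pdf(x, mean, sd):
    """Density of a normal distribution with the given mean and standard deviation."""
    z = (x - mean) / sd
    return math.exp(-0.5 * z * z) / (sd * math.sqrt(2.0 * math.pi))


# Hypothetical category parameters (F2 locus in Hz); illustrative only.
CATEGORIES = {
    "b": {"mean": 1100.0, "sd": 150.0, "prior": 0.5},
    "d": {"mean": 1700.0, "sd": 150.0, "prior": 0.5},
}


def posterior(x, categories=CATEGORIES):
    """Return p(c | x) for every category c via Bayes rule."""
    # Numerator: likelihood times prior for each hypothesis.
    unnormalized = {
        c: gaussian_pdf(x, params["mean"], params["sd"]) * params["prior"]
        for c, params in categories.items()
    }
    # Denominator: overall probability of the cue value, summed over hypotheses.
    evidence = sum(unnormalized.values())
    return {c: value / evidence for c, value in unnormalized.items()}


at_midpoint = posterior(1400.0)  # equally far from both category means
low_f2 = posterior(1200.0)       # closer to the /b/ mean
```

With equal priors and equal variances, a cue value halfway between the two category means is maximally ambiguous, while a lower F2 locus favors /b/. Raising the `prior` for one category shifts the posterior toward it without any change in the acoustic input.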

For an ideal listener, the probability of recognizing a /b/ should be the estimate of the posterior probability of /b/ (and likewise for /d/) (Clayards et al., 2008; Feldman et al., 2009). This assumes that the result of speech recognition is not a single category but rather uncertain (or variable) estimates of which categories are more or less likely. This is not a trivial assumption. For example, one might imagine that a listener would improve its categorization accuracy by always ‘guessing’ the category with the highest probability. However, for speech perception more broadly, there is a benefit to maintaining uncertainty about the category, since additional information often becomes available later in the speech signal (e.g., because of the asynchronous nature of acoustic cues). Indeed, human listeners seem to maintain uncertainty about the speech signal for at least a limited amount of time (cf., right-context effects in word recognition, Bard, Shillcock, & Altmann, 1988; Connine, Blasko, & Hall, 1991; Dahan, 2010; Grosjean, 1985).

Treating speech perception as inference under uncertainty provides substantial insight. Much of this comes from the fact that in such a framework, recognition is accomplished not through purely bottom-up template matching but rather by comparing how well each possible higher-level explanation can predict the input signal. This framework provides accounts of effects such as the perceptual magnet effect (Feldman et al., 2009), compensation for coarticulation (Sonderegger & Yu, 2010), and integration of auditory and visual cues (Bejjanki, Clayards, Knill, & Aslin, 2011). It also describes speech and language processing at other levels, including lexical access (Norris & McQueen, 2008), the incremental integration of words into a syntactic parse (Hale, 2001; Levy, 2008a, 2008b), and pragmatic reasoning (M. C. Frank & Goodman, 2012; Goodman & Stuhlmüller, 2013). Moreover, Bayesian inference has been shown to provide a powerful and general computational framework for describing statistically optimal inference under uncertainty, via the integration of prior beliefs and recently observed data. This perspective thus extends to other perceptual and cognitive domains (Griffiths, Chater, Kemp, Perfors, & Tenenbaum, 2010; Kersten, Mamassian, & Yuille, 2004; Tenenbaum & Griffiths, 2001), including sensory adaptation in non-language domains (Fairhall, Lewen, Bialek, & de Ruyter Van Steveninck, 2001; Körding, Tenenbaum, & Shadmehr, 2007; Stocker & Simoncelli, 2006).

One specific advantage of this framework for understanding phonetic adaptation is that it links speech perception behavior with the distribution of cues associated with each category. For an ideal listener, the classification curve for /b/ and /d/ responses is derived directly from the respective posterior probabilities (Figure 3, left panel), which in turn are computed in part from the corresponding likelihood, or distribution, function for each category:

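$$p(C = b \mid x) = \frac{p(x \mid C = b)\, p(C = b)}{p(x \mid C = b)\, p(C = b) + p(x \mid C = d)\, p(C = d)} \tag{4}$$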

Indeed, listeners do appear to use distributional information in speech perception. Clayards et al. (2008) found that listeners adapt to specific distributions of auditory cues to /b/ and /p/. Listeners in this experiment performed a spoken-word picture identification task, where some of the stimuli were /b/-/p/ minimal pairs like “beach” and “peach”. Listeners were randomly assigned to one of two conditions. In both conditions, the /b/ and /p/ percepts were drawn from normal distributions over the primary acoustic cue to the /b/-/p/ contrast (voice onset time, VOT, Lisker & Abramson, 1964). In the high-variance condition, the variance around the VOT category means for /b/ and /p/ was large; in the low-variance condition, it was small. Listeners’ classification boundaries reflected the distribution of cues that they experienced: for low-variance exposure, the classification boundaries were steep, while for high-variance exposure the boundaries were shallower, reflecting the greater uncertainty about the intended category that comes with more variable productions of each category. Moreover, the difference in boundary slopes was quantitatively predicted by the difference in the category variances in each case.
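As a minimal sketch of this relationship, the ideal listener's classification function can be computed directly from two Gaussian cue distributions. The category means and standard deviations below are hypothetical values chosen for illustration, not the stimulus parameters of Clayards et al. (2008), and equal category priors and equal category variances are assumed.

```python
import math

def normal_pdf(x, mean, sd):
    """Density of a normal distribution with the given mean and standard deviation."""
    z = (x - mean) / sd
    return math.exp(-0.5 * z * z) / (sd * math.sqrt(2.0 * math.pi))

def posterior_b(x, mean_b, mean_p, sd, prior_b=0.5):
    """Posterior probability of /b/ given cue value x (Bayes' rule, two categories)."""
    joint_b = normal_pdf(x, mean_b, sd) * prior_b
    joint_p = normal_pdf(x, mean_p, sd) * (1.0 - prior_b)
    return joint_b / (joint_b + joint_p)

# Hypothetical VOT category means in ms (assumption, for illustration only).
MEAN_B, MEAN_P = 0.0, 50.0

for label, sd in [("low variance", 8.0), ("high variance", 14.0)]:
    # Classification function sampled around the category boundary.
    curve = [round(posterior_b(vot, MEAN_B, MEAN_P, sd), 3) for vot in (15, 20, 25, 30, 35)]
    print(label, curve)
```

Under these assumptions both conditions share the same boundary (the midpoint between the category means), but the posterior falls off more steeply around it when the category variance is small.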

This result demonstrates two points. First, by showing that listeners’ categorization boundaries reflect the variance of the talker’s VOT distributions as predicted by the ideal listener model, it shows that listeners use probabilistic cues in a nearly optimal way. Second, and more importantly for our purposes, it shows that listeners adapt to a change in the statistics of these cues. Because listeners have no experience with the experimental talker before beginning the experiment, any differences in their classification function after exposure reflect something that they have learned about the talker’s VOT distributions over the course of the experiment.

The natural question to ask is: how do listeners get to the point where distributional information is reflected in their behavior? Intuitively, we might say that coming into a new situation (like an experiment), listeners have some beliefs about the distributions of cues for each phonetic category, and that these beliefs change as the listener gains more experience in that situation. These changes in beliefs about how cues are distributed lead to changes in how any given cue is interpreted, resulting in possibly better comprehension or changes in classification behavior. In the next section we show how, like phonetic categorization, this intuitive idea formally corresponds to statistical inference, but at a different level.

The ideal adapter

Our ideal adapter framework builds on the ideal listener framework described in the last section. The ideal listener depends on distributional information about each category, in the form of the likelihood function p(x | C). We can think of the likelihood function for each category as the listener’s prediction about what cue values are likely to occur given that the category is produced, and this prediction is used during speech perception to evaluate how well each hypothesized category explains the particular cue value currently being classified. However, we can also think of the likelihood functions as explanations of (and predictions about) the statistics of cues for each category. Crucially, these explanations come from the listener’s subjective knowledge of cue distributions, and likely are not exactly identical to the true statistics of cues in the world, because a listener only has finite observations to work with and thus incomplete information about the true distributions. The consequence of this is that the listener has uncertain knowledge of cue distributions.

If the statistics of cues associated with each category were identical or at least similar from one situation to the next, information could be accumulated from all observed values to obtain sufficiently accurate—and certain—estimates of the likelihood function. But as discussed above, this is not always the case: talkers can differ dramatically in the acoustic cues they use to realize phonetic categories, and thus the true likelihood function differs across situations (Allen et al., 2003; Labov, 1972; Hillenbrand, Getty, Clark, & Wheeler, 1995; McMurray & Jongman, 2011; Pierrehumbert, 2003).

In order to make good use of bottom-up information from acoustic cues, listeners require the appropriate likelihood function for the current situation. Consider again the case of making a /b/-/d/ decision on the basis of the F2 locus cue, but suppose that we have encountered a new talker—call him Sherman—who produces a different distribution of F2 locus values for /b/, a distribution which is shifted to the right (Figure 3, middle). If the listener continues to use the likelihood function which matches the ‘normal’ talker Norman’s /b/ distribution, comprehension of Sherman’s speech will suffer: cue values which were ambiguous for Norman are now more likely to be generated from /b/ (middle bottom; the ideal classification function for Sherman, the solid line, is above the dashed line). Conversely, cue values that are perfectly ambiguous for Sherman (where the solid line crosses p(b | F2 locus) = 0.5) would be much more likely to be /d/ when produced by Norman. That is, a mismatched likelihood function can result in slowed or inaccurate comprehension: inaccurate because the ideal category boundary depends on the likelihood function, and slower because /b/ cue values which are nearly prototypical and highly likely for the new talker would be ambiguous for the standard talker (the resulting uncertainty slows processing in this sort of task; Clayards et al., 2008; McMurray, Tanenhaus, & Aslin, 2002).

Similarly, consider the third talker in Figure 3 (right), Priscilla, whose /b/ productions are substantially more precise than Norman’s, resulting in a low-variance cue distribution for /b/, but whose /d/ productions show normal variability. Using Norman’s likelihood function to classify Priscilla’s productions has similar consequences in this situation: cue values that would have been ambiguous for Norman are now quite a bit more likely to have come from the /d/ distribution because Priscilla’s /b/s are so precise.
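The consequences of a mismatched likelihood function can be illustrated with a small sketch. The F2 locus means and standard deviations below are hypothetical values that only loosely follow the qualitative pattern in Figure 3; equal category priors are assumed.

```python
import math

def normal_pdf(x, mean, sd):
    """Density of a normal distribution with the given mean and standard deviation."""
    z = (x - mean) / sd
    return math.exp(-0.5 * z * z) / (sd * math.sqrt(2.0 * math.pi))

def posterior_b(x, b, d):
    """p(/b/ | x) with equal category priors; b and d are (mean, sd) pairs."""
    like_b = normal_pdf(x, *b)
    like_d = normal_pdf(x, *d)
    return like_b / (like_b + like_d)

# Hypothetical F2 locus distributions in Hz (assumptions, for illustration only).
D_ALL = (1800.0, 150.0)        # /d/ is identical for all three talkers
NORMAN_B = (1200.0, 150.0)     # the 'normal' talker
SHERMAN_B = (1400.0, 150.0)    # /b/ mean shifted to the right
PRISCILLA_B = (1200.0, 75.0)   # /b/ produced with lower variance

CUE = 1500.0  # perfectly ambiguous under Norman's distributions
for name, b in [("Norman", NORMAN_B), ("Sherman", SHERMAN_B), ("Priscilla", PRISCILLA_B)]:
    print(f"{name}: p(b | F2 locus = {CUE:.0f}) = {posterior_b(CUE, b, D_ALL):.3f}")
```

A listener who applies Norman's likelihood function to the other two talkers treats this cue value as ambiguous, although under the talker-appropriate distributions it favors /b/ for Sherman and /d/ for Priscilla.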

In both of these situations, comprehension difficulties could be avoided if the listener could use the right likelihood function. If the talker is familiar, this might be as simple as retrieving the right likelihood function based on prior experience with the talker (cf. Goldinger, 1998).4 But what if the talker has never been encountered before? This is a distributional learning problem: in order to achieve efficient and accurate comprehension of a novel talker, the listener must learn the cue distributions corresponding to the new talker’s phonetic categories. This is similar to the problem faced by an infant acquiring their first language, although the adult listener starts with a substantial amount of prior knowledge. Most notably, they know that there are different phonetic categories for /b/ and /d/, and that these categories are generally distinguished by the F2 locus cue. As we discuss in the second half of this article, adult listeners also may have experience with similar talkers, providing them with more or less useful previous experience.

Still, inferring the distributions of F2 locus values corresponding to these categories is a difficult task, because the inherent variability in these distributions makes each observed cue value ambiguous as evidence for the underlying distribution: if the observed cue value deviates from the listener’s predictions, is it due to inherent within-category variance (which will produce some outliers), or is it evidence that the predictions themselves—the likelihood functions—are wrong and need to be updated?

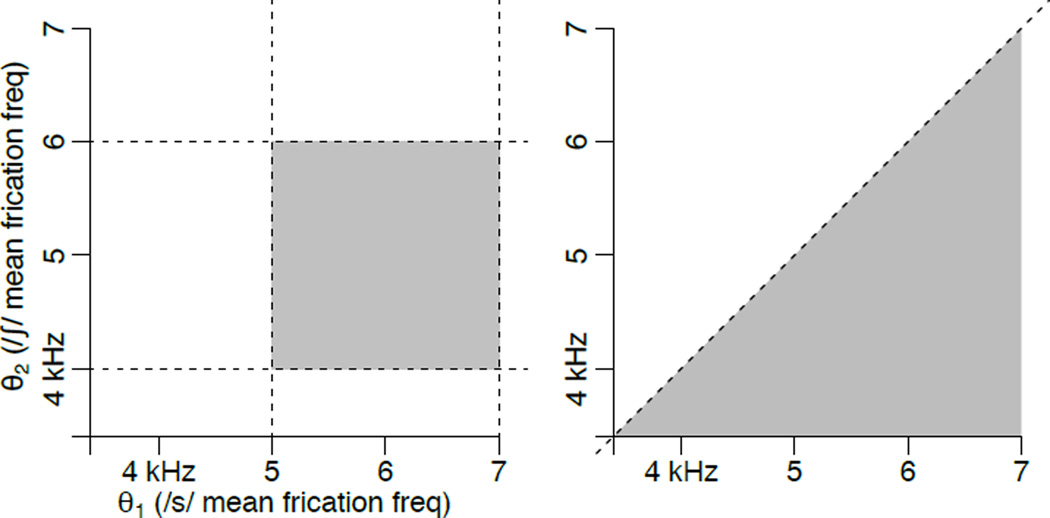

Thus, determining the talker’s category distributions is a problem of inference under uncertainty, just like the problem of inferring the talker’s intended category based on an observed cue value, but at another level. That is, in the same way that the listener can use their knowledge of how well each possible category predicts an observed cue value to infer which category is most likely, they can also use knowledge about how well each possible category distribution predicts the observed statistics of their recent experience in order to infer which underlying distributions are more or less likely in the current situation. The statistically optimal solution to this inference problem can again be described using Bayes’ Rule. For simplicity’s sake, the cue distribution5 for a category c can be represented by its mean $\mu_c$ and variance $\sigma_c^2$. Thus the listener’s uncertain beliefs about this cue distribution can be represented by a probability distribution over means and variances.

An ideal adapter must infer both the category label c and the means and variances of the different underlying categories (where for our example, c = /b/, /d/). Formally, this is expressed by the joint posterior distribution over category labels and means and variances, which combines prior beliefs with the likelihood of the observed evidence:6

$$p(c, \mu, \sigma^2 \mid x) \propto p(x \mid c, \mu, \sigma^2)\, p(c)\, p(\mu, \sigma^2) \tag{5}$$

This captures the fact that after observing a cue value x, the listener’s joint beliefs about the intended category C = c and the parameters of all categories $\mu, \sigma^2$ depend on two things. First, the updated beliefs depend on the likelihood, how well each possible combination of categorization and category parameters can predict the observation x, $p(x \mid c, \mu, \sigma^2)$. Second, they depend on the listener’s prior beliefs, both about which categories are most likely to be encountered, $p(c)$, and which combinations of category means and variances are most probable, $p(\mu, \sigma^2)$. Both aspects of the prior are based on prior experience. The prior over category probabilities depends on the base rate for each category, as well as its probability in context (based on the surrounding sounds or word, or other acoustic cues besides x),7 while the prior over category means and variance depends on the sorts of cue distributions the listener has encountered before and expects to encounter again. The nature of the prior over category means and variances is the focus of Part II below. For now all that matters is that the listener thinks some category means and variances are more likely than others.

The specific way that an ideal adapter updates their beliefs after observing cue value x depends on how they categorize it, and this is captured by the joint posterior distribution $p(c, \mu, \sigma^2 \mid x)$. In general, an observation from category c provides the most evidence about the underlying mean and variance of that category. In the case where the prior beliefs about the parameters of each category are independent of each other, $p(\mu, \sigma^2) = \prod_i p(\mu_{c_i}, \sigma^2_{c_i})$, the beliefs about category $c_i$ are only updated if the observation is classified as $C = c_i$:

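$$p(\mu_{c_i}, \sigma^2_{c_i} \mid x, C = c_j) \propto \begin{cases} p(x \mid C = c_i, \mu_{c_i}, \sigma^2_{c_i})\, p(\mu_{c_i}, \sigma^2_{c_i}) & \text{if } j = i \\ p(\mu_{c_i}, \sigma^2_{c_i}) & \text{otherwise} \end{cases} \tag{6}$$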

In cases where there is uncertainty about how the observation x should be categorized, an ideal adapter should update the beliefs about category ci as a mixture of the updated beliefs under each possible categorization, weighted by how likely that categorization is overall (averaging or marginalizing over current category parameter beliefs):

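$$p(\mu_{c_i}, \sigma^2_{c_i} \mid x) = \sum_{j} p(\mu_{c_i}, \sigma^2_{c_i} \mid x, C = c_j)\, p(C = c_j \mid x) \tag{7}$$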

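Again, if we assume that the beliefs about different categories are independent, this mixture consists of two components: one where x is categorized as $C = c_i$ and beliefs about category $c_i$ are updated, and one where it is not and no belief updating occurs:8

$$p(\mu_{c_i}, \sigma^2_{c_i} \mid x) = p(C = c_i \mid x)\, p(\mu_{c_i}, \sigma^2_{c_i} \mid x, C = c_i) + p(C \neq c_i \mid x)\, p(\mu_{c_i}, \sigma^2_{c_i})$$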

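To return to the example above of classifying a token as either /b/ or /d/ based on F2 locus, the posterior distribution over the mean and variance of /b/ after observing a particular F2 locus value is thus

$$p(\mu_b, \sigma^2_b \mid x) = p(C = b \mid x)\, p(\mu_b, \sigma^2_b \mid x, C = b) + p(C = d \mid x)\, p(\mu_b, \sigma^2_b)$$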

In conversational speech, acoustic observations are often labeled with high certainty, and so p(C = ci | x) ≈ 1 for some category ci. Such label information can come both from top-down linguistic context (like phonotactics or lexical disambiguation) and from other bottom-up cues. For example, when distinguishing /b/ from /d/, the closure of the lips during /b/ provides a very informative visual cue, effectively labeling the auditory percept (Vroomen et al., 2004).

In such cases where there is some other source of information that labels the observed F2 locus value as a /b/, the resulting conditional posterior distribution over the mean and variance of /b/ simplifies to:

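$$p(\mu_b, \sigma^2_b \mid x, C = b) \propto p(x \mid C = b, \mu_b, \sigma^2_b)\, p(\mu_b, \sigma^2_b) \tag{8}$$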

Here, the relevant prior distribution is just the listener’s prior beliefs about the mean and variance of the F2 locus cue for the /b/ category. Likewise, the likelihood considers only how well each combination of /b/ mean and variance accounts for the observed cue value. Below, we model incremental adaptation for cases where the category labels are known with high certainty (and thus (8) holds). We also assume that the prior beliefs about /b/ and /d/ are independent. We make these assumptions for the sake of simplicity and tractability in modeling, and it is important to keep in mind that they do not represent assumptions of the framework, which makes qualitatively the same predictions whether or not these assumptions turn out to be true.
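The difference between updating from an unlabeled and a labeled observation can be sketched with a simple grid approximation. This is an illustrative simplification, not the model used below: it assumes a known, shared category variance and a known /d/ mean, and it tracks beliefs about the /b/ mean only. All numerical values are hypothetical.

```python
import math

def normal_pdf(x, mean, sd):
    """Density of a normal distribution with the given mean and standard deviation."""
    z = (x - mean) / sd
    return math.exp(-0.5 * z * z) / (sd * math.sqrt(2.0 * math.pi))

def normalize(weights):
    """Rescale non-negative weights so that they sum to one."""
    total = sum(weights)
    return [w / total for w in weights]

def update_b_mean(x, grid, prior, d_mean, sd, prior_b=0.5, labeled_b=False):
    """Beliefs about the /b/ mean after observing cue value x.

    grid: candidate /b/ means; prior: their prior probabilities (sum to one).
    Returns (posterior over grid, p(C = b | x)).
    """
    like = [normal_pdf(x, m, sd) for m in grid]
    # Beliefs if x is known to be a /b/: likelihood times prior, renormalized.
    if_b = normalize([l * p for l, p in zip(like, prior)])
    if labeled_b:
        return if_b, 1.0
    # p(C = b | x), marginalizing over current beliefs about the /b/ mean.
    evidence_b = prior_b * sum(l * p for l, p in zip(like, prior))
    evidence_d = (1.0 - prior_b) * normal_pdf(x, d_mean, sd)
    p_b = evidence_b / (evidence_b + evidence_d)
    # Mixture: updated beliefs if /b/, unchanged prior beliefs if /d/.
    return [p_b * u + (1.0 - p_b) * p for u, p in zip(if_b, prior)], p_b

def expected(grid, probs):
    """Expected value of a discrete distribution over the grid."""
    return sum(m * p for m, p in zip(grid, probs))

# Hypothetical F2 locus values in Hz (assumptions, for illustration only).
GRID = [1000.0 + 10.0 * i for i in range(61)]            # 1000 ... 1600
PRIOR = normalize([normal_pdf(m, 1200.0, 100.0) for m in GRID])
X = 1500.0                                               # acoustically ambiguous token

unlabeled, p_b = update_b_mean(X, GRID, PRIOR, d_mean=1800.0, sd=150.0)
labeled, _ = update_b_mean(X, GRID, PRIOR, d_mean=1800.0, sd=150.0, labeled_b=True)
print(f"p(C = b | x) = {p_b:.2f}")
print(f"E[/b/ mean]: prior {expected(GRID, PRIOR):.0f}, "
      f"unlabeled {expected(GRID, unlabeled):.0f}, labeled {expected(GRID, labeled):.0f}")
```

In this sketch the labeled observation moves the expected /b/ mean further towards the observed cue value than the unlabeled one, because the unlabeled update is diluted by the probability that the token was a /d/.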

In sum, the ideal adapter framework predicts that optimal phonetic adaptation depends on three things: the statistics of the observed percepts (e.g. their mean and variance), the listener’s prior beliefs about the statistics of the relevant categories, and the listener’s belief that there is a need to adapt (including their beliefs about the amount of variation in the relevant category across talkers and situations). In the next five sections, we illustrate the role of the first two factors in phonetic adaptation experiments where the third factor is a given (i.e., where there is a clear need to adapt and for which previous work has shown that listeners indeed adapt, Bertelson et al., 2003; Kraljic & Samuel, 2005; Norris et al., 2003). In order to do this we specify a basic belief updating model in the ideal adapter framework which quantifies how the exposure statistics and the listener’s prior beliefs about those statistics interact.

With this model in hand, we do five things. First, as a basic evaluation we address the phenomenon of phonetic recalibration or perceptual learning. Such perceptual learning is typically thought to be due to changes in the underlying representations of the adapted categories which generally serve the purpose of robust speech perception, and is naturally accounted for by the ideal adapter framework. In particular, we show that the incremental build-up of recalibration is accounted for by our basic belief updating model.

Second, we illustrate how the way in which the model accounts for the build-up of recalibration potentially sheds light on the underlying processes. Specifically, the model captures the fact that recalibration is often ambiguous between a change in the underlying mean of the category versus a relaxation of the criterion for what counts as an acceptable exemplar, or a change in the variance of the category.

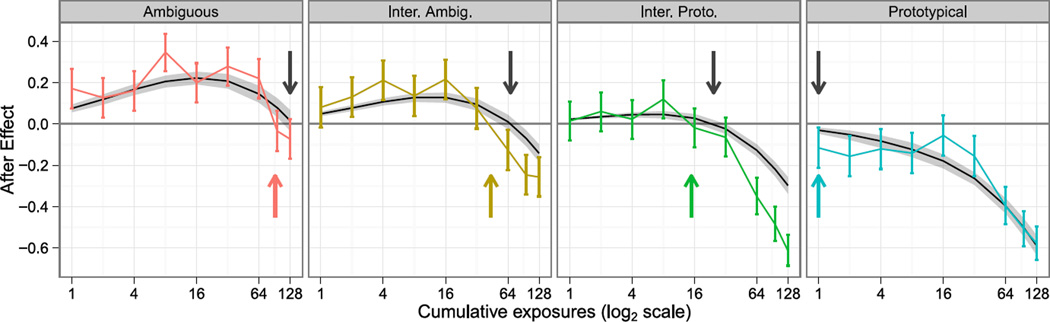

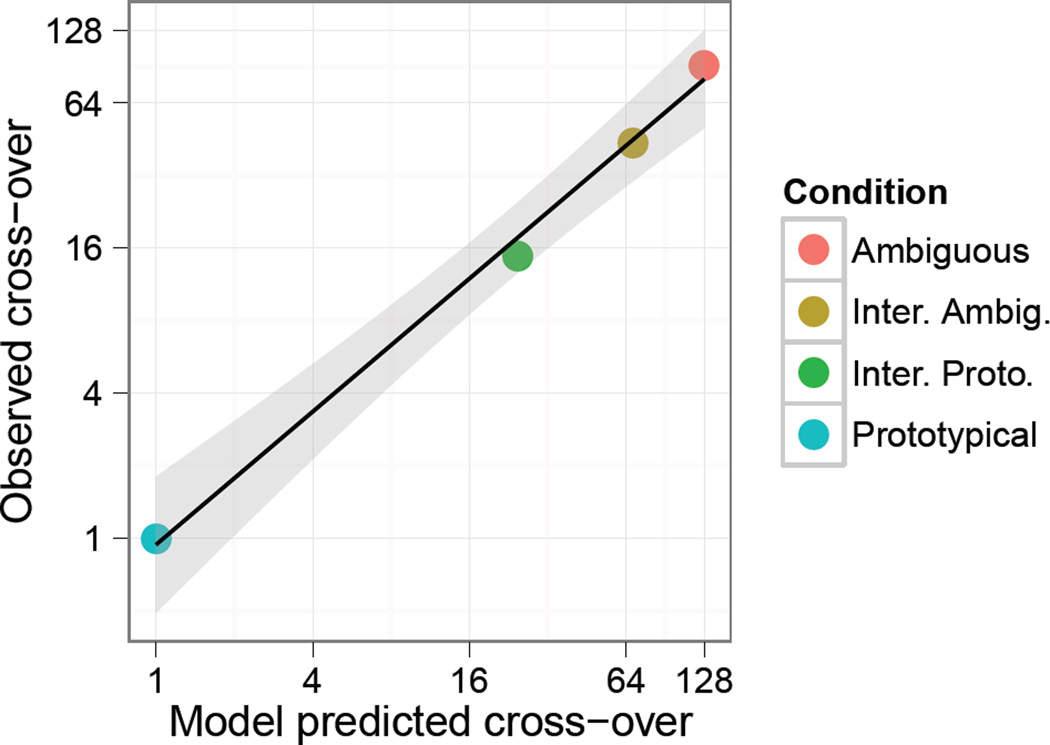

Third, we examine the predictions of this framework for selective adaptation, a phenomenon which is typically not attributed to the kind of perceptual learning that serves robust speech perception. However, we show that the belief updating model accounts for the incremental build-up of this phenomenon as well, using parameters very similar to those for recalibration.

Fourth, we explore a little studied property of phonetic recalibration that, prima facie, would seem to stand in conflict with our hypothesis that adaptation serves robust speech perception: prolonged, repeated exposure to the exact same stimulus can eventually undo the recalibration effect (Vroomen, van Linden, de Gelder, & Bertelson, 2007). However, we show that not only is this predicted by the ideal adapter framework under a range of conditions, the belief updating model which accounts for the build-up of selective adaptation and recalibration also accounts for the effect of prolonged repeated exposure in each, and does so simultaneously with a single set of parameters.

Fifth, motivated by the potential link between selective adaptation and recalibration suggested by the proposed framework, we present novel data from a web-based perception experiment which tests the predictive power of our model. Specifically, we test adaptation conditions that are intermediate between recalibration and selective adaptation, for which the model predicts a continuum between classic recalibration and selective adaptation responses.

Basic evaluation of the ideal adapter framework: phonetic recalibration

We begin with an illustration of the basic mechanics of the ideal adapter framework. For this we focus on experiments in which listeners are exposed to a novel talker with an unusual realization of a phonetic contrast. There is a great deal of evidence about the results of incremental adaptation. Much of it comes from studies of “phonetic recalibration”, or “perceptual learning” (Bertelson et al., 2003; Kraljic & Samuel, 2005; Norris et al., 2003). These studies use a continuum between two sounds, generally constructed by interpolating between prototypical endpoint tokens (e.g. Kraljic & Samuel, 2005; Norris et al., 2003) or by parametrically manipulating a critical acoustic cue which distinguishes between the two categories. For instance, a /b/-/d/ continuum might be constructed by manipulating F2 locus, as described above (Bertelson et al., 2003; Vroomen et al., 2004). During an exposure phase, listeners hear the item from this continuum which is most ambiguous between /b/ and /d/. This auditorily ambiguous segment is paired with information which consistently “labels” or disambiguates it as a /b/. This labeling is achieved via, for example, lexical disambiguation (e.g. replacing the /b/ in club with the ambiguous segment, Kraljic & Samuel, 2005; Norris et al., 2003 etc.) or visual disambiguation (pairing the auditorily ambiguous sound with a video of a person articulating a /b/, which results in a visible labial closure unlike articulation of /d/). After exposure, changes to the listener’s classification function are assessed, for example, by means of a classification test over unlabeled sounds drawn from the continuum (e.g. classifying a continuum of sounds from a prototypical /aba/ to a prototypical /ada/ without either lexical or visual disambiguation).

In what follows, we use the notation $x_{c_1 c_2}$ to refer to a sound that is auditorily ambiguous between categories $c_1$ and $c_2$, and use superscripts to refer to labeled sounds. So, for example, $x_{bd}^{b}$ is a sound auditorily ambiguous between /b/ and /d/ which is labeled (disambiguated) as /b/.

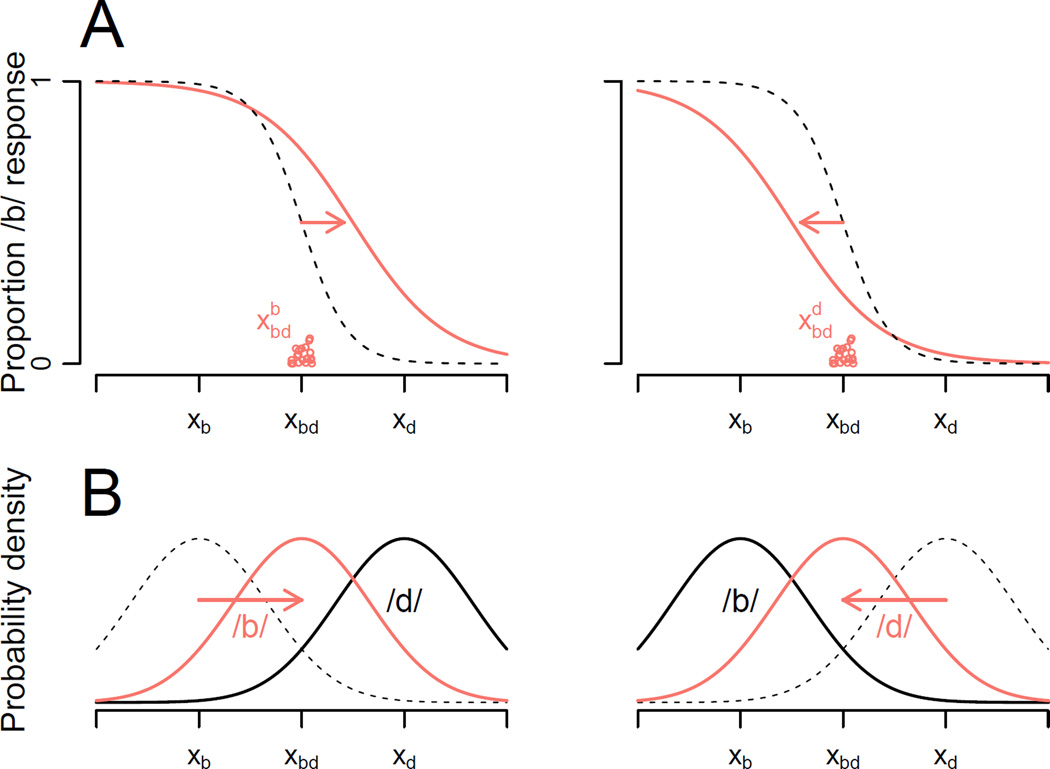

Perceptual recalibration rapidly results in shifted category boundaries. For example, after as few as 10 exposures to $x_{bd}^{b}$, the /b/ category has ‘grown’: more of the continuum is now classified as /b/, when tested without the labeling information (Vroomen et al., 2007). The opposite shift is observed for exposure to $x_{bd}^{d}$. This is illustrated schematically in Figure 4 (A). Similarly rapid perceptual recalibration has been observed along a variety of phonetic contrasts, including vowels (Maye, Aslin, & Tanenhaus, 2008), fricative place of articulation and manner (Kraljic & Samuel, 2005; Norris et al., 2003), and stop consonant place (Bertelson et al., 2003) and voicing (Kraljic & Samuel, 2006). Perceptual recalibration is typically investigated under the assumption that it reflects the same processes that support general accent adaptation. This assumption is not trivial but there is some support that perceptual recalibration is not simply an artifact of the stimuli being presented in isolation (Eisner & McQueen, 2006) or there only being one unusual pronunciation (Reinisch & Holt, 2014). We return to these issues in the next section.

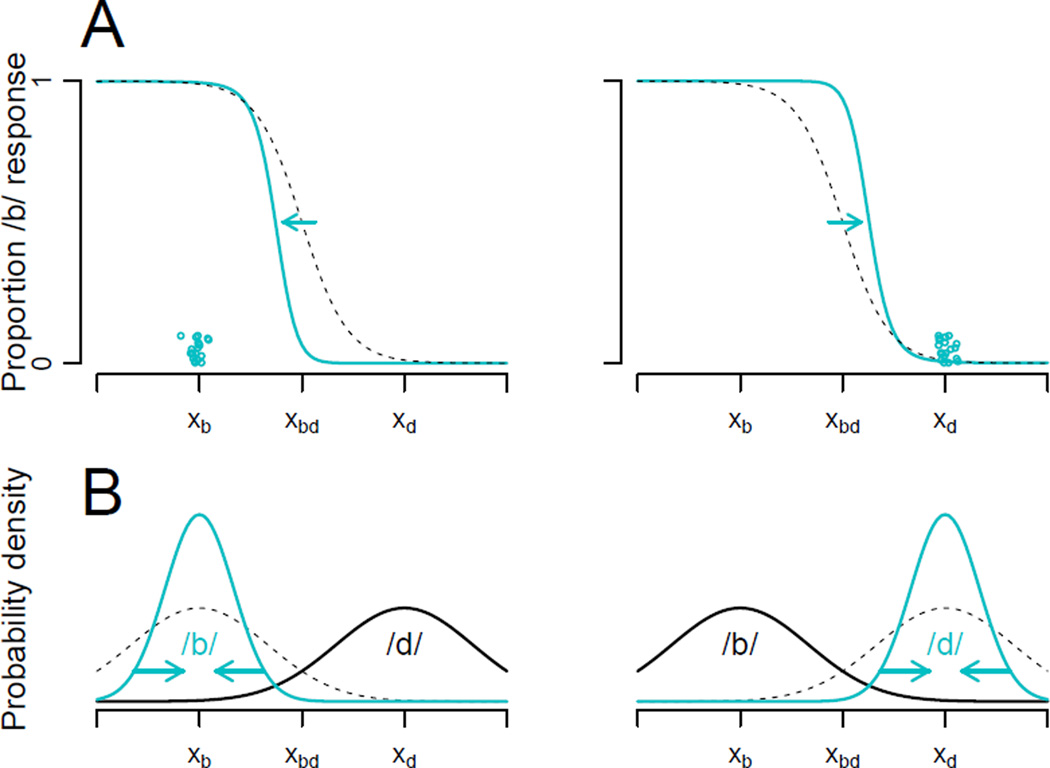

Figure 4.

Schematic illustration of the results of perceptual recalibration on classification of a /b/-/d/ continuum (A), and the changes in the listener’s beliefs about the underlying distributions which we propose to account for the changes in classification (B). Dashed lines show pre-exposure classification functions and distributions, while solid lines show post-recalibration. Left panels show the results of exposure to $x_{bd}^{b}$, and the right to $x_{bd}^{d}$.

Qualitatively, perceptual recalibration exhibits several properties that are expected under the ideal adapter framework. First, recalibration seems to reflect implicit learning over phonetic contrasts, rather than strategic effects such as response bias (Clarke-Davidson, Luce, & Sawusch, 2008), or weakening of the criterion for what counts as an acceptable example of a category (Maye et al., 2008; but see next section). Recalibration also appears to affect speech perception through changes in sublexical phonetic category representations since perceptual recalibration effects generalize to novel words by the same talker containing the recalibrated segment (McQueen, Cutler, & Norris, 2006).

Second, perceptual recalibration seems to last: when listeners classify tokens from the same talker 12 hours after initial testing, the magnitude of adaptation is the same as right after initial exposure (Eisner & McQueen, 2006). As we discuss in more detail in the second part of this article, longevity of changes in category boundaries (for a particular situation) is expected under our proposal that adaptation serves to make speech perception robust to changes in situation.

More specifically, the qualitative changes in classification boundaries observed during perceptual recalibration are naturally predicted by the ideal adapter framework (Figure 4, B). Take, for example, the case where the listener is exposed to $x_{bd}^{b}$ (Figure 4, left). As the listener updates their beliefs about the shifted distribution of cues for /b/, shifting the mean towards the observed cue values, stimuli in the middle of the /b/-/d/ continuum which previously had roughly equal likelihood under either category (and thus are sometimes perceived as /b/ and sometimes as /d/) are now more likely to have resulted from /b/, resulting in more /b/ responses to unlabeled test stimuli, especially in the previously-ambiguous region of the continuum.

Incremental recalibration

It is encouraging that the ideal adapter framework provides a qualitative account of the results of recalibration. But can this framework account for incremental changes in behavior? Belief updating is an incremental process, where the listener accumulates information about the talker’s cue distributions one observation at a time. The ideal adapter framework thus not only predicts asymptotic classification behavior (after the listener has fully adapted to the talker’s cue distributions) but also how their classification behavior changes with each additional piece of evidence. Unfortunately, few studies have investigated the incremental effects of exposure to a novel distribution of sounds, such as would be typical for a new talker.

A notable exception is Vroomen et al. (2007). Listeners in their study were exposed to repetitions of an audio-visual adaptor, which was composed of a video recording of a talker articulating either /aba/ or /ada/, dubbed with an audio item from a 9-item, synthetic /aba/ ($x_b = 1$) to /ada/ ($x_d = 9$) continuum. The audio component for each participant was the continuum item that was most ambiguous, $x_{bd}$. The most ambiguous item was determined during a pre-test block of 98 trials where the entire /aba/-/ada/ continuum was classified.9

Instead of the typical recalibration procedure, where exposure and test are separated, Vroomen et al. (2007) measured the degree and direction of adaptation by interspersing audio-only test blocks throughout each exposure block, after 1, 2, 4, 8, 16, 32, 64, 128, and 256 cumulative exposures to the audio-visual adaptor. Specifically, they measured the average proportion of /b/ responses to six-trial test blocks (the three most ambiguous items $\{x_{bd} - 1,\ x_{bd},\ x_{bd} + 1\}$ each repeated twice).

Each participant completed sixteen exposure blocks of 256 exposures. Half of the exposure blocks used a /b/ audio-visual stimulus for exposure, and the other half used a /d/. Of the /b/-exposure blocks, half of these used the auditorily ambiguous stimulus as described above, while the other half used the prototypical /b/ endpoint of the acoustic continuum (x = 1), and likewise for the /d/-exposure blocks.10 Because our goal is to illustrate the workings of the ideal adapter framework when the listener has little prior experience that might be relevant for the current situation, we focus on the first 64 exposures of the first block from each participant (we return to the issue of extended exposure below).

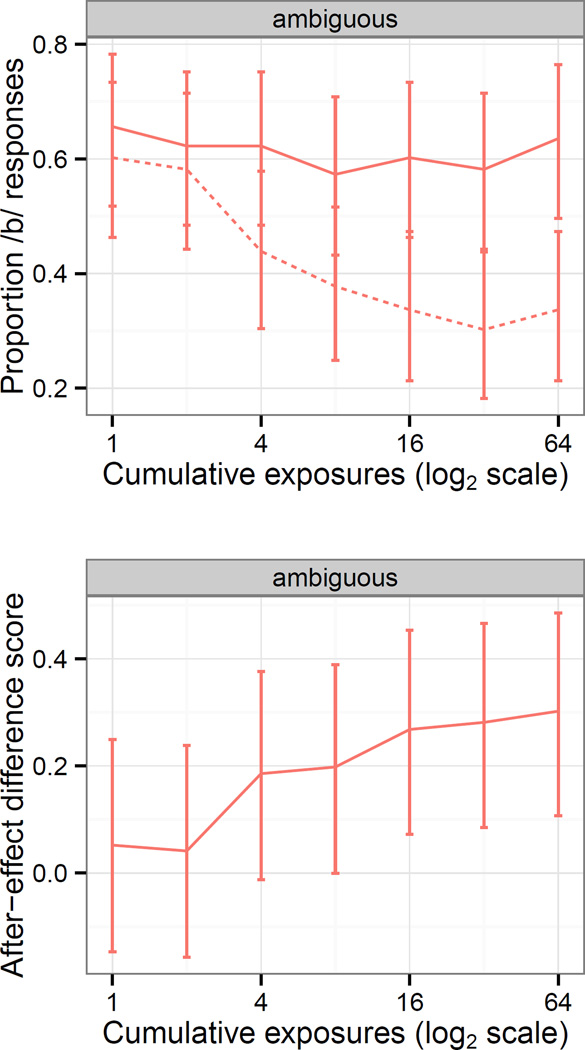

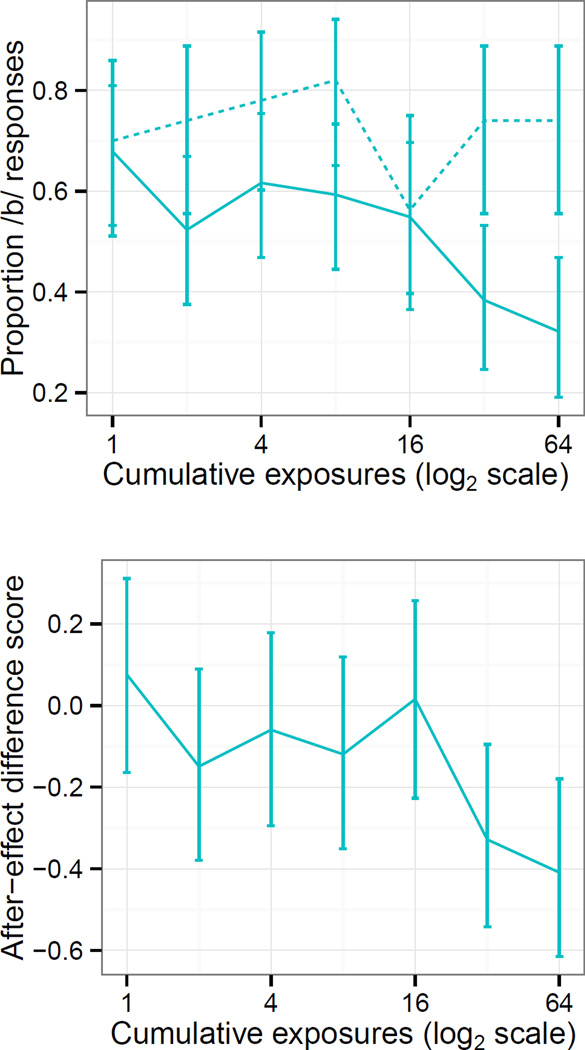

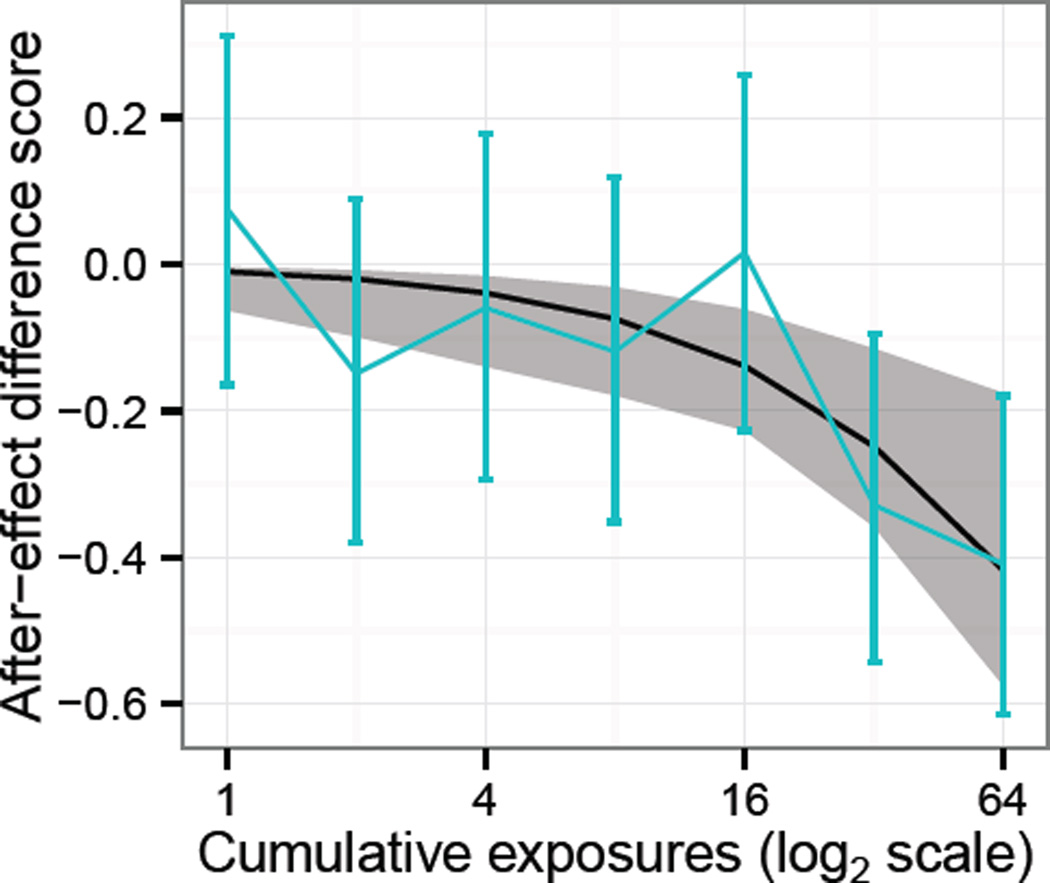

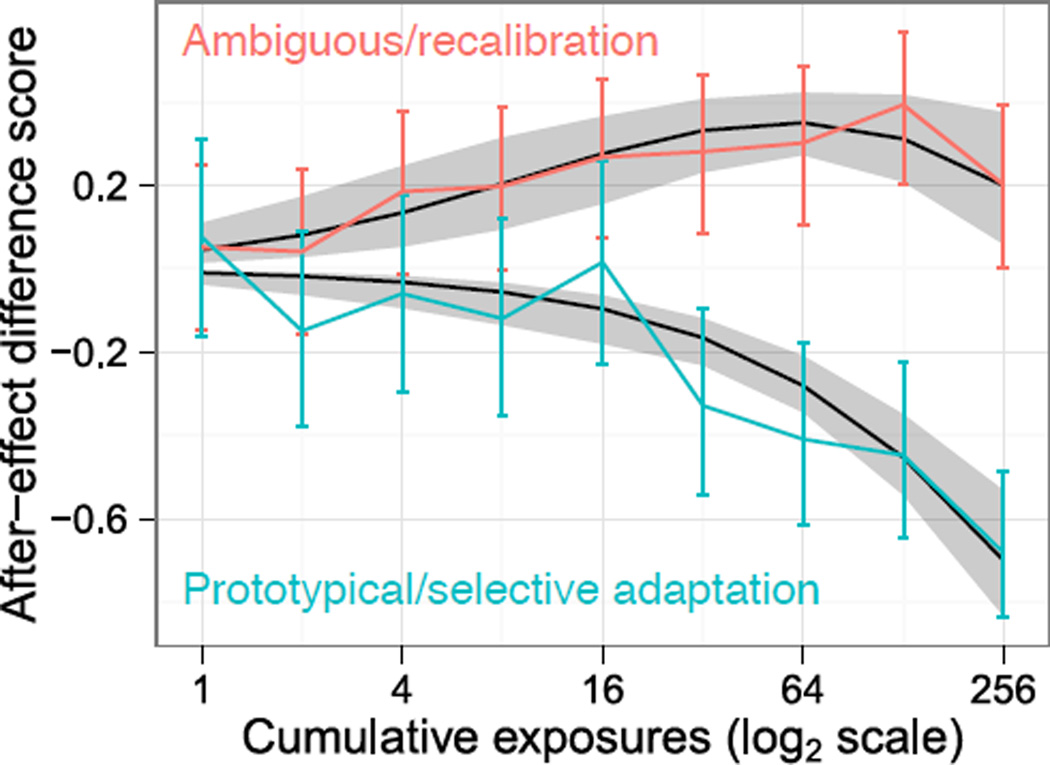

Figure 5 shows the results of Vroomen et al. (2007) demonstrating the build-up of recalibration in the first 64 critical exposures in the first exposure block. The top panel shows the proportion of /b/ responses for $x_{bd}^{b}$ and $x_{bd}^{d}$ adaptors separately; more /b/ responses after /b/ exposure (solid line) indicates recalibration, and vice-versa for /d/ exposure (dashed line). A natural measure of the degree of recalibration is thus the aftereffect difference score between /b/ and /d/ exposure. A positive aftereffect indicates that /b/ exposure increased /b/ responses, and /d/ exposure decreased /b/ responses (and increased /d/ responses), which corresponds to recalibration (Figure 5, bottom).

Figure 5.

Recalibration results from Vroomen et al. (2007), showing both the proportion of /b/ responses (top, solid line /b/ exposure and dashed line /d/ exposure) and the aftereffect difference score (bottom) for the first 64 critical exposures in the first exposure block. Error bars indicate 95% confidence intervals.
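The aftereffect difference score plotted in Figure 5 is simple arithmetic over test-block responses, sketched below. The response lists are hypothetical six-trial test blocks made up for illustration, not data from Vroomen et al. (2007), and the function names are our own.

```python
def proportion_b(responses):
    """Proportion of /b/ responses in a list of 'b' / 'd' classification responses."""
    return sum(1 for r in responses if r == "b") / len(responses)

def aftereffect(responses_after_b, responses_after_d):
    """Proportion of /b/ responses after /b/ exposure minus that after /d/ exposure."""
    return proportion_b(responses_after_b) - proportion_b(responses_after_d)

# Hypothetical six-trial test blocks (assumption, for illustration only).
after_b_exposure = ["b", "b", "d", "b", "b", "d"]
after_d_exposure = ["d", "b", "d", "d", "b", "d"]

score = aftereffect(after_b_exposure, after_d_exposure)
print(f"aftereffect = {score:+.2f}")  # positive values correspond to recalibration
```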

Recalibration builds up rapidly but incrementally over the first 64 exposures. As discussed above, this build-up follows naturally from the ideal adapter framework, as each exposure to the auditorily ambiguous adaptor contributes to a shift in the listener’s estimate of the category mean. Next, we quantify and test this prediction.

Modeling build-up of recalibration

In order to evaluate the ability of the ideal adapter framework to account for the results of Vroomen et al. (2007) on the incremental build-up of recalibration, we implemented a basic Bayesian belief updating model based on the principles of the ideal adapter framework.

Bayesian belief updating model

We used a mixture of Gaussians as the underlying model of phonetic categories, where each phonetic category c ∈ {b, d} corresponds to a normal distribution over cue values x with mean $\mu_c$ and variance $\sigma_c^2$ (e.g. Figure 3, top). Thus, the likelihood of observation x under category c is

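$$p(x \mid C = c, \mu_c, \sigma_c^2) = \frac{1}{\sqrt{2\pi\sigma_c^2}} \exp\left(-\frac{(x - \mu_c)^2}{2\sigma_c^2}\right) \tag{9}$$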

The listener’s uncertain beliefs about phonetic categories are captured by additionally assigning probability distributions to the means $\mu_c$ and variances $\sigma_c^2$ of each phonetic category. The prior distribution represents the listener’s beliefs about category c before exposure to the experimental stimuli, and the posterior captures the listener’s beliefs after exposure to stimuli $X = (x_1, x_2, \ldots, x_N)$ which are known to come from category c (which means that the category labels C = c are known). These two distributions are related via Bayes’ Rule:

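$$p(\mu_c, \sigma_c^2 \mid X, C = c) \propto p(X \mid C = c, \mu_c, \sigma_c^2)\, p(\mu_c, \sigma_c^2) \tag{10}$$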

We used a conjugate prior for the Normal distribution with unknown mean and variance (Appendix A), and this prior distribution has two types of hyperparameters.11 The first set of hyperparameters captures the prior expected values of the means and variances. The second set of hyperparameters captures the degree of confidence, or, conversely, uncertainty associated with the prior beliefs. Put differently, they determine how much current observations are weighted against previous experience in determining beliefs about the category distributions. In this model, there are two different degrees of confidence: one for the category means, denoted κ0, and the other for the category variances, denoted ν0 (see the Appendix for details). An intuitive interpretation of these hyperparameters is as the effective sample size of the prior beliefs. For instance, if the category mean confidence parameter is κ0 = 10, then after ten new observations the listener’s beliefs about the category mean will equally reflect previous and current experience. With fewer than ten new observations, the listener’s beliefs about the category mean will be dominated by the mean expected based on previous experience; with more than ten new observations the beliefs about the mean will be increasingly dominated by the mean of the new observations. These hyperparameters thus capture the gradient trade-off between prior experience and current experience in determining the listener’s beliefs about phonetic categories. They can be thought of as pseudocounts or the number of prior experiences that are relevant for the current situation.12
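The role of the two confidence hyperparameters can be made concrete with a sketch of a conjugate update. We assume here the standard normal-inverse-chi-squared parameterization of the conjugate prior; the exact parameterization in Appendix A may differ in detail. The function name and all cue values are hypothetical.

```python
def update_beliefs(mu0, sigma2_0, kappa0, nu0, observations):
    """Conjugate update for a normal category with unknown mean and variance.

    Assumes a normal-inverse-chi-squared prior: mu0 is the prior expected mean,
    sigma2_0 the prior scale of the variance, and kappa0 / nu0 the effective
    prior sample sizes for the mean and the variance, respectively.
    """
    n = len(observations)
    if n == 0:
        return mu0, sigma2_0, kappa0, nu0
    xbar = sum(observations) / n
    sum_sq = sum((x - xbar) ** 2 for x in observations)
    kappa_n = kappa0 + n
    nu_n = nu0 + n
    # Posterior mean: prior mean and sample mean weighted by their sample sizes.
    mu_n = (kappa0 * mu0 + n * xbar) / kappa_n
    # Posterior variance scale: prior scale, sample scatter, and the mean shift.
    sigma2_n = (nu0 * sigma2_0 + sum_sq
                + (kappa0 * n / kappa_n) * (xbar - mu0) ** 2) / nu_n
    return mu_n, sigma2_n, kappa_n, nu_n

# Hypothetical cue values on an arbitrary scale: prior mean 0, new tokens around 1.
observations = [0.8, 1.2, 1.0, 0.9, 1.1, 1.0, 1.3, 0.7, 1.0, 1.0]
mu_n, sigma2_n, kappa_n, nu_n = update_beliefs(0.0, 1.0, 10, 10, observations)
print(f"posterior expected mean after {len(observations)} observations: {mu_n:.2f}")
```

With κ0 = 10 and ten new observations, the updated mean lies halfway between the prior mean and the mean of the new observations, which is the effective-sample-size interpretation described above.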

Finally, it is not a priori obvious whether adaptation occurs at the level of auditory cues individually or at some higher level where information is integrated from multiple auditory and/or visual cues. Thus the model includes a third hyperparameter, w, which determines the weight given to the visual cue value in determining the percept. This hyperparameter ranges from w = 0 (perceived cue value is not influenced by the visual cue) to w = 1 (perceived cue value entirely determined by the visual cue). Adaptation over integrated cues might arise because the listener attempts to infer the talker’s intended production based on multiple partially informative cues (including top-down category distribution information, Feldman et al., 2009). For more discussion, refer to the Appendix.
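One simple way to realize such a weight is a linear combination of the auditory and visual cue values. The sketch below makes that assumption explicit; the linear form, the function name, and the continuum positions are illustrative choices on our part, and the Appendix should be consulted for the formulation actually used.

```python
def perceived_cue(audio, visual, w):
    """Weighted average of auditory and visual cue values (w = weight on the visual cue)."""
    if not 0.0 <= w <= 1.0:
        raise ValueError("w must lie between 0 and 1")
    return w * visual + (1.0 - w) * audio

# Hypothetical positions on a 9-step /aba/ (1) to /ada/ (9) continuum.
AUDIO_AMBIGUOUS = 5.0   # assumed most ambiguous audio item
VISUAL_B = 1.0          # assumed cue value implied by a visual /b/

for w in (0.0, 0.5, 1.0):
    print(f"w = {w:.1f}: perceived cue value = {perceived_cue(AUDIO_AMBIGUOUS, VISUAL_B, w):.1f}")
```

Under this assumption, any w above 0 pulls the percept of an auditorily ambiguous token towards the visually indicated category.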

Model fitting

The hyperparameters were fit in a two-step process, which is described in detail in the Appendix. The first step is to fix the expected prior means and variances based on the classification curves measured during pre-test, before exposure to the audiovisual adaptor. These hyperparameters are thus not free parameters of the model, in that they are not adjusted to improve the fit to the actual adaptation test data.

The second step is to estimate the three free hyperparameters (i.e., the effective prior sample sizes ν0 and κ0 and the visual cue weight w) based on the actual adaptation data. The posterior distribution of the free hyperparameters was obtained using MCMC sampling with a weakly informative prior (to ensure a proper posterior, Gelman, Carlin, Stern, & Rubin, 2003). For further details, we refer to Appendix A. Because of the limited amount of data from each participant (only six trials per test block), we chose to fit the model to the aggregate data from all participants (see Appendix A for motivation).

Results and discussion

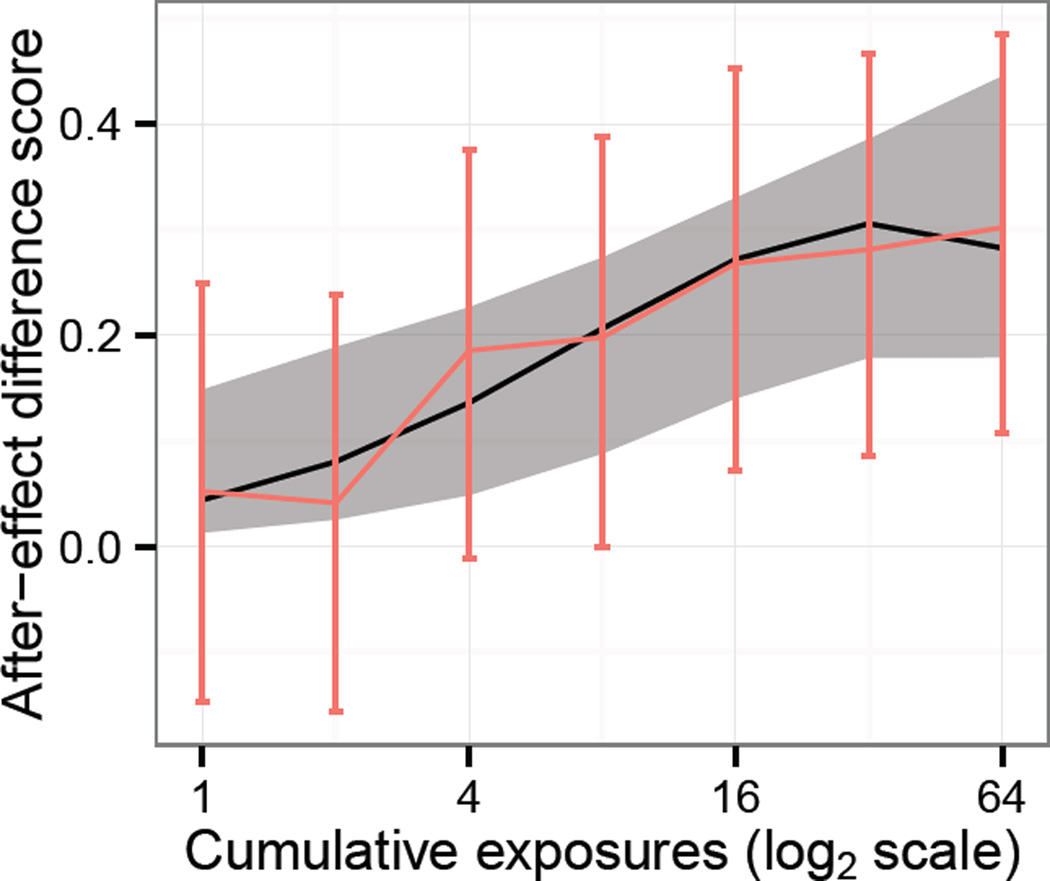

The model’s fit against the data is shown in Figure 6. The predicted responses are plotted on the aftereffect scale for better comparison to Vroomen et al. (2007). The model effectively captures the qualitative fact that recalibration leads to positive aftereffects which build up incrementally, and provides a quantitatively good fit as well (r² = 0.96). Specifically, the model captures the fact that recalibration starts off relatively weak and gradually becomes stronger, before eventually leveling off. Thus, not only is the qualitative result of recalibration (a positive aftereffect) predicted by the ideal adapter framework, the effect of cumulative exposure on the incremental build-up of the effect is also predicted well. This suggests that listeners incrementally integrate each observed cue value with their prior beliefs in a way that is predicted by the ideal adapter framework.

Figure 6.

Belief updating model fit to build-up of recalibration data from Vroomen et al. (2007). The x-axis shows the number of cumulative exposures to the adaptor (on a log scale), and the y-axis shows the aftereffect difference score. The solid black line shows the MAP (maximum a posteriori) estimate predictions (r² = 0.96). The error bars and shaded region show 95% credible intervals for the data and model predictions, respectively (see Appendix A).

We can draw a number of conclusions from the values of the hyperparameters themselves. The best-fitting hyperparameter values are ν0 = 71, κ0 = 11, and w = 0.53. First, relative to the real overall sample size (the number of /b/s and /d/s encountered in the world by a typical English-speaking adult), the best-fitting effective prior sample sizes are extremely low. That is, listeners appear to put very little weight on their prior beliefs, adapting very quickly to the shifted cue distribution that they observe while taking slightly longer to adapt to the tight clustering (low variance). This may be surprising at first glance, but it is actually qualitatively predicted by the ideal adapter framework. In the ideal adapter framework, whether or not (and how much) a listener adapts depends on how relevant they think their previous experiences are for the current situation. Thus in situations like a recalibration experiment where listeners encounter odd-sounding, often synthesized speech in a laboratory setting, they may have little confidence, a priori, that any of their previous experiences are directly informative. We discuss this point at length in the second half of this paper, where we elaborate on the crucial role of prior experiences for robust speech perception.
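To make the role of the effective prior sample sizes concrete, the following minimal sketch updates beliefs about a single category after repeated exposure to the same token. It assumes the standard conjugate normal-inverse-chi-squared update (see, e.g., Gelman et al., 2003), which may differ in detail from the model specified in the Appendix. The prior mean and variance (0 and 1), the adaptor cue value (1), and all names are hypothetical; only κ0 = 11 and ν0 = 71 are taken from the fit reported above.

```python
from typing import NamedTuple, Sequence


class Beliefs(NamedTuple):
    mean: float   # expected category mean
    var: float    # scale of the beliefs about the category variance
    kappa: float  # effective sample size behind the mean
    nu: float     # effective sample size behind the variance


def update(prior: Beliefs, cues: Sequence[float]) -> Beliefs:
    """Conjugate normal-inverse-chi-squared update (assumed form)."""
    n = len(cues)
    if n == 0:
        return prior
    xbar = sum(cues) / n
    ss = sum((x - xbar) ** 2 for x in cues)  # within-sample sum of squares
    kappa_n = prior.kappa + n
    nu_n = prior.nu + n
    # Posterior mean: prior mean and sample mean weighted by their sample sizes.
    mean_n = (prior.kappa * prior.mean + n * xbar) / kappa_n
    # Posterior variance scale: prior scale, sample scatter, and a term for
    # the disagreement between the prior mean and the sample mean.
    var_n = (prior.nu * prior.var + ss
             + (prior.kappa * n / kappa_n) * (xbar - prior.mean) ** 2) / nu_n
    return Beliefs(mean_n, var_n, kappa_n, nu_n)


# Hypothetical prior (mean 0, variance 1) with the best-fitting sample sizes.
prior = Beliefs(mean=0.0, var=1.0, kappa=11.0, nu=71.0)
for n_exposures in (1, 11, 64, 256):
    print(n_exposures, update(prior, [1.0] * n_exposures))
```

With these values, the believed mean has moved halfway to the adaptor after 11 exposures (because κ0 = 11), while the variance estimate changes more slowly (because ν0 = 71) and only shrinks substantially after many identical tokens.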

Second, the best-fitting value of the visual cue weight hyperparameter w places approximately equal weight on the audio and visual cue values. This means that, according to the best-fitting model, listeners perceive the cue value as not fully ambiguous. This makes an interesting prediction about the effects of extended exposure to the same stimulus that we return to below.

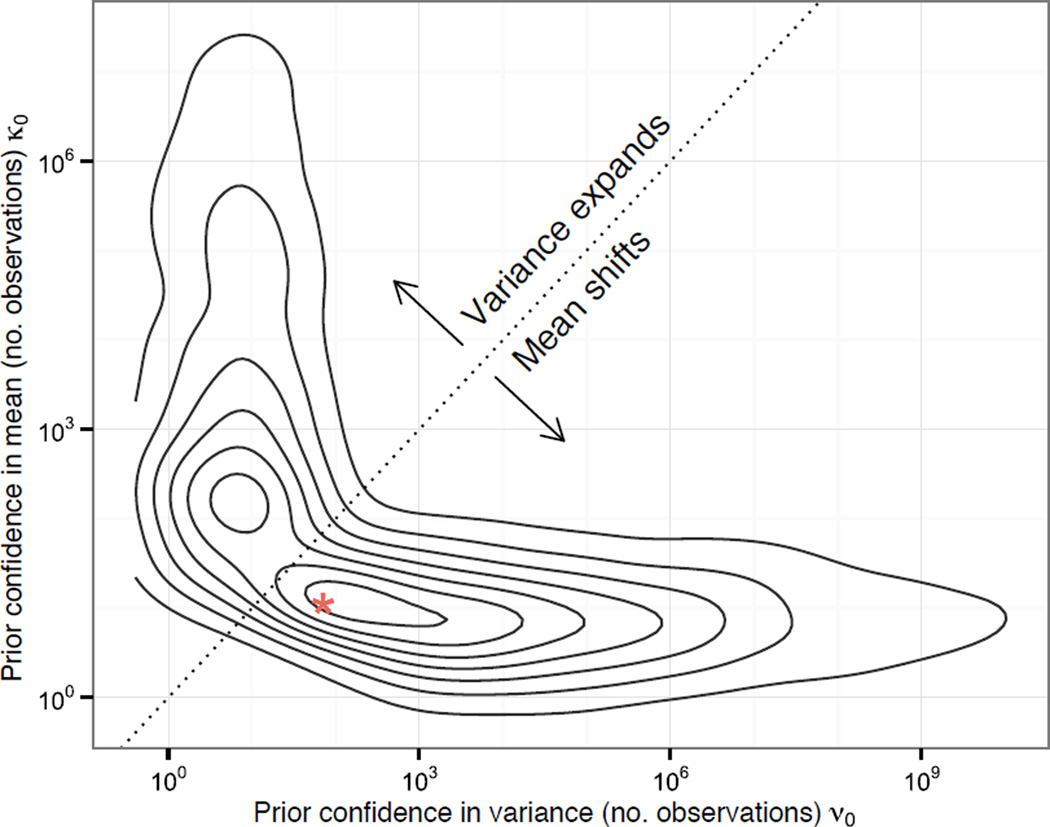

Third, the joint distribution of the prior effective sample size hyperparameters (Figure 7), rather than just the point estimates, reveals that as long as one of these hyperparameters is low (on the same order of magnitude as the number of exposures to the adaptor stimulus), the other confidence hyperparameter can become extremely large without changing the model’s predictions so much that the likelihood suffers. This is because there are two ways that the positive aftereffect observed here (and in other recalibration studies) might come about after exposure to an ambiguous adaptor stimulus. This is discussed in more detail next.

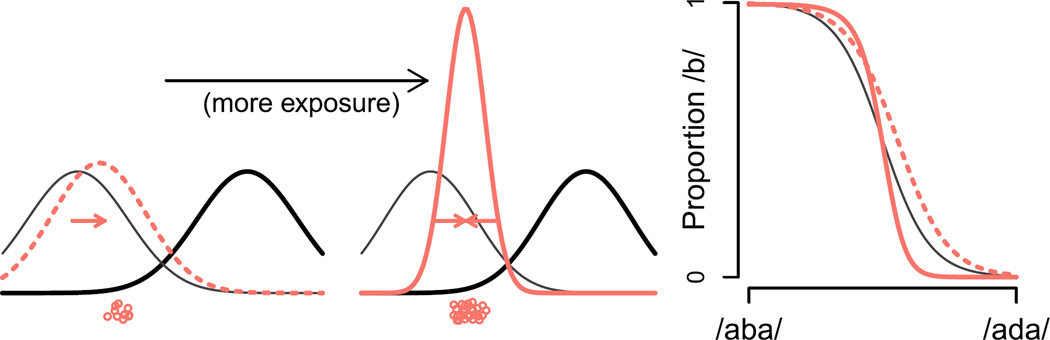

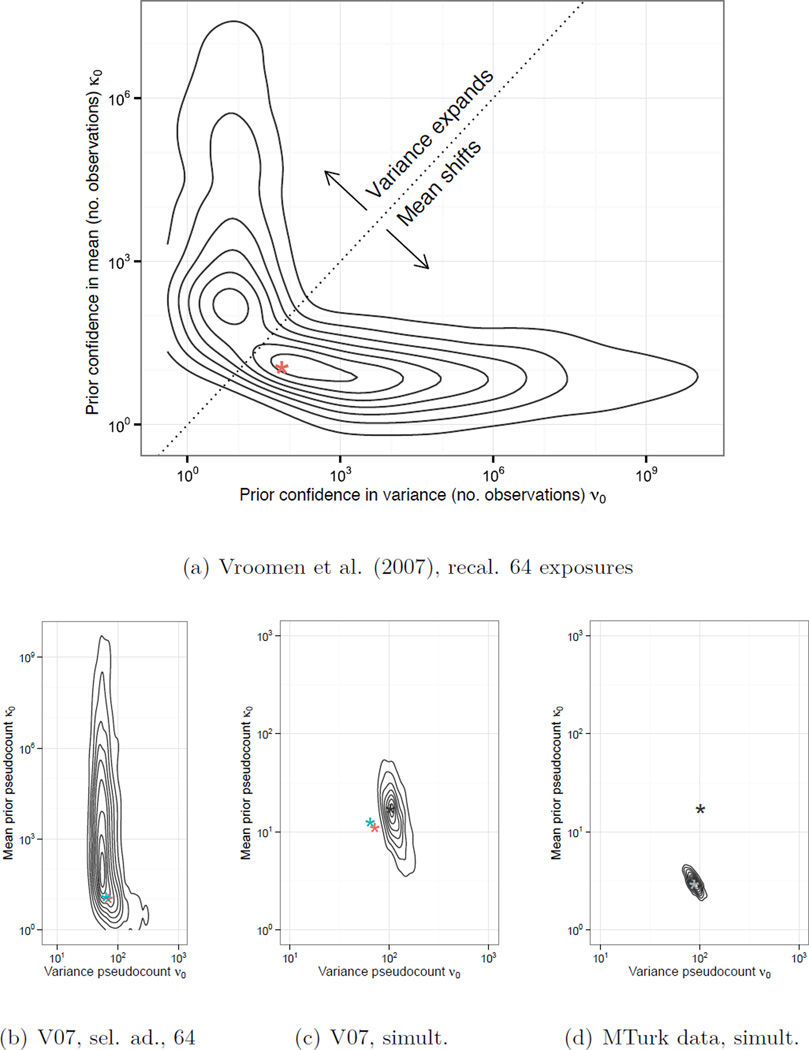

Figure 7.

The belief-updating model finds two different ways of fitting the build-up of recalibration (Figure 6), as illustrated by this density plot of the distribution of the mean and variance prior confidence parameters (κ0 and ν0, respectively) that are consistent with the data (estimated by samples via MCMC). The diagonal shows solutions with equal confidence in prior beliefs about the mean and variance. Points below the line have higher confidence in the variance, and adapt by shifting the category mean. Points above the line have higher confidence in the mean and adapt by expanding the category variance. Note that even though the best-fitting parameters (red asterisk, and curve in Figure 6) are below the line (and shift the mean), there are areas of high posterior probability on both sides of the line (hills on the contour plot).

Recalibration by category shift or expansion?

One of the advantages of model-fitting using Bayesian methods is that it allows us to evaluate the range of model hyperparameters which provide a good fit to the data. For the build-up of recalibration modeled in the previous section, the full posterior distribution over model hyperparameters (effective prior sample sizes and visual cue weight) provides interesting insight into how a human learner might adapt. To illustrate this, we examine the posterior distribution of the prior effective sample sizes for the category means and variances given the behavioral data.

The joint distribution of the two confidence hyperparameters—the mean confidence κ0 and the variance confidence ν0—is shown in Figure 7. This distribution covers an extremely wide range of both hyperparameters, although this entire range still results in qualitatively consistent predictions about the aftereffect at each level of exposure (Figure 6). The wide range covered by the posterior distribution of hyperparameters is due to the limited amount of data available to the model in this particular case. Note that this is not a problem. It merely reflects that these data do not uniquely constrain the model. A human learner exposed to the same data would face the same problem. Indeed, we will see below that when the model is constrained by further data, the posterior distribution of the hyperparameters will become more narrow.

Of interest is that the posterior is bimodal: there are two ways that belief updating can account for the build-up of recalibration. The best-fitting (MAP-estimate) hyperparameters correspond to a shift in the mean of the adapted category: the prior effective sample size of the mean, κ0, is less than that of the variance, ν0, and as a result the prior beliefs about the mean are overcome more quickly than the variance. However, this pattern is only true for roughly half the samples from the joint posterior of the hyperparameters (pMCMC(ν0 > κ0) = 0.55). In the other half of the samples, the prior effective sample size for the mean is on average very high, meaning that the positive aftereffect observed in the data is modeled as a change in the variance of the adapted category, with a mean that is essentially fixed.

This combination of hyperparameters (flexible variance and fixed mean) can lead to a positive aftereffect after exposure to auditorily ambiguous but labeled tokens in the following way. If the listener has very high confidence in the mean of each category coming into a new situation, then repeated exposure to an ambiguous segment which is labeled as belonging to one category is best explained by the hypothesis that the talker is producing that category with a high degree of variability. Increasing the variance of the recalibrated category in this way means that this category assigns more likelihood to the previously ambiguous part of the continuum, which leads to a positive aftereffect.
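The two routes to a positive aftereffect can be illustrated with a small numerical sketch. It assumes two Gaussian categories with equal prior probability on a single cue dimension; the category means (±2), standard deviations, and the sizes of the shift and expansion are hypothetical values chosen for illustration, not estimates from the data.

```python
import math


def normal_pdf(x: float, mean: float, sd: float) -> float:
    """Density of a normal distribution with the given mean and sd at x."""
    z = (x - mean) / sd
    return math.exp(-0.5 * z * z) / (sd * math.sqrt(2.0 * math.pi))


def p_b(x: float, mean_b: float, sd_b: float,
        mean_d: float, sd_d: float) -> float:
    """Posterior probability of /b/ at cue value x, assuming equal priors."""
    like_b = normal_pdf(x, mean_b, sd_b)
    like_d = normal_pdf(x, mean_d, sd_d)
    return like_b / (like_b + like_d)


MIDPOINT = 0.0  # ambiguous point of the hypothetical /b/-/d/ continuum

# Before exposure: symmetric categories, so the midpoint is fully ambiguous.
before = p_b(MIDPOINT, mean_b=-2.0, sd_b=1.0, mean_d=2.0, sd_d=1.0)
# Route 1: the /b/ mean shifts toward the midpoint, variance unchanged.
after_shift = p_b(MIDPOINT, mean_b=-1.0, sd_b=1.0, mean_d=2.0, sd_d=1.0)
# Route 2: the /b/ mean stays fixed, but its standard deviation doubles.
after_expand = p_b(MIDPOINT, mean_b=-2.0, sd_b=2.0, mean_d=2.0, sd_d=1.0)

print(before, after_shift, after_expand)
```

In both cases the probability of a /b/ response at the midpoint rises above 0.5. Note that in this sketch the expansion route depends on the categories being well separated relative to their spread: if the midpoint already lies within one standard deviation of the /b/ mean, widening /b/ further lowers its density there.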

Thus, a positive aftereffect is qualitatively consistent with both a shift in mean and an increase in category variance. Moreover, the joint distribution of hyperparameters fit to the build-up of recalibration observed by Vroomen et al. (2007) shows that in the ideal adapter framework, the quantitative effect of cumulative exposure on the build-up of recalibration is also ambiguous in the same way.

Maye et al. (2008) behaviorally investigated a similar question. Specifically, they wondered whether positive aftereffects typically observed in recalibration experiments were really due to a shift in the underlying category, or just a relaxation of the criterion for what counts as a good exemplar of the adapted category. They exposed listeners to vowels that were shifted in a particular direction (e.g. shifting the high vowel /ɪ/ in wicked down to the mid vowel /ɛ/ to make ‘wecked’). In a lexical decision task after exposure to such downward shifts, listeners accepted more nonwords that were downward-shifted versions of real words, but not nonwords that were upward-shifted words. This corresponds, in the ideal adapter framework, to a shift in the means of the adapted categories, without a substantial change in the variance.

It may be tempting to conclude based on these results that all recalibration effects result from shifting category means. However, the ideal adapter framework predicts that positive aftereffects due to changes in either means or variances are possible in different situations, depending in particular on whether the listener has greater confidence in their prior beliefs about category variances or means. This is, to the best of our knowledge, a novel prediction. Since one of our goals is to provide a guiding framework for future work on speech processing and adaptation, we elaborate on this prediction.

When might the listener have greater confidence in the mean of a category rather than its variance, and vice versa? In the ideal adapter framework, the listener’s prior beliefs about a category parameter constitute a prediction about the distribution of values they might expect that parameter to take on in the future. The level of confidence in prior beliefs is closely related to the level of variability of a particular category parameter that the listener expects across situations. For cues whose typical values vary across situations (e.g. formant frequencies), an ideal adapter should expect substantial variability in the underlying means, in order to be prepared to shift their beliefs about category means on that cue when appropriate.

The variance of a particular cue for a particular category is closely related to how reliable that cue is at distinguishing one category from another (Allen & Miller, 2004; Clayards et al., 2008; Newman et al., 2001; Toscano & McMurray, 2010): for two categories with fixed means, increasing the variance of both categories means that their distributions will overlap more and, on average, observing that particular cue will be less informative about the intended category. Thus for a cue which varies in reliability from one situation to the next (with relatively stable category means), the ideal adapter should in general be more likely to adjust category variance than means.13
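The relationship between category variance and cue reliability can be made concrete with a short calculation. The sketch below assumes two Gaussian categories with equal prior probability and a shared standard deviation σ, in which case an ideal observer classifying on this cue alone is correct with probability Φ(|μ_a − μ_b| / 2σ), where Φ is the standard normal cumulative distribution function. The means and standard deviations are hypothetical values.

```python
import math


def ideal_accuracy(mean_a: float, mean_b: float, sd: float) -> float:
    """Proportion correct for an ideal observer using a single cue.

    ASSUMPTIONS: two Gaussian categories, equal priors, shared sd.
    """
    d_prime = abs(mean_b - mean_a) / sd
    # Standard normal CDF evaluated at d'/2, written via the error function.
    return 0.5 * (1.0 + math.erf(d_prime / (2.0 * math.sqrt(2.0))))


# Fixed category means; only the shared standard deviation changes.
for sd in (0.5, 1.0, 2.0, 4.0):
    print(sd, round(ideal_accuracy(mean_a=-1.0, mean_b=1.0, sd=sd), 3))
```

Accuracy falls toward chance (0.5) as the shared variance grows, which is the sense in which a high-variance cue is less informative about the intended category.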

Thus, the ideal adapter framework predicts that there is a range of strategies available to the listener for adapting to new talkers. In real-life accent adaptation, there are usually many categories and cue dimensions where an accent is unusual, and in some cases these differences can be due to both changes in the cue values typically used to realize a category (the mean) and changes in how reliable a given cue is at distinguishing a category (the variance). In real speech there are many partially informative cues to any given category, and it may be a completely reasonable strategy for the listener to simply decide that a particular cue is uninformative and ignore it (or at least downweight it).

This points to a critical empirical gap in our understanding of speech perception. Most existing work on phonetic adaptation follows one of two approaches. The first approach emphasizes relatively natural conditions and accent variability, where language occurs in context (e.g. sentences) and listeners must adapt to accents that vary along many categories and cue dimensions (Baese-Berk et al., 2013; Bradlow & Bent, 2008; Clarke & Garrett, 2004; Sidaras et al., 2009). The second approach is that of perceptual recalibration/learning, which typically presents speech as isolated words or syllables and emphasizes acoustic manipulation of a single category or auditory cue. While perceptual recalibration has been observed when these unusual pronunciations were presented as part of running speech in a story (Eisner & McQueen, 2006) or as words spoken by a talker who has a real foreign accent (Reinisch & Holt, 2014), it is an open question under what conditions listeners downweight cues and when they track changes in mean cue values during naturalistic accent adaptation.

In order to address listeners’ ability to adapt to novel talkers based on the statistical properties of their speech as predicted by the ideal adapter framework, we see two potentially fruitful directions. First, we think that perceptual recalibration paradigms should be scaled up to explore the role of natural levels of within-category, within-talker variability and the role of controlled deviation in multiple categories and cue dimensions in recalibration. Second, we think that naturalistic accent adaptation paradigms might be refined to specifically investigate how accent difficulty is driven by deviations from unaccented speech in the average value of a cue versus unusual variability in that cue. Given that, across talkers, the average value of some cues varies quite a bit (Newman et al., 2001), while for others it is relatively consistent (Allen et al., 2003), it might be expected that listeners will have a harder time adapting to accented speech which is characterized by deviant values of cues that are typically stable across talkers (like VOT; Sumner, 2011).

We have discussed how phonetic recalibration is qualitatively predicted by the ideal adapter framework, and presented a model in this framework which captures the incremental build-up of recalibration quantitatively. In the next three sections we show how this framework provides a potentially unifying perspective on phonetic adaptation more broadly.

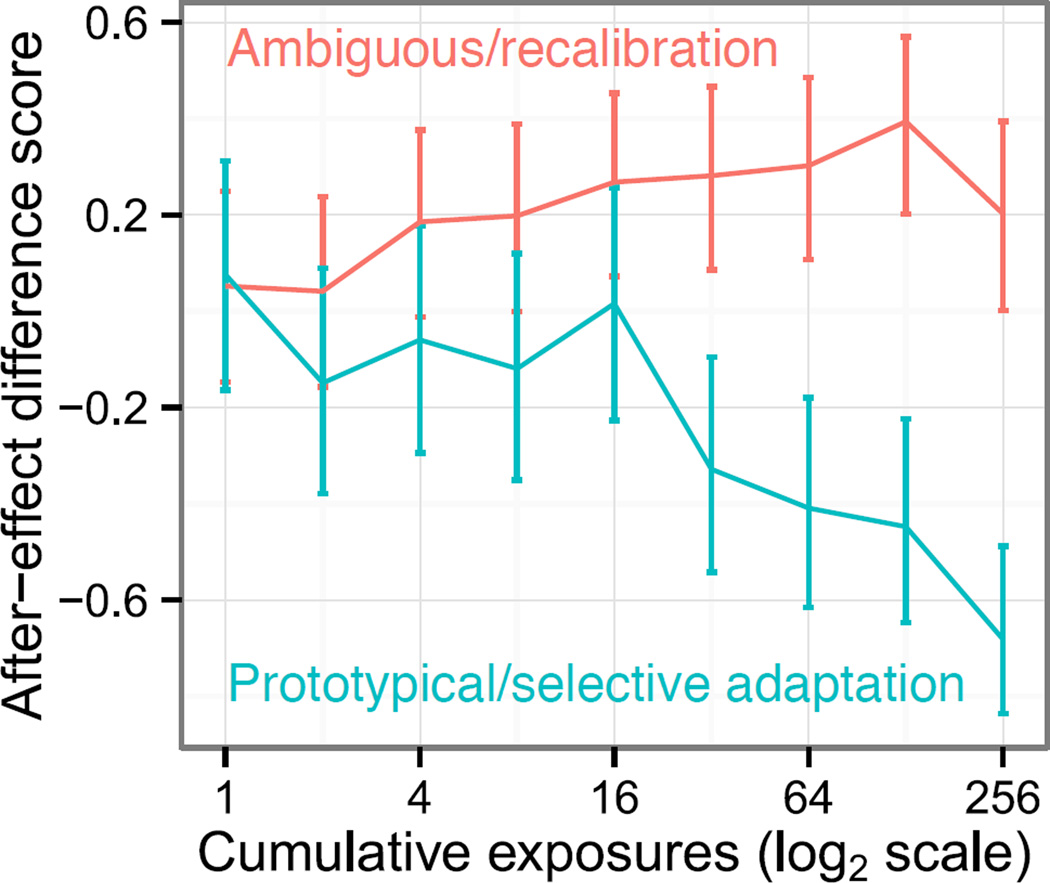

Beyond recalibration: selective adaptation

Next we apply the ideal adapter framework to a phenomenon known as selective adaptation (Eimas & Corbit, 1973; Samuel, 1986). Traditionally, selective adaptation is thought to be due to mechanisms that are distinct from those underlying perceptual recalibration. We will show, however, that the cumulative build-up of selective adaptation is captured by the same belief-updating model introduced in the previous sections.

Selective adaptation occurs after repeated exposure to a single phonetic category, and is characterized by a negative aftereffect, where fewer items on a phonetic continuum are classified as the adapted category. For instance, and as we will discuss in more detail below, Vroomen et al. (2007) found that repeated exposure to a prototypical /b/ audio-visual adaptor constructed from the /b/ endpoint of their /b/-/d/ continuum (rather than the ambiguous midpoint) resulted in fewer /b/ responses during test trials (and vice versa for /d/).