Abstract

Neural stimulation can be used as a tool to elicit natural sensations or behaviors by modulating neural activity. This can be potentially used to mitigate the damage of brain lesions or neural disorders. However, in order to obtain the optimal stimulation sequences, it is necessary to develop neural control methods, for example by constructing an inverse model of the target system. For real brains, this can be very challenging, and often unfeasible, as it requires repeatedly stimulating the neural system to obtain enough probing data, and depends on an unwarranted assumption of stationarity. By contrast, detailed brain simulations may provide an alternative testbed for understanding the interactions between ongoing neural activity and external stimulation. Unlike real brains, the artificial system can be probed extensively and precisely, and detailed output information is readily available. Here we employed a spiking network model of sensorimotor cortex trained to drive a realistic virtual musculoskeletal arm to reach a target. The network was then perturbed, in order to simulate a lesion, by either silencing neurons or removing synaptic connections. All lesions led to significant behvaioral impairments during the reaching task. The remaining cells were then systematically probed with a set of single and multiple-cell stimulations, and results were used to build an inverse model of the neural system. The inverse model was constructed using a kernel adaptive filtering method, and was used to predict the neural stimulation pattern required to recover the pre-lesion neural activity. Applying the derived neurostimulation to the lesioned network improved the reaching behavior performance. This work proposes a novel neurocontrol method, and provides theoretical groundwork on the use biomimetic brain models to develop and evaluate neurocontrollers that restore the function of damaged brain regions and the corresponding motor behaviors.

Keywords: neurostimulation, spiking network model, biomimetic, kernel adaptive filtering, inverse model, musculoskeletal arm, virtual arm, neuroprosthetics

1. Introduction

Recent years have seen extraordinary progress in technologies that not only allow us to read the brain signals, but also to artificially stimulate neural circuits. Neurostimulation will be the best way to demonstrate our understanding of neural codes (Stanley, 2013), by injecting signals that produce specific natural sensory sensations (O'Doherty et al., 2011; Choi et al., 2012; Klaes et al., 2014), motor behaviors (Overduin et al., 2012; Van Acker et al., 2013), or memory (Hampson et al., 2013) and cognitive (Hampson et al., 2012) influences. Clinically, a major challenge for the next generation of motor-function-restoring brain-machine interfaces (BMIs) is the incorporation of realistic somatosensory feedback via cortical stimulation (Bensmaia and Miller, 2014). These new research directions will enable neurostimulation tools that provide ongoing dynamic neural modulation to treat brain disorders (Underwood, 2013) and repair brain lesions (Sanchez et al., 2012). These could also potentially be used to induce long-term plasticity leading to lasting recovery (Jackson et al., 2006; Koralek et al., 2012; Song et al., 2013).

Meeting these challenges raises a number of theoretical and technological obstacles. To start with, both neural recording and stimulation are still very limited in terms of scale (number of simultaneous neurons), and of spatial and temporal precision. However, new emerging tools, particularly optogenetics, may provide more precise stimulation of groups of single cells (Suter et al., 2014; Warden et al., 2014). A second obstacle is our still rudimentary understanding of neural coding and brain dynamics, which makes it difficult to provide neurostimulation in a way that is physiologically meaningful. For example, we have only described a small fraction of the large number of cell types and physiological responses in the brain (Douglas and Martin, 2012; Harris and Shepherd, 2015). Understanding the relation between neurophysiology and behavior will require characterizing the interactions between the multiple spatial and temporal scales of the brain, ranging from the molecular (< 1 um and < 1 us) to the macroscopic level (>1 cm and >1 s). Many of these, such as the effect of spatiotemporal input patterns in the dendritic trees or the role of physiological oscillations, remain open questions. Another fundamental component to determine is the high-level algorithms or computations the brain employs to encode and manipulate information (Carandini, 2012). An additional complication is that the brain is a highly non-stationary system, as a result of noisy sensory inputs (even in a highly controlled environment) and internal recurrent dynamics, including modulation by thought, attention, motivation, fatigue, hormones, etc. These factors limit the ability to predict the outcome of neurostimulation and, therefore, to achieve targeted neural control. Consequently, neurostimulation studies have been predominantly confined to measuring the elicited neural responses (Clark et al., 2011; Van Acker et al., 2013).

A biomimetic brain simulation does not suffer from many of these limitations, as it provides a fully reproducible and controllable system with full access to all neurons and synapses. The biomimetic system can also be manipulated to reflect different conditions, such as normal physiology vs. pathophysiology. Models are however limited by their dissimilarity to the brain, which means the solutions found using the model may not be directly applicable to the real brain. In the Discussion we examine the current limitations of our model and what is required to gradually bridge the distance to real applications. Nonetheless, our work can serve as theoretical groundwork toward employing brain simulations to develop and evaluate neural control methods. As models continue to augment their level of detail and realism (Markram et al., 2015), they will provide an increasingly useful tool to help understand the interactions between neurostimulation and ongoing intrinsic neural activity. Previously, we employed biomimetic models to explore the effects of neurostimulation on information flow in neocortex (Kerr et al., 2012), plasticity in somatosensory cortex (Song et al., 2013), and oscillations in primary motor cortex (Chadderdon et al., 2014).

In this paper, we utilize a biomimetic spiking model of sensorimotor cortex connected to a realistic virtual musculoskeletal arm (Dura-Bernal et al., 2015b), which provides a direct link between neural activity and motor behavior. We employ this system to demonstrate the use of a neural control method, based on an adaptive inverse model, to restore behavioral performance of the virtual arm after lesioning of the spiking model. The approach consists of first representing the nonlinear spiking dynamics in a reproducing kernel Hilbert space (RKHS), to enable the use of kernel adaptive filtering techniques (Chen et al., 2012; Li et al., 2013) to construct an inverse model of the sensorimotor spiking network (Li et al., 2015). Then, this inverse model is used to derive neurostimulation patterns that restore the pre-lesion patterns of network spiking activity and thereby partially “heal” or compensate for the lesion. Our results suggest employing biomimetic brain and musculoskeletal models could be useful to study the effects of neurostimulation; and demonstrate the efficacy of the kernel adaptive inverse neurocontroller to repair lesioned neural circuits and restore behavioral performance in the simulation.

2. Methods

2.1. Sensorimotor system model

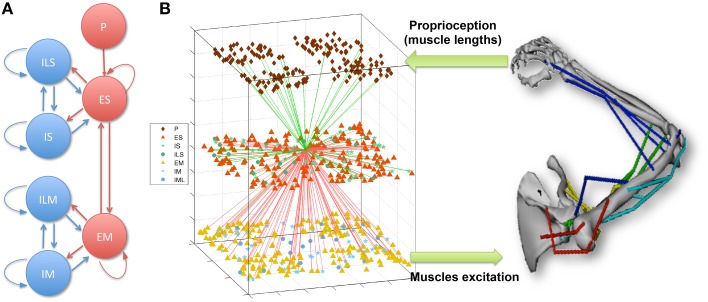

The model of the sensorimotor system consists of a spiking neuronal network connected to a virtual musculoskeletal arm (Figure 1; Neymotin et al., 2013; Dura-Bernal et al., 2015b). The network includes 704 model neurons of 4 different types distributed in 7 subpopulations, and includes cortical-like recurrent connectivity. Using reinforcement learning combined with spike-timing dependent plasticity (STDP), the network is able to learn to drive the virtual arm to reach a target. This section describes the spiking network and musculoskeletal arm models in more detail. The full neuronal network and virtual arm models can be downloaded from ModelDB at http://modeldb.yale.edu/183014.

Figure 1.

(A) Spiking network population connectivity diagram. Proprioceptive (P) units provide input to the sensory (S) population, which is recurrently connected to the excitatory motor (M) population. Both S and M are composed of recurrently connected excitatory (E), fast-spiking (I) and low-threshold spiking (IL) subpopulations. (B) Overview of the sensorimotor system interfacing the spiking network with the virtual musculoskeletal arm. The virtual arm receives neural excitation from the EM population and feeds back proprioceptive information to the P population. As an example of cell connectivity, all incoming (green) and outgoing (red) connections of a single ES neuron are shown.

2.1.1. Spiking neuronal network

Each individual neuron was modeled as event-driven unit which followed a set of rules in order to replicate a set of realistic neuronal features, such as bursting, adaptation, depolarization blockade, and voltage-sensitive NMDA conductance (Lytton and Stewart, 2006; Lytton et al., 2008a,b; Neymotin et al., 2011). This model provides a good trade-off between cell realism and speed of computation, and makes it adequate for simulating networks that include hundreds of these neurons. Each cell had a membrane voltage state variable, which was updated based on three possible events: synaptic input, threshold spike generation, and refractory period. Three types of synaptic inputs were modeled (AMPA, NMDA, and GABAA), using reversal potentials, time constants and delays consistent with physiological data. Spikes were generated when a voltage threshold was crossed, and were propagated to target neurons after a synaptic conduction delay. After spiking, a relative refractory period was simulated by increasing the threshold potential and adding hyperpolarization. In addition to spikes generated by the network neurons, subthreshold Poisson-distributed spike inputs to synapses were used to provide background activity, representing neural input from surrounding regions not explicitly modeled. A comprehensive description of the cell model equations and parameter values is available in previously published papers (Neymotin et al., 2013; Dura-Bernal et al., 2015b) and on ModelDB.

Input from the virtual arm to the neural network was provided by the proprioceptive (P) population, which consisted of 192 NetStims (NEURON spike generators) and encoded the muscle lengths. P units were divided into four subpopulations, each responsible for representing the mean length of one of four muscle groups: shoulder extensors, shoulder flexors, elbow extensors and elbow flexors. Units employed population coding to represent the muscle lengths, such that within each subpopulation, individual units only fired to a small range of lengths.

The neural network represented a simplified model of the two main elements involved in the sensorimotor cortex learning loop (Wolpert et al., 2011): sensory input and motor output. The sensory (S) and motor (M) populations were each comprised of 192 excitatory cells (ES and EM), 44 fast-spiking inhibitory cells (IS and IM), and 20 low-threshold inhibitory cells (ILS and ILM). Recurrent connectivity was present within each cell class, between the excitatory and inhibitory neurons of each population, and between the two main excitatory cell classes (ES and EM) (Figure 1). Cells were connected randomly based on a probability of connection and weights that depended on the presynaptic and postsynaptic cell class and location. ES cells received input from the P units encoding muscle lengths, which enabled them to represent the arm posture by combining information from multiple muscle lengths. The output of EM neurons, which received strong afferent inputs from the ES cells, was used to generate the muscle excitations sent to the virtual arm. Excitation to each muscle group was calculated by summing the number of spikes of the corresponding EM subpopulation (48 neurons for each muscle group) over an 80 ms sliding window, and threshold-normalizing the value between 0 and 1.

The sensorimotor system therefore forms a closed-loop circuit: virtual arm muscle lengths are encoded in the P population, P units project to the S population, which in turn projects to the M population, which provides excitation to the virtual arm muscles. By employing reinforcement learning—reward or punishment depending on whether the arm is getting closer or farther from the target—to modify the synaptic weights via spike-timing dependent plasticity (STDP), the network can learn to drive the arm to a target. This requires a training phase where exploratory movements are enforced in the virtual arm, in order to explore the space and generate the appropriate mapping between input sensory information and output motor commands (Neymotin et al., 2013; Dura-Bernal et al., 2014, 2015b). Currently, the network can only be trained to reach one target at a time. However, it can be extended to learning multiple targets by adding a population to encode target selection, as we (Dura-Bernal et al., 2015a) and others (Spüler et al., 2015) have shown.

The spiking network simulations were run in NEURON 7.3 (Hines and Carnevale, 2001; Carnevale and Hines, 2006) on a Linux workstation with 24 Intel Xeon 2.7 GHz cores and on a High-Performance Computing system with 512 AMD Opteron 2.6 Ghz cores.

2.1.2. Virtual musculoskeletal model

The virtual arm consists of a biomechanical and dynamical model of the upper right limb musculoskeletal system. The original model (Holzbaur et al., 2005), downloadable from the SimTK website (http://simtk.org/home/up-ext-model), was adapted for our purposes by limiting it to shoulder and elbow joint rotation (two degrees of freedom) in the horizontal plane. The model includes 8 skeletal rigid bodies that serve to anchor 18 different muscles, responsible for shoulder extension and flexion, and elbow extension and flexion.

Virtual arm joint kinematics and dynamics were based on anatomical studies, match experimental measurements of an average-sized human adult male, and were implemented using an extension of the Hill-type muscle model (Zajac et al., 1989; Schutte et al., 1993; Thelen et al., 2003). In this model, muscle forces are parameterized based on the optimal fiber length, peak force, tendon slack length and pennation angle, and calculated as a function of four variables: the muscle and tendon lengths, contraction velocity and muscle fiber activation. In turn, muscle activation is derived from an ordinary differential equation driven by an external signal: the muscle excitation. In our system, muscle excitation is provided by the motor population of the spiking network model. The arm kinematics, including position, velocity and acceleration of each joint, are then computed based on the muscle forces using the recursive Newton-Euler algorithm (Featherstone and Orin, 2000). Further details are available in our previous work (Dura-Bernal et al., 2015b) and via ModelDB.

2.2. Adaptive inverse model for neural control

We aim to restore the neural response of the EM population to its pre-lesion state, and consequently recover the original reaching trajectory. A set of probing neurostimulation sequences and their corresponding neural activity were recorded and used to construct an inverse model of the stimulus-response pairs. The goal is to automatically generate a set of optimized neurostimulation patterns given a desired neural response. To learn this inverse mapping, we applied the kernel adaptive filtering (KAF) method (Liu et al., 2010).

2.2.1. Kernel adaptive filtering

Kernel methods (Scholkopf and Smola, 2001) form a powerful unifying framework in classification, clustering and regression, contributing to many impactful applications in machine learning, signal processing and biomedical engineering. KAF is an online learning technique that combines adaptive signal processing with kernel methods. The idea is to transform the input data into a richer and potentially infinite-dimension feature space via a positive definite reproducing kernel, such that the inner product operation in the feature space can be computed efficiently in the input space through the kernel evaluation. KAF performs classical online linear methods in the enriched feature space or reproducing kernel Hilbert space (RKHS). This way it moves beyond the limitations of the linear model to provide general nonlinear solutions in the original input space. This input space is not restricted to numeric data, and could consist, for example, of spike trains (Li et al., 2013). KAF brings together adaptive signal processing and feedforward artificial neural networks, by combining the best of both worlds: the universal approximation property of neural networks and the simple convex optimization of linear adaptive filters.

In the family of kernel adaptive filters, the kernel least mean square (KLMS) algorithm (Liu et al., 2008) is the simplest stochastic gradient descent method that operates on the instantaneous error. A finite impulse response (FIR) filter trained in the RKHS using the least mean squares (LMS) algorithm, it can be viewed as a single-layer feedforward neural network or perceptron. For a set of n input-output pairs {(u1, y1), (u2, y2), …, (un, yn)}, the input vector (where 𝕌 is a compact input domain in ℝm) is mapped into a potentially infinite-dimensional feature space 𝔽. Define a 𝕌 → 𝔽 mapping φ, the feature-space parametric model becomes

| (1) |

where Ω is the weight vector in the RKHS. Using the representer theorem (Scholkopf et al., 2001) and the “kernel trick,” (1) can be written as

| (2) |

where (u, u′) is a Mercer kernel, corresponding to the inner product 〈φ(u), φ(u′)〉, and αi are the weight coefficients. The most commonly used kernel is the Gaussian kernel

| (3) |

where a > 0 is the kernel parameter. To effectively address the growth of the radial basis function structure in KAF, the quantized version of the KLMS algorithm was used (Chen et al., 2012).

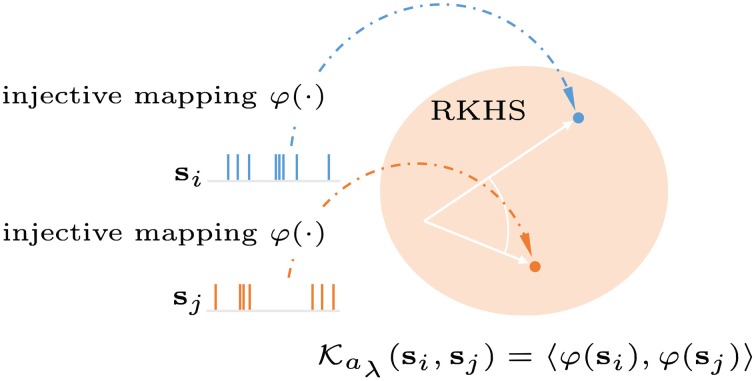

2.2.2. Reproducing Kernel Hilbert Space (RKHS) for spike trains

Here, we briefly describe the reproducing kernel used to map the neural responses to a RKHS for spike trains. A spike train or sequence of M ordered spike times, i.e., s = {tm ∈ :m = 1, …, M} in the interval = [0, T], can be viewed as a realization of an underlying stochastic point process with conditional intensity function λ(t|Ht), where t ∈ = [0, T] denotes the time coordinate, and Ht is the history of the process up to t. Spike trains can be mapped into an RKHS by defining a strictly positive definite kernel, the Schoenberg kernel, between the conditional intensity functions of two point processes (Paiva et al., 2009) as

| (4) |

where aλ > 0 is the spike-train kernel parameter. The intensity function can be estimated by convolving the spike times with a smoothing kernel g(t), yielding

| (5) |

As shown in Figure 2, the entire spike train is mapped into a location or function in the RKHS. For simplicity, we use the rectangular function , where ≫ the inter-spike interval, and U(t) is a Heaviside function. Let denote the spike train for the i-th sample of the n-th spiking unit, the multi-unit spike kernel is taken as the unweighted sum over the kernels on the individual units

| (6) |

Figure 2.

Graphical representation of a reproducing kernel Hilbert space defined on spike trains using the Schoenberg kernel. Spike trains are transformed into a high dimensional feature space (Hilbert space). Applying the kernel trick allows inner products in this space to be computed without explicit reference to the feature vectors.

2.2.3. Inverse model of spiking network

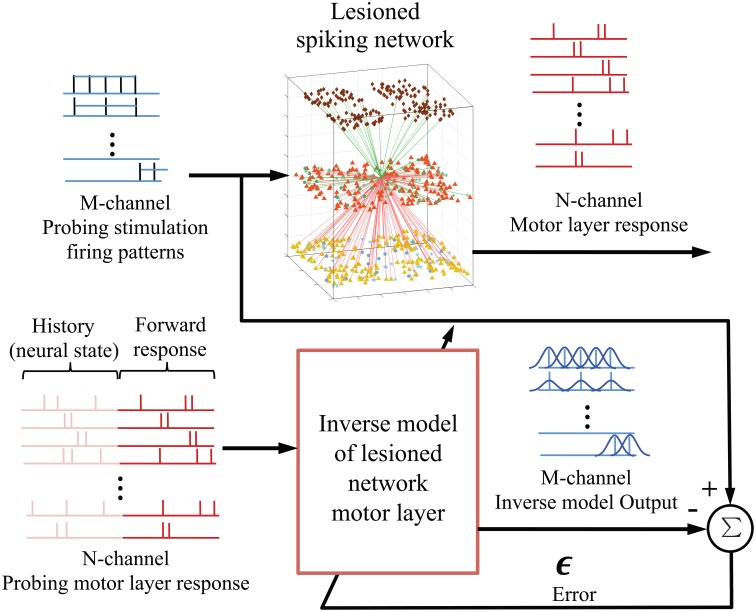

As shown in Figure 3, the goal is to learn an inverse model of the plant P, i.e., lesioned spiking network motor layer, from the probing stimulus-response pairs; and then apply the pre-lesioned motor response to the trained multiple-input-multiple-output (MIMO) decoding model to obtain an optimized repair neurostimulation pattern. The neural responses of the EM population were mapped into a RKHS for spike trains and the filter coefficients were adapted using errors between the desired probing neurostimulation firing rates and the inverse model output. Using cross validation, the kernel parameter in (4) was set at aλ = 0.05. The allowable neurostimulation duration was fixed at 200 ms, from the 100 to 300 ms mark of each trial.

Figure 3.

Integration of kernel adaptive inverse controller with biomimetic spiking model of sensorimotor system. The M-channel probing neurostimulation firing pattern (rates) and the corresponding N-channel EM population responses are used to train an inverse model or mapping of the lesioned spiking network motor layer. The motor layer response window includes the history (provides current state) and the forward response. Once fully trained, the inverse model outputs an estimate of the optimal neurostimulation for a desired neural response.

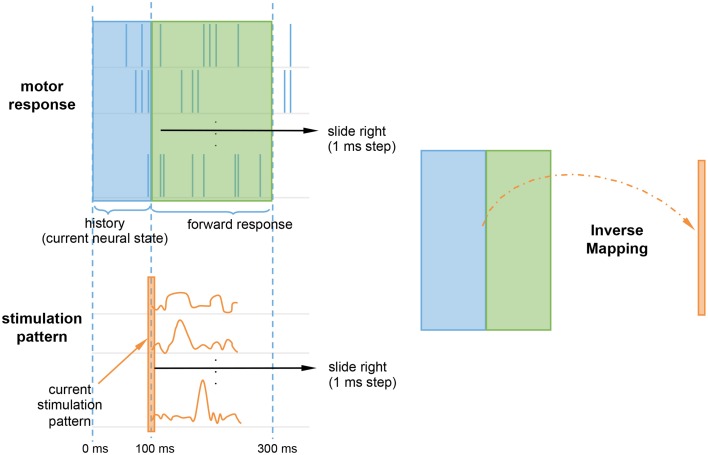

Rather than continuously stimulating the spiking network, we were interested in whether an initial onset of neurostimulation patterns could repair the lesioned reaching trajectories. The topological relationship between the probing stimuli and responses was set using a delay embedding of 300 or 200 ms, for the left and bottom target, respectively. The delays were selected to reflect the expected duration of the stimulation effect or impulse response for each target, determined by subtracting the end the stimulation period (300 ms) from the reaching trajectory duration. To account for the non-stationarity of the spiking network, the stimuli was not only mapped to the forward response, but also to the history of the motor response (Figure 4). The rationale being that, like state machines, to calculate the correct next state, the system needs to know both the input and the the current neural state. The history, or backward motor response, provides the current neural state. Therefore, every 1 ms step, a sliding window containing both the history and the forward response is mapped to the current stimulation pattern. The current stimulation pattern consists of the vector of m firing rates for that time step, where m is the size of the ES population. Since stimulation starts at 100 ms, the initial history window size only ranges 0–100 ms, but will grow to match the size of the forward response window as time progresses.

Figure 4.

Inverse mapping of motor response onto the stimulation pattern. At every time step, a sliding window containing the motor forward response (green) and history (blue) is mapped to the current stimulation pattern (orange). The history accounts for non-stationarity by providing the system with the current neural state. The current stimulation pattern consists of a vector of firing rates for the current time step.

The stimulation patterns generated by the inverse model were input in the spiking network by connecting spike generators (NetStims) to each of the ES population cells. Different stimulation patterns were characterized in terms of the targeted cell, starting time, duration, and firing rate of the external spike generator, which provided input via the AMPA receptor synapse of the cell. The synaptic weight between the spike generator and the cell was ten times larger than that of regular background synaptic inputs.

The Matlab code for the inverse neurocontroller, the probing dataset, and the Python/NEURON code to lesion and stimulate the spiking network are available online at: https://github.com/Neurosim-lab/controller_spiking_network_arm.

3. Results

Initial trained reaching trajectories were perturbed by different types of simulated lesions of the sensorimotor network. These lesions were then repaired using the adaptive inverse controller which aimed to restore the spiking patterns and reaching behavior. Repair commenced by generating a set of probes for the perturbed system, in order to inform the inverse model.

3.1. Simulated lesions and response probing data

Original trajectories were obtained from the spiking network, after training it via reinforcement learning STDP to drive the virtual arm to a specified target. The setup was similar to that of a common sensorimotor experimental task, the center-out reaching task (Hatsopoulos et al., 2004; Hwang and Shadmehr, 2005; Sanchez et al., 2011). The subject starts from a central position in a horizontal 2D plane and has to reach one of the targets in a surrounding circumference. For the purpose of evaluating the neurocontroller, we chose two different targets (bottom and left) located 15 cm from the starting position. These targets were chosen because they involve different sets of muscles: shoulder extensor and elbow flexor for bottom target; and shoulder flexor and elbow extensor for left target (Figure 5). Reaching duration was 600 ms for the left target and 500 ms for the bottom target. Target distance as well reaching durations (or velocities) are consistent with experimental data of humans and primates performing a similar task (Hatsopoulos et al., 2004; Hwang and Shadmehr, 2005; Sanchez et al., 2011).

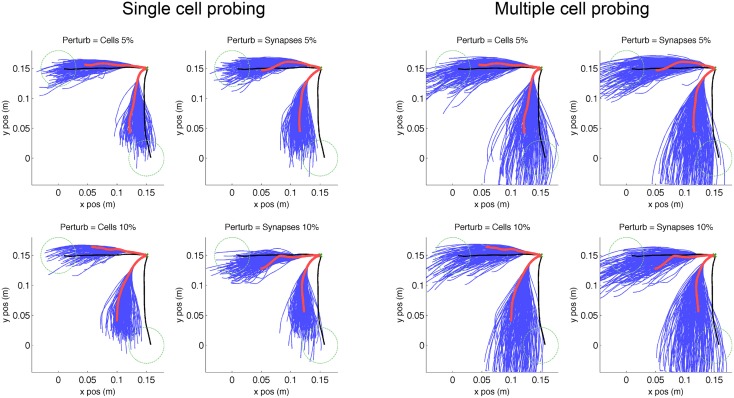

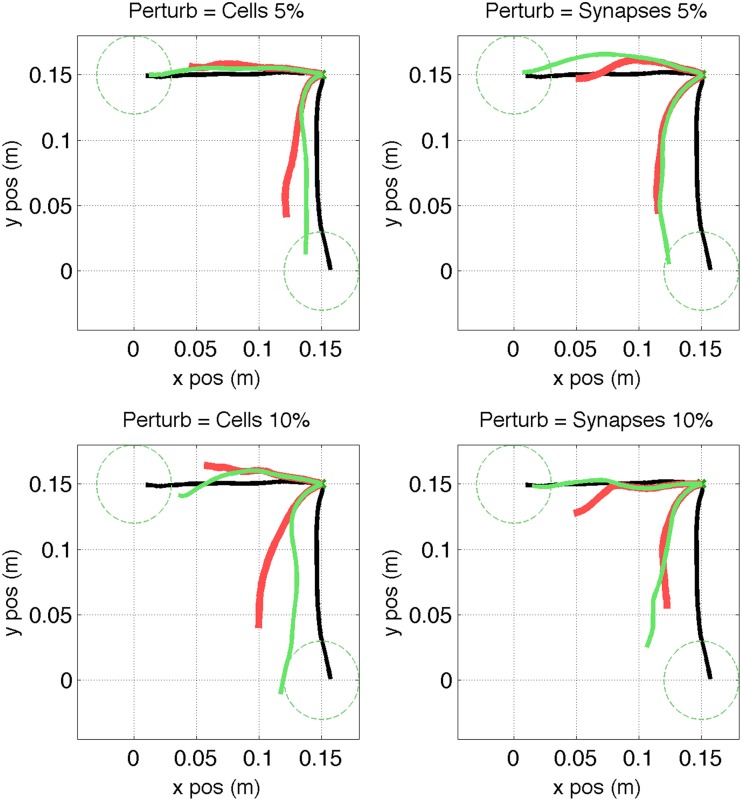

Figure 5.

Virtual arm trajectories after simulated lesion and neurostimulation probing. Original arm trajectories (black), were perturbed due to a network lesion leading to decreased reaching performance (red). A set of single cell and multiple cell probing stimulations (blue) enabled the neurocontroller to build an inverse model of the system. Data is shown for 2 targets and 4 types of perturbation. Target is indicated as green dashed circle.

We simulated two types of lesion for each of the trained targets. The first type of lesion was cell ablation, which consisted of removing 5% or 10% of cells randomly selected from the 192 excitatory-sensory (ES) cells. These cells projected to the excitatory-motor (EM) cells which were the eventual target of trajectory repair. The second type of lesion was removal of 5% or 10% of synaptic connections, selected randomly from the all 21,588 connections in the network. Behavioral degradation was significant for both types of lesions, as seen by the difference between original (Figure 5, black) and post-lesion trajectory (Figure 5, red).

The kernel-based inverse model of the neural system was generated by probing the response of the system to different neurostimulation patterns. Each of the 8 scenarios (2 targets × 2 lesion types × 2 percentages) was probed by stimulating each (remaining) individual ES cells for 200 ms with input spike rate of 250 Hz via AMPA receptors, starting 100 ms after reaching trial onset. A second probing dataset consisted of randomized multiple-cell stimulation patterns to the ES population (1–10 cells), starting at 100 ms and with a random duration between 100 and 500 ms (Figure 5, blue lines). These trajectories reflect the effect of the lesion plus the probing neurostimulation.

3.2. Restoration of pre-lesion spiking patterns and trajectories via neurostimulation

The neurostimulation patterns required to reproduce desired output population neural patterns were obtained using the inverse model generated from the probing data input/output correspondences. This inverse solution determines the pattern of activation of ES cells (inputs) that will be needed in order to get a particular desired output pattern from the EM cells (outputs). The EM cells then provide motor commands to the virtual arm to produce the trajectory. It is desirable to produce the repairing neurostimulation patterns in the early stages of the reaching task, in order to correct the trajectories before they have strayed too far from the desired direction.

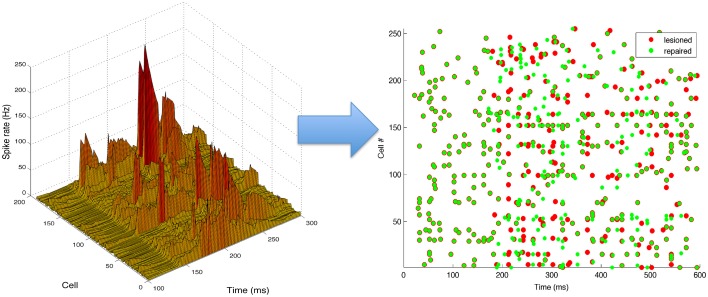

An example of a neurostimulation pattern derived by the inverse model is illustrated in Figure 6 using a 3D representation of the parameter space explored by the algorithm, with dimensions corresponding to the cell number, time and intensity (firing rate) of the neurostimulation. The left panel shows a complex spatiotemporal pattern with relatively strong inputs to 12 ES neurons, with the highest stimulation targeting cell 170 at ~200 ms. Neurostimulation is reflected in the ES cells raster plot (right panel) as a set of new spikes (e.g., cell 170 between 200 and 300 ms). These stimulations then shift activations more broadly due to divergent multisynaptic effects, resulting in changes to the target EM population. Note that the effect of neurostimulation on the ES cells will depend on a number of interacting factors, including co-occurring excitatory and inhibitory inputs from other cells, or the intrinsic state of the cell (e.g., hyperpolarized).

Figure 6.

Neurostimulation pattern derived by the kernel adaptive inverse neurocontroller for the 10% cell lesion of left target reaching. (Left) The pattern is represented in a continuous three-dimensional space that includes the cell number, time and intensity (rate). (Right) Raster plot of activity before (red) and after neurostimulation (green), illustrating the spiking changes derived from neurostimulation (e.g., increased rate of cell 170, from 200 to 300 ms). All S population cells (1–255) are shown, including inhibitory ones (192–255), although only the excitatory cells (1–191) can be stimulated.

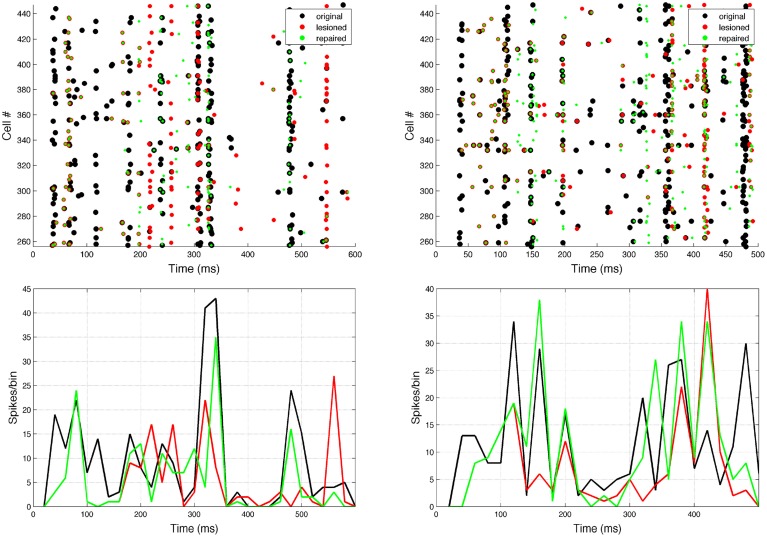

Neurostimulation administered to ES cells recovered some of the original EM spiking patterns (Figure 7). Comparing lesioned (red) with control (black) activity demonstrates that the lesion had a major impact on activity patterns, due to the high recurrent connectivity of the network. Neurostimulation then brought the activity back closer to the original (compare green to black), including restoration of coordinated bursts of activity with precise timing. Two representative examples are included to illustrate the variants in terms of firing patterns for different targets and types of lesions.

Figure 7.

Raster plot and population peri-event time histogram (PETH) of the original (black), lesioned (red), and repaired (green) motor population. Overlaps of black and green dots or lines indicate activity that has been restored via neural stimulation. Note how before the neurostimulation is delivered, the activity of the lesioned and repaired networks is identical. Two representative examples are shown: 10% cell lesion of left target reaching (left panel), and 10% synaptic lesion of bottom target reaching (right panel). Cells ids correspond to the motor population (256–511). PETH bin size is 20 ms.

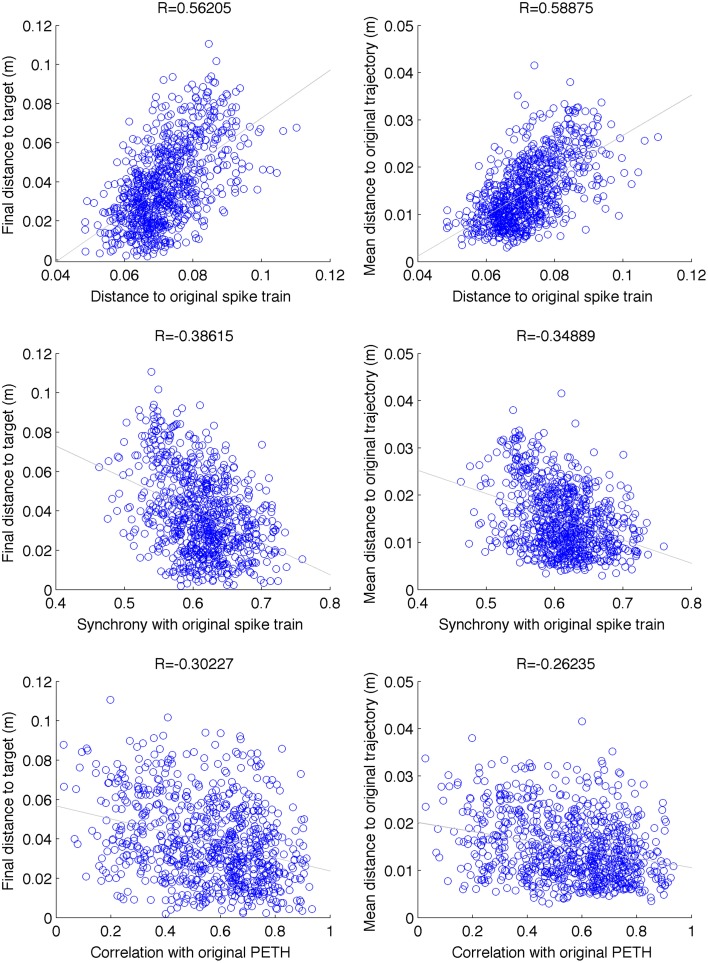

We employed three different spike-train metrics and two behavioral metrics to evaluate the performance of our system. The SPIKE-distance metric (Kreuz et al., 2011, 2015) was used to calculate pairwise dissimilarities between all cells in the motor population (0–1 measure, 0 means identical spike trains), and averaged across all cells. Similarly, the SPIKE-sync metric (Kreuz et al., 2011, 2015) quantified the degree of synchrony between pairs of cells. This metric can be understood as a coincidence detector where the coincidence window is defined adaptively according to the local firing rate. The third metric was the correlation coefficient between the peri-event stimulus histograms (PETHs). To avoid biases due to initial phase, we averaged the result across N − 1 histograms with increasing starting times (1ms steps), where N is the PETH bin size (20 ms). In terms of behavioral metrics we employed the final distance to target at the end of the reaching trial, and the mean point-wise distance along the trajectory.

By analysing 800 random perturbations of the original trajectories, equally distributed among the 2 targets and 4 perturbation types, we found a a relatively strong and significant correlation (|R| = 0.41, N = 800, p < 0.001) between the spike train and behavioral metrics (Figure 8). The strongest correlation was found between the distance to original spike train (SPIKE-distance) and behavioral metrics (R = 0.57). These relations provide a useful reference system to evaluate the results of the repair neurostimulations. At the same time they highlight one of the challenges of this approach: the system includes multiple stages of complex nonlinear dynamics, ranging from the single cell to the virtual arm model. As a consequence, similarity to original spike train is not sufficient to guarantee recovery of the original arm trajectory.

Figure 8.

Scatter plot between different spike-train distance metrics (between original and perturbed) and behavioral performance. Data is shown for 100 random perturbations for each of the 2 targets × 2 types (cell vs synapse) × 2 intensities (5% and 10%) (N = 800). Final distance to target and mean distance to original trajectory were positively correlated with distance to original spike-train (SPIKE-distance metric), and negatively correlated with the level of synchrony with respect to the original spike-train (SPIKE-sync metric) and the correlation with the original PETH. These relations can be used as a reference to evaluate the neurostimulation results. p < 0.001 for all correlations.

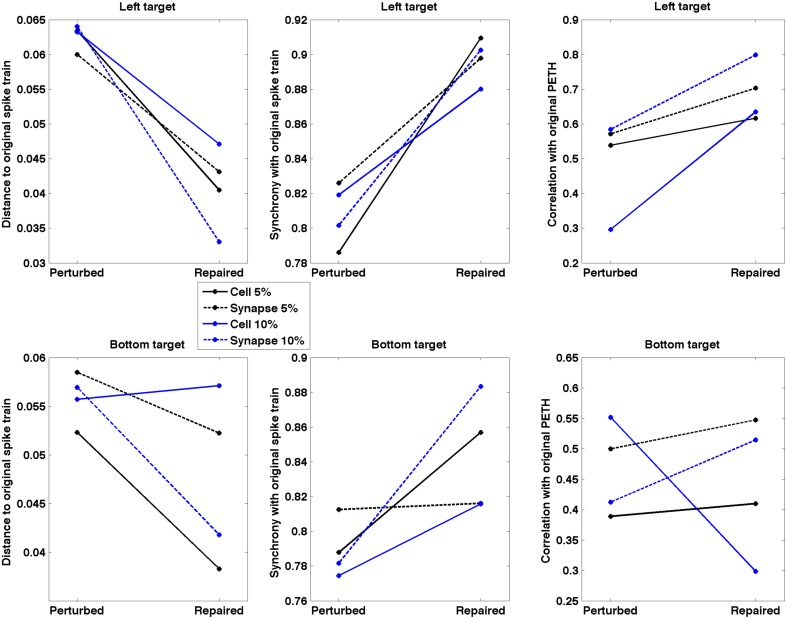

Repaired spike patterns were overall closer to the original ones than the lesioned patterns (Figure 9). All except one (7/8) conditions exhibited a decrease in spike train dissimilarity (SPIKE-dist, mean decrease = 0.017) after neurostimulation. Neurostimulation also increased spike train synchronization with respect to the original pattern (SPIKE-sync, mean increase = 0.072) in all conditions. The correlation between the original and repaired population PETH (20 ms bins) was higher than between the original and perturbed PETH for 7/8 conditions (mean increase = 0.133). Repair of the bottom target 10% cell lesion resulted in an improvement of the synchronization measure, but a decline in the spike distance and PETH correlation measures. This evidences the difficulty and ambiguity that arise when comparing firing patterns, and underlines the need to identify what features of the spike trains are more relevant to the task, as well as to complement the system evaluation with direct behavioral metrics.

Figure 9.

Spike train dissimilarity (SPIKE-dist), spike train level of synchrony (SPIKE-sync) and population PETH correlation between original and lesioned/repaired networks. Data shown for two different targets and four lesion types. Neurostimulation reduced spiking pattern dissimilarity and increased synchrony between the lesioned and original networks for 7/8 conditions; and increased PETH correlation for 6/8 conditions.

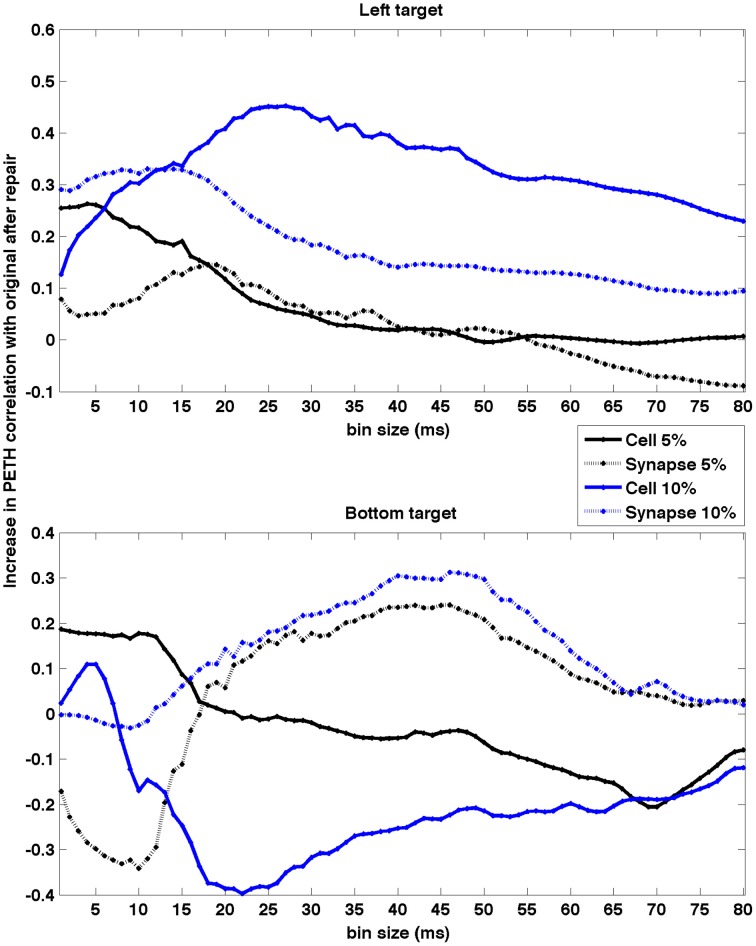

To quantify how the timescale of the analysis affected the neurocontroller results, we evaluated the effect of PETH bin size on the population PETH correlation increase after neurostimulation (Figure 10). This can potentially provide insights into what timescales are more relevant for restoration. Our results indicate different trends depending on the lesion and target. Five of the conditions exhibit higher restoration for smaller bin sizes (< 20 ms) which suggests that spike synchrony played a dominant role. This contrasts with the remaining three conditions where the top restoration performance was obtained for larger bin sizes (~30–50 ms), suggesting firing rates were the predominant factor.

Figure 10.

Effect of PETH bin size on PETH correlation between original and repaired vs. lesioned. Data shown for two different targets and four lesion types. The effect was dependent on type of lesion and target. Higher restoration for smaller bin sizes (< 20 ms) suggests spike synchrony may play a dominant role, whereas for larger sizes (~ 30–50 ms) suggests firing rate is the predominant factor. PETHs were calculated using firing rate to enable comparison of different bin sizes.

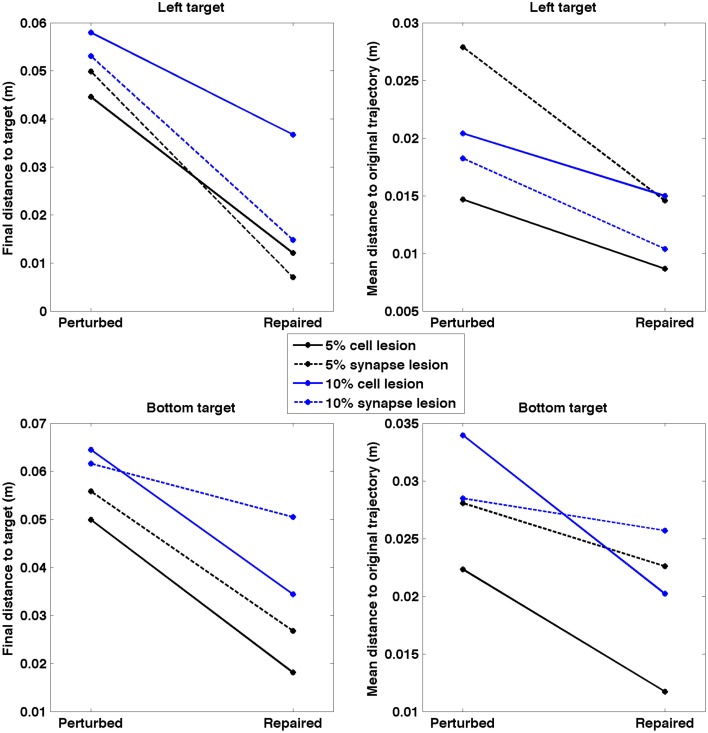

After applying the repair neurostimulation to the lesioned network, the resulting motor activity yielded virtual arm reaching trajectories that more closely resembled the original trajectory (Figure 11). Both final distance to target at the end of the reaching trial, and the mean point-wise distance along the trajectory, were reduced after neurostimulation repair (Figure 12). The trajectories restored in the 5% lesion conditions were closer to the original than those restored in the 10% lesions conditions. Overall, mean distance to target was reduced from 5.47 to 2.51 cm after repair. Mean point-wise distance between trajectories was reduced from 2.43 to 1.61 cm. The same dataset was used to calculate the arm trajectories (Figure 11), behavioral metrics (Figure 12), and spike train metrics (Figure 9). This provides a direct link between neural activity and reaching behavior, thus facilitating the interpretation of the results.

Figure 11.

Virtual arm trajectories after simulated lesion and repair neurostimulation. Original arm trajectories (black), were perturbed due to a network lesion leading to decreased reaching performance (red). Neurostimulation partly repaired the network and restored the target reaching performance (green). Results are shown for 2 targets and 4 types of perturbation. Target area is indicated as dashed circle.

Figure 12.

Quantitative measures of repair neurostimulation. Both metrics reflect a behavioral improvement after neurostimulation for all conditions. (Left) Final distance between hand and target. (Right) Mean point-wise distance to original arm trajectory.

4. Discussion

We implemented a neurocontroller using kernel adaptive filtering techniques on spike trains, which produced neurostimulation patterns that restored neural and functional responses in a lesioned biomimetic spiking network model. Neurostimulation partly restored the behavioral performance of a realistic virtual musculoskeletal arm which was driven by the network, allowing it to reach close to the original trained targets through trajectories similar to those produced before lesioning. The neurocontroller was able to compensate for 2 different types of lesions: a cell death and synaptic loss model. Neurodegenerative and ischemic (stroke) disease (Lytton et al., 1999) may be a cause of cell death. Certain neurodegenerative disease, such as Alzheimer's (Palop and Mucke, 2010; Rowan et al., 2014), as well as traumatic brain injury (Gupta and Przekwas, 2013), have been shown to cause both cell death and synaptic loss. This work also serves as a proof of concept to underline the potential benefits of neural simulations to evaluate neurocontrollers. These include the ability to reproducibly generate the required probing datasets, and detailed access to all effects of neurostimulation on the system, ranging from cells and synapses to virtual arm muscle electromyogram (EMG) or arm position. As discussed in the next subsection, further work is needed to increase the similarity between model and real brains and pave the way toward clinical applications.

One interesting commonality of the neurocontrol solutions for different directions of reach and types of damage was the synchrony with respect to the original spiking oscillations. Coordinated oscillations within and across brain areas are a dramatic feature of brain activity noted in electrocorticography (ECoG), which must be reconciled with the feedforward nature of many tasks. Coordination of firing may well play an important role in feedforward systems as coordinated activation across multiple convergent units would provide strong drive by spatial summation. This provides an important complement to the efficacy of temporal convergence from repetitive firing of presumed labeled-line rate-coding units. Spike timing plays an important role in motor control, as evidenced by studies demonstrating precise spike synchronization is involved in the preparation and execution of movement (Riehle et al., 1997; Grammont and Riehle, 1999; Rubino et al., 2006). Our bin time analysis indicated that, for some conditions, shorter time scales resulted in higher PETH correlation, supporting the importance of precise spike timing. However, for other conditions, longer time scales resulted in higher correlations, suggesting firing rate played a predominant role in neural coding. These longer time bins were also consistent with the sliding window duration (50 ms) used to compute the motor output commands from the EM population. Elucidating the exact role that the different time scales play will require further data and analysis.

Overall, we found the 10% lesions more difficult to fully restore than the 5% ones. Presumably, given the highly recurrent network connectivity, it was harder to find unaffected polysynaptic pathways to reproduce original activity in the more severe condition. This could be further explored from a directed graph-theoretic perspective by looking at numbers and types of remaining motifs in the context of removing nodes and edges. We also note that a physiological system would have continued synaptic plasticity so that the brain would be learning at the same time as the neurocontroller is learning, a phenomenon known as co-adaptation. This effect could be incorporated into our model by continuing the learning mechanisms in the network model during the period of neurostimulation (Song et al., 2013; Rowan et al., 2014), thereby providing some of the long-term neural plasticity induced by neurostimulation. Studies have shown motor cortex plasticity aids in motor function recovery after injury (Kleim et al., 2003; Jackson et al., 2006; Ramanathan et al., 2006), and the development of neurocontrollers will allow more precise deployment of plasticity-inducing stimulation therefore leveraging its rehabilitative effects.

Employing a biomimetic neuronal network to control a biomimetic virtual arm provides a matching of (relatively slow) dynamics that differs greatly from control of a simpler kinematic 2-link arm or a mechanical robotic arm (Dura-Bernal et al., 2015b). This matching of biological verisimilitude also offers the opportunity to understand control in terms of specific muscle contractions that can be compared to clinical cases, as the effectors in the model provide muscle activation rather than control of joint angle. Similarly, the sensory afferents measure muscle length and therefore correspond to the muscle spindle proprioceptors embedded in muscle.

As expected, there are many potential network solutions that can be drawn upon to produce a particular arm trajectory—this is an extremely high dimensional neural system being applied to a lower dimensional virtual arm. In the context of neural Darwinism (Edelman, 1987), our neurocontroller is able to choose, from among these multiple adapted (fit) neural subsystems, ones that are also able to solve the problem in the absence of the original full system. In neural Darwinism, this concept is referred to as neural degeneracy. The same concept also arises in consideration of echo state networks. From this perspective, pieces of these potential systems are selected during the initial probing phase of development of the inverse controller and a full dynamics is then drawn from the population of these dynamical fragments. Further study might enable us to map the fragments that were used in the solution in order to generate an explicit subspace of primitives both at neural firing and muscle synergy levels.

4.1. Limitations and challenged ahead

Our study provides groundwork for the novel application of kernel adaptive filtering methods to neural control, and evaluation of this approach via biomimetic brain models. Our model includes a number of biologically-realistic features, including intrinsic spiking properties for different cell types, cortex-based connectivity or neural oscillations. This level of detail is higher than that of many neural models, such as recurrent neural networks (RNNs), which have been shown to be useful to investigate neural circuit mechanisms underlying cognitive function (Mante et al., 2013; Sussillo et al., 2015). However, translation of this work to clinical applications will requires advancing many aspects. The realism and level of detail of the brain and neurostimulation models need to escalate drastically. The neurocontroller needs to be adapted to robustly exploit the dynamic, incomplete and complex data recorded from the brain. Progress in neural recording and stimulation technologies will also be critical to gradually move toward the clinical domains.

Our cortical model differs from the real brain in many ways, which should be considered when interpreting the results. Our model assumes a controlled scenario with full reproducibility of motor outputs and responses to neurostimulation. This strongly contrasts with the high variability and limited reproducibility in real brains. Our simulation captures several hundred neurons in a single cortical area. In reality, sensorimotor tasks likely involve millions of neurons from many regions (thalamus, basal ganglia, cerebellum, sensorimotor cortices, …) firing in coordinated patterns across and within areas (Douglas and Martin, 2012). The few cell types that we model as point neurons are only a minuscule fraction of the hundreds of cell categories that have been identified (Harris and Shepherd, 2015), each with distinct physiological properties and intricate dendritic and axonal morphologies. Our population-based connectivity matrices are far from capturing brain connectivity, which ranges from the subcellular patterns of synapses along dendrites, to laminar microcircuitry, to long range inter-areal connections. The recent full 3D reconstruction of a microscopic volume of cortical tissue evidenced its extraordinary complexity: 193 dendrites, 1407 axons and 1700 synapses were identified in a 40 × 40 × 50 micrometer volume (approximate size of a single cell body) (Kasthuri et al., 2015). These circuits provide the neural substrate for a myriad of neural coding and computation principles. Understanding and including them in our models is key to bridging the gap between neural activity and perception, cognition or behavior. Linking to behavior also requires more accurate models of the periphery systems, including the spinal cord (Alstermark and Isa, 2012) and motor plant (Loeb and Tsianos, 2015).

Large scale international efforts such as the Human Brain Project or the BRAIN initiative have fueled progress in computational neuroscience. As a result, brain models can incorporate and mimic anatomical and physiological data with an unprecedented level of detail. The model by Potjans and Diesmann (2014) with 80,000 point neurons and 0.3 billion synapses integrated a large body of cell type and connectivity data and reproduced many dynamical properties of cortical microcircuits. More recently, cellular and synaptic organization principles derived from experimental data were used to build what has been labeled as “the most complete simulation of a piece of excitable brain matter to date” (Koch and Buice, 2015; Markram et al., 2015). Cell models were classified into 207 types with distinct electro-physiological and full 3D morphological reconstructions, derived from recording and labeling over 14,000 neurons. The full simulation, which consisted of 31,120 neurons and 37 million synapses occupying approximately the size of a cortical column, enabled studying dynamic interactions across the molecular, cellular and circuit levels. These models, however, still lack direct links to behavior, as well as learning mechanisms, such as STDP or reinforcement learning.

We are similarly working on extending our cortical spiking model to include over 10,000 cells, 0.5 million synapses, 6 cortical layers, spinal cord circuits, and input from premotor cortex which mediates target selection (Chadderdon et al., 2014; Dura-Bernal et al., 2015a). In collaboration with experimentalists, we are also fully characterizing the 3D morphology and electrophysiology of the two main types of pyramidal cells in motor cortex (corticostriatal and corticospinal) (Suter et al., 2013; Neymotin et al., 2015). These will be embedded in the network simulations in order to study the multiscale dynamics linking molecular and cellular processes (McDougal et al., 2013) to the circuit and information processing level (Lytton et al., 2014; Marcus et al., 2014). Applying the neurocontrol approach developed in this paper to the increasingly detailed brain models would be an interesting step toward building practical clinical applications.

At the same time, we need to develop more realistic models of the effects of neurostimulation, which in our model is limited to increasing external inputs to the cells. This can be achieved for example by adding the optogenetic channelrhodopsin channel to the cell model (Ching and Ritt, 2013; Kerr et al., 2014), or by characterizing the recruitment of neurons during intracortical extracellular microstimulation (Overstreet et al., 2013; Hartmann et al., 2015). It is also important to comprehensively model the collateral effects of electric field stimulation, since studies have found that fibers of passage get preferentially excited (McIntyre et al., 2004), potentially leading to undesired effects.

Future improvements to the proposed neurocontroller will focus on its ability to generalize and employ different types of probing data. The inverse mapping assumes that the solution is spanned by the basis formed by the probing patterns. As we increase the complexity of the model and the severity of the lesions to repair, solutions interpolated from a limited number of probes may not effectively address the richness of the circuit dynamics. A possible extension would be to implement multiple iterations, such that the spiking network output after stimulation is fed back as input probing data to the neurocontroller. Another option that could improve performance is to include probing data with a larger spectrum of outcomes, as well as with different timescales. Local field potentials (LFPs) or ECoG signals are interesting candidates, and are closer to the type of probing data that could be obtained from real brains. Similarly, the mapping could be made directly between stimulation and motor responses, such as arm kinematics or muscle activations, leading to solutions with optimal behavior performance but potentially different neural patterns. Although this is an interesting option, an advantage of directly targeting brain signals is that it could potentially be applied in scenarios (e.g., sensory or cognitive dysfunctions) where the target behaviors cannot be clearly specified or are not available.

The neurocontroller described here requires applying very precise spatiotemporal stimulation, at the single-cell and millisecond resolution. Recent studies (Warden et al., 2014) demonstrate this is already possible with optogenetic stimulation, which was, for example, used to activate single place-cells in hippocampus (Rickgauer et al., 2014). High-resolution stimulation was also able to bring retinal prosthetic capabilities closer to normal vision, by optogenetically stimulating 9800 ganglion cells (Nirenberg and Pandarinath, 2012). Further advancements will enable more selective cell targeting and larger number of simultaneous stimulated cells (Suter et al., 2014).

The latest developments in brain-machine interfaces (Miranda et al., 2015) aim to build the next generation of implantable closed-loop neuroprosthetics, with applications including memory restoration (Hiscott, 2014) or treatment of neuropsychological disorders (Nelson and Tepe, 2014). Combining neuroprosthetics with biomimetic brain models has the potential to leverage the system's performance: the simulated circuits can interact directly with the biological brain circuits (Tessadori et al., 2012; Lee et al., 2014) and complement neurocontrol methods with biological mechanisms of co-adaptation and learning to achieve the target functional task (Sanchez et al., 2012; Kocaturk et al., 2015).

Spinal cord stimulation mediated by neurocontrol methods has successfully been employed for motor function restoration (Nishimura et al., 2013; Grahn et al., 2014). Intracortical stimulation to motor cortices has largely been limited to probing and understanding the elicited responses. However, an accumulating body of evidence (Jackson et al., 2006; Arle and Shils, 2008; Jefferson et al., 2015) suggests it could have far-reaching neurorestorative applications if coupled with appropriate neurocontrol methods. Biomimetic brain models may provide a useful tool to develop, evaluate and implement these neurocontrollers.

Author contributions

All authors listed, have made substantial, direct and intellectual contribution to the work, and approved it for publication.

Conflict of interest statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

Research funded by DARPA N66001-10-C-2008 and NIH U01EB017695. We would like to thank Xianlian (Alex) Zhou and Andrzej Przekwas from CFD Research Corporation for providing the virtual arm, and Cliff C Kerr for code to simulate neurostimulation.

References

- Alstermark B., Isa T. (2012). Circuits for skilled reaching and grasping. Annu. Rev. Neurosci. 35, 559–578. 10.1146/annurev-neuro-062111-150527 [DOI] [PubMed] [Google Scholar]

- Arle J. E., Shils J. L. (2008). Motor cortex stimulation for pain and movement disorders. Neurotherapeutics 5, 37–49. 10.1016/j.nurt.2007.11.004 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Bensmaia S. J., Miller L. E. (2014). Restoring sensorimotor function through intracortical interfaces: progress and looming challenges. Nat. Rev. Neurosci. 15, 313–325. 10.1038/nrn3724 [DOI] [PubMed] [Google Scholar]

- Carandini M. (2012). From circuits to behavior: a bridge too far? Nat. Neurosci. 15, 507–509. 10.1038/nn.3043 [DOI] [PubMed] [Google Scholar]

- Carnevale N. T., Hines M. L. (2006). The NEURON Book. New York, NY: Cambridge University Press. [Google Scholar]

- Chadderdon G. L., Mohan A., Suter B. A., Neymotin S. A., Kerr C. C., Francis J. T., et al. (2014). Motor cortex microcircuit simulation based on brain activity mapping. Neural Comput. 26, 1239–1262. 10.1162/NECO_a_00602 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Chen B., Zhao S., Zhu P., Príncipe J. C. (2012). Quantized kernel least mean square algorithm. IEEE Trans. Neural Netw. Learn. Syst. 23, 22–32. 10.1109/TNNLS.2011.2178446 [DOI] [PubMed] [Google Scholar]

- Ching S., Ritt J. (2013). Control strategies for underactuated neural ensembles driven by optogenetic stimulation. Front. Neural Circuits 7:54. 10.3389/fncir.2013.00054 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Choi J. S., DiStasio M. M., Brockmeier A. J., Francis J. T. (2012). An electric field model for prediction of somatosensory (s1) cortical field potentials induced by ventral posterior lateral (vpl) thalamic microstimulation. IEEE Trans. Neural Syst. Rehabil. Eng. 20, 161–169. 10.1109/TNSRE.2011.2181417 [DOI] [PubMed] [Google Scholar]

- Clark K. L., Armstrong K. M., Moore T. (2011). Probing neural circuitry and function with electrical microstimulation. Proc. R. Soc. B Biol. Sci. 278, 1121–1130. 10.1098/rspb.2010.2211 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Douglas R. J., Martin K. A. C. (2012). Behavioral architecture of the cortical sheet. Curr. Biol. 22, R1033–R1038. 10.1016/j.cub.2012.11.017 [DOI] [PubMed] [Google Scholar]

- Dura-Bernal S., Chadderdon G. L., Neymotin S. A., Francis J. T., Lytton W. W. (2014). Towards a real-time interface between a biomimetic model of sensorimotor cortex and a robotic arm. Patt. Recogn. Lett. 36, 204–212. 10.1016/j.patrec.2013.05.019 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Dura-Bernal S., Kerr C., Neymotin S., Suter B., Shepherd G., Francis J., et al. (2015a). Large-scale m1 microcircuit model with plastic input connections from biological pmd neurons used for prosthetic arm control, in 24th Annual Computational Neuroscience Meeting (CNS15), BMC Neuroscience (Prague: ). [Google Scholar]

- Dura-Bernal S., Zhou X., Neymotin S. A., Przekwas A., Francis J. T., Lytton W. (2015b). Cortical spiking network interfaced with virtual musculoskeletal arm and robotic arm. Front. Neurorobot. 9:13. 10.3389/fnbot.2015.00013 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Edelman G. M. (1987). Neural Darwinism: The Theory of Neuronal Group Selection. New York, NY: Basic Books. [DOI] [PubMed] [Google Scholar]

- Featherstone R., Orin D. (2000). Robot dynamics: equations and algorithms, in ICRA (San Francisco, CA: ), 826–834. 10.1109/robot.2000.844153 [DOI] [Google Scholar]

- Grahn P. J., Mallory G. W., Berry B. M., Hachmann J. T., Lobel D. A., Lujan J. L. (2014). Restoration of motor function following spinal cord injury via optimal control of intraspinal microstimulation: toward a next generation closed-loop neural prosthesis. Front. Neurosci. 8:296. 10.3389/fnins.2014.00296 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Grammont F., Riehle A. (1999). Precise spike synchronization in monkey motor cortex involved in preparation for movement. Exp. Brain Res. 128, 118–122. 10.1007/s002210050826 [DOI] [PubMed] [Google Scholar]

- Gupta R. K., Przekwas A. (2013). Mathematical models of blast induced tbi: current status, challenges and prospects. Front. Neurol. 4:59. 10.3389/fneur.2013.00059 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Hampson R. E., Gerhardt G. A., Marmarelis V., Song D., Opris I., Santos L., et al. (2012). Facilitation and restoration of cognitive function in primate prefrontal cortex by a neuroprosthesis that utilizes minicolumn-specific neural firing. J. Neural Eng. 9:056012. 10.1088/1741-2560/9/5/056012 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Hampson R. E., Song D., Opris I., Santos L. M., Shin D. C., Gerhardt G. A., et al. (2013). Facilitation of memory encoding in primate hippocampus by a neuroprosthesis that promotes task-specific neural firing. J. Neural Eng. 10:066013. 10.1088/1741-2560/10/6/066013 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Harris K. D., Shepherd G. M. (2015). The neocortical circuit: themes and variations. Nat. Neurosci. 18, 170–181. 10.1038/nn.3917 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Hartmann C. J., Chaturvedi A., Lujan J. L. (2015). Quantitative analysis of axonal fiber activation evoked by deep brain stimulation via activation density heat maps. Front. Neurosci. 9:28. 10.3389/fnins.2015.00028 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Hatsopoulos N., Joshi J., O'Leary J. G. (2004). Decoding continuous and discrete motor behaviors using motor and premotor cortical ensembles. J. Neurophysiol. 92, 1165–1174. 10.1152/jn.01245.2003 [DOI] [PubMed] [Google Scholar]

- Hines M., Carnevale N. (2001). NEURON: a tool for neuroscientists. Neuroscientist 7, 123–135. 10.1177/107385840100700207 [DOI] [PubMed] [Google Scholar]

- Hiscott R. (2014). Darpa: on the hunt for neuroprosthetics to enhance memory. Neurol. Today 14, 8–10. 10.1097/01.NT.0000456276.47073.51 [DOI] [Google Scholar]

- Holzbaur K. R., Murray W. M., Delp S. L. (2005). A model of the upper extremity for simulating musculoskeletal surgery and analyzing neuromuscular control. Ann. Biomed. Eng. 33, 829–840. 10.1007/s10439-005-3320-7 [DOI] [PubMed] [Google Scholar]

- Hwang E. J., Shadmehr R. (2005). Internal models of limb dynamics and the encoding of limb state. J. Neural Eng. 2, S266. 10.1088/1741-2560/2/3/S09 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Jackson A., Mavoori J., Fetz E. (2006). Long-term motor cortex plasticity induced by an electronic neural implant. Nature 444, 56–60. 10.1038/nature05226 [DOI] [PubMed] [Google Scholar]

- Jefferson S. C., Clayton E. R., Donlan N. A., Kozlowski D. A., Jones T. A., Adkins D. L. (2015). Cortical stimulation concurrent with skilled motor training improves forelimb function and enhances motor cortical reorganization following controlled cortical impact. Neurorehabil. Neural Repair 30, 155–158. 10.1177/1545968315600274 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Kasthuri N., Hayworth K. J., Berger D. R., Schalek R. L., Conchello J. A., Knowles-Barley S., et al. (2015). Saturated reconstruction of a volume of neocortex. Cell 162, 648–661. 10.1016/j.cell.2015.06.054 [DOI] [PubMed] [Google Scholar]

- Kerr C. C., Neymotin S. A., Chadderdon G. L., Fietkiewicz C. T., Francis J. T., Lytton W. W. (2012). Electrostimulation as a prosthesis for repair of information flow in a computer model of neocortex. IEEE Trans. Neural Syst. Rehabil. Eng. 20, 153–160. 10.1109/TNSRE.2011.2178614 [DOI] [PubMed] [Google Scholar]

- Kerr C. C., O'Shea D. J., Goo W., Dura-Bernal S., Francis J. T., Diester I., et al. (2014). Network-level effects of optogenetic stimulation in a computer model of macaque primary motor cortex. BMC Neurosci. 15(Suppl. 1):P107 10.1186/1471-2202-15-S1-P107 [DOI] [Google Scholar]

- Klaes C., Shi Y., Kellis S., Minxha J., Revechkis B., Andersen R. A. (2014). A cognitive neuroprosthetic that uses cortical stimulation for somatosensory feedback. J. Neural Eng. 11:056024. 10.1088/1741-2560/11/5/056024 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Kleim J. A., Bruneau R., VandenBerg P., MacDonald E., Mulrooney R., Pocock D. (2003). Motor cortex stimulation enhances motor recovery and reduces peri-infarct dysfunction following ischemic insult. Neurol. Res. 25, 789–793. 10.1179/016164103771953862 [DOI] [PubMed] [Google Scholar]

- Kocaturk M., Gulcur H. O., Canbeyli R. (2015). Towards building hybrid biological/in silico neural networks for motor neuroprosthetic control. Front. Neurorobot. 9:8 10.3389/fnbot.2015.00008 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Koch C., Buice M. A. (2015). A biological imitation game. Cell 163, 277–280. 10.1016/j.cell.2015.09.045 [DOI] [PubMed] [Google Scholar]

- Koralek A. C., Jin X., Long II J. D., Costa R. M., Carmena J. M. (2012). Corticostriatal plasticity is necessary for learning intentional neuroprosthetic skills. Nature 483, 331–335. 10.1038/nature10845 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Kreuz T., Chicharro D., Greschner M., Andrzejak R. G. (2011). Time-resolved and time-scale adaptive measures of spike train synchrony. J. Neurosci. Methods 195, 92–106. 10.1016/j.jneumeth.2010.11.020 [DOI] [PubMed] [Google Scholar]

- Kreuz T., Mulansky M., Bozanic N. (2015). Spiky: a graphical user interface for monitoring spike train synchrony. J. Neurophysiol. 113, 3432–3445. 10.1152/jn.00848.2014 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Lee G., Matsunaga A., Dura-Bernal S., Zhang W., Lytton W., Francis J., et al. (2014). Towards real-time communication between in vivo neurophysiological data sources and simulator-based brain biomimetic models. J. Comput. Surg. 3, 12 10.1186/s40244-014-0012-3 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Li K., Dura-Bernal S., Francis J., Lytton W., Principe J. (2015). Repairing lesions via kernel adaptive inverse control in a biomimetic model of sensorimotor cortex, in Proceedings of 7th International IEEE/EMBS Conference Neural Engineering (NER) (Montpellier: ). [Google Scholar]

- Li L., Park I. M., Brockmeier A., Chen B., Seth S., Francis J. T., et al. (2013). Adaptive inverse control of neural spatiotemporal spike patterns with a reproducing kernel Hilbert space (RKHS) framework. IEEE Trans. Neural Syst. Rehab. Eng. 21, 532–543. 10.1109/TNSRE.2012.2200300 [DOI] [PubMed] [Google Scholar]

- Liu W., Pokharel P., Príncipe J. C. (2008). The kernel least mean square algorithm. IEEE Signal Processing Lett. 56, 543–554. 10.1109/tsp.2007.907881 [DOI] [Google Scholar]

- Liu W., Príncipe J. C., Haykin S. (2010). Kernel Adaptive Filtering: A Comprehensive Introduction. Hoboken, NJ: Wiley; 10.1002/9780470608593 [DOI] [Google Scholar]

- Loeb G. E., Tsianos G. A. (2015). Major remaining gaps in models of sensorimotor systems. Front. Comput. Neurosci. 9:70. 10.3389/fncom.2015.00070 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Lytton W., Neymotin S., Kerr C. (2014). Multiscale modeling for clinical translation in neuropsychiatric disease. J. Comput. Surgery 1:7 10.1186/2194-3990-1-7 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Lytton W., Stark J., Yamasaki D., Sober S. (1999). Computer models of stroke recovery: implications for neurorehabilitation. Neuroscientist 5, 100–111. 10.1177/107385849900500214 [DOI] [Google Scholar]

- Lytton W. W., Neymotin S. A., Hines M. L. (2008a). The virtual slice setup. J. Neurosci. Methods 171, 309–315. 10.1016/j.jneumeth.2008.03.005 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Lytton W. W., Omurtag A., Neymotin S. A., Hines M. L. (2008b). Just-in-time connectivity for large spiking networks. Neural Comput. 20, 2745–2756. 10.1162/neco.2008.10-07-622 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Lytton W. W., Stewart M. (2006). Rule-based firing for network simulations. Neurocomputing 69, 1160–1164. 10.1016/j.neucom.2005.12.066 [DOI] [Google Scholar]

- Mante V., Sussillo D., Shenoy K. V., Newsome W. T. (2013). Context-dependent computation by recurrent dynamics in prefrontal cortex. Nature 503, 78–84. 10.1038/nature12742 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Marcus G., Marblestone A., Dean T. (2014). The atoms of neural computation. Science 346, 551–552. 10.1126/science.1261661 [DOI] [PubMed] [Google Scholar]

- McDougal R. A., Hines M. L., Lytton W. W. (2013). Reaction-diffusion in the NEURON simulator. Front. Neuroinform. 7:28. 10.3389/fninf.2013.00028 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Markram H., Muller E., Ramaswamy S., Reimann M. W., Abdellah M., Sanchez C. A., et al. (2015). Reconstruction and simulation of neocortical microcircuitry. Cell 163, 456–492. 10.1016/j.cell.2015.09.029 [DOI] [PubMed] [Google Scholar]

- McIntyre C. C., Mori S., Sherman D. L., Thakor N. V., Vitek J. L. (2004). Electric field and stimulating influence generated by deep brain stimulation of the subthalamic nucleus. Clin. Neurophysiol. 115, 589–595. 10.1016/j.clinph.2003.10.033 [DOI] [PubMed] [Google Scholar]

- Miranda R. A., Casebeer W. D., Hein A. M., Judy J. W., Krotkov E. P., Laabs T. L., et al. (2015). Darpa-funded efforts in the development of novel brain–computer interface technologies. J. Neurosci. Methods. 244, 52–67. 10.1016/j.jneumeth.2014.07.019 [DOI] [PubMed] [Google Scholar]

- Nelson J. T., Tepe V. (2014). Neuromodulation research and application in the U.S. department of defense. Brain Stimul. 8, 247–252. 10.1016/j.brs.2014.10.014 [DOI] [PubMed] [Google Scholar]

- Neymotin S. A., Chadderdon G. L., Kerr C. C., Francis J. T., Lytton W. W. (2013). Reinforcement learning of two-joint virtual arm reaching in a computer model of sensorimotor cortex. Neural Comput. 25, 3263–3293. 10.1162/NECO-a-00521 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Neymotin S. A., Lee H., Park E., Fenton A. A., Lytton W. W. (2011). Emergence of physiological oscillation frequencies in a computer model of neocortex. Front. Comput. Neurosci. 5:19. 10.3389/fncom.2011.00019 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Neymotin S. A., Suter B. A., Migliore M., Dura-Bernal S., Shepherd G. M. G., Lytton W. W. (2015). Optimizing computer models of layer 5 motor cortex pyramidal neurons using somatic whole cell recordings, in Society for Neuroscience, Annual Meeting (Chicago, IL: ). [Google Scholar]

- Nirenberg S., Pandarinath C. (2012). Retinal prosthetic strategy with the capacity to restore normal vision. Proc. Natl. Acad. Sci. U.S.A. 109, 15012–15017. 10.1073/pnas.1207035109 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Nishimura Y., Perlmutter S. I., Fetz E. E. (2013). Restoration of upper limb movement via artificial corticospinal and musculospinal connections in a monkey with spinal cord injury. Front. Neural Circuits 7:57. 10.3389/fncir.2013.00057 [DOI] [PMC free article] [PubMed] [Google Scholar]

- O'Doherty J. E., Lebedev M. A., Ifft P. J., Zhuang K. Z., Shokur S., Bleuler H., et al. (2011). Active tactile exploration using a brain-machine-brain interface. Nature 479, 228–231. 10.1038/nature10489 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Overduin S. A., d'Avella A., Carmena J. M., Bizzi E. (2012). Microstimulation activates a handful of muscle synergies. Neuron 76, 1071–1077. 10.1016/j.neuron.2012.10.018 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Overstreet C. K., Klein J. D., Helms Tillery S. I. (2013). Computational modeling of direct neuronal recruitment during intracortical microstimulation in somatosensory cortex. J. Neural Eng. 10:066016. 10.1088/1741-2560/10/6/066016 [DOI] [PubMed] [Google Scholar]

- Paiva A. R. C., Park I., Príncipe J. C. (2009). A reproducing kernel Hilbert space framework for spike train signal processing. Neural Comput. 21, 424–449. 10.1162/neco.2008.09-07-614 [DOI] [PubMed] [Google Scholar]

- Palop J. J., Mucke L. (2010). Amyloid-[beta]-induced neuronal dysfunction in alzheimer's disease: from synapses toward neural networks. Nat. Neurosci. 13, 812–818. 10.1038/nn.2583 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Potjans T. C., Diesmann M. (2014). The cell-type specific cortical microcircuit: relating structure and activity in a full-scale spiking network model. Cereb. Cortex 24, 785–806. 10.1093/cercor/bhs358 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Ramanathan D., Conner J. M., Tuszynski M. H. (2006). A form of motor cortical plasticity that correlates with recovery of function after brain injury. Proc. Natl. Acad. Sci. U.S.A. 103, 11370–11375. 10.1073/pnas.0601065103 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Rickgauer J. P., Deisseroth K., Tank D. W. (2014). Simultaneous cellular-resolution optical perturbation and imaging of place cell firing fields. Nat. Neurosci. 17, 1816–1824. 10.1038/nn.3866 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Riehle A., Grün S., Diesmann M., Aertsen A. (1997). Spike synchronization and rate modulation differentially involved in motor cortical function. Science 278, 1950–1953. 10.1126/science.278.5345.1950 [DOI] [PubMed] [Google Scholar]

- Rowan M. S., Neymotin S. A., Lytton W. W. (2014). Electrostimulation to reduce synaptic scaling driven progression of alzheimer's disease. Front. Comput. Neurosci. 8:39. 10.3389/fncom.2014.00039 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Rubino D., Robbins K. A., Hatsopoulos N. G. (2006). Propagating waves mediate information transfer in the motor cortex. Nat. Neurosci. 9, 1549–1557. 10.1038/nn1802 [DOI] [PubMed] [Google Scholar]

- Sanchez J., Lytton W., Carmena J., Principe J., Fortes J., Barbour R., et al. (2012). Dynamically repairing and replacing neural networks: using hybrid computational and biological tools. IEEE Pulse 3, 57–59. 10.1109/MPUL.2011.2175640 [DOI] [PubMed] [Google Scholar]

- Sanchez J. C., Tarigoppula A., Choi J. S., Marsh B. T., Chhatbar P. Y., Mahmoudi B., et al. (2011). Control of a center-out reaching task using a reinforcement learning brain-machine interface, in 5th International IEEE/EMBS Neural Engineering (NER) (Cancún: ), 525–528. 10.1109/ner.2011.5910601 [DOI] [Google Scholar]

- Scholkopf B., Herbrich R., Smola A. J. (2001). A generalized representer theorem, in Proceedings of 14th Annual Conference on Computational Learning Theory, Vol. 2111 (Cambridge, MA: ), 416–426. [Google Scholar]

- Scholkopf B., Smola A. J. (2001). Learning with Kernels, Support Vector Machines, Regularization, Optimization and Beyond. Cambridge, MA: MIT Press. [Google Scholar]

- Schutte L. M., Rodgers M. M., Zajac F., Glaser R. M. (1993). Improving the efficacy of electrical stimulation-induced leg cycle ergometry: an analysis based on a dynamic musculoskeletal model. IEEE Trans. Rehabil. Eng. 1, 109–125. 10.1109/86.242425 [DOI] [Google Scholar]

- Song W., Kerr C. C., Lytton W. W., Francis J. T. (2013). Cortical plasticity induced by spike-triggered microstimulation in primate somatosensory cortex. PLoS ONE 8:e57453. 10.1371/journal.pone.0057453 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Spüler M., Nagel S., Rosenstiel W. (2015). A spiking neuronal model learning a motor control task by reinforcement learning and structural synaptic plasticity, in Neural Networks (IJCNN), 2015 International Joint Conference on (Killarney: IEEE; ), 1–8. 10.1109/IJCNN.2015.7280521 [DOI] [Google Scholar]

- Stanley G. B. (2013). Reading and writing the neural code. Nat. Neurosci. 16, 259–263. 10.1038/nn.3330 [DOI] [PubMed] [Google Scholar]

- Sussillo D., Churchland M. M., Kaufman M. T., Shenoy K. V. (2015). A neural network that finds a naturalistic solution for the production of muscle activity. Nat. Neurosci. 18, 1025–1033. 10.1038/nn.4042 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Suter B. A., Migliore M., Shepherd G. M. (2013). Intrinsic electrophysiology of mouse corticospinal neurons: a class-specific triad of spike-related properties. Cereb. Cortex 23, 1965–1977. 10.1093/cercor/bhs184 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Suter B. A., Yamawaki N., Borges K., Li X., Kiritani T., Hooks B. M., et al. (2014). Neurophotonics applications to motor cortex research: a review. Neurophotonics 1:011008. 10.1117/1.NPh.1.1.011008 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Tessadori J., Bisio M., Martinoia S., Chiappalone M. (2012). Modular neuronal assemblies embodied in a closed-loop environment: towards future integration of brains and machines. Front. Neural Circuits 6:99 10.3389/fncir.2012.00099 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Thelen D. G., Anderson F. C., Delp S. L. (2003). Generating dynamic simulations of movement using computed muscle control. J. Biomech. 36, 321–328. 10.1016/S0021-9290(02)00432-3 [DOI] [PubMed] [Google Scholar]

- Underwood E. (2013). Darpa aims to rebuild brains. Science 342, 1029–1030. 10.1126/science.342.6162.1029 [DOI] [PubMed] [Google Scholar]

- Van Acker G. M., III, Amundsen S. L., Messamore W. G., Zhang H. Y., Luchies C. W., Kovac A., et al. (2013). Effective intracortical microstimulation parameters applied to primary motor cortex for evoking forelimb movements to stable spatial end points. J. Neurophysiol. 110, 1180–1189. 10.1152/jn.00172.2012 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Warden M. R., Cardin J. A., Deisseroth K. (2014). Optical neural interfaces. Ann. Rev. Biomed. Eng. 16:103. 10.1146/annurev-bioeng-071813-104733 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Wolpert D. M., Diedrichsen J., Flanagan J. R. (2011). Principles of sensorimotor learning. Nat. Rev. Neurosci. 12, 739–751. 10.1038/nrn3112 [DOI] [PubMed] [Google Scholar]

- Zajac F. E. (1989). Muscle and tendon: properties, models, scaling, and application to biomechanics and motor control. Crit. Rev. Biomed. Eng. 17, 359–411. [PubMed] [Google Scholar]