Abstract

The feasibility and acceptability of computerized screening and patient-reported outcome measures have been demonstrated in the literature. However, patient-centered management of health information entails two challenges: gathering and presenting data using “patient-tailored” methods and supporting “patient-control” of health information. The design and development of many symptom and quality-of-life information systems have not included opportunities for systematically collecting and analyzing user input. As part of a larger clinical trial, the Electronic Self-Report Assessment for Cancer–II project, participatory design approaches were used to build and test new features and interfaces for patient/caregiver users. The research questions centered on patient/caregiver preferences with regard to the following: (a) content, (b) user interface needs, (c) patient-oriented summary, and (d) patient-controlled sharing of information with family, caregivers, and clinicians. Mixed methods were used with an emphasis on qualitative approaches; focus groups and individual usability tests were the primary research methods. Focus group data were content analyzed, while individual usability sessions were assessed with both qualitative and quantitative methods. We identified 12 key patient/caregiver preferences through focus groups with 6 participants. We implemented seven of these preferences during the iterative design process. We deferred development for some of the preferences due to resource constraints. During individual usability testing (n = 8), we were able to identify 65 usability issues ranging from minor user confusion to critical errors that blocked task completion. The participatory development model that we used led to features and design revisions that were patient centered. We are currently evaluating new approaches for the application interface and for future research pathways. 
We encourage other researchers to adopt user-centered design approaches when building patient-centered technologies.

Keywords: consumer health information, decision-support systems, ehealth, evidence-based practice, IT design and development methodologies

Introduction

The feasibility and acceptability of symptom and quality-of-life information (SQLI) systems have been demonstrated in the literature.1,2 However, many of these applications have been developed based on what providers, researchers, or vendors believed was best, with limited attention to the principles of user-centered design.3 While our early research efforts in computerized SQLI screening were similarly based on the needs of clinicians, over the last 10 years our research has increasingly focused on the needs of patients. Development of the latest version of the Electronic Self-Report Assessment for Cancer (ESRAC) web application followed a user-centered design approach aimed at presenting “patient-tailored” content and supporting “patient-control” of health information. Findings presented here may help inform other researchers building or evaluating computerized SQLI management systems.

Background

Early efforts at computerized patient-reported outcomes focused largely on the needs of providers. We have previously reviewed these developmental efforts;1 in brief, foundational work led by Slack et al.4 in 1964 reported on a computerized data collection system that included question branching and exception reporting to clinicians. Subsequent work has helped establish the utility and feasibility of collecting patient-reported outcomes via computer.2,5–12 Despite these advances, usability testing was often rudimentary and limited to post hoc acceptability measures.1,13,14 In recent years, however, many researchers have begun to use participatory design approaches, which help incorporate the preferences of end users. Within software engineering, this approach often involves engaging end users in the iterative design of a product but not necessarily in the analysis and publication of research data.15,16 In a prior publication, we discussed current usability techniques as well as exemplar studies that we found in the literature.3 Our definition of usability is informed by International Organization for Standardization (ISO) 9241-11: “The extent to which a product can be used by specified users to achieve specified goals with effectiveness, efficiency, and satisfaction in a specified context of use.”17 Our own development efforts have increasingly centered on users’ needs. In the first prototype of ESRAC (2001–2003), focus groups of patients and providers were used during the design phase to help establish functional requirements.2 The application was evaluated using an externally developed exit survey14 with patients (n = 45) and clinicians (n = 12). Both groups reported high levels of acceptability in using the application, and the clinicians also reported that the graphical summary of symptoms was helpful.12 While rated as acceptable and useful, this early prototype was largely “hard-coded” for specific survey instruments and had a relatively inflexible software architecture.18

With funding from the National Institute of Nursing Research (NINR), we developed ESRAC 1.0 (2004–2008), with a more flexible survey architecture capable of multiple measurement time points, conditional branching, and a refined user interface based on best practices.19–21 The application also contained a researcher interface for editing surveys and downloading normalized datasets.19 Additional aims of this study called for a randomized controlled trial in which patients (n = 660) with all cancer types used the application at two measurement points in the ambulatory setting: prior to starting treatment and during treatment. Subjects were randomized to a control group or an intervention group; in the intervention group, graphical summaries of self-reported SQLI were provided to the care team. We established both the acceptability of this new platform to patients and its clinical efficacy: delivery of the summary report increased discussion of SQLIs without increasing the duration of the visit.22 Because the codebase could support a variety of research projects beyond ESRAC and was built with an eye toward deployment in a variety of settings using virtual servers and cloud-based hosting, it was named the Distributed Health Assessment and Intervention Research (DHAIR) platform.19

While ESRAC 1.0 had many features extending the original prototype, the application was still designed primarily with the clinical care team in mind. The patient interface was a basic web form that allowed patients to answer validated questionnaires, with limited features for feedback, interactivity, or engagement. The resulting summary reports were passed to the clinical care team in preparation for the face-to-face clinic visit. Patients could report symptoms only at set time points and could access the system only in the ambulatory setting, on a research study laptop.

The program of research continued with a second NINR award (2008–2010) to enhance the intervention with a patient-centric approach encouraging patients to self-monitor SQLI, access institution-specific and web patient education resources for self-care of SQLI, and receive coaching on how to communicate the SQLI to the clinical team.23,24 We have also reported our participatory and iterative design approaches for developing ESRAC 2.0 within the context of a broader discussion of different approaches to assessing the usability and acceptability of patient-centered technologies.3,25 Here, we report our assessment of user preferences and subsequent results from usability testing of a revised prototype.

Methods

The overall framework combined participatory design and iterative software development techniques with a user-centered focus. Our development efforts were guided by design guidelines emphasizing universal usability and older adults.21,26–30 In brief, we employed mixed research methods, using qualitative approaches in the focus groups to identify patient/caregiver preferences. During individual sessions, we used low- and high-fidelity mock-ups and relied primarily on a “think-aloud” approach31 as well as recordings made by the Tobii T60 eye tracker,32 which provided both qualitative and quantitative data.

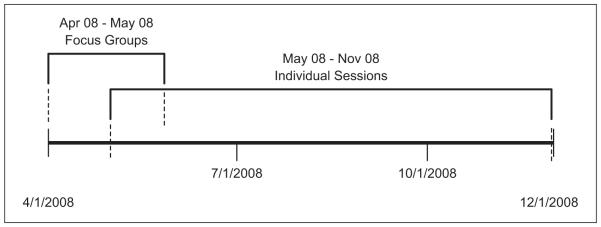

We conducted two focus groups with patients and caregivers during April and June 2008. The first focus group had three patients; the second group had two patients and one caregiver. Eight individual usability sessions were conducted between May and December 2008 (Figure 1). Two of these participants had taken part in the earlier focus groups.

Figure 1.

Timeline of focus groups and individual sessions.

Procedures

Participant characteristics

All study procedures were approved by the Fred Hutchinson Cancer Research Center/University of Washington Cancer Consortium Review Board. Eligible participants included patients with any type of cancer diagnosis or receiving a bone marrow transplant. All participants were at least 18 years of age and English speaking; no upper age limit was employed. Participants could take part in both focus group sessions and usability sessions. Participants initially received a US$20 gift card as an incentive for participating in a focus group or usability session; compensation was later increased to US$40 gift cards in an effort to increase recruitment rates.

Focus groups

Participants were recruited by flyers in the clinical area of the outpatient oncology center and through referrals by their nurses to the study coordinator. The participants were encouraged to bring caregivers to the group. Following informed consent procedures, participants answered a short internally developed questionnaire reporting demographics and previous computer use.

Focus groups were facilitated by one of the authors (B.H.). We used the four categories of questions outlined by Krueger: Introductory, Transition, Key, and Ending questions.33 Discussion began by asking participants to think about symptoms they may have and what would be helpful in terms of symptom management. Participants were then prompted to think about their last visit and reflect on how they thought about and reported symptoms to their provider. Additional conversation was prompted by reviewing printed copies of the ESRAC 1.0 provider-centered report. The focus groups were asked to consider questions such as the following: Do the surveys ask the types of questions that are important to patients? Do they, as patients, understand what is on the summaries? Do the summaries display data that are important to patients? In the second focus group, participants were presented with key findings to date so that they could reflect on the findings, validate them, and provide additional input, an approach recommended by Garmer et al.34 This focus group was asked to reflect more broadly on questions posed by the design committee, based on information learned from the previous focus group and development activities. During this process, we focused on participants’ suggestions for redesign and modification of the summaries. Low-fidelity mock-ups (“wireframes”) were prepared to stimulate discussion and feedback from patients. These mock-ups were non-interactive drawings that approximated the screen displays and navigation elements that the application would normally generate. The mock-ups were arranged around three key areas: graphs for patients to monitor their SQLI over time; tips on how to better communicate with their doctors; and information sharing with family, friends, and caregivers.

Individual usability testing

During individual usability sessions, participants examined low- and high-fidelity mock-ups of the application while being encouraged to communicate their observations and experience. Along with allowing free exploration of the application, we asked participants to complete several structured tasks, such as finding the graphical summary for a specific symptom or adding a journal entry (Appendix 1), while noting any navigation errors. Sessions were recorded with a portable usability laboratory (PUL). The PUL, consisting of a Tobii T60 eye-tracking monitor, screen recording software (Tobii Studio 1.2), and a video camera for recording audio and facial expressions, allowed us to look for patterns of user difficulty and software development issues that needed to be addressed. The Tobii T60 eye tracker is unobtrusive to the user and employs infrared cameras hidden in the base of a 17-inch monitor. Eye movements, as a proxy for user attention, are recorded without chin cradles or obtrusive video cameras. A calibration process required the participant to look at calibration points on the screen for approximately 5 s.32 Eghdam et al.16 used an eye tracker in their evaluation of an antibiotic decision-support tool for providers and found that it was a valuable complement to traditional usability studies, allowing the researchers to discover navigation issues and other user behaviors that might otherwise have been overlooked.

We began by assessing each of four areas marked for revision: (a) the user interface, (b) visual display elements, (c) navigation controls, and (d) the patient-centered summaries. We used testing protocols with task lists that matched a typical use case. An example protocol (Appendix 1) included the following: logging in, locating a symptom report, changing the graph type to a bar chart, customizing the date range, adding a journal entry, and sharing a chart with a caregiver. The task list changed depending on which area of the application we were exploring. Early sessions emphasized an index of symptom reports (“Results List”) from which users could “drill down” into a focused, interactive view of a specific symptom. In later sessions, task lists focused on journaling functions and chart sharing.

Participants were instructed: “Tell me what you are thinking about while you use the program.” During the session, the study staff facilitating the usability testing encouraged the participant to continue to “think aloud” with prompts such as “Tell me what you are thinking” and “Keep talking.” This “think-aloud” method has been described by Virzi.31 Later, participants were given slightly different task lists, with some core items retained, to maximize evaluation of different parts of the application. Initial individual sessions were conducted with low-fidelity paper mock-ups, while later sessions used high-fidelity web prototypes. Using the PUL, we assessed critical navigation paths and deviations that users might make in the course of completing a task. Independent reviewers coded each participant’s relative success in completing a task on a 4-point scale: 1 = no issues, 2 = some hesitation, 3 = marked difficulty, and 4 = task failure.

Analysis

Audio recordings of the focus groups were transcribed and reviewed by the research team using a content analysis approach. Independent reviewers (S.E.W., D.L.B., and G.W.) tagged utterances within each transcript with labels such as “navigation issue,” “information sharing,” and “aesthetics.” Two rounds were conducted with reviewers meeting after each round to discuss tags and to reach consensus when needed. The resulting tags were then prioritized for development.

Tasks in each usability session were later reviewed and coded independently by authors S.E.W. and G.W. using recordings from the eye tracker, embedded audio/video, and external video. Codes were later compared and consensus reached when there were differences in initial coding. Had no consensus been reached, a third author would have been involved in the process.
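The double-coding step can be illustrated with a short sketch. The Python below is a minimal illustration, not the study's tooling: it assumes each reviewer's codes are stored as a mapping from a task name to a value on the 1–4 scale described above, and it lists the tasks whose codes differ so they can be brought to a consensus discussion. The task names, the codes, and the `percent_agreement` helper are hypothetical; the study does not report an agreement statistic.

```python
from typing import Dict, List

# Task-success scale used by the reviewers, as described in the Methods.
SCALE = {
    1: "no issues",
    2: "some hesitation",
    3: "marked difficulty",
    4: "task failure",
}


def find_disagreements(coder_a: Dict[str, int], coder_b: Dict[str, int]) -> List[str]:
    """Return, in sorted order, the tasks whose codes differ between two coders."""
    # Both reviewers are assumed to have coded exactly the same tasks.
    if coder_a.keys() != coder_b.keys():
        raise ValueError("both coders must code the same set of tasks")
    # Reject any value that is not on the 1-4 scale.
    for code in list(coder_a.values()) + list(coder_b.values()):
        if code not in SCALE:
            raise ValueError(f"code {code} is not on the 1-4 scale")
    return sorted(task for task in coder_a if coder_a[task] != coder_b[task])


def percent_agreement(coder_a: Dict[str, int], coder_b: Dict[str, int]) -> float:
    """Hypothetical descriptive check: share of tasks coded identically."""
    return 1.0 - len(find_disagreements(coder_a, coder_b)) / len(coder_a)


# Hypothetical codes for a single session (illustrative values, not study data).
reviewer_1 = {"login": 1, "locate_fatigue_chart": 3, "add_journal_entry": 2}
reviewer_2 = {"login": 1, "locate_fatigue_chart": 2, "add_journal_entry": 2}

# Tasks listed here would go to a consensus discussion (or a third reviewer).
print(find_disagreements(reviewer_1, reviewer_2))  # ['locate_fatigue_chart']
print(round(percent_agreement(reviewer_1, reviewer_2), 2))  # 0.67
```

In this sketch a disagreement only flags a task for discussion; codes are never averaged or resolved automatically, which mirrors the consensus procedure described above.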

Results

An internally developed demographic survey was administered once to each of the 12 distinct participants. Most participants were male (n = 9), aged 30–49 years (n = 9), non-Hispanic (n = 11), and white (n = 10). The sample was highly educated, with most (n = 8) having completed college or postgraduate studies. With respect to computer use, all respondents indicated that they searched for health information on the Internet, and all reported using computers at home. One respondent had dial-up access at home, while the others reported high-speed access. Two respondents reported never using computers at work, but the remaining participants indicated that they used computers “often” or “very often” at work.

Focus groups

Participants’ review of the survey component of the platform confirmed that the questions being asked were relevant and that the graphical summaries of reported SQLI were understandable. We identified 12 key patient preferences (Table 1) to help guide our development efforts and subsequent individual testing sessions; 7 of these preferences were implemented and 5 were deferred for various reasons.

Table 1.

Features identified by focus group participants and subsequent decisions to implement or defer.

| Feature | Focus group | Implemented/deferred |
|---|---|---|
| Customizable date range for summaries | 2 | Implemented |
| Individual display of symptoms in scale | 1 | Implemented |
| Show previous responses to questions “At the chart” | 2 | Deferred |
| Information about who created questionnaire scales | 1 | Deferred |
| Add journal/text entry to help annotate SQLI chart | 1 | Implemented |
| Aesthetics/style changes | 1 | Implemented |
| Icons for symptom groups (“picture of a brain … picture of an intestine”) | 1 | Deferred |
| Start bulleted teaching tips with title | 1 | Implemented |
| Link “See more” to expand teaching tips | 1 | Implemented |
| Share summaries and journal entries | | Implemented |
| Show usage logs for whether those invited to share data access the data | 2 | Deferred |
| Add patient labs/records from the clinic system | 1 | Deferred |

SQLI: symptom and quality-of-life information.

Participants reported a preference for being able to customize date ranges, to select which specific symptoms in a subscale were displayed on a chart, and to see the actual wording of the questionnaire items for the variables being charted. Some participants wanted access to metadata about the surveys and SQLI (e.g. authors, previous study results). We were unable to implement this fully due to resource constraints but provided links to study-related web pages that contain relevant bibliographic references.

One feature that proved difficult to implement was patients’ desire for free-text annotations of graphical symptom summaries on the graph itself, as well as an online journal. We addressed both by providing a journal entry feature as a stand-alone page as well as an open text box for journal entries on the page that displayed graphed SQLI (Appendix 2).

We also received feedback on aesthetics and navigation, such as the styling of bulleted lists and the use of more icons, particularly for labeling groups of similar symptoms. We were able to implement most of this feedback but deferred using icon groups, in part because there is not always a clear relationship between icons and symptoms. With regard to content, participants wanted teaching tips, so that users could access additional information about symptoms and links to external resources. Given the length of some of the teaching tips, there was consensus that displaying the opening text of each tip with a “see more” link to expand the full text would be beneficial.

Participants also wanted to be able to “invite and share” graphical summaries and journal entries with specific people such as family members and caregivers. They also voiced a desire for granular control, so that individual symptom elements could be shared without “sharing everything.” We implemented this with a multistep sharing process that included “select all” checkboxes, to facilitate efficient information sharing while preserving granular control. One feature we chose not to implement was the ability for patients to view usage logs detailing whether shared charts and journals were actually being viewed by those who had received a sharing invitation. We saw potential problems with user privacy and deferred this feature for possible inclusion in a future study. A common theme related to patient control of information was the desire to have medical records, such as lab data, accessible within the application. This feature was deferred because it was outside the scope of our research.
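One way to think about combining granular control with “select all” checkboxes is as a per-category selection model. The sketch below assumes a hypothetical `ShareSelection` structure in which symptom charts and journal entries are separate categories, nothing is shared unless it has been selected, and each “select all” box applies to a single category. It illustrates the interaction pattern only and is not the ESRAC 2.0 implementation.

```python
from dataclasses import dataclass, field
from typing import Dict, Set


@dataclass
class ShareSelection:
    """Hypothetical model of one sharing invitation.

    Items are grouped by category (e.g. symptom charts, journal entries);
    nothing is shared unless it has been selected explicitly.
    """

    available: Dict[str, Set[str]]
    selected: Dict[str, Set[str]] = field(default_factory=dict)

    def toggle(self, category: str, item: str) -> None:
        # Select or deselect a single item, preserving granular control.
        if item not in self.available.get(category, set()):
            raise KeyError(f"{item!r} is not available in {category!r}")
        chosen = self.selected.setdefault(category, set())
        if item in chosen:
            chosen.remove(item)
        else:
            chosen.add(item)

    def select_all(self, category: str, checked: bool = True) -> None:
        # A "select all" checkbox scoped to one category, so selecting every
        # chart does not also share every journal entry.
        if category not in self.available:
            raise KeyError(category)
        self.selected[category] = set(self.available[category]) if checked else set()

    def is_all_selected(self, category: str) -> bool:
        # Drives the checked state of the category's "select all" box.
        return self.selected.get(category, set()) == self.available.get(category, set())


# Hypothetical invitation: share every chart except pain, plus one journal entry.
invite = ShareSelection(
    available={
        "charts": {"fatigue", "nausea", "pain"},
        "journal": {"2008-06-15", "2008-06-20"},
    }
)
invite.select_all("charts")
invite.toggle("charts", "pain")
invite.toggle("journal", "2008-06-15")
print(sorted(invite.selected["charts"]))  # ['fatigue', 'nausea']
print(invite.is_all_selected("charts"))  # False
```

Scoping each “select all” box to one category is a design choice in this sketch: it keeps the efficient path available without turning one click into “sharing everything.”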

Individual usability sessions

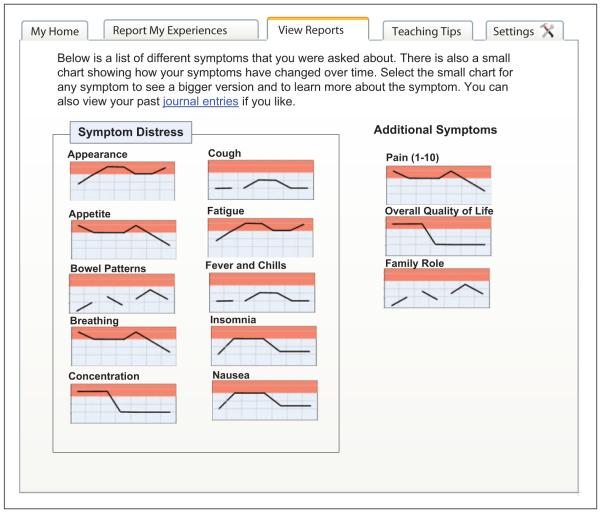

Eight participants completed usability sessions. Three sessions were conducted with low-fidelity paper mock-ups, as depicted in Figure 2; the remaining five sessions used high-fidelity mock-ups. One participant began a session that was stopped after 15 min due to connectivity issues; this session was rescheduled for a later time.

Figure 2.

Low-fidelity mock-up showing symptoms index page.

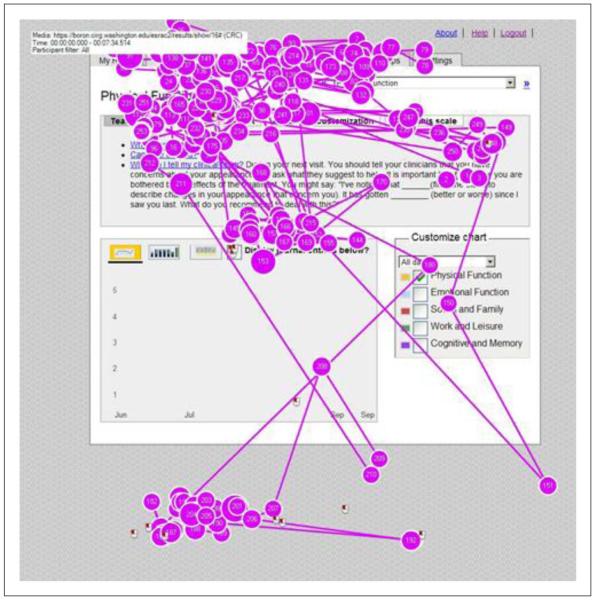

The remaining five sessions used web prototypes and the Tobii T60 eye tracker.32 Using a testing protocol (Appendix 1), participants were asked to complete a series of discrete tasks on screen. Most tasks were completed with little or no hesitation. However, we identified 65 usability issues ranging from minor user confusion to critical errors that blocked task completion. Of note was initial site navigation confusion among Internet-naïve users. Several participants were confused by the labeling of navigation buttons displayed with a horizontal tabbed-folder metaphor. This confusion was confirmed by examination of gaze plots recorded by the eye tracker, which showed marked visual scanning as participants searched for the appropriate navigation elements (Figure 3). For the first task, we typically instructed subjects to “Find your nausea report”; however, several participants had difficulty locating the correct tab. At least two of the eight participants navigated first to the “Reports” tab instead of the “Results” tab. Once subjects found the correct tab, they generally had no trouble completing the task. Through iterative feedback, we eventually settled on “Report My Experiences” and “View My Reports” for these tab labels.

Figure 3.

Gaze plots from eye tracker recordings.

Our rationale for using low-fidelity approaches in the first three sessions was that we wanted feedback on key features before investing the time needed to code them in the high-fidelity web prototype. However, we found feedback from the web prototype sessions to be a richer source of data, as the user experience was more interactive and we were able to record and replay gaze patterns using the eye tracker. These benefits were tempered by the fact that the five high-fidelity sessions were conducted on the development web server, which frequently ran debugging code that introduced lag times not representative of a typical user experience.

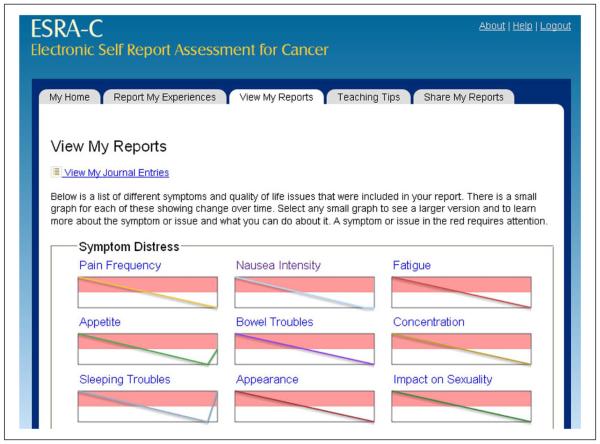

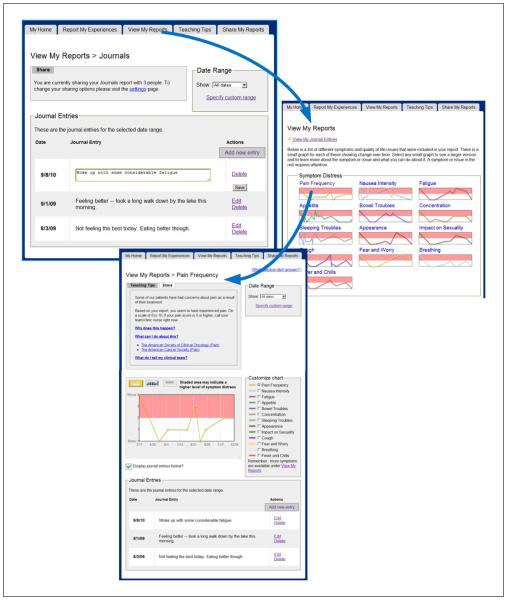

Our final design (Figure 4) comprised five primary pages using tabbed navigation: (a) “My Home,” which provided a welcome message as well as any tailored messages directed to the patient about appointment times, links to resume SQLI surveys, and so on; (b) “Report My Experiences,” where users answered questions about SQLI and provided free-text journal entries; (c) “View My Reports,” which showed past entries, starting from a dashboard view and moving into detailed data displays for each symptom; (d) “Teaching Tips” about managing symptoms; and (e) “Share My Reports,” where users could invite others to view specific charts and past journal entries.

Figure 4.

Final design of “View My Reports” page.

Discussion

Our findings suggest that patients want to view, manipulate, annotate, and share graphical summaries of their symptom status. Our research efforts incorporated participatory design steps, individual usability testing, and iterative development approaches. Together, these approaches allowed for application development that incorporated patient needs. Other researchers have also found success with participatory and iterative design approaches34–36 and with the use of eye trackers for complementary subjective and objective data collection.16

Despite the potential benefits of eye trackers for the development of patient-centered technologies, many of the eye-tracking studies we found involved provider-oriented tools or non-health-related applications.

While we implemented some features that users preferred and revised them to be more usable, we deferred implementing others, largely due to resource and time constraints. One patient preference that was particularly difficult to implement was the desire to annotate symptom charts. We provided a partial solution by allowing patients to add journal entries while viewing their symptom charts. While this alignment of journals and charts allowed patients to annotate results, the results in the charts were not programmatically linked to the journal entries in the backend database. For example, if a patient changed the date range on a displayed chart to a time in the past and then added a journal entry, that entry would be associated with the current date rather than the intended date. This is an area that could benefit from further study and development. People are growing accustomed to “tagging” content on websites and later using those tags to create taxonomies and navigation approaches.37,38 How patients might tag their symptom charts is an interesting area for further study; an extension of this question might examine how patients could create symptom tags and attach them to graphical representations of their body over time.
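The date mismatch described above can be made concrete. In the hypothetical sketch below, a journal entry created from a chart view takes its date from the range the patient is viewing rather than from the system clock: a date the patient picks inside the displayed range is used if given, today is used if it falls inside the range, and otherwise the last day of the range is used. The `ChartView` and `JournalEntry` types and this fallback rule are assumptions for illustration, not the ESRAC data model.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass(frozen=True)
class ChartView:
    """Date range currently displayed on a symptom chart (hypothetical)."""

    symptom: str
    start: date
    end: date


@dataclass(frozen=True)
class JournalEntry:
    """Journal entry linked to the symptom chart it annotates (hypothetical)."""

    symptom: str
    entry_date: date
    text: str


def new_entry_from_chart(view: ChartView, text: str, today: date,
                         chosen_date: Optional[date] = None) -> JournalEntry:
    """Create a journal entry tied to the chart range the patient is viewing.

    Instead of always stamping the entry with today's date, use a date the
    patient picked inside the displayed range; otherwise use today if it lies
    inside the range, and fall back to the range's last day.
    """
    if chosen_date is not None:
        if not (view.start <= chosen_date <= view.end):
            raise ValueError("chosen date must fall inside the displayed range")
        entry_date = chosen_date
    elif view.start <= today <= view.end:
        entry_date = today
    else:
        entry_date = view.end
    return JournalEntry(view.symptom, entry_date, text)


# A patient viewing a past range (June 15 to July 1, 2008) adds an entry later.
view = ChartView("fatigue", date(2008, 6, 15), date(2008, 7, 1))
entry = new_entry_from_chart(view, "Very tired after treatment", today=date(2008, 12, 1))
print(entry.entry_date)  # 2008-07-01
```

Falling back to the end of the range is only one possible rule; a stricter design might require the patient to pick a date explicitly whenever the chart does not include today.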

Another preference that we were unable to meet fully was the desire for patients to see linkages between the symptom scales and the scientific literature. We were unable to implement this due to resource constraints, but such integration is an inherently patient-centered feature that lends transparency for activated patients who want to research topics further or who may simply want greater confidence in the science underlying the assessment and monitoring charts. This desire for more transparency was also noted in participatory research by Garmer et al.34

Our study is limited in a number of ways. Participants were fairly well educated and predominantly white, precluding generalization beyond this demographic. Participants in focus groups may have behaved differently with caregivers present, and our focus groups and individual sessions would have benefited from additional participants. According to usability expert Jakob Nielsen, testing with 3–5 users will identify most problems in an application,39,40 although other researchers have argued for more.41 Although the eye tracker provided valuable feedback on navigation and visual gaze patterns, as it has for other researchers,16 it was not used to its full capabilities. We did not test competing versions of interfaces and instead focused on the iterative development of one design. We learned from this study that future efforts need to allow more time for usability testing within the project timeline, as well as a testing platform that is independent of other development efforts. Lag times on the testing server, as well as bugs within the application itself, frequently confounded time-sensitive measurements taken with the PUL. As a result, we used the recordings more as a qualitative than a quantitative data source. Despite these setbacks, the recordings served as a rich source of data, consistent with findings by Gerjets et al.42 on the benefits of integrating think-aloud data with eye-tracking data.

Conclusion

Participatory research efforts allowed for application development that incorporated patient needs. A number of important features were identified by participants and refined through our iterative development process, particularly with respect to the graphical display of symptoms, teaching tips about symptom management, critical navigation paths, and information sharing. Future efforts should allow generous amounts of time for usability testing within the project timeline as well as a testing platform that is independent from other development efforts. We are currently evaluating new approaches for the application interface and for future research pathways. We encourage other researchers to adopt user-centered design approaches when building patient-centered technologies.

Acknowledgements

The authors wish to thank the Seattle Cancer Care Alliance, the patients and caregivers who participated in this study, as well as research coordinators Kyra Freestar and Debby Nagusky.

Funding

This work was supported by NIH under grant R01 NR 008726.

Appendix 1.

Example individual user testing protocol

1) Log in to the website with username = ptesta and password = xxxx.

2) Locate a Symptom Report: Please locate the chart related to Fatigue.

Subtasks

a) Data Retrieval: What was your most recent fatigue level?

b) Graphical Selectors: Change the chart type to a bar chart, then to a numerical display, and then back to a line chart.

c) Customize Chart, Add a Symptom: Please add another symptom to the chart so that it is displayed at the same time as fatigue.

d) Customize Chart, Simple Date Change: Change the time frame for the chart to the last month.

e) Customize Chart, Custom Date Change: Change the time frame for the chart to show June 15th to July 1st.

f) Navigate to New Symptom: Let’s imagine you are done looking at this symptom and want to view reports about your Nausea.

g) Find Teaching Tip: You’d like to know more about how to talk about this symptom with your doctors and nurses. What would you do on this page?

h) Journal entry, View all: You would like to view some of your past journal entries for this time period. Please display them.

i) Journal entry, Add New: Please add a new entry for today.

j) Journal entry, Edit Past: You notice a typo in the entry for 1/1/2008. Please edit it.

k) Journal entry, Delete: Please delete the entry from the 14th.

l) Share Report: You’d like to invite a friend to view this symptom from their computer. What would you do?

Appendix 2.

Alternative access points for patient journaling

Footnotes

Declaration of conflicting interests

The sponsor had no role in manuscript preparation or approval.

Contributor Information

SE Wolpin, University of Washington, USA.

B Halpenny, Dana-Farber Cancer Institute, USA.

DL Berry, University of Washington, USA; Dana-Farber Cancer Institute, USA.

References

- 1.Wolpin S, Berry D, Austin-Seymour M, et al. Acceptability of an electronic self-report assessment program for patients with cancer. Comput Inform Nurs. 2008;26(6):332–338. doi: 10.1097/01.NCN.0000336464.79692.6a. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 2.Berry D, Trigg L, Lober W, et al. Computerized symptom and quality-of-life assessment for patients with cancer part I: development and pilot testing. Oncol Nurs Forum. 2004;31(5):E75–E83. doi: 10.1188/04.ONF.E75-E83. [DOI] [PubMed] [Google Scholar]

- 3.Wolpin S, Stewart M. A deliberate and rigorous approach to development of patient-centered technologies. Semin Oncol Nurs. 2011;27(3):183–191. doi: 10.1016/j.soncn.2011.04.003. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 4.Slack W, Hicks P, Reed C, et al. A computer-based medical history system. N Engl J Med. 1966;274(4):194–198. doi: 10.1056/NEJM196601272740406. [DOI] [PubMed] [Google Scholar]

- 5.Velikova G, Wright EP, Smith AB, et al. Automated collection of quality-of-life data: a comparison of paper and computer touch-screen questionnaires. J Clin Oncol. 1999;17(3):998–1007. doi: 10.1200/JCO.1999.17.3.998. [DOI] [PubMed] [Google Scholar]

- 6. Wright EP, Selby PJ, Crawford M, et al. Feasibility and compliance of automated measurement of quality of life in oncology practice. J Clin Oncol. 2003;21(2):374–382. doi: 10.1200/JCO.2003.11.044.

- 7. Velikova G, Brown JM, Smith AB, et al. Computer-based quality of life questionnaires may contribute to doctor-patient interactions in oncology. Br J Cancer. 2002;86(1):51–59. doi: 10.1038/sj.bjc.6600001.

- 8. Velikova G, Booth L, Smith AB, et al. Measuring quality of life in routine oncology practice improves communication and patient well-being: a randomized controlled trial. J Clin Oncol. 2004;22(4):714–724. doi: 10.1200/JCO.2004.06.078.

- 9. Berry D. Quality of life assessment in persons with cancer. J Registry Manag. 1997;24(1):8–11.

- 10. Berry D. Computerized cancer symptom and quality of life assessment. Oncology Nursing Society PRISM workshop; Newport Beach, CA; 24 October 2001.

- 11. Berry D, Galligan M, Monahan M, Karras B, Lober W, Martin S, Austin-Seymour M. Computerized quality of life assessment in radiation oncology. In: Advancing Nursing Practice Excellence: State of the Science. Washington, DC: Sigma Theta Tau; September 2002.

- 12. Mullen K, Berry D, Zierler B. Computerized symptom and quality-of-life assessment for patients with cancer part II: acceptability and usability. Oncol Nurs Forum. 2004;31(5):E84–E89. doi: 10.1188/04.ONF.E84-E89.

- 13. Newell S, Girgis A, Sanson-Fisher RW, et al. Are touchscreen computer surveys acceptable to medical oncology patients? J Psychosoc Oncol. 1997;15(2):37–46.

- 14. Carlson LE, Speca M, Hagen N, et al. Computerized quality-of-life screening in a cancer pain clinic. J Palliat Care. 2001;17(1):46–52.

- 15. Reichlin L, Mani N, McArthur K, et al. Assessing the acceptability and usability of an interactive serious game in aiding treatment decisions for patients with localized prostate cancer. J Med Internet Res. 2011;13(1):e4. doi: 10.2196/jmir.1519.

- 16. Eghdam A, Forsman J, Falkenhav M, et al. Combining usability testing with eye-tracking technology: evaluation of a visualization support for antibiotic use in intensive care. Stud Health Technol Inform. 2011;169:945–949.

- 17. International Organization for Standardization/International Electrotechnical Commission (ISO/IEC). ISO 9241-11:1998. Ergonomic requirements for office work with visual display terminals (VDTs), part 11: guidance on usability.

- 18. Karras BT, O’Carroll P, Oberle MW, et al. Development and evaluation of public health informatics at University of Washington. J Public Health Manag Pract. 2002;8(3):37–43. doi: 10.1097/00124784-200205000-00006.

- 19. Dockrey MR, Lober WB, Wolpin SE, et al. Distributed health assessment and intervention research software framework. AMIA Annu Symp Proc. 2005;2005:940.

- 20. SPRY Foundation. Older adults and the World Wide Web: a guide for web site creators. Washington, DC: SPRY Foundation; 1999.

- 21. Nielsen J. Web usability for senior citizens: 46 design guidelines based on usability studies with people age 65 and older. Nielsen Norman Group Report; 2002.

- 22. Berry DL, Blumenstein BA, Halpenny B, Wolpin S, Fann JR, Austin-Seymour M, Bush N, Karras BT, Lober WB, McCorkle R. Enhancing patient-provider communication with the electronic self-report assessment for cancer: a randomized trial. J Clin Oncol. 2011;29(8):1029–1035. doi: 10.1200/JCO.2010.30.3909.

- 23. Berry DL, Hong F, Halpenny B, et al. Electronic self-report assessment for cancer: results of a multisite randomized trial. American Society of Clinical Oncology annual meeting; Chicago, IL; 3 June 2012.

- 24. Berry D, Halpenny B, Wolpin S. Enrollment rates with on-line versus in-person trial recruitment. 11th National Conference on Cancer Nursing Research; Los Angeles, CA; 10–12 February 2011.

- 25. Wolpin S, Berry D, Whitman G, et al. Development of a user-centered web application for reporting cancer symptoms and quality of life information. American Medical Informatics Association 2009 Spring Congress; Orlando, FL; 27–30 May 2009.

- 26. National Institute on Aging and National Library of Medicine. Making your web site senior-friendly. 2013. http://www.nia.nih.gov/health/publication/making-your-website-senior-friendly (accessed 18 July 2013).

- 27. Kushniruk A. Evaluation in the design of health information systems: application of approaches emerging from usability engineering. Comput Biol Med. 2002;32(3):141–149. doi: 10.1016/s0010-4825(02)00011-2.

- 28. Chiang MF, Cole RG, Gupta S, et al. Computer and World Wide Web accessibility by visually disabled patients: problems and solutions. Surv Ophthalmol. 2005;50(4):394–405. doi: 10.1016/j.survophthal.2005.04.004.

- 29. Youngworth SJ. Electronic Self Report Assessment–Cancer (ESRA-C): readability and usability in a sample with lower literacy. Masters project. Seattle, WA: University of Washington School of Nursing; 2005.

- 30. Usability.gov. Your guide for developing usable & useful web sites. http://usability.gov/guidelines/index.html (2011, accessed 20 February 2011).

- 31. Virzi RA. Refining the test phase of usability evaluation: how many subjects is enough? Hum Factors. 1992;34(4):457–468.

- 32. Tobii T60 & T120 Eye Tracker. http://www.tobii.com/en/eye-tracking-research/global/products/hardware/tobii-t60t120-eye-tracker/ (2011, accessed 10 February 2011).

- 33. Krueger R. Developing questions for focus groups. Thousand Oaks, CA: SAGE; 1998.

- 34. Garmer K, Ylven J, Karlsson ICM. User participation in requirements elicitation comparing focus group interviews and usability tests for eliciting usability requirements for medical equipment: a case study. Int J Ind Ergon. 2004;33(2):85–98.

- 35. Stoddard JL, Augustson EM, Mabry PL. The importance of usability testing in the development of an Internet-based smoking cessation treatment resource. Nicotine Tob Res. 2006;8(Suppl. 1):S87–S93. doi: 10.1080/14622200601048189.

- 36. Kushniruk AW, Patel VL. Cognitive and usability engineering methods for the evaluation of clinical information systems. J Biomed Inform. 2004;37(1):56–76. doi: 10.1016/j.jbi.2004.01.003.

- 37. Carmagnola F, Cena F, Cortassa O, Gena C, Torre I. Towards a tag-based user model: how can user model benefit from tags? User Modeling. 2007:445–449.

- 38. Kipp MEI, Campbell DG. Patterns and inconsistencies in collaborative tagging systems: an examination of tagging practices. Proc Am Soc Inf Sci Technol. 2006;43(1):1–18.

- 39. Nielsen J. Designing web usability: the practice of simplicity. Indianapolis, IN: New Riders Publishing; 2000.

- 40. Nielsen J, Landauer TK. A mathematical model of the finding of usability problems. In: Ashlund S, Mullet K, Henderson A, Hollnagel E, White TN, editors. Proceedings of INTERCHI '93. ACM; 1993:206–213. ISBN: 0-89791-574-7.

- 41. Faulkner L. Beyond the five-user assumption: benefits of increased sample sizes in usability testing. Behav Res Methods Instrum Comput. 2003;35(3):379–383. doi: 10.3758/bf03195514.

- 42. Gerjets P, Kammerer Y, Werner B. Measuring spontaneous and instructed evaluation processes during web search: integrating concurrent thinking-aloud protocols and eye-tracking data. Learn Instr. 2011;21(2):220–231.